Design and Performance of the ATLAS Muon Detector Control System

- Slides: 18

Design and Performance of the ATLAS Muon Detector Control System

A. Polini (on behalf of the ATLAS Muon Collaboration)
CHEP 2010, October 18-22, Taipei, Taiwan

Outline:
- Detector Description
- System Requirements
- Architecture and Special Features
- Status and Performance
- Future and Outlook

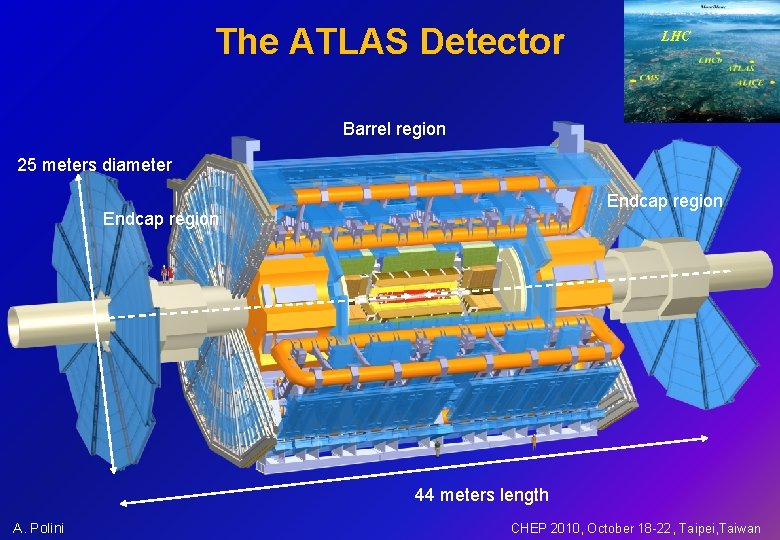

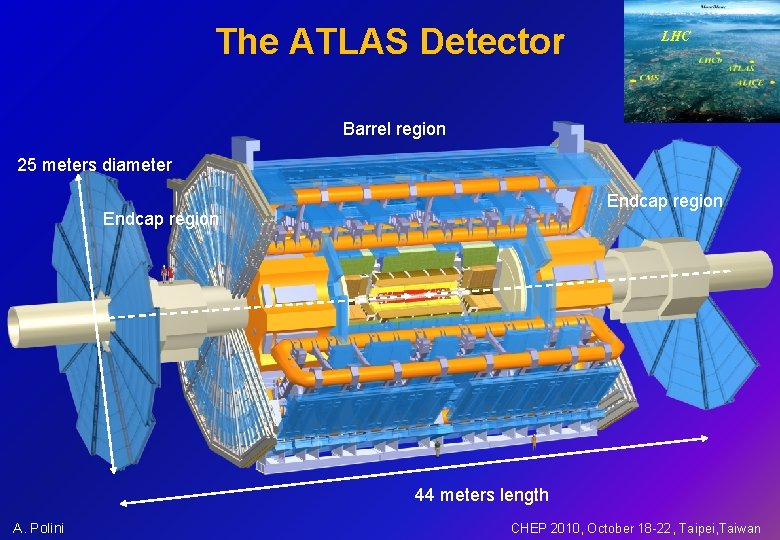

The ATLAS Detector

The ATLAS detector at the LHC, with its barrel and endcap regions, is 25 meters in diameter and 44 meters long.

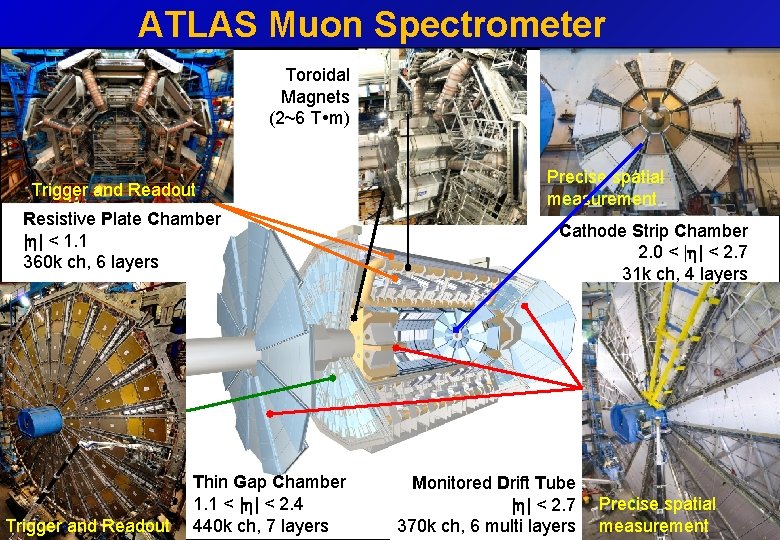

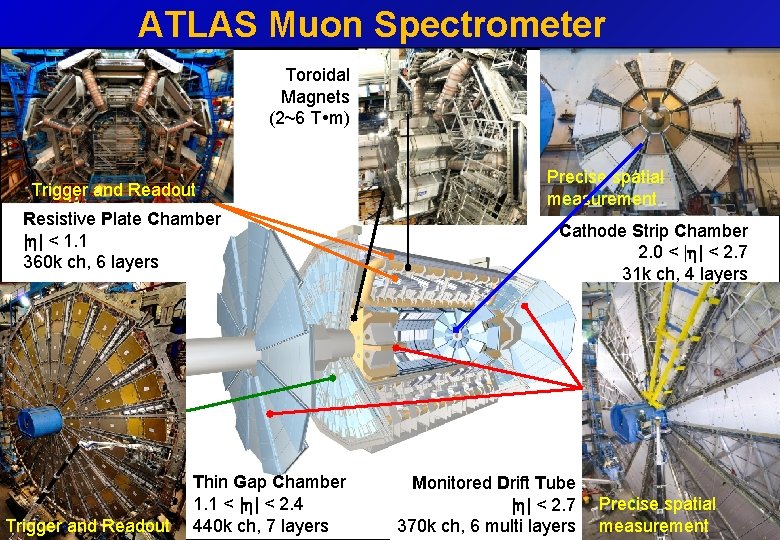

ATLAS Muon Spectrometer

Toroidal magnets provide a bending power of about 2 to 6 T·m.

- Resistive Plate Chambers (RPC): trigger and readout; |η| < 1.1; 360 k channels; 6 layers
- Thin Gap Chambers (TGC): trigger and readout; 1.1 < |η| < 2.4; 440 k channels; 7 layers
- Cathode Strip Chambers (CSC): precise spatial measurement; 2.0 < |η| < 2.7; 31 k channels; 4 layers
- Monitored Drift Tubes (MDT): precise spatial measurement; |η| < 2.7; 370 k channels; 6 multilayers
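The coverage figures above are given in pseudorapidity, η = -ln tan(θ/2). As a rough illustration, the sketch below converts η to a polar angle and looks up which chamber technologies cover a given η, using only the simplified ranges quoted on this slide. The real chamber layout is more complex (gaps, overlaps, station-by-station coverage), and the half-open intervals are a choice made here so that boundary values fall in exactly one range.

```python
import math

# Simplified |eta| coverage per technology, taken from the slide.
# Each entry: (function, lower bound, upper bound), treated as [lower, upper).
COVERAGE = {
    "RPC": ("trigger/readout", 0.0, 1.1),
    "TGC": ("trigger/readout", 1.1, 2.4),
    "CSC": ("precision", 2.0, 2.7),
    "MDT": ("precision", 0.0, 2.7),
}


def polar_angle_deg(eta: float) -> float:
    """Polar angle theta in degrees, inverting eta = -ln(tan(theta / 2))."""
    return math.degrees(2.0 * math.atan(math.exp(-eta)))


def technologies_at(eta: float) -> list[str]:
    """Names of the technologies whose quoted range contains |eta|, sorted."""
    a = abs(eta)
    return sorted(
        name for name, (_, lo, hi) in COVERAGE.items() if lo <= a < hi
    )


if __name__ == "__main__":
    for eta in (0.0, 1.5, 2.2, 2.5):
        print(f"eta={eta}: theta={polar_angle_deg(eta):.1f} deg, "
              f"{technologies_at(eta)}")
```

For example, `technologies_at(1.5)` returns `['MDT', 'TGC']`: at that angle the MDTs provide the precision measurement and the TGCs the trigger.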

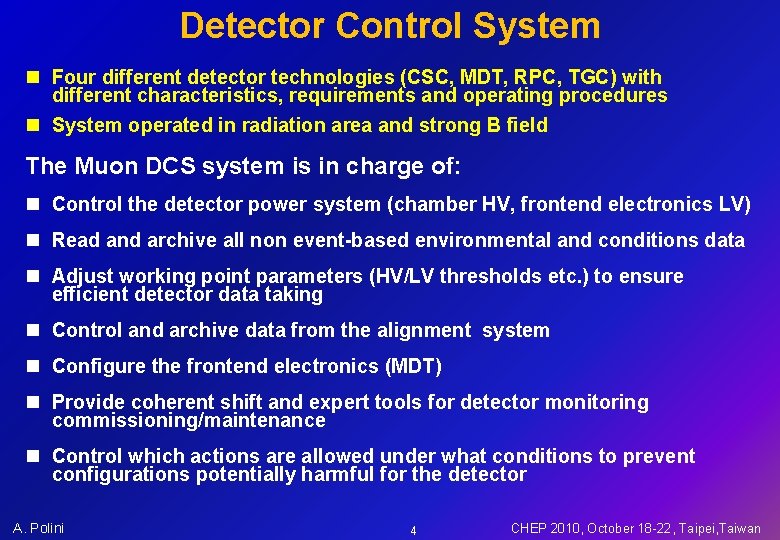

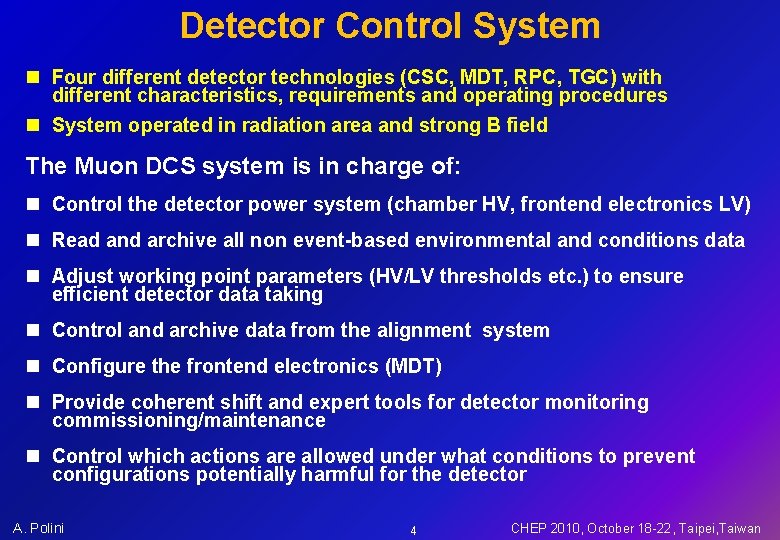

Detector Control System

- Four different detector technologies (CSC, MDT, RPC, TGC), each with its own characteristics, requirements and operating procedures
- System operated in a radiation area and in a strong magnetic field

The Muon DCS is in charge of:
- controlling the detector power system (chamber HV, frontend electronics LV);
- reading and archiving all non-event-based environmental and conditions data;
- adjusting working-point parameters (HV/LV, thresholds, etc.) to ensure efficient data taking;
- controlling and archiving data from the alignment system;
- configuring the frontend electronics (MDT);
- providing coherent shift and expert tools for detector monitoring, commissioning and maintenance;
- controlling which actions are allowed under which conditions, to prevent configurations potentially harmful to the detector.
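The last responsibility, deciding which actions are allowed under which conditions, can be pictured as a rule table checked before any command is executed. The sketch below is hypothetical: the condition names (`lv_on`, `gas_flow_ok`, `stable_beams`), the action names and the rules themselves are illustrative assumptions, not the actual Muon DCS interlock logic.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Conditions:
    """Hypothetical snapshot of conditions checked before an action."""
    lv_on: bool
    gas_flow_ok: bool
    stable_beams: bool


# Hypothetical rule table: each action maps to a predicate on the conditions.
RULES: Dict[str, Callable[[Conditions], bool]] = {
    "HV_STANDBY": lambda c: c.gas_flow_ok,
    "HV_NOMINAL": lambda c: c.gas_flow_ok and c.lv_on and c.stable_beams,
    "LV_OFF": lambda c: True,
}


def is_allowed(action: str, cond: Conditions) -> bool:
    """Default-deny: unknown actions are refused, known ones must pass their rule."""
    rule = RULES.get(action)
    return rule is not None and rule(cond)


if __name__ == "__main__":
    now = Conditions(lv_on=True, gas_flow_ok=True, stable_beams=False)
    print(is_allowed("HV_NOMINAL", now))  # refused: no stable beams
    print(is_allowed("HV_STANDBY", now))  # allowed
```

Refusing any action that has no rule (default-deny) keeps a typo or an unforeseen command from reaching the hardware.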

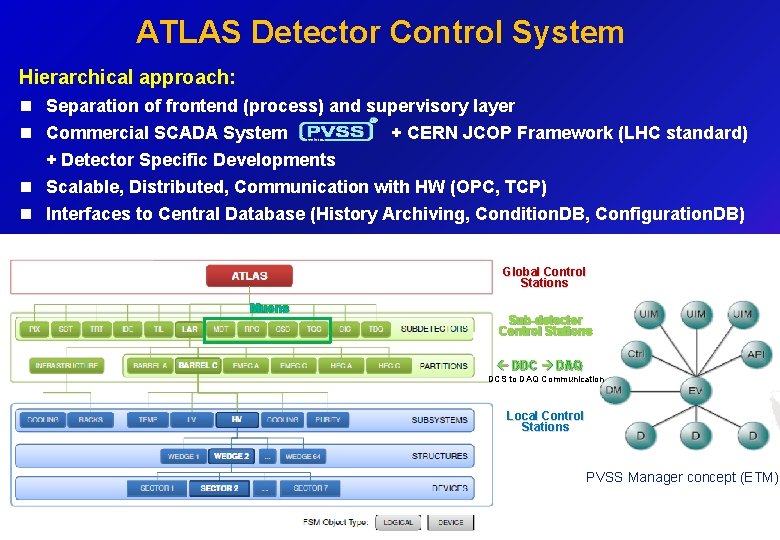

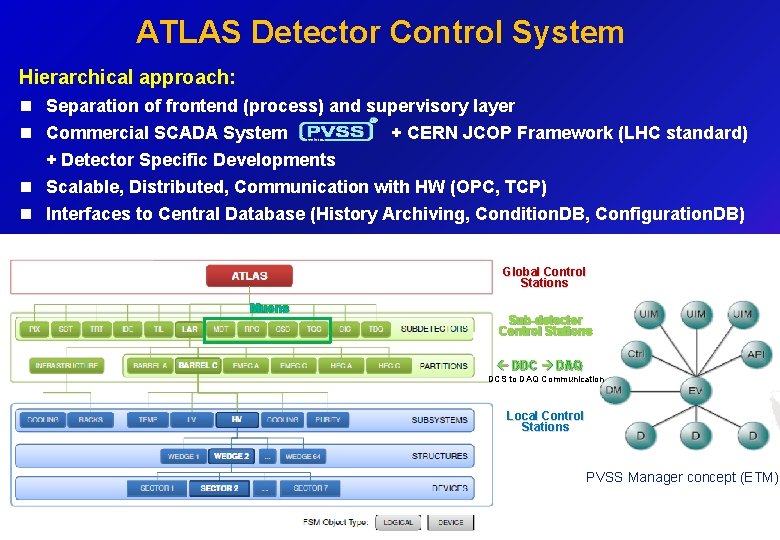

ATLAS Detector Control System

Hierarchical approach:
- Separation of the frontend (process) layer and the supervisory layer
- Commercial SCADA system + CERN JCOP Framework (LHC standard) + detector-specific developments
- Scalable and distributed, communicating with the hardware via OPC and TCP
- Interfaces to the central databases (history archiving, Conditions DB, Configuration DB)

(Figure: global control stations, the Muons sub-detector control stations and local control stations; DAQ-DCS Communication (DDC); PVSS manager concept (ETM).)

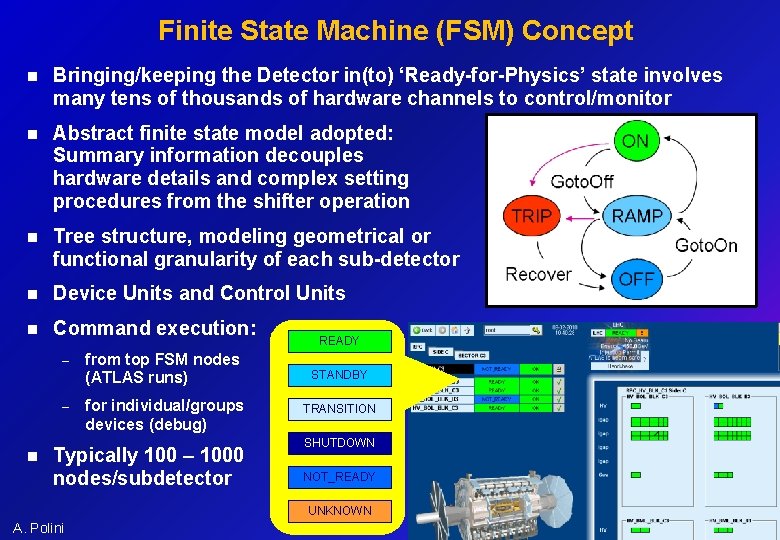

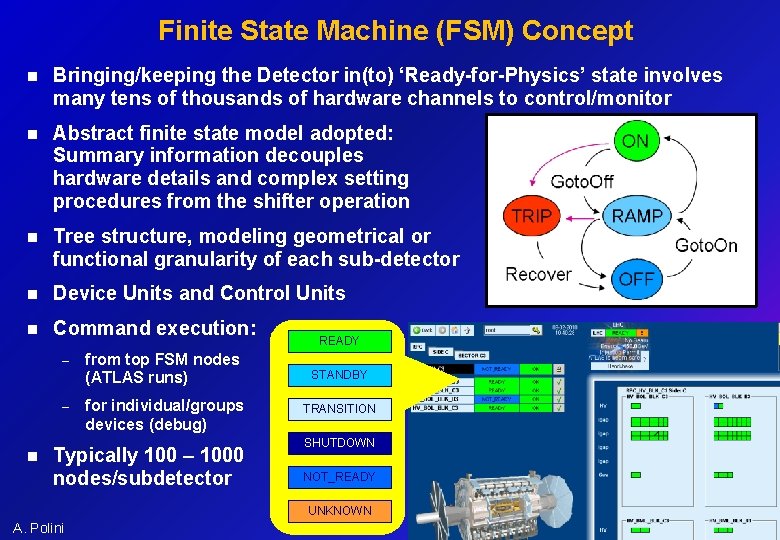

Finite State Machine (FSM) Concept

- Bringing the detector into, and keeping it in, the 'Ready-for-Physics' state involves many tens of thousands of hardware channels to control and monitor
- An abstract finite state model is adopted: summary information decouples hardware details and complex setting procedures from the shifter's operation
- Tree structure, modeling the geometrical or functional granularity of each sub-detector
- Device Units and Control Units
- Command execution:
  - from the top FSM nodes (ATLAS runs)
  - for individual devices or groups of devices (debugging)
- Typically 100 to 1000 nodes per sub-detector

FSM states: READY, STANDBY, TRANSITION, SHUTDOWN, NOT_READY, UNKNOWN
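A minimal sketch of the tree idea: states are summarized upward and commands propagate downward. Here a Control Unit simply reports the most severe state among its children; that severity ordering is an assumption made for illustration (in the JCOP FSM the summarizing rules are defined per node type), and devices reaching their target instantly is a simplification that skips the TRANSITION state.

```python
from dataclasses import dataclass, field
from typing import List

# Assumed severity ordering, most severe first (illustrative only).
SEVERITY = ["UNKNOWN", "NOT_READY", "TRANSITION", "SHUTDOWN", "STANDBY", "READY"]


@dataclass
class Node:
    """A Control Unit (node with children) or a Device Unit (leaf)."""
    name: str
    state: str = "UNKNOWN"
    children: List["Node"] = field(default_factory=list)

    def summary(self) -> str:
        """Leaf: its own state. Control Unit: most severe child summary."""
        if not self.children:
            return self.state
        return min((c.summary() for c in self.children), key=SEVERITY.index)

    def command(self, target: str) -> None:
        """Send a command down the tree; leaves jump straight to the target."""
        if not self.children:
            self.state = target
        for child in self.children:
            child.command(target)


if __name__ == "__main__":
    mdt = Node("MDT", children=[
        Node("BA", children=[Node("HV1", "READY"), Node("HV2", "STANDBY")]),
        Node("BC", children=[Node("HV3", "READY")]),
    ])
    print(mdt.summary())  # one channel in STANDBY holds the whole tree there
    mdt.command("READY")
    print(mdt.summary())
```

The shifter only needs the top-level summary; the per-channel details stay inside the tree until an expert descends into it.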

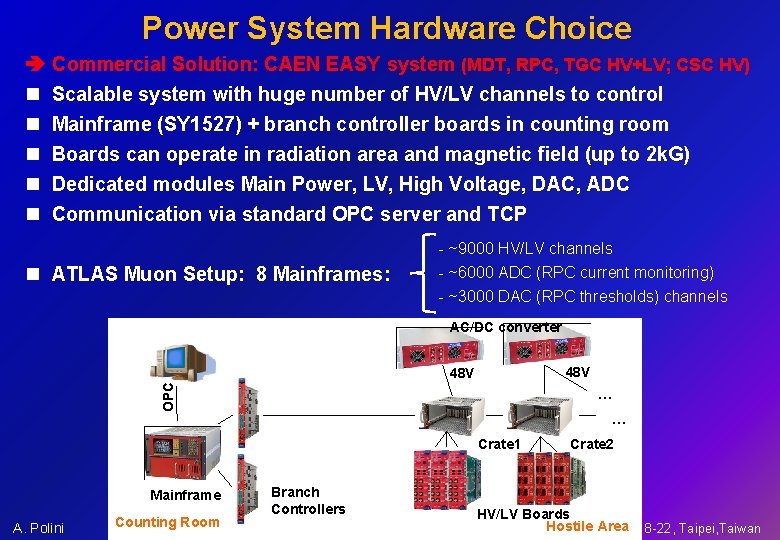

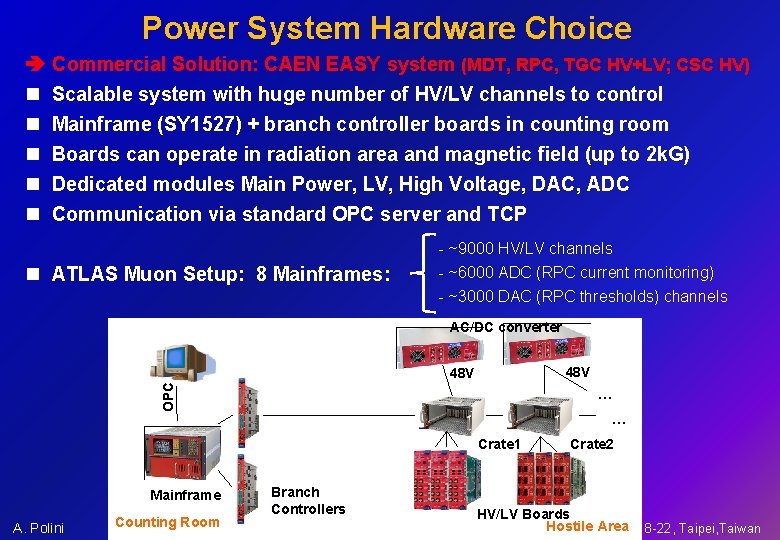

Power System Hardware Choice

Commercial solution: CAEN EASY system (MDT, RPC, TGC HV+LV; CSC HV)
- Scalable system with a huge number of HV/LV channels to control
- Mainframe (SY1527) + branch controller boards in the counting room
- Boards can operate in the radiation area and in a magnetic field (up to 2 kG)
- Dedicated modules: main power, LV, high voltage, DAC, ADC
- Communication via standard OPC server and TCP

ATLAS Muon setup: 8 mainframes
- ~9000 HV/LV channels
- ~6000 ADC channels (RPC current monitoring)
- ~3000 DAC channels (RPC thresholds)

(Figure: AC/DC converters (48 V) and the mainframe with branch controllers in the counting room, connected via OPC; EASY crates 1, 2, … with HV/LV boards in the hostile area.)

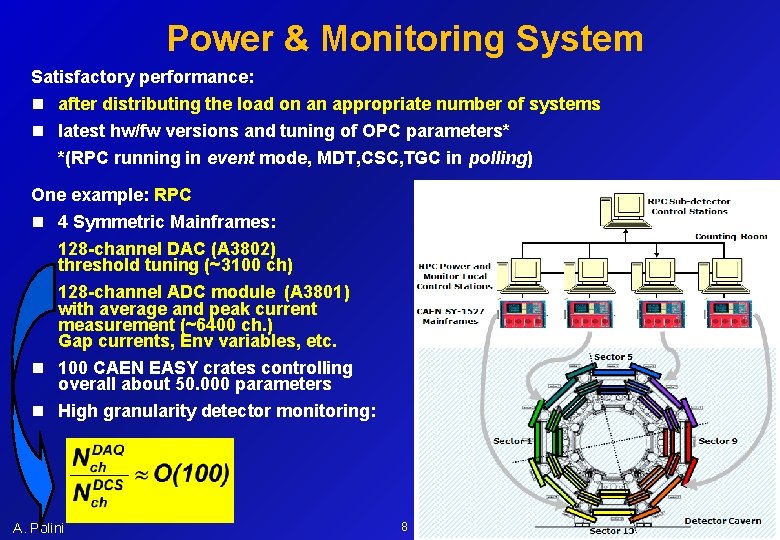

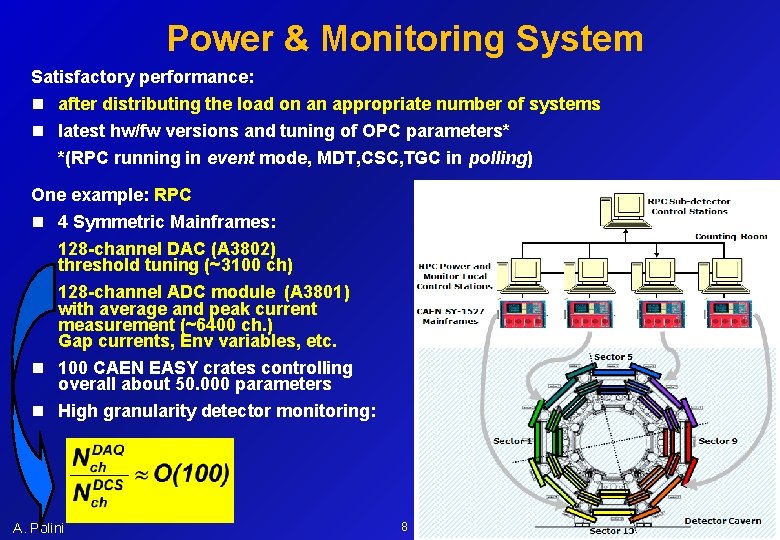

Power & Monitoring System

Satisfactory performance:
- after distributing the load over an appropriate number of systems
- with the latest hardware/firmware versions and tuning of the OPC parameters (RPC running in event mode; MDT, CSC and TGC in polling mode)

One example: RPC
- 4 symmetric mainframes:
  - 128-channel DAC modules (A3802) for threshold tuning (~3100 channels)
  - 128-channel ADC modules (A3801) with average and peak current measurement (~6400 channels): gap currents, environmental variables, etc.
- 100 CAEN EASY crates controlling about 50,000 parameters overall
- High-granularity detector monitoring (figure)
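The RPC numbers above allow a quick sanity check of the load per system. The sketch below computes the minimum number of fully populated 128-channel modules for a channel count and splits modules as evenly as possible over the 4 symmetric mainframes. It assumes every module channel is used; the actual module counts and their mapping to mainframes are not given on the slide.

```python
CHANNELS_PER_MODULE = 128  # A3802 (DAC) and A3801 (ADC) are 128-channel modules


def min_modules(channels: int, per_module: int = CHANNELS_PER_MODULE) -> int:
    """Lower bound on modules needed, via integer ceiling division."""
    return (channels + per_module - 1) // per_module


def split_evenly(total: int, n: int) -> list[int]:
    """Spread `total` items over `n` systems so counts differ by at most one."""
    base, extra = divmod(total, n)
    return [base + 1 if i < extra else base for i in range(n)]


if __name__ == "__main__":
    dac = min_modules(3100)  # ~3100 threshold channels
    adc = min_modules(6400)  # ~6400 current-monitoring channels
    print("DAC modules:", dac, "per mainframe:", split_evenly(dac, 4))
    print("ADC modules:", adc, "per mainframe:", split_evenly(adc, 4))
```

With the slide's approximate counts this gives at least 25 DAC and 50 ADC modules, i.e. about a dozen ADC modules per mainframe.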

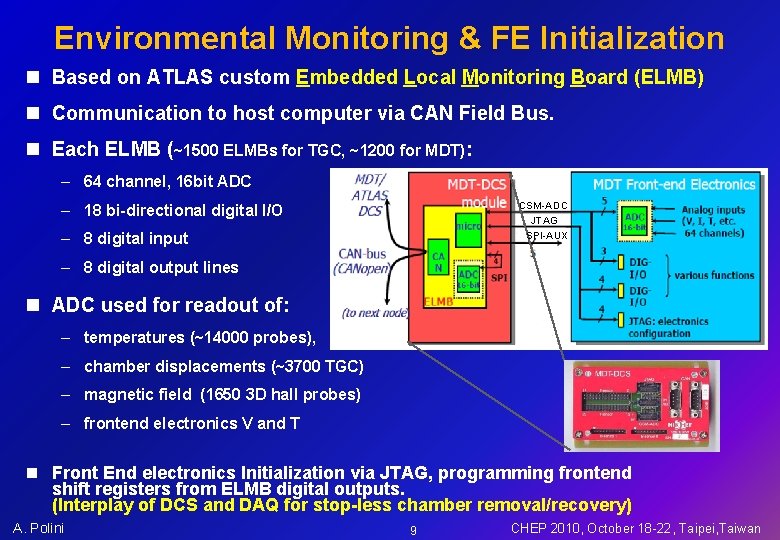

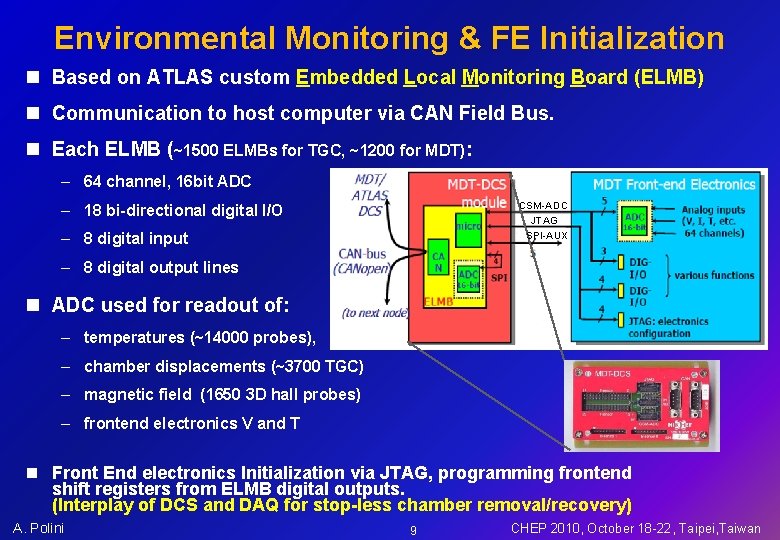

Environmental Monitoring & FE Initialization

- Based on the ATLAS custom Embedded Local Monitoring Board (ELMB)
- Communication with the host computer via CAN fieldbus
- Each ELMB (~1500 ELMBs for TGC, ~1200 for MDT) provides:
  - a 64-channel, 16-bit ADC
  - 18 bi-directional digital I/O lines
  - 8 digital input lines
  - 8 digital output lines
- The ADC is used to read out:
  - temperatures (~14000 probes)
  - chamber displacements (~3700 TGC)
  - the magnetic field (1650 3D Hall probes)
  - frontend electronics voltages and temperatures
- Frontend electronics initialization via JTAG, programming the frontend shift registers from the ELMB digital outputs (interplay of DCS and DAQ for stop-less chamber removal/recovery)

(Figure labels: CSM-ADC, JTAG, SPI-AUX.)
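Programming a frontend shift register from digital output lines amounts to presenting one data bit per clock edge. The sketch below simulates only that data-shift step with a hypothetical `ShiftRegister` class; it deliberately omits the IEEE 1149.1 TAP state machine (TMS sequencing, instruction register) that real JTAG uses, and it is not the MDT frontend protocol.

```python
class ShiftRegister:
    """Hypothetical frontend register: each clock shifts one bit in at index 0."""

    def __init__(self, length: int):
        self.bits = [0] * length

    def clock(self, data_in: int) -> int:
        """Shift `data_in` in; return the bit falling out of the far end."""
        out = self.bits[-1]
        self.bits = [data_in] + self.bits[:-1]
        return out


def program(reg: ShiftRegister, config: list[int]) -> list[int]:
    """Clock `config` in (last bit first) so that afterwards reg.bits == config.

    Returns the previous register contents in register order, which a
    controller could compare with what it expected (read-back check).
    """
    if len(config) != len(reg.bits):
        raise ValueError("configuration length must match register length")
    shifted_out = [reg.clock(bit) for bit in reversed(config)]
    return shifted_out[::-1]


if __name__ == "__main__":
    reg = ShiftRegister(3)
    print(program(reg, [1, 1, 0]), reg.bits)  # old contents, new contents
    print(program(reg, [0, 0, 1]), reg.bits)
```

Because the old contents fall out as the new ones go in, a single pass both loads the configuration and provides a read-back of what was there before.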

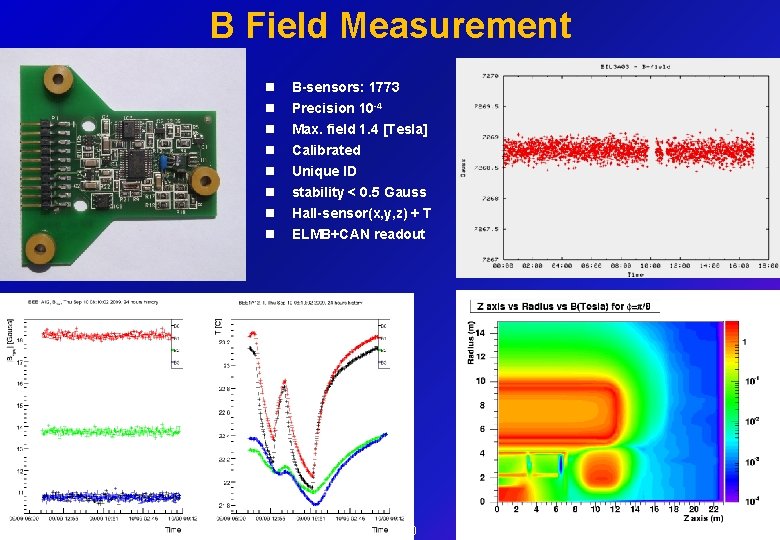

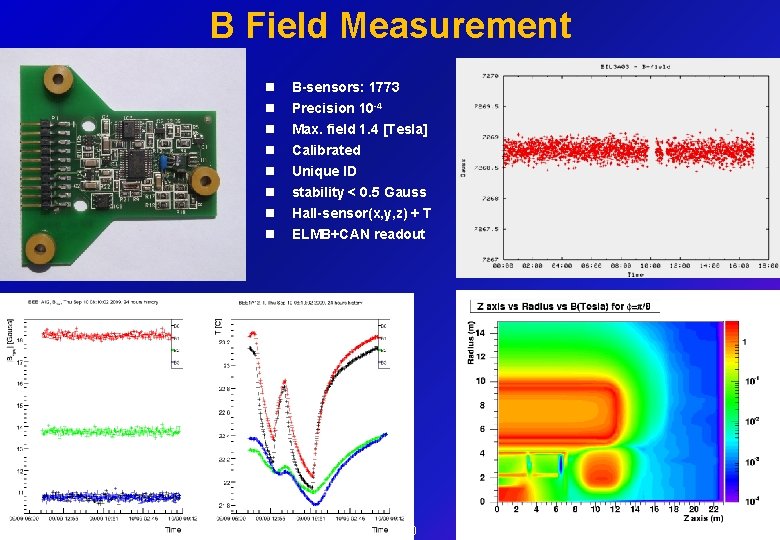

B Field Measurement

- B-sensors: 1773
- Precision: 10^-4
- Maximum field: 1.4 T
- Calibrated, with unique ID
- Stability < 0.5 Gauss
- Hall sensors (x, y, z) + temperature
- ELMB + CAN readout
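Each sensor reads three Hall components, so the field magnitude is |B| = sqrt(Bx² + By² + Bz²); note that a relative precision of 10^-4 at the 1.4 T maximum is 1.4 × 10^-4 T, i.e. 1.4 G. The sketch below computes |B| and checks repeated readings against the 0.5 G stability figure. Interpreting "stability" as the peak-to-peak spread of repeated readings is an assumption made here.

```python
import math

GAUSS_PER_TESLA = 10_000.0


def field_magnitude(bx: float, by: float, bz: float) -> float:
    """|B| in tesla from the three Hall-sensor components (in tesla)."""
    return math.sqrt(bx * bx + by * by + bz * bz)


def is_stable(readings_tesla: list[float], limit_gauss: float = 0.5) -> bool:
    """True if the peak-to-peak spread of the readings is below the limit."""
    spread_gauss = (max(readings_tesla) - min(readings_tesla)) * GAUSS_PER_TESLA
    return spread_gauss < limit_gauss


if __name__ == "__main__":
    print(field_magnitude(0.3, 0.4, 1.2))  # about 1.3 T
    print(is_stable([1.0, 1.00002]))       # 0.2 G spread: stable
    print(is_stable([1.0, 1.0001]))        # 1 G spread: not stable
```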

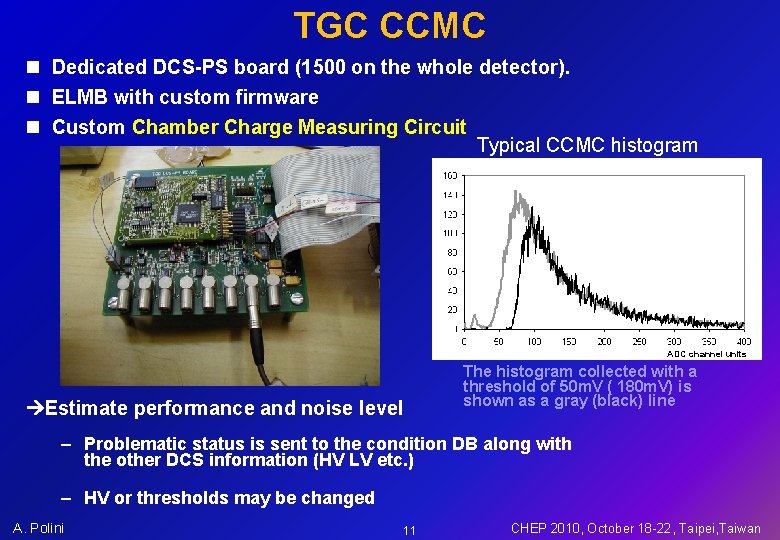

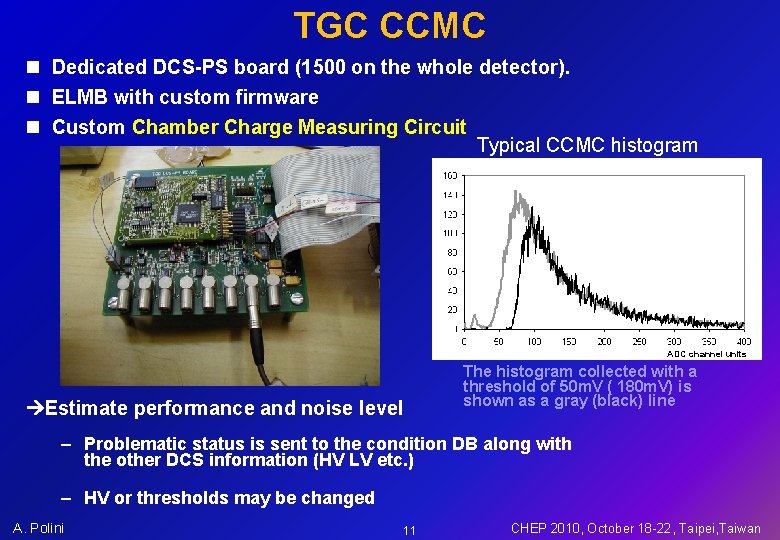

TGC CCMC

- Dedicated DCS-PS board (1500 on the whole detector)
- ELMB with custom firmware
- Custom Chamber Charge Measuring Circuit (CCMC), used to estimate chamber performance and noise level
- A problematic status is sent to the conditions DB along with the other DCS information (HV, LV, etc.)
- HV or thresholds may then be changed

(Figure: typical CCMC histogram in ADC channel units; the histogram collected with a threshold of 50 mV (180 mV) is shown as a gray (black) line.)
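As an illustration of how CCMC readings could be turned into a status, the sketch below histograms charge readings in ADC-channel units and flags a chamber when the mean charge leaves an acceptance window. The bin width, the window bounds and the status strings are hypothetical; the slide does not state the criterion actually used to mark a chamber as problematic.

```python
from collections import Counter
from statistics import mean


def ccmc_histogram(adc_values: list[int], bin_width: int = 10) -> Counter:
    """Histogram of charge readings (ADC-channel units), keyed by bin start."""
    return Counter((v // bin_width) * bin_width for v in adc_values)


def chamber_status(adc_values: list[int], low: float, high: float) -> str:
    """Hypothetical rule: 'PROBLEMATIC' if the mean charge is outside [low, high]."""
    if not adc_values:
        return "NO_DATA"
    m = mean(adc_values)
    return "OK" if low <= m <= high else "PROBLEMATIC"


if __name__ == "__main__":
    readings = [3, 12, 15, 27]
    print(ccmc_histogram(readings))
    print(chamber_status([100, 110, 120], low=50, high=150))
```

A status string like this is the kind of compact quantity that can be archived in the conditions DB next to HV and LV values.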

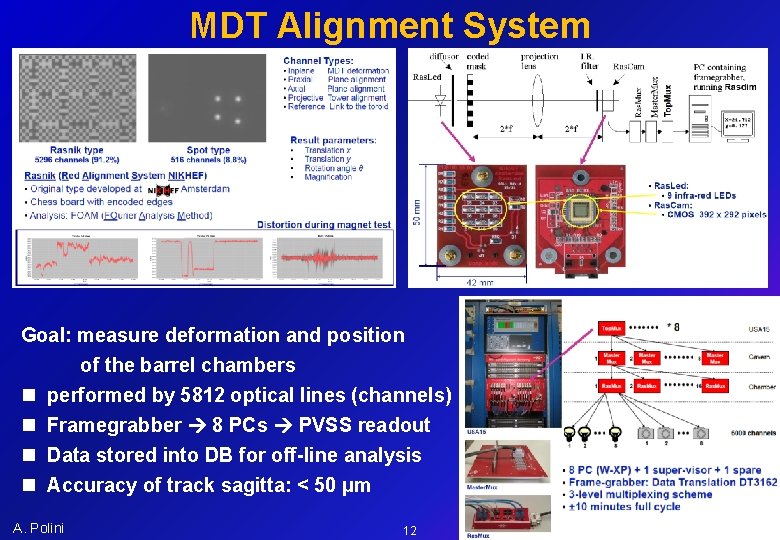

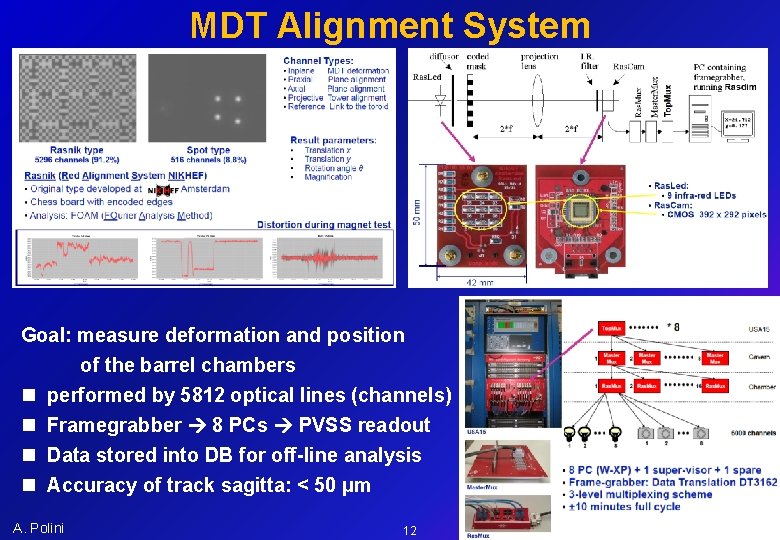

MDT Alignment System

Goal: measure the deformation and position of the barrel chambers
- performed by 5812 optical lines (channels)
- frame grabbers on 8 PCs, PVSS readout
- data stored in the DB for offline analysis
- accuracy on the track sagitta: < 50 μm
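The sagitta is the distance of the middle measurement from the straight chord joining the two outer ones, which is why alignment matters directly: an unknown shift of the middle chamber perpendicular to the chord adds one-to-one to the measured sagitta. The sketch below computes the sagitta from three points in the bending plane; treating the points as (x, y) pairs in a common length unit is an assumption for illustration.

```python
import math


def sagitta(p1, p2, p3) -> float:
    """Distance of the middle point p2 from the chord joining p1 and p3.

    Points are (x, y) pairs in the bending plane, e.g. hits in the inner,
    middle and outer stations, all in the same length unit.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    chord = math.hypot(x3 - x1, y3 - y1)
    # |cross product of (p3 - p1) and (p2 - p1)| divided by the chord length
    return abs((x3 - x1) * (y2 - y1) - (y3 - y1) * (x2 - x1)) / chord


if __name__ == "__main__":
    # Lengths in metres: a 50 micrometre offset of the middle point
    print(sagitta((0.0, 0.0), (5.0, 50e-6), (10.0, 0.0)))
```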

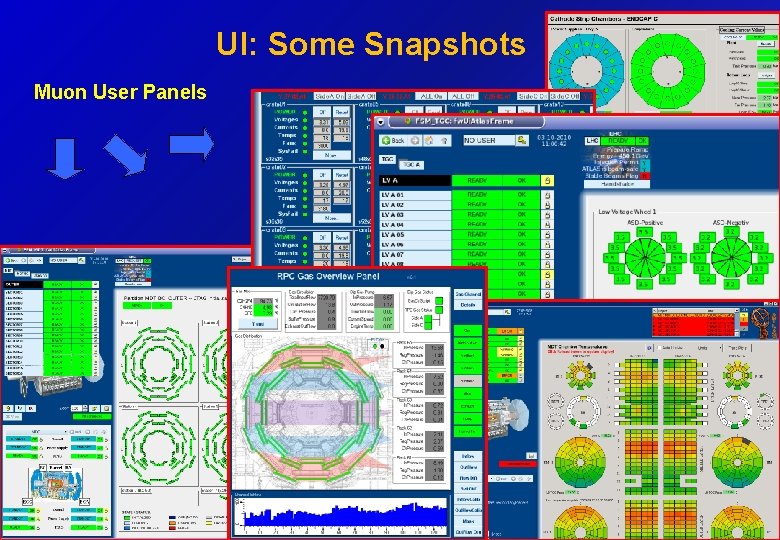

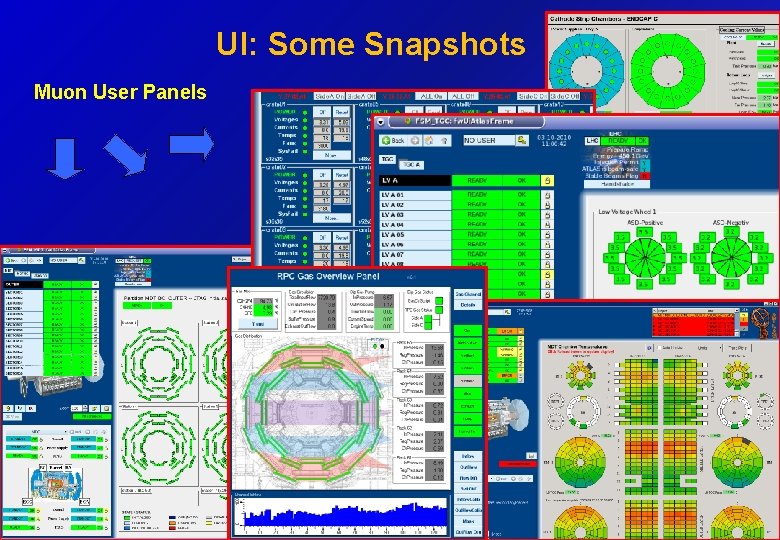

UI: Some Snapshots of the Muon User Panels

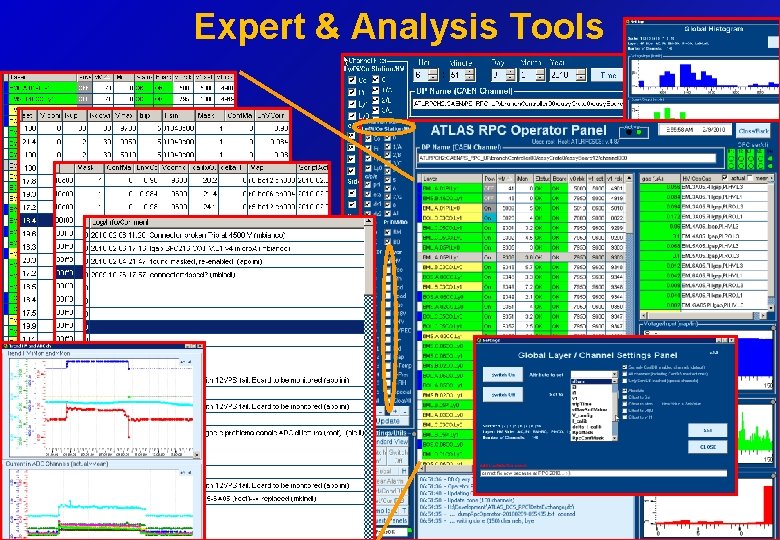

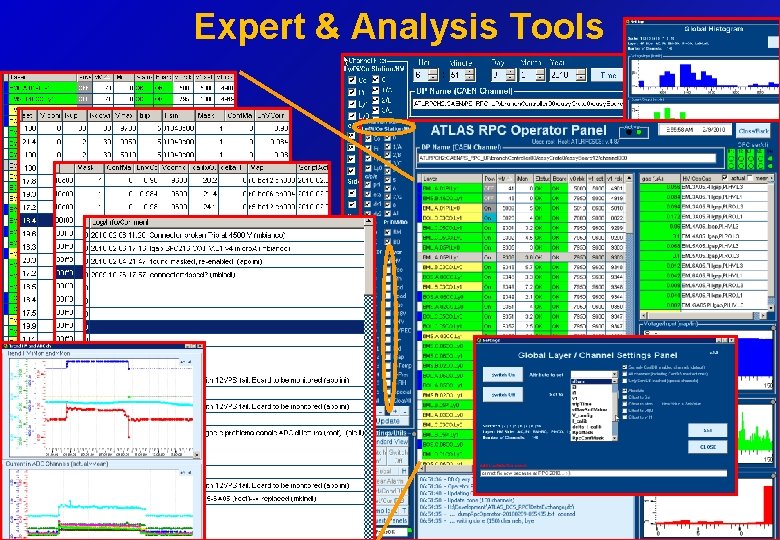

Expert & Analysis Tools

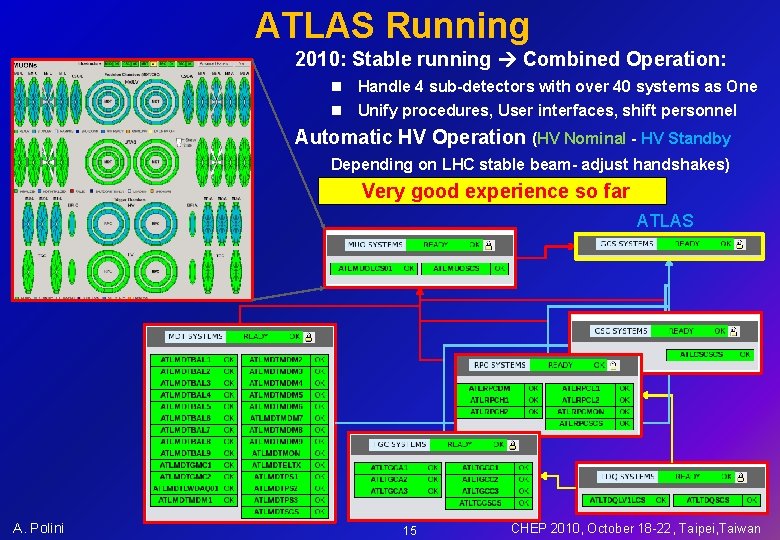

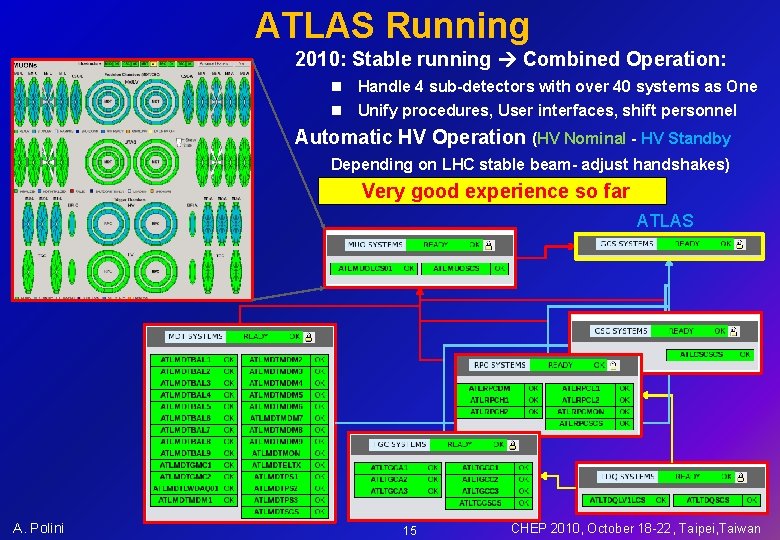

ATLAS Running 2010: Stable Running

Combined operation:
- Handle 4 sub-detectors with over 40 systems as one
- Unify procedures, user interfaces and shift personnel
- Automatic HV operation (HV nominal or HV standby, depending on LHC stable beams and the adjust handshakes)

Very good experience so far.
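Automatic HV operation reduces to choosing a target HV state from the LHC status and acting only when it differs from the current state. The sketch below is a simplified, hypothetical version: the two boolean inputs stand in for the stable-beams flag and the handshake outcome, and the action string is illustrative; the real logic runs inside the FSM with the ATLAS-LHC handshake protocol.

```python
from enum import Enum
from typing import Optional


class HVState(Enum):
    STANDBY = "HV_STANDBY"
    NOMINAL = "HV_NOMINAL"


def target_hv_state(stable_beams: bool, handshake_ok: bool) -> HVState:
    """Hypothetical rule: nominal HV only with stable beams and a completed handshake."""
    return HVState.NOMINAL if stable_beams and handshake_ok else HVState.STANDBY


def next_action(current: HVState, stable_beams: bool,
                handshake_ok: bool) -> Optional[str]:
    """Return a ramp request if the HV must change, otherwise None."""
    target = target_hv_state(stable_beams, handshake_ok)
    if target is current:
        return None
    return f"ramp to {target.value}"


if __name__ == "__main__":
    print(next_action(HVState.STANDBY, stable_beams=True, handshake_ok=True))
    print(next_action(HVState.NOMINAL, stable_beams=False, handshake_ok=True))
```

Returning `None` when nothing needs to change keeps the controller idempotent: it can be re-evaluated on every LHC status update without issuing redundant ramps.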

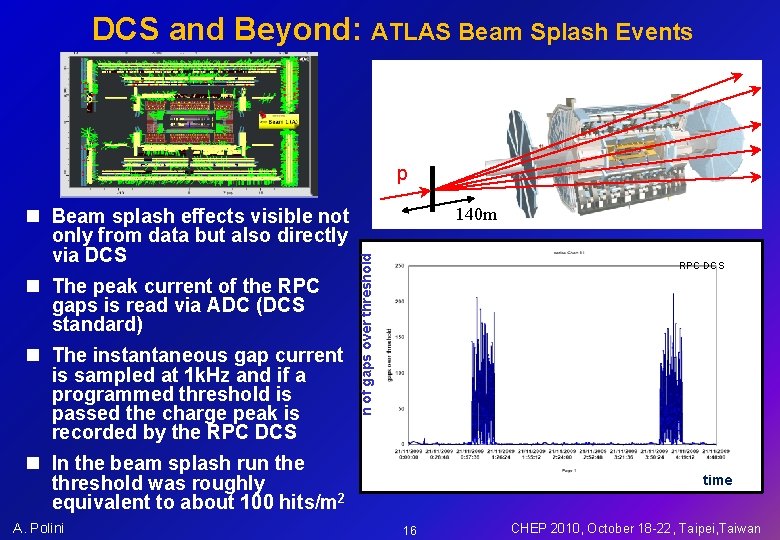

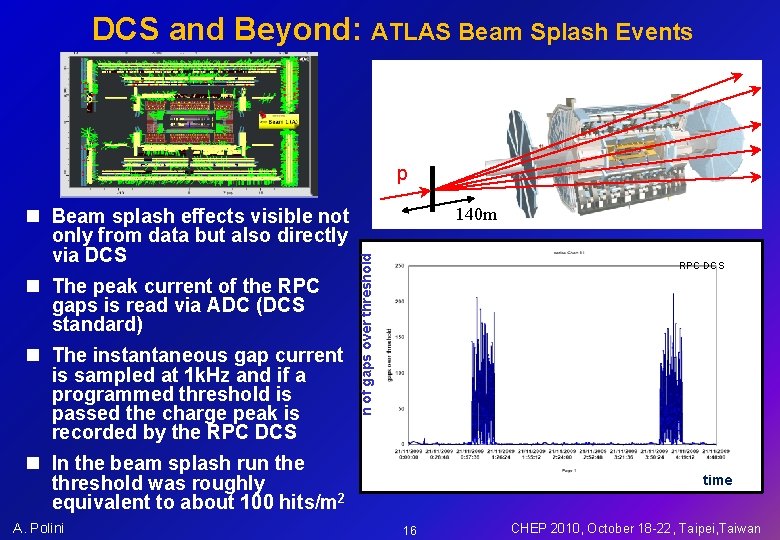

DCS and Beyond: ATLAS Beam Splash Events

- The peak current of the RPC gaps is read via ADC (DCS standard)
- The instantaneous gap current is sampled at 1 kHz; if a programmed threshold is passed, the charge peak is recorded by the RPC DCS
- Beam splash effects are visible not only in the data but also directly via the DCS
- In the beam splash run, the threshold was roughly equivalent to about 100 hits/m²

(Figure: beam splash geometry (140 m) and the number of RPC gaps over threshold versus time, from the RPC DCS.)
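The peak-recording scheme can be sketched as a scan over 1 kHz samples: an excursion starts when the current exceeds the programmed threshold, and its maximum is recorded when the current falls back. The function name, the (time, peak) output format and the handling of an excursion still open at the end of the buffer are assumptions; the ADC firmware's actual algorithm is not described on the slide.

```python
SAMPLE_RATE_HZ = 1000  # instantaneous gap current sampled at 1 kHz


def record_peaks(samples: list[float],
                 threshold: float) -> list[tuple[float, float]]:
    """Return (time_s, peak) for each excursion of the current above threshold.

    An excursion begins at the first sample above the threshold and ends at
    the first sample back at or below it; its maximum sample is recorded.
    """
    peaks = []
    best = None  # (sample index, value) of the running maximum, if above threshold
    for i, value in enumerate(samples):
        if value > threshold:
            if best is None or value > best[1]:
                best = (i, value)
        elif best is not None:
            peaks.append((best[0] / SAMPLE_RATE_HZ, best[1]))
            best = None
    if best is not None:  # excursion still open at the end of the buffer
        peaks.append((best[0] / SAMPLE_RATE_HZ, best[1]))
    return peaks


if __name__ == "__main__":
    print(record_peaks([1, 5, 9, 4, 1, 1, 7, 8], threshold=3))
```

Counting, per time bin, how many gaps report a peak gives exactly the kind of "gaps over threshold versus time" curve in which the beam splash shows up.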

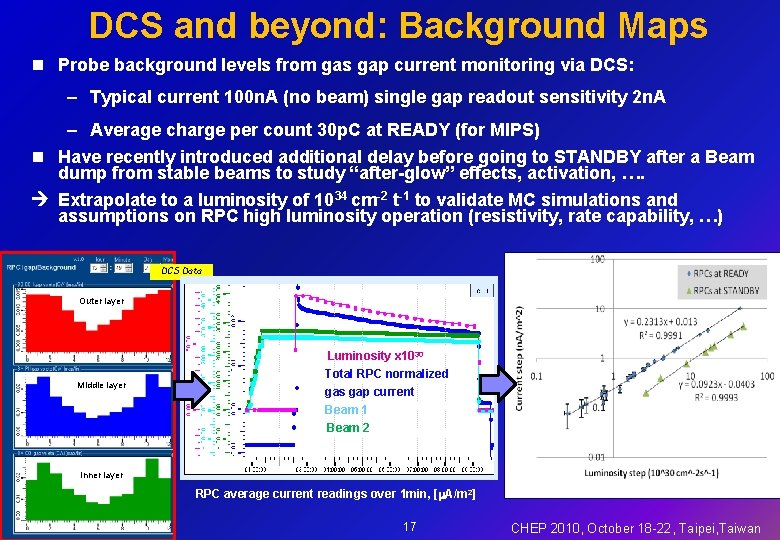

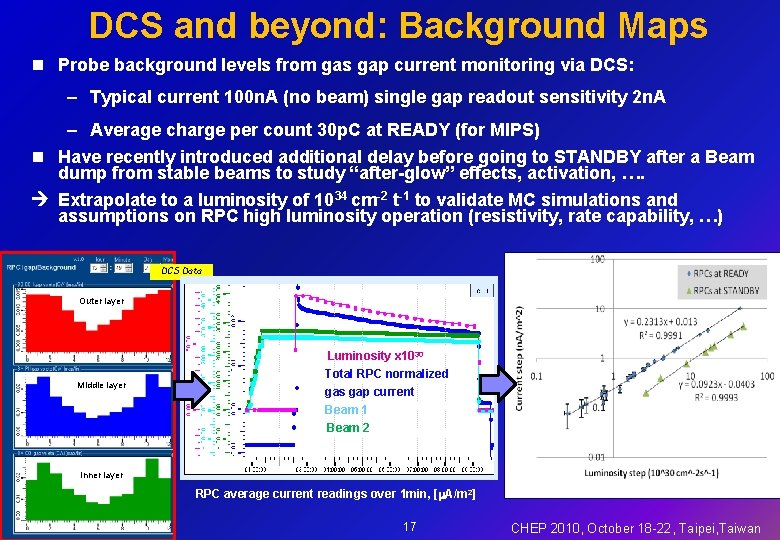

DCS and Beyond: Background Maps

- Probe background levels from gas gap current monitoring via DCS:
  - typical current 100 nA (no beam); single-gap readout sensitivity 2 nA
  - average charge per count 30 pC at READY (for MIPs)
- An additional delay was recently introduced before going to STANDBY after a beam dump from stable beams, to study "after-glow" effects, activation, etc.
- Extrapolate to a luminosity of 10^34 cm^-2 s^-1 to validate MC simulations and assumptions on RPC high-luminosity operation (resistivity, rate capability, …)

(Figure: DCS data; total RPC normalized gas gap current versus luminosity (×10^30), Beam 1 and Beam 2; maps of RPC average current readings over 1 min, per unit area, for the inner, middle and outer layers.)
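Two pieces of arithmetic underlie these background maps. Dividing a current by the average charge per count gives a counting rate, rate = I / q; with q ≈ 30 pC, the 2 nA single-gap sensitivity corresponds to roughly 67 counts/s. Extrapolating to 10^34 cm^-2 s^-1 then needs a model of current versus luminosity. The sketch below assumes a simple least-squares straight line, which is only an illustration: whether the response stays linear at high rate is precisely one of the assumptions the measurement is meant to test.

```python
CHARGE_PER_COUNT_C = 30e-12  # ~30 pC average charge per count at READY (MIPs)


def rate_from_current(current_a: float,
                      charge_per_count_c: float = CHARGE_PER_COUNT_C) -> float:
    """Counting rate in Hz implied by a (background) gap current: I / q."""
    return current_a / charge_per_count_c


def extrapolate_linear(lumis: list[float], currents: list[float],
                       target_lumi: float) -> float:
    """Least-squares line I = a + b * L through the points, evaluated at target_lumi."""
    n = len(lumis)
    mean_x = sum(lumis) / n
    mean_y = sum(currents) / n
    sxx = sum((x - mean_x) ** 2 for x in lumis)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(lumis, currents))
    slope = sxy / sxx
    return mean_y + slope * (target_lumi - mean_x)


if __name__ == "__main__":
    print(f"{rate_from_current(2e-9):.1f} Hz")  # readout sensitivity as a rate
    # Luminosity in units of 1e30 cm^-2 s^-1, so 1e34 corresponds to 10000
    print(extrapolate_linear([1.0, 2.0, 3.0], [10.0, 20.0, 30.0], 10000.0))
```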

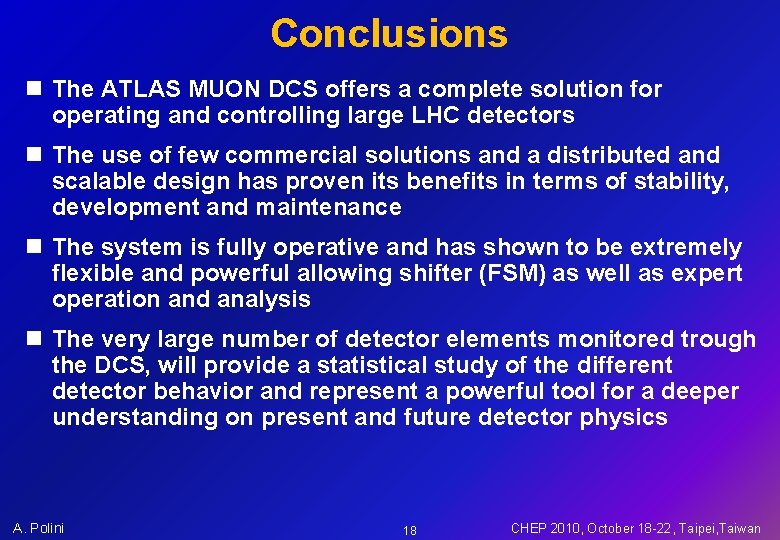

Conclusions

- The ATLAS Muon DCS offers a complete solution for operating and controlling large LHC detectors
- The use of a few commercial solutions and a distributed, scalable design has proven its benefits in terms of stability, development and maintenance
- The system is fully operational and has proven extremely flexible and powerful, allowing shifter (FSM) as well as expert operation and analysis
- The very large number of detector elements monitored through the DCS will allow a statistical study of the detectors' behavior and represents a powerful tool for a deeper understanding of present and future detector physics