Design and Modeling of Specialized Architectures: PhD Dissertation Defense

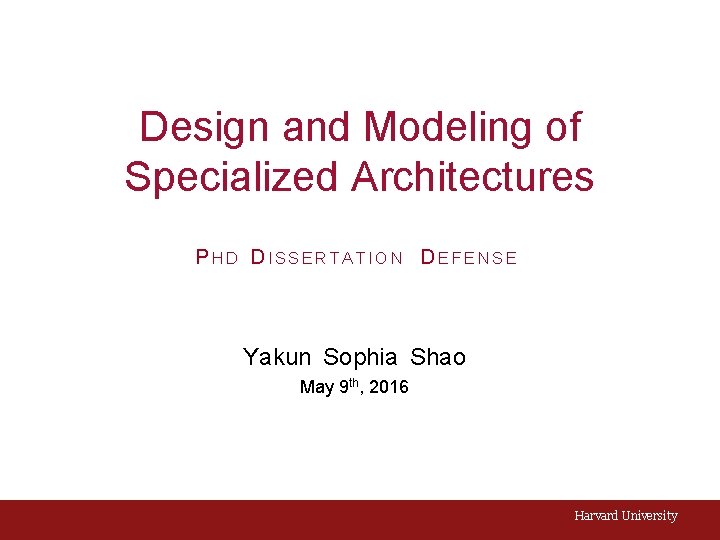

Design and Modeling of Specialized Architectures

PhD Dissertation Defense

Yakun Sophia Shao, May 9th, 2016, Harvard University

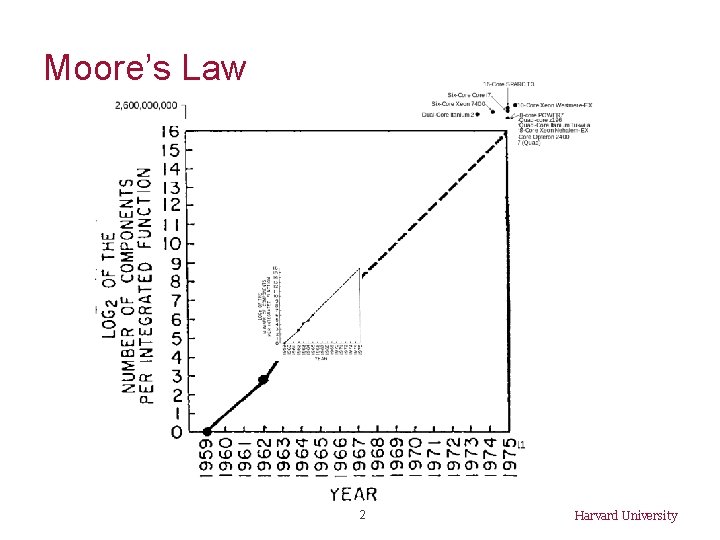

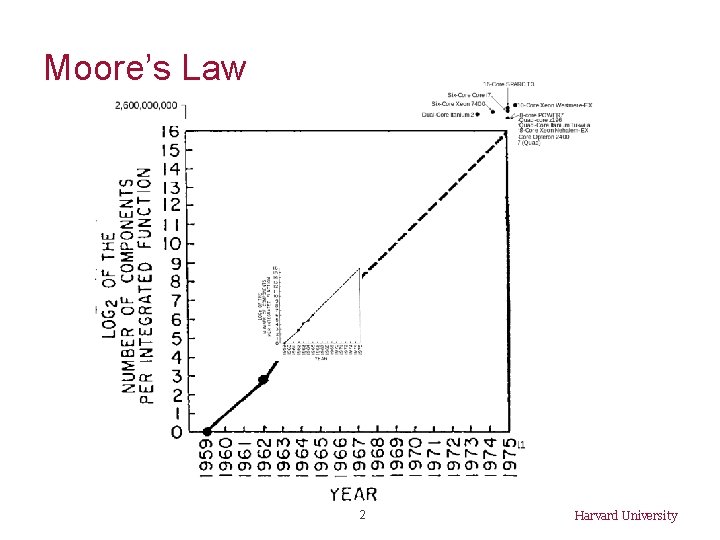

Moore’s Law

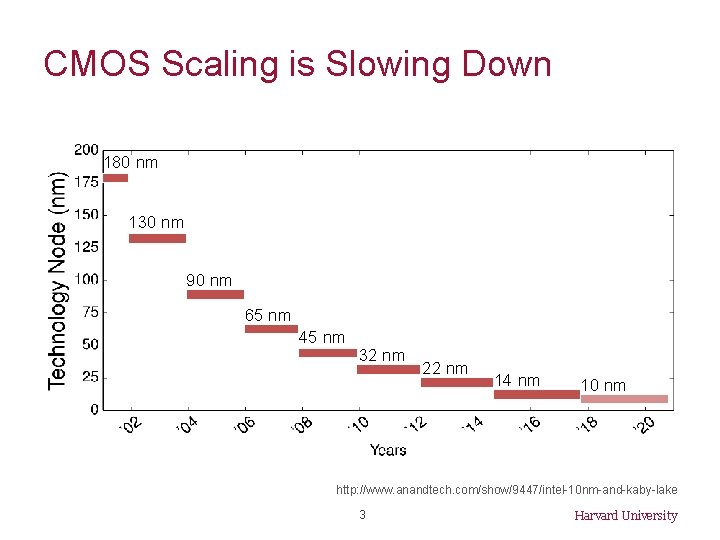

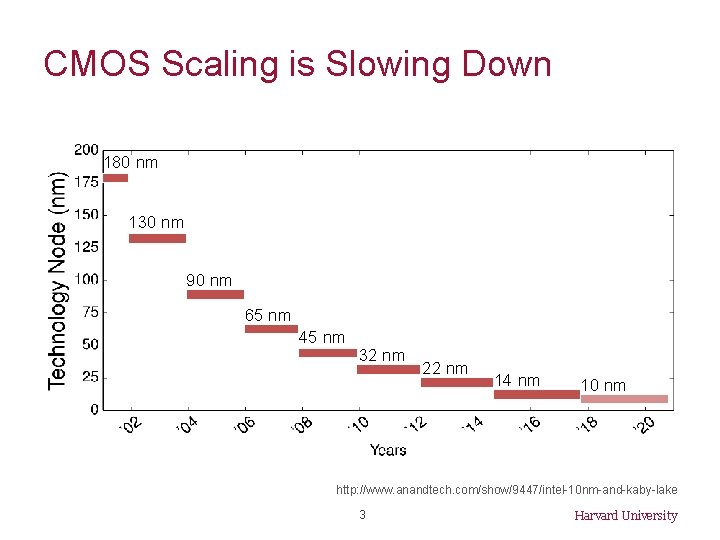

CMOS Scaling is Slowing Down

Process nodes shown: 180 nm, 130 nm, 90 nm, 65 nm, 45 nm, 32 nm, 22 nm, 14 nm, 10 nm.

Source: http://www.anandtech.com/show/9447/intel-10nm-and-kaby-lake

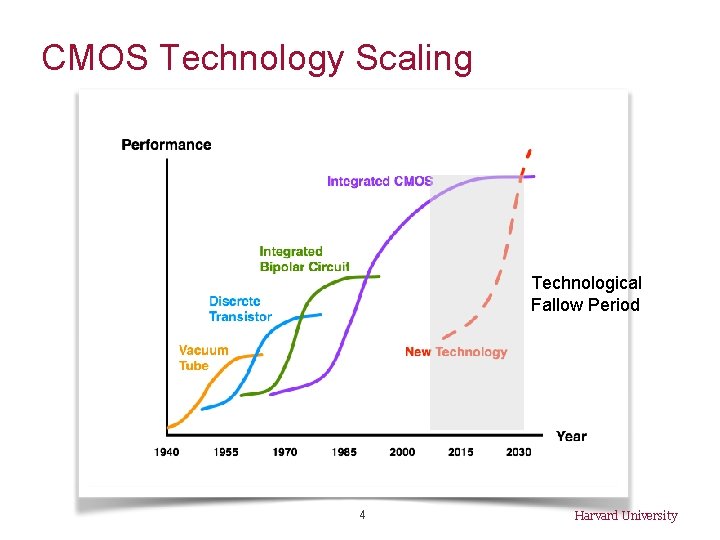

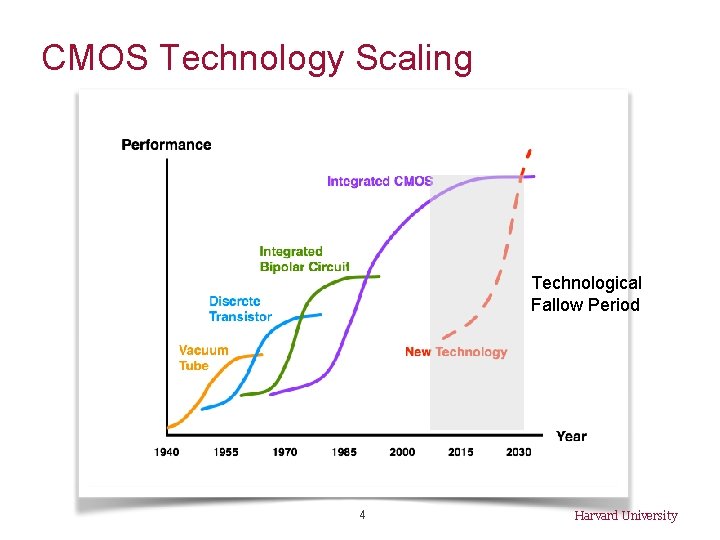

CMOS Technology Scaling: Technological Fallow Period

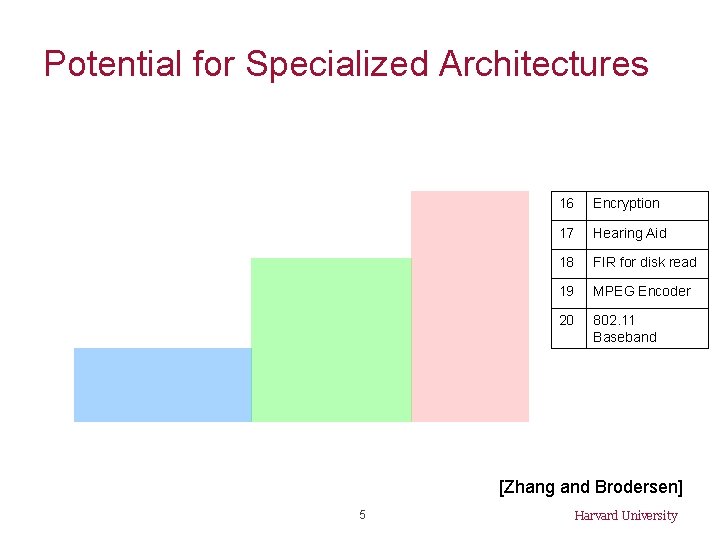

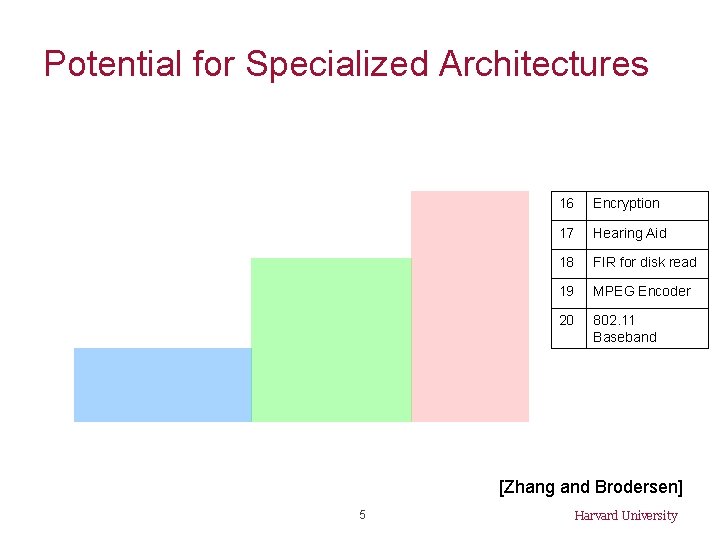

Potential for Specialized Architectures

Figure labels: 16 Encryption, 17 Hearing Aid, 18 FIR for disk read, 19 MPEG Encoder, 20 802.11 Baseband [Zhang and Brodersen]

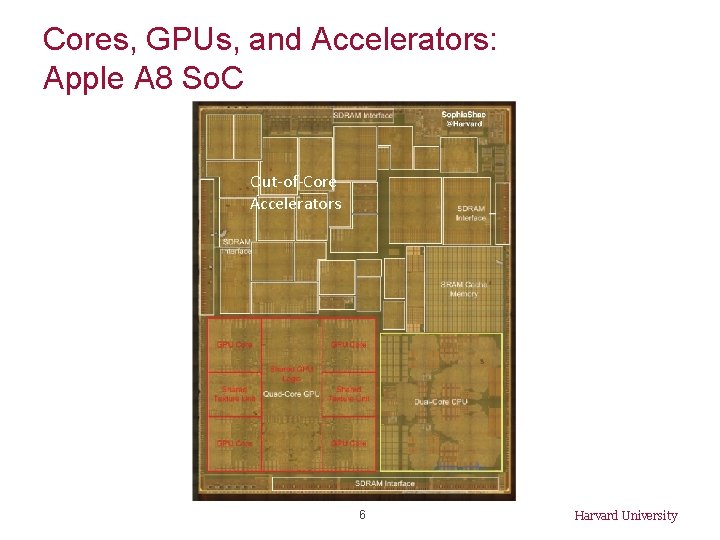

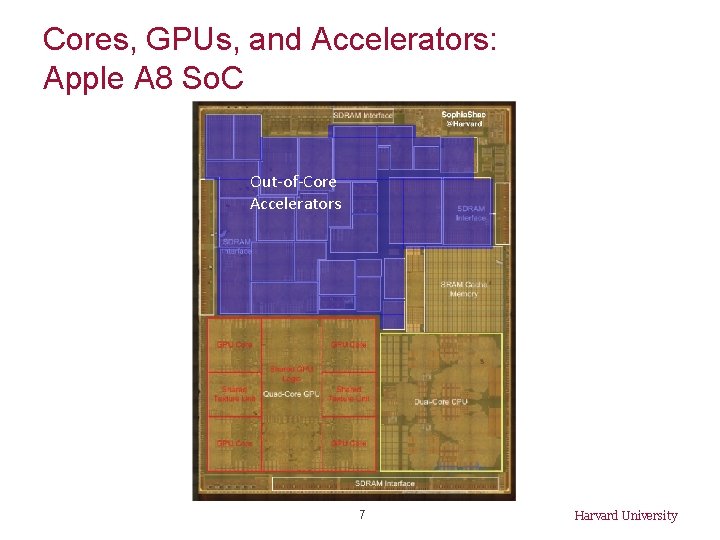

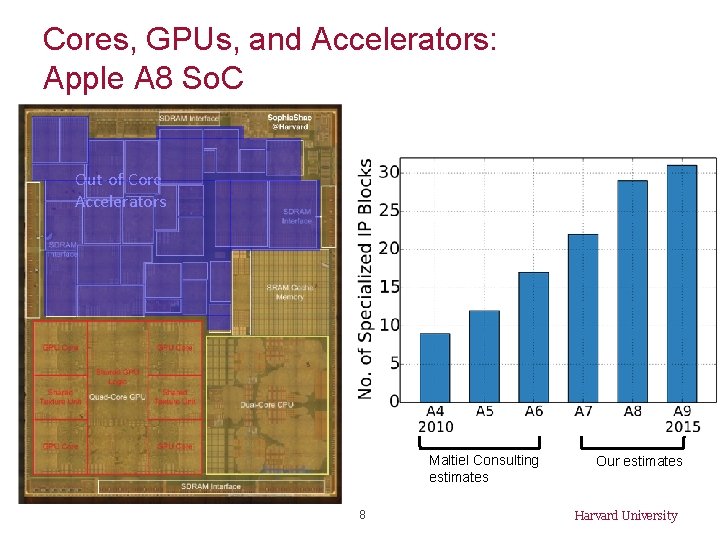

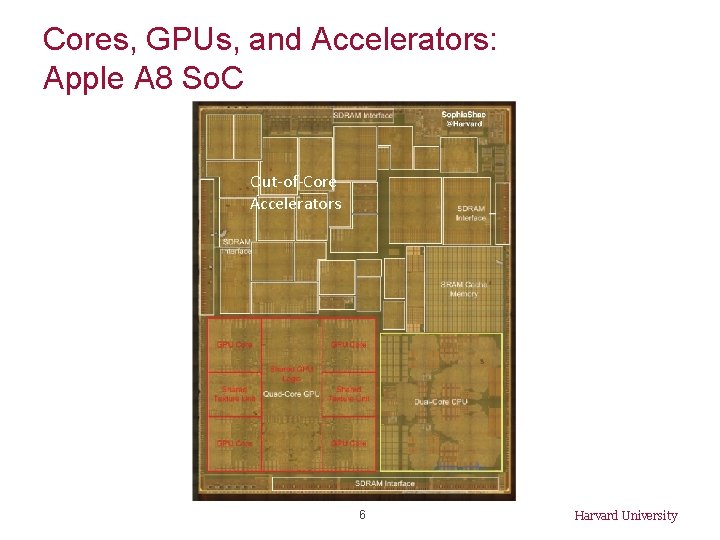

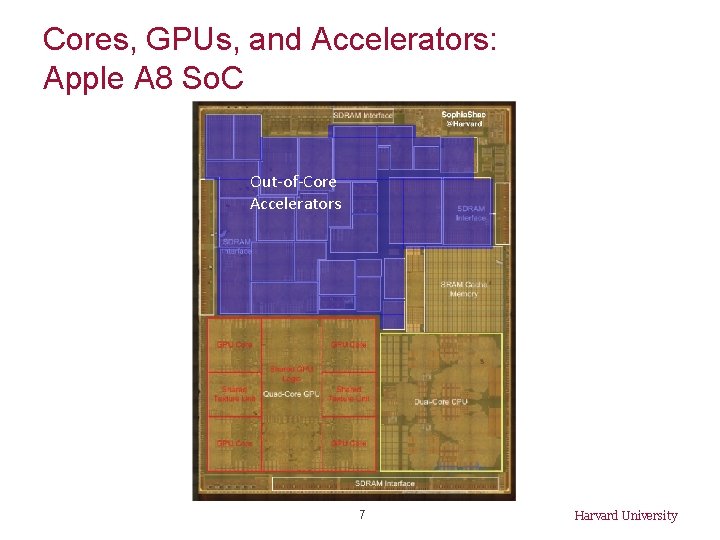

Cores, GPUs, and Accelerators: Apple A8 SoC

Out-of-Core Accelerators

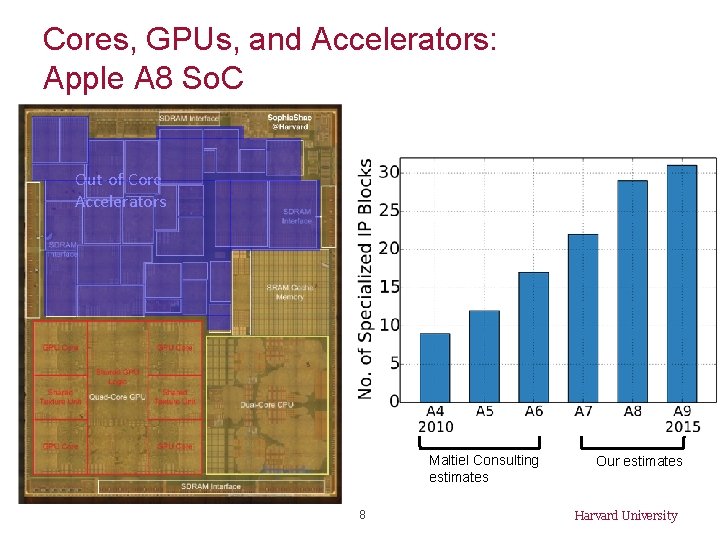

Cores, GPUs, and Accelerators: Apple A8 SoC

Out-of-Core Accelerators

Figure labels: Maltiel Consulting estimates; Our estimates

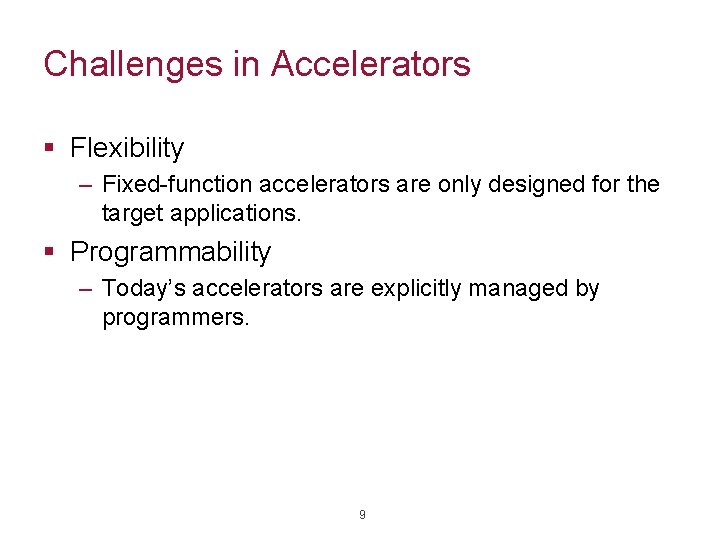

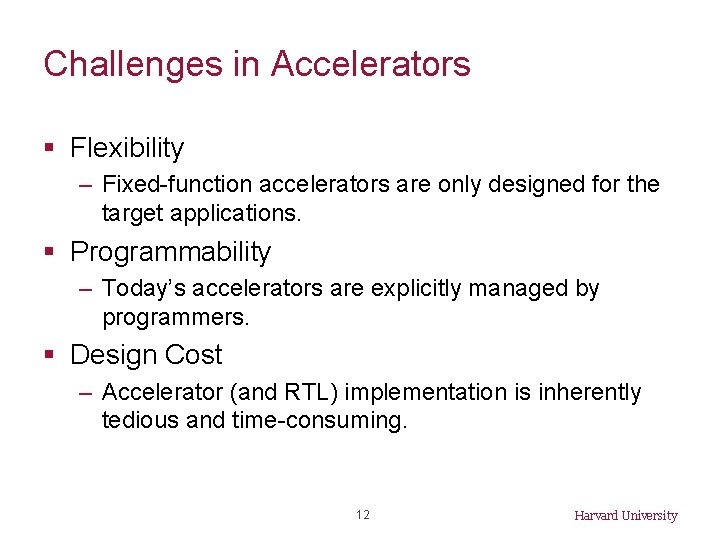

Challenges in Accelerators

- Flexibility: Fixed-function accelerators are designed only for their target applications.
- Programmability: Today’s accelerators are explicitly managed by programmers.

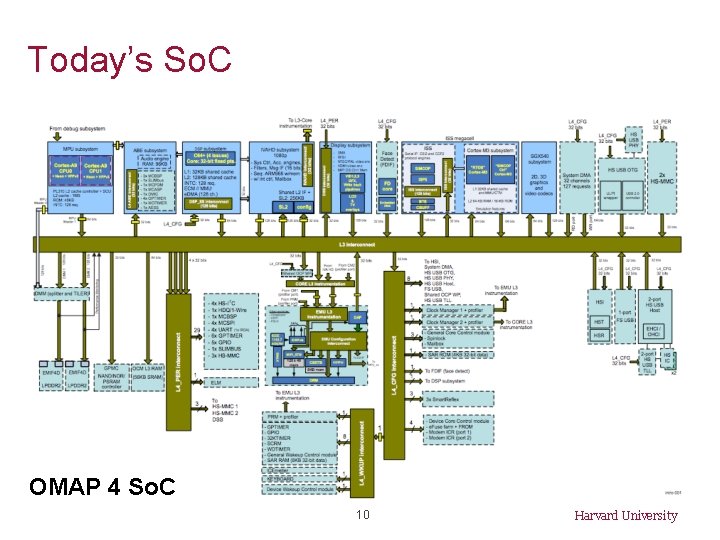

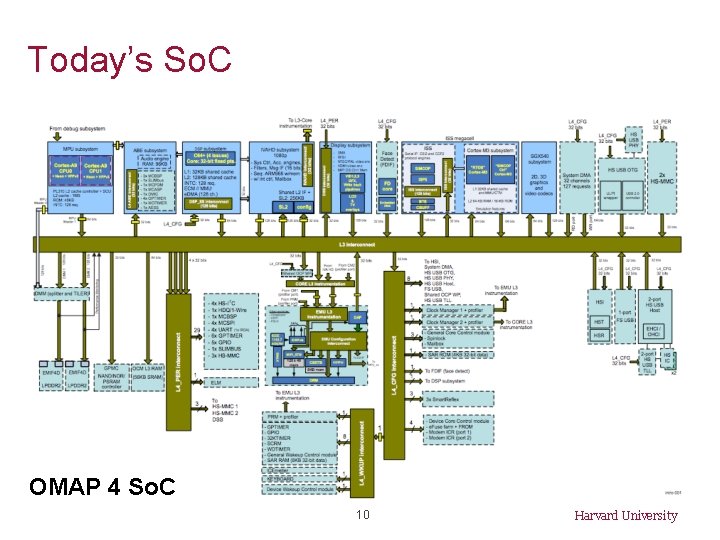

Today’s SoC: OMAP 4 SoC

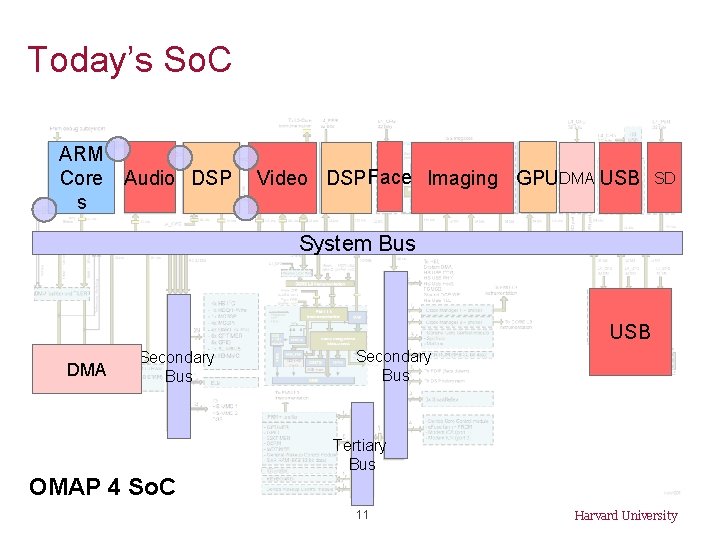

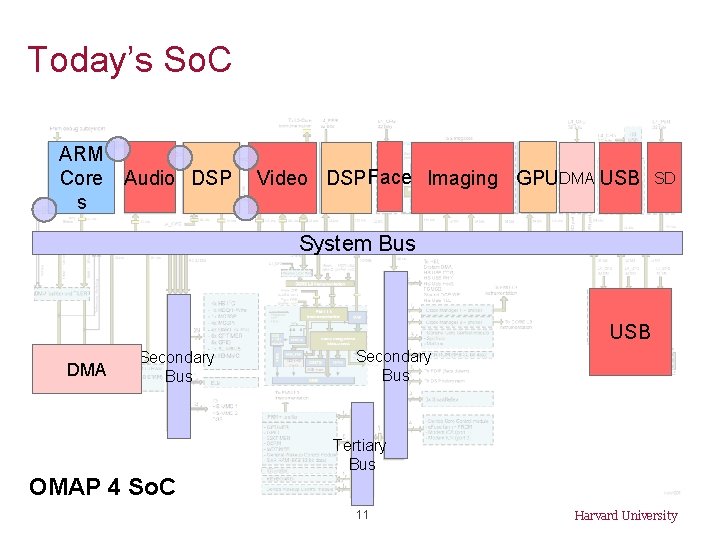

Today’s SoC: OMAP 4 SoC

Block diagram labels: ARM Cores, Audio DSP, Video DSP, Face Imaging, GPU, DMA, USB, SD, System Bus, Secondary Bus, Tertiary Bus.

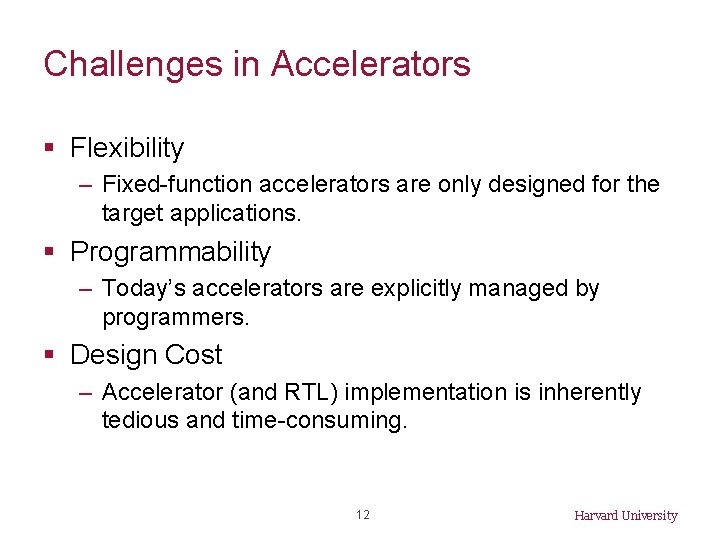

Challenges in Accelerators

- Flexibility: Fixed-function accelerators are designed only for their target applications.
- Programmability: Today’s accelerators are explicitly managed by programmers.
- Design Cost: Accelerator (and RTL) implementation is inherently tedious and time-consuming.

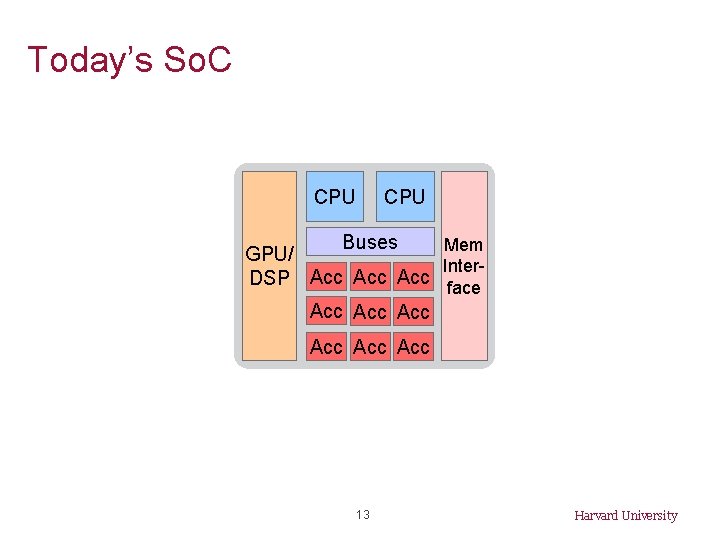

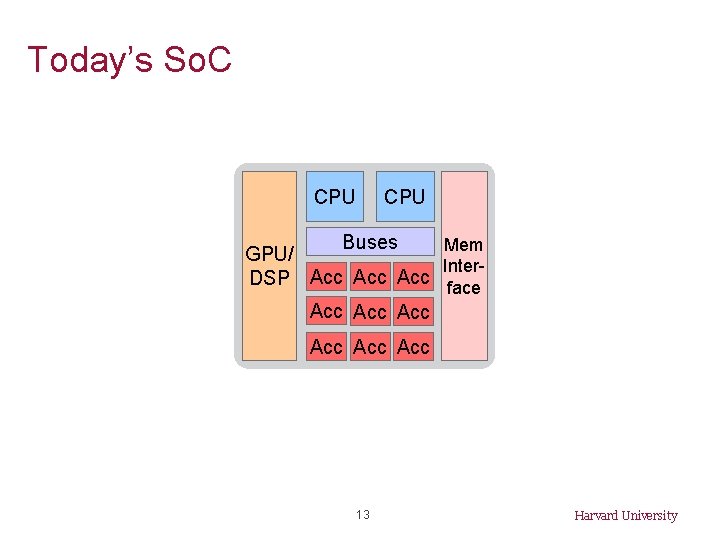

Today’s SoC

Block diagram labels: CPU, GPU/DSP, Buses, Mem Interface, and several accelerator blocks (Acc).

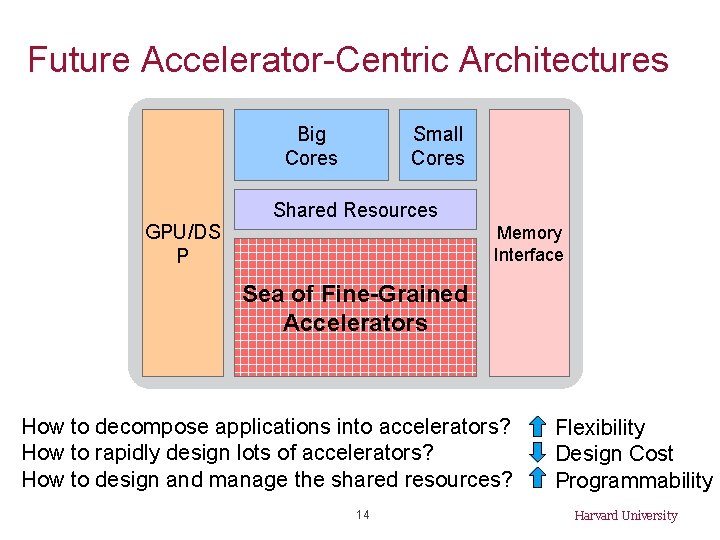

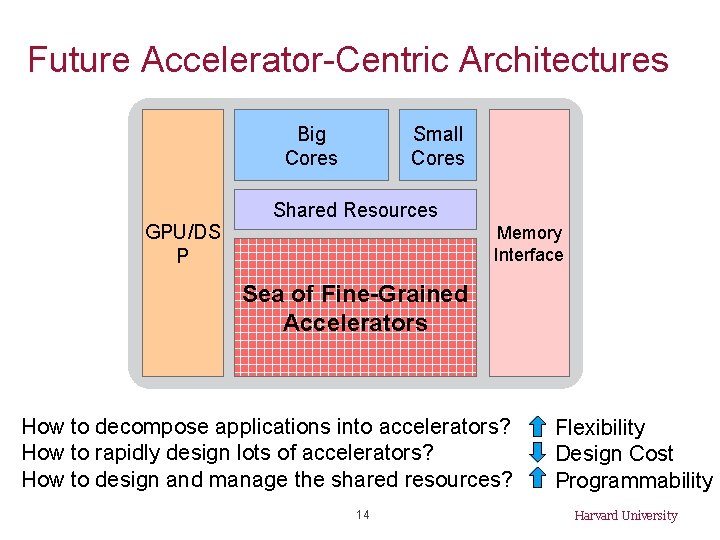

Future Accelerator-Centric Architectures

Block diagram labels: Big Cores, GPU/DSP, Small Cores, Shared Resources, Memory Interface, Sea of Fine-Grained Accelerators.

- How to decompose applications into accelerators?
- How to rapidly design lots of accelerators?
- How to design and manage the shared resources?

Challenges: Flexibility, Design Cost, Programmability.

![Contributions](https://slidetodoc.com/presentation_image_h/c65a89e18a79dab436c4854d150f21d9/image-15.jpg)

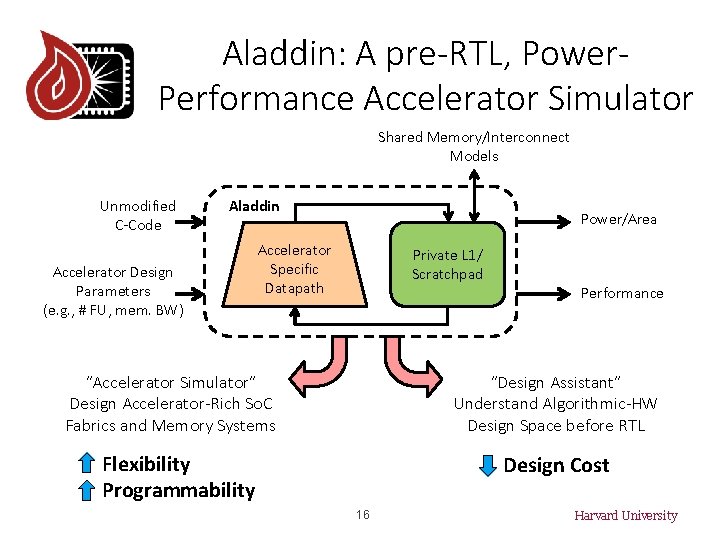

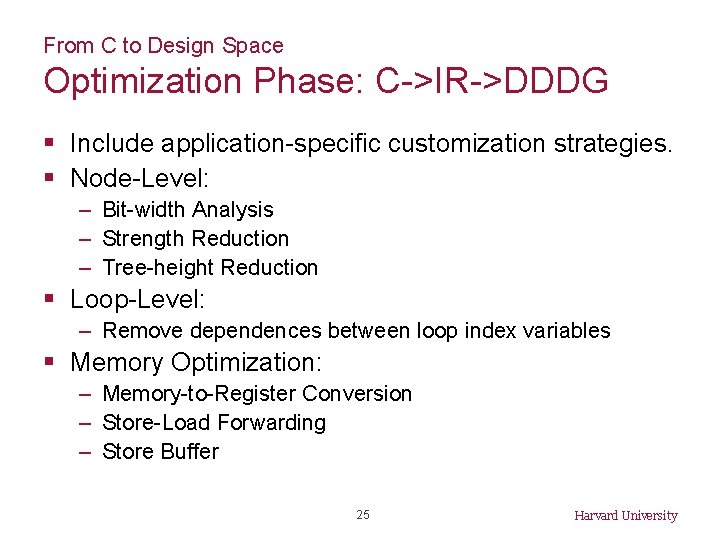

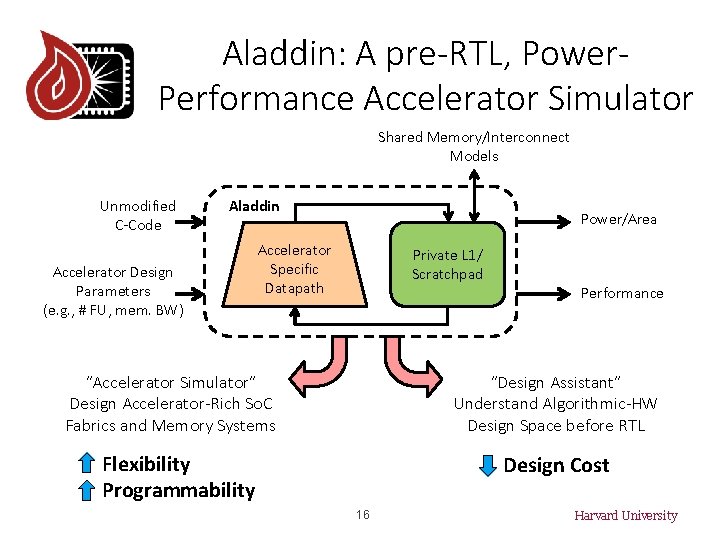

Contributions

- WIICA: Accelerator Workload Characterization [ISPASS’13]
- MachSuite: Accelerator Benchmark Suite [IISWC’14]
- Aladdin: Accelerator Pre-RTL, Power-Performance Simulator [ISCA’14, TopPicks’15]
- Accelerator Design w/ High-Level Synthesis [ISLPED’13_1]
- Research Infrastructures for Hardware Accelerators [Synthesis Lecture’15]
- Accelerator-System Co-Design [Under Review]
- Instruction-Level Energy Model for Xeon Phi [ISLPED’13_2]

Block diagram labels: Big Cores, GPU/DSP, Small Cores, Shared Resources, Memory Interface, Sea of Fine-Grained Accelerators.

Aladdin: A Pre-RTL, Power-Performance Accelerator Simulator

- Inputs: unmodified C code; accelerator design parameters (e.g., # FU, mem. BW).
- Outputs: power/area and performance.
- “Accelerator Simulator”: design accelerator-rich SoC fabrics and memory systems.
- “Design Assistant”: understand the algorithmic-HW design space before RTL.

Block diagram labels: Shared Memory/Interconnect Models, Accelerator-Specific Datapath, Private L1/Scratchpad.

Challenges: Flexibility, Programmability, Design Cost.

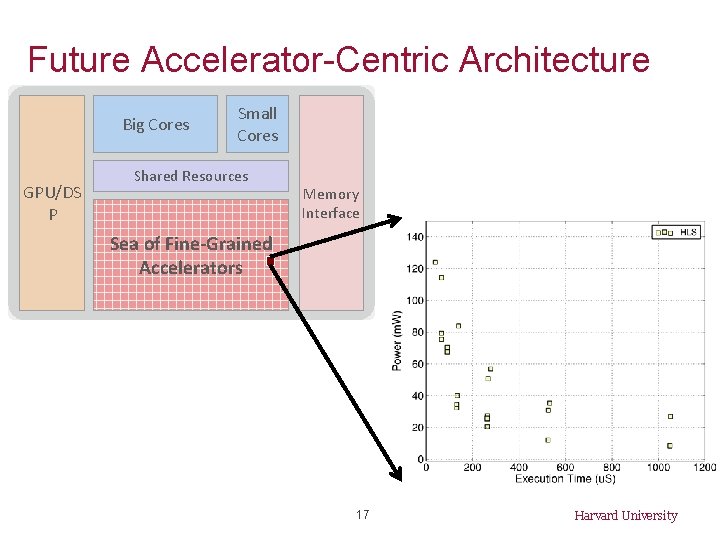

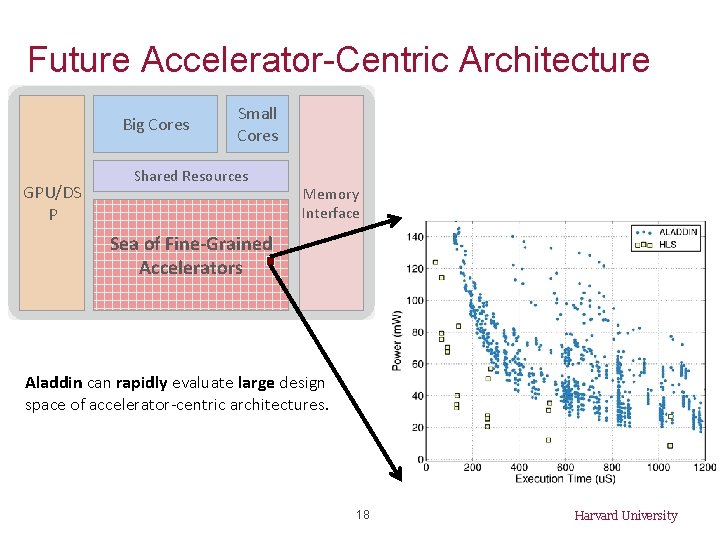

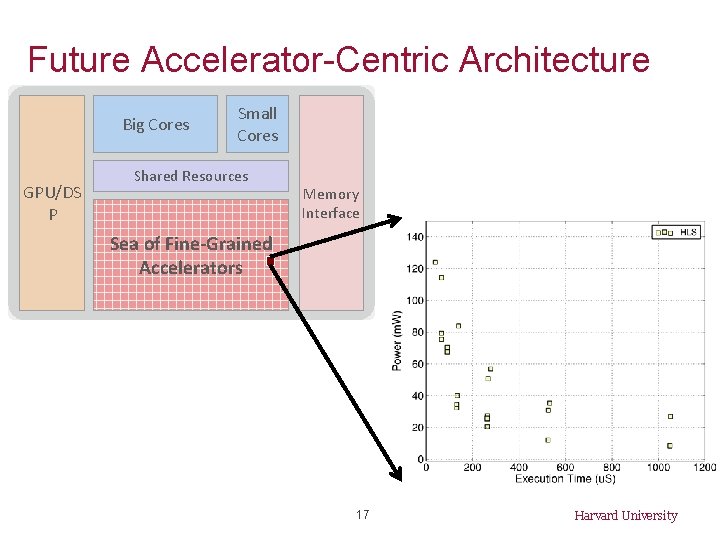

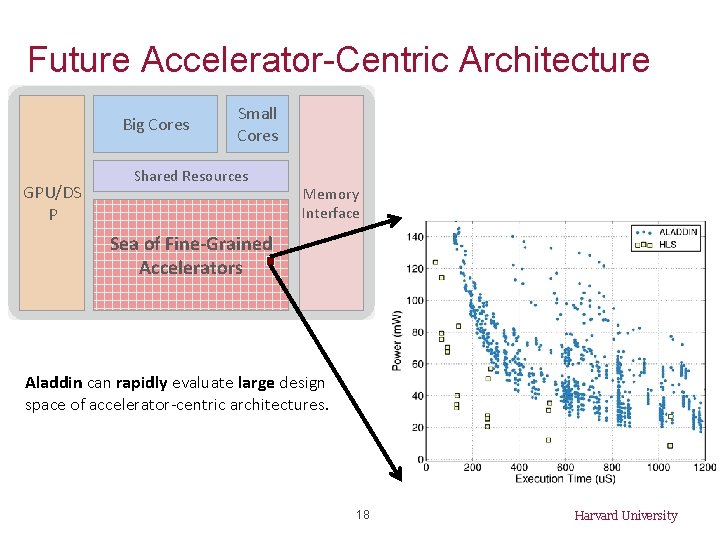

Future Accelerator-Centric Architecture

Block diagram labels: Big Cores, GPU/DSP, Small Cores, Shared Resources, Memory Interface, Sea of Fine-Grained Accelerators.

Aladdin can rapidly evaluate the large design space of accelerator-centric architectures.

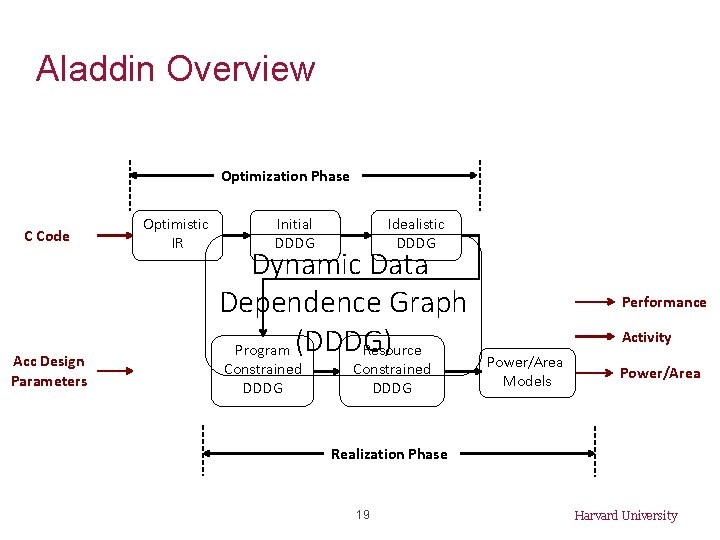

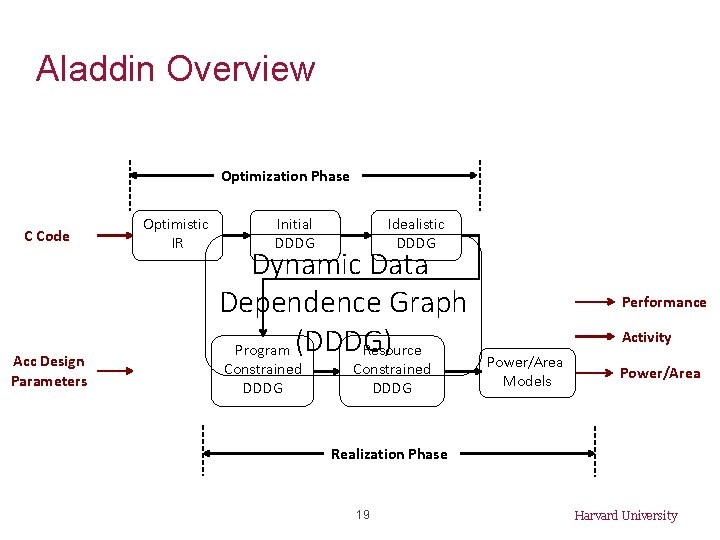

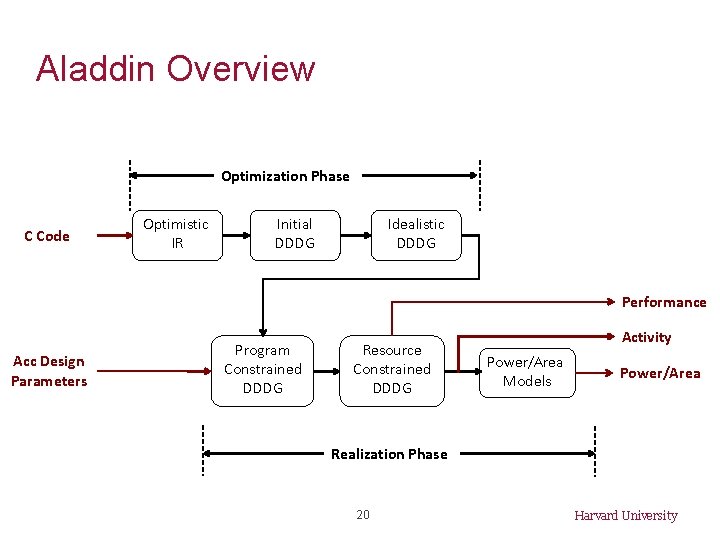

Aladdin Overview

- Inputs: C code; accelerator design parameters.
- Optimization Phase: C Code -> Optimistic IR -> Initial DDDG -> Idealistic DDDG.
- Realization Phase: Idealistic DDDG -> Program-Constrained DDDG -> Resource-Constrained DDDG; activity feeds the Power/Area Models.
- Outputs: performance; power/area.

DDDG: Dynamic Data Dependence Graph.

![From C to Design Space: C code](https://slidetodoc.com/presentation_image_h/c65a89e18a79dab436c4854d150f21d9/image-21.jpg)

From C to Design Space

C Code: `for(i=0; i<N; ++i) c[i] = a[i] + b[i];`

![From C to Design Space: IR dynamic trace](https://slidetodoc.com/presentation_image_h/c65a89e18a79dab436c4854d150f21d9/image-22.jpg)

From C to Design Space: IR Dynamic Trace

C Code: `for(i=0; i<N; ++i) c[i] = a[i] + b[i];`

IR Dynamic Trace:

- `0. r0 = 0 // i = 0`
- `1. r4 = load(r0 + r1) // load a[i]`
- `2. r5 = load(r0 + r2) // load b[i]`
- `3. r6 = r4 + r5`
- `4. store(r0 + r3, r6) // store c[i]`
- `5. r0 = r0 + 1 // ++i`
- `6. r4 = load(r0 + r1) // load a[i]`
- `7. r5 = load(r0 + r2) // load b[i]`
- `8. r6 = r4 + r5`
- `9. store(r0 + r3, r6) // store c[i]`
- `10. r0 = r0 + 1 // ++i`
- …
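A dynamic trace contains no branches: every executed iteration simply shows up as another run of operations. As a minimal sketch of that idea (not Aladdin's actual trace format or tooling), the hypothetical helper `emit_vadd_trace` below prints the trace of the vector-add loop in the slide's notation. It assumes, reading the slide's comments, that `r0` holds `i` and `r1`, `r2`, `r3` hold the bases of `a`, `b`, `c`. It groups each index update with the iteration that consumes it, so iteration k occupies entries 5k to 5k+4.

```c
#include <stdio.h>

/* Hypothetical helper (not part of Aladdin): prints the dynamic trace of
 * `iters` iterations of c[i] = a[i] + b[i] and returns the number of
 * trace entries written. Assumed registers: r0 = i, r1/r2/r3 = a/b/c. */
int emit_vadd_trace(FILE *out, int iters) {
    int id = 0;
    for (int k = 0; k < iters; ++k) {
        /* The first iteration initializes i; later ones increment it. */
        if (k == 0)
            fprintf(out, "%d. r0 = 0\n", id);
        else
            fprintf(out, "%d. r0 = r0 + 1\n", id);
        id++;
        fprintf(out, "%d. r4 = load(r0 + r1)\n", id++);  /* load a[i]  */
        fprintf(out, "%d. r5 = load(r0 + r2)\n", id++);  /* load b[i]  */
        fprintf(out, "%d. r6 = r4 + r5\n", id++);        /* add        */
        fprintf(out, "%d. store(r0 + r3, r6)\n", id++);  /* store c[i] */
    }
    return id;
}
```

Calling `emit_vadd_trace(stdout, 2)` prints entries 0 through 9 of the trace shown on the slide.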

![From C to Design Space: initial DDDG](https://slidetodoc.com/presentation_image_h/c65a89e18a79dab436c4854d150f21d9/image-23.jpg)

From C to Design Space: Initial DDDG

C code and IR trace: the same vector-add loop and trace as on the IR Dynamic Trace slide.

Figure: the initial DDDG for three iterations. The index nodes 0. i=0, 5. i++, and 10. i++ form a chain; in each iteration, ld a and ld b feed +, which feeds st c (nodes 1 to 4, 6 to 9, and 11 to 14).

![From C to Design Space: idealistic DDDG](https://slidetodoc.com/presentation_image_h/c65a89e18a79dab436c4854d150f21d9/image-24.jpg)

From C to Design Space: Idealistic DDDG

C code and IR trace: the same vector-add loop and trace as on the IR Dynamic Trace slide.

Figure: the idealistic DDDG for three iterations. The nodes of each iteration (0 to 4, 5 to 9, and 10 to 14) are drawn side by side: i=0 or i++, then ld a and ld b, then +, then st c.
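The difference between the two graphs can be quantified by their depth, that is, the longest dependence chain. The sketch below is an illustration under stated assumptions, not Aladdin's implementation: `Dddg`, `build_vadd_dddg`, and `dddg_depth` are hypothetical names, every node has unit latency, and node ids follow trace order so that every edge points from a lower id to a higher one.

```c
#define MAX_ITERS 100
#define MAX_NODES (5 * MAX_ITERS)
#define MAX_EDGES (7 * MAX_ITERS)

/* Hypothetical edge-list DDDG; node ids are trace positions. */
typedef struct {
    int n_nodes, n_edges;
    int src[MAX_EDGES], dst[MAX_EDGES];
} Dddg;

static void add_edge(Dddg *g, int from, int to) {
    g->src[g->n_edges] = from;
    g->dst[g->n_edges] = to;
    g->n_edges++;
}

/* Builds the graph for `iters` iterations of c[i] = a[i] + b[i].
 * keep_index_chain != 0 keeps the i -> ++i edges (initial DDDG);
 * 0 drops them (idealistic DDDG). Returns 0, or -1 if out of range. */
int build_vadd_dddg(Dddg *g, int iters, int keep_index_chain) {
    if (iters < 1 || iters > MAX_ITERS) return -1;
    g->n_nodes = 5 * iters;
    g->n_edges = 0;
    for (int k = 0; k < iters; ++k) {
        int idx = 5 * k, lda = idx + 1, ldb = idx + 2;
        int add = idx + 3, st = idx + 4;
        if (keep_index_chain && k > 0) add_edge(g, idx - 5, idx);
        add_edge(g, idx, lda);   /* load addresses use i */
        add_edge(g, idx, ldb);
        add_edge(g, lda, add);   /* add consumes both loads */
        add_edge(g, ldb, add);
        add_edge(g, idx, st);    /* store address uses i */
        add_edge(g, add, st);    /* store data comes from the add */
    }
    return 0;
}

/* Longest dependence chain, counted in nodes. Relies on src < dst. */
int dddg_depth(const Dddg *g) {
    int level[MAX_NODES];
    int depth = 0;
    for (int v = 0; v < g->n_nodes; ++v) {
        level[v] = 1;
        for (int e = 0; e < g->n_edges; ++e)
            if (g->dst[e] == v && level[g->src[e]] + 1 > level[v])
                level[v] = level[g->src[e]] + 1;
        if (level[v] > depth) depth = level[v];
    }
    return depth;
}
```

For three iterations this model gives a depth of 6 with the index chain and 4 without it. With the chain the depth grows with the iteration count; without it the depth stays at 4.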

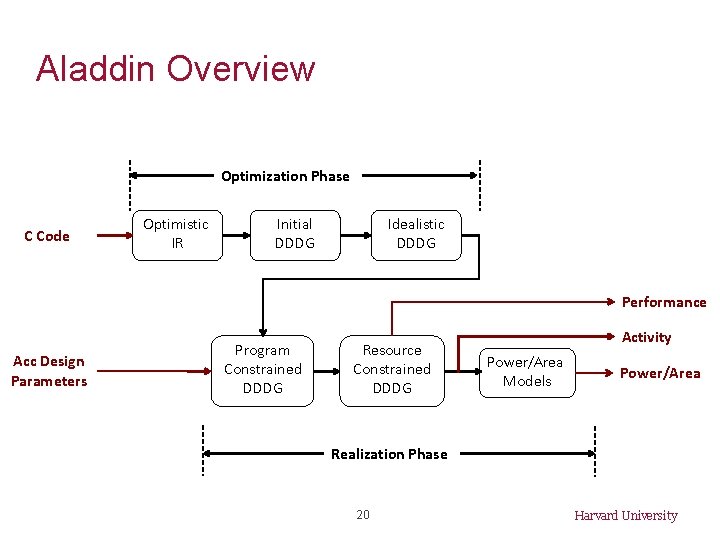

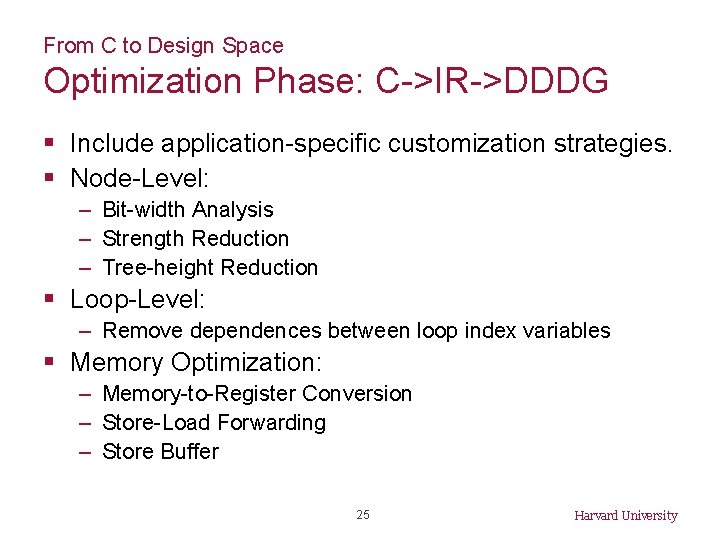

From C to Design Space

Optimization Phase: C->IR->DDDG

- Include application-specific customization strategies.
- Node-Level:
  - Bit-width Analysis
  - Strength Reduction
  - Tree-height Reduction
- Loop-Level:
  - Remove dependences between loop index variables
- Memory Optimization:
  - Memory-to-Register Conversion
  - Store-Load Forwarding
  - Store Buffer
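Tree-height reduction, one of the node-level optimizations listed above, rebalances a chain of associative operations into a tree so that fewer operations sit on the critical path. The sketch below only compares the depth of the two shapes for an n-operand sum; `chain_depth` and `tree_depth` are hypothetical names for this illustration and do not describe how Aladdin performs the transformation.

```c
/* Adder levels needed to sum n operands left to right:
 * ((x0 + x1) + x2) + ... needs n - 1 dependent additions. */
int chain_depth(int n) {
    return n > 1 ? n - 1 : 0;
}

/* Adder levels after rebalancing into a binary tree: each level
 * halves the number of partial sums, giving ceil(log2(n)) levels. */
int tree_depth(int n) {
    int depth = 0;
    while (n > 1) {
        n = (n + 1) / 2;  /* pairs are summed; an odd operand carries over */
        depth++;
    }
    return depth;
}
```

For eight operands the chain is 7 additions deep while the tree is 3 deep. Note that reassociation preserves results exactly for integers but can change rounding for floating-point values.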

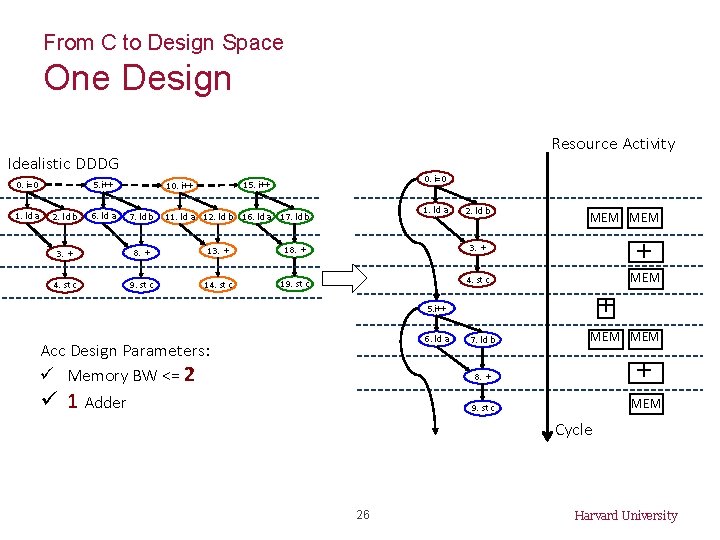

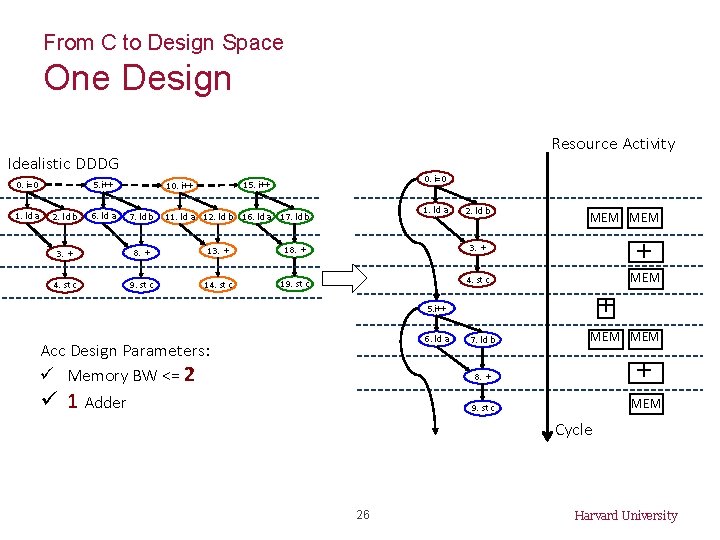

From C to Design Space: One Design

Acc Design Parameters: Memory BW <= 2; 1 Adder.

Figure: the idealistic DDDG (nodes 0 to 19: four iterations of i++, ld a, ld b, +, st c) is scheduled cycle by cycle under these parameters. A Resource Activity panel shows which MEM and + units are busy in each cycle.

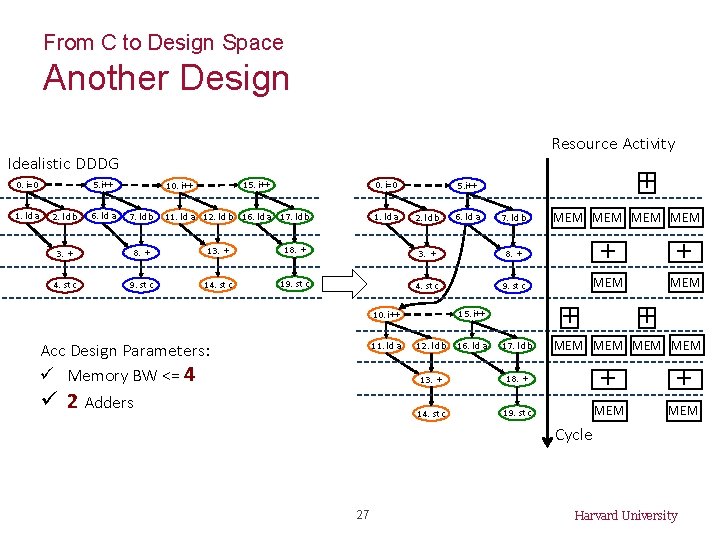

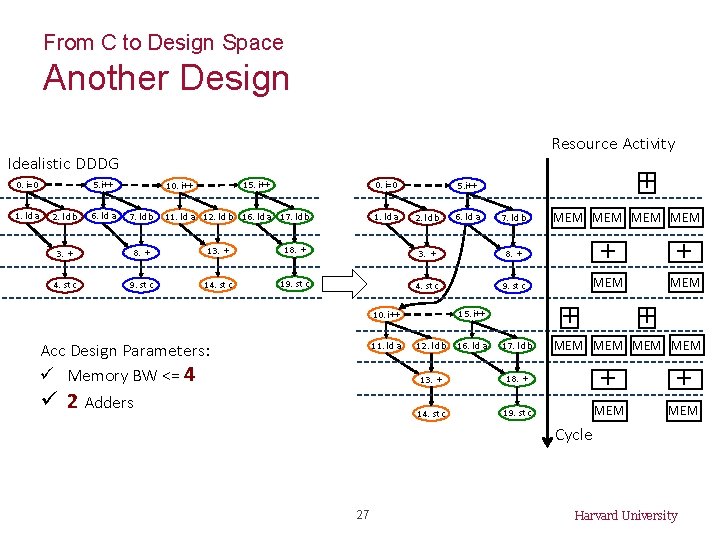

From C to Design Space: Another Design

Acc Design Parameters: Memory BW <= 4; 2 Adders.

Figure: the same idealistic DDDG (nodes 0 to 19) is scheduled cycle by cycle under the wider parameters. The Resource Activity panel shows more MEM and + units busy per cycle.
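The effect of the two parameter sets can be sketched with a small greedy list scheduler over the idealistic vector-add DDDG. This is an illustration under assumptions of my own, not Aladdin's scheduler: `schedule_vadd` is a hypothetical name, every node takes one cycle, loads and stores each use one unit of memory bandwidth, index nodes use no constrained resource, and ready nodes are issued in trace order.

```c
#define MAX_ITERS 64
#define MAX_NODES (5 * MAX_ITERS)

/* Nonzero if `node` was issued in a strictly earlier cycle. */
static int issued_before(const int *issue_cycle, int node, int cycle) {
    return issue_cycle[node] >= 0 && issue_cycle[node] < cycle;
}

/* Schedules `iters` iterations of c[i] = a[i] + b[i] (idealistic DDDG,
 * node 5k+0 = index, +1 = ld a, +2 = ld b, +3 = add, +4 = st c) and
 * returns the number of cycles used, or -1 on invalid arguments. */
int schedule_vadd(int iters, int mem_bw, int n_adders) {
    int issue_cycle[MAX_NODES];
    int n, remaining, cycle = 0;

    if (iters < 1 || iters > MAX_ITERS || mem_bw < 1 || n_adders < 1)
        return -1;
    n = 5 * iters;
    for (int v = 0; v < n; ++v) issue_cycle[v] = -1;
    remaining = n;

    while (remaining > 0) {
        int mem_used = 0, add_used = 0;
        for (int v = 0; v < n; ++v) {
            int slot = v % 5, base = v - slot, ready;
            if (issue_cycle[v] >= 0) continue;        /* already issued */
            if (slot == 0)                            /* index: no inputs */
                ready = 1;
            else if (slot == 1 || slot == 2)          /* loads need i */
                ready = issued_before(issue_cycle, base, cycle);
            else if (slot == 3)                       /* add needs both loads */
                ready = issued_before(issue_cycle, base + 1, cycle) &&
                        issued_before(issue_cycle, base + 2, cycle);
            else                                      /* store needs i and add */
                ready = issued_before(issue_cycle, base, cycle) &&
                        issued_before(issue_cycle, base + 3, cycle);
            if (!ready) continue;
            if (slot == 3) {                          /* adder constraint */
                if (add_used >= n_adders) continue;
                add_used++;
            } else if (slot != 0) {                   /* memory constraint */
                if (mem_used >= mem_bw) continue;
                mem_used++;
            }
            issue_cycle[v] = cycle;
            remaining--;
        }
        cycle++;
    }
    return cycle;
}
```

Under this model, four iterations take 8 cycles with `schedule_vadd(4, 2, 1)` and 5 cycles with `schedule_vadd(4, 4, 2)`. These counts come from the sketch's assumptions and are not read off the slide's figures.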

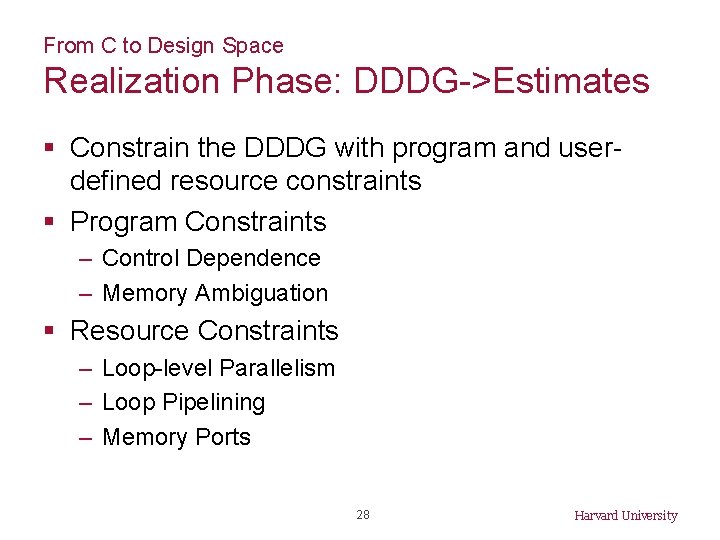

From C to Design Space

Realization Phase: DDDG->Estimates

- Constrain the DDDG with program and user-defined resource constraints.
- Program Constraints:
  - Control Dependence
  - Memory Ambiguation
- Resource Constraints:
  - Loop-level Parallelism
  - Loop Pipelining
  - Memory Ports

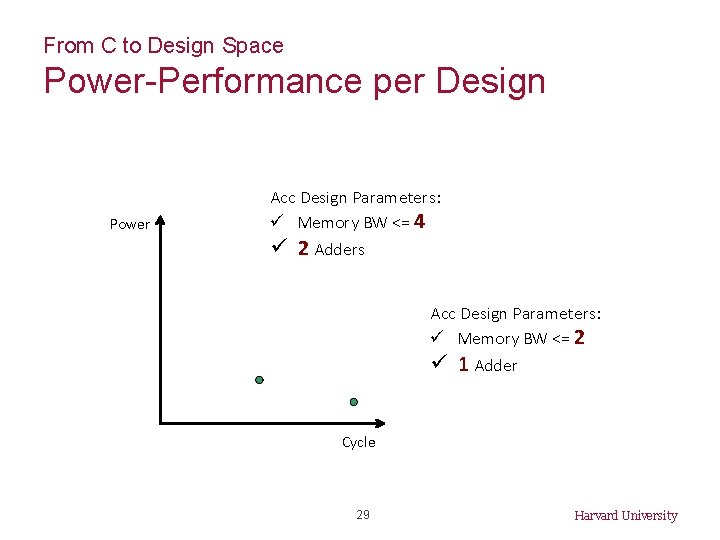

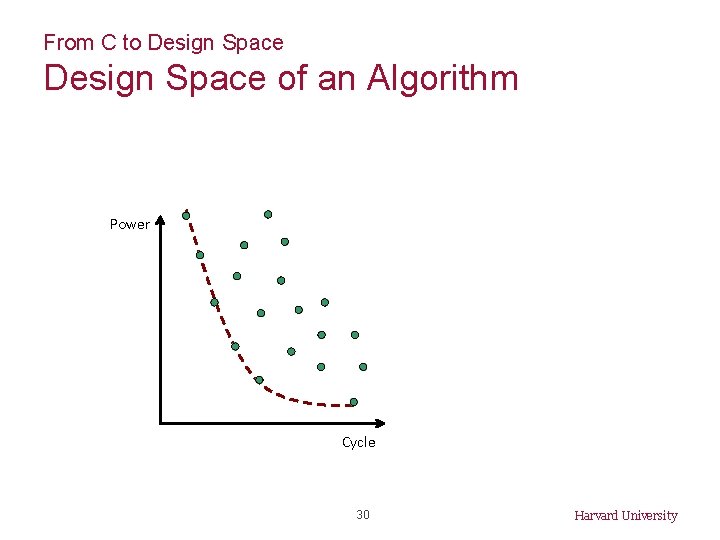

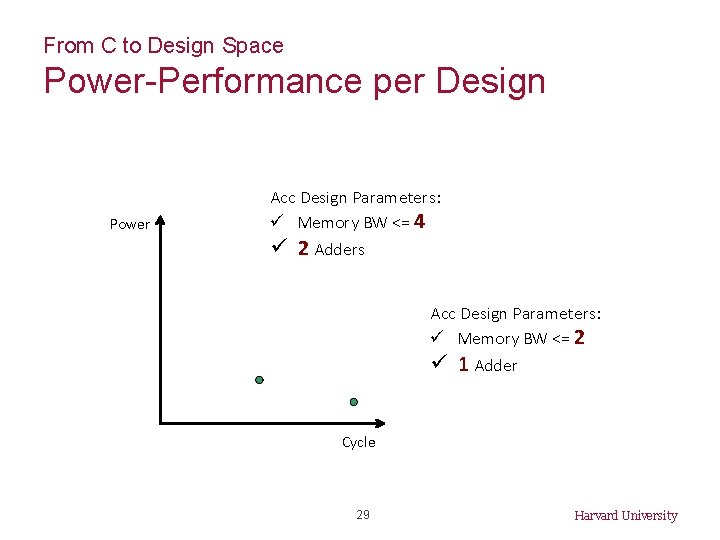

From C to Design Space: Power-Performance per Design

Figure: power versus cycle, with one point per design: (Memory BW <= 4, 2 Adders) and (Memory BW <= 2, 1 Adder).

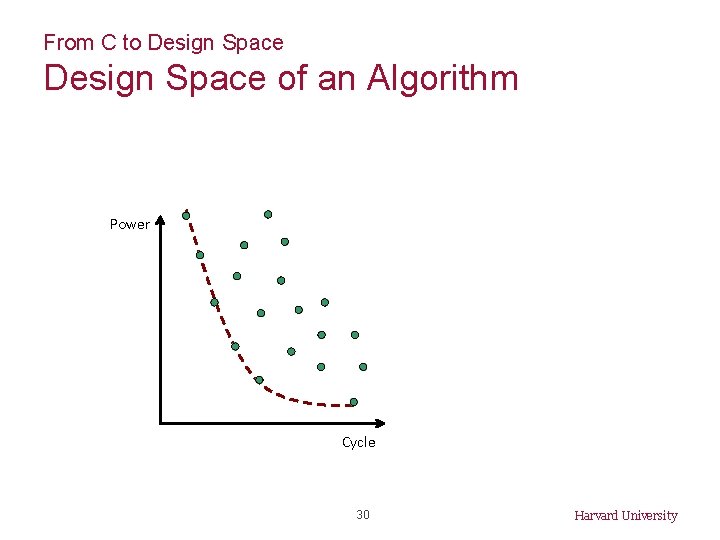

From C to Design Space of an Algorithm

Figure: power versus cycle across the design space.

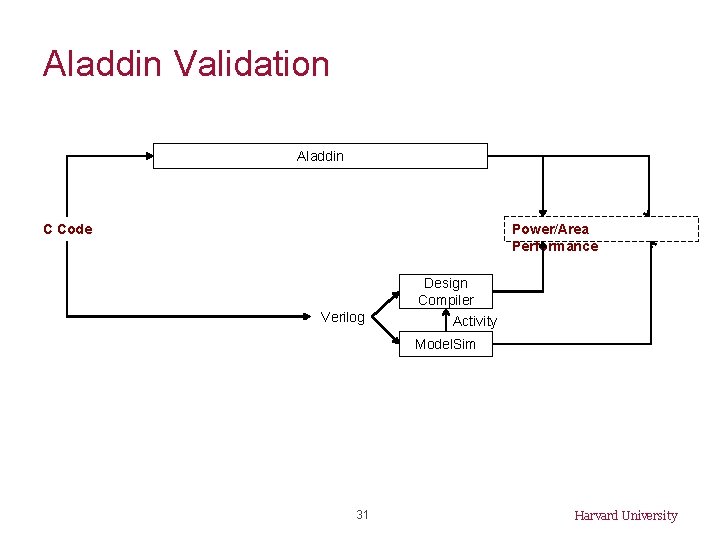

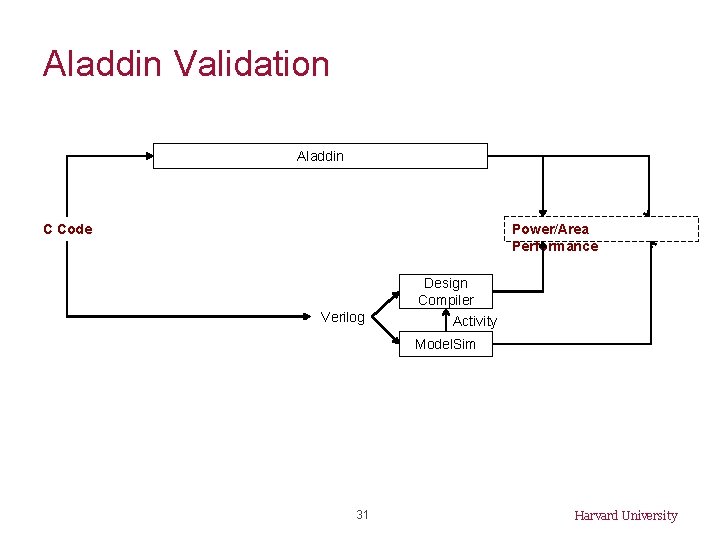

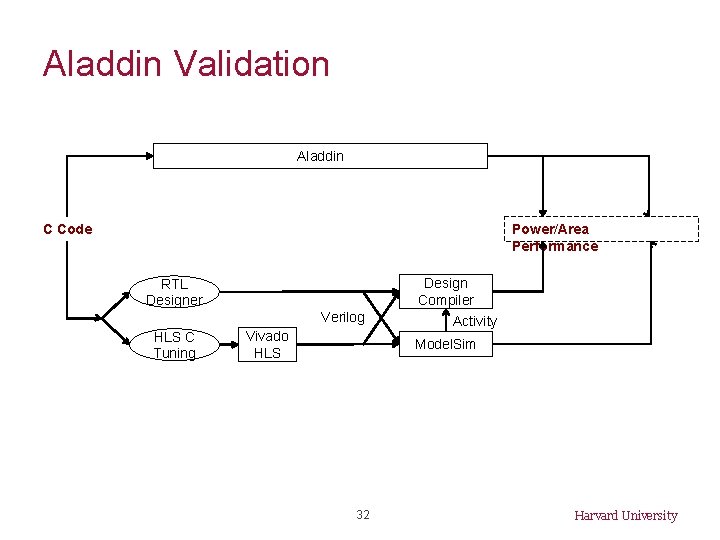

Aladdin Validation

Flow diagram labels: C Code, Aladdin, Power/Area, Performance, Verilog, Design Compiler, Activity, ModelSim.

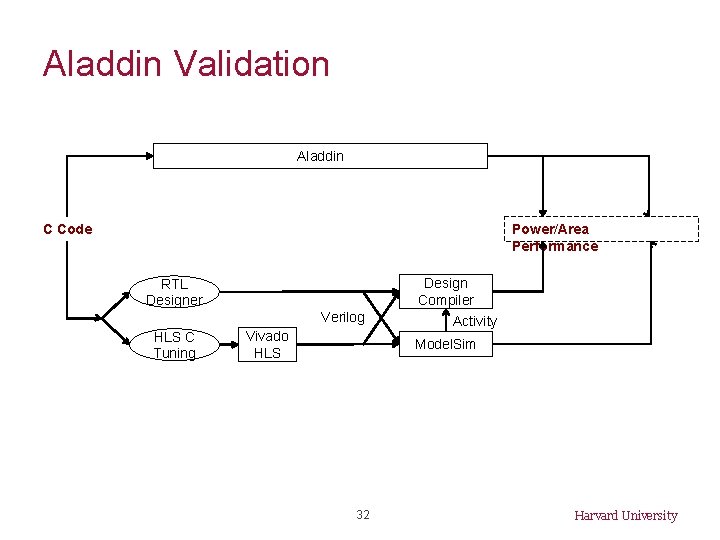

Aladdin Validation

Flow diagram labels: C Code, Aladdin, Power/Area, Performance, RTL Designer, HLS C Tuning, Vivado HLS, Verilog, Design Compiler, Activity, ModelSim.

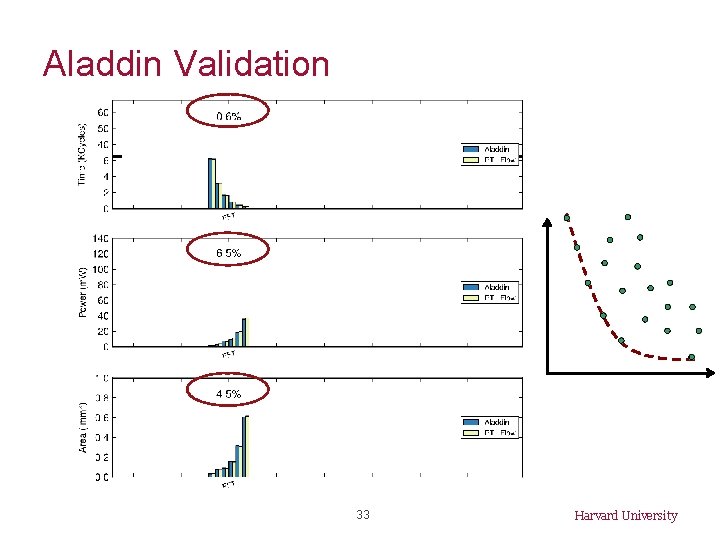

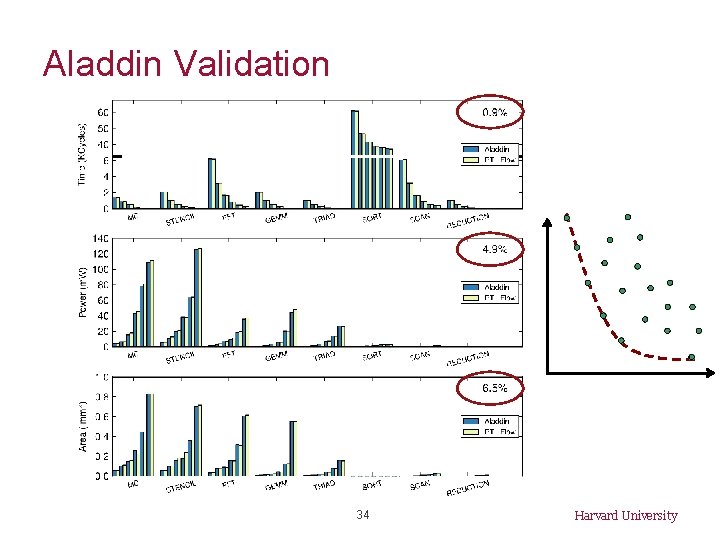

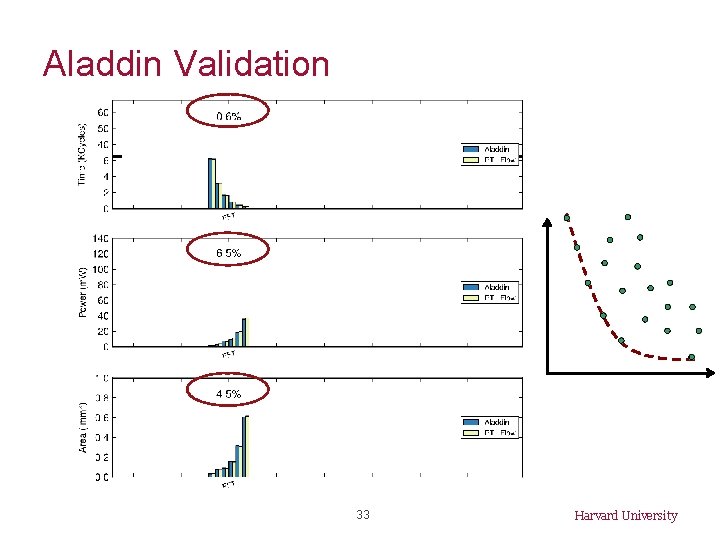

Aladdin Validation

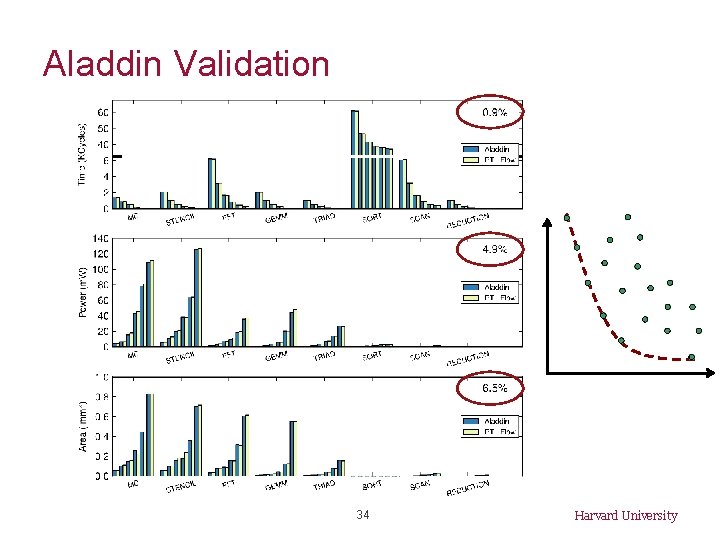

Aladdin Validation

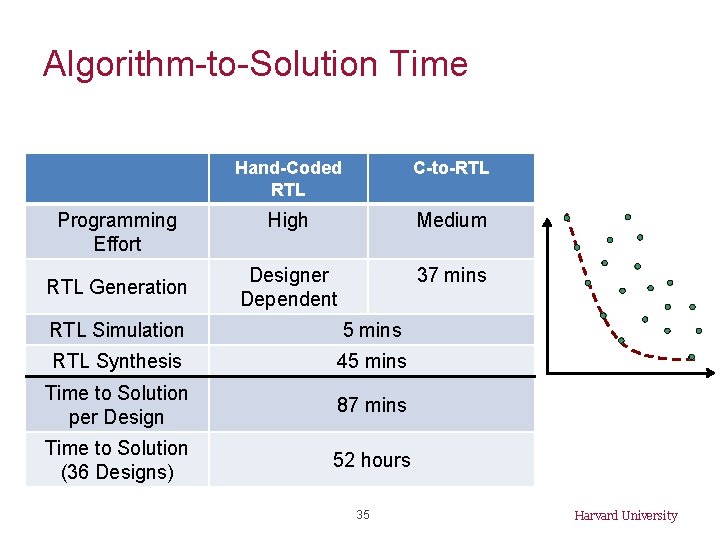

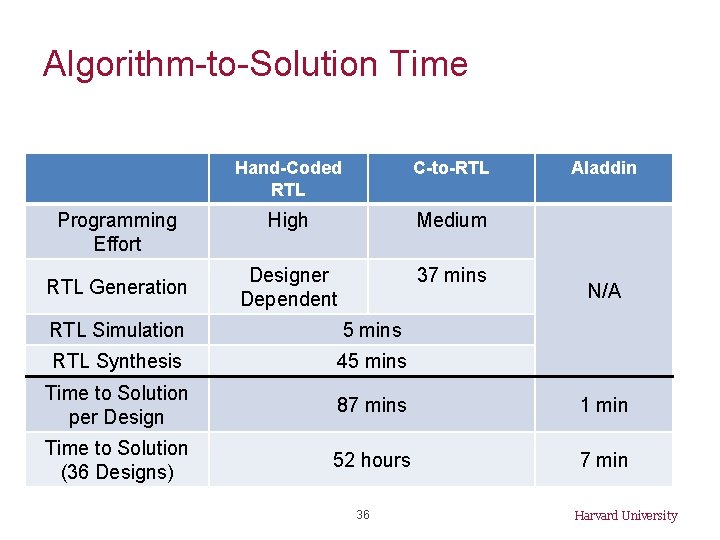

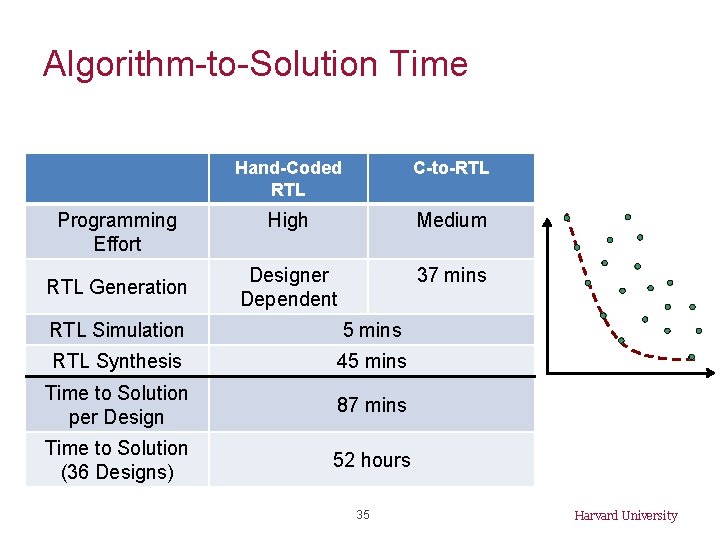

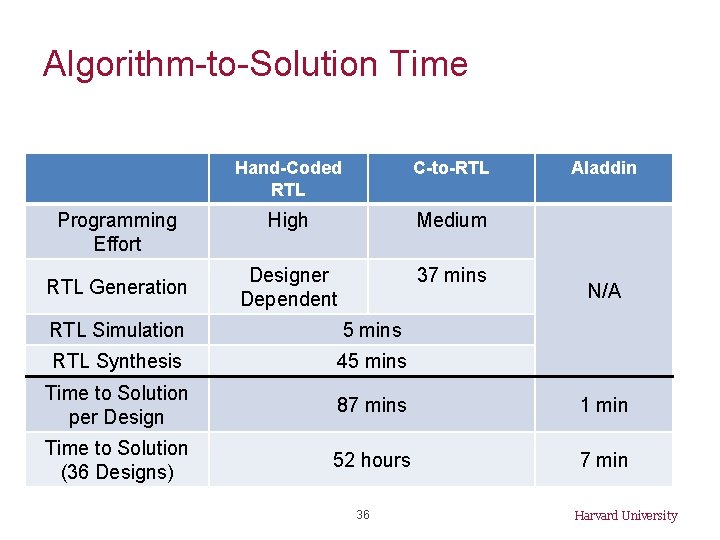

Algorithm-to-Solution Time

| | Hand-Coded RTL | C-to-RTL | Aladdin |
|---|---|---|---|
| Programming Effort | High | Medium | N/A |
| RTL Generation | Designer Dependent | 37 mins | N/A |
| RTL Simulation | | 5 mins | N/A |
| RTL Synthesis | | 45 mins | N/A |
| Time to Solution per Design | | 87 mins | 1 min |
| Time to Solution (36 Designs) | | 52 hours | 7 min |
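The C-to-RTL totals on this slide follow from simple arithmetic, worked here as a check. The final ratio is derived from the slide's numbers and is not itself stated on the slide.

```latex
\begin{align*}
T_{\text{C-to-RTL, per design}} &= 37 + 5 + 45 = 87 \text{ min} \\
T_{\text{C-to-RTL, 36 designs}} &= 36 \times 87 = 3132 \text{ min} \approx 52 \text{ hours} \\
\frac{T_{\text{C-to-RTL, 36 designs}}}{T_{\text{Aladdin, 36 designs}}} &= \frac{3132 \text{ min}}{7 \text{ min}} \approx 447
\end{align*}
```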

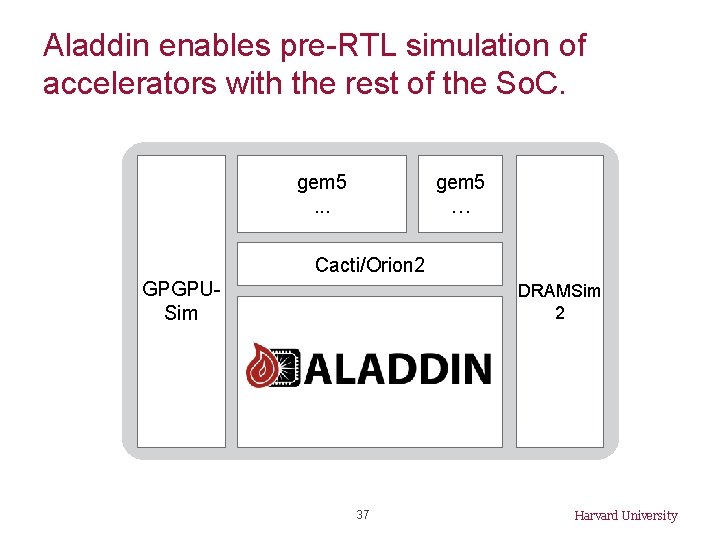

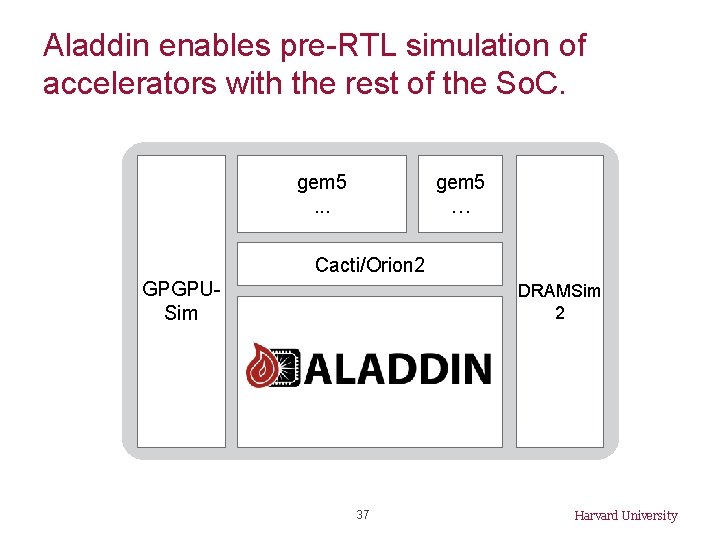

Aladdin enables pre-RTL simulation of accelerators with the rest of the SoC.

Figure labels (simulator per component): gem5 for Big Cores and Small Cores; Cacti/Orion2 for Shared Resources; GPGPU-Sim for the GPU; DRAMSim2 for the Memory Interface; Sea of Fine-Grained Accelerators.

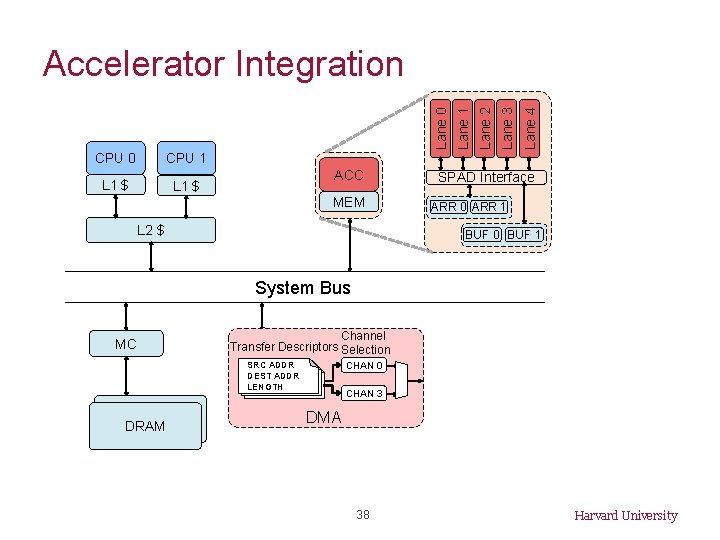

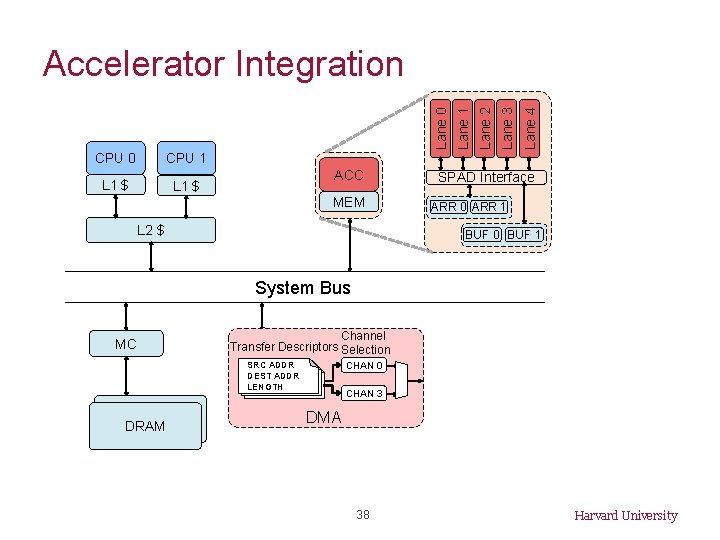

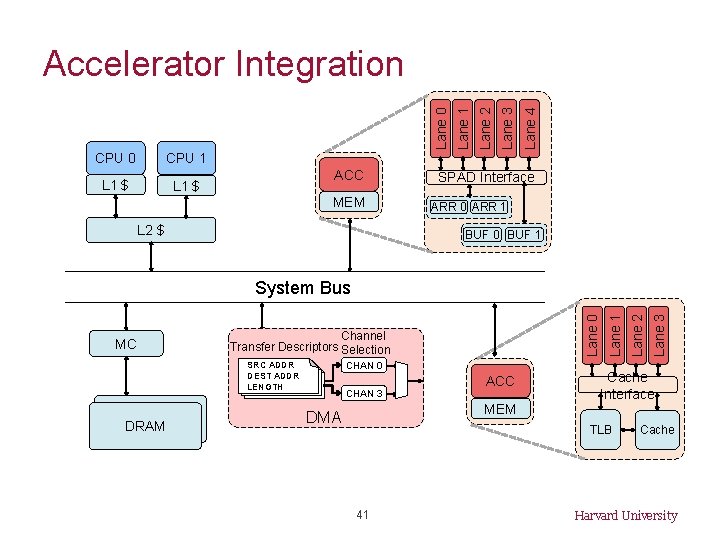

Accelerator Integration

Block diagram labels: CPU0 and CPU1, each with an L1 $; L2 $; System Bus; MC; DRAM; ACC with Lane 0 to Lane 4; MEM with SPAD Interface, ARR0, ARR1, BUF0, BUF1; DMA with Channel Selection (CHAN 0 to CHAN 3) and Transfer Descriptors (SRC ADDR, DEST ADDR, LENGTH).
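The DMA block in this diagram is described by a channel selection and a transfer descriptor with three fields: SRC ADDR, DEST ADDR, and LENGTH. A minimal software sketch of that structure follows. All names (`DmaDescriptor`, `DmaEngine`, `dma_init`, `dma_program`, `dma_run`) are hypothetical, the four channels mirror CHAN 0 to CHAN 3, and the transfer is modeled as a plain memory copy rather than as gem5-Aladdin's DMA model.

```c
#include <stddef.h>
#include <string.h>

#define DMA_NUM_CHANNELS 4  /* CHAN 0 .. CHAN 3 in the diagram */

/* One transfer descriptor: the three fields named on the slide,
 * plus a `valid` flag added for this sketch. */
typedef struct {
    const void *src_addr;
    void *dest_addr;
    size_t length;   /* bytes to move */
    int valid;       /* nonzero while a transfer is pending */
} DmaDescriptor;

typedef struct {
    DmaDescriptor chan[DMA_NUM_CHANNELS];
} DmaEngine;

/* Clears every channel. */
void dma_init(DmaEngine *dma) {
    for (int c = 0; c < DMA_NUM_CHANNELS; ++c) {
        dma->chan[c].src_addr = NULL;
        dma->chan[c].dest_addr = NULL;
        dma->chan[c].length = 0;
        dma->chan[c].valid = 0;
    }
}

/* Programs a descriptor on `channel`. Returns 0 on success, -1 if the
 * channel index or pointers are invalid. */
int dma_program(DmaEngine *dma, int channel,
                const void *src, void *dest, size_t length) {
    if (channel < 0 || channel >= DMA_NUM_CHANNELS || !src || !dest)
        return -1;
    dma->chan[channel].src_addr = src;
    dma->chan[channel].dest_addr = dest;
    dma->chan[channel].length = length;
    dma->chan[channel].valid = 1;
    return 0;
}

/* Executes the pending transfer on `channel` and returns the number of
 * bytes moved (0 if the channel is invalid or nothing is pending). */
size_t dma_run(DmaEngine *dma, int channel) {
    DmaDescriptor *d;
    if (channel < 0 || channel >= DMA_NUM_CHANNELS) return 0;
    d = &dma->chan[channel];
    if (!d->valid) return 0;
    memmove(d->dest_addr, d->src_addr, d->length);
    d->valid = 0;  /* descriptor is consumed by the transfer */
    return d->length;
}
```

In this picture, a CPU would program a descriptor that points from a DRAM buffer to one of the accelerator's scratchpad arrays, and the accelerator would start computing once the transfer completes.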

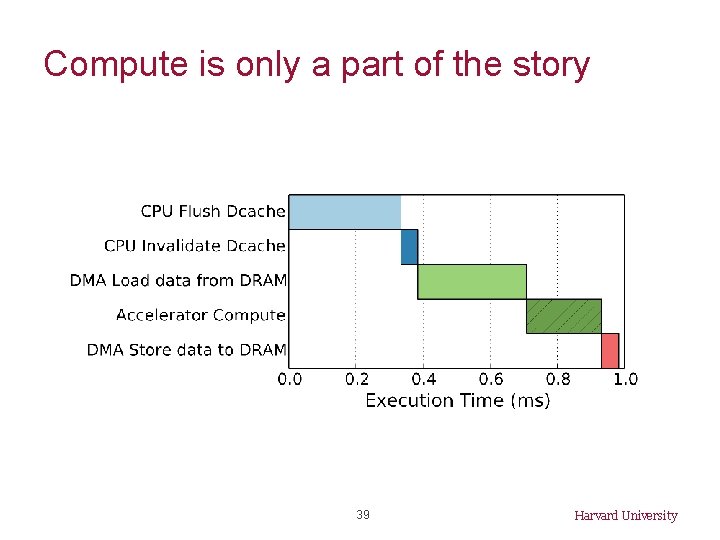

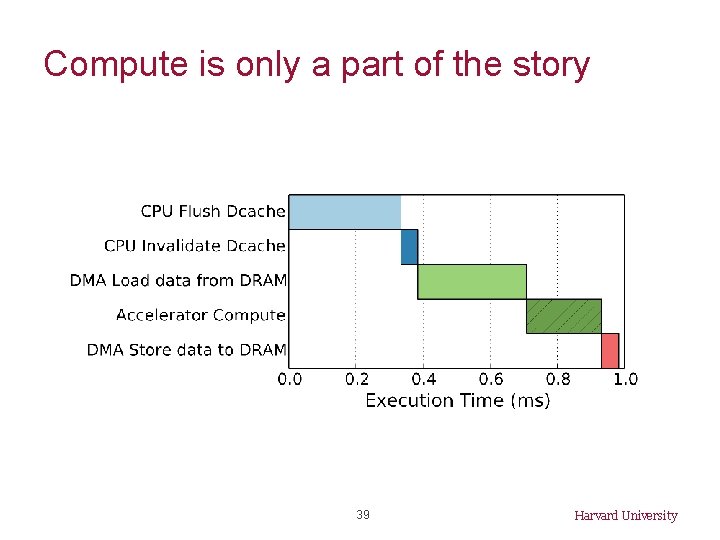

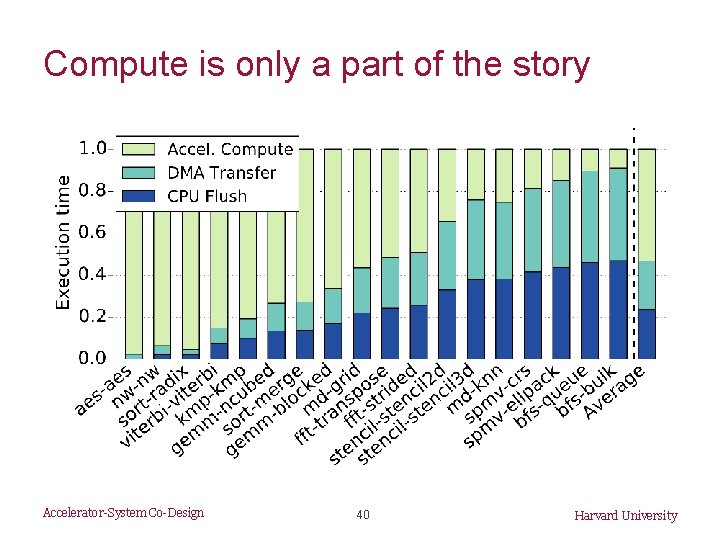

Compute is only a part of the story

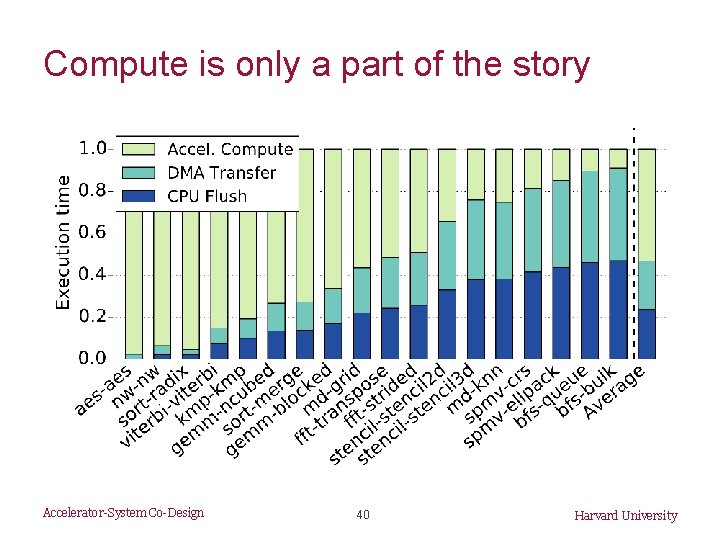

Compute is only a part of the story: Accelerator-System Co-Design

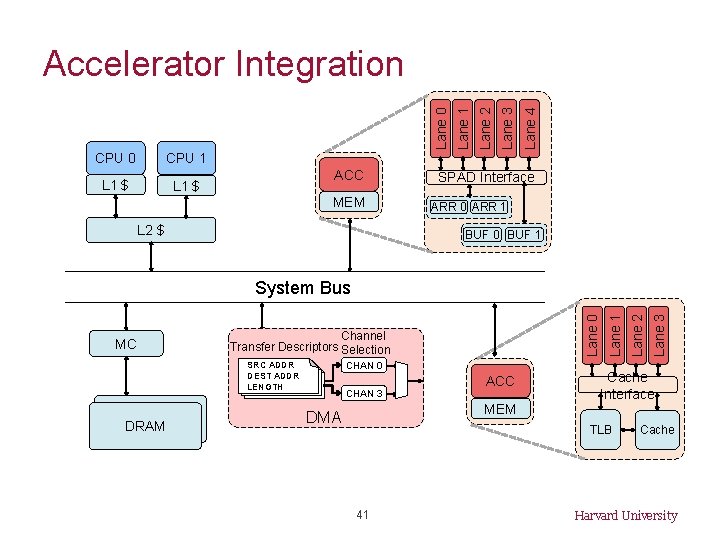

Accelerator Integration

Block diagram labels: CPU0 and CPU1, each with an L1 $; L2 $; System Bus; MC; DRAM; a DMA-attached accelerator (ACC with Lane 0 to Lane 4; MEM with SPAD Interface, ARR0, ARR1, BUF0, BUF1; DMA with Channel Selection, CHAN 0 to CHAN 3, and Transfer Descriptors: SRC ADDR, DEST ADDR, LENGTH); a cache-attached accelerator (ACC with Lane 0 to Lane 3; MEM with Cache Interface, TLB, Cache).

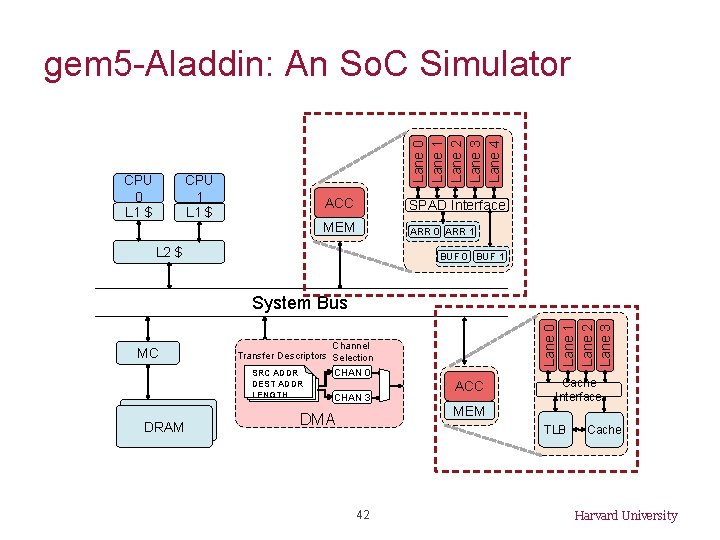

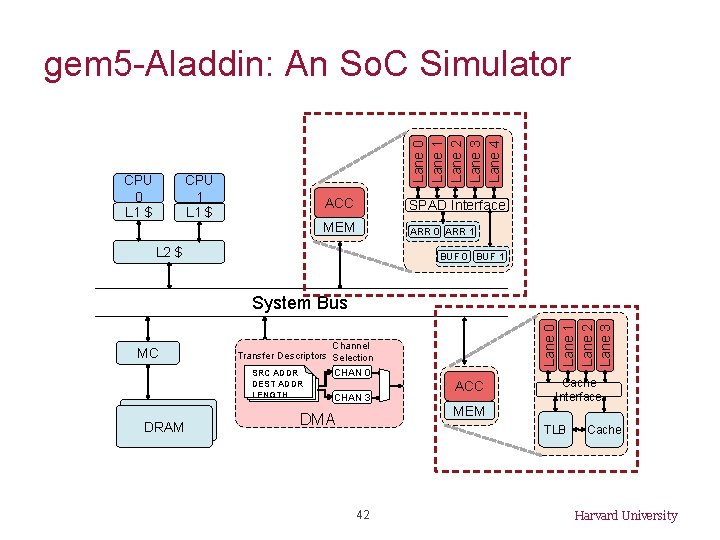

gem5-Aladdin: An SoC Simulator

Block diagram labels: CPU0 and CPU1, each with an L1 $; L2 $; System Bus; MC; DRAM; a DMA-attached accelerator (ACC with Lane 0 to Lane 4; MEM with SPAD Interface, ARR0, ARR1, BUF0, BUF1; DMA with Channel Selection, CHAN 0 to CHAN 3, and Transfer Descriptors: SRC ADDR, DEST ADDR, LENGTH); a cache-attached accelerator (ACC with Lane 0 to Lane 3; MEM with Cache Interface, TLB, Cache).

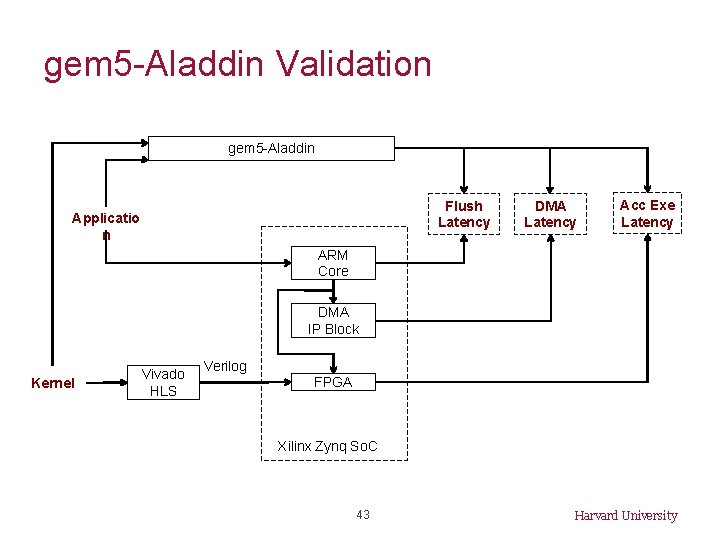

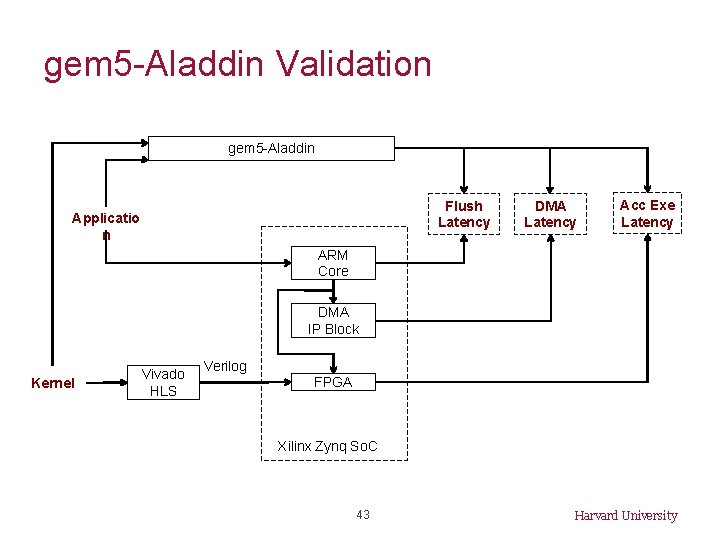

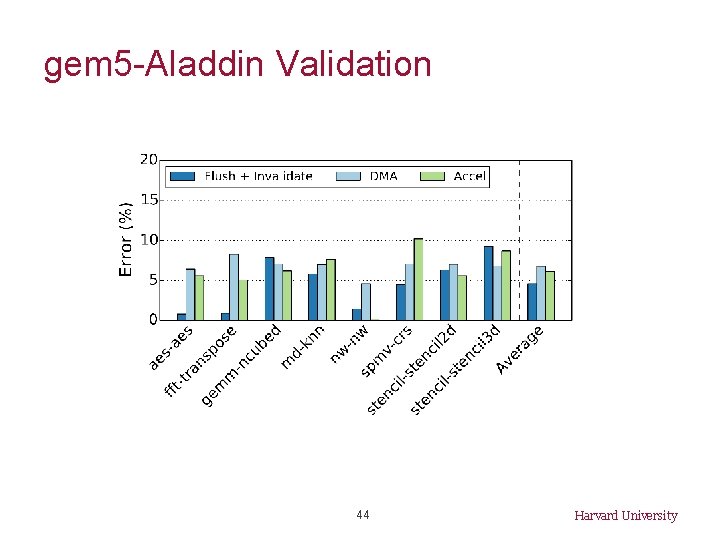

gem5-Aladdin Validation

Diagram labels: gem5-Aladdin (Flush Latency, DMA Latency, Acc Exe Latency); Xilinx Zynq SoC (Application, ARM Core, DMA IP Block, Kernel, Vivado HLS, Verilog, FPGA).

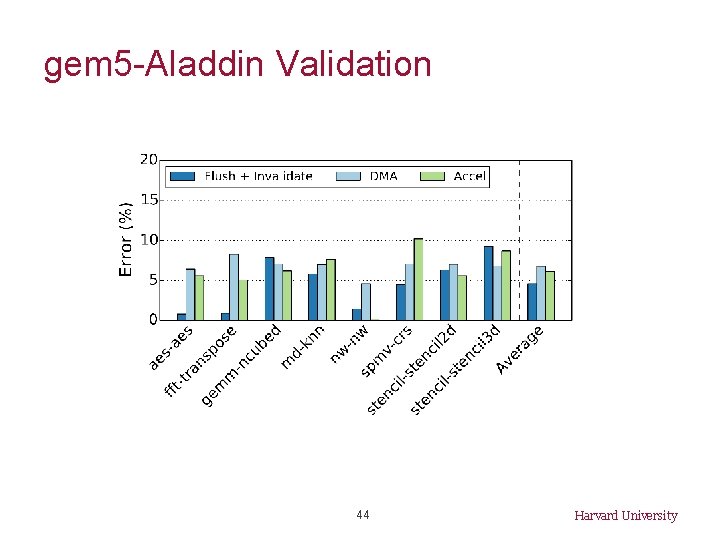

gem5-Aladdin Validation

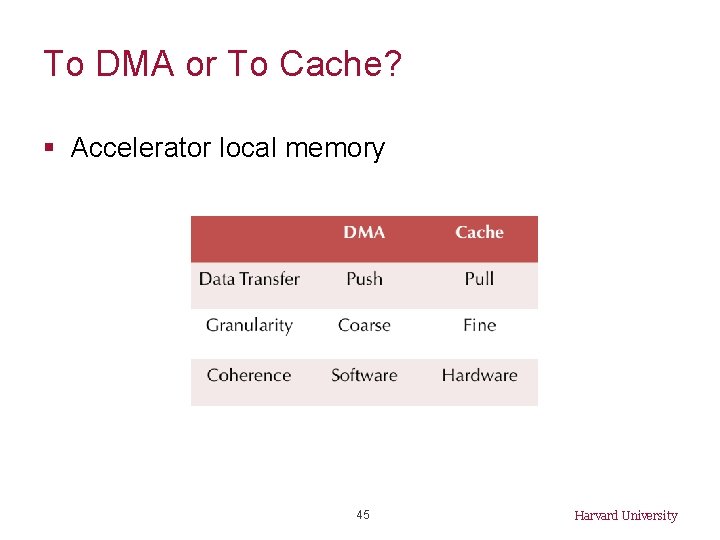

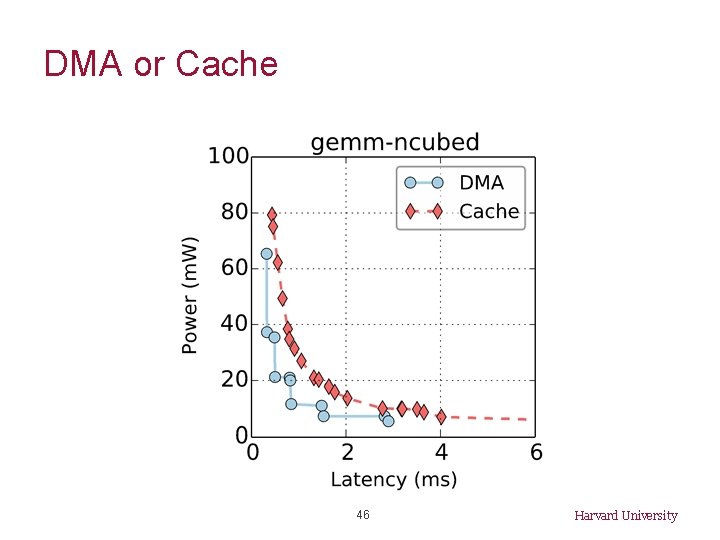

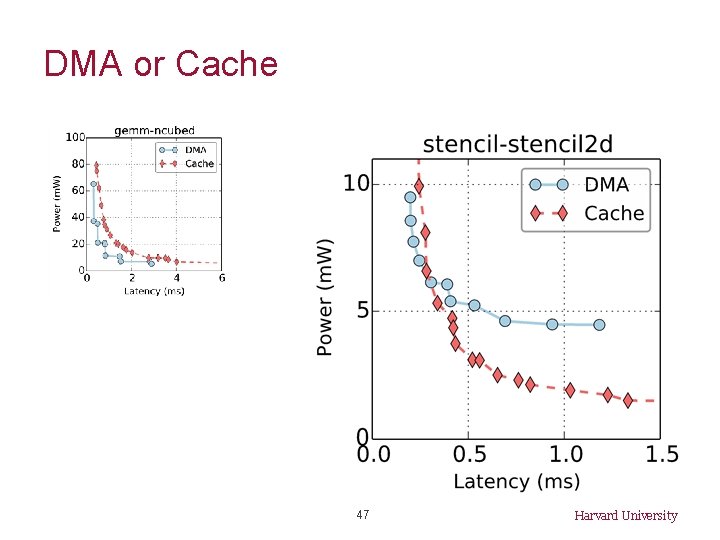

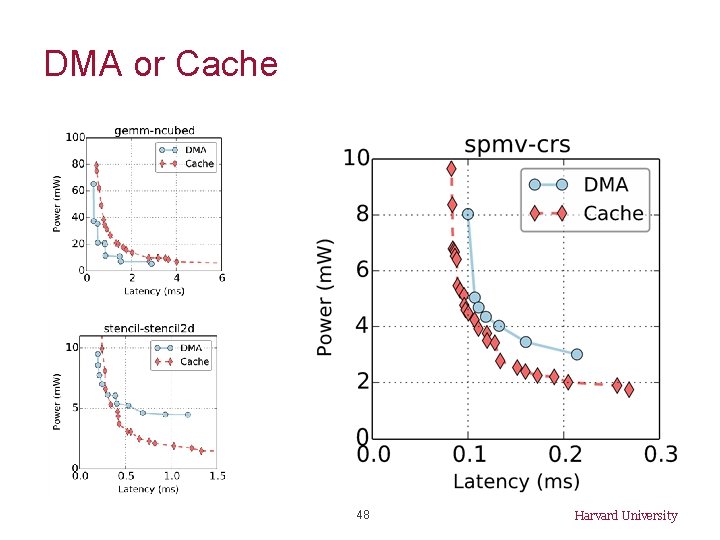

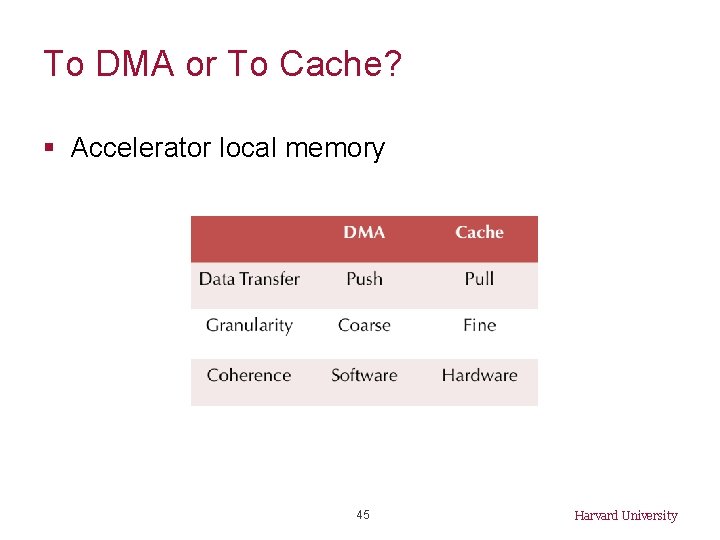

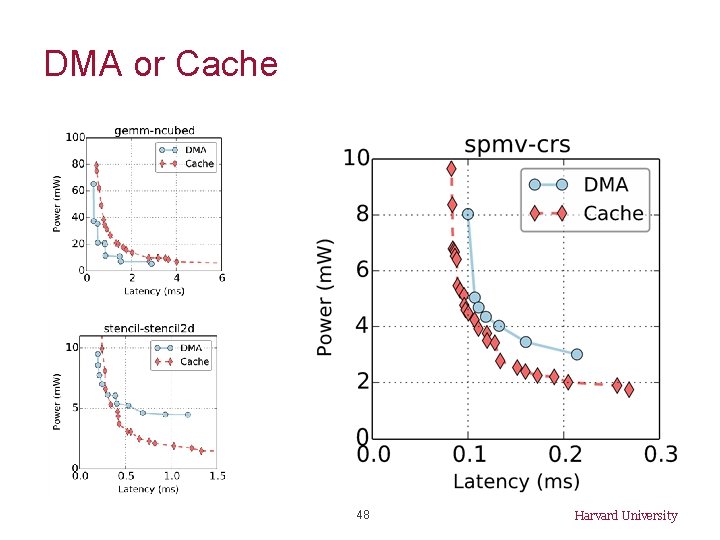

To DMA or To Cache?

- Accelerator local memory

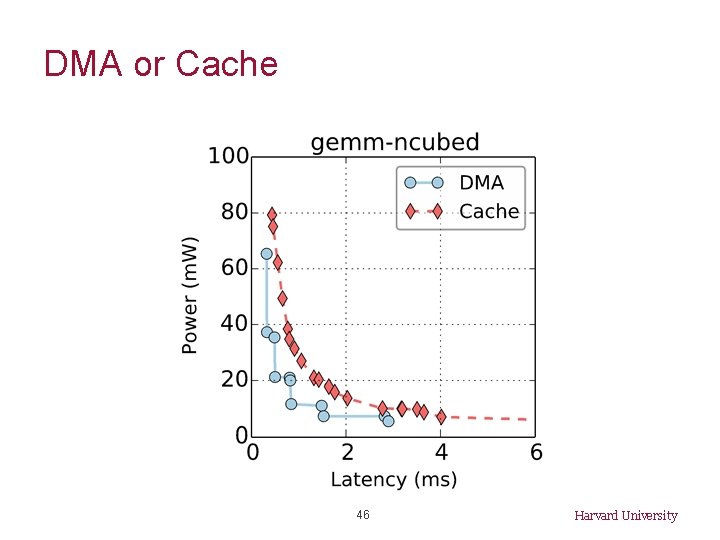

DMA or Cache

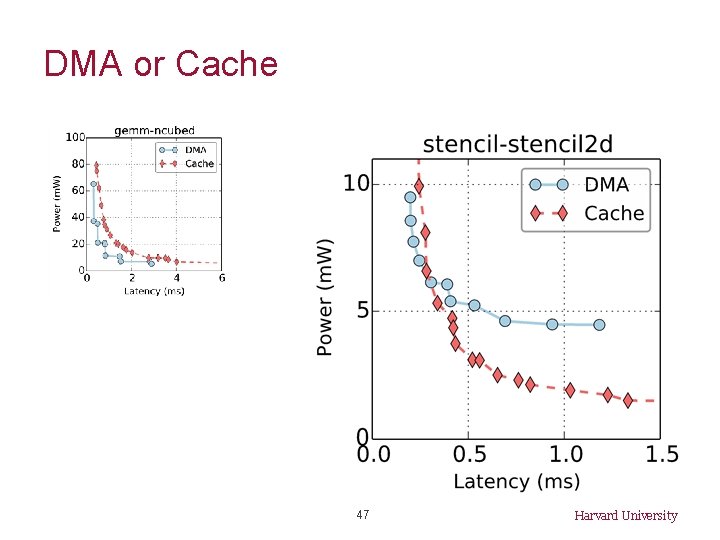

DMA or Cache

Conclusions

- Architectures with 1000s of accelerators will be radically different; new design tools are needed.
- We built Aladdin, an architectural-level power, performance, and area simulator for accelerators.
- We integrated Aladdin with gem5 to model the interactions between accelerators and the rest of the SoC.
- These accelerator infrastructures open up opportunities for innovation in heterogeneous architecture design.

Publications

1. Y. S. Shao, S. Xi, V. Srinivasan, G.-Y. Wei, D. Brooks, “An Holistic Approach to Accelerator-System Co-Design,” Under Review.
2. Y. S. Shao and D. Brooks, “Research Infrastructures for Hardware Accelerators,” Synthesis Lectures on Computer Architecture, Nov 2015.
3. Y. S. Shao, B. Reagen, G.-Y. Wei, D. Brooks, “The Aladdin Approach to Accelerator Design and Modeling,” IEEE Micro TopPicks, May-June 2015.
4. Y. S. Shao, S. Xi, V. Srinivasan, G.-Y. Wei, D. Brooks, “Toward Cache-Friendly Hardware Accelerators,” SCAW’15.
5. B. Reagen, B. Adolf, Y. S. Shao, G.-Y. Wei, D. Brooks, “MachSuite: Benchmarks for Accelerator Design and Customized Architectures,” IISWC’14.
6. Y. S. Shao, B. Reagen, G.-Y. Wei, D. Brooks, “Aladdin: A Pre-RTL, Power-Performance Accelerator Simulator Enabling Large Design Space Exploration of Customized Architectures,” ISCA’14.
7. B. Reagen, Y. S. Shao, G.-Y. Wei, D. Brooks, “Quantifying Acceleration: Power/Performance Trade-Offs of Application Kernels in Hardware,” ISLPED’13.
8. Y. S. Shao and D. Brooks, “Energy Characterization and Instruction-Level Energy Model of Intel’s Xeon Phi Processor,” ISLPED’13.
9. Y. S. Shao and D. Brooks, “ISA-Independent Workload Characterization and its Implications for Specialized Architectures,” ISPASS’13.

Acknowledgement

Thanks!