Design and Implementation of the CCC Parallel Programming Language

Design and Implementation of the CCC Parallel Programming Language

Nai-Wei Lin, Department of Computer Science and Information Engineering, National Chung Cheng University

ICS 2004

Outline

- Introduction
- The CCC programming language
- The CCC compiler
- Performance evaluation
- Conclusions

Motivations

- Parallelism is the future trend
- Programming in parallel is much more difficult than programming in serial
- Parallel architectures are very diverse
- Parallel programming models are very diverse

Motivations

- Design a parallel programming language that uniformly integrates various parallel programming models
- Implement a retargetable compiler for this parallel programming language on various parallel architectures

Approaches to Parallelism

- Library approach
  - MPI (Message Passing Interface), pthread
- Compiler approach
  - HPF (High Performance Fortran), HPC++
- Language approach
  - Occam, Linda, CCC (Chung Cheng C)

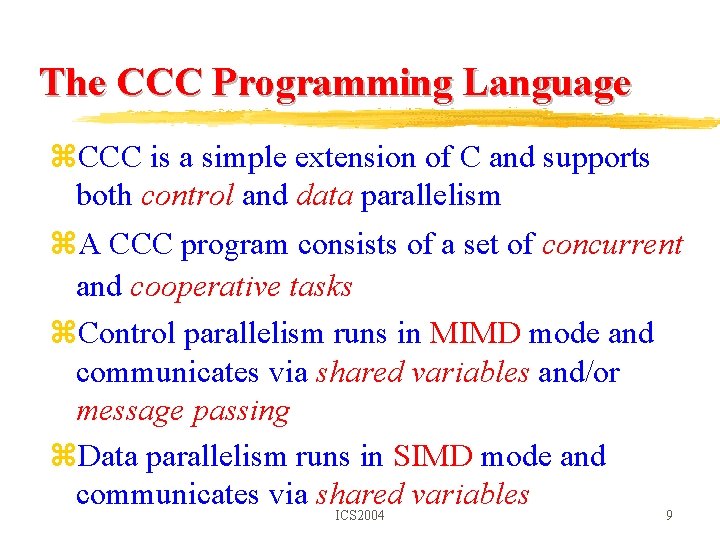

Models of Parallel Architectures

- Control Model
  - SIMD: Single Instruction Multiple Data
  - MIMD: Multiple Instruction Multiple Data
- Data Model
  - Shared-memory
  - Distributed-memory

Models of Parallel Programming

- Concurrency
  - Control parallelism: simultaneously execute multiple threads of control
  - Data parallelism: simultaneously execute the same operations on multiple data
- Synchronization and communication
  - Shared variables
  - Message passing

Granularity of Parallelism

- Procedure-level parallelism
  - Concurrent execution of procedures on multiple processors
- Loop-level parallelism
  - Concurrent execution of iterations of loops on multiple processors
- Instruction-level parallelism
  - Concurrent execution of instructions on a single processor with multiple functional units

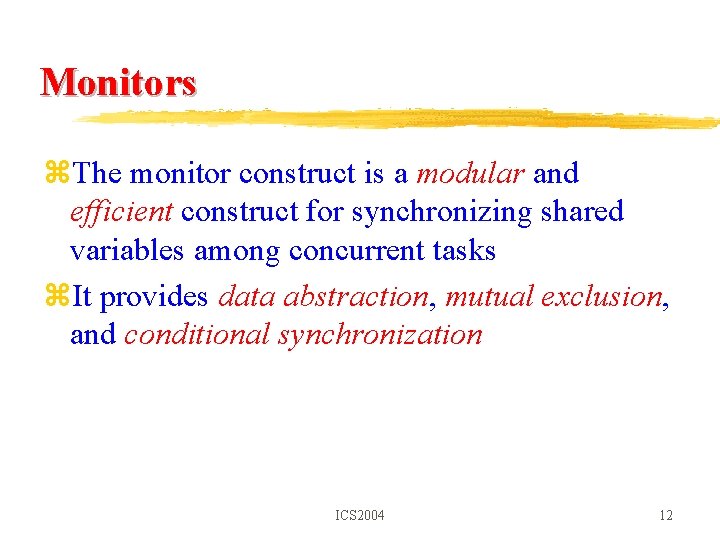

The CCC Programming Language

- CCC is a simple extension of C and supports both control and data parallelism
- A CCC program consists of a set of concurrent and cooperative tasks
- Control parallelism runs in MIMD mode and communicates via shared variables and/or message passing
- Data parallelism runs in SIMD mode and communicates via shared variables

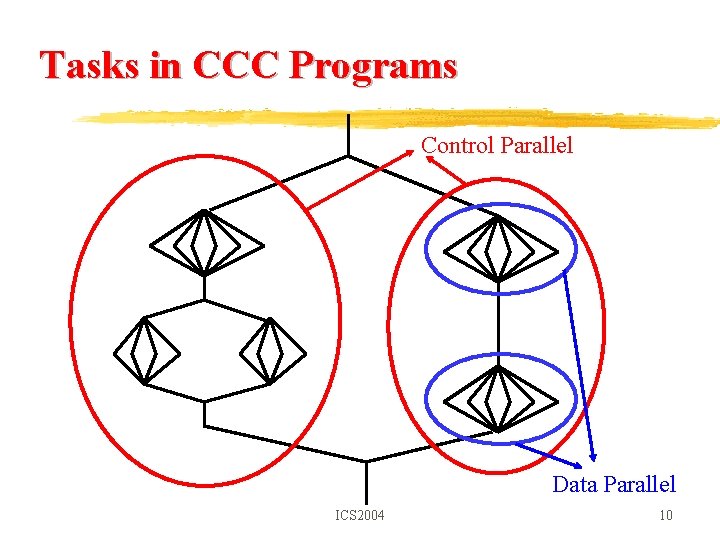

Tasks in CCC Programs

(Figure labels: Control Parallel, Data Parallel)

Control Parallelism

- Concurrency
  - task
  - par and parfor
- Synchronization and communication
  - shared variables – monitors
  - message passing – channels

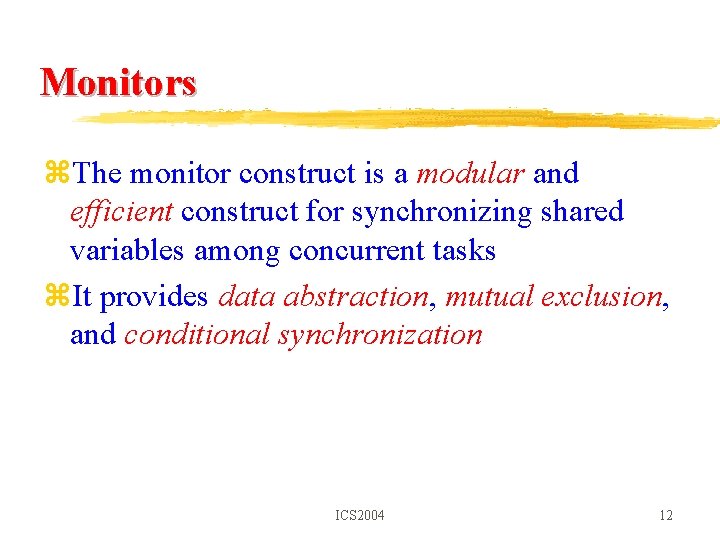

Monitors

- The monitor construct is a modular and efficient construct for synchronizing shared variables among concurrent tasks
- It provides data abstraction, mutual exclusion, and conditional synchronization

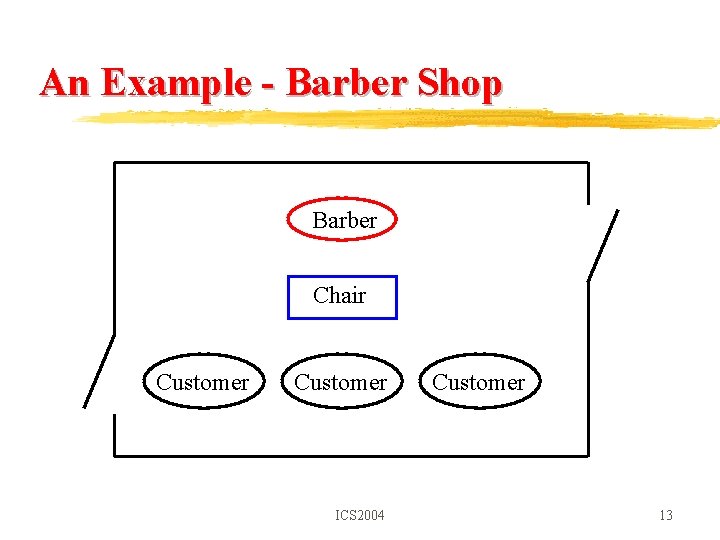

An Example - Barber Shop

(Figure labels: Barber, Chair, Customer, Customer)

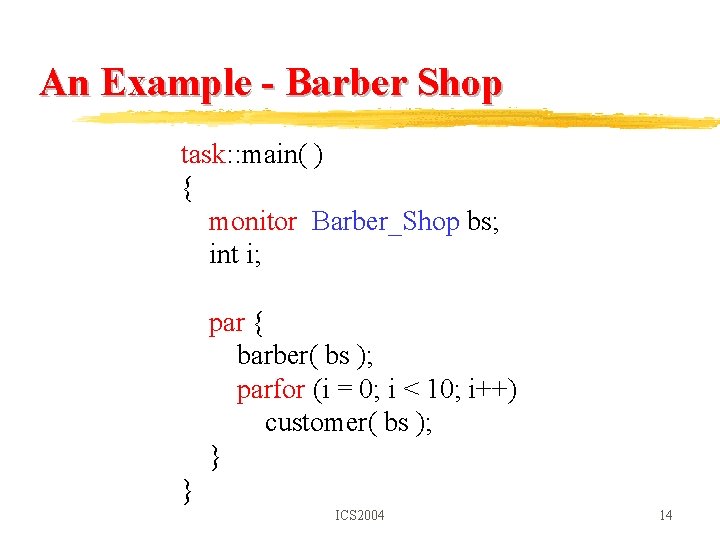

An Example - Barber Shop

    task::main( )
    {
        monitor Barber_Shop bs;
        int i;

        par {
            barber( bs );
            parfor (i = 0; i < 10; i++)
                customer( bs );
        }
    }

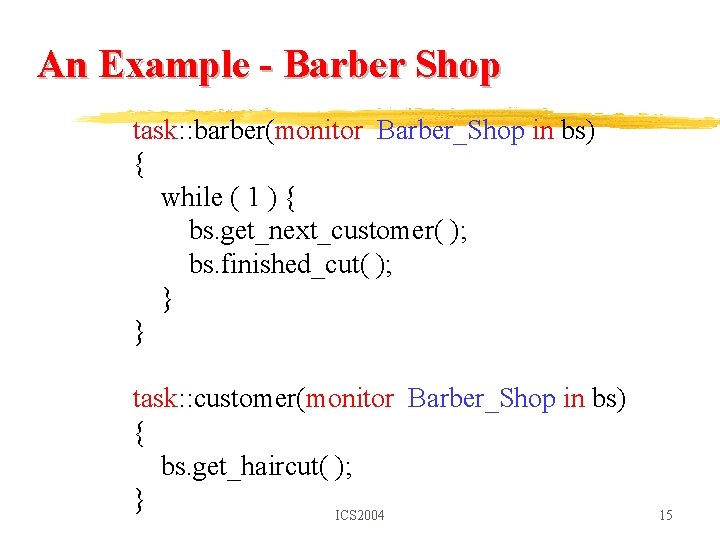

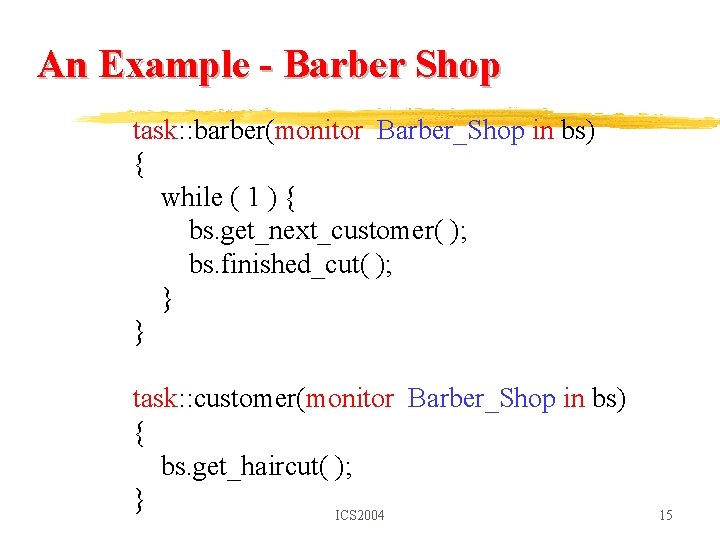

An Example - Barber Shop

    task::barber(monitor Barber_Shop in bs)
    {
        while ( 1 ) {
            bs.get_next_customer( );
            bs.finished_cut( );
        }
    }

    task::customer(monitor Barber_Shop in bs)
    {
        bs.get_haircut( );
    }

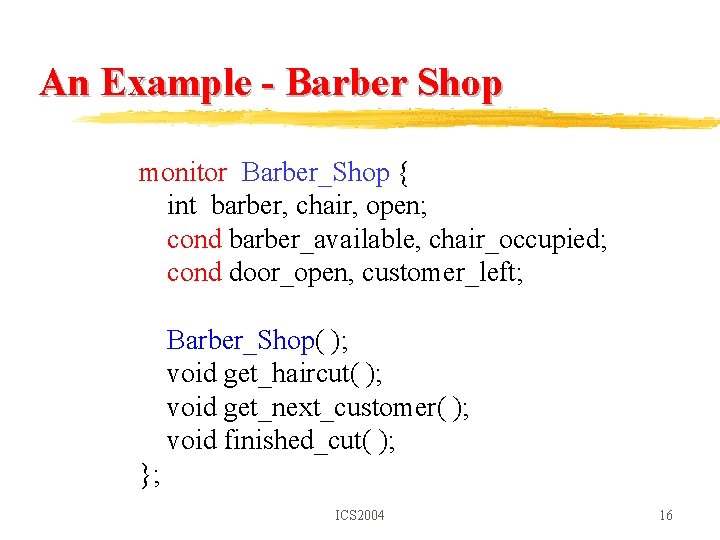

An Example - Barber Shop

    monitor Barber_Shop {
        int barber, chair, open;
        cond barber_available, chair_occupied;
        cond door_open, customer_left;

        Barber_Shop( );
        void get_haircut( );
        void get_next_customer( );
        void finished_cut( );
    };

An Example - Barber Shop

    Barber_Shop( )
    {
        barber = 0; chair = 0; open = 0;
    }

    void get_haircut( )
    {
        while (barber == 0) wait(barber_available);
        barber -= 1;                /* claim the barber */
        chair += 1;
        signal(chair_occupied);
        while (open == 0) wait(door_open);
        open -= 1;                  /* leave through the open door */
        signal(customer_left);
    }

An Example - Barber Shop

    void get_next_customer( )
    {
        barber += 1;
        signal(barber_available);
        while (chair == 0) wait(chair_occupied);
        chair -= 1;                 /* the seated customer is being served */
    }

    void finished_cut( )
    {
        open += 1;
        signal(door_open);
        while (open > 0) wait(customer_left);
    }
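The barber-shop monitor above can be approximated in plain C with POSIX threads. This is a minimal sketch, not the code the CCC compiler generates: it assumes the monitor's implicit lock maps to one mutex and each `cond` to one condition variable. The `served` counter, the bounded barber loop, and the `run_barber_shop` driver are hypothetical additions so that the demo terminates and can be checked.

```c
#include <pthread.h>
#include <stddef.h>

/* One mutex stands in for the monitor's implicit lock; each `cond`
   becomes a pthread condition variable. */
typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t barber_available, chair_occupied, door_open, customer_left;
    int barber, chair, open;
    int served;                 /* hypothetical counter, only for the demo */
} barber_shop;

typedef struct { barber_shop *shop; int rounds; } barber_arg;

static void shop_init(barber_shop *s)
{
    pthread_mutex_init(&s->lock, NULL);
    pthread_cond_init(&s->barber_available, NULL);
    pthread_cond_init(&s->chair_occupied, NULL);
    pthread_cond_init(&s->door_open, NULL);
    pthread_cond_init(&s->customer_left, NULL);
    s->barber = s->chair = s->open = s->served = 0;
}

static void get_haircut(barber_shop *s)
{
    pthread_mutex_lock(&s->lock);
    while (s->barber == 0)
        pthread_cond_wait(&s->barber_available, &s->lock);
    s->barber -= 1;
    s->chair += 1;
    pthread_cond_signal(&s->chair_occupied);
    while (s->open == 0)
        pthread_cond_wait(&s->door_open, &s->lock);
    s->open -= 1;
    s->served += 1;
    pthread_cond_signal(&s->customer_left);
    pthread_mutex_unlock(&s->lock);
}

static void get_next_customer(barber_shop *s)
{
    pthread_mutex_lock(&s->lock);
    s->barber += 1;
    pthread_cond_signal(&s->barber_available);
    while (s->chair == 0)
        pthread_cond_wait(&s->chair_occupied, &s->lock);
    s->chair -= 1;
    pthread_mutex_unlock(&s->lock);
}

static void finished_cut(barber_shop *s)
{
    pthread_mutex_lock(&s->lock);
    s->open += 1;
    pthread_cond_signal(&s->door_open);
    while (s->open > 0)
        pthread_cond_wait(&s->customer_left, &s->lock);
    pthread_mutex_unlock(&s->lock);
}

static void *barber_task(void *p)
{
    barber_arg *a = p;
    for (int i = 0; i < a->rounds; i++) {   /* bounded, unlike while (1) */
        get_next_customer(a->shop);
        finished_cut(a->shop);
    }
    return NULL;
}

static void *customer_task(void *p)
{
    get_haircut(p);
    return NULL;
}

/* Runs one barber and `customers` customers (at most 64); returns the
   number of completed haircuts. */
int run_barber_shop(int customers)
{
    barber_shop s;
    pthread_t barber, cust[64];
    barber_arg a;

    if (customers < 0) customers = 0;
    if (customers > 64) customers = 64;
    shop_init(&s);
    a.shop = &s;
    a.rounds = customers;
    pthread_create(&barber, NULL, barber_task, &a);
    for (int i = 0; i < customers; i++)
        pthread_create(&cust[i], NULL, customer_task, &s);
    for (int i = 0; i < customers; i++)
        pthread_join(cust[i], NULL);
    pthread_join(barber, NULL);
    return s.served;
}
```

Calling `run_barber_shop(10)` mirrors the `parfor` over ten customers. Every `wait` sits in a `while` loop because a pthread condition variable may wake a thread whose condition no longer holds.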

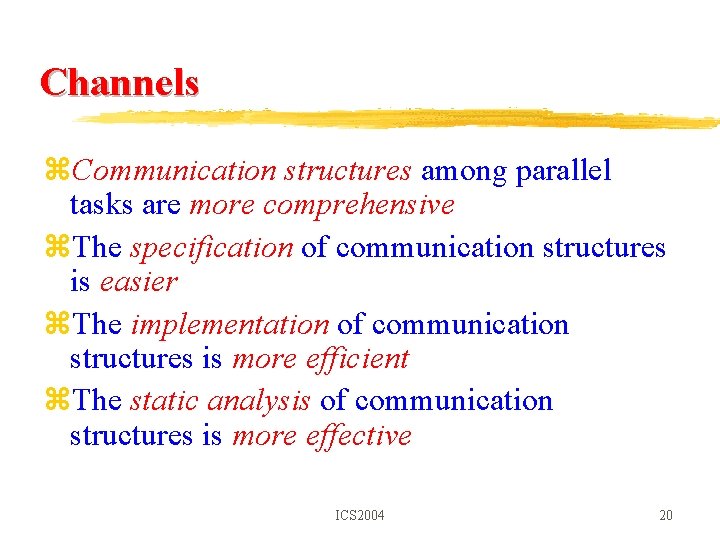

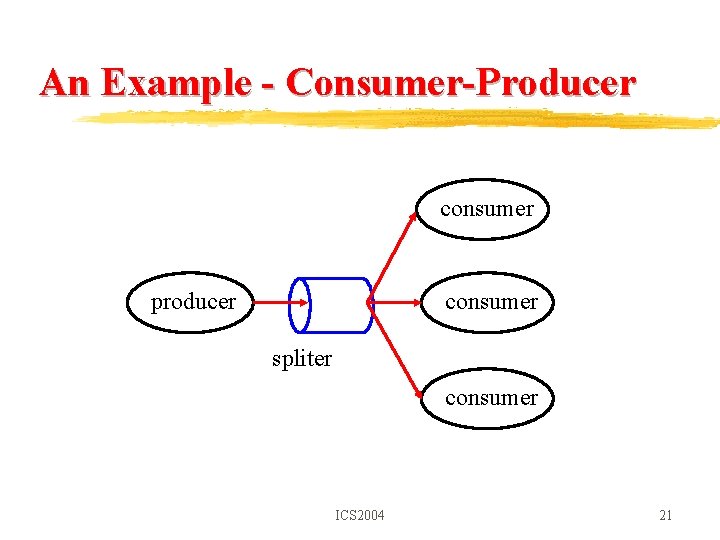

Channels

- The channel construct is a modular and efficient construct for message passing among concurrent tasks
- Pipe: one to one
- Merger: many to one
- Splitter: one to many
- Multiplexer: many to many

Channels

- Communication structures among parallel tasks are more comprehensive
- The specification of communication structures is easier
- The implementation of communication structures is more efficient
- The static analysis of communication structures is more effective

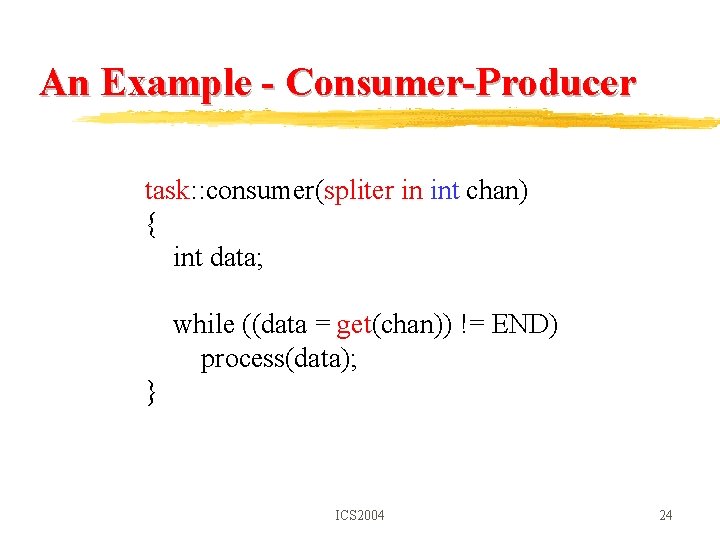

An Example - Consumer-Producer

(Figure labels: one producer, a spliter, and several consumers)

An Example - Consumer-Producer

    task::main( )
    {
        spliter int chan;
        int i;

        par {
            producer( chan );
            parfor (i = 0; i < 10; i++)
                consumer( chan );
        }
    }

An Example - Consumer-Producer

    task::producer(spliter in int chan)
    {
        int i;

        for (i = 0; i < 100; i++)
            put(chan, i);
        for (i = 0; i < 10; i++)
            put(chan, END);
    }

An Example - Consumer-Producer

    task::consumer(spliter in int chan)
    {
        int data;

        while ((data = get(chan)) != END)
            process(data);
    }
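The producer and consumer tasks above can be imitated in C with POSIX threads. The following is a rough sketch under stated assumptions, not CCC's actual channel implementation: the channel is modelled as a bounded FIFO guarded by one mutex and two condition variables, `CAP`, the value of `END`, and all `chan_*` names are illustrative choices, and `process(data)` is replaced by summing the received values so the result can be checked.

```c
#include <pthread.h>
#include <stddef.h>

#define CAP 8               /* buffer capacity: an assumption */
#define END (-1)            /* sentinel value: the slides leave END undefined */
#define MAX_CONSUMERS 16

typedef struct {
    int buf[CAP];
    int head, count;
    pthread_mutex_t lock;
    pthread_cond_t not_empty, not_full;
} channel;

typedef struct { channel *chan; int consumers; } producer_arg;
typedef struct { channel *chan; long sum; } consumer_arg;

static void chan_init(channel *c)
{
    c->head = c->count = 0;
    pthread_mutex_init(&c->lock, NULL);
    pthread_cond_init(&c->not_empty, NULL);
    pthread_cond_init(&c->not_full, NULL);
}

static void chan_put(channel *c, int v)
{
    pthread_mutex_lock(&c->lock);
    while (c->count == CAP)                 /* block while the buffer is full */
        pthread_cond_wait(&c->not_full, &c->lock);
    c->buf[(c->head + c->count) % CAP] = v;
    c->count++;
    pthread_cond_signal(&c->not_empty);
    pthread_mutex_unlock(&c->lock);
}

static int chan_get(channel *c)
{
    int v;
    pthread_mutex_lock(&c->lock);
    while (c->count == 0)                   /* block while the buffer is empty */
        pthread_cond_wait(&c->not_empty, &c->lock);
    v = c->buf[c->head];
    c->head = (c->head + 1) % CAP;
    c->count--;
    pthread_cond_signal(&c->not_full);
    pthread_mutex_unlock(&c->lock);
    return v;
}

static void *producer(void *p)
{
    producer_arg *a = p;
    for (int i = 0; i < 100; i++)
        chan_put(a->chan, i);
    for (int i = 0; i < a->consumers; i++)  /* one END per consumer */
        chan_put(a->chan, END);
    return NULL;
}

static void *consumer(void *p)
{
    consumer_arg *a = p;
    int data;
    while ((data = chan_get(a->chan)) != END)
        a->sum += data;                     /* stands in for process(data) */
    return NULL;
}

/* One producer, `consumers` consumers; returns the sum of all values
   received across the consumers. */
long run_pipeline(int consumers)
{
    channel c;
    pthread_t prod, cons[MAX_CONSUMERS];
    producer_arg pa;
    consumer_arg ca[MAX_CONSUMERS];
    long total = 0;

    if (consumers < 1) consumers = 1;
    if (consumers > MAX_CONSUMERS) consumers = MAX_CONSUMERS;
    chan_init(&c);
    pa.chan = &c;
    pa.consumers = consumers;
    pthread_create(&prod, NULL, producer, &pa);
    for (int i = 0; i < consumers; i++) {
        ca[i].chan = &c;
        ca[i].sum = 0;
        pthread_create(&cons[i], NULL, consumer, &ca[i]);
    }
    pthread_join(prod, NULL);
    for (int i = 0; i < consumers; i++) {
        pthread_join(cons[i], NULL);
        total += ca[i].sum;
    }
    return total;
}
```

Each value is delivered to exactly one consumer, so the partial sums add up to 0 + 1 + ... + 99 regardless of how the work is split.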

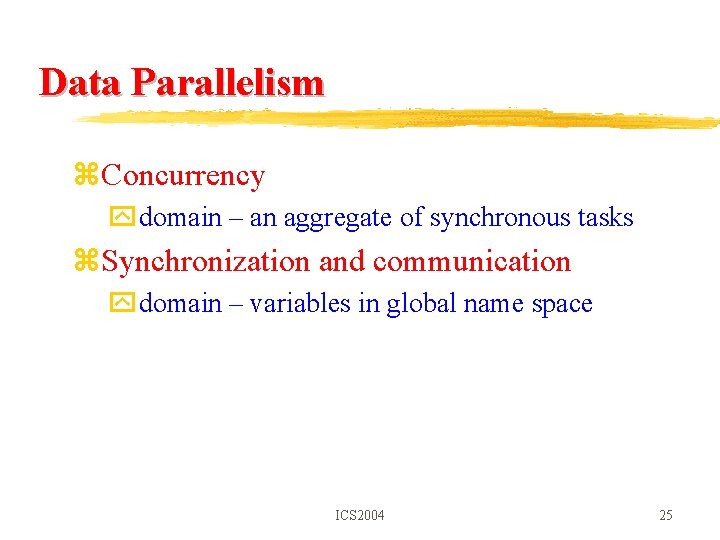

Data Parallelism

- Concurrency
  - domain – an aggregate of synchronous tasks
- Synchronization and communication
  - domain – variables in global name space

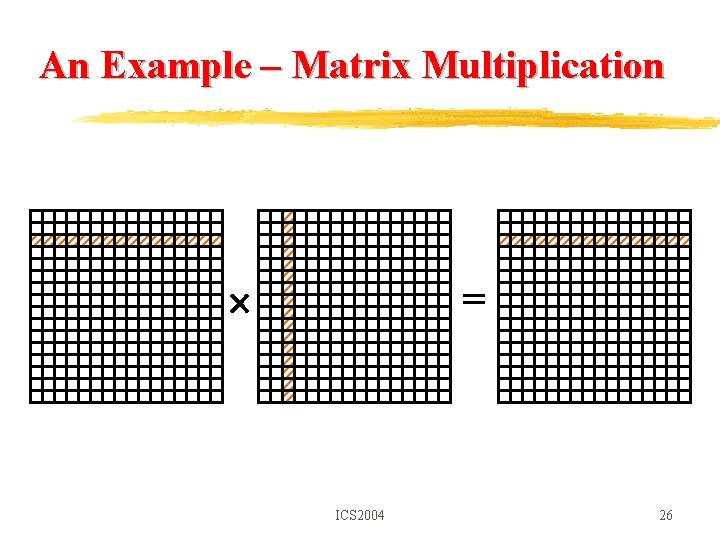

An Example – Matrix Multiplication

(Figure: the matrix product shown as an equation.)

![An Example Matrix Multiplication domain matrixop16 int a16 b16 c16 multiplydistribute in int An Example– Matrix Multiplication domain matrix_op[16] { int a[16], b[16], c[16]; multiply(distribute in int](https://slidetodoc.com/presentation_image/0d03cb209d9cb0ef01e72205eb2c840b/image-27.jpg)

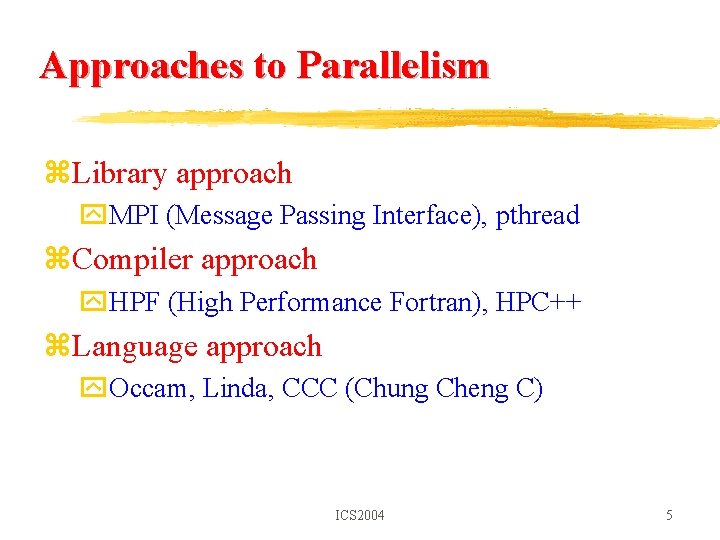

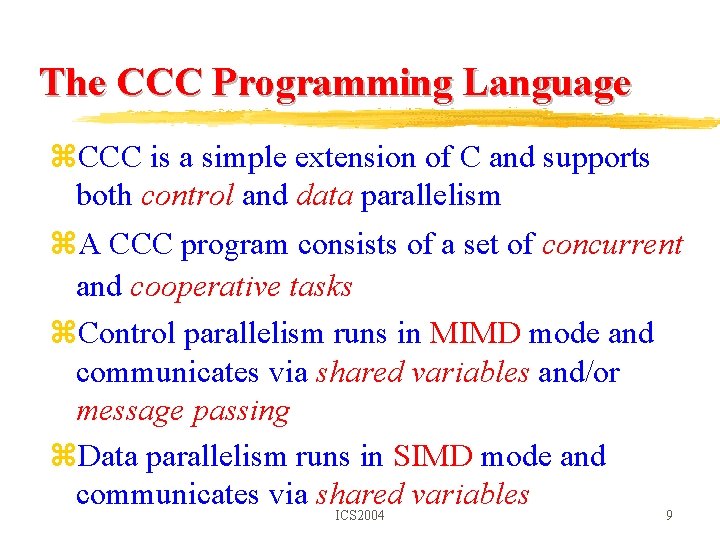

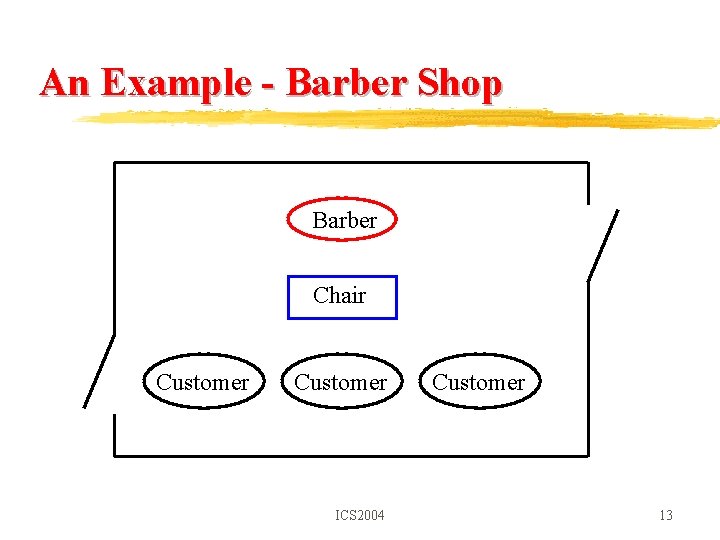

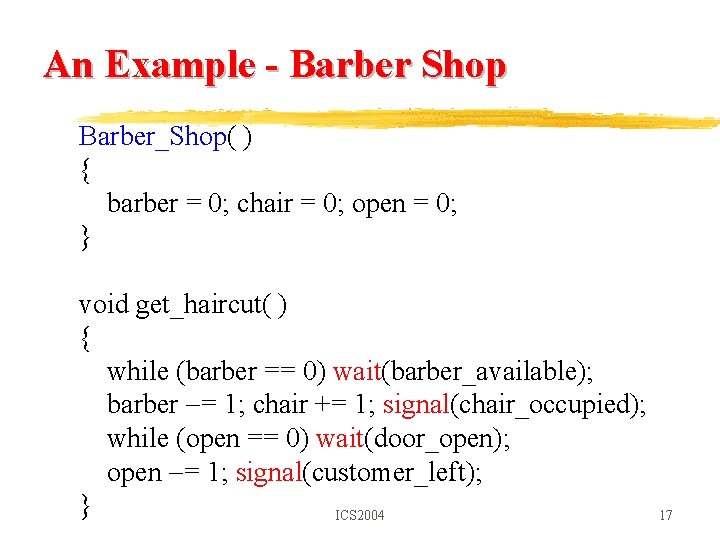

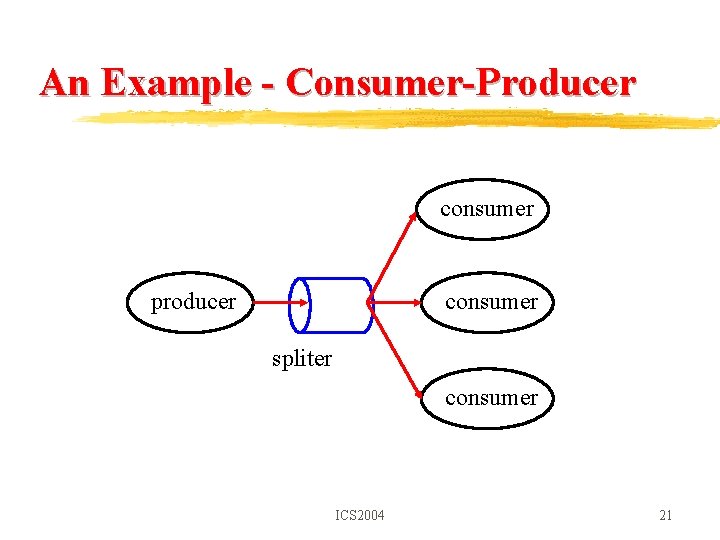

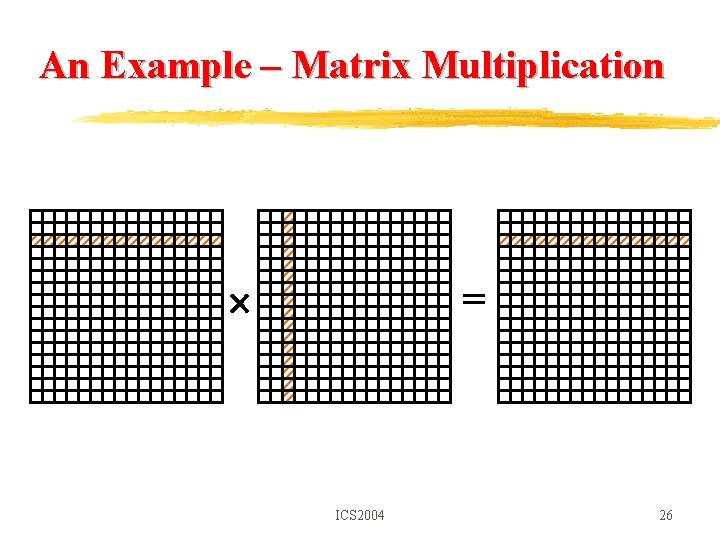

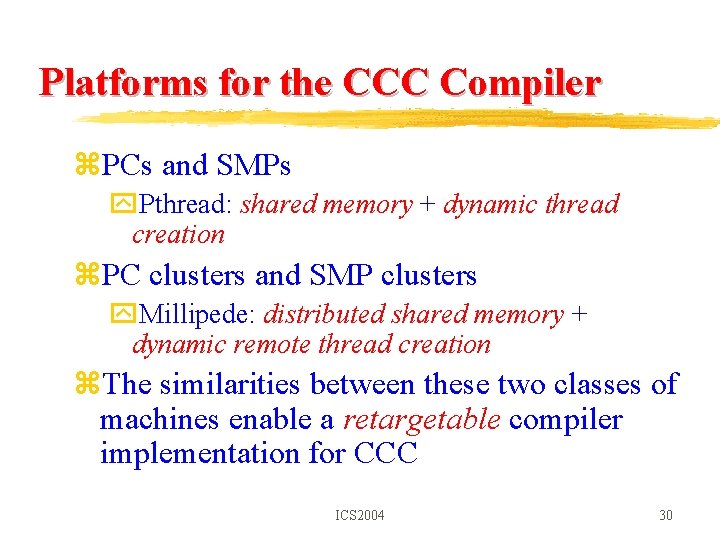

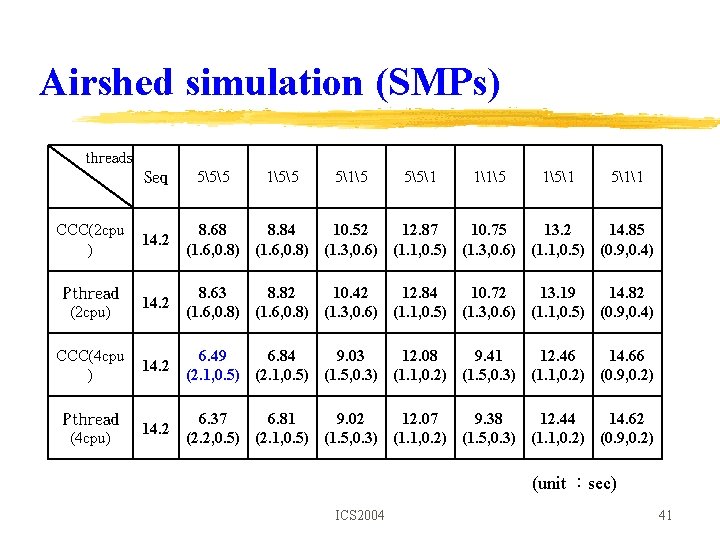

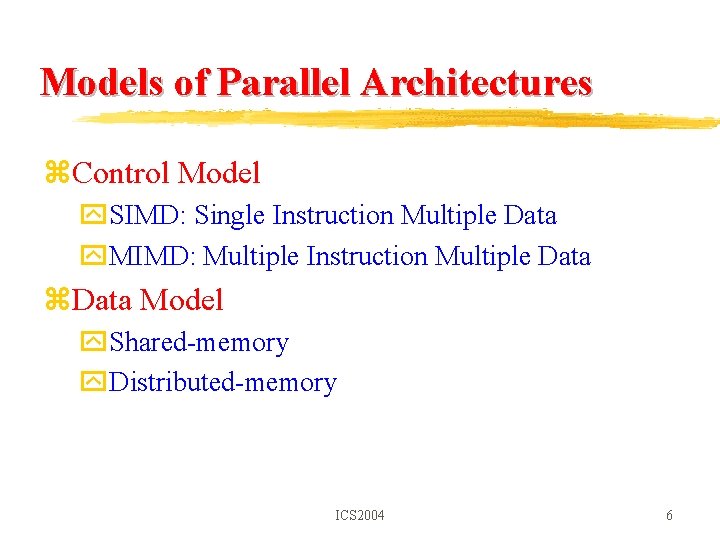

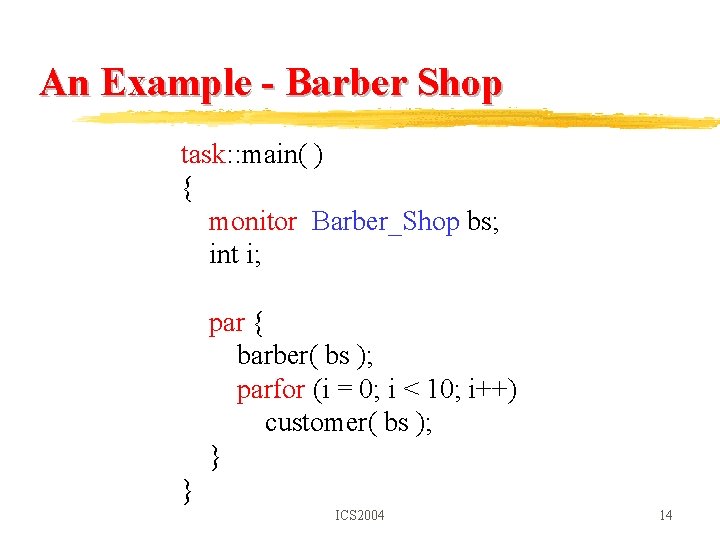

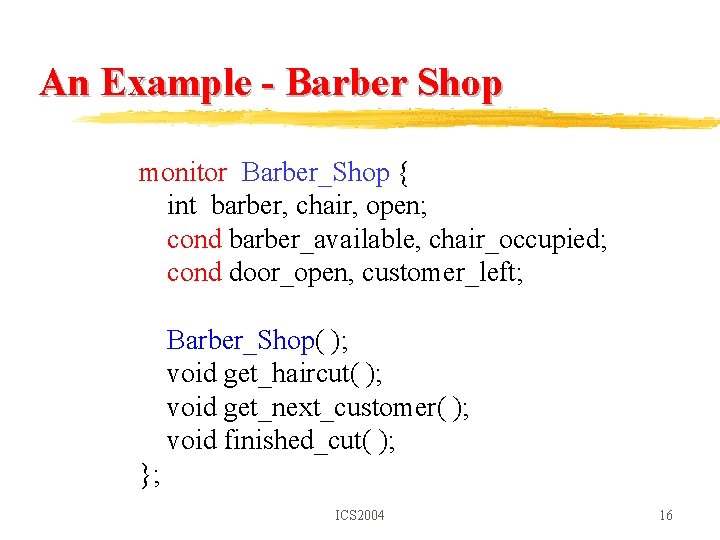

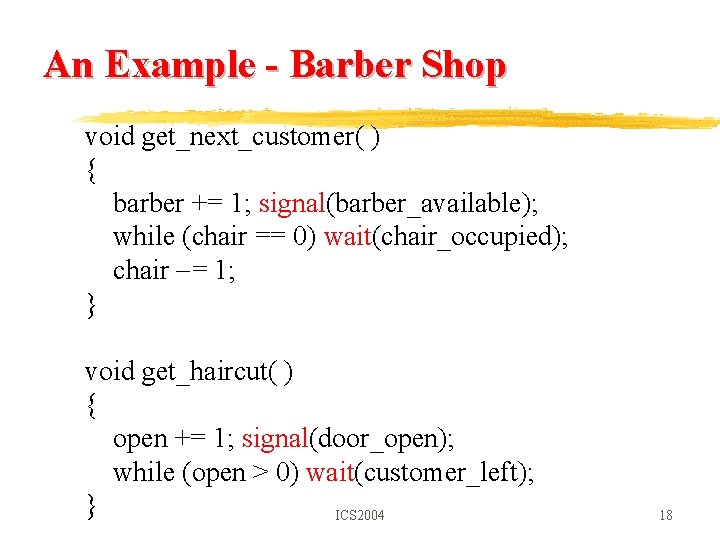

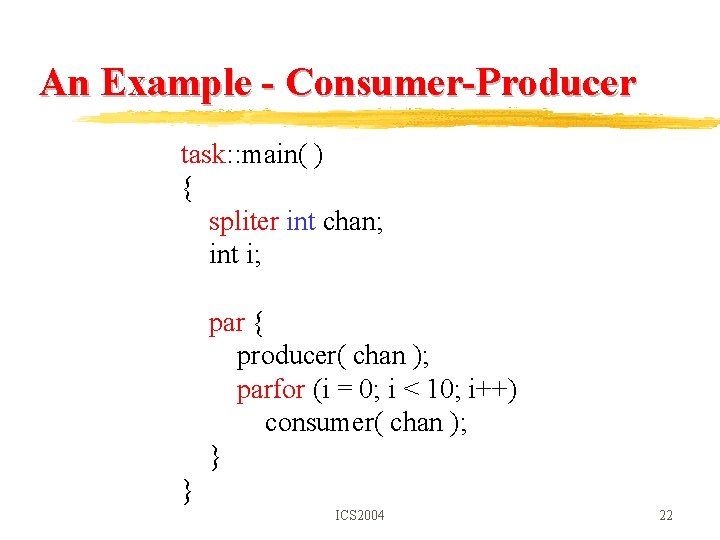

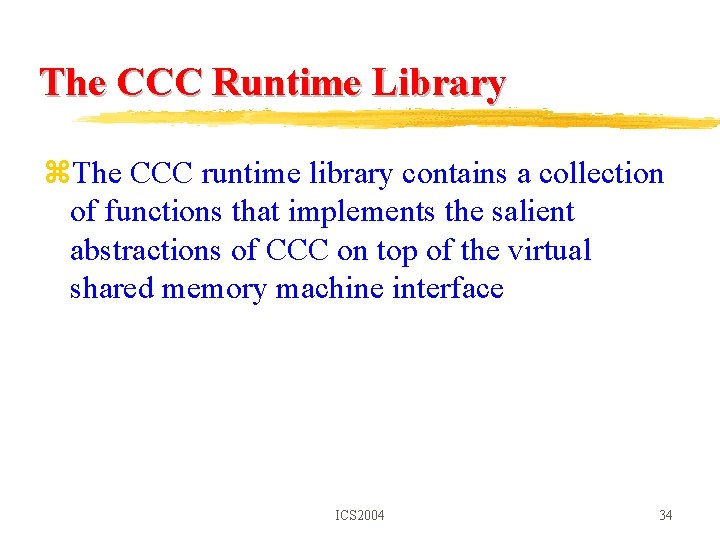

An Example – Matrix Multiplication

    domain matrix_op[16] {
        int a[16], b[16], c[16];
        multiply(distribute in int [16:block][16],
                 distribute in int [16][16:block],
                 distribute out int [16:block][16]);
    };

![An Example Matrix Multiplication task main int A16 B16 C16 domain An Example– Matrix Multiplication task: : main( ) { int A[16], B[16], C[16]; domain](https://slidetodoc.com/presentation_image/0d03cb209d9cb0ef01e72205eb2c840b/image-28.jpg)

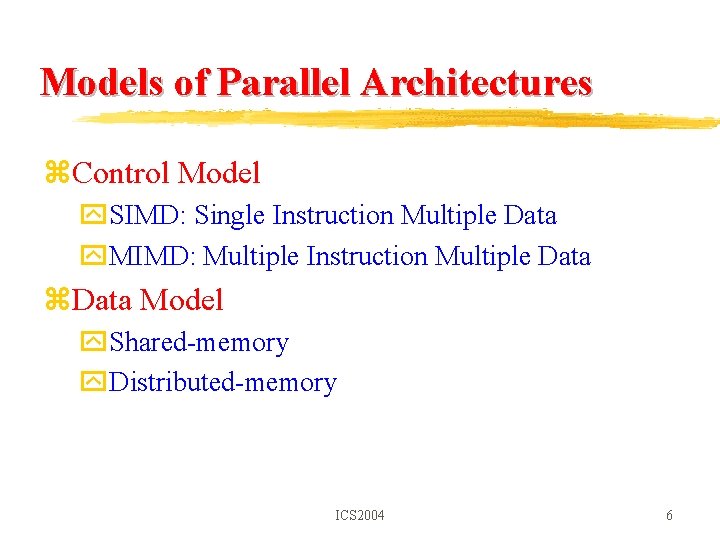

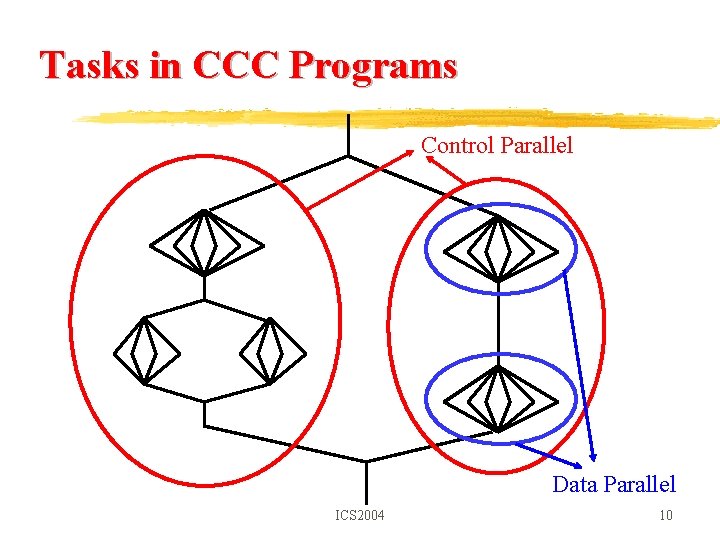

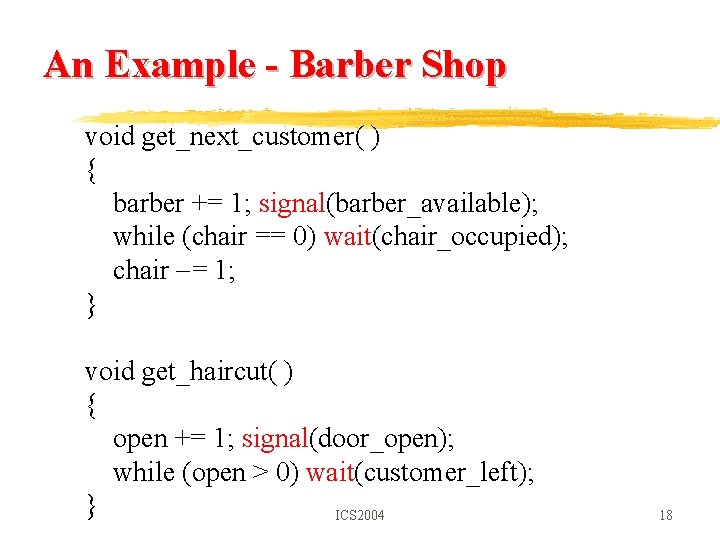

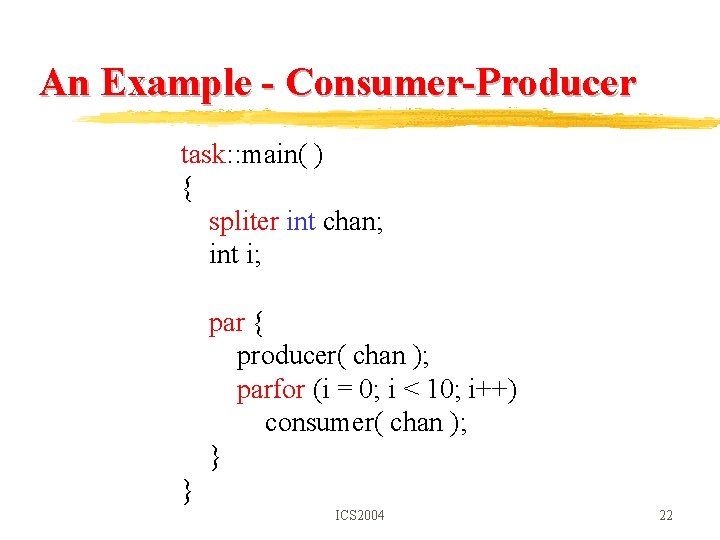

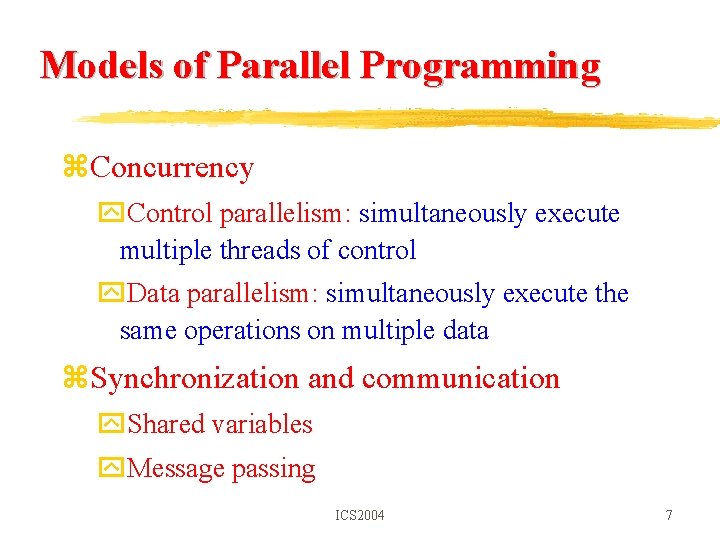

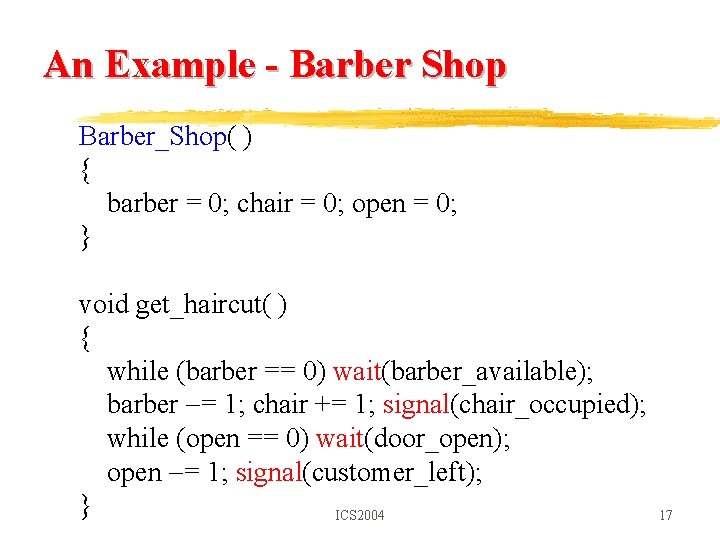

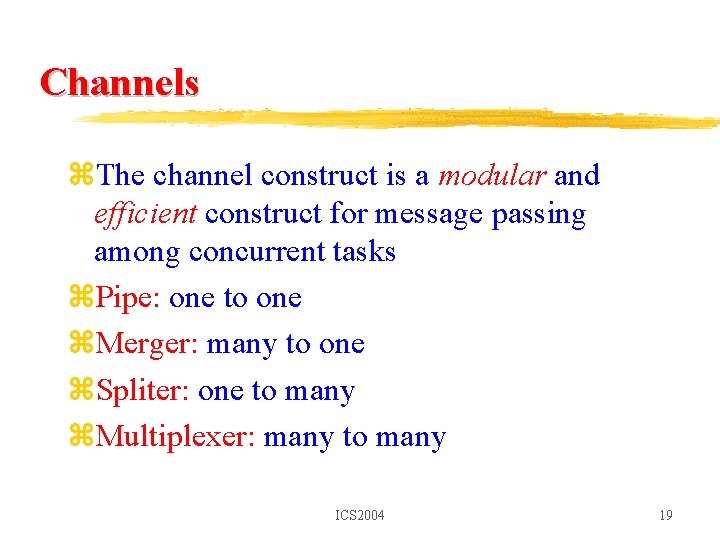

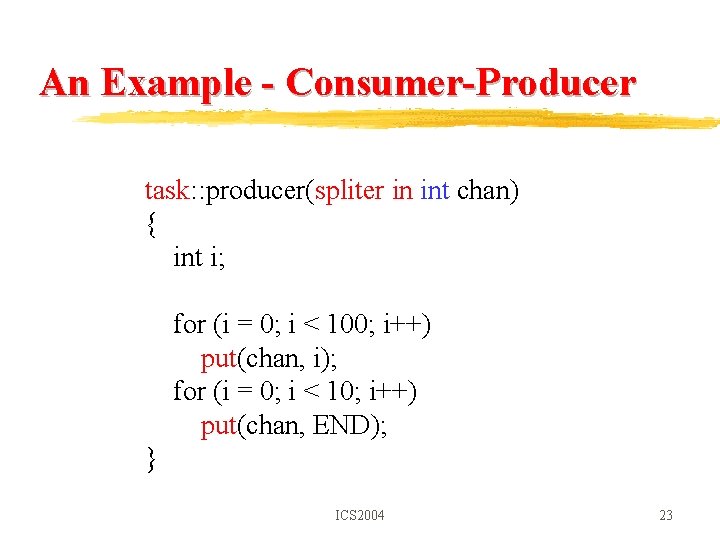

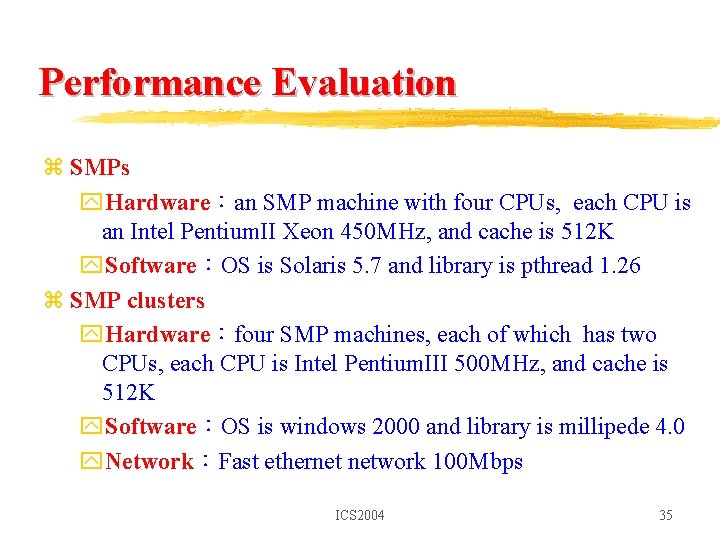

An Example – Matrix Multiplication

    task::main( )
    {
        int A[16], B[16], C[16];
        domain matrix_op m;

        read_array(A);
        read_array(B);
        m.multiply(A, B, C);
        print_array(C);
    }

![An Example Matrix Multiplication matrixop multiplyA B C distribute in int 16 block16 An Example– Matrix Multiplication matrix_op: : multiply(A, B, C) distribute in int [16: block][16]](https://slidetodoc.com/presentation_image/0d03cb209d9cb0ef01e72205eb2c840b/image-29.jpg)

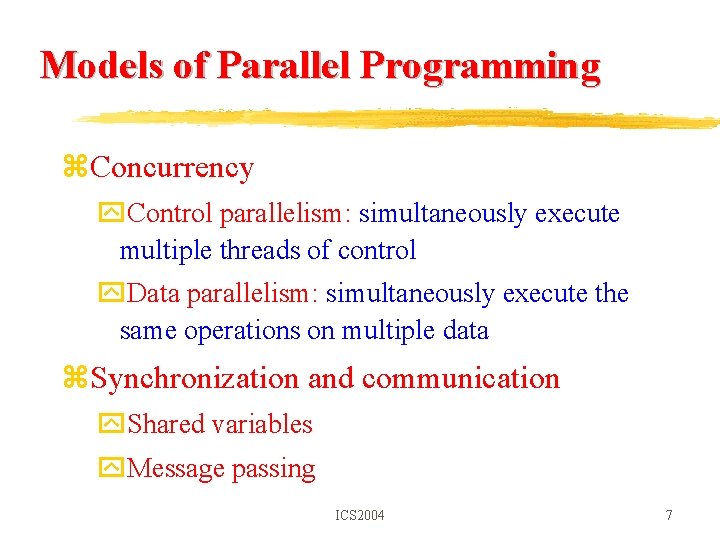

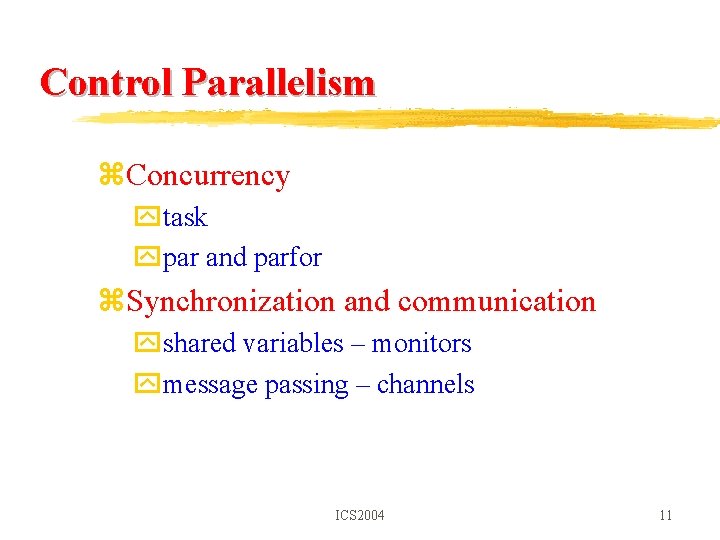

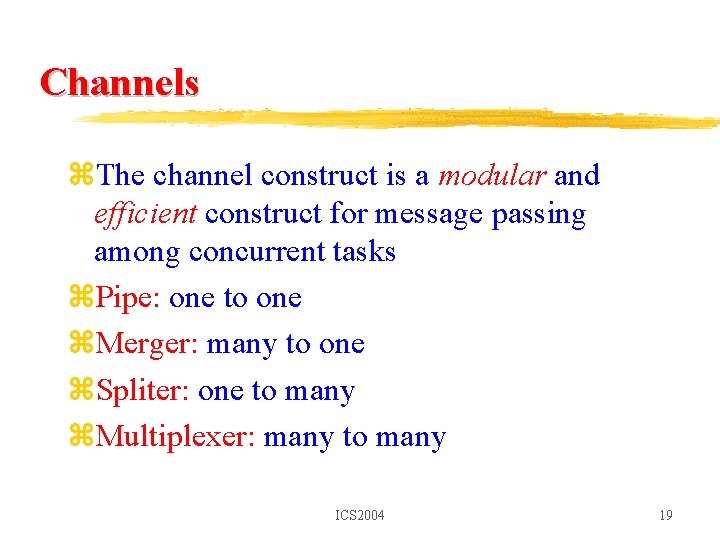

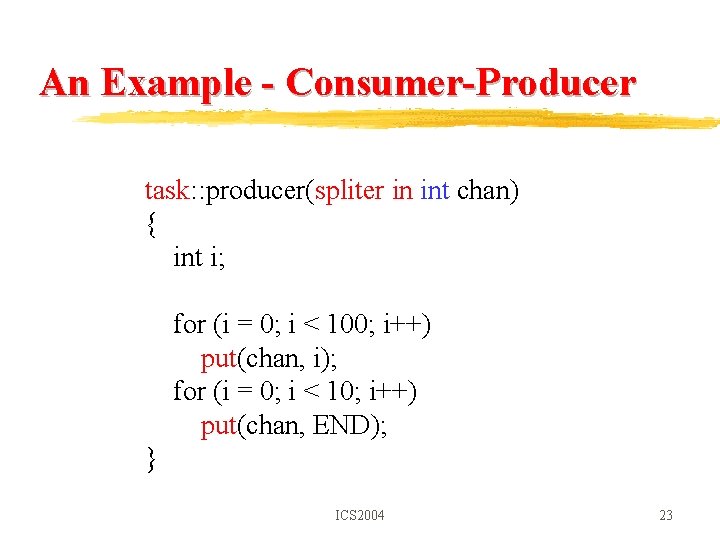

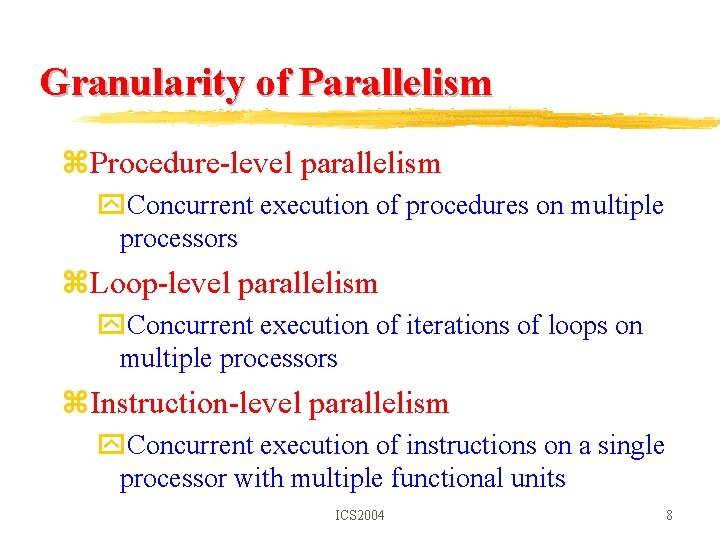

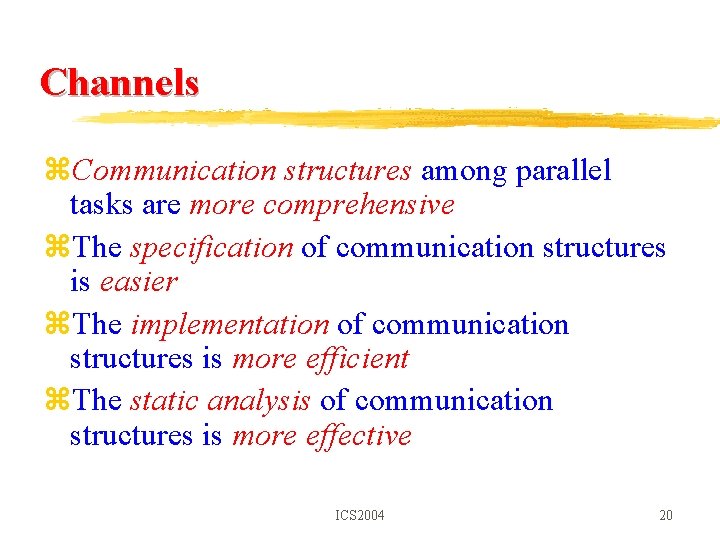

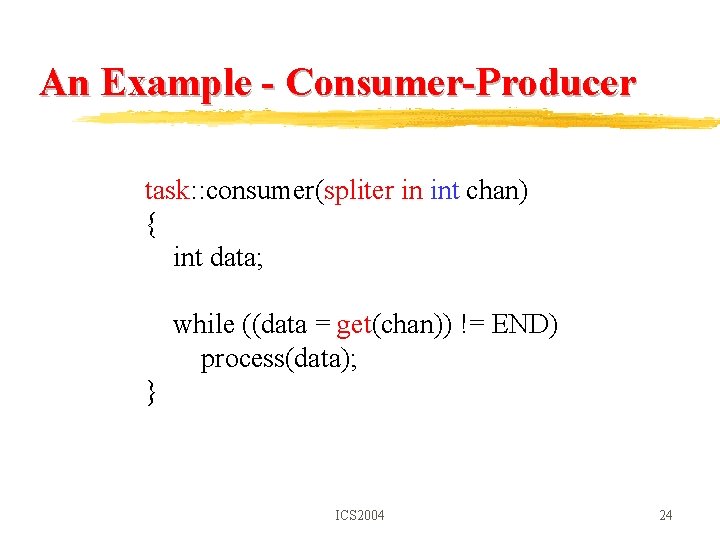

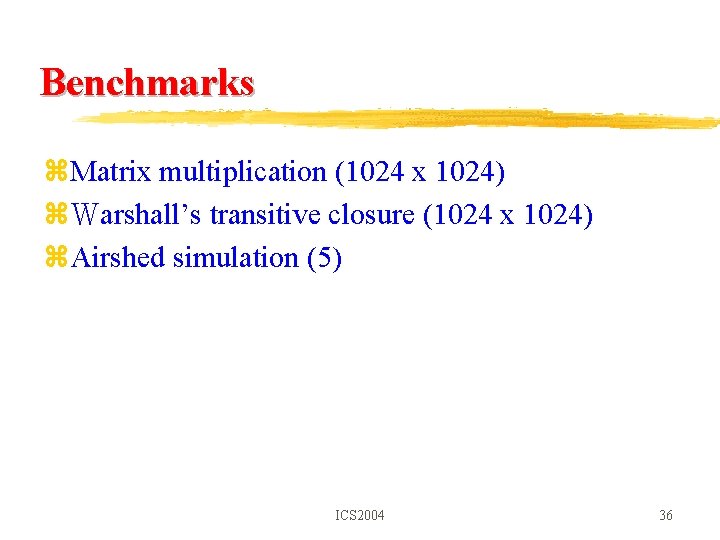

An Example – Matrix Multiplication

    matrix_op::multiply(A, B, C)
    distribute in int [16:block][16] A;
    distribute in int [16][16:block] B;
    distribute out int [16:block][16] C;
    {
        int i, j;

        a := A;
        b := B;
        for (i = 0; i < 16; i++)
            for (c[i] = 0, j = 0; j < 16; j++)
                c[i] += a[j] * matrix_op[i].b[j];
        C := c;
    }
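One way to read the domain version above is that element k of `matrix_op` receives row k of A in `a` and column k of B in `b`, and produces row k of C in `c`. The sketch below expresses that reading in plain C with one POSIX thread per domain element. It is an interpretation, not the compiler's output: the `element_arg` struct, the `element` and `demo_multiply_identity` functions, and the use of full 16 x 16 arrays are assumptions for illustration.

```c
#include <pthread.h>
#include <stddef.h>

#define N 16

typedef struct {
    int k;              /* index of this domain element */
    int (*A)[N];
    int (*B)[N];
    int (*C)[N];
} element_arg;

/* Work of one domain element: compute row k of C. */
static void *element(void *p)
{
    element_arg *e = p;
    int a[N], c[N];

    for (int j = 0; j < N; j++)
        a[j] = e->A[e->k][j];               /* a := row k of A */
    for (int i = 0; i < N; i++) {
        c[i] = 0;
        for (int j = 0; j < N; j++)
            c[i] += a[j] * e->B[j][i];      /* column i of B, i.e. matrix_op[i].b */
    }
    for (int i = 0; i < N; i++)
        e->C[e->k][i] = c[i];               /* C := c for this row */
    return NULL;
}

/* C = A * B, one thread per row; rows of C are disjoint, so the
   threads need no locking. */
void multiply(int A[N][N], int B[N][N], int C[N][N])
{
    pthread_t t[N];
    element_arg arg[N];

    for (int k = 0; k < N; k++) {
        arg[k].k = k;
        arg[k].A = A;
        arg[k].B = B;
        arg[k].C = C;
        pthread_create(&t[k], NULL, element, &arg[k]);
    }
    for (int k = 0; k < N; k++)
        pthread_join(t[k], NULL);
}

/* Multiplies a test matrix by the identity and returns the number of
   entries where the product differs from the input. */
int demo_multiply_identity(void)
{
    static int A[N][N], I[N][N], C[N][N];
    int mismatches = 0;

    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++) {
            A[i][j] = i * N + j;
            I[i][j] = (i == j);
        }
    multiply(A, I, C);
    for (int i = 0; i < N; i++)
        for (int j = 0; j < N; j++)
            if (C[i][j] != A[i][j])
                mismatches++;
    return mismatches;
}
```

Here B is shared read-only memory, which plays the role of the remote access `matrix_op[i].b[j]` in the CCC code.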

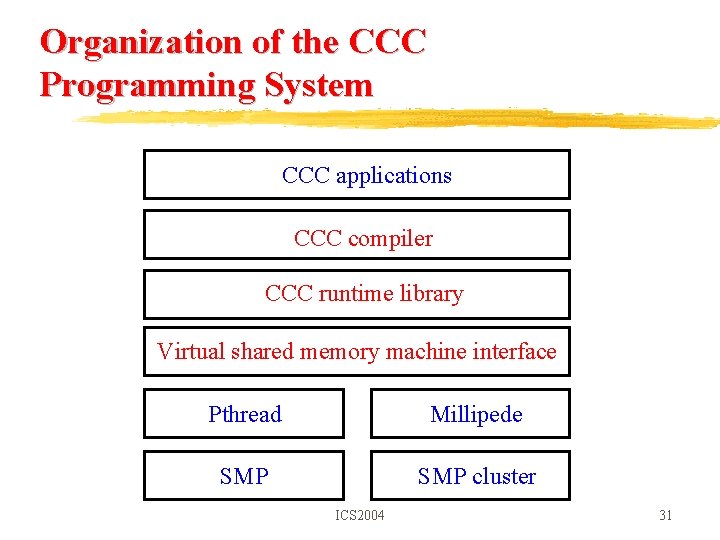

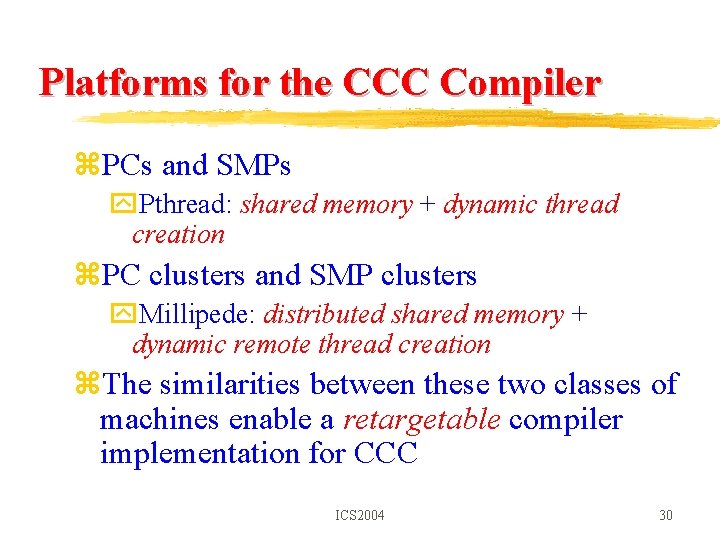

Platforms for the CCC Compiler

- PCs and SMPs
  - Pthread: shared memory + dynamic thread creation
- PC clusters and SMP clusters
  - Millipede: distributed shared memory + dynamic remote thread creation
- The similarities between these two classes of machines enable a retargetable compiler implementation for CCC

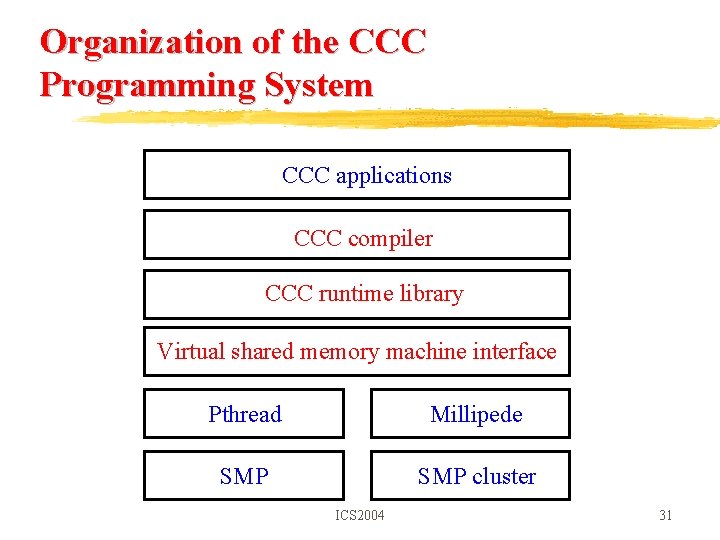

Organization of the CCC Programming System

(Layered diagram, top to bottom)

- CCC applications
- CCC compiler
- CCC runtime library
- Virtual shared memory machine interface
- Pthread, Millipede
- SMP cluster

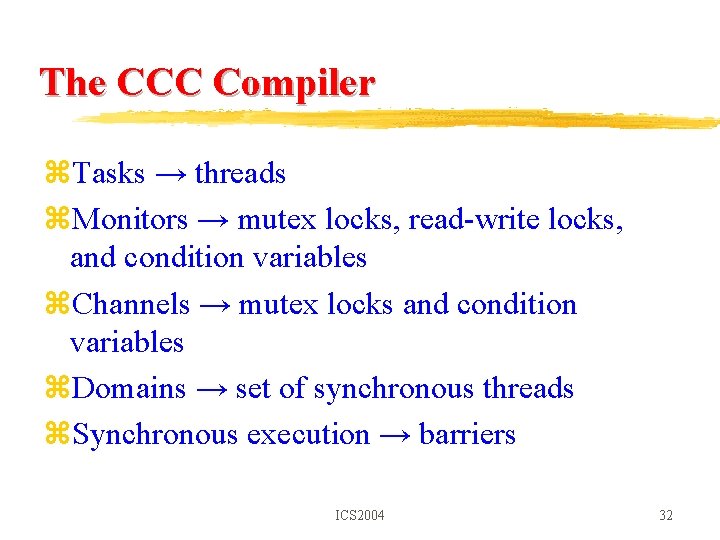

The CCC Compiler

- Tasks → threads
- Monitors → mutex locks, read-write locks, and condition variables
- Channels → mutex locks and condition variables
- Domains → set of synchronous threads
- Synchronous execution → barriers
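The last two mappings (domains to synchronous threads, synchronous execution to barriers) can be illustrated with a POSIX barrier. This is a hedged sketch of the general technique, not the CCC runtime: `NTHREADS`, the `slot` and `seen` arrays, and the function names are made up for the example, and it assumes a platform that provides `pthread_barrier_t` (an optional POSIX feature).

```c
#define _POSIX_C_SOURCE 200112L   /* request the POSIX barrier API */
#include <pthread.h>
#include <stddef.h>

#define NTHREADS 4

static pthread_barrier_t step;
static int slot[NTHREADS];   /* one shared variable per domain element */
static int seen[NTHREADS];   /* what each element read from its neighbour */

static void *lockstep_element(void *p)
{
    int id = *(int *)p;

    slot[id] = id * 10;                     /* step 1: write own slot */
    pthread_barrier_wait(&step);            /* every write precedes any read */
    seen[id] = slot[(id + 1) % NTHREADS];   /* step 2: read neighbour's slot */
    return NULL;
}

/* Returns 1 if every element observed its neighbour's step-1 value. */
int run_lockstep(void)
{
    pthread_t t[NTHREADS];
    int ids[NTHREADS];
    int ok = 1;

    pthread_barrier_init(&step, NULL, NTHREADS);
    for (int i = 0; i < NTHREADS; i++) {
        ids[i] = i;
        pthread_create(&t[i], NULL, lockstep_element, &ids[i]);
    }
    for (int i = 0; i < NTHREADS; i++)
        pthread_join(t[i], NULL);
    pthread_barrier_destroy(&step);

    for (int i = 0; i < NTHREADS; i++)
        if (seen[i] != ((i + 1) % NTHREADS) * 10)
            ok = 0;
    return ok;
}
```

Without the barrier, an element could read its neighbour's slot before the neighbour has written it; the barrier is what makes the two steps behave as if they ran in SIMD lockstep.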

Virtual Shared Memory Machine Interface

- Processor management
- Thread management
- Shared memory allocation
- Mutex locks
- Read-write locks
- Condition variables
- Barriers

The CCC Runtime Library

- The CCC runtime library contains a collection of functions that implements the salient abstractions of CCC on top of the virtual shared memory machine interface

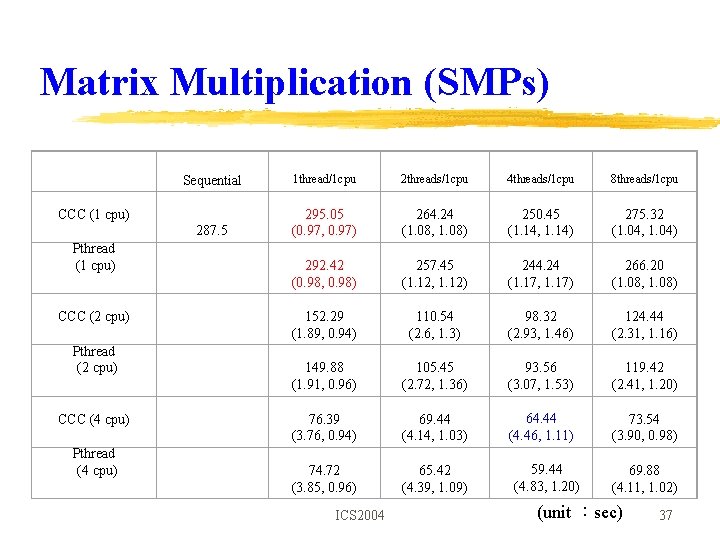

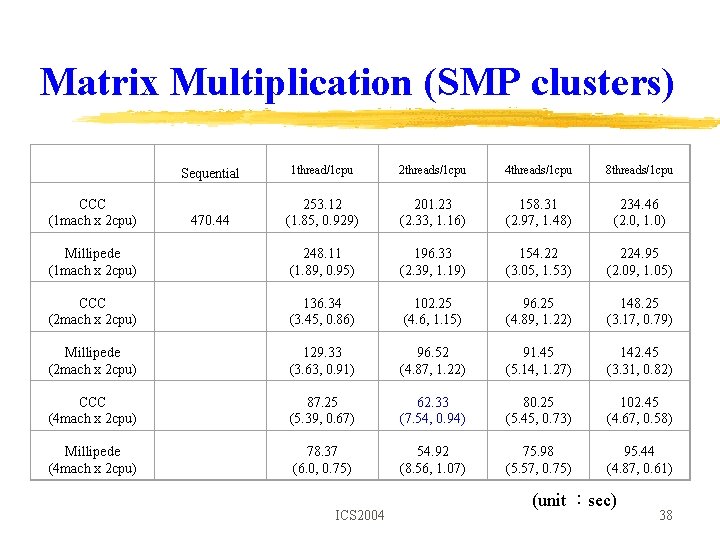

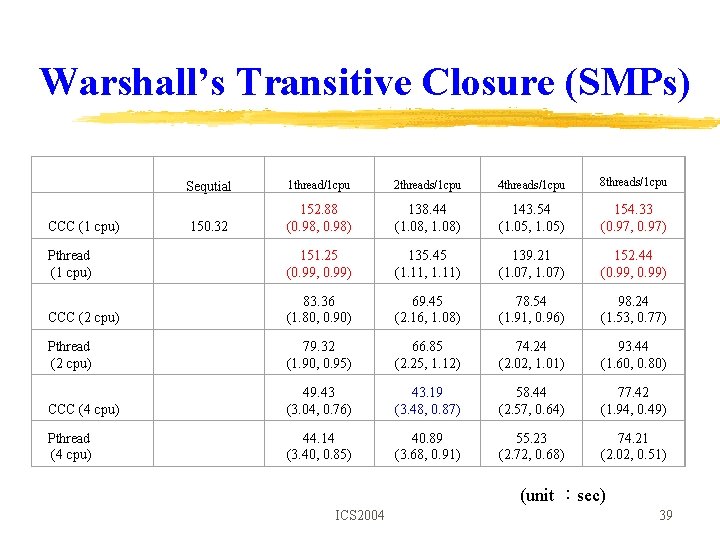

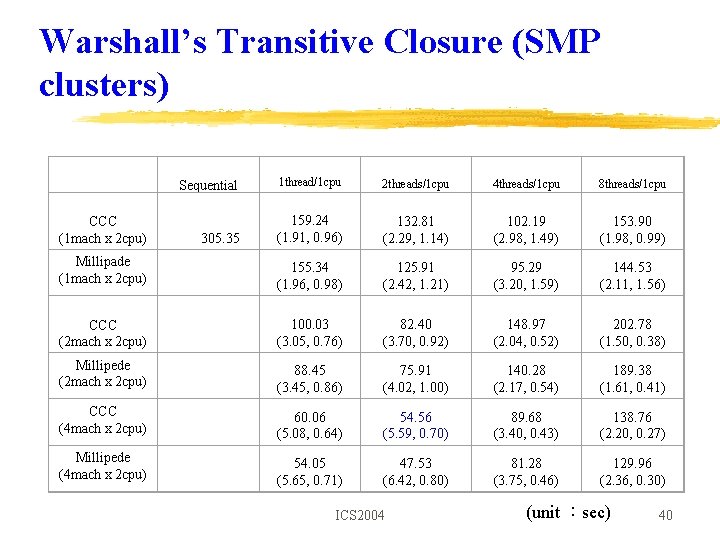

Performance Evaluation

- SMPs
  - Hardware: an SMP machine with four CPUs; each CPU is an Intel Pentium II Xeon 450 MHz with 512 K cache
  - Software: OS is Solaris 5.7 and library is pthread 1.26
- SMP clusters
  - Hardware: four SMP machines, each with two CPUs; each CPU is an Intel Pentium III 500 MHz with 512 K cache
  - Software: OS is Windows 2000 and library is Millipede 4.0
  - Network: Fast Ethernet, 100 Mbps

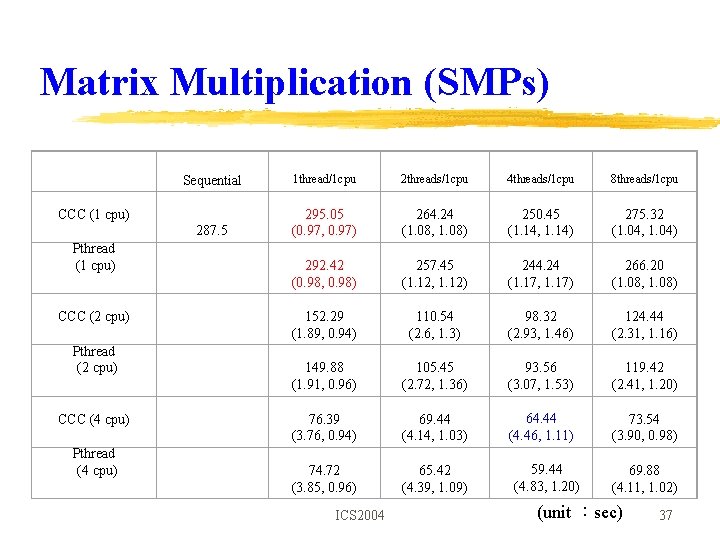

Benchmarks

- Matrix multiplication (1024 x 1024)
- Warshall’s transitive closure (1024 x 1024)
- Airshed simulation (5)

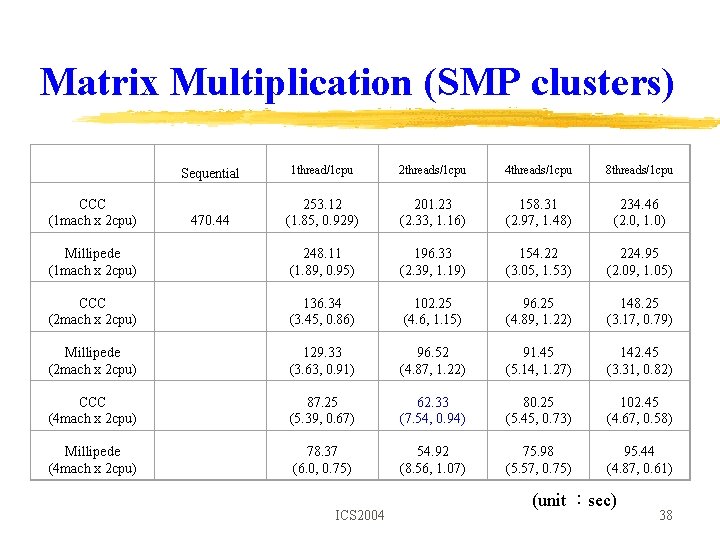

Matrix Multiplication (SMPs)

Sequential: 287.5 (unit: sec)

| | 1 thread/1 cpu | 2 threads/1 cpu | 4 threads/1 cpu | 8 threads/1 cpu |
|---|---|---|---|---|
| CCC (1 cpu) | 295.05 (0.97, 0.97) | 264.24 (1.08, 1.08) | 250.45 (1.14, 1.14) | 275.32 (1.04, 1.04) |
| Pthread (1 cpu) | 292.42 (0.98, 0.98) | 257.45 (1.12, 1.12) | 244.24 (1.17, 1.17) | 266.20 (1.08, 1.08) |
| CCC (2 cpu) | 152.29 (1.89, 0.94) | 110.54 (2.6, 1.3) | 98.32 (2.93, 1.46) | 124.44 (2.31, 1.16) |
| Pthread (2 cpu) | 149.88 (1.91, 0.96) | 105.45 (2.72, 1.36) | 93.56 (3.07, 1.53) | 119.42 (2.41, 1.20) |
| CCC (4 cpu) | 76.39 (3.76, 0.94) | 69.44 (4.14, 1.03) | 64.44 (4.46, 1.11) | 73.54 (3.90, 0.98) |
| Pthread (4 cpu) | 74.72 (3.85, 0.96) | 65.42 (4.39, 1.09) | 59.44 (4.83, 1.20) | 69.88 (4.11, 1.02) |
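The slides do not label the pair in parentheses after each time. The numbers are consistent with reading it as (speedup, efficiency), where speedup is the sequential time divided by the parallel time and efficiency is speedup divided by the number of CPUs. Under that assumption, the entries can be rechecked with two small helper functions (the names are illustrative):

```c
/* Speedup of a parallel run relative to the sequential time. */
double speedup(double t_seq, double t_par)
{
    return t_seq / t_par;
}

/* Efficiency: speedup divided by the number of CPUs used. */
double efficiency(double t_seq, double t_par, int cpus)
{
    return speedup(t_seq, t_par) / cpus;
}
```

For the CCC run on 2 CPUs with 1 thread per CPU, `speedup(287.5, 152.29)` is about 1.89 and `efficiency(287.5, 152.29, 2)` is about 0.94, matching the reported pair (1.89, 0.94). The same check on 4 CPUs, with 287.5 / 76.39, gives about 3.76 and 0.94.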

Matrix Multiplication (SMP clusters)

Sequential: 470.44 (unit: sec)

| | 1 thread/1 cpu | 2 threads/1 cpu | 4 threads/1 cpu | 8 threads/1 cpu |
|---|---|---|---|---|
| CCC (1 mach x 2 cpu) | 253.12 (1.85, 0.929) | 201.23 (2.33, 1.16) | 158.31 (2.97, 1.48) | 234.46 (2.0, 1.0) |
| Millipede (1 mach x 2 cpu) | 248.11 (1.89, 0.95) | 196.33 (2.39, 1.19) | 154.22 (3.05, 1.53) | 224.95 (2.09, 1.05) |
| CCC (2 mach x 2 cpu) | 136.34 (3.45, 0.86) | 102.25 (4.6, 1.15) | 96.25 (4.89, 1.22) | 148.25 (3.17, 0.79) |
| Millipede (2 mach x 2 cpu) | 129.33 (3.63, 0.91) | 96.52 (4.87, 1.22) | 91.45 (5.14, 1.27) | 142.45 (3.31, 0.82) |
| CCC (4 mach x 2 cpu) | 87.25 (5.39, 0.67) | 62.33 (7.54, 0.94) | 80.25 (5.45, 0.73) | 102.45 (4.67, 0.58) |
| Millipede (4 mach x 2 cpu) | 78.37 (6.0, 0.75) | 54.92 (8.56, 1.07) | 75.98 (5.57, 0.75) | 95.44 (4.87, 0.61) |

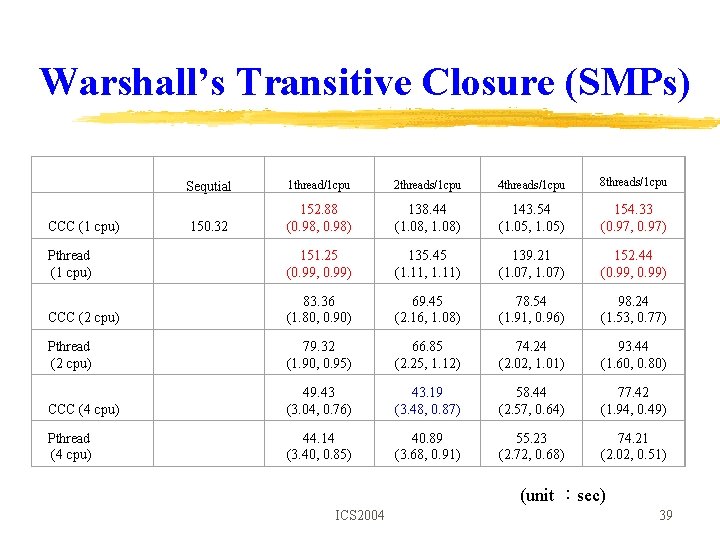

Warshall’s Transitive Closure (SMPs)

Sequential: 150.32 (unit: sec)

| | 1 thread/1 cpu | 2 threads/1 cpu | 4 threads/1 cpu | 8 threads/1 cpu |
|---|---|---|---|---|
| CCC (1 cpu) | 152.88 (0.98, 0.98) | 138.44 (1.08, 1.08) | 143.54 (1.05, 1.05) | 154.33 (0.97, 0.97) |
| Pthread (1 cpu) | 151.25 (0.99, 0.99) | 135.45 (1.11, 1.11) | 139.21 (1.07, 1.07) | 152.44 (0.99, 0.99) |
| CCC (2 cpu) | 83.36 (1.80, 0.90) | 69.45 (2.16, 1.08) | 78.54 (1.91, 0.96) | 98.24 (1.53, 0.77) |
| Pthread (2 cpu) | 79.32 (1.90, 0.95) | 66.85 (2.25, 1.12) | 74.24 (2.02, 1.01) | 93.44 (1.60, 0.80) |
| CCC (4 cpu) | 49.43 (3.04, 0.76) | 43.19 (3.48, 0.87) | 58.44 (2.57, 0.64) | 77.42 (1.94, 0.49) |
| Pthread (4 cpu) | 44.14 (3.40, 0.85) | 40.89 (3.68, 0.91) | 55.23 (2.72, 0.68) | 74.21 (2.02, 0.51) |

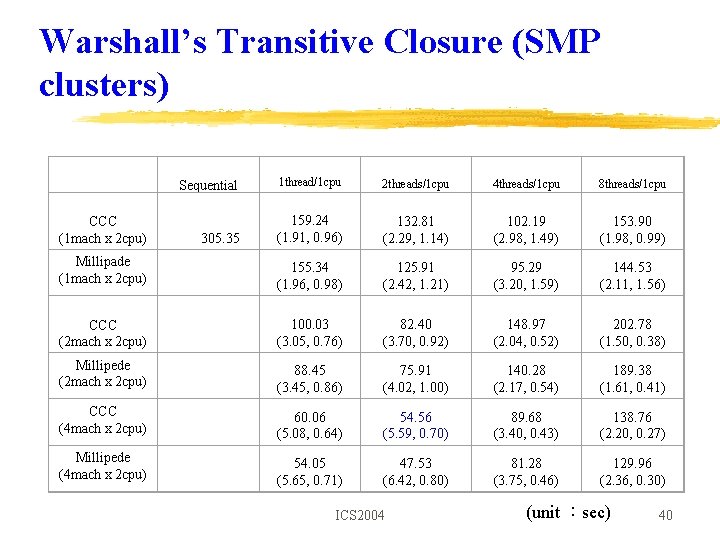

Warshall’s Transitive Closure (SMP clusters)

Sequential: 305.35 (unit: sec)

| | 1 thread/1 cpu | 2 threads/1 cpu | 4 threads/1 cpu | 8 threads/1 cpu |
|---|---|---|---|---|
| CCC (1 mach x 2 cpu) | 159.24 (1.91, 0.96) | 132.81 (2.29, 1.14) | 102.19 (2.98, 1.49) | 153.90 (1.98, 0.99) |
| Millipede (1 mach x 2 cpu) | 155.34 (1.96, 0.98) | 125.91 (2.42, 1.21) | 95.29 (3.20, 1.59) | 144.53 (2.11, 1.56) |
| CCC (2 mach x 2 cpu) | 100.03 (3.05, 0.76) | 82.40 (3.70, 0.92) | 148.97 (2.04, 0.52) | 202.78 (1.50, 0.38) |
| Millipede (2 mach x 2 cpu) | 88.45 (3.45, 0.86) | 75.91 (4.02, 1.00) | 140.28 (2.17, 0.54) | 189.38 (1.61, 0.41) |
| CCC (4 mach x 2 cpu) | 60.06 (5.08, 0.64) | 54.56 (5.59, 0.70) | 89.68 (3.40, 0.43) | 138.76 (2.20, 0.27) |
| Millipede (4 mach x 2 cpu) | 54.05 (5.65, 0.71) | 47.53 (6.42, 0.80) | 81.28 (3.75, 0.46) | 129.96 (2.36, 0.30) |

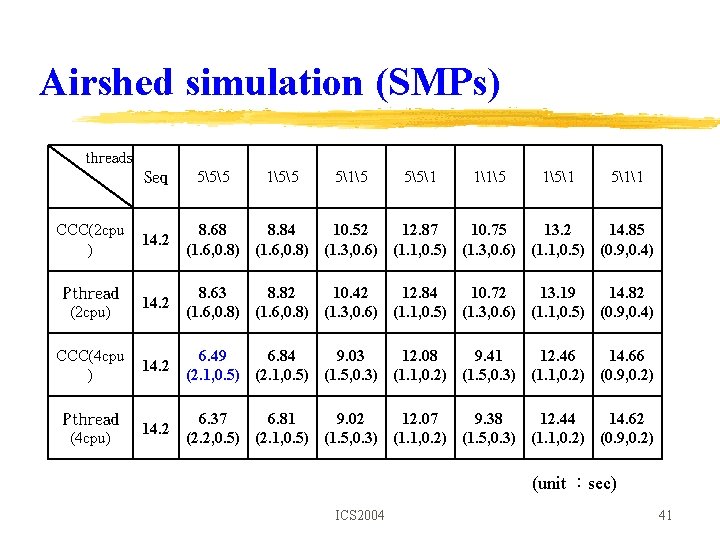

Airshed simulation (SMPs)

Unit: sec. Columns are thread configurations.

| threads | Seq | 555 | 155 | 515 | 551 | 115 | 151 | 511 | Pairs (as listed on the slide) |
|---|---|---|---|---|---|---|---|---|---|
| CCC (2 cpu) | 14.2 | 8.68 | 8.84 | 10.52 | 12.87 | 10.75 | 13.2 | 14.85 | (1.6, 0.8) (1.3, 0.6) (1.1, 0.5) (0.9, 0.4) |
| Pthread (2 cpu) | 14.2 | 8.63 | 8.82 | 10.42 | 12.84 | 10.72 | 13.19 | 14.82 | (1.6, 0.8) (1.3, 0.6) (1.1, 0.5) (0.9, 0.4) |
| CCC (4 cpu) | 14.2 | 6.49 | 6.84 | 9.03 | 12.08 | 9.41 | 12.46 | 14.66 | (2.1, 0.5) (1.5, 0.3) (1.1, 0.2) (0.9, 0.2) |
| Pthread (4 cpu) | 14.2 | 6.37 | 6.81 | 9.02 | 12.07 | 9.38 | 12.44 | 14.62 | (2.2, 0.5) (2.1, 0.5) (1.5, 0.3) (1.1, 0.2) (0.9, 0.2) |

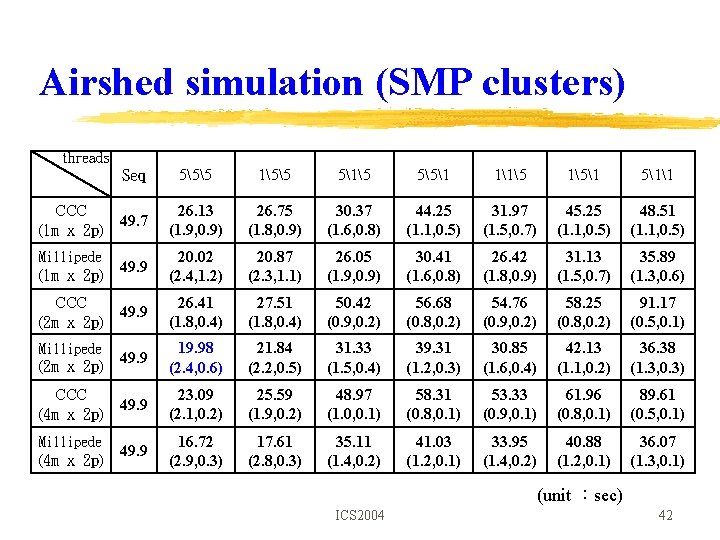

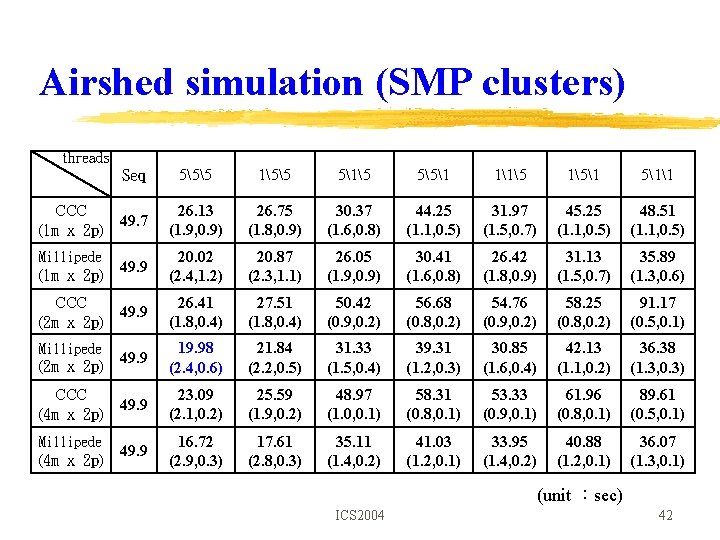

Airshed simulation (SMP clusters)

Unit: sec. Columns are thread configurations.

| threads | Seq | 555 | 155 | 515 | 551 | 115 | 151 | 511 |
|---|---|---|---|---|---|---|---|---|
| CCC (1 m x 2 p) | 49.7 | 26.13 (1.9, 0.9) | 26.75 (1.8, 0.9) | 30.37 (1.6, 0.8) | 44.25 (1.1, 0.5) | 31.97 (1.5, 0.7) | 45.25 (1.1, 0.5) | 48.51 (1.1, 0.5) |
| Millipede (1 m x 2 p) | 49.9 | 20.02 (2.4, 1.2) | 20.87 (2.3, 1.1) | 26.05 (1.9, 0.9) | 30.41 (1.6, 0.8) | 26.42 (1.8, 0.9) | 31.13 (1.5, 0.7) | 35.89 (1.3, 0.6) |
| CCC (2 m x 2 p) | 49.9 | 26.41 (1.8, 0.4) | 27.51 (1.8, 0.4) | 50.42 (0.9, 0.2) | 56.68 (0.8, 0.2) | 54.76 (0.9, 0.2) | 58.25 (0.8, 0.2) | 91.17 (0.5, 0.1) |
| Millipede (2 m x 2 p) | 49.9 | 19.98 (2.4, 0.6) | 21.84 (2.2, 0.5) | 31.33 (1.5, 0.4) | 39.31 (1.2, 0.3) | 30.85 (1.6, 0.4) | 42.13 (1.1, 0.2) | 36.38 (1.3, 0.3) |
| CCC (4 m x 2 p) | 49.9 | 23.09 (2.1, 0.2) | 25.59 (1.9, 0.2) | 48.97 (1.0, 0.1) | 58.31 (0.8, 0.1) | 53.33 (0.9, 0.1) | 61.96 (0.8, 0.1) | 89.61 (0.5, 0.1) |
| Millipede (4 m x 2 p) | 49.9 | 16.72 (2.9, 0.3) | 17.61 (2.8, 0.3) | 35.11 (1.4, 0.2) | 41.03 (1.2, 0.1) | 33.95 (1.4, 0.2) | 40.88 (1.2, 0.1) | 36.07 (1.3, 0.1) |

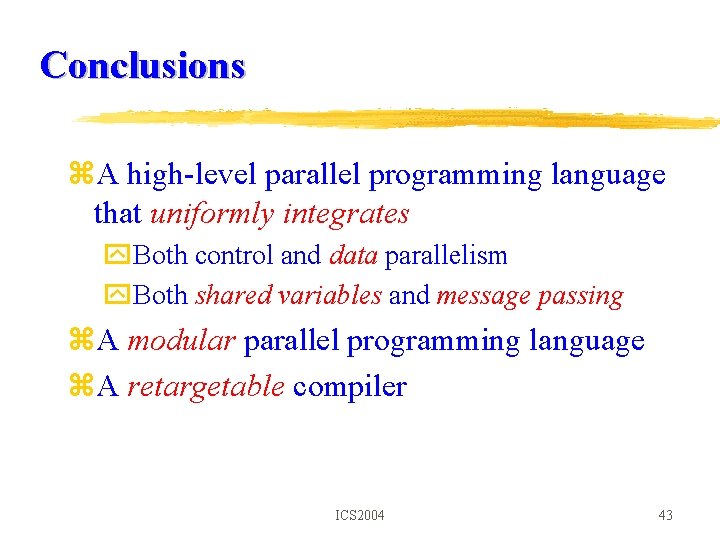

Conclusions

- A high-level parallel programming language that uniformly integrates
  - Both control and data parallelism
  - Both shared variables and message passing
- A modular parallel programming language
- A retargetable compiler