Deploying Deep Learning Models on GPU Enabled Kubernetes

Mathew Salvaris (@MSalvaris) & Fidan Boylu Uz (@FidanBoyluUz), Senior Data Scientists at Microsoft

• Basics of deployment: payload, batching, HTTP, Kubernetes
• GPU/CPU comparison for inference
• Deployment on Kubernetes
  1. Using kubectl
  2. Using AzureML
  3. Using Kubeflow and TF Serving

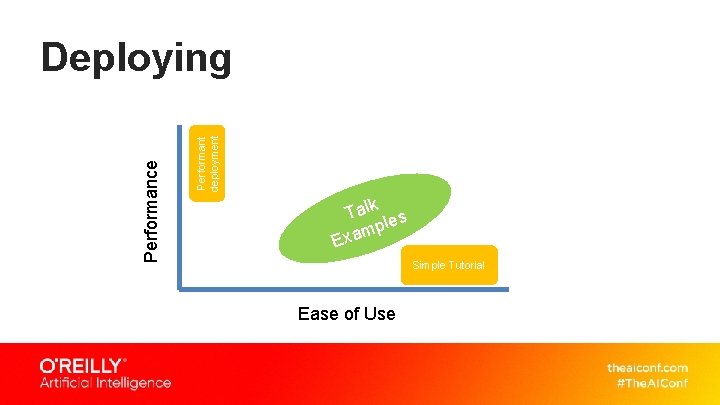

[Figure: performance vs. ease of use, positioning a simple tutorial, examples, performant deployment, and this deployment talk]

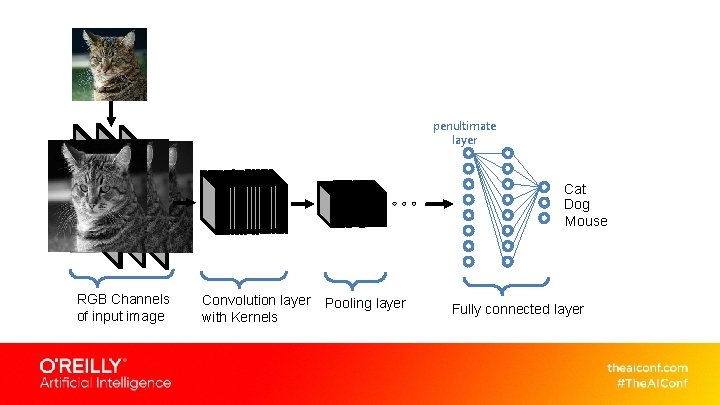

[Figure: CNN for image classification: RGB channels of the input image, a convolution layer with kernels, a pooling layer, and a fully connected (penultimate) layer feeding the output classes Cat, Dog and Mouse]

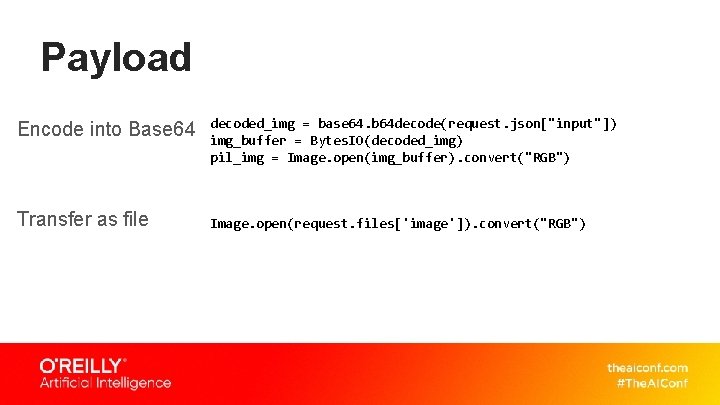

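Payload

Encode into Base64:

```python
import base64
from io import BytesIO
from flask import request  # the snippets run inside a Flask request handler
from PIL import Image

decoded_img = base64.b64decode(request.json["input"])
img_buffer = BytesIO(decoded_img)
pil_img = Image.open(img_buffer).convert("RGB")
```

Transfer as file:

```python
pil_img = Image.open(request.files["image"]).convert("RGB")
```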

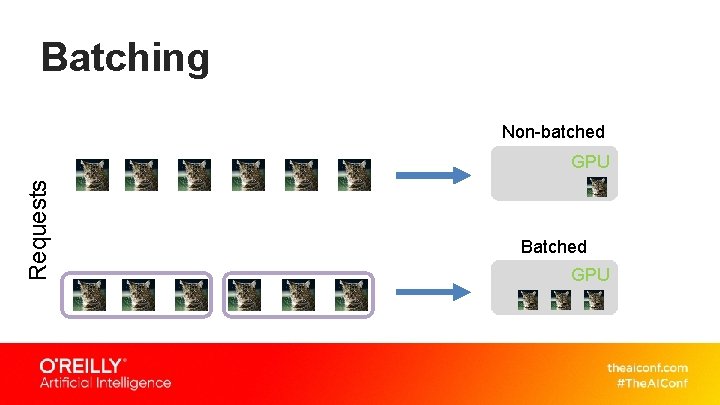
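Base64 lets the image travel inside a JSON body, at the cost of about a third more bytes on the wire (every 3 input bytes become 4 ASCII characters). A minimal sketch of the client-side encoding that pairs with the server-side decoding above, using only the standard library; the `"input"` key mirrors the slide, while `encode_payload` and `decode_payload` are hypothetical helper names.

```python
import base64
import json


def encode_payload(image_bytes: bytes) -> str:
    """Client side: wrap raw image bytes in a JSON body under the "input" key."""
    return json.dumps({"input": base64.b64encode(image_bytes).decode("ascii")})


def decode_payload(body: str) -> bytes:
    """Server side: recover the raw image bytes from the JSON body."""
    return base64.b64decode(json.loads(body)["input"])


if __name__ == "__main__":
    raw = bytes(range(256)) * 12  # 3072 bytes standing in for a JPEG file
    body = encode_payload(raw)
    assert decode_payload(body) == raw
    print(len(raw), len(json.loads(body)["input"]))  # 3072 4096
```

Sending the image as a multipart file (the `request.files` route) avoids this size inflation, which matters more as images get larger.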

Batching

[Figure: non-batched requests reaching the GPU one at a time vs. batched requests processed by the GPU together]

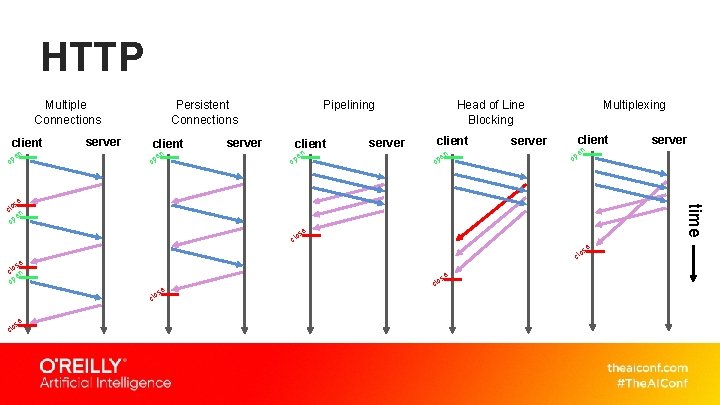
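Batching amortizes the fixed cost of a GPU forward pass over several images. A minimal sketch, assuming a hypothetical `predict_batch` callable that takes a list of preprocessed inputs and returns one prediction per input; real serving stacks usually also wait a few milliseconds to fill a batch, which this sketch omits.

```python
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched_inference(
    inputs: Sequence[T],
    predict_batch: Callable[[List[T]], List[R]],
    max_batch_size: int,
) -> List[R]:
    """Call predict_batch once per chunk of at most max_batch_size inputs."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    outputs: List[R] = []
    for start in range(0, len(inputs), max_batch_size):
        batch = list(inputs[start:start + max_batch_size])
        outputs.extend(predict_batch(batch))
    return outputs


if __name__ == "__main__":
    calls = []

    def fake_gpu_model(batch):
        # Hypothetical stand-in for a model: records each forward pass and doubles inputs
        calls.append(len(batch))
        return [x * 2 for x in batch]

    print(batched_inference(list(range(10)), fake_gpu_model, max_batch_size=4))
    print(calls)  # [4, 4, 2]: three forward passes instead of ten
```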

HTTP

[Figure: client/server timelines (connection open and close over time) comparing multiple connections, persistent connections, pipelining and multiplexing, illustrating head-of-line blocking]
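Reusing one connection saves a TCP handshake per scoring request. The sketch below is a local stand-in, not the talk's setup: it starts a stdlib `http.server` as a mock scoring service (the `/score` path and JSON body are hypothetical) and counts how many distinct client sockets the server sees with and without reuse. `http.client.HTTPConnection` keeps its socket open between requests as long as each response is read fully and the server speaks HTTP/1.1.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class MockScoreHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # keep-alive is the default only under HTTP/1.1

    def do_POST(self):
        self.rfile.read(int(self.headers.get("Content-Length", 0)))
        self.server.client_ports.append(self.client_address[1])  # one port per TCP connection
        body = b'{"prediction": "cat"}'
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def count_connections(n_requests: int, persistent: bool) -> int:
    """Send n_requests POSTs and return how many TCP connections the server saw."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), MockScoreHandler)
    server.client_ports = []
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    conn = None
    try:
        for _ in range(n_requests):
            if conn is None or not persistent:
                if conn is not None:
                    conn.close()  # non-persistent: tear down and reconnect every time
                conn = http.client.HTTPConnection(host, port, timeout=5)
            conn.request("POST", "/score", body=b'{"input": "..."}',
                         headers={"Content-Type": "application/json"})
            conn.getresponse().read()  # drain the body so the socket can be reused
    finally:
        if conn is not None:
            conn.close()
        server.shutdown()
        server.server_close()
    return len(set(server.client_ports))


if __name__ == "__main__":
    print(count_connections(5, persistent=True))   # 1
    print(count_connections(5, persistent=False))  # one connection per request
```

Pipelining and multiplexing go further by overlapping requests on that single connection; HTTP/2 multiplexing also avoids the head-of-line blocking that HTTP/1.1 pipelining suffers from.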

Kubernetes

[Figure: a Kubernetes cluster made up of nodes]

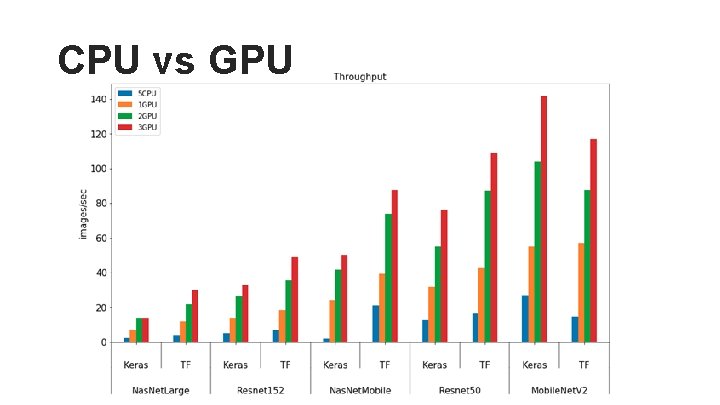

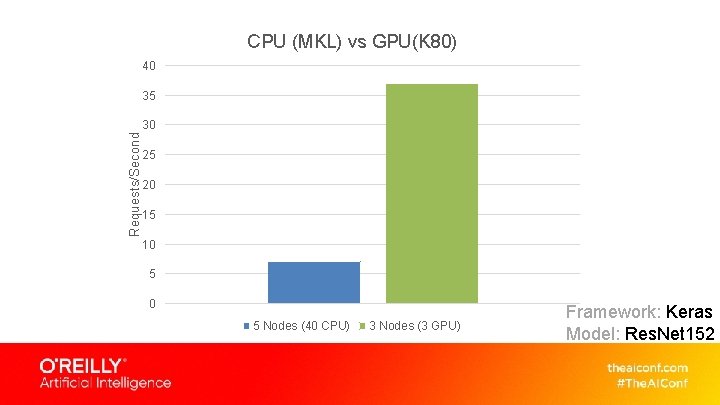

CPU vs GPU

[Figure: throughput in requests/second, CPU (MKL) vs. GPU (K80): a CPU cluster with 40 CPUs vs. 3 nodes with 3 GPUs. Framework: Keras; model: ResNet152]
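Throughput numbers like these come from firing concurrent requests at the service and dividing completed requests by wall-clock time. The talk does not show its load-testing code; the sketch below is a hypothetical harness in which `score_fn` stands for whatever sends one request (for example an HTTP POST to the scoring endpoint) and returns once the response arrives.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Sequence


def requests_per_second(
    score_fn: Callable[[object], object], payloads: Sequence, concurrency: int
) -> float:
    """Send all payloads using `concurrency` worker threads; return completed requests per second."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(score_fn, payloads))  # blocks until every request finishes
    elapsed = time.perf_counter() - start
    return len(results) / elapsed


if __name__ == "__main__":
    def fake_request(_payload):
        time.sleep(0.05)  # hypothetical 50 ms service latency
        return "cat"

    rps = requests_per_second(fake_request, range(40), concurrency=8)
    print(f"{rps:.1f} requests/second")
```

With a fixed per-request latency L and C concurrent clients, throughput tops out near C / L, so a fair CPU vs. GPU comparison keeps the client concurrency the same for both clusters.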

3 Approaches…
• Deploy on Kubernetes with kubectl
• Deploy on Kubernetes with Azure Machine Learning (AzureML/AML)
• Deploy on Kubernetes with Kubeflow

Common steps
• Develop model API
• Prepare Docker container for web service
• Deploy on Kubernetes
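The model API is the piece every approach reuses: a thin layer that turns raw input into model input, runs the model and turns scores into a response. A framework-agnostic sketch, assuming a hypothetical `model` callable that returns one logit per class; the class names echo the Cat/Dog/Mouse CNN slide, and the softmax plus top-k post-processing is an illustrative choice, not necessarily the authors'.

```python
import math
from typing import Callable, List, Sequence, Tuple

CLASSES = ["cat", "dog", "mouse"]  # hypothetical labels echoing the CNN slide


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ModelAPI:
    """Thin wrapper that any web layer (Flask, an AzureML scoring script, ...) can call."""

    def __init__(self, model: Callable[[List[float]], List[float]], top_k: int = 2):
        self.model = model  # hypothetical callable returning one logit per class
        self.top_k = top_k

    def preprocess(self, pixels: Sequence[int]) -> List[float]:
        return [p / 255.0 for p in pixels]  # scale 8-bit pixel values into [0, 1]

    def predict(self, pixels: Sequence[int]) -> List[Tuple[str, float]]:
        probs = softmax(self.model(self.preprocess(pixels)))
        ranked = sorted(zip(CLASSES, probs), key=lambda kv: kv[1], reverse=True)
        return ranked[: self.top_k]


if __name__ == "__main__":
    api = ModelAPI(lambda x: [2.0, 1.0, 0.1])  # hypothetical fixed logits
    print([label for label, _ in api.predict([0, 128, 255])])  # ['cat', 'dog']
```

Keeping this layer free of web-framework imports means the same module can be wrapped by a Flask app, an AzureML scoring script or a test harness.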

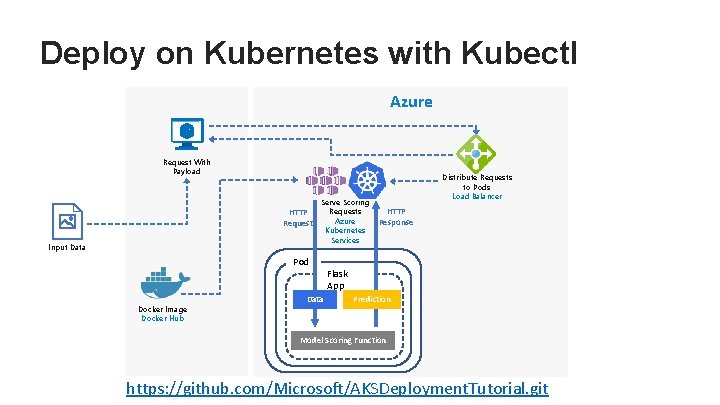

Deploy on Kubernetes with Kubectl
• Develop model, model API and Flask app
• Build container image with model, model API and Flask app
• Test locally and push image to Docker Hub
• Provision Kubernetes cluster
• Connect to Kubernetes with kubectl
• Deploy application using manifest (.yaml)
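The manifest in the last step is usually YAML, but `kubectl apply -f` also accepts JSON, so it can be generated from Python. A minimal sketch, assuming a hypothetical image name, app label and container port 5000 (Flask's default); the `nvidia.com/gpu` limit is how a pod requests a GPU once the NVIDIA device plugin is installed on the cluster, and the `LoadBalancer` service provides the external endpoint that distributes requests to pods.

```python
import json


def build_manifests(image: str, replicas: int = 3, gpus_per_pod: int = 1, port: int = 5000) -> dict:
    """Return a Deployment plus a LoadBalancer Service as one Kubernetes List object."""
    labels = {"app": "model-api"}  # hypothetical label shared by pods and service
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "model-api"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "model-api",
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Schedules each pod onto a node with a free GPU (device plugin required)
                        "resources": {"limits": {"nvidia.com/gpu": gpus_per_pod}},
                    }]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "model-api"},
        "spec": {
            "type": "LoadBalancer",  # cloud load balancer spreads requests across pods
            "selector": labels,
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    # Hypothetical image name; save the output as model-api.json, then run:
    #   kubectl apply -f model-api.json
    print(json.dumps(build_manifests("<dockerhub-user>/model-api:latest"), indent=2))
```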

Deploy on Kubernetes with Kubectl: Architecture

[Figure: on Azure, an HTTP request carrying the input data as payload reaches a load balancer, which distributes requests to pods on Azure Kubernetes Service; each pod runs the Docker image pulled from Docker Hub (Flask app, model and scoring function), serves the scoring request and returns the prediction as an HTTP response]

https://github.com/Microsoft/AKSDeploymentTutorial.git

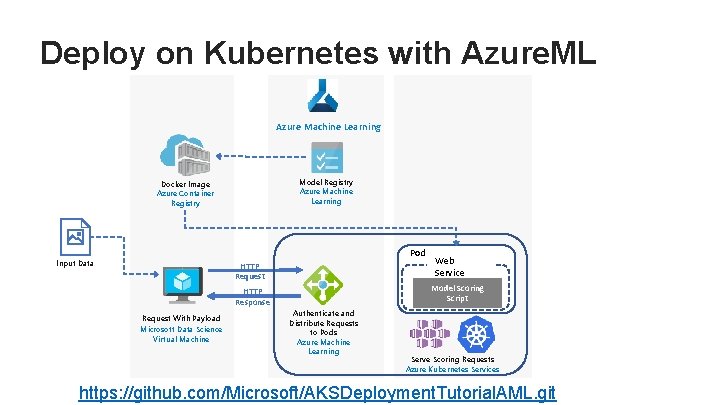

Deploy on Kubernetes with AzureML
• Set up AzureML, develop the model, register the model to your AzureML workspace, and develop the model API (scoring script)
• Build the image with AzureML using conda dependencies, pip requirements and other dependencies
• Pull the image from Azure Container Registry (ACR) and test locally
• Provision the Kubernetes cluster and deploy the web service with AzureML
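AzureML scoring scripts follow an `init()`/`run(raw_data)` convention: `init()` runs once when the container starts and loads the model, and `run()` is called per request with the raw JSON body. The skeleton below keeps that shape but, so it runs anywhere, replaces the real model loading (which would normally locate the registered model through the AzureML SDK) with a hypothetical stub; the `"input"` key matches the Base64 payload from the Payload slide.

```python
import base64
import json

model = None  # populated once per container by init()


def _load_model():
    """Hypothetical stub; a real script would load the registered model (e.g. a Keras ResNet) here."""
    def predict(image_bytes: bytes) -> dict:
        return {"label": "cat", "n_bytes": len(image_bytes)}
    return predict


def init():
    """Called once when the web service container starts."""
    global model
    model = _load_model()


def run(raw_data: str) -> str:
    """Called for every scoring request with the raw JSON request body."""
    try:
        image_bytes = base64.b64decode(json.loads(raw_data)["input"])
        return json.dumps(model(image_bytes))
    except Exception as exc:  # return the error to the caller instead of crashing the worker
        return json.dumps({"error": str(exc)})


if __name__ == "__main__":
    init()
    body = json.dumps({"input": base64.b64encode(b"fake image bytes").decode("ascii")})
    print(run(body))  # {"label": "cat", "n_bytes": 16}
```

Loading the model in `init()` rather than `run()` keeps the expensive step out of the per-request path, which matters most for large models on GPU.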

Deploy on Kubernetes with AzureML: Architecture

[Figure: from a Microsoft Data Science Virtual Machine, the model is registered in the Azure Machine Learning model registry and an AzureML Docker image (model and scoring script) is stored in Azure Container Registry; AzureML deploys it as a web service on Azure Kubernetes Service, which authenticates incoming HTTP requests with payload and distributes them to pods that serve scoring requests and return HTTP responses]

https://github.com/Microsoft/AKSDeploymentTutorialAML.git

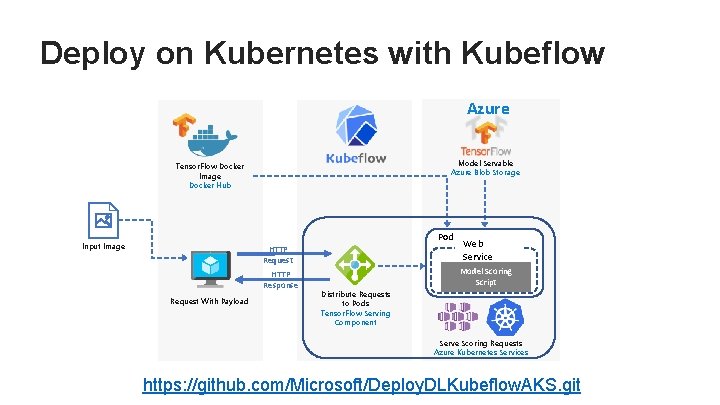

Deploy on Kubernetes with Kubeflow
• Develop the model and save it as a TensorFlow servable
• Pull the TensorFlow Serving image from Docker Hub, mount the model path, open the REST API port and test locally
• Create the Kubernetes cluster, attach blob storage on AKS and copy the model servable
• Install ksonnet and Kubeflow, and deploy the web service using the Kubeflow TensorFlow Serving component with ksonnet templates
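Once TensorFlow Serving is running, locally in Docker or behind the Kubeflow component, clients call its REST API: by default it listens on port 8501 and exposes `POST /v1/models/<model_name>:predict`, taking a JSON body with an `"instances"` list in which binary inputs such as JPEG bytes are wrapped as `{"b64": ...}` objects. The sketch builds such a request with the standard library; the host, the model name and the assumption that the servable's signature accepts encoded image bytes are hypothetical.

```python
import base64
import json
import urllib.request


def build_predict_request(
    host: str, model_name: str, image_bytes: bytes, port: int = 8501
) -> urllib.request.Request:
    """Build (but do not send) a TF Serving REST predict request for one encoded image."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = {"instances": [{"b64": base64.b64encode(image_bytes).decode("ascii")}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_predict_request("localhost", "resnet", b"\xff\xd8 fake jpeg bytes")
    print(req.get_method(), req.full_url)  # POST http://localhost:8501/v1/models/resnet:predict
    # Against a live server: json.load(urllib.request.urlopen(req))["predictions"]
```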

Deploy on Kubernetes with Kubeflow: Architecture

[Figure: on Azure, the model servable is stored in Azure Blob Storage and the TensorFlow Serving Docker image is pulled from Docker Hub; the Kubeflow TensorFlow Serving component runs it as a web service on Azure Kubernetes Service, distributing HTTP requests with an input image payload to pods that serve scoring requests and return HTTP responses]

https://github.com/Microsoft/DeployDLKubeflowAKS.git

Thank You & Questions

@MSalvaris @FidanBoyluUz
http://aka.ms/aireferencearchitectures
