Deeply-Recursive Convolutional Network for Image Super-Resolution, Jiwon Kim


Deeply-Recursive Convolutional Network for Image Super-Resolution

Jiwon Kim, Jung Kwon Lee and Kyoung Mu Lee, Computer Vision Lab., Dept. of ECE, ASRI, Seoul National University (http://cv.snu.ac.kr)

Introduction: Super-Resolution Problem

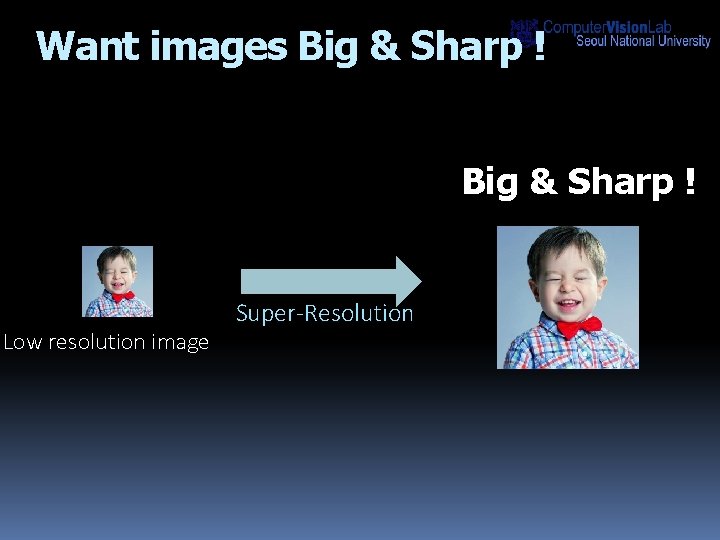

We want images that are big and sharp! Super-resolution recovers a high-resolution image from a low-resolution image.

![Observation from VDSR](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-4.jpg)

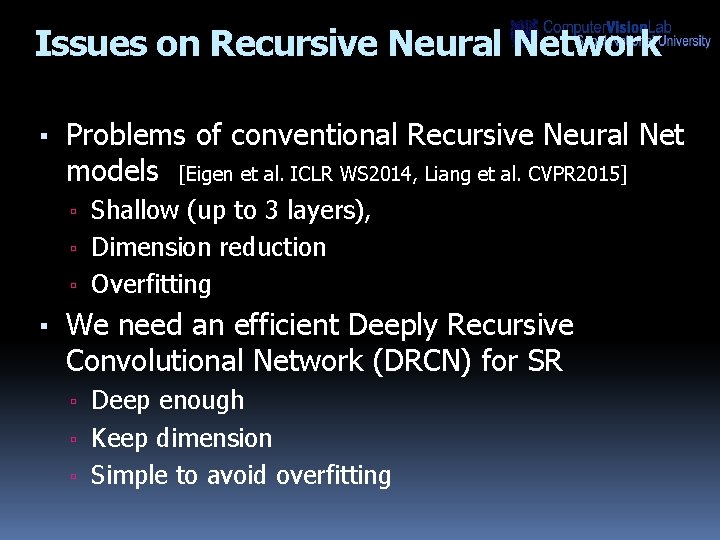

Observation from VDSR

- VDSR [CVPR 2016] is a successful very deep CNN for SR
- We observed that its convolution layers have exactly the SAME structure, which reminds us of RECURSION
- Figure: ILR → 20-layer CNN with same-size layers (conv 3 × 3-64 / ReLU) → HR

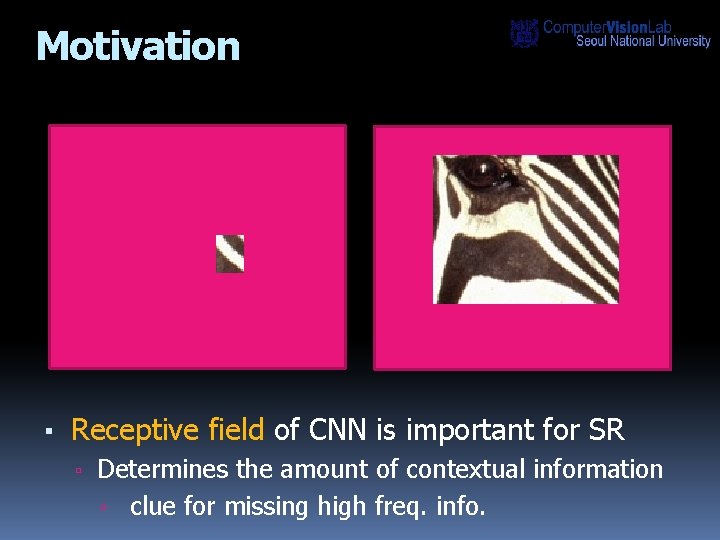

Motivation

- The receptive field of a CNN is important for SR:
  - It determines the amount of contextual information available
  - This context is the clue for the missing high-frequency information

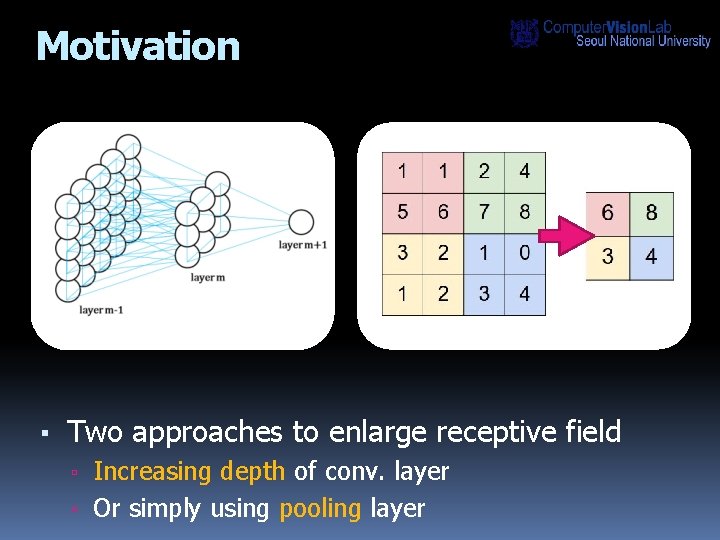

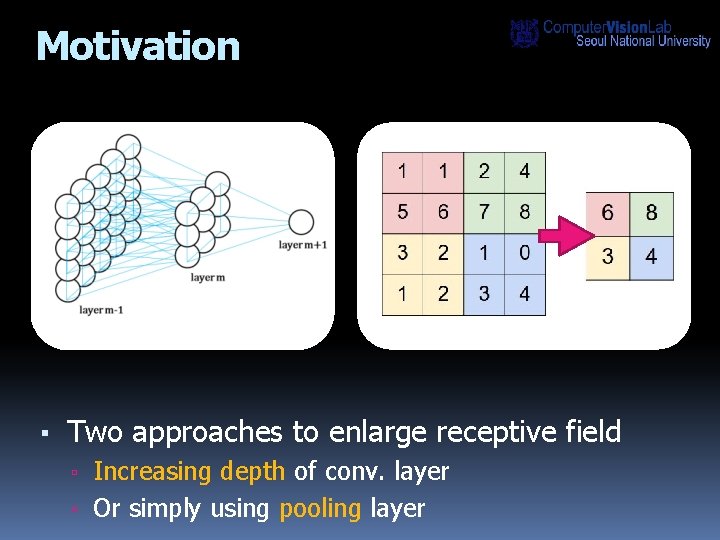

Motivation

- Two approaches to enlarge the receptive field:
  - Increasing the depth of the convolutional layers
  - Or simply using pooling layers
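Both approaches can be compared with the standard receptive-field recurrence for a chain of layers: a layer with kernel size k widens the receptive field by (k − 1) times the current spacing ("jump") between neighboring outputs in input pixels, and a layer with stride s multiplies that spacing by s. The Python sketch below is illustrative only. The mixed conv/pooling list is a hypothetical example. VDSR's twenty 3 × 3 layers come from the earlier slide, and SRCNN's 9-1-5 filter sizes reproduce the 13 × 13 quoted for SRCNN later in this deck. Padding is ignored because it does not change the receptive-field size.

```python
def receptive_field(layers):
    """Receptive field (in input pixels) of a chain of layers.

    `layers` is a list of (kernel_size, stride) pairs, applied in order.
    r: receptive field so far; j: input-pixel spacing between adjacent outputs.
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j  # a k x k window spans k adjacent outputs of the previous layer
        j *= s            # striding (e.g. pooling) spreads later windows further apart
    return r


# Approach 1: depth. Twenty stacked 3x3, stride-1 convolutions (VDSR).
print(receptive_field([(3, 1)] * 20))  # 41

# Approach 2: pooling (hypothetical configuration). One 2x2/stride-2 pooling in the
# middle of ten 3x3 convolutions reaches further than the ten convolutions alone,
# but the pooled feature maps have half the spatial resolution.
print(receptive_field([(3, 1)] * 10))                           # 21
print(receptive_field([(3, 1)] * 5 + [(2, 2)] + [(3, 1)] * 5))  # 32

# SRCNN's 9-1-5 filters, for comparison.
print(receptive_field([(9, 1), (1, 1), (5, 1)]))  # 13
```

The pooled variant gains receptive field only by giving up resolution, which is the trade-off the next slide criticizes.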

Motivation

- Drawbacks:
  - More layers mean more parameters: overfitting and data-management problems
  - Pooling discards pixel-wise information
- We need a more efficient CNN model that secures a large receptive field for SR: a deep recursive neural net
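The parameter cost of the depth approach is easy to quantify. The sketch below counts weights for 64-channel 3 × 3 convolutions with biases (the VDSR layer shape, conv 3 × 3-64) and ignores the first and last layers. It is a rough illustration, not a count for any specific published model, and the function names are hypothetical. A plain stack pays for every new layer, whereas a recursive layer reuses one set of weights.

```python
def conv_params(k, c_in, c_out, bias=True):
    """Weights in one k x k convolution mapping c_in to c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def stacked_params(num_layers, k=3, channels=64):
    # Each layer has its own filters: the cost grows linearly with depth.
    return num_layers * conv_params(k, channels, channels)


def recursive_params(num_recursions, k=3, channels=64):
    # One filter bank applied num_recursions times: the cost does not depend on
    # depth, so num_recursions is intentionally unused here.
    return conv_params(k, channels, channels)


for depth in (4, 8, 16):
    print(depth, stacked_params(depth), recursive_params(depth))
# 4 147712 36928
# 8 295424 36928
# 16 590848 36928
```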

Issues with Recursive Neural Networks

- Problems of conventional recursive neural net models [Eigen et al., ICLR WS 2014; Liang et al., CVPR 2015]:
  - Shallow (up to 3 layers)
  - Dimension reduction
  - Overfitting
- We need an efficient Deeply-Recursive Convolutional Network (DRCN) for SR that is:
  - Deep enough
  - Dimension-preserving
  - Simple enough to avoid overfitting

Our Approach

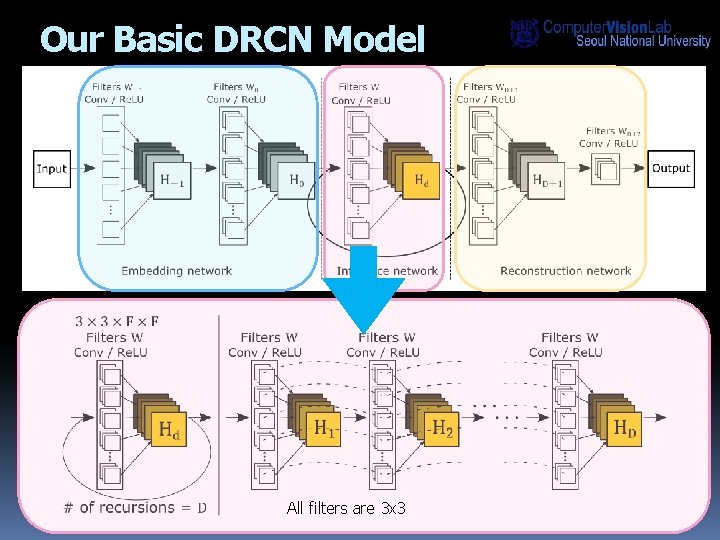

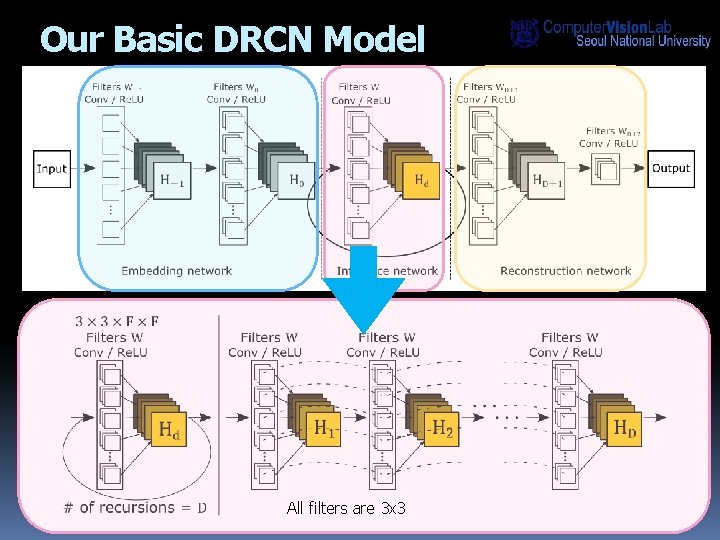

Our Basic DRCN Model

- A very deep recursive layer of the same convolution (up to 16 recursions):
  - Very large receptive field (41 × 41, vs. 13 × 13 for SRCNN)
  - Performance can improve without introducing new parameters for the additional convolutions
- All filters are 3 × 3
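To make "recursion of the same convolution" concrete, here is a minimal NumPy sketch of the basic model's forward pass. Several details are assumptions, not taken from the slides: one channel instead of 64-channel feature maps, two embedding layers and two reconstruction layers (so 16 recursions give 2 + 16 + 2 = 20 convolutions of 3 × 3, consistent with the 41 × 41 receptive field), zero padding, and ReLU after every layer except the last. The weights are random and hypothetical. With positive weights and zero biases nothing cancels, so the width of the nonzero response to a single-pixel impulse equals the receptive field.

```python
import numpy as np


def conv3x3(x, w, b):
    """'Same'-size 3x3 convolution (cross-correlation, as in CNNs) with zero padding."""
    p = np.pad(x, 1)  # zero padding: output keeps the input size (no dimension reduction)
    h, wd = x.shape
    out = np.full(x.shape, float(b))
    for i in range(3):
        for j in range(3):
            out += w[i, j] * p[i:i + h, j:j + wd]
    return out


def relu(x):
    return np.maximum(x, 0.0)


def basic_drcn(x, embed, recursive, recon, depth):
    """Embedding layers -> `depth` applications of ONE shared conv -> reconstruction layers.

    Assumption: single channel, 2 embedding + 2 reconstruction layers.
    """
    h = x
    for w, b in embed:
        h = relu(conv3x3(h, w, b))
    w_rec, b_rec = recursive
    for _ in range(depth):
        h = relu(conv3x3(h, w_rec, b_rec))  # identical weights at every recursion
    for n, (w, b) in enumerate(recon):
        h = conv3x3(h, w, b)
        if n < len(recon) - 1:
            h = relu(h)  # no activation on the output layer
    return h


rng = np.random.default_rng(0)


def random_layer():
    # Hypothetical weights: positive (so responses cannot cancel) with zero bias.
    return rng.uniform(0.1, 1.0, size=(3, 3)), 0.0


embed = [random_layer(), random_layer()]
recursive = random_layer()
recon = [random_layer(), random_layer()]

impulse = np.zeros((61, 61))
impulse[30, 30] = 1.0
y = basic_drcn(impulse, embed, recursive, recon, depth=16)
rows = np.nonzero(y.any(axis=1))[0]
print(rows.max() - rows.min() + 1)  # 41 = 1 + 2 * (2 + 16 + 2)
```

Changing `depth` only changes the loop count; `recursive` is the same pair of arrays however many recursions are run.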

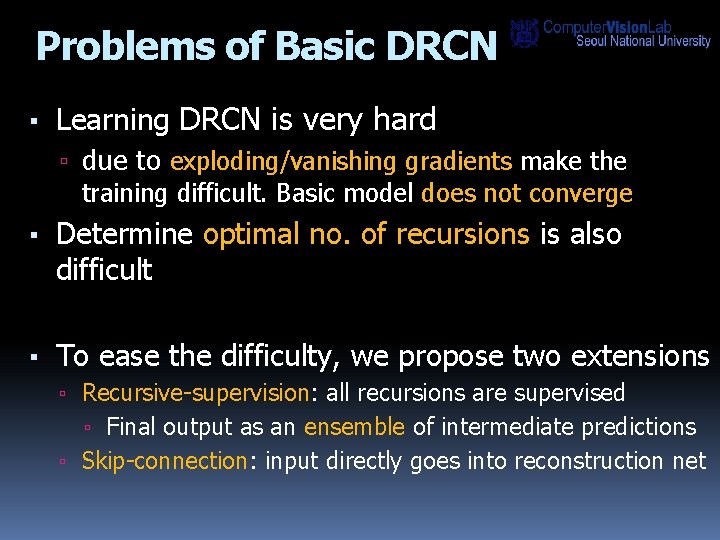

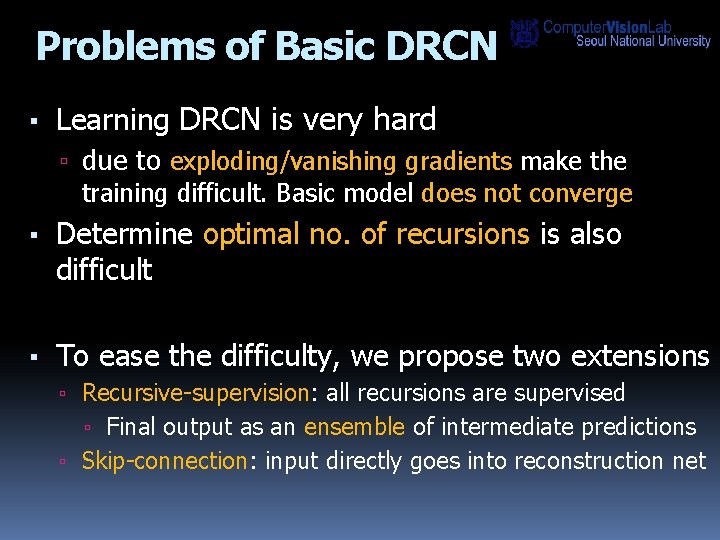

Problems of Basic DRCN

- Learning DRCN is very hard:
  - Exploding/vanishing gradients make training difficult; the basic model does not converge
- Determining the optimal number of recursions is also difficult
- To ease these difficulties, we propose two extensions:
  - Recursive-supervision: all recursions are supervised, and the final output is an ensemble of the intermediate predictions
  - Skip-connection: the input goes directly into the reconstruction net
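A toy scalar model (a deliberate simplification, not the DRCN network) shows why reusing one weight across many recursions makes gradients hard to control. If h_d = w · h_(d−1) with no nonlinearity, then h_D = w^D · h_0 and ∂h_D/∂h_0 = w^D. The same factor is multiplied D times, so the gradient vanishes for |w| < 1 and explodes for |w| > 1.

```python
def recursion_gradient(w, depth):
    """d h_depth / d h_0 for the toy linear recursion h_d = w * h_(d-1)."""
    grad = 1.0
    for _ in range(depth):
        grad *= w  # chain rule: every recursion contributes the SAME factor w
    return grad


for w in (0.5, 1.0, 1.5):
    print(w, recursion_gradient(w, 16))
# 0.5 -> about 1.5e-05 (vanishing), 1.0 -> 1.0, 1.5 -> about 656.8 (exploding)
```

In the real network each factor is a Jacobian that also depends on the ReLU activations, but it is still built from the same weights at every recursion.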

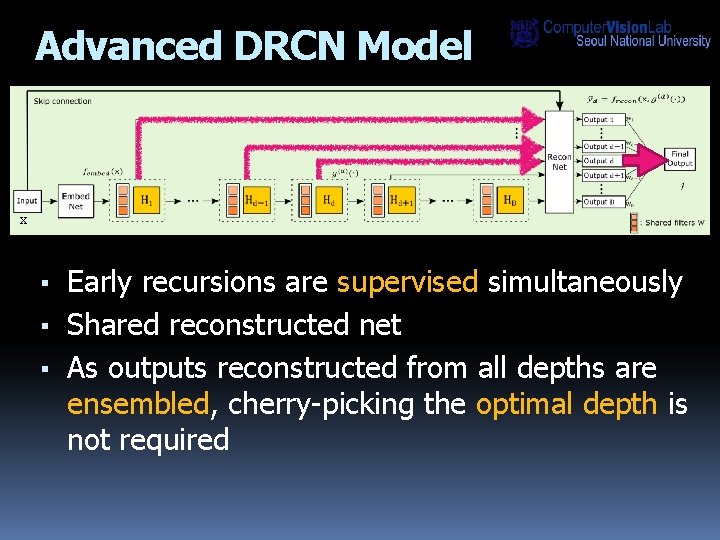

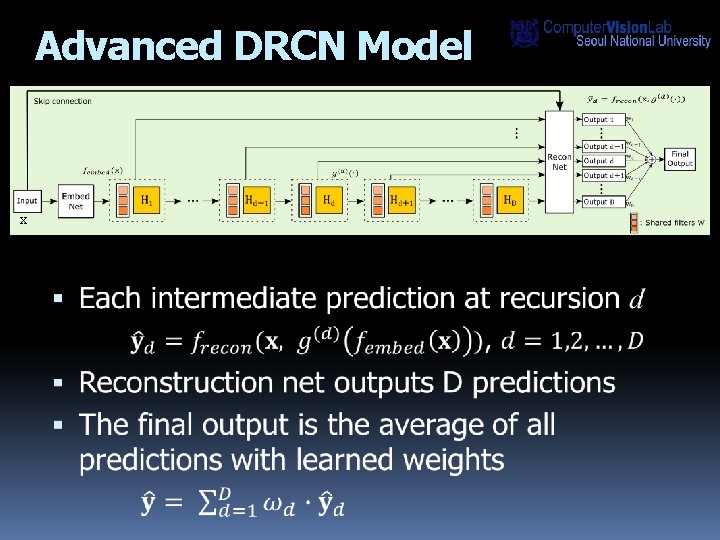

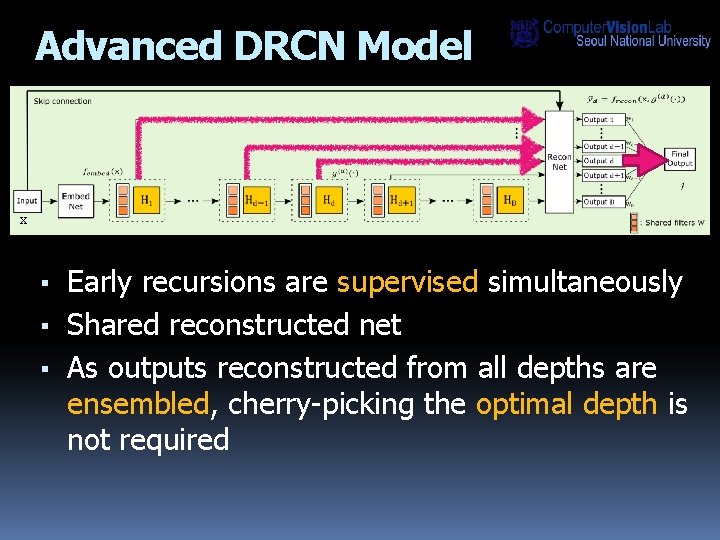

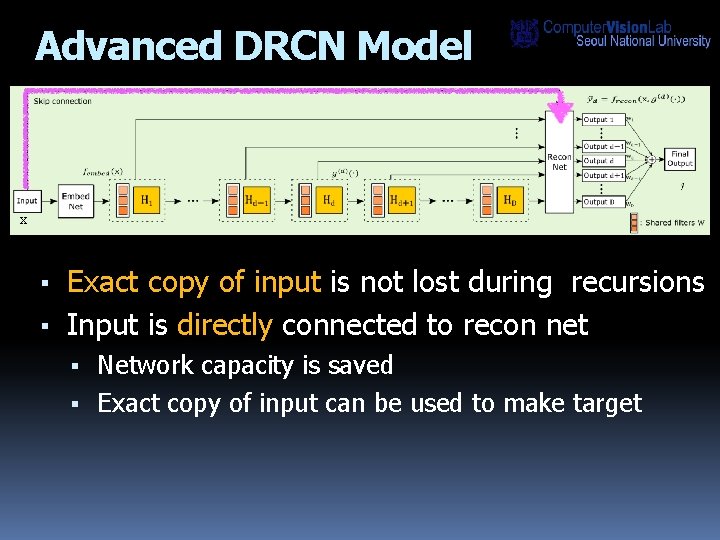

Advanced DRCN Model

- Early recursions are supervised simultaneously
- The reconstruction net is shared
- Because the outputs reconstructed from all depths are ensembled, cherry-picking the optimal depth is not required
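The recursive-supervision and ensemble idea can be sketched in a few lines of NumPy. In this illustration the reconstruction net is replaced by a hypothetical stand-in function, `reconstruct`. The ensemble weights are passed in directly, although during training they would be learned. Every intermediate state goes through the same function, and the final output is the weighted sum of the per-depth predictions.

```python
import numpy as np


def ensemble_prediction(hidden_states, reconstruct, weights):
    """Reconstruct each intermediate state H_d with ONE shared function, then combine.

    hidden_states: [H_1, ..., H_D] from the recursions
    reconstruct:   stand-in for the shared reconstruction net (hypothetical)
    weights:       ensemble weights w_1, ..., w_D (learned in training; given here)
    Returns the D intermediate predictions and their weighted sum.
    """
    preds = [reconstruct(h) for h in hidden_states]  # same net for every depth
    output = sum(w * p for w, p in zip(weights, preds))
    return preds, output


# Toy example: three recursions and a stand-in "reconstruction" that halves its input.
hidden = [np.full((2, 2), float(d)) for d in (1, 2, 3)]
preds, out = ensemble_prediction(hidden, lambda h: 0.5 * h, weights=[0.2, 0.3, 0.5])
print(out)  # each entry ≈ 0.5 * (0.2 * 1 + 0.3 * 2 + 0.5 * 3) = 1.15
```

During training, each element of `preds` would also be compared with the target, so every depth receives a direct training signal, and the weighted sum removes the need to pick one best depth.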

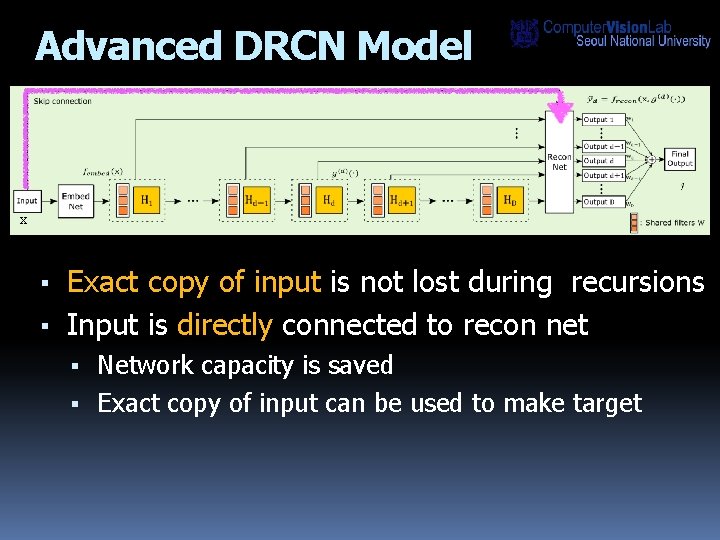

Advanced DRCN Model

- The exact copy of the input is not lost during the recursions
- The input is directly connected to the reconstruction net
- Network capacity is saved
- The exact copy of the input can be used to make the target
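A minimal sketch of the skip connection, assuming it takes the simplest additive form. The input x is added to the reconstruction net's output, so the recursions never need to carry an exact copy of x. `recon_residual` is a hypothetical stand-in for the reconstruction net.

```python
import numpy as np


def predict_with_skip(x, hidden, recon_residual):
    """Skip connection (assumed additive): output = input + reconstruction-net output."""
    return x + recon_residual(hidden)


x = np.array([[10.0, 20.0], [30.0, 40.0]])  # interpolated low-resolution input (toy)
hidden = np.zeros_like(x)                   # placeholder for the last hidden state

# If the stand-in reconstruction net outputs zeros, the input passes through exactly:
# no capacity is spent on carrying x through the recursions.
print(predict_with_skip(x, hidden, lambda h: np.zeros_like(h)))
# A nonzero output only has to describe the difference from the input.
print(predict_with_skip(x, hidden, lambda h: h + 1.0))
```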

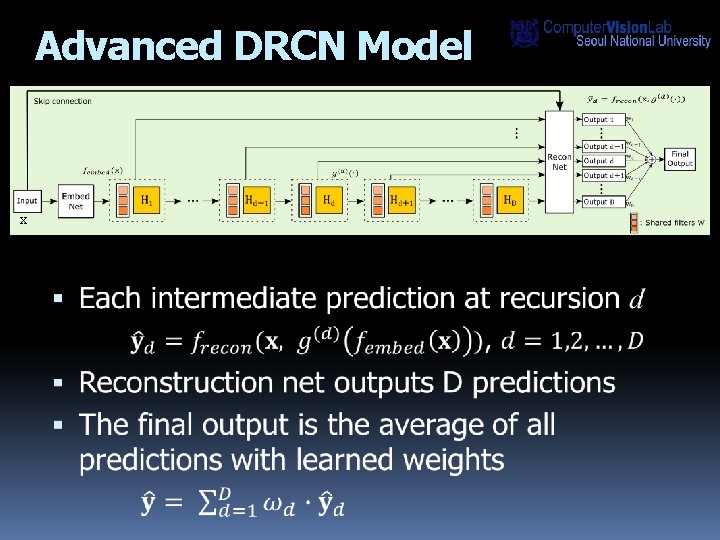

Advanced DRCN Model

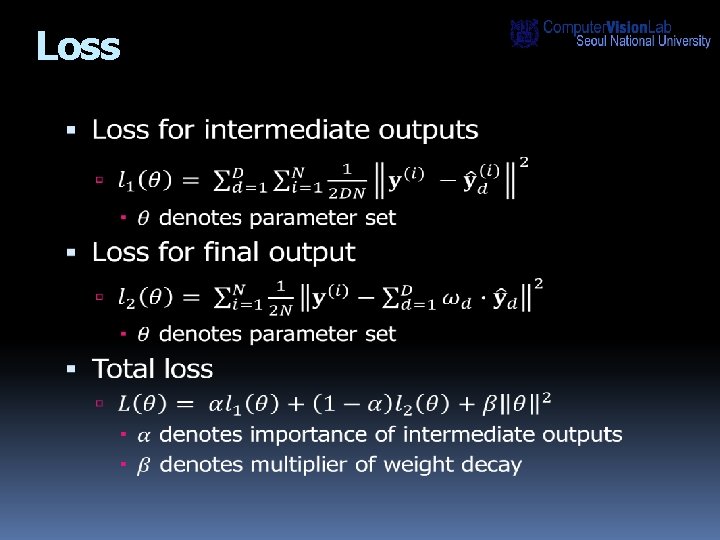

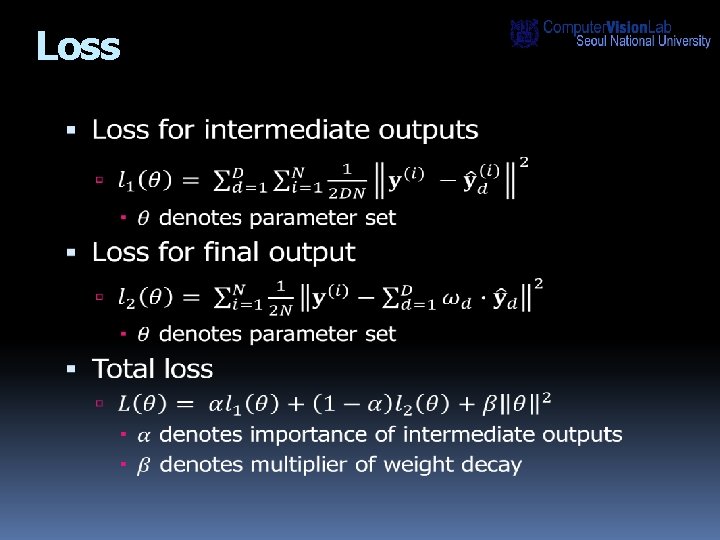

Loss

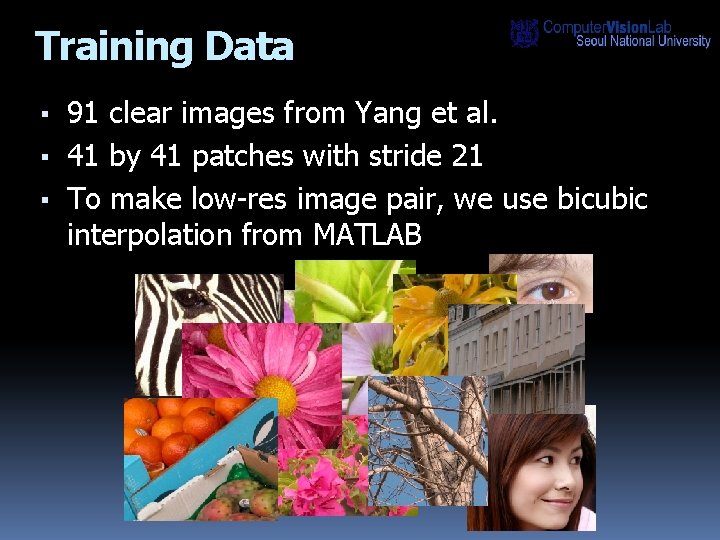

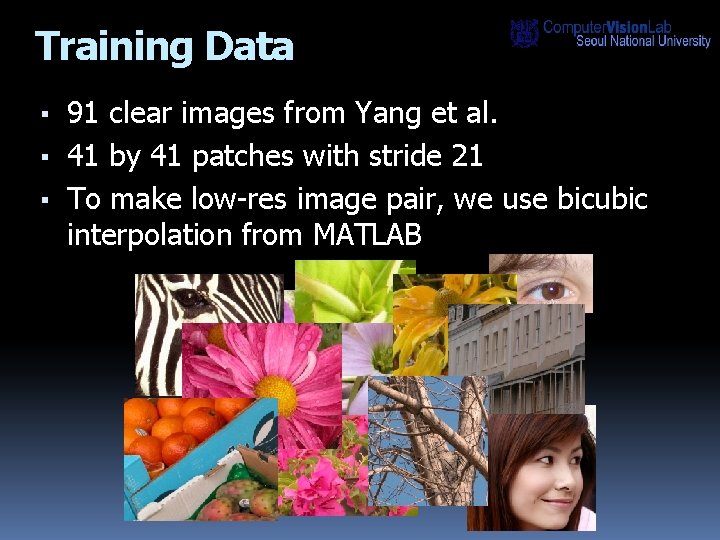
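The loss itself is only shown in the slide images above. As a hedged sketch, the code below assumes the form reported in the DRCN paper, so check it against the slide rather than treating it as a transcription:

- l1 averages the squared error of all D intermediate predictions: l1 = 1/(2DN) Σ_d Σ_i ‖y_i − ŷ_(d,i)‖².
- l2 is the squared error of their weighted ensemble: l2 = 1/(2N) Σ_i ‖y_i − Σ_d w_d ŷ_(d,i)‖².
- The total is L = α·l1 + (1 − α)·l2 + β‖θ‖², where α balances the two terms and β is the weight-decay multiplier.

```python
import numpy as np


def drcn_loss(y, preds, weights, params, alpha, beta):
    """Assumed DRCN-style loss: alpha * l1 + (1 - alpha) * l2 + beta * ||theta||^2.

    y:       targets, shape (N, H, W)
    preds:   intermediate predictions y_hat_d, shape (D, N, H, W)
    weights: ensemble weights w_d, shape (D,)
    params:  list of parameter arrays theta (for weight decay)
    """
    d, n = preds.shape[:2]
    # l1: every intermediate prediction is compared with the target (recursive supervision)
    l1 = np.sum((y[None] - preds) ** 2) / (2 * d * n)
    # l2: the weighted ensemble sum_d w_d * y_hat_d is compared with the target
    ensemble = np.tensordot(weights, preds, axes=1)
    l2 = np.sum((y - ensemble) ** 2) / (2 * n)
    decay = sum(np.sum(p ** 2) for p in params)
    return alpha * l1 + (1 - alpha) * l2 + beta * decay


# Toy check: N = 1 target of zeros, D = 2 predictions (all ones, all zeros).
y = np.zeros((1, 2, 2))
preds = np.stack([np.ones((1, 2, 2)), np.zeros((1, 2, 2))])
weights = np.array([0.5, 0.5])
params = [np.array([1.0, 2.0])]  # ||theta||^2 = 5
print(drcn_loss(y, preds, weights, params, alpha=0.5, beta=0.01))
# l1 = 4 / 4 = 1.0, l2 = 1.0 / 2 = 0.5, total ≈ 0.5 * 1.0 + 0.5 * 0.5 + 0.01 * 5 = 0.8
```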

Training Data

- 91 clear images from Yang et al.
- 41 × 41 patches with stride 21
- To make low-resolution/high-resolution image pairs, we use MATLAB's bicubic interpolation
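Extracting 41 × 41 training patches with stride 21 can be sketched as follows. Assumptions: the image is a 2-D NumPy array (e.g., a luminance channel), and patches that would cross the border are skipped. Creating the low-resolution counterpart is left out, because bicubic resizing in other libraries does not necessarily reproduce MATLAB's output.

```python
import numpy as np


def extract_patches(image, size=41, stride=21):
    """All size x size patches whose top-left corners lie on a stride grid.

    Patches that would extend past the image border are skipped (an assumption).
    """
    h, w = image.shape
    patches = [
        image[r:r + size, c:c + size]
        for r in range(0, h - size + 1, stride)
        for c in range(0, w - size + 1, stride)
    ]
    if not patches:  # image smaller than one patch
        return np.empty((0, size, size), dtype=image.dtype)
    return np.stack(patches)


img = np.arange(100 * 80, dtype=float).reshape(100, 80)  # toy 100 x 80 image
patches = extract_patches(img)
print(patches.shape)  # (6, 41, 41): rows start at 0, 21, 42; columns at 0, 21
```

With stride 21 and size 41, neighboring patches overlap by 20 pixels, so each image yields many more training samples than non-overlapping tiling would.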

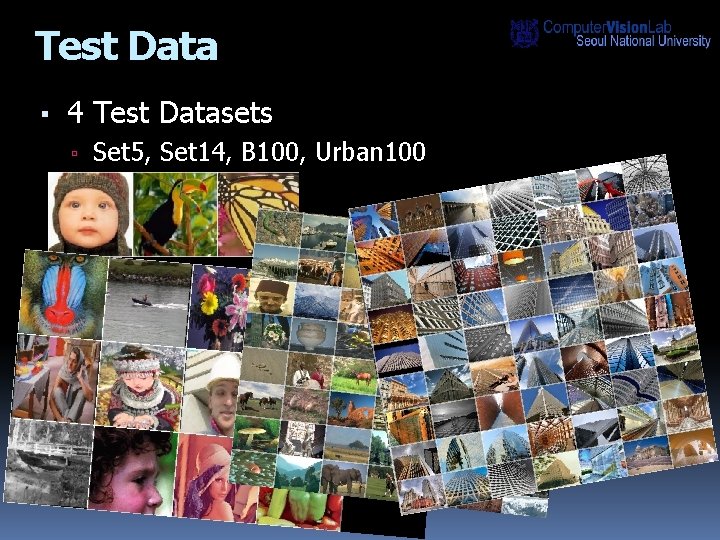

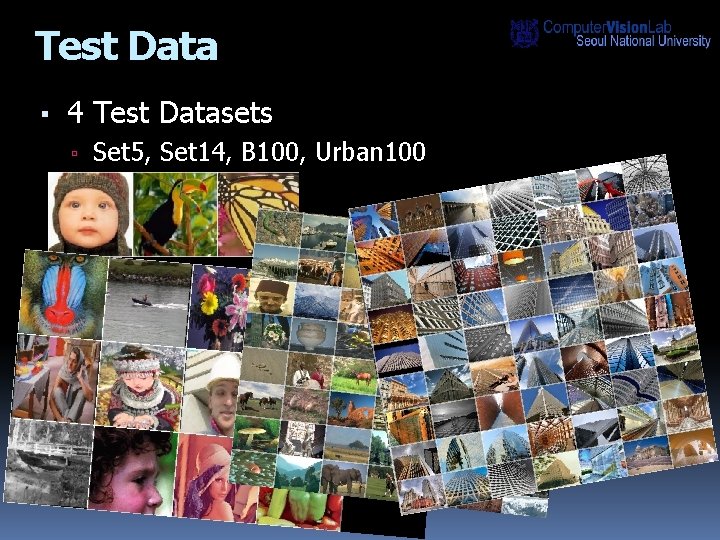

Test Data

- 4 test datasets: Set5, Set14, B100, Urban100

Experimental Results

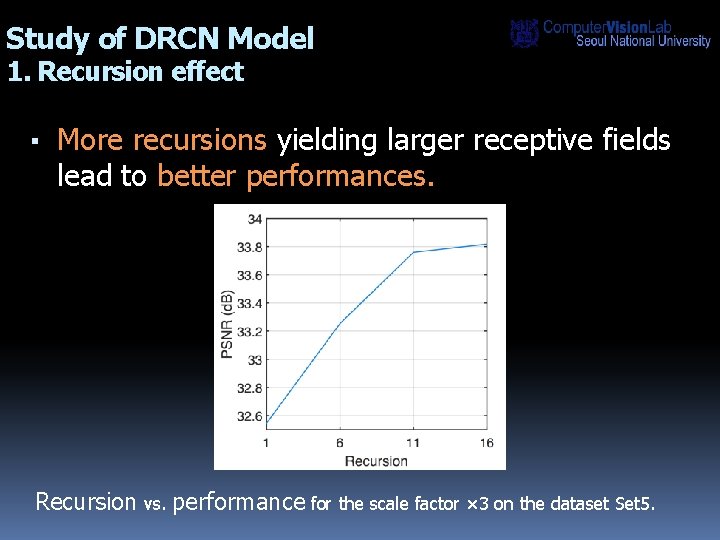

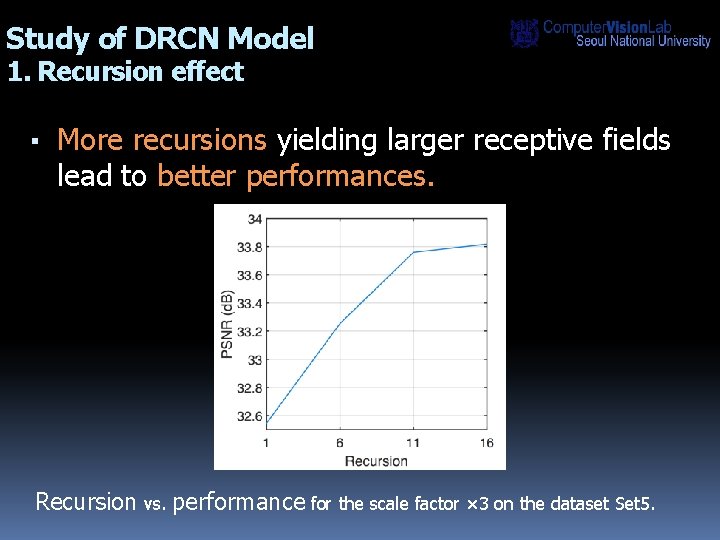

Study of DRCN Model: 1. Recursion effect

- More recursions, which yield larger receptive fields, lead to better performance.
- Figure: recursion vs. performance for scale factor ×3 on Set5.

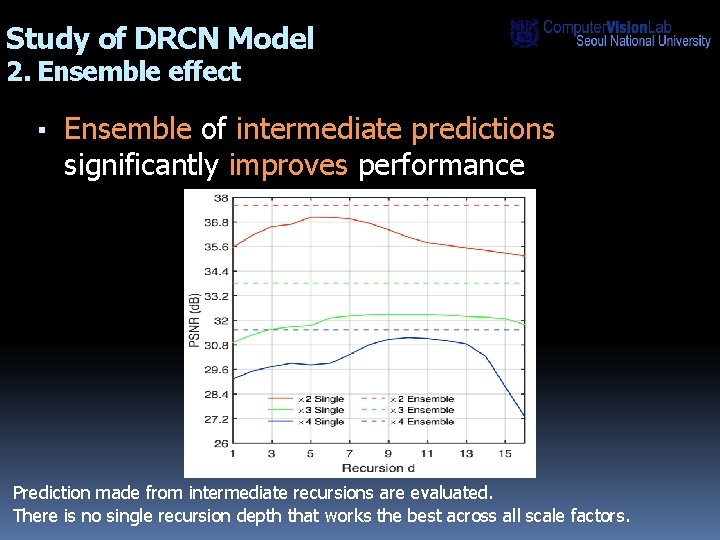

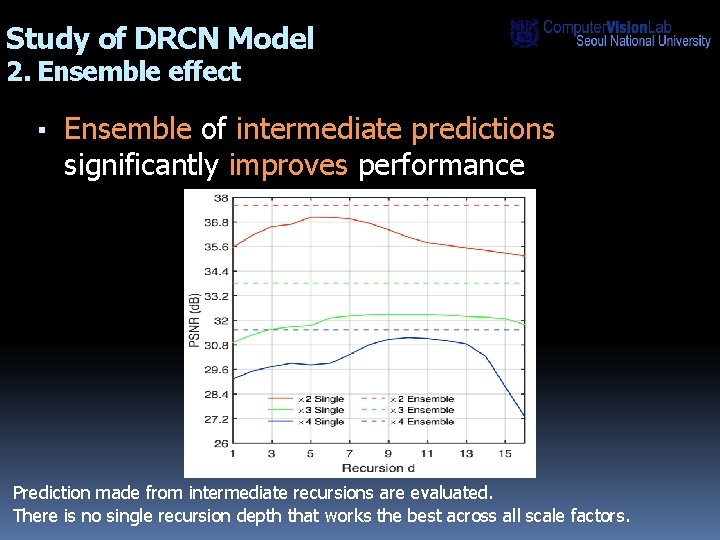

Study of DRCN Model: 2. Ensemble effect

- An ensemble of intermediate predictions significantly improves performance.
- Figure: predictions made from intermediate recursions are evaluated. No single recursion depth works best across all scale factors.

![Experimental Results: ground truth and results of A+, SRCNN, RFL, SelfEx and DRCN](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-21.jpg)

Experimental Results (PSNR/SSIM with respect to the ground truth)

- A+ [1]: 29.18/0.9007
- SRCNN [2]: 29.45/0.9022
- RFL [3]: 29.16/0.8989
- SelfEx [4]: 29.19/0.9000
- DRCN (ours): 29.98/0.9115

References:

- [1] R. Timofte, V. De Smet, and L. Van Gool. A+: Adjusted anchored neighborhood regression for fast super-resolution. In ACCV, 2014.
- [2] C. Dong, C. C. Loy, K. He, and X. Tang. Image super-resolution using deep convolutional networks. TPAMI, 2014.
- [3] S. Schulter, C. Leistner, and H. Bischof. Fast and accurate image upscaling with super-resolution forests. In CVPR, 2015.
- [4] J.-B. Huang, A. Singh, and N. Ahuja. Single image super-resolution using transformed self-exemplars. In CVPR, 2015.

![Experimental Results: ground truth and results of A+, SRCNN, RFL, SelfEx and DRCN](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-22.jpg)

Experimental Results (PSNR/SSIM with respect to the ground truth)

- A+ [1]: 26.24/0.8805
- SRCNN [2]: 26.40/0.8844
- RFL [3]: 26.22/0.8779
- SelfEx [4]: 26.90/0.8953
- DRCN (ours): 27.05/0.9033

![Experimental Results: ground truth and results of A+, SRCNN, RFL, SelfEx and DRCN](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-23.jpg)

Experimental Results (PSNR/SSIM with respect to the ground truth)

- A+ [1]: 28.90/0.9278
- SRCNN [2]: 29.40/0.9270
- RFL [3]: 28.44/0.9200
- SelfEx [4]: 29.16/0.9284
- DRCN (ours): 32.35/0.9578

![Experimental Results: ground truth and results of A+, SRCNN, RFL, SelfEx and DRCN](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-24.jpg)

Experimental Results (PSNR/SSIM with respect to the ground truth)

- A+ [1]: 28.44/0.8990
- SRCNN [2]: 28.84/0.9041
- RFL [3]: 28.42/0.8980
- SelfEx [4]: 28.48/0.8998
- DRCN (ours): 29.65/0.9151

![Experimental Results: ground truth and results of A+, SRCNN, RFL, SelfEx and DRCN](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-25.jpg)

Experimental Results (PSNR/SSIM with respect to the ground truth)

- A+ [1]: 30.00/0.7878
- SRCNN [2]: 29.98/0.7867
- RFL [3]: 29.86/0.7830
- SelfEx [4]: 29.93/0.7883
- DRCN (ours): 30.40/0.8014

![Quantitative Results](https://slidetodoc.com/presentation_image_h/9f6a328cada5db7dc31adf5e6d73d709/image-28.jpg)

Quantitative Results (each cell: PSNR/SSIM/time)

| Dataset | Scale | A+ [ACCV 2014] | RFL [CVPR 2015] | SelfEx [CVPR 2015] | SRCNN* [ECCV 2014] | VDSR (ours, CVPR 2016) | DRCN (ours) |
|---|---|---|---|---|---|---|---|
| Set5 | ×2 | 36.54/0.9544/0.58 | 36.54/0.9537/0.63 | 36.49/0.9537/45.78 | 36.66/0.9542/2.19 | 37.53/0.9587/0.13 | 37.63/0.9588/1.54 |
| Set5 | ×3 | 32.58/0.9088/0.32 | 32.43/0.9057/0.49 | 32.58/0.9093/33.44 | 32.75/0.9090/2.23 | 33.66/0.9213/0.13 | 33.82/0.9226/1.55 |
| Set5 | ×4 | 30.28/0.8603/0.24 | 30.14/0.8548/0.38 | 30.31/0.8619/29.18 | 30.48/0.8628/2.19 | 31.35/0.8838/0.12 | 31.53/0.8854/1.54 |
| Set14 | ×2 | 32.28/0.9056/0.86 | 32.26/0.9040/1.13 | 32.22/0.9034/105.0 | 32.42/0.9063/4.32 | 33.03/0.9124/0.25 | 33.04/0.9118/3.44 |
| Set14 | ×3 | 29.13/0.8188/0.56 | 29.05/0.8164/0.85 | 29.16/0.8196/74.96 | 29.28/0.8209/4.40 | 29.77/0.8314/0.26 | 29.79/0.8311/3.65 |
| Set14 | ×4 | 27.32/0.7491/0.38 | 27.24/0.7451/0.65 | 27.40/0.7518/65.08 | 27.49/0.7503/4.39 | 28.01/0.7674/0.25 | 28.02/0.7670/3.63 |
| B100 | ×2 | 31.21/0.8863/0.59 | 31.16/0.8840/0.80 | 31.18/0.8855/60.09 | 31.36/0.8879/2.51 | 31.90/0.8960/0.16 | 31.85/0.8942/2.30 |
| B100 | ×3 | 28.29/0.7835/0.33 | 28.22/0.7806/0.62 | 28.29/0.7840/40.01 | 28.41/0.7863/2.58 | 28.82/0.7976/0.21 | 28.80/0.7963/2.31 |
| B100 | ×4 | 26.82/0.7087/0.26 | 26.75/0.7054/0.48 | 26.84/0.7106/35.87 | 26.90/0.7101/2.51 | 27.29/0.7251/0.21 | 27.23/0.7233/2.30 |
| Urban100 | ×2 | 29.20/0.8938/2.96 | 29.11/0.8904/3.62 | 29.54/0.8967/663.9 | 29.50/0.8946/22.1 | 30.76/0.9140/0.98 | 30.75/0.9133/12.72 |
| Urban100 | ×3 | 26.03/0.7973/1.67 | 25.86/0.7900/2.48 | 26.44/0.8088/473.6 | 26.24/0.7989/19.4 | 27.14/0.8279/1.08 | 27.15/0.8276/12.70 |
| Urban100 | ×4 | 24.32/0.7183/1.21 | 24.19/0.7096/1.88 | 24.79/0.7374/694.4 | 24.52/0.7221/18.5 | 25.18/0.7524/1.06 | 25.14/0.7510/12.71 |

\*CPU version. Ours outperforms SRCNN by 0.67 dB / 0.017 SSIM score.
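The PSNR values follow the usual definition PSNR = 10 · log10(MAX² / MSE). Here is a minimal sketch, assuming 8-bit intensities (MAX = 255). The slides do not state evaluation details such as the color channel used or border cropping, so those are left out.

```python
import numpy as np


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value**2 / MSE)."""
    diff = np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)


ref = np.full((4, 4), 100.0)
print(psnr(ref, ref + 1.0))  # MSE = 1, so 20 * log10(255) ≈ 48.13 dB
```

PSNR is logarithmic in MSE, so the 0.67 dB gain over SRCNN stated above corresponds to an MSE roughly 14% lower, since 10^(−0.067) ≈ 0.857.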

Conclusion

Conclusion

1. A novel SR method using a deeply-recursive convolutional network
   - Additional recursions introduce no additional weight parameters (fixed capacity)
2. Recursive-supervision and skip-connection are used for better training
3. Achieves state-of-the-art performance
4. Can easily be applied to other image restoration problems