Deep Neural Network Partitioning in Distributed Computing System

![Title slide: Deep Neural Network Partitioning in Distributed Computing System, Jiyuan Shen](https://slidetodoc.com/presentation_image_h2/9553fd6c01a6ffdf36b5349e3ed7861a/image-1.jpg)

Deep Neural Network Partitioning and Retraining Techniques on Multi-Core Accelerated Computing Platforms (English title: Deep Neural Network Partitioning in Distributed Computing System). Jiyuan Shen [5130309194], Computer Science and Technology, Shanghai Jiao Tong University. Mentor: Li Jiang

Distributed DNN Partitioning

1 Motivation

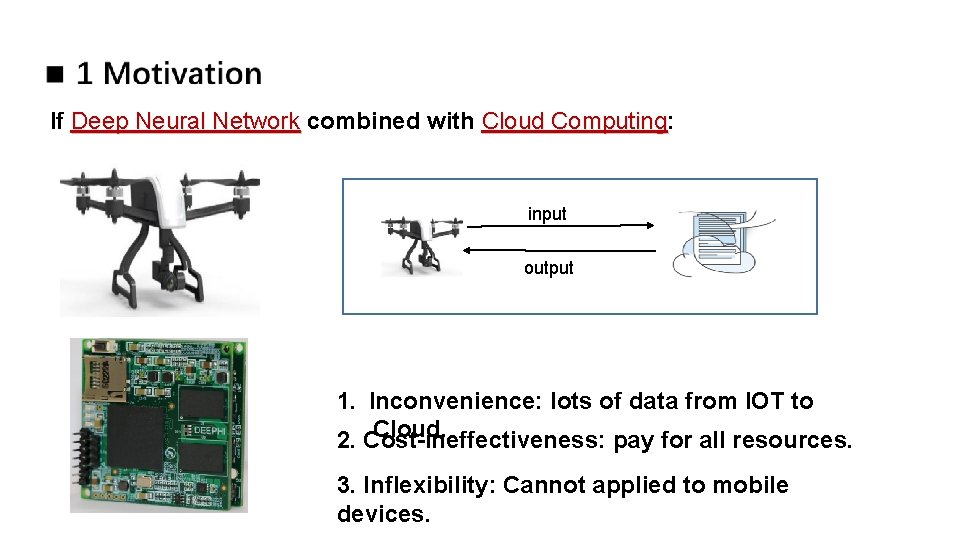

If a Deep Neural Network is combined with Cloud Computing (input, cloud computing, output):

1. Inconvenience: large volumes of data must be sent from IoT devices to the Cloud.
2. Cost-ineffectiveness: users pay for all resources.
3. Inflexibility: it cannot be applied to mobile devices.


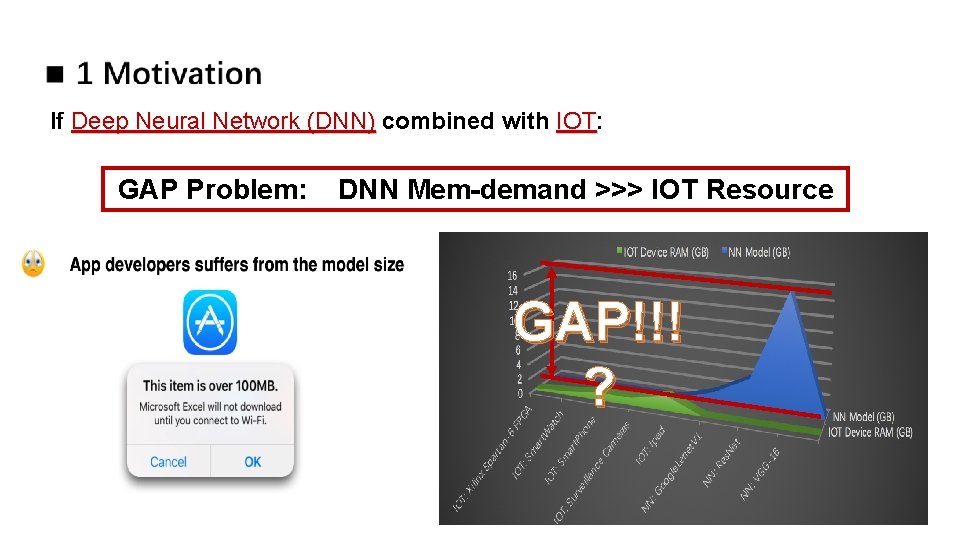

If a Deep Neural Network (DNN) is combined with IoT, we face the GAP problem: DNN memory demand >>> IoT resources.


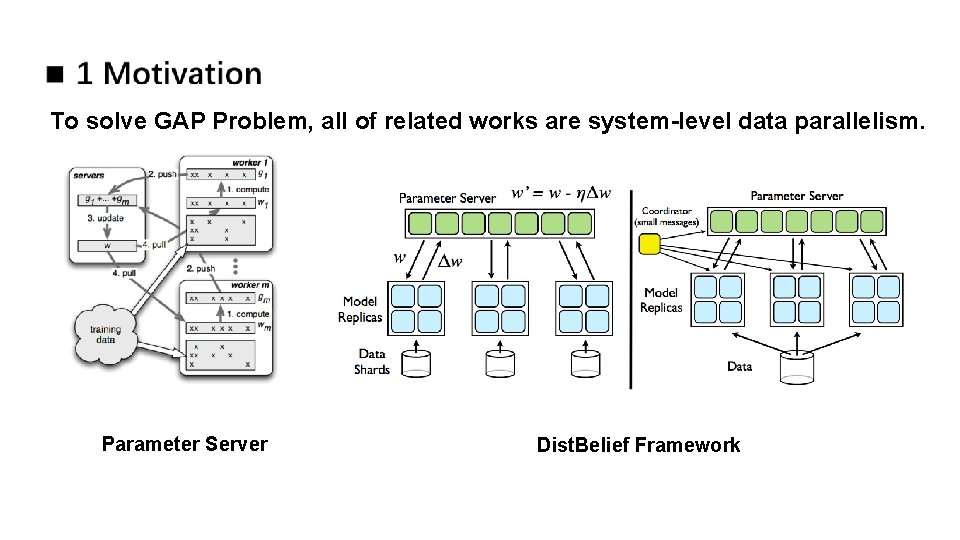

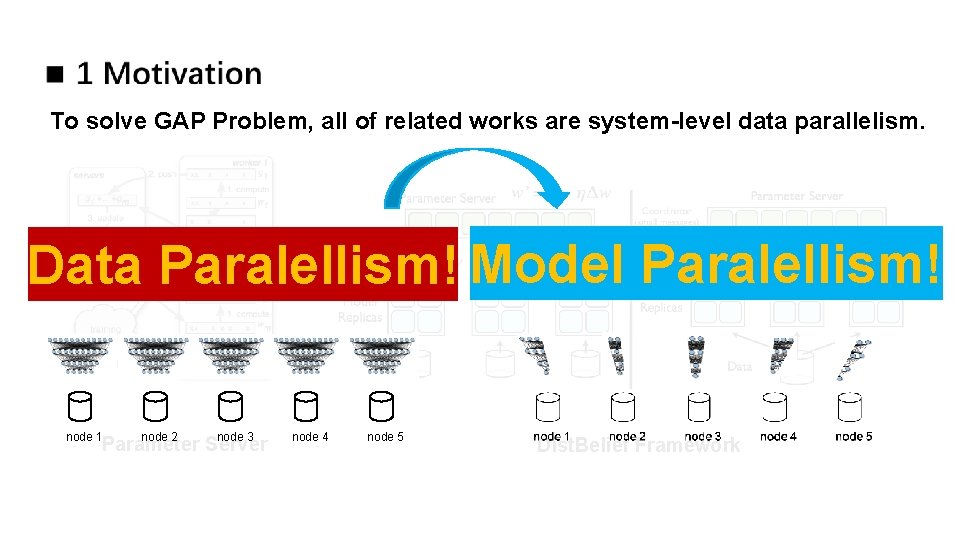

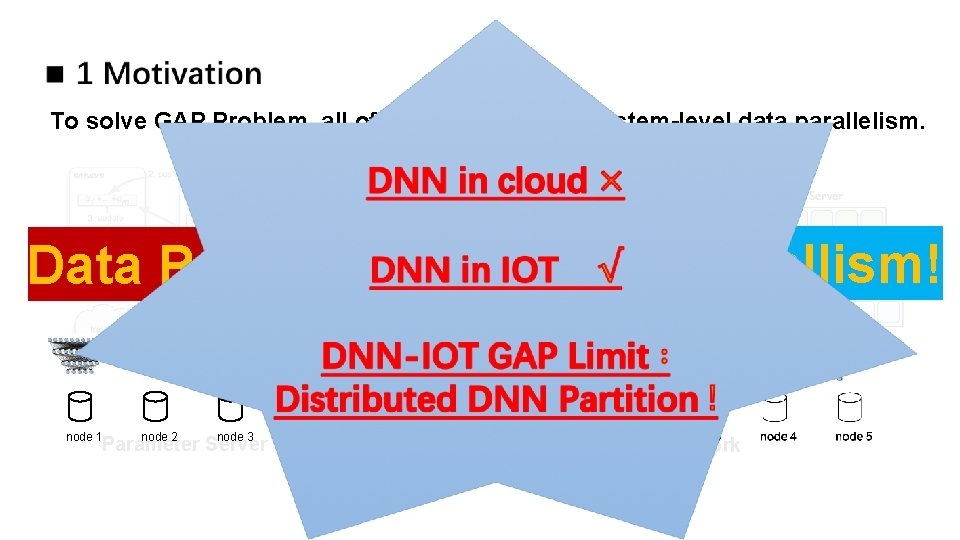

To solve the GAP problem, all related works rely on system-level data parallelism. Figure labels: Data Parallelism; Model Parallelism; Parameter Server with nodes 1 to 5; DistBelief framework.

![New Solution: Distributed DNN Partition](http://slidetodoc.com/presentation_image_h2/9553fd6c01a6ffdf36b5349e3ed7861a/image-11.jpg)

New Solution: Distributed DNN Partition

[ Property ] Software-level model parallelism.

[ Concept ]

1. Given a distributed computing system with k computing nodes:
2. Partition the whole deep neural network into k individual network components;
3. Run each component on its corresponding computing node.

At the same time, two basic rules must hold: first, the workload is balanced across computing nodes; second, communication costs between computing nodes are minimized.
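The two rules above can be read as a balanced graph-partitioning objective. The formulation below is one possible way to write it down and is not taken from the slides: the graph $G=(V,E)$ of neurons and synapses, the synapse weights $w_{uv}$, the assignment $\pi$, the parts $V_p$, and the balance tolerance $\varepsilon$ are all symbols assumed here for illustration.

$$
\min_{\pi : V \to \{1,\dots,k\}} \ \sum_{\substack{(u,v)\in E \\ \pi(u)\neq\pi(v)}} |w_{uv}|
\quad \text{subject to} \quad
\Bigl|\, |V_p| - \tfrac{|V|}{k} \Bigr| \le \varepsilon \,\tfrac{|V|}{k}, \qquad p = 1,\dots,k,
$$

where $V_p = \{u \in V : \pi(u) = p\}$ is the set of neurons placed on node $p$. The sum is the total weight of cross-partition synapses (a proxy for communication cost), and the constraint keeps the number of neurons per node close to $|V|/k$ (a proxy for workload balance).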

![New Solution: Distributed DNN Partition](http://slidetodoc.com/presentation_image_h2/9553fd6c01a6ffdf36b5349e3ed7861a/image-12.jpg)


2 Framework: GraphDNN

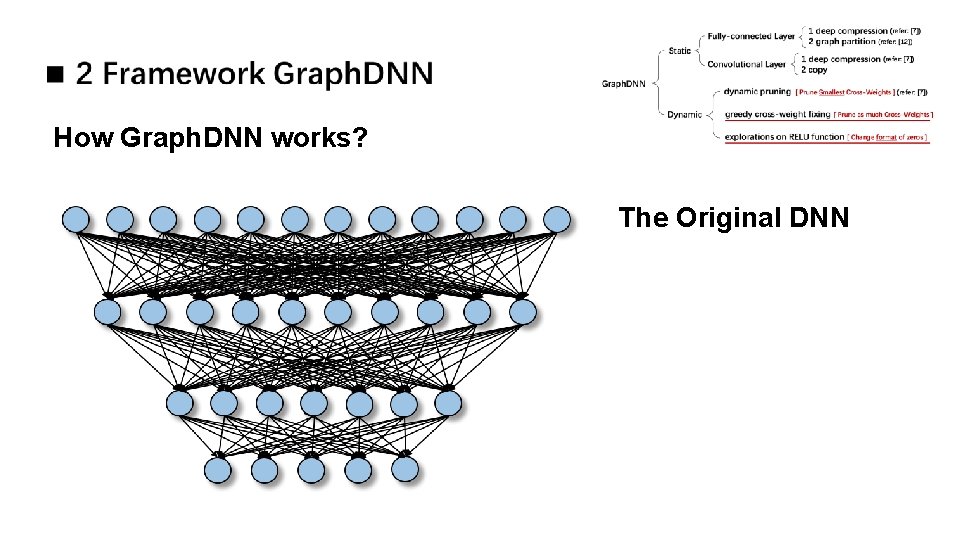

![GraphDNN framework diagram](http://slidetodoc.com/presentation_image_h2/9553fd6c01a6ffdf36b5349e3ed7861a/image-14.jpg)

Framework diagram labels: components are annotated with references (refer: [7]) and (refer: [12]); the three retraining steps are labeled [ Prune Smallest Cross-Weights ], [ Prune as many Cross-Weights as possible ], and [ Change format of zeros ].

How does GraphDNN work? The Original DNN

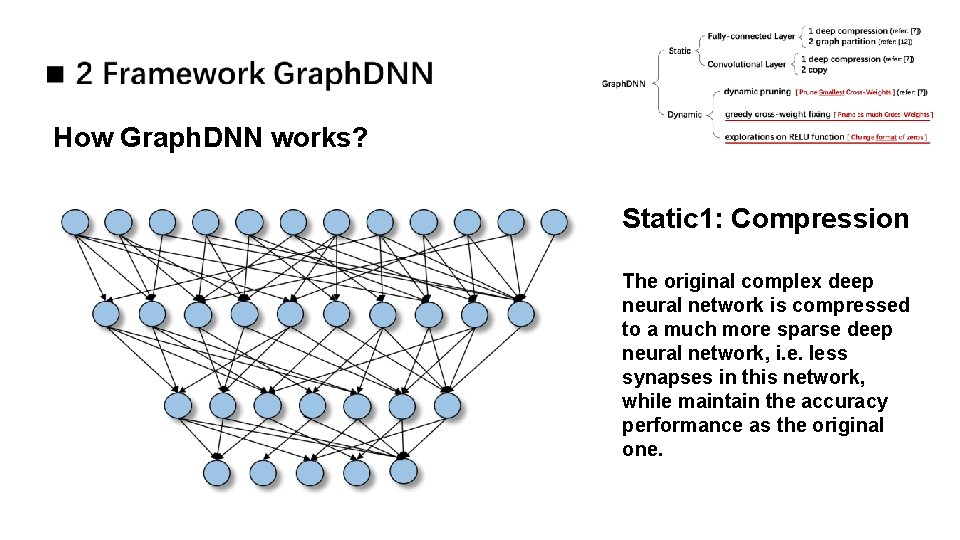

How does GraphDNN work? Static 1: Compression. The original complex deep neural network is compressed into a much sparser one, i.e. a network with fewer synapses, while maintaining the accuracy of the original.
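The slide does not say which compression method is used. As a minimal sketch, assuming plain magnitude-based pruning (a common way to sparsify a network, swapped in here for illustration), the function `prune_by_magnitude` and its `sparsity` parameter are hypothetical names; retraining to recover accuracy is not shown.

```python
def prune_by_magnitude(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude entries set to zero.

    `weights` is a list of rows (one layer's weight matrix) and `sparsity`
    is the fraction of entries to remove. Assumption: magnitude pruning;
    the slides do not name the compression method.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    k = int(len(magnitudes) * sparsity)
    if k == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[k - 1]  # largest magnitude that gets pruned
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


layer = [[0.1, -0.8], [0.05, 0.6]]
sparse_layer = prune_by_magnitude(layer, sparsity=0.5)
```

Ties at the threshold are all pruned, so the resulting sparsity can slightly exceed the requested fraction.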

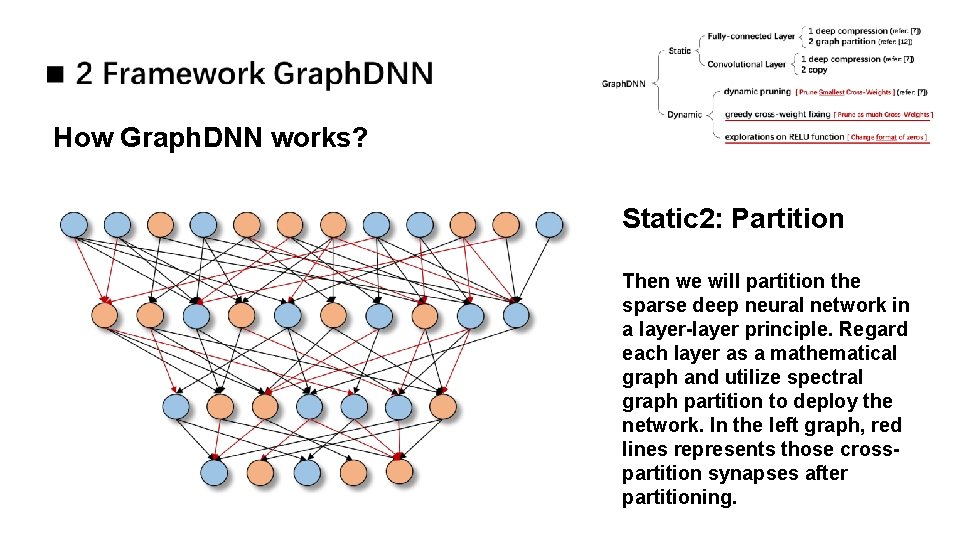

How does GraphDNN work? Static 2: Partition. We then partition the sparse deep neural network layer by layer: each layer is regarded as a mathematical graph, and spectral graph partitioning decides how the network is deployed. In the graph on the left, red lines represent the cross-partition synapses that remain after partitioning.
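A rough sketch of spectral bipartitioning for a single layer follows. It makes several assumptions not stated in the slides: the layer is treated as a bipartite graph whose edge weights are the absolute synapse weights, the split uses the sign of the Fiedler vector (the eigenvector of the second-smallest Laplacian eigenvalue), and that vector is approximated with a simple power iteration so the example needs no external libraries. All function names are hypothetical.

```python
def layer_adjacency(weights):
    """Adjacency matrix of the bipartite graph: inputs 0..m-1, outputs m..m+n-1."""
    m, n = len(weights), len(weights[0])
    adj = [[0.0] * (m + n) for _ in range(m + n)]
    for i in range(m):
        for j in range(n):
            adj[i][m + j] = adj[m + j][i] = abs(weights[i][j])
    return adj


def fiedler_vector(adj, iters=300):
    """Approximate the Fiedler vector of L = D - A by power iteration on c*I - L."""
    size = len(adj)
    deg = [sum(row) for row in adj]
    c = 2.0 * max(deg)  # Gershgorin bound: every eigenvalue of L is <= c
    x = [float(i) for i in range(size)]
    for _ in range(iters):
        mean = sum(x) / size
        x = [v - mean for v in x]  # project out the constant eigenvector
        y = [(c - deg[i]) * x[i] + sum(adj[i][j] * x[j] for j in range(size))
             for i in range(size)]
        norm = sum(v * v for v in y) ** 0.5
        x = [v / norm for v in y]
    return x


def bipartition_layer(weights):
    """Split neurons into two parts; return the labels and the cross-partition synapses."""
    m = len(weights)
    part = [0 if v >= 0 else 1 for v in fiedler_vector(layer_adjacency(weights))]
    cross = [(i, j) for i in range(m) for j in range(len(weights[0]))
             if weights[i][j] != 0 and part[i] != part[m + j]]
    return part, cross


W = [[1.0, 0.9, 0.05, 0.0],
     [0.8, 1.0, 0.0, 0.05],
     [0.05, 0.0, 1.0, 0.9],
     [0.0, 0.05, 0.8, 1.0]]
part, cross = bipartition_layer(W)
```

In this toy layer the four weak synapses (magnitude 0.05) end up as the cross-partition ones, which correspond to the red lines in the slide's figure. A sign split does not enforce workload balance by itself; a median split of the Fiedler vector is one common alternative.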

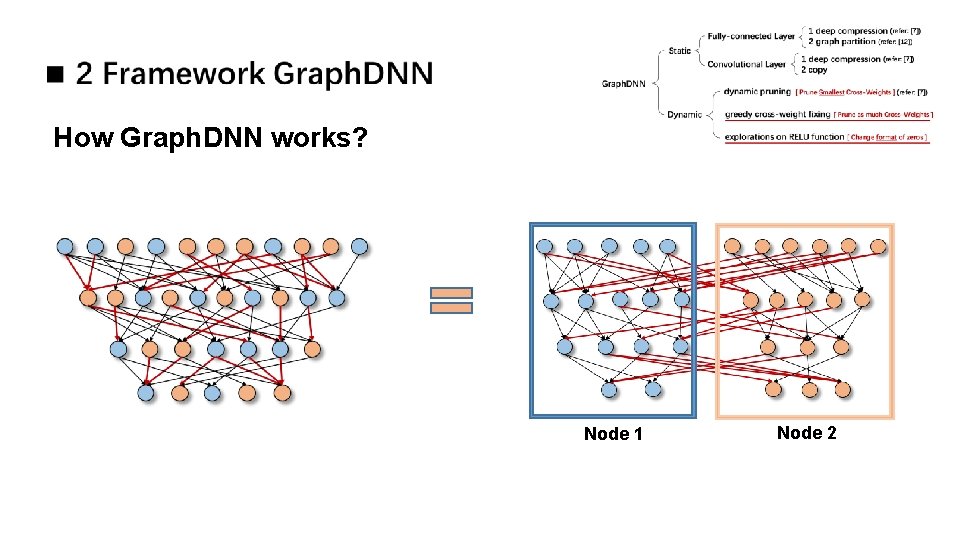

How does GraphDNN work? Deployment across Node 1 and Node 2.

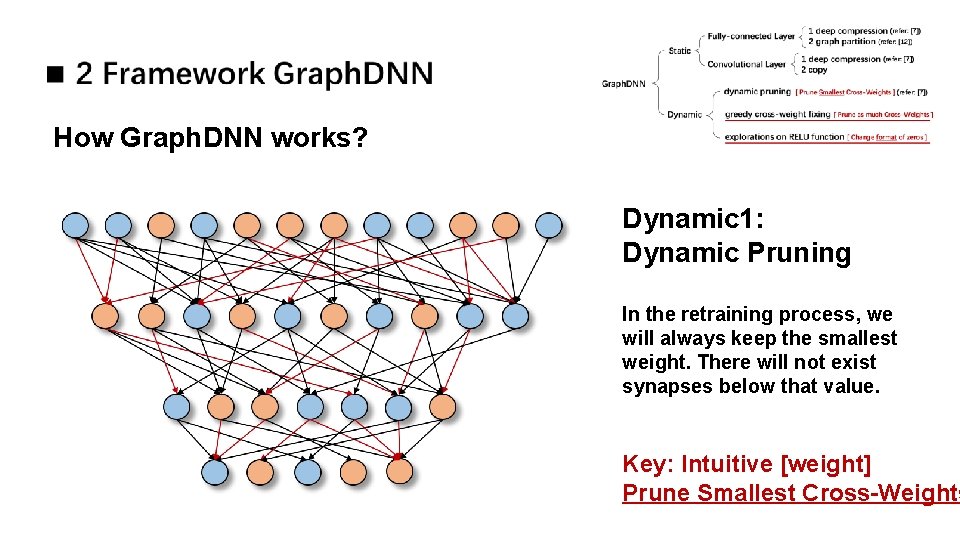

How does GraphDNN work? Dynamic 1: Dynamic Pruning. During retraining, we always keep track of the smallest weight that survived pruning and use it as a threshold: no synapse below that value is allowed to exist. Key: Intuitive [weight]: Prune Smallest Cross-Weights.
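One way to picture this step is sketched below, under the assumption that the threshold is applied to cross-partition weights after each retraining update. The function name, the `floor` parameter, and the partition-label lists are hypothetical and only illustrate the idea.

```python
def prune_small_cross_weights(weights, part_in, part_out, floor):
    """Zero cross-partition weights whose magnitude fell below `floor`.

    `part_in[i]` / `part_out[j]` give the node assigned to input neuron i
    and output neuron j. Modifies `weights` in place and returns the number
    of synapses pruned. Assumption: called after every retraining update.
    """
    pruned = 0
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            is_cross = part_in[i] != part_out[j]
            if is_cross and w != 0.0 and abs(w) < floor:
                row[j] = 0.0
                pruned += 1
    return pruned


layer = [[0.5, 0.02], [-0.01, 0.4]]
removed = prune_small_cross_weights(layer, part_in=[0, 1], part_out=[0, 1], floor=0.05)
```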

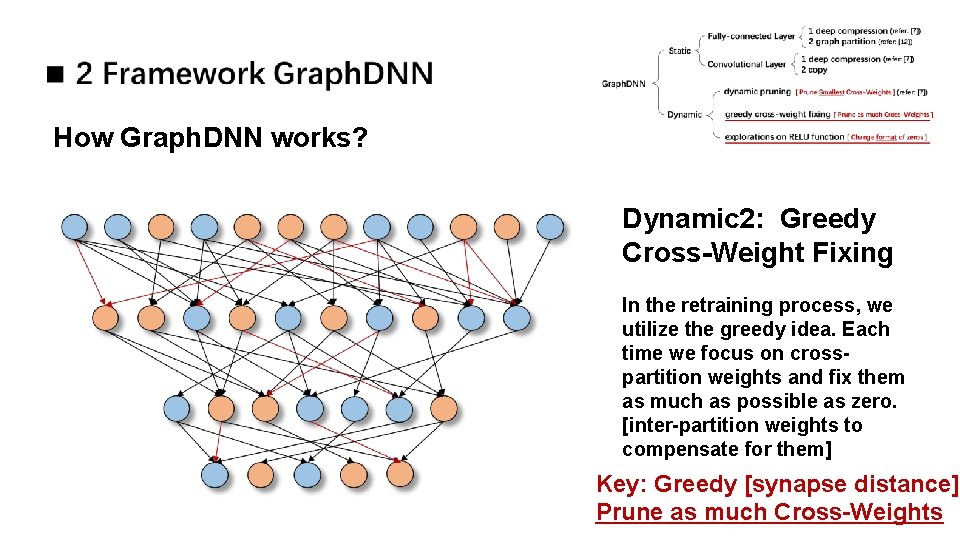

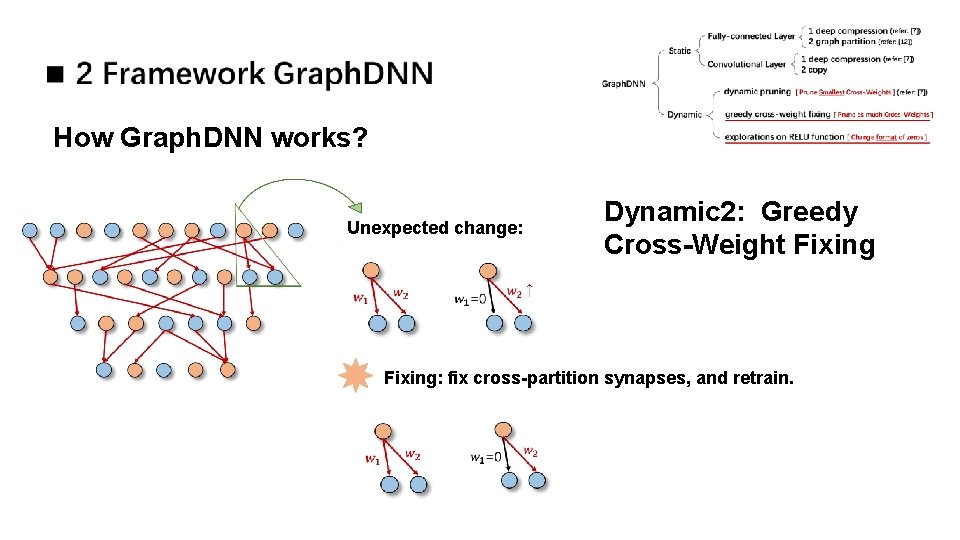

How does GraphDNN work? Dynamic 2: Greedy Cross-Weight Fixing. During retraining, we apply a greedy strategy: each time, we focus on the cross-partition weights and fix as many of them as possible to zero, letting the intra-partition weights compensate for them. Key: Greedy [synapse distance]: Prune as many Cross-Weights as possible.

How does GraphDNN work? Unexpected change: Dynamic 2: Greedy Cross-Weight Fixing: fix cross-partition synapses, then retrain.
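The greedy idea can be sketched as follows. This is an interpretation rather than the author's algorithm: cross-partition weights are tried in order of increasing magnitude, each is fixed to zero if a caller-supplied `evaluate` function (hypothetical, standing in for retraining plus validation) still reports an acceptable accuracy, and it is restored otherwise.

```python
def greedy_fix_cross_weights(weights, part_in, part_out, evaluate, min_accuracy):
    """Greedily fix cross-partition weights to zero while accuracy stays acceptable.

    `evaluate(weights)` is a hypothetical callback returning the accuracy of
    the network after retraining with the given weights. Modifies `weights`
    in place and returns the set of (i, j) positions fixed to zero.
    """
    candidates = sorted(
        (abs(w), i, j)
        for i, row in enumerate(weights)
        for j, w in enumerate(row)
        if part_in[i] != part_out[j] and w != 0.0
    )
    fixed = set()
    for _, i, j in candidates:
        saved = weights[i][j]
        weights[i][j] = 0.0
        if evaluate(weights) >= min_accuracy:
            fixed.add((i, j))       # keep this synapse fixed at zero
        else:
            weights[i][j] = saved   # too costly: restore the weight
    return fixed


layer = [[0.5, 0.3], [0.1, 0.4]]
# Toy stand-in for retraining + validation: accuracy drops if W[0][1] is removed.
toy_evaluate = lambda w: 0.9 if w[0][1] != 0.0 else 0.6
fixed = greedy_fix_cross_weights(layer, [0, 1], [0, 1], toy_evaluate, min_accuracy=0.8)
```

In a real setting `evaluate` would be expensive, so candidates would more likely be fixed in batches between retraining rounds.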

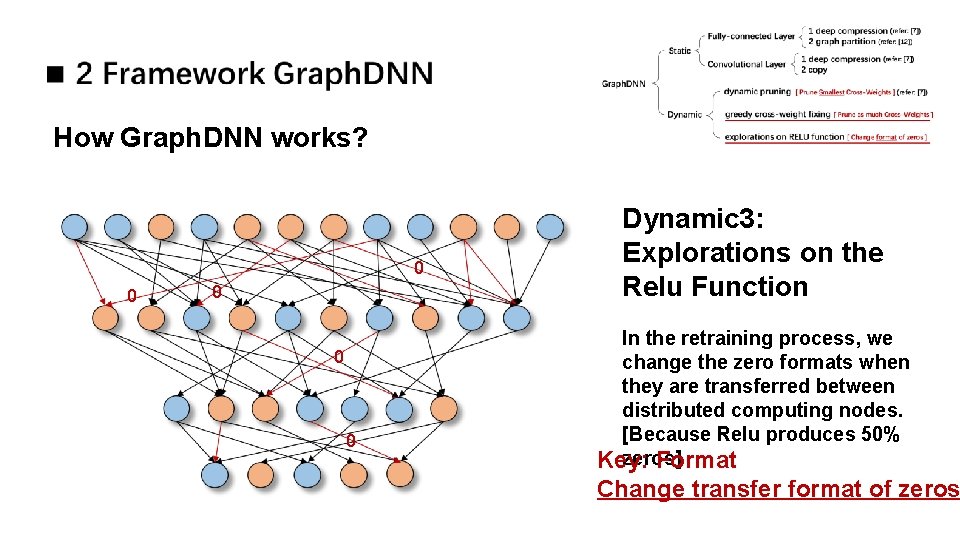

How does GraphDNN work? Dynamic 3: Explorations on the ReLU Function. During retraining, we change the format in which zeros are transferred between distributed computing nodes [because ReLU produces 50% zeros]. Key: Format: Change the transfer format of zeros.
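The slides do not specify the new format. As one possible illustration, the sketch below sends a bitmask marking the non-zero positions plus only the non-zero values, so each zero costs one bit instead of a full number. The function names and the bitmask layout are assumptions made for this example.

```python
def encode_zero_mask(activations):
    """Encode activations as (length, bitmask, non-zero values).

    Bit i of the mask is 1 when activations[i] is non-zero. Assumption:
    this bitmask layout is illustrative, not the format used in GraphDNN.
    """
    mask = 0
    values = []
    for i, v in enumerate(activations):
        if v != 0.0:
            mask |= 1 << i
            values.append(v)
    return len(activations), mask, values


def decode_zero_mask(length, mask, values):
    """Rebuild the dense activation list from the encoded form."""
    remaining = iter(values)
    return [next(remaining) if (mask >> i) & 1 else 0.0 for i in range(length)]


relu_output = [0.0, 1.5, 0.0, 0.0, 2.0]
length, mask, values = encode_zero_mask(relu_output)
restored = decode_zero_mask(length, mask, values)
```

With about half of the activations equal to zero, this layout transfers roughly half as many values plus one bit per activation.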

3 Experiments & Main Contribution

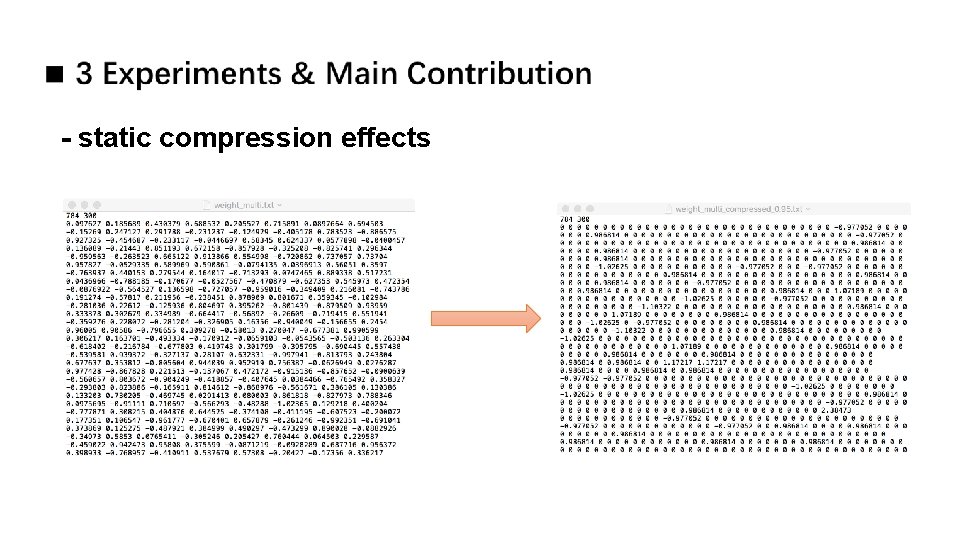

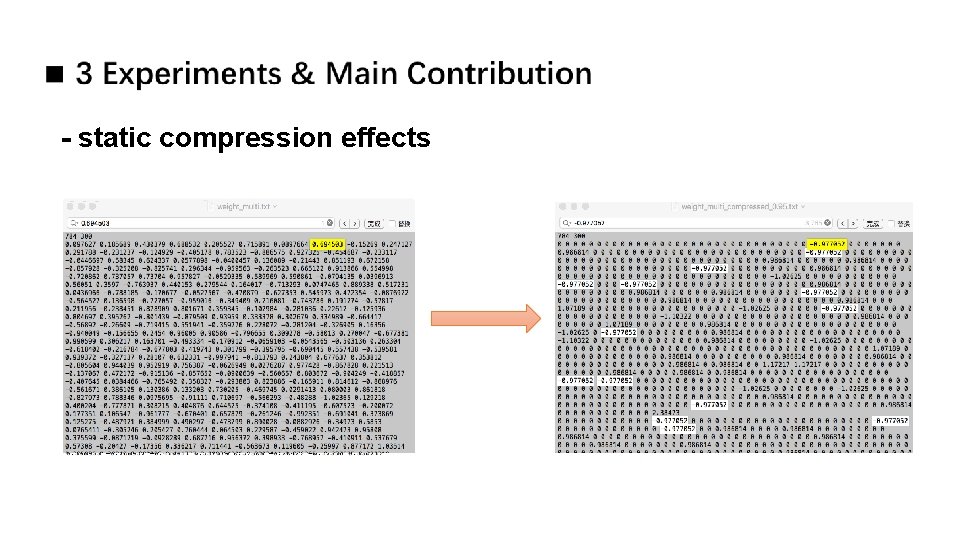

- static compression effects


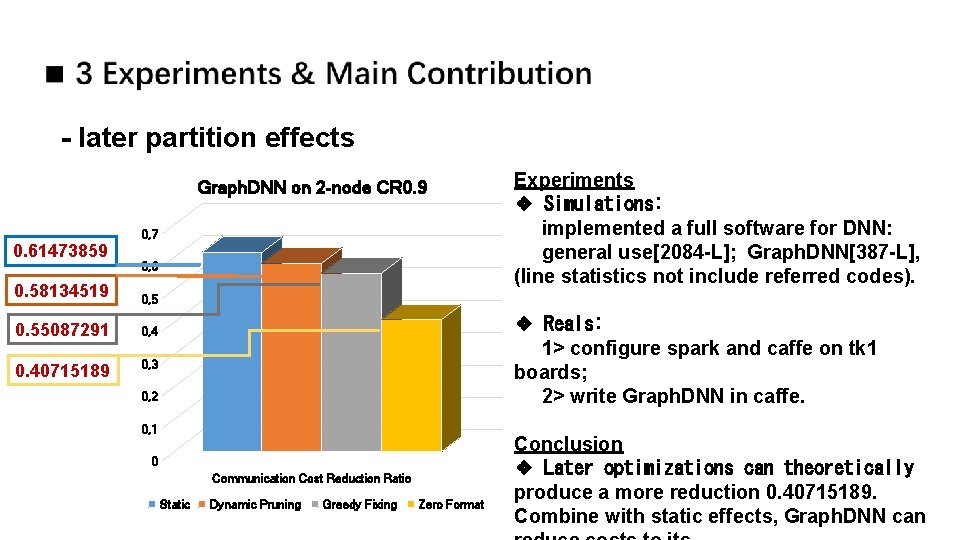

- later partition effects (GraphDNN on 2 nodes)
  - Chart: Communication Cost Reduction Ratio (CR). Series labels: Static, Dynamic Pruning, Greedy Fixing, Zero Format. Data labels: 0.61473859, 0.58134519, 0.55087291, 0.40715189.
- Experiments
  - Simulations: implemented complete software for DNNs: general use [2084-L]; GraphDNN [387-L] (line counts exclude referenced code).
  - Real systems: 1> configured Spark and Caffe on TK1 boards; 2> implemented GraphDNN in Caffe.
- Conclusion
  - The later optimizations can theoretically produce a further reduction (0.40715189). Combined with the static effects, GraphDNN can ...

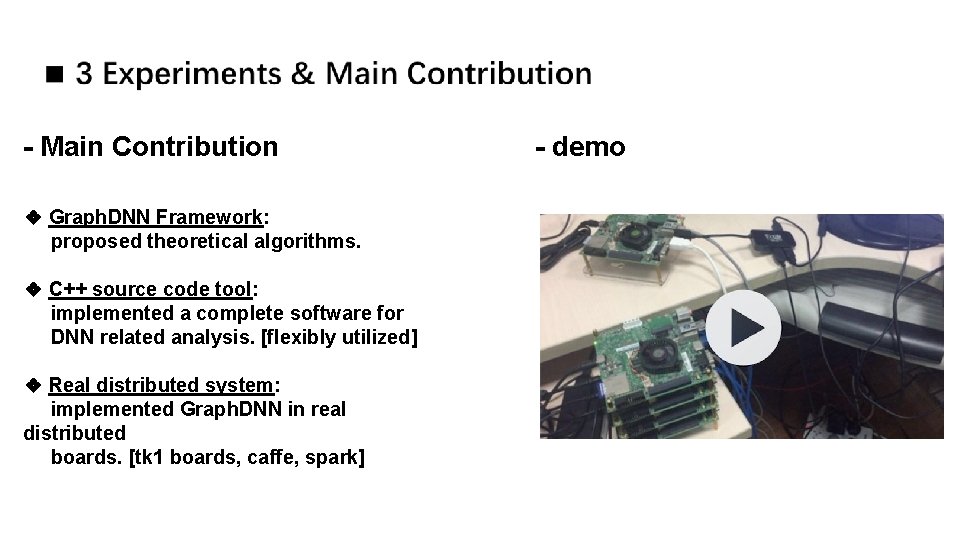

- Main Contribution
  - GraphDNN Framework: proposed the theoretical algorithms.
  - C++ source code tool: implemented complete software for DNN-related analysis [flexibly utilized].
  - Real distributed system: implemented GraphDNN on real distributed boards [TK1 boards, Caffe, Spark].
- demo
