Deep Learning with Consumer Transaction Data Eric Greene

- Slides: 19

Deep Learning with Consumer Transaction Data Eric Greene Principal Consultant

A little about me… • Architect & Engineer focused on integrating machine learning and real-time analytics into modern applications • Soon starting a new role with Think Big Analytics in Melbourne, Australia • Last year working with Keras and TensorFlow • Currently in transition from Wells Fargo AI Labs • Four years working with Hadoop and Spark

Legal Disclaimer The views and opinions expressed in this article are those of the authors and do not necessarily reflect the official policy or position of any other entity. Examples of analysis performed within this presentation are only examples. They should not be utilized in real-world analytic products as they are based only on very limited and dated open source information. Assumptions made within the analysis are not reflective of the position of any entity.

Deep learning is awesome! What about my use case? Most deep learning literature on time series is about text, image or sound data.

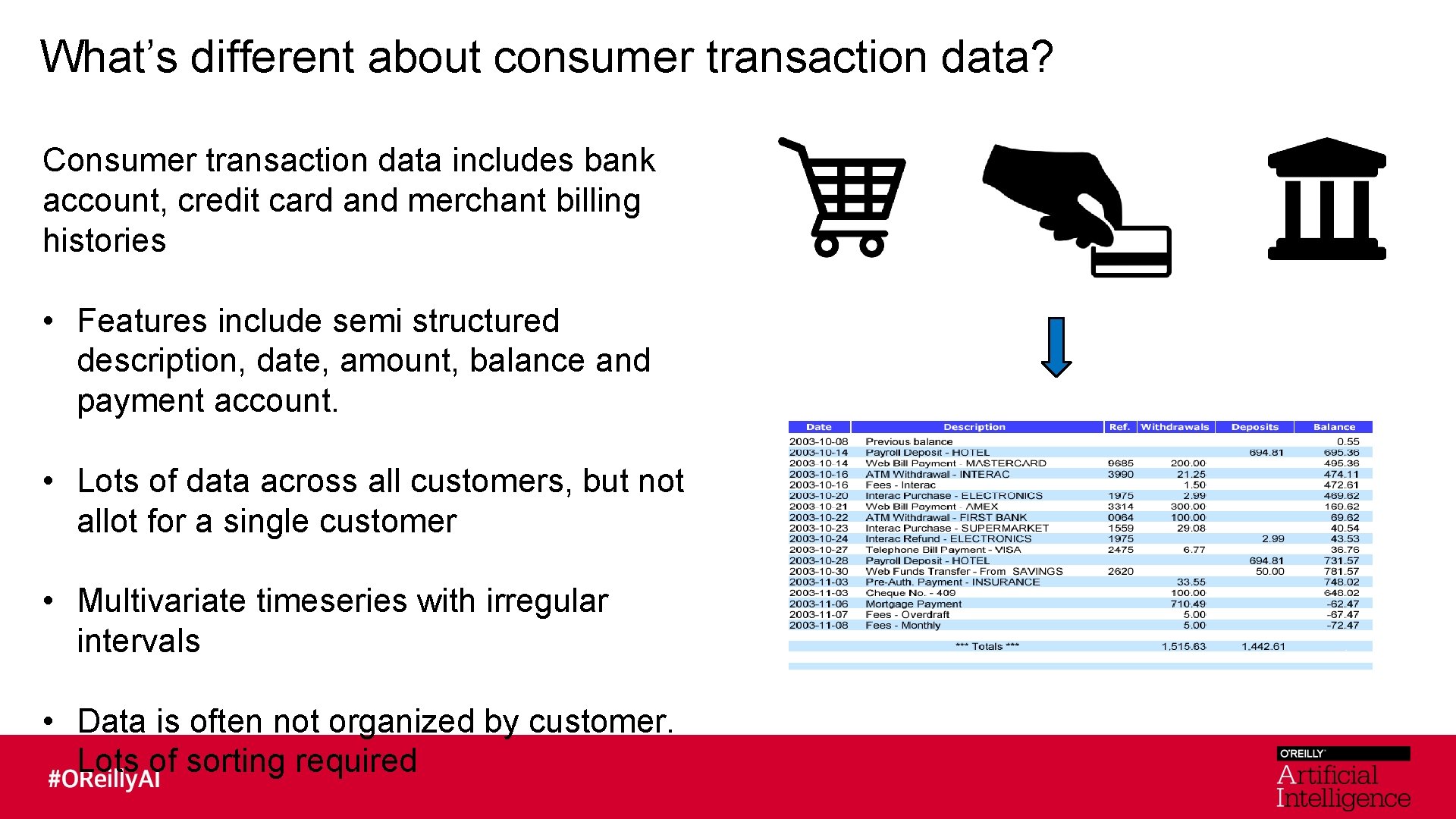

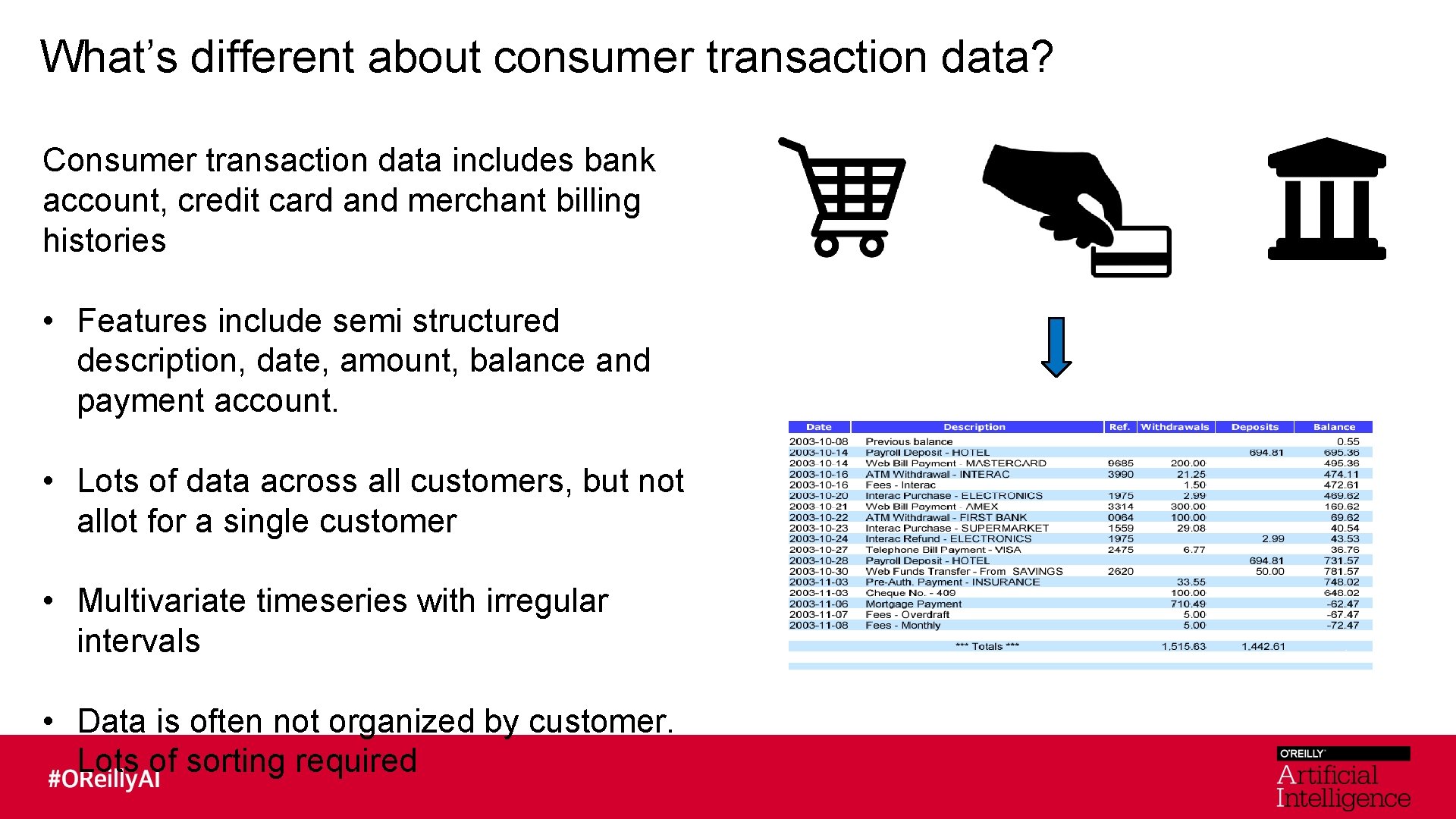

What’s different about consumer transaction data? Consumer transaction data includes bank account, credit card and merchant billing histories • Features include semi-structured description, date, amount, balance and payment account. • Lots of data across all customers, but not a lot for a single customer • Multivariate time series with irregular intervals • Data is often not organized by customer. Lots of sorting required

What are the use cases? A short list of useful insights: • Predict future transactions • Predict future spending or income within specific categories • Categorize transactions • Detect anomalies for fraud, overspending • Segmentation. Identify groups of transactions during shopping, travel, life events.

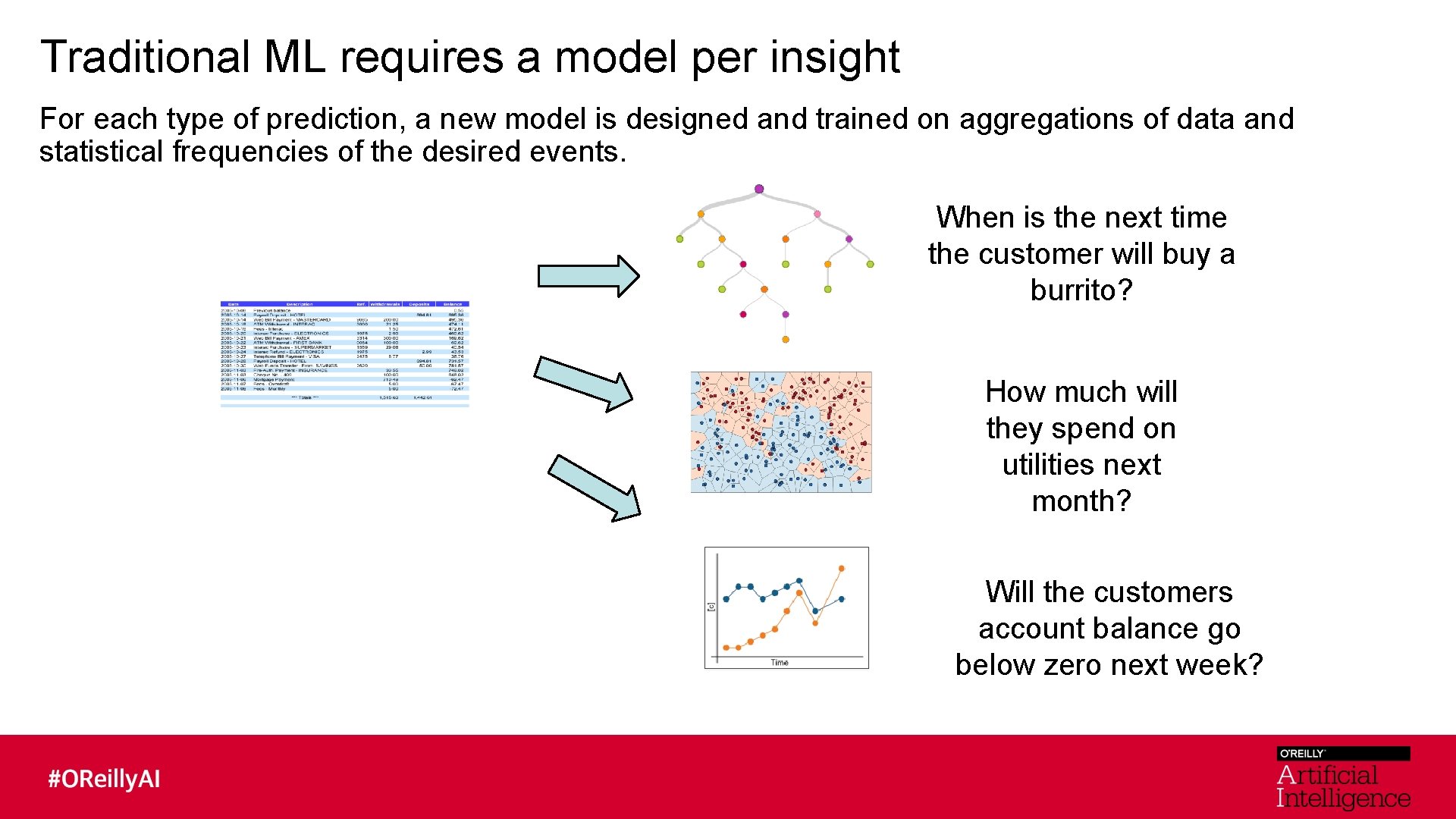

Traditional ML requires a model per insight For each type of prediction, a new model is designed and trained on aggregations of data and statistical frequencies of the desired events. When is the next time the customer will buy a burrito? How much will they spend on utilities next month? Will the customer's account balance go below zero next week?

That’s a lot of work Every insight requires separate data science and engineering efforts. [Diagram labels: Data Scientist, Models]

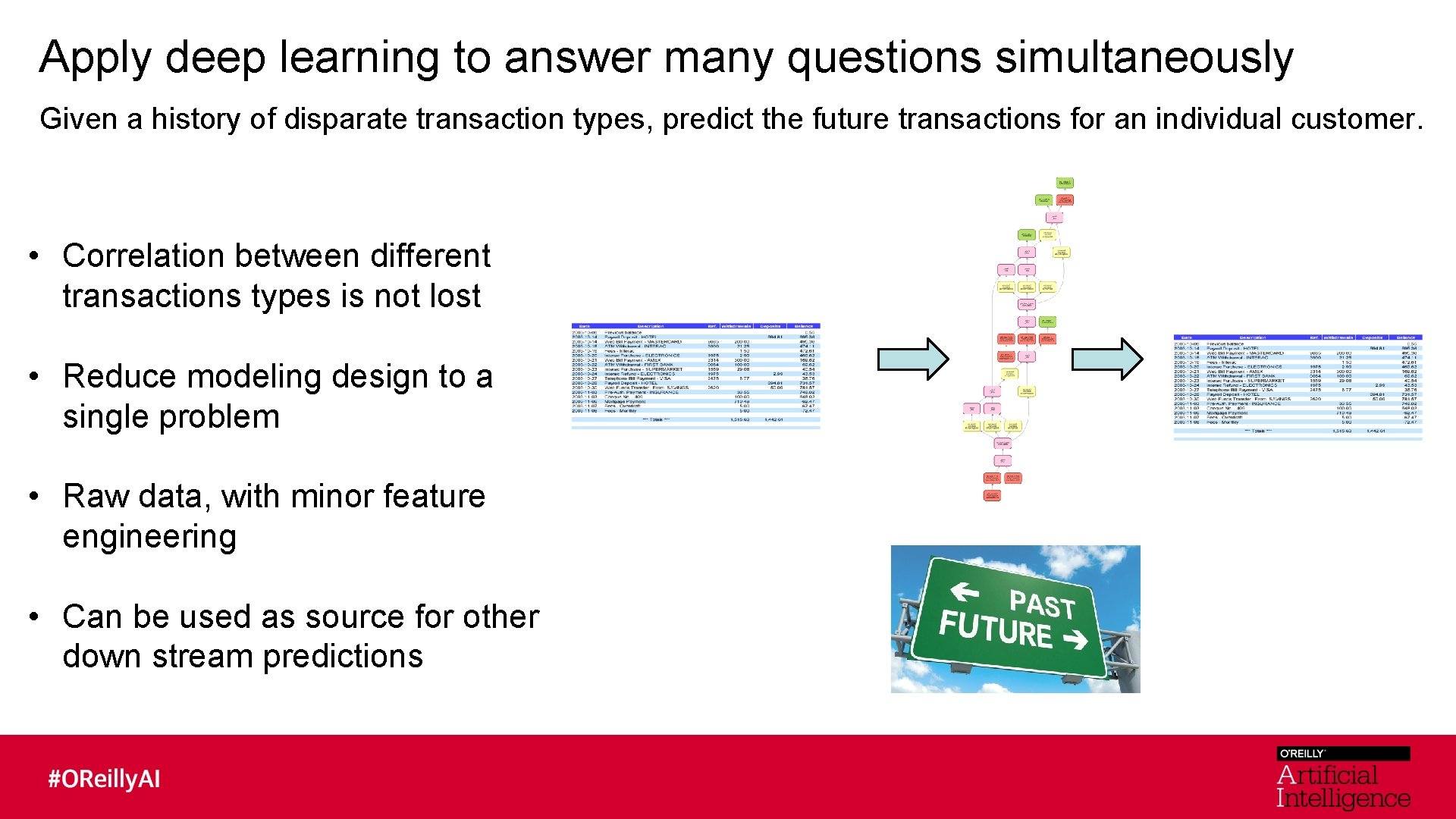

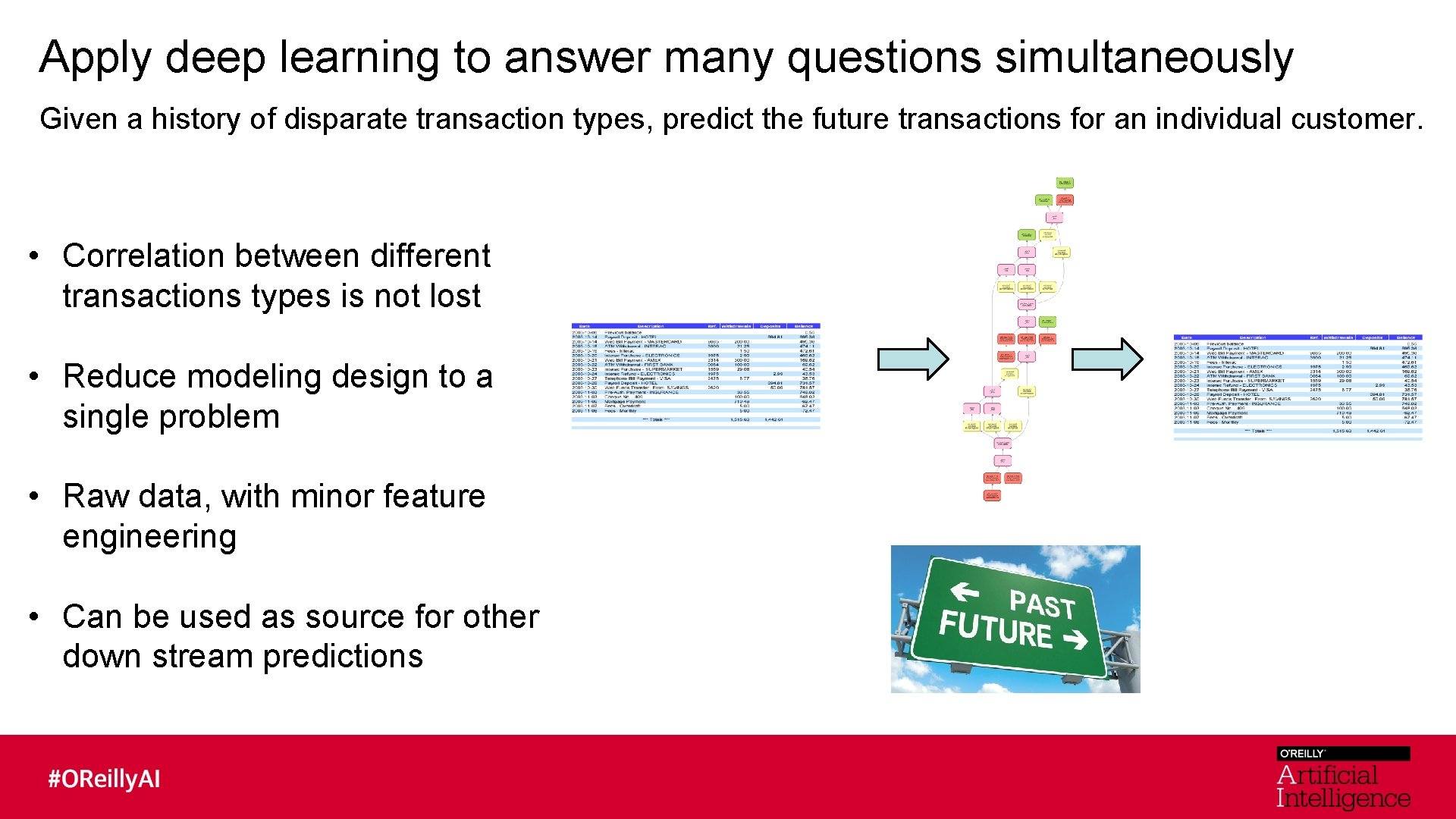

Apply deep learning to answer many questions simultaneously Given a history of disparate transaction types, predict the future transactions for an individual customer. • Correlation between different transaction types is not lost • Reduce modeling design to a single problem • Raw data, with minor feature engineering • Can be used as a source for other downstream predictions

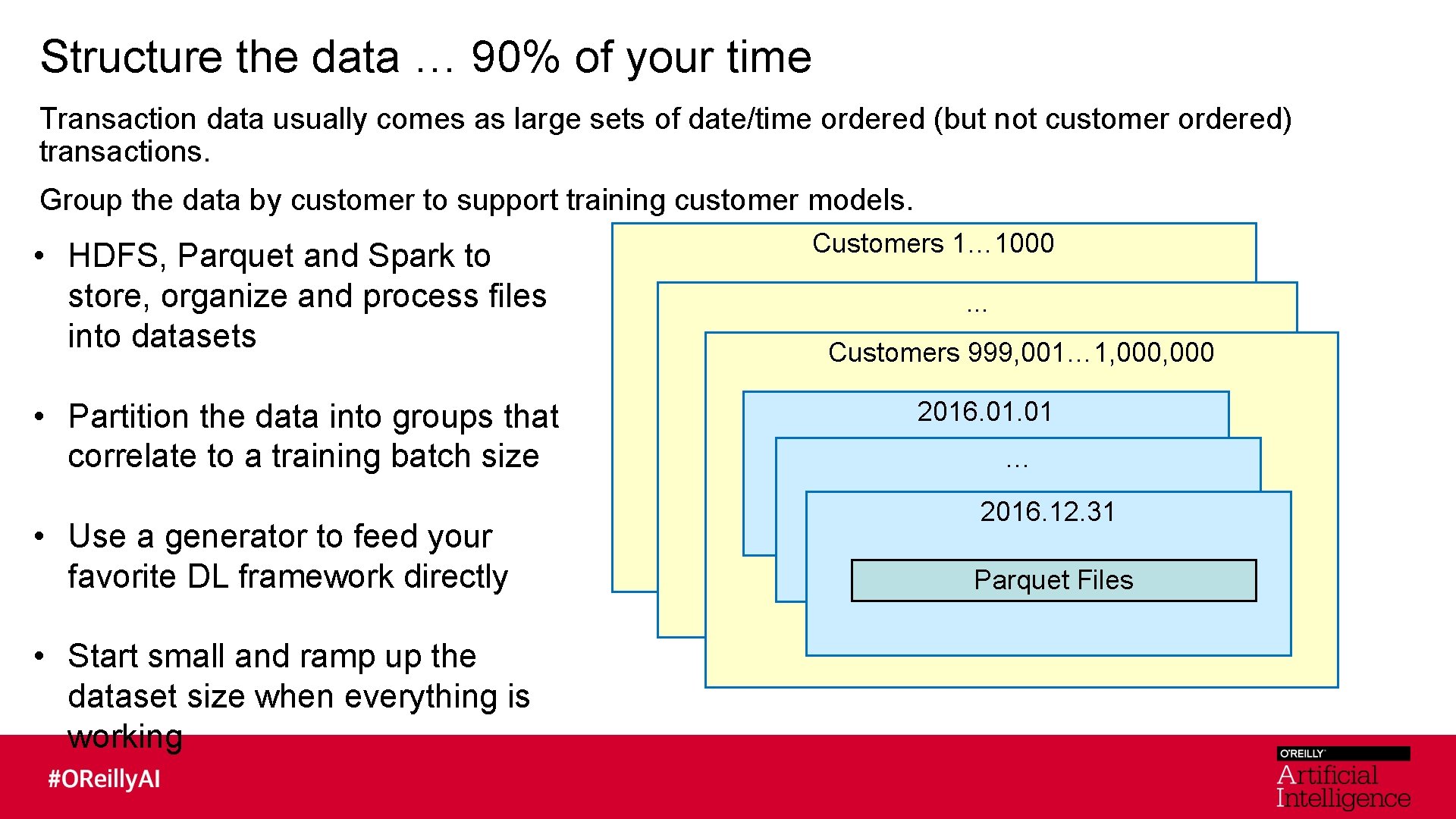

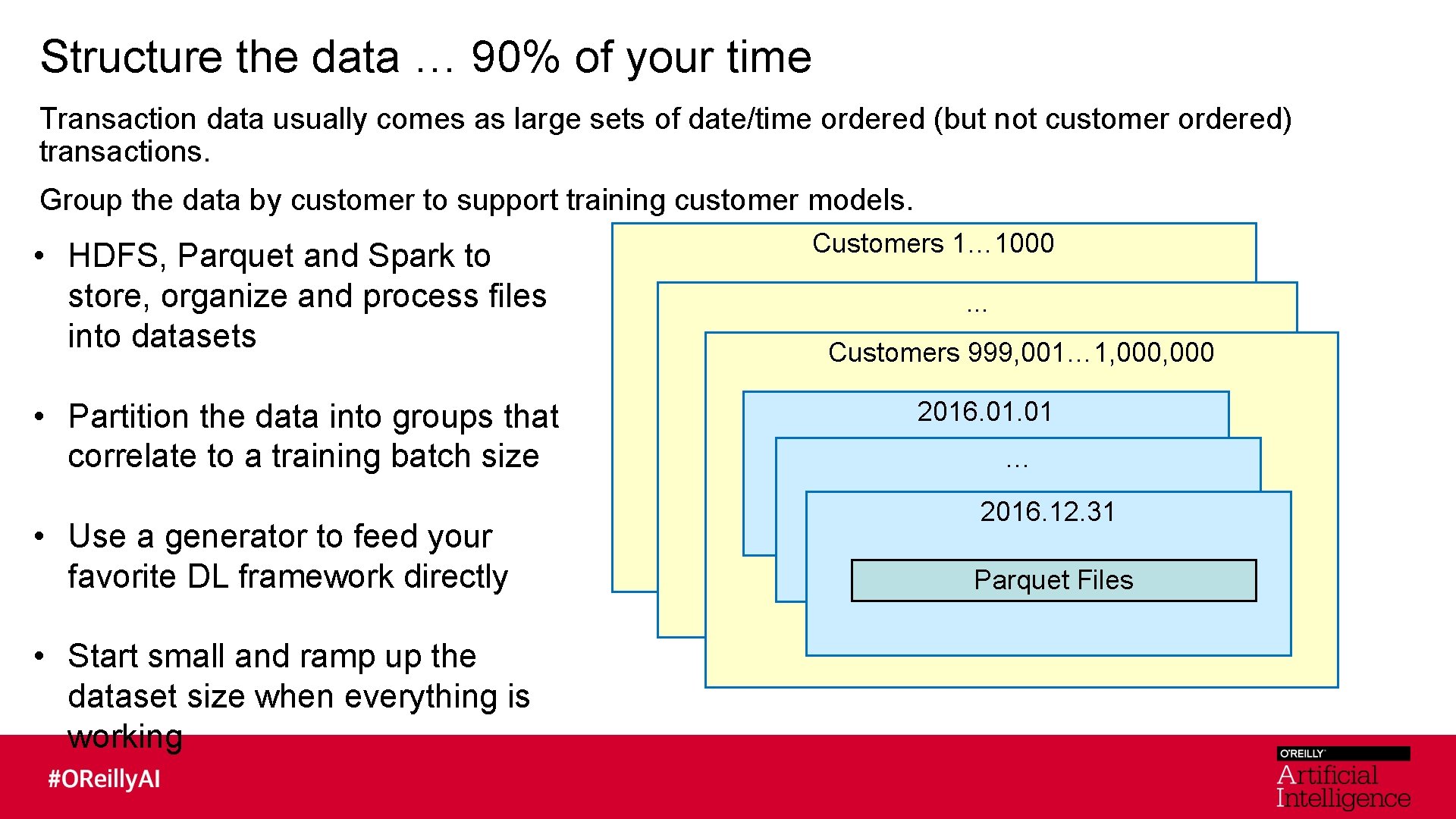

Structure the data … 90% of your time Transaction data usually comes as large sets of date/time ordered (but not customer ordered) transactions. Group the data by customer to support training customer models. • HDFS, Parquet and Spark to store, organize and process files into datasets • Partition the data into groups that correlate to a training batch size • Use a generator to feed your favorite DL framework directly • Start small and ramp up the dataset size when everything is working

[Diagram: Parquet files partitioned by customer range (Customers 1…1000, …, Customers 999,001…1,000) and by date (2016.01 … 2016.12.31)]
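
The grouping and generator steps can be sketched in plain Python for a small in-memory sample. This is only an illustration under assumptions, not the pipeline used in the talk: the field names `customer_id` and `date`, the ISO date strings, and the helper names `customer_histories` and `batches` are all hypothetical, and at full scale the sort would be done in Spark rather than in memory.

```python
from itertools import groupby
from operator import itemgetter


def customer_histories(transactions):
    """Yield (customer_id, history) with each history sorted by date.

    Assumes each transaction is a dict with hypothetical keys
    'customer_id' and 'date' (ISO 'YYYY-MM-DD' strings sort correctly).
    """
    ordered = sorted(transactions, key=itemgetter("customer_id", "date"))
    for customer_id, group in groupby(ordered, key=itemgetter("customer_id")):
        yield customer_id, list(group)


def batches(transactions, batch_size):
    """Generator that yields lists of up to batch_size customer histories."""
    batch = []
    for customer_id, history in customer_histories(transactions):
        batch.append((customer_id, history))
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # final, possibly smaller, batch
        yield batch
```

A training loop would iterate over `batches(rows, batch_size)` and hand each batch to the encoding step, which keeps the "start small" advice easy to follow: the same generator works on a few hundred rows or on one partition at a time.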

Use existing model architectures for word prediction Transactions aren’t Shakespeare, but let’s treat them like it! • Word prediction models using LSTM and 1D convolutions have been successful at predicting the next words in a sentence. • Transactions are more repetitive and structured, so they should work even better. • Companies have millions of rows of transactions for their customers. Plenty of data!

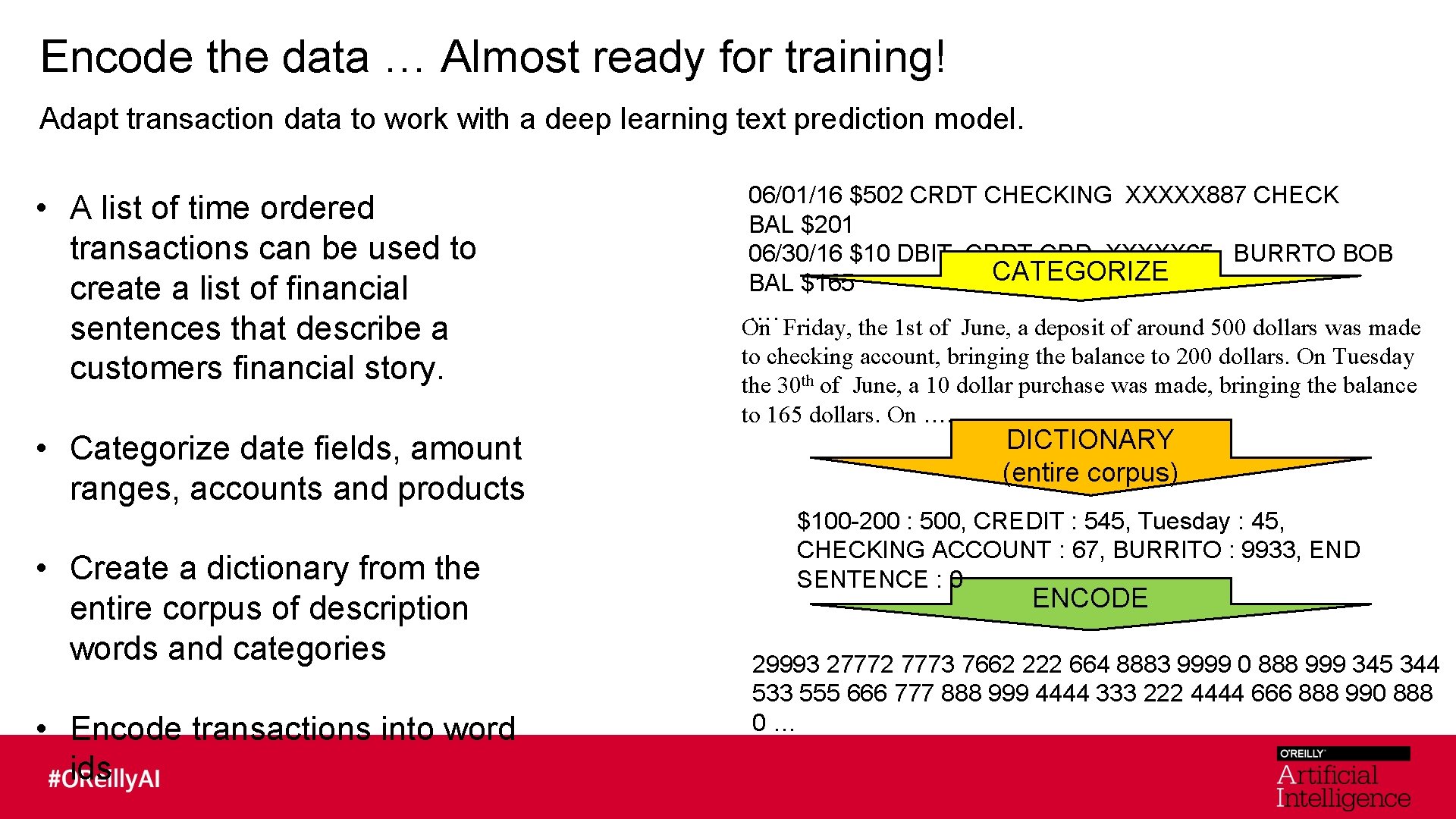

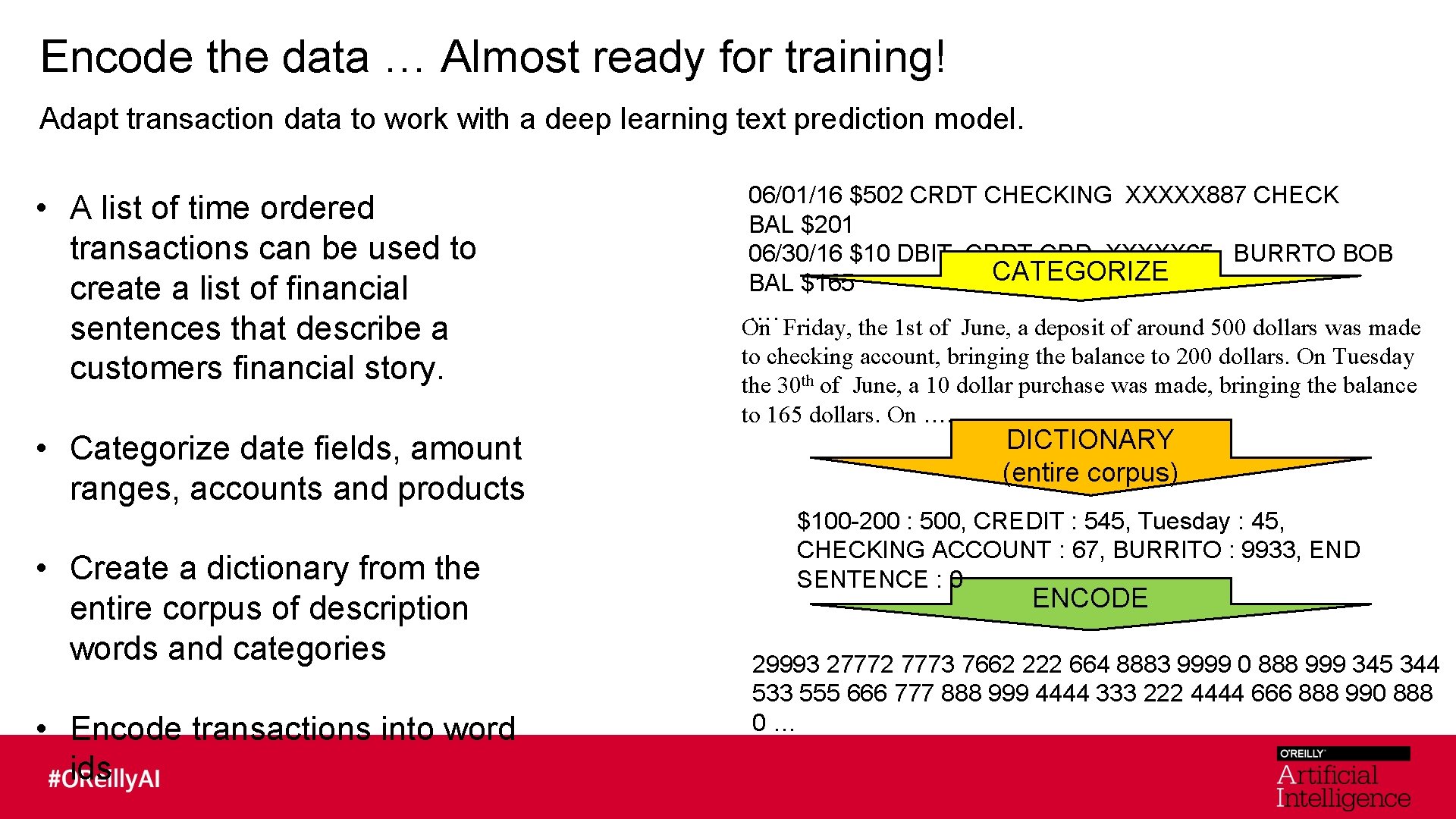

Encode the data … Almost ready for training! Adapt transaction data to work with a deep learning text prediction model. • A list of time-ordered transactions can be used to create a list of financial sentences that describe a customer's financial story. • Categorize date fields, amount ranges, accounts and products • Create a dictionary from the entire corpus of description words and categories • Encode transactions into word IDs

Raw transactions:
06/01/16 $502 CRDT CHECKING XXXXX 887 CHECK BAL $201
06/30/16 $10 DBIT CRD XXXXX 65 BURRTO BOB BAL $165
….

CATEGORIZE: On Friday, the 1st of June, a deposit of around 500 dollars was made to checking account, bringing the balance to 200 dollars. On Tuesday the 30th of June, a 10 dollar purchase was made, bringing the balance to 165 dollars. On ….

DICTIONARY (entire corpus): $100-200 : 500, CREDIT : 545, Tuesday : 45, CHECKING ACCOUNT : 67, BURRITO : 9933, END SENTENCE : 0

ENCODE: 29993 27772 7773 7662 222 664 8883 9999 0 888 999 345 344 533 555 666 777 888 999 4444 333 222 4444 666 888 990 888 0…
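
A minimal sketch of the categorize, dictionary and encode steps, using only the Python standard library. The transaction field names, the 100-dollar bucket width, the token spellings and the helper names are assumptions made for illustration; only the idea of an end-of-sentence token with ID 0 is taken from the slide.

```python
from datetime import datetime

END_SENTENCE = "END_SENTENCE"  # the slide's dictionary maps END SENTENCE to 0
DAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
        "Friday", "Saturday", "Sunday"]


def amount_bucket(amount, width=100):
    """Map an amount to a range token; the bucket width is an assumption."""
    low = int(amount // width) * width
    return f"${low}-{low + width}"


def to_sentence(txn):
    """Turn one transaction dict (hypothetical field names) into word tokens."""
    date = datetime.strptime(txn["date"], "%m/%d/%y")
    words = [DAYS[date.weekday()], txn["type"], amount_bucket(txn["amount"])]
    words += txn["description"].split()
    words.append("BAL_" + amount_bucket(txn["balance"]))
    words.append(END_SENTENCE)
    return words


def build_dictionary(sentences):
    """Assign an integer ID to every distinct token in the corpus."""
    vocab = {END_SENTENCE: 0}
    for sentence in sentences:
        for word in sentence:
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(sentences, vocab):
    """Flatten the sentences into a single list of word IDs."""
    return [vocab[word] for sentence in sentences for word in sentence]
```

For one customer, `sentences = [to_sentence(t) for t in history]` followed by `encode(sentences, build_dictionary(sentences))` gives the flat ID sequence. In practice the dictionary would be built once over the entire corpus, as the slide says, not per customer.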

Train the models 3 Months later … the train has arrived • Create word embeddings. • Modify a well-known deep CNN architecture to work with 1D text data. • Use an RNN variant such as LSTM or GRU. • Or use the next best deep learning text model you discovered at the O'Reilly AI Conference….

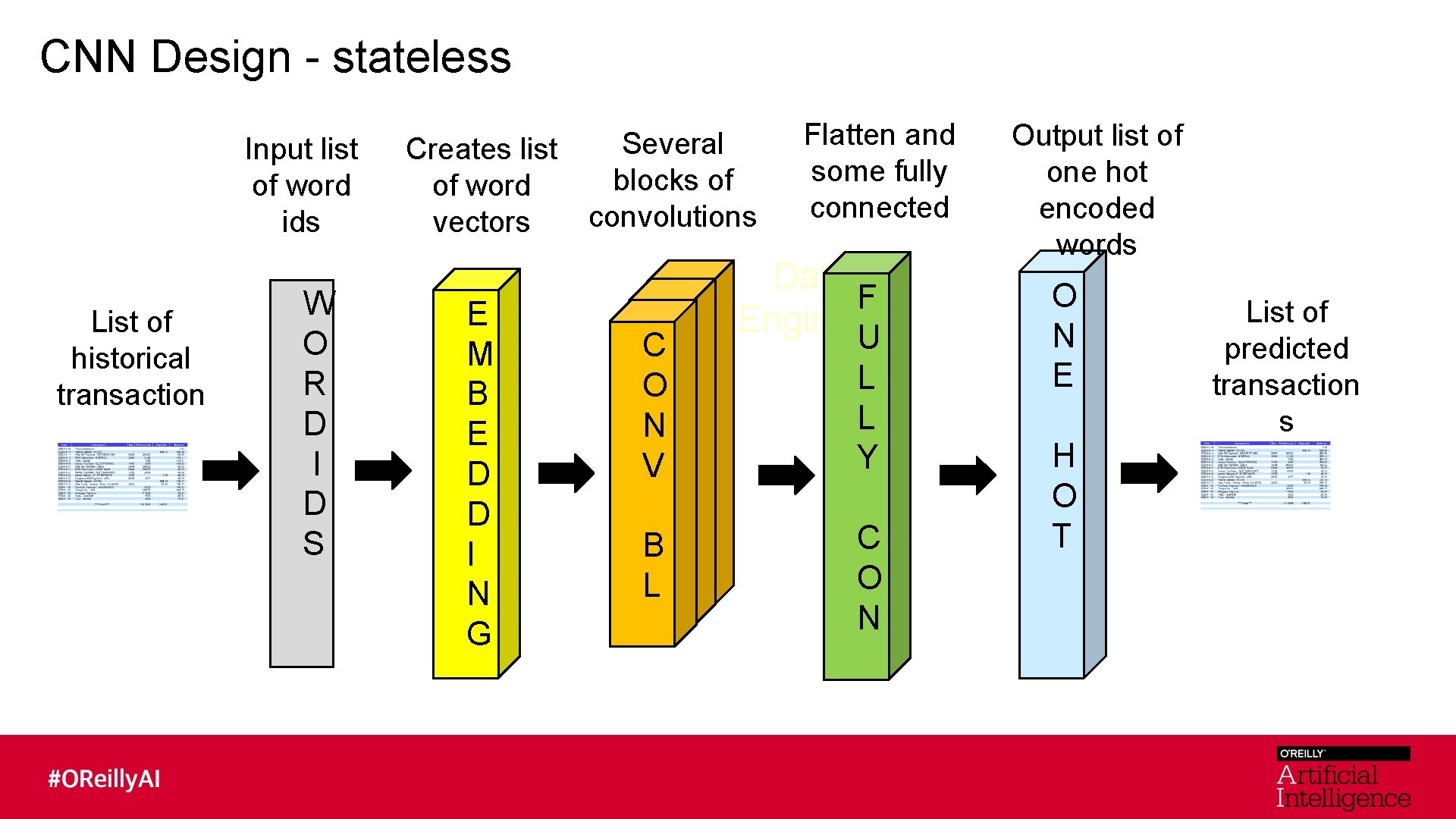

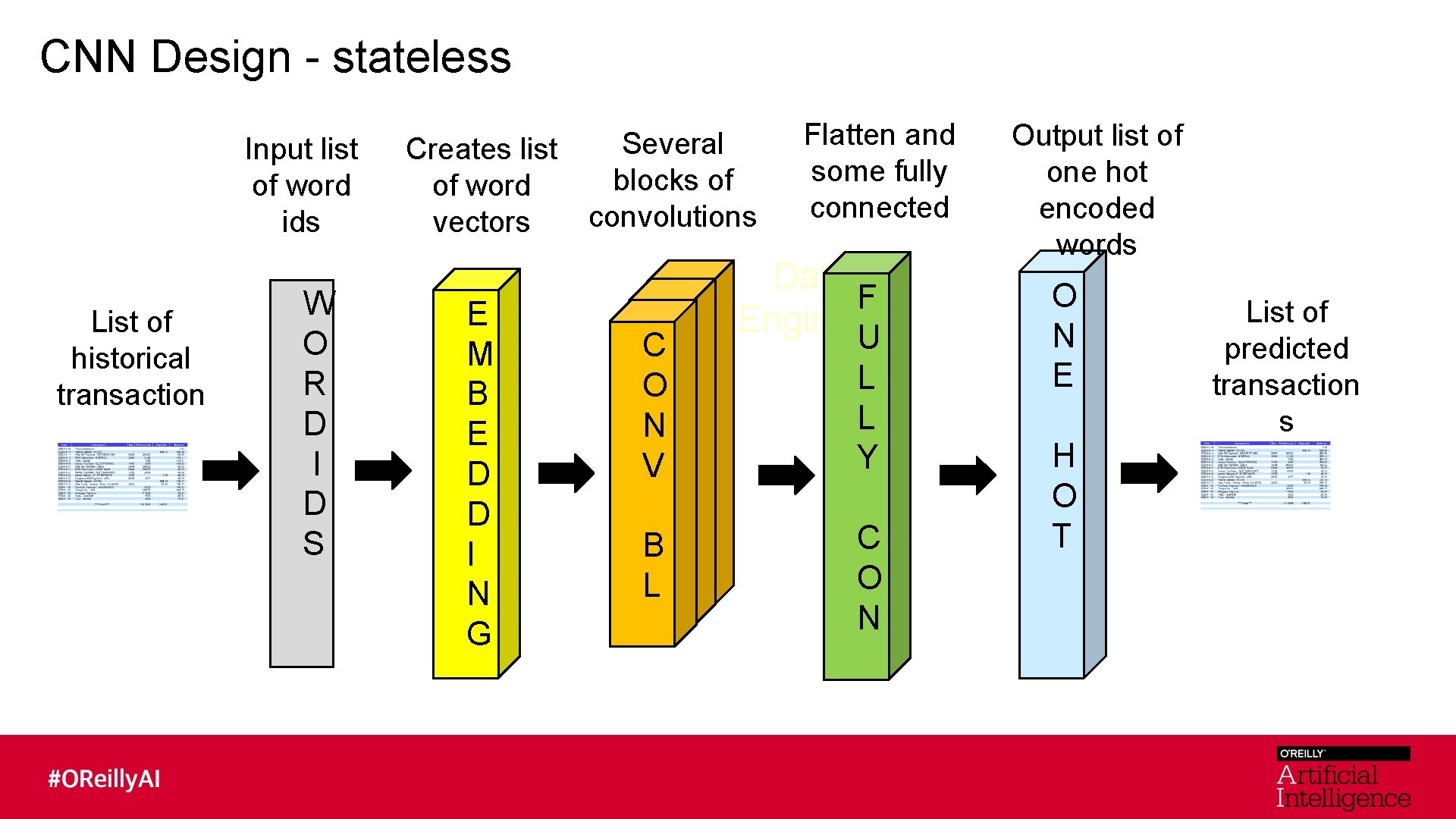

CNN Design - stateless • Input: list of word IDs (list of historical transactions) • Word embeddings: creates list of word vectors • Conv blocks: several blocks of convolutions • Fully connected: flatten and some fully connected layers • One-hot: output list of one-hot encoded words (list of predicted transactions)
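
Because the stateless design maps a fixed-length list of historical word IDs to a fixed-length list of predicted words, each encoded customer sequence has to be cut into fixed-size input/target pairs before training. The sketch below shows one way to do that with a sliding window; `make_windows`, `n_in`, `n_out` and `stride` are hypothetical names, and the talk does not say how the windows were actually built.

```python
def make_windows(word_ids, n_in, n_out, stride=1):
    """Yield (history, target) pairs of fixed length n_in and n_out.

    Sequences shorter than n_in + n_out produce no windows.
    """
    last_start = len(word_ids) - n_in - n_out
    for start in range(0, last_start + 1, stride):
        split = start + n_in
        yield word_ids[start:split], word_ids[split:split + n_out]
```

Each `history` would feed the embedding layer and each `target` would be one-hot encoded for the output layer. A larger `stride` reduces overlap between windows and therefore the number of training examples.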

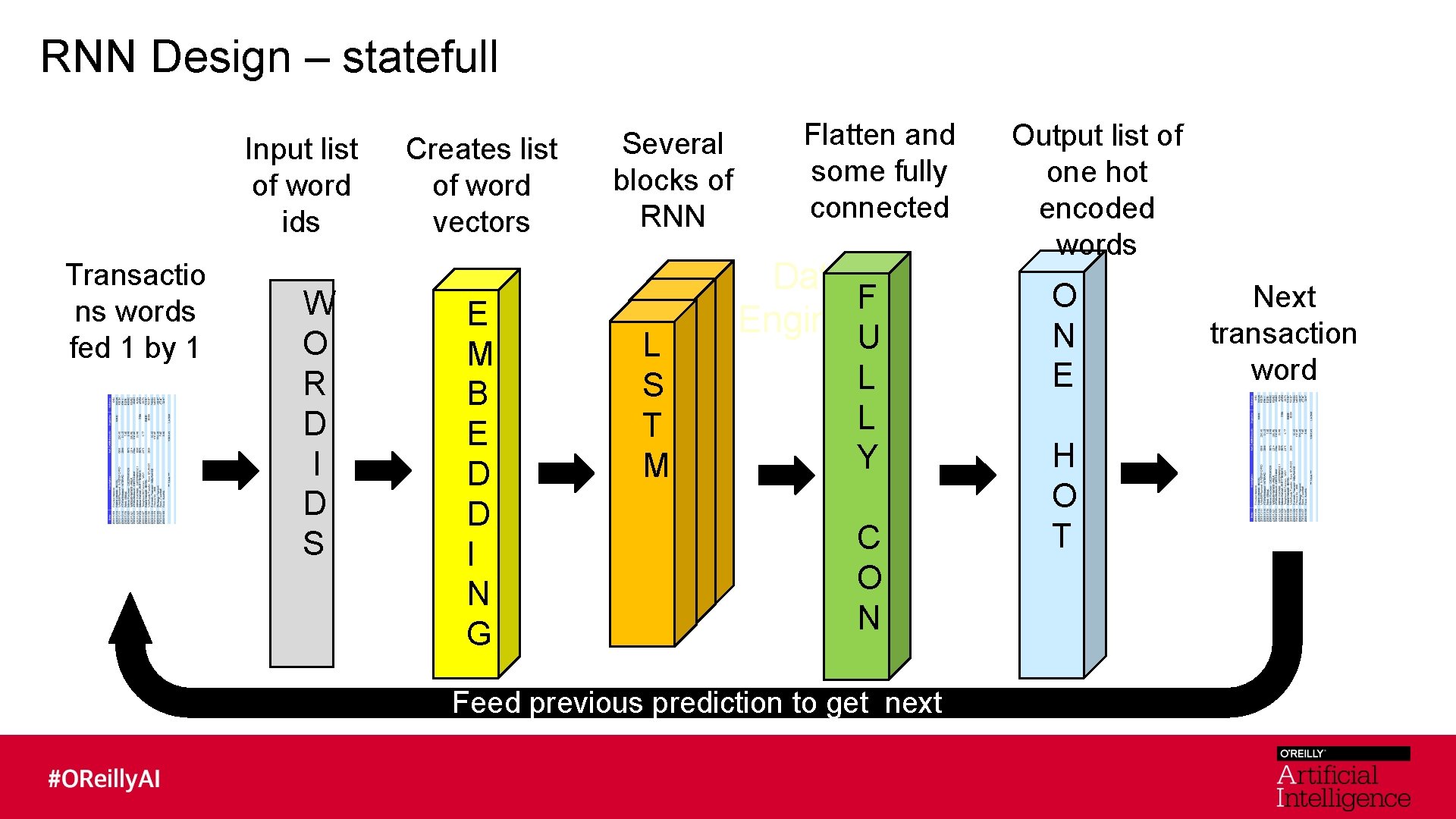

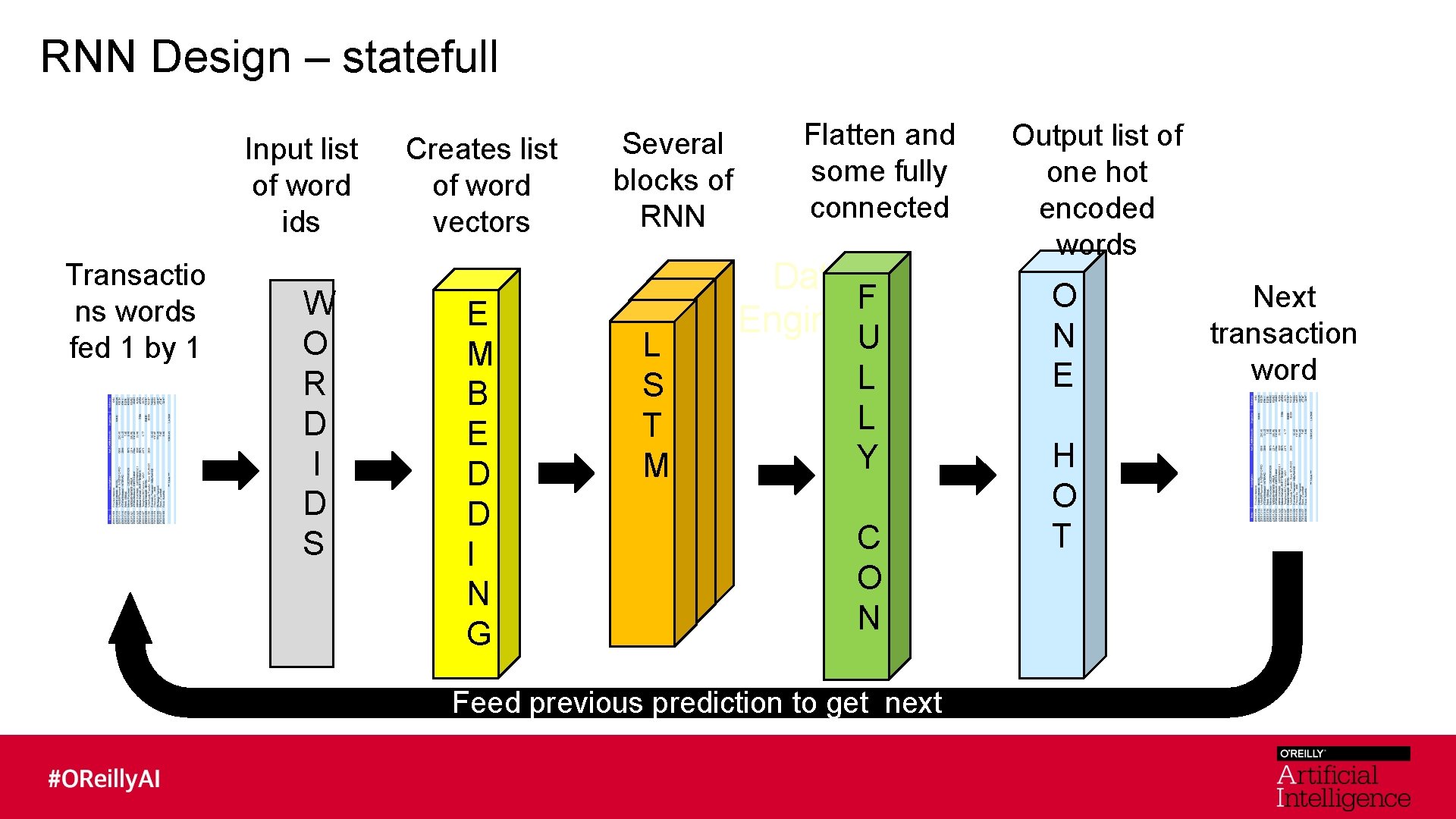

RNN Design – stateful • Input: list of word IDs (transaction words fed one by one) • Word embeddings: creates list of word vectors • LSTM: several blocks of RNN • Fully connected: flatten and some fully connected layers • One-hot: output list of one-hot encoded words (next transaction word) • Feed the previous prediction back in to get the next word
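
The "feed previous prediction to get next" loop can be sketched independently of any framework. In the code below, `BigramStandIn` is a deliberately trivial, hypothetical stand-in for a trained stateful LSTM (it remembers only the last word), and `generate` shows the control flow: warm up the state on the known history, then feed each prediction back in until the end-of-sentence ID (0 in the slide's dictionary) or a word limit is reached.

```python
class BigramStandIn:
    """Toy stand-in for a stateful RNN: its 'state' is just the last word."""

    def __init__(self, table, default=0):
        self.table = table      # maps word ID -> predicted next word ID
        self.default = default  # prediction for unseen words
        self.last = None

    def reset_state(self):
        self.last = None

    def step(self, word_id):
        """Consume one word, update state, return the predicted next word."""
        self.last = word_id
        return self.table.get(word_id, self.default)


def generate(model, history, max_words, end_id=0):
    """Feed history one word at a time, then feed predictions back in."""
    model.reset_state()
    next_id = None
    for word_id in history:
        next_id = model.step(word_id)
    predicted = []
    while next_id is not None and len(predicted) < max_words:
        predicted.append(next_id)
        if next_id == end_id:
            break
        next_id = model.step(next_id)
    return predicted
```

With a real model, `step` would run one timestep of the network and take the most likely word from the one-hot style output. The loop structure is what gives the stateful design its flexibility in the number of historical and predicted words.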

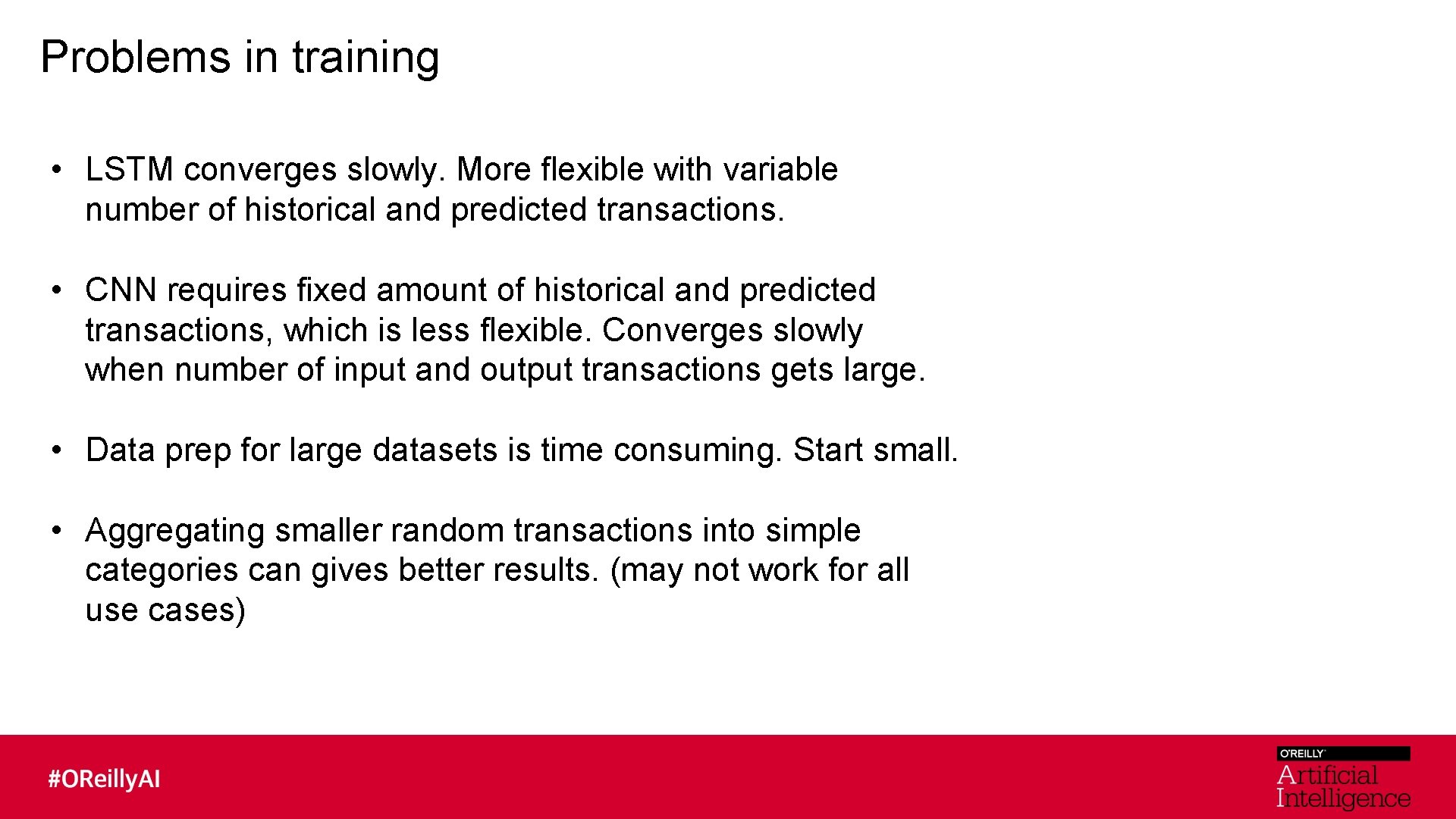

Problems in training • LSTM converges slowly, but is more flexible: it handles a variable number of historical and predicted transactions. • CNN requires a fixed number of historical and predicted transactions, which is less flexible. It converges slowly when the number of input and output transactions gets large. • Data prep for large datasets is time consuming. Start small. • Aggregating smaller random transactions into simple categories can give better results (may not work for all use cases).

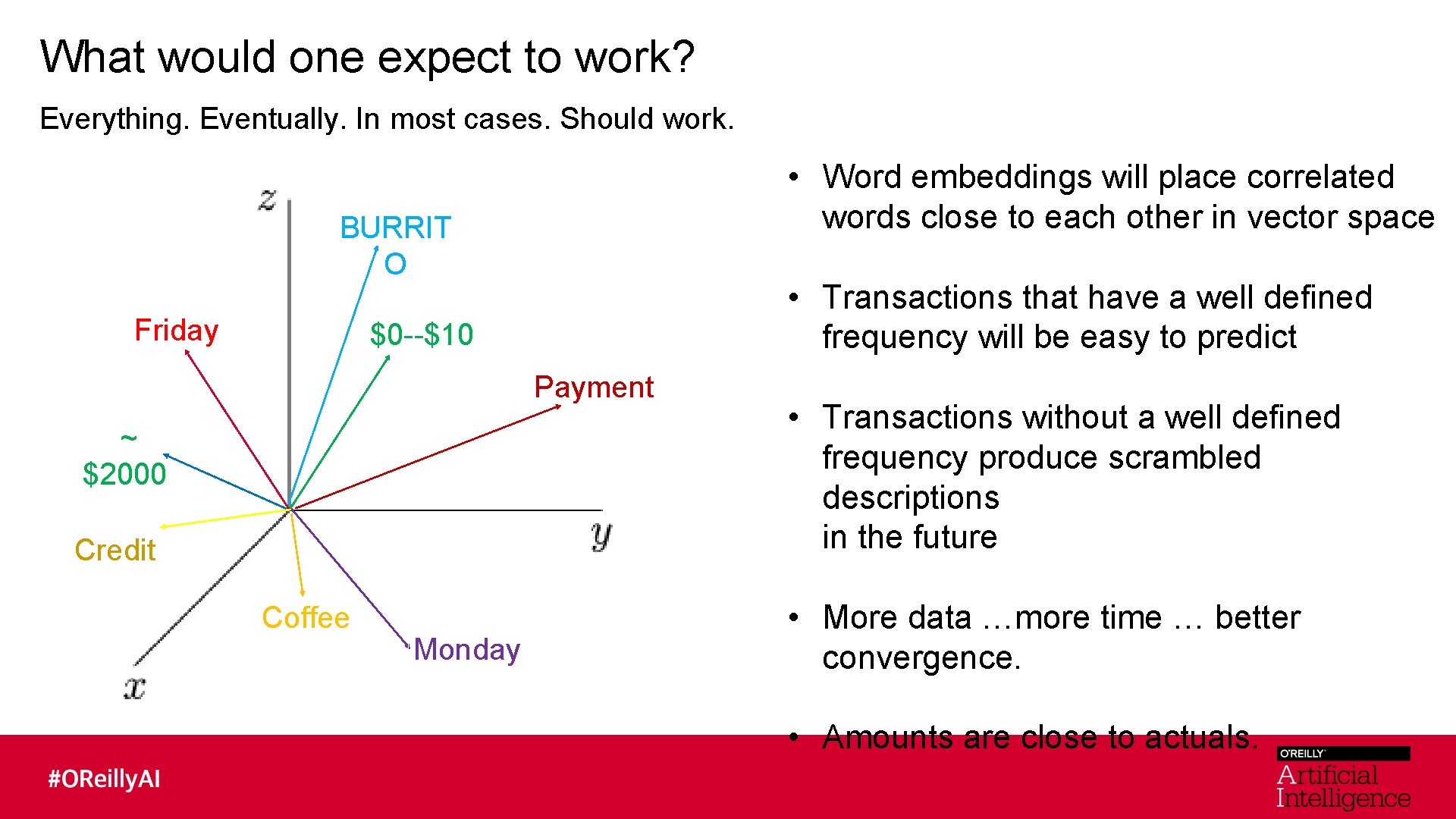

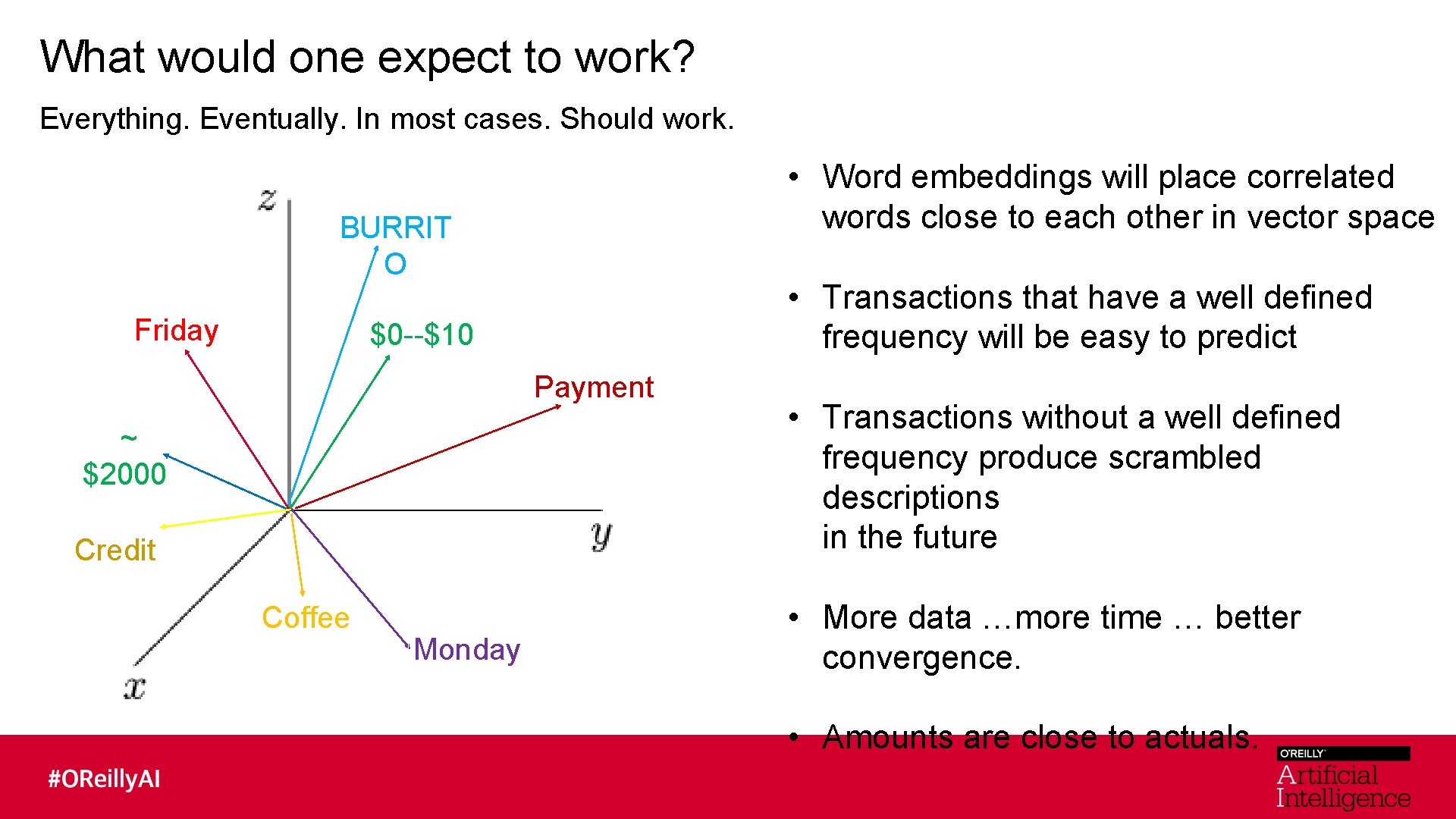

What would one expect to work? Everything. Eventually. In most cases. Should work. • Word embeddings will place correlated words close to each other in vector space (figure labels: BURRITO, Friday) • Transactions that have a well-defined frequency will be easy to predict (figure labels: $0-$10, Payment, ~$2000, Credit, Coffee, Monday) • Transactions without a well-defined frequency produce scrambled descriptions in the future • More data … more time … better convergence. • Amounts are close to actuals.

But also expect the strange… • There is a lot of randomness in historical daily transactions, so the model will show randomness in the predicted transactions. • The cost function gives equal importance to getting every word correct. • More data … better convergence. • Amounts are close to actuals within categories. Some descriptions will be jumbled.
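
The point about equal importance can be made concrete with a small calculation. The slides do not name the cost function; the sketch below assumes the usual choice for one-hot word outputs, categorical cross-entropy averaged over word positions, and the function name is hypothetical.

```python
import math


def sequence_cross_entropy(predicted_probs, target_ids):
    """Mean cross-entropy over word positions, each position weighted equally.

    predicted_probs[i][w] is the predicted probability of word ID w at
    position i; target_ids[i] is the true word ID at that position.
    """
    losses = [-math.log(probs[target])
              for probs, target in zip(predicted_probs, target_ids)]
    return sum(losses) / len(losses)
```

Under this loss, an uncertain prediction costs the same whether it falls on an amount token or on a filler description word, since no position carries extra weight. That is one plausible reason amounts can land in the right category while descriptions come out jumbled.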

What’s Next? • Use the word embeddings for other models. • Reuse top layers of model for transfer learning in other problem areas. May work well across data sets. • Better models with more data. • Apply different model architectures as they become better understood. • Deploy. Does your model scale to millions of customers?

Questions?