Deep Learning on FPGAs: Past, Present, and Future

- Slides: 14

Deep Learning on FPGAs: Past, Present, and Future
Authors: Griffin Lacey, Graham W. Taylor, Shawki Areibi
Publisher/Conf.: arXiv
Presenter: 柯懷貿
Date: 2018/09/26
Department of Computer Science and Information Engineering, National Cheng Kung University, Taiwan, R.O.C.

Introduction
- Deep learning is the study of data-driven techniques that learn features automatically from data, rather than relying on extensive “feature engineering” by hand using expert domain-specific knowledge.
- Researchers and application scientists need better hardware acceleration to scale beyond current data and algorithm sizes.
- Current hardware acceleration for deep learning makes use of GPUs and FPGAs.
National Cheng Kung University CSIE Computer & Internet Architecture Lab 2

The Case for FPGAs
- Compared with GPUs, FPGAs offer more flexible hardware configuration and better performance per watt on subroutines that are important to deep learning.
- Many researchers and application scientists have avoided FPGAs because they require hardware-level programming; software-level programming models such as OpenCL make FPGAs a more attractive option.
- Reconfigurability, a large degree of parallelism, design tools with higher levels of abstraction, and stronger performance per watt could all benefit deep learning.

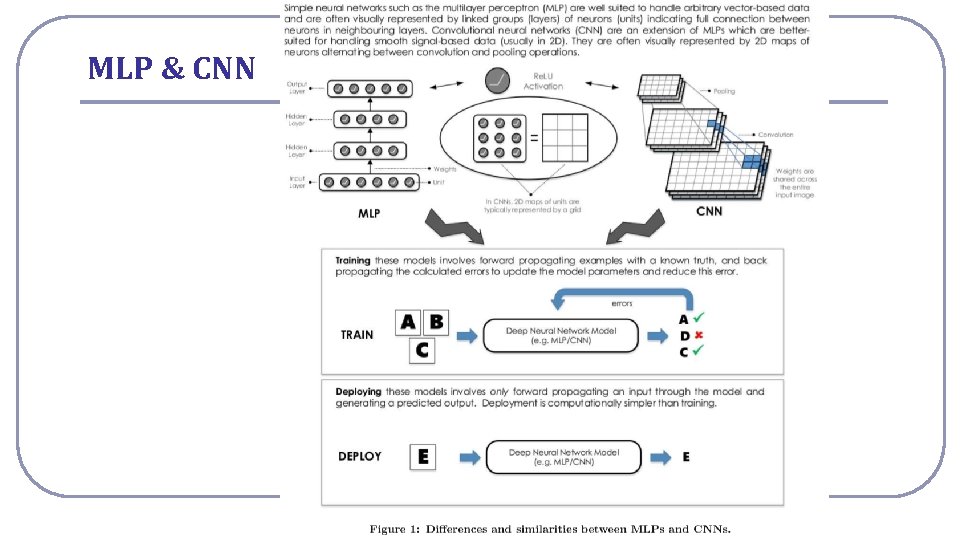

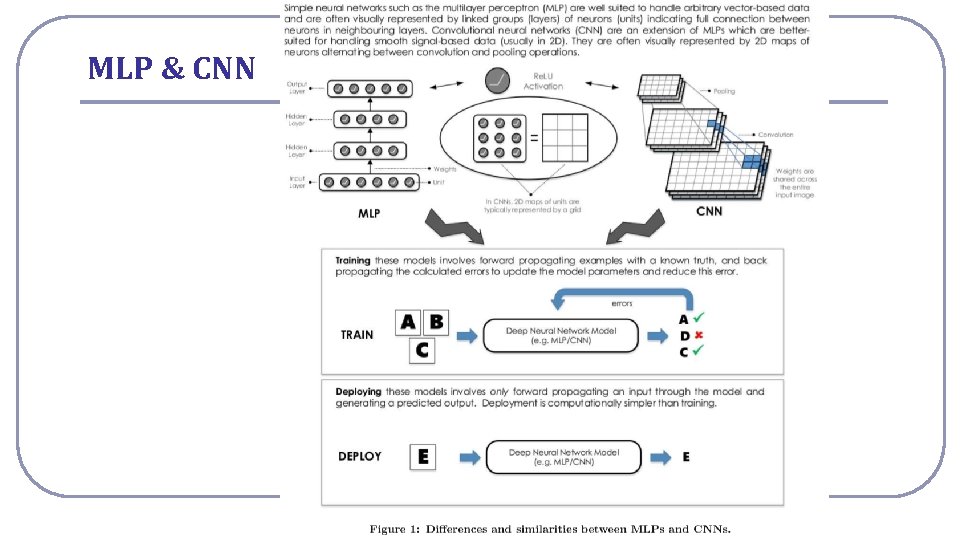

Deep Learning
- The field of deep learning emerged around 2006 after a long period of relative disinterest in neural network research. Interestingly, the early successes in the field were due to unsupervised learning.
- Three forms of parallelism make deep learning well suited to hardware accelerators: data parallelism, model parallelism, and pipeline parallelism.
- Multi-layer perceptrons (MLPs) and convolutional neural networks (CNNs) are the two neural network architectures that have received the most attention.
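Of the three forms of parallelism listed above, data parallelism is the simplest to illustrate. The following is a minimal sketch, not taken from the paper, assuming a one-parameter linear model y = w*x trained with mean-squared-error loss: the mini-batch is split into equal shards, each hypothetical worker computes the gradient on its own shard, and averaging the shard gradients reproduces the full-batch gradient. The names `grad_mse` and `data_parallel_grad` are illustrative only.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean((w*x - y)**2) with respect to w over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w, xs, ys, num_workers):
    """Split the batch into equal shards, compute one gradient per shard
    (one hypothetical worker each), then average the shard gradients."""
    if len(xs) % num_workers != 0:
        raise ValueError("batch size must be divisible by num_workers")
    shard = len(xs) // num_workers
    shard_grads = [
        # On real hardware these per-worker gradients are computed concurrently.
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    return sum(shard_grads) / num_workers


if __name__ == "__main__":
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [2.0, 4.0, 6.0, 8.0]  # data generated by y = 2x
    w = 0.5
    print(grad_mse(w, xs, ys))               # full-batch gradient
    print(data_parallel_grad(w, xs, ys, 2))  # same value from two shards
```

Both calls print -22.5 for this data. Equal shard sizes matter: with unequal shards, a plain average of shard gradients would no longer equal the full-batch mean.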

MLP & CNN
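As a rough illustration of the two architectures, the plain-Python sketch below (an assumption for illustration, not code from the paper) shows the core operation of each: a fully connected MLP layer followed by a ReLU activation, and a 2-D "valid" convolution of the kind used in CNN layers. As in most deep learning libraries, the convolution is computed as cross-correlation, without flipping the kernel.

```python
def dense_relu(x, weights, bias):
    """One fully connected (MLP) layer followed by ReLU.

    weights holds one row per output unit; each output is
    max(0, row . x + b).
    """
    return [
        max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(weights, bias)
    ]


def conv2d_valid(image, kernel):
    """2-D 'valid' convolution (cross-correlation, as in CNN layers).

    The kernel slides over every position where it fits entirely inside
    the image, so the output shrinks by (kernel size - 1) per dimension.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    print(dense_relu([1.0, 2.0], [[1.0, 1.0], [-1.0, 0.0]], [0.0, 0.5]))
    print(conv2d_valid([[1, 2, 3], [4, 5, 6], [7, 8, 9]], [[1, 0], [0, -1]]))
```

The difference that matters for hardware is weight reuse. For a single input, each MLP weight is used in exactly one multiplication, while each convolution kernel weight is reused at every output position.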

Comparisons of FPGAs
- Compared with general-purpose processors (GPPs), FPGAs perform more efficiently.
- Compared with ASICs, FPGAs are more flexible, and cheaper and easier to produce.
- Compared with GPUs, FPGAs allow more freedom to explore algorithm-level optimizations, but require longer compilation times.
- Researchers and application scientists tend to prefer a software design experience, which has matured to support a large assortment of abstractions and conveniences that increase programmer productivity.

OpenCL
- Programs written in OpenCL can be executed transparently on GPPs, GPUs, DSPs, and FPGAs. Similar to CUDA, OpenCL provides a standard framework for parallel programming, as well as low-level access to hardware.
- CUDA, created by NVIDIA, is the current choice for most popular deep learning tools, while OpenCL, maintained by the Khronos Group, is an open, royalty-free standard that can achieve performance similar to CUDA.
- Although OpenCL is flexible, not every supported platform is guaranteed to support every OpenCL function; FPGAs are one example.

Compilation time
- OpenCL kernel compilation for both Altera and Xilinx FPGAs takes on the order of tens of minutes to hours, whereas compiling generic OpenCL kernels for GPPs/GPUs takes on the order of milliseconds to seconds.
- CUDA employs a just-in-time compilation approach, combined with one-time offline compilation of commonly used deep learning kernels.
- Both Altera and Xilinx support integrated kernel profiling, debugging, and optimization in their OpenCL tools.

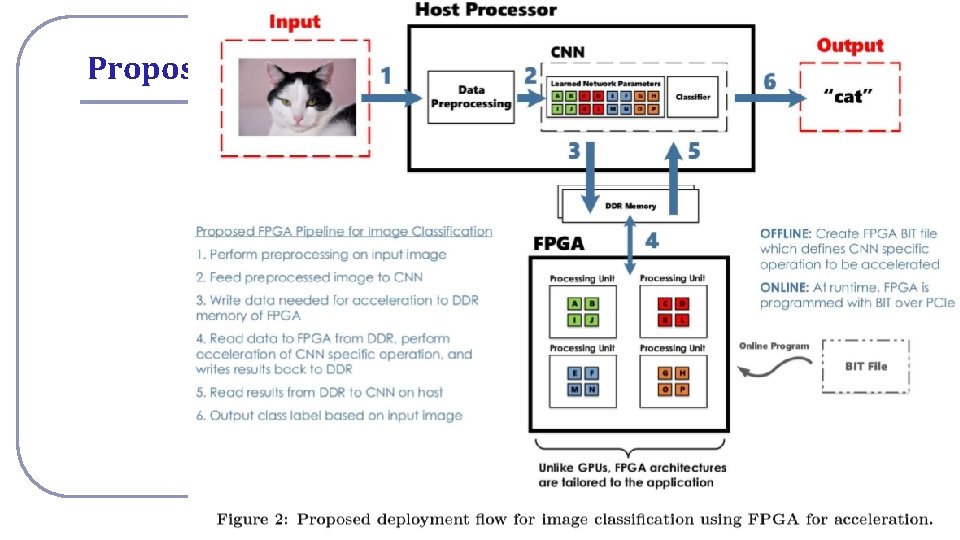

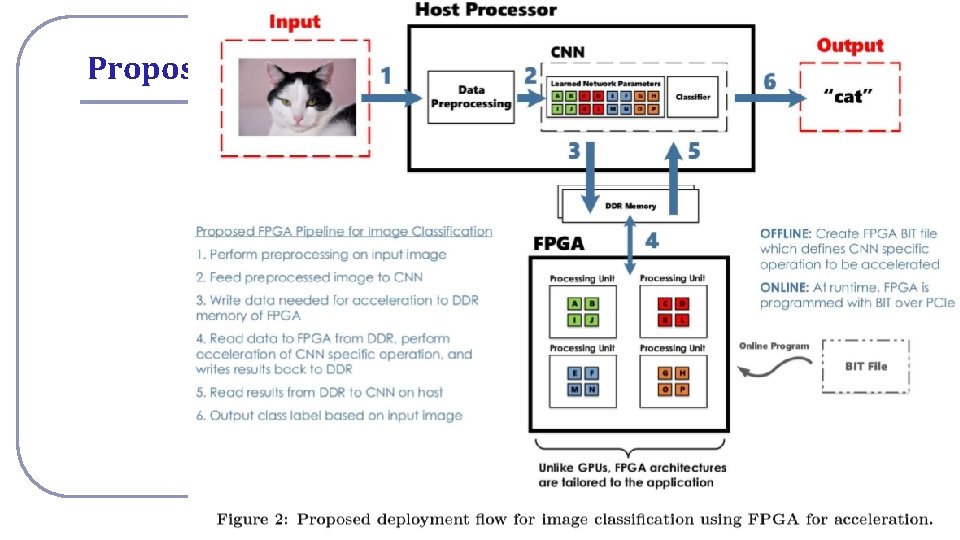

Proposed deployment flow

CNN on FPGAs
- Design size is one of the most limiting factors for hardware realizations of deep learning techniques on FPGAs; therefore, modern FPGAs continue to exploit smaller feature (transistor) sizes to increase density.
- State-of-the-art forward-propagation performance for CNNs on FPGAs was achieved by a team at Microsoft, which reported a throughput of 134 images/second on the ImageNet 1K dataset.
- This is projected to increase with top-of-the-line FPGAs: an estimated throughput of roughly 233 images/second on an Arria 10 GX 1150, while consuming roughly the same power (25 W).

CNN on FPGAs
- On the other hand, high-performing GPU implementations (Caffe + cuDNN) achieve 500 to 824 images/second while consuming 235 W.
- An experimental project that integrates FPGAs into datacenter applications has claimed to improve large-scale search engine performance by a factor of 2.
- Since pre-trained CNNs are algorithmically simple and computationally efficient, most FPGA efforts have focused on accelerating the forward propagation of these models and reporting the achieved throughput. However, accelerating backward propagation on FPGAs is also an area of interest.
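The throughput and power figures for FPGAs and GPUs can be put on a common footing by dividing throughput by power, which gives images per joule ((images/second) / W). The short sketch below does only that arithmetic. It assumes, as stated for these results, about 25 W for both FPGA figures and 235 W across the whole GPU throughput range. It ignores differences in network, batch size, and measurement method, so the ratios are only indicative.

```python
def images_per_joule(images_per_second, watts):
    """Energy efficiency: (images/s) / W = images per joule."""
    return images_per_second / watts


# Throughput (images/s) and power (W) as quoted for CNN forward propagation;
# power is assumed constant for each platform.
platforms = {
    "FPGA, Microsoft (reported)": (134, 25),
    "FPGA, Arria 10 GX 1150 (projected)": (233, 25),
    "GPU, Caffe + cuDNN (low end)": (500, 235),
    "GPU, Caffe + cuDNN (high end)": (824, 235),
}

if __name__ == "__main__":
    for name, (ips, watts) in platforms.items():
        print(f"{name}: {images_per_joule(ips, watts):.2f} images/J")
```

This gives about 5.36 and 9.32 images/J for the two FPGA figures, versus about 2.13 to 3.51 images/J for the GPU figures. The GPUs have higher raw throughput, but the FPGA results come out ahead on performance per watt.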

Increasing Degrees of Freedom for Training
- While one may expect the process of training machine learning algorithms to be fully autonomous, in practice there are tunable hyper-parameters that need to be adjusted.
- The number of training iterations, the learning rate, the mini-batch size, the number of hidden units, and the number of layers are all examples of hyper-parameters.
- Traditionally, hyper-parameters have been set by experience, or systematically by grid search or, more effectively, random search. Very recently, researchers have turned to adaptive methods, which exploit the results of previous hyper-parameter attempts. Among these, Bayesian optimization is the most popular.
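Random search, named above as a more effective alternative to grid search, can be sketched in a few lines. This is a minimal illustration, not the paper's method. `toy_validation_loss` is a hypothetical stand-in for "train a model with these hyper-parameters and return its validation loss". The search ranges (a log-uniform learning rate in [1e-4, 1e-1] and a hidden-layer width from a small candidate set) are also assumptions. Bayesian optimization would replace the independent random sampling with a model that proposes each new trial based on earlier results.

```python
import math
import random


def toy_validation_loss(learning_rate, hidden_units):
    """Hypothetical objective standing in for a full training run.

    Lowest (0.0) at learning_rate = 1e-2 and hidden_units = 128.
    """
    return (math.log10(learning_rate) + 2.0) ** 2 + ((hidden_units - 128) / 64.0) ** 2


def random_search(objective, num_trials, seed=0):
    """Evaluate num_trials independently sampled configurations; keep the best."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params, best_loss = None, math.inf
    for _ in range(num_trials):
        params = {
            # Log-uniform sampling spreads trials evenly across orders of magnitude.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "hidden_units": rng.choice([32, 64, 128, 256, 512]),
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


if __name__ == "__main__":
    params, loss = random_search(toy_validation_loss, num_trials=50, seed=1)
    print(params, round(loss, 4))
```

Each trial is independent of the others, so in practice the trials can be run in parallel on separate accelerators.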

Increasing Degrees of Freedom for Training
- Current training procedures that use fixed architectures are somewhat limited in their ability to grow the set of possible models.
- The flexible architecture of FPGAs, however, may be better suited to these types of optimizations, since a completely different hardware structure can be programmed and accelerated at runtime.
- To learn complex high-level features in data, or to increase performance, current solutions use clusters of interconnected GPUs together with MPI, which provides high levels of parallel computing power and fast data transfer between nodes.
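A key operation in such MPI-based clusters is combining the gradients computed on every node, typically with an all-reduce collective (MPI provides `MPI_Allreduce`). The plain-Python simulation below sketches one well-known way to implement it, the ring all-reduce. It is not taken from the paper, and real MPI libraries choose among several algorithms. Each simulated node's buffer is split into as many chunks as there are nodes. A reduce-scatter phase passes partial sums around the ring until each node owns one fully summed chunk. An all-gather phase then circulates those finished chunks so that every node ends with the complete sum.

```python
def ring_allreduce(buffers):
    """Simulate a sum all-reduce over a ring of len(buffers) nodes.

    Every buffer must have the same length, divisible by the number of
    nodes. Returns new buffers; every node ends with the element-wise sum.
    """
    p = len(buffers)
    n = len(buffers[0])
    if any(len(b) != n for b in buffers) or n % p != 0:
        raise ValueError("buffers must share a length divisible by the node count")
    size = n // p
    bufs = [list(b) for b in buffers]  # copy so the inputs are not modified

    def chunk(j):
        """Indices of chunk j."""
        return range(j * size, (j + 1) * size)

    def step(chunk_for_sender, accumulate):
        # All sends in a step happen "simultaneously": snapshot them first.
        msgs = []
        for i in range(p):
            j = chunk_for_sender(i)
            msgs.append(((i + 1) % p, j, [bufs[i][k] for k in chunk(j)]))
        # Node i always sends to its ring neighbour (i + 1) % p.
        for dst, j, data in msgs:
            for k, v in zip(chunk(j), data):
                bufs[dst][k] = bufs[dst][k] + v if accumulate else v

    # Phase 1, reduce-scatter: after p - 1 steps node i holds the full sum
    # of chunk (i + 1) % p.
    for s in range(p - 1):
        step(lambda i: (i - s) % p, accumulate=True)
    # Phase 2, all-gather: forward the finished chunks around the ring.
    for s in range(p - 1):
        step(lambda i: (i + 1 - s) % p, accumulate=False)
    return bufs


if __name__ == "__main__":
    nodes = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
    print(ring_allreduce(nodes))
```

In the ring version, each node sends and receives 2(p - 1) chunks of size n/p. Per-node traffic therefore stays close to 2n values regardless of the number of nodes, which is why this pattern suits bandwidth-limited interconnects.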

Low Power Compute Clusters
- As large-scale applications become increasingly heterogeneous, FPGAs may prove to be a superior alternative to GPUs.
- To implement this, we first gather all the rules located at the leaf nodes below this node, then program a Bloom filter with these rules for each field.
- Integrating FPGAs into popular deep learning frameworks is now possible, providing architectural freedom for exploration and research.