Deep Learning of RDF rules Semantic Machine Learning

- Slides: 22

Bassem Makni, SML 16

Outline

- Deep Learning motivation
- Semantic Reasoning
- Deep learning of RDFS rules
- Results
- Discussion

www.rpi.edu

Deep Learning motivation

After a few decades of low popularity relative to other machine learning methods, neural networks are trending again with the deep learning movement. Whereas other machine learning techniques rely on selecting pertinent features of the data, deep neural networks learn the best features for each task.

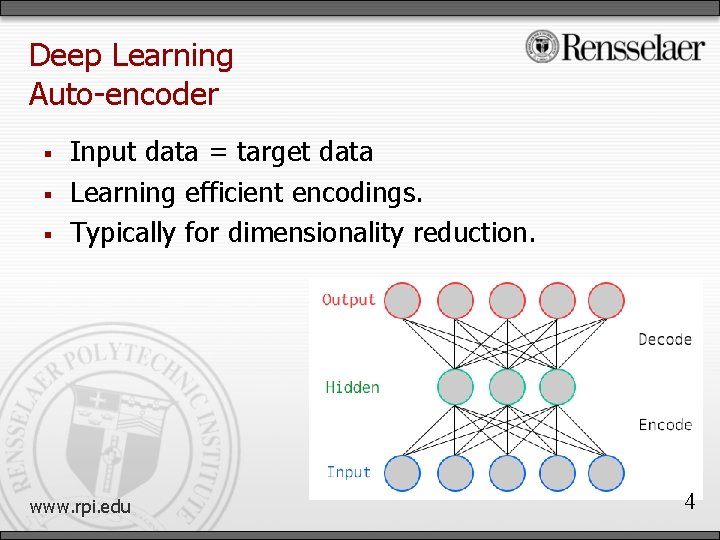

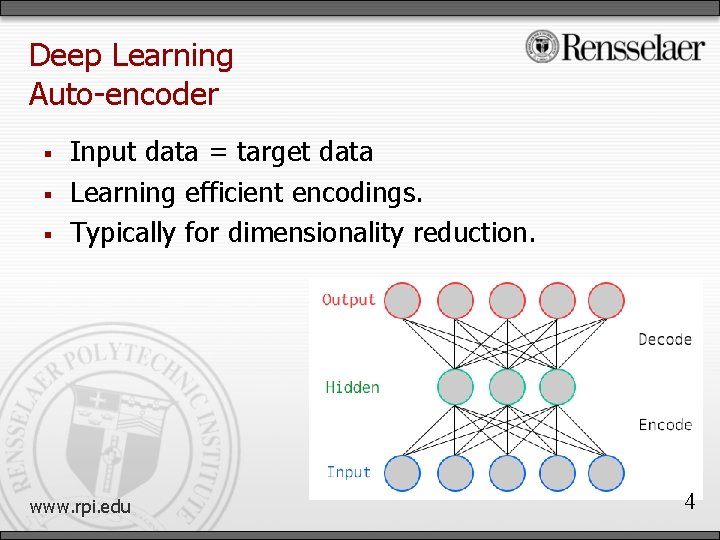

Deep Learning: Auto-encoder

- Input data = target data.
- Learns efficient encodings, typically for dimensionality reduction.

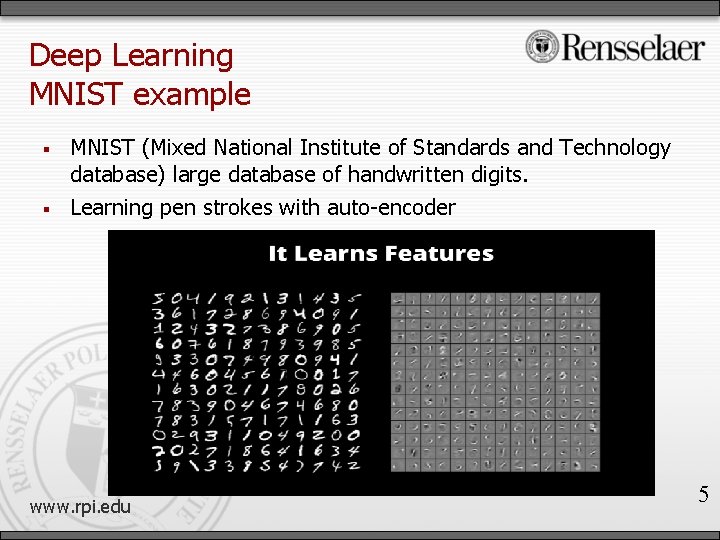

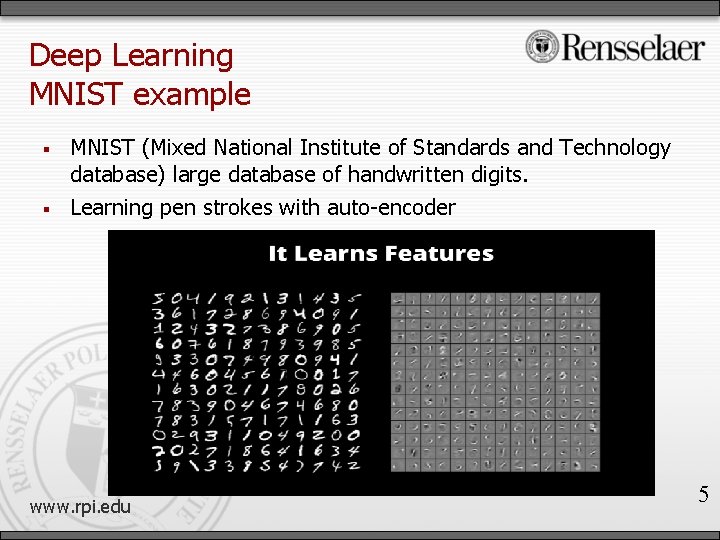
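To make "input data = target data" concrete, here is a minimal sketch in plain Python. It assumes a toy *linear* auto-encoder, not the networks pictured on the slides: 2-D points lying on the line x2 = 2 * x1 are squeezed into a single number (the code) and then reconstructed, and the loss compares the reconstruction against the input itself. The data, learning rate and epoch count are illustrative choices.

```python
# Toy data (hypothetical): 2-D points on the line x2 = 2 * x1, so a 1-D code suffices.
DATA = [(t, 2.0 * t) for t in (-1.0, -0.5, 0.5, 1.0)]


def reconstruct(w, v, x):
    """Encode x into a 1-D code h, then decode h back into 2-D."""
    h = w[0] * x[0] + w[1] * x[1]
    return h, (v[0] * h, v[1] * h)


def mse(w, v, data):
    """Mean squared reconstruction error: the input itself is the target."""
    total = 0.0
    for x in data:
        _, r = reconstruct(w, v, x)
        total += (r[0] - x[0]) ** 2 + (r[1] - x[1]) ** 2
    return total / len(data)


def train_linear_autoencoder(data, lr=0.05, epochs=500):
    w = [0.1, 0.1]  # encoder weights (2 -> 1)
    v = [0.1, 0.1]  # decoder weights (1 -> 2)
    initial = mse(w, v, data)
    n = len(data)
    for _ in range(epochs):
        grad_w, grad_v = [0.0, 0.0], [0.0, 0.0]
        for x in data:
            h, r = reconstruct(w, v, x)
            err = (r[0] - x[0], r[1] - x[1])
            d_h = 2.0 * (err[0] * v[0] + err[1] * v[1])  # dLoss/dh
            for i in range(2):
                grad_v[i] += 2.0 * err[i] * h / n
                grad_w[i] += d_h * x[i] / n
        for i in range(2):  # plain gradient-descent step
            w[i] -= lr * grad_w[i]
            v[i] -= lr * grad_v[i]
    return initial, mse(w, v, data), w, v


initial_loss, final_loss, w, v = train_linear_autoencoder(DATA)
print(f"MSE before: {initial_loss:.4f}, after: {final_loss:.6f}")
```

Because the code has fewer dimensions than the input, a low reconstruction error means the network found a compact encoding, which is the dimensionality-reduction use mentioned above.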

Deep Learning: MNIST example

MNIST (Modified National Institute of Standards and Technology database) is a large database of handwritten digits. Here an auto-encoder learns pen strokes.

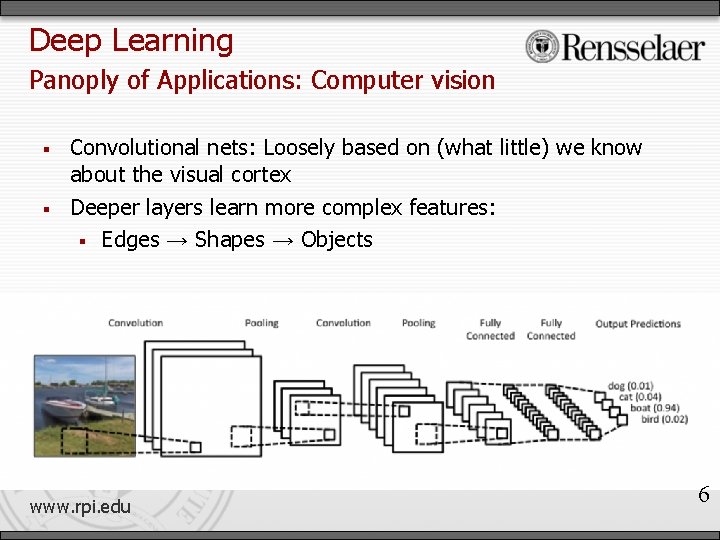

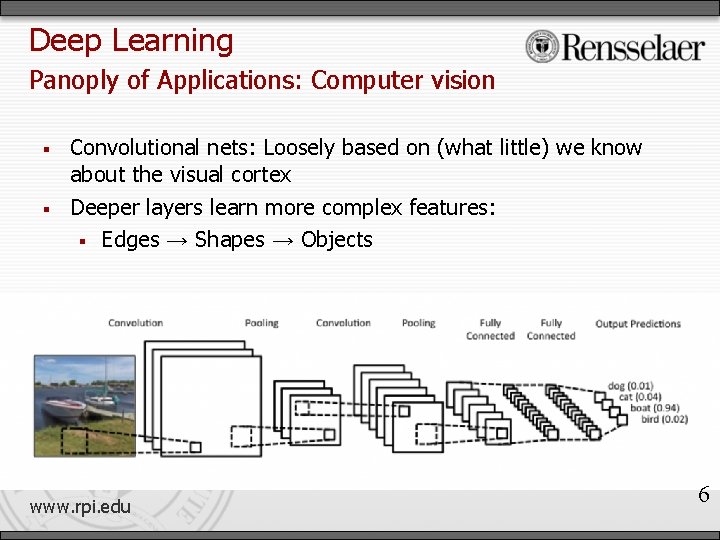

Deep Learning: Panoply of Applications (Computer vision)

Convolutional nets are loosely based on what little we know about the visual cortex. Deeper layers learn more complex features: Edges → Shapes → Objects.

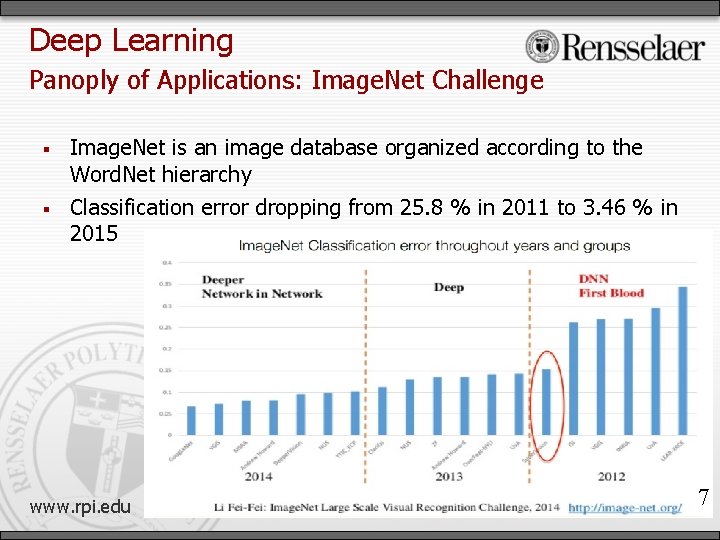

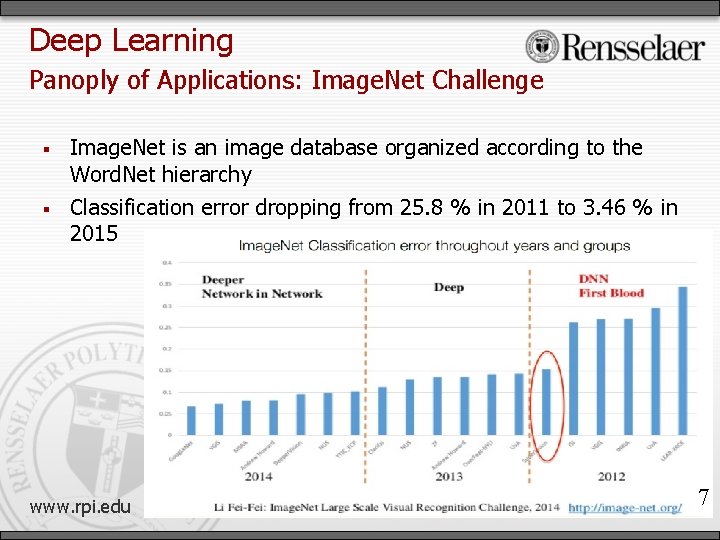

Deep Learning: Panoply of Applications (ImageNet Challenge)

ImageNet is an image database organized according to the WordNet hierarchy. Classification error dropped from 25.8% in 2011 to 3.46% in 2015.

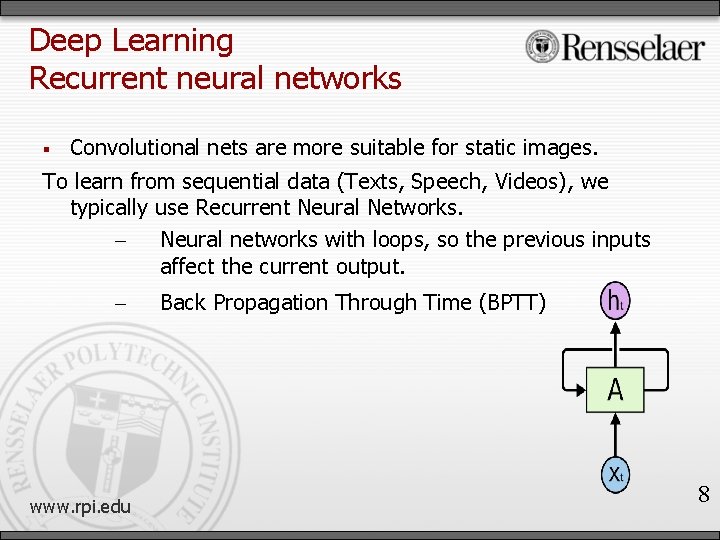

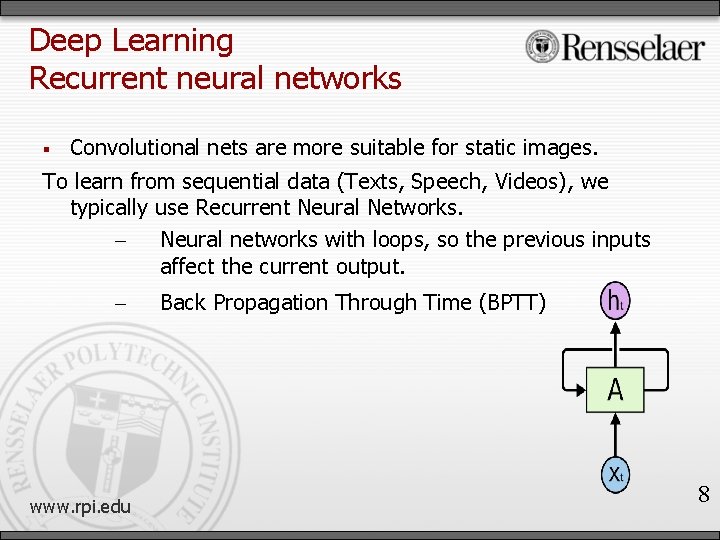

Deep Learning: Recurrent neural networks

Convolutional nets are better suited to static images. To learn from sequential data (texts, speech, videos), we typically use recurrent neural networks (RNNs):

- Neural networks with loops, so that previous inputs affect the current output.
- Trained with Back Propagation Through Time (BPTT).

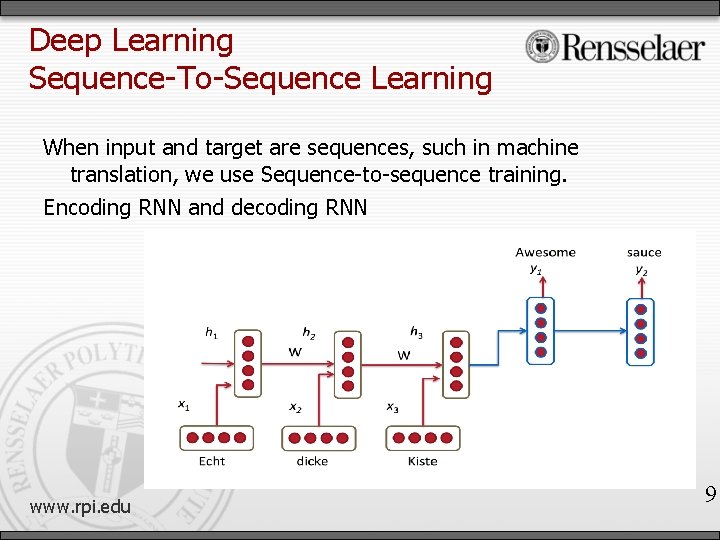

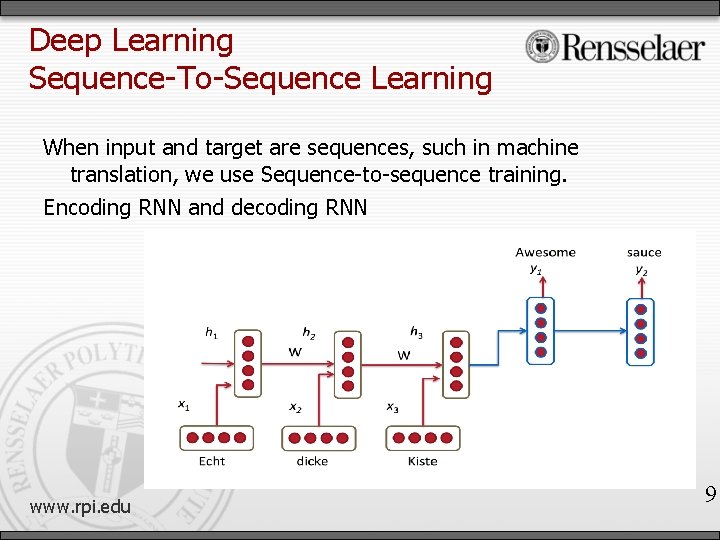
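The "loop" can be illustrated with a one-unit Elman-style RNN forward pass. This is a minimal sketch with hypothetical scalar weights (`w_x`, `w_h`, `b`); it shows only the forward computation. BPTT would unroll this same loop over the time steps and backpropagate through the unrolled copies, which is not implemented here.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0):
    """Run a one-unit recurrent network over a sequence of scalars.

    The hidden state h is fed back at every step (the loop), so earlier
    inputs keep influencing later outputs.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        outputs.append(h)
    return outputs


# Same last two inputs, different first input: the final outputs differ.
print(rnn_forward([1.0, 0.0, 0.0])[-1])
print(rnn_forward([0.0, 0.0, 0.0])[-1])
```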

Deep Learning: Sequence-to-sequence learning

When both input and target are sequences, as in machine translation, we use sequence-to-sequence training: an encoding RNN reads the input sequence and a decoding RNN produces the target sequence.

Rules Learning: Main Idea

- Input: (a, transitive_property, b), (b, transitive_property, c)
- Target: (a, transitive_property, c)

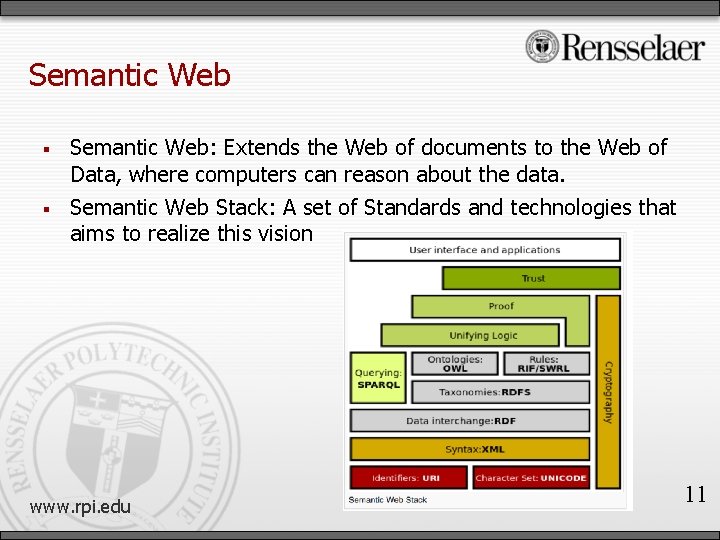

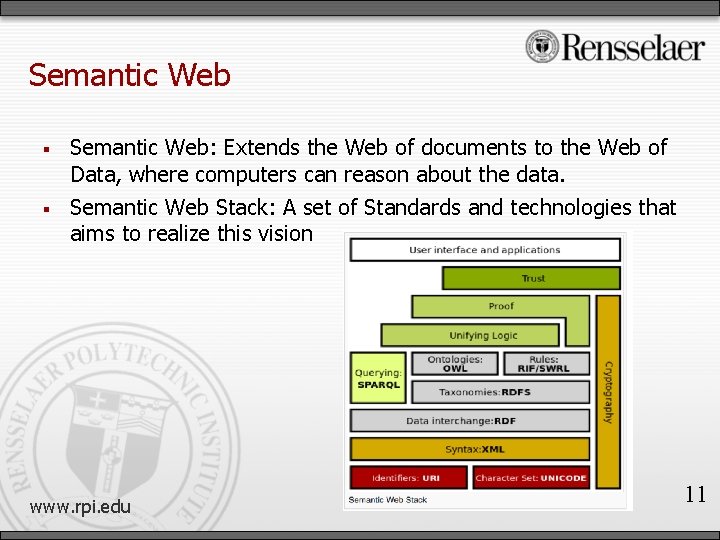
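Framed this way, rule learning becomes ordinary sequence-to-sequence training data: the two premise triples are flattened into one input sequence and the entailed triple is the target sequence. The sketch below builds such pairs for one transitive property; the function name `transitive_samples` is hypothetical and only illustrates the input/target format on this slide.

```python
from collections import defaultdict
from typing import List, Tuple

Triple = Tuple[str, str, str]


def transitive_samples(triples: List[Triple], prop: str) -> List[Tuple[List[str], List[str]]]:
    """Build (input, target) token sequences for one transitive property."""
    objects_of = defaultdict(list)  # subject -> objects reached via prop
    for s, p, o in triples:
        if p == prop:
            objects_of[s].append(o)
    samples = []
    for s, p, o in triples:
        if p != prop:
            continue
        for o2 in objects_of.get(o, []):  # chain: s -prop-> o -prop-> o2
            source = [s, prop, o, o, prop, o2]  # two premise triples, flattened
            target = [s, prop, o2]              # the entailed triple
            samples.append((source, target))
    return samples


graph = [("a", "transitive_property", "b"), ("b", "transitive_property", "c")]
for source, target in transitive_samples(graph, "transitive_property"):
    print(source, "->", target)
```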

Semantic Web

- Semantic Web: extends the Web of documents to the Web of Data, where computers can reason about the data.
- Semantic Web Stack: a set of standards and technologies that aims to realize this vision.

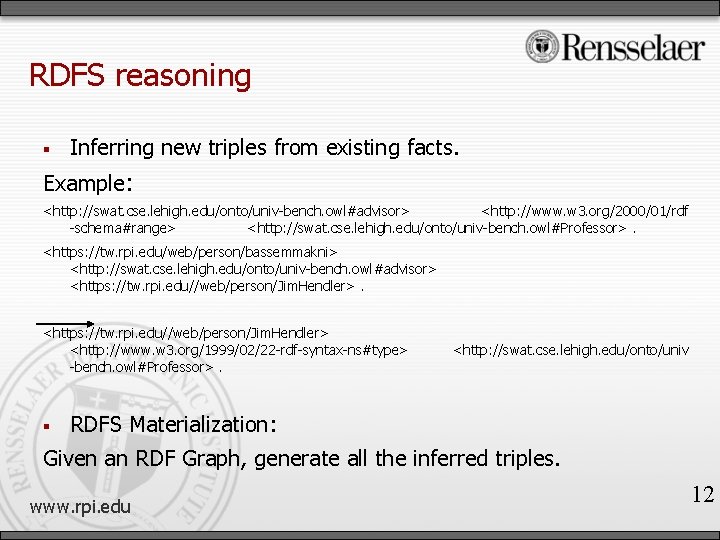

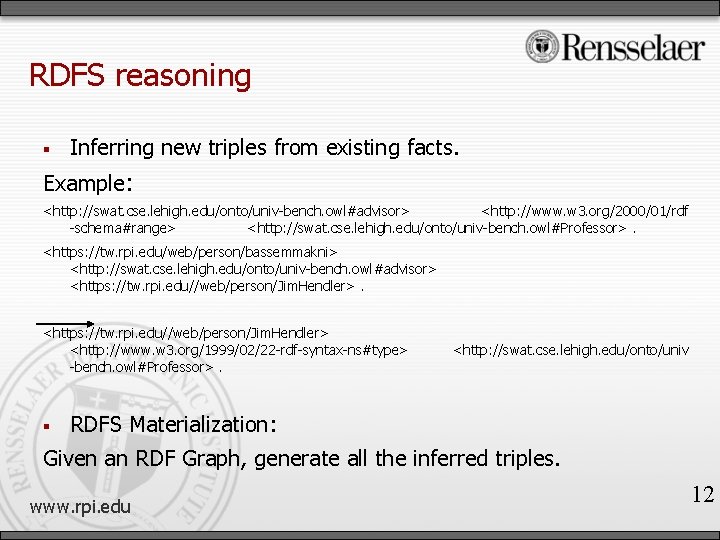

RDFS reasoning

Inferring new triples from existing facts. Example:

- Schema: <http://swat.cse.lehigh.edu/onto/univ-bench.owl#advisor> <http://www.w3.org/2000/01/rdf-schema#range> <http://swat.cse.lehigh.edu/onto/univ-bench.owl#Professor> .
- Fact: <https://tw.rpi.edu/web/person/bassemmakni> <http://swat.cse.lehigh.edu/onto/univ-bench.owl#advisor> <https://tw.rpi.edu//web/person/JimHendler> .
- Inferred: <https://tw.rpi.edu//web/person/JimHendler> <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://swat.cse.lehigh.edu/onto/univ-bench.owl#Professor> .

Because the range of advisor is Professor, the object of any advisor triple is inferred to be a Professor (RDFS entailment rule rdfs3).

RDFS Materialization: given an RDF graph, generate all the inferred triples.

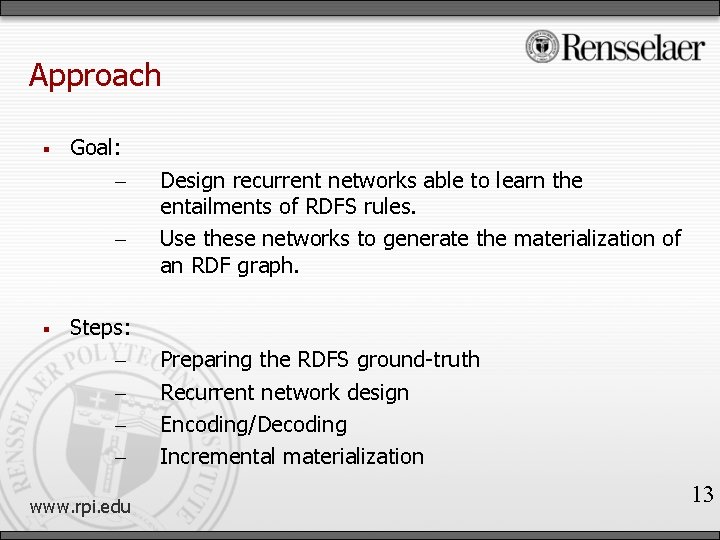
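The entailment in this example is RDFS rule rdfs3. For reference, here is a hand-written symbolic version of that single rule, i.e. what the network is meant to learn, not the network itself. It is a sketch that stores a graph as a Python set of (subject, predicate, object) strings and, as a simplification, does not distinguish literals from resources.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
RDFS_RANGE = "http://www.w3.org/2000/01/rdf-schema#range"
UB = "http://swat.cse.lehigh.edu/onto/univ-bench.owl#"


def apply_rdfs3(graph):
    """rdfs3: (p rdfs:range C) and (s p o) entail (o rdf:type C).

    Simplification: literals are not distinguished from resources here.
    """
    ranges = {}  # property -> set of range classes
    for s, p, o in graph:
        if p == RDFS_RANGE:
            ranges.setdefault(s, set()).add(o)
    inferred = set()
    for s, p, o in graph:
        for cls in ranges.get(p, ()):
            inferred.add((o, RDF_TYPE, cls))
    return inferred - graph  # keep only genuinely new triples


graph = {
    (UB + "advisor", RDFS_RANGE, UB + "Professor"),
    ("https://tw.rpi.edu/web/person/bassemmakni", UB + "advisor",
     "https://tw.rpi.edu//web/person/JimHendler"),
}
print(apply_rdfs3(graph))
```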

Approach

Goal:

- Design recurrent networks able to learn the entailments of RDFS rules.
- Use these networks to generate the materialization of an RDF graph.

Steps:

- Preparing the RDFS ground truth
- Recurrent network design
- Encoding/decoding
- Incremental materialization

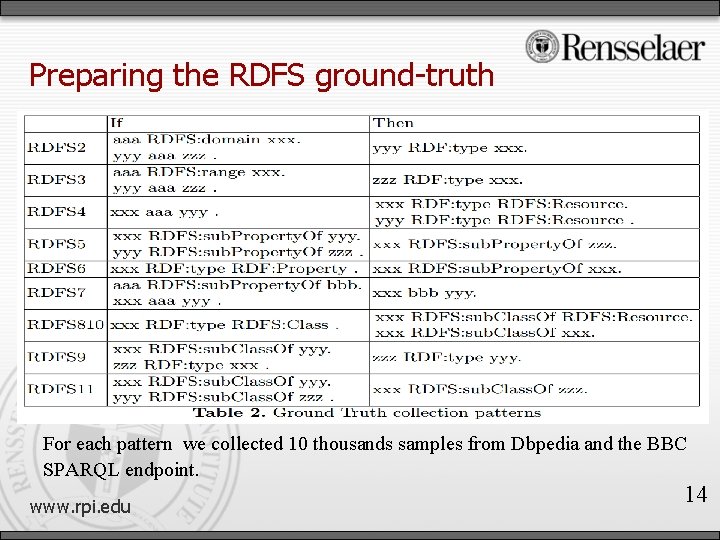

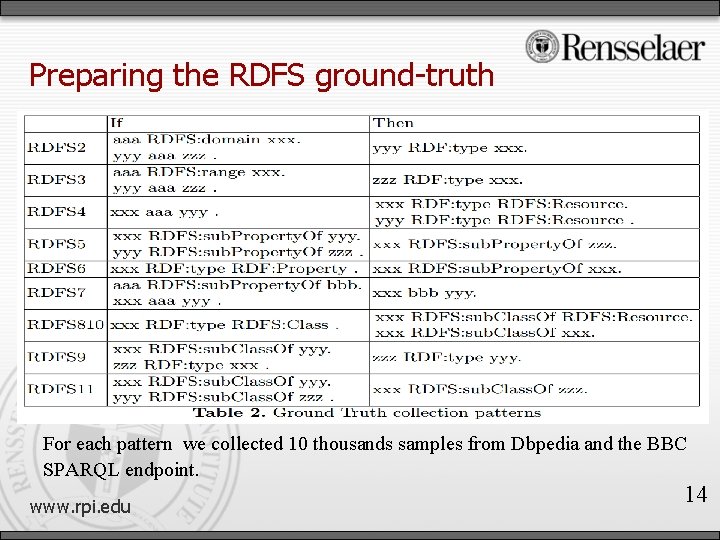

Preparing the RDFS ground truth

For each RDFS rule pattern, we collected 10,000 samples from the DBpedia and BBC SPARQL endpoints.

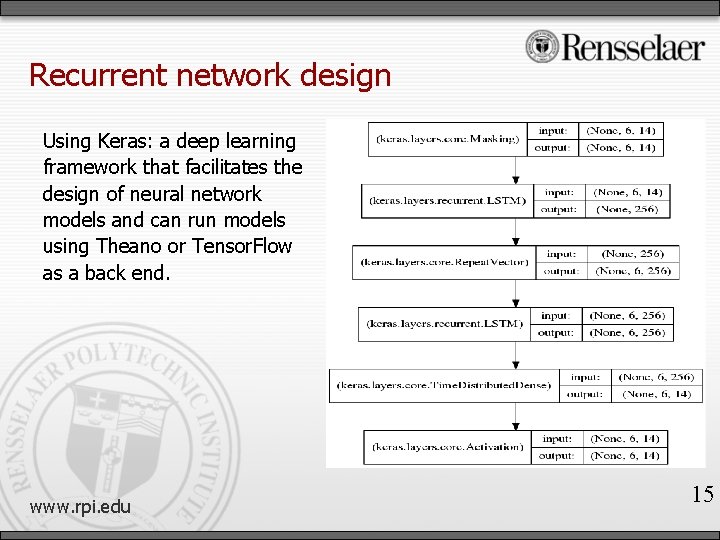

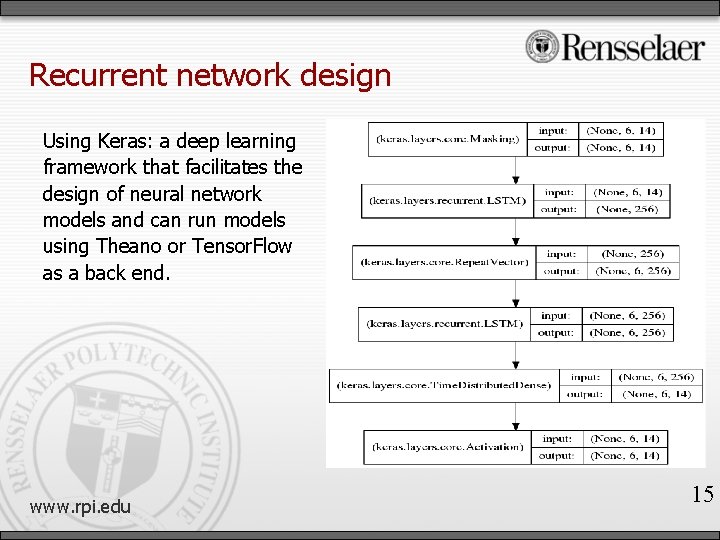
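The slides do not show the collection queries. As a hypothetical reconstruction, samples for a rule pattern can be gathered with one SPARQL `SELECT` per rule that matches the rule's premises and caps the result at 10,000 rows. The pattern dictionary below covers three standard RDFS rules (rdfs2, rdfs3, rdfs9) and is an illustrative assumption; the resulting query text could then be submitted to a SPARQL endpoint with any SPARQL client.

```python
# Hypothetical premise patterns for three standard RDFS entailment rules.
RDFS_PATTERNS = {
    "rdfs2": "?p rdfs:domain ?c . ?s ?p ?o .",
    "rdfs3": "?p rdfs:range ?c . ?s ?p ?o .",
    "rdfs9": "?c1 rdfs:subClassOf ?c2 . ?s rdf:type ?c1 .",
}

PREFIXES = (
    "PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>\n"
    "PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>\n"
)


def sample_query(rule: str, limit: int = 10000) -> str:
    """Build a SPARQL SELECT returning up to `limit` matches of one rule's premises."""
    return f"{PREFIXES}SELECT * WHERE {{ {RDFS_PATTERNS[rule]} }}\nLIMIT {limit}"


print(sample_query("rdfs3"))
```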

Recurrent network design

We use Keras, a deep learning framework that facilitates the design of neural network models and can run them with Theano or TensorFlow as a back end.

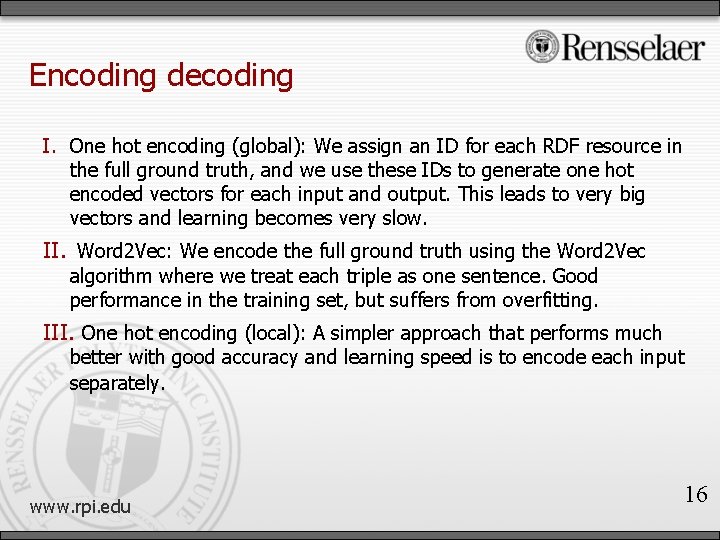

Encoding/decoding

I. One-hot encoding (global): we assign an ID to each RDF resource in the full ground truth and use these IDs to generate one-hot encoded vectors for each input and output. This leads to very large vectors (one dimension per resource), and learning becomes very slow.

II. Word2Vec: we encode the full ground truth with the Word2Vec algorithm, treating each triple as one sentence. This performs well on the training set but suffers from overfitting.

III. One-hot encoding (local): a simpler approach is to encode each input separately. It performs much better, with good accuracy and learning speed.

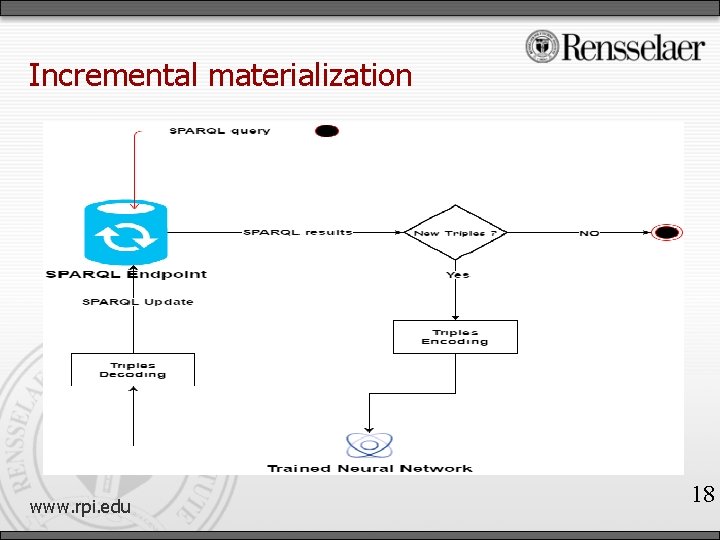

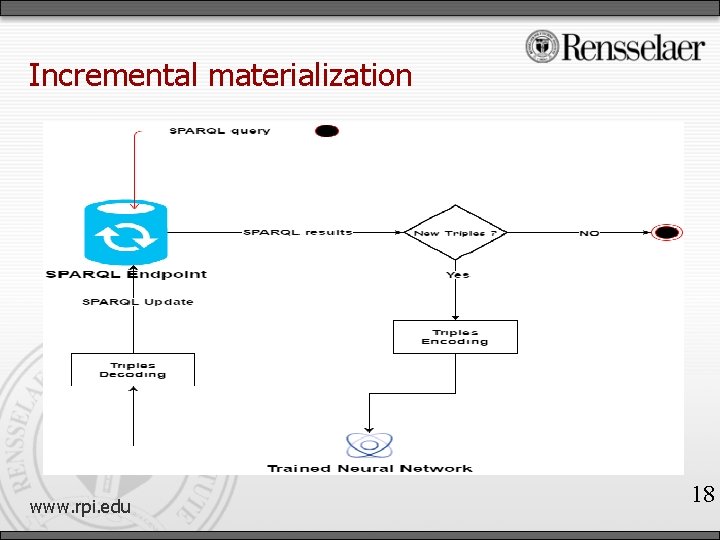
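One possible reading of local one-hot encoding is sketched below: IDs are assigned per sample rather than over the whole ground truth, so the vector size depends only on how many distinct terms one sample can contain, not on the size of the graph. The reserved IDs for RDF/RDFS vocabulary terms (needed because a conclusion such as `rdf:type` never appears in an rdfs3 premise) and the `MAX_LOCAL` bound are assumptions of this sketch, and the prefixed names are illustrative.

```python
from typing import Dict, List

# Assumption: RDF/RDFS vocabulary terms keep fixed IDs so that conclusion terms
# missing from the premises (e.g. rdf:type in rdfs3) can still be decoded.
RESERVED = ["rdf:type", "rdfs:domain", "rdfs:range", "rdfs:subClassOf", "rdfs:subPropertyOf"]
MAX_LOCAL = 6  # two premise triples contain at most 6 other distinct terms
VECTOR_SIZE = len(RESERVED) + MAX_LOCAL


def build_local_ids(input_terms: List[str]) -> Dict[str, int]:
    """Assign IDs per sample: reserved terms first, then terms in order of appearance."""
    ids = {term: i for i, term in enumerate(RESERVED)}
    for term in input_terms:
        if term not in ids:
            ids[term] = len(ids)
    if len(ids) > VECTOR_SIZE:
        raise ValueError("too many distinct terms for one sample")
    return ids


def one_hot(index: int) -> List[int]:
    vec = [0] * VECTOR_SIZE
    vec[index] = 1
    return vec


def encode(terms: List[str], ids: Dict[str, int]) -> List[List[int]]:
    return [one_hot(ids[t]) for t in terms]


def decode(vectors: List[List[float]], ids: Dict[str, int]) -> List[str]:
    """Map each (possibly soft) output vector back to its highest-scoring term."""
    by_id = {i: t for t, i in ids.items()}
    return [by_id[vec.index(max(vec))] for vec in vectors]


premises = ["ub:advisor", "rdfs:range", "ub:Professor", "ex:bassem", "ub:advisor", "ex:jim"]
conclusion = ["ex:jim", "rdf:type", "ub:Professor"]
ids = build_local_ids(premises)
x, y = encode(premises, ids), encode(conclusion, ids)
print(len(x), "input vectors of size", VECTOR_SIZE)
print(decode(y, ids))
```

The dictionary `ids` must be kept per sample so that the network's output vectors can be decoded back into that sample's terms.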

Incremental materialization

After training our network on the DBpedia+BBC ground truth, we use it to materialize the LUBM graph. LUBM is a benchmark ontology describing the concepts and relations of the academic world.

Algorithm:

1) Run SPARQL queries against an RDF store hosting the LUBM graph to collect all instances of the rule patterns.
2) Encode the collected triples.
3) Use the trained network to generate encodings of new triples.
4) Decode the output to obtain RDF triples.
5) Insert the generated triples back into the RDF store.

We repeat these steps until no new inferences are generated.

Incremental materialization

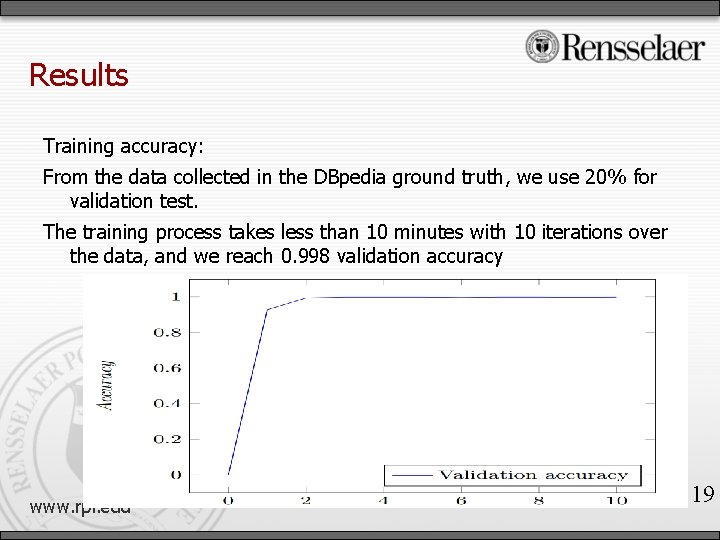

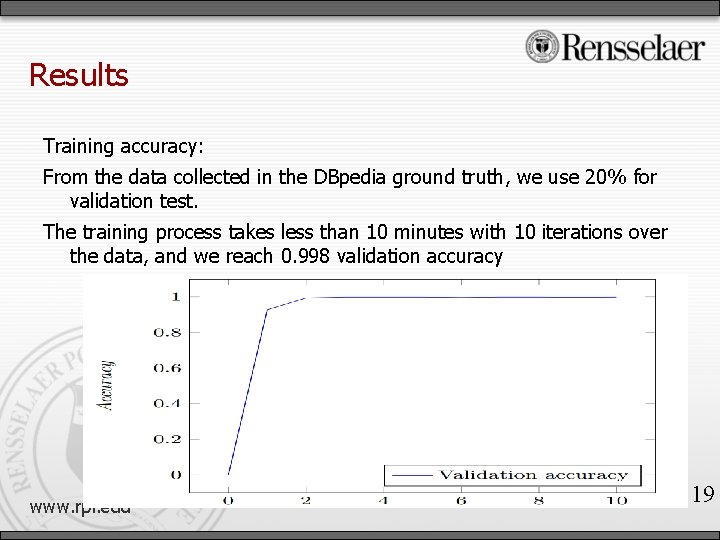
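The outer loop of this algorithm is a fixed-point iteration. The sketch below assumes a hypothetical `stand_in_inference` function that plays the role of steps 1 to 4 (collect, encode, predict, decode) by applying two RDFS rules symbolically instead of calling a trained network; the set union plays the role of step 5. The toy graph is illustrative, not LUBM.

```python
RDF_TYPE = "rdf:type"
RANGE = "rdfs:range"
SUBCLASS = "rdfs:subClassOf"


def stand_in_inference(graph):
    """Hypothetical stand-in for steps 1-4 (collect, encode, predict, decode).

    Applies rdfs3 and rdfs9 symbolically instead of calling a trained network.
    """
    ranges = {(s, o) for s, p, o in graph if p == RANGE}
    subclasses = {(s, o) for s, p, o in graph if p == SUBCLASS}
    out = set()
    for s, p, o in graph:
        out |= {(o, RDF_TYPE, c) for prop, c in ranges if prop == p}  # rdfs3
        if p == RDF_TYPE:
            out |= {(s, RDF_TYPE, sup) for sub, sup in subclasses if sub == o}  # rdfs9
    return out


def materialize(graph, infer):
    """Repeat inference and insertion (step 5) until no new triples appear."""
    graph = set(graph)
    iterations = 0
    while True:
        new = infer(graph) - graph
        if not new:
            return graph, iterations
        graph |= new
        iterations += 1


toy_graph = {
    ("ub:advisor", RANGE, "ub:Professor"),
    ("ub:Professor", SUBCLASS, "ub:Faculty"),
    ("ub:Faculty", SUBCLASS, "ub:Employee"),
    ("ex:bassem", "ub:advisor", "ex:jim"),
}
closed, rounds = materialize(toy_graph, stand_in_inference)
print(rounds, "iterations,", len(closed) - len(toy_graph), "inferred triples")
```

Each iteration can only use triples inferred in earlier iterations, which is why chains of rules (here range, then two subclass steps) need several rounds before the graph stops growing.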

Results

Training accuracy: we hold out 20% of the data collected for the DBpedia ground truth for validation. Training takes less than 10 minutes for 10 iterations over the data, and we reach 0.998 validation accuracy.

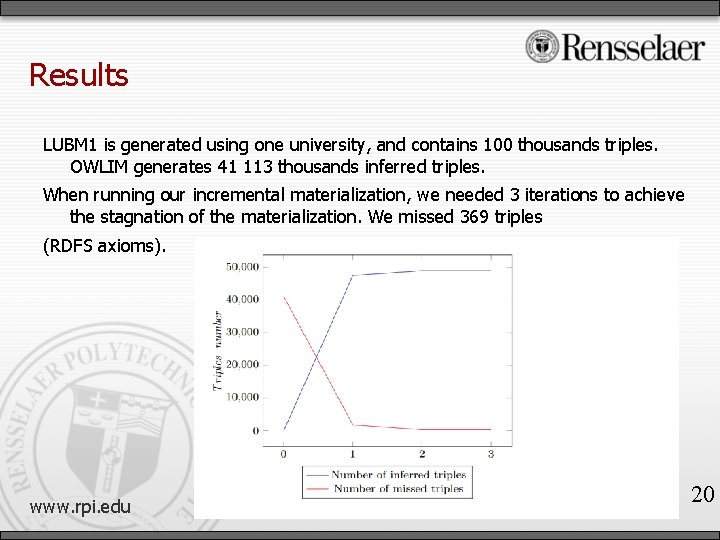

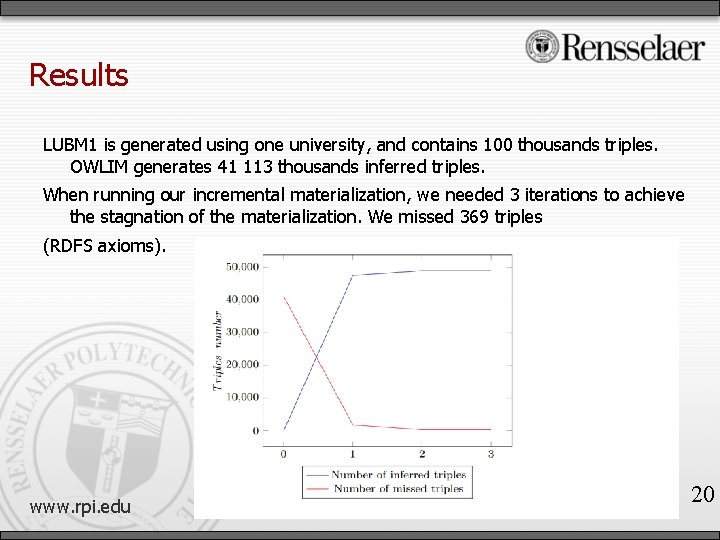

Results

LUBM 1 is generated using one university and contains 100 thousand triples. OWLIM generates 41 113 thousands inferred triples. Our incremental materialization needed 3 iterations to reach a fixed point, where no new triples are inferred. We missed 369 triples (RDFS axioms).

Conclusions

Our prototype shows that sequence-to-sequence neural networks can learn RDFS rules and be used for RDF graph materialization. Advantages of our approach:

- Our algorithm is natively parallel and can profit from advances in deep learning frameworks and GPU speeds.
- Deployment on neuromorphic chips: our lab is in the process of getting access to the IBM TrueNorth chip, and we plan to run our reasoner on it.
