Deep Learning in NLP: Word Representation and How to Use It for Parsing
For Sig Group Seminar Talk
Wenyi Huang, harrywy@gmail.com

Existing NLP Applications
• Language Modeling
• Speech Recognition
• Machine Translation
• Part-of-Speech Tagging
• Chunking
• Named Entity Recognition
• Semantic Role Labeling
• Sentiment Analysis
• Paraphrasing
• Question Answering
• Word-Sense Disambiguation

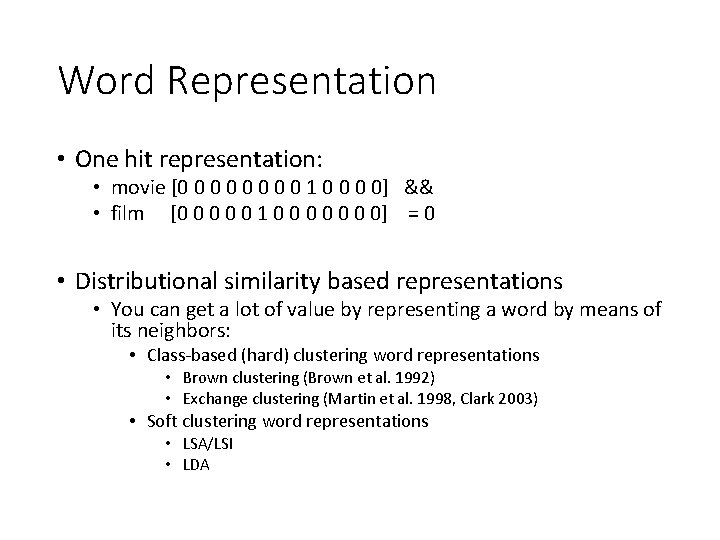

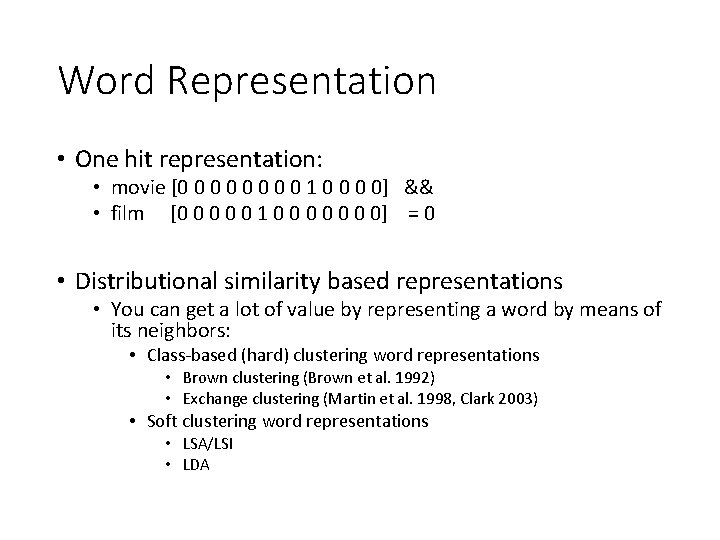

Word Representation
• One-hot representation: each word is a vector with a single 1, so any two different words are orthogonal and look completely unrelated:
  • movie [0 0 0 0 1 0 0 0] AND film [0 0 0 1 0 0 0 0] = 0
• Distributional similarity based representations
  • You can get a lot of value by representing a word by means of its neighbors:
  • Class-based (hard) clustering word representations
    • Brown clustering (Brown et al., 1992)
    • Exchange clustering (Martin et al., 1998; Clark, 2003)
  • Soft clustering word representations
    • LSA/LSI
    • LDA

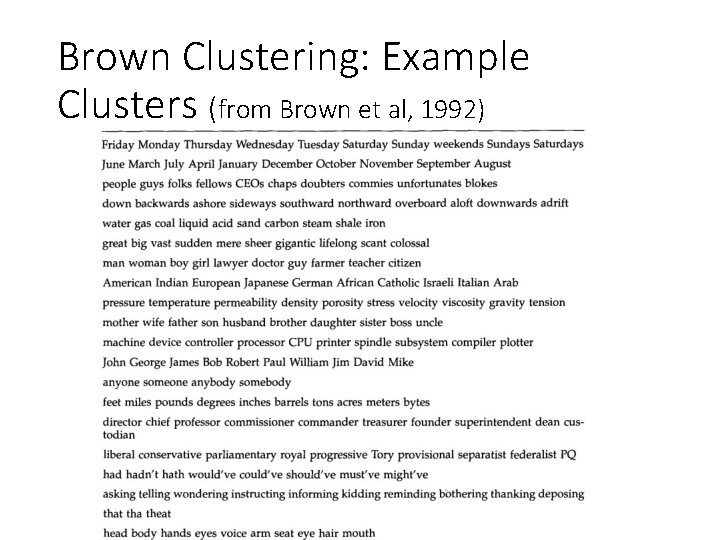

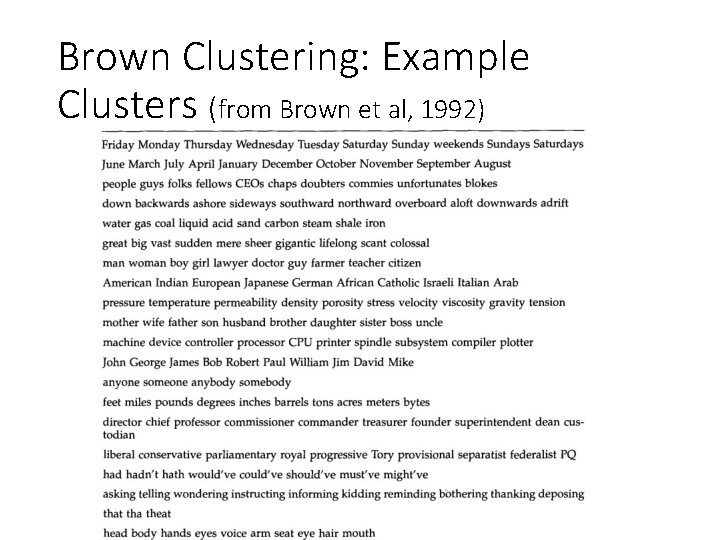
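A minimal sketch of the contrast above, using a made-up four-sentence toy corpus (an assumption, not data from the talk): the one-hot vectors for *movie* and *film* have dot product 0, while simple neighbor-count vectors (a window of one word on each side, also our choice) make the two words look alike.

```python
import math
from collections import Counter

def one_hot(word, vocab):
    """Vector with a single 1 at the word's index in the vocabulary."""
    return [1 if w == word else 0 for w in vocab]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cosine(u, v):
    nu, nv = math.sqrt(dot(u, u)), math.sqrt(dot(v, v))
    return dot(u, v) / (nu * nv) if nu and nv else 0.0

def context_vector(word, sentences, vocab, window=1):
    """Count the words appearing within `window` positions of `word`."""
    counts = Counter()
    for sent in sentences:
        for i, w in enumerate(sent):
            if w != word:
                continue
            for j in range(max(0, i - window), min(len(sent), i + window + 1)):
                if j != i:
                    counts[sent[j]] += 1
    return [counts[v] for v in vocab]

# Hypothetical toy corpus.
corpus = [
    ["i", "watched", "a", "movie", "yesterday"],
    ["i", "watched", "a", "film", "yesterday"],
    ["a", "good", "movie"],
    ["a", "good", "film"],
]
vocab = sorted({w for s in corpus for w in s})

print(dot(one_hot("movie", vocab), one_hot("film", vocab)))  # 0
print(cosine(context_vector("movie", corpus, vocab),
             context_vector("film", corpus, vocab)))
```

Because *movie* and *film* occur with exactly the same neighbors here, their context vectors are identical and the cosine is 1 (up to floating-point rounding).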

Brown Clustering: Example Clusters (from Brown et al., 1992)

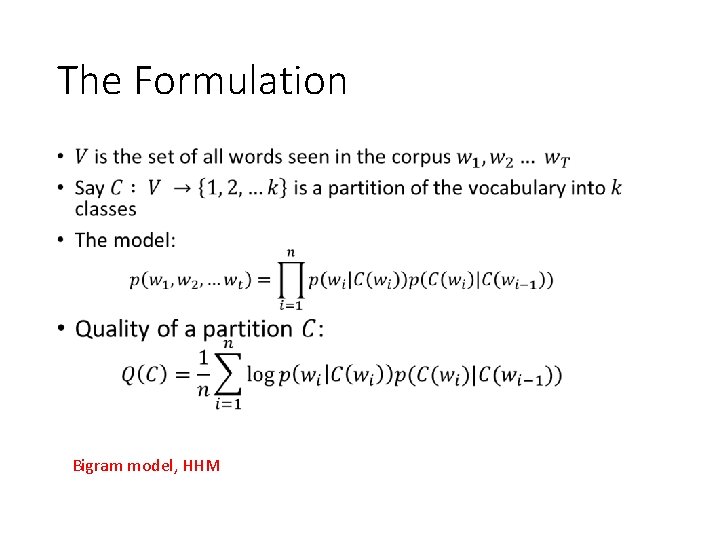

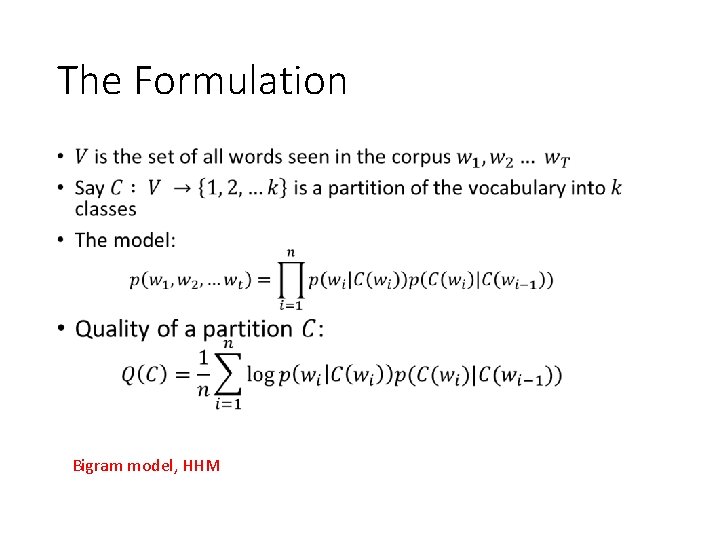

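The Formulation • Class-based bigram model, i.e. an HMM in which every word belongs to exactly one cluster c(w): P(w_i | w_{i-1}) = P(c(w_i) | c(w_{i-1})) · P(w_i | c(w_i))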

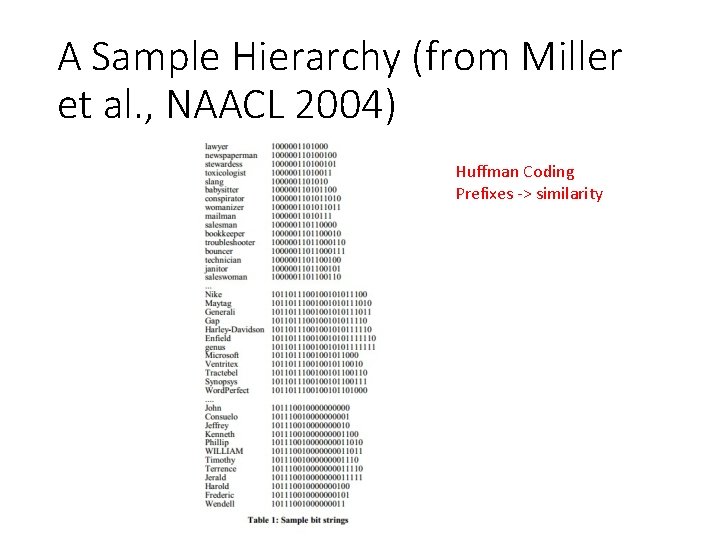

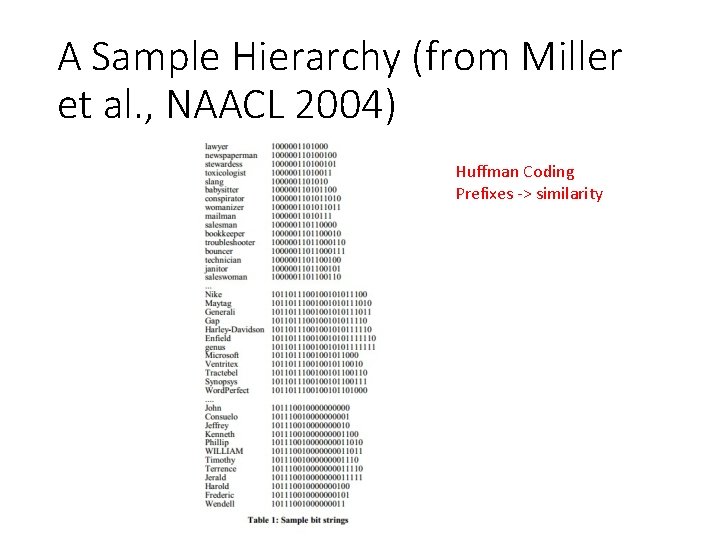
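Under this formulation a clustering C can be scored by the average log-likelihood (1/n) Σ log[P(c(w_i) | c(w_{i-1})) · P(w_i | c(w_i))]; Brown clustering greedily merges clusters so as to keep this quantity high. The sketch below only evaluates that objective, with maximum-likelihood counts, for a fixed clustering; the merge search itself is omitted, and the corpus and both clusterings are made-up toy examples.

```python
import math
from collections import Counter

def class_bigram_loglik(tokens, cluster):
    """Average log P(c_i | c_{i-1}) + log P(w_i | c_i) under a hard clustering.

    All probabilities are maximum-likelihood estimates from `tokens` itself.
    """
    classes = [cluster[w] for w in tokens]
    word_counts = Counter(tokens)
    class_counts = Counter(classes)
    prev_counts = Counter(classes[:-1])  # class counts in the "previous" position
    trans_counts = Counter(zip(classes[:-1], classes[1:]))
    total = 0.0
    for i in range(1, len(tokens)):
        c_prev, c = classes[i - 1], classes[i]
        transition = trans_counts[(c_prev, c)] / prev_counts[c_prev]
        emission = word_counts[tokens[i]] / class_counts[c]
        total += math.log(transition) + math.log(emission)
    return total / (len(tokens) - 1)

tokens = "the dog runs the cat runs the dog sleeps the cat sleeps".split()
good = {"the": 0, "dog": 1, "cat": 1, "runs": 2, "sleeps": 2}  # nouns together, verbs together
bad = {"the": 0, "dog": 1, "runs": 1, "cat": 2, "sleeps": 2}   # nouns and verbs mixed
print(class_bigram_loglik(tokens, good), class_bigram_loglik(tokens, bad))
```

The syntactically sensible clustering makes every class transition deterministic, so it scores higher than the mixed one.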

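A Sample Hierarchy (from Miller et al., NAACL 2004) • Each word is encoded by its path in the binary merge tree, a bit string similar to a Huffman code • Words whose bit strings share a longer prefix are more similar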

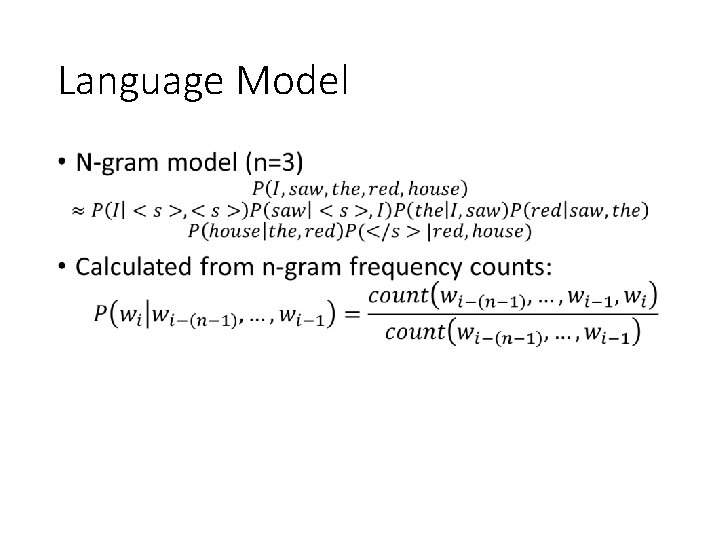

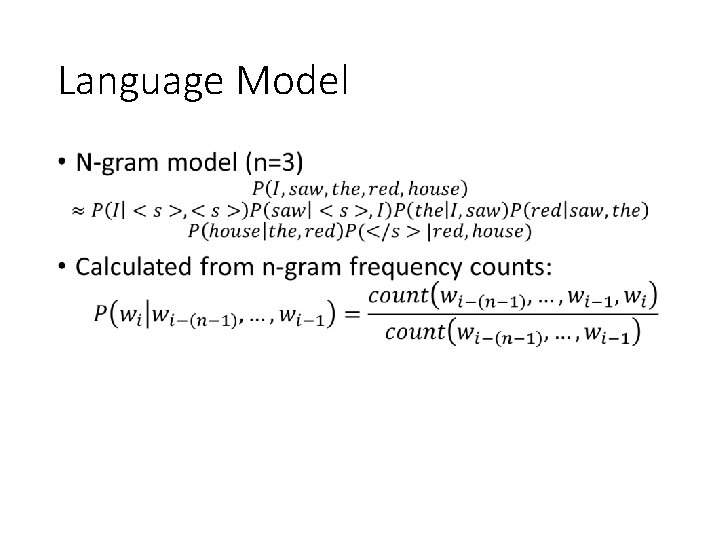
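A small sketch of how such bit-string paths can be used: shared-prefix length as a similarity measure, and prefixes of several lengths as features at coarse-to-fine granularity. The bit strings and the prefix lengths (2, 4, 6) are hypothetical choices for illustration; real paths would come from running Brown clustering on a corpus.

```python
def shared_prefix_len(a, b):
    """Length of the common prefix of two bit strings."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n

def prefix_features(bits, lengths=(2, 4, 6)):
    """Cluster features at several granularities, from coarse to fine."""
    return [f"pre{k}={bits[:k]}" for k in lengths if k <= len(bits)]

# Hypothetical paths in a Brown cluster hierarchy.
paths = {"apple": "001010", "pear": "001011", "Monday": "110100", "Tuesday": "110101"}
print(shared_prefix_len(paths["apple"], paths["pear"]))    # 5
print(shared_prefix_len(paths["apple"], paths["Monday"]))  # 0
print(prefix_features(paths["apple"]))
```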

Language Model

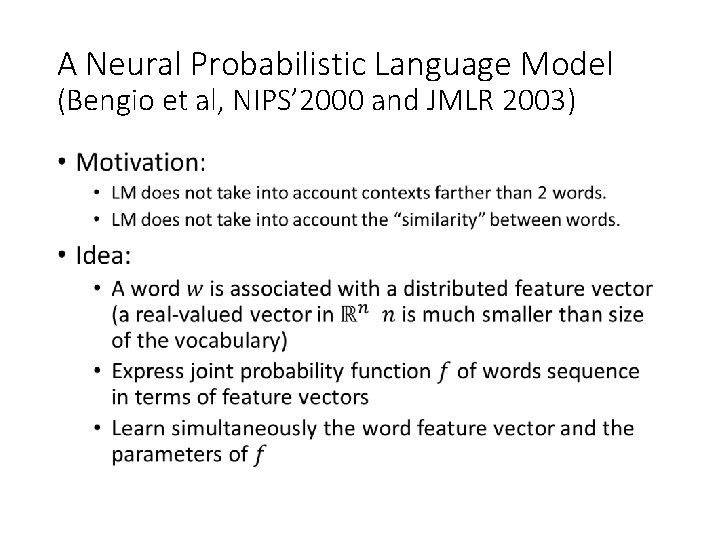

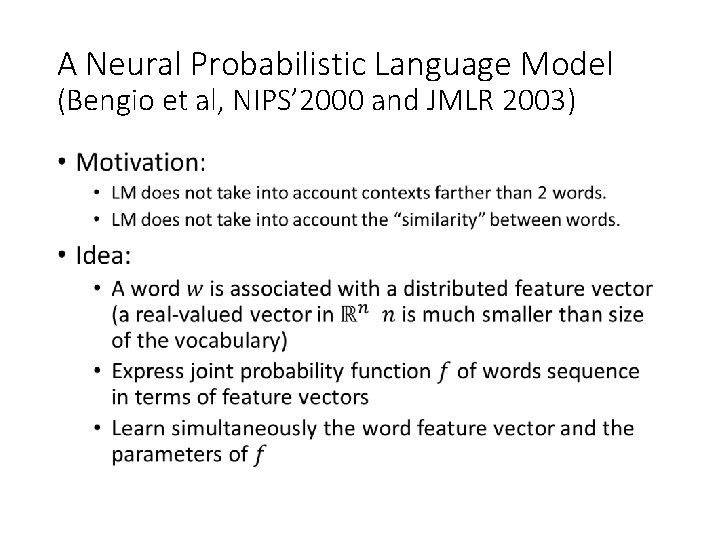
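A language model assigns a probability to a word sequence through the chain rule, P(w_1, ..., w_T) = Π_t P(w_t | w_1, ..., w_{t-1}); n-gram models truncate the history to the previous n-1 words. The sketch below is a bigram model with add-one (Laplace) smoothing on a two-sentence toy corpus; the smoothing method and the data are our assumptions, chosen only to show the mechanics.

```python
import math
from collections import Counter

def train_bigram(sentences):
    """Count history unigrams and bigrams, with <s> and </s> boundary markers."""
    history, bigram = Counter(), Counter()
    for s in sentences:
        toks = ["<s>"] + s + ["</s>"]
        history.update(toks[:-1])
        bigram.update(zip(toks[:-1], toks[1:]))
    return history, bigram

def sentence_logprob(s, history, bigram, vocab_size):
    """log P(s) = sum over t of log P(w_t | w_{t-1}), add-one smoothed."""
    toks = ["<s>"] + s + ["</s>"]
    return sum(
        math.log((bigram[(prev, cur)] + 1) / (history[prev] + vocab_size))
        for prev, cur in zip(toks[:-1], toks[1:])
    )

corpus = [["the", "cat", "sat"], ["the", "dog", "sat"]]
history, bigram = train_bigram(corpus)
vocab_size = len({w for s in corpus for w in s}) + 1  # words plus </s>
print(sentence_logprob(["the", "cat", "sat"], history, bigram, vocab_size))
print(sentence_logprob(["sat", "cat", "the"], history, bigram, vocab_size))
```

The seen word order receives a much higher log-probability than the scrambled one.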

A Neural Probabilistic Language Model (Bengio et al., NIPS 2000 and JMLR 2003)

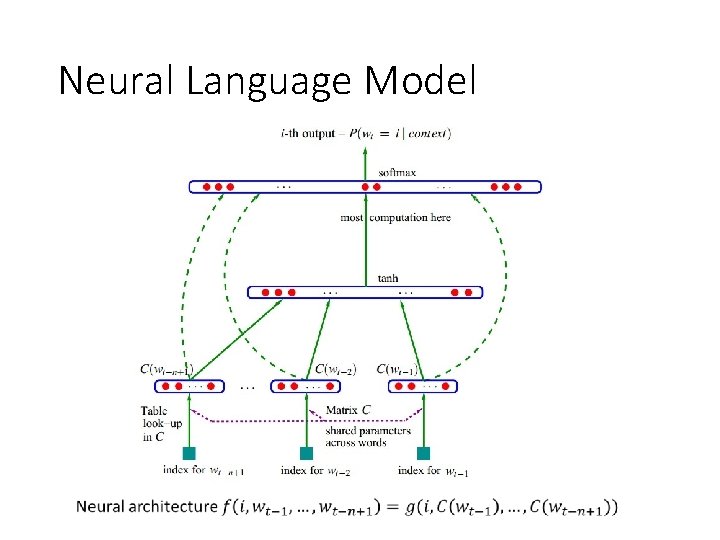

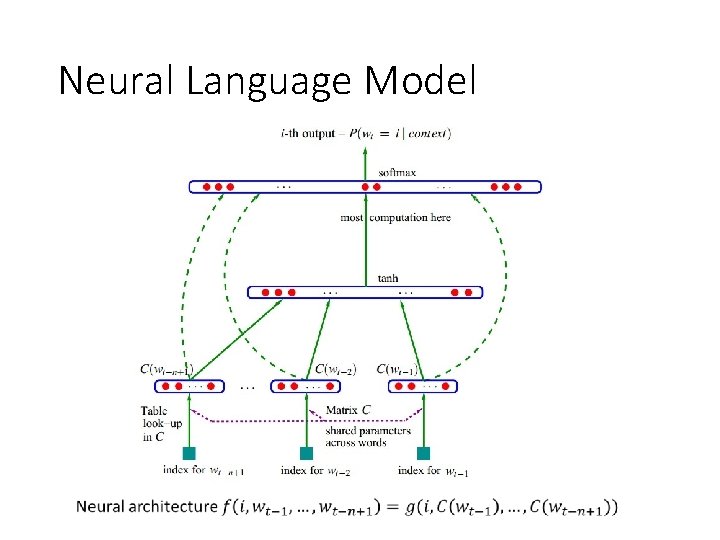

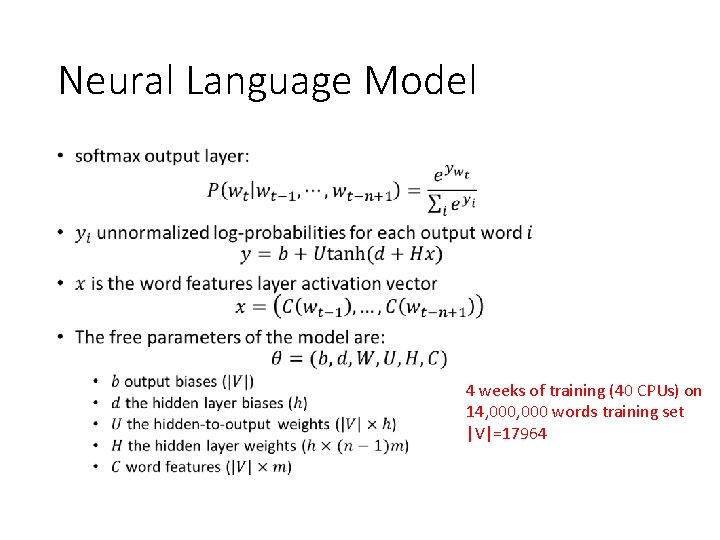
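A sketch of the forward pass described in Bengio et al. (JMLR 2003): x concatenates the feature vectors C(w) of the n-1 context words, y = b + Wx + U tanh(d + Hx), and a softmax over y gives P(w_t | context). Sizes and random weights are toy assumptions, W (the optional direct input-to-output connections) is set to zero, and there is no training loop.

```python
import numpy as np

def nplm_forward(context_ids, C, H, d, U, b, W):
    """P(w_t | context) for every word in the vocabulary."""
    x = np.concatenate([C[i] for i in context_ids])  # shared word feature vectors
    y = b + W @ x + U @ np.tanh(d + H @ x)           # unnormalized log-probabilities
    e = np.exp(y - y.max())                          # numerically stable softmax
    return e / e.sum()

rng = np.random.default_rng(0)
V, m, n_ctx, h = 10, 4, 3, 8  # vocab size, feature size, context words, hidden units
C = rng.normal(scale=0.1, size=(V, m))
H = rng.normal(scale=0.1, size=(h, n_ctx * m))
d = np.zeros(h)
U = rng.normal(scale=0.1, size=(V, h))
b = np.zeros(V)
W = np.zeros((V, n_ctx * m))  # no direct connections in this sketch

probs = nplm_forward([1, 5, 7], C, H, d, U, b, W)
print(probs.argmax(), probs.sum())
```

The rows of C are the learned word representations that the rest of the talk builds on.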

Neural Language Model

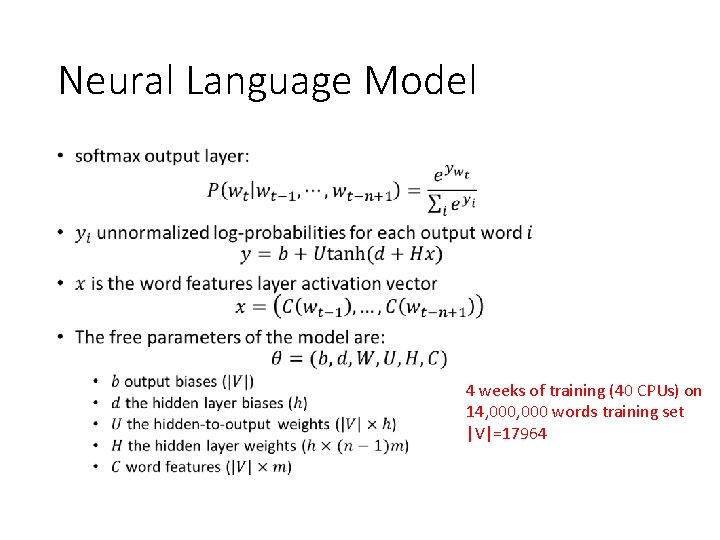

Neural Language Model • 4 weeks of training (40 CPUs) on a training set of about 14 million words, |V| = 17,964

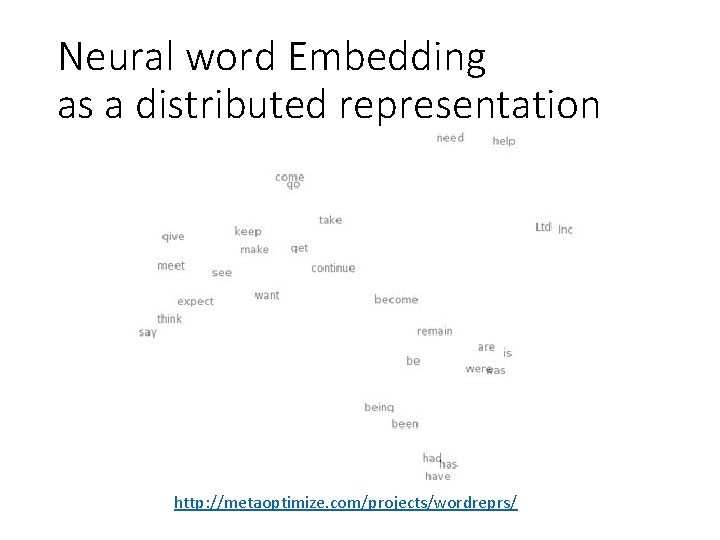

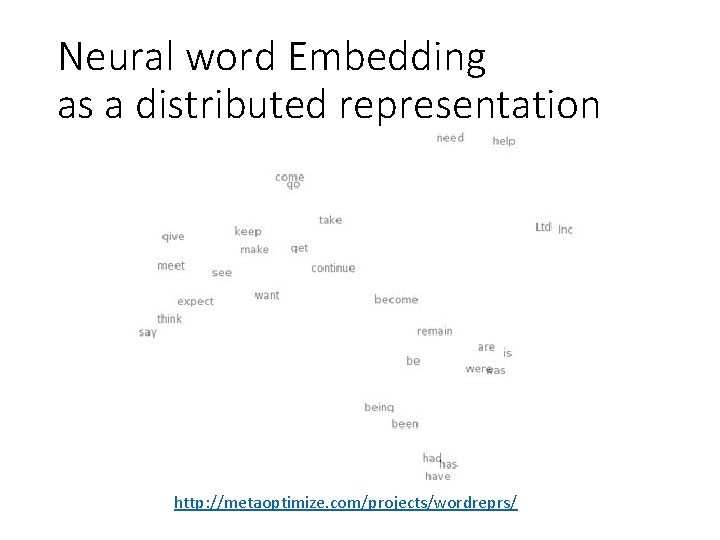

Neural Word Embedding as a Distributed Representation http://metaoptimize.com/projects/wordreprs/

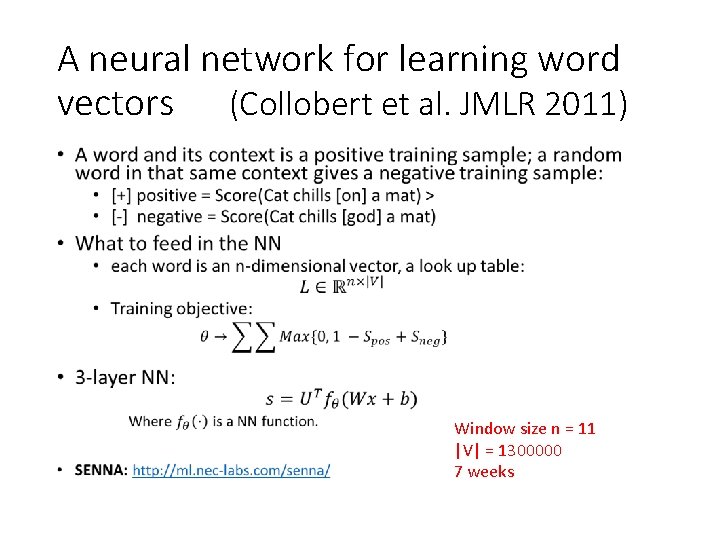

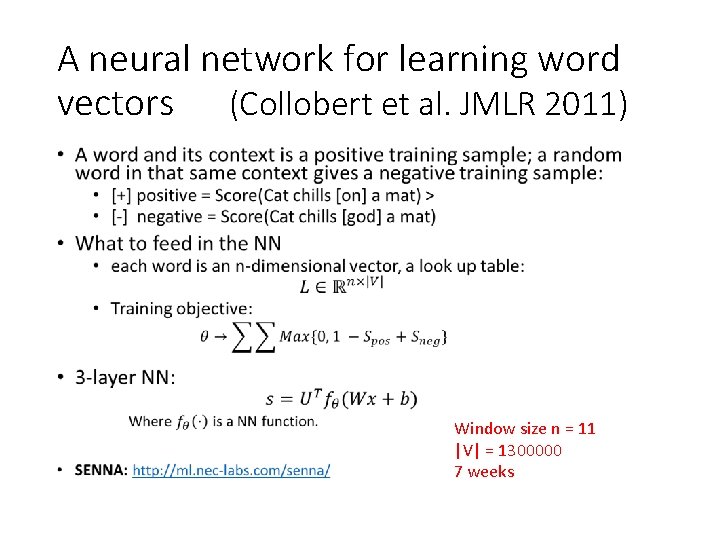

A neural network for learning word vectors (Collobert et al., JMLR 2011) • Window size n = 11 • |V| = 130,000 • 7 weeks of training

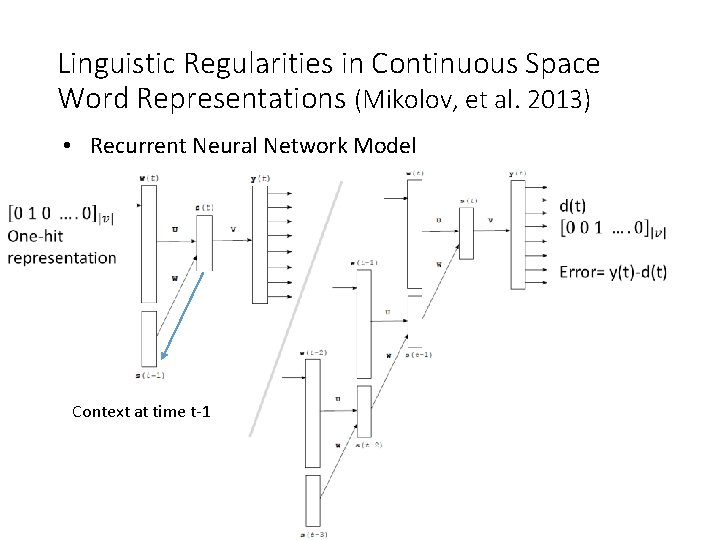

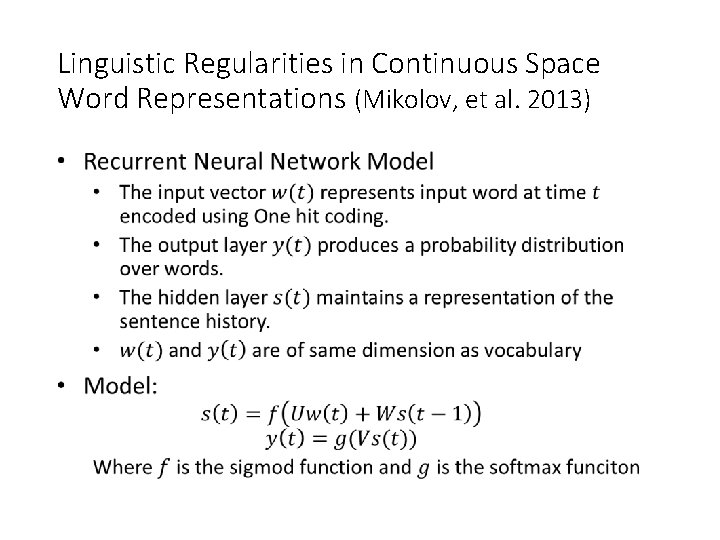

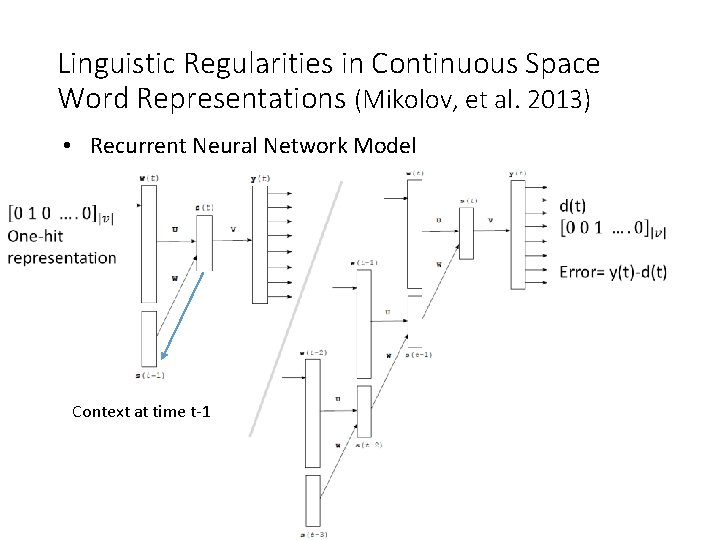

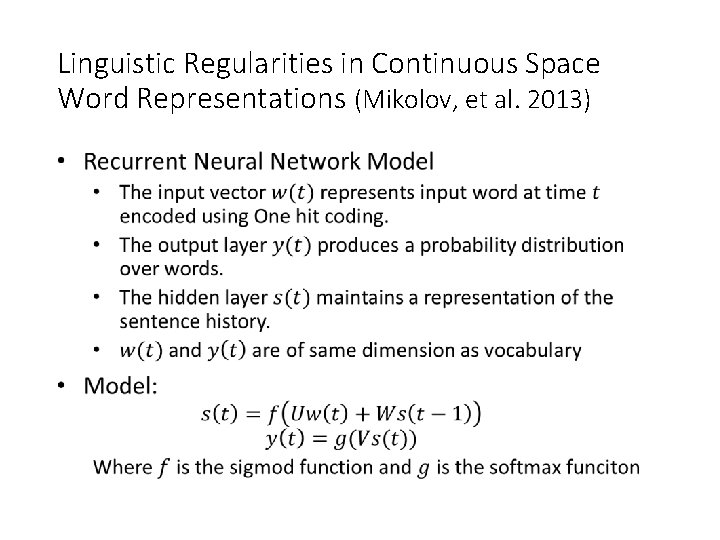
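A sketch of the Collobert and Weston training signal: a network scores an 11-word window (lookup, linear layer, HardTanh, linear layer), and a hinge ranking loss max(0, 1 - f(x) + f(x')) pushes a real window x above a corrupted window x' whose center word was replaced. The vocabulary, embedding and hidden sizes, and random weights are toy assumptions; no gradient step is shown.

```python
import numpy as np

rng = np.random.default_rng(0)
V, dim, win, hidden = 20, 5, 11, 8  # toy sizes; win = 11 as on the slide
E = rng.normal(scale=0.1, size=(V, dim))  # word embeddings (lookup table)
W1 = rng.normal(scale=0.1, size=(hidden, win * dim))
b1 = np.zeros(hidden)
w2 = rng.normal(scale=0.1, size=hidden)
b2 = 0.0

def score(window):
    """Scalar score of a window: lookup -> linear -> HardTanh -> linear."""
    x = E[np.asarray(window)].reshape(-1)
    h = np.clip(W1 @ x + b1, -1.0, 1.0)  # HardTanh
    return float(w2 @ h + b2)

def ranking_loss(window, corrupt_word):
    """Hinge loss: the real window should outscore the corrupted one by a margin of 1."""
    corrupted = list(window)
    corrupted[len(window) // 2] = corrupt_word
    return max(0.0, 1.0 - score(window) + score(corrupted))

window = list(range(11))
print(ranking_loss(window, corrupt_word=17))
```

Only unlabeled text is needed: every window in the corpus is a positive example, and random center-word replacements supply the negatives.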

Linguistic Regularities in Continuous Space Word Representations (Mikolov et al., 2013) • Recurrent neural network language model: the hidden state carries the context from time t-1

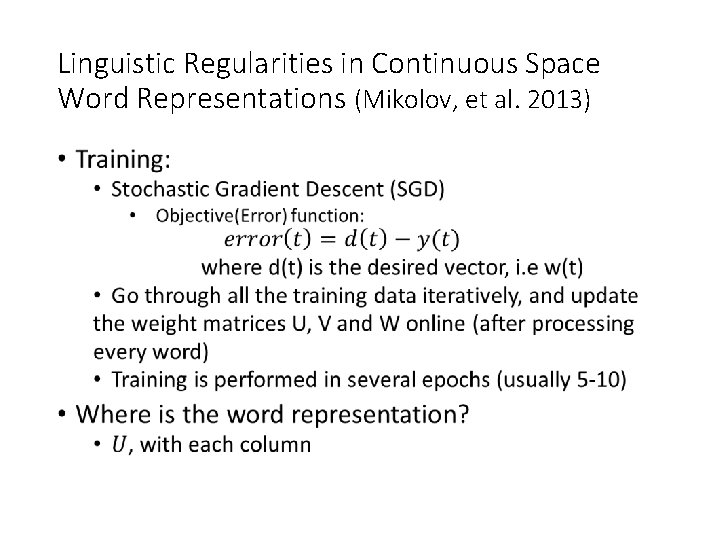
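A sketch of one step of the recurrent language model used by Mikolov et al. (2013): s(t) = sigmoid(U w(t) + W s(t-1)) and y(t) = softmax(V s(t)), where w(t) is the one-hot input word. Multiplying U by a one-hot vector just selects a column, so the columns of U act as the word vectors. Sizes, weights, and the word-id sequence are toy assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def rnnlm_step(word_id, s_prev, U, W, V):
    """s(t) = sigmoid(U w(t) + W s(t-1)); y(t) = softmax(V s(t))."""
    s = sigmoid(U[:, word_id] + W @ s_prev)  # U @ one_hot(word_id) == U[:, word_id]
    y = softmax(V @ s)                       # distribution over the next word
    return s, y

rng = np.random.default_rng(0)
vocab_size, hidden = 10, 6
U = rng.normal(scale=0.1, size=(hidden, vocab_size))
W = rng.normal(scale=0.1, size=(hidden, hidden))
V = rng.normal(scale=0.1, size=(vocab_size, hidden))

s = np.zeros(hidden)  # empty context before the first word
for w in [3, 1, 4]:
    s, y = rnnlm_step(w, s, U, W, V)
print(y.argmax(), U[:, 3])  # predicted next word id, and the vector for word 3
```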

Linguistic Regularities in Continuous Space Word Representations (Mikolov et al., 2013)

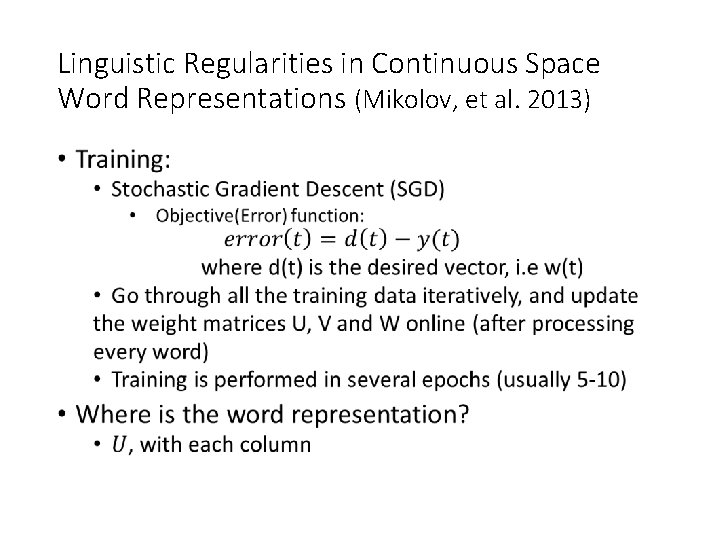

Linguistic Regularities in Continuous Space Word Representations (Mikolov et al., 2013)

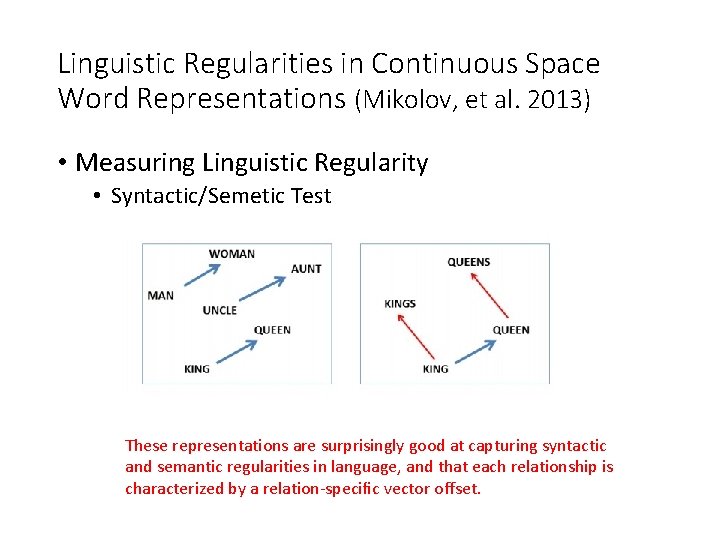

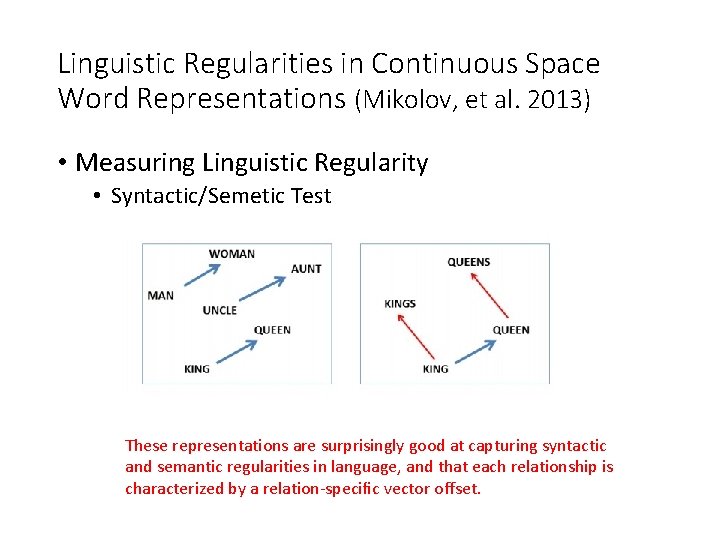

Linguistic Regularities in Continuous Space Word Representations (Mikolov et al., 2013)
• Measuring linguistic regularity
• Syntactic/semantic test
These representations are surprisingly good at capturing syntactic and semantic regularities in language, and each relationship is characterized by a relation-specific vector offset.

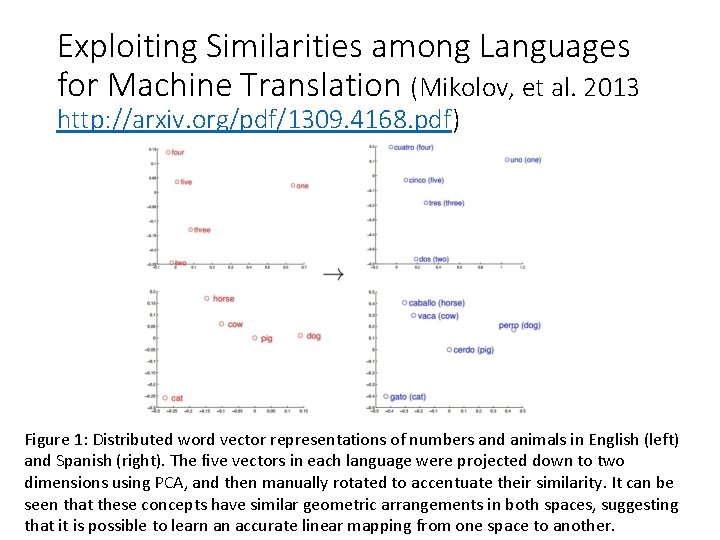

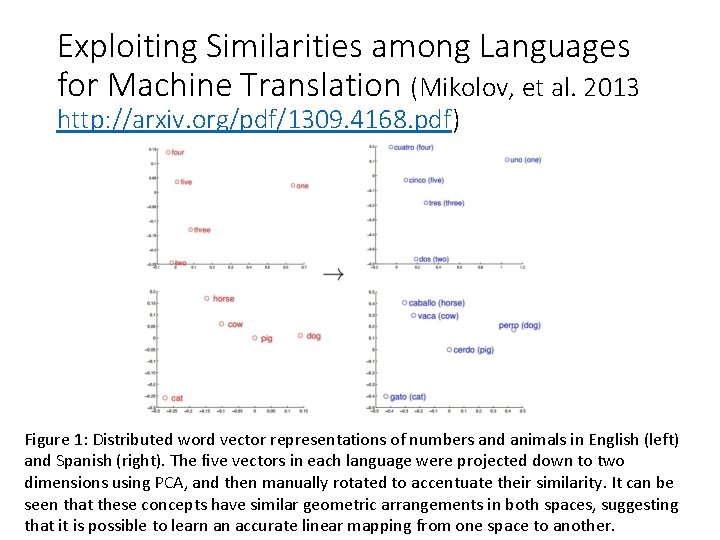
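The vector-offset test answers "a is to b as c is to ?" by computing y = x_b - x_a + x_c and returning the word whose vector has the highest cosine similarity to y, excluding the three query words. The three-dimensional vectors below are hand-built toy assumptions (roughly: royalty, gender, fruit), not trained embeddings.

```python
import numpy as np

def analogy(a, b, c, vecs):
    """a : b :: c : ?  using y = x_b - x_a + x_c and cosine similarity."""
    y = vecs[b] - vecs[a] + vecs[c]
    best, best_sim = None, -np.inf
    for w, x in vecs.items():
        if w in (a, b, c):
            continue  # the query words themselves are not valid answers
        sim = float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))
        if sim > best_sim:
            best, best_sim = w, sim
    return best

# Hypothetical toy vectors.
vecs = {
    "king": np.array([1.0, 1.0, 0.0]),
    "queen": np.array([1.0, -1.0, 0.0]),
    "man": np.array([0.0, 1.0, 0.0]),
    "woman": np.array([0.0, -1.0, 0.0]),
    "apple": np.array([0.0, 0.0, 1.0]),
}
print(analogy("man", "king", "woman", vecs))  # queen
```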

Exploiting Similarities among Languages for Machine Translation (Mikolov et al., 2013, http://arxiv.org/pdf/1309.4168.pdf)
Figure 1: Distributed word vector representations of numbers and animals in English (left) and Spanish (right). The five vectors in each language were projected down to two dimensions using PCA, and then manually rotated to accentuate their similarity. It can be seen that these concepts have similar geometric arrangements in both spaces, suggesting that it is possible to learn an accurate linear mapping from one space to another.

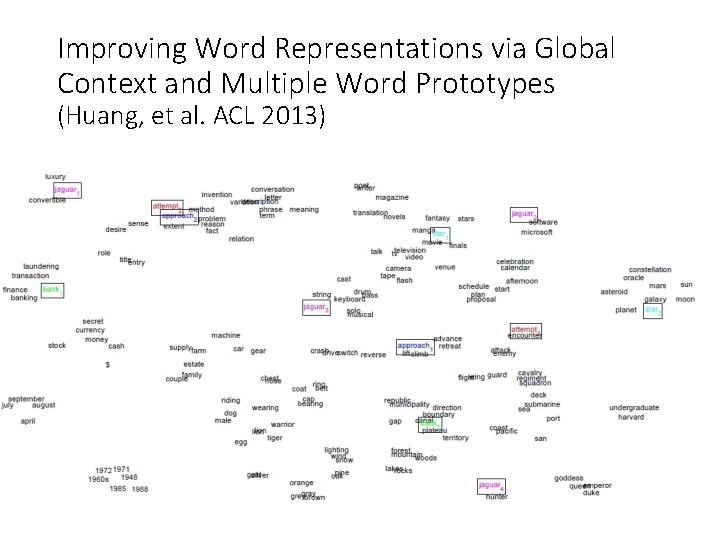

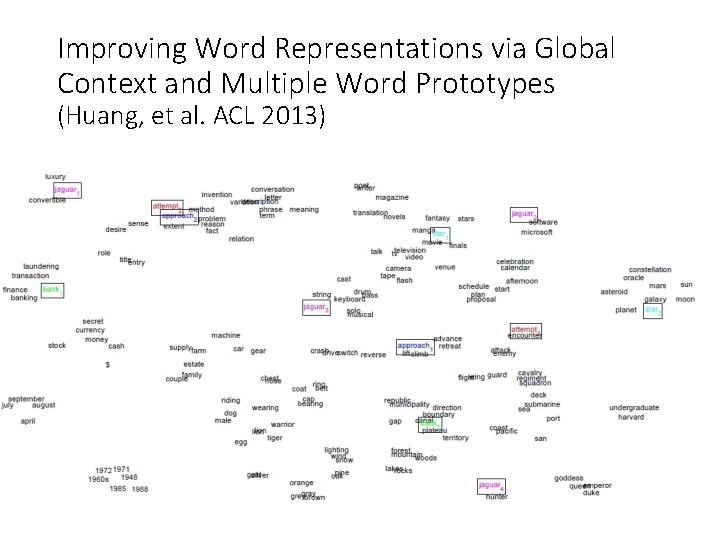
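The linear mapping can be learned from a seed dictionary of word pairs (x_i, z_i) by minimizing Σ_i ||W x_i - z_i||², and a source word is then translated to the target word whose vector is closest (by cosine) to W x. The paper solves this with stochastic gradient descent; as a swapped-in alternative, this sketch uses NumPy's closed-form least squares on synthetic vectors generated from a known random map, so the sizes and data are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
d_src, d_tgt, n = 5, 4, 50
X = rng.normal(size=(n, d_src))           # source-language vectors (synthetic)
W_true = rng.normal(size=(d_tgt, d_src))  # hidden "true" mapping
Z = X @ W_true.T                          # target-language vectors (synthetic)

# Minimize sum_i ||W x_i - z_i||^2, i.e. solve X W^T ~= Z in the least-squares sense.
W_T, *_ = np.linalg.lstsq(X, Z, rcond=None)
W = W_T.T

def translate(x, W, target_vecs):
    """Index of the target vector with the highest cosine similarity to W x."""
    z = W @ x
    sims = target_vecs @ z / (np.linalg.norm(target_vecs, axis=1) * np.linalg.norm(z))
    return int(np.argmax(sims))

print(np.allclose(W, W_true), translate(X[3], W, Z))
```

With noise-free synthetic pairs the true map is recovered exactly; with real embeddings the fit is only approximate, which is why translation is done by nearest-neighbor search rather than by reading W x directly.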

Improving Word Representations via Global Context and Multiple Word Prototypes (Huang et al., ACL 2012)

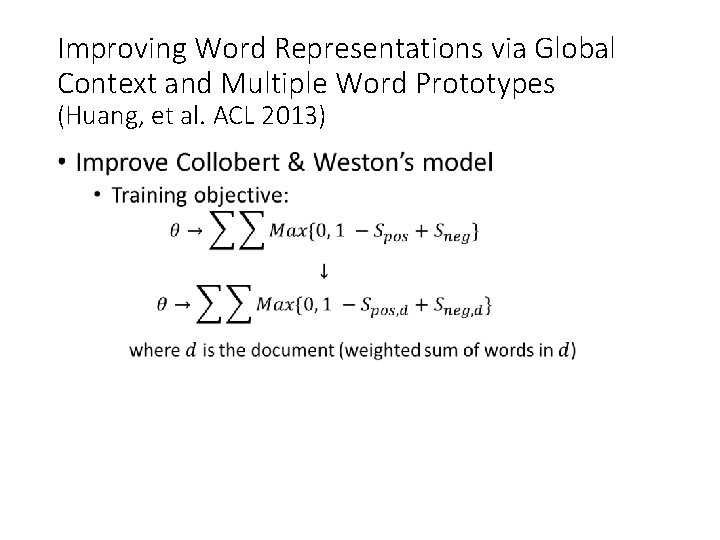

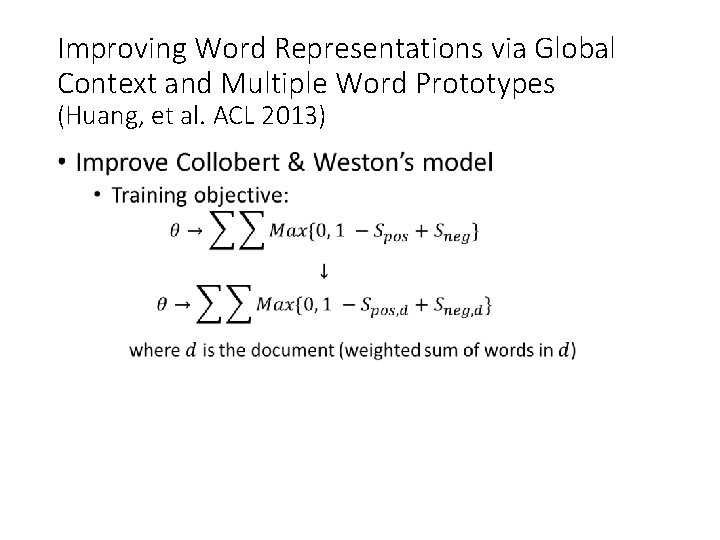

Improving Word Representations via Global Context and Multiple Word Prototypes (Huang et al., ACL 2012)

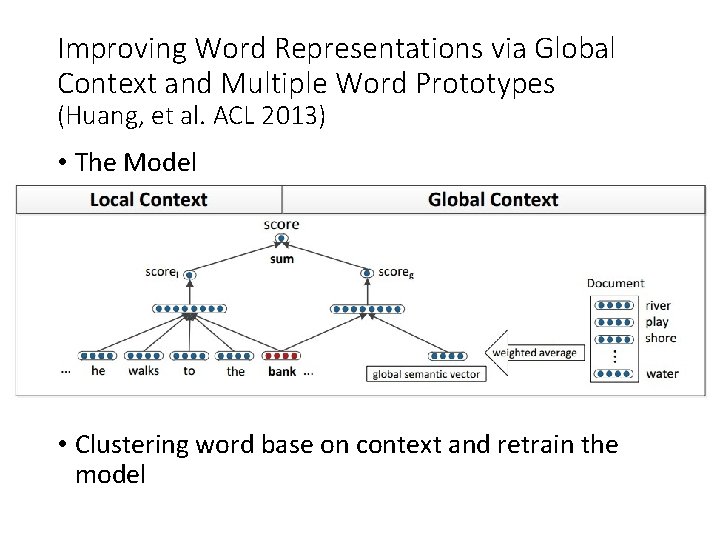

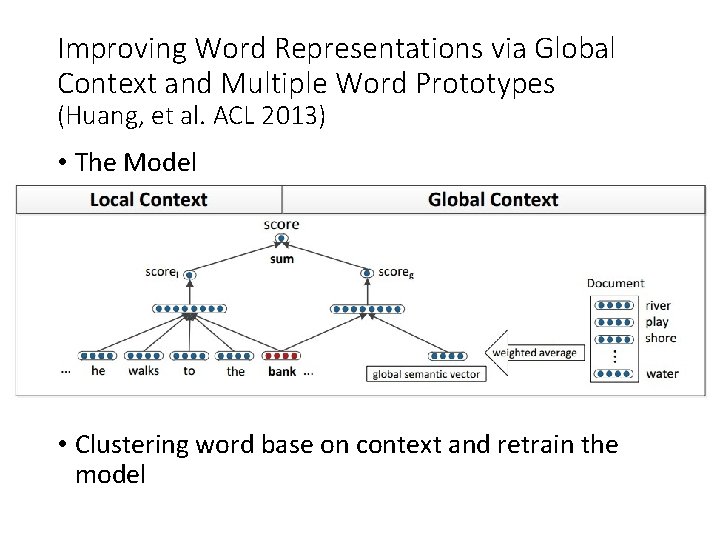

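Improving Word Representations via Global Context and Multiple Word Prototypes (Huang et al., ACL 2012) • The Model • Cluster the contexts in which each word occurs, relabel every occurrence with its cluster, and retrain the model so that each word gets one vector per cluster (prototype)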

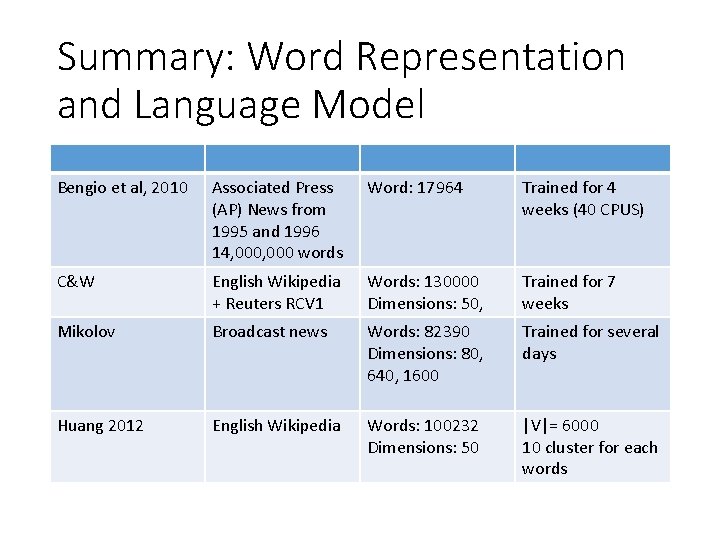

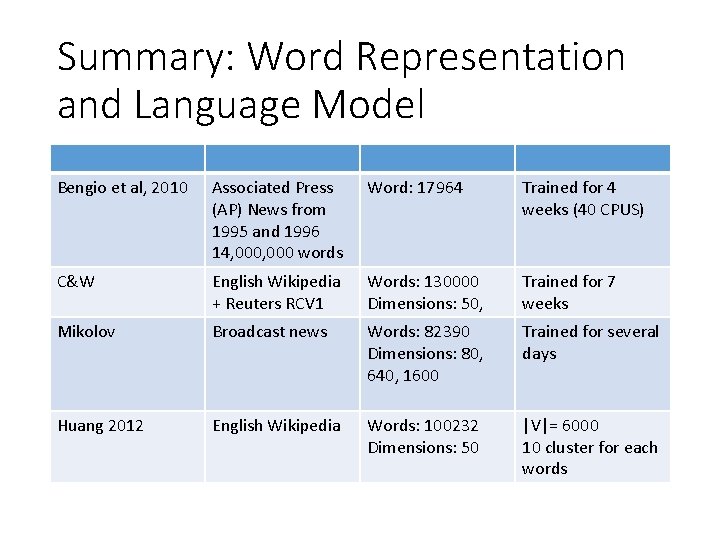
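A sketch of the clustering step: each occurrence of a word is represented by an idf-weighted average of its context words' vectors, and these context vectors are grouped with spherical (cosine) k-means; the cluster id then acts as the sense label for retraining. The toy embeddings, uniform idf, k = 2 (the talk's summary uses 10 clusters per word), and the deterministic farthest-first initialization are our assumptions.

```python
import numpy as np

def context_embedding(context_ids, E, idf):
    """idf-weighted average of the context words' vectors."""
    ids = np.asarray(context_ids)
    w = idf[ids]
    return (w[:, None] * E[ids]).sum(axis=0) / w.sum()

def spherical_kmeans(X, k, iters=20):
    """Cluster rows of X by cosine similarity; farthest-first init for determinism."""
    X = X / np.linalg.norm(X, axis=1, keepdims=True)
    centers = [X[0]]
    while len(centers) < k:
        sims = np.max(X @ np.array(centers).T, axis=1)
        centers.append(X[np.argmin(sims)])  # least similar point to existing centers
    centers = np.array(centers)
    for _ in range(iters):
        labels = np.argmax(X @ centers.T, axis=1)
        for j in range(k):
            members = X[labels == j]
            if len(members):
                c = members.sum(axis=0)
                centers[j] = c / np.linalg.norm(c)  # keep centers on the unit sphere
    return labels

# Hypothetical embeddings: ids 0-1 are "river" words, ids 2-3 are "money" words.
E = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
idf = np.ones(4)
# Contexts of four occurrences of an ambiguous word such as "bank".
contexts = [[0, 1], [0], [2, 3], [3]]
X = np.array([context_embedding(c, E, idf) for c in contexts])
print(spherical_kmeans(X, k=2))
```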

Summary: Word Representation and Language Model

| Model | Training corpus | Vocabulary size | Dimensions | Training time | Notes |
| --- | --- | --- | --- | --- | --- |
| Bengio et al., 2003 | Associated Press (AP) News from 1995 and 1996, about 14 million words | 17,964 | | 4 weeks (40 CPUs) | |
| C&W | English Wikipedia + Reuters RCV1 | 130,000 | 50 | 7 weeks | |
| Mikolov | Broadcast news | 82,390 | 80, 640, 1600 | Several days | |
| Huang 2012 | English Wikipedia | 100,232 | 50 | | 10 clusters per word for a 6,000-word vocabulary |

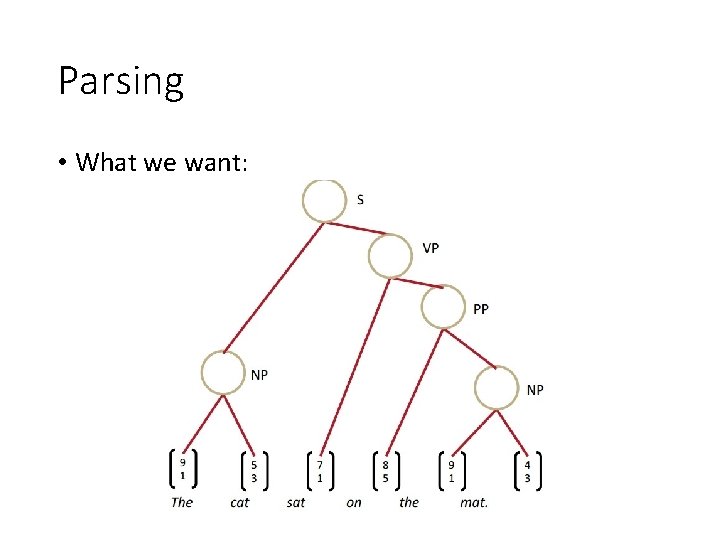

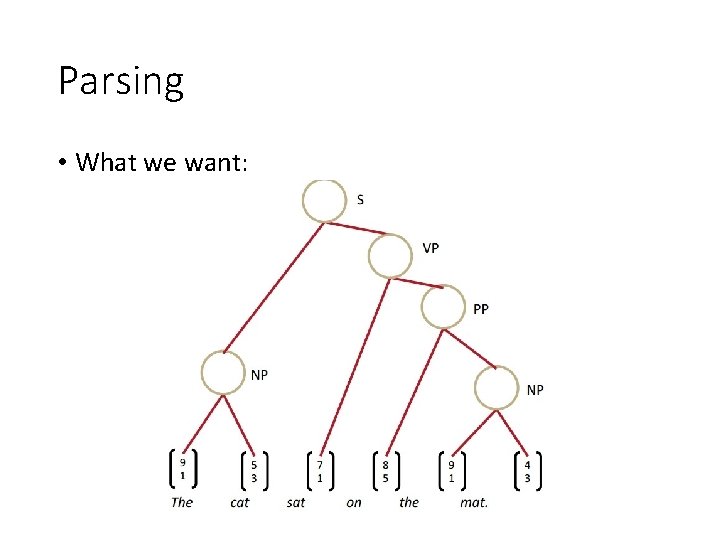

Parsing • What we want:

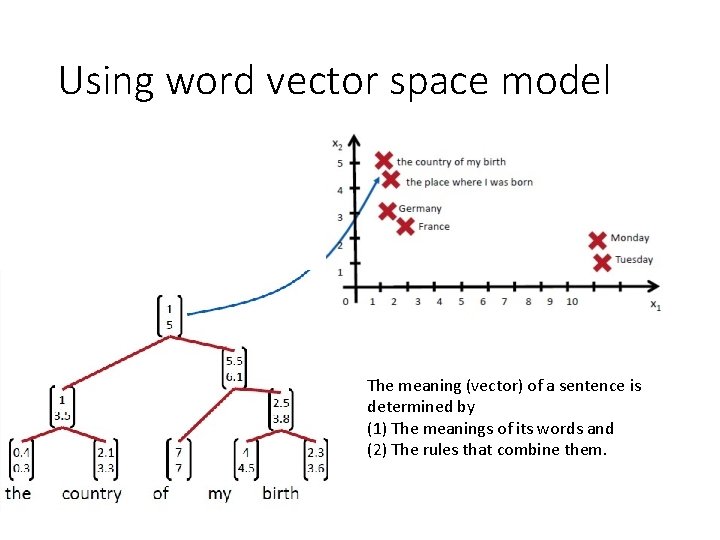

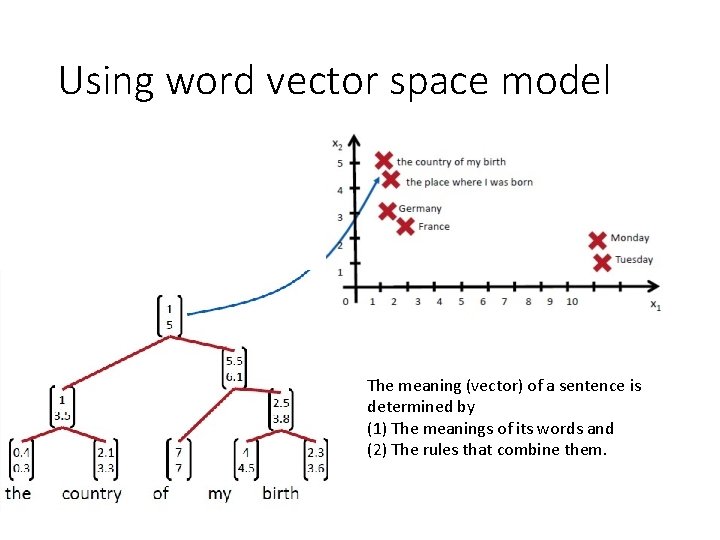

Using the word vector space model • The meaning (vector) of a sentence is determined by (1) the meanings of its words and (2) the rules that combine them.

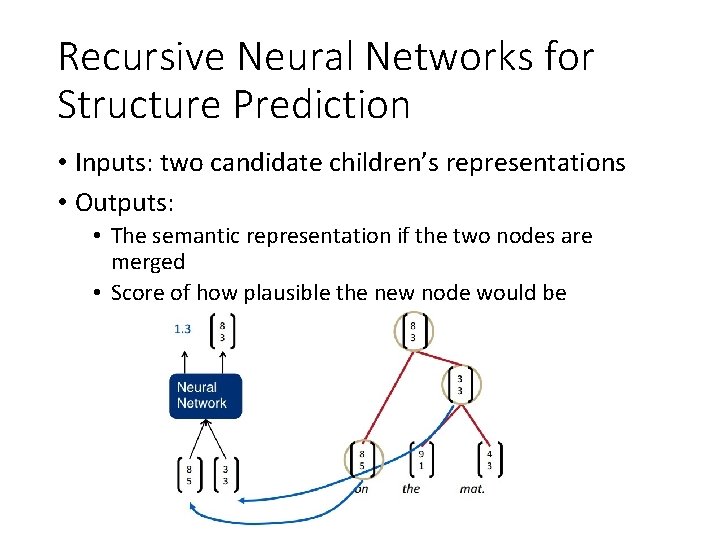

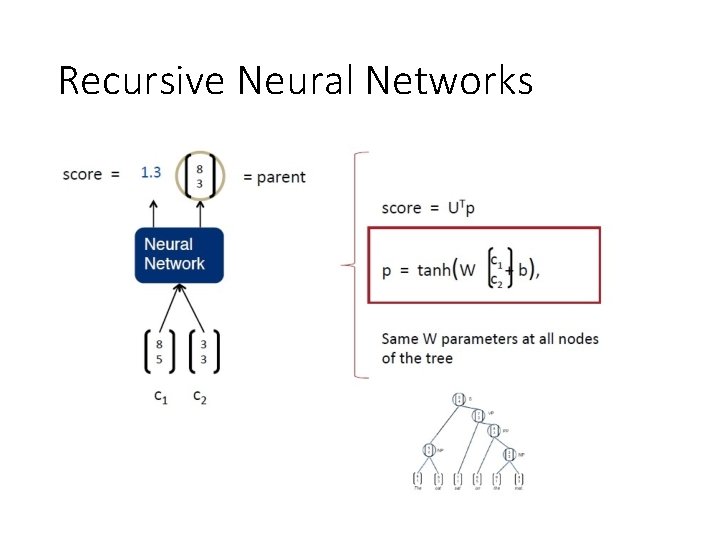

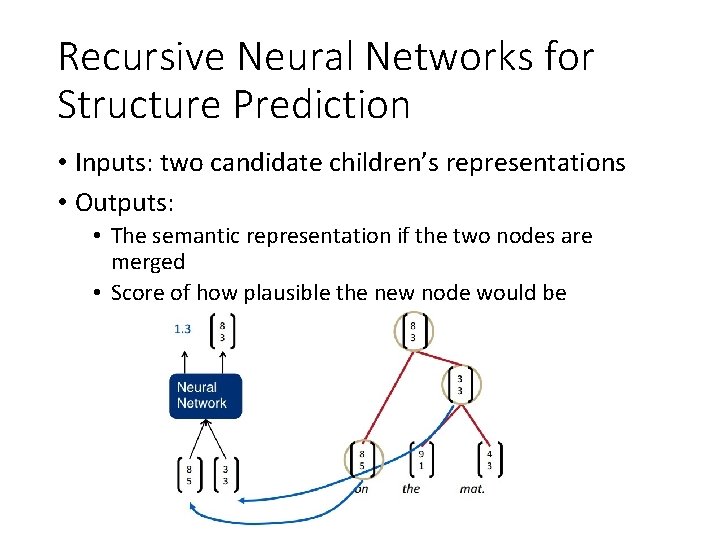

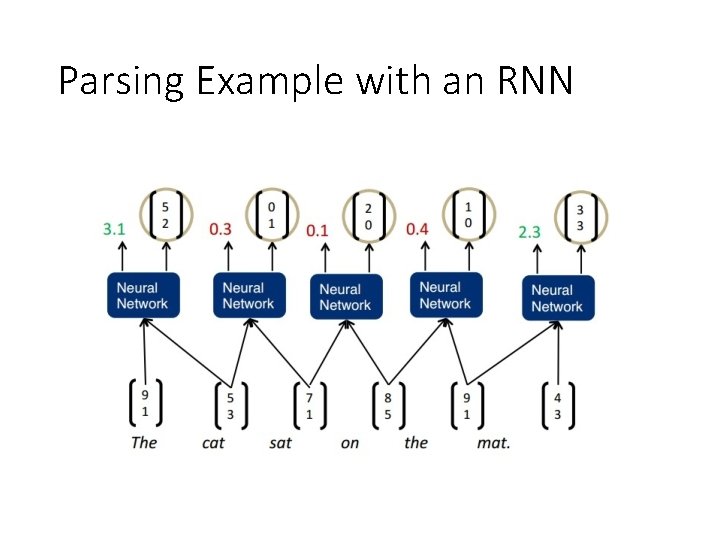

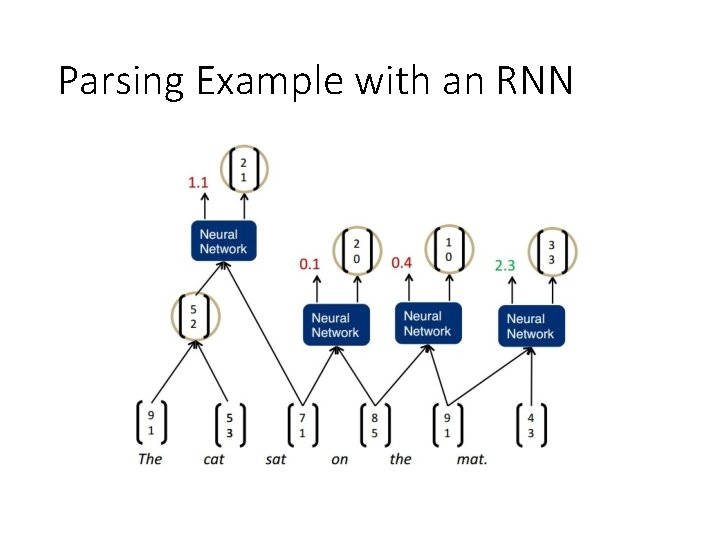

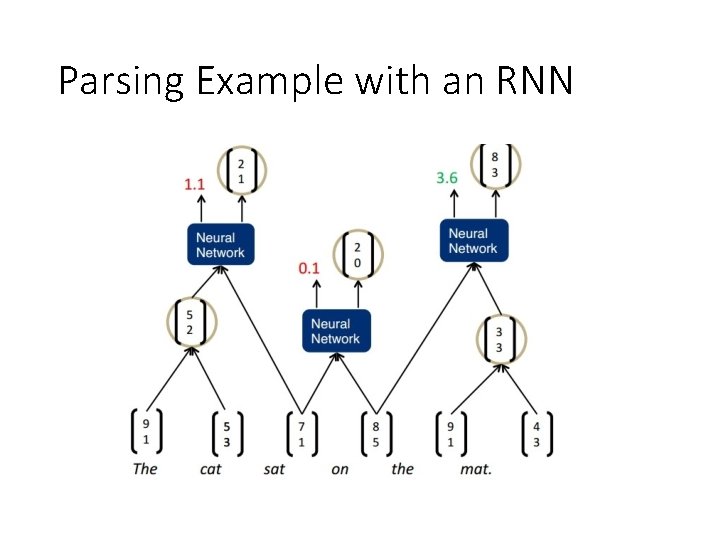

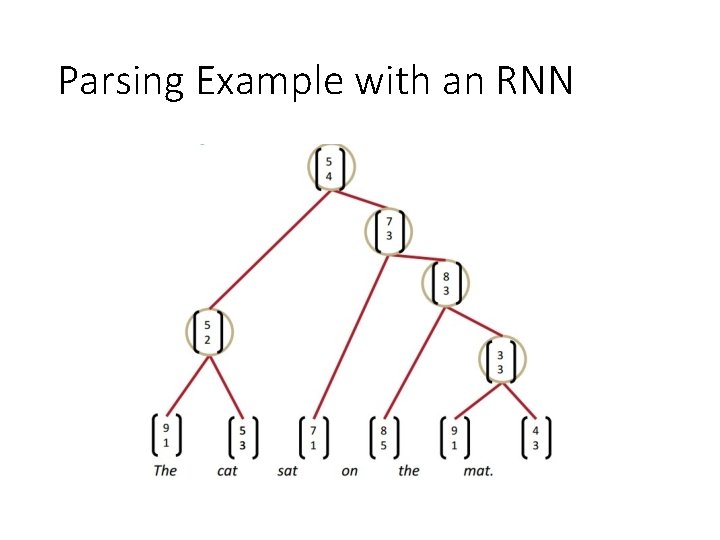

Recursive Neural Networks for Structure Prediction
• Inputs: two candidate children's representations
• Outputs:
  • The semantic representation if the two nodes are merged
  • A score of how plausible the new node would be

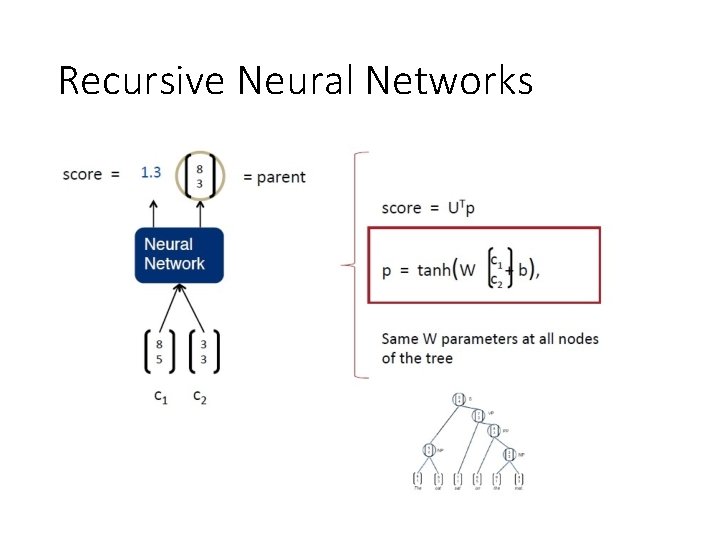
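A sketch of the merge operation in the form commonly used for these parsing RNNs: the parent vector is p = tanh(W [c1; c2] + b) and its plausibility score is u^T p. The dimensions and random parameters are toy assumptions; in a trained parser W, b, and u are learned.

```python
import numpy as np

def merge(c1, c2, W, b, u):
    """Return (parent vector, plausibility score) for merging two children."""
    p = np.tanh(W @ np.concatenate([c1, c2]) + b)  # semantic representation of the new node
    return p, float(u @ p)                          # score = u^T p

rng = np.random.default_rng(0)
d = 4
W = rng.normal(scale=0.5, size=(d, 2 * d))
b = np.zeros(d)
u = rng.normal(size=d)
c1, c2, c3 = (rng.normal(size=d) for _ in range(3))

# Compare two candidate merges involving the same node c2.
p12, s12 = merge(c1, c2, W, b, u)
p23, s23 = merge(c2, c3, W, b, u)
print(s12, s23)
```

Because the parent has the same dimensionality as its children, it can itself be merged again, which is what lets the same network build a whole tree.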

Recursive Neural Networks

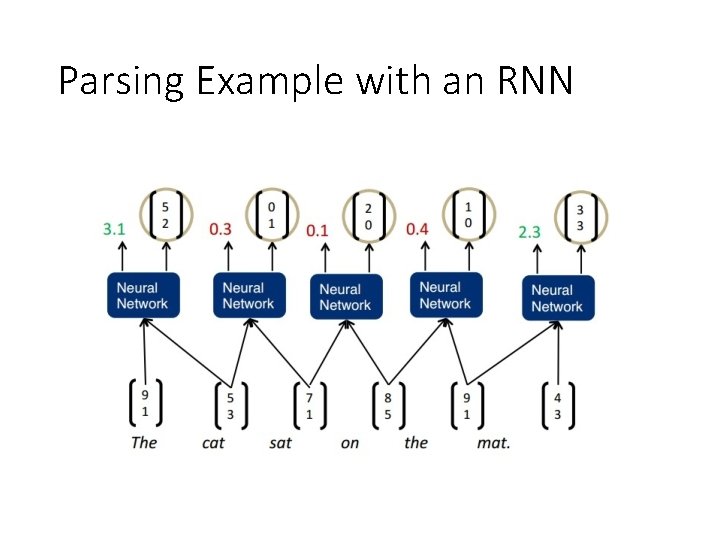

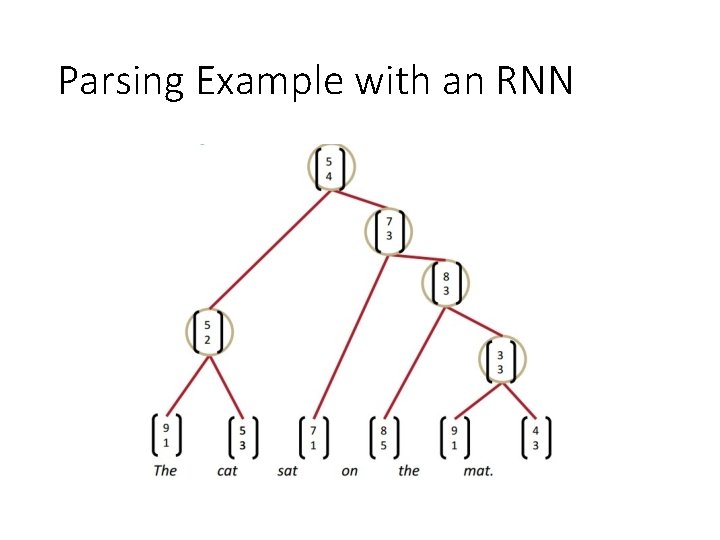

Parsing Example with an RNN

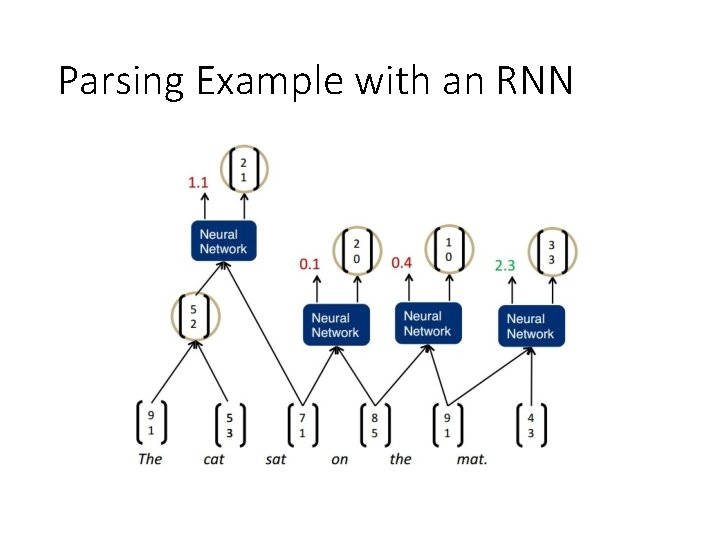

Parsing Example with an RNN

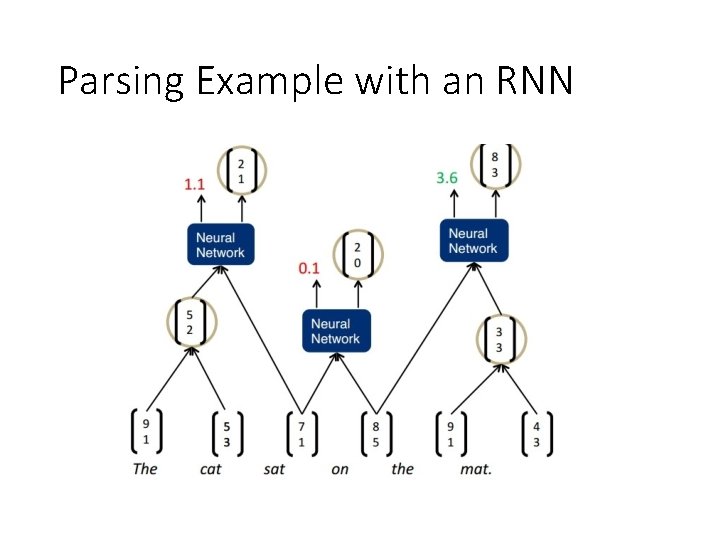

Parsing Example with an RNN

Parsing Example with an RNN

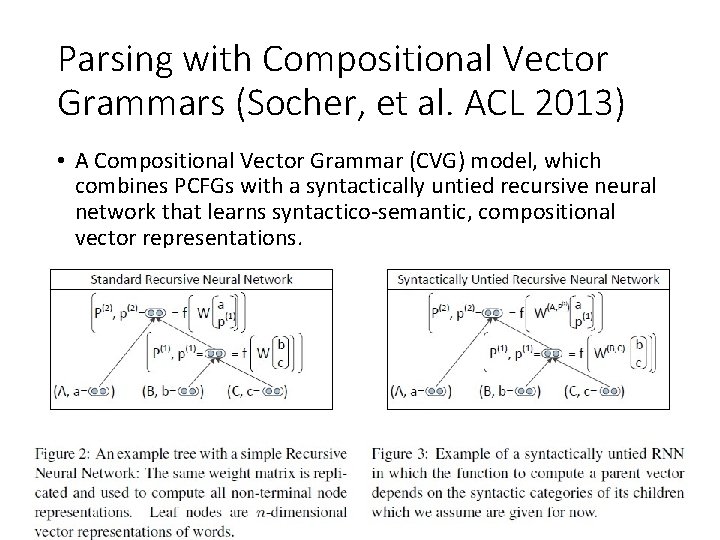

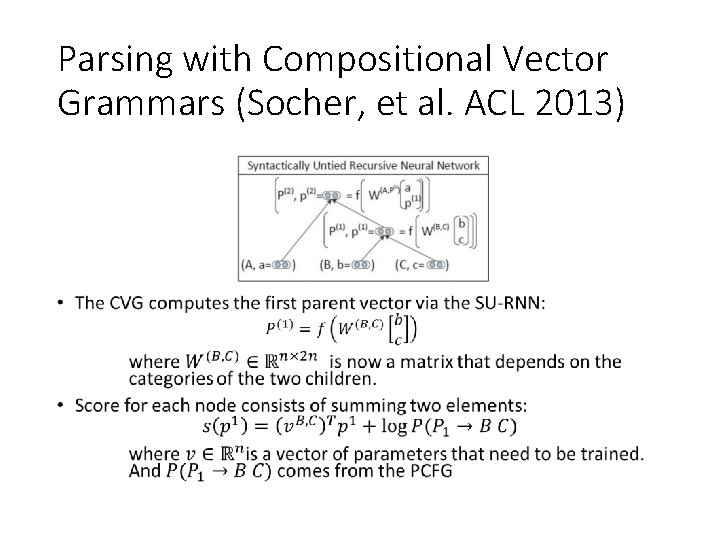

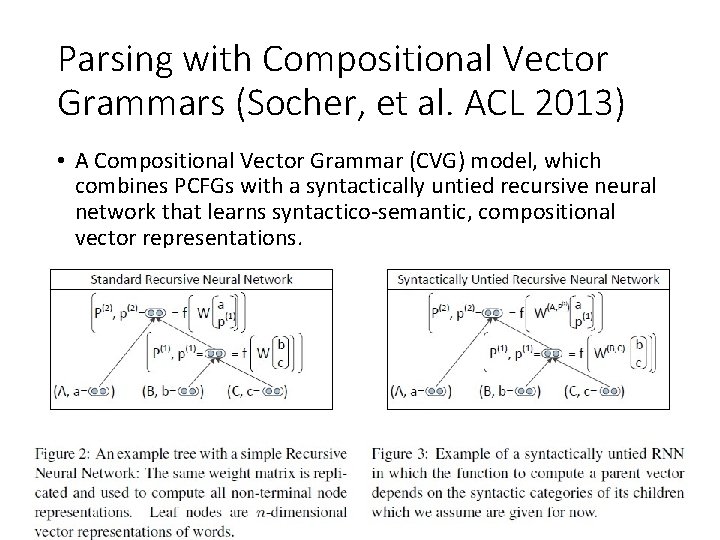
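The parsing example can be sketched as a greedy loop: score every adjacent pair of nodes, merge the highest-scoring pair into a new node, and repeat until one node spans the sentence. This greedy variant, the random parameters, and the word vectors are assumptions for illustration; the tree is returned as nested tuples together with the sum of merge scores.

```python
import numpy as np

def compose(c1, c2, W, b, u):
    """Parent vector p = tanh(W [c1; c2] + b) and its score u^T p."""
    p = np.tanh(W @ np.concatenate([c1, c2]) + b)
    return p, float(u @ p)

def greedy_parse(words, vecs, W, b, u):
    """Repeatedly merge the adjacent pair whose merged node scores highest."""
    nodes = list(zip(words, vecs))  # (subtree, vector) pairs
    total = 0.0
    while len(nodes) > 1:
        best = None
        for i in range(len(nodes) - 1):
            p, s = compose(nodes[i][1], nodes[i + 1][1], W, b, u)
            if best is None or s > best[0]:
                best = (s, i, p)
        s, i, p = best
        nodes[i:i + 2] = [((nodes[i][0], nodes[i + 1][0]), p)]
        total += s
    return nodes[0][0], total

def leaves(tree):
    """Words of a nested-tuple tree, left to right."""
    return [tree] if isinstance(tree, str) else leaves(tree[0]) + leaves(tree[1])

rng = np.random.default_rng(0)
d = 4
W = rng.normal(scale=0.5, size=(d, 2 * d))
b = np.zeros(d)
u = rng.normal(size=d)
words = ["the", "cat", "sat", "down"]
vecs = [rng.normal(size=d) for _ in words]
tree, score = greedy_parse(words, vecs, W, b, u)
print(tree, score)
```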

Parsing with Compositional Vector Grammars (Socher et al., ACL 2013) • A Compositional Vector Grammar (CVG) model, which combines PCFGs with a syntactically untied recursive neural network that learns syntactico-semantic, compositional vector representations.

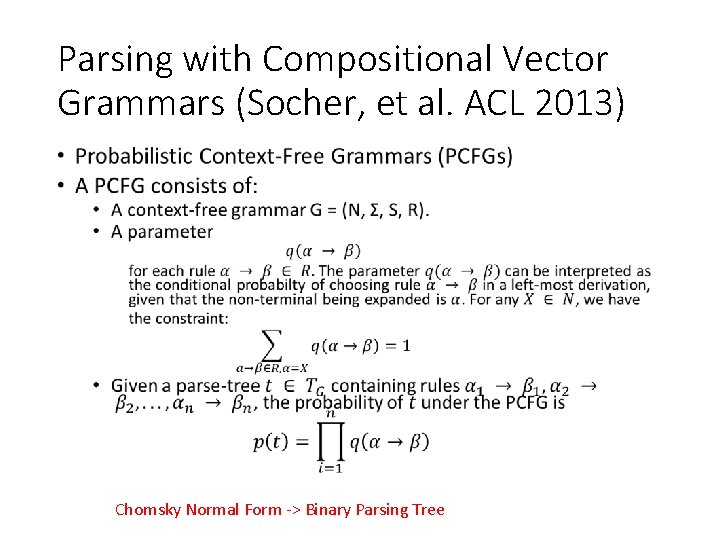

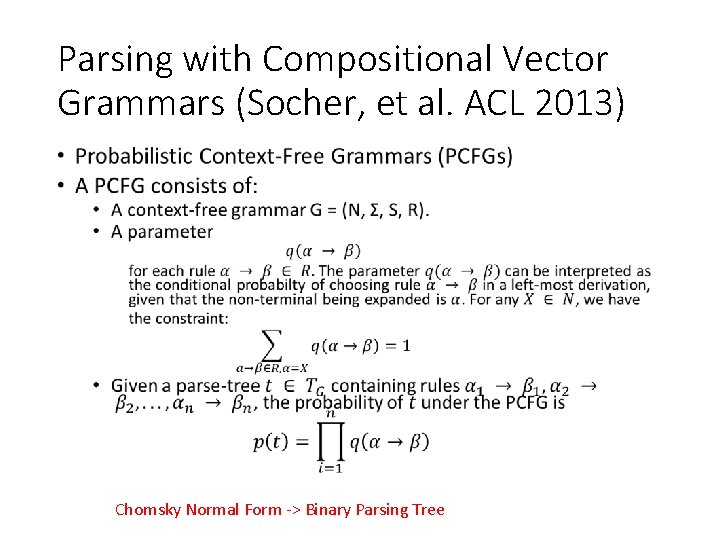
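A sketch of one node of the syntactically untied RNN: the composition matrix W and scoring vector v are selected by the syntactic categories (B, C) of the two children, giving p = tanh(W^(B,C) [b; c]) and score v^(B,C)^T p + log P(parent -> B C), so the PCFG rule probability is added to the neural score. The categories, rule probabilities, dimensions, and random parameters are hypothetical, and the bias term is omitted for brevity.

```python
import math
import numpy as np

rng = np.random.default_rng(0)
d = 3
# One (W, v) pair per child-category pair (B, C): the "syntactically untied" part.
params = {
    ("DT", "NN"): (rng.normal(scale=0.5, size=(d, 2 * d)), rng.normal(size=d)),
    ("NP", "VP"): (rng.normal(scale=0.5, size=(d, 2 * d)), rng.normal(size=d)),
}
# Hypothetical PCFG rule log-probabilities, log P(parent -> B C).
pcfg_logprob = {("NP", "DT", "NN"): math.log(0.5), ("S", "NP", "VP"): math.log(0.9)}

def su_rnn_node(b_vec, c_vec, B, C, parent):
    """Parent vector tanh(W^(B,C) [b; c]) and score v^(B,C) . p + log P(parent -> B C)."""
    W, v = params[(B, C)]
    p = np.tanh(W @ np.concatenate([b_vec, c_vec]))
    return p, float(v @ p) + pcfg_logprob[(parent, B, C)]

the, dog = rng.normal(size=d), rng.normal(size=d)
np_vec, np_score = su_rnn_node(the, dog, "DT", "NN", "NP")
print(np_vec, np_score)
```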

Parsing with Compositional Vector Grammars (Socher et al., ACL 2013) • The grammar is in Chomsky Normal Form, so every parse tree is binary

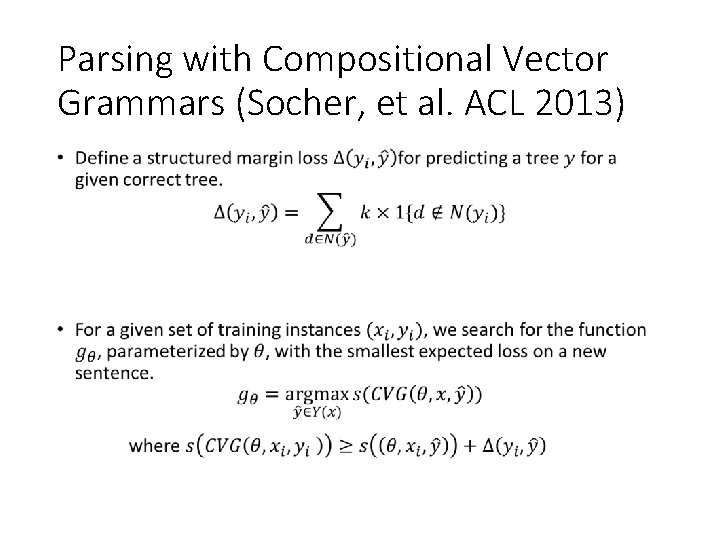

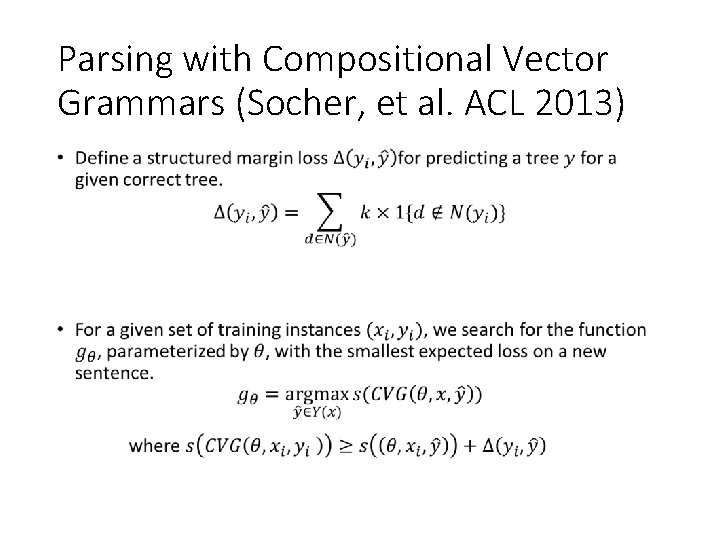

Parsing with Compositional Vector Grammars (Socher et al., ACL 2013)

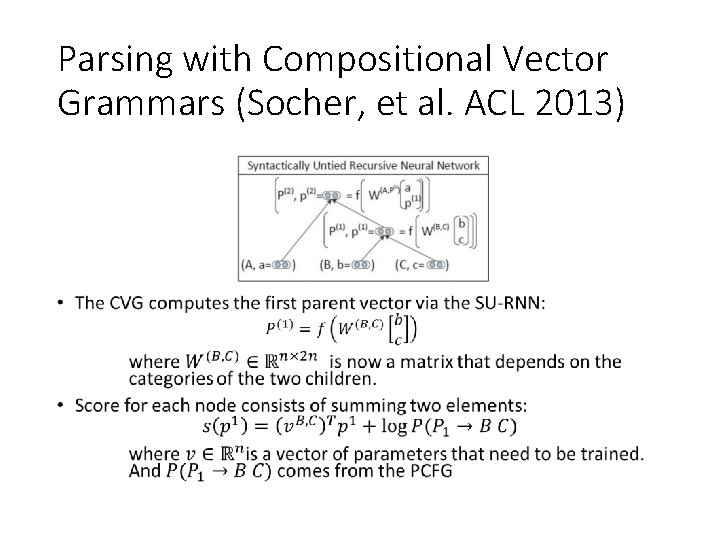

Parsing with Compositional Vector Grammars (Socher et al., ACL 2013)

Parsing with CVGs
• Bottom-up beam search keeping a k-best list at every cell of the chart
• The CVG improves the PCFG of the Stanford Parser by 3.8% to obtain an F1 score of 90.4%
• As a reranker
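The beam search can be sketched as a CKY-style chart over spans: each cell (i, j) keeps only its k best (score, tree) entries, built by combining entries from every split point, and a tree's score is the sum of its merge scores. The `merge_score` function here is a hypothetical stand-in (it simply prefers right-branching trees); in a CVG it would be the SU-RNN node score.

```python
import heapq
from itertools import product

def leaves(tree):
    """Words of a nested-tuple tree, left to right."""
    return [tree] if isinstance(tree, str) else leaves(tree[0]) + leaves(tree[1])

def beam_cky(words, merge_score, k=3):
    """Return the k best (score, tree) pairs for the whole sentence."""
    n = len(words)
    chart = {(i, i + 1): [(0.0, w)] for i, w in enumerate(words)}
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            candidates = []
            for split in range(i + 1, j):
                for (ls, lt), (rs, rt) in product(chart[(i, split)], chart[(split, j)]):
                    candidates.append((ls + rs + merge_score(lt, rt), (lt, rt)))
            # Keep only the k best entries for this cell (the beam).
            chart[(i, j)] = heapq.nlargest(k, candidates, key=lambda c: c[0])
    return chart[(0, n)]

def prefer_right_branching(left, right):
    """Hypothetical scorer: penalize large left children."""
    return -float(len(leaves(left)))

for score, tree in beam_cky(["a", "b", "c"], prefer_right_branching, k=2):
    print(score, tree)
```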

Q&A Thanks!