
Deep Learning for Program Repair
Aditya Kanade, Indian Institute of Science
Dagstuhl Seminar on Automated Program Repair, January 2017

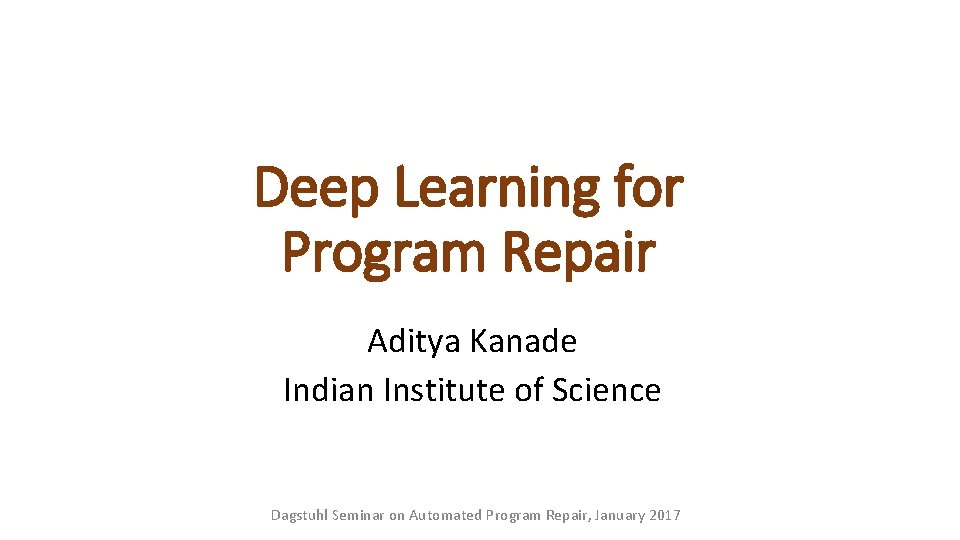

A Probabilistic Perspective on Program Repair
• Specification: a set of examples $\{(x, y) \mid x \text{ is a faulty program and } y \text{ is its fixed version}\}$
• Represent the faulty and fixed programs as sequences of tokens $x = x_1, x_2, \ldots, x_n$ and $y = y_1, y_2, \ldots, y_k$
• Learn a conditional probability distribution $P(Y = y \mid X = x)$
• Repair procedure: to fix an unseen program $x$, generate $y = \arg\max_{y'} P(Y = y' \mid X = x)$
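Maximizing over every possible output sequence $y'$ is intractable, so sequence-to-sequence models factor the distribution autoregressively, $P(y \mid x) = \prod_{t=1}^{k} P(y_t \mid y_1, \ldots, y_{t-1}, x)$, and approximate the argmax during decoding. The sketch below shows the simplest approximation, greedy decoding. It assumes a hypothetical model interface `next_token_probs(x, prefix)` returning a token-to-probability dict and a hypothetical `<eos>` token; beam search is a common, stronger alternative, and nothing here is taken from the DeepFix implementation.

```python
from typing import Callable, Dict, List, Sequence

EOS = "<eos>"  # hypothetical end-of-sequence token

def greedy_decode(
    x: Sequence[str],
    next_token_probs: Callable[[Sequence[str], List[str]], Dict[str, float]],
    max_len: int = 100,
) -> List[str]:
    """Approximate argmax_{y'} P(y' | x) by taking the most probable token at each step."""
    y: List[str] = []
    for _ in range(max_len):
        probs = next_token_probs(x, y)      # distribution over the next output token
        token = max(probs, key=probs.get)   # locally best choice (not globally optimal)
        if token == EOS:
            break
        y.append(token)
    return y

# Toy stand-in for a trained model: it has "learned" to add a missing ';'.
def toy_model(x: Sequence[str], prefix: List[str]) -> Dict[str, float]:
    target = ["x", "=", "1", ";", EOS]
    return {target[len(prefix)]: 0.9, "?": 0.1}

print(greedy_decode(["x", "=", "1"], toy_model))
```

Greedy decoding commits to one token per step, so it can miss the sequence with the highest overall probability; that trade-off is why the argmax is usually only approximated.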

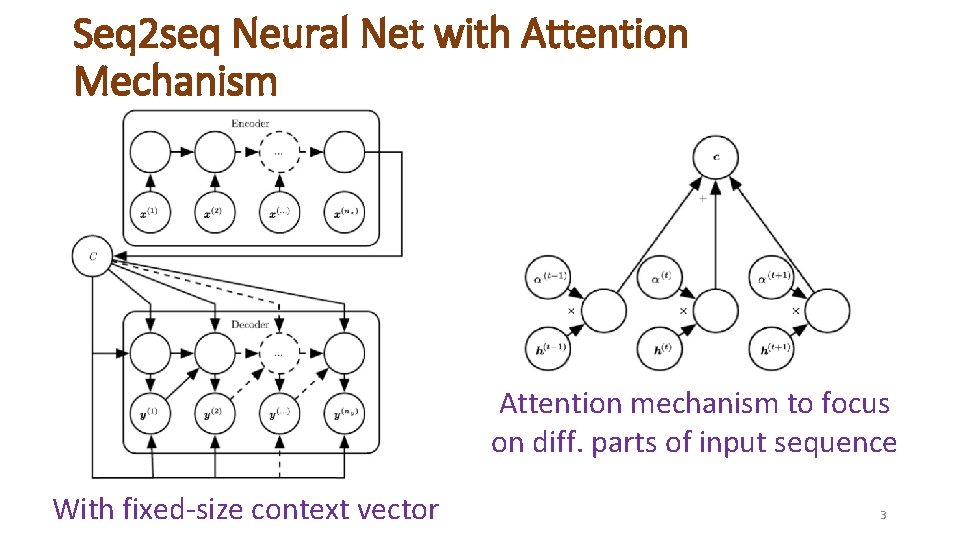

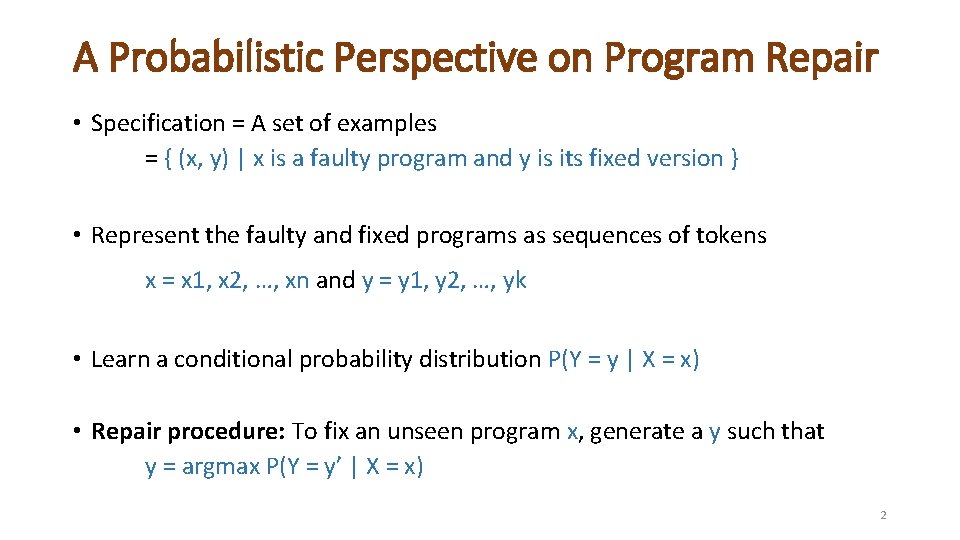

Seq2seq Neural Net with Attention Mechanism
• Attention mechanism to focus on different parts of the input sequence
• Plain seq2seq: the input is summarized in a fixed-size context vector
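Instead of compressing the whole input into one fixed-size vector, attention recomputes a weighted summary of all encoder states at every decoder step. The sketch below uses plain dot-product scoring for brevity; that scoring function is an assumption (additive, Bahdanau-style scoring is another common choice), and the slide does not specify which one DeepFix uses. The names `attend`, `softmax`, and `dot` are illustrative.

```python
import math
from typing import List, Tuple

Vector = List[float]

def softmax(scores: List[float]) -> List[float]:
    m = max(scores)                            # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a: Vector, b: Vector) -> float:
    return sum(ai * bi for ai, bi in zip(a, b))

def attend(encoder_states: List[Vector], decoder_state: Vector) -> Tuple[List[float], Vector]:
    """Dot-product attention: weights over input positions plus the resulting context vector."""
    scores = [dot(h, decoder_state) for h in encoder_states]   # relevance of each input token
    weights = softmax(scores)                                   # non-negative, sums to 1
    dim = len(encoder_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context

weights, context = attend([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [2.0, 0.0])
```

In this toy call the first and third input states align with the decoder state, so they receive equal, larger weights than the second; the attention weights visualized on the DeepFix example slide are weights of this kind.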

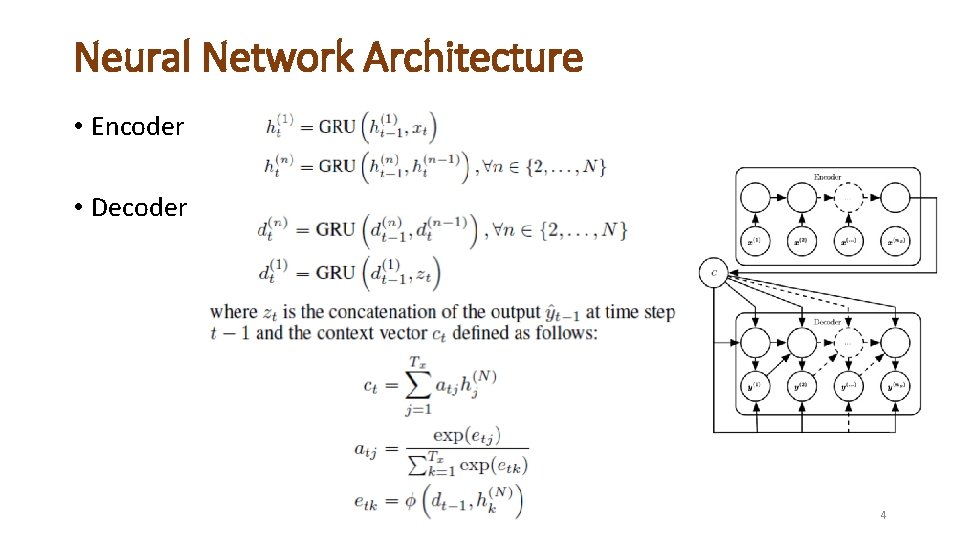

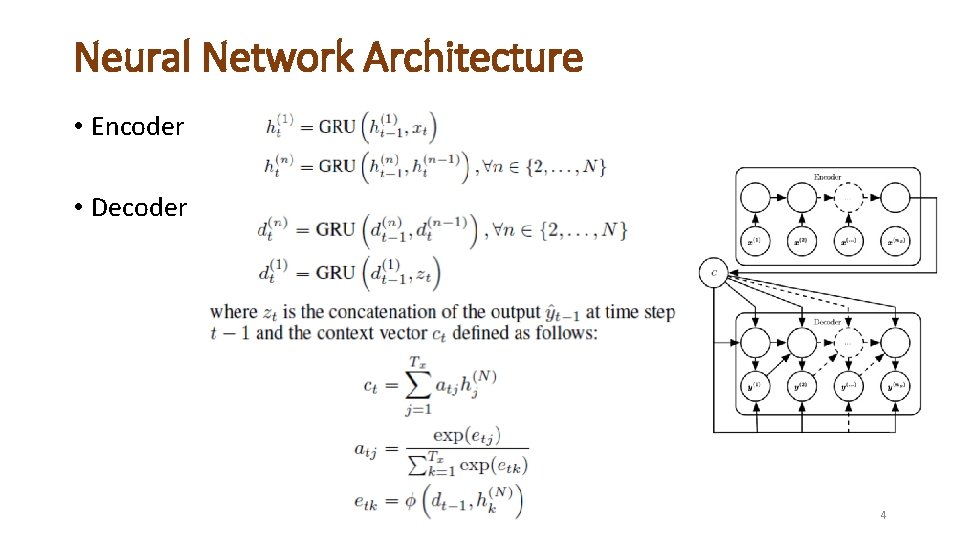

Neural Network Architecture
• Encoder
• Decoder

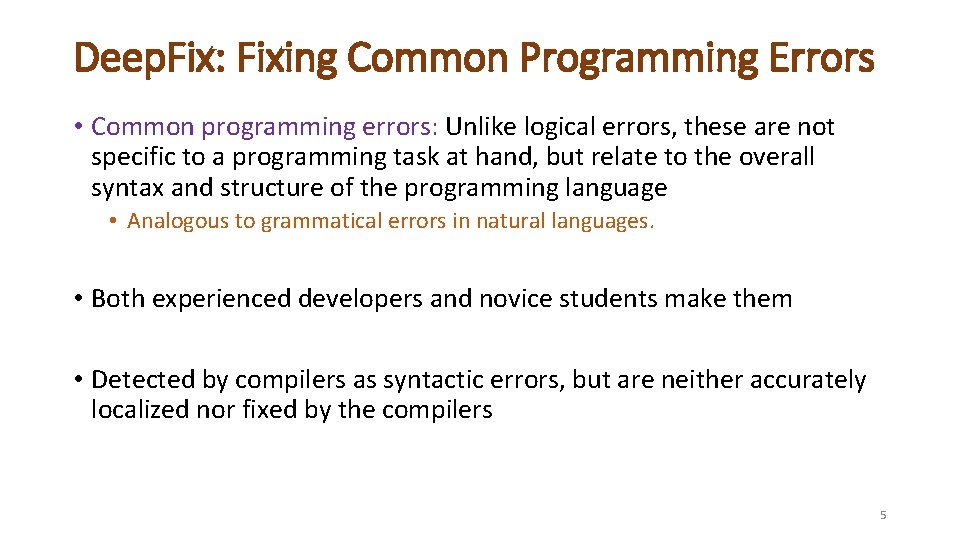

DeepFix: Fixing Common Programming Errors
• Common programming errors: unlike logical errors, these are not specific to the programming task at hand, but relate to the overall syntax and structure of the programming language
• Analogous to grammatical errors in natural languages
• Both experienced developers and novice students make them
• Compilers detect them as syntactic errors, but neither localize them accurately nor fix them
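To make this error class concrete, the sketch below injects one such error (a deleted delimiter, an extraneous symbol, or a swapped adjacent token pair) into a correct token sequence, yielding a (faulty, fixed) pair of the form used in the probabilistic formulation. The mutation operators, the `DELIMITERS` set, and the name `seed_error` are hypothetical illustrations; they are not DeepFix's actual data-generation procedure.

```python
import random
from typing import List, Optional, Tuple

DELIMITERS = {";", "(", ")", "{", "}", ","}   # assumed set of delimiter tokens

def seed_error(tokens: List[str], rng: random.Random) -> Optional[Tuple[List[str], List[str]]]:
    """Return (faulty, fixed) with one synthetic error, or None if the chosen operator cannot apply."""
    kind = rng.choice(["delete_delimiter", "insert_extraneous", "swap_adjacent"])
    faulty = list(tokens)
    if kind == "delete_delimiter":
        positions = [i for i, t in enumerate(tokens) if t in DELIMITERS]
        if not positions:
            return None
        del faulty[rng.choice(positions)]                 # e.g. a missing ';'
    elif kind == "insert_extraneous":
        faulty.insert(rng.randrange(len(tokens) + 1), rng.choice(sorted(DELIMITERS)))
    else:
        if len(tokens) < 2:
            return None
        i = rng.randrange(len(tokens) - 1)
        faulty[i], faulty[i + 1] = faulty[i + 1], faulty[i]  # swapped symbols
    # Caveat: some mutations (e.g. an extra ';') may still compile; a real
    # generator would check the faulty version with a compiler.
    return faulty, list(tokens)

pair = seed_error(["int", "x", "=", "0", ";"], random.Random(0))
```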

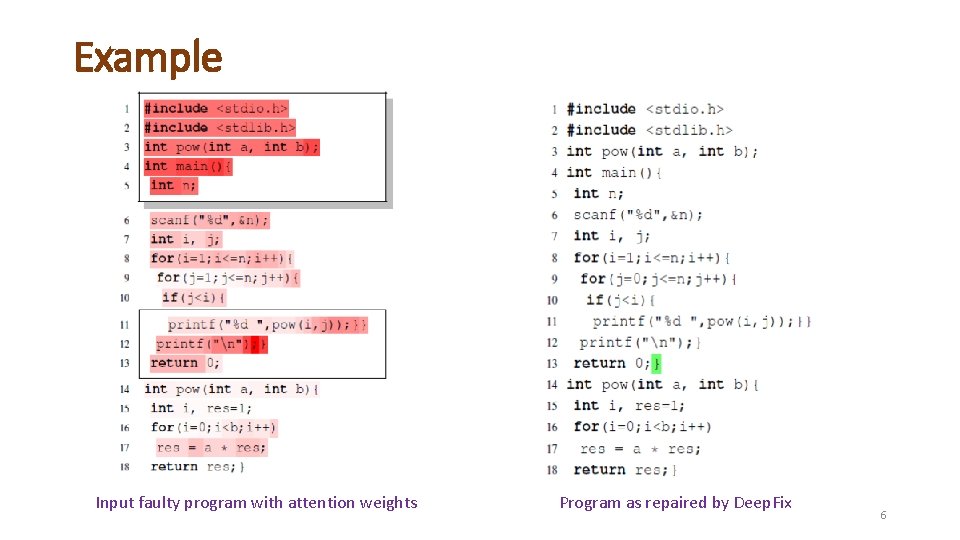

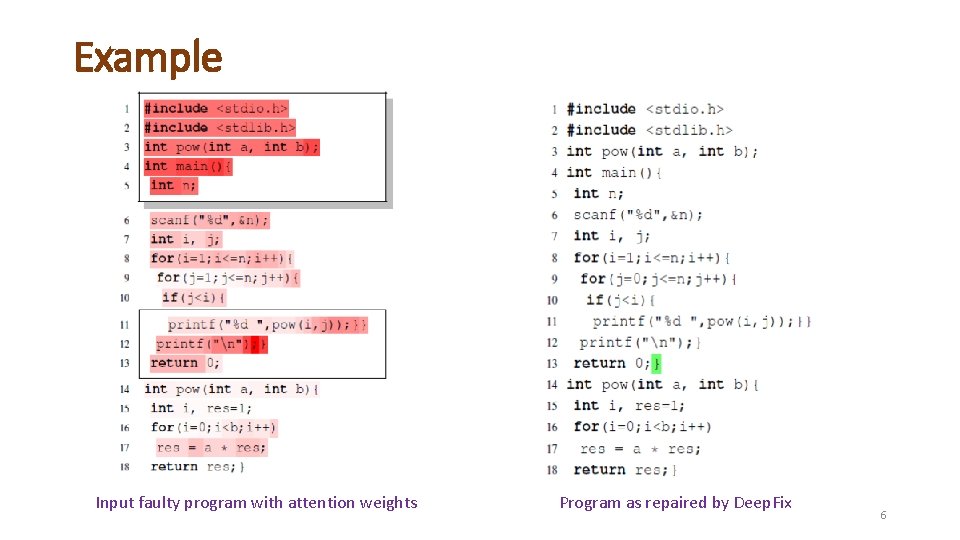

Example
• Input faulty program with attention weights
• Program as repaired by DeepFix

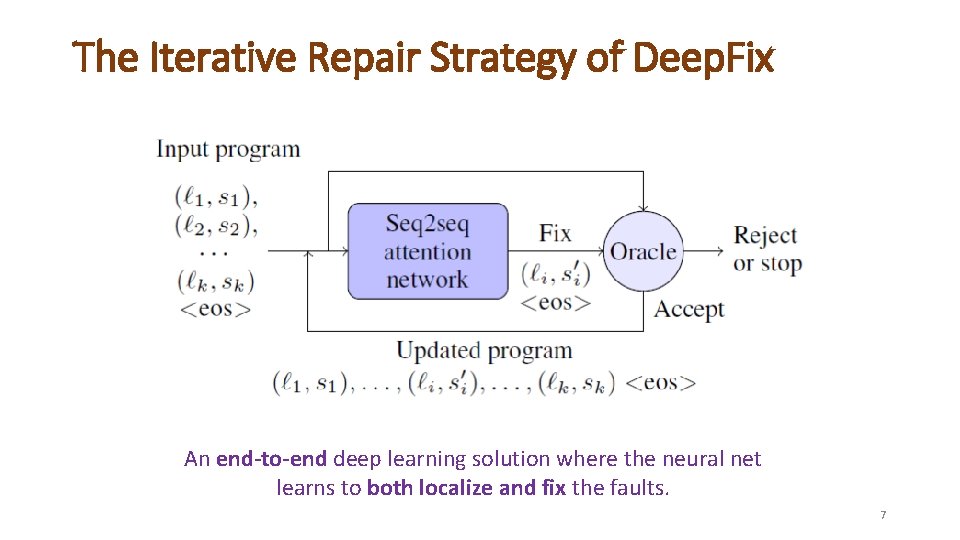

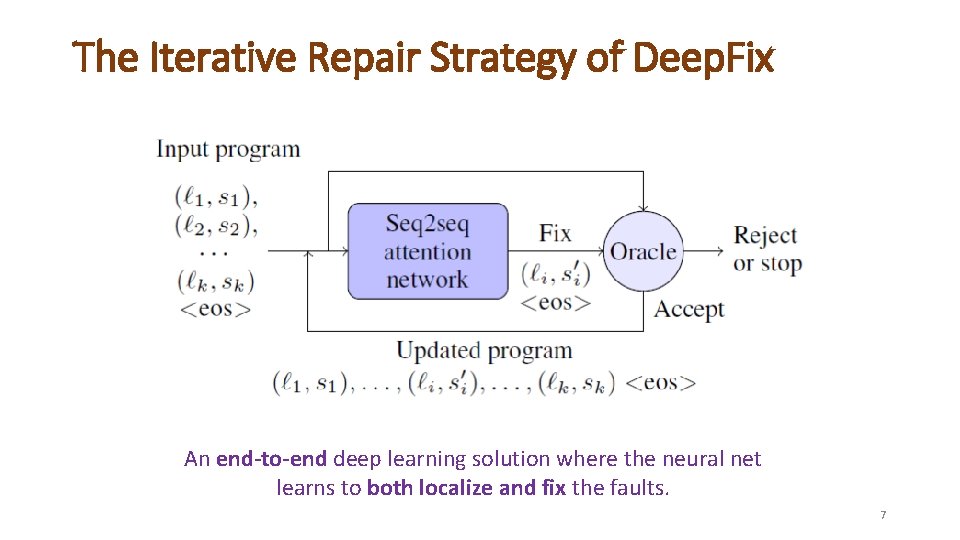

The Iterative Repair Strategy of DeepFix
An end-to-end deep learning solution in which the neural net learns both to localize and to fix the faults.
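One way to read "iterative" here: apply one predicted fix at a time, re-check the program, and repeat until it compiles. The sketch below assumes a hypothetical model call `predict_fix(program)` that returns a (line index, replacement line) pair and a hypothetical `count_errors(program)` that wraps a compiler. The acceptance rule (keep a fix only if the error count drops) and the cap of 5 iterations are assumptions, not details stated on this slide.

```python
from typing import Callable, List, Optional, Tuple

Program = List[str]        # one string per source line
Fix = Tuple[int, str]      # (0-based line index, replacement text)

def iterative_repair(
    program: Program,
    predict_fix: Callable[[Program], Optional[Fix]],
    count_errors: Callable[[Program], int],
    max_iters: int = 5,    # assumed cap on repair rounds
) -> Program:
    current = list(program)
    errors = count_errors(current)
    for _ in range(max_iters):
        if errors == 0:
            break                      # program compiles: done
        fix = predict_fix(current)     # model both localizes (line) and fixes (text)
        if fix is None:
            break
        line, text = fix
        candidate = current[:line] + [text] + current[line + 1:]
        new_errors = count_errors(candidate)
        if new_errors >= errors:
            break                      # reject fixes that do not reduce the error count
        current, errors = candidate, new_errors
    return current

# Toy stand-ins: an "error" is a line that does not end with ';', '{' or '}'.
def needs_semicolon(line: str) -> bool:
    return not line.rstrip().endswith((";", "{", "}"))

def toy_count_errors(p: Program) -> int:
    return sum(needs_semicolon(l) for l in p)

def toy_predict_fix(p: Program) -> Optional[Fix]:
    for i, l in enumerate(p):
        if needs_semicolon(l):
            return i, l + ";"
    return None

fixed = iterative_repair(["int main() {", "int x = 0", "x = x + 1", "return x;", "}"],
                         toy_predict_fix, toy_count_errors)
```

Fixing one location per round lets each later prediction see a program in which earlier errors are already gone, which is the intuition behind repairing iteratively rather than in a single pass.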

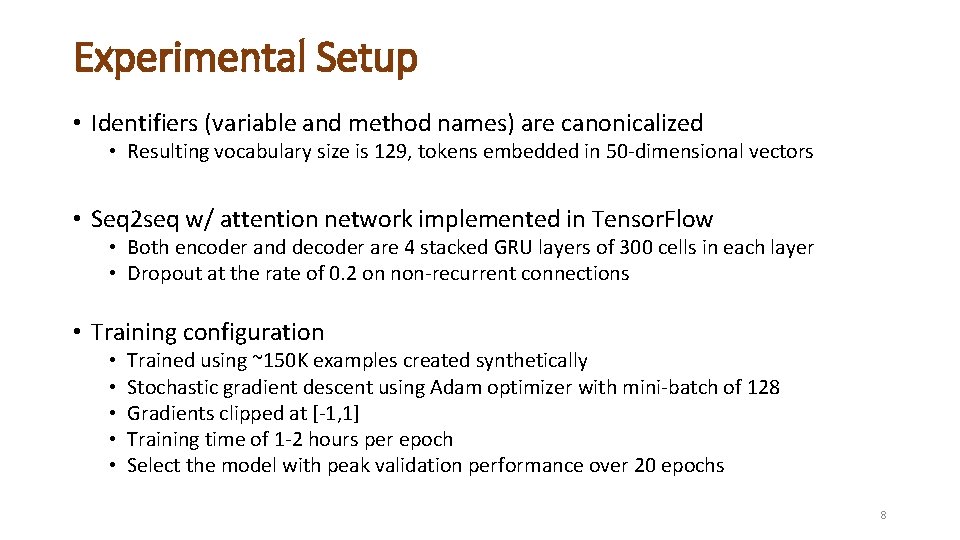

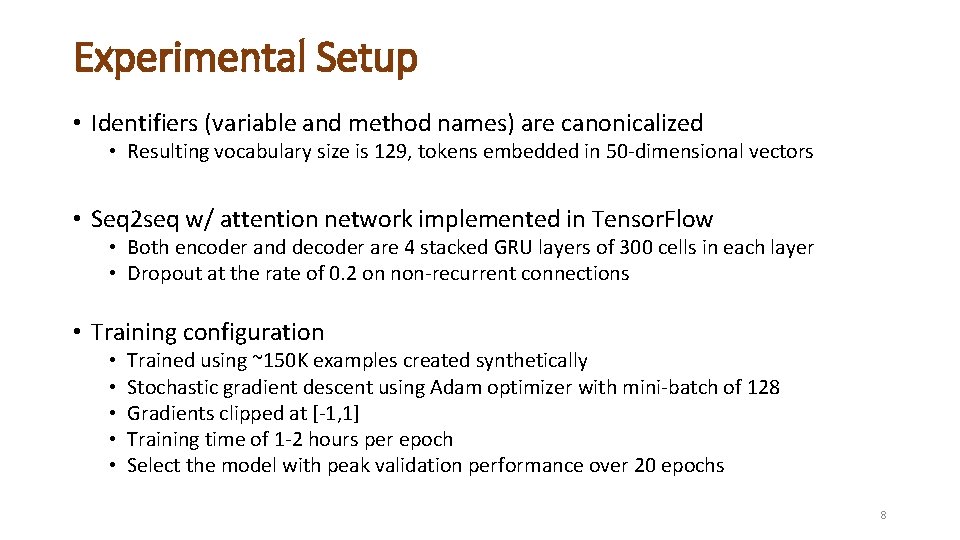

Experimental Setup
• Identifiers (variable and method names) are canonicalized
• Resulting vocabulary size is 129; tokens are embedded in 50-dimensional vectors
• Seq2seq network with attention, implemented in TensorFlow
• Both encoder and decoder are 4 stacked GRU layers with 300 cells in each layer
• Dropout at a rate of 0.2 on non-recurrent connections
• Training configuration:
  • Trained on ~150K synthetically created examples
  • Stochastic gradient descent using the Adam optimizer with mini-batches of 128
  • Gradients clipped to [-1, 1]
  • Training time of 1-2 hours per epoch
  • Select the model with peak validation performance over 20 epochs
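Canonicalizing identifiers keeps the vocabulary small by replacing each distinct variable or method name with a positional placeholder. A minimal sketch, assuming a crude regex tokenizer, a partial list of C keywords, and hypothetical placeholder names `ID_0`, `ID_1`, and so on; the actual DeepFix tokenizer and its treatment of literals and library functions are not described on this slide.

```python
import re
from typing import Dict, List, Tuple

# Subset of C keywords kept verbatim (assumed list, not exhaustive).
C_KEYWORDS = {"int", "char", "float", "double", "void", "long", "short", "unsigned",
              "const", "struct", "if", "else", "for", "while", "do", "return",
              "break", "continue", "sizeof"}

IDENT = re.compile(r"[A-Za-z_]\w*")
# Crude tokenizer: identifiers, integer literals, or any single non-space character.
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|\S")

def canonicalize(code: str) -> Tuple[List[str], Dict[str, str]]:
    """Replace each distinct identifier with ID_<k> in order of first appearance."""
    mapping: Dict[str, str] = {}
    out: List[str] = []
    for tok in TOKEN_RE.findall(code):
        if IDENT.fullmatch(tok) and tok not in C_KEYWORDS:
            if tok not in mapping:
                mapping[tok] = f"ID_{len(mapping)}"
            out.append(mapping[tok])
        else:
            out.append(tok)
    return out, mapping

tokens, names = canonicalize("int total = count + 1;")
```

Returning the mapping alongside the tokens makes it possible to translate placeholders in a predicted fix back into the program's original names. Note that this tokenizer splits multi-character operators such as `==` into single characters; a real C lexer would not.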

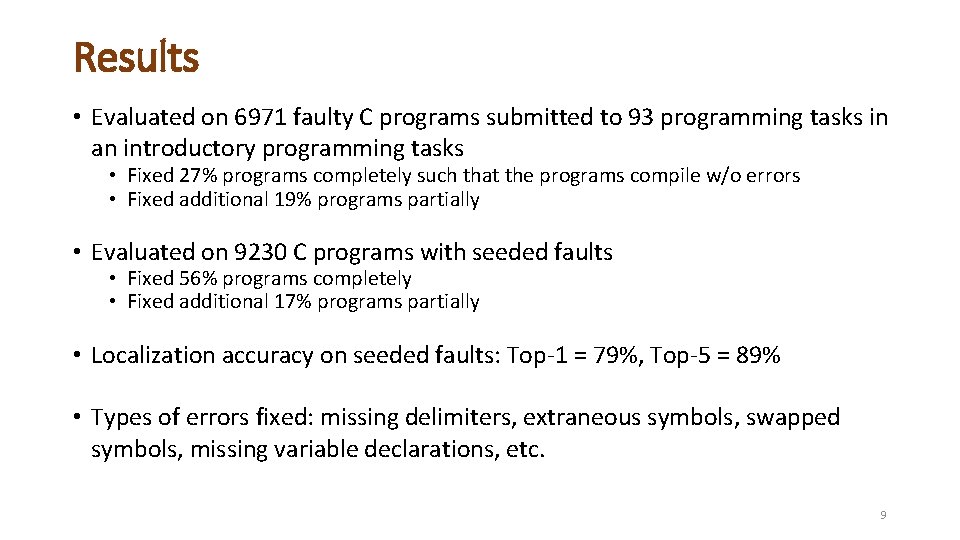

Results
• Evaluated on 6971 faulty C programs submitted to 93 programming tasks in an introductory programming course
  • Fixed 27% of the programs completely, such that they compile without errors
  • Fixed an additional 19% of the programs partially
• Evaluated on 9230 C programs with seeded faults
  • Fixed 56% of the programs completely
  • Fixed an additional 17% of the programs partially
  • Localization accuracy on seeded faults: Top-1 = 79%, Top-5 = 89%
• Types of errors fixed: missing delimiters, extraneous symbols, swapped symbols, missing variable declarations, etc.
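Top-k localization accuracy counts a seeded fault as localized when its true location appears among the k highest-ranked candidate locations. A sketch of that metric, assuming each prediction is a ranked list of line numbers; the slide does not say how DeepFix produces or ranks its candidate locations, and the data below is made up for illustration.

```python
from typing import Sequence

def top_k_accuracy(ranked: Sequence[Sequence[int]], truth: Sequence[int], k: int) -> float:
    """Fraction of programs whose true fault line is among the top-k ranked candidates."""
    if not truth or len(ranked) != len(truth):
        raise ValueError("need one non-empty ranking per program")
    hits = sum(1 for cands, t in zip(ranked, truth) if t in list(cands)[:k])
    return hits / len(truth)

# Hypothetical rankings for 4 programs and their true fault lines.
ranked = [[3, 5, 7], [4, 2, 6], [1, 8, 9], [7, 6, 5]]
truth = [3, 2, 9, 1]
top1 = top_k_accuracy(ranked, truth, 1)
top3 = top_k_accuracy(ranked, truth, 3)
```

Because the top-k set only grows with k, top-k accuracy can never decrease as k increases, consistent with Top-5 (89%) exceeding Top-1 (79%).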

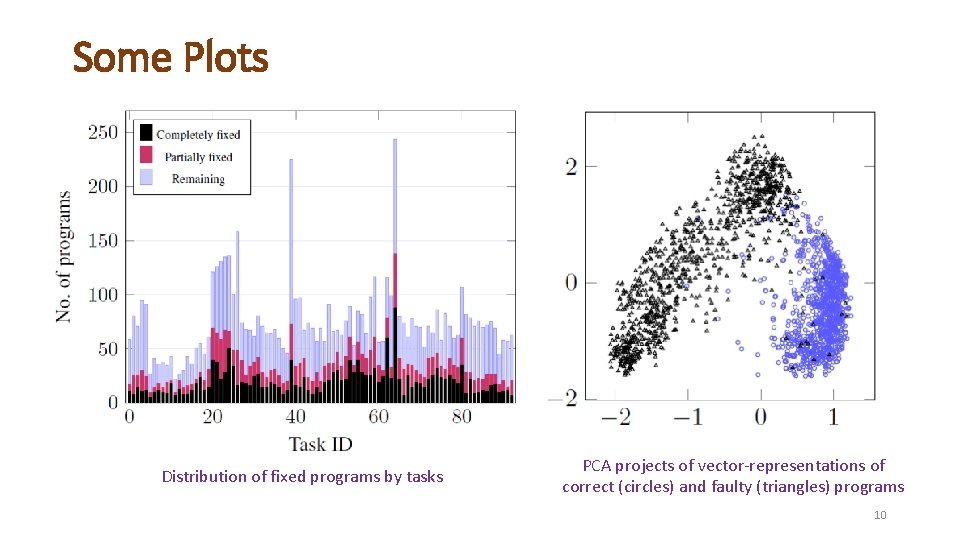

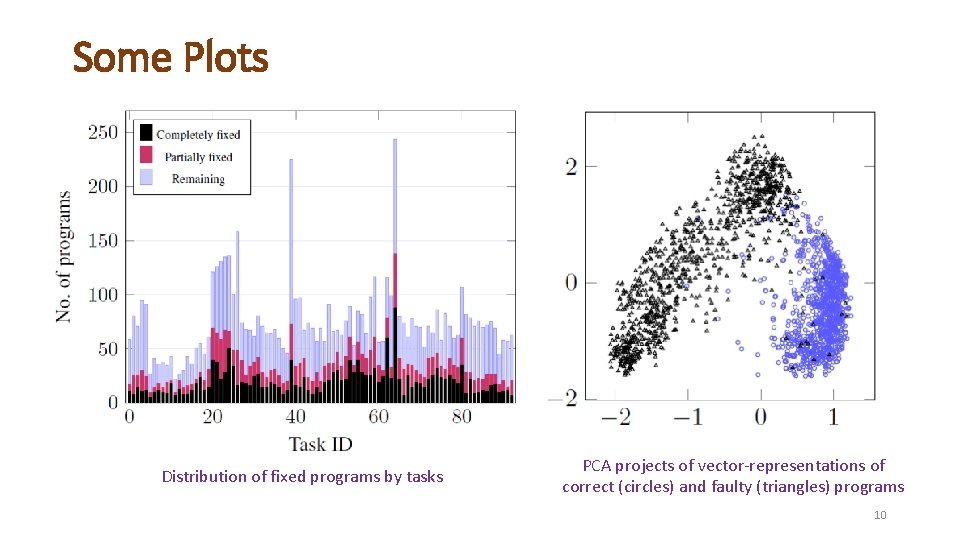

Some Plots
• Distribution of fixed programs by task
• PCA projections of vector representations of correct (circles) and faulty (triangles) programs

Lightweight Machine Learning

Statistical Correlation to Search for Likely Fixes
• Given a test suite and a potentially faulty location, how can we search for expressions that are likely to appear in the fixed version?
• Use symbolic execution to obtain the desired expression values
• Enumerate a set of expressions and their values on all executions
• Rank expressions by their statistical correlation with the desired values
• Used to synthesize repair hints in MintHint [ICSE'14]
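The ranking step can be sketched as: for each candidate expression, compare its values across executions with the desired values obtained from symbolic execution, then sort by correlation. Pearson correlation is used here as an assumed stand-in, since the slide does not name the statistic MintHint uses; the expressions and values in the example are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; a constant series is treated as uncorrelated (0.0)."""
    n = len(xs)
    if n == 0 or n != len(ys):
        raise ValueError("need equal-length, non-empty value sequences")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)

def rank_expressions(candidates: Dict[str, Sequence[float]],
                     desired: Sequence[float]) -> List[Tuple[str, float]]:
    """Sort candidate expressions by correlation with the desired values, best first."""
    scored = [(expr, pearson(vals, desired)) for expr, vals in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Desired values at the faulty location over 4 executions (hypothetical).
desired = [1, 4, 9, 16]
ranking = rank_expressions({"i*i": [1, 4, 9, 16], "i+1": [2, 3, 4, 5], "0": [0, 0, 0, 0]}, desired)
```

A high correlation does not prove an expression is the fix; it only makes it a likely candidate, which is why the output serves as a repair hint rather than a patch.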

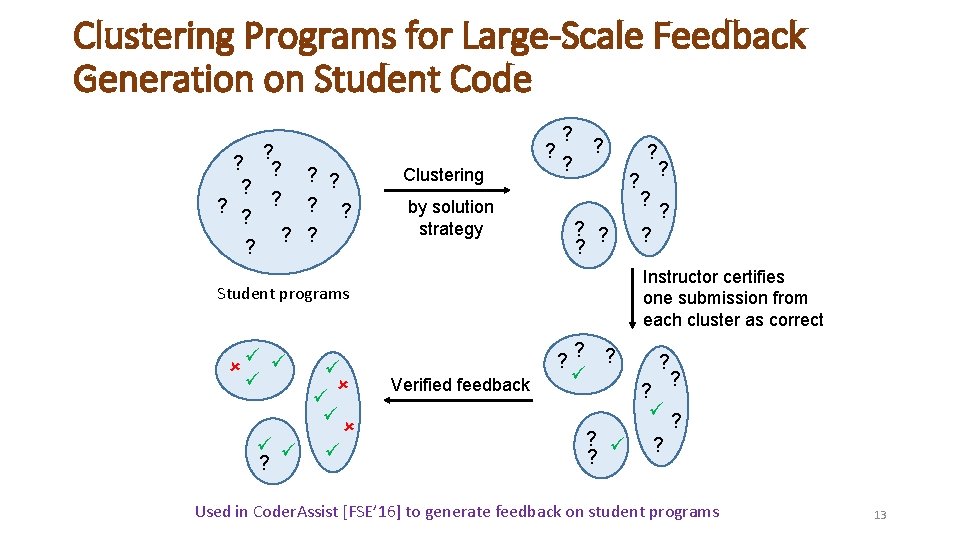

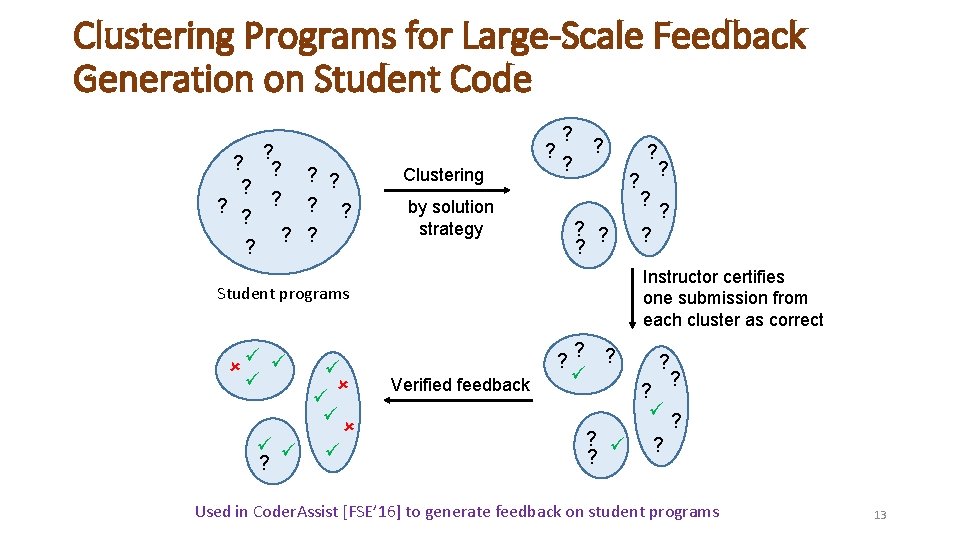

Clustering Programs for Large-Scale Feedback Generation on Student Code
• Figure: student programs → clustering by solution strategy → instructor certifies one submission from each cluster as correct → verified feedback
• Used in CoderAssist [FSE'16] to generate feedback on student programs
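The workflow in the figure can be sketched as: group submissions by a solution-strategy key, let the instructor certify one correct submission per cluster, and map every submission to its cluster's certified reference, against which feedback would then be generated. Here `strategy_of` and `certify` are hypothetical callbacks; CoderAssist's actual clustering features and its feedback generation are not described on this slide and are not shown.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, Optional

def assign_references(
    submissions: Dict[str, str],                    # submission id -> source code
    strategy_of: Callable[[str], Hashable],         # hypothetical strategy signature
    certify: Callable[[List[str]], Optional[str]],  # instructor picks one correct id (or None)
) -> Dict[str, Optional[str]]:
    """Map each submission id to the certified reference of its cluster."""
    clusters: Dict[Hashable, List[str]] = defaultdict(list)
    for sid in sorted(submissions):
        clusters[strategy_of(submissions[sid])].append(sid)
    reference: Dict[str, Optional[str]] = {}
    for members in clusters.values():
        ref = certify(members)   # one instructor review per cluster, not per submission
        for sid in members:
            reference[sid] = ref
    return reference

subs = {
    "a": "for (i = 0; i < n; i++) s += i;",
    "b": "return n + sum(n - 1);",
    "c": "for (j = 0; j < n; j++) t += j;",
}
refs = assign_references(subs, lambda code: "loop" if "for" in code else "recursion",
                         lambda members: members[0])
```

The instructor effort scales with the number of clusters rather than the number of submissions, which is what makes the approach suitable for large classes.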

Acknowledgements
• DeepFix: Fixing common C language errors by deep learning. Rahul Gupta, Soham Pal, Aditya Kanade, Shirish Shevade. AAAI'17
• Semi-supervised verified feedback generation. Shalini Kaleeswaran, Anirudh Santhiar, Aditya Kanade, Sumit Gulwani. FSE'16
• MintHint: Automated synthesis of repair hints. Shalini Kaleeswaran, Varun Tulsian, Aditya Kanade, Alessandro Orso. ICSE'14
• Some images taken from the Deep Learning book by Goodfellow, Bengio and Courville

Discussion Points
• Improving the performance of DeepFix, e.g., using a copying mechanism?
• Fixing more challenging programming errors?
• Handling larger programs? Learning better dependences?
• General-purpose deep nets versus special-purpose deep nets designed for program text?
• How to obtain high-quality training data? Mutation strategies?