Deep Learning Adaptive Learning Rate Algorithms

- Slides: 18

Deep Learning Adaptive Learning Rate Algorithms
Upsolver: Implementing deep learning in Ad-tech
Yoni Iny, CTO
Ori Rafael, CEO

About Upsolver
• Founded in Feb 2014
• Post seed
• Offices in TLV
• On-boarding first customers
• Finished 3 technology validation projects
• 1st to graduate from Yahoo’s ad-tech innovation program

THE TEAM
• Ori Rafael, CEO & Co-Founder: 7 years technology management at 8200; 3 years of B2B sales and biz dev; B.A. Computer Science, MBA
• Yoni Iny, CTO & Co-Founder: 9 years at 8200; CTO of a major data science group; B.A. Mathematics & Computer Science

R&D (8200 dev force)
• Shani Elharrar, VP R&D: 5 years at 8200; coding since age 13
• Omer Kushnir, Full stack developer: 12 years at 8200
• Jason Fine, Full stack developer: 5 years at 8200; coding since age 13

Data Science (academia and industry experience)
• Bar Vinograd, Senior Data Scientist: Chief data science at Adallom; 5 years at 8200; B.A. Mathematics
• Eyal Gruss, Deep learning expert: PhD in theoretical physics; Principal Data Scientist at RSA

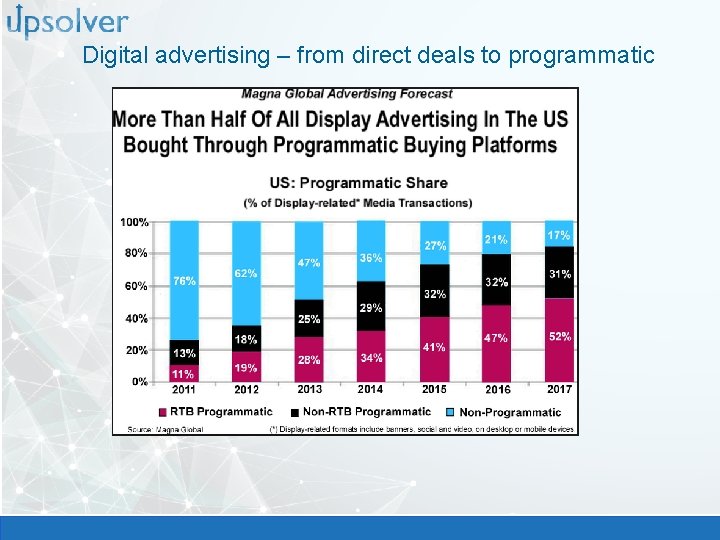

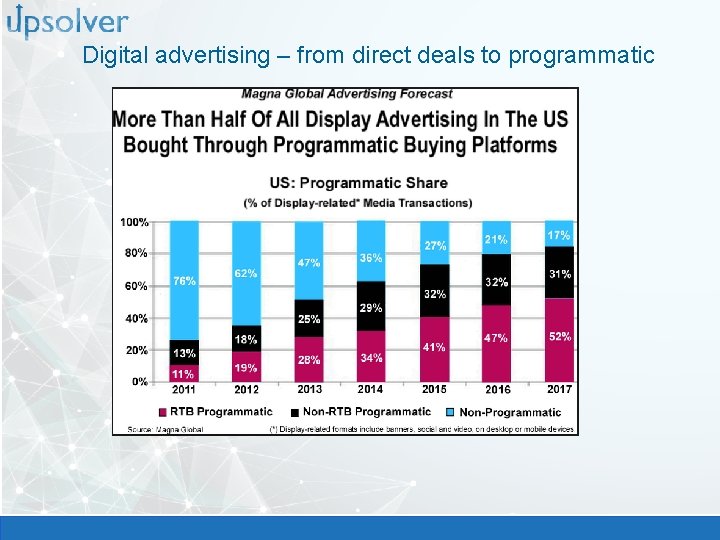

Digital advertising – from direct deals to programmatic

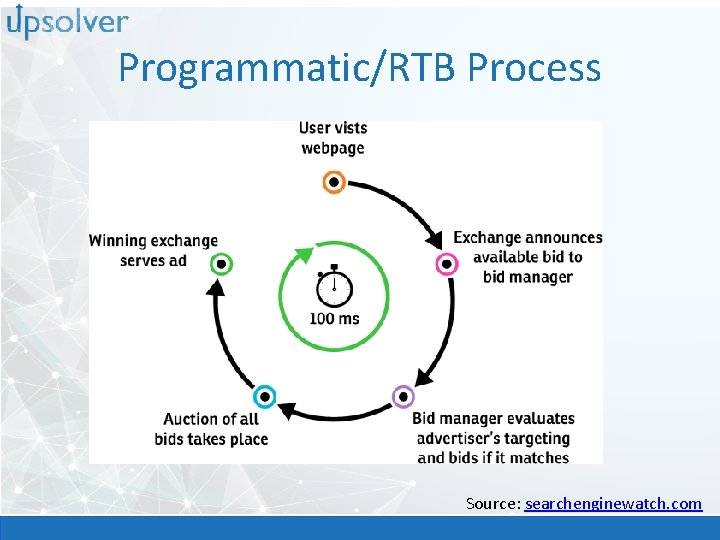

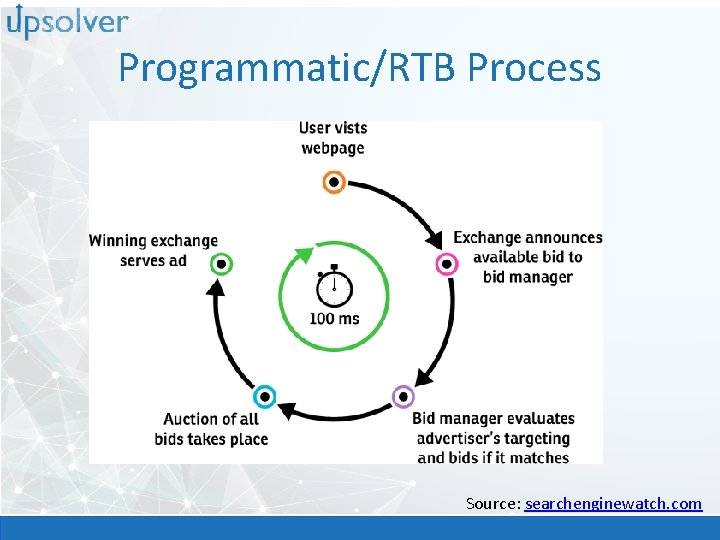

Programmatic/RTB Process
Source: searchenginewatch.com

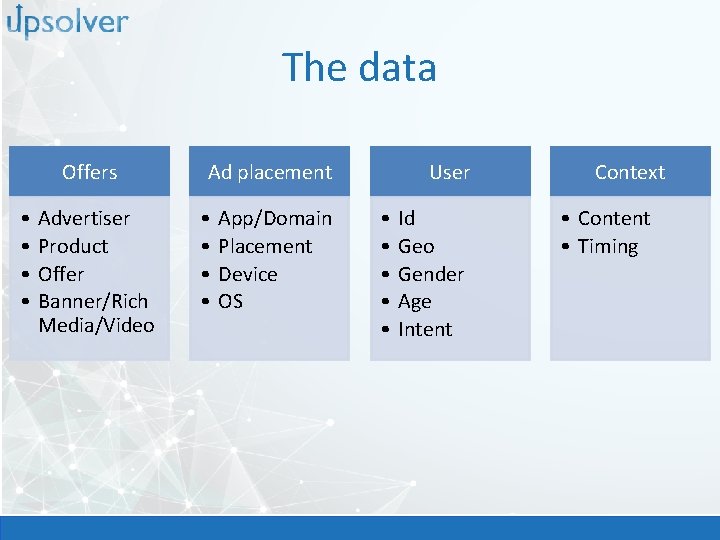

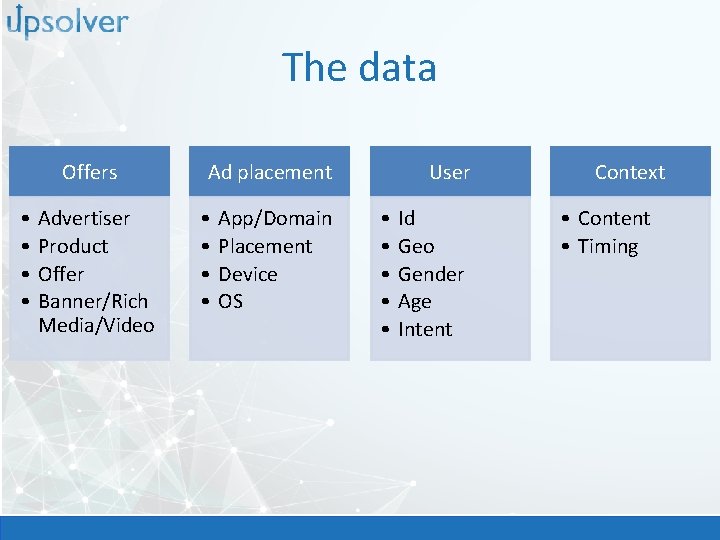

The data
• Offers: Advertiser, Product, Offer, Banner/Rich Media/Video
• Ad placement: App/Domain, Placement, Device, OS
• User: Id, Geo, Gender, Age, Intent
• Context: Content, Timing

The “standard” approach: feature engineering + logistic regression

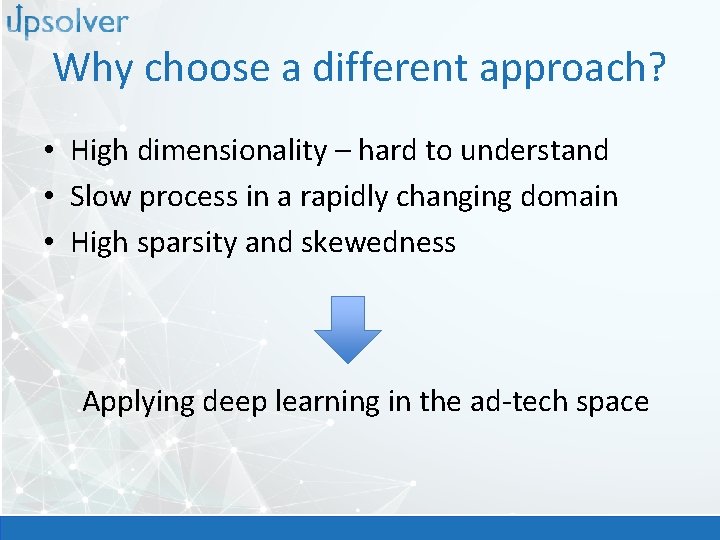

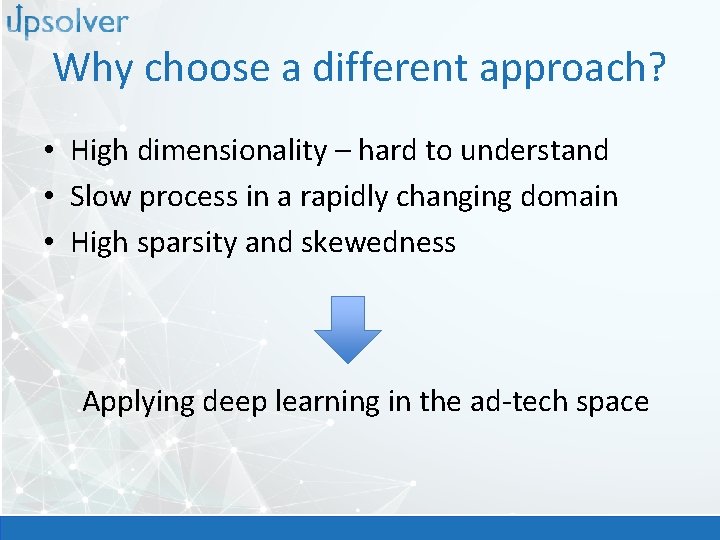

Why choose a different approach?
• High dimensionality – hard to understand
• Slow process in a rapidly changing domain
• High sparsity and skewness

Applying deep learning in the ad-tech space

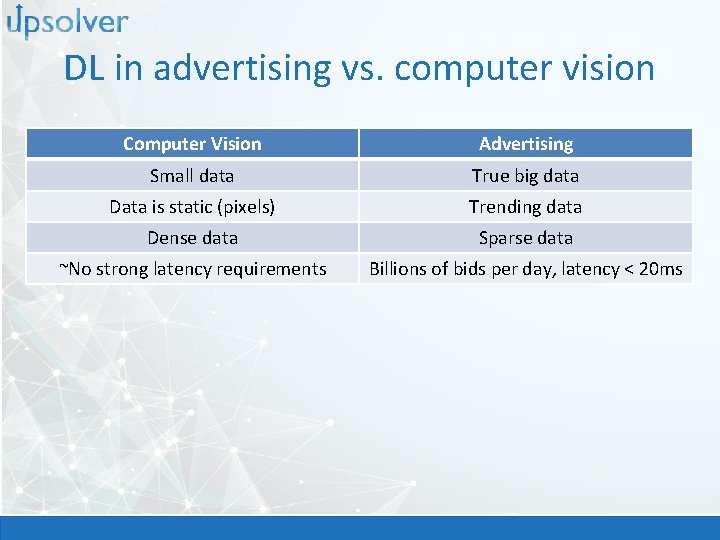

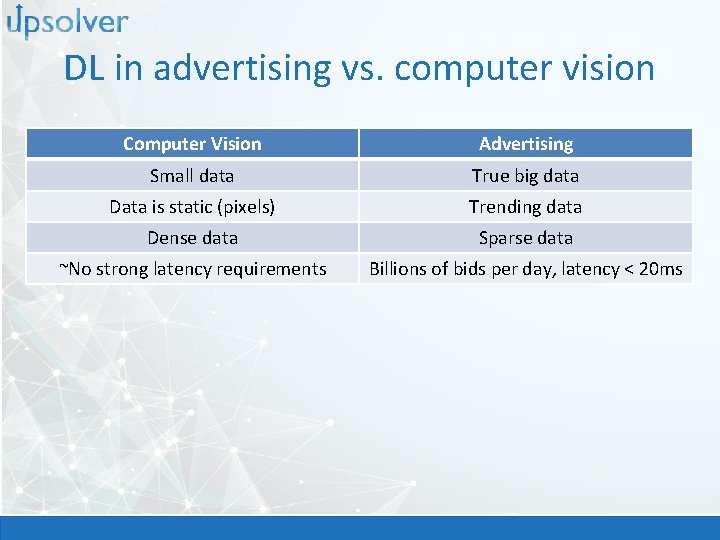

DL in advertising vs. computer vision

| Computer Vision | Advertising |
| --- | --- |
| Small data | True big data |
| Data is static (pixels) | Trending data |
| Dense data | Sparse data |
| ~No strong latency requirements | Billions of bids per day, latency < 20 ms |

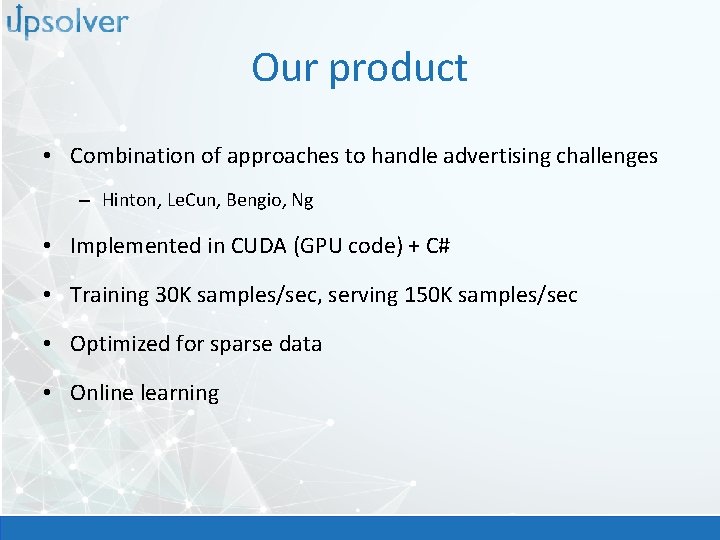

Our product
• Combination of approaches to handle advertising challenges
  – Hinton, LeCun, Bengio, Ng
• Implemented in CUDA (GPU code) + C#
• Training 30K samples/sec, serving 150K samples/sec
• Optimized for sparse data
• Online learning

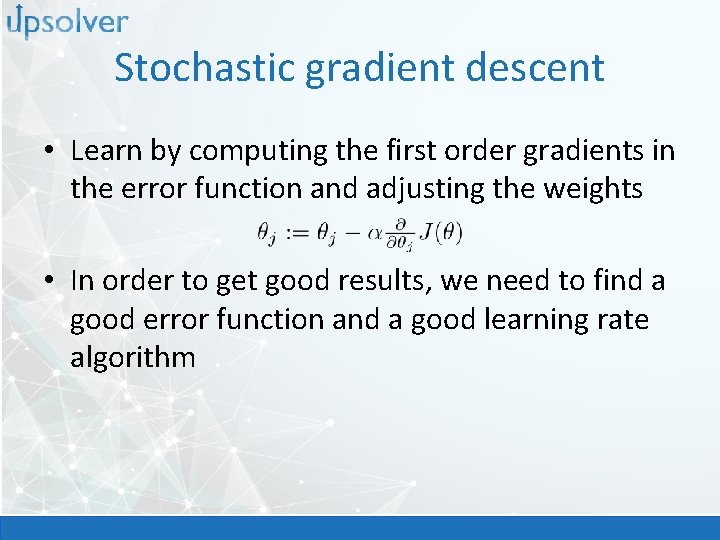

Stochastic gradient descent
• Learn by computing the first-order gradients of the error function and adjusting the weights
• To get good results, we need a good error function and a good learning rate algorithm
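As a rough illustration of the update described above, here is a minimal sketch of plain SGD on a logistic (log loss) error function with sparse inputs. The function name `sgd_logistic`, the dict-based sparse format, and the toy data are assumptions made for this example, not the Upsolver implementation.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sgd_logistic(samples, n_features, alpha=0.1, epochs=50, seed=0):
    """Plain SGD on log loss (hypothetical helper).

    samples: list of (features, label) pairs, where features is a dict
    mapping feature index -> value (a simple sparse representation).
    """
    rng = random.Random(seed)
    w = [0.0] * n_features
    samples = list(samples)
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            p = sigmoid(sum(w[i] * v for i, v in x.items()))
            # Gradient of log loss w.r.t. w[i] is (p - y) * x[i],
            # so only the non-zero features need an update.
            for i, v in x.items():
                w[i] -= alpha * (p - y) * v
    return w

# Toy data: feature 0 appears only with label 1, feature 1 only with label 0.
data = [({0: 1.0, 2: 1.0}, 1), ({1: 1.0, 2: 1.0}, 0)]
w = sgd_logistic(data, n_features=3)
```

Here `alpha` is a fixed learning rate; the slides that follow are about replacing that constant with something smarter.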

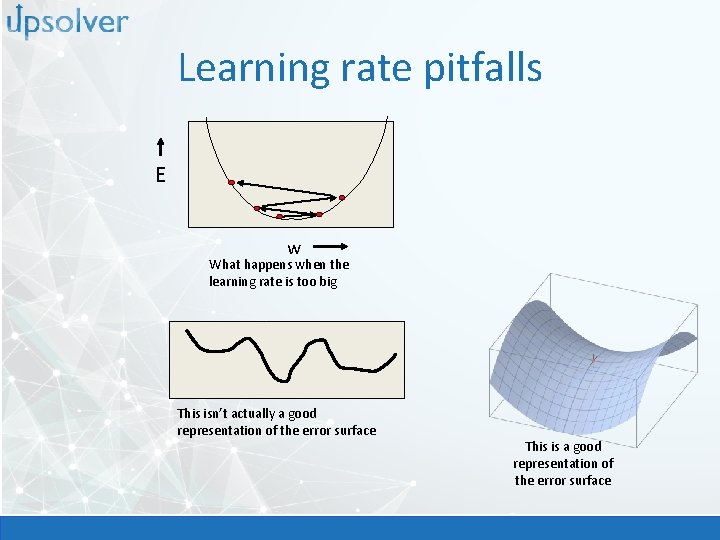

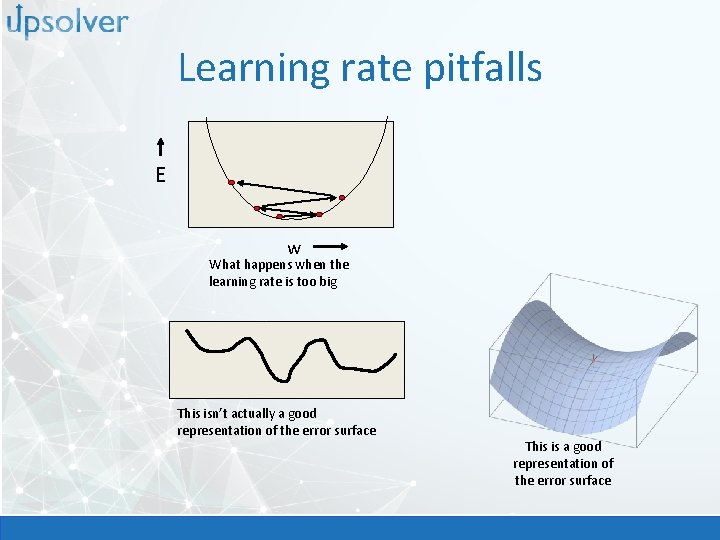

Learning rate pitfalls
[Figures: error E plotted against weight w]
• What happens when the learning rate is too big
• This isn’t actually a good representation of the error surface
• This is a good representation of the error surface
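The "too big" case can be reproduced on a one-dimensional toy error surface. The sketch below assumes E(w) = w², chosen only because its gradient 2w makes the arithmetic easy to follow; `descend` is a hypothetical helper.

```python
def descend(alpha, w=1.0, steps=20):
    """Gradient descent on E(w) = w**2, whose gradient is 2*w."""
    for _ in range(steps):
        w -= alpha * 2.0 * w
    return w

# alpha = 0.1: each step multiplies w by (1 - 0.2) = 0.8, so w shrinks toward 0.
small = descend(alpha=0.1)
# alpha = 1.1: each step multiplies w by (1 - 2.2) = -1.2, so w overshoots
# the minimum and grows in magnitude on every step.
large = descend(alpha=1.1)
```

On this surface any alpha above 1.0 diverges, which is the overshooting behavior the slide describes.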

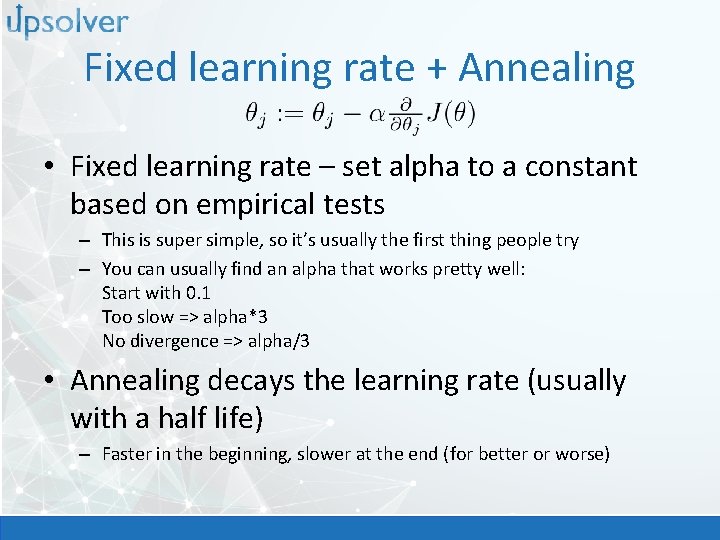

Fixed learning rate + Annealing
• Fixed learning rate – set alpha to a constant based on empirical tests
  – This is super simple, so it’s usually the first thing people try
  – You can usually find an alpha that works pretty well: start with 0.1; too slow => alpha*3; diverging => alpha/3
• Annealing decays the learning rate (usually with a half-life)
  – Faster in the beginning, slower at the end (for better or worse)
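One common way to express a half-life decay is exponential annealing. The sketch below is an assumption about the schedule's shape (the slides do not give a formula); `annealed_rate` and its parameters are hypothetical names.

```python
def annealed_rate(alpha0, step, half_life):
    """Learning rate that halves every `half_life` steps."""
    return alpha0 * 0.5 ** (step / half_life)

# Starting from alpha0 = 0.1 with a half-life of 1000 steps:
rates = [annealed_rate(0.1, t, 1000) for t in (0, 1000, 2000)]
```

With these values the rate starts at 0.1, drops to 0.05 after 1000 steps and to 0.025 after 2000.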

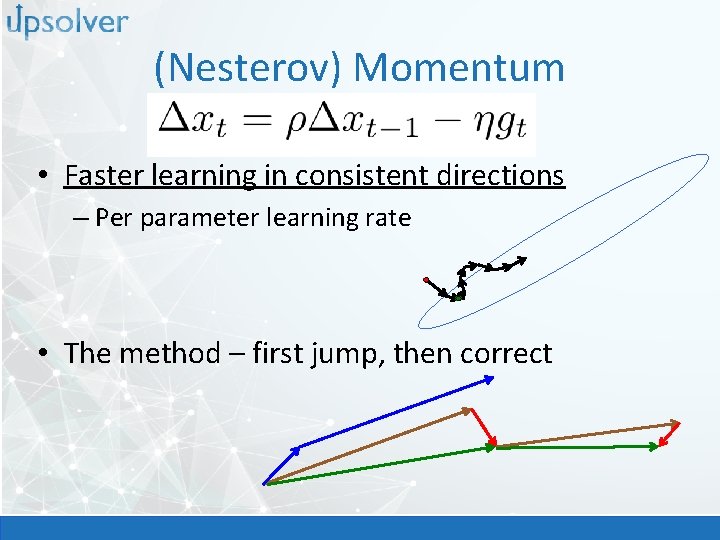

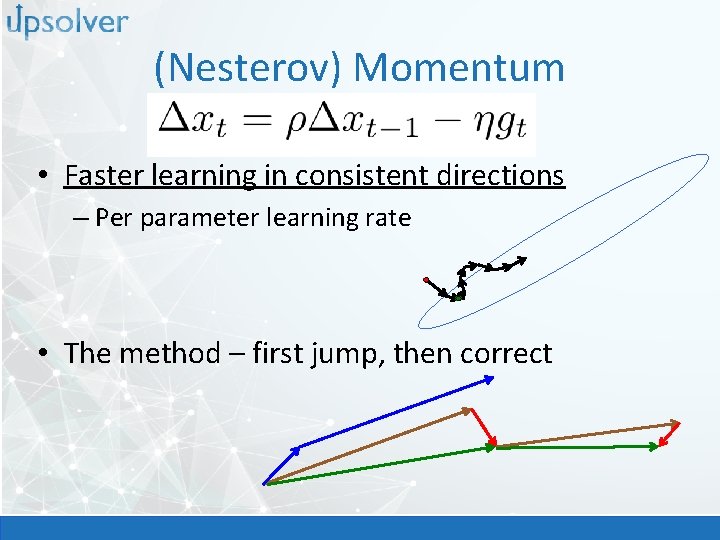

(Nesterov) Momentum
• Faster learning in consistent directions
  – Per-parameter learning rate
• The method – first jump, then correct
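"First jump, then correct" can be sketched as follows, using one common formulation of Nesterov momentum. The names `nesterov_step`, `alpha`, and `mu`, and the toy quadratic error surface, are assumptions for illustration.

```python
def nesterov_step(w, v, grad_fn, alpha=0.01, mu=0.9):
    """One Nesterov momentum update on lists of floats."""
    # Jump: move along the accumulated velocity first.
    lookahead = [wi + mu * vi for wi, vi in zip(w, v)]
    # Correct: evaluate the gradient at the look-ahead point.
    g = grad_fn(lookahead)
    v = [mu * vi - alpha * gi for vi, gi in zip(v, g)]
    w = [wi + vi for wi, vi in zip(w, v)]
    return w, v

def grad(w):
    # Gradient of the toy surface E(w) = 0.5 * (w[0]**2 + 10 * w[1]**2).
    return [w[0], 10.0 * w[1]]

w, v = [1.0, 1.0], [0.0, 0.0]
for _ in range(300):
    w, v = nesterov_step(w, v, grad)
```

The velocity `v` builds up along directions where successive gradients agree, which is what gives the faster learning in consistent directions.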

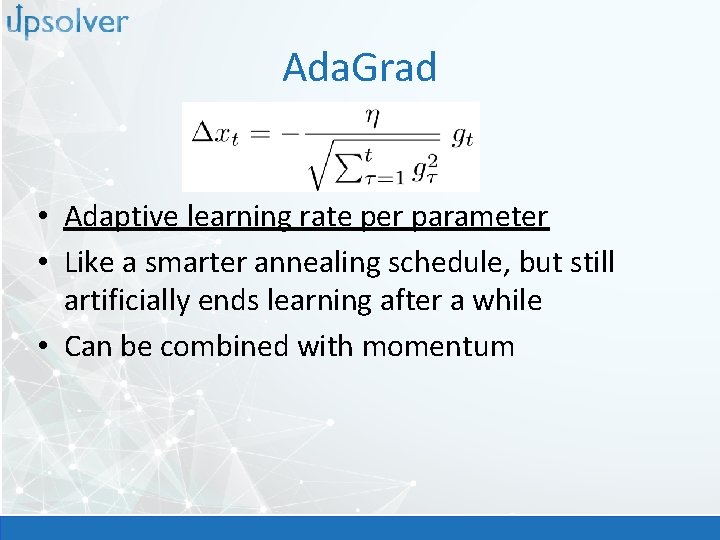

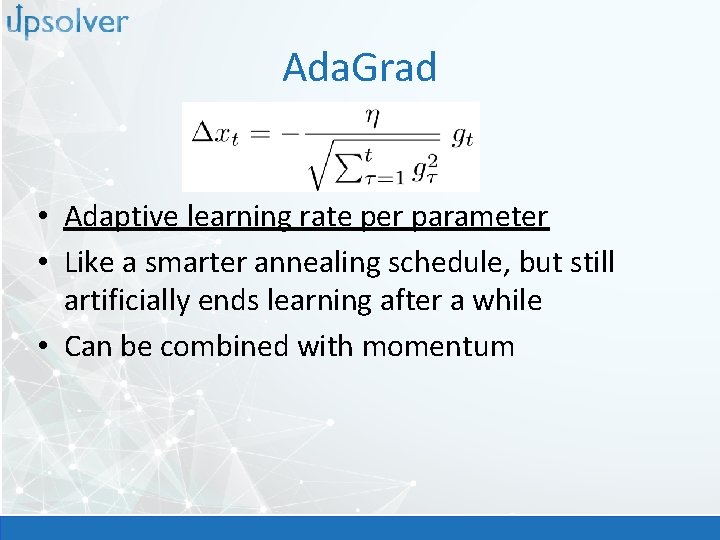

AdaGrad
• Adaptive learning rate per parameter
• Like a smarter annealing schedule, but still artificially ends learning after a while
• Can be combined with momentum
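A minimal sketch of the standard AdaGrad update shows why learning eventually stops: each parameter's step is divided by the square root of its accumulated squared gradients, which only ever grows. The helper name `adagrad_step` and the constant-gradient demo are assumptions for illustration.

```python
import math

def adagrad_step(w, cache, g, alpha=0.1, eps=1e-8):
    """One in-place AdaGrad update; cache holds per-parameter sums of g**2."""
    for i, gi in enumerate(g):
        cache[i] += gi * gi
        w[i] -= alpha * gi / (math.sqrt(cache[i]) + eps)
    return w, cache

# Feed a constant gradient of 1.0 and record how far each step moves.
w, cache = [0.0], [0.0]
step_sizes = []
for _ in range(4):
    before = w[0]
    adagrad_step(w, cache, [1.0])
    step_sizes.append(before - w[0])
```

With a constant gradient the t-th step has size of roughly alpha / sqrt(t), so the fourth step here is about half the first.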

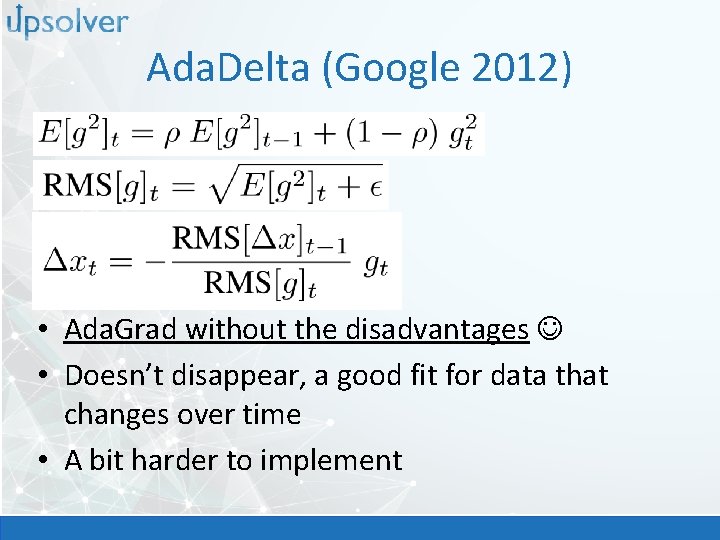

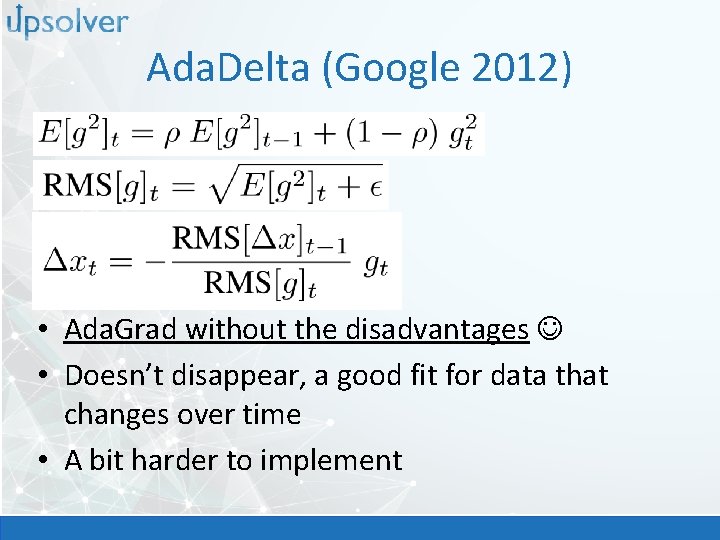

AdaDelta (Google 2012)
• AdaGrad without the disadvantages
• Doesn’t disappear, a good fit for data that changes over time
• A bit harder to implement
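The sketch below follows the AdaDelta update as published (decaying averages of squared gradients and squared updates in place of AdaGrad's ever-growing sum). The helper name `adadelta_step`, the default `rho` and `eps` values, and the toy surface E(w) = w² are assumptions for illustration.

```python
import math

def adadelta_step(w, avg_g2, avg_dx2, g, rho=0.95, eps=1e-6):
    """One in-place AdaDelta update on lists of floats."""
    for i, gi in enumerate(g):
        # Decaying average of squared gradients (old gradients fade out).
        avg_g2[i] = rho * avg_g2[i] + (1.0 - rho) * gi * gi
        # Step scaled by the ratio of RMS(previous updates) to RMS(gradients).
        dx = -math.sqrt(avg_dx2[i] + eps) / math.sqrt(avg_g2[i] + eps) * gi
        # Decaying average of squared updates.
        avg_dx2[i] = rho * avg_dx2[i] + (1.0 - rho) * dx * dx
        w[i] += dx
    return w

# Ten steps on E(w) = w**2 (gradient 2*w), starting from w = 1.
w, avg_g2, avg_dx2 = [1.0], [0.0], [0.0]
adadelta_step(w, avg_g2, avg_dx2, [2.0 * w[0]])
w_after_1 = w[0]
for _ in range(9):
    adadelta_step(w, avg_g2, avg_dx2, [2.0 * w[0]])
w_after_10 = w[0]
```

Because both accumulators decay, the effective rate tracks recent gradients instead of shrinking forever, which is why it suits data that changes over time. Note that no global learning rate appears in the update.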

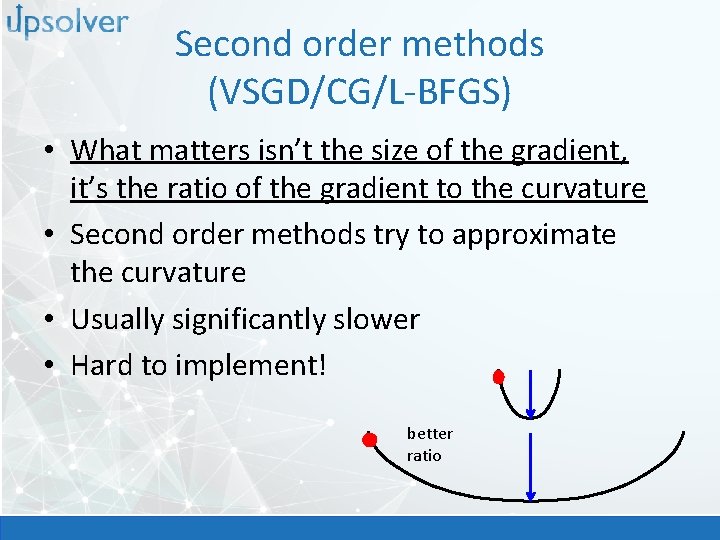

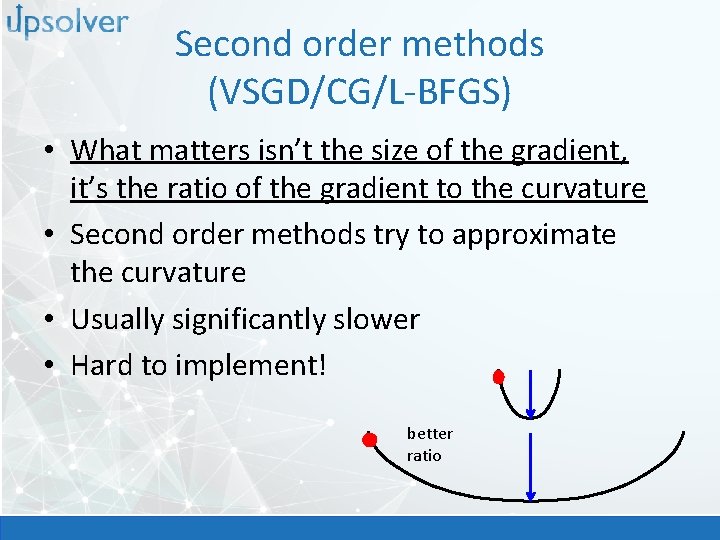

Second order methods (VSGD/CG/L-BFGS)
• What matters isn’t the size of the gradient, it’s the ratio of the gradient to the curvature
• Second order methods try to approximate the curvature
• Usually significantly slower
• Hard to implement!
[Figure annotation: better ratio]
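To see why the gradient-to-curvature ratio matters, consider a one-dimensional Newton step on a toy quadratic where the curvature is known exactly. This is only an idealized sketch: the methods named above approximate the curvature rather than computing it, and `newton_step` is a hypothetical helper.

```python
def newton_step(w, grad, curvature):
    """Move by the ratio of gradient to curvature."""
    return w - grad / curvature

def one_step_from_zero(h):
    # Toy surface E(w) = 0.5 * h * (w - 3)**2:
    # gradient is h * (w - 3), curvature is h, minimum is at w = 3.
    w = 0.0
    return newton_step(w, h * (w - 3.0), h)

flat = one_step_from_zero(h=0.01)    # tiny gradient, tiny curvature
steep = one_step_from_zero(h=100.0)  # huge gradient, huge curvature
```

Both surfaces reach the minimum in a single step even though their gradients differ by a factor of 10,000, because the ratio is the same.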

Questions!
Ori Rafael, +972-54-9849666, ori@upsolver.com
Yoni Iny, +972-54-4860360, yoni@upsolver.com