
- Slides: 29

Deconstructing Commodity Storage Clusters

- Haryadi S. Gunawi, Nitin Agrawal, Andrea C. Arpaci-Dusseau, Remzi H. Arpaci-Dusseau (Univ. of Wisconsin - Madison)
- Jiri Schindler (EMC Corporation)

Storage system

- Storage systems
  - Important components of large-scale systems
  - Multi-billion dollar industry
- Often comprised of high-end storage servers
  - A big box with lots of disks inside
- The simple question
  - How does a storage server work?
  - Simple but hard: closed storage subsystem design

Why do we need to know?

- Better modeling
  - How the system behaves under different workloads
  - Example in the storage industry: capacity model for capacity planning
  - A model is limited if the information is limited
- Product validation
  - Validate what product specs say
  - Performance numbers alone cannot confirm them
- Critical evaluation of design and implementation choices
  - Control what is occurring inside

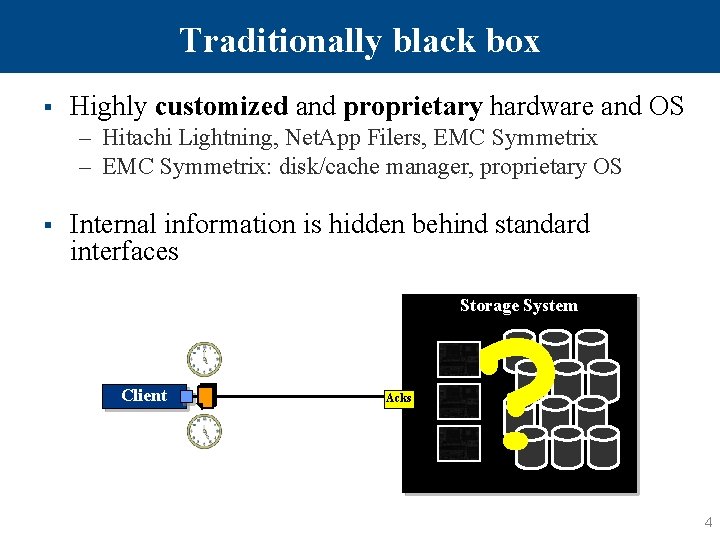

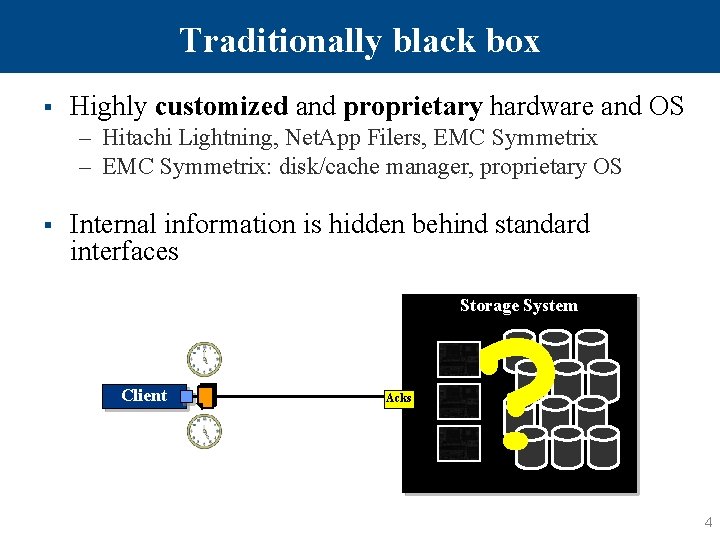

Traditionally a black box

- Highly customized and proprietary hardware and OS
  - Hitachi Lightning, NetApp Filers, EMC Symmetrix
  - EMC Symmetrix: disk/cache manager, proprietary OS
- Internal information is hidden behind standard interfaces

Diagram labels: Client, Storage System, Acks, "?" (internals unknown).

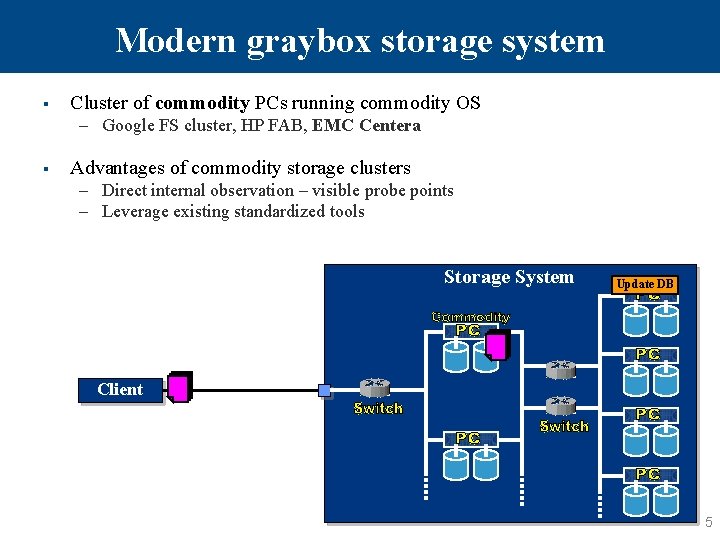

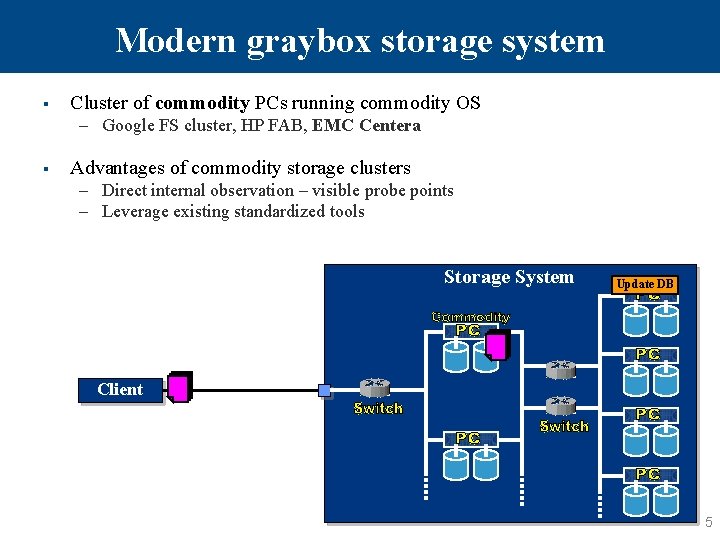

Modern graybox storage system

- Cluster of commodity PCs running a commodity OS
  - Google FS cluster, HP FAB, EMC Centera
- Advantages of commodity storage clusters
  - Direct internal observation: visible probe points
  - Leverage existing standardized tools

Diagram labels: Client, Storage System, Update DB.

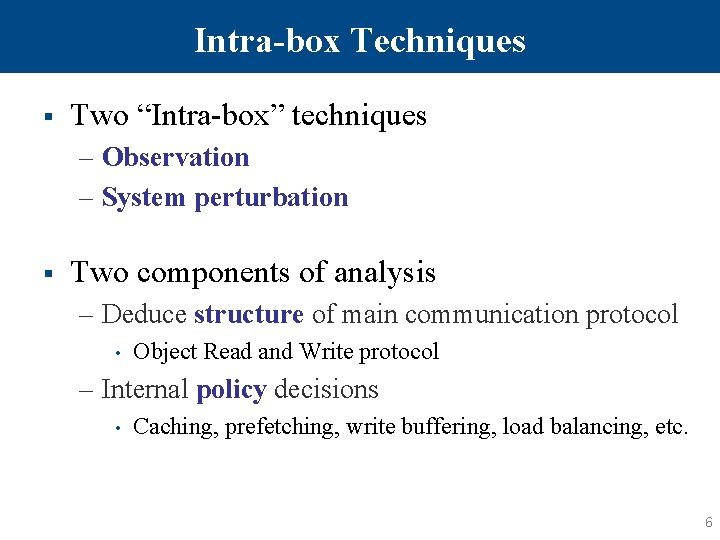

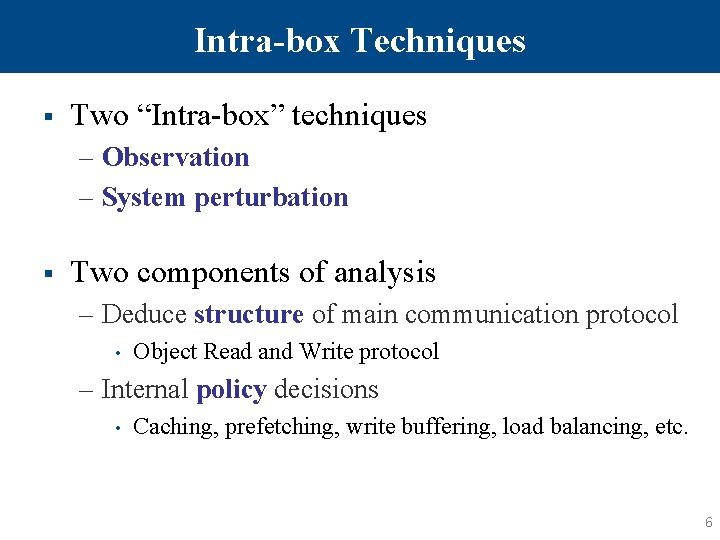

Intra-box Techniques

- Two "intra-box" techniques
  - Observation
  - System perturbation
- Two components of analysis
  - Deduce the structure of the main communication protocol
    - Object Read and Write protocol
  - Infer internal policy decisions
    - Caching, prefetching, write buffering, load balancing, etc.

Goal and EMC Author

- Objectives
  - Show the feasibility of deconstructing commodity storage clusters without source code
  - Results achieved without EMC assistance
- EMC author
  - Evaluates the correctness of our findings
  - Gives insights into the reasoning behind EMC's design decisions

Outline

- Introduction
- EMC Centera Overview
  - Intra-box tools
- Deducing Protocol
  - Observation and Delay Perturbation
- Inferring Policies
  - System Perturbation
- Conclusion

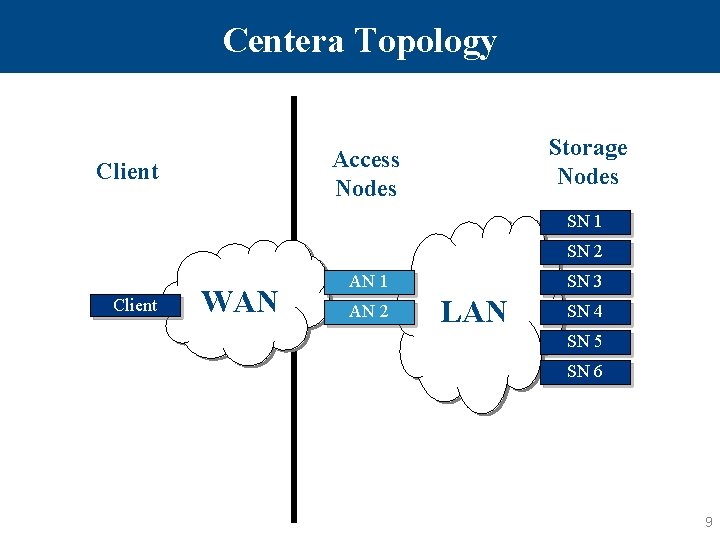

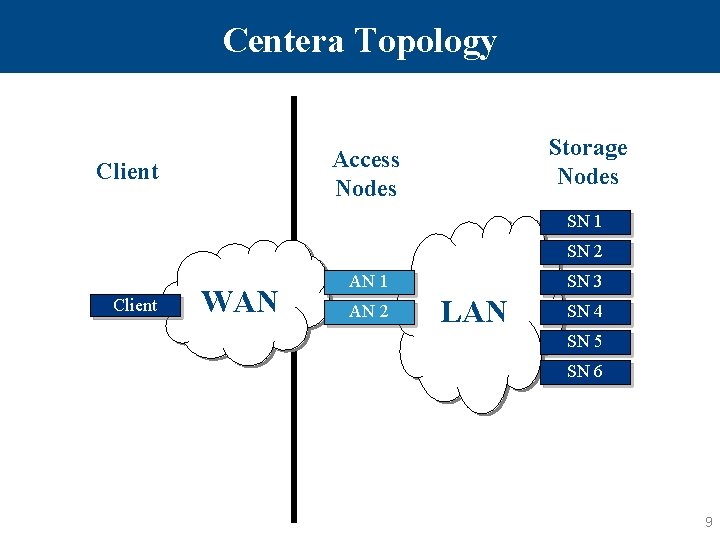

Centera Topology

Diagram: clients connect over a WAN to the access nodes (AN 1, AN 2), which connect over a LAN to the storage nodes (SN 1 to SN 6).

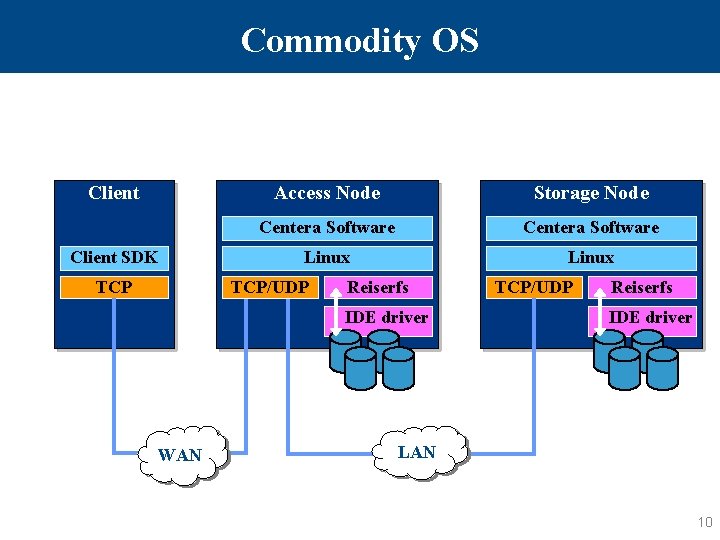

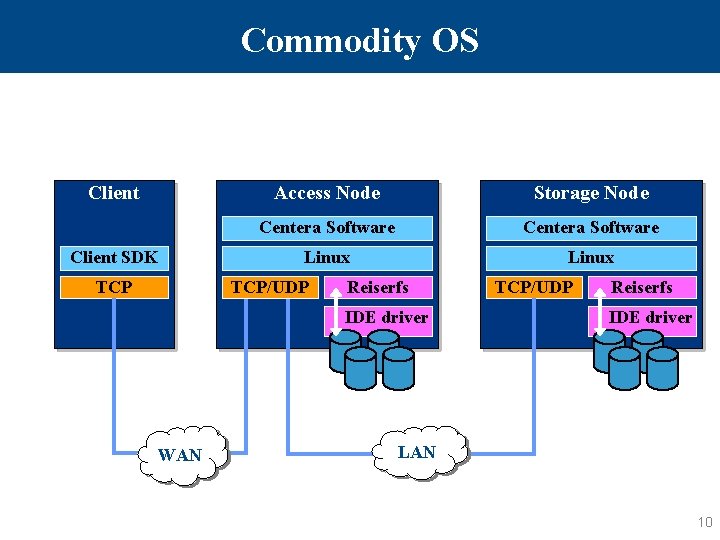

Commodity OS

Diagram: software stacks.

- Client: Client SDK over TCP, reaching the access node via the WAN
- Access Node and Storage Node: Centera Software on Linux (TCP/UDP, Reiserfs, IDE driver), connected via the LAN

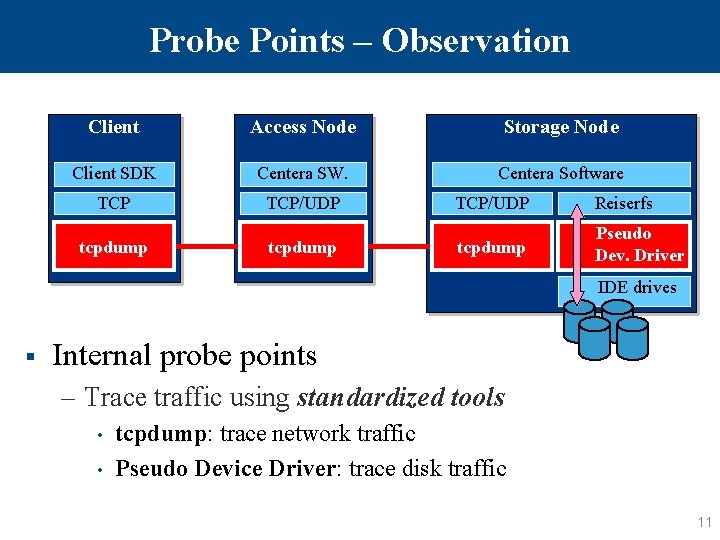

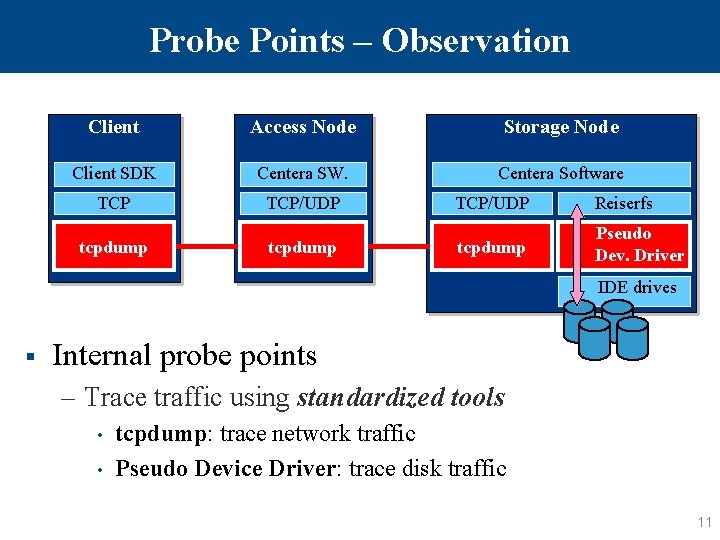

Probe Points: Observation

Diagram labels: Client (Client SDK); Access Node and Storage Node (Centera Software, TCP/UDP, Reiserfs, IDE drives); tcpdump at the network layer; pseudo device driver in front of the IDE drives.

- Internal probe points
  - Trace traffic using standardized tools
    - tcpdump: trace network traffic
    - Pseudo device driver: trace disk traffic

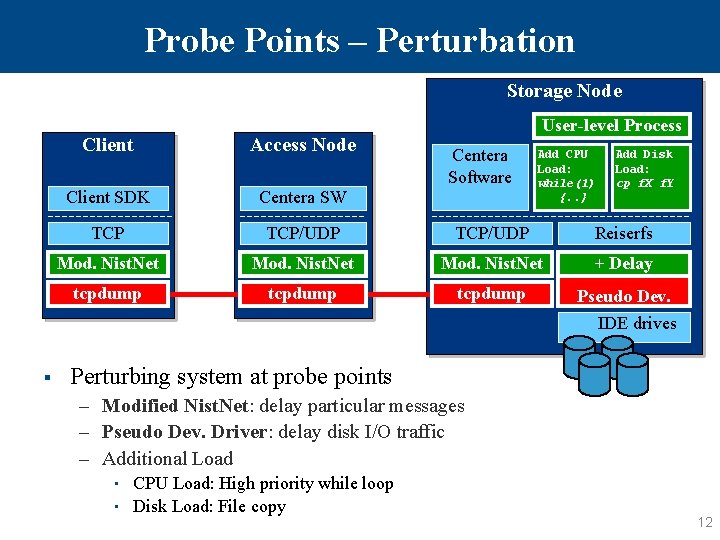

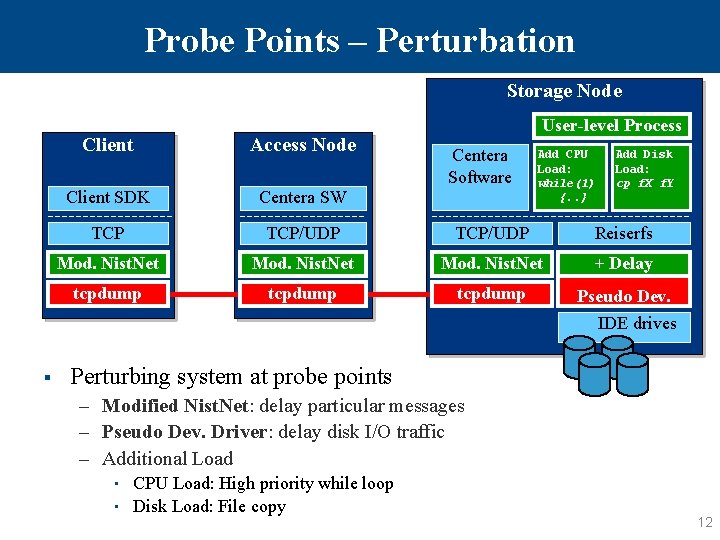

Probe Points: Perturbation

Diagram labels: Client (Client SDK); Access Node and Storage Node (Centera Software, TCP/UDP, Reiserfs, IDE drives); modified NistNet + delay and tcpdump at the network layer; pseudo device driver in front of the IDE drives; user-level process on the storage node adding CPU load (while(1) {..}) and disk load (cp fX fY).

- Perturbing the system at probe points
  - Modified NistNet: delay particular messages
  - Pseudo device driver: delay disk I/O traffic
  - Additional load
    - CPU load: high-priority while loop
    - Disk load: file copy
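The two load generators named on this slide can be approximated from user space. The sketch below is a minimal Python stand-in, not the tooling used in the study: `burn_cpu` and `disk_load` are hypothetical names, the loop is time-bounded instead of `while(1)`, and it does not raise its own priority (which would normally need elevated privileges).

```python
import shutil
import time

def burn_cpu(seconds):
    """Spin in a tight loop for roughly `seconds`; return the iteration count."""
    deadline = time.monotonic() + seconds
    spins = 0
    while time.monotonic() < deadline:
        spins += 1
    return spins

def disk_load(src, dst_prefix, copies):
    """Copy `src` repeatedly to create background disk traffic (like `cp fX fY`)."""
    written = []
    for i in range(copies):
        dst = f"{dst_prefix}.{i}"
        shutil.copyfile(src, dst)
        written.append(dst)
    return written
```

Running either function on one storage node while writes are issued gives a loaded node to compare against an unloaded one.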

Outline

- Introduction
- EMC Centera Overview
- Deducing Protocol
  - Observation and Delay Perturbation
- Inferring Policies
  - System Perturbation
- Conclusion

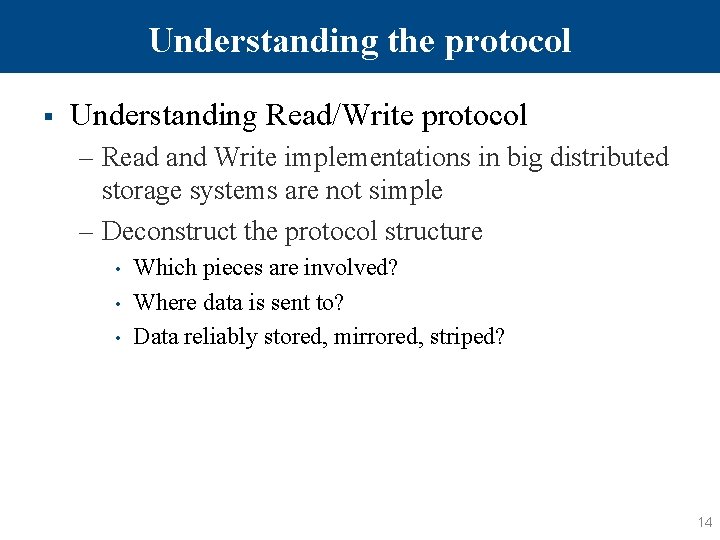

Understanding the protocol

- Understanding the Read/Write protocol
  - Read and Write implementations in big distributed storage systems are not simple
  - Deconstruct the protocol structure
    - Which pieces are involved?
    - Where is the data sent?
    - Is the data reliably stored, mirrored, striped?

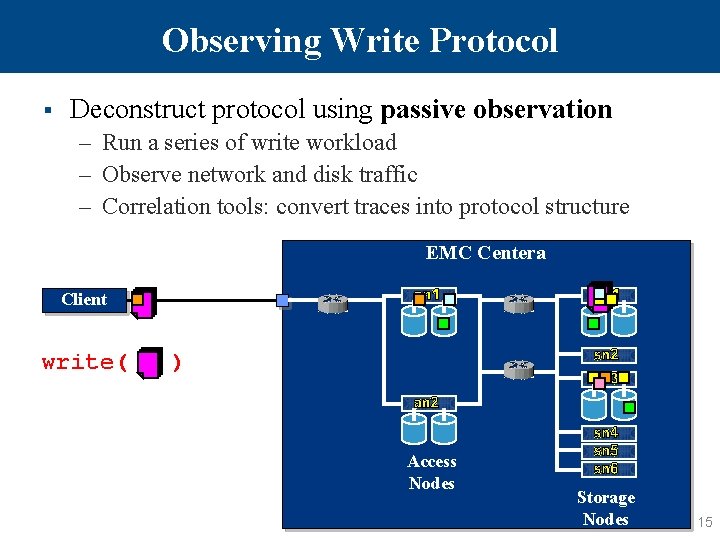

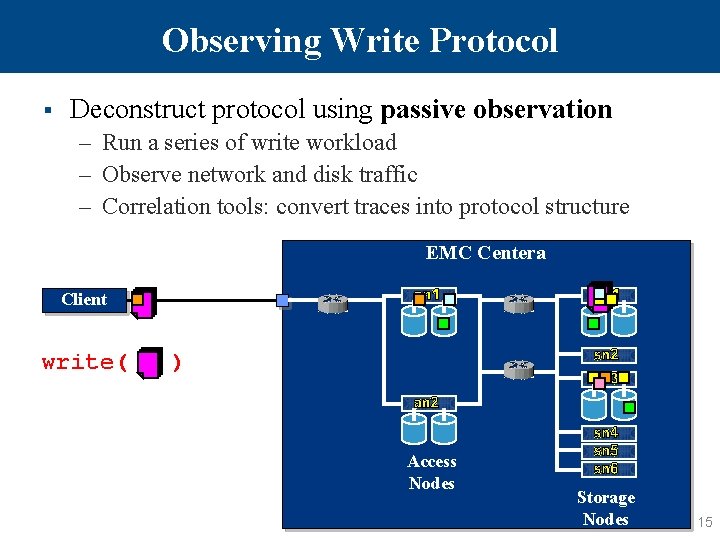

Observing Write Protocol

- Deconstruct the protocol using passive observation
  - Run a series of write workloads
  - Observe network and disk traffic
  - Correlation tools: convert traces into protocol structure

Diagram labels: Client write(); EMC Centera (Access Nodes, Storage Nodes).
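A correlation step of this kind can be pictured as merging per-node traces into one time-ordered message sequence. The sketch below is illustrative only: the `Event` record, the node names, and the sizes are made up, clocks are assumed synchronized, and each message is assumed to appear in exactly one trace (deduplicating packets captured at both ends is omitted).

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    t_ms: int   # capture timestamp in milliseconds
    src: str    # sending node
    dst: str    # receiving node
    size: int   # payload size in bytes

def protocol_skeleton(*traces):
    """Merge traces by timestamp and return the ordered (src, dst, size) steps."""
    merged = sorted((e for trace in traces for e in trace), key=lambda e: e.t_ms)
    return [(e.src, e.dst, e.size) for e in merged]

# Hypothetical traces from an access node and a storage node.
an_trace = [Event(0, "client", "AN", 300), Event(9, "AN", "client", 100)]
sn_trace = [Event(2, "AN", "SN1", 500), Event(5, "SN1", "SN2", 500),
            Event(7, "SN1", "AN", 100)]

for src, dst, size in protocol_skeleton(an_trace, sn_trace):
    print(f"{src} -> {dst} ({size} bytes)")
```

Repeating this over many writes and keeping the steps that recur would give a candidate protocol structure.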

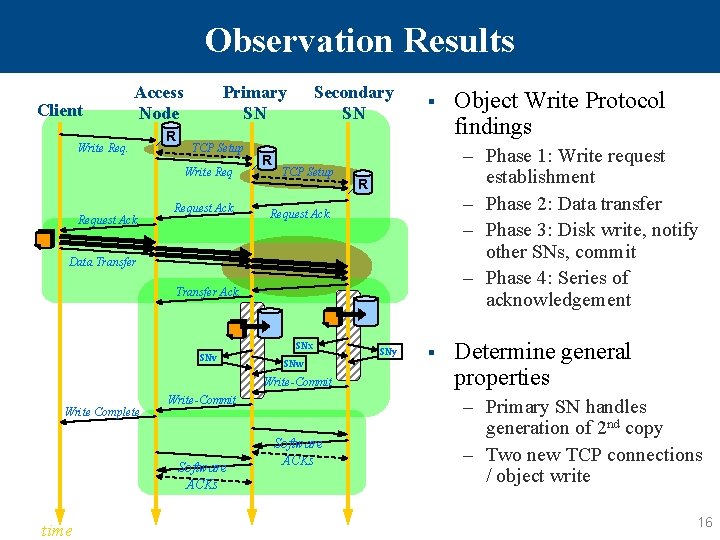

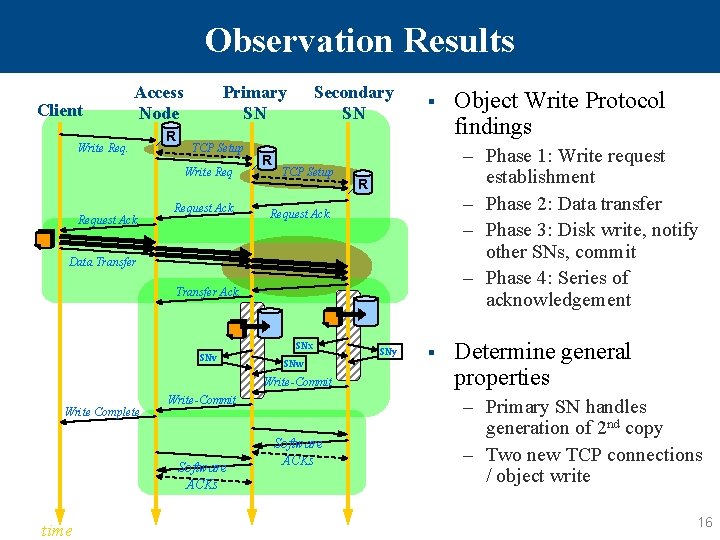

Observation Results

Diagram (message timeline): Client, Access Node, Primary SN, Secondary SN, and other storage nodes (SNv, SNw, SNx, SNy); messages shown: Write Req., TCP Setup, Write Request, Request Ack., Data Transfer, Ack., Write-Commit, Write Complete, Software ACKs.

- Object Write Protocol findings
  - Phase 1: Write request establishment
  - Phase 2: Data transfer
  - Phase 3: Disk write, notify other SNs, commit
  - Phase 4: Series of acknowledgements
- Determine general properties
  - Primary SN handles generation of the 2nd copy
  - Two new TCP connections per object write

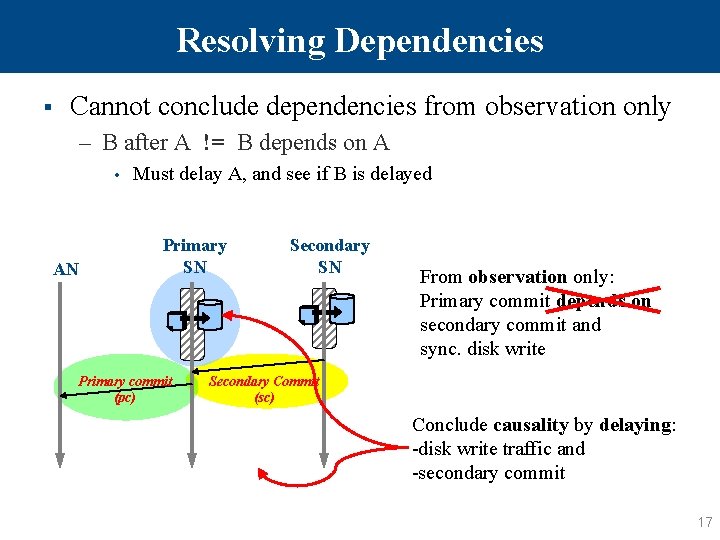

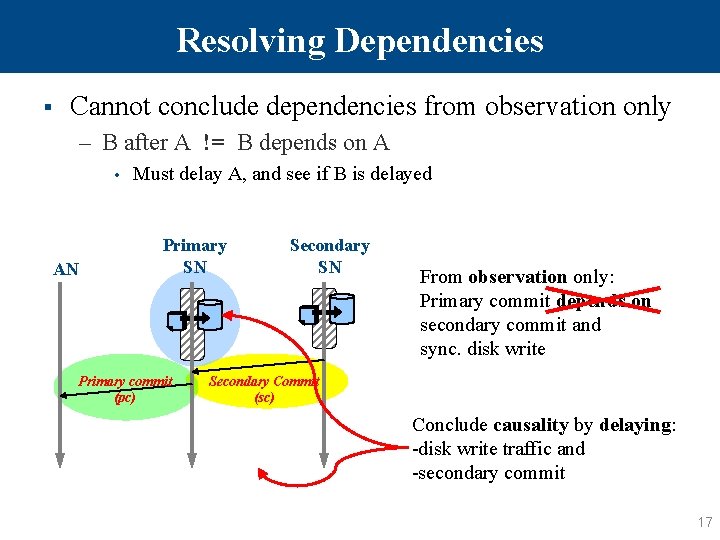

Resolving Dependencies

- Cannot conclude dependencies from observation only
  - B after A != B depends on A
    - Must delay A and see if B is delayed
- Hypothesis from observation only: primary commit (pc) depends on secondary commit (sc) and the sync. disk write
- Conclude causality by delaying:
  - disk write traffic
  - secondary commit

Diagram labels: AN, Primary SN, Secondary SN.
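The "delay A, see if B is delayed" test can be written as a simple check on measured timings. This is a sketch under assumptions: `depends_on`, the 20% tolerance, and the use of medians over repeated runs are illustrative choices, not the authors' analysis code.

```python
from statistics import median

def depends_on(baseline_b_ms, delayed_b_ms, injected_delay_ms, tolerance=0.2):
    """Return True if event B shifts by roughly the delay injected into event A.

    baseline_b_ms: B's completion times (ms) over runs with no perturbation.
    delayed_b_ms:  B's completion times (ms) over runs where A was delayed.
    """
    shift = median(delayed_b_ms) - median(baseline_b_ms)
    return shift >= injected_delay_ms * (1 - tolerance)

# B moves with A: the ordering reflects a real dependency.
print(depends_on([10, 11, 10], [60, 61, 59], injected_delay_ms=50))
# B is unaffected: B merely happened after A.
print(depends_on([10, 11, 10], [10, 12, 11], injected_delay_ms=50))
```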

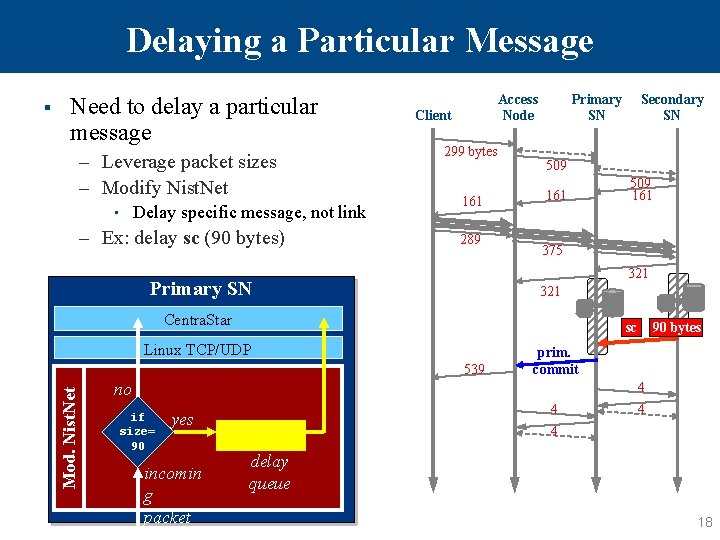

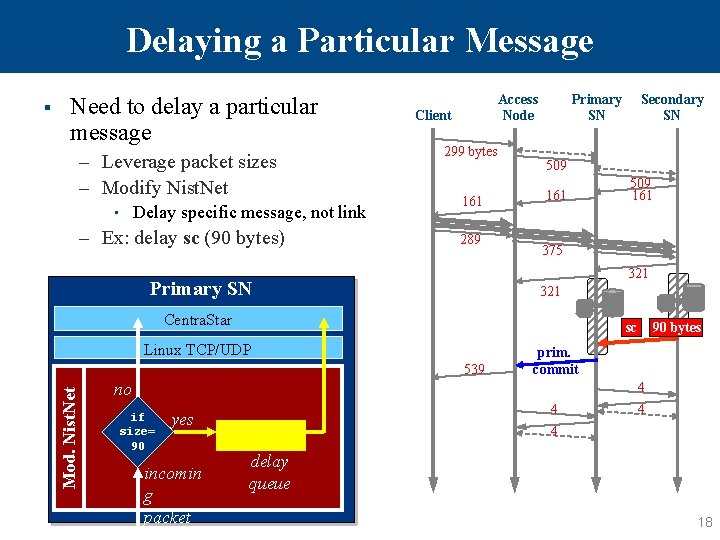

Delaying a Particular Message

- Need to delay a particular message
  - Leverage packet sizes
  - Modify NistNet
    - Delay a specific message, not the whole link
  - Ex: delay sc (90 bytes)

Diagram: message sizes observed among Client, Access Node, Primary SN, and Secondary SN (299, 161, 289, 509, 161, 375, 321, 321, 539 bytes); the secondary commit (sc) is 90 bytes. On the primary SN, modified NistNet sits between Linux TCP/UDP and CentraStar: an incoming packet enters the delay queue if size = 90, otherwise it passes straight through.
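The size-based filter in the diagram can be simulated in a few lines. The sketch below models only the decision logic (match on size, hold in a delay queue, release later); it is not NistNet code, and `apply_size_delay`, the millisecond timestamps, and the 50 ms delay are assumptions for illustration.

```python
def apply_size_delay(packets, match_size=90, delay_ms=50):
    """Delay only packets whose size equals `match_size`.

    packets: iterable of (arrival_ms, size) tuples.
    Returns (release_ms, size) tuples in the order packets leave the filter.
    """
    scheduled = []
    for seq, (arrival_ms, size) in enumerate(packets):
        release_ms = arrival_ms + delay_ms if size == match_size else arrival_ms
        # seq breaks ties so equal release times keep arrival order.
        scheduled.append((release_ms, seq, size))
    scheduled.sort()
    return [(release_ms, size) for release_ms, _, size in scheduled]

# The 90-byte message is held back; the others pass through untouched.
print(apply_size_delay([(0, 321), (1, 90), (2, 161)]))
```

Matching on size works only while the target message has a size no other message shares, which is the property the slide relies on for the 90-byte secondary commit.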

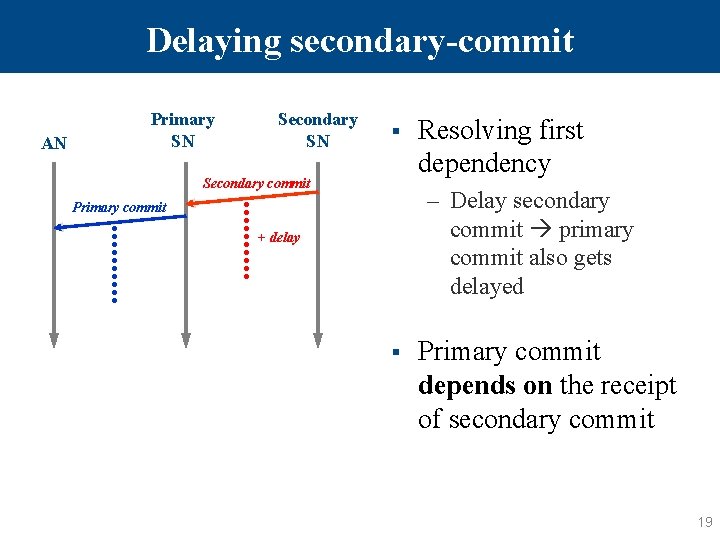

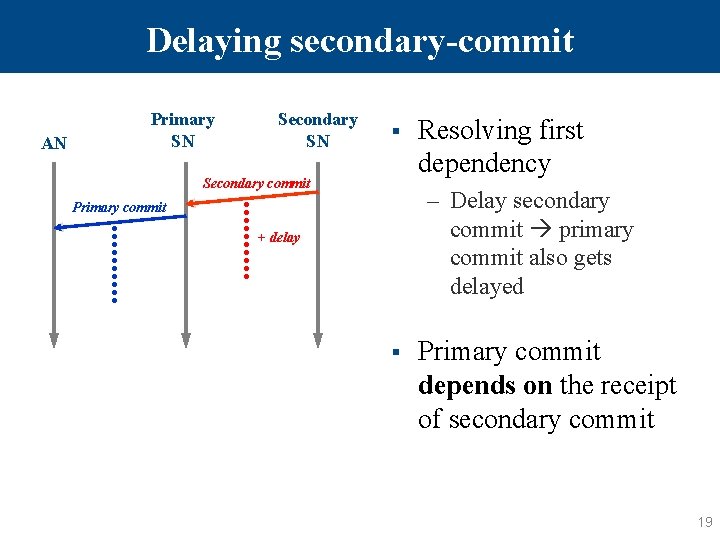

Delaying secondary-commit

- Resolving the first dependency
  - Delay the secondary commit: the primary commit also gets delayed
- Primary commit depends on the receipt of secondary commit

Diagram labels: AN, Primary SN, Secondary SN; Secondary commit; Primary commit + delay.

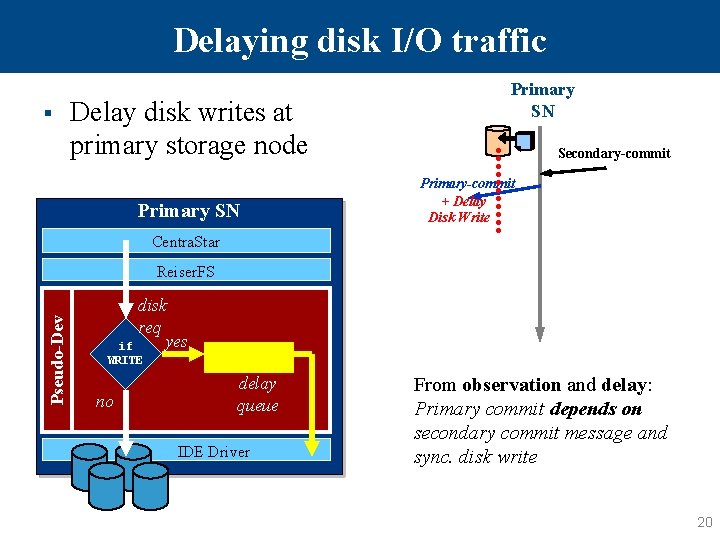

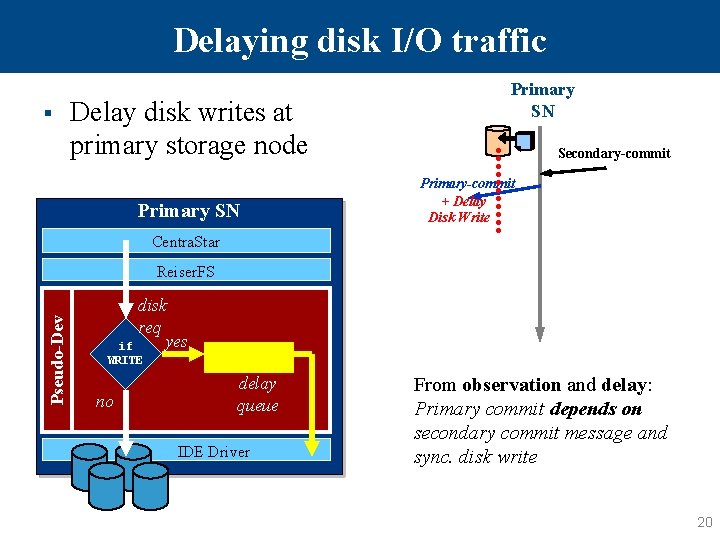

Delaying disk I/O traffic

- Delay disk writes at the primary storage node
- From observation and delay: primary commit depends on the secondary commit message and the sync. disk write

Diagram: on the primary SN, the pseudo device sits between ReiserFS and the IDE driver, below CentraStar; a disk request enters the delay queue if it is a WRITE, otherwise it passes through. Timeline labels: Secondary-commit, Disk Write, Primary-commit + Delay.
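The conclusion on this slide amounts to saying the primary commit waits for both events. A toy model makes that concrete; it is an assumption-laden illustration (the `max` rule, the `ack_cost_ms` constant, and all timings are invented), not a description of the Centera implementation.

```python
def primary_commit_time(disk_write_done_ms, secondary_commit_rcvd_ms, ack_cost_ms=1):
    """Toy model: the primary commits once both prerequisites have completed."""
    return max(disk_write_done_ms, secondary_commit_rcvd_ms) + ack_cost_ms

baseline = primary_commit_time(8, 12)          # no perturbation
disk_delayed = primary_commit_time(8 + 50, 12)  # delay the disk write by 50 ms
sc_delayed = primary_commit_time(8, 12 + 50)    # delay the secondary commit by 50 ms

# Under this model, a large delay on either prerequisite pushes the commit later.
print(baseline, disk_delayed, sc_delayed)
```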

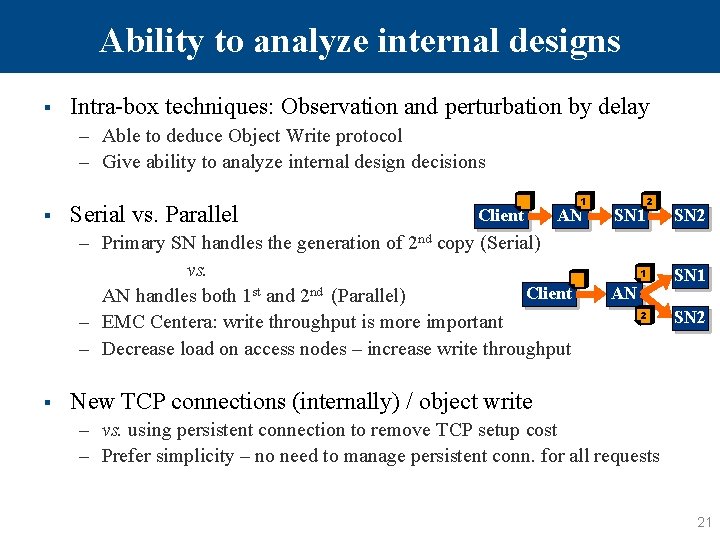

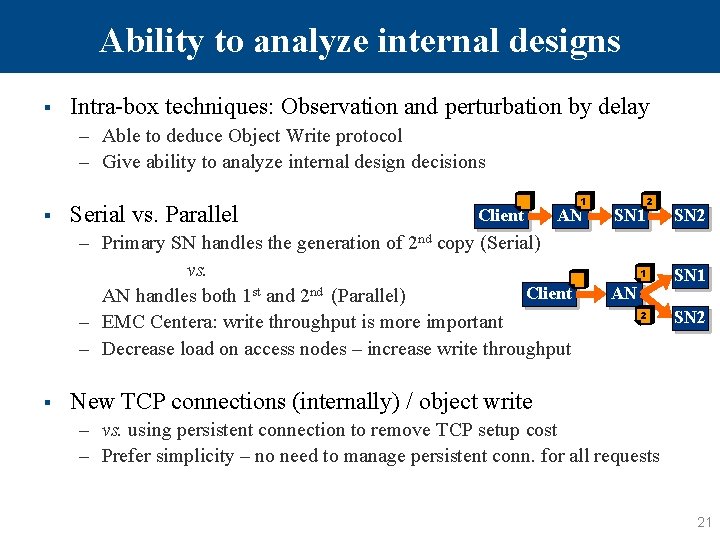

Ability to analyze internal designs

- Intra-box techniques: observation and perturbation by delay
  - Able to deduce the Object Write protocol
  - Give the ability to analyze internal design decisions
- Serial vs. parallel
  - Primary SN handles the generation of the 2nd copy (serial) vs. AN handles both the 1st and 2nd copies (parallel)
  - EMC Centera: write throughput is more important
  - Decrease load on access nodes, increase write throughput
- New TCP connections (internally) per object write
  - vs. using persistent connections to remove TCP setup cost
  - Prefer simplicity: no need to manage persistent connections for all requests

Diagram labels: Client, AN, SN 1, SN 2.

Outline

- Introduction
- EMC Centera Overview
- Deducing Protocol
- Inferring Policies
  - Various system perturbations
- Conclusion

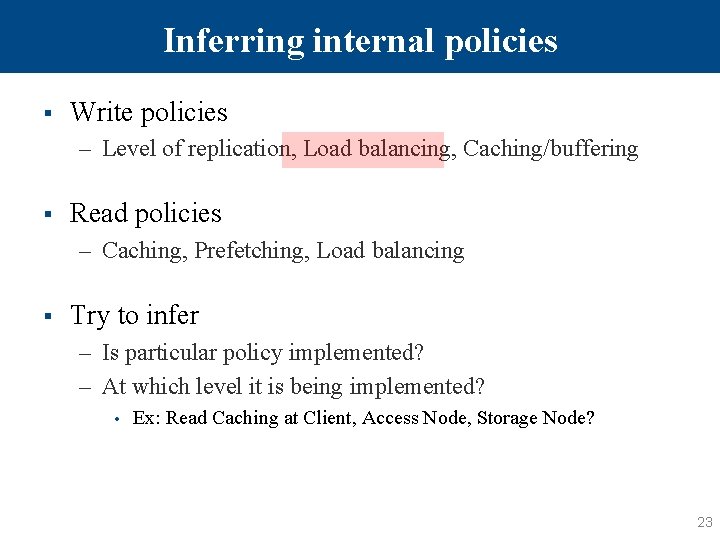

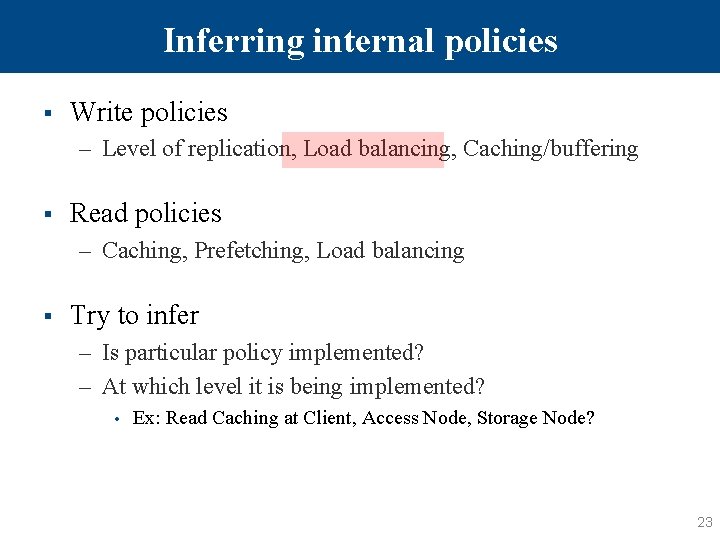

Inferring internal policies

- Write policies
  - Level of replication, load balancing, caching/buffering
- Read policies
  - Caching, prefetching, load balancing
- Try to infer
  - Is a particular policy implemented?
  - At which level is it implemented?
    - Ex: read caching at the client, access node, or storage node?

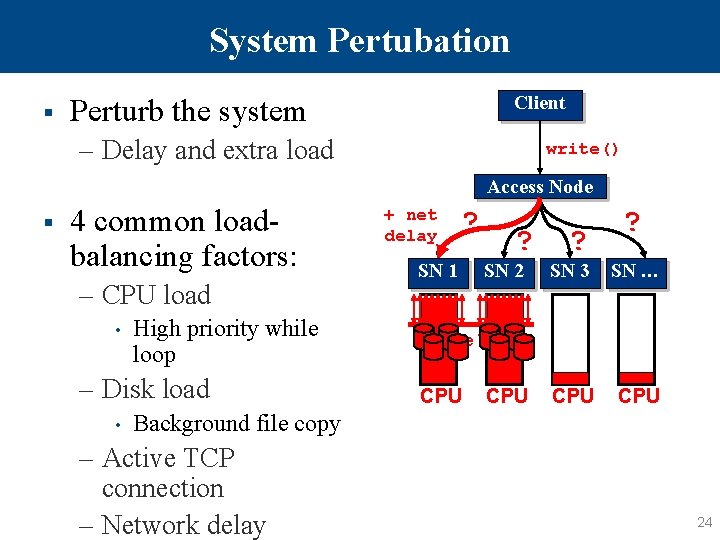

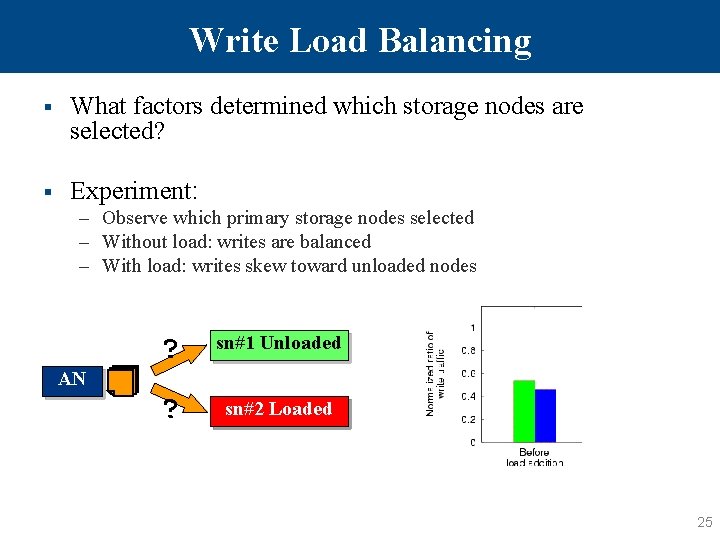

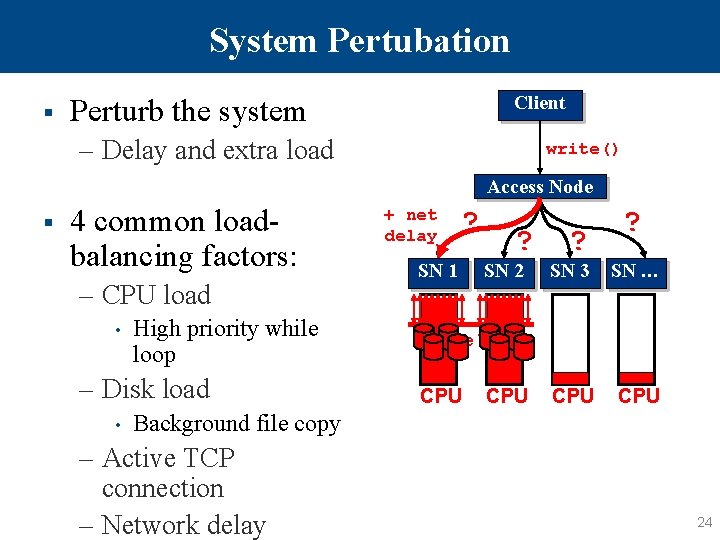

System Perturbation

- Perturb the system
  - Delay and extra load
- 4 common load-balancing factors:
  - CPU load
    - High-priority while loop
  - Disk load
    - Background file copy
  - Active TCP connections
  - Network delay

Diagram labels: Client write(); Access Node; SN 1, SN 2, SN 3, ... (each marked "?"); perturbations: CPU, active TCP, net delay.

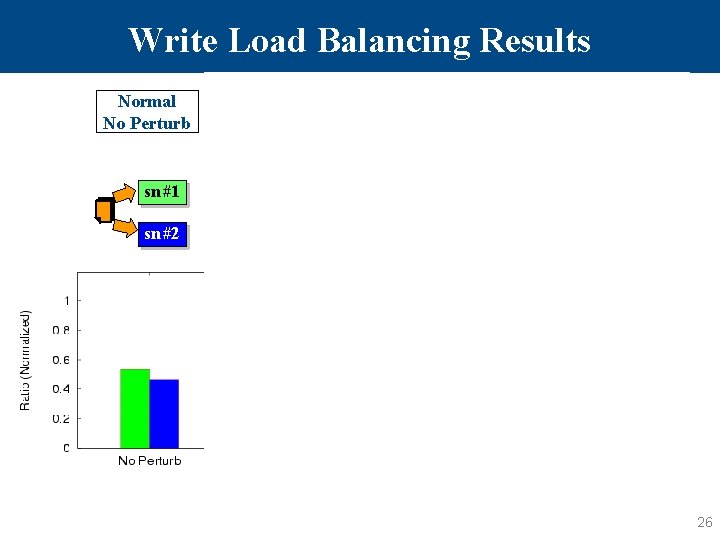

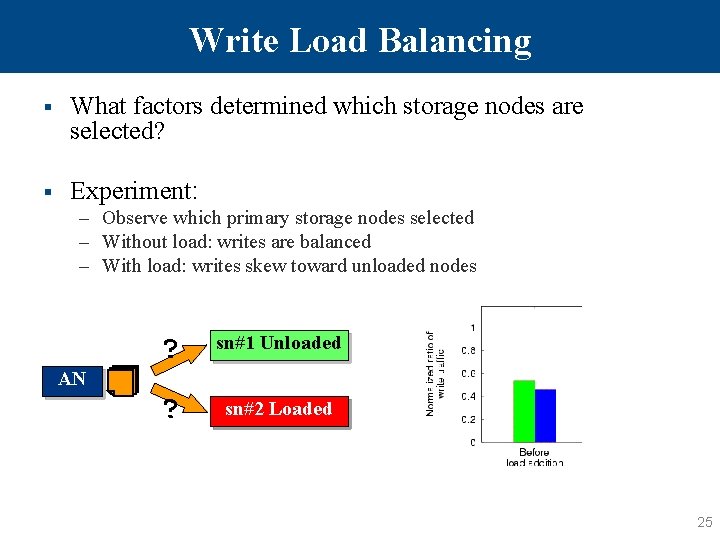

Write Load Balancing

- What factors determine which storage nodes are selected?
- Experiment:
  - Observe which primary storage nodes are selected
  - Without load: writes are balanced
  - With load: writes skew toward unloaded nodes

Diagram labels: AN; sn#1, sn#2; Unloaded, Loaded.
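The experiment reduces to counting which node is chosen as primary under each condition. The sketch below shows that bookkeeping; `selection_share` and the sample observations are hypothetical and are not data from the study.

```python
from collections import Counter

def selection_share(primaries):
    """Return the fraction of writes for which each node was picked as primary."""
    counts = Counter(primaries)
    total = sum(counts.values())
    return {node: counts[node] / total for node in counts}

# Made-up observations: balanced without load, skewed when sn2 is loaded.
no_load = ["sn1", "sn2", "sn1", "sn2"]
sn2_loaded = ["sn1"] * 9 + ["sn2"]

print(selection_share(no_load))
print(selection_share(sn2_loaded))
```

Comparing the shares across the unperturbed and perturbed runs shows whether a given factor influences node selection.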

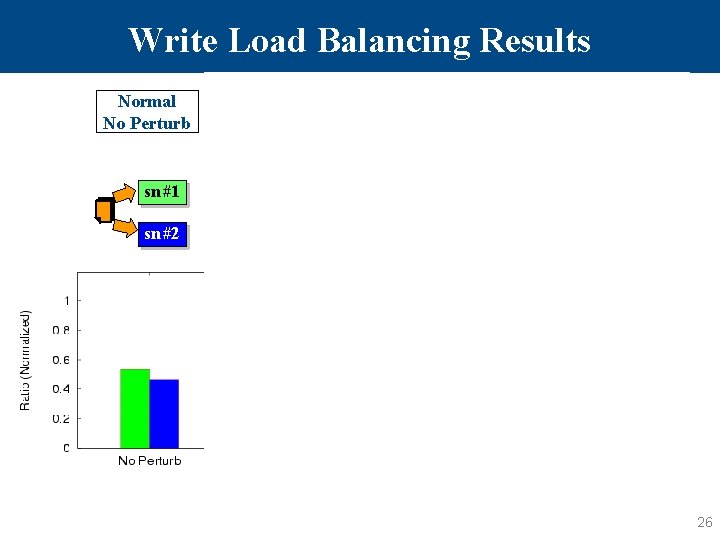

Write Load Balancing Results

Chart: writes to sn#1 vs. sn#2 under each condition: normal (no perturbation), additional CPU load (+CPU), disk load (+Disk), network load (+TCP), and incoming net delay (+Delay).

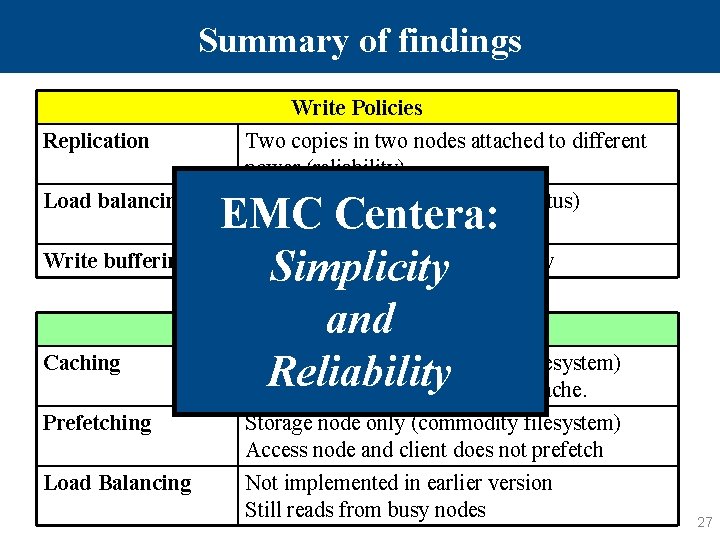

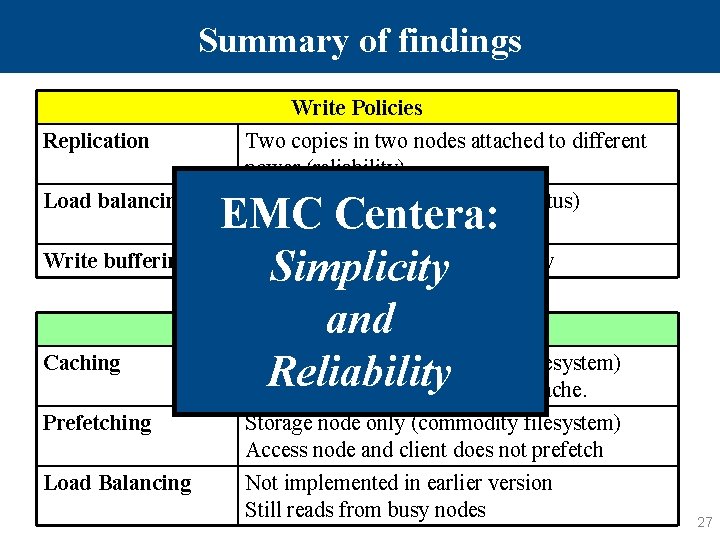

Summary of findings

- Write policies
  - Replication: two copies in two nodes attached to different power (reliability)
  - Load balancing: CPU usage (locally observable status); network status is not incorporated
  - Write buffering: storage nodes write synchronously
- Read policies
  - Caching: storage node only (commodity filesystem); access node and client do not cache
  - Prefetching: storage node only (commodity filesystem); access node and client do not prefetch
  - Load balancing: not implemented in earlier version; still reads from busy nodes
- EMC Centera: simplicity and reliability

Conclusion

- Intra-box:
  - Observe and perturb
  - Deconstruct protocol and infer policies
  - No access to source code
- Power of probe points
  - More observation places
  - Ability to control the system
- Systems built with more externally visible probe points
  - Systems more readily understood, analyzed, and debugged
  - Higher-performing, more robust and reliable computer systems

Questions?