Decision Tree Algorithms
• Rule based
• Suitable for automatic generation

8-2 Decision trees
• Logical branching
• Historical:
  – ID3: early rule-generating system
• Branches:
  – Different possible values
• Nodes:
  – From which branches emanate

McGraw-Hill/Irwin © 2007 The McGraw-Hill Companies, Inc. All rights reserved

8-3 Goal-Driven Data Mining
• Define goal
  – Identify fraudulent cases
• Develop rules identifying attributes attaining that goal
  – IF attorney = Smith, THEN better check

8-4 Tree Structure
• Sorts out data
  – IF-THEN rules
  – Loan variables
    • Age: {young, middle, old}
    • Income: {low, average, high}
    • Risk: {low, medium, high}
• Exhaustive tree enumerates all combinations
  – 81 combinations
  – Classify all

8-5 Types of Trees
• Classification tree
  – Variable values are classes
  – Finite conditions
• Regression tree
  – Variable values are continuous numbers
  – Prediction or estimation

8-6 Rule Induction
• Automatically process data
  – Classification (logical, easier)
  – Regression (estimation, messier)
• Search through data for patterns & relationships
  – Pure knowledge discovery
    • Assumes no prior hypothesis
    • Disregards human judgment

8-7 Example
• Three variables:
  – Age
  – Income
  – Risk
• Outcomes:
  – On-time
  – Late

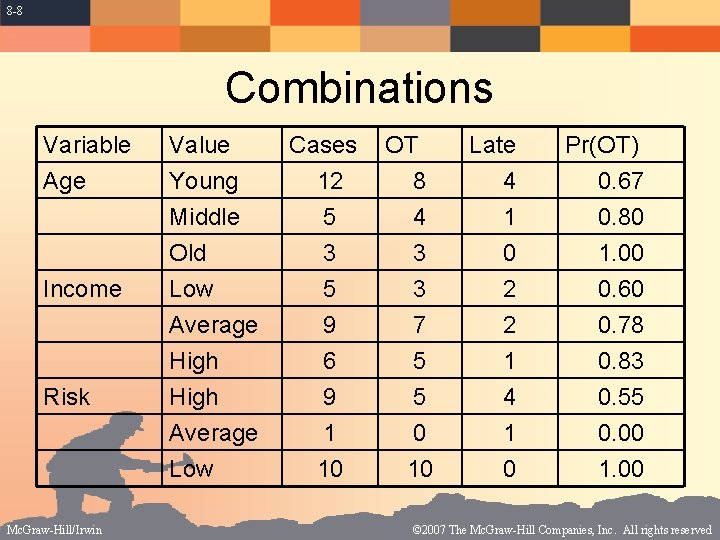

8-8 Combinations

| Variable | Value | Cases | OT | Late | Pr(OT) |
|---|---|---|---|---|---|
| Age | Young | 12 | 8 | 4 | 0.67 |
| Age | Middle | 5 | 4 | 1 | 0.80 |
| Age | Old | 3 | 3 | 0 | 1.00 |
| Income | Low | 5 | 3 | 2 | 0.60 |
| Income | Average | 9 | 7 | 2 | 0.78 |
| Income | High | 6 | 5 | 1 | 0.83 |
| Risk | High | 9 | 5 | 4 | 0.56 |
| Risk | Average | 1 | 0 | 1 | 0.00 |
| Risk | Low | 10 | 10 | 0 | 1.00 |

8-9 Basis for Classification
• If a category has all outcomes of a certain kind, that makes a good rule
  – IF Risk = Low, they always paid on time
• Entropy: measure of information content
  – Actually a measure of randomness

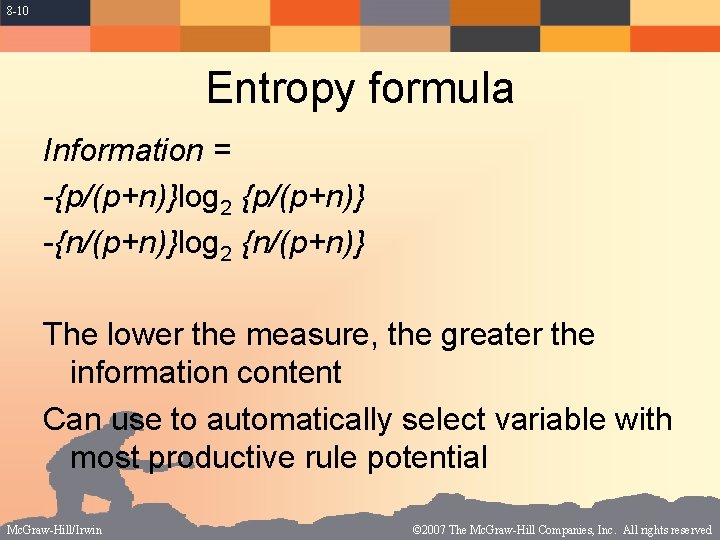

8-10 Entropy formula

$$\text{Information} = -\frac{p}{p+n}\log_2\frac{p}{p+n} - \frac{n}{p+n}\log_2\frac{n}{p+n}$$

where p and n are the numbers of cases with each of the two outcomes.

• The lower the measure, the greater the information content
• Can be used to automatically select the variable with the most productive rule potential
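The formula can be checked numerically with a small sketch. The function name `information` is chosen here for illustration, and treating a zero count as contributing 0 is the usual convention rather than something the slide states.

```python
import math


def information(p: int, n: int) -> float:
    """Two-outcome entropy in bits for p cases of one outcome and n of the other."""
    total = p + n
    result = 0.0
    for count in (p, n):
        if count > 0:  # assumption: a zero count contributes 0 (0 * log2(0) taken as 0)
            share = count / total
            result -= share * math.log2(share)
    return result


print(information(10, 0))            # a pure group: no randomness
print(information(5, 5))             # an evenly split group: maximum randomness
print(round(information(8, 4), 3))   # a mixed group such as 8 on-time, 4 late
```

A pure group scores 0 and an even split scores 1, which is why a lower value points to a more useful rule.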

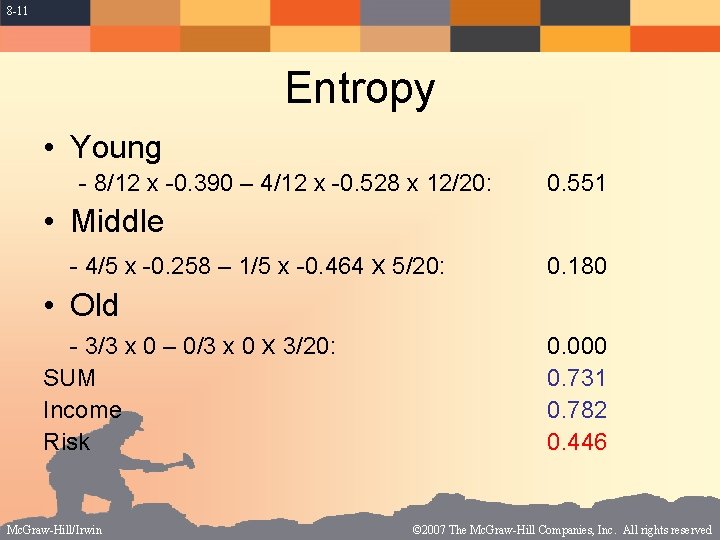

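8-11 Entropy
Each category's entropy is weighted by its share of the 20 cases:
• Young: $\left[-\tfrac{8}{12}\log_2\tfrac{8}{12} - \tfrac{4}{12}\log_2\tfrac{4}{12}\right] \times \tfrac{12}{20} = (0.390 + 0.528) \times \tfrac{12}{20} = 0.551$
• Middle: $\left[-\tfrac{4}{5}\log_2\tfrac{4}{5} - \tfrac{1}{5}\log_2\tfrac{1}{5}\right] \times \tfrac{5}{20} = (0.258 + 0.464) \times \tfrac{5}{20} = 0.180$
• Old: $\left[-\tfrac{3}{3}\log_2\tfrac{3}{3} - 0\right] \times \tfrac{3}{20} = 0.000$
• Sum (Age): 0.731
• Income: 0.782
• Risk: 0.446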
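The three totals can be reproduced with a short sketch. The (on-time, late) counts are transcribed from the Combinations slide; the dictionary layout and the function names are assumptions made for illustration.

```python
import math

# (on-time, late) counts per category, transcribed from the Combinations slide
counts = {
    "Age": {"Young": (8, 4), "Middle": (4, 1), "Old": (3, 0)},
    "Income": {"Low": (3, 2), "Average": (7, 2), "High": (5, 1)},
    "Risk": {"High": (5, 4), "Average": (0, 1), "Low": (10, 0)},
}


def entropy(p: int, n: int) -> float:
    """Two-outcome entropy in bits; a zero count contributes nothing."""
    total = p + n
    return -sum((c / total) * math.log2(c / total) for c in (p, n) if c > 0)


def weighted_entropy(categories: dict) -> float:
    """Sum of each category's entropy weighted by its share of all cases."""
    total = sum(p + n for p, n in categories.values())
    return sum(entropy(p, n) * (p + n) / total for p, n in categories.values())


scores = {variable: weighted_entropy(cats) for variable, cats in counts.items()}
for variable, score in scores.items():
    print(f"{variable}: {score:.3f}")

# The variable with the lowest weighted entropy is split on first.
best = min(scores, key=scores.get)
print("Split first on:", best)
```

Risk has the lowest total, so it supplies the first rule.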

8-12 Rule
1. IF (Risk = Low) THEN OT
2. ELSE Late

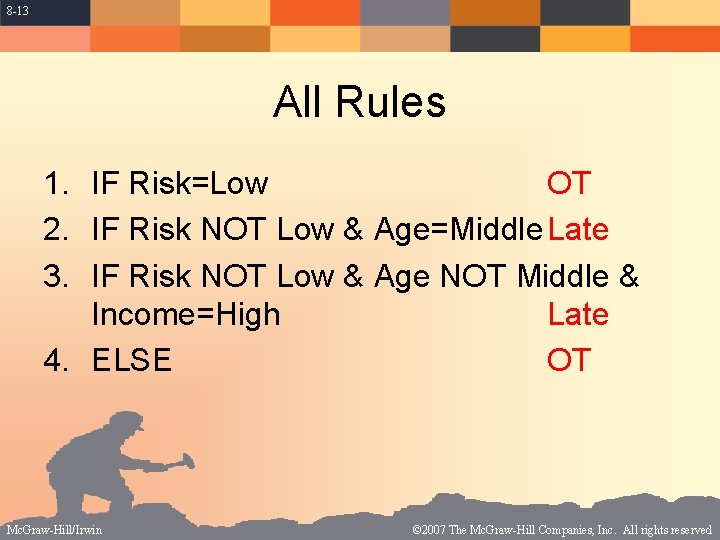

8-13 All Rules
1. IF Risk = Low THEN OT
2. IF Risk NOT Low & Age = Middle THEN Late
3. IF Risk NOT Low & Age NOT Middle & Income = High THEN Late
4. ELSE OT
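Because the rules are checked in order, they map directly onto a chain of early returns. The following is a minimal sketch; the function name `classify` and the string labels are assumptions made for illustration.

```python
def classify(age: str, income: str, risk: str) -> str:
    """Apply the four rules in order and return 'OT' (on-time) or 'Late'."""
    if risk == "Low":
        return "OT"      # Rule 1
    if age == "Middle":
        return "Late"    # Rule 2 (Risk is already known not to be Low)
    if income == "High":
        return "Late"    # Rule 3 (Risk not Low, Age not Middle)
    return "OT"          # Rule 4: everything else


print(classify(age="Young", income="Low", risk="Low"))       # Rule 1 fires
print(classify(age="Middle", income="Average", risk="High"))  # Rule 2 fires
```

Each later rule only needs to test its last condition, since the earlier returns have already ruled out the preceding ones.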

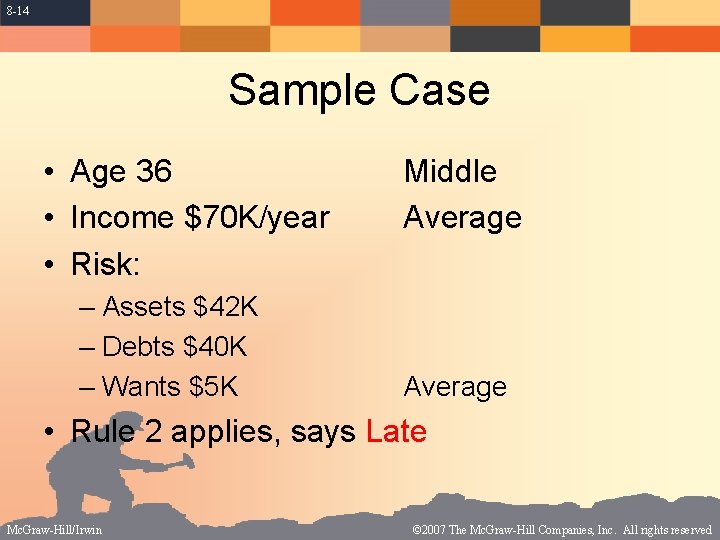

8-14 Sample Case
• Age 36 (Middle)
• Income $70K/year (Average)
• Risk:
  – Assets $42K
  – Debts $40K
  – Wants $5K
• Rule 2 applies, says Late

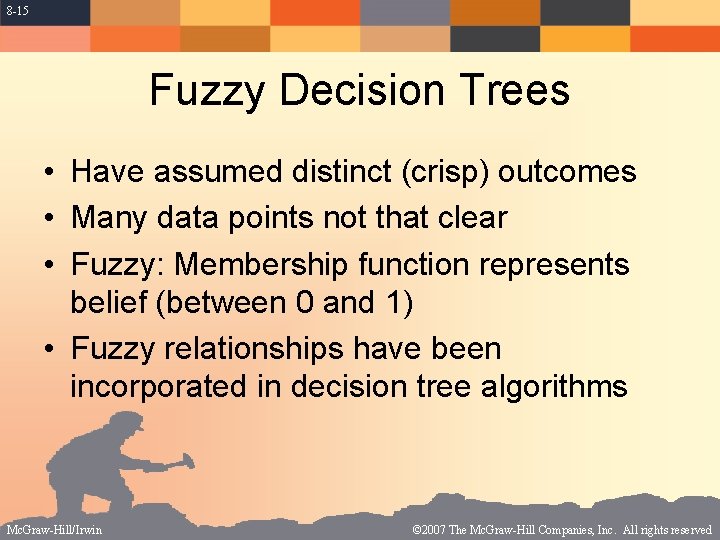

8-15 Fuzzy Decision Trees
• Have assumed distinct (crisp) outcomes
• Many data points are not that clear
• Fuzzy: membership function represents belief (between 0 and 1)
• Fuzzy relationships have been incorporated in decision tree algorithms

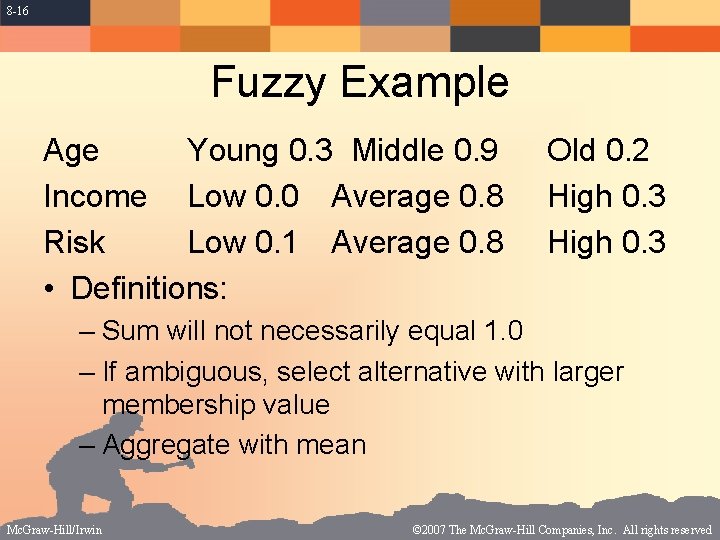

8-16 Fuzzy Example

| Variable | Value | Membership |
|---|---|---|
| Age | Young | 0.3 |
| Age | Middle | 0.9 |
| Age | Old | 0.2 |
| Income | Low | 0.0 |
| Income | Average | 0.8 |
| Income | High | 0.3 |
| Risk | Low | 0.1 |
| Risk | Average | 0.8 |
| Risk | High | 0.3 |

• Definitions:
  – Sum will not necessarily equal 1.0
  – If ambiguous, select alternative with larger membership value
  – Aggregate with mean
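The "select the alternative with the larger membership value" definition can be sketched as picking the highest-scoring value per variable. The dictionary layout is an assumption for illustration; the membership values are those of the fuzzy example.

```python
# Membership values from the fuzzy example
memberships = {
    "Age": {"Young": 0.3, "Middle": 0.9, "Old": 0.2},
    "Income": {"Low": 0.0, "Average": 0.8, "High": 0.3},
    "Risk": {"Low": 0.1, "Average": 0.8, "High": 0.3},
}

# Memberships are degrees of belief, not probabilities, so they need not sum to 1.
for variable, values in memberships.items():
    print(variable, "memberships sum to", round(sum(values.values()), 1))

# Resolve ambiguity by taking the value with the largest membership.
crisp = {variable: max(values, key=values.get) for variable, values in memberships.items()}
print(crisp)
```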

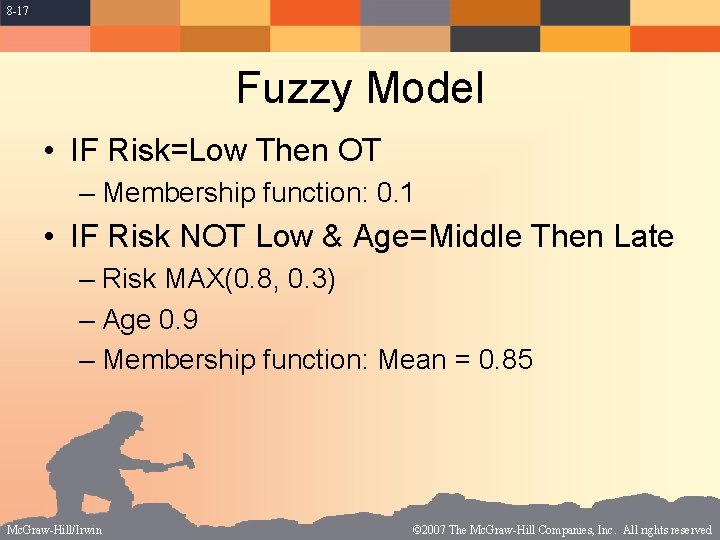

8-17 Fuzzy Model
• IF Risk = Low THEN OT
  – Membership function: 0.1
• IF Risk NOT Low & Age = Middle THEN Late
  – Risk: MAX(0.8, 0.3) = 0.8
  – Age: 0.9
  – Membership function: mean = 0.85

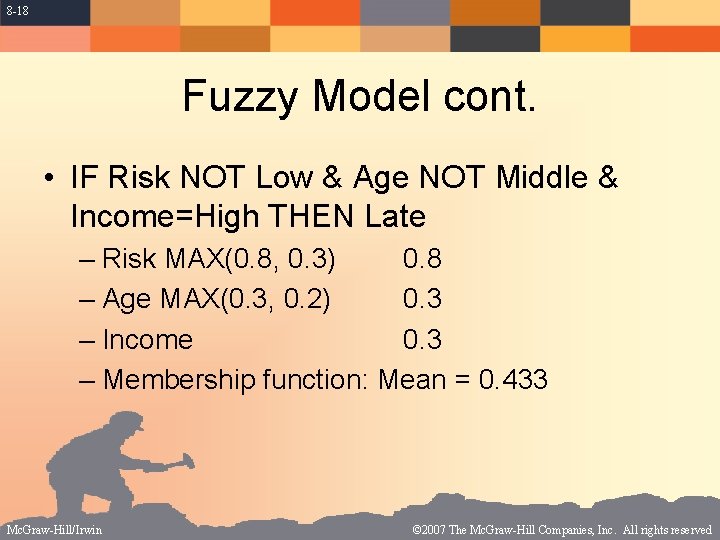

8-18 Fuzzy Model cont.
• IF Risk NOT Low & Age NOT Middle & Income = High THEN Late
  – Risk: MAX(0.8, 0.3) = 0.8
  – Age: MAX(0.3, 0.2) = 0.3
  – Income: 0.3
  – Membership function: mean = 0.467

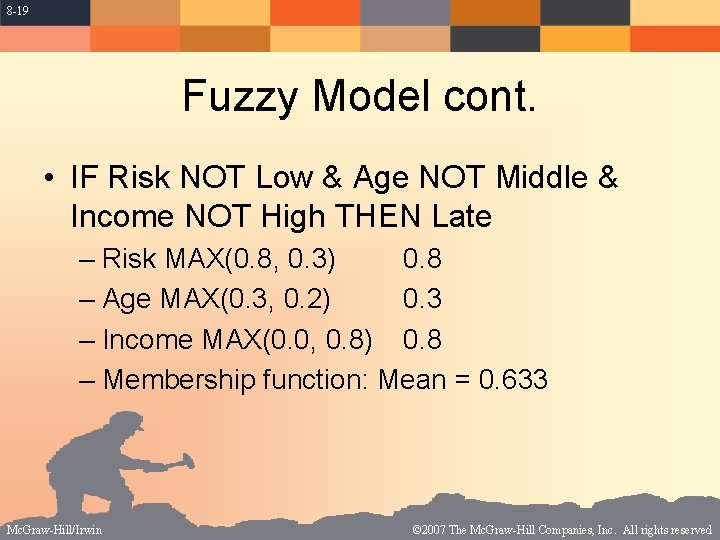

8-19 Fuzzy Model cont.
• IF Risk NOT Low & Age NOT Middle & Income NOT High THEN OT
  – Risk: MAX(0.8, 0.3) = 0.8
  – Age: MAX(0.3, 0.2) = 0.3
  – Income: MAX(0.0, 0.8) = 0.8
  – Membership function: mean = 0.633
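The per-rule arithmetic follows one pattern: a positive condition uses that value's membership, a NOT condition uses the maximum over the remaining values, and the conditions are aggregated with the mean. The sketch below applies that pattern to the example's membership values; the helper names `is_value` and `is_not` are assumptions made for illustration.

```python
from statistics import mean

# Membership values from the fuzzy example
memberships = {
    "Age": {"Young": 0.3, "Middle": 0.9, "Old": 0.2},
    "Income": {"Low": 0.0, "Average": 0.8, "High": 0.3},
    "Risk": {"Low": 0.1, "Average": 0.8, "High": 0.3},
}


def is_value(variable: str, value: str) -> float:
    """Membership of a positive condition such as Age = Middle."""
    return memberships[variable][value]


def is_not(variable: str, value: str) -> float:
    """Membership of a NOT condition: the maximum over the other values."""
    return max(m for v, m in memberships[variable].items() if v != value)


rule_membership = {
    "Rule 1": is_value("Risk", "Low"),
    "Rule 2": mean([is_not("Risk", "Low"), is_value("Age", "Middle")]),
    "Rule 3": mean([is_not("Risk", "Low"), is_not("Age", "Middle"),
                    is_value("Income", "High")]),
    "Rule 4": mean([is_not("Risk", "Low"), is_not("Age", "Middle"),
                    is_not("Income", "High")]),
}

for rule, value in rule_membership.items():
    print(f"{rule}: {value:.3f}")
```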

8-20 Fuzzy Model cont.
• Highest membership function is 0.633, for Rule 4
• Conclusion: On-time

8-21 Applications
• Inventory Prediction
• Clinical Databases
• Software Development Quality

8-22 Inventory Prediction
• Groceries
  – Maybe over 100,000 SKUs
  – Barcode data input
• Data mining to discover patterns
  – Random sample of over 1.6 million records
  – 30 months
  – 95 outlets
  – Test sample 400,000 records
• Rule induction more workable than regression
  – 28,000 rules
  – Very accurate, up to 27% improvement

8-23 Clinical Database
• Headache
  – Over 60 possible causes
• Exclusive reasoning uses negative rules
  – Use when symptom absent
• Inclusive reasoning uses positive rules
• Probabilistic rule induction expert system
  – Headache: training sample over 50,000 cases, 45 classes, 147 attributes
  – Meningitis: 1,200 samples on 41 attributes, 4 outputs

8-24 Clinical Database
• Used AQ15, C4.5
  – Average accuracy 82%
• Expert system
  – Average accuracy 92%
• Rough set rule system
  – Average accuracy 70%
• Using both positive & negative rules from rough sets
  – Average accuracy over 90%

8-25 Software Development Quality
• Telecommunications company
• Goal: find patterns in modules being developed likely to contain faults discovered by customers
  – Typical module several million lines of code
  – Probability of fault averaged 0.074
• Apply greater effort for those
  – Specification, testing, inspection

8-26 Software Quality
• Preprocessed data
• Reduced data
• Used CART (Classification & Regression Trees)
  – Could specify prior probabilities
• First model: 9 rules, 6 variables
  – Better at cross-validation
  – But variable values not available until late
• Second model: 4 rules, 2 variables
  – About same accuracy, data available earlier

8-27 Decision Trees
• Very effective & useful
• Automatic machine learning
  – Thus unbiased (but omits judgment)
• Can handle very large data sets
  – Not affected much by missing data
• Lots of software available
