Decentralized Distributed Storage System for Big Data

Decentralized Distributed Storage System for Big Data
Presenter: Wei Xie
Data-Intensive Scalable Computing Laboratory (DISCL), Computer Science Department, Texas Tech University
2016 Symposium on Big Data

Outline
• Trends in Big Data and Cloud Storage
• Decentralized storage technique
• Unistore project at Texas Tech University

Big Data Storage Requirements
• Large capacity: hundreds of terabytes of data and more
• Performance-intensive: demanding big data analytics applications, real-time response
• Data protection: protect hundreds of terabytes of data from loss

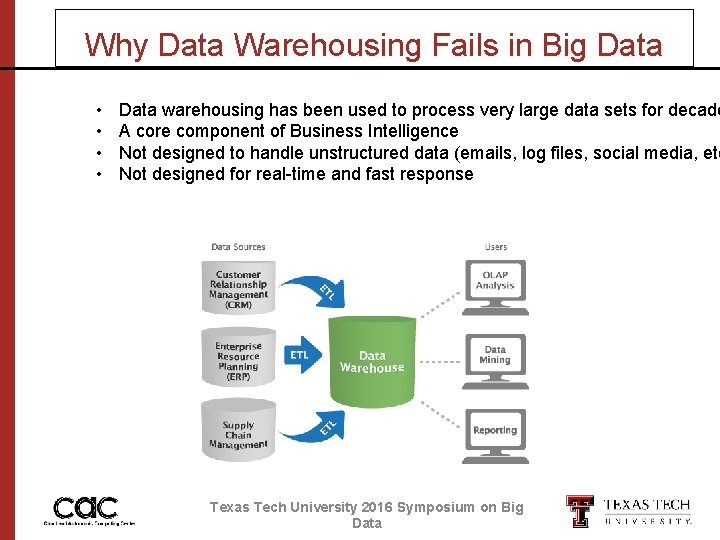

Why Data Warehousing Fails in Big Data
• Data warehousing has been used to process very large data sets for decades
• A core component of Business Intelligence
• Not designed to handle unstructured data (emails, log files, social media, etc.)
• Not designed for real-time and fast response

Comparison
• Traditional data warehousing problem
  - Retrieve the sales figures of a particular item in a chain of retail stores, where the data already exists in a database
• Big data problem
  - Cross-reference sales of a particular item with weather conditions at the time of sale, or with various customer details, and retrieve that information quickly

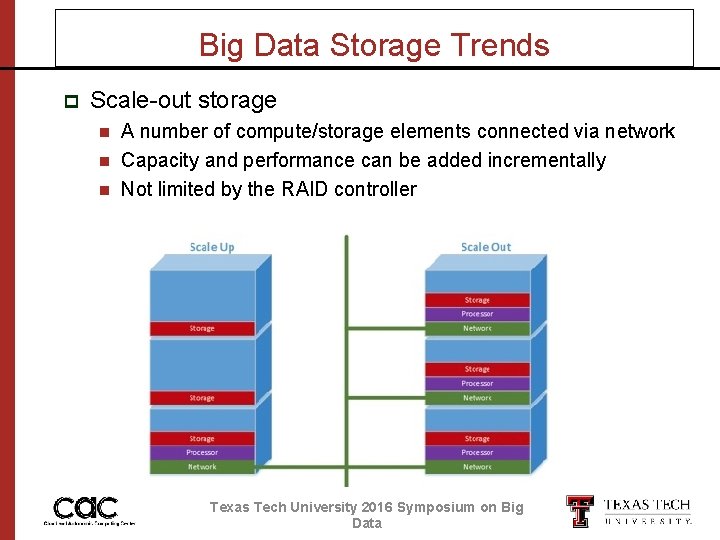

Big Data Storage Trends
• Scale-out storage
  - A number of compute/storage elements connected via a network
  - Capacity and performance can be added incrementally
  - Not limited by the RAID controller

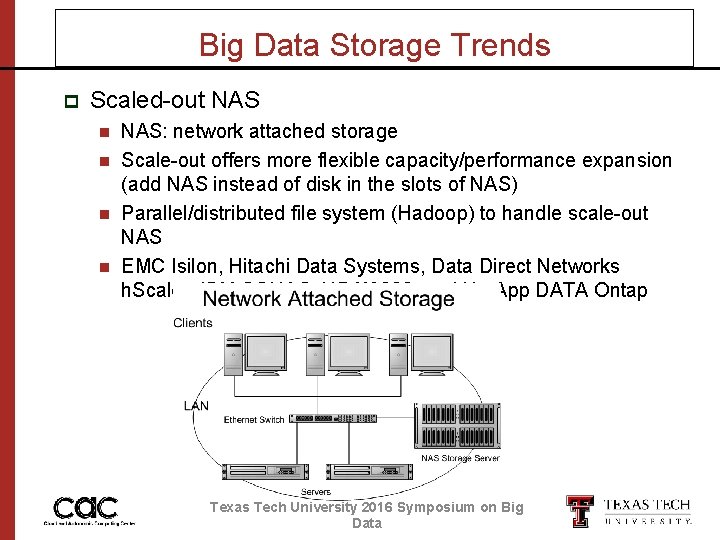

Big Data Storage Trends
• Scale-out NAS
  - NAS: network-attached storage
  - Scale-out offers more flexible capacity/performance expansion (add NAS nodes instead of adding disks to the slots of a NAS)
  - A parallel/distributed file system (Hadoop) handles the scale-out NAS
  - Examples: EMC Isilon, Hitachi Data Systems, DataDirect Networks hScaler, IBM SONAS, HP X9000, and NetApp Data ONTAP

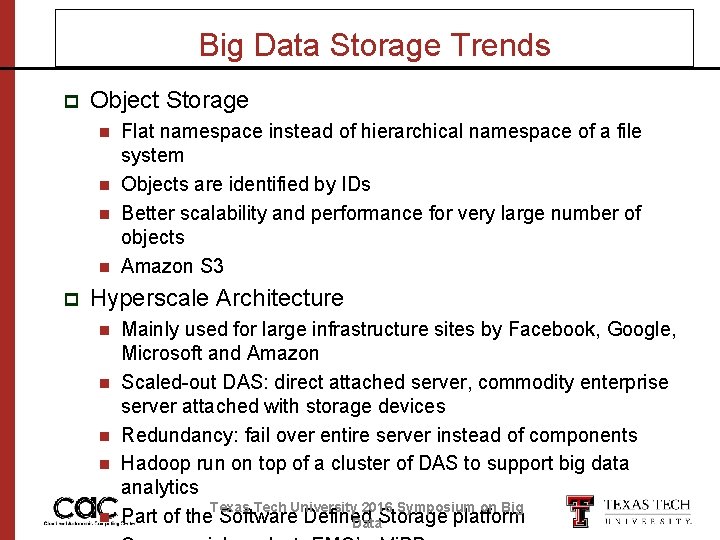

Big Data Storage Trends
• Object Storage
  - Flat namespace instead of the hierarchical namespace of a file system
  - Objects are identified by IDs
  - Better scalability and performance for a very large number of objects
  - Example: Amazon S3
• Hyperscale Architecture
  - Mainly used for large infrastructure sites by Facebook, Google, Microsoft and Amazon
  - Scale-out DAS (direct-attached storage): commodity enterprise servers with attached storage devices
  - Redundancy: fail over an entire server instead of individual components
  - Hadoop runs on top of a cluster of DAS servers to support big data analytics
  - Part of the Software Defined Storage platform

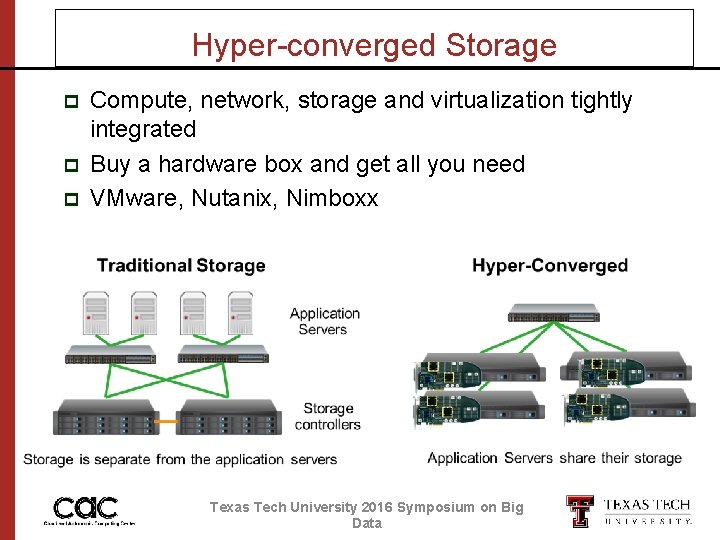

Hyper-converged Storage
• Compute, network, storage and virtualization tightly integrated
• Buy a hardware box and get all you need
• Vendors: VMware, Nutanix, Nimboxx

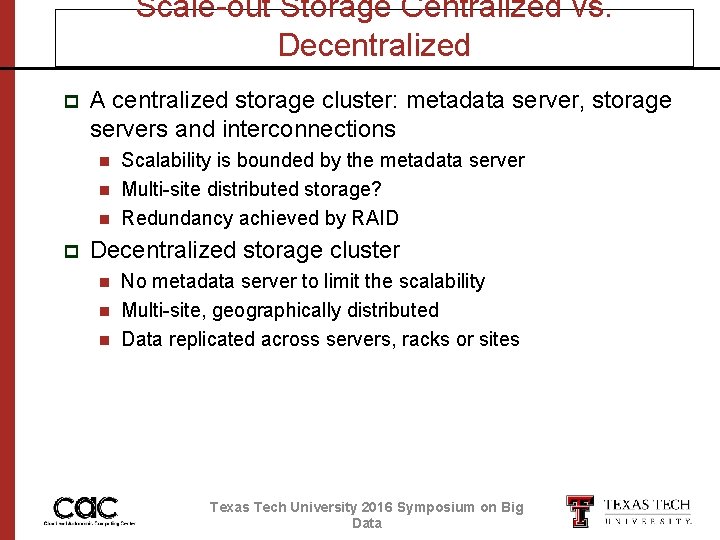

Scale-out Storage: Centralized vs. Decentralized
• A centralized storage cluster: metadata server, storage servers and interconnections
  - Scalability is bounded by the metadata server
  - Multi-site distributed storage?
  - Redundancy achieved by RAID
• Decentralized storage cluster
  - No metadata server to limit the scalability
  - Multi-site, geographically distributed
  - Data replicated across servers, racks or sites

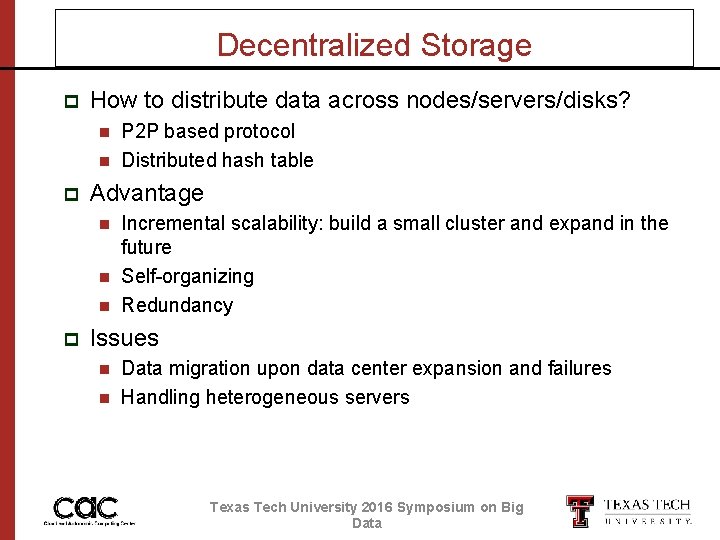

Decentralized Storage
• How to distribute data across nodes/servers/disks?
  - P2P-based protocol
  - Distributed hash table
• Advantages
  - Incremental scalability: build a small cluster and expand in the future
  - Self-organizing
  - Redundancy
• Issues
  - Data migration upon data center expansion and failures
  - Handling heterogeneous servers

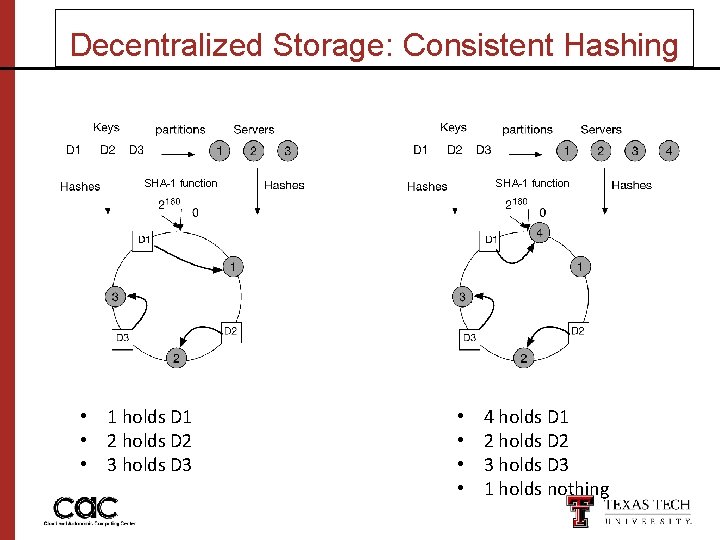

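Decentralized Storage: Consistent Hashing
• Ring with servers 1, 2 and 3 (positions computed with the SHA-1 function)
  - Server 1 holds D1
  - Server 2 holds D2
  - Server 3 holds D3
• Ring after server 4 joins (same SHA-1 function)
  - Server 4 holds D1
  - Server 2 holds D2
  - Server 3 holds D3
  - Server 1 holds nothing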
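The ring on this slide can be sketched in a few lines of Python. This is a minimal illustration, not the code of any particular storage system: the class name `HashRing`, the server names and the rule "a key belongs to the first server at or after its SHA-1 position" are assumptions made for the example. Which server ends up holding which of D1 to D3 depends on the actual hash values, so it will not necessarily match the figure.

```python
import bisect
import hashlib


def sha1_position(name: str) -> int:
    """Map a string to a point on the ring using SHA-1."""
    return int(hashlib.sha1(name.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """A bare-bones consistent hashing ring (one point per server)."""

    def __init__(self, servers=()):
        self._points = []   # sorted ring positions
        self._owner = {}    # ring position -> server name
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        """Place a server on the ring at its SHA-1 position."""
        point = sha1_position(server)
        bisect.insort(self._points, point)
        self._owner[point] = server

    def lookup(self, key: str) -> str:
        """Return the first server at or after the key's position, wrapping around."""
        if not self._points:
            raise LookupError("ring is empty")
        idx = bisect.bisect_left(self._points, sha1_position(key))
        return self._owner[self._points[idx % len(self._points)]]


# Hypothetical server and object names, chosen only for the illustration.
ring = HashRing(["server-1", "server-2", "server-3"])
keys = ["D1", "D2", "D3"]
before = {k: ring.lookup(k) for k in keys}

ring.add("server-4")
after = {k: ring.lookup(k) for k in keys}

# Only keys whose successor became the new server change owner.
moved = [k for k in keys if before[k] != after[k]]
print("before:", before)
print("after: ", after)
print("moved: ", moved)
```

Any key that changes owner when `server-4` joins moves to `server-4` itself; keys owned by other servers stay where they are, which is the point of the scheme.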

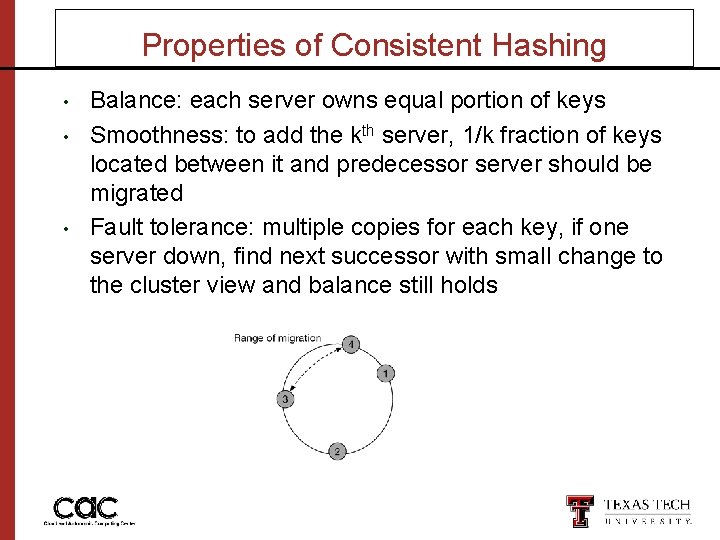

Properties of Consistent Hashing
• Balance: each server owns an equal portion of the keys
• Smoothness: when the kth server is added, only about a 1/k fraction of the keys (those located between it and its predecessor server) needs to be migrated
• Fault tolerance: multiple copies are kept for each key; if one server goes down, the next successor is used, with only a small change to the cluster view, and balance still holds
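The smoothness and fault-tolerance properties can be checked empirically with a small experiment. The sketch below is an assumption-laden illustration: it uses 100 virtual points per server (a common way to approximate balance, not something stated on the slide), hypothetical server and object names, and places replicas on the next distinct servers clockwise from the key.

```python
import bisect
import hashlib


def position(name: str) -> int:
    """SHA-1 position of a name on the ring."""
    return int(hashlib.sha1(name.encode("utf-8")).hexdigest(), 16)


def build_ring(servers, vnodes=100):
    """Return a sorted list of (position, server) pairs, `vnodes` points per server."""
    return sorted((position(f"{s}#{i}"), s) for s in servers for i in range(vnodes))


def replicas(ring, key, copies=2):
    """Walk clockwise from the key and collect `copies` distinct servers."""
    start = bisect.bisect_left(ring, (position(key), ""))
    found = []
    for step in range(len(ring)):
        server = ring[(start + step) % len(ring)][1]
        if server not in found:
            found.append(server)
            if len(found) == copies:
                break
    return found


keys = [f"object-{i}" for i in range(10000)]
old_ring = build_ring([f"server-{i}" for i in range(1, 5)])  # 4 servers
new_ring = build_ring([f"server-{i}" for i in range(1, 6)])  # add a 5th server

# Smoothness: compare primary owners before and after the expansion.
old_primary = {k: replicas(old_ring, k, 1)[0] for k in keys}
new_primary = {k: replicas(new_ring, k, 1)[0] for k in keys}
moved = [k for k in keys if old_primary[k] != new_primary[k]]
moved_fraction = len(moved) / len(keys)
print(f"moved fraction: {moved_fraction:.3f} (ideal for k = 5 is 1/5)")

# Fault tolerance: if the primary fails, the old second replica takes over.
first, second = replicas(new_ring, "object-0", 2)
survivors = [entry for entry in new_ring if entry[1] != first]
print("new primary after failure:", replicas(survivors, "object-0", 1)[0])
```

The measured fraction will hover around 1/5 rather than hit it exactly, because the ring positions are pseudo-random; more virtual points per server tighten the spread.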

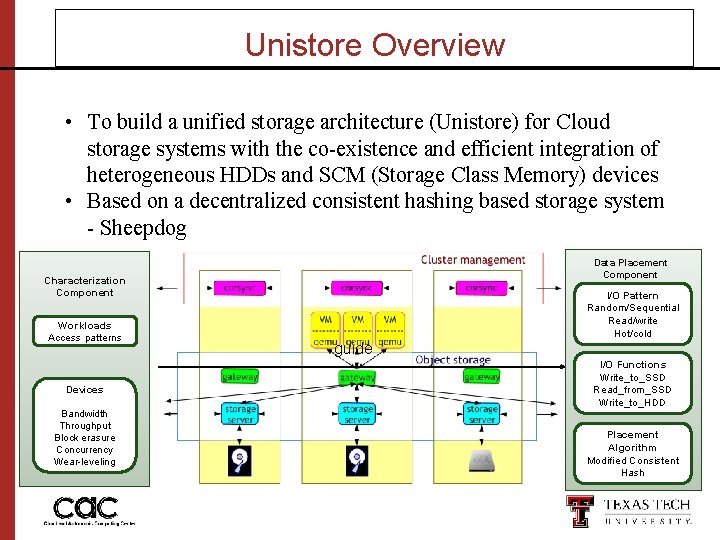

Unistore Overview
• To build a unified storage architecture (Unistore) for Cloud storage systems with the co-existence and efficient integration of heterogeneous HDDs and SCM (Storage Class Memory) devices
• Based on Sheepdog, a decentralized storage system built on consistent hashing

Architecture diagram labels:
• Characterization Component
  - Workloads: access patterns
  - Devices: bandwidth, throughput, block erasure, concurrency, wear-leveling
• Data Placement Component (guided by the characterization)
  - I/O pattern: random/sequential, read/write, hot/cold
  - I/O functions: Write_to_SSD, Read_from_SSD, Write_to_HDD
  - Placement algorithm: modified consistent hash

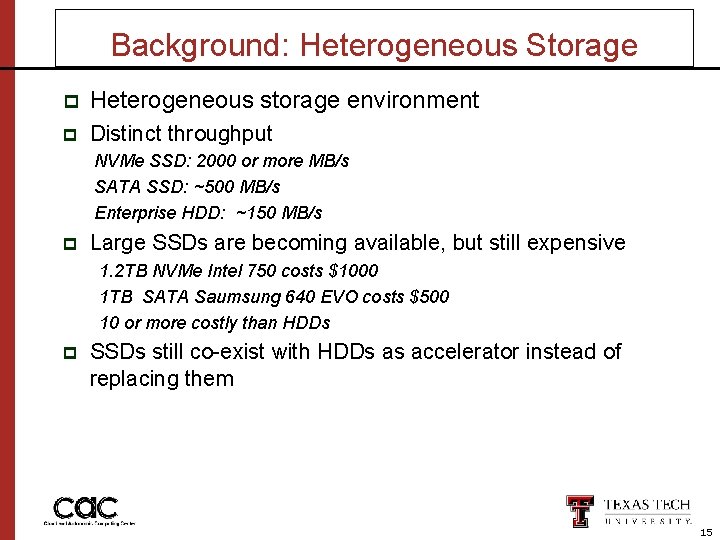

Background: Heterogeneous Storage
• Heterogeneous storage environment
• Distinct throughput
  - NVMe SSD: 2000 MB/s or more
  - SATA SSD: ~500 MB/s
  - Enterprise HDD: ~150 MB/s
• Large SSDs are becoming available, but are still expensive
  - 1.2 TB NVMe Intel 750 costs $1000
  - 1 TB SATA Samsung 640 EVO costs $500
  - 10x or more costly than HDDs
• SSDs still co-exist with HDDs as accelerators instead of replacing them
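To make the gap concrete, the figures on this slide can be turned into cost per terabyte and throughput relative to an HDD. The snippet below only does arithmetic on the numbers quoted above (treating "2000 or more" as 2000 and the "~" values as exact); the dictionary names are made up for the example.

```python
# (price in USD, capacity in TB), as quoted on the slide.
ssd_prices = {
    "NVMe SSD (Intel 750)": (1000, 1.2),
    "SATA SSD (Samsung)": (500, 1.0),
}

# Approximate throughput in MB/s, as quoted on the slide.
throughput_mb_s = {
    "NVMe SSD": 2000,
    "SATA SSD": 500,
    "Enterprise HDD": 150,
}

cost_per_tb = {name: price / tb for name, (price, tb) in ssd_prices.items()}
speedup_vs_hdd = {
    name: mb_s / throughput_mb_s["Enterprise HDD"]
    for name, mb_s in throughput_mb_s.items()
}

for name, cost in cost_per_tb.items():
    print(f"{name}: ${cost:.0f} per TB")
for name, ratio in speedup_vs_hdd.items():
    print(f"{name}: {ratio:.1f}x HDD throughput")
```

With these inputs the NVMe device comes out at roughly $833 per TB and about 13x the HDD throughput, and the SATA SSD at $500 per TB and about 3.3x.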

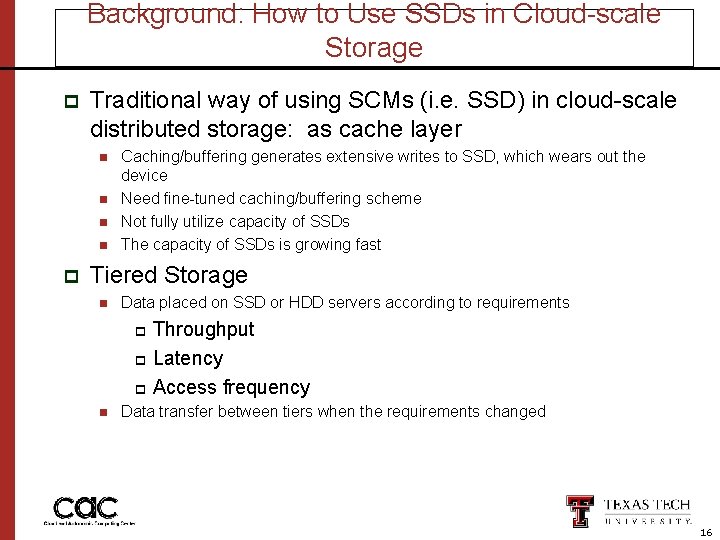

Background: How to Use SSDs in Cloud-scale Storage
• Traditional way of using SCMs (i.e. SSDs) in cloud-scale distributed storage: as a cache layer
  - Caching/buffering generates extensive writes to the SSD, which wears out the device
  - Needs a fine-tuned caching/buffering scheme
  - Does not fully utilize the capacity of SSDs
  - The capacity of SSDs is growing fast
• Tiered Storage
  - Data placed on SSD or HDD servers according to requirements: throughput, latency, access frequency
  - Data transferred between tiers when the requirements change
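The tiering rule above (place by throughput, latency and access frequency, then move data when requirements change) might look like the following sketch. Everything specific here is an assumption for illustration: the `Requirements` fields, the thresholds `HDD_LATENCY_MS` and `HOT_ACCESSES_PER_DAY`, and the two-tier model are hypothetical and are not taken from Unistore.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Approximate HDD throughput from the previous slide; the other two
# thresholds are hypothetical values picked for the example.
HDD_THROUGHPUT_MB_S = 150.0
HDD_LATENCY_MS = 10.0          # assumed latency an HDD tier can meet
HOT_ACCESSES_PER_DAY = 100.0   # assumed cut-off for "hot" data


@dataclass
class Requirements:
    min_throughput_mb_s: float
    max_latency_ms: float
    accesses_per_day: float


def choose_tier(req: Requirements) -> str:
    """Pick the SSD tier if any requirement exceeds what the HDD tier offers."""
    needs_ssd = (
        req.min_throughput_mb_s > HDD_THROUGHPUT_MB_S
        or req.max_latency_ms < HDD_LATENCY_MS
        or req.accesses_per_day >= HOT_ACCESSES_PER_DAY
    )
    return "ssd" if needs_ssd else "hdd"


def plan_migration(current_tier: str, req: Requirements) -> Optional[Tuple[str, str]]:
    """Return (source, target) if the data should move between tiers, else None."""
    target = choose_tier(req)
    return None if target == current_tier else (current_tier, target)


cold = Requirements(min_throughput_mb_s=100.0, max_latency_ms=50.0, accesses_per_day=5.0)
now_hot = Requirements(min_throughput_mb_s=100.0, max_latency_ms=50.0, accesses_per_day=500.0)

print(choose_tier(cold))                # data starts on the HDD tier
print(plan_migration("hdd", now_hot))   # requirements changed: move to SSD
```

A real system would also weigh free capacity and migration cost before moving data; this sketch only captures the decision rule.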

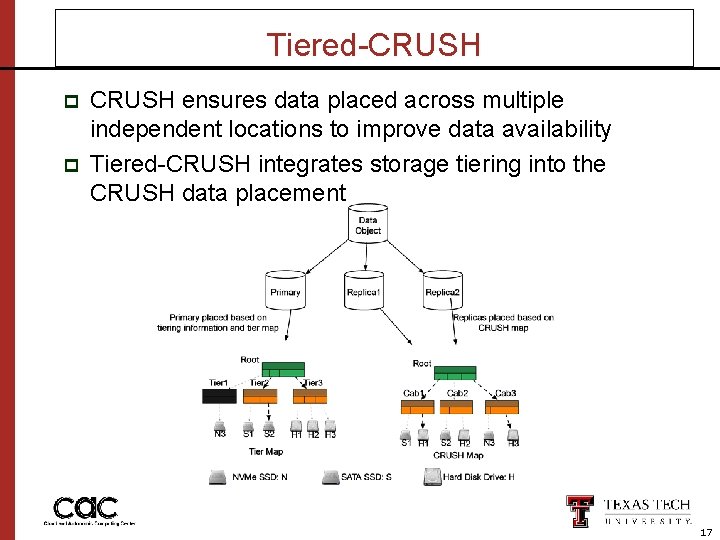

Tiered-CRUSH
• CRUSH ensures data is placed across multiple independent locations to improve data availability
• Tiered-CRUSH integrates storage tiering into the CRUSH data placement

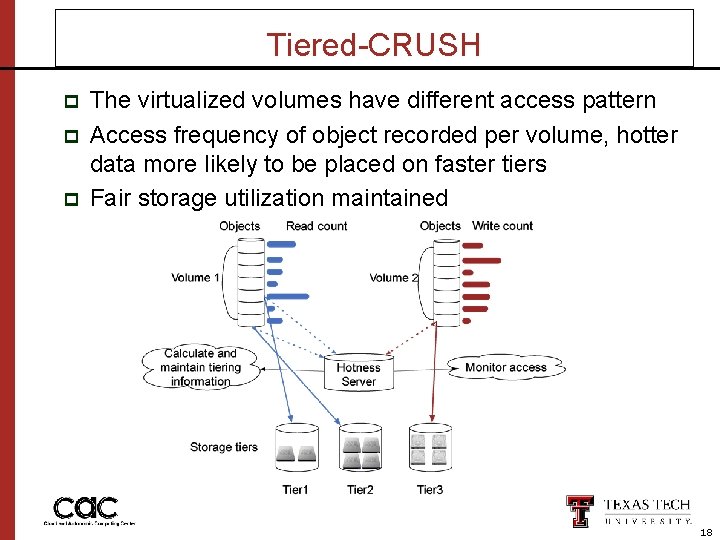

Tiered-CRUSH
• The virtualized volumes have different access patterns
• Access frequency of objects is recorded per volume; hotter data is more likely to be placed on faster tiers
• Fair storage utilization is maintained
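One way to picture "hotter data more likely on faster tiers" is a deterministic weighted draw in which a device's weight depends on both its capacity and the heat of the object. The sketch below is not the Tiered-CRUSH algorithm and does not use the CRUSH library; it is a generic weighted hash selection with an assumed weight formula (`capacity * (bandwidth / max_bandwidth) ** heat`), and it does not try to enforce fair utilization. The device figures are the ones listed on the evaluation slide, one device per type.

```python
import hashlib
import math

# name -> (capacity in GB, read bandwidth in MB/s)
DEVICES = {
    "nvme-ssd": (128, 2000),
    "sata-ssd": (256, 540),
    "hdd": (1000, 156),
}
MAX_BANDWIDTH = max(bw for _, bw in DEVICES.values())


def unit_draw(object_id: str, device: str) -> float:
    """Deterministic pseudo-random number in (0, 1] for an (object, device) pair."""
    digest = hashlib.sha1(f"{object_id}/{device}".encode("utf-8")).digest()
    return (int.from_bytes(digest[:8], "big") + 1) / 2**64


def weight(device: str, heat: float) -> float:
    """Assumed formula: heat 0 weighs by capacity only, heat 1 also by relative speed."""
    capacity, bandwidth = DEVICES[device]
    return capacity * (bandwidth / MAX_BANDWIDTH) ** heat


def place(object_id: str, heat: float) -> str:
    """Pick the device with the largest ln(u) / weight, so P(device) is proportional to weight."""
    return max(
        DEVICES,
        key=lambda d: math.log(unit_draw(object_id, d)) / weight(d, heat),
    )


ids = [f"obj-{i}" for i in range(5000)]
hot_on_nvme = sum(place(i, heat=1.0) == "nvme-ssd" for i in ids)
cold_on_nvme = sum(place(i, heat=0.0) == "nvme-ssd" for i in ids)
print(f"hot objects on NVMe:  {hot_on_nvme} of {len(ids)}")
print(f"cold objects on NVMe: {cold_on_nvme} of {len(ids)}")
```

Because the draw depends only on the object ID and device name, any client can recompute the placement without a metadata server, which is the property the decentralized design relies on.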

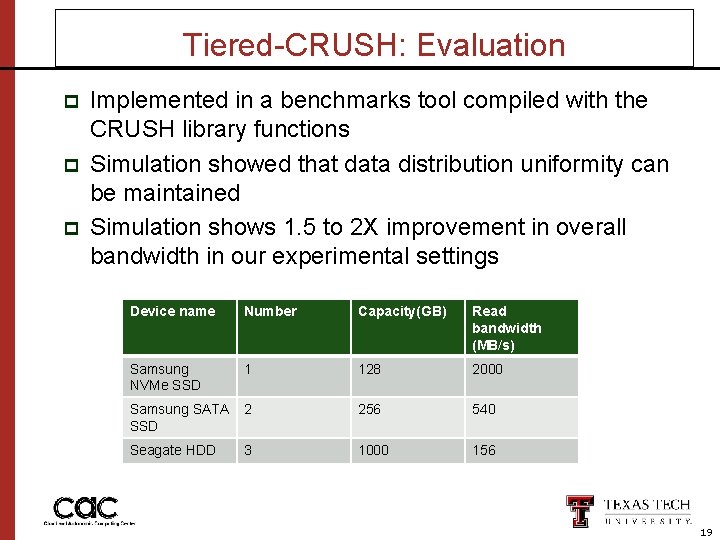

Tiered-CRUSH: Evaluation
• Implemented in a benchmark tool compiled with the CRUSH library functions
• Simulation showed that data distribution uniformity can be maintained
• Simulation showed a 1.5x to 2x improvement in overall bandwidth in our experimental settings

| Device name | Number | Capacity (GB) | Read bandwidth (MB/s) |
|---|---|---|---|
| Samsung NVMe SSD | 1 | 128 | 2000 |
| Samsung SATA SSD | 2 | 256 | 540 |
| Seagate HDD | 3 | 1000 | 156 |

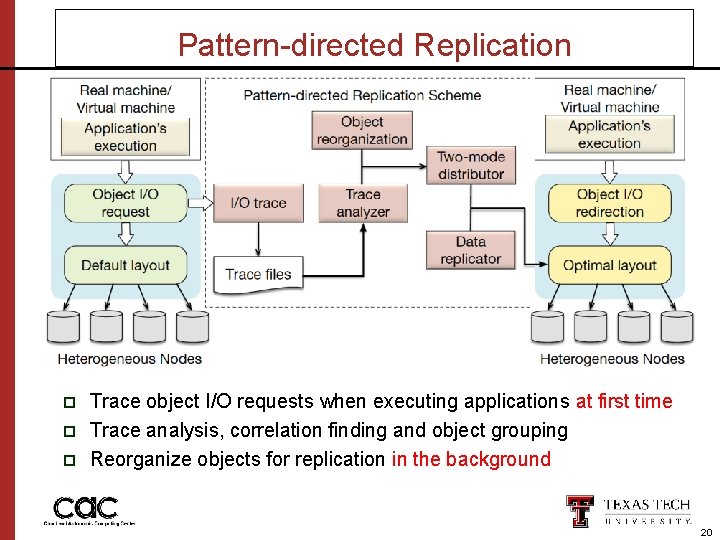

Pattern-directed Replication
• Trace object I/O requests when applications are executed for the first time
• Trace analysis, correlation finding and object grouping
• Reorganize objects for replication in the background
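The trace-analysis step can be illustrated with a simple co-access count: objects that repeatedly appear close together in the trace are treated as correlated and grouped. This is only a sketch of the general idea, not the presenter's method; the sliding-window size, the `min_count` threshold and the union-find grouping are all assumptions made for the example.

```python
from collections import Counter
from itertools import combinations


def co_access_counts(trace, window=2):
    """Count how often each pair of objects appears within the same sliding window."""
    counts = Counter()
    for start in range(len(trace) - window + 1):
        distinct = sorted(set(trace[start:start + window]))
        for a, b in combinations(distinct, 2):
            counts[(a, b)] += 1
    return counts


def group_objects(trace, window=2, min_count=2):
    """Merge objects whose co-access count reaches `min_count` (union-find)."""
    parent = {obj: obj for obj in trace}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for (a, b), n in co_access_counts(trace, window).items():
        if n >= min_count:
            parent[find(a)] = find(b)

    groups = {}
    for obj in parent:
        groups.setdefault(find(obj), set()).add(obj)
    return sorted(sorted(group) for group in groups.values())


# Hypothetical trace of object IDs in the order they were requested.
trace = ["a", "b", "a", "b", "x", "y", "x", "y"]
groups = group_objects(trace)
print(groups)  # [['a', 'b'], ['x', 'y']]
```

Each resulting group is a candidate for being stored together in a reorganized replica, so that a later run with the same access pattern can fetch correlated objects from one place.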

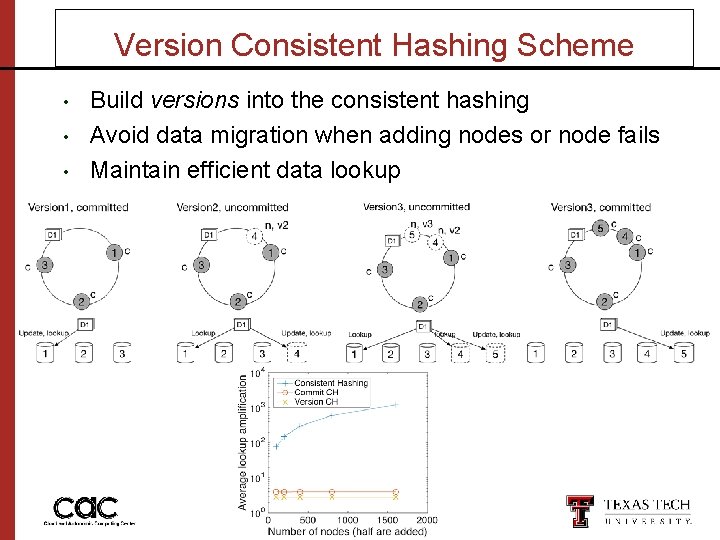

Version Consistent Hashing Scheme
• Build versions into the consistent hashing
• Avoid data migration when nodes are added or fail
• Maintain efficient data lookup

Conclusions
• Decentralized storage is becoming the standard in cloud storage
• The Tiered-CRUSH algorithm achieves better I/O performance and higher data availability at the same time for heterogeneous storage systems
• Version consistent hashing scheme for improving the manageability of data centers
• PRS for high-performance data replication by reorganizing the placement of data replicas

Thank you! Questions?
Visit discl.cs.ttu.edu for more details
