
Data-Parallel Algorithms and Multithreading
Gregory S. Johnson
johnsong@cs.utexas.edu

Topics
• expressing v. exploiting parallelism
• data parallel algorithms, case study: MPIRE (part 1)
• hardware multithreading, case study: MPIRE (part 2)
• Culler’s multithreading rebuttal

Expressing v. Exploiting Parallelism
• there exists a two-part problem in achieving high parallel efficiency:
  1. expressing the problem in terms of parallelism; doing so may require an algorithm very different from the serial version (user)
  2. exploiting this parallelism on a specific machine (user + compiler)
• the “family tree” of parallel architectures is both wide and deep
• parallel APIs abound: PVM, MPI, Shmem, OpenMP, CRAFT, HPF, F--, co-array Fortran, ZPL

Data-Parallel Algorithms

Data Parallel Algorithms
• one of the very first MPPs is described
• SIMD machine with modest processors
• machine used as a “co-processor” hosted by a conventional workstation
• dated model of parallelism (one data element per PE, modest ratio of computation to communication)
• a few of the presented parallel algorithms are timeless

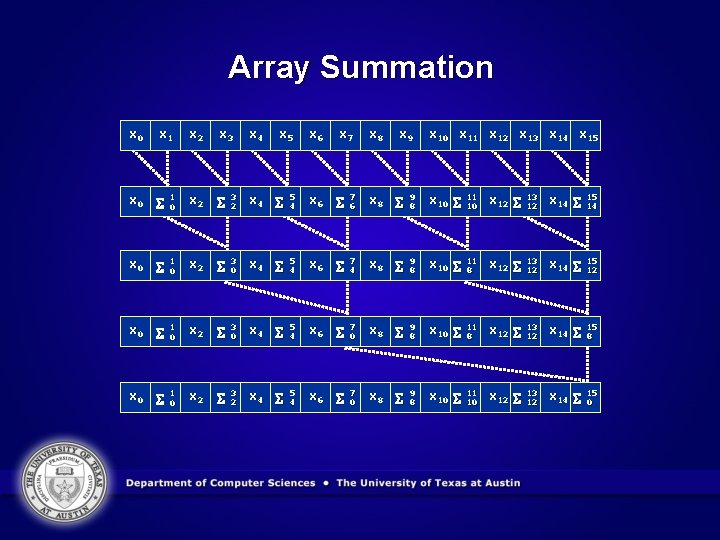

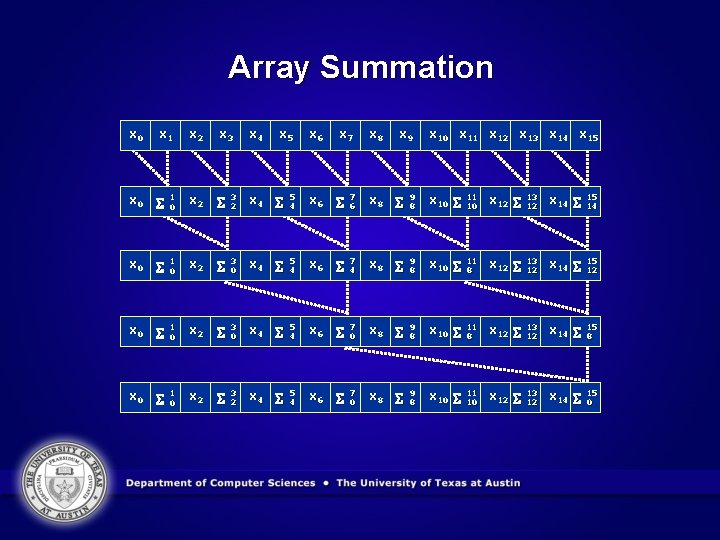

Array Summation
[Figure: 16 elements x0 ... x15 summed in place by a binary tree in four steps. After step 1 the odd positions hold the pairwise sums Σ(0..1), Σ(2..3), ..., Σ(14..15); after step 2 positions 3, 7, 11, and 15 hold Σ(0..3), Σ(4..7), Σ(8..11), and Σ(12..15); after step 3 positions 7 and 15 hold Σ(0..7) and Σ(8..15); after step 4 position 15 holds Σ(0..15).]
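The tree summation in the figure can be sketched in serial C as follows. This is a minimal illustration, not code from the original article: the function name `tree_sum` is hypothetical, the array length is assumed to be a power of two, and each pass of the outer loop stands in for one data-parallel step in which every inner iteration would run on its own PE.

```c
#include <stddef.h>

/* Sum n values in place with a binary tree (n assumed to be a power of two).
 * Each pass of the outer loop is one data-parallel step: the iterations of
 * the inner loop are independent of one another. The total ends up in
 * x[n - 1], as in the figure. */
double tree_sum(double *x, size_t n)
{
    for (size_t stride = 1; stride < n; stride *= 2) {
        /* Positions 2*stride-1, 4*stride-1, ... add in the partial sum
         * held `stride` positions to their left. */
        for (size_t k = 2 * stride - 1; k < n; k += 2 * stride) {
            x[k] += x[k - stride];
        }
    }
    return n ? x[n - 1] : 0.0;
}
```

For 16 elements the outer loop runs four times (stride 1, 2, 4, 8), which matches the four steps of the figure; a serial sum would need 15 dependent additions.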

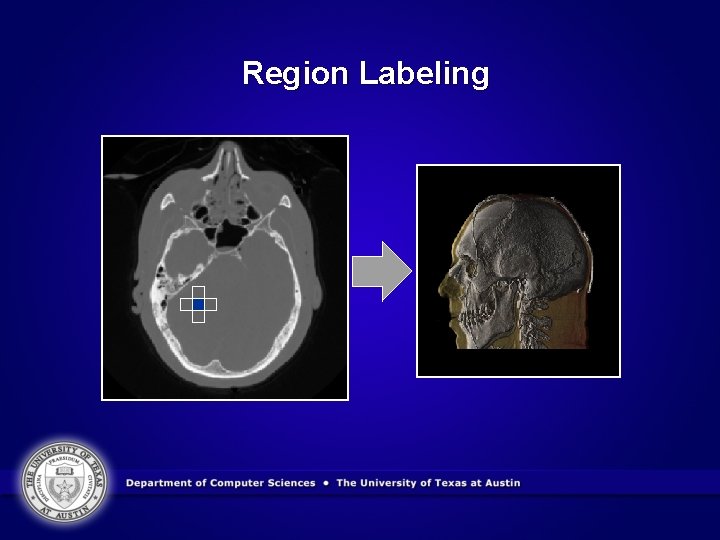

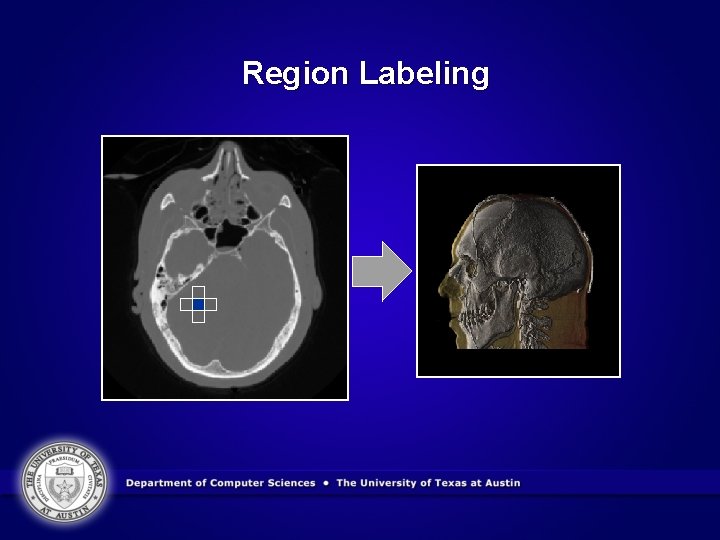

Region Labeling

Observations
• value placed on processors is quite low and the algorithms show this
• today’s trend is toward smaller numbers of more capable processors, not larger numbers of less capable processors (interconnects are not keeping pace with CPU development)
• Thinking Machines tried to reverse course with the CM-5 (MIMD) but perhaps too late (founded 1983, folded 1993)
• interesting point that as data grows arbitrarily large, data parallelism is much more abundant than instruction level parallelism (true for scientific codes, but...)
• “we would be by no means surprised if some of the algorithms described in this article begin to look quite ‘old-fashioned’ in the years to come”

Case Study: MPIRE
• software implementation of direct volume rendering algorithms including splatting and ray casting
• designed to render high quality images of multi-GB, multiresolution volume datasets with R, G, B, α per sample
• designed to express fine grained (per pixel), medium grained (subvolume), and coarse grained (per frame) parallelism
• runs relatively efficiently on 32- and 64-bit SMPs, MPPs, COWs, clustered SMPs, and uniprocessors

Case Study: MPIRE

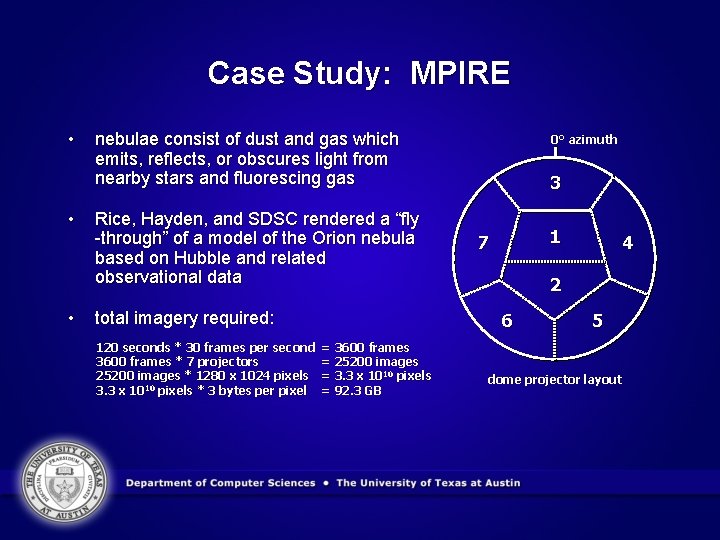

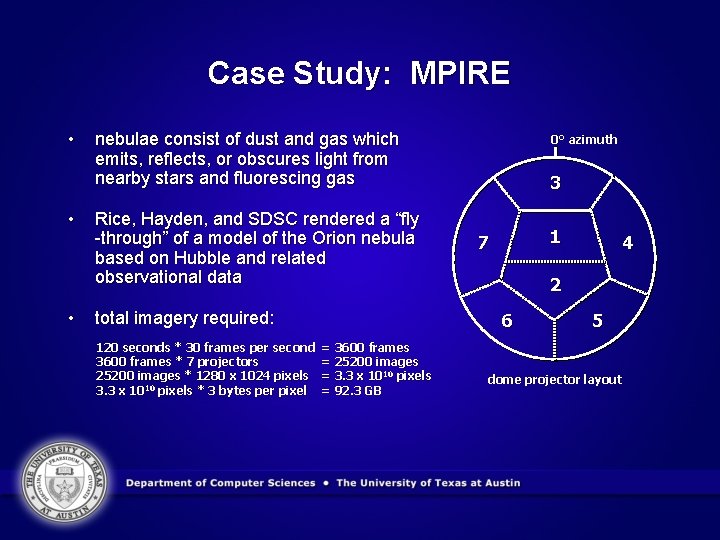

Case Study: MPIRE
• nebulae consist of dust and gas which emit, reflect, or obscure light from nearby stars and fluorescing gas
• Rice, Hayden, and SDSC rendered a “fly-through” of a model of the Orion nebula based on Hubble and related observational data
• total imagery required:
  120 seconds * 30 frames per second = 3600 frames
  3600 frames * 7 projectors = 25200 images
  25200 images * 1280 x 1024 pixels = 3.3 x 10^10 pixels
  3.3 x 10^10 pixels * 3 bytes per pixel = 92.3 GB
[Figure: dome projector layout, projectors numbered 1 to 7, with 0° azimuth marked]
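The storage arithmetic on this slide can be checked with a few lines of C. This is only a sketch: the function name `imagery_bytes` and its parameter names are made up for illustration. The 92.3 GB figure is reproduced when a gigabyte is taken as 2^30 bytes, which is an assumption about the slide's units.

```c
#include <stdint.h>

/* Total bytes of imagery for a multi-projector animation.
 * All parameters are plain counts; no compression is assumed. */
uint64_t imagery_bytes(uint64_t seconds, uint64_t frames_per_second,
                       uint64_t projectors, uint64_t width, uint64_t height,
                       uint64_t bytes_per_pixel)
{
    uint64_t frames = seconds * frames_per_second;   /* 3600 for the slide */
    uint64_t images = frames * projectors;           /* 25200 */
    uint64_t pixels = images * width * height;       /* about 3.3 x 10^10 */
    return pixels * bytes_per_pixel;
}
```

Calling `imagery_bytes(120, 30, 7, 1280, 1024, 3)` gives 99,090,432,000 bytes, and dividing by 2^30 gives roughly 92.3.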

Case Study: MPIRE

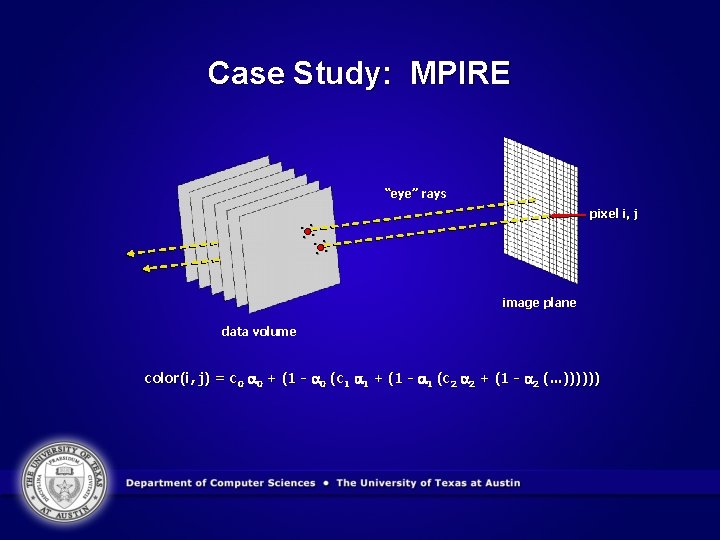

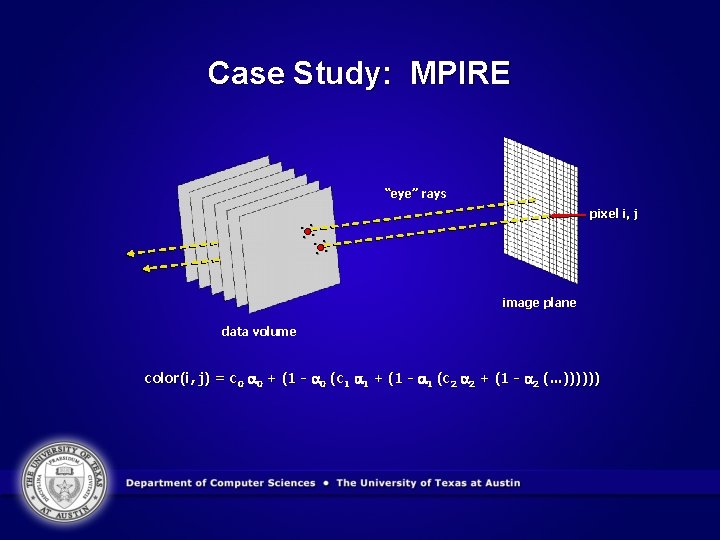

Case Study: MPIRE
[Figure: “eye” rays cast from the image plane through pixel (i, j) into the data volume]
$$\mathrm{color}(i,j) = c_0\alpha_0 + (1-\alpha_0)\bigl(c_1\alpha_1 + (1-\alpha_1)\bigl(c_2\alpha_2 + (1-\alpha_2)(\ldots)\bigr)\bigr)$$
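The nested compositing expression for a pixel can be evaluated front to back with a running product, as in the sketch below. This is an illustration, not MPIRE source code: the name `composite_ray` is hypothetical, a single color channel is assumed, and `alpha[k]` is assumed to be the opacity of sample k in the range 0 to 1, with sample 0 nearest the eye.

```c
#include <stddef.h>

/* Front-to-back compositing of n samples along one ray.
 * Expanding c0*a0 + (1-a0)*(c1*a1 + (1-a1)*(...)) shows that sample k is
 * weighted by a_k times the product of (1 - a_m) for all m < k. */
double composite_ray(const double *c, const double *alpha, size_t n)
{
    double color = 0.0;
    double transmittance = 1.0;   /* product of (1 - alpha) seen so far */

    for (size_t k = 0; k < n; k++) {
        color += transmittance * c[k] * alpha[k];
        transmittance *= 1.0 - alpha[k];
    }
    return color;
}
```

Because each ray reads the volume but writes only its own pixel, many such loops can run at once, one per pixel.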

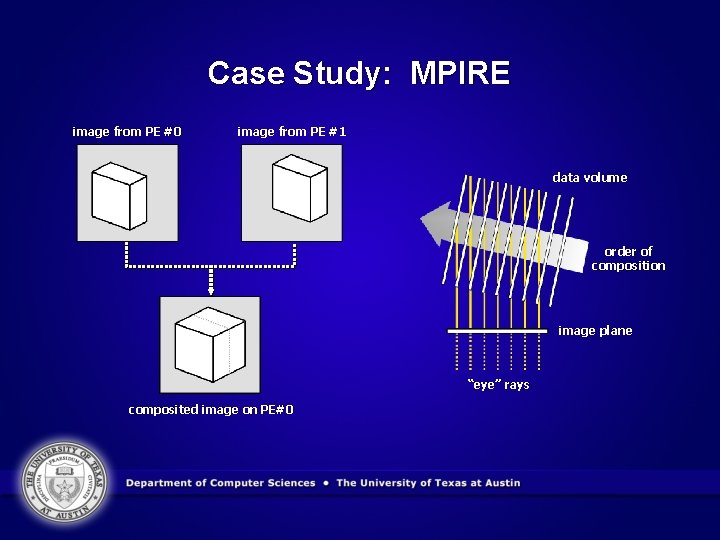

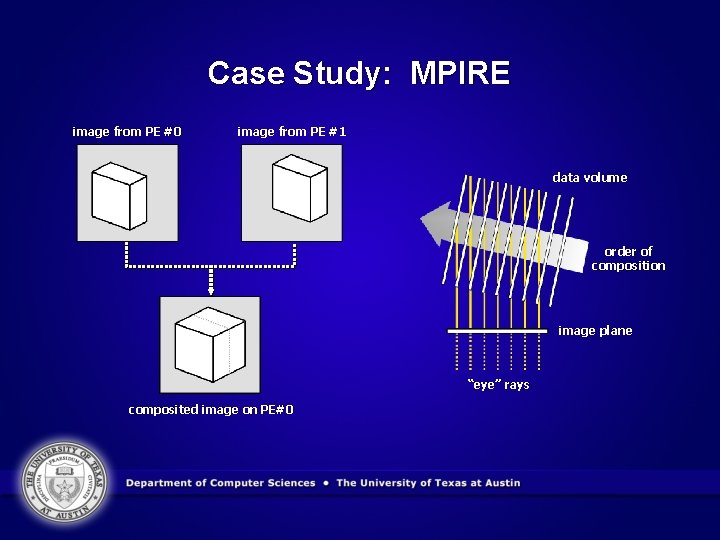

Case Study: MPIRE
[Figure: “eye” rays from the image plane through the data volume; labels: image from PE #0, image from PE #1, order of composition, composited image on PE #0]

Hardware Multithreading

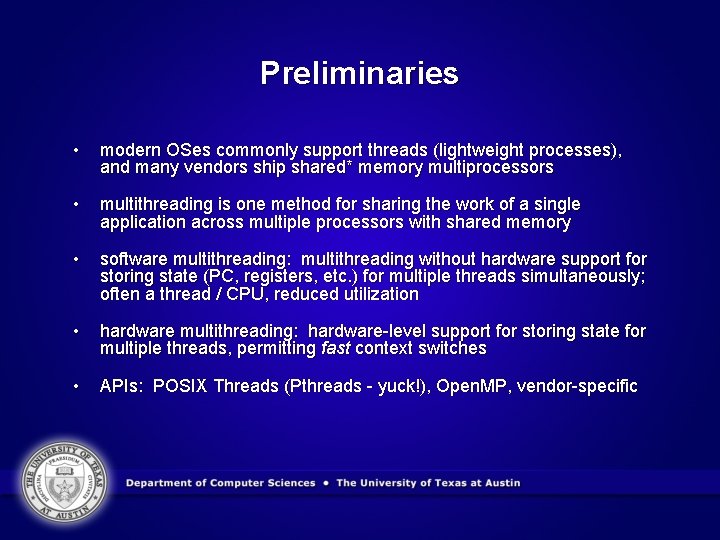

Preliminaries
• modern OSes commonly support threads (lightweight processes), and many vendors ship shared-memory multiprocessors
• multithreading is one method for sharing the work of a single application across multiple processors with shared memory
• software multithreading: multithreading without hardware support for storing state (PC, registers, etc.) for multiple threads simultaneously; often one thread per CPU, with reduced utilization
• hardware multithreading: hardware-level support for storing state for multiple threads, permitting fast context switches
• APIs: POSIX Threads (Pthreads - yuck!), OpenMP, vendor-specific

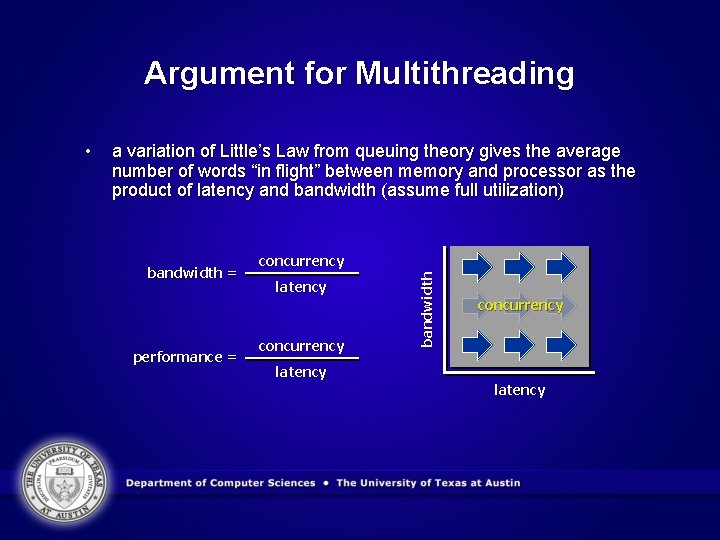

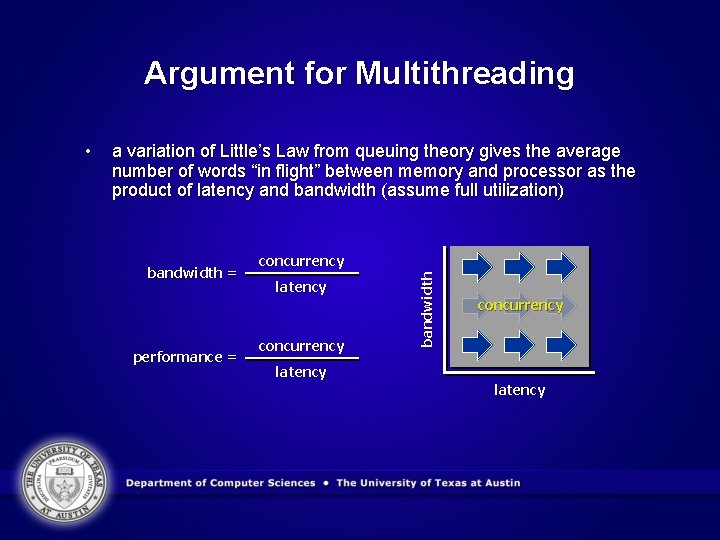

Argument for Multithreading
a variation of Little’s Law from queuing theory gives the average number of words “in flight” between memory and processor as the product of latency and bandwidth (assume full utilization):
$$\text{concurrency} = \text{latency} \times \text{bandwidth}$$
$$\text{bandwidth} = \text{performance} = \frac{\text{concurrency}}{\text{latency}}$$
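A small worked example may make the relation concrete. The two helper functions below are hypothetical, and the units (cycles and words per cycle) are an assumption chosen for illustration rather than figures taken from the slides.

```c
/* Little's Law: words in flight = latency * bandwidth. */
double words_in_flight(double latency_cycles, double words_per_cycle)
{
    return latency_cycles * words_per_cycle;
}

/* The same law rearranged: the bandwidth a processor can sustain is
 * bounded by the concurrency it can keep outstanding. */
double sustained_bandwidth(double concurrency_words, double latency_cycles)
{
    return concurrency_words / latency_cycles;
}
```

With a 100-cycle memory latency, for instance, keeping one word per cycle flowing needs 100 references outstanding at once; a processor that can hold only 50 outstanding references sustains at most 0.5 words per cycle. Many threads per processor are one way to supply that concurrency.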

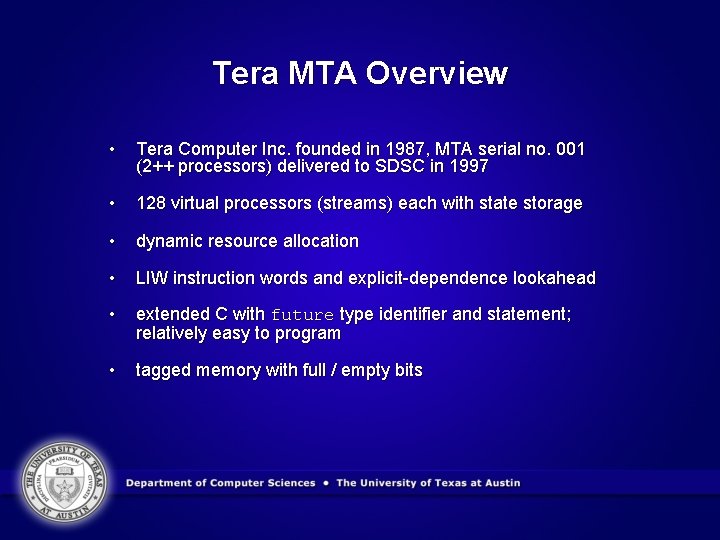

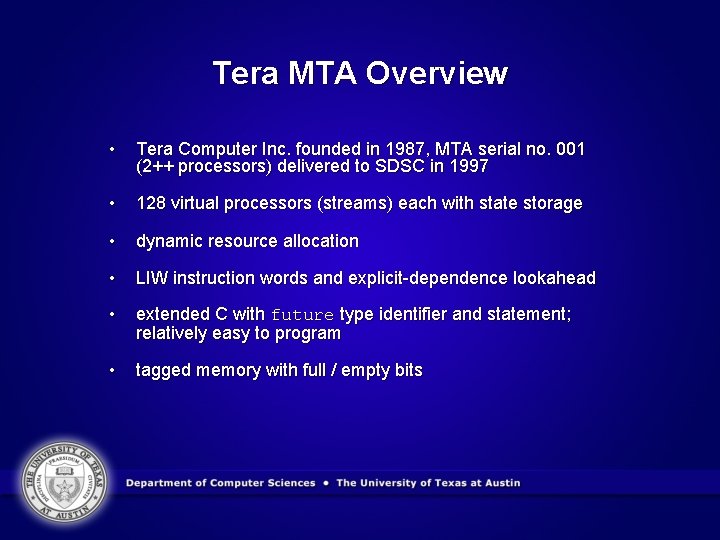

Tera MTA Overview
• Tera Computer Inc. founded in 1987, MTA serial no. 001 (2++ processors) delivered to SDSC in 1997
• 128 virtual processors (streams) each with state storage
• dynamic resource allocation
• LIW instruction words and explicit-dependence lookahead
• extended C with future type identifier and statement; relatively easy to program
• tagged memory with full / empty bits

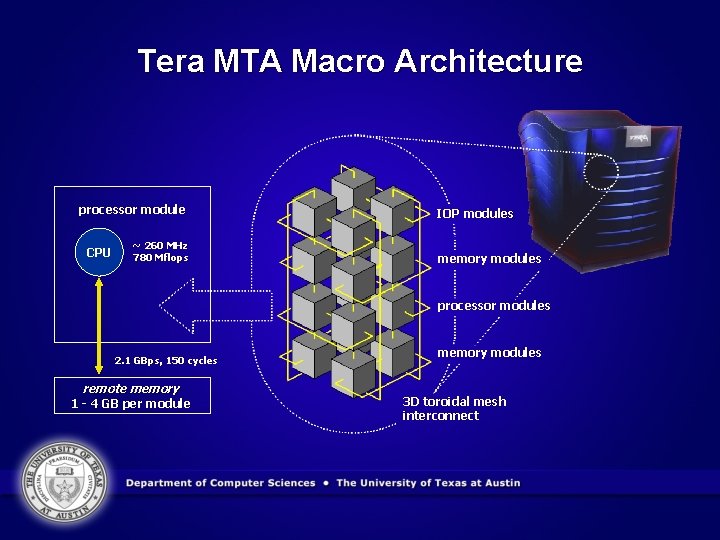

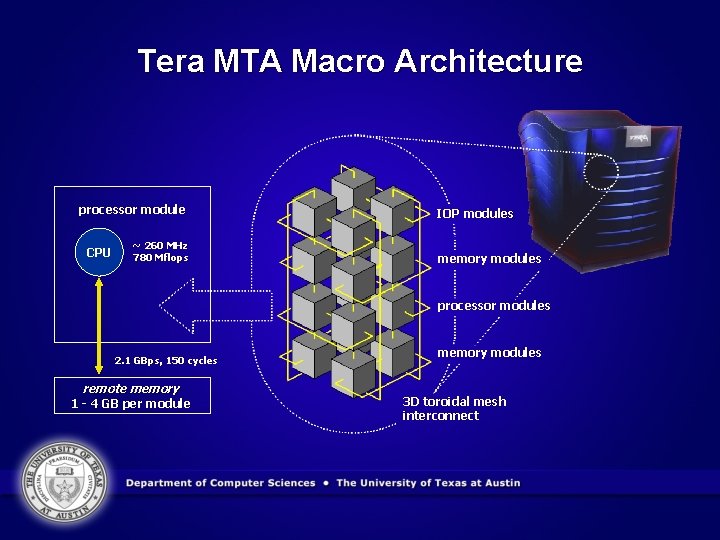

Tera MTA Macro Architecture
[Figure: processor modules (CPU ~260 MHz, 780 Mflops), IOP modules, and memory modules (1 - 4 GB per module) attached to a 3D toroidal mesh interconnect; remote memory: 2.1 GBps, 150 cycles]

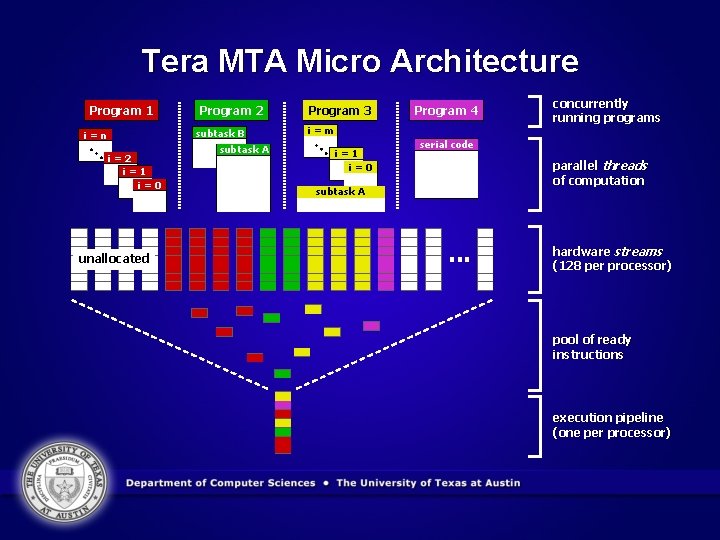

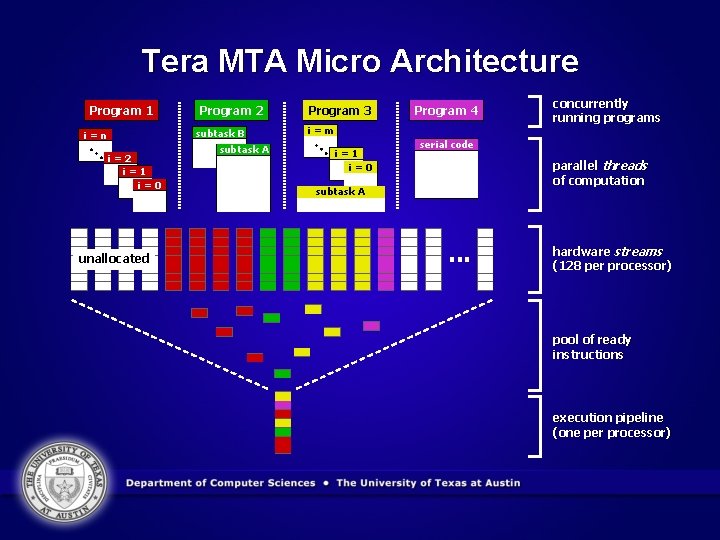

Tera MTA Micro Architecture
[Figure: concurrently running programs (Program 1 to Program 4) consist of serial code and parallel threads of computation (subtasks A and B, loop iterations i=0 ... i=n and i=0 ... i=m); threads are mapped onto hardware streams (128 per processor, some unallocated), which feed a pool of ready instructions and then the execution pipeline (one per processor)]

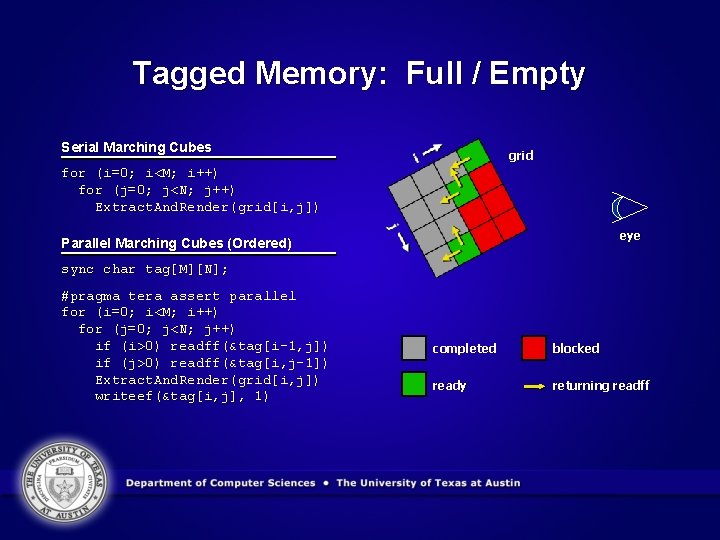

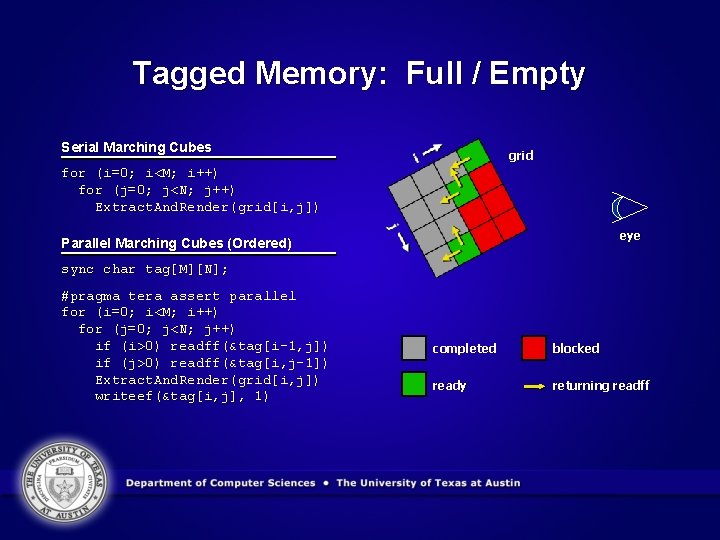

Tagged Memory: Full / Empty

Serial Marching Cubes

    for (i=0; i<M; i++)
      for (j=0; j<N; j++)
        ExtractAndRender(grid[i][j]);

Parallel Marching Cubes (Ordered)

    sync char tag[M][N];

    #pragma tera assert parallel
    for (i=0; i<M; i++)
      for (j=0; j<N; j++) {
        if (i>0) readff(&tag[i-1][j]);   /* block until tag[i-1][j] is full */
        if (j>0) readff(&tag[i][j-1]);   /* block until tag[i][j-1] is full */
        ExtractAndRender(grid[i][j]);
        writeef(&tag[i][j], 1);          /* mark this cell full */
      }

[Figure: grid of cells viewed from the eye; legend: completed, blocked, ready, returning readff]
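The ordering that the full / empty tags impose can be illustrated without MTA hardware by a serial simulation. The sketch below is not Tera code: `wavefront_steps` and `MAX_DIM` are hypothetical names, and plain booleans stand in for the tag bits. It counts how many parallel steps are needed when a cell may run only after the cells at (i-1, j) and (i, j-1) have been marked full.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_DIM 64   /* illustrative upper bound on the grid size */

/* Returns the number of parallel steps for a rows x cols grid,
 * or -1 if the dimensions are out of range. */
int wavefront_steps(int rows, int cols)
{
    static bool full[MAX_DIM][MAX_DIM];   /* tags at the start of a step */
    static bool next[MAX_DIM][MAX_DIM];   /* tags at the end of a step */
    int done = 0, steps = 0;

    if (rows < 1 || cols < 1 || rows > MAX_DIM || cols > MAX_DIM)
        return -1;

    memset(full, 0, sizeof full);
    while (done < rows * cols) {
        memcpy(next, full, sizeof full);
        for (int i = 0; i < rows; i++) {
            for (int j = 0; j < cols; j++) {
                bool north_ok = (i == 0) || full[i - 1][j];
                bool west_ok  = (j == 0) || full[i][j - 1];
                if (!full[i][j] && north_ok && west_ok) {
                    next[i][j] = true;   /* plays the role of writeef */
                    done++;
                }
            }
        }
        memcpy(full, next, sizeof full);
        steps++;
    }
    return steps;
}
```

The ready cells form an anti-diagonal wavefront, so an M x N grid finishes in M + N - 1 steps rather than M * N, provided enough streams are available to run every ready cell at once.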

Related Work
• Network Processors
• SMT (Simultaneous Multithreading, Dean Tullsen)
• Hyper-Threading (2-way SMT on Intel Xeon and Pentium 4)
• SSMT (Simultaneous Subordinate Microthreading, Yale Patt)
• Cascade (Burton Smith et al.)

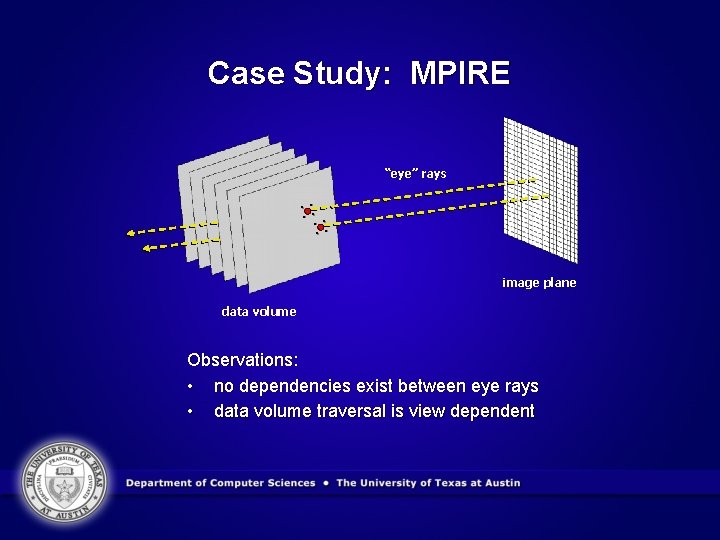

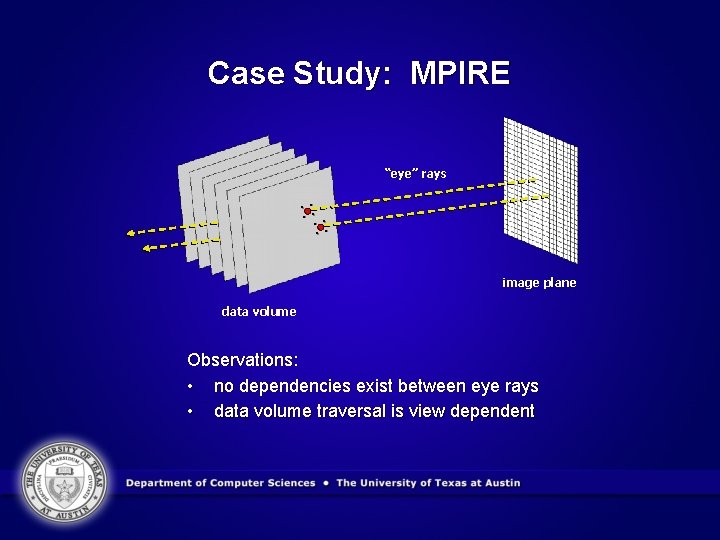

Case Study: MPIRE
[Figure: “eye” rays from the image plane through the data volume]
Observations:
• no dependencies exist between eye rays
• data volume traversal is view dependent

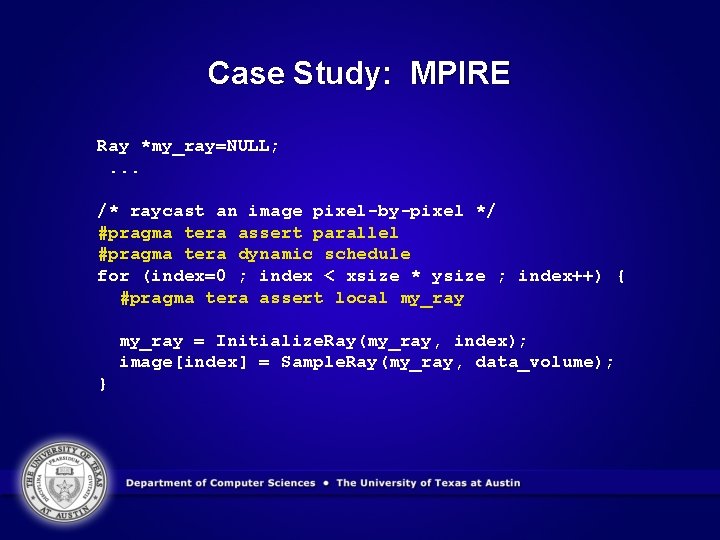

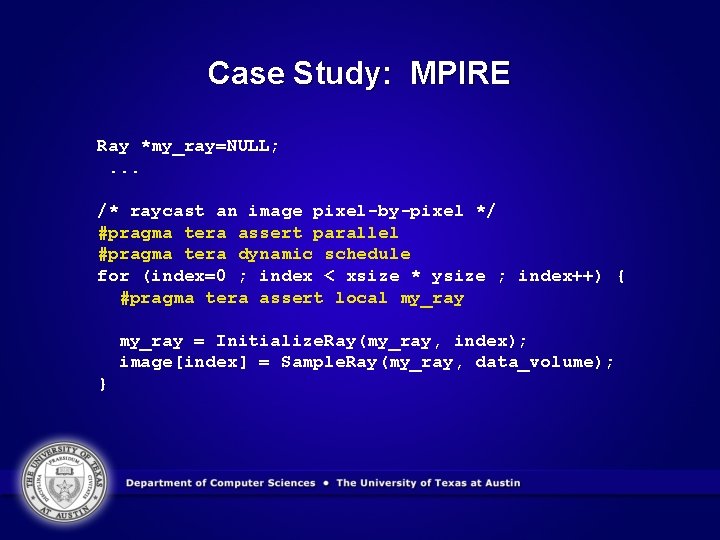

Case Study: MPIRE

    Ray *my_ray=NULL;
    ...
    /* raycast an image pixel-by-pixel */
    #pragma tera assert parallel
    #pragma tera dynamic schedule
    for (index=0 ; index < xsize * ysize ; index++) {
    #pragma tera assert local
      my_ray = InitializeRay(my_ray, index);
      image[index] = SampleRay(my_ray, data_volume);
    }

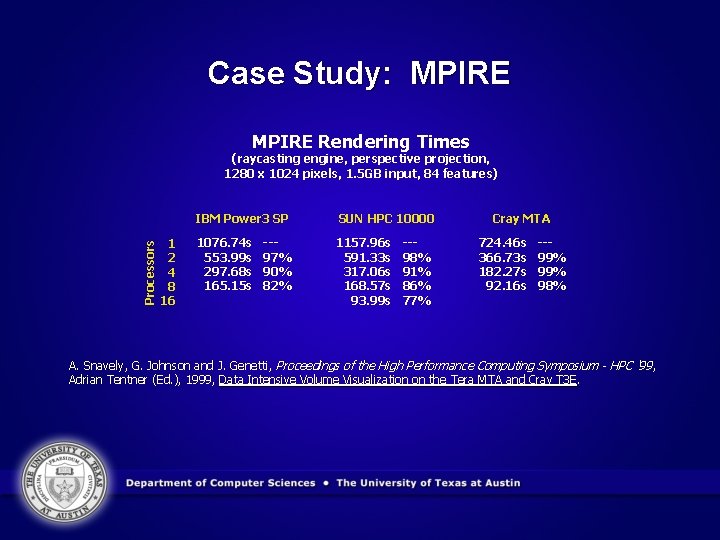

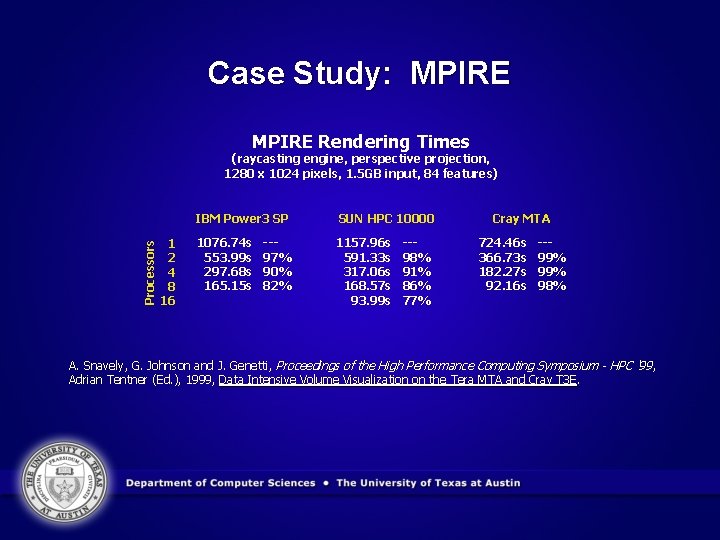

Case Study: MPIRE Rendering Times
(raycasting engine, perspective projection, 1280 x 1024 pixels, 1.5 GB input, 84 features)

| Processors | 1 | 2 | 4 | 8 | 16 |
|---|---|---|---|---|---|
| IBM Power3 SP, time | 1076.74 s | 553.99 s | 297.68 s | 165.15 s | |
| IBM Power3 SP, efficiency | -- | 97% | 90% | 82% | |
| SUN HPC 10000, time | 1157.96 s | 591.33 s | 317.06 s | 168.57 s | 93.99 s |
| SUN HPC 10000, efficiency | -- | 98% | 91% | 86% | 77% |
| Cray MTA, time | 724.46 s | 366.73 s | 182.27 s | 92.16 s | |
| Cray MTA, efficiency | -- | 99% | | 98% | |

A. Snavely, G. Johnson and J. Genetti, “Data Intensive Volume Visualization on the Tera MTA and Cray T3E,” Proceedings of the High Performance Computing Symposium - HPC '99, Adrian Tentner (Ed.), 1999.
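The percentages listed with the rendering times appear to be parallel efficiencies. Assuming the usual definition, efficiency = T(1) / (p * T(p)), they can be recomputed with the sketch below; the function name is hypothetical and the definition is an assumption, since the slide does not state how the percentages were derived.

```c
/* Parallel efficiency: single-processor time divided by
 * (processor count * time on that many processors). */
double parallel_efficiency(double t1_seconds, int processors, double tp_seconds)
{
    return t1_seconds / (processors * tp_seconds);
}
```

For the SUN HPC 10000 at 16 processors, `parallel_efficiency(1157.96, 16, 93.99)` is about 0.77, in line with the 77% on the slide; for the Cray MTA at 8 processors, `parallel_efficiency(724.46, 8, 92.16)` is about 0.98.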

Culler’s Multithreading Rebuttal

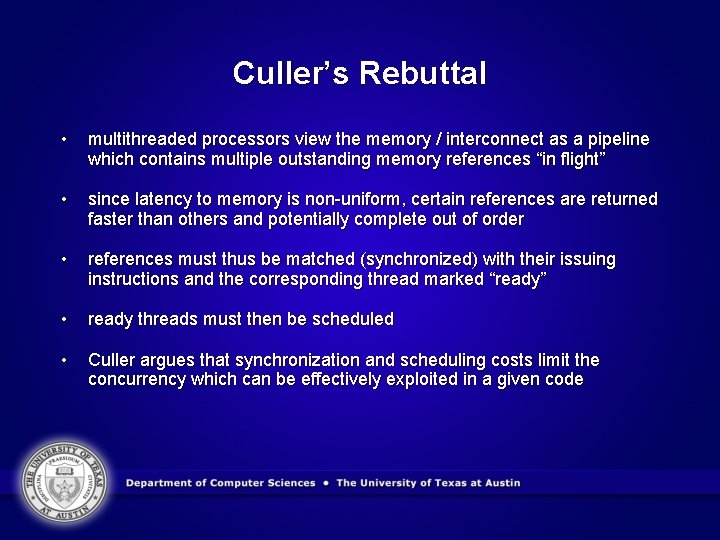

Culler’s Rebuttal
• multithreaded processors view the memory / interconnect as a pipeline which contains multiple outstanding memory references “in flight”
• since latency to memory is non-uniform, certain references are returned faster than others and potentially complete out of order
• references must thus be matched (synchronized) with their issuing instructions and the corresponding thread marked “ready”
• ready threads must then be scheduled
• Culler argues that synchronization and scheduling costs limit the concurrency which can be effectively exploited in a given code

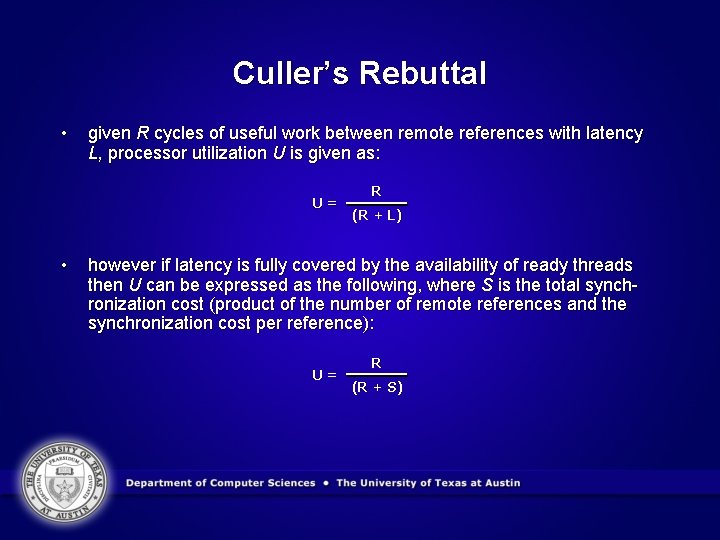

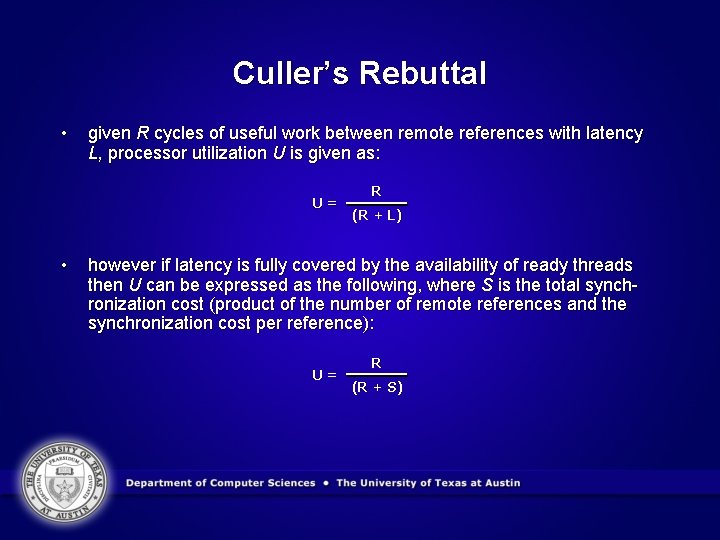

Culler’s Rebuttal
• given R cycles of useful work between remote references with latency L, processor utilization U is given as:
$$U = \frac{R}{R + L}$$
• however, if latency is fully covered by the availability of ready threads, then U can be expressed as the following, where S is the total synchronization cost (product of the number of remote references and the synchronization cost per reference):
$$U = \frac{R}{R + S}$$
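The two utilization expressions can be compared numerically. The functions below are a sketch with hypothetical names, and the cycle counts in the note that follows are made-up values chosen only to show the shape of the argument.

```c
/* Utilization when latency L is exposed: U = R / (R + L). */
double utilization_exposed_latency(double work_cycles, double latency_cycles)
{
    return work_cycles / (work_cycles + latency_cycles);
}

/* Utilization when latency is fully hidden by ready threads but each
 * remote reference still pays a synchronization cost: U = R / (R + S). */
double utilization_hidden_latency(double work_cycles, double sync_cycles)
{
    return work_cycles / (work_cycles + sync_cycles);
}
```

With R = 50 and L = 150, utilization with exposed latency is 0.25. Hiding the latency entirely but paying S = 50 cycles of synchronization raises it only to 0.5, which illustrates why synchronization cost, rather than latency, becomes the limiting term.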

Culler’s Rebuttal
• scheduling threads without respect to the storage hierarchy (i.e. simply scheduling the next ready thread) increases the average latency of memory references
• but as latency increases, the number of threads required to hide the latency increases, thus synchronization costs increase, thus overall utilization decreases
• a related problem is that multithreading schemes typically reduce the state per thread (and thereby the cost of switching between threads) at the cost of an increased number of remote references (again increasing synchronization costs)

Culler’s Rebuttal & MTA
• the number of threads should be a function of the size of the top level of the storage hierarchy (to minimize switching costs), thus the size of the top level determines the amount of latency that can be hidden
• what if (as in the case of the Tera MTA) the top level is main memory?
• codes in which computation dominates are unable to benefit from high-speed register / cache access and reuse
• indeed we’ve seen this on the Tera MTA - the machine really does favor memory intensive codes with little reuse (like MPIRE)

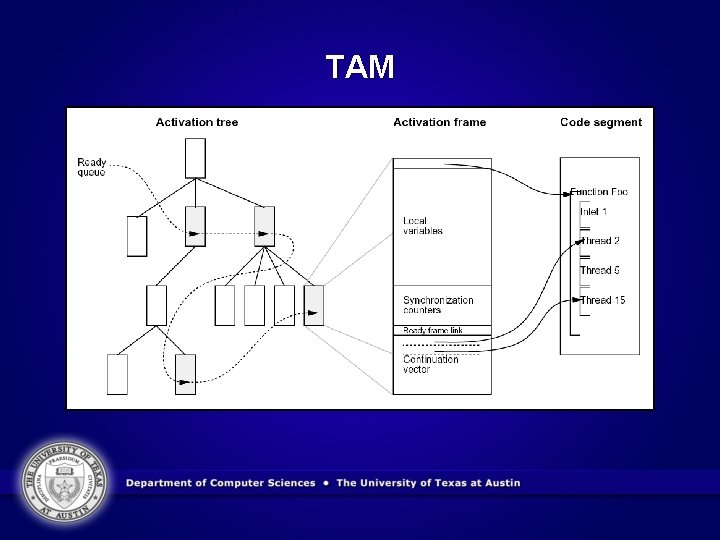

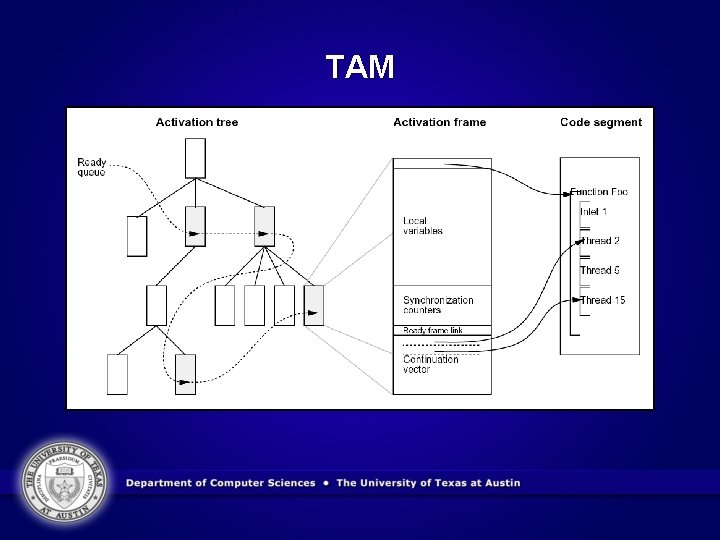

TAM
• Threaded Abstract Machine tailors thread count and thread scheduling to the storage hierarchy of the target machine
• unlike MTA threads, TAM threads each execute until completion
• threads are organized by function activation; threads within the same activation execute “near” one another (in time or space)
• TAM benefits from the migration of data up through the storage hierarchy (TAM thread scheduling maximizes reuse)

TAM

Concluding Remarks

Hardware Multithreaded Graphics
• Argus pipeline
• growth of bus speeds versus growth rate of CPUs and GPUs implies the distance between the CPU and GPU is growing
• perhaps a solution to the problem of building and traversing balanced spatial data structures (potentially useful for ray tracing, shadows, antialiasing, etc.); in other words, maybe this is a solution to matching irregular data structures to streaming processors

© Carter Emmart The End