
Database Selection Using Actual Physical and Acquired Logical Collection Resources in a Massive Domain-specific Operational Environment

Jack G. Conrad, Xi S. Guo, Peter Jackson, Monem Meziou
Research & Development, Thomson Legal & Regulatory – West Group
St. Paul, Minnesota 55123 USA
{Jack.Conrad, Peter.Jackson}@West.Group.com

28th International VLDB Conference (VLDB '02), 20-23 Aug. 2002, J. Conrad


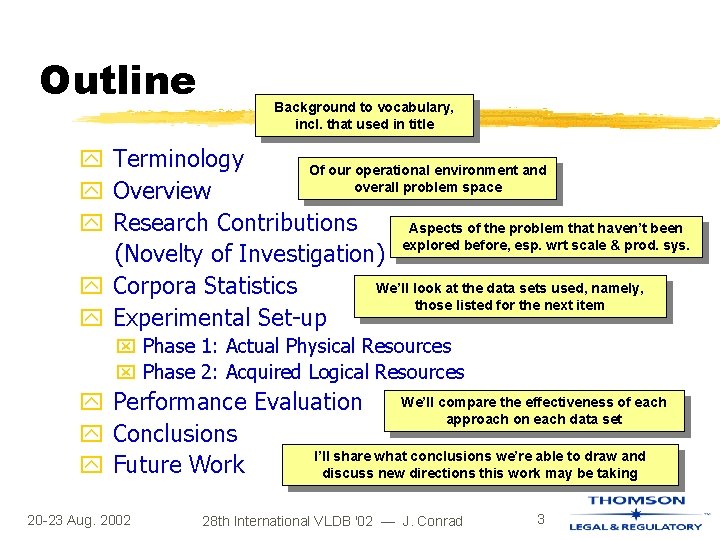

Outline

- Terminology: background to the vocabulary, including that used in the title
- Overview: our operational environment and overall problem space
- Research Contributions (novelty of investigation): aspects of the problem that haven't been explored before, especially with respect to scale and production systems
- Corpora Statistics: the data sets used, namely those listed for the next item
- Experimental Set-up
  - Phase 1: Actual Physical Resources
  - Phase 2: Acquired Logical Resources
- Performance Evaluation: we compare the effectiveness of each approach on each data set
- Conclusions: the conclusions we are able to draw
- Future Work: new directions this work may be taking

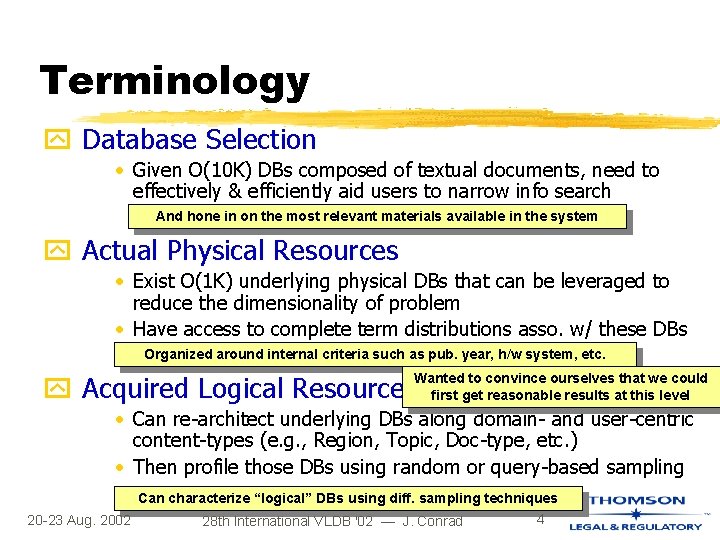

Terminology

- Database Selection
  - Given O(10K) databases composed of textual documents, we need to effectively and efficiently help users narrow their information search and home in on the most relevant materials available in the system.
- Actual Physical Resources
  - There exist O(1K) underlying physical databases that can be leveraged to reduce the dimensionality of the problem.
  - We have access to the complete term distributions associated with these databases.
  - These databases are organized around internal criteria such as publication year, hardware system, etc.
- Acquired Logical Resources
  - We can re-architect the underlying databases along domain- and user-centric content types (e.g., region, topic, document type).
  - We then profile those databases using random or query-based sampling.

Notes: We wanted to convince ourselves that we could first get reasonable results at the physical level. "Logical" databases can then be characterized using different sampling techniques.
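To make "profile" concrete, here is a minimal Python sketch of a collection profile that records document frequency (df) and collection term frequency (ctf) per term. It assumes documents are already tokenized; the function name `build_profile` and its parameters are hypothetical, not the Westlaw implementation. With `sample_size=None` every document contributes (the complete term distributions available for physical databases); with an integer it profiles a uniform random sample, one of the two sampling options named above.

```python
import random
from collections import Counter


def build_profile(docs, sample_size=None, seed=0):
    """Return a collection profile: number of documents profiled, plus
    document frequency (df) and collection term frequency (ctf) per term.

    docs: list of tokenized documents (each a list of terms).
    sample_size: None profiles every document; an int profiles a uniform
    random sample of that many documents (hypothetical parameter).
    """
    if sample_size is not None and sample_size < len(docs):
        docs = random.Random(seed).sample(docs, sample_size)
    df, ctf = Counter(), Counter()
    for terms in docs:
        ctf.update(terms)       # every occurrence counts toward ctf
        df.update(set(terms))   # each document counts once toward df
    return {"n_docs": len(docs), "df": df, "ctf": ctf}


docs = [["tort", "negligence"], ["tort", "tort", "contract"], ["contract"]]
full = build_profile(docs)
print(full["df"]["tort"], full["ctf"]["tort"])  # 2 3
```

Keeping both df and ctf lets the same profile feed either a df-based scorer such as CORI or an occurrence-based language model.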

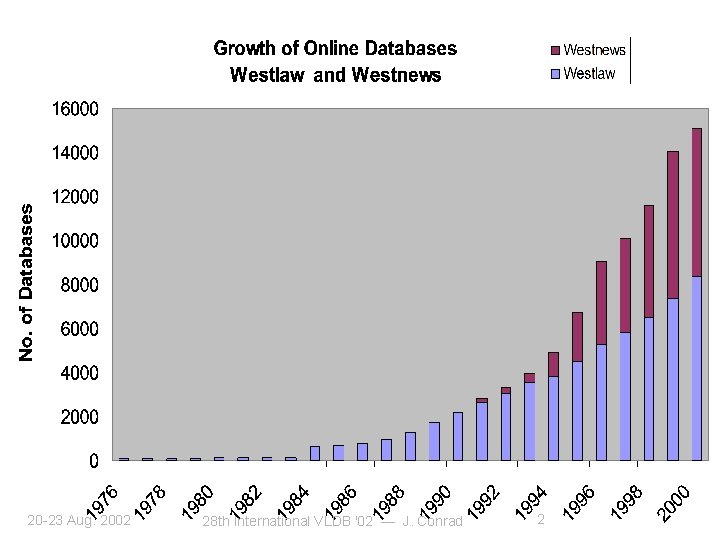

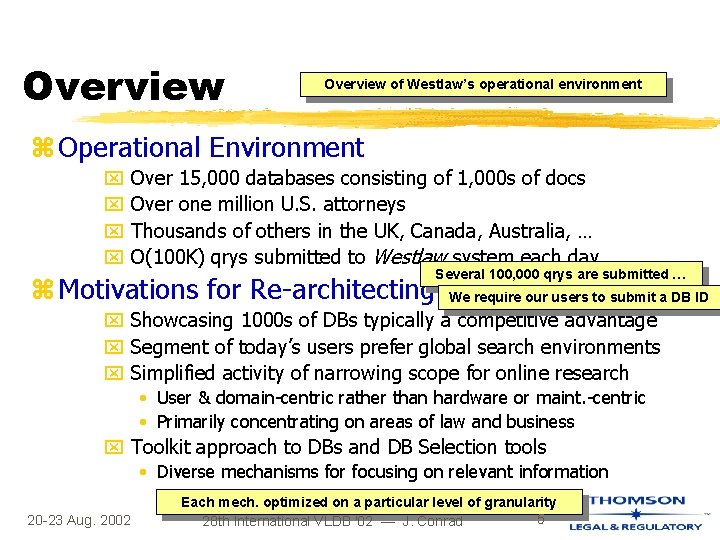

Overview of Westlaw's Operational Environment

- Operational environment
  - Over 15,000 databases, each consisting of 1,000s of documents
  - Over one million U.S. attorneys, plus thousands of other users in the UK, Canada, Australia, …
  - O(100K) queries submitted to the Westlaw system each day
- Motivations for re-architecting the system
  - Showcasing 1,000s of databases is typically a competitive advantage
  - A segment of today's users prefers global search environments
  - Simplified activity of narrowing scope for online research
    - User- and domain-centric rather than hardware- or maintenance-centric
    - Primarily concentrating on areas of law and business
  - Toolkit approach to databases and database-selection tools
    - Diverse mechanisms for focusing on relevant information
    - Each mechanism optimized for a particular level of granularity

Notes: Several hundred thousand queries are submitted … . We require our users to submit a database ID.

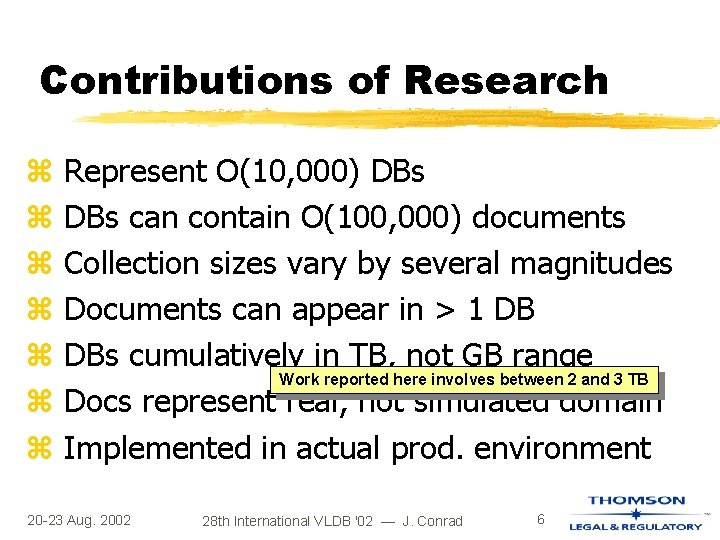

Contributions of Research

- Represent O(10,000) databases
  - Each can contain O(100,000) documents
  - Collection sizes vary by several orders of magnitude
  - Documents can appear in more than one database
- Databases cumulatively in the TB, not GB, range
  - The work reported here involves between 2 and 3 TB
- Documents represent a real, not simulated, domain
- Implemented in an actual production environment

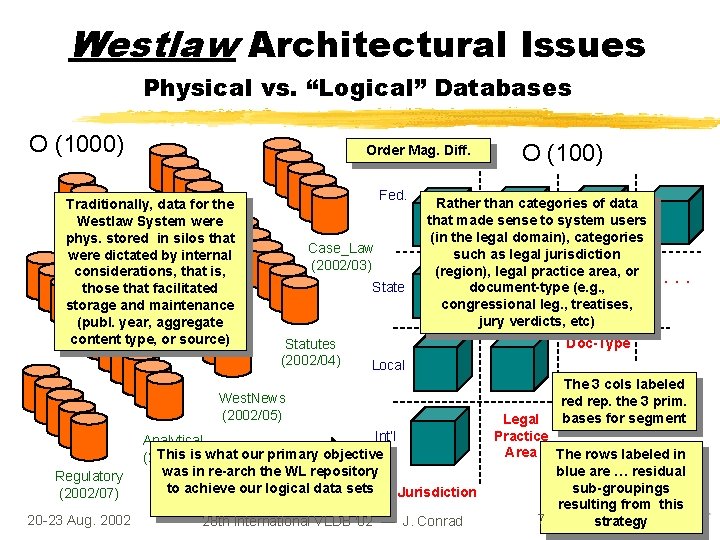

Westlaw Architectural Issues: Physical vs. "Logical" Databases

[Figure: O(1000) physical databases (e.g., Fed. Case_Law (2002/03), State Statutes (2002/04), Local West.News (2002/05), Int'l Analytical (2002/06), Regulatory (2002/07), ...) regrouped into O(100) logical databases organized by Jurisdiction, Legal Practice Area, and Doc-Type; an order-of-magnitude difference.]

Notes: Traditionally, data for the Westlaw system were physically stored in silos dictated by internal considerations, that is, those that facilitated storage and maintenance (publication year, aggregate content type, or source), rather than in categories of data that made sense to system users in the legal domain, such as legal jurisdiction (region), legal practice area, or document type (e.g., congressional legislation, treatises, jury verdicts). Achieving these logical data sets was our primary objective in re-architecting the Westlaw repository. The three labeled columns represent the three primary bases for segmentation; the rows labeled in blue are … residual sub-groupings resulting from this strategy.

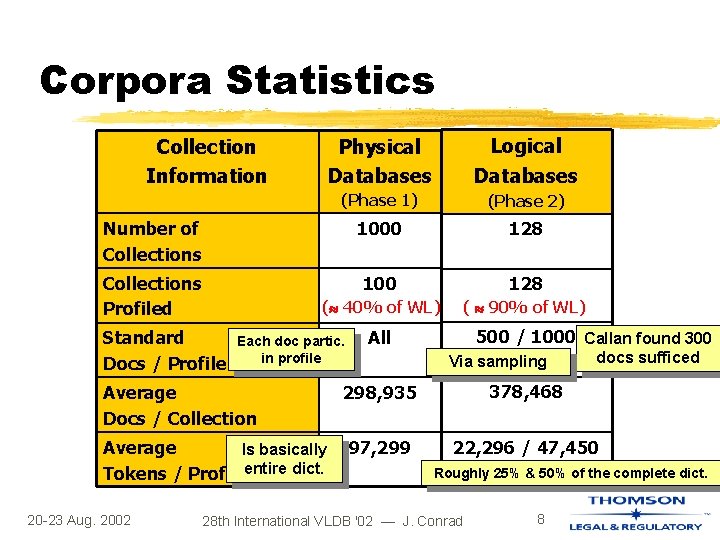

Corpora Statistics

| | Physical Databases (Phase 1) | Logical Databases (Phase 2) |
|---|---|---|
| Number of collections | 1000 | 128 |
| Collections profiled | 100 (40% of WL) | 128 (90% of WL) |
| Collection information | Standard | Via sampling |
| Docs / profile | All | 500 / 1000 |
| Average docs / collection | 298,935 | 378,468 |
| Average tokens / profile | 97,299 | 22,296 / 47,450 |

Notes: For the physical databases, each document participates in the profile, so the profile is basically the entire dictionary. For the logical databases, Callan found that 300 sampled documents sufficed; our 500- and 1000-document samples capture roughly 25% and 50% of the complete dictionary.
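The query-based alternative to random sampling can be sketched as below, loosely following the approach described by Callan and colleagues: issue one-term queries drawn from the vocabulary learned so far and keep previously unseen top-ranked documents until the target sample size (e.g., 500 or 1000 documents) is reached. `search` is a hypothetical stand-in for the database's own retrieval interface, and the defaults (4 documents per query, a uniformly random choice of the next query term) are assumptions for illustration.

```python
import random
from collections import Counter


def query_based_sample(search, initial_term, target_docs,
                       docs_per_query=4, max_queries=1000, seed=0):
    """Collect up to target_docs documents by querying a database.

    search(term, k) is a hypothetical callable returning up to k
    (doc_id, terms) pairs, best first, from the database being profiled.
    Returns the sampled documents and the term counts learned from them.
    """
    rng = random.Random(seed)
    sampled = {}          # doc_id -> list of terms
    learned = Counter()   # term -> occurrences in sampled documents
    queried = set()
    term = initial_term
    for _ in range(max_queries):
        queried.add(term)
        for doc_id, terms in search(term, docs_per_query):
            if doc_id not in sampled:
                sampled[doc_id] = terms
                learned.update(terms)
                if len(sampled) >= target_docs:
                    return sampled, learned
        # Next query: a random term from the learned vocabulary not yet used.
        candidates = [t for t in learned if t not in queried]
        if not candidates:
            break
        term = rng.choice(candidates)
    return sampled, learned


# Toy database standing in for one logical collection (hypothetical data).
corpus = {1: ["tort", "negligence"], 2: ["tort", "contract"],
          3: ["contract", "lease"], 4: ["lease", "landlord"]}


def toy_search(term, k):
    return [(d, t) for d, t in corpus.items() if term in t][:k]


sample, vocab = query_based_sample(toy_search, "tort", target_docs=4)
```

Unlike random sampling, this needs only a search interface, not direct access to the documents, which is why it suits uncooperative environments; the conclusions later report that random sampling performed better in the Westlaw setting.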

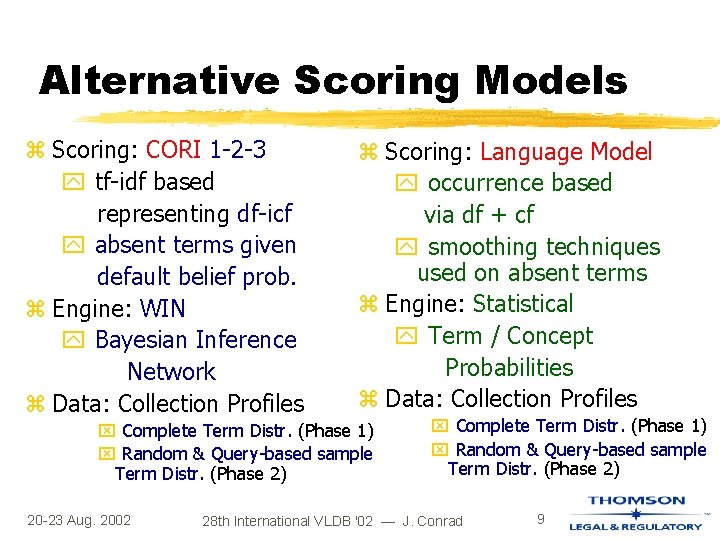

Alternative Scoring Models

- CORI 1-2-3
  - Scoring: tf-idf based, representing df-icf; absent terms are given a default belief probability
  - Engine: WIN (Bayesian inference network)
  - Data: collection profiles
    - Complete term distributions (Phase 1)
    - Random and query-based sample term distributions (Phase 2)
- Language Model
  - Scoring: occurrence based, via df + cf; smoothing techniques used on absent terms
  - Engine: statistical (term/concept probabilities)
  - Data: collection profiles
    - Complete term distributions (Phase 1)
    - Random and query-based sample term distributions (Phase 2)

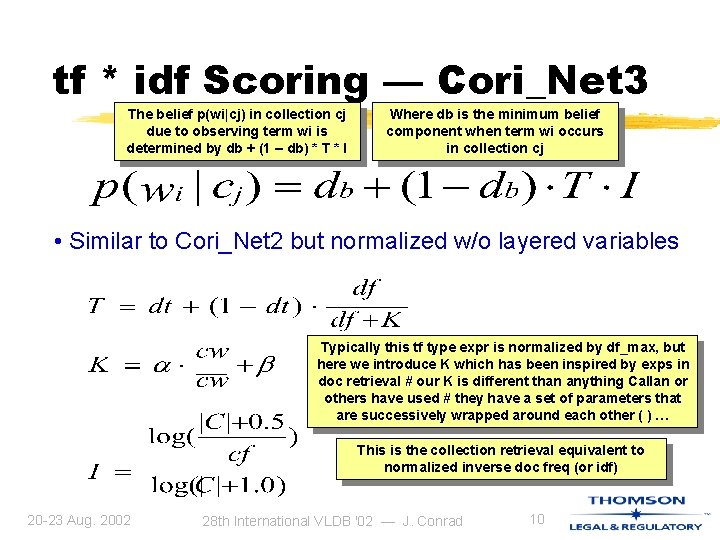

tf * idf Scoring: Cori_Net 3

The belief p(w_i | c_j) in collection c_j due to observing term w_i is determined by

$$p(w_i \mid c_j) = d_b + (1 - d_b) \cdot T \cdot I$$

where d_b is the minimum belief component when term w_i occurs in collection c_j.

- Similar to Cori_Net 2, but normalized without layered variables

Notes: Typically the tf-type expression T is normalized by df_max, but here we introduce K, which was inspired by experiments in document retrieval. Our K is different from anything Callan or others have used; they have a set of parameters that are successively wrapped around each other. I is the collection-retrieval equivalent of normalized inverse document frequency (idf).
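A minimal sketch of the belief computation follows. Because the K-normalized T used on this slide is not spelled out, the sketch assumes Callan's published CORI forms instead, T = df / (df + 50 + 150 · cw / avg_cw) and I = log((|C| + 0.5) / cf) / log(|C| + 1.0), with d_b = 0.4. It shows how an absent term falls back to the default belief d_b, and forms a collection score as the mean belief over query terms; the function names are hypothetical.

```python
import math


def cori_belief(df, cf, cw, avg_cw, n_collections, db=0.4):
    """p(w_i | c_j) = db + (1 - db) * T * I, using Callan's CORI forms.

    df: documents in collection c_j that contain term w_i
    cf: number of collections that contain w_i
    cw: word count of c_j's profile; avg_cw: mean word count over collections
    n_collections: number of collections being ranked
    """
    if df == 0 or cf == 0:
        return db  # absent term: only the default (minimum) belief
    t = df / (df + 50 + 150 * cw / avg_cw)
    i = math.log((n_collections + 0.5) / cf) / math.log(n_collections + 1.0)
    return db + (1 - db) * t * i


def cori_score(query_terms, df_by_term, cf_by_term, cw, avg_cw,
               n_collections, db=0.4):
    """Score for one collection: mean belief over the query terms."""
    beliefs = [cori_belief(df_by_term.get(w, 0), cf_by_term.get(w, 0),
                           cw, avg_cw, n_collections, db)
               for w in query_terms]
    return sum(beliefs) / len(beliefs)
```

Ranking then amounts to computing `cori_score` for every profiled collection and sorting in descending order; swapping in a different normalization for T, such as the K described above, changes only the line that computes `t`.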

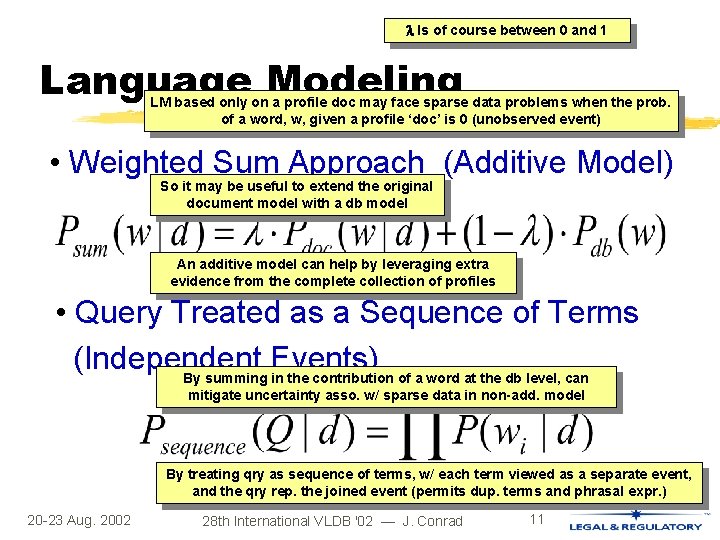

Language Modeling

- Weighted Sum Approach (Additive Model)
- Query Treated as a Sequence of Terms (Independent Events)

Notes: An LM based only on a profile "document" may face sparse-data problems when the probability of a word w given a profile "document" is 0 (an unobserved event). So it may be useful to extend the original document model with a database model. An additive model can help by leveraging extra evidence from the complete collection of profiles: by summing in the contribution of a word at the database level, we can mitigate the uncertainty associated with sparse data in the non-additive model. This weight is, of course, between 0 and 1. We treat the query as a sequence of terms, with each term viewed as a separate event and the query representing the joint event; this permits duplicate terms and phrasal expressions.
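One common way to realize the weighted-sum (additive) model is linear interpolation, often called Jelinek-Mercer smoothing: mix a word's probability in a collection's profile with its probability across all profiles, then sum log-probabilities over the query terms treated as independent events. The sketch below assumes that form and a mixing weight `lam` of 0.5; both the weight and the choice of counts (df or term frequency) are assumptions, not the values used in these experiments.

```python
import math
from collections import Counter


def lm_score(query_terms, profile, all_profiles, lam=0.5):
    """log P(Q | c) under a weighted-sum (linearly interpolated) model.

    profile: Counter of term counts in collection c's profile
    all_profiles: Counter of term counts summed over every profile
    lam: weight on the collection's own profile, between 0 and 1 (assumed 0.5)
    Each query term is an independent event, so duplicates count twice.
    """
    p_total = sum(profile.values())
    g_total = sum(all_profiles.values())
    score = 0.0
    for w in query_terms:
        p_local = profile.get(w, 0) / p_total if p_total else 0.0
        p_global = all_profiles.get(w, 0) / g_total if g_total else 0.0
        p = lam * p_local + (1 - lam) * p_global
        if p == 0.0:
            return float("-inf")  # zero probability: collection cannot match
        score += math.log(p)
    return score


a = Counter({"tort": 3, "negligence": 1})
b = Counter({"contract": 4})
pooled = a + b
print(lm_score(["tort"], a, pooled) > lm_score(["tort"], b, pooled))  # True
```

Setting `lam=1.0` recovers the non-additive model, where a single query term missing from a profile drives that collection's score to negative infinity; any `lam` below 1 keeps such collections rankable.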

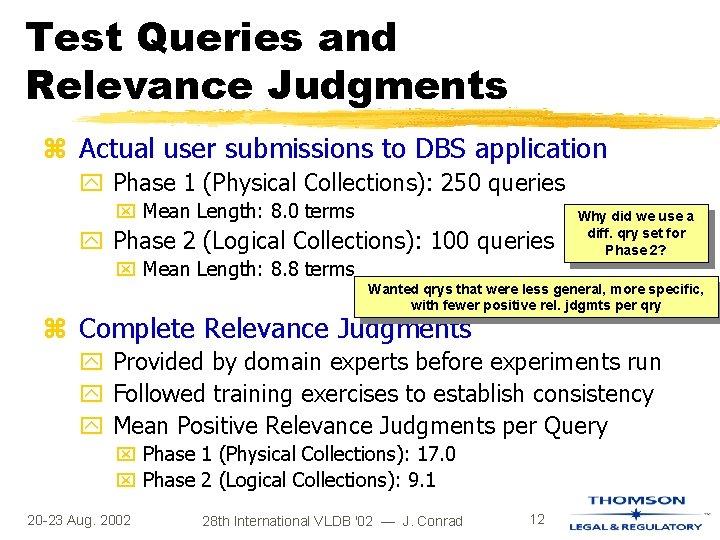

Test Queries and Relevance Judgments

- Actual user submissions to the DBS application
  - Phase 1 (physical collections): 250 queries, mean length 8.0 terms
  - Phase 2 (logical collections): 100 queries, mean length 8.8 terms
- Complete relevance judgments
  - Provided by domain experts before the experiments were run
  - Followed training exercises to establish consistency
  - Mean positive relevance judgments per query: 17.0 in Phase 1 (physical collections), 9.1 in Phase 2 (logical collections)

Notes: Why did we use a different query set for Phase 2? We wanted queries that were less general and more specific, with fewer positive relevance judgments per query.

Retrieval Experiments

- Database-level
- Test parameters:
  - 100 physical DBs vs. 128 logical DBs
    - For logical DB profiles: query-based vs. random sampling
  - Phrasal concepts vs. terms only
  - Stemming vs. no stemming (stemmed vs. unstemmed terms)
  - Scaling vs. none (i.e., global frequency reduction)
  - Minimum term frequency thresholds
- Performance metrics:
  - Standard precision at 11-point recall
  - Precision at N-database cut-offs

Notes: It's important to point out that our initial experiments were at the … . Some of the variables we examined are indicated here (queries with … versus …). Some of these settings were inspired by speech-recognition experiments (noise). We'll see some examples of these next.
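The two performance metrics can be sketched per query as follows. `ranking` is a system's ranked list of databases and `relevant` the set judged relevant; interpolated precision at recall level r is taken as the maximum precision at any rank whose recall is at least r, the usual convention for 11-point curves. The function names are hypothetical.

```python
def precision_at_n(ranking, relevant, n):
    """Fraction of the top-n ranked databases that are judged relevant."""
    return sum(1 for db in ranking[:n] if db in relevant) / n


def eleven_point_precision(ranking, relevant):
    """Interpolated precision at recall 0.0, 0.1, ..., 1.0 for one query."""
    points = []  # (recall, precision) at each rank holding a relevant database
    hits = 0
    for rank, db in enumerate(ranking, start=1):
        if db in relevant:
            hits += 1
            points.append((hits / len(relevant), hits / rank))
    curve = []
    for k in range(11):
        level = k / 10
        # Interpolation: best precision at any recall >= this level.
        eligible = [p for r, p in points if r >= level - 1e-9]
        curve.append(max(eligible) if eligible else 0.0)
    return curve


ranking = ["db_a", "db_b", "db_c", "db_d"]
relevant = {"db_a", "db_c"}
print(precision_at_n(ranking, relevant, 2))  # 0.5
```

Curves like the ones reported would average these per-query values over the test set (250 queries in Phase 1, 100 in Phase 2).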

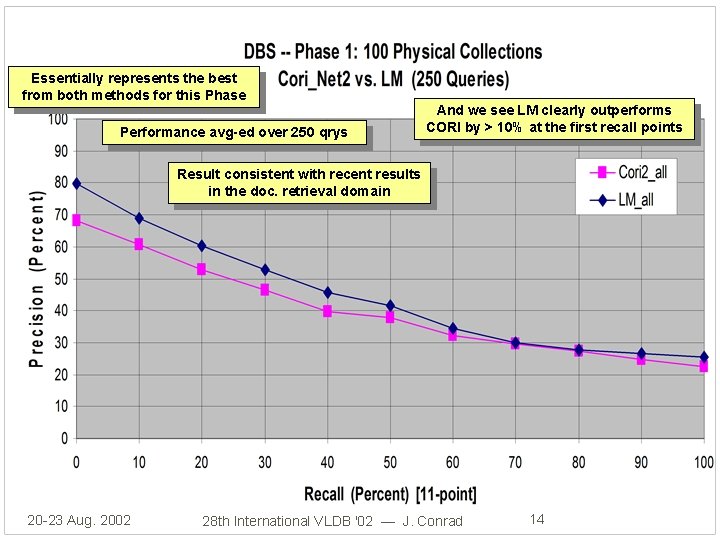

This plot essentially represents the best run from each method for this phase, with performance averaged over 250 queries. LM clearly outperforms CORI by more than 10% at the first recall points, a result consistent with recent results in the document-retrieval domain.

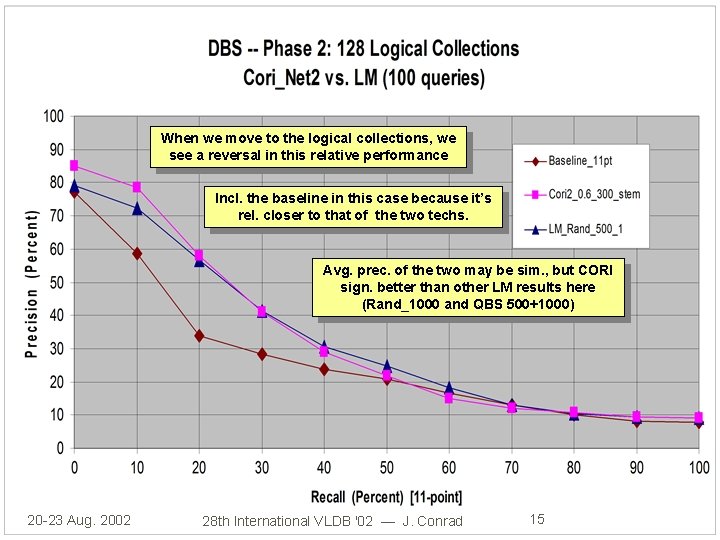

When we move to the logical collections, we see a reversal in this relative performance. We include the baseline in this case because it is relatively close to that of the two techniques. The average precision of the two may be similar, but CORI is significantly better than the other LM results here (Rand_1000 and QBS 500+1000).

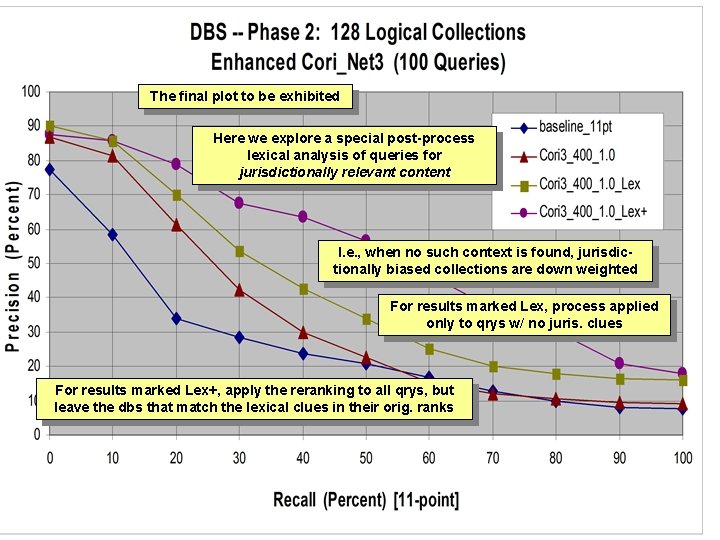

This is the final plot. Here we explore a special post-process lexical analysis of queries for jurisdictionally relevant content: when no such context is found, jurisdictionally biased collections are down-weighted. For results marked Lex, the process is applied only to queries with no jurisdictional clues. For results marked Lex+, the reranking is applied to all queries, but the databases that match the lexical clues are left in their original ranks.
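The Lex and Lex+ variants can be sketched as a reranking pass over an initial database ranking. Everything concrete here is hypothetical: the clue lexicon `clues`, the mapping `db_jurisdiction` that marks jurisdictionally biased databases, the database names, and the multiplicative `penalty` of 0.5, which assumes non-negative scores such as CORI beliefs. The actual lexicon and down-weighting used in the experiments are not described on the slide.

```python
def lexical_rerank(ranked, query_terms, db_jurisdiction, clues,
                   penalty=0.5, plus=False):
    """Rerank databases using jurisdictional clues found in the query.

    ranked: [(db, score), ...] best first; scores assumed non-negative.
    db_jurisdiction: db -> jurisdiction, only for jurisdictionally biased DBs.
    clues: lowercase query word -> jurisdiction (hypothetical lexicon).
    plus=False ("Lex"): rerank only queries with no clue, down-weighting
        every jurisdictionally biased database.
    plus=True ("Lex+"): rerank every query, but keep biased databases whose
        jurisdiction matches a clue at their original rank positions.
    """
    found = {clues[t.lower()] for t in query_terms if t.lower() in clues}
    if found and not plus:
        return [db for db, _ in ranked]  # Lex leaves clued queries alone
    pinned = {i: db for i, (db, _) in enumerate(ranked)
              if db_jurisdiction.get(db) in found}
    movable = [(db, s) for i, (db, s) in enumerate(ranked) if i not in pinned]
    # Down-weight biased databases; sort is stable, so ties keep their order.
    movable.sort(key=lambda pair: pair[1] * penalty
                 if pair[0] in db_jurisdiction else pair[1], reverse=True)
    rest = iter(db for db, _ in movable)
    return [pinned[i] if i in pinned else next(rest)
            for i in range(len(ranked))]


ranked = [("NY-CASES", 0.9), ("FED-CASES", 0.8),
          ("CA-CASES", 0.7), ("TREATISES", 0.6)]
biased = {"NY-CASES": "NY", "CA-CASES": "CA"}
clues = {"california": "CA", "york": "NY"}
print(lexical_rerank(ranked, ["breach", "contract"], biased, clues))
# ['FED-CASES', 'TREATISES', 'NY-CASES', 'CA-CASES']
```

Pinning matched databases by position, rather than boosting their scores, mirrors the "left in their original ranks" wording for Lex+.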

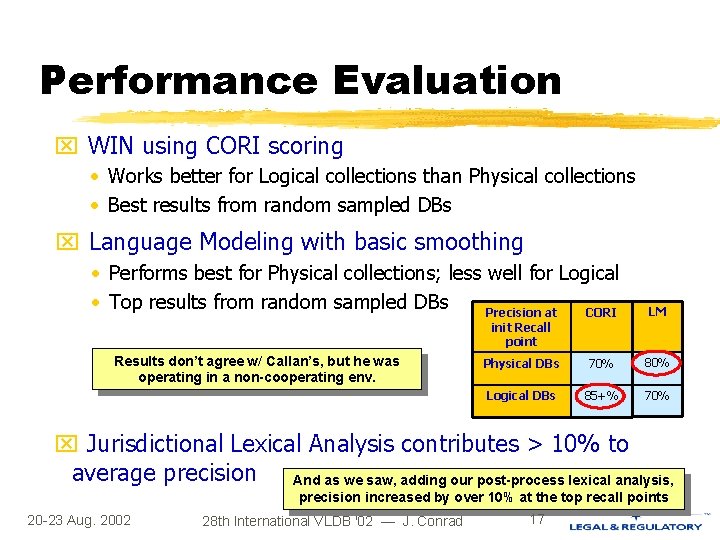

Performance Evaluation

- WIN using CORI scoring
  - Works better for logical collections than for physical collections
  - Best results from randomly sampled DBs
- Language modeling with basic smoothing
  - Performs best for physical collections; less well for logical
  - Top results from randomly sampled DBs

| Precision at initial recall point | CORI | LM |
|---|---|---|
| Physical DBs | 70% | 80% |
| Logical DBs | 85+% | 70% |

- Jurisdictional lexical analysis contributes > 10% to average precision

Notes: These results don't agree with Callan's, but he was operating in a non-cooperating environment. And as we saw, adding our post-process lexical analysis increased precision by over 10% at the top recall points.

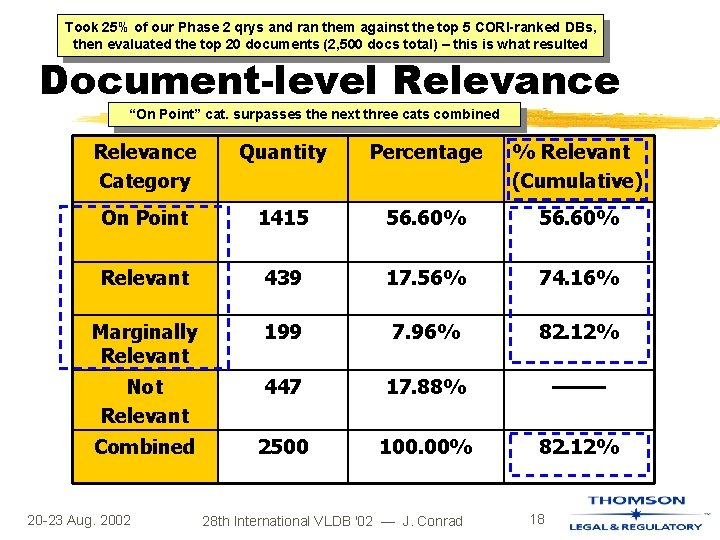

Document-level Relevance

| Relevance Category | Quantity | Percentage | % Relevant (Cumulative) |
|---|---|---|---|
| On Point | 1415 | 56.60% | 56.60% |
| Relevant | 439 | 17.56% | 74.16% |
| Marginally Relevant | 199 | 7.96% | 82.12% |
| Not Relevant | 447 | 17.88% | - |
| Combined | 2500 | 100.00% | 82.12% |

Notes: We took 25% of our Phase 2 queries, ran them against the top 5 CORI-ranked DBs, and then evaluated the top 20 documents (2,500 documents in total); this is what resulted. The "On Point" category surpasses the next three categories combined.
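The table's arithmetic can be checked directly: 25% of the 100 Phase 2 queries is 25 queries, and 25 queries × 5 databases × 20 documents gives the 2,500 judged documents. The sketch below recomputes the percentages and the cumulative relevant column, treating On Point, Relevant, and Marginally Relevant as the relevant categories; `relevance_breakdown` is a hypothetical helper name.

```python
def relevance_breakdown(counts, relevant_categories):
    """Return the total and rows of (category, count, percent, cumulative %).

    Cumulative percentage accumulates only over relevant categories;
    other categories get None in that column.
    """
    total = sum(counts.values())
    rows, cumulative = [], 0.0
    for category, n in counts.items():
        pct = 100.0 * n / total
        if category in relevant_categories:
            cumulative += pct
            rows.append((category, n, round(pct, 2), round(cumulative, 2)))
        else:
            rows.append((category, n, round(pct, 2), None))
    return total, rows


counts = {"On Point": 1415, "Relevant": 439,
          "Marginally Relevant": 199, "Not Relevant": 447}
judged = 25 * 5 * 20  # 25 queries x top 5 DBs x top 20 documents
total, rows = relevance_breakdown(
    counts, {"On Point", "Relevant", "Marginally Relevant"})
assert total == judged == 2500
for row in rows:
    print(row)
```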

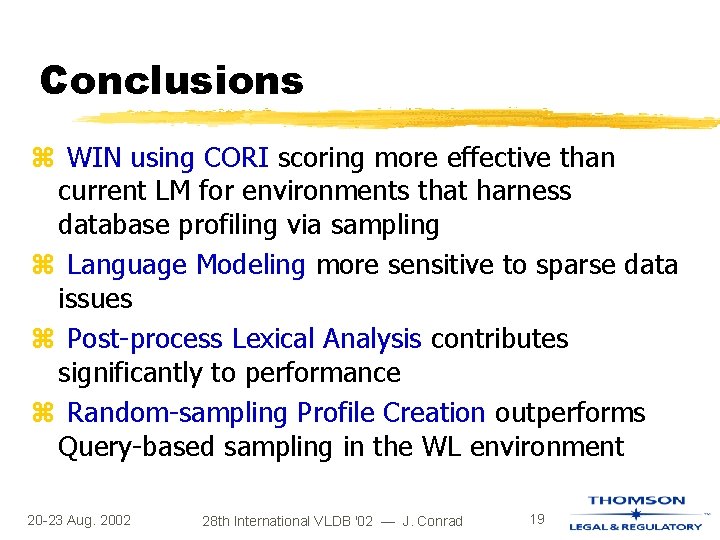

Conclusions

- WIN using CORI scoring is more effective than current LM for environments that harness database profiling via sampling
- Language modeling is more sensitive to sparse-data issues
- Post-process lexical analysis contributes significantly to performance
- Random-sampling profile creation outperforms query-based sampling in the WL environment

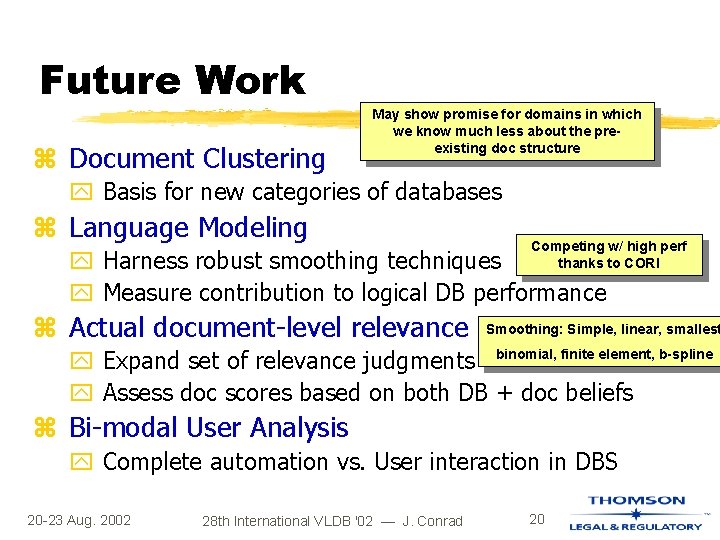

Future Work

- Document clustering
  - Basis for new categories of databases
- Language modeling
  - Harness robust smoothing techniques (e.g., simple, linear, smallest binomial, finite element, b-spline)
  - Measure contribution to logical DB performance
- Actual document-level relevance
  - Expand set of relevance judgments
  - Assess document scores based on both DB and document beliefs
- Bi-modal user analysis
  - Complete automation vs. user interaction in DBS

Notes: Document clustering may show promise for domains in which we know much less about the pre-existing document structure. Language modeling has to compete with the high performance achieved with CORI.


Related Work

- L. Gravano et al., Stanford (VLDB 1995)
  - Presented the GlOSS system to assist in the DB selection task
  - Used "Goodness" as a measure of effectiveness
- J. French et al., U. Virginia (SIGIR 1998)
  - Came up with metrics to evaluate DB selection systems
  - Began to compare the effectiveness of different methods
- J. Callan et al., UMass (SIGIR 95+99, CIKM 2000)
  - Developed the Collection Retrieval Inference Net (CORI)
  - Showed CORI was more effective than GlOSS, CVV, and others

Background

- Exponential growth of data sets on the Web and in commercial enterprises
- Limited means of narrowing the scope of searches to relevant databases
- Application challenges in large domain-specific operational environments
- Need for effective approaches that scale and deliver in focused production systems
