Data Warehousing in the Cloud The End of

- Slides: 56

Data Warehousing in the Cloud (The End of ”Shared-Nothing”) David J. De. Witt 2017 MIT Willis Lang Microsoft Jim Gray Systems Lab © Permission to use contents only with attribution to the authors 1

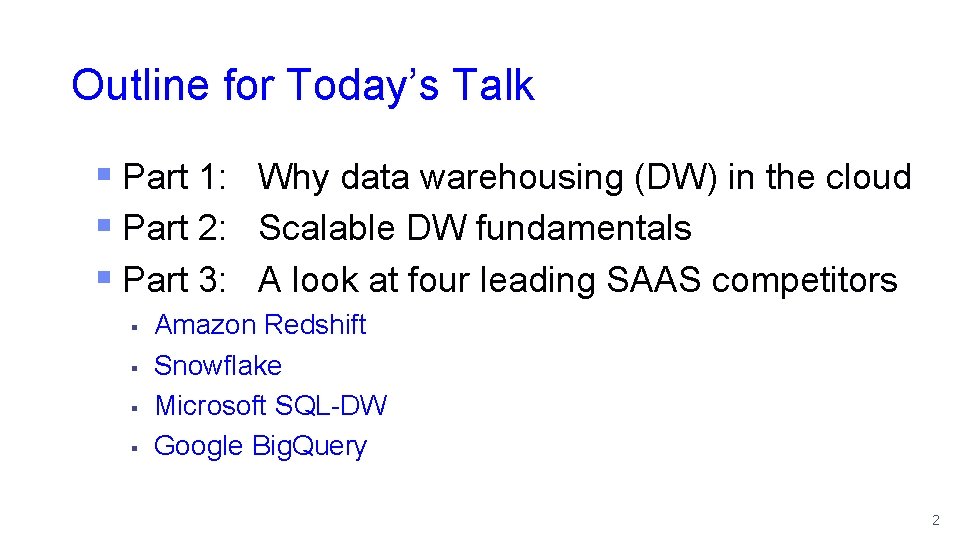

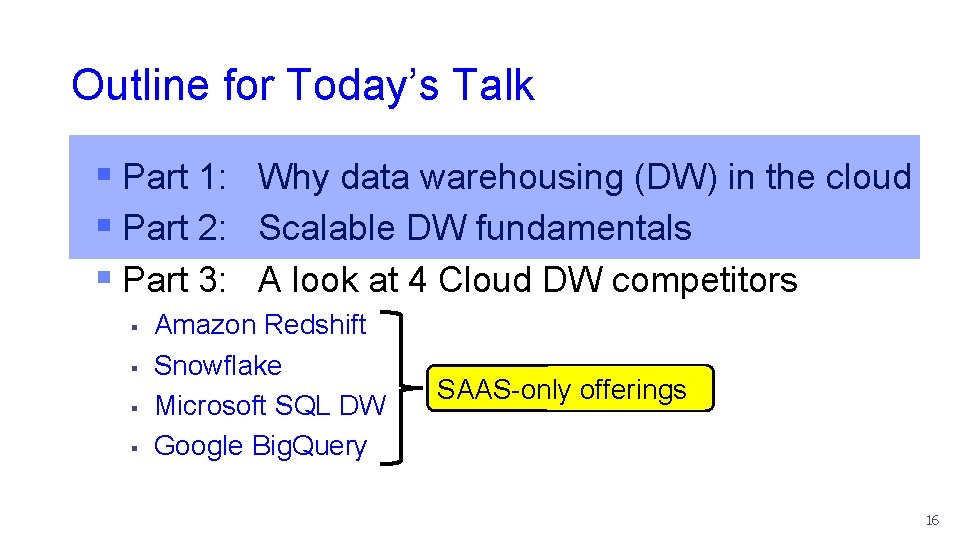

Outline for Today’s Talk § Part 1: Why data warehousing (DW) in the cloud § Part 2: Scalable DW fundamentals § Part 3: A look at four leading SAAS competitors § § Amazon Redshift Snowflake Microsoft SQL-DW Google Big. Query 2

Factors driving the DW explosion § Moore’s Law § $100/TB storage, $1000 servers, commodity networking § Increasing volumes of “dark” data § Data collected but never analyzed § Widening analysis gap of ”traditional” solutions § Due to their cost, complexity, scalability, & rigidity 3

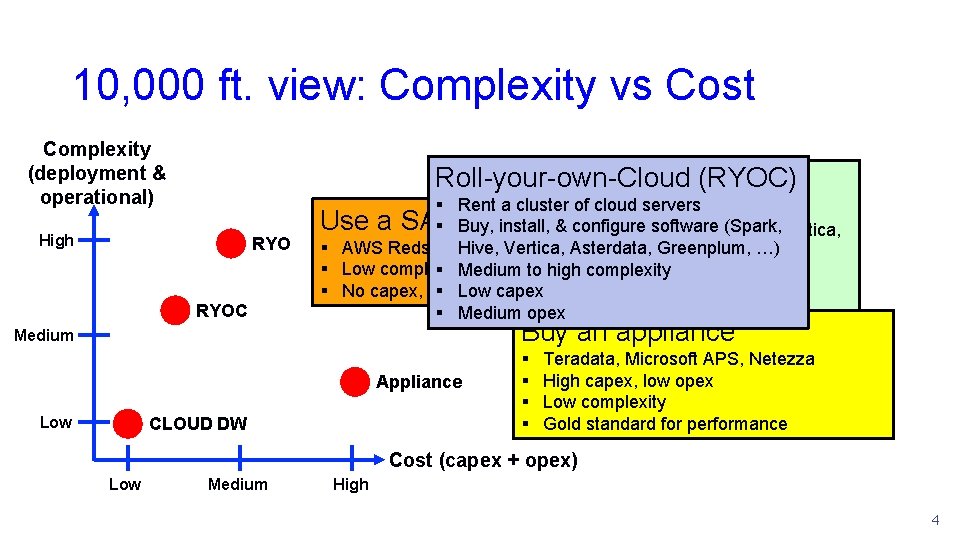

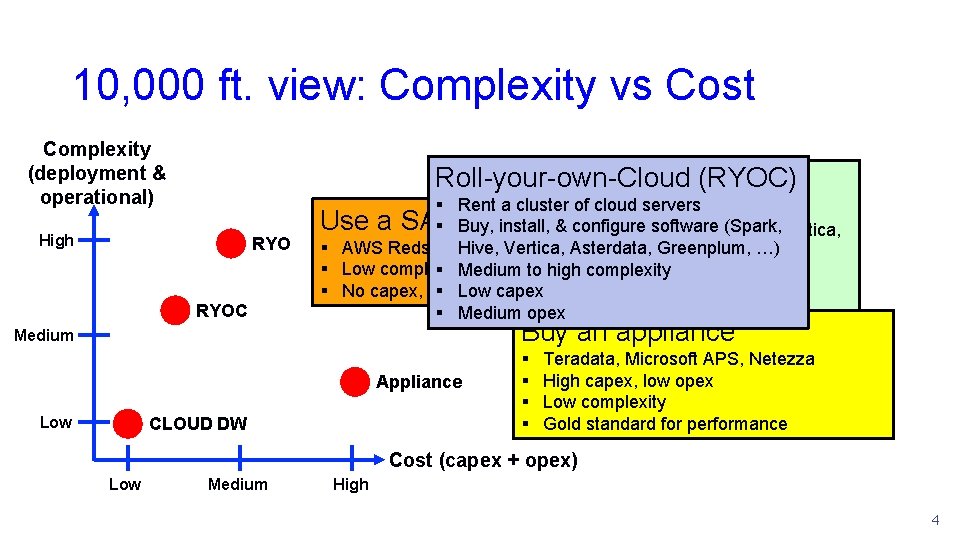

10, 000 ft. view: Complexity vs Cost Complexity (deployment & operational) High RYOC Roll-your-own-Cloud (RYOC) Roll-your-own (RYO) § Rent cluster of cloud servers § a. Buy & install a cluster of servers Use a SAAS DW § Buy, &the configure software (Spark, (Vertica, § install, Buy, in install, &cloud configure software § AWS Redshift, Hive, MSFT SQL DW, Snowflake, Big. Query Vertica, Asterdata, Greenplum, …) § Low complexity § Medium to high complexity High complexity Only 2§ options 5 years ago! § No capex, low opex § Low § capex Medium capex and opex § Medium opex Buy an appliance Medium Appliance Low CLOUD DW § § Teradata, Microsoft APS, Netezza High capex, low opex Low complexity Gold standard for performance Cost (capex + opex) Low Medium High 4

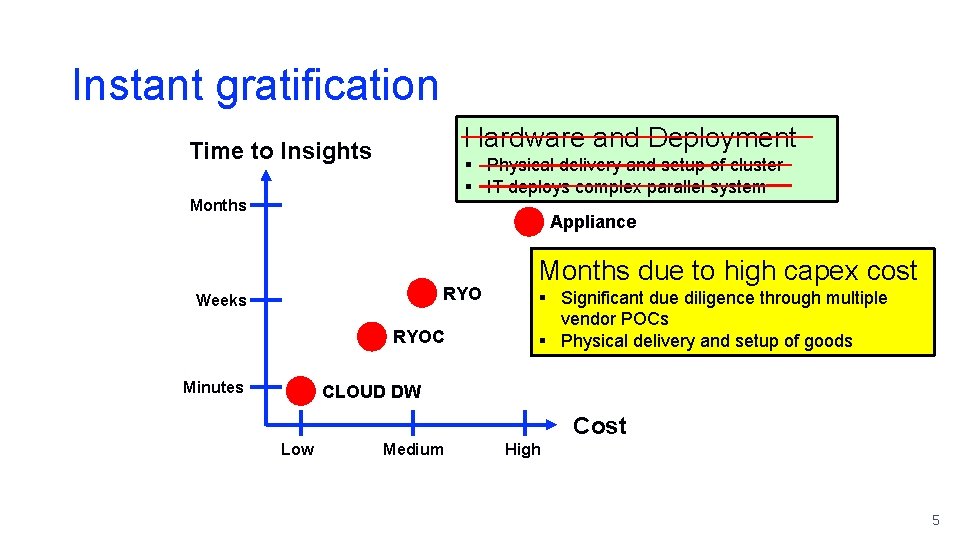

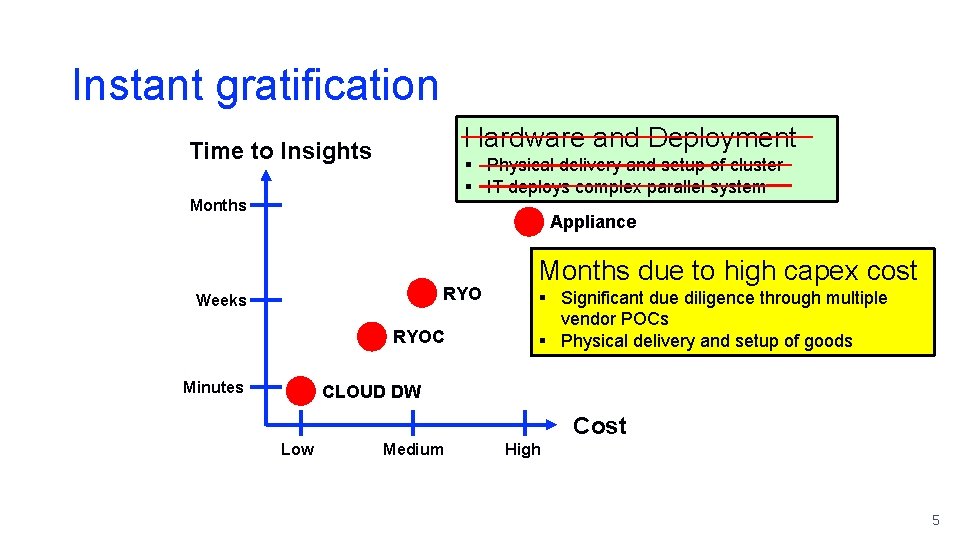

Instant gratification Hardware and Deployment Time to Insights § Physical delivery and setup of cluster § IT deploys complex parallel system Months Appliance RYO Weeks RYOC Minutes Months due to high capex cost § Significant due diligence through multiple vendor POCs § Physical delivery and setup of goods CLOUD DW Cost Low Medium High 5

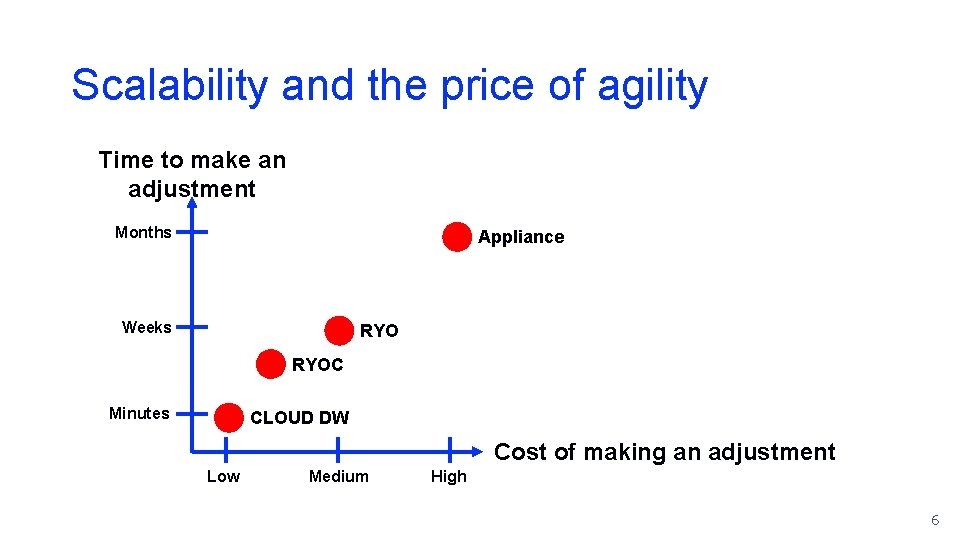

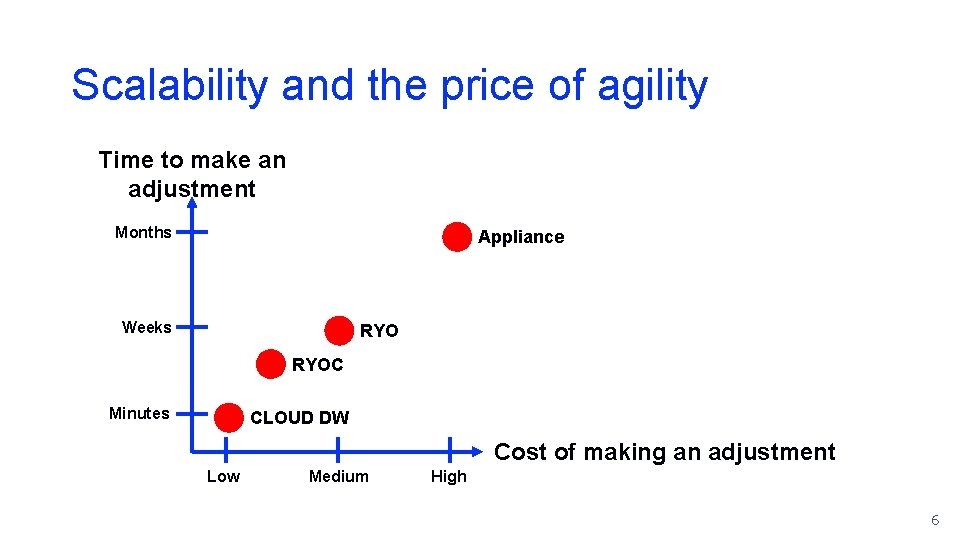

Scalability and the price of agility Time to make an adjustment Months Appliance Weeks RYOC Minutes CLOUD DW Cost of making an adjustment Low Medium High 6

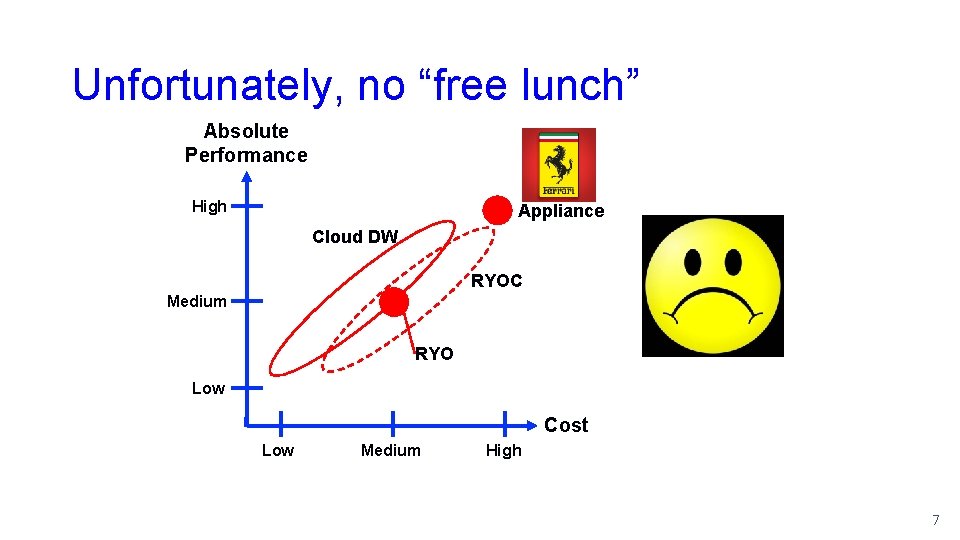

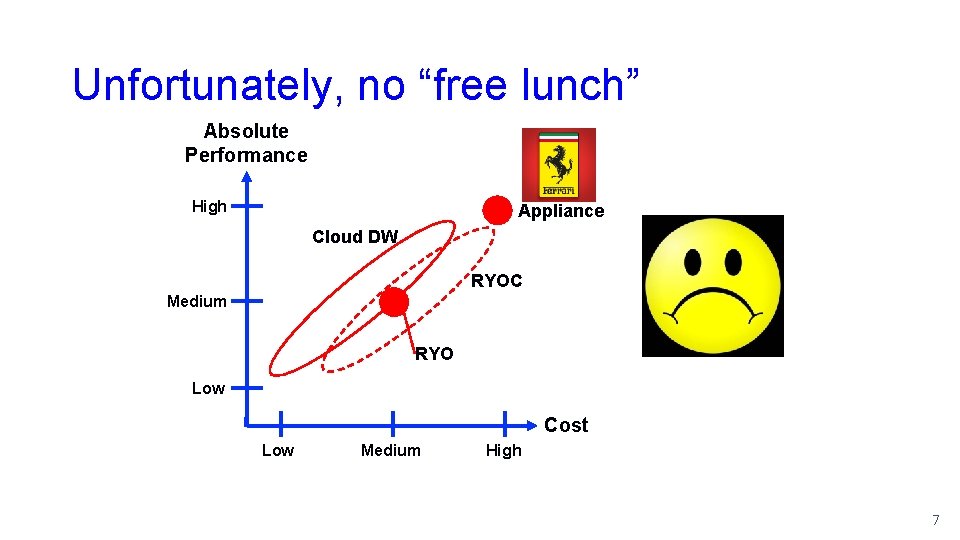

Unfortunately, no “free lunch” Absolute Performance High Appliance Cloud DW RYOC Medium RYO Low Cost Low Medium High 7

Why Cloud DW? § No Cap. Ex and low Op. Ex § Go from conception to insight in hours § Rock bottom storage prices (Azure, AWS S 3, GFS) § Flexibility to scale up/down compute capacity § Simple upgrade process 8

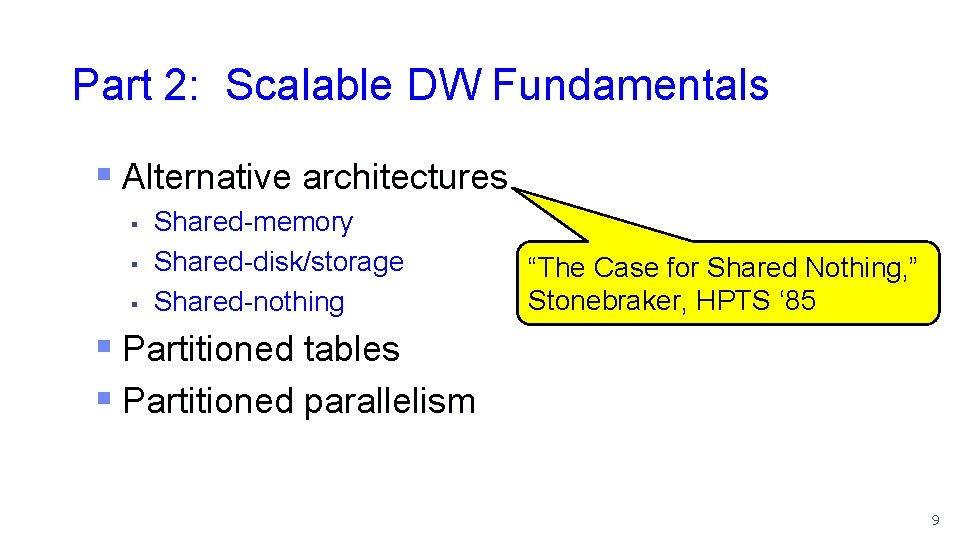

Part 2: Scalable DW Fundamentals § Alternative architectures § § § Shared-memory Shared-disk/storage Shared-nothing “The Case for Shared Nothing, ” Stonebraker, HPTS ‘ 85 § Partitioned tables § Partitioned parallelism 9

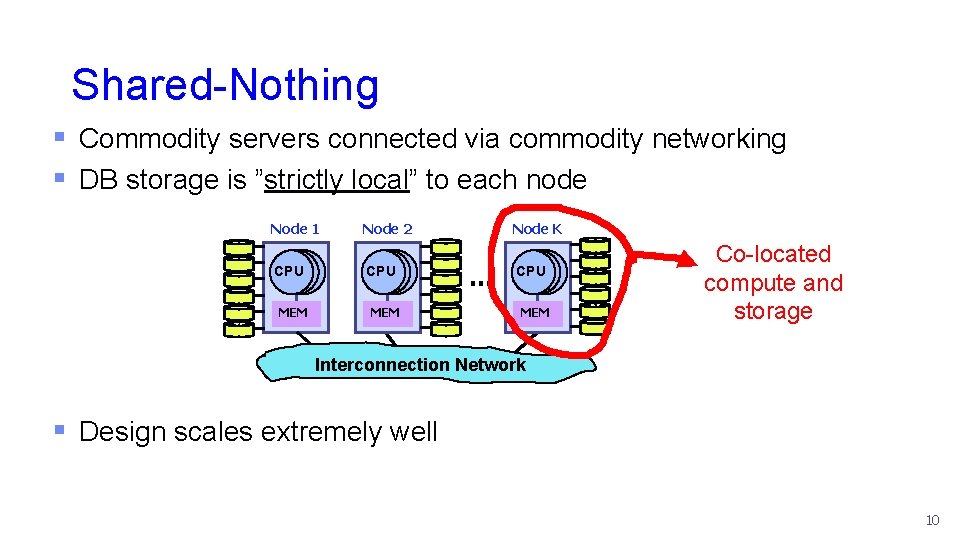

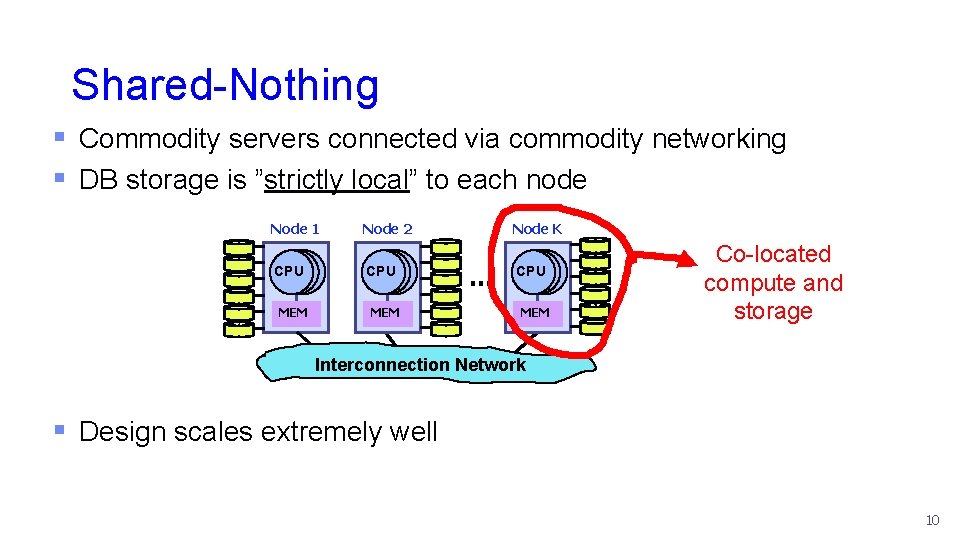

Shared-Nothing § Commodity servers connected via commodity networking § DB storage is ”strictly local” to each node Node 1 Node 2 CPU MEM Node K … CPU MEM Co-located compute and storage Interconnection Network § Design scales extremely well 10

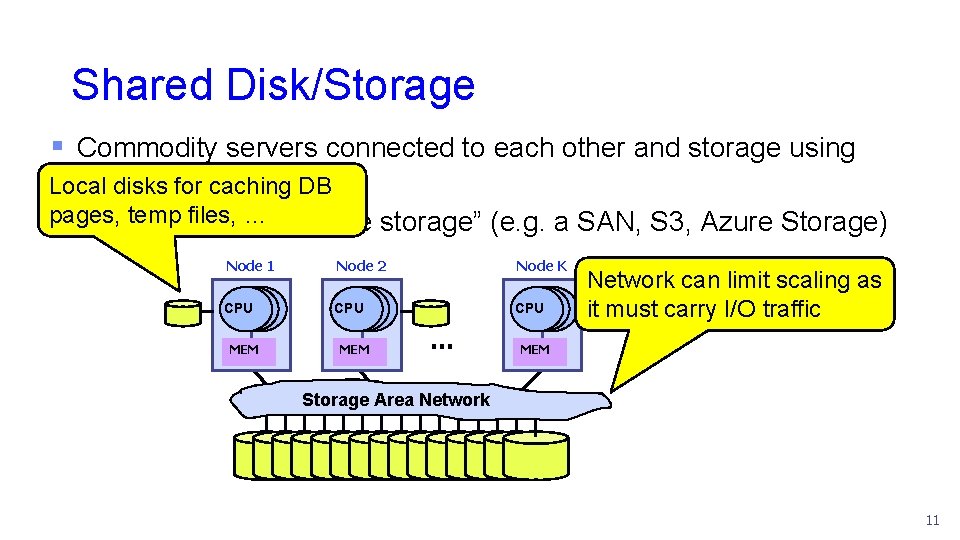

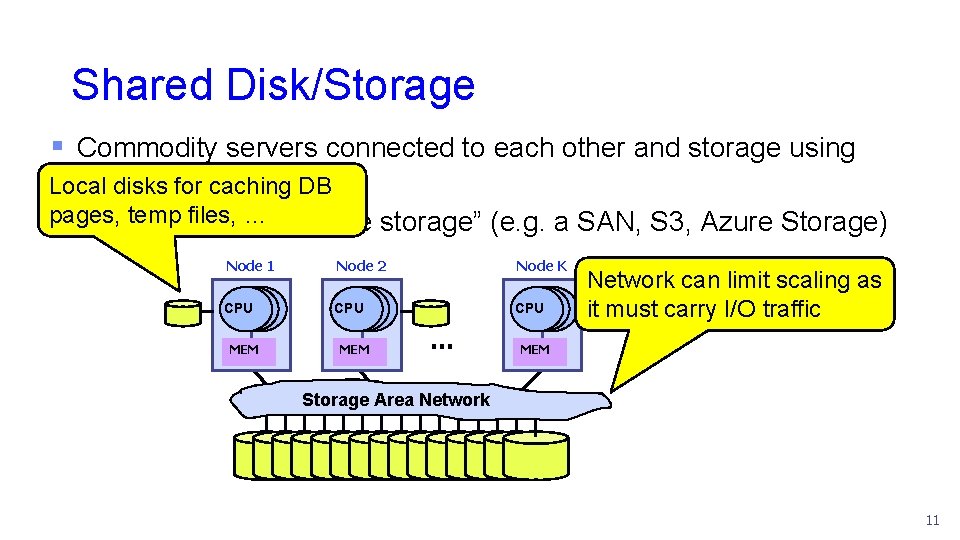

Shared Disk/Storage § Commodity servers connected to each other and storage using commodity networking Local disks for caching DB pages, temp files, on … “remote storage” (e. g. a SAN, S 3, Azure Storage) § DB is stored Node 1 Node 2 Node K CPU CPU MEM … Network can limit scaling as it must carry I/O traffic MEM Storage Area Network 11

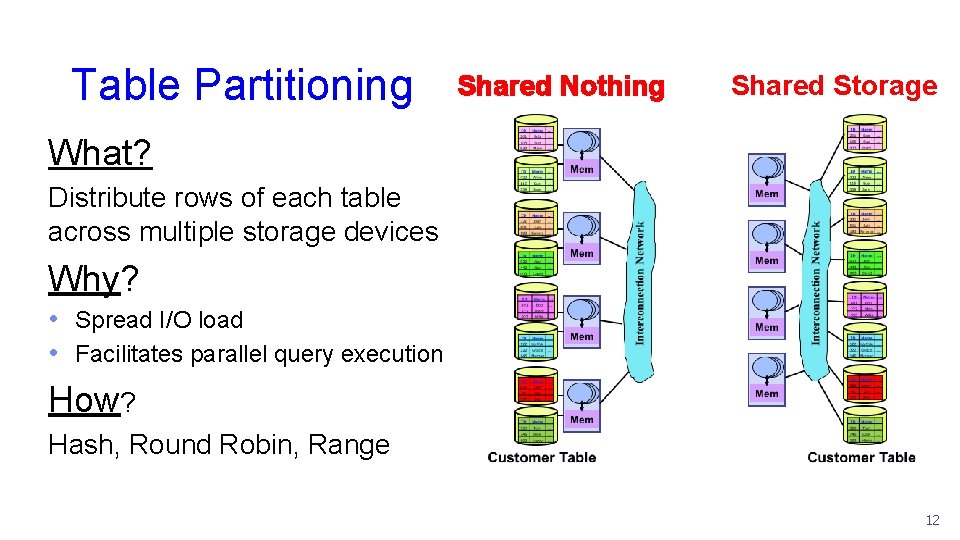

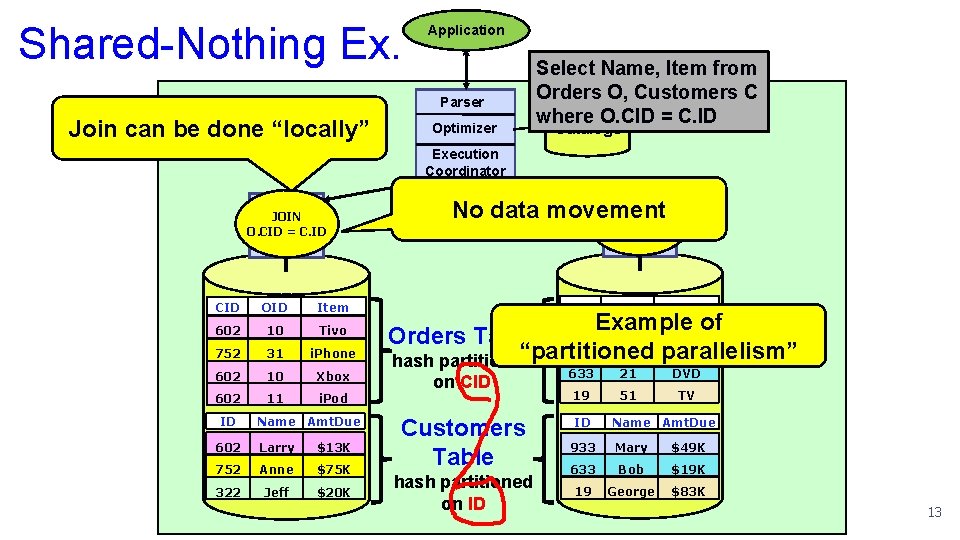

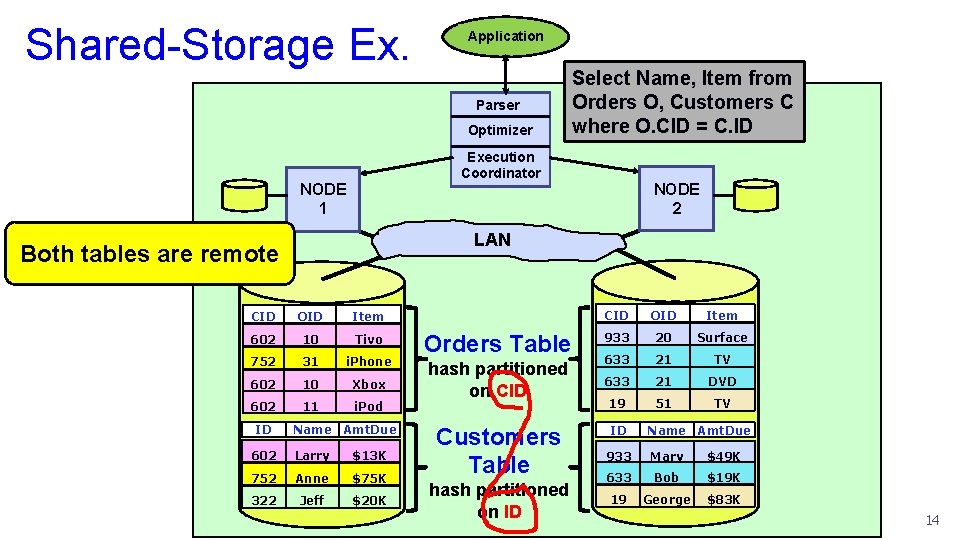

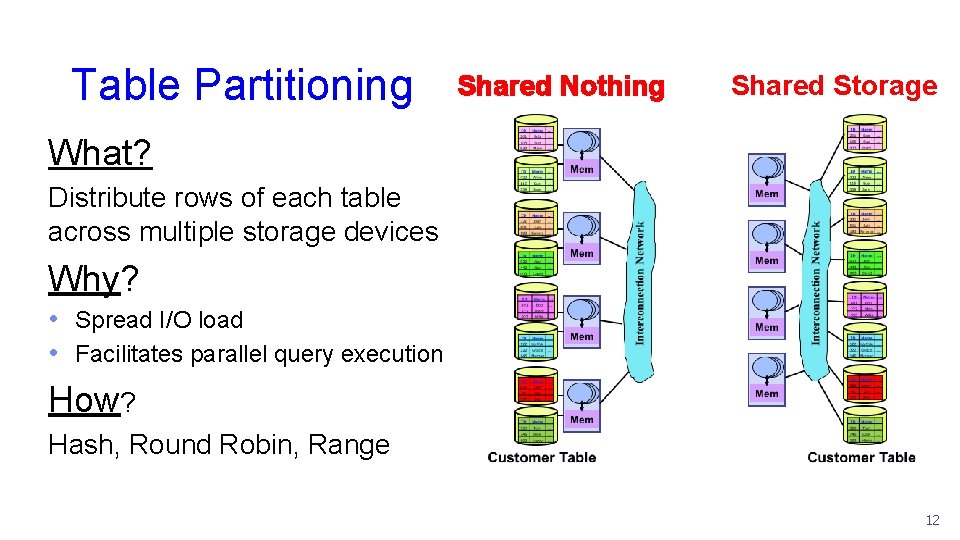

Table Partitioning Shared Nothing Shared Storage What? Distribute rows of each table across multiple storage devices Why? • Spread I/O load • Facilitates parallel query execution How? Hash, Round Robin, Range 12

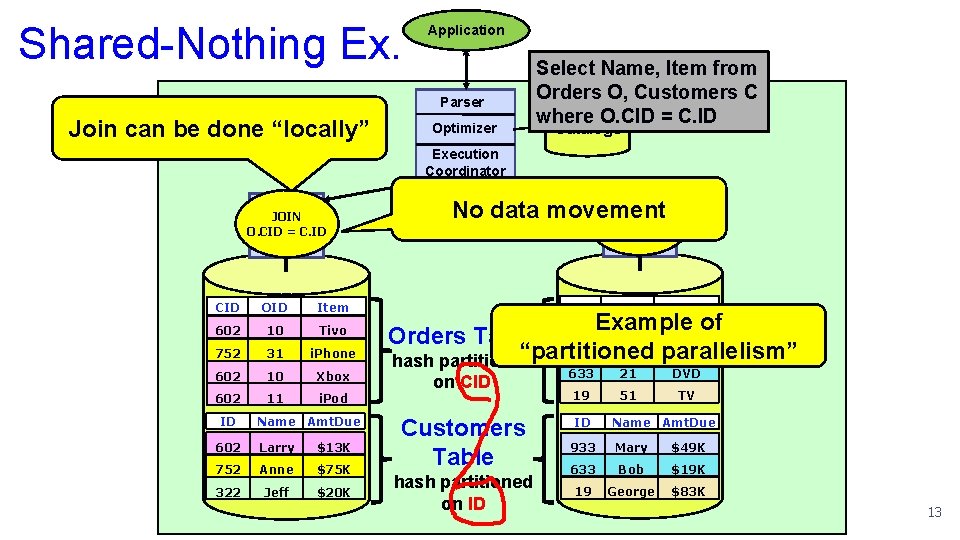

Shared-Nothing Ex. Application Parser Join can be done “locally” Optimizer Select Name, Item from Orders O, Customers C where O. CID = C. ID Catalogs Execution Coordinator NODE JOIN O. CID 1= C. ID CID OID Item 602 10 Tivo 752 31 i. Phone 602 10 Xbox 602 11 i. Pod ID Name Amt. Due 602 Larry $13 K 752 Anne $75 K 322 Jeff $20 K No data movement JOIN NODE O. CID = C. ID 2 CID OID Item 633 21 DVD 19 51 TV Example of Orders Table 933 20 Surface 633 21 TV “partitioned parallelism” hash partitioned on CID Customers Table 933 Mary $49 K hash partitioned on ID 633 Bob $19 K 19 George $83 K ID Name Amt. Due 13

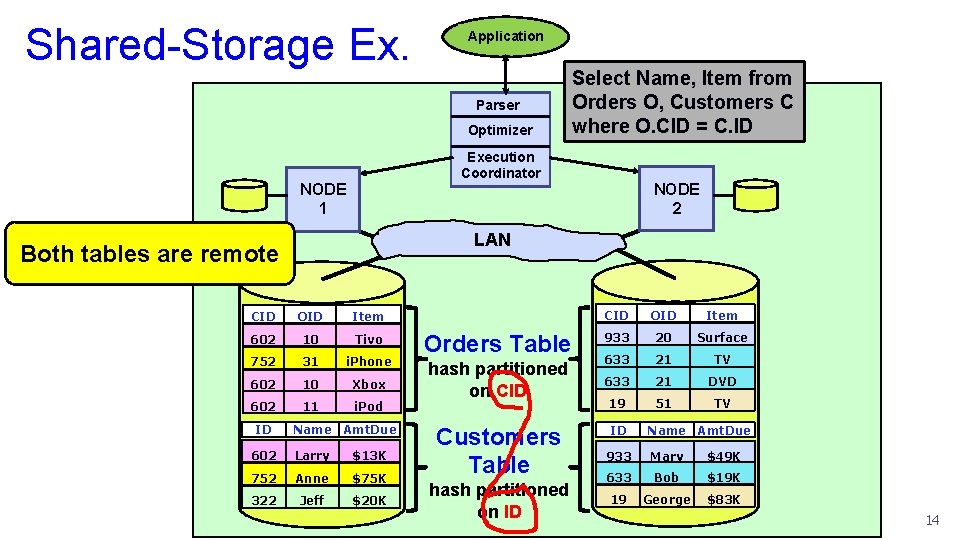

Shared-Storage Ex. Application Parser Optimizer Execution Coordinator NODE 1 NODE 2 LAN Both tables are remote CID OID Item 602 10 Tivo 752 31 i. Phone 602 10 Xbox 602 11 i. Pod ID Select Name, Item from Orders O, Customers C where O. CID = C. ID Name Amt. Due 602 Larry $13 K 752 Anne $75 K 322 Jeff $20 K Orders Table hash partitioned on CID OID Item 933 20 Surface 633 21 TV 633 21 DVD 19 51 TV Customers Table 933 Mary $49 K hash partitioned on ID 633 Bob $19 K 19 George $83 K ID Name Amt. Due 14

For 30+ years § Shared-nothing has been “gold standard” § Teradata, Gamma, Netezza, Vertica, DB 2/PE, SQL Server PDW, Greenplum, Asterdata… § Simplest design § Excellent scalability § Minimizes data movement § Especially for DBs with a star schema design § The “cloud” has changed the game 15

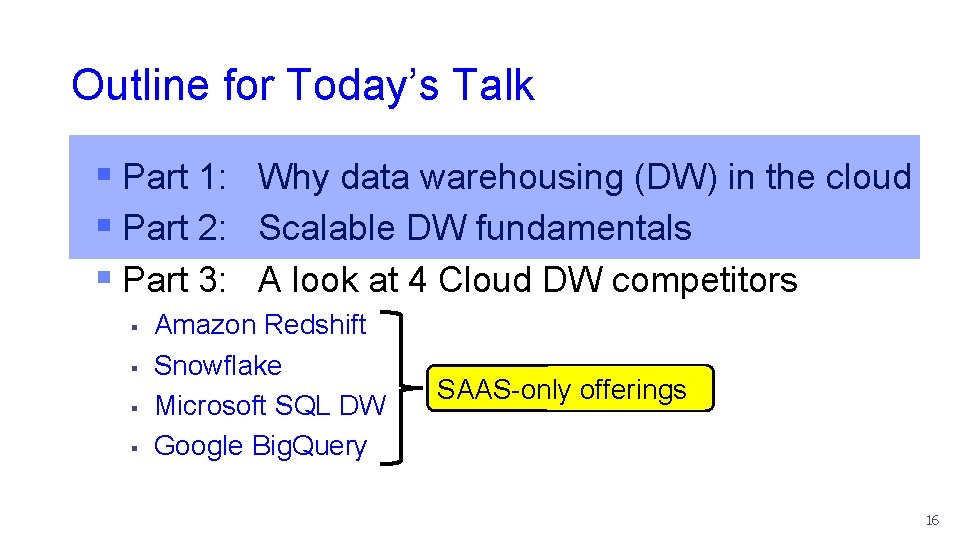

Outline for Today’s Talk § Part 1: Why data warehousing (DW) in the cloud § Part 2: Scalable DW fundamentals § Part 3: A look at 4 Cloud DW competitors § § Amazon Redshift Snowflake Microsoft SQL DW Google Big. Query SAAS-only offerings 16

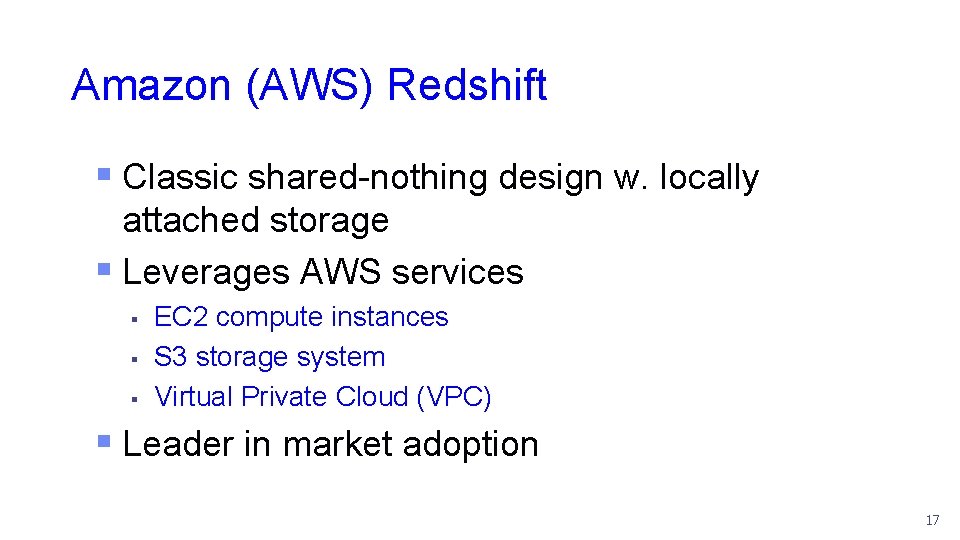

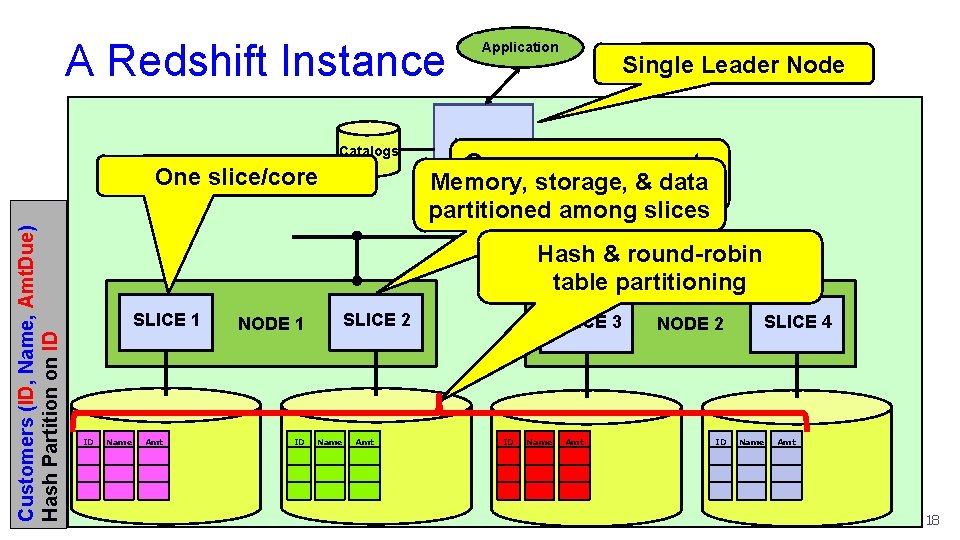

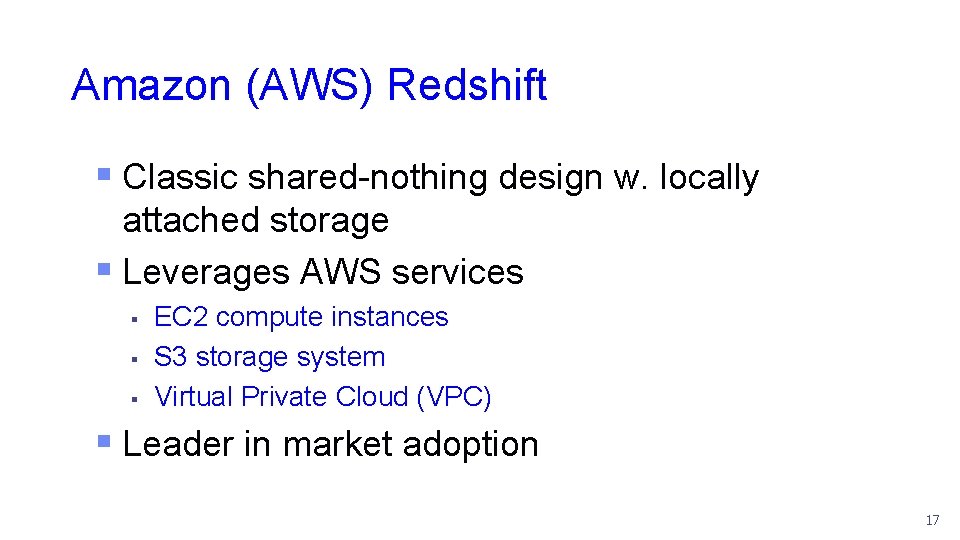

Amazon (AWS) Redshift § Classic shared-nothing design w. locally attached storage § Leverages AWS services § § § EC 2 compute instances S 3 storage system Virtual Private Cloud (VPC) § Leader in market adoption 17

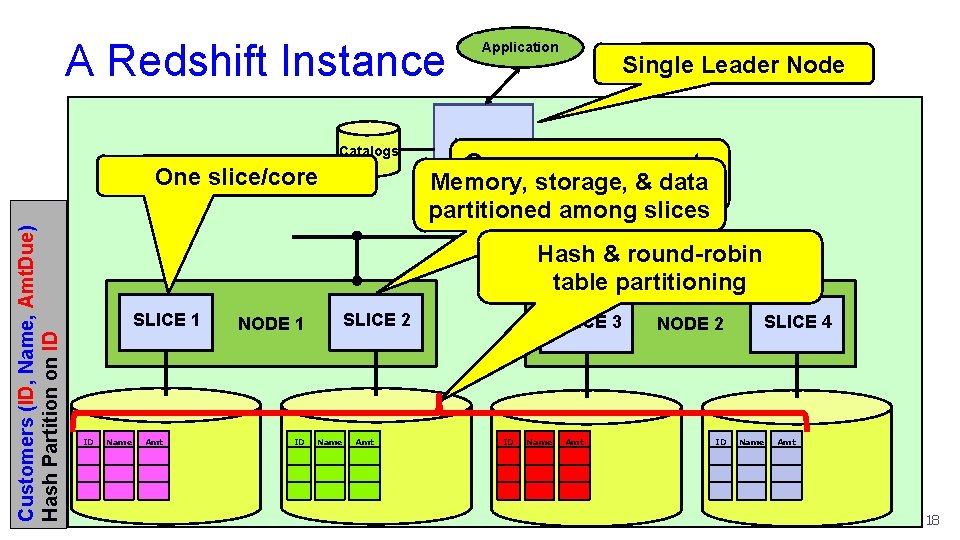

A Redshift Instance Catalogs Customers (ID, Name, Amt. Due) Hash Partition on ID One slice/core Application LEADER NODE One or more compute Memory, & data nodesstorage, (EC 2 instance) partitioned among slices NODE 1 SLICE 1 ID Name Amt Single Leader Node Hash & round-robin table partitioning SLICE 2 NODE 1 ID Name Amt SLICE 3 ID Name Amt NODE 2 ID SLICE 4 Name Amt 18

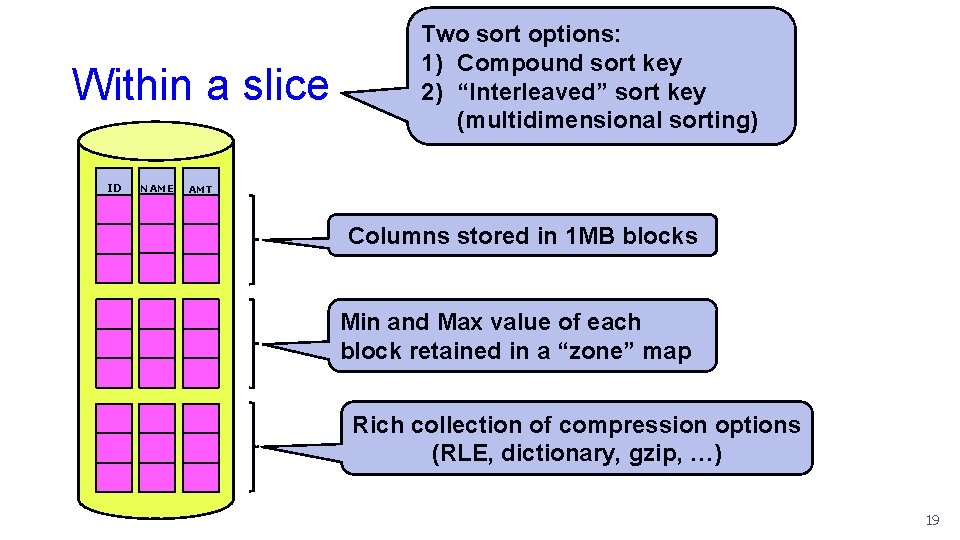

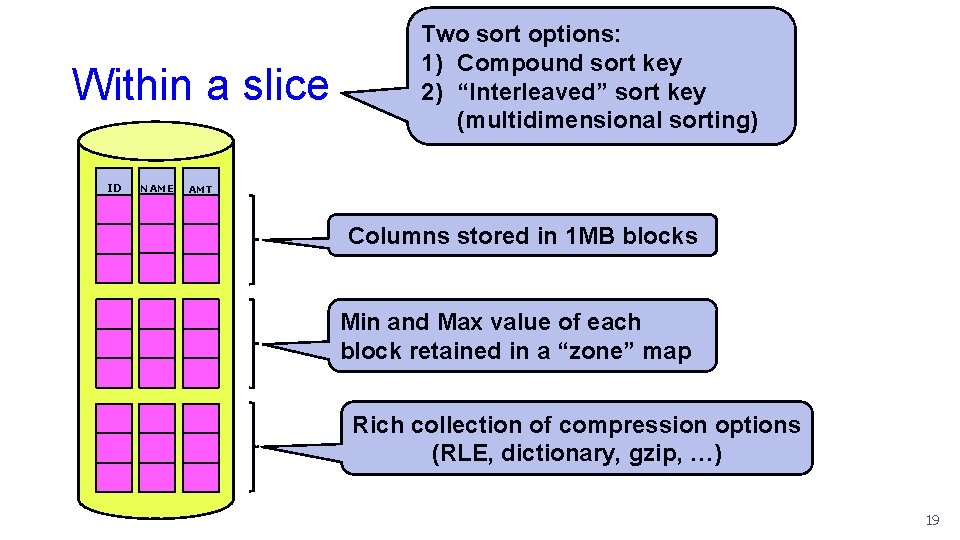

Within a slice ID NAME Two sort options: 1) Compound sort key 2) “Interleaved” sort key (multidimensional sorting) AMT Columns stored in 1 MB blocks Min and Max value of each block retained in a “zone” map Rich collection of compression options (RLE, dictionary, gzip, …) 19

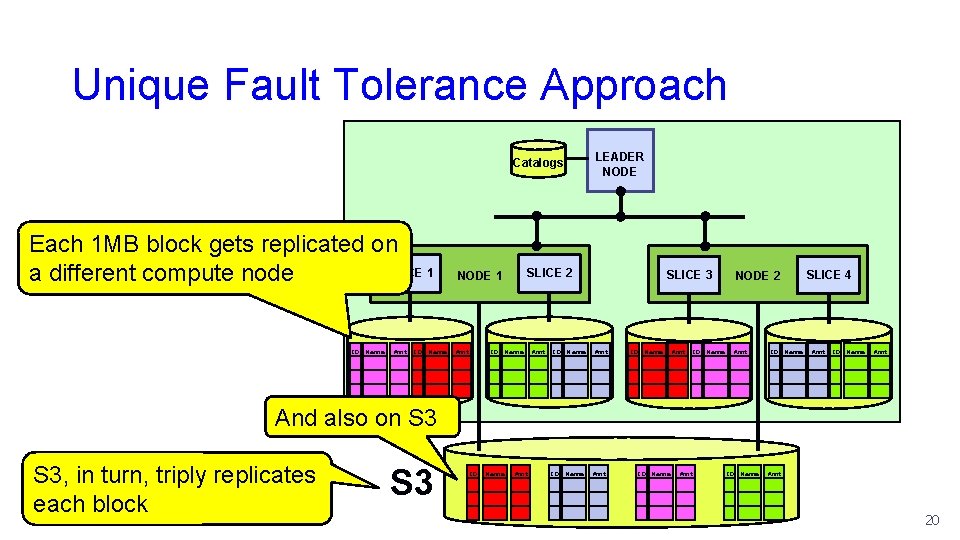

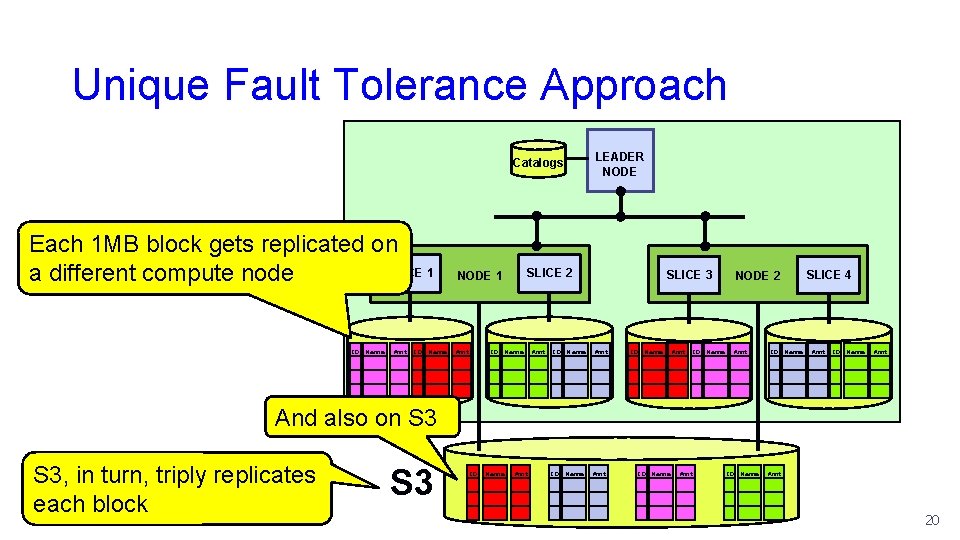

Unique Fault Tolerance Approach Catalogs Each 1 MB block gets replicated on SLICE 1 a different compute node ID Name Amt ID Name SLICE 2 NODE 1 Amt LEADER NODE ID Name Amt ID Name SLICE 3 Amt ID Name NODE 2 Amt ID Name SLICE 4 Amt ID Name Amt And also on S 3, in turn, triply replicates each block S 3 ID Name Amt 20

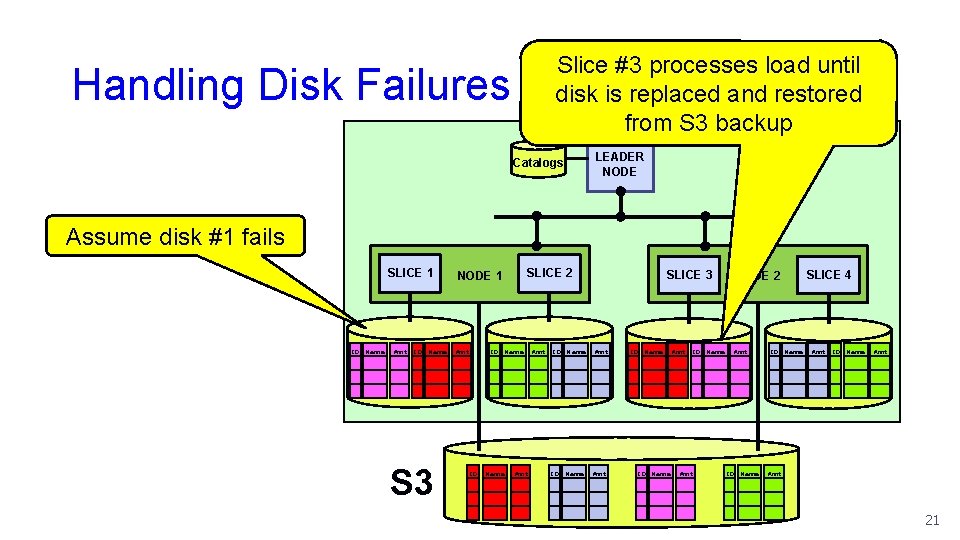

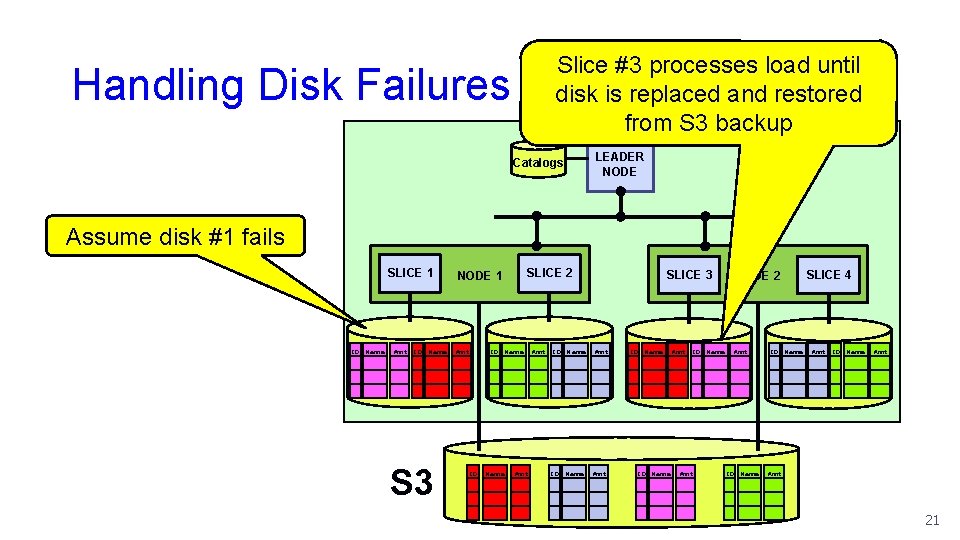

Slice #3 processes load until disk is replaced and restored from S 3 backup Handling Disk Failures Catalogs LEADER NODE Assume disk #1 fails SLICE 1 ID Name Amt ID Name S 3 SLICE 2 NODE 1 Amt ID Name SLICE 3 Amt ID Name Amt NODE 2 Amt ID Name SLICE 4 Amt ID Name Amt 21

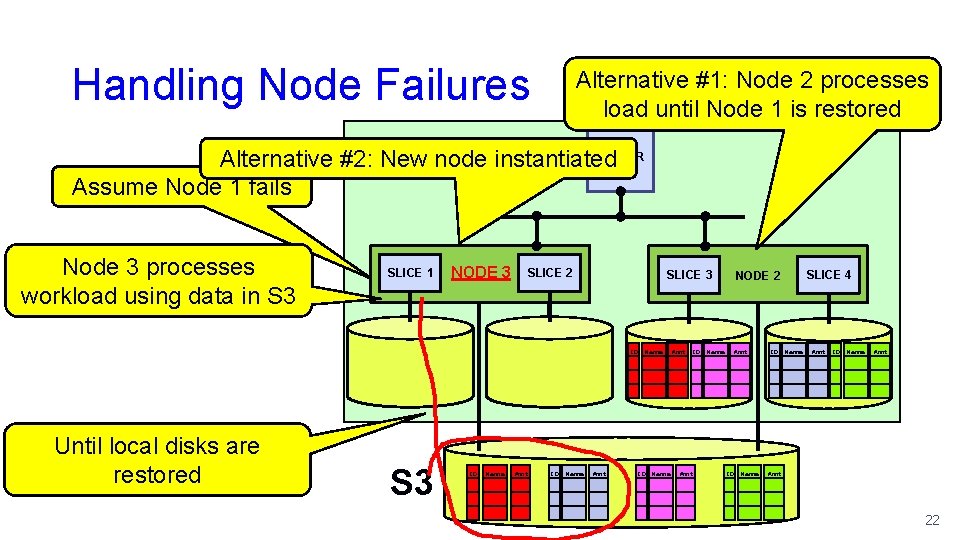

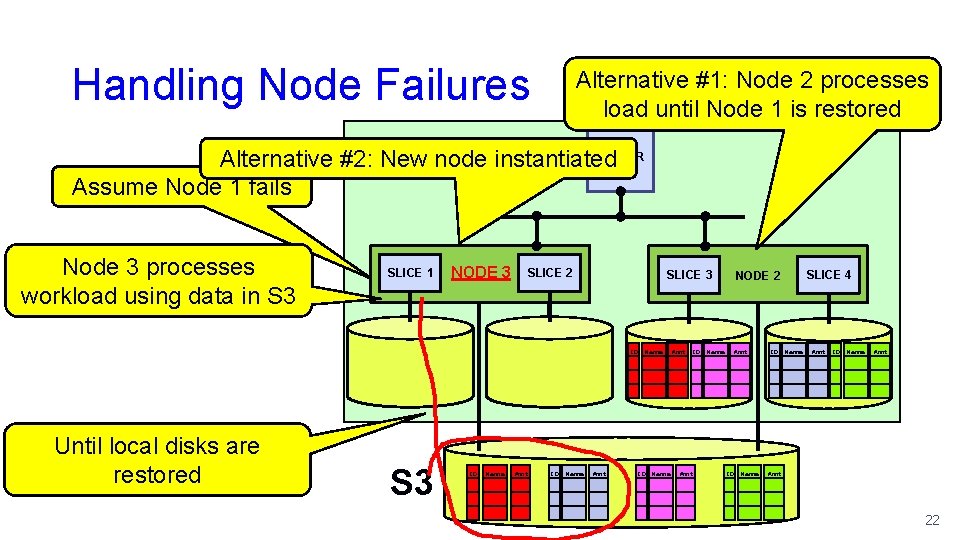

Handling Node Failures Alternative #1: Node 2 processes load until Node 1 is restored LEADER Catalogs Alternative #2: New node instantiated NODE Assume Node 1 fails Node 3 processes workload using data in S 3 SLICE 1 ID Name Until local disks are restored Amt ID Name S 3 NODE 13 Amt SLICE 2 ID Name Amt ID Name SLICE 3 Amt ID Name Amt NODE 2 Amt ID Name SLICE 4 Amt ID Name Amt 22

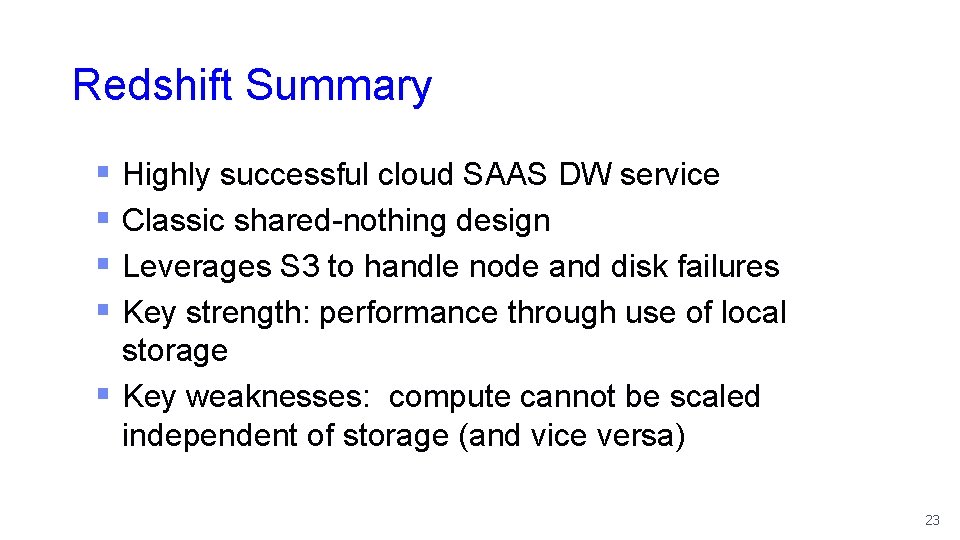

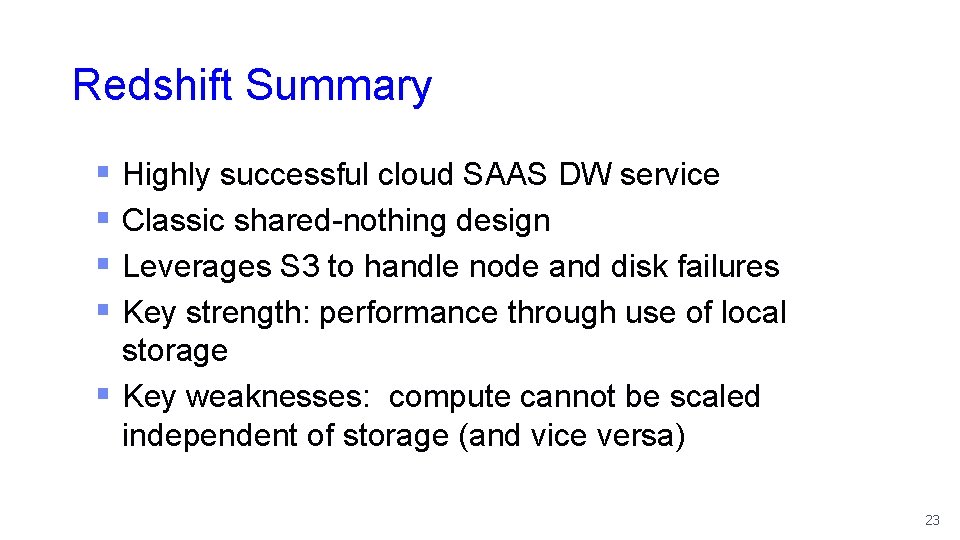

Redshift Summary § Highly successful cloud SAAS DW service § Classic shared-nothing design § Leverages S 3 to handle node and disk failures § Key strength: performance through use of local storage § Key weaknesses: compute cannot be scaled independent of storage (and vice versa) 23

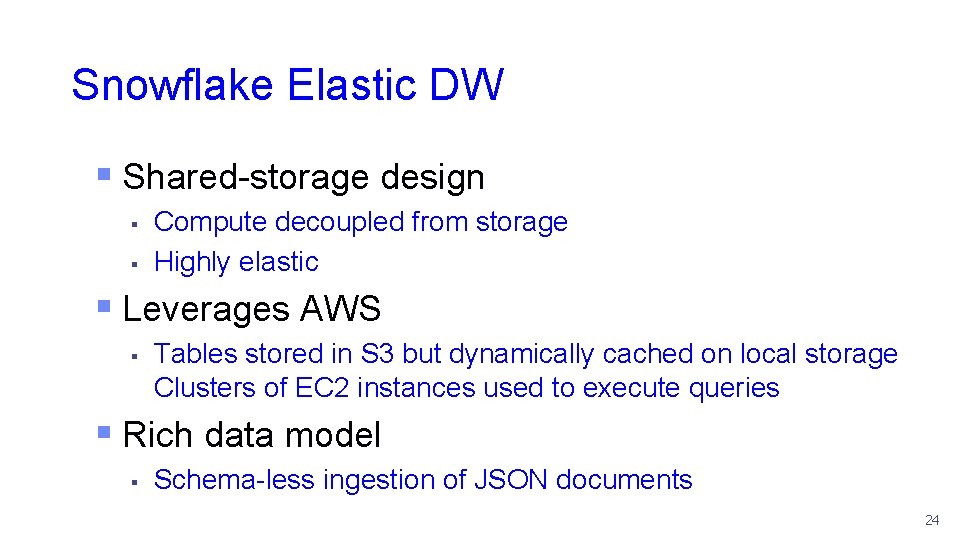

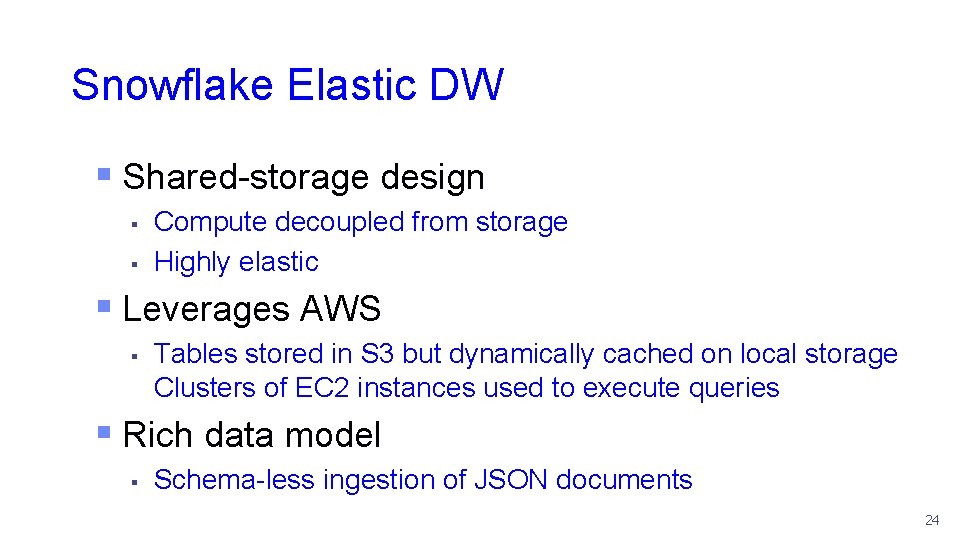

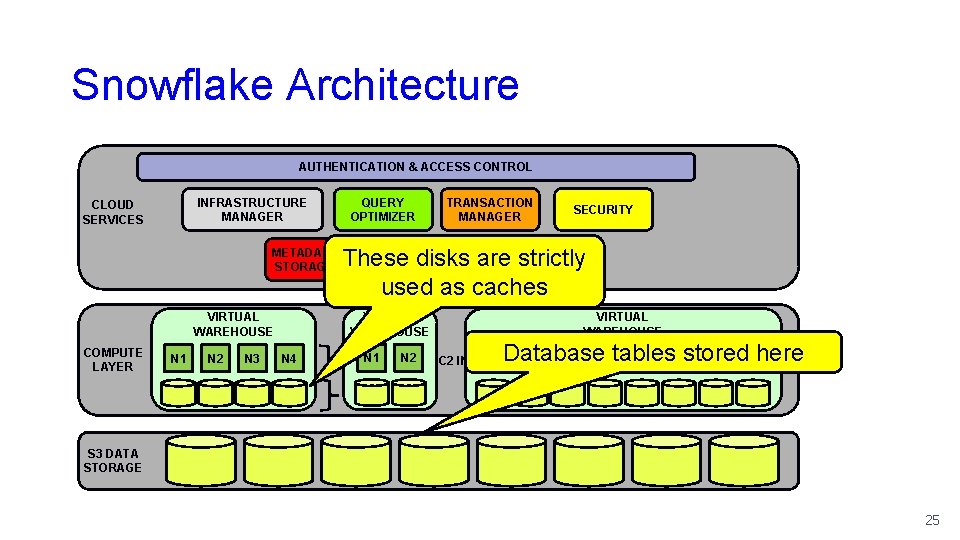

Snowflake Elastic DW § Shared-storage design § § Compute decoupled from storage Highly elastic § Leverages AWS § Tables stored in S 3 but dynamically cached on local storage Clusters of EC 2 instances used to execute queries § Rich data model § Schema-less ingestion of JSON documents 24

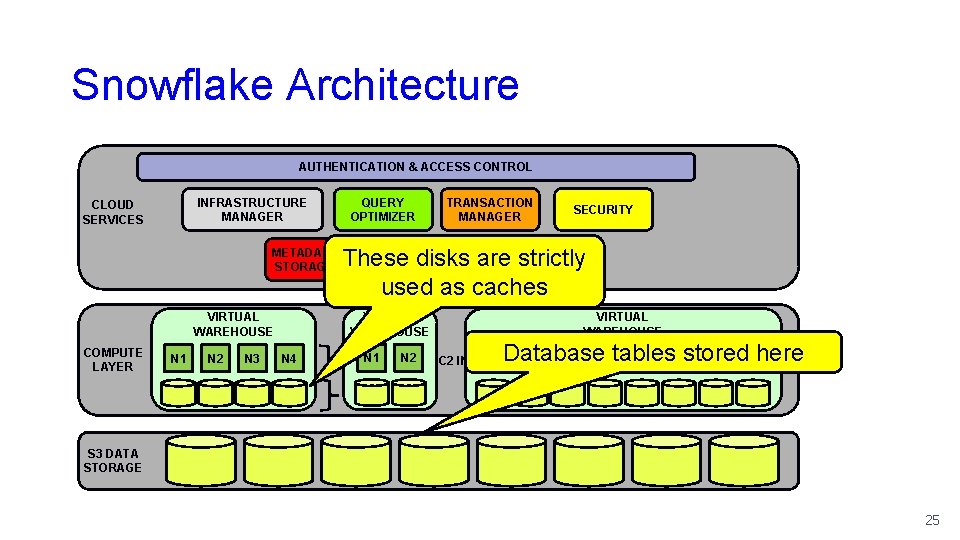

Snowflake Architecture AUTHENTICATION & ACCESS CONTROL INFRASTRUCTURE MANAGER CLOUD SERVICES METADATA STORAGE VIRTUAL WAREHOUSE COMPUTE LAYER N 1 N 2 N 3 QUERY OPTIMIZER TRANSACTION MANAGER These disks are strictly used as caches VIRTUAL WAREHOUSE N 4 SECURITY VIRTUAL WAREHOUSE Database N 3 N 4 tables N 5 N 6 stored N 7 N 8 here N 1 N 2 OF EC 2 INSTANCES N 1 N 2 CLUSTER DATA CACHE S 3 DATA STORAGE 25

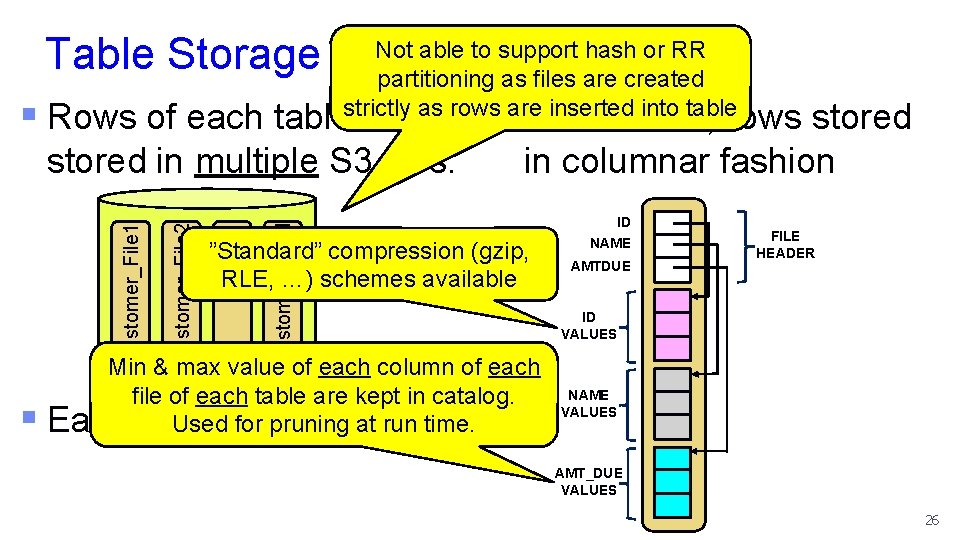

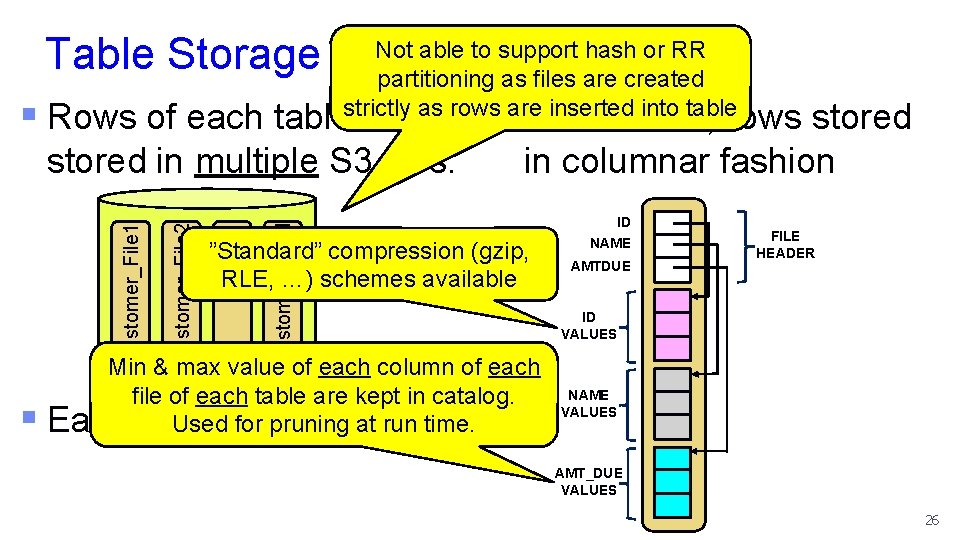

Table Storage Not able to support hash or RR partitioning as files are created strictly as rows are inserted into table § Rows of each table are § Customer_File. N in columnar fashion ”Standard” compression (gzip, RLE, …) schemes available … Customer_File 2 Customer_File 1 stored in multiple S 3 files: § Inside a file, rows stored Min & max value of each column of each file of each table are kept in catalog. Each file. Used is ~10 MB for pruning at run time. ID NAME AMTDUE FILE HEADER ID VALUES NAME VALUES AMT_DUE VALUES 26

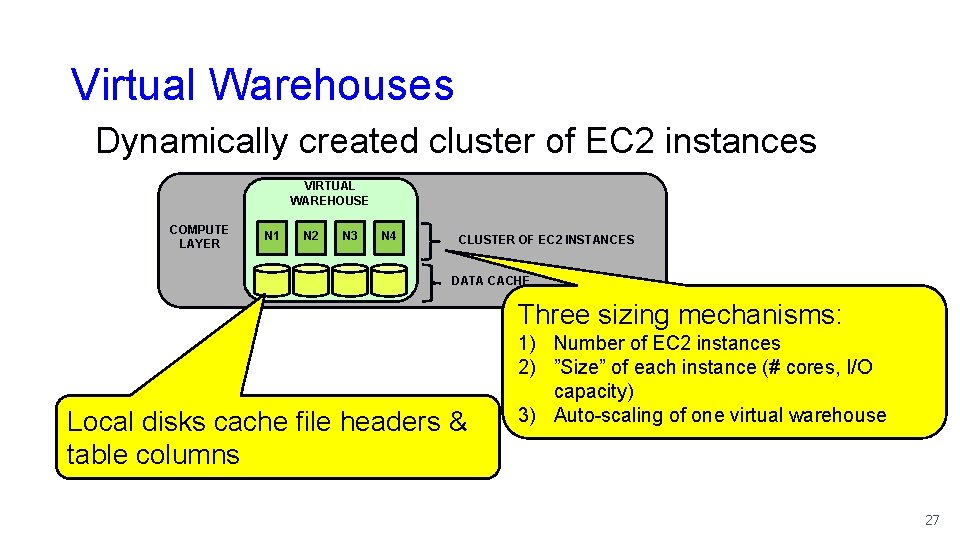

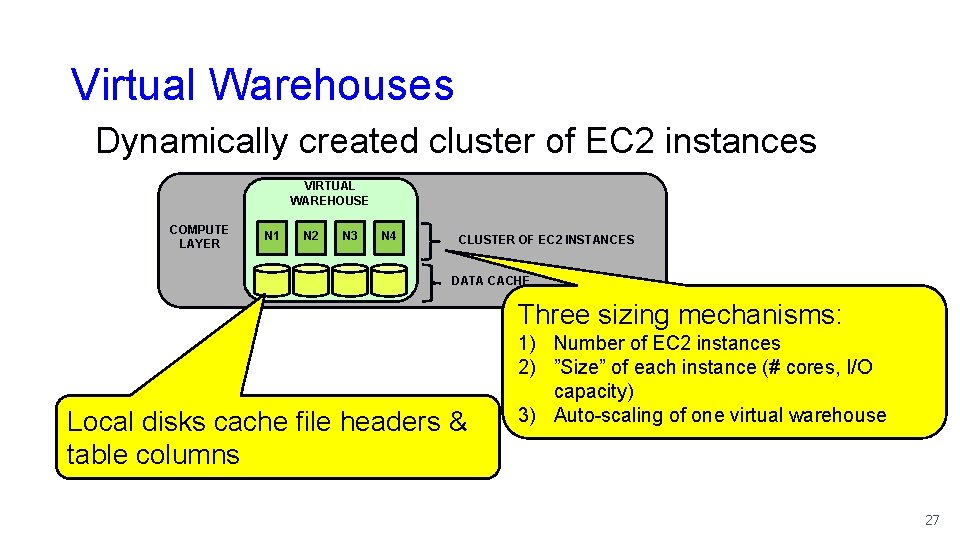

Virtual Warehouses Dynamically created cluster of EC 2 instances VIRTUAL WAREHOUSE COMPUTE LAYER N 1 N 2 N 3 N 4 CLUSTER OF EC 2 INSTANCES DATA CACHE Three sizing mechanisms: Local disks cache file headers & table columns 1) Number of EC 2 instances 2) ”Size” of each instance (# cores, I/O capacity) 3) Auto-scaling of one virtual warehouse 27

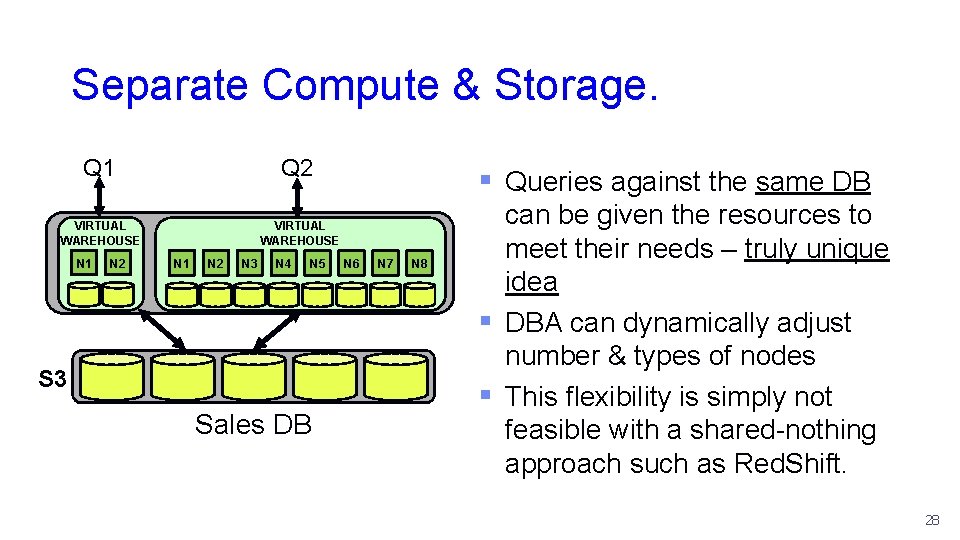

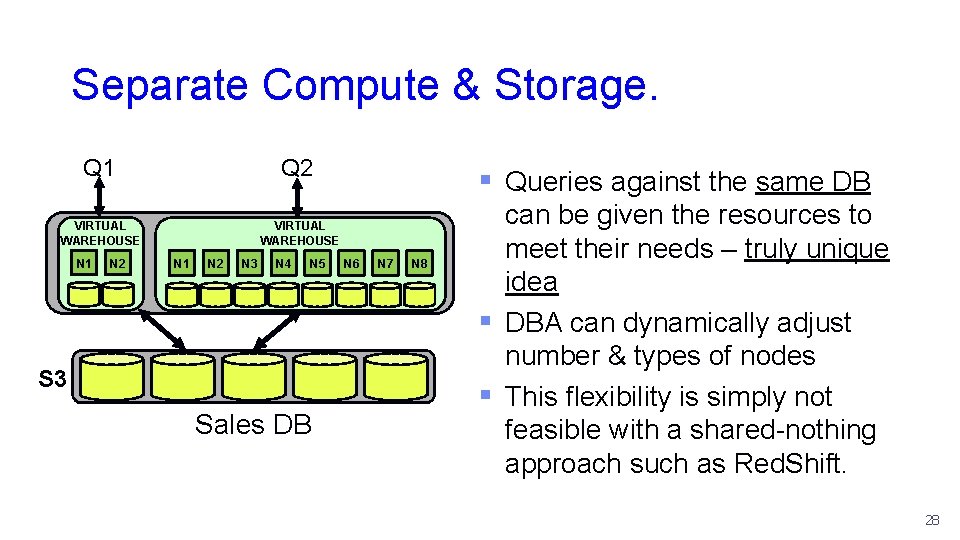

Separate Compute & Storage. Q 1 Q 2 VIRTUAL WAREHOUSE N 1 N 2 N 3 N 4 N 5 S 3 Sales DB § Queries against the same DB N 6 N 7 N 8 can be given the resources to meet their needs – truly unique idea § DBA can dynamically adjust number & types of nodes § This flexibility is simply not feasible with a shared-nothing approach such as Red. Shift. 28

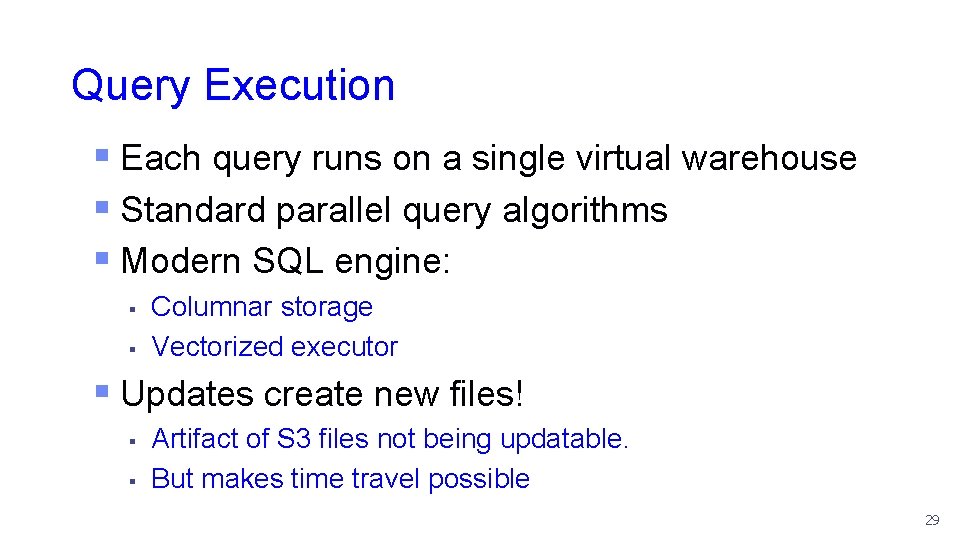

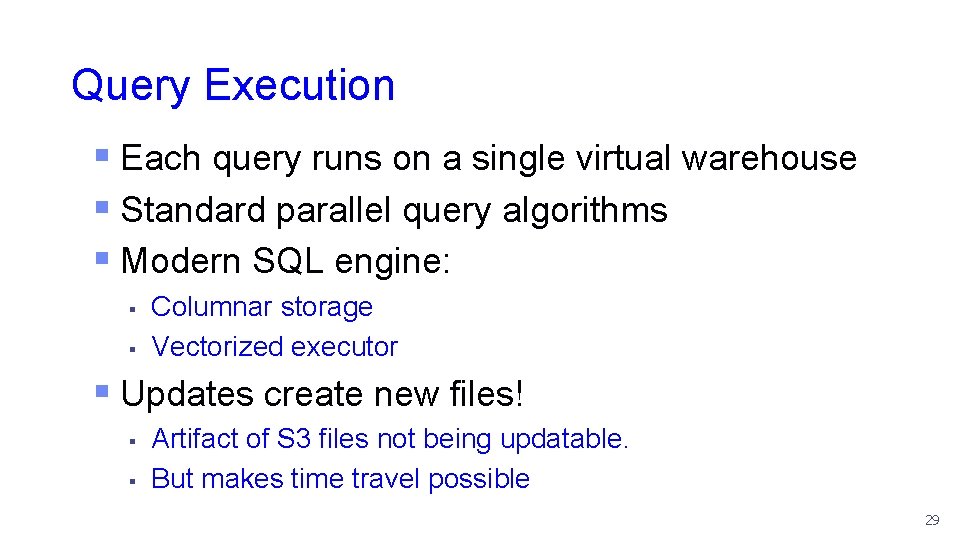

Query Execution § Each query runs on a single virtual warehouse § Standard parallel query algorithms § Modern SQL engine: § § Columnar storage Vectorized executor § Updates create new files! § § Artifact of S 3 files not being updatable. But makes time travel possible 29

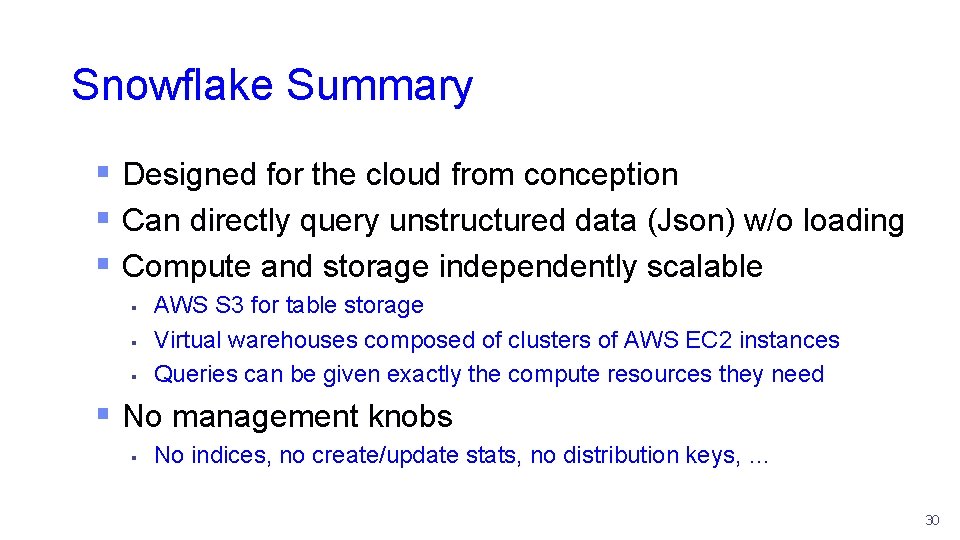

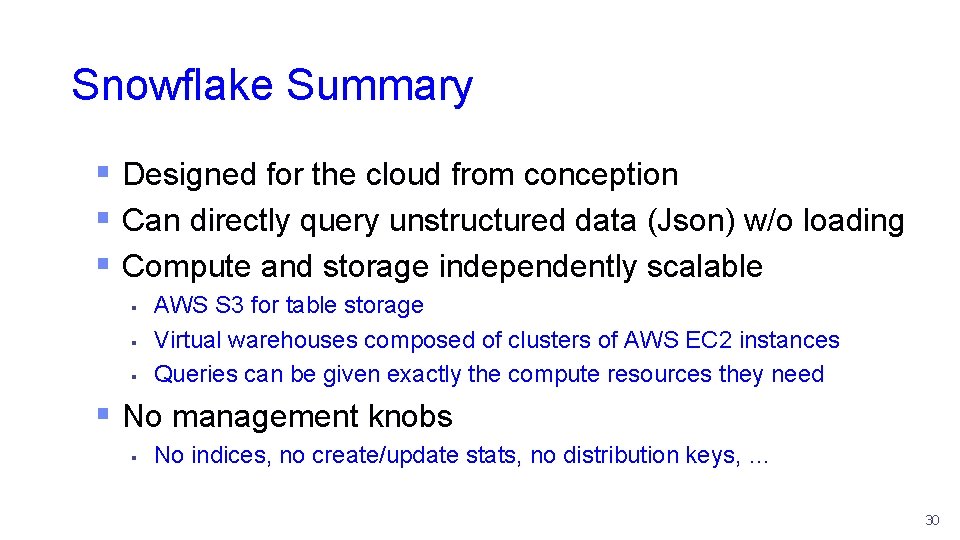

Snowflake Summary § Designed for the cloud from conception § Can directly query unstructured data (Json) w/o loading § Compute and storage independently scalable § § § AWS S 3 for table storage Virtual warehouses composed of clusters of AWS EC 2 instances Queries can be given exactly the compute resources they need § No management knobs § No indices, no create/update stats, no distribution keys, … 30

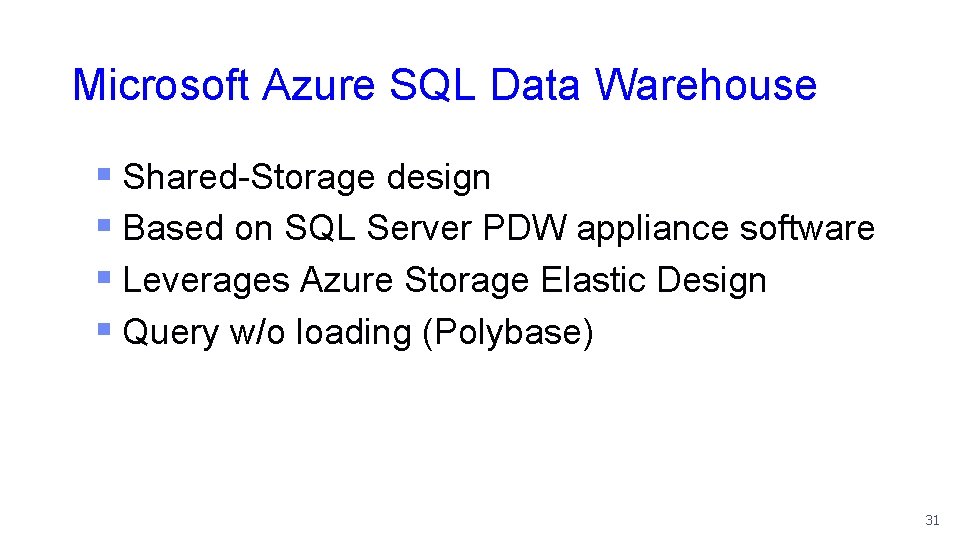

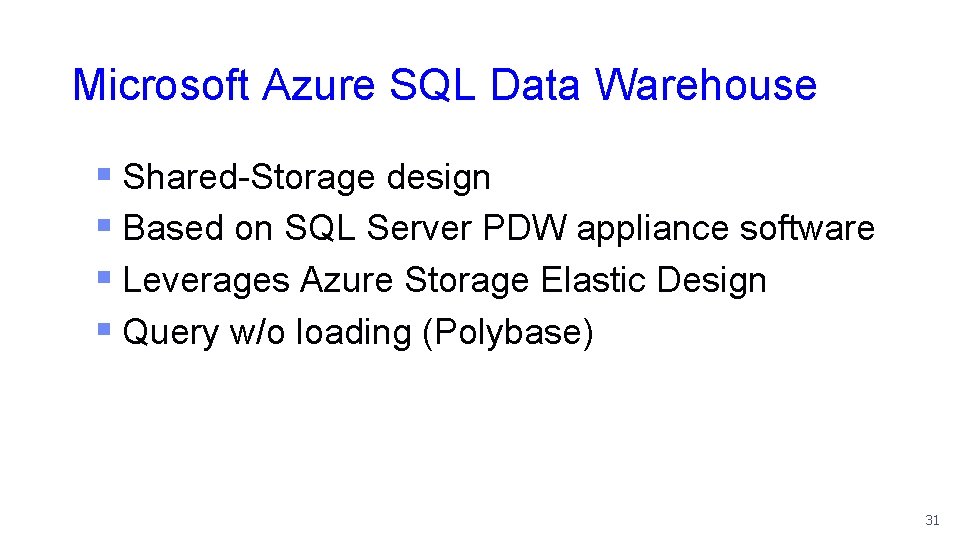

Microsoft Azure SQL Data Warehouse § Shared-Storage design § Based on SQL Server PDW appliance software § Leverages Azure Storage Elastic Design § Query w/o loading (Polybase) 31

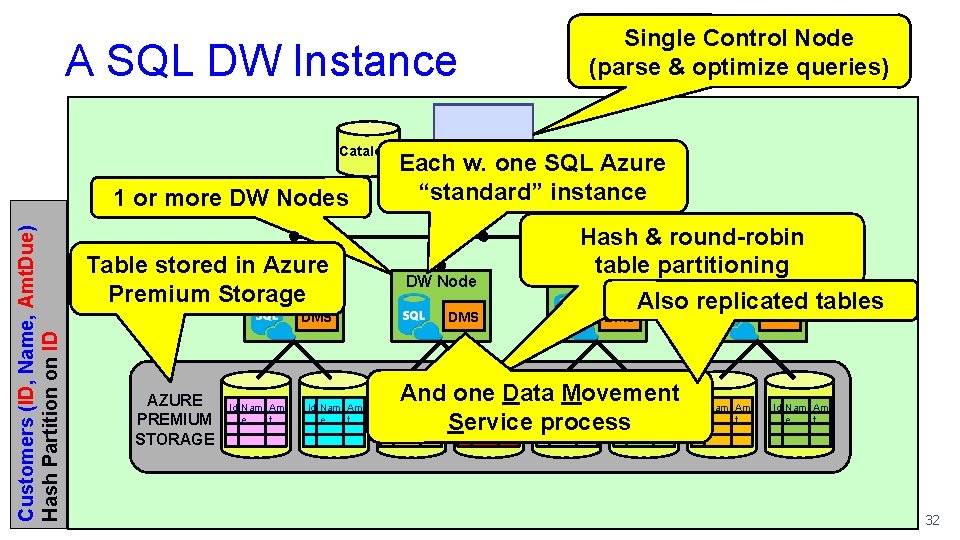

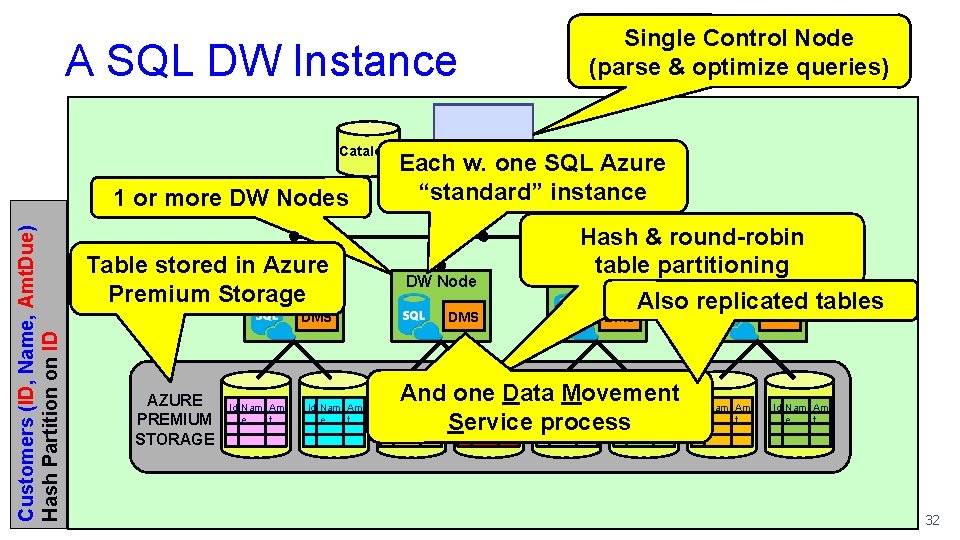

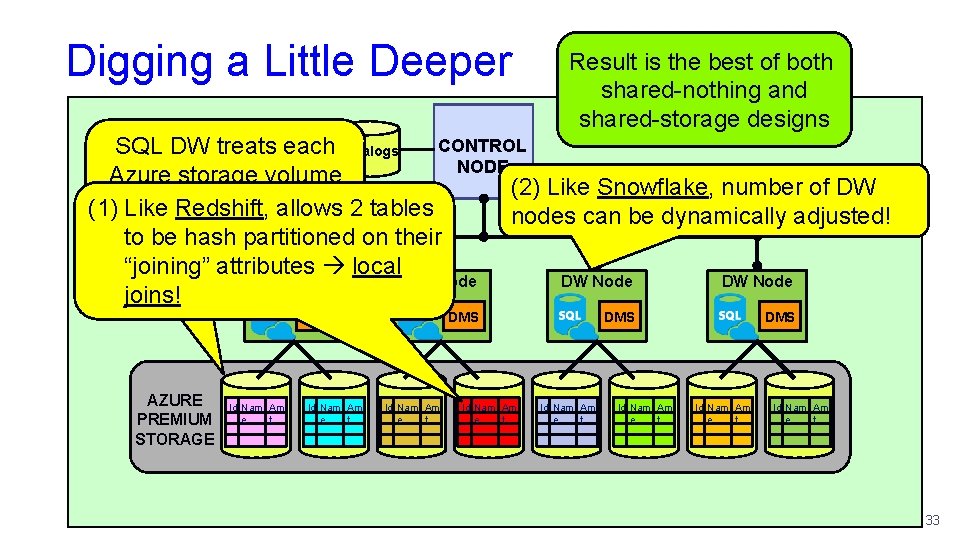

A SQL DW Instance Catalogs Customers (ID, Name, Amt. Due) Hash Partition on ID 1 or more DW Nodes CONTROL Each NODE w. one SQL Azure “standard” instance Table stored in Azure DW Node Premium Storage DW Node DMS AZURE PREMIUM STORAGE Id Nam Am e t Single Control Node (parse & optimize queries) Hash & round-robin table partitioning DW Node Also replicated tables DMS And one Data Movement Id Nam Am e t Service processe t Id Nam Am e t DMS Id Nam Am e t 32

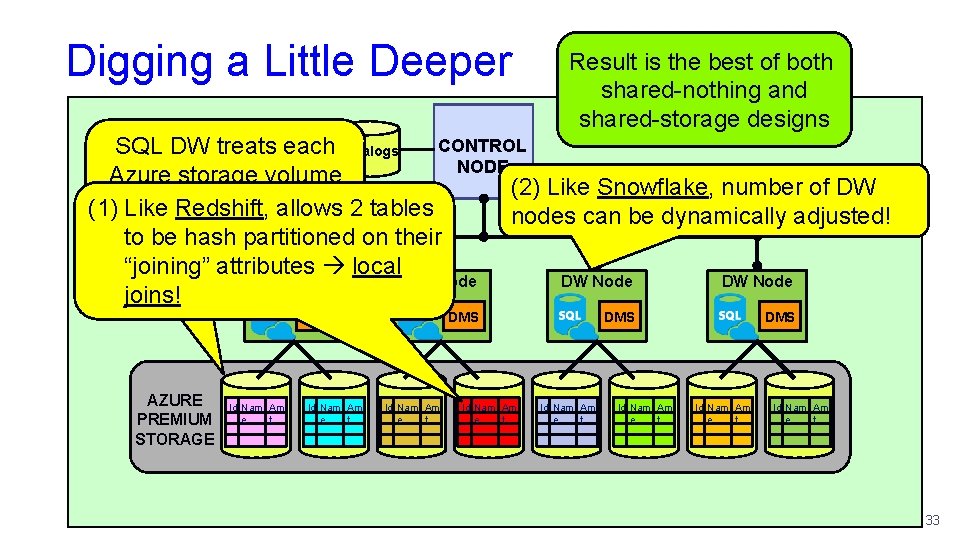

Digging a Little Deeper Result is the best of both shared-nothing and shared-storage designs CONTROL SQL DW treats each Catalogs NODE Azure storage volume (2) Like Snowflake, number of DW a SQL database it 2 tables (1)as Like Redshift, allows nodes can be dynamically adjusted! can to beattach/detach hash partitioned on their “joining” attributes local DW Node joins! DMS AZURE PREMIUM STORAGE Id Nam Am e t DMS Id Nam Am e t 33

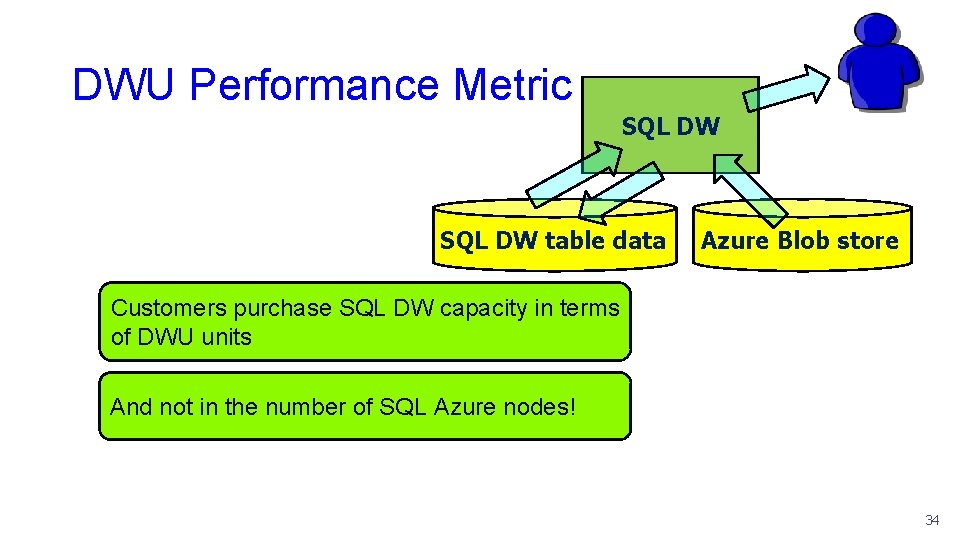

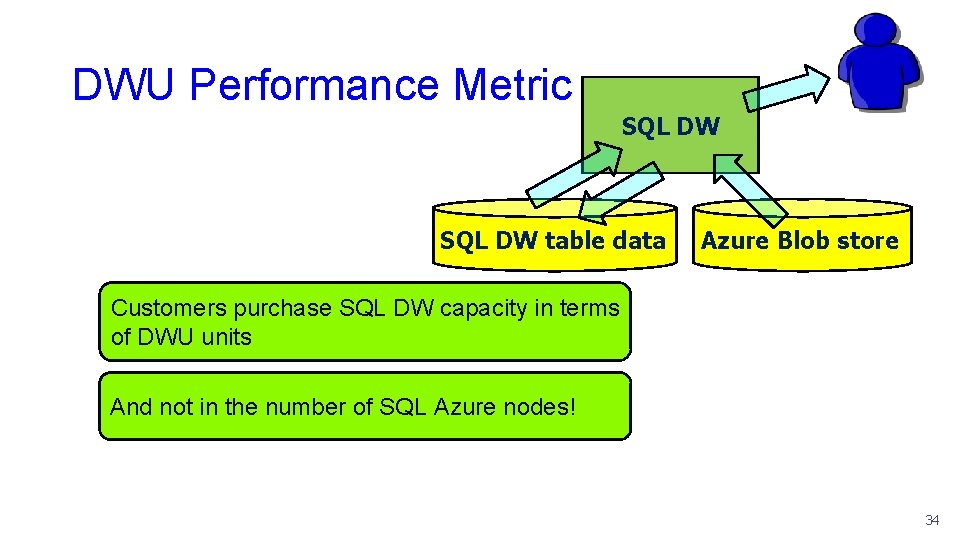

DWU Performance Metric SQL DW § SQL DW uses DWU (data warehouse unit) as its performance capacity metric SQL DW table data Azure Blob store § Based on three workloads 1. Customers Scan/Aggregation: query and CPU resources purchase SQL DW stressing capacity in I/O terms DWU ingest units speed stressing network and CPU resources 2. of Load: 3. Create Table As Select (CTAS): copy and redistribute data And not in the SQL Azure nodes! stressing I/O, number CPU, ofand network resources 34

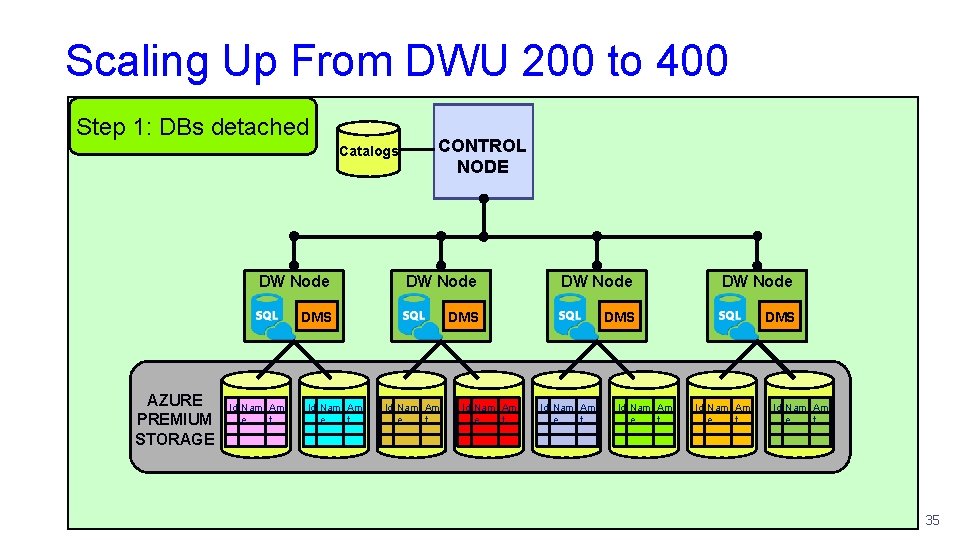

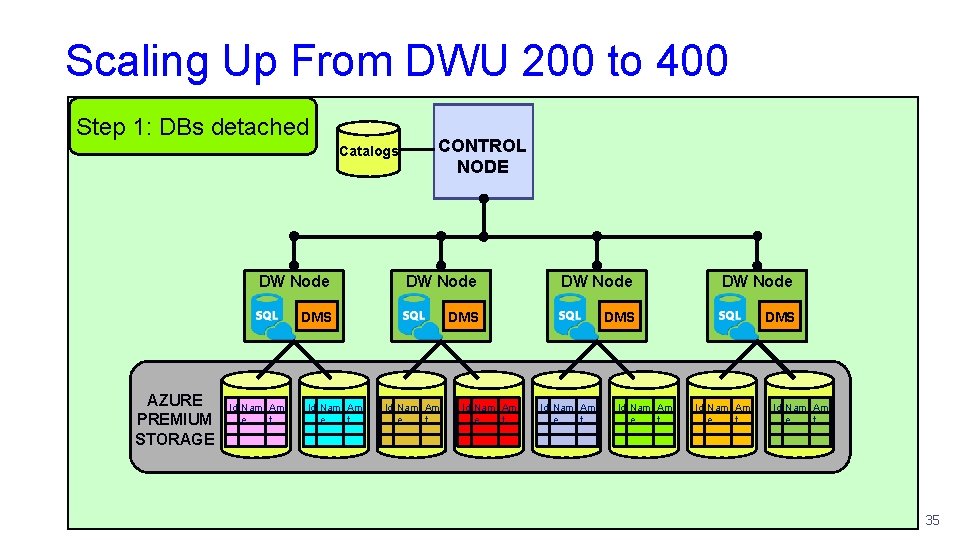

Scaling Up From DWU 200 to 400 Step 1: DBs detached Catalogs AZURE PREMIUM STORAGE CONTROL NODE DW Node DMS DMS Id Nam Am e t Id Nam Am e t 35

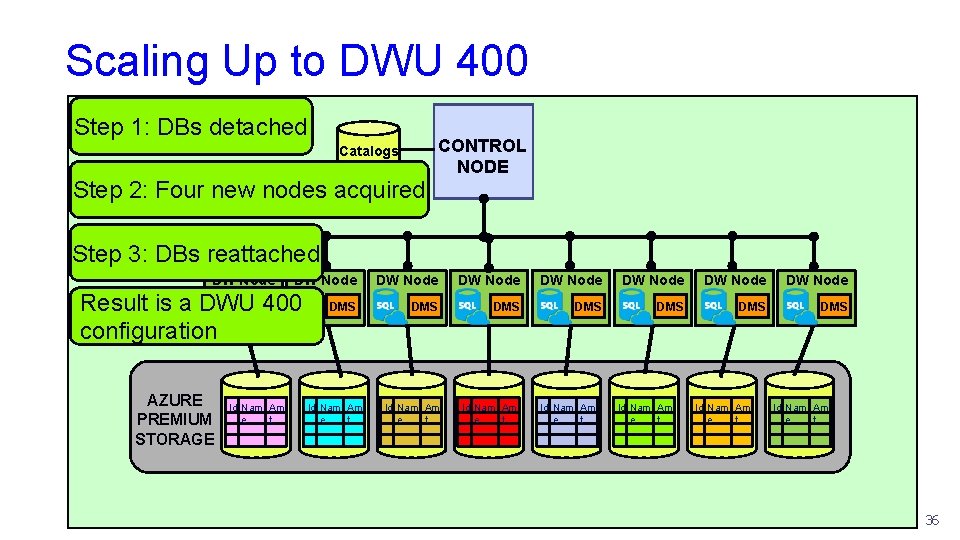

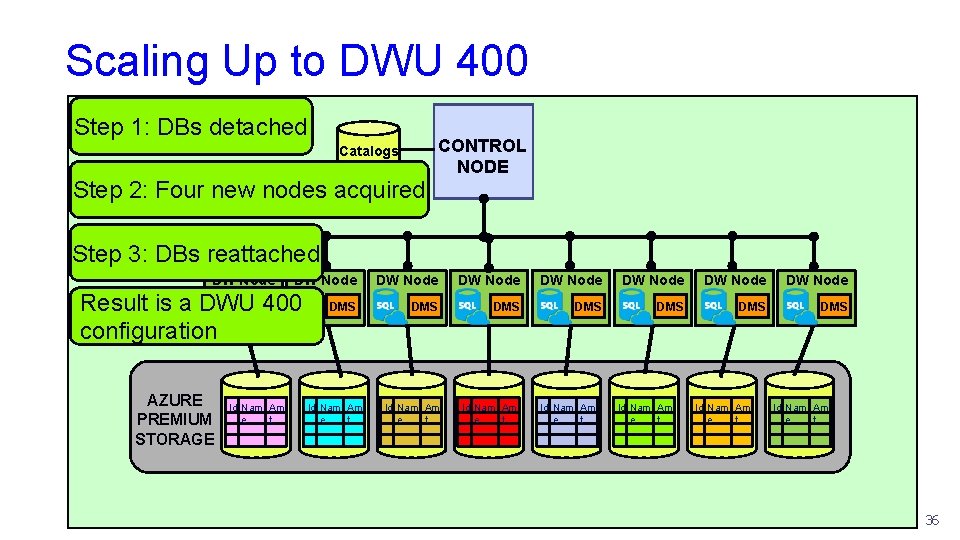

Scaling Up to DWU 400 Step 1: DBs detached Catalogs Step 2: Four new nodes acquired CONTROL NODE Step 3: DBs reattached DW Node Result is a DWUDMS 400 configuration AZURE PREMIUM STORAGE Id Nam Am e t DMS Id Nam Am e t DW Node DW Node DMS DMS DMS Id Nam Am e t Id Nam Am e t 36

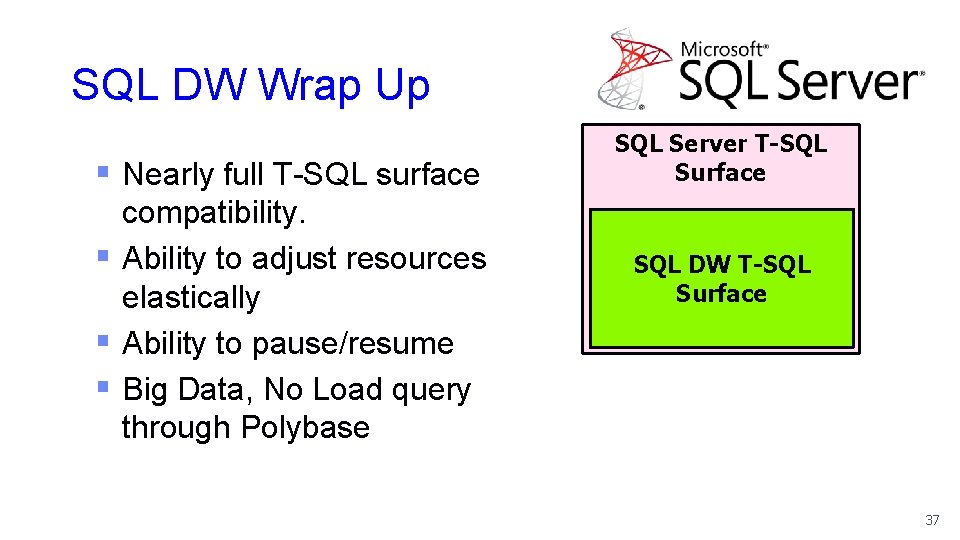

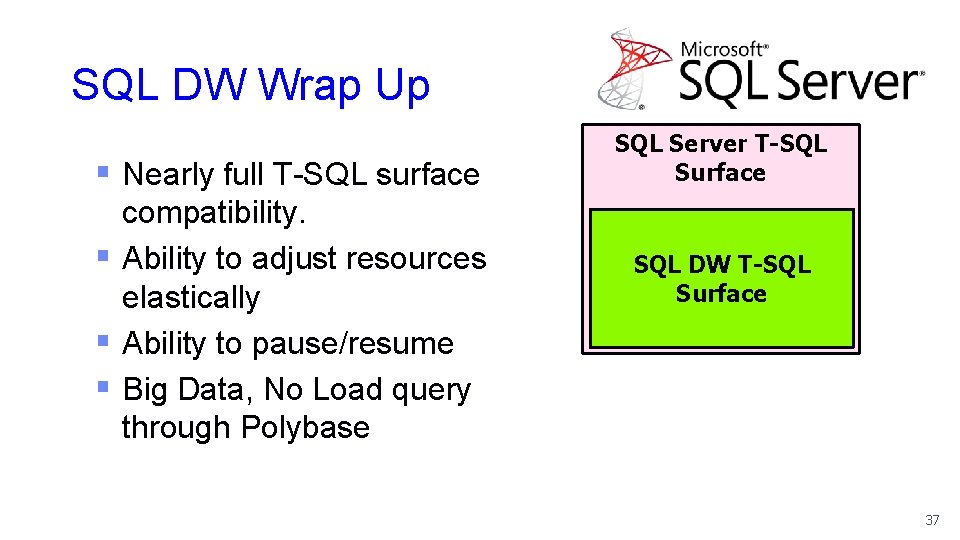

SQL DW Wrap Up § Nearly full T-SQL surface compatibility. § Ability to adjust resources elastically § Ability to pause/resume § Big Data, No Load query through Polybase SQL Server T-SQL Surface SQL DW T-SQL Surface 37

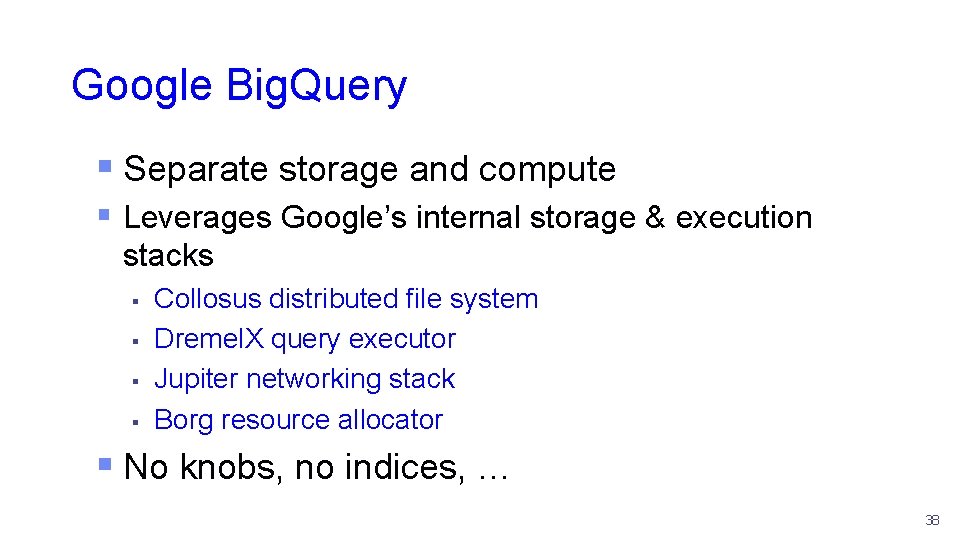

Google Big. Query § Separate storage and compute § Leverages Google’s internal storage & execution stacks § § Collosus distributed file system Dremel. X query executor Jupiter networking stack Borg resource allocator § No knobs, no indices, … 38

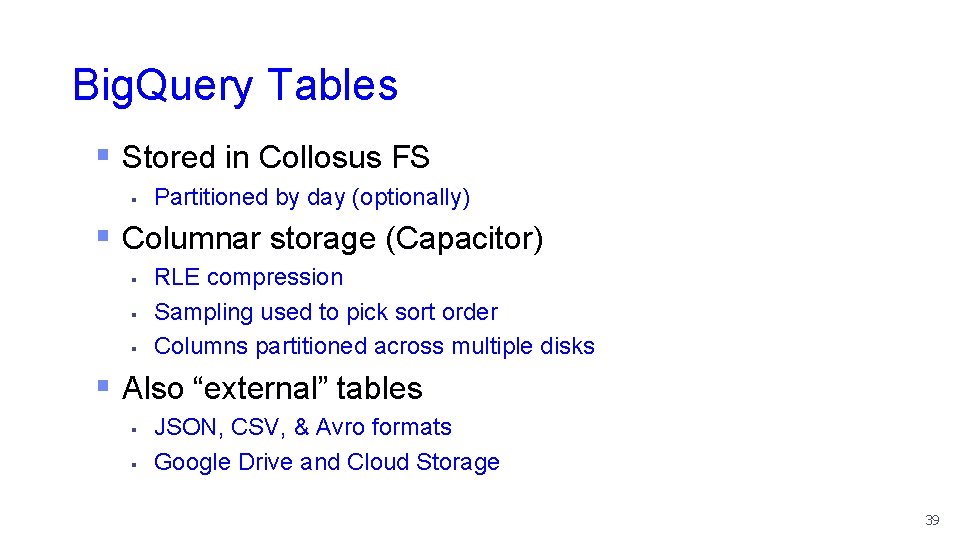

Big. Query Tables § Stored in Collosus FS § Partitioned by day (optionally) § Columnar storage (Capacitor) § § § RLE compression Sampling used to pick sort order Columns partitioned across multiple disks § Also “external” tables § § JSON, CSV, & Avro formats Google Drive and Cloud Storage 39

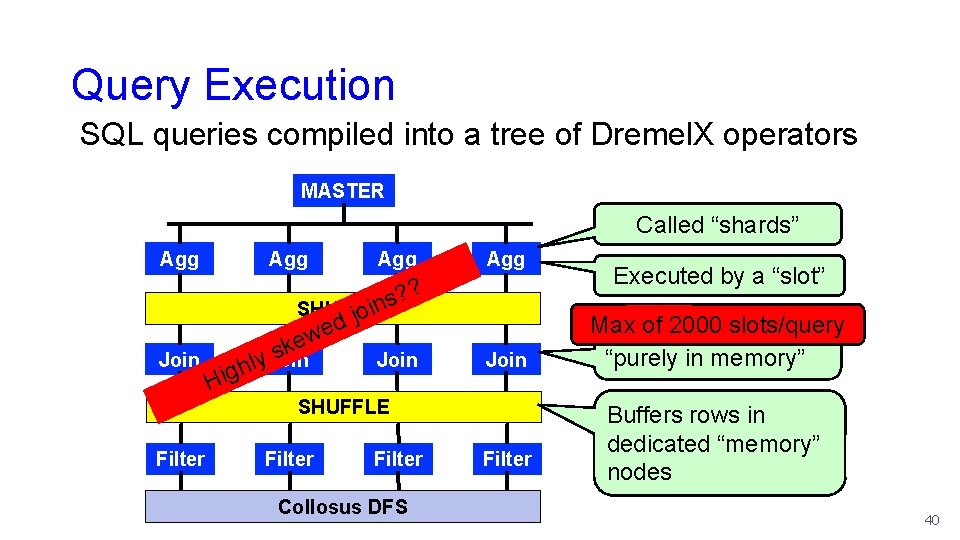

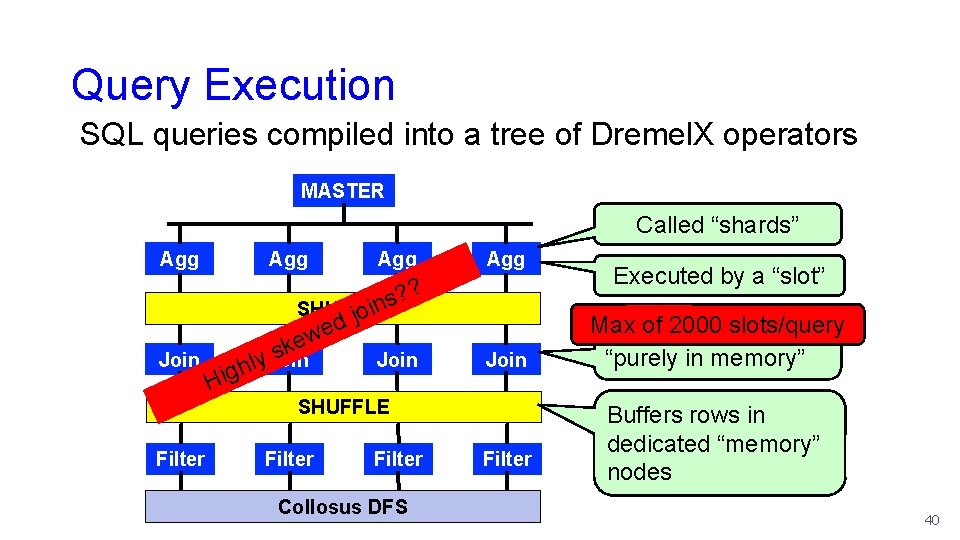

Query Execution SQL queries compiled into a tree of Dremel. X operators MASTER Called “shards” Agg Agg s? ? SHUFFLE join Join d e w e k s ly. Join h g i H Join SHUFFLE Filter Collosus DFS Filter Executed by a “slot” Max of 2000 slots/query All operators are “purely in memory” Buffers rows in dedicated “memory” nodes 40

CPU Resource Allocation § Compute resources not dedicated! § § Shared among other internal and external customers No apparent way to control computational resources used for a query § # of shards/slots assigned to an operator function of: § § Estimated amount of data to be processed Cluster capacity and current load 41

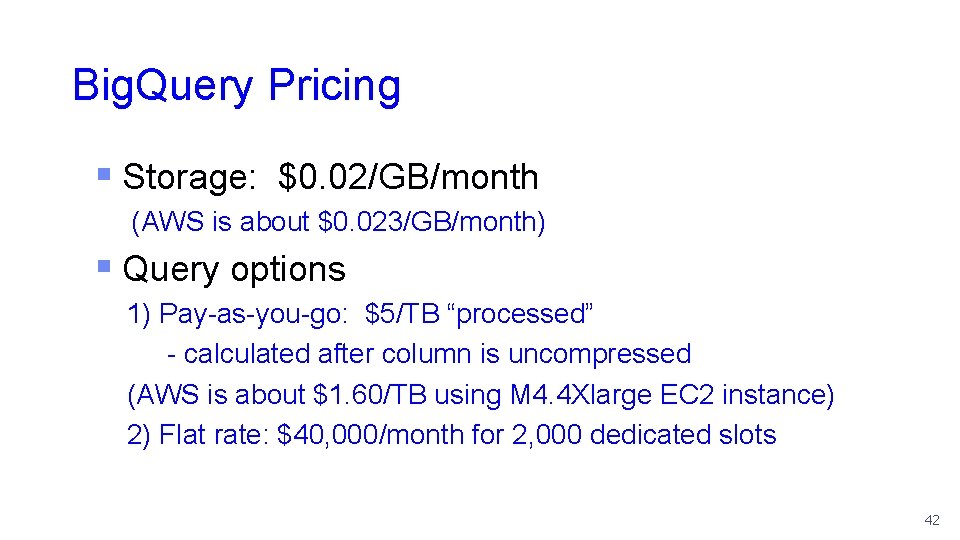

Big. Query Pricing § Storage: $0. 02/GB/month (AWS is about $0. 023/GB/month) § Query options 1) Pay-as-you-go: $5/TB “processed” - calculated after column is uncompressed (AWS is about $1. 60/TB using M 4. 4 Xlarge EC 2 instance) 2) Flat rate: $40, 000/month for 2, 000 dedicated slots 42

Handicapping the ”Ponies” § Pay for only what you use § Elasticity § Performance 43

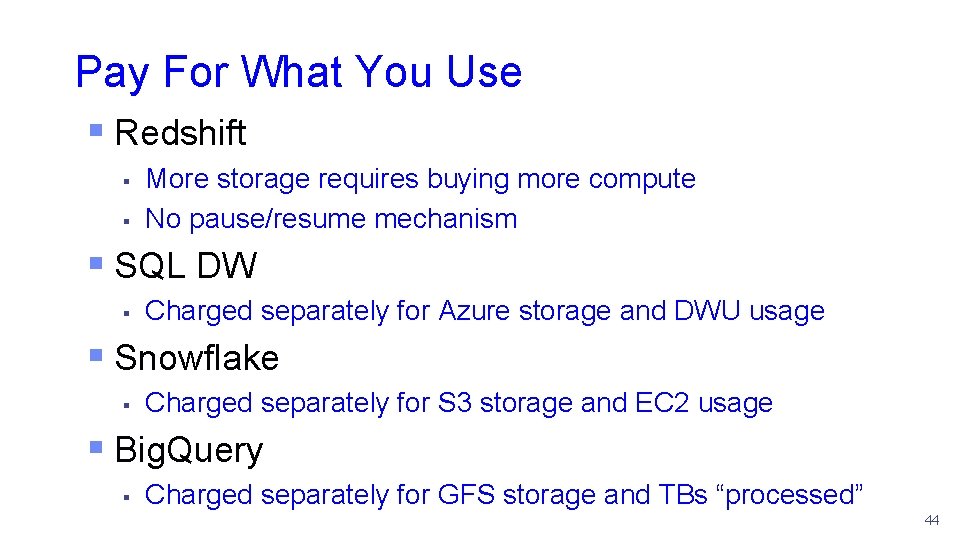

Pay For What You Use § Redshift § § More storage requires buying more compute No pause/resume mechanism § SQL DW § Charged separately for Azure storage and DWU usage § Snowflake § Charged separately for S 3 storage and EC 2 usage § Big. Query § Charged separately for GFS storage and TBs “processed” 44

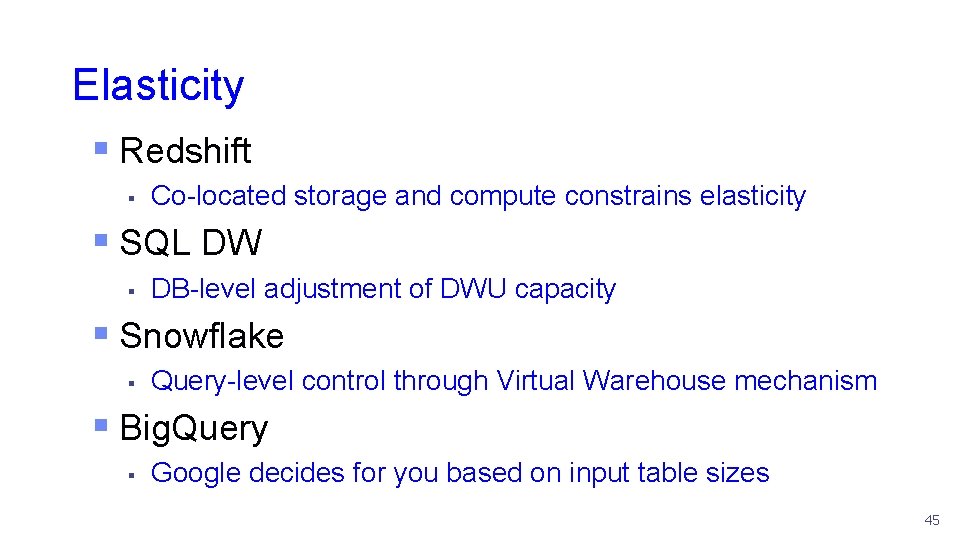

Elasticity § Redshift § Co-located storage and compute constrains elasticity § SQL DW § DB-level adjustment of DWU capacity § Snowflake § Query-level control through Virtual Warehouse mechanism § Big. Query § Google decides for you based on input table sizes 45

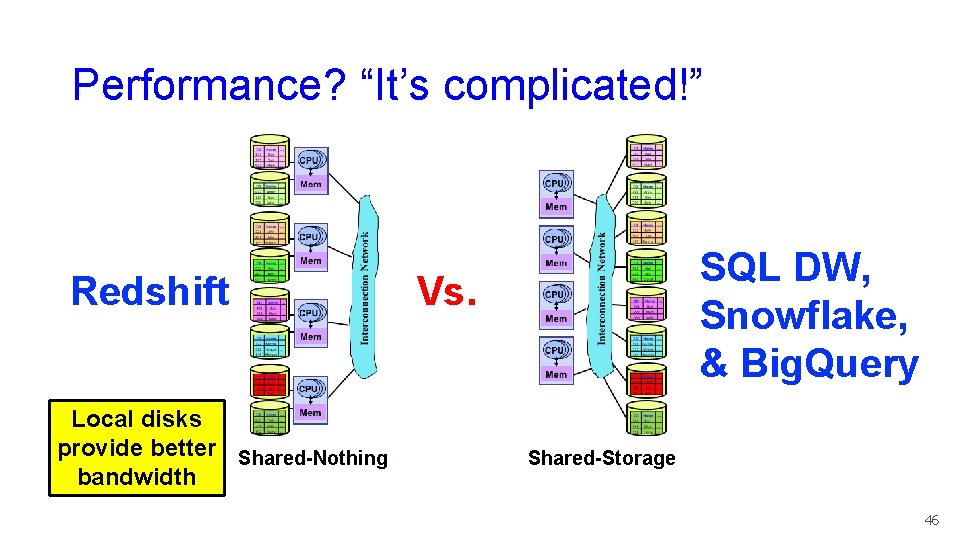

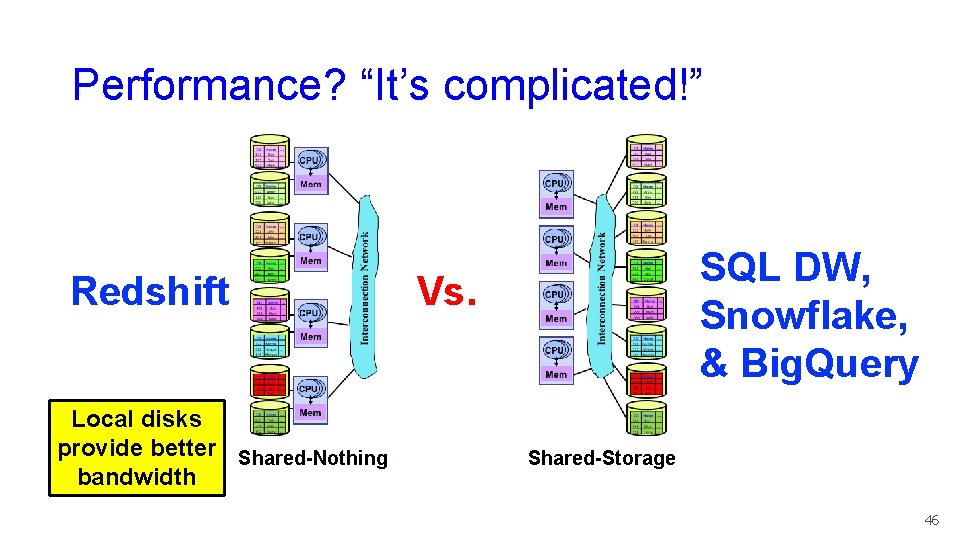

Performance? “It’s complicated!” Redshift Local disks provide better Shared-Nothing bandwidth SQL DW, Snowflake, & Big. Query Vs. Shared-Storage 46

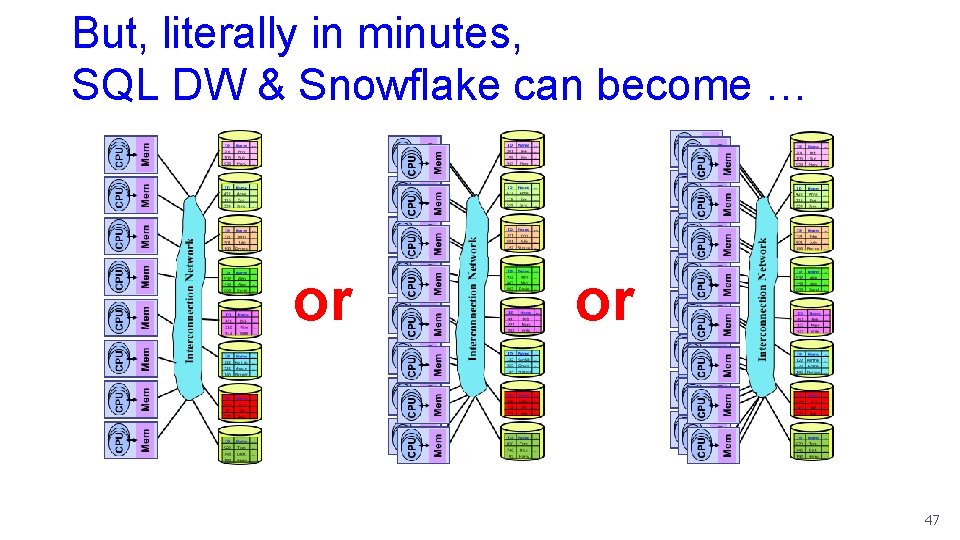

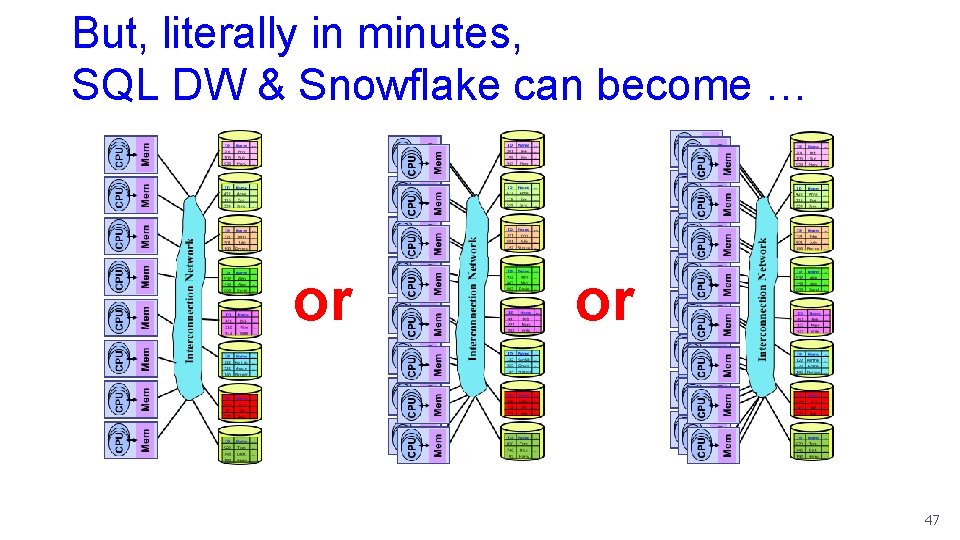

But, literally in minutes, SQL DW & Snowflake can become … or or 47

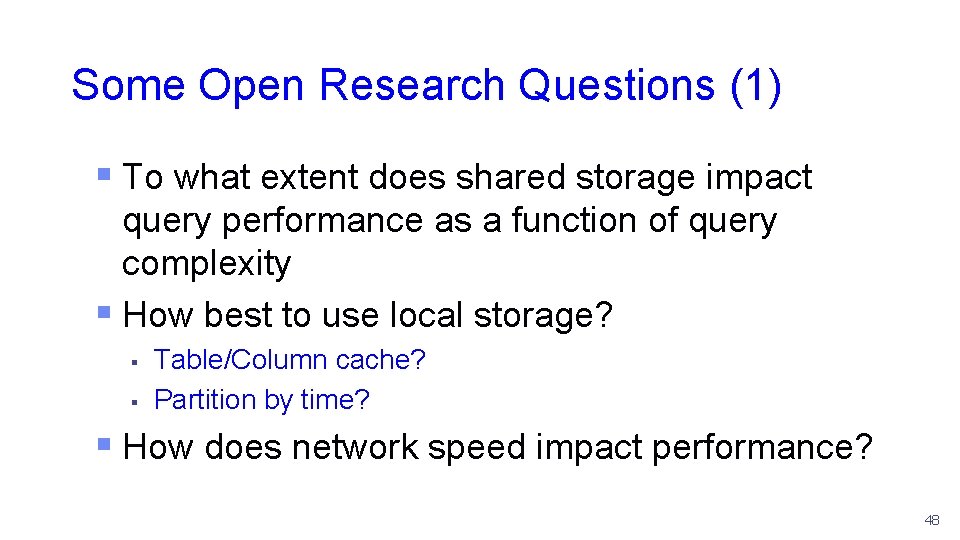

Some Open Research Questions (1) § To what extent does shared storage impact query performance as a function of query complexity § How best to use local storage? § § Table/Column cache? Partition by time? § How does network speed impact performance? 48

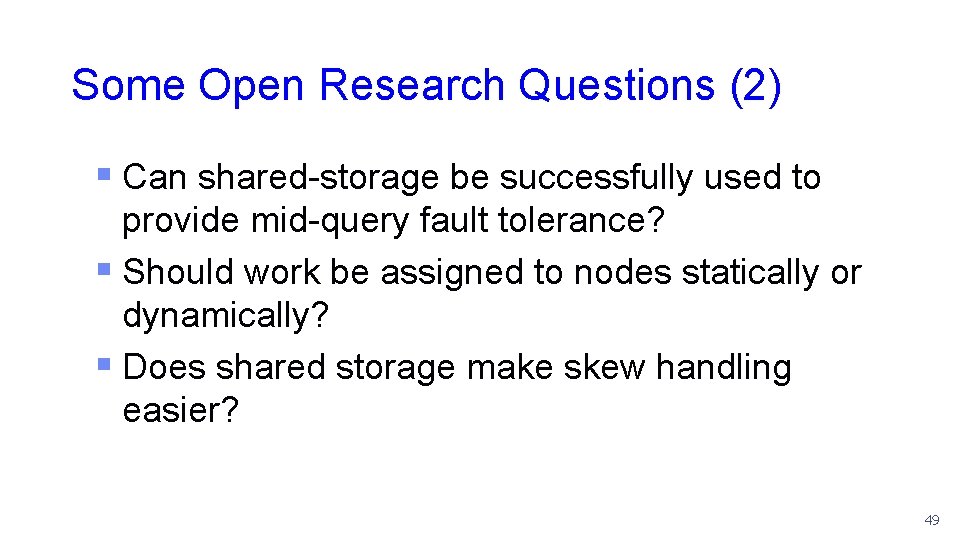

Some Open Research Questions (2) § Can shared-storage be successfully used to provide mid-query fault tolerance? § Should work be assigned to nodes statically or dynamically? § Does shared storage make skew handling easier? 49

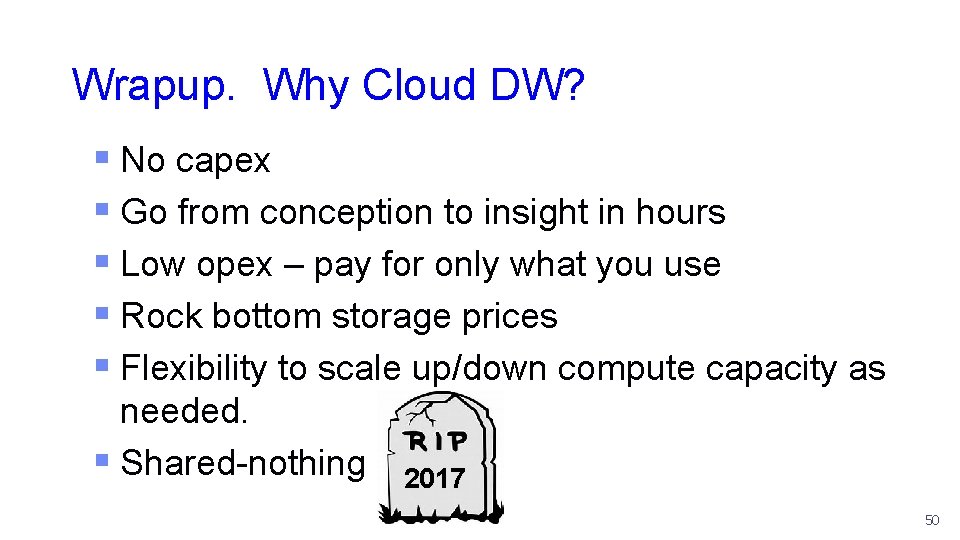

Wrapup. Why Cloud DW? § No capex § Go from conception to insight in hours § Low opex – pay for only what you use § Rock bottom storage prices § Flexibility to scale up/down compute capacity as needed. § Shared-nothing 2017 50

Unused 51

What about … § Spark, Impala, Hive, Presto, … § Not exclusively SAAS offerings § All use shared storage (HDFS) 52

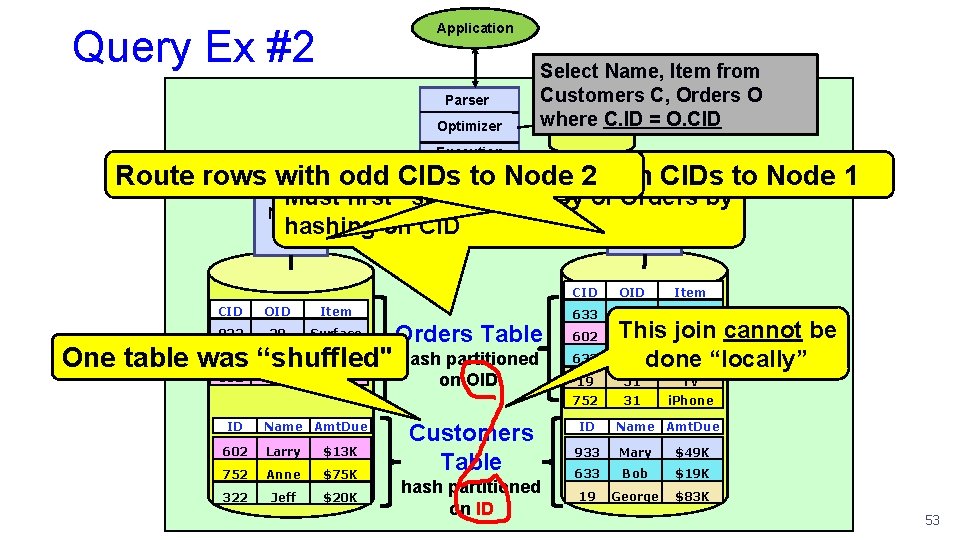

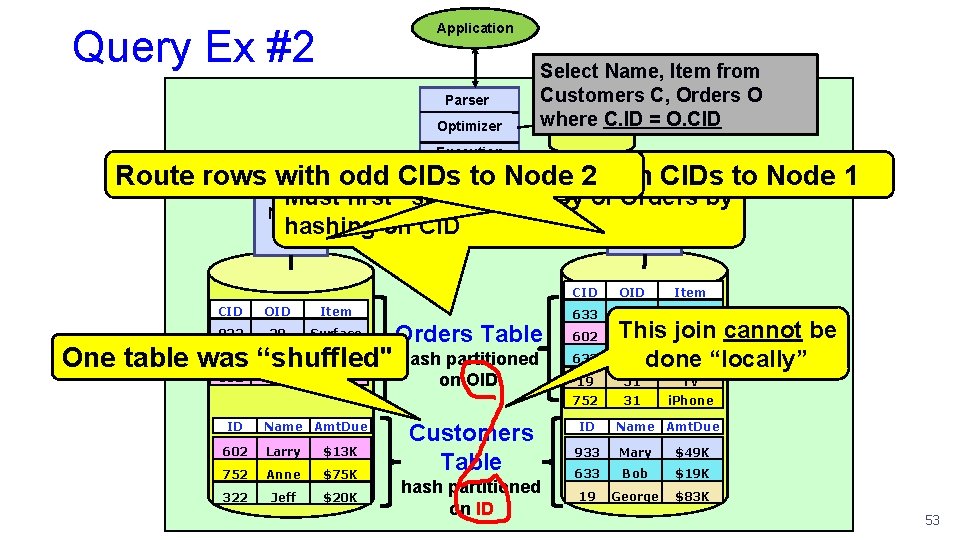

Application Query Ex #2 Parser Optimizer Select Name, Item from Customers C, Orders O where C. ID = O. CID Catalogs Execution Coordinator Route rows with odd CIDsrows to Node Route with 2 even CIDs to Node 1 Must first ”shuffle” a copy of Orders by NODE on CID 2 NODE hashing 1 CID OID Item 933 20 Surface Orders Table 602 10 Tivo hash partitioned One table was “shuffled" 602 ID 10 Xbox Name Amt. Due 602 Larry $13 K 752 Anne $75 K 322 Jeff $20 K on OID CID OID Item 633 21 TV 19 51 TV 752 31 i. Phone 602 633 ID This join cannot be 11 i. Pod 21 done DVD “locally” Name Amt. Due Customers Table 933 Mary $49 K hash partitioned on ID 633 Bob $19 K 19 George $83 K 53

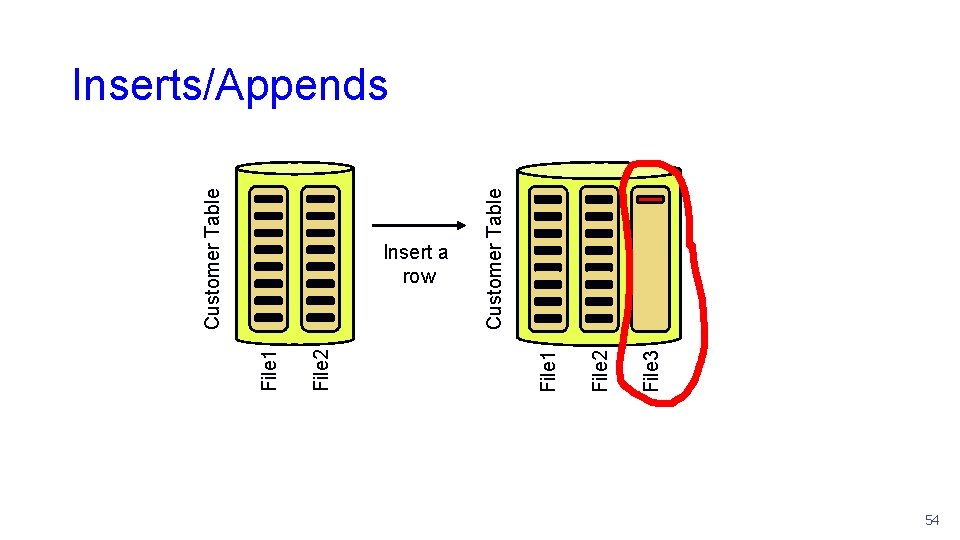

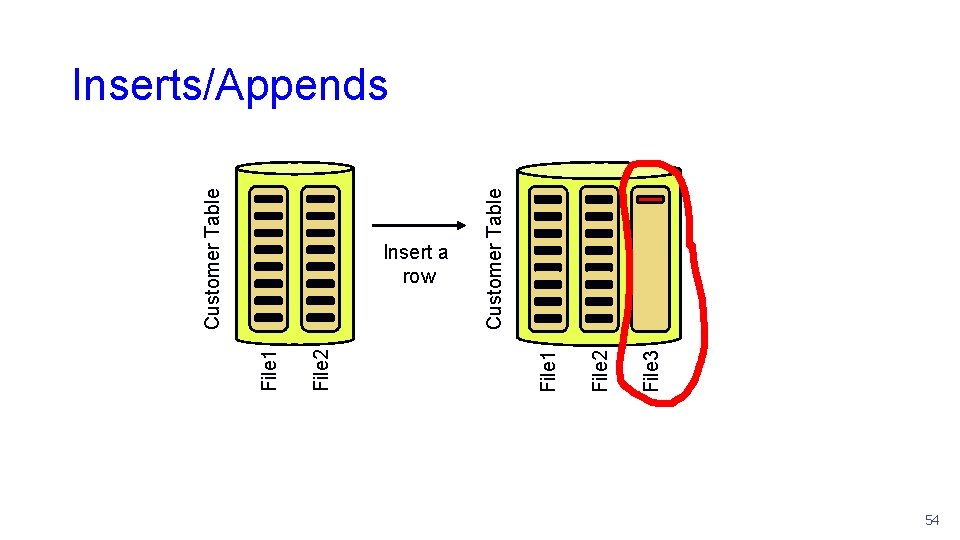

File 3 File 2 Customer Table Insert a row File 1 File 2 File 1 Customer Table Inserts/Appends 54

Delete File 2 Assume: Deletes/Updates • Customer table is 100 GB => 10, 000 S 3 files @ 10 MB/file • Delete/update query updates 1 or more rows in each file Customer Table Delete/Update vs. Select perf tradeoff #4 Copy File 2 to File 3, dropping deleted row(s) ow r e File 3 File 2 row Delete File 2 File 3 Copy File 2 to File 3, modify qualifying rows(s) File 2 ea File 1 File 2 File 1 Up dat File 1 t le e D 55

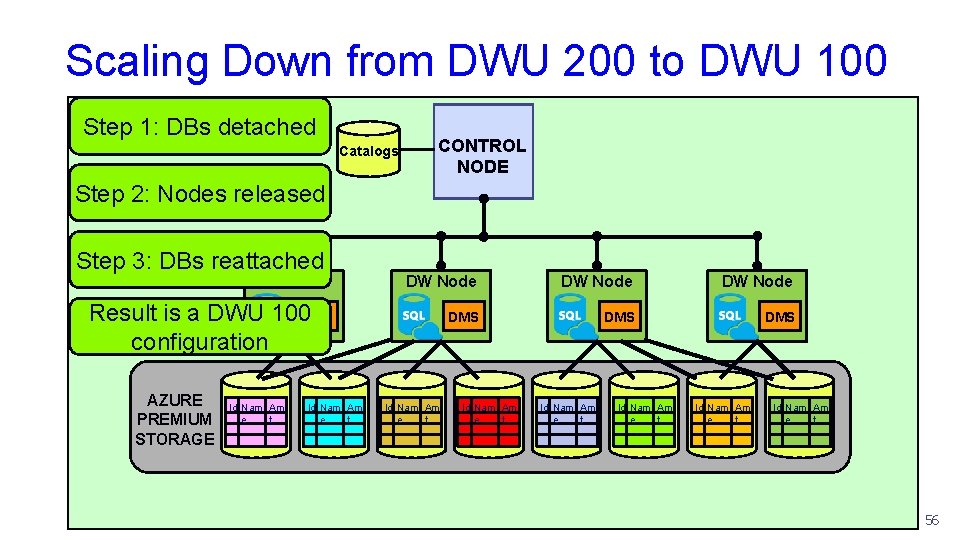

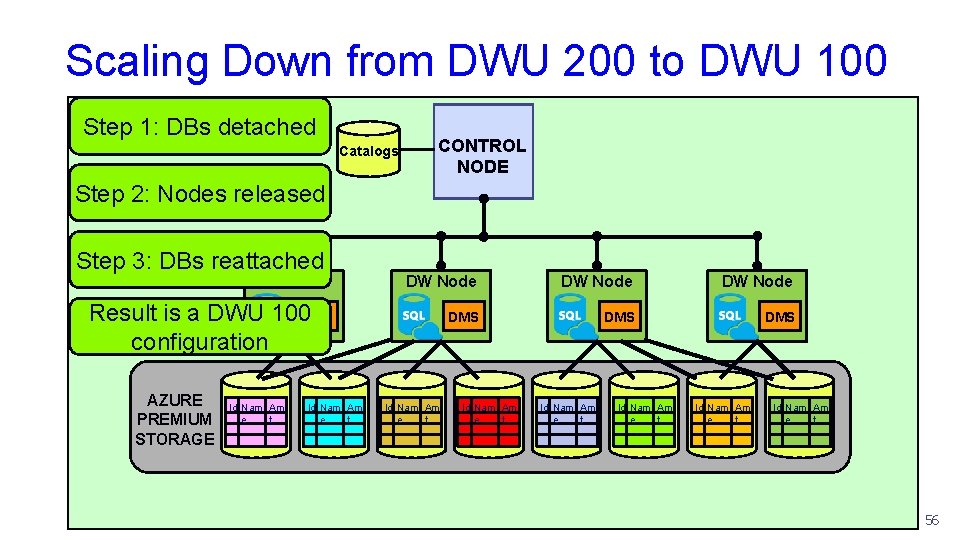

Scaling Down from DWU 200 to DWU 100 Step 1: DBs detached Catalogs CONTROL NODE Step 2: Nodes released Step 3: DBs reattached DW Node DMS DMS Result is a DWU 100 DMS configuration AZURE PREMIUM STORAGE Id Nam Am e t Id Nam Am e t 56