Data Systems Quality Implementing the Data Quality Assessment

- Slides: 38

Data Systems Quality Implementing the Data Quality Assessment Tool

Session Overview

- Why data quality matters
- Dimensions of data quality
- Thoughts about improving data quality
- Data Quality Assurance Tool
- Activity: Implementing the Tool

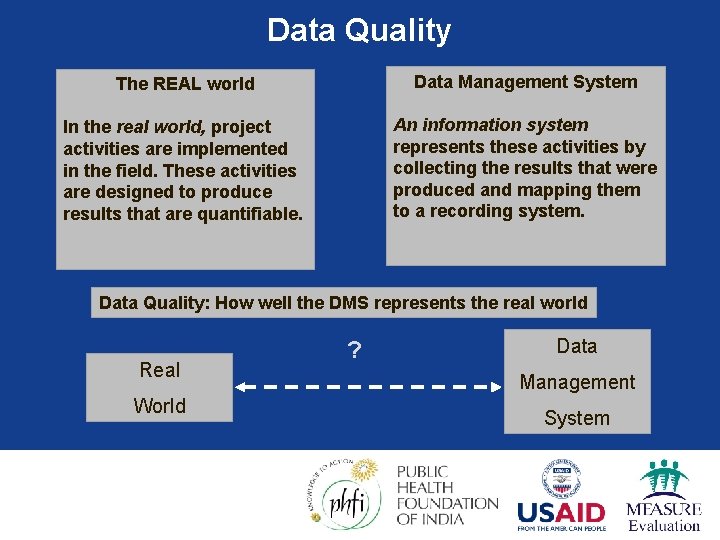

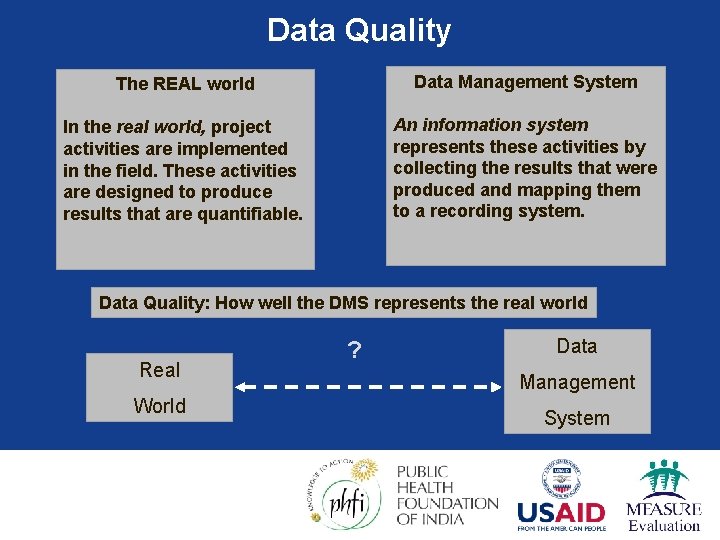

Data Quality

[Diagram: Real World ? Data Management System]

In the real world, project activities are implemented in the field. These activities are designed to produce results that are quantifiable. An information system represents these activities by collecting the results that were produced and mapping them to a recording system.

Data quality: how well the data management system (DMS) represents the real world.

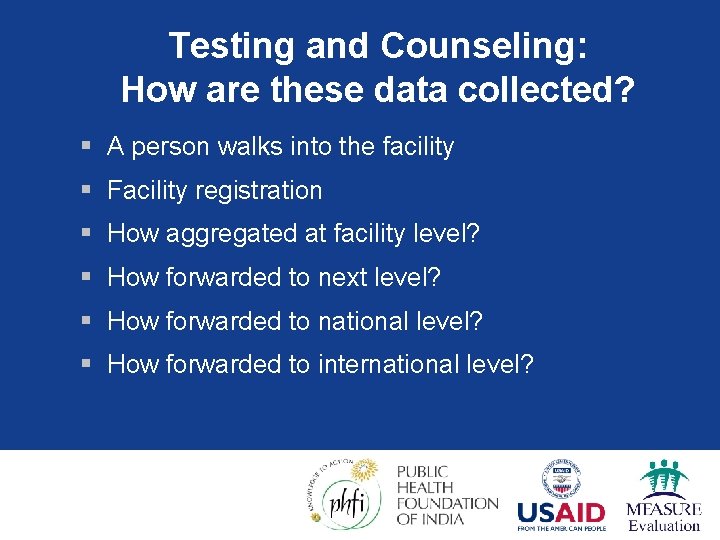

Testing and Counseling: How are these data collected?

- A person walks into the facility
- Facility registration
- How aggregated at facility level?
- How forwarded to next level?
- How forwarded to national level?
- How forwarded to international level?

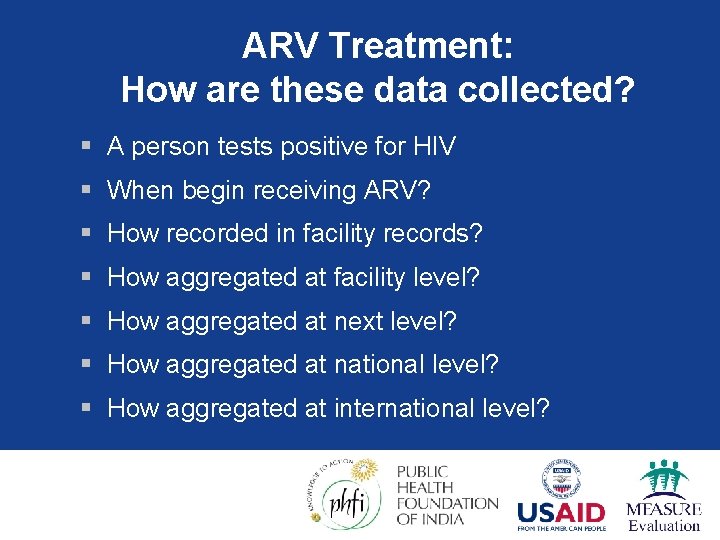

ARV Treatment: How are these data collected?

- A person tests positive for HIV
- When begin receiving ARV?
- How recorded in facility records?
- How aggregated at facility level?
- How aggregated at next level?
- How aggregated at national level?
- How aggregated at international level?

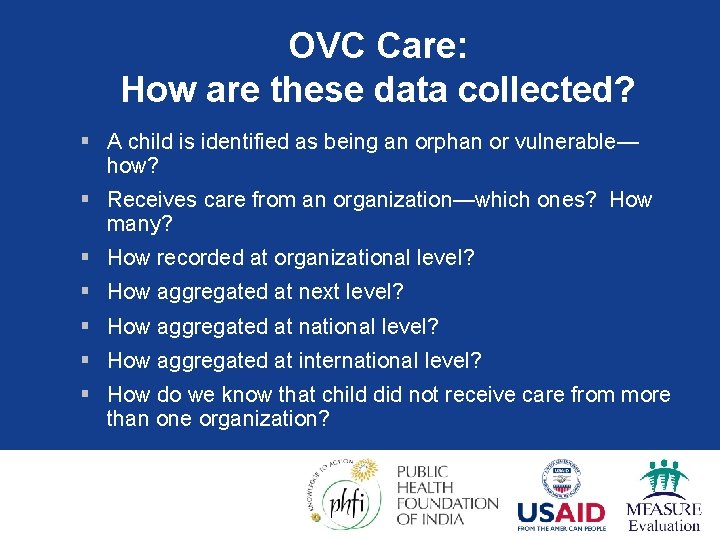

OVC Care: How are these data collected?

- A child is identified as being an orphan or vulnerable (how?)
- Receives care from an organization (which ones? how many?)
- How recorded at organizational level?
- How aggregated at next level?
- How aggregated at national level?
- How aggregated at international level?
- How do we know that the child did not receive care from more than one organization?
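
The double-counting question on this slide can be checked mechanically when every organization records the same unique identifier for each child. The sketch below assumes such a shared identifier exists; the organization names and child IDs are hypothetical. Without a shared identifier, records would have to be matched on other attributes.

```python
from collections import defaultdict


def find_double_counted(records_by_org):
    """Map each child ID seen at more than one organization to those organizations.

    records_by_org: dict of organization name -> iterable of child IDs.
    Assumption: all organizations use the same child ID scheme.
    """
    orgs_by_child = defaultdict(set)
    for org, child_ids in records_by_org.items():
        for child_id in child_ids:
            orgs_by_child[child_id].add(org)
    return {cid: sorted(orgs) for cid, orgs in orgs_by_child.items() if len(orgs) > 1}


# Hypothetical registers from two organizations.
records = {
    "Org A": ["C01", "C02", "C03"],
    "Org B": ["C03", "C04"],
}

# Summing per-organization totals counts child C03 twice.
naive_total = sum(len(ids) for ids in records.values())
# Counting distinct IDs gives the number of children actually served.
unique_total = len({cid for ids in records.values() for cid in ids})

print(naive_total, unique_total, find_double_counted(records))
```

The gap between the naive total and the unique total is the amount of double counting that would be carried into aggregated reports.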

Why is data quality important?

- Governments and donors collaborating on “Three Ones”
- Accountability for funding and results reported increasingly important
- Quality data needed at program level for management decisions

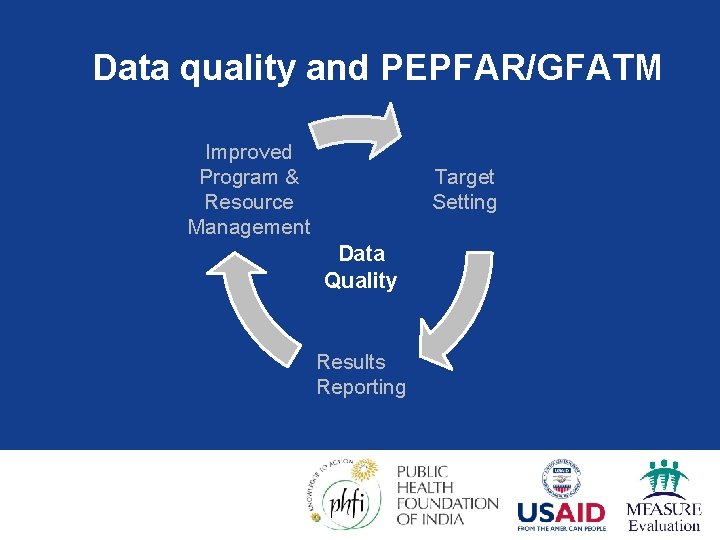

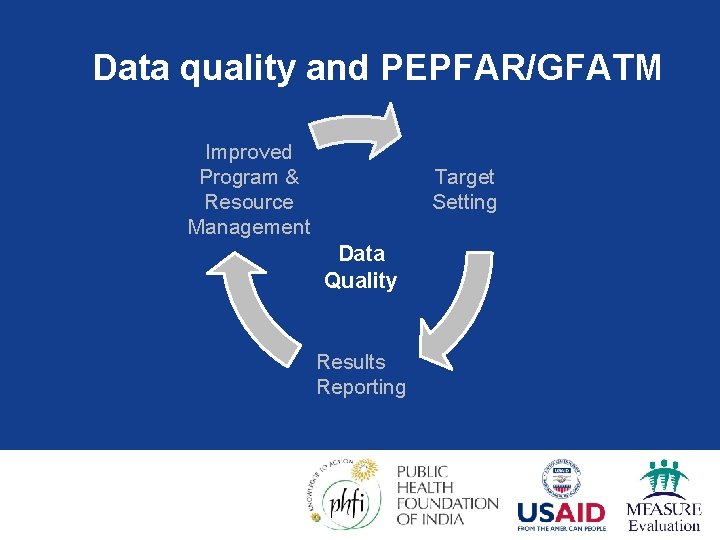

Data quality and PEPFAR/GFATM

[Diagram labels: Improved Program & Resource Management; Target Setting; Data Quality; Results Reporting]

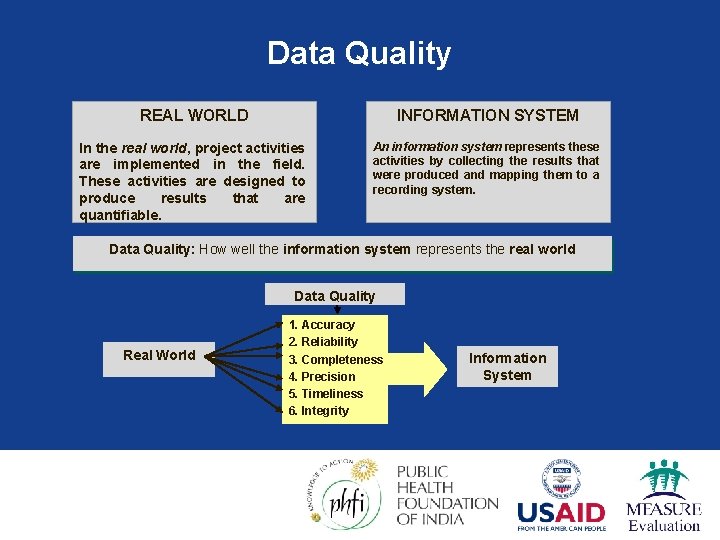

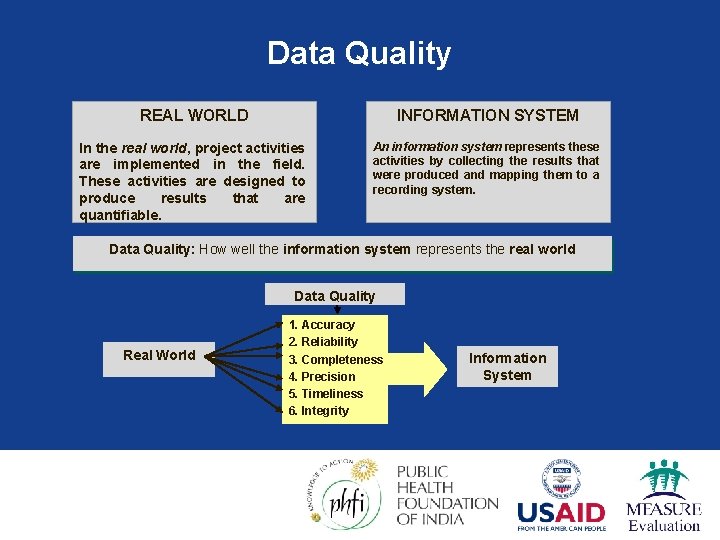

Data Quality

[Diagram: Real World, Data Quality, Information System]

In the real world, project activities are implemented in the field. These activities are designed to produce results that are quantifiable. An information system represents these activities by collecting the results that were produced and mapping them to a recording system.

Data quality: how well the information system represents the real world.

1. Accuracy
2. Reliability
3. Completeness
4. Precision
5. Timeliness
6. Integrity

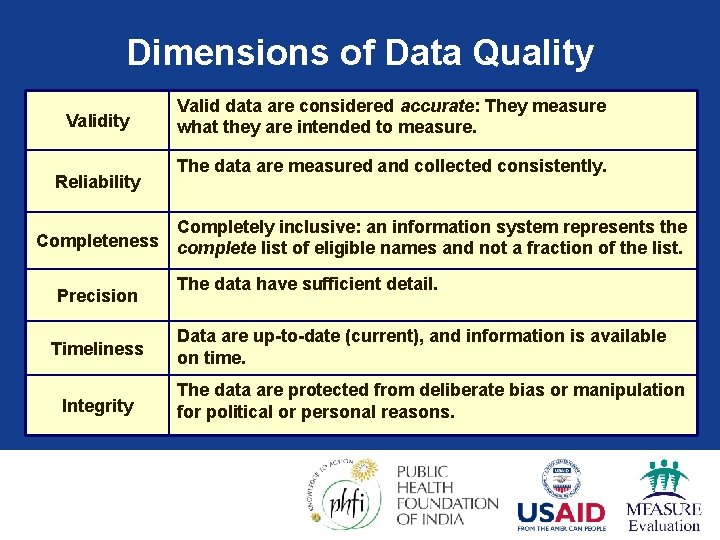

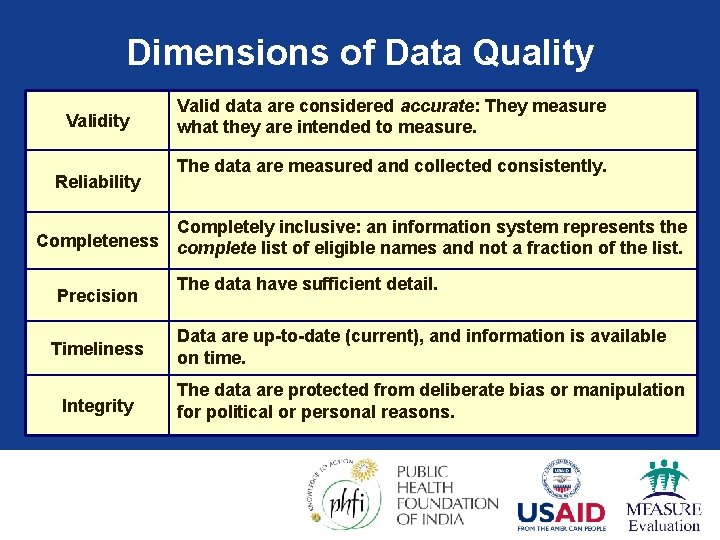

Dimensions of Data Quality

- Validity: Valid data are considered accurate: they measure what they are intended to measure.
- Reliability: The data are measured and collected consistently.
- Completeness: Completely inclusive: an information system represents the complete list of eligible names and not a fraction of the list.
- Precision: The data have sufficient detail.
- Timeliness: Data are up-to-date (current), and information is available on time.
- Integrity: The data are protected from deliberate bias or manipulation for political or personal reasons.

Validity/Accuracy: Questions to ask…

- What is the relationship between the activity/program and what you are measuring?
- What is the data transcription process?
- Is there potential for error?
- Are steps being taken to limit transcription error?
  - Double keying of data for large surveys, built-in validation checks, random checks
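
Double keying means two people enter the same forms independently and the two entries are compared, so that any disagreement flags a likely transcription error. A minimal sketch follows, assuming each keyed record can be looked up by a record ID; the IDs and values are hypothetical.

```python
def double_key_mismatches(first_pass, second_pass):
    """Return {record_id: (first_value, second_value)} for records that disagree.

    A record present in only one pass is reported with None for the other pass.
    """
    mismatches = {}
    for record_id in sorted(set(first_pass) | set(second_pass)):
        first_value = first_pass.get(record_id)
        second_value = second_pass.get(record_id)
        if first_value != second_value:
            mismatches[record_id] = (first_value, second_value)
    return mismatches


# Hypothetical entries keyed twice from the same paper forms.
first = {"R001": 12, "R002": 7, "R003": 30}
second = {"R001": 12, "R002": 9, "R003": 30}

print(double_key_mismatches(first, second))
```

Each flagged record is then resolved by going back to the paper form rather than by trusting either entry.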

Reliability: Questions to ask…

- Is the same instrument used from year to year, site to site?
- Is the same data collection process used from year to year, site to site?
- Are procedures in place to ensure that data are free of significant error and that bias is not introduced (e.g., instructions, indicator reference sheets, training, etc.)?

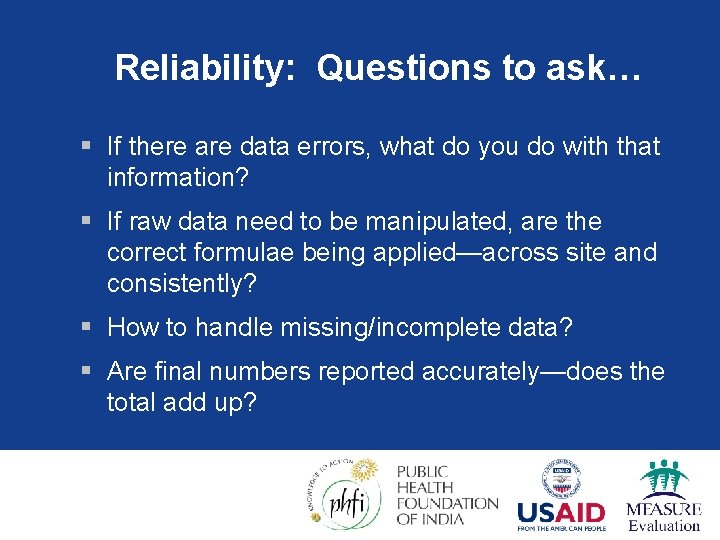

Reliability: Questions to ask…

- If there are data errors, what do you do with that information?
- If raw data need to be manipulated, are the correct formulae being applied consistently across sites?
- How to handle missing/incomplete data?
- Are final numbers reported accurately (does the total add up)?

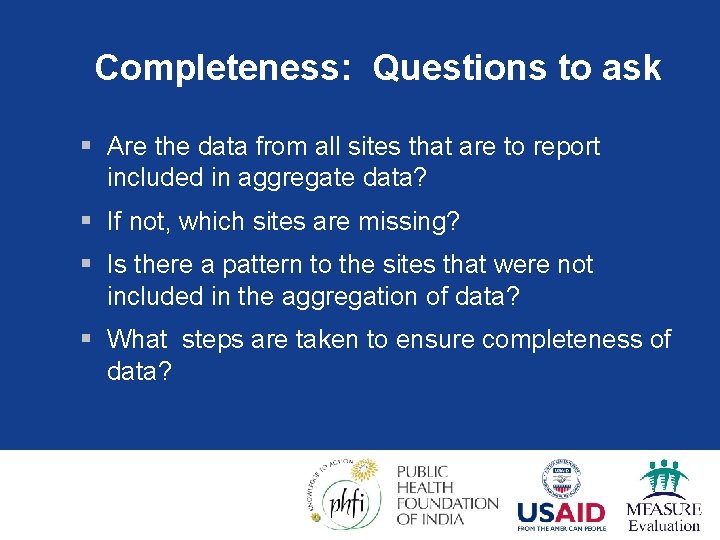

Completeness: Questions to ask

- Are the data from all sites that are to report included in aggregate data?
- If not, which sites are missing?
- Is there a pattern to the sites that were not included in the aggregation of data?
- What steps are taken to ensure completeness of data?
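
The first two questions reduce to comparing the list of sites expected to report with the list of sites whose data were actually aggregated. The sketch below assumes both lists are available; the site names are hypothetical.

```python
def missing_sites(expected, received):
    """Sites that were expected to report but are absent from the aggregate."""
    return sorted(set(expected) - set(received))


def reporting_rate(expected, received):
    """Share of expected sites that are included in the aggregate (0.0 to 1.0)."""
    expected_set = set(expected)
    if not expected_set:
        raise ValueError("expected must contain at least one site")
    return len(expected_set & set(received)) / len(expected_set)


# Hypothetical site lists for one reporting period.
expected_sites = ["Site A", "Site B", "Site C", "Site D"]
received_sites = ["Site A", "Site C", "Site D"]

print(missing_sites(expected_sites, received_sites))
print(reporting_rate(expected_sites, received_sites))
```

Keeping the list of missing sites from period to period makes it possible to look for the pattern the third question asks about, for example the same remote sites dropping out repeatedly.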

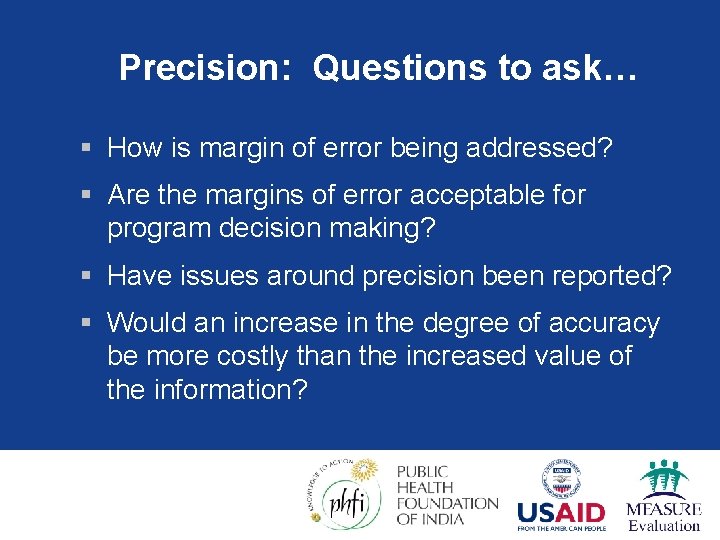

Precision: Questions to ask…

- How is margin of error being addressed?
- Are the margins of error acceptable for program decision making?
- Have issues around precision been reported?
- Would an increase in the degree of accuracy be more costly than the increased value of the information?
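
For survey-based indicators, the margin of error can be estimated before deciding whether more precision is worth its cost. The sketch below uses the standard normal-approximation formula for a proportion and assumes a simple random sample; real surveys with clustering or stratification would need a design-effect adjustment, which is not shown. The function name and example figures are illustrative only.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate margin of error for a proportion p from a sample of size n.

    z=1.96 corresponds to a 95% confidence level under the normal approximation.
    Assumption: simple random sampling.
    """
    if not 0 <= p <= 1:
        raise ValueError("p must be between 0 and 1")
    if n <= 0:
        raise ValueError("n must be positive")
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical example: 50% coverage measured in a sample of 400 or 1,600.
for sample_size in (400, 1600):
    print(sample_size, round(margin_of_error(0.5, sample_size), 4))
```

In this example quadrupling the sample size only halves the margin of error, which is the cost-versus-value trade-off raised in the last question.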

Timeliness: Questions to ask…

- Are data available on a frequent enough basis to inform program management decisions?
- Is a regularized schedule of data collection in place to meet program management needs?
- Are data from within the policy period of interest (i.e., are the data from a point in time after the intervention has begun)?
- Are the data reported as soon as possible after collection?

Integrity: Questions to ask…

- Are there risks that data are manipulated for personal or political reasons?
- What systems are in place to minimize such risks?
- Has there been an independent review?

During this workshop, think about…

- How well does your information system function?
- Are the definitions of indicators clear and understood at all levels?
- Do individuals and groups understand their roles and responsibilities?
- Does everyone understand the specific reporting timelines, and why they need to be followed?

…Keep thinking about…

- Are data collection instruments and reporting forms standardized and compatible? Do they have clear instructions?
- Do you have documented data review procedures for all levels…and use them?
- Are you aware of potential data quality challenges, such as missing data, double counting, and loss to follow-up? How do you address them?
- What are your policies and procedures for storing and filing data collection instruments?

Data Quality Assessment Tool For Assessment & Capacity Building

Purpose of the DQA

The Data-Quality Assessment (DQA) Protocol is designed:

1. to verify that appropriate data management systems are in place in countries;
2. to verify the quality of reported data for key indicators at selected sites; and
3. to contribute to M&E systems strengthening and capacity building.

DQA Components

- Determine scope of the data quality assessment
  - Suggested criteria for selecting Program/project(s) & indicators
- Engage Program/project(s), obtain authorization for DQA
  - Templates for notifying the Program/project of the assessment
  - Guidelines for obtaining authorization to conduct the assessment
- Assess the design & implementation of the Program/project’s data collection and reporting systems
  - Steps & protocols to identify potential threats to data quality created by the Program/project’s data management & reporting system

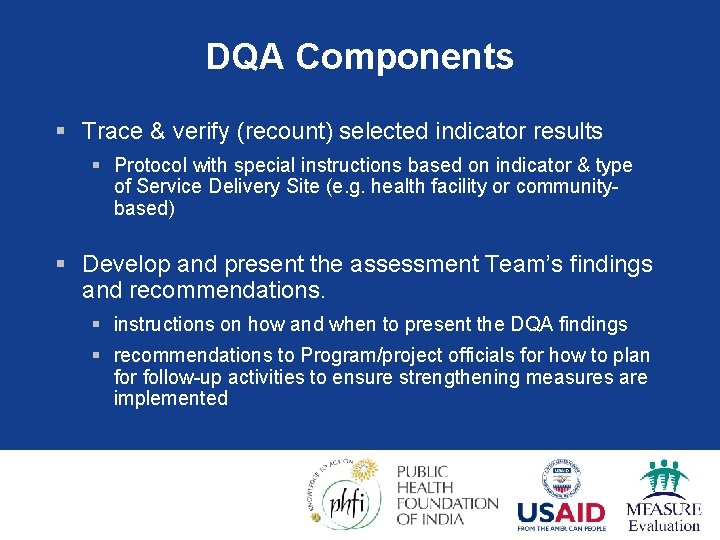

DQA Components

- Trace & verify (recount) selected indicator results
  - Protocol with special instructions based on indicator & type of Service Delivery Site (e.g., health facility or community-based)
- Develop and present the assessment Team’s findings and recommendations
  - Instructions on how and when to present the DQA findings
  - Recommendations to Program/project officials for how to plan for follow-up activities to ensure strengthening measures are implemented

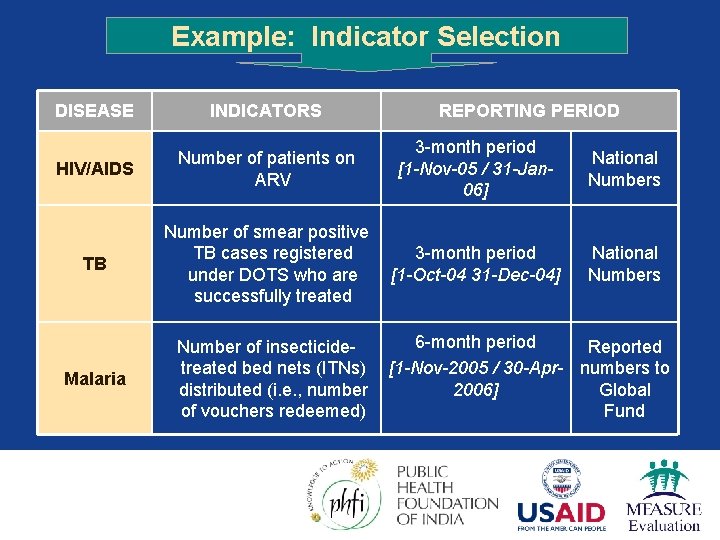

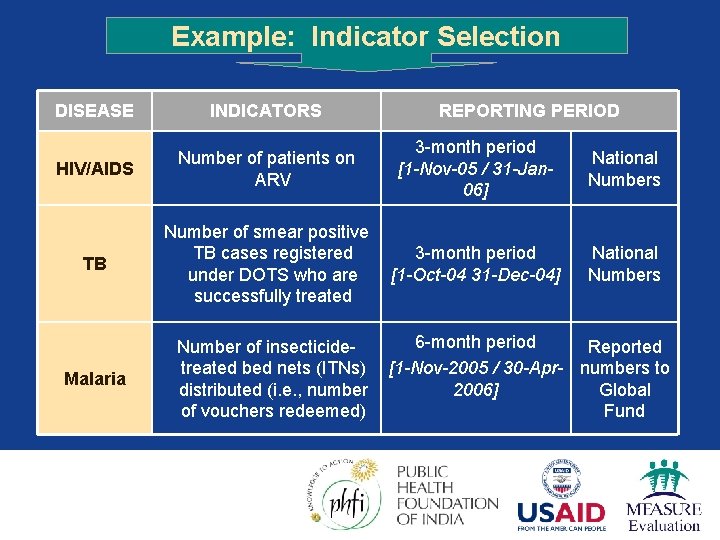

Example: Indicator Selection

- HIV/AIDS
  - Indicator: Number of patients on ARV
  - Reporting period: 3-month period [1-Nov-05 / 31-Jan-06]
  - National numbers
- TB
  - Indicator: Number of smear positive TB cases registered under DOTS who are successfully treated
  - Reporting period: 3-month period [1-Oct-04 / 31-Dec-04]
  - National numbers
- Malaria
  - Indicator: Number of insecticide-treated bed nets (ITNs) distributed (i.e., number of vouchers redeemed)
  - Reporting period: 6-month period [1-Nov-2005 / 30-Apr-2006]
  - Reported numbers to Global Fund

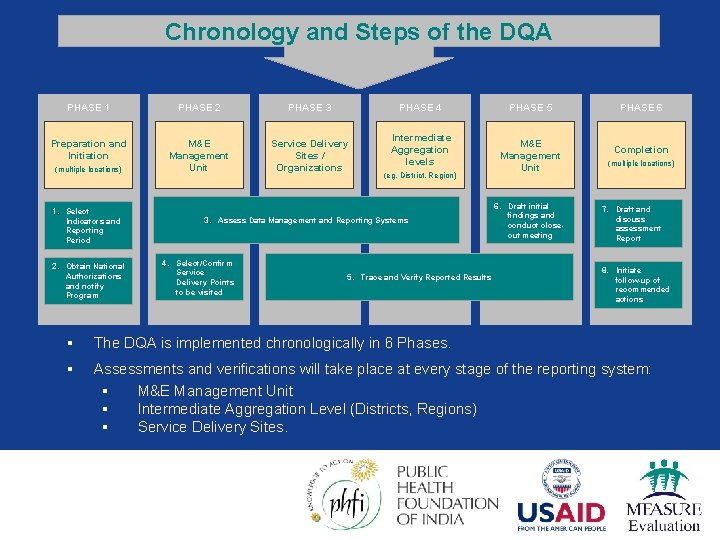

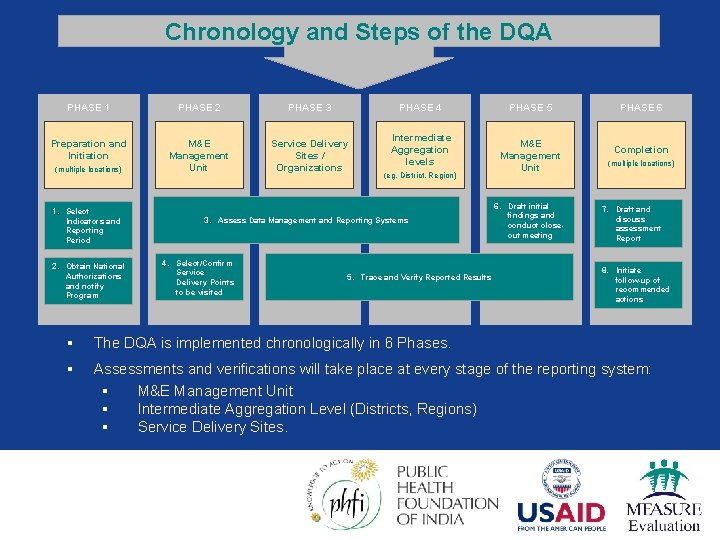

Chronology and Steps of the DQA

Phases:

- Phase 1: Preparation and Initiation
- Phase 2: M&E Management Unit
- Phase 3: Service Delivery Sites / Organizations (multiple locations)
- Phase 4: Intermediate Aggregation Levels (e.g., District, Region)
- Phase 5: M&E Management Unit
- Phase 6: Completion (multiple locations)

Steps:

1. Select Indicators and Reporting Period
2. Obtain National Authorizations and notify Program
3. Assess Data Management and Reporting Systems
4. Select/Confirm Service Delivery Points to be visited
5. Trace and Verify Reported Results
6. Draft initial findings and conduct closeout meeting
7. Draft and discuss assessment Report
8. Initiate follow-up of recommended actions

- The DQA is implemented chronologically in 6 Phases.
- Assessments and verifications will take place at every stage of the reporting system:
  - M&E Management Unit
  - Intermediate Aggregation Level (Districts, Regions)
  - Service Delivery Sites

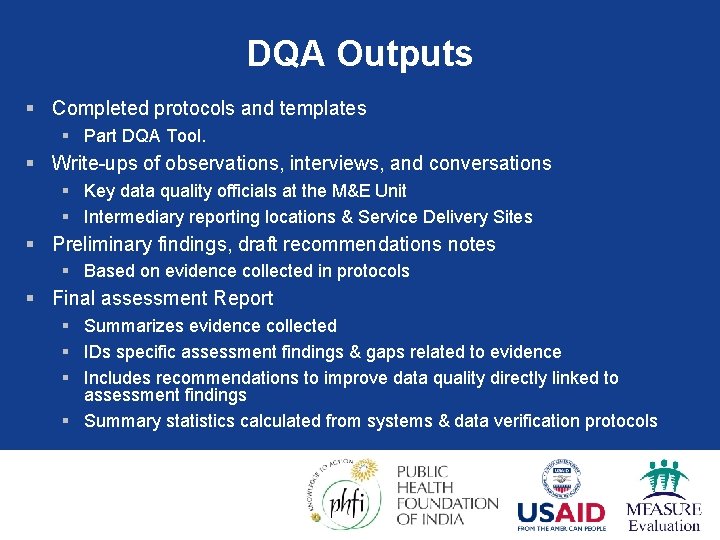

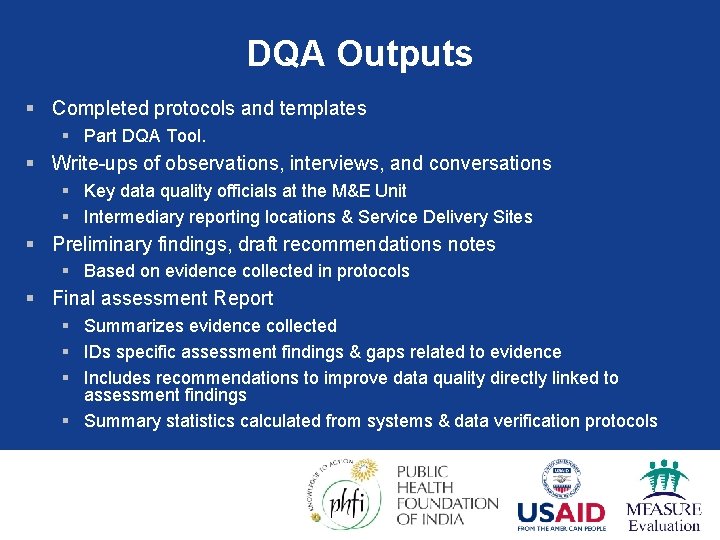

DQA Outputs

- Completed protocols and templates
  - Part of the DQA Tool
- Write-ups of observations, interviews, and conversations
  - Key data quality officials at the M&E Unit
  - Intermediary reporting locations & Service Delivery Sites
- Preliminary findings, draft recommendations notes
  - Based on evidence collected in protocols
- Final assessment Report
  - Summarizes evidence collected
  - Identifies specific assessment findings & gaps related to evidence
  - Includes recommendations to improve data quality directly linked to assessment findings
  - Summary statistics calculated from systems & data verification protocols

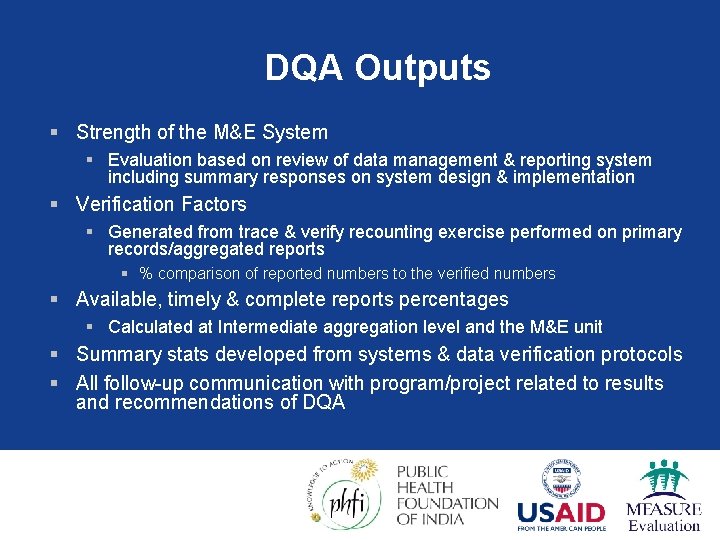

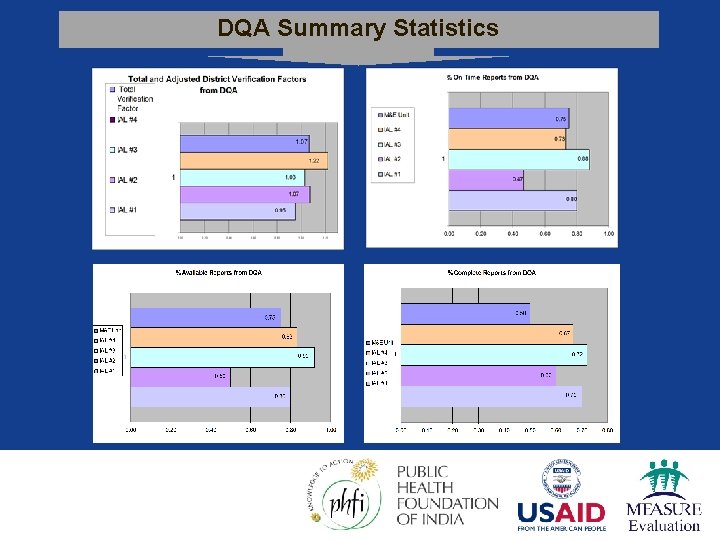

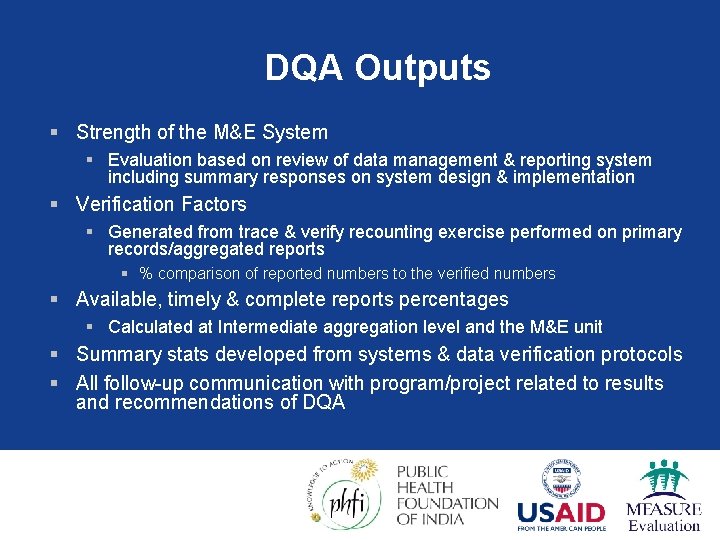

DQA Outputs

- Strength of the M&E System
  - Evaluation based on review of data management & reporting system, including summary responses on system design & implementation
- Verification Factors
  - Generated from trace & verify recounting exercise performed on primary records/aggregated reports
  - % comparison of reported numbers to the verified numbers
- Available, timely & complete reports percentages
  - Calculated at Intermediate aggregation level and the M&E unit
- Summary stats developed from systems & data verification protocols
- All follow-up communication with program/project related to results and recommendations of DQA
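
The two quantitative outputs can be illustrated with a few lines of arithmetic. The sketch below assumes the verification factor is expressed as the verified (recounted) number divided by the reported number, and that the report percentages use the number of expected reports as the denominator. Both are assumptions made for illustration, and the DQA protocol itself may define them differently. All figures are hypothetical.

```python
def verification_factor(verified, reported):
    """Ratio of the recounted (verified) number to the reported number.

    Below 1.0 suggests over-reporting; above 1.0 suggests under-reporting.
    """
    if reported <= 0:
        raise ValueError("reported must be positive")
    return verified / reported


def report_percentages(expected, received, on_time, complete):
    """Percent of expected reports that were available, timely and complete.

    Assumption: every percentage uses the number of expected reports
    as its denominator.
    """
    if expected <= 0:
        raise ValueError("expected must be positive")
    return {
        "available": 100 * received / expected,
        "timely": 100 * on_time / expected,
        "complete": 100 * complete / expected,
    }


# Hypothetical site: 100 patients reported, 90 found in source documents.
print(verification_factor(90, 100))

# Hypothetical district: 12 reports expected, 9 received, 6 on time, 3 complete.
print(report_percentages(expected=12, received=9, on_time=6, complete=3))
```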

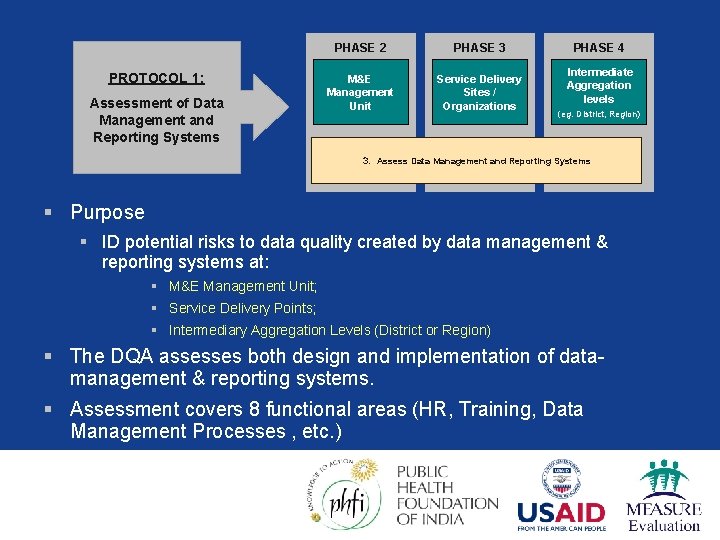

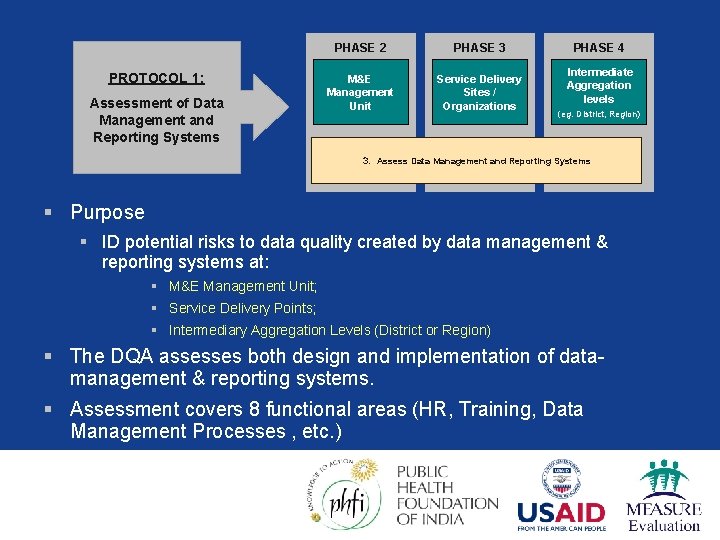

PROTOCOL 1: Assessment of Data Management and Reporting Systems

[Diagram: Phase 2, M&E Management Unit; Phase 3, Service Delivery Sites / Organizations; Phase 4, Intermediate Aggregation Levels (e.g., District, Region); Step 3, Assess Data Management and Reporting Systems]

- Purpose: identify potential risks to data quality created by data management & reporting systems at:
  - M&E Management Unit;
  - Service Delivery Points;
  - Intermediary Aggregation Levels (District or Region)
- The DQA assesses both design and implementation of data management & reporting systems.
- Assessment covers 8 functional areas (HR, Training, Data Management Processes, etc.)

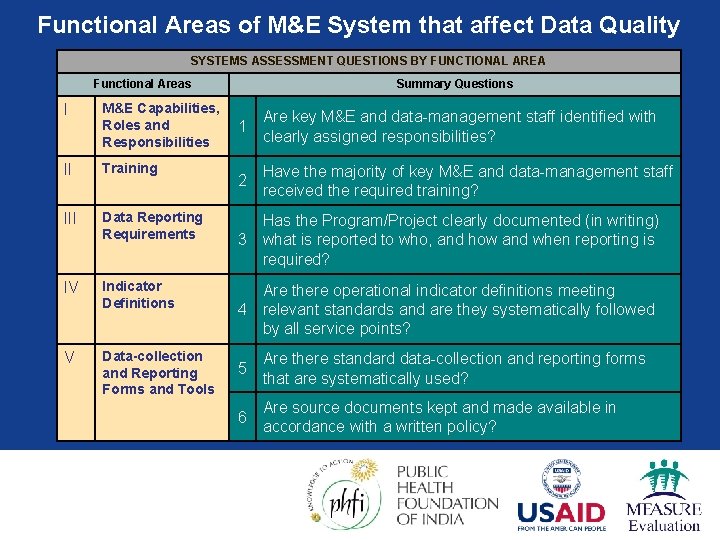

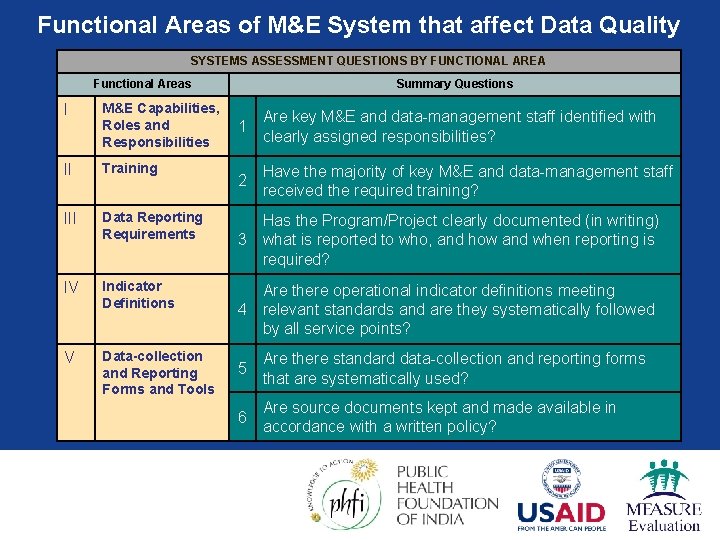

Functional Areas of M&E System that affect Data Quality

Systems assessment questions by functional area:

- I. M&E Capabilities, Roles and Responsibilities
  - Question 1: Are key M&E and data-management staff identified with clearly assigned responsibilities?
- II. Training
  - Question 2: Have the majority of key M&E and data-management staff received the required training?
- III. Data Reporting Requirements
  - Question 3: Has the Program/Project clearly documented (in writing) what is reported to whom, and how and when reporting is required?
- IV. Indicator Definitions
  - Question 4: Are there operational indicator definitions meeting relevant standards, and are they systematically followed by all service points?
- V. Data-collection and Reporting Forms and Tools
  - Question 5: Are there standard data-collection and reporting forms that are systematically used?
  - Question 6: Are source documents kept and made available in accordance with a written policy?

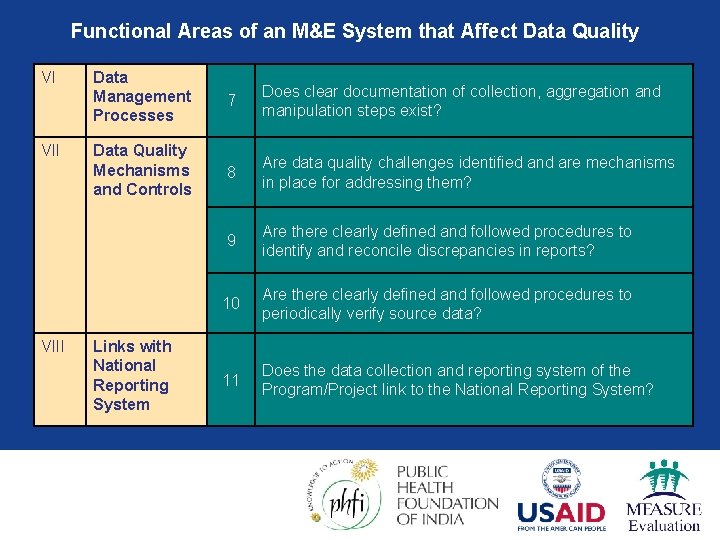

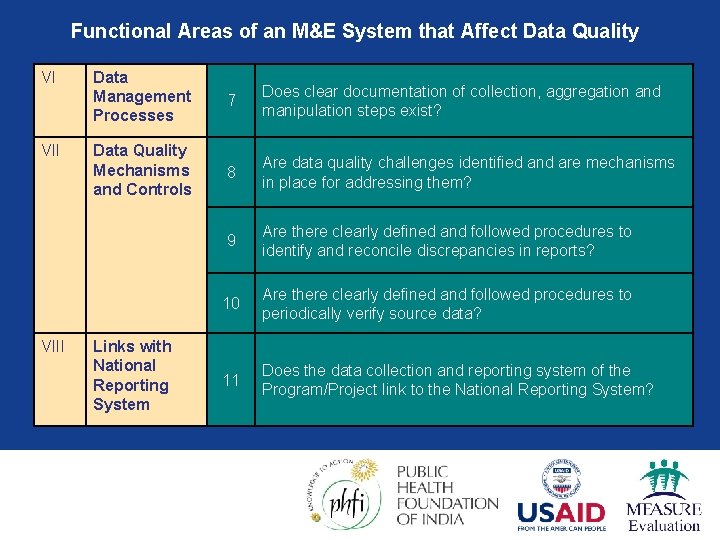

Functional Areas of an M&E System that Affect Data Quality

- VI. Data Management Processes
  - Question 7: Does clear documentation of collection, aggregation and manipulation steps exist?
- VII. Data Quality Mechanisms and Controls
  - Question 8: Are data quality challenges identified and are mechanisms in place for addressing them?
  - Question 9: Are there clearly defined and followed procedures to identify and reconcile discrepancies in reports?
  - Question 10: Are there clearly defined and followed procedures to periodically verify source data?
- VIII. Links with National Reporting System
  - Question 11: Does the data collection and reporting system of the Program/Project link to the National Reporting System?

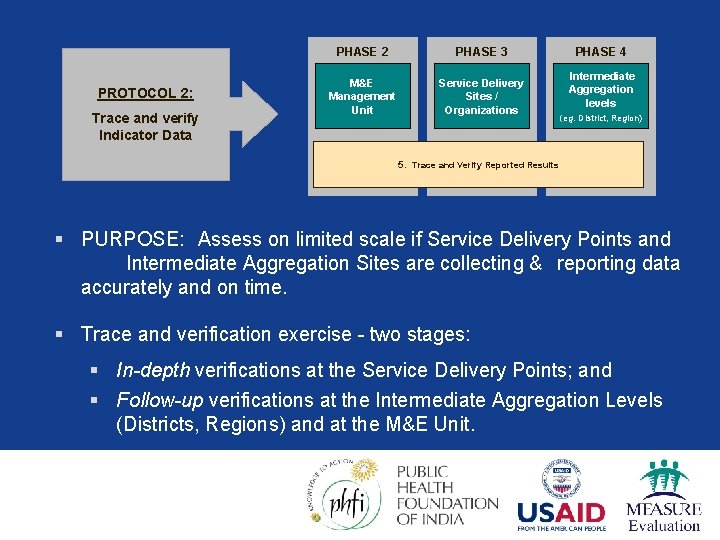

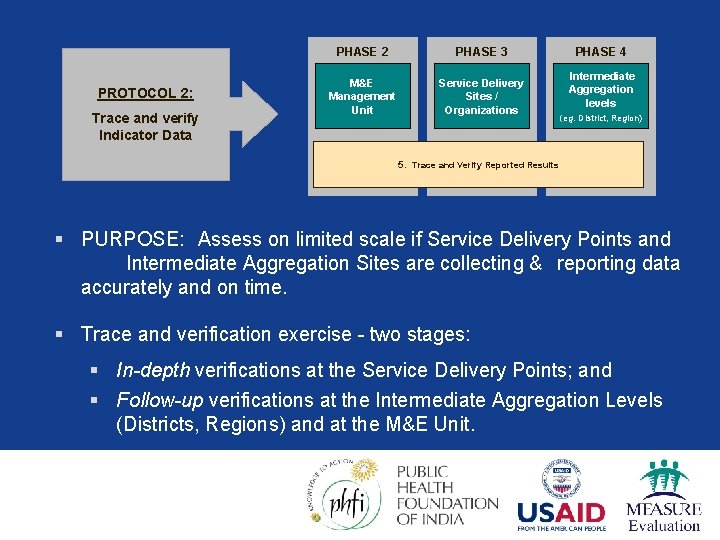

PROTOCOL 2: Trace and Verify Indicator Data

[Diagram: Phase 2, M&E Management Unit; Phase 3, Service Delivery Sites / Organizations; Phase 4, Intermediate Aggregation Levels (e.g., District, Region); Step 5, Trace and Verify Reported Results]

- Purpose: assess on a limited scale whether Service Delivery Points and Intermediate Aggregation Sites are collecting & reporting data accurately and on time.
- Trace and verification exercise in two stages:
  - In-depth verifications at the Service Delivery Points; and
  - Follow-up verifications at the Intermediate Aggregation Levels (Districts, Regions) and at the M&E Unit.

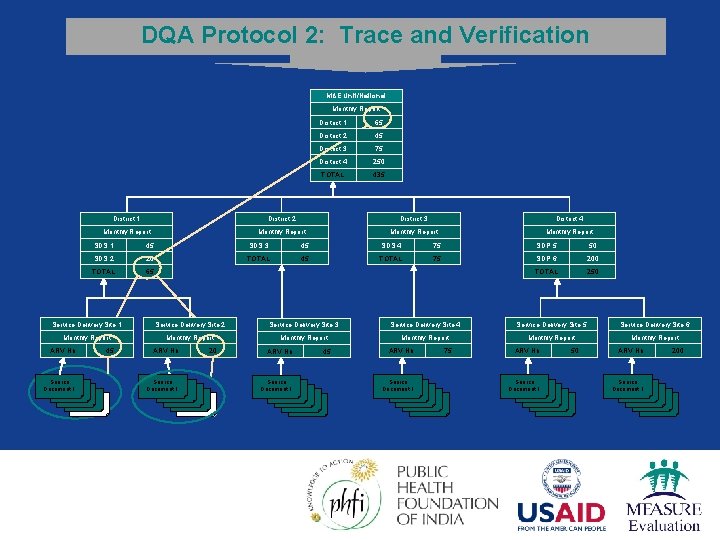

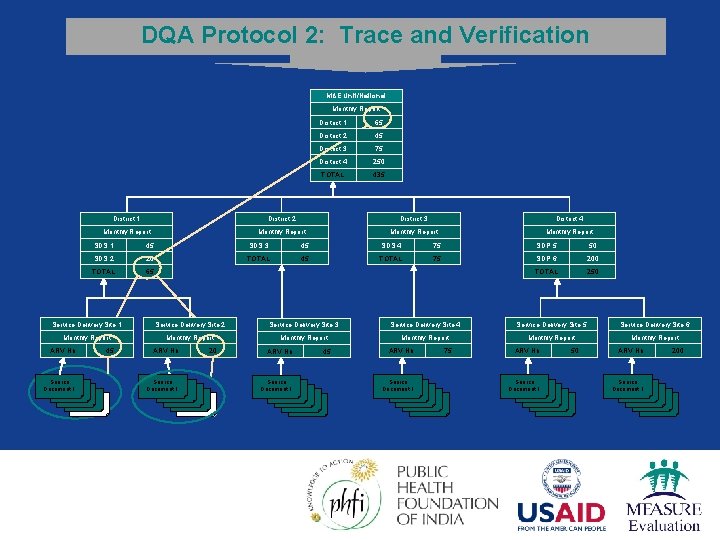

DQA Protocol 2: Trace and Verification

[Diagram: ARV numbers traced from source documents through monthly reports to the national level]

- M&E Unit/National monthly report: TOTAL 435
  - District 1 monthly report: TOTAL 65
    - Service Delivery Site 1 monthly report (Source Document 1): 45
    - Service Delivery Site 2 monthly report (Source Document 1): 20
  - District 2 monthly report: TOTAL 45
    - Service Delivery Site 3 monthly report (Source Document 1): 45
  - District 3 monthly report: TOTAL 75
    - Service Delivery Site 4 monthly report (Source Document 1): 75
  - District 4 monthly report: TOTAL 250
    - Service Delivery Site 5 monthly report (Source Document 1): 50
    - Service Delivery Site 6 monthly report (Source Document 1): 200
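
The figures in this diagram can be re-aggregated level by level to confirm that each total matches the sum of the reports beneath it. The sketch below uses the numbers from the slide; the data layout and the function name `find_discrepancies` are illustrative choices, not part of the DQA tool.

```python
# ARV counts recounted from source documents at each service delivery site.
site_counts = {
    "Site 1": 45, "Site 2": 20, "Site 3": 45,
    "Site 4": 75, "Site 5": 50, "Site 6": 200,
}

# Which sites report to which district.
district_sites = {
    "District 1": ["Site 1", "Site 2"],
    "District 2": ["Site 3"],
    "District 3": ["Site 4"],
    "District 4": ["Site 5", "Site 6"],
}

# Totals as they appear on the district and national monthly reports.
district_reported = {
    "District 1": 65, "District 2": 45, "District 3": 75, "District 4": 250,
}
national_reported = 435


def find_discrepancies(site_counts, district_sites, district_reported, national_reported):
    """Return (level, reported, recounted) tuples where totals do not add up."""
    problems = []
    for district, sites in district_sites.items():
        recounted = sum(site_counts[site] for site in sites)
        if recounted != district_reported[district]:
            problems.append((district, district_reported[district], recounted))
    national_recount = sum(district_reported.values())
    if national_recount != national_reported:
        problems.append(("National", national_reported, national_recount))
    return problems


print(find_discrepancies(site_counts, district_sites, district_reported, national_reported))
```

With the slide's figures the list is empty, since every level adds up. Changing any single number produces an entry that names the level where the discrepancy first appears.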

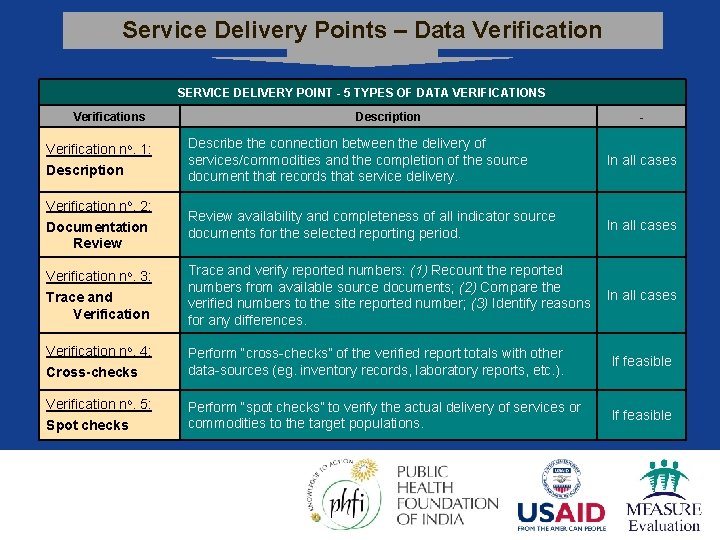

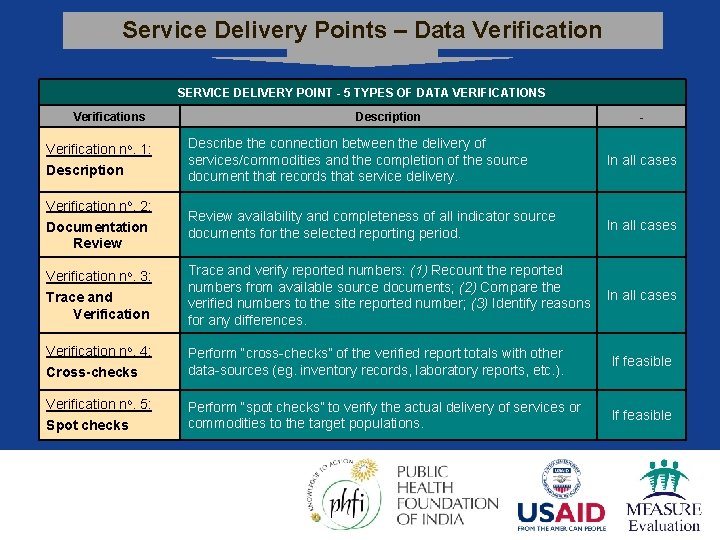

Service Delivery Points – Data Verification

Service Delivery Point: 5 types of data verifications

- Verification no. 1: Description (in all cases). Describe the connection between the delivery of services/commodities and the completion of the source document that records that service delivery.
- Verification no. 2: Documentation Review (in all cases). Review availability and completeness of all indicator source documents for the selected reporting period.
- Verification no. 3: Trace and Verification (in all cases). Trace and verify reported numbers: (1) recount the reported numbers from available source documents; (2) compare the verified numbers to the site reported number; (3) identify reasons for any differences.
- Verification no. 4: Cross-checks (if feasible). Perform “cross-checks” of the verified report totals with other data sources (e.g., inventory records, laboratory reports, etc.).
- Verification no. 5: Spot checks (if feasible). Perform “spot checks” to verify the actual delivery of services or commodities to the target populations.
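
The first two parts of Verification no. 3 (recount, then compare) can be sketched as below. The sketch assumes the source document is a register with one dated entry per service delivered; the dates, the reported figure and the function name are hypothetical. Part (3), identifying reasons for any difference, remains a matter for the assessment team's judgment.

```python
from datetime import date


def trace_and_verify(source_entries, site_reported, period_start, period_end):
    """Recount register entries in the reporting period and compare to the report.

    source_entries: iterable of dates, one per service recorded in the register.
    Returns the reported number, the verified (recounted) number and the difference.
    """
    verified = sum(1 for entry in source_entries if period_start <= entry <= period_end)
    return {
        "reported": site_reported,
        "verified": verified,
        "difference": site_reported - verified,
    }


# Hypothetical register: the last entry falls outside the reporting period.
register = [date(2006, 1, 10), date(2006, 1, 25), date(2006, 2, 3), date(2006, 4, 2)]

result = trace_and_verify(
    register,
    site_reported=4,
    period_start=date(2006, 1, 1),
    period_end=date(2006, 3, 31),
)
print(result)
```

A positive difference, as in this example, means the site reported more than the source documents support for the period.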

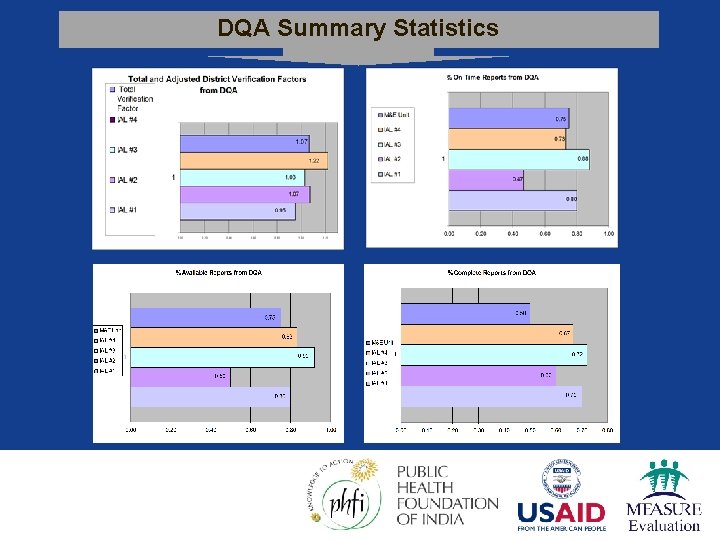

DQA Summary Statistics

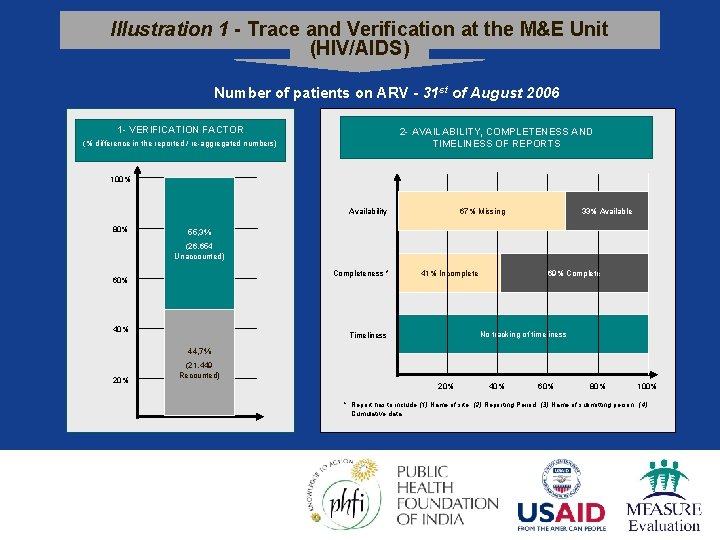

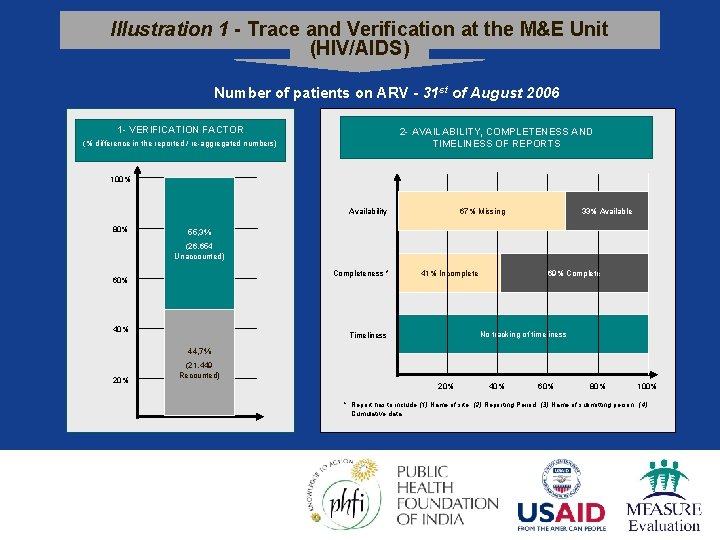

Illustration 1 - Trace and Verification at the M&E Unit (HIV/AIDS)

Number of patients on ARV - 31st of August 2006

1 - Verification factor (% difference in the reported / re-aggregated numbers):

- 44.7% recounted (21,449)
- 55.3% unaccounted (26,654)

2 - Availability, completeness and timeliness of reports:

- Availability: 33% available, 67% missing
- Completeness (*): 69% complete, 41% incomplete
- Timeliness: no tracking of timeliness

(*) Report has to include (1) Name of site; (2) Reporting Period; (3) Name of submitting person; (4) Cumulative data.
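
The completeness rule in the footnote (a report must carry all four listed items) is simple to apply programmatically. The sketch below assumes each report is available as a dictionary; the field names are hypothetical stand-ins for the four items named on the slide.

```python
# Hypothetical field names for the four items a report has to include.
REQUIRED_FIELDS = ("site_name", "reporting_period", "submitted_by", "cumulative_data")


def is_complete(report):
    """True if every required field is present and not blank.

    A cumulative value of 0 counts as present; only None or "" counts as missing.
    """
    return all(report.get(field) not in (None, "") for field in REQUIRED_FIELDS)


def percent_complete(reports):
    """Percent of the supplied reports that pass the completeness check."""
    if not reports:
        raise ValueError("reports must not be empty")
    return 100 * sum(is_complete(report) for report in reports) / len(reports)


# Hypothetical reports received at the M&E Unit.
reports = [
    {"site_name": "Site 1", "reporting_period": "2006-08", "submitted_by": "A. Nurse", "cumulative_data": 45},
    {"site_name": "Site 2", "reporting_period": "2006-08", "submitted_by": "", "cumulative_data": 20},
    {"site_name": "Site 3", "reporting_period": "2006-08", "submitted_by": "B. Clerk", "cumulative_data": 0},
    {"site_name": "Site 4", "reporting_period": "2006-08", "submitted_by": "C. Officer"},
]

print(percent_complete(reports))
```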

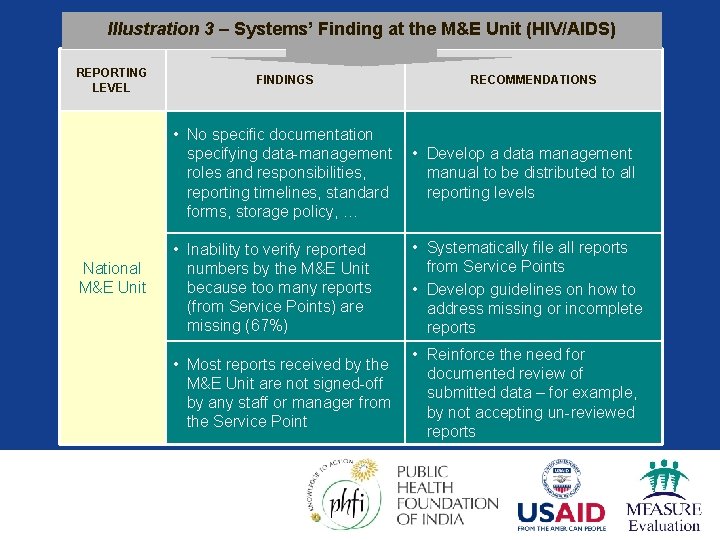

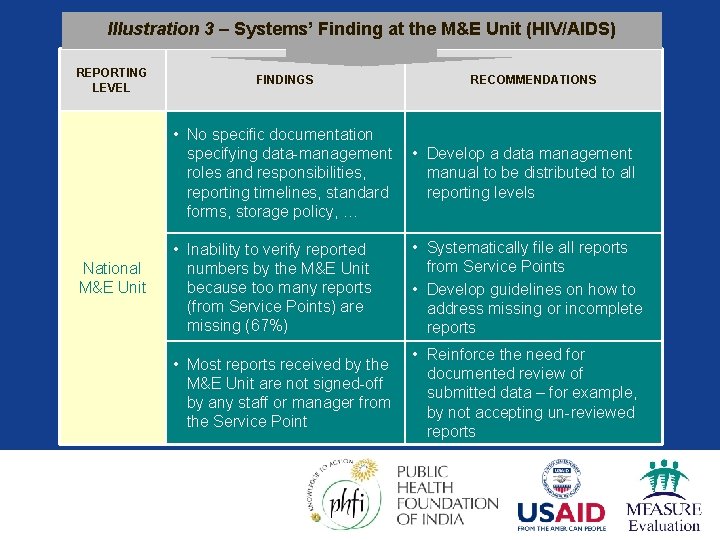

Illustration 3 – Systems’ Finding at the M&E Unit (HIV/AIDS)

Reporting level: National M&E Unit

- Finding: No specific documentation specifying data-management roles and responsibilities, reporting timelines, standard forms, storage policy, …
  - Recommendation: Develop a data management manual to be distributed to all reporting levels
- Finding: Inability to verify reported numbers by the M&E Unit because too many reports (from Service Points) are missing (67%)
  - Recommendation: Systematically file all reports from Service Points
  - Recommendation: Develop guidelines on how to address missing or incomplete reports
- Finding: Most reports received by the M&E Unit are not signed off by any staff or manager from the Service Point
  - Recommendation: Reinforce the need for documented review of submitted data, for example by not accepting unreviewed reports

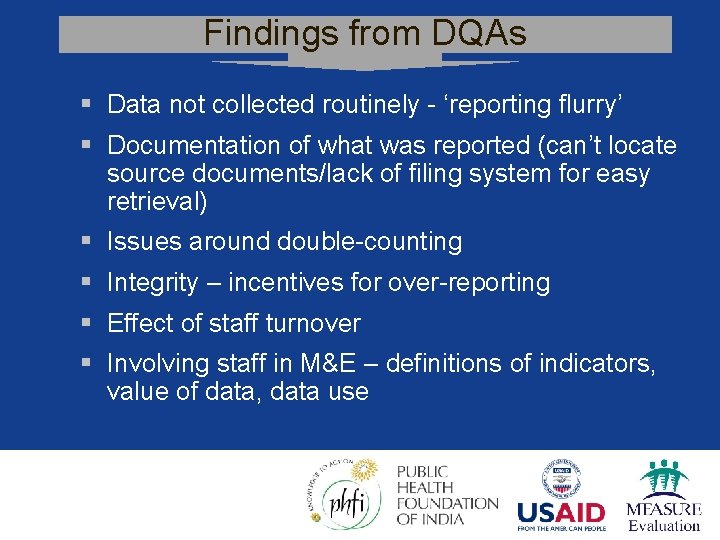

Findings from DQAs

- Data not collected routinely - ‘reporting flurry’
- Documentation of what was reported (can’t locate source documents/lack of filing system for easy retrieval)
- Issues around double-counting
- Integrity: incentives for over-reporting
- Effect of staff turnover
- Involving staff in M&E: definitions of indicators, value of data, data use

MEASURE Evaluation is a MEASURE project funded by the U.S. Agency for International Development and implemented by the Carolina Population Center at the University of North Carolina at Chapel Hill in partnership with Futures Group International, ICF Macro, John Snow, Inc., Management Sciences for Health, and Tulane University. Views expressed in this presentation do not necessarily reflect the views of USAID or the U.S. Government. MEASURE Evaluation is the USAID Global Health Bureau's primary vehicle for supporting improvements in monitoring and evaluation in population, health and nutrition worldwide.