Data Protection with Oracle Data Guard Jacek Wojcieszuk

- Slides: 23

Data Protection with Oracle Data Guard Jacek Wojcieszuk, CERN/IT-DM Distributed Database Operations Workshop November 12 th, 2008 CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop -

Outline • • Main challenges Oracle Maximum Availability Architecture Backup and recovery evolution @ CERN Data Guard Physical Standby Database – Disaster recovery for production DBS – Standby technology for large-scale testing • CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Possible RMAN backup improvements Distributed Databse Operations Workshop - 2

Databases for LHC experiments challenges • Data volume – Very quick, linear growth of databases (especially during LHC run) – Some databases may reach 10 TB already next year • Database availability – On-line databases highly critical for data taking • Few hours of downtime can already lead to data loss – Off-line databases critical for data distribution and analysis CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 3

. . . More challenges • With data volume increase: – Increases probability of physical data corruption – Increases frequency of human errors • Traditional RMAN and tape-based approach doesn’t fit well into this picture: – Leads to backup and recovery times proportional to or dependent on data volume – Makes certain recovery scenarios quite complex e. g: recovery of a single database object CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 4

Maximum Availability Architecture (MAA) • Oracle's best practices blueprint • Goal: to achieve the optimal high availability architecture at the lowest cost and complexity • Helps to minimize impact of different types of unplanned and planned downtimes • Is based on such Oracle products/features like: – – – RAC ASM RMAN Flashback Data Guard • http: //www. oracle. com/technology/deploy/av ailability/htdocs/maa. htm CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 5

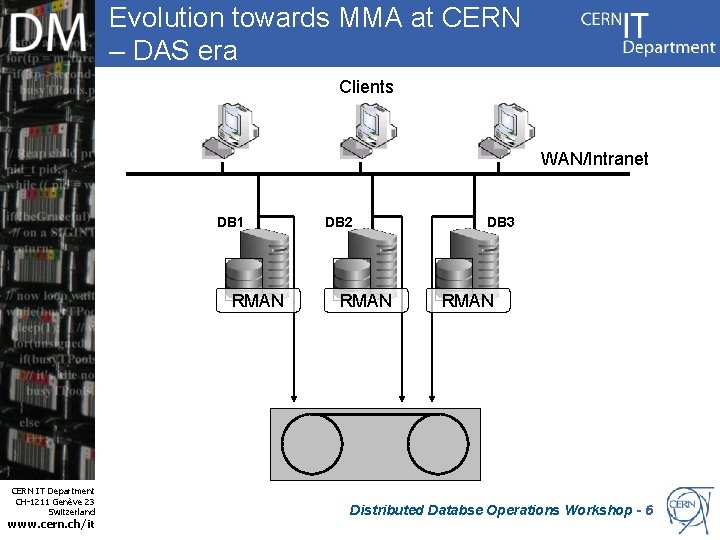

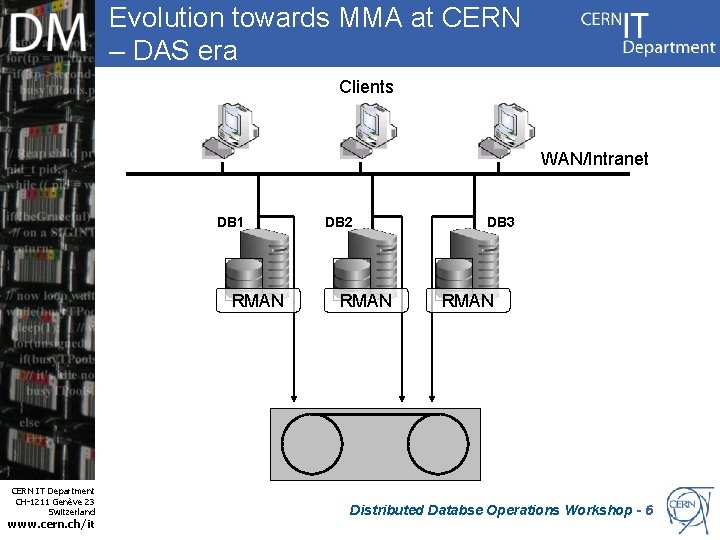

Evolution towards MMA at CERN – DAS era Clients WAN/Intranet DB 1 RMAN CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it DB 2 RMAN DB 3 RMAN Distributed Databse Operations Workshop - 6

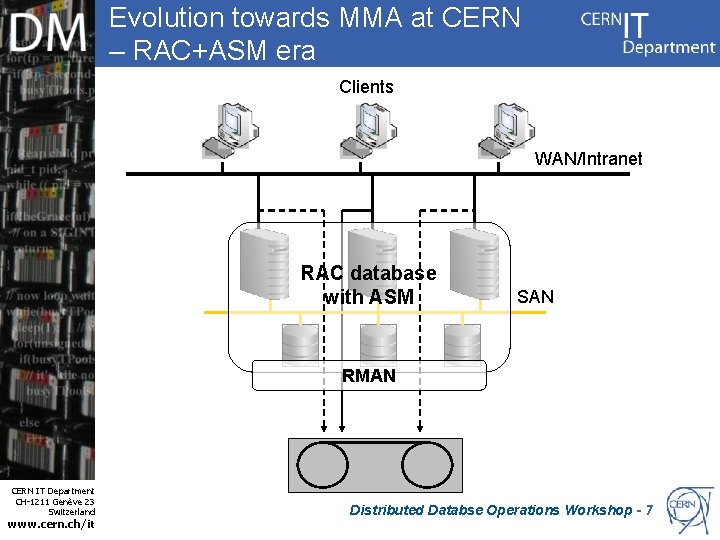

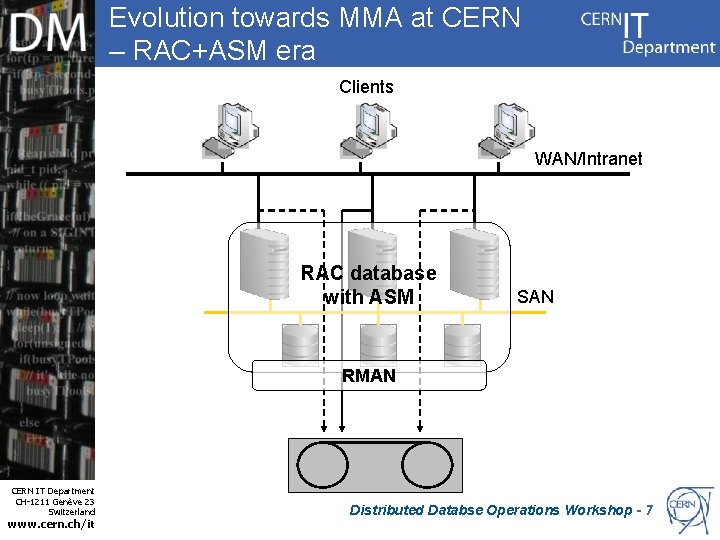

Evolution towards MMA at CERN – RAC+ASM era Clients WAN/Intranet RAC database with ASM SAN RMAN CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 7

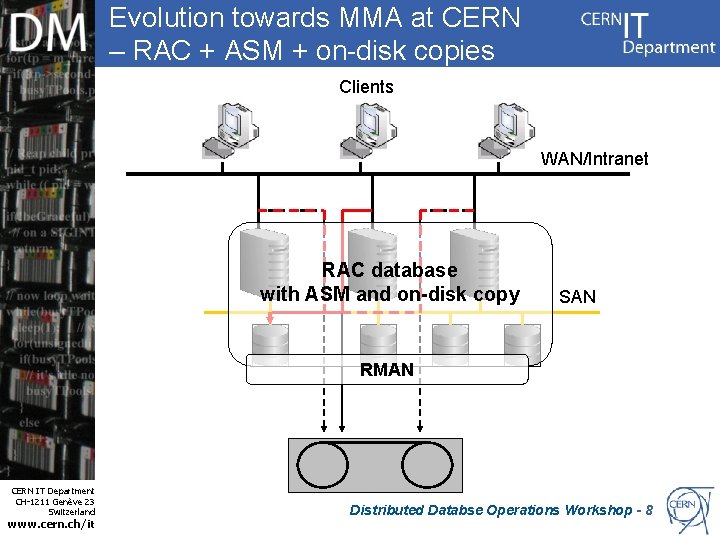

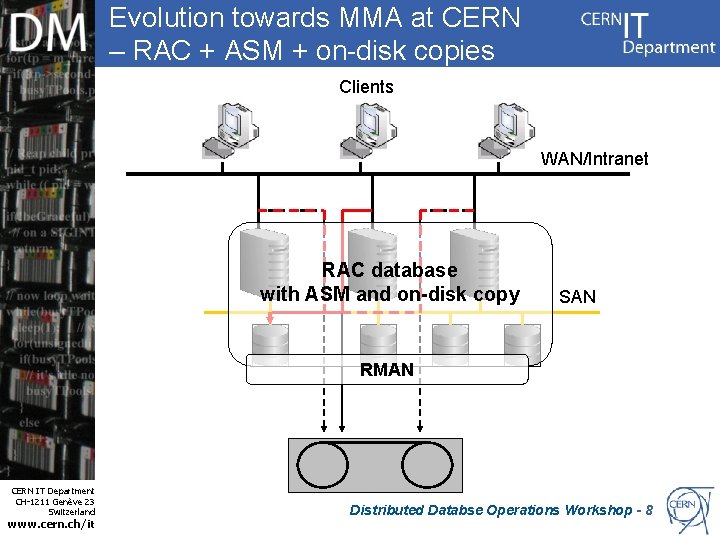

Evolution towards MMA at CERN – RAC + ASM + on-disk copies Clients WAN/Intranet RAC database with ASM and on-disk copy SAN RMAN CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 8

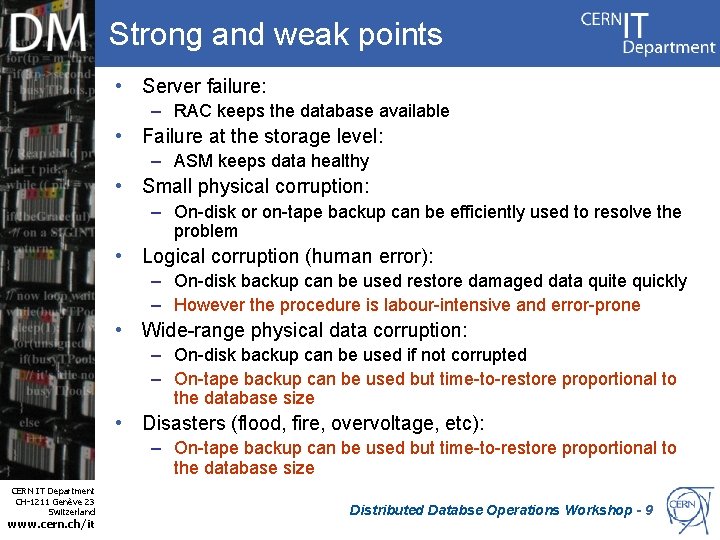

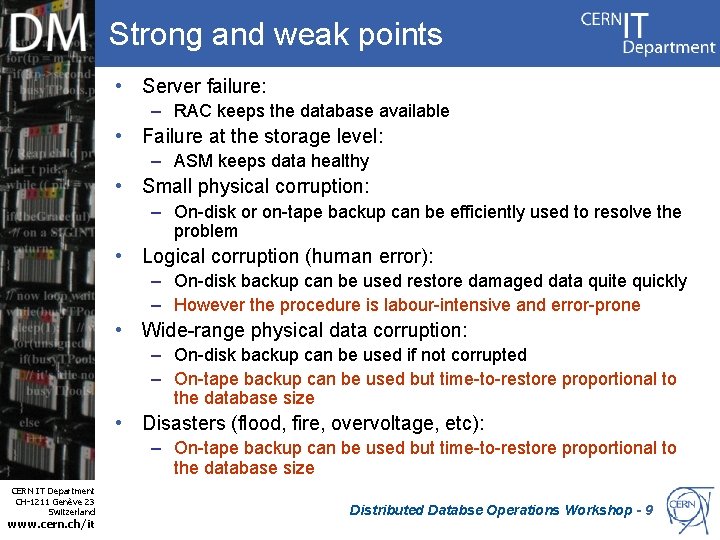

Strong and weak points • Server failure: – RAC keeps the database available • Failure at the storage level: – ASM keeps data healthy • Small physical corruption: – On-disk or on-tape backup can be efficiently used to resolve the problem • Logical corruption (human error): – On-disk backup can be used restore damaged data quite quickly – However the procedure is labour-intensive and error-prone • Wide-range physical data corruption: – On-disk backup can be used if not corrupted – On-tape backup can be used but time-to-restore proportional to the database size • Disasters (flood, fire, overvoltage, etc): – On-tape backup can be used but time-to-restore proportional to the database size CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 9

Solution – Data Guard Physical Standby Database • Mature and stable technology: – Introduced in Oracle 8 i – Relying on old and proven functionality: • redo generation • media recovery • Flexible configuration – Synchronous or asynchronous propagation of data changes – Immediate or delayed standby database’s updates – Different protection levels: • Maximum performance • Maximum availability • Maximum protection • Small performance overhead on the primary system CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 10

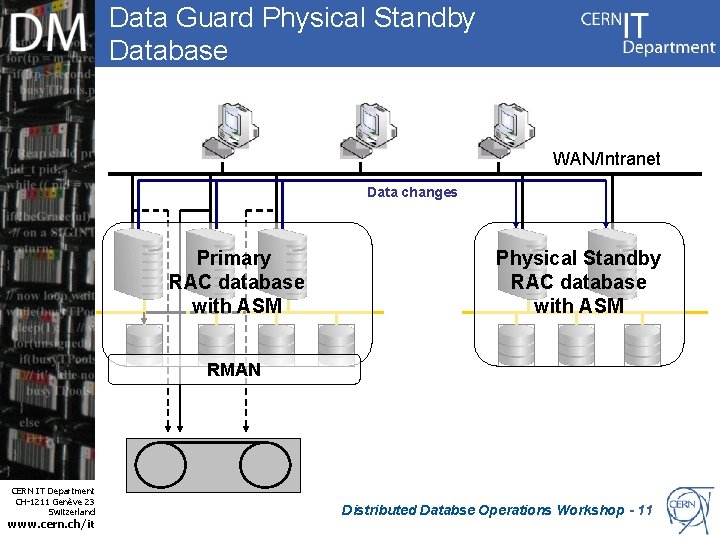

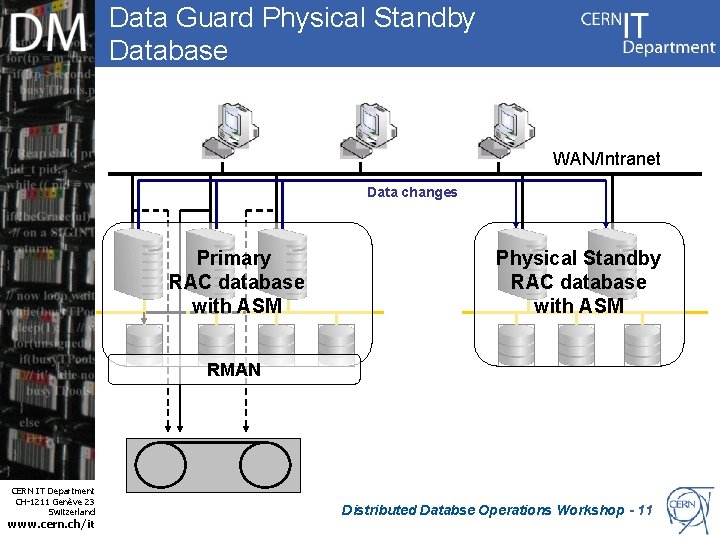

Data Guard Physical Standby Database WAN/Intranet Data changes Primary RAC database with ASM Physical Standby RAC database with ASM RMAN CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 11

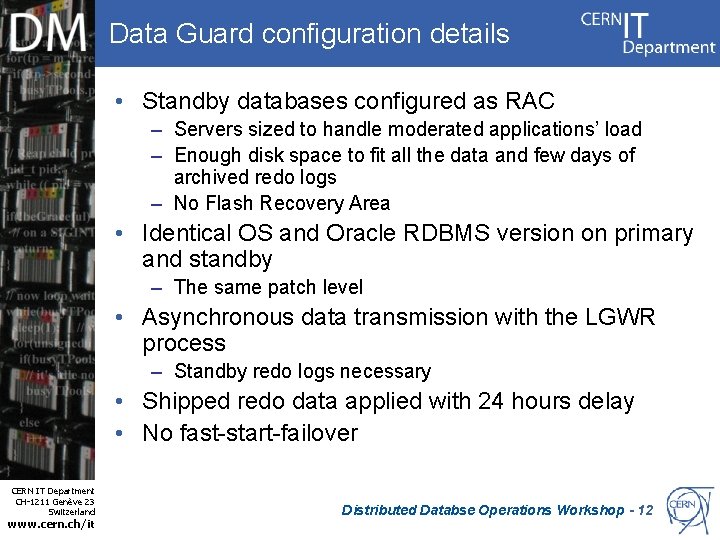

Data Guard configuration details • Standby databases configured as RAC – Servers sized to handle moderated applications’ load – Enough disk space to fit all the data and few days of archived redo logs – No Flash Recovery Area • Identical OS and Oracle RDBMS version on primary and standby – The same patch level • Asynchronous data transmission with the LGWR process – Standby redo logs necessary • Shipped redo data applied with 24 hours delay • No fast-start-failover CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 12

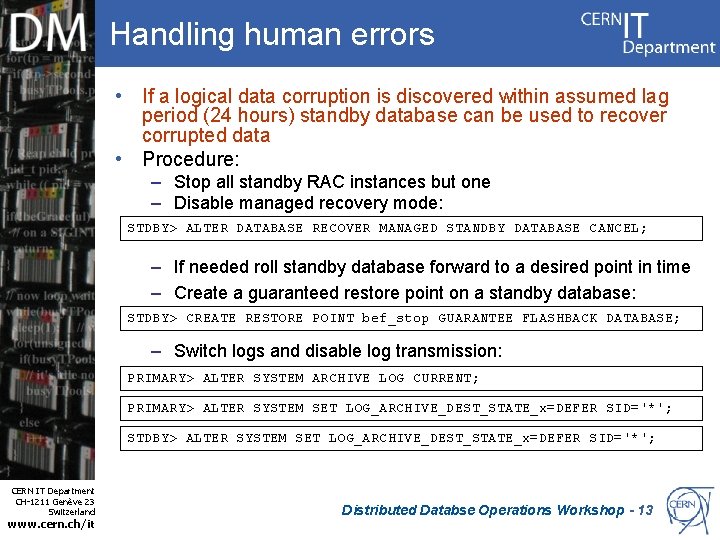

Handling human errors • If a logical data corruption is discovered within assumed lag period (24 hours) standby database can be used to recover corrupted data • Procedure: – Stop all standby RAC instances but one – Disable managed recovery mode: STDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE CANCEL; – If needed roll standby database forward to a desired point in time – Create a guaranteed restore point on a standby database: STDBY> CREATE RESTORE POINT bef_stop GUARANTEE FLASHBACK DATABASE; – Switch logs and disable log transmission: PRIMARY> ALTER SYSTEM ARCHIVE LOG CURRENT; PRIMARY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_x=DEFER SID='*'; STDBY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_x=DEFER SID='*'; CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 13

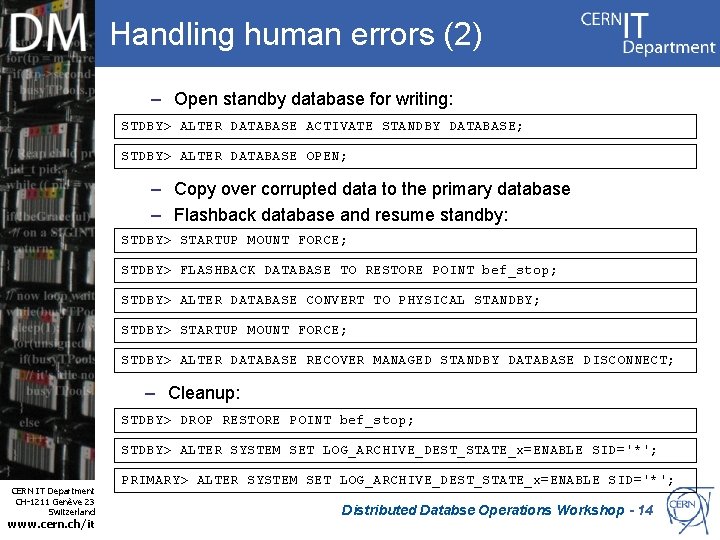

Handling human errors (2) – Open standby database for writing: STDBY> ALTER DATABASE ACTIVATE STANDBY DATABASE; STDBY> ALTER DATABASE OPEN; – Copy over corrupted data to the primary database – Flashback database and resume standby: STDBY> STARTUP MOUNT FORCE; STDBY> FLASHBACK DATABASE TO RESTORE POINT bef_stop; STDBY> ALTER DATABASE CONVERT TO PHYSICAL STANDBY; STDBY> STARTUP MOUNT FORCE; STDBY> ALTER DATABASE RECOVER MANAGED STANDBY DATABASE DISCONNECT; – Cleanup: STDBY> DROP RESTORE POINT bef_stop; STDBY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_x=ENABLE SID='*'; CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it PRIMARY> ALTER SYSTEM SET LOG_ARCHIVE_DEST_STATE_x=ENABLE SID='*'; Distributed Databse Operations Workshop - 14

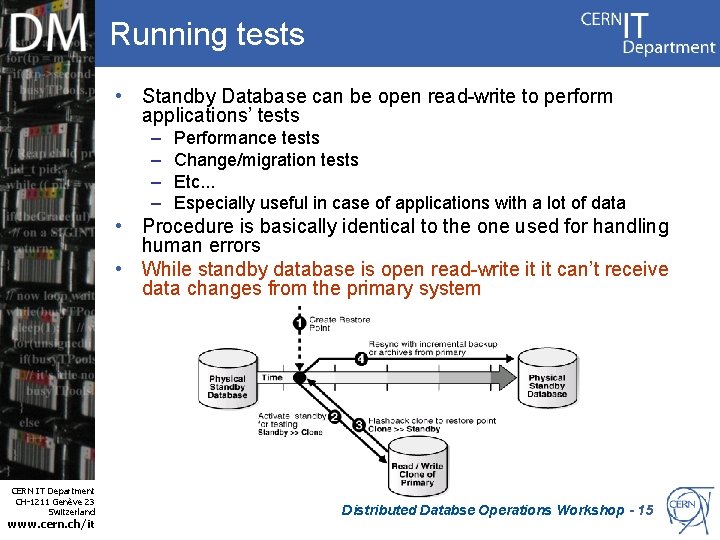

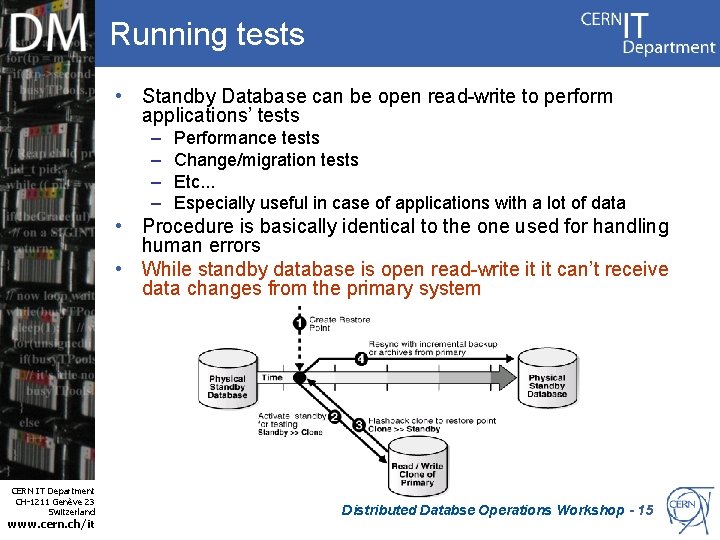

Running tests • Standby Database can be open read-write to perform applications’ tests – – Performance tests Change/migration tests Etc. . . Especially useful in case of applications with a lot of data • Procedure is basically identical to the one used for handling human errors • While standby database is open read-write it it can’t receive data changes from the primary system CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 15

Handling wide-range corruptions and disasters • In case the primary system becomes unavailable clients can be failed over to the standby database • Data Guard environment can be configured for either automatic (fast-start-failover) or manual failover • The most tricky part is re-directing clients to the standby database in an efficient way • Two approaches: – DNS alias-based – Oracle service-based CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 16

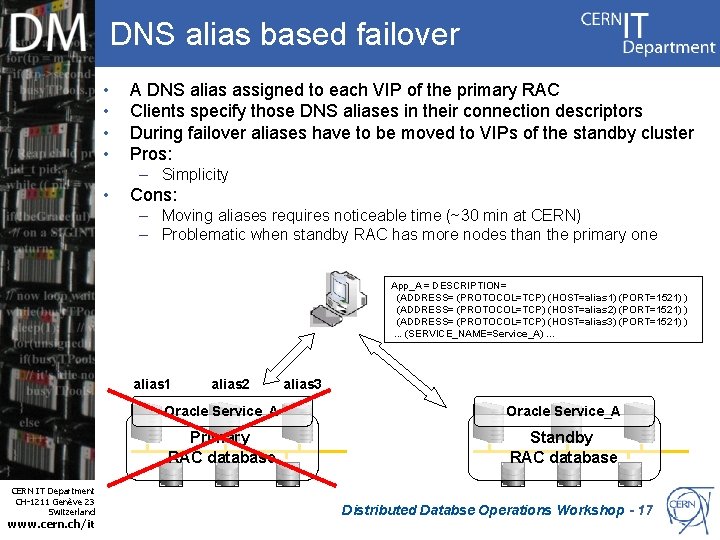

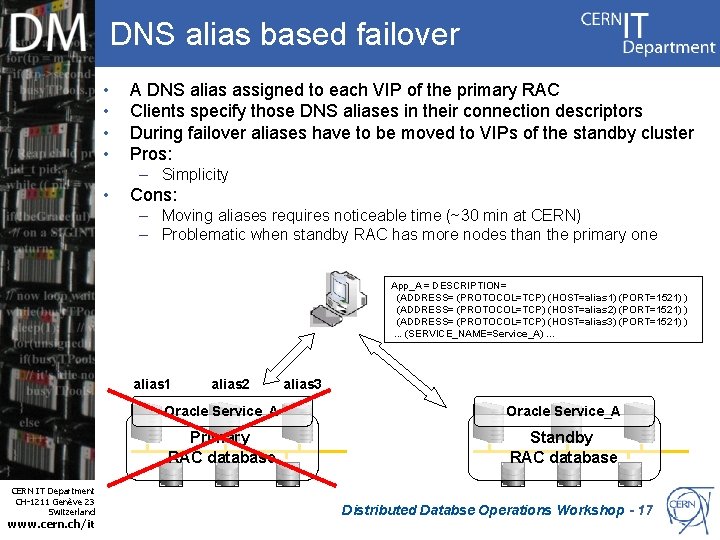

DNS alias based failover • • A DNS alias assigned to each VIP of the primary RAC Clients specify those DNS aliases in their connection descriptors During failover aliases have to be moved to VIPs of the standby cluster Pros: – Simplicity • Cons: – Moving aliases requires noticeable time (~30 min at CERN) – Problematic when standby RAC has more nodes than the primary one App_A = DESCRIPTION= (ADDRESS= (PROTOCOL=TCP) (HOST=alias 1) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=alias 2) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=alias 3) (PORT=1521) ). . . (SERVICE_NAME=Service_A). . . alias 1 CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it alias 2 alias 3 Oracle Service_A Primary RAC database Standby RAC database Distributed Databse Operations Workshop - 17

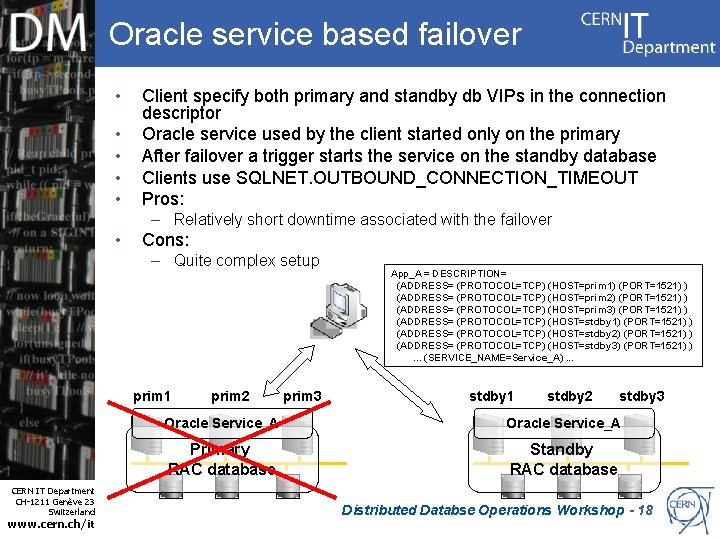

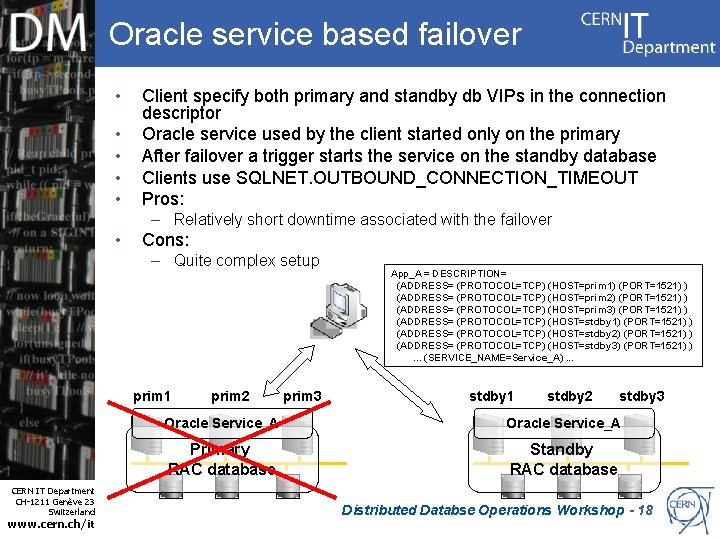

Oracle service based failover • • • Client specify both primary and standby db VIPs in the connection descriptor Oracle service used by the client started only on the primary After failover a trigger starts the service on the standby database Clients use SQLNET. OUTBOUND_CONNECTION_TIMEOUT Pros: – Relatively short downtime associated with the failover • Cons: – Quite complex setup prim 1 CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it prim 2 prim 3 App_A = DESCRIPTION= (ADDRESS= (PROTOCOL=TCP) (HOST=prim 1) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=prim 2) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=prim 3) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=stdby 1) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=stdby 2) (PORT=1521) ) (ADDRESS= (PROTOCOL=TCP) (HOST=stdby 3) (PORT=1521) ). . . (SERVICE_NAME=Service_A). . . stdby 1 stdby 2 stdby 3 Oracle Service_A Primary RAC database Standby RAC database Distributed Databse Operations Workshop - 18

Possible improvements – Active Data Guard • New feature of Oracle 11 g. R 1: – Extra-cost option for Oracle RDBMS 11 g Enterprise Edition • Allows clients to connect to and query a physical standby database while ‘managed recovery’ mode enabled: – Better resource utilization – Excellent idea for read-only workload – Many potential use-cases at CERN e. g. CMS online database • Extensive tests started CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 19

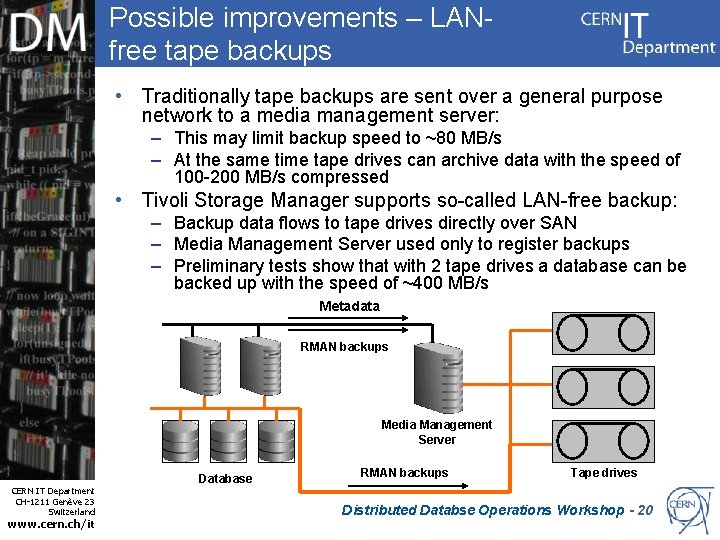

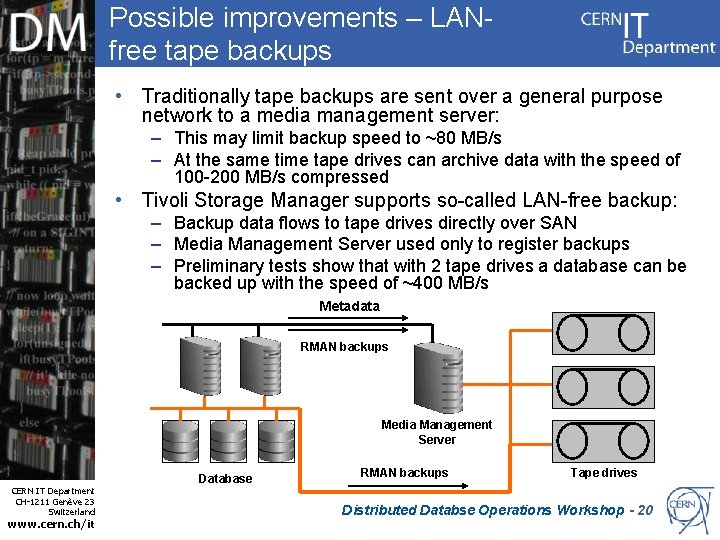

Possible improvements – LANfree tape backups • Traditionally tape backups are sent over a general purpose network to a media management server: – This may limit backup speed to ~80 MB/s – At the same time tape drives can archive data with the speed of 100 -200 MB/s compressed • Tivoli Storage Manager supports so-called LAN-free backup: – Backup data flows to tape drives directly over SAN – Media Management Server used only to register backups – Preliminary tests show that with 2 tape drives a database can be backed up with the speed of ~400 MB/s Metadata RMAN backups Media Management Server CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Database RMAN backups Tape drives Distributed Databse Operations Workshop - 20

Possible improvements - Using a disk pool instead of tapes • Tape infrastructure is expensive and difficult to maintain: – costly hardware and software – noticeable maintenance effort – tape media is quite unreliable and needs to be validated • At the same time disk space is getting cheaper and cheaper: – 1. 5 TB SATA disks already available • Pool of disks can be easily configured as destination for RMAN backups: – Can simplify backup infrastructure – Can improve backup performance – Can increase backup reliability • Several configurations possible: – NFS mounted disks – SAN-attached storage with CFS – SAN-attached storage with ASM • Tests is progress CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 21

Conclusions • Production databases are growing very large – Recovery time in case of failure becomes critical • Procedures successfully tested to make recovery time scale: – – CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it On-disk backup Physical Standby for disaster recovery Physical Standby for large-scale testing RMAN backup optimisations Distributed Databse Operations Workshop - 22

Q&A Thank you CERN IT Department CH-1211 Genève 23 Switzerland www. cern. ch/it Distributed Databse Operations Workshop - 23