Data Preprocessing: Data Reduction

• A data warehouse may store terabytes of data, so complex data analysis or mining may take a very long time to run on the complete data set.
• Data reduction techniques obtain a reduced representation of the data set that is much smaller in volume, yet closely maintains the integrity of the original (base) data.
Ajith G. S: poposir.orgfree.com

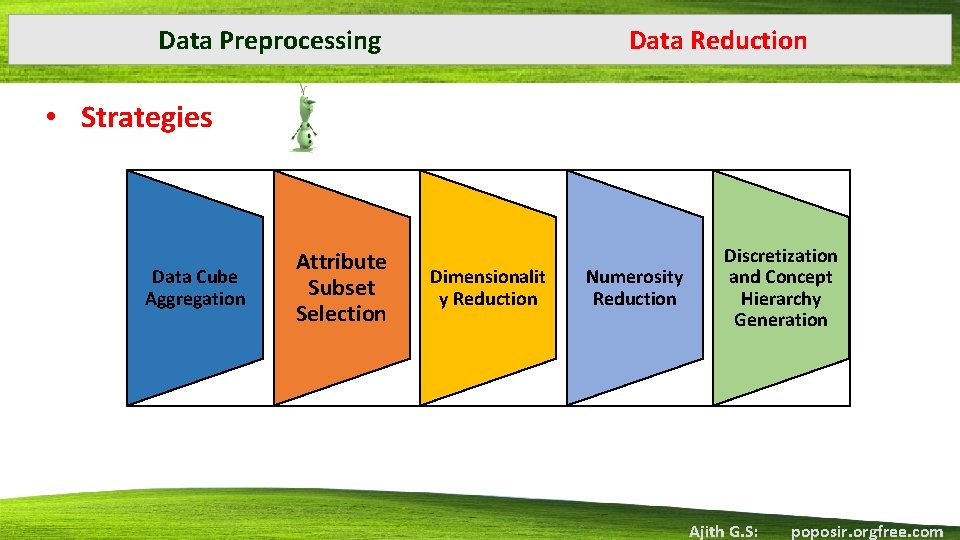

Data Reduction Strategies
• Data cube aggregation
• Attribute subset selection
• Dimensionality reduction
• Numerosity reduction
• Discretization and concept hierarchy generation

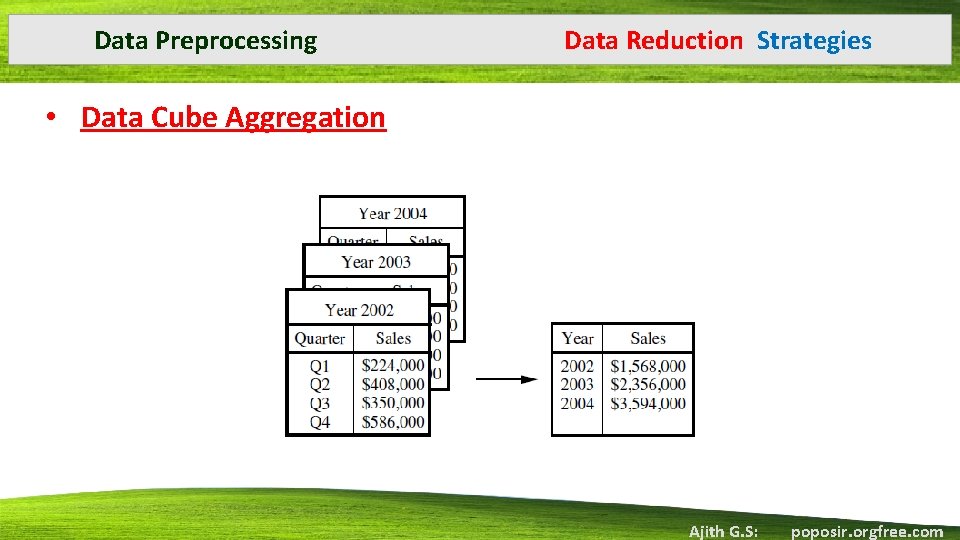

Data Cube Aggregation
• Aggregation operations are applied to the data in the construction of a data cube.
• Data cubes store multidimensional aggregated information: each cell holds an aggregate data value corresponding to a data point in multidimensional space.
• Concept hierarchies allow the data to be analyzed at multiple levels of abstraction.
• Cubes created for varying levels of abstraction are called cuboids. The cuboid at the lowest level of abstraction is the base cuboid; the cuboid at the highest level is the apex cuboid.
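To make roll-up concrete, here is a minimal sketch in plain Python. It assumes a hypothetical base cuboid of sales keyed by (year, quarter, branch); the `roll_up` helper and the figures are illustrative only. Aggregation sums the measure over the dimensions that are dropped: keeping only year yields annual sales, keeping every dimension returns the base cuboid unchanged, and keeping none yields the apex cuboid's single grand total.

```python
from collections import defaultdict
from typing import Dict, Sequence, Tuple

Cell = Tuple  # a tuple of dimension values

# Hypothetical base cuboid: (year, quarter, branch) -> sales amount
BASE: Dict[Cell, float] = {
    (2023, "Q1", "A"): 200.0,
    (2023, "Q2", "A"): 300.0,
    (2023, "Q1", "B"): 100.0,
    (2024, "Q1", "A"): 400.0,
    (2024, "Q3", "B"): 50.0,
}

def roll_up(cells: Dict[Cell, float], keep: Sequence[int]) -> Dict[Cell, float]:
    """Sum the measure over every dimension whose index is not in `keep`."""
    out: Dict[Cell, float] = defaultdict(float)
    for key, value in cells.items():
        out[tuple(key[i] for i in keep)] += value
    return dict(out)

annual = roll_up(BASE, keep=[0])   # cuboid grouped by year only
apex = roll_up(BASE, keep=[])      # apex cuboid: one grand total
print(annual)  # {(2023,): 600.0, (2024,): 450.0}
print(apex)    # {(): 1050.0}
```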


Attribute Subset Selection
• Irrelevant, weakly relevant, or redundant attributes (dimensions) are detected and removed.
• The aim is to remove attributes that are irrelevant to the analysis task.
• Selecting the relevant attributes manually is difficult and time-consuming.
• Keeping irrelevant attributes may lead to poor-quality mining results, and the added volume of irrelevant and redundant attributes can slow down the mining process.
• Advantage: fewer attributes appear in the discovered patterns, which makes the patterns easier to understand.

Attribute Subset Selection: Finding a Good Subset
• For n attributes there are 2^n possible subsets, so an exhaustive search for the best subset quickly becomes infeasible as n grows.
• Heuristic methods that explore a reduced search space are therefore used for attribute subset selection.
• These methods are typically greedy: at each step they make the choice that looks best at that time.
• Basic heuristic techniques include:
1. Stepwise forward selection
2. Stepwise backward elimination
3. A combination of forward selection and backward elimination
4. Decision tree induction

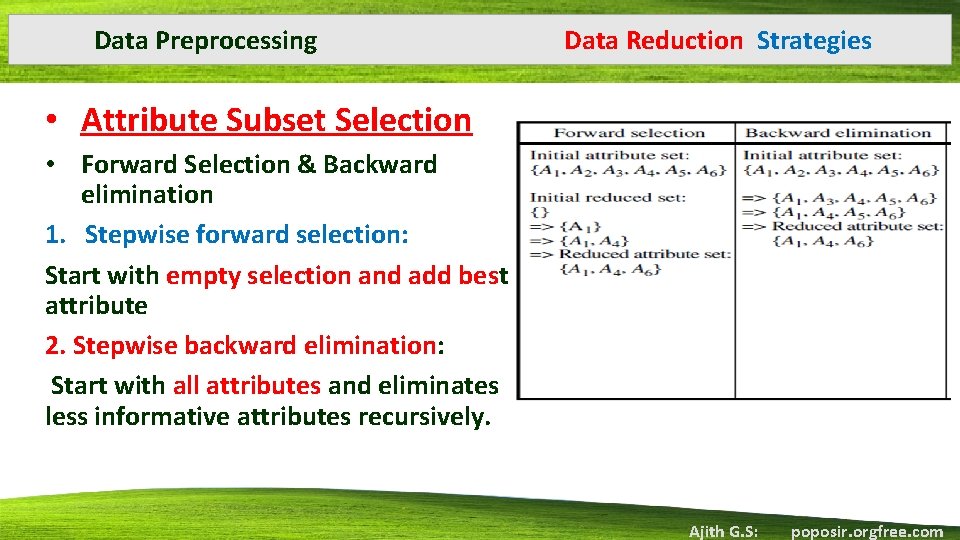

Attribute Subset Selection: Forward Selection and Backward Elimination
1. Stepwise forward selection: start with an empty set of attributes and, at each step, add the best of the remaining original attributes.
2. Stepwise backward elimination: start with the full set of attributes and, at each step, remove the least informative remaining attribute.
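Both greedy procedures can be sketched as follows, assuming a caller-supplied `score` function that rates an attribute subset (higher is better); in practice this might be a model's validation accuracy or a statistical significance measure. `toy_score` and its weights are hypothetical, chosen only so the result is easy to check by hand.

```python
from typing import Callable, FrozenSet, List, Sequence

Score = Callable[[FrozenSet[str]], float]

def forward_selection(attributes: Sequence[str], score: Score, max_attrs: int) -> List[str]:
    """Start empty; greedily add the attribute that most improves `score`."""
    selected: List[str] = []
    best = score(frozenset())
    while len(selected) < max_attrs:
        candidates = [a for a in attributes if a not in selected]
        if not candidates:
            break
        cand_score, cand = max((score(frozenset(selected + [a])), a) for a in candidates)
        if cand_score <= best:      # no candidate improves the subset: stop
            break
        selected.append(cand)
        best = cand_score
    return selected

def backward_elimination(attributes: Sequence[str], score: Score, min_attrs: int = 1) -> List[str]:
    """Start with all attributes; greedily drop the one whose removal helps most."""
    selected = list(attributes)
    best = score(frozenset(selected))
    while len(selected) > min_attrs:
        cand_score, worst = max(
            (score(frozenset(s for s in selected if s != a)), a) for a in selected
        )
        if cand_score <= best:      # every removal makes the score worse: stop
            break
        selected.remove(worst)
        best = cand_score
    return selected

# Hypothetical scoring: per-attribute usefulness minus a cost of 0.5 per attribute kept
WEIGHTS = {"age": 3.0, "income": 2.0, "zip": 0.1, "id": 0.0}

def toy_score(subset: FrozenSet[str]) -> float:
    return sum(WEIGHTS[a] for a in subset) - 0.5 * len(subset)

ATTRS = list(WEIGHTS)
print(forward_selection(ATTRS, toy_score, max_attrs=4))   # ['age', 'income']
print(backward_elimination(ATTRS, toy_score))             # ['age', 'income']
```

Both searches stop as soon as no single addition or removal improves the score; they never revisit an earlier choice, which is what makes them greedy.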

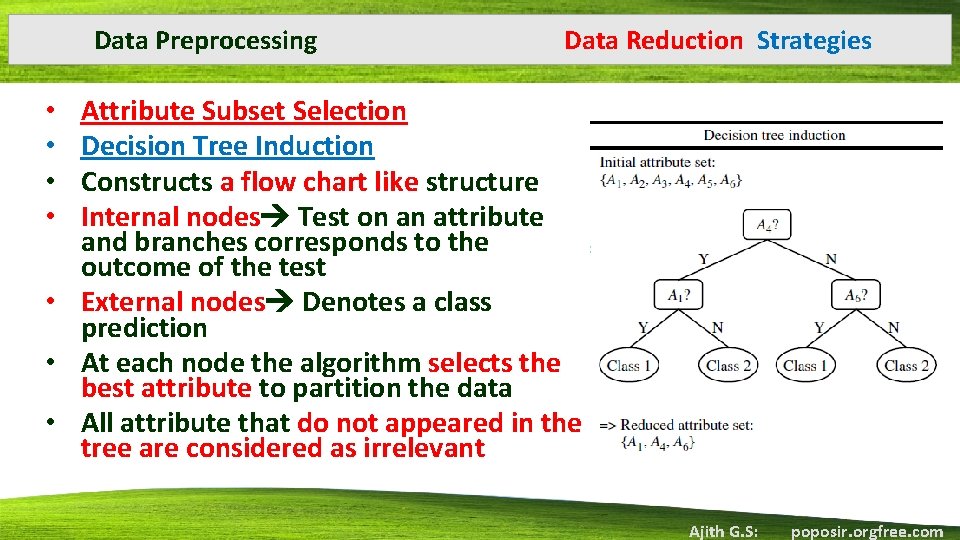

Attribute Subset Selection: Decision Tree Induction
• Constructs a flowchart-like structure in which each internal (non-leaf) node denotes a test on an attribute, each branch corresponds to an outcome of the test, and each external (leaf) node denotes a class prediction.
• At each node, the algorithm chooses the best attribute to partition the data.
• All attributes that do not appear in the tree are assumed to be irrelevant; the attributes that do appear form the reduced subset.
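A sketch of attribute selection by decision tree induction, assuming an ID3-style tree over categorical attributes with information gain as the measure of the "best" attribute (the slide does not fix the measure). The tree itself is not stored; the hypothetical `attributes_used` function only records which attributes are tested at internal nodes. The training rows are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Optional, Sequence, Set

Row = Dict[str, str]

def entropy(labels: Sequence[str]) -> float:
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())

def info_gain(rows: List[Row], labels: List[str], attr: str) -> float:
    groups: Dict[str, List[str]] = {}
    for row, y in zip(rows, labels):
        groups.setdefault(row[attr], []).append(y)
    remainder = sum(len(g) / len(rows) * entropy(g) for g in groups.values())
    return entropy(labels) - remainder

def attributes_used(rows: List[Row], labels: List[str], attrs: List[str],
                    used: Optional[Set[str]] = None) -> Set[str]:
    """Grow an ID3-style tree; return the attributes tested at internal nodes."""
    used = set() if used is None else used
    if len(set(labels)) <= 1 or not attrs:
        return used                                   # leaf: pure node or no attributes left
    best = max(attrs, key=lambda a: info_gain(rows, labels, a))
    if info_gain(rows, labels, best) <= 1e-12:
        return used                                   # no attribute helps: leaf
    used.add(best)
    rest = [a for a in attrs if a != best]
    for value in {row[best] for row in rows}:         # one branch per test outcome
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        attributes_used([rows[i] for i in idx], [labels[i] for i in idx], rest, used)
    return used

# Hypothetical training data: class is "yes" only for students with fair credit
ROWS = [
    {"student": "yes", "credit": "fair", "color": "red"},
    {"student": "yes", "credit": "fair", "color": "blue"},
    {"student": "yes", "credit": "excellent", "color": "red"},
    {"student": "no", "credit": "fair", "color": "blue"},
    {"student": "no", "credit": "excellent", "color": "red"},
    {"student": "no", "credit": "fair", "color": "red"},
]
LABELS = ["yes", "yes", "no", "no", "no", "no"]
print(attributes_used(ROWS, LABELS, ["student", "credit", "color"]))  # the set {'student', 'credit'}
```

Here "color" never appears at an internal node, so it would be dropped as irrelevant.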

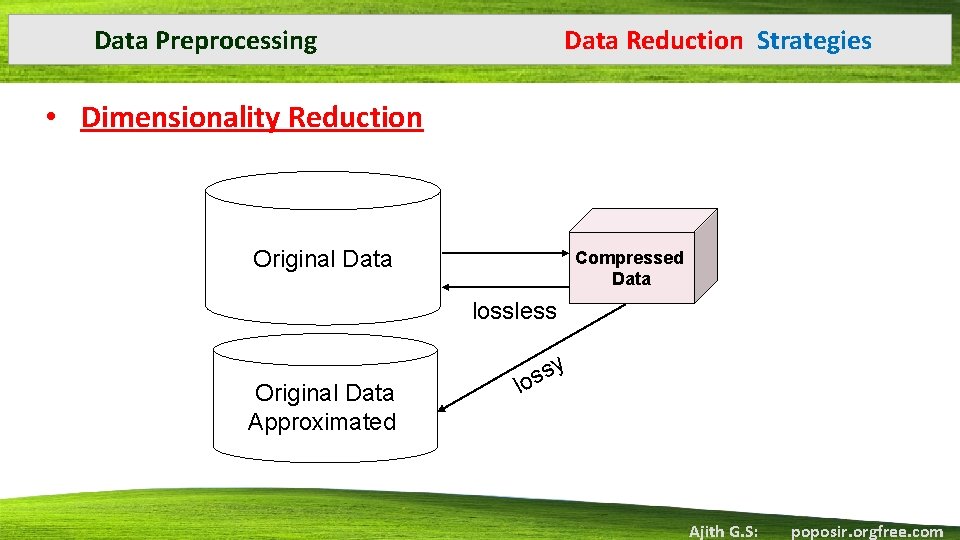

Dimensionality Reduction
• Data encoding or transformations are applied to obtain a reduced or compressed representation of the original data.
• Two types:
  • Lossless: the original data can be reconstructed from the compressed data without any loss of information. Lossless methods typically allow only limited manipulation of the data.
  • Lossy: only an approximation of the original data can be reconstructed.

Figure: original data is encoded as compressed data; lossless decoding recovers the original data, while lossy decoding recovers only an approximation of it.

Lossy Dimensionality Reduction
1. Wavelet transforms
2. Principal component analysis (PCA)

Lossy Dimensionality Reduction: 1. Wavelet Transforms
• The discrete wavelet transform (DWT) is a linear signal-processing technique that transforms a data vector X into a numerically different vector X′ of wavelet coefficients. The two vectors X and X′ have the same length.
• For data reduction, each tuple is treated as an n-dimensional data vector X = (x1, x2, …, xn).
• Since the wavelet-transformed data have the same length as the original data, the transform by itself does not reduce the data. A compressed approximation is obtained by storing only a small fraction of the strongest wavelet coefficients and setting the rest to 0.
• The resulting representation is very sparse, so operations on it are computationally very fast.

Wavelet Transforms (continued)
• The DWT can also be used for data cleaning: it removes noise without smoothing out the main features of the data.
• Given the stored coefficients, an approximation of the original data can be constructed by applying the inverse of the DWT used.
• Compared with the discrete Fourier transform (DFT), the DWT achieves better lossy compression: for the same number of retained coefficients, it gives a more accurate approximation of the original data.

Wavelet Transforms: The Pyramid Algorithm
• The general procedure is the hierarchical pyramid algorithm, which halves the data at each iteration.
• Method:
1. The length L of the input data vector must be an integer power of 2; pad the data vector with zeros as necessary.
2. Each transform involves applying two functions:
  • the first applies some data smoothing, such as a sum or weighted average;
  • the second performs a weighted difference, which brings out the detailed features of the data.

Wavelet Transforms: The Pyramid Algorithm (continued)
3. The two functions are applied to consecutive pairs of data points in X. This results in two sets of data of length L/2: a smoothed (low-frequency) version of the input and its high-frequency content.
4. The two functions are applied recursively to the data sets obtained in the previous loop, until the resulting data sets are of length 2.
5. Selected values from the data sets obtained in the above iterations are designated the wavelet coefficients of the transformed data.
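The pyramid steps can be sketched with the Haar wavelet, the simplest DWT (an assumption: the slides do not name a specific wavelet). The smoothing function is the pairwise average and the difference function is half the pairwise difference; this unnormalized form keeps the numbers easy to follow, whereas the orthonormal Haar transform divides by √2 instead of 2, which makes coefficient magnitudes from different levels directly comparable. Following the usual Haar convention, the sketch recurses only on the smoothed half, until a single average remains. `keep_strongest` is a hypothetical helper illustrating lossy compression.

```python
from typing import List

def pad_to_power_of_two(x: List[float]) -> List[float]:
    """Step 1: pad with zeros so the length L is a power of 2."""
    n = 1
    while n < len(x):
        n *= 2
    return list(x) + [0.0] * (n - len(x))

def haar_dwt(x: List[float]) -> List[float]:
    """Pyramid algorithm: halve the data at each level until one average remains."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of 2; pad with zeros first")
    approx, details = list(x), []
    while len(approx) > 1:
        pairs = list(zip(approx[0::2], approx[1::2]))  # consecutive pairs of data points
        detail = [(a - b) / 2 for a, b in pairs]        # weighted difference (high frequency)
        approx = [(a + b) / 2 for a, b in pairs]        # smoothing: pairwise average (low frequency)
        details = detail + details                      # coarser details go in front
    return approx + details

def haar_idwt(coeffs: List[float]) -> List[float]:
    """Inverse transform: rebuild each level from its averages and differences."""
    approx, pos = coeffs[:1], 1
    while pos < len(coeffs):
        detail = coeffs[pos:pos + len(approx)]
        approx = [v for s, d in zip(approx, detail) for v in (s + d, s - d)]
        pos += len(detail)
    return approx

def keep_strongest(coeffs: List[float], k: int) -> List[float]:
    """Lossy compression: keep the k largest-magnitude coefficients, zero the rest."""
    top = set(sorted(range(len(coeffs)), key=lambda i: -abs(coeffs[i]))[:k])
    return [c if i in top else 0.0 for i, c in enumerate(coeffs)]

X = [2, 2, 0, 2, 3, 5, 4, 4]
C = haar_dwt(X)
print(C)                                 # [2.75, -1.25, 0.5, 0.0, 0.0, -1.0, -1.0, 0.0]
print(haar_idwt(keep_strongest(C, 4)))   # a lossy approximation of X
```

Applying `haar_idwt` to the full coefficient vector reproduces X exactly; zeroing the weakest coefficients first gives the sparse, approximate representation described above.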

Lossy Dimensionality Reduction: 2. Principal Component Analysis (PCA), or the Karhunen-Loève (K-L) Method
• Finds a smaller projection of the data.
• Suppose the data to be reduced consist of tuples described by n attributes (dimensions). PCA searches for k n-dimensional orthogonal vectors, where k ≤ n, that can best be used to represent the data.
• PCA combines the essence of the attributes by creating an alternative, smaller set of variables.
• Works for numeric data only.
• Used when the number of dimensions is large.

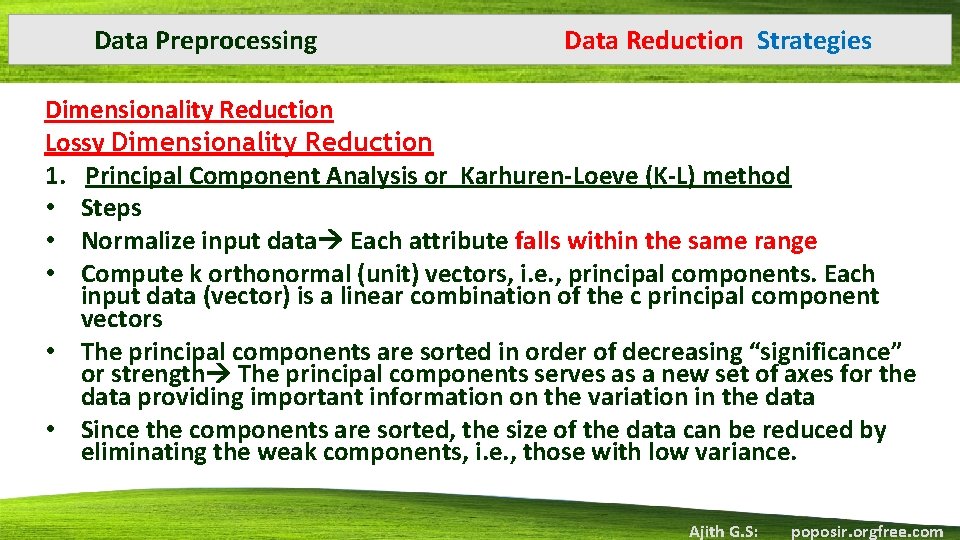

PCA: Steps
1. Normalize the input data so that each attribute falls within the same range.
2. Compute k orthonormal (unit) vectors, the principal components. Each input data vector is a linear combination of the k principal component vectors.
3. Sort the principal components in order of decreasing "significance" or strength. The principal components serve as a new set of axes for the data, providing important information about the variance in the data.
4. Because the components are sorted, the size of the data can be reduced by eliminating the weak components, that is, those with low variance.
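The four steps can be sketched with NumPy, assuming z-score normalization for step 1 and the eigenvectors of the covariance matrix as the orthonormal principal components (the standard construction). `pca_reduce` is a hypothetical helper name. Eigenvector signs are arbitrary, so projected values may come out negated without affecting the reduction.

```python
import numpy as np

def pca_reduce(X, k: int):
    """Project the rows of X (tuples x attributes) onto the k strongest principal components."""
    X = np.asarray(X, dtype=float)
    # Step 1: normalize each attribute; z-score normalization is an assumed choice here
    std = X.std(axis=0)
    std[std == 0] = 1.0                      # leave constant attributes unscaled
    Z = (X - X.mean(axis=0)) / std
    # Step 2: orthonormal principal components = eigenvectors of the covariance matrix
    eigvals, eigvecs = np.linalg.eigh(np.cov(Z, rowvar=False))
    # Step 3: sort components by decreasing variance ("significance")
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # Step 4: drop the weak components, keeping the first k
    components = eigvecs[:, :k]
    explained = eigvals / eigvals.sum()      # share of total variance per component
    return Z @ components, components, explained

# Two perfectly correlated attributes collapse onto a single component
X = np.array([[1.0, 2.0], [2.0, 4.0], [3.0, 6.0], [4.0, 8.0]])
Y, comps, ratio = pca_reduce(X, k=1)
print(Y.shape)                        # (4, 1)
print(round(float(ratio[0]), 6))      # 1.0
```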

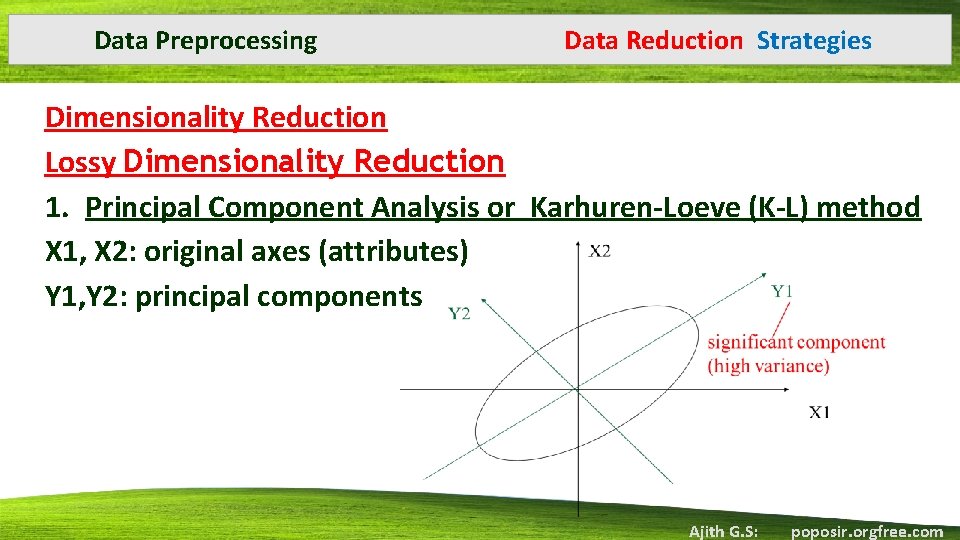

Figure: X1, X2 are the original axes (attributes); Y1, Y2 are the principal components.

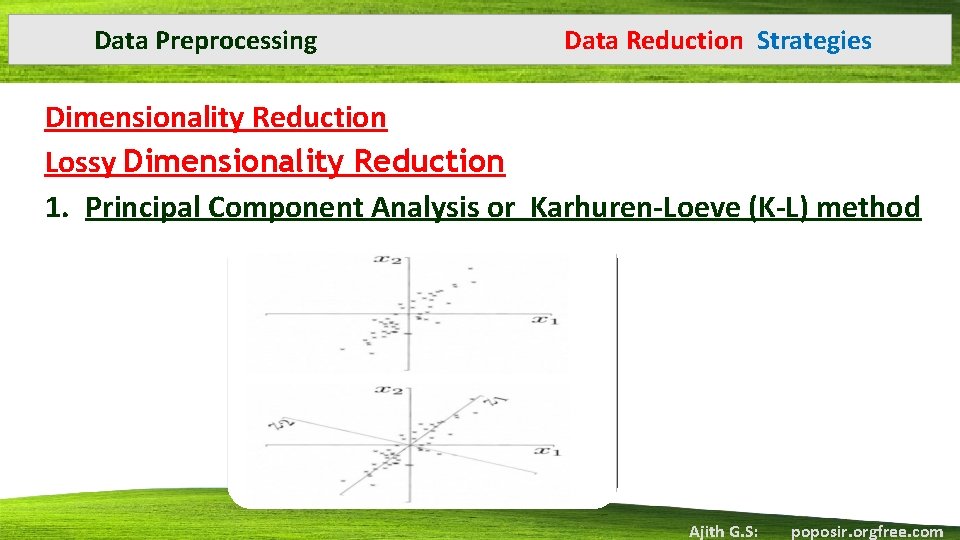


Numerosity Reduction
• Reduces the data volume by choosing alternative, smaller forms of data representation.
• Techniques fall into two categories:
  • Parametric: assume the data fit some model, estimate the model parameters, store only the parameters, and discard the data. Examples: regression and log-linear models.
  • Non-parametric: no model is assumed. Major techniques: histograms, clustering, and sampling.

Numerosity Reduction: Parametric Methods (Regression and Log-Linear Models)
• Linear regression: the data are modeled to fit a straight line. A numeric attribute y is modeled as a linear function of another attribute x, y = wx + b, so only the coefficients w and b need to be stored.
• Multiple linear regression: an extension of (simple) linear regression that allows an attribute to be modeled as a linear function of two or more attributes.
• Log-linear models: given a set of tuples in n dimensions, each tuple can be considered a point in n-dimensional space. A log-linear model estimates the probability of each point in a multidimensional space for a set of discretized attributes, based on a smaller subset of dimensional combinations.
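For simple linear regression, the least-squares estimates of w and b have closed forms: w = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and b = ȳ − w·x̄. A minimal sketch that reduces a set of (x, y) points to those two stored numbers; `fit_line` is a hypothetical helper and the sample points are hypothetical, lying exactly on y = 2x + 1.

```python
from typing import Sequence, Tuple

def fit_line(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares estimates of w and b in y = w*x + b."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    w = sxy / sxx
    b = y_bar - w * x_bar
    return w, b

x = [1, 2, 3, 4]
y = [3, 5, 7, 9]
w, b = fit_line(x, y)
print(w, b)   # 2.0 1.0
```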

Numerosity Reduction: Non-Parametric Methods, Histograms
• A popular data reduction technique that uses binning to approximate data distributions.
• Divide the data distribution of attribute A into disjoint subsets, or buckets, and store the average (or sum) for each bucket.
• If each bucket represents only a single attribute-value/frequency pair, the buckets are called singleton buckets.
• More often, buckets represent continuous ranges of the given attribute.

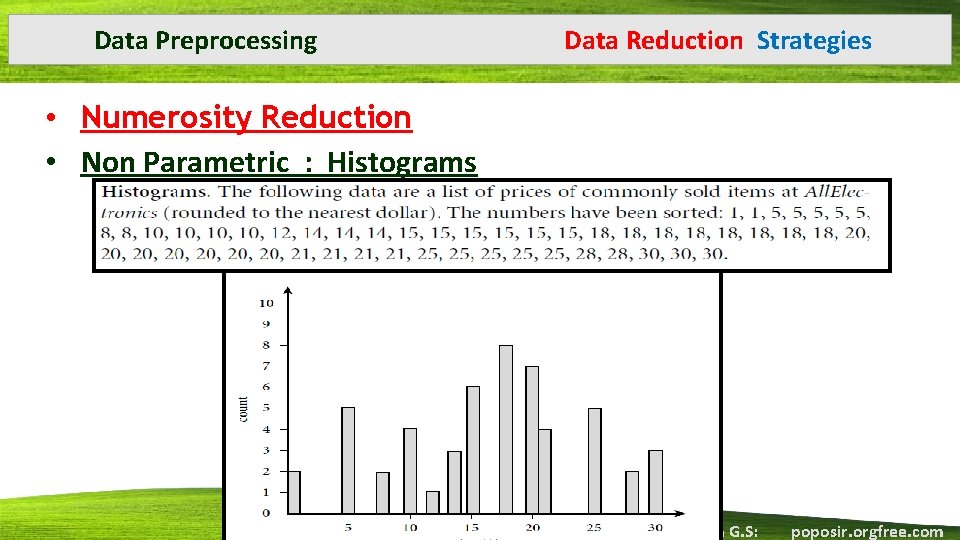


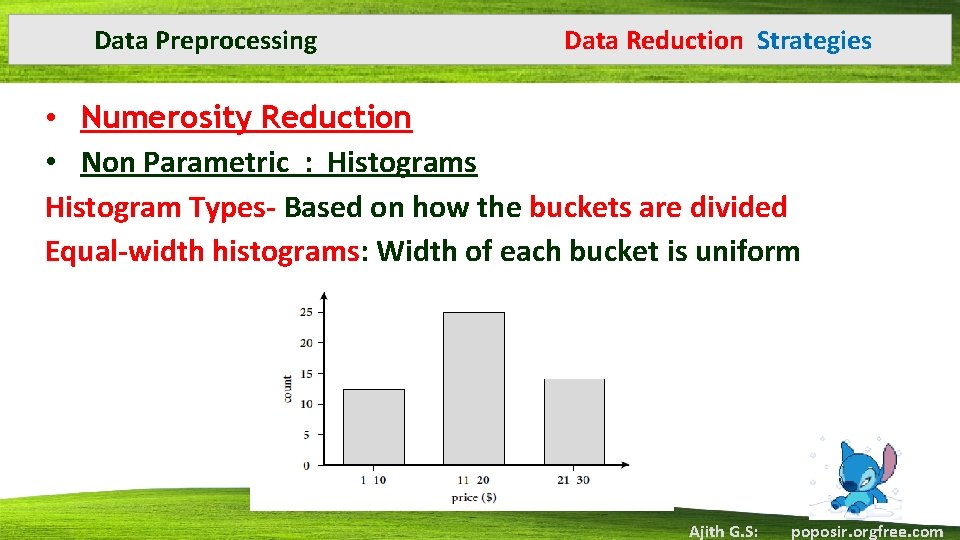

Histograms: Types
• Histogram types differ in how the buckets are determined.
• Equal-width: the width of each bucket range is uniform.

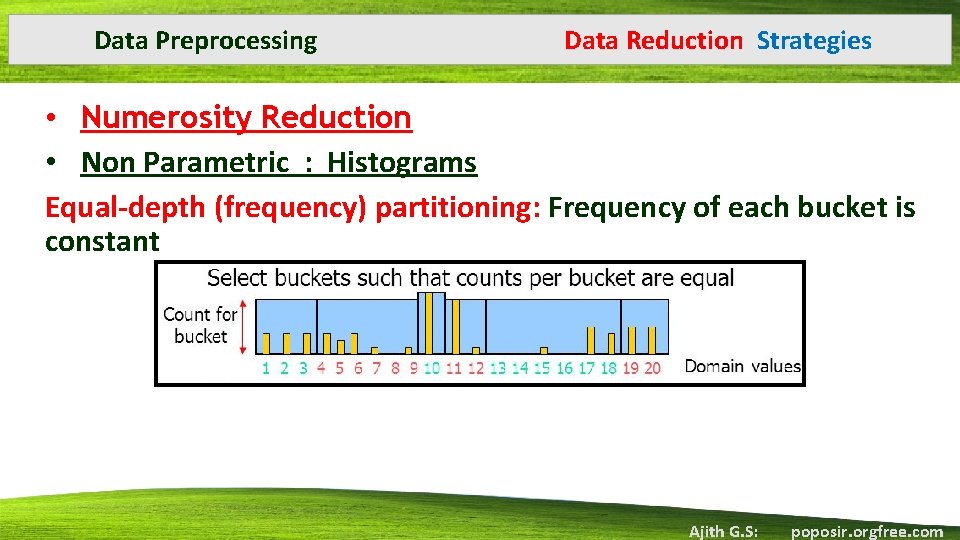

Histograms: Equal-Depth
• Equal-depth (equal-frequency): the buckets are chosen so that the frequency of each bucket is constant, that is, each bucket contains roughly the same number of contiguous data samples.
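The two partitioning rules can be compared on the same data with a short sketch. It reuses the 13 values from the MaxDiff example in these notes and stores, as the reduced form, only each bucket's count and mean. `equal_width`, `equal_depth`, and `bucket_summary` are hypothetical helper names; when the values do not divide evenly, the sketch gives the extra values to the first equal-depth buckets (an arbitrary choice).

```python
from statistics import mean
from typing import List, Sequence, Tuple

def equal_width(values: Sequence[float], n_buckets: int) -> List[List[float]]:
    """Buckets whose value ranges all have the same width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_buckets
    groups: List[List[float]] = [[] for _ in range(n_buckets)]
    for v in values:
        i = min(int((v - lo) / width), n_buckets - 1) if width > 0 else 0
        groups[i].append(v)              # the maximum value falls into the last bucket
    return groups

def equal_depth(values: Sequence[float], n_buckets: int) -> List[List[float]]:
    """Buckets holding (nearly) the same number of sorted values."""
    data = sorted(values)
    q, r = divmod(len(data), n_buckets)
    groups, start = [], 0
    for i in range(n_buckets):
        size = q + (1 if i < r else 0)
        groups.append(data[start:start + size])
        start += size
    return groups

def bucket_summary(groups: List[List[float]]) -> List[Tuple[int, float]]:
    """Reduced representation: (count, mean) per non-empty bucket."""
    return [(len(g), mean(g)) for g in groups if g]

DATA = [1, 1, 4, 5, 5, 7, 9, 14, 16, 18, 27, 30, 32]
print([len(g) for g in equal_width(DATA, 3)])   # [7, 3, 3]
print(bucket_summary(equal_depth(DATA, 3)))     # [(5, 3.2), (4, 11.5), (4, 26.75)]
```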

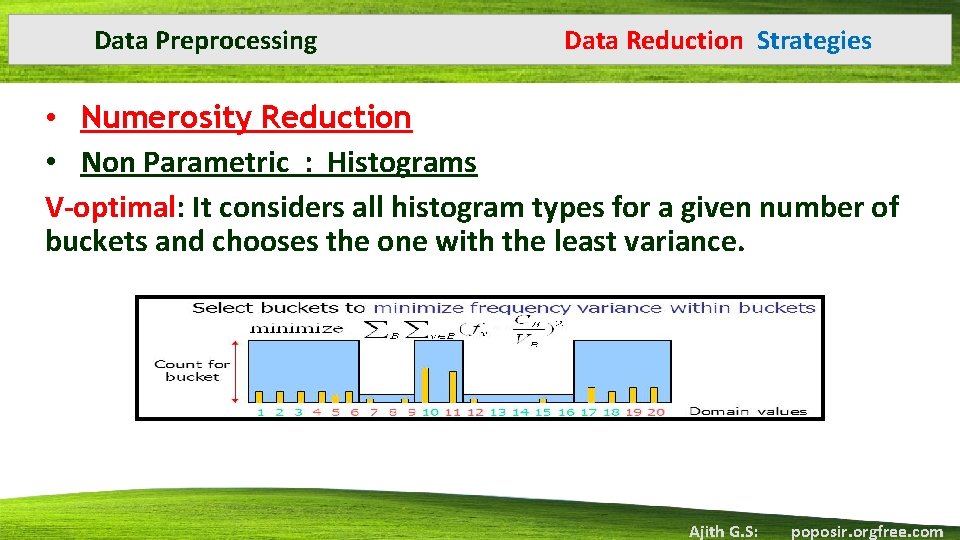

Histograms: V-Optimal
• V-optimal: of all possible histograms with a given number of buckets, the V-optimal histogram is the one with the least variance.

Histograms: MaxDiff
• MaxDiff: after sorting the data to be approximated, place a bucket border between each pair of adjacent values whose difference is among the β − 1 largest, where β is the desired number of buckets.
• Example: split 1, 1, 4, 5, 5, 7, 9, 14, 16, 18, 27, 30, 32 into three buckets. The two largest differences are 27 − 18 = 9 and 14 − 9 = 5, giving the buckets {1, 1, 4, 5, 5, 7, 9}, {14, 16, 18}, and {27, 30, 32}.
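The MaxDiff rule and its worked example can be checked with a small sketch; `maxdiff_buckets` is a hypothetical helper, and ties between equal gaps are broken in favor of the earlier position (an arbitrary choice).

```python
from typing import List, Sequence

def maxdiff_buckets(values: Sequence[float], n_buckets: int) -> List[List[float]]:
    """Cut the sorted data at its n_buckets - 1 largest adjacent differences."""
    data = sorted(values)
    gaps = [(data[i + 1] - data[i], i) for i in range(len(data) - 1)]
    # Largest gaps first; ties broken by position (earlier first)
    largest = sorted(gaps, key=lambda g: (-g[0], g[1]))[: n_buckets - 1]
    cuts = sorted(i + 1 for _, i in largest)   # a border falls after position i
    buckets, start = [], 0
    for c in cuts:
        buckets.append(data[start:c])
        start = c
    buckets.append(data[start:])
    return buckets

DATA = [1, 1, 4, 5, 5, 7, 9, 14, 16, 18, 27, 30, 32]
print(maxdiff_buckets(DATA, 3))   # [[1, 1, 4, 5, 5, 7, 9], [14, 16, 18], [27, 30, 32]]
```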

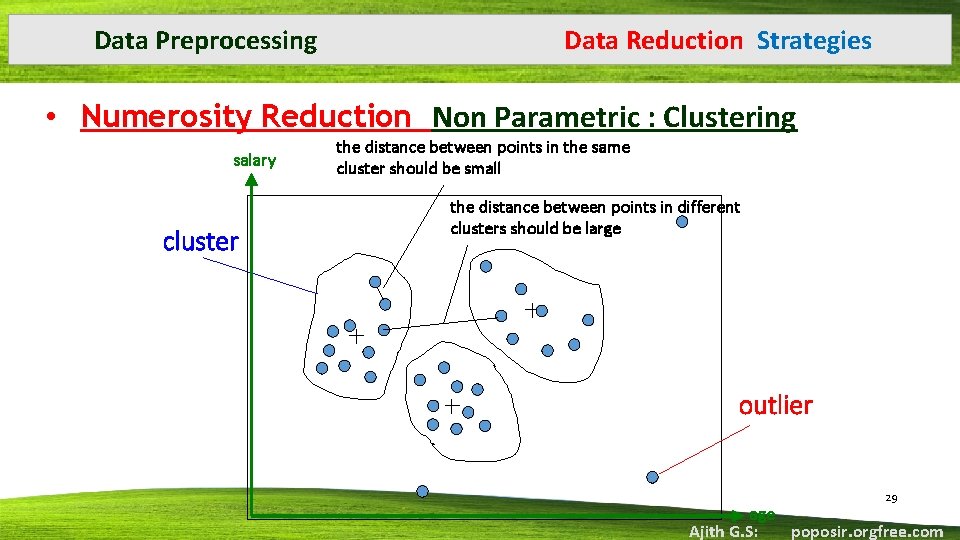

Numerosity Reduction: Non-Parametric Methods, Clustering
• Treats data tuples as objects and partitions them into groups, or clusters, so that objects within a cluster are similar to one another and dissimilar to objects in other clusters.
• Cluster quality can be measured by the diameter: the maximum distance between any two objects in the cluster.
• Centroid distance is an alternative quality measure: the average distance of each cluster object from the cluster centroid.
• For data reduction, the cluster representations replace the actual data.
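The two quality measures, and the replacement of a cluster by its representative, can be sketched as follows. This assumes Euclidean distance (`math.dist`, Python 3.8+) and uses the centroid as the stored cluster representation; the four (age, salary-in-thousands) points are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Tuple

Point = Tuple[float, ...]

def centroid(cluster: List[Point]) -> Point:
    """Coordinate-wise mean of the cluster's objects."""
    return tuple(sum(coord) / len(cluster) for coord in zip(*cluster))

def diameter(cluster: List[Point]) -> float:
    """Maximum distance between any two objects in the cluster."""
    return max((math.dist(p, q) for p, q in combinations(cluster, 2)), default=0.0)

def centroid_distance(cluster: List[Point]) -> float:
    """Average distance of the cluster's objects from the cluster centroid."""
    c = centroid(cluster)
    return sum(math.dist(p, c) for p in cluster) / len(cluster)

# Hypothetical cluster of (age, salary-in-thousands) points
cluster = [(30.0, 40.0), (33.0, 44.0), (30.0, 44.0), (33.0, 40.0)]
print(diameter(cluster))            # 5.0
print(centroid_distance(cluster))   # 2.5
print(centroid(cluster))            # (31.5, 42.0), stored in place of the 4 tuples
```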

Figure: points plotted by age and salary, grouped into clusters, with one outlier. The distance between points in the same cluster should be small; the distance between points in different clusters should be large.

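Numerosity Reduction: Non-Parametric Methods, Sampling
• Allows a large data set D to be represented by a much smaller random sample (subset) of the data.
• Methods for sampling D:
  • Simple random sample without replacement (SRSWOR): draw s of the N tuples in D, where every tuple is equally likely to be drawn and no tuple is drawn twice.
  • Simple random sample with replacement (SRSWR): as SRSWOR, but each drawn tuple is put back into D, so it may be drawn again.
  • Cluster sample: group the tuples in D into M disjoint clusters and take a simple random sample of s clusters, with s < M.
  • Stratified sample: divide D into disjoint parts called strata and take a simple random sample from each stratum.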
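A sketch of the four sampling schemes using Python's standard `random` module (`Random.sample` draws without replacement, `Random.choices` with replacement). The proportional allocation in `stratified_sample`, its rounding rule, and the toy data set of 100 integers are assumptions made for illustration.

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, List, Sequence, TypeVar

T = TypeVar("T")

def srswor(D: Sequence[T], s: int, rng: random.Random) -> List[T]:
    """s distinct tuples; every tuple equally likely to be drawn."""
    return rng.sample(list(D), s)

def srswr(D: Sequence[T], s: int, rng: random.Random) -> List[T]:
    """s draws; each drawn tuple is put back, so repeats are possible."""
    return rng.choices(list(D), k=s)

def cluster_sample(clusters: Sequence[Sequence[T]], s: int, rng: random.Random) -> List[T]:
    """SRSWOR of s whole clusters; every tuple of a chosen cluster is kept."""
    return [t for c in rng.sample(list(clusters), s) for t in c]

def stratified_sample(D: Sequence[T], stratum: Callable[[T], Hashable],
                      fraction: float, rng: random.Random) -> List[T]:
    """SRSWOR inside each stratum, sized in proportion to the stratum (assumed allocation)."""
    strata = defaultdict(list)
    for t in D:
        strata[stratum(t)].append(t)
    sample: List[T] = []
    for group in strata.values():
        k = max(1, round(fraction * len(group)))  # keep at least one tuple per stratum
        sample.extend(rng.sample(group, k))
    return sample

rng = random.Random(42)
D = list(range(100))
blocks = [D[i:i + 10] for i in range(0, 100, 10)]            # 10 disjoint clusters of 10 tuples
print(len(srswor(D, 10, rng)), len(srswr(D, 10, rng)))       # 10 10
print(len(cluster_sample(blocks, 2, rng)))                   # 20
print(len(stratified_sample(D, lambda t: t % 4, 0.2, rng)))  # 20 (5 per stratum)
```

Stratified sampling guarantees that every stratum is represented, which helps when some strata are much smaller than others.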

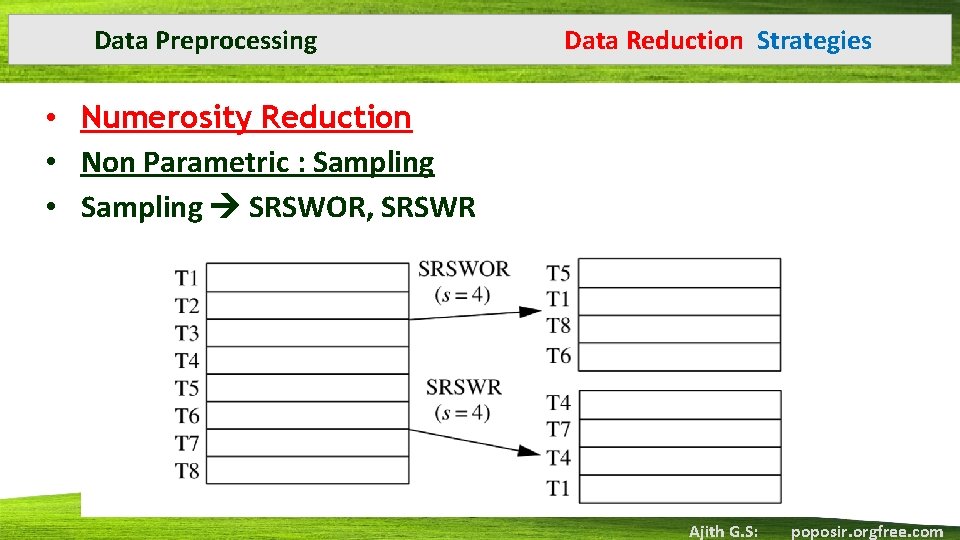

Figure: sampling by SRSWOR and SRSWR.

Data Discretization
• Reduces the number of values for a continuous attribute by dividing the range of the attribute into intervals.
• Interval labels then replace the actual data values.
• Discretization techniques can be categorized by:
  • Whether they use class information:
    1) Supervised: uses class information
    2) Unsupervised: does not use class information
  • Which direction they proceed:
    1) Top-down (splitting)
    2) Bottom-up (merging)

Data Discretization: Splitting and Merging
• Top-down (splitting):
  • Finds one or a few points (split points or cut points) to split the entire attribute range.
  • Repeats this process recursively on the resulting intervals.
• Bottom-up (merging):
  • Starts by considering all of the continuous values as potential split points.
  • Removes some of them by merging neighboring values to form intervals.
  • Then applies this process recursively to the resulting intervals.

Concept Hierarchy Generation
• Reduces the data by collecting and replacing low-level concepts (such as numeric values for age) with higher-level concepts (such as youth, middle-aged, or senior).
• A concept hierarchy for a numeric attribute defines a discretization of the attribute.
• Detail may be lost, but the generalized data are more meaningful and the mining results are easier to interpret.
• Applied as a preprocessing step.
• Several concept hierarchies can be defined for the same attribute.

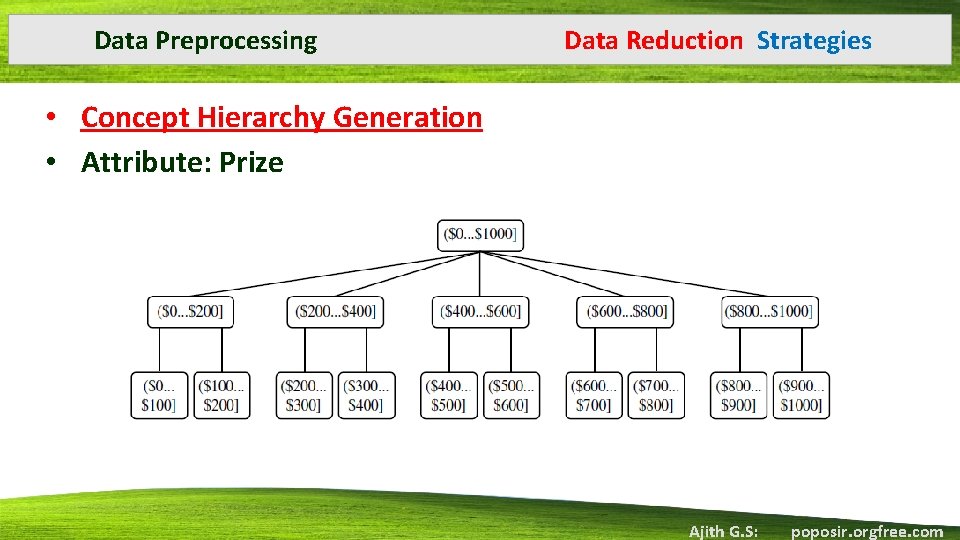

Figure: a concept hierarchy for the attribute price.

Concept Hierarchy Generation for Numeric Data
• Binning
• Histogram analysis
• Entropy-based discretization
• χ² merging (ChiMerge)
• Cluster analysis
• Discretization by intuitive (natural) partitioning

Concept Hierarchy Generation for Numeric Data: Binning and Histogram Analysis
• Binning:
  • An unsupervised discretization technique that follows a top-down splitting approach.
  • The sorted values are distributed into a number of buckets, or bins, and each value is then replaced by its bin mean or median.
• Histogram analysis:
  • An unsupervised discretization technique.
  • Partitions the values of an attribute A into disjoint ranges called buckets.
  • A partitioning algorithm can be applied recursively to each bucket to generate a multilevel concept hierarchy.
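A sketch of smoothing by bin means or bin medians, assuming equal-frequency bins of a fixed depth (the slide leaves the bin-forming rule open; equal-width bins would work the same way). `smooth_by_bins` is a hypothetical helper and the price values are illustrative.

```python
from statistics import mean, median
from typing import Callable, List, Sequence

def smooth_by_bins(values: Sequence[float], depth: int,
                   stat: Callable[[List[float]], float] = mean) -> List[float]:
    """Sort, split into bins of `depth` values, replace each value by its bin statistic."""
    data = sorted(values)
    out: List[float] = []
    for start in range(0, len(data), depth):
        bin_ = data[start:start + depth]
        out.extend([stat(bin_)] * len(bin_))
    return out

prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]
print(smooth_by_bins(prices, 3))               # [9, 9, 9, 22, 22, 22, 29, 29, 29]
print(smooth_by_bins(prices, 3, stat=median))  # [8, 8, 8, 21, 21, 21, 28, 28, 28]
```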

Concept Hierarchy Generation for Numeric Data: Entropy-Based Discretization
• A commonly used discretization method.
• A supervised, top-down splitting technique: it uses class information in its calculation and determination of split points.
• It selects as a split point the value of attribute A that gives the minimum expected information requirement (entropy).

Entropy-Based Discretization: Method
Let D consist of data tuples defined by a set of attributes and a class-label attribute.
1. Select a split point and partition the range of A into two intervals, A ≤ split-point and A > split-point (binary discretization).

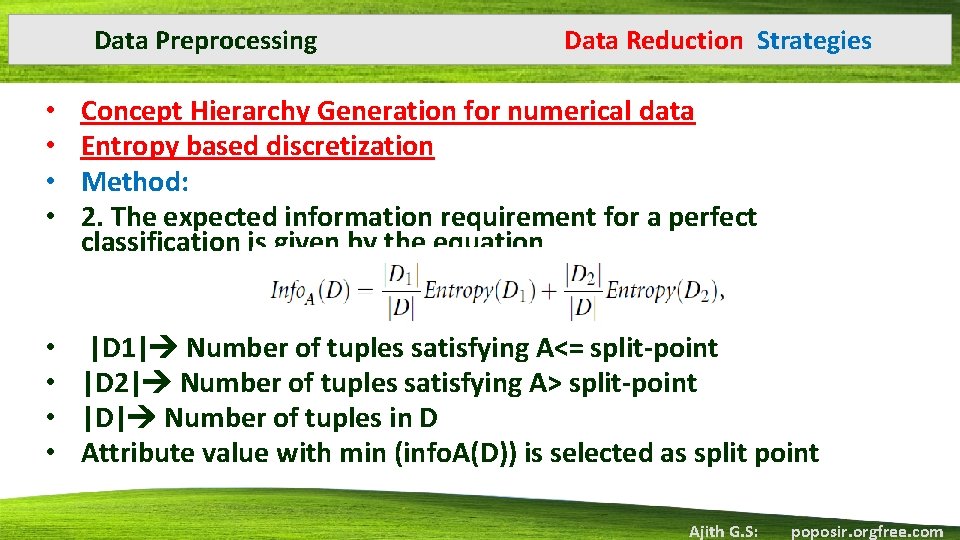

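Entropy-Based Discretization: Method (continued)
2. The expected information still required to classify a tuple in D after partitioning on A at the split point is

$$\mathrm{Info}_A(D) = \frac{|D_1|}{|D|}\,\mathrm{Entropy}(D_1) + \frac{|D_2|}{|D|}\,\mathrm{Entropy}(D_2)$$

where
• $|D_1|$ is the number of tuples satisfying A ≤ split-point,
• $|D_2|$ is the number of tuples satisfying A > split-point,
• $|D|$ is the number of tuples in D, and
• $\mathrm{Entropy}(D_1) = -\sum_{i=1}^{m} p_i \log_2 p_i$, with m classes and $p_i$ the probability of class $C_i$ in $D_1$ ($\mathrm{Entropy}(D_2)$ is defined likewise).
• The value of A with the minimum $\mathrm{Info}_A(D)$ is selected as the split point.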
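A sketch of choosing one binary split point, computing for each candidate the weighted entropy (|D1|/|D|)·Entropy(D1) + (|D2|/|D|)·Entropy(D2). It assumes the candidate split points are the distinct values of A (a common alternative is the midpoints between adjacent values) and that A has at least two distinct values. The helper names and the sample data are hypothetical.

```python
import math
from collections import Counter
from typing import Sequence, Tuple

def entropy(labels: Sequence[str]) -> float:
    """Entropy = -sum_i p_i log2 p_i over the class distribution of `labels`."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())

def info_a(values: Sequence[float], labels: Sequence[str], split: float) -> float:
    """Weighted entropy of D1 (A <= split) and D2 (A > split)."""
    d1 = [c for v, c in zip(values, labels) if v <= split]
    d2 = [c for v, c in zip(values, labels) if v > split]
    n = len(labels)
    return len(d1) / n * entropy(d1) + len(d2) / n * entropy(d2)

def best_split(values: Sequence[float], labels: Sequence[str]) -> Tuple[float, float]:
    """Return (Info_A(D), split point) for the candidate with the minimum Info_A(D)."""
    candidates = sorted(set(values))[:-1]      # splitting at the maximum would leave D2 empty
    return min((info_a(values, labels, s), s) for s in candidates)

values = [1, 2, 3, 4, 5, 6]
labels = ["lo", "lo", "lo", "hi", "hi", "hi"]
info, split = best_split(values, labels)
print(split, info == 0)   # 3 True
```

Here the split at 3 separates the two classes perfectly, so the remaining information requirement is zero.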

Entropy-Based Discretization: Method (continued)
3. The process of determining a split point is applied recursively to each resulting partition until some stopping criterion is met, for example when the minimum information requirement over all candidate split points falls below a small threshold, or when the number of intervals exceeds a maximum.

Concept Hierarchy Generation for Numeric Data: Interval Merging by χ² Analysis (ChiMerge)
• A supervised, bottom-up approach.
• Finds the best neighboring intervals and merges them to form larger intervals. The idea is that two adjacent intervals with a similar distribution of classes should be merged into one.
• Method:
  • Initially, each distinct value of the attribute is in a separate interval.
  • χ² tests are performed for every pair of adjacent intervals, and the adjacent intervals with the least χ² value are merged, because a low χ² value indicates a similar class distribution.
  • Merging is repeated until a stopping condition is met (for example, a χ² threshold or a maximum number of intervals).
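A sketch of ChiMerge that uses a maximum number of intervals as the stopping condition (a χ² threshold would be the other option listed). The χ² statistic is Pearson's, computed on the 2 × m table of class counts of two adjacent intervals. `chi2` and `chimerge` are hypothetical helper names and the data are illustrative.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

def chi2(a: Counter, b: Counter) -> float:
    """Pearson chi-square statistic for the 2 x m table of class counts of two intervals."""
    n_a, n_b = sum(a.values()), sum(b.values())
    n = n_a + n_b
    stat = 0.0
    for c in set(a) | set(b):
        col = a[c] + b[c]                      # tuples of class c in both intervals
        for row, n_row in ((a, n_a), (b, n_b)):
            expected = n_row * col / n
            stat += (row[c] - expected) ** 2 / expected
    return stat

def chimerge(values: Sequence[float], labels: Sequence[str],
             max_intervals: int) -> List[Tuple[float, float]]:
    """Merge adjacent intervals with the lowest chi-square until max_intervals remain."""
    counts: Dict[float, Counter] = {}
    for v, c in zip(values, labels):
        counts.setdefault(v, Counter())[c] += 1
    # Initially, each distinct value is its own interval: [low, high, class counts]
    intervals = [[v, v, counts[v]] for v in sorted(counts)]
    while len(intervals) > max_intervals:
        scores = [chi2(intervals[i][2], intervals[i + 1][2]) for i in range(len(intervals) - 1)]
        i = scores.index(min(scores))          # most similar adjacent pair
        low, _, ca = intervals[i]
        _, high, cb = intervals[i + 1]
        intervals[i:i + 2] = [[low, high, ca + cb]]
    return [(low, high) for low, high, _ in intervals]

values = [1, 2, 3, 4, 5, 6]
labels = ["a", "a", "a", "b", "b", "b"]
print(chimerge(values, labels, max_intervals=2))   # [(1, 3), (4, 6)]
```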

Concept Hierarchy Generation for Numeric Data: Cluster Analysis
• Discretizes a numeric attribute by partitioning its values into clusters or groups.
• Clustering takes the distribution of attribute A and the closeness of its data points into account.
• Can be used to generate a concept hierarchy:
  • top-down splitting: each cluster may be further decomposed into subclusters, forming a lower level of the hierarchy;
  • bottom-up merging: neighboring clusters are grouped together, forming a higher level of the hierarchy.
• Clusters form the nodes of the concept hierarchy.

Concept Hierarchy Generation for Numeric Data: Natural Partitioning
• Partitions numeric data into relatively uniform, "natural" intervals; for example, [50, 60] is easier to read than [51.223, 60.812].
• The 3-4-5 rule is used to partition the data:
  • If an interval covers 3, 6, 7, or 9 distinct values at the most significant digit, partition the range into 3 intervals (3 equal-width intervals for 3, 6, and 9; 3 intervals grouped 2-3-2 for 7).
  • If it covers 2, 4, or 8 distinct values at the most significant digit, partition the range into 4 equal-width intervals.
  • If it covers 1, 5, or 10 distinct values at the most significant digit, partition the range into 5 equal-width intervals.
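A sketch of one level of the 3-4-5 rule, assuming `low` and `high` have already been rounded to the most significant digit and that `unit` is the place value of that digit (for example 1,000,000); deriving these from raw data is left out. `three_four_five` is a hypothetical helper that returns the interval boundaries.

```python
from typing import List

def three_four_five(low: float, high: float, unit: float) -> List[float]:
    """One level of the 3-4-5 rule; returns the interval boundaries.

    Assumes low and high are already rounded to the most significant digit,
    whose place value is `unit`.
    """
    distinct = round((high - low) / unit)    # distinct values at the most significant digit
    if distinct in (3, 6, 9):
        parts = 3
    elif distinct == 7:                      # 3 intervals grouped 2-3-2
        return [low, low + 2 * unit, low + 5 * unit, high]
    elif distinct in (2, 4, 8):
        parts = 4
    elif distinct in (1, 5, 10):
        parts = 5
    else:
        raise ValueError(f"3-4-5 rule does not cover {distinct} distinct values")
    step = (high - low) / parts
    return [low + i * step for i in range(parts + 1)]

print(three_four_five(-1_000_000, 2_000_000, 1_000_000))  # [-1000000.0, 0.0, 1000000.0, 2000000.0]
print(three_four_five(0, 70, 10))                         # [0, 20, 50, 70]
```

Applying the same function to each resulting interval would produce the lower levels of the hierarchy.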
