Data Mutation Testing: A Method for Automated Generation of Structurally Complex Test Cases

- Slides: 42

Data Mutation Testing: A Method for Automated Generation of Structurally Complex Test Cases

Hong Zhu
Dept. of Computing and Electronics, Oxford Brookes Univ., Oxford, OX33 1HX, UK
Email: hzhu@brookes.ac.uk
Aug./Sept. 2009

Outline

- Motivation
  - Overview of existing work on software test case generation
  - The challenges to software testing
- The Data Mutation Testing Method
  - Basic ideas
  - Process
  - Measurements
- A case study
  - Subject software under test
  - The mutation operators
  - Experiment process
  - Main results
- Perspectives and future work
  - Potential applications
  - Integration with other black-box testing methods

Motivation

- Test case generation
  - Needs to meet multiple goals:
    - Reality: to represent real operation of the system
    - Coverage: functions, program code, input/output data space, and their combinations
    - Efficiency: no overkill, easy to execute, etc.
    - Effectiveness: capable of detecting faults, which implies that the correctness of the program's output is easy to check
    - External usefulness: helps with debugging, reliability estimation, etc.
  - Has a huge impact on test effectiveness and efficiency
  - Is one of the most labour-intensive tasks in practice

Existing Work

- Program-based test case generation
  - Static: analysis of code without execution, e.g. symbolic execution
    - Path oriented: Howden, W. E. (1975, 1977, 1978); Ramamoorthy, C., Ho, S. and Chen, W. (1976); King, J. (1975); Clarke, L. (1976); Xie, T., Marinov, D. and Notkin, D. (2004); Zhang, J. (2004); Xu, Z. and Zhang, J. (2006)
    - Goal oriented: DeMillo, R. A., Guindi, D. S., McCracken, W. M., Offutt, A. J. and King, K. N. (1988); Pargas, R. P., Harrold, M. J. and Peck, R. R. (1999); Gupta, N., Mathur, A. P. and Soffa, M. L. (2000)
  - Dynamic: through execution of the program: Korel, B. (1990); Beydeda, S. and Gruhn, V. (2003)
  - Hybrid: combination of dynamic execution with symbolic execution, e.g. concolic techniques: Godefroid, P., Klarlund, N. and Sen, K. (2005)
  - Techniques:
    - Constraint solvers
    - Heuristic search, e.g. genetic algorithms: McMinn, P. and Holcombe, M. (2003); survey: McMinn, P. (2004)

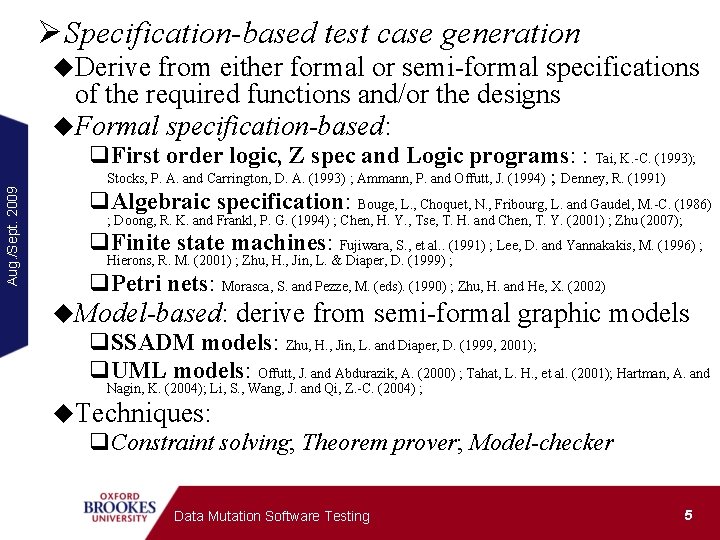

- Specification-based test case generation
  - Derived from either formal or semi-formal specifications of the required functions and/or the designs
  - Formal specification-based:
    - First-order logic, Z specifications and logic programs: Tai, K.-C. (1993); Stocks, P. A. and Carrington, D. A. (1993); Ammann, P. and Offutt, J. (1994); Denney, R. (1991)
    - Algebraic specifications: Bouge, L., Choquet, N., Fribourg, L. and Gaudel, M.-C. (1986); Doong, R. K. and Frankl, P. G. (1994); Chen, H. Y., Tse, T. H. and Chen, T. Y. (2001); Zhu (2007)
    - Finite state machines: Fujiwara, S., et al. (1991); Lee, D. and Yannakakis, M. (1996); Hierons, R. M. (2001); Zhu, H., Jin, L. and Diaper, D. (1999)
    - Petri nets: Morasca, S. and Pezze, M. (eds) (1990); Zhu, H. and He, X. (2002)
  - Model-based: derived from semi-formal graphic models
    - SSADM models: Zhu, H., Jin, L. and Diaper, D. (1999, 2001)
    - UML models: Offutt, J. and Abdurazik, A. (2000); Tahat, L. H., et al. (2001); Hartman, A. and Nagin, K. (2004); Li, S., Wang, J. and Qi, Z.-C. (2004)
  - Techniques:
    - Constraint solving; theorem provers; model checkers

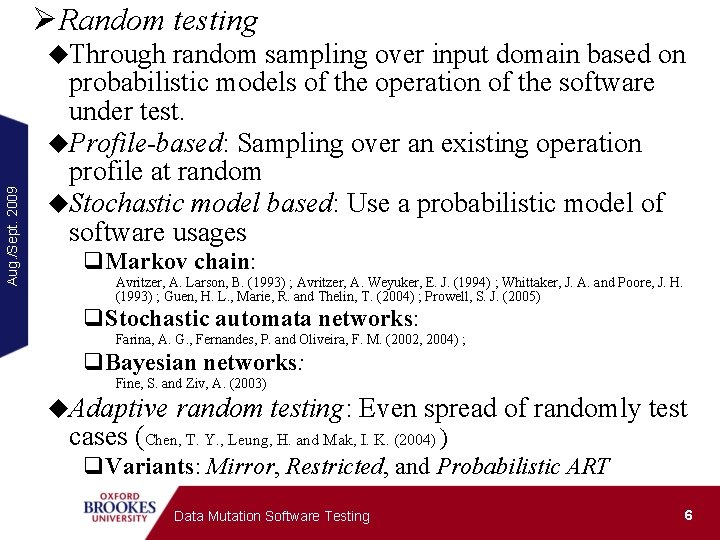

- Random testing
  - Random sampling over the input domain, based on probabilistic models of the operation of the software under test
  - Profile-based: sampling at random over an existing operational profile
  - Stochastic model-based: uses a probabilistic model of software usage
    - Markov chains: Avritzer, A. and Larson, B. (1993); Avritzer, A. and Weyuker, E. J. (1994); Whittaker, J. A. and Poore, J. H. (1993); Guen, H. L., Marie, R. and Thelin, T. (2004); Prowell, S. J. (2005)
    - Stochastic automata networks: Farina, A. G., Fernandes, P. and Oliveira, F. M. (2002, 2004)
    - Bayesian networks: Fine, S. and Ziv, A. (2003)
  - Adaptive random testing: even spread of random test cases (Chen, T. Y., Leung, H. and Mak, I. K. (2004))
    - Variants: Mirror, Restricted, and Probabilistic ART

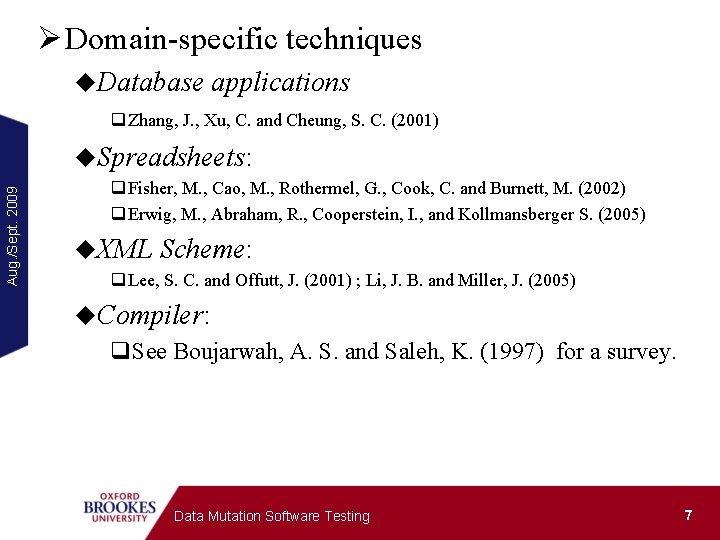

- Domain-specific techniques
  - Database applications:
    - Zhang, J., Xu, C. and Cheung, S. C. (2001)
  - Spreadsheets:
    - Fisher, M., Cao, M., Rothermel, G., Cook, C. and Burnett, M. (2002)
    - Erwig, M., Abraham, R., Cooperstein, I. and Kollmansberger, S. (2005)
  - XML Schema:
    - Lee, S. C. and Offutt, J. (2001); Li, J. B. and Miller, J. (2005)
  - Compilers:
    - See Boujarwah, A. S. and Saleh, K. (1997) for a survey.

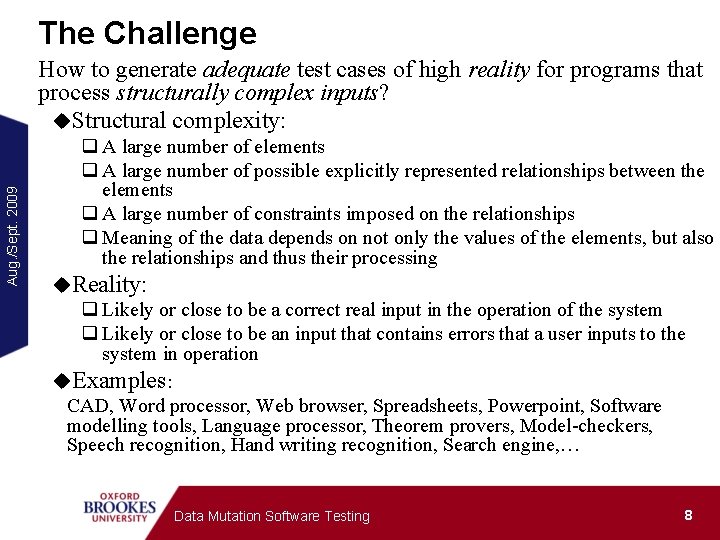

The Challenge

How to generate adequate test cases of high reality for programs that process structurally complex inputs?

- Structural complexity:
  - A large number of elements
  - A large number of possible explicitly represented relationships between the elements
  - A large number of constraints imposed on the relationships
  - The meaning of the data depends not only on the values of the elements, but also on the relationships, and so does their processing
- Reality:
  - Likely to be, or close to, a correct real input in the operation of the system
  - Likely to be, or close to, an input containing the kind of errors that a user makes when operating the system
- Examples: CAD, word processors, web browsers, spreadsheets, PowerPoint, software modelling tools, language processors, theorem provers, model checkers, speech recognition, handwriting recognition, search engines, …

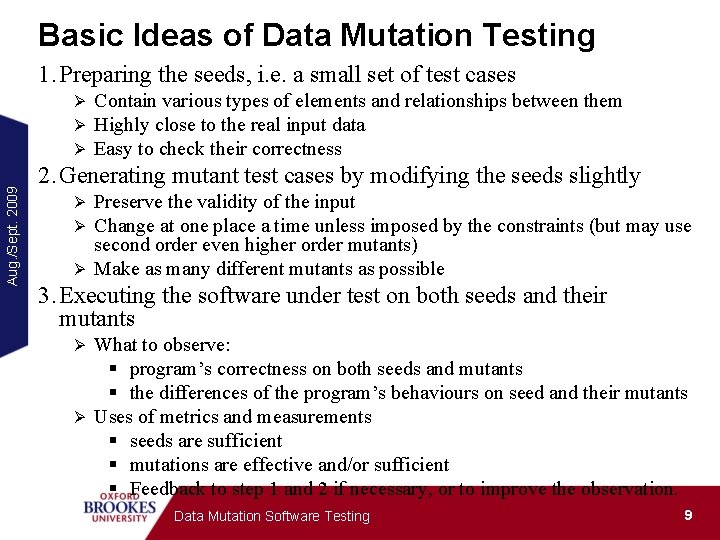

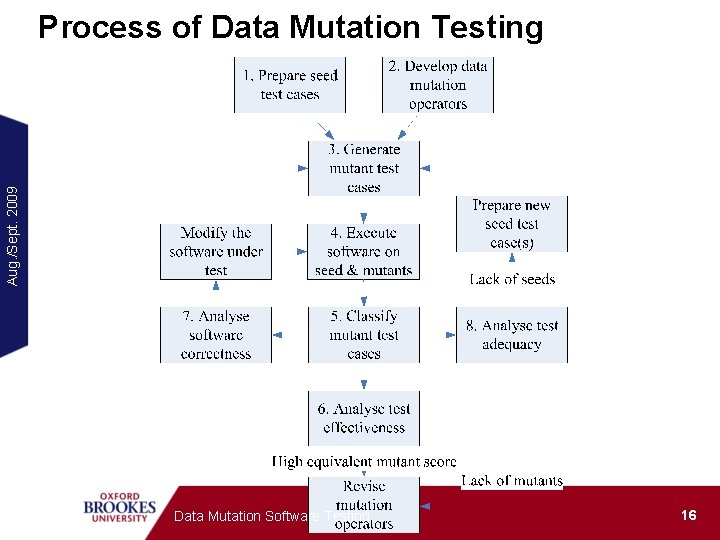

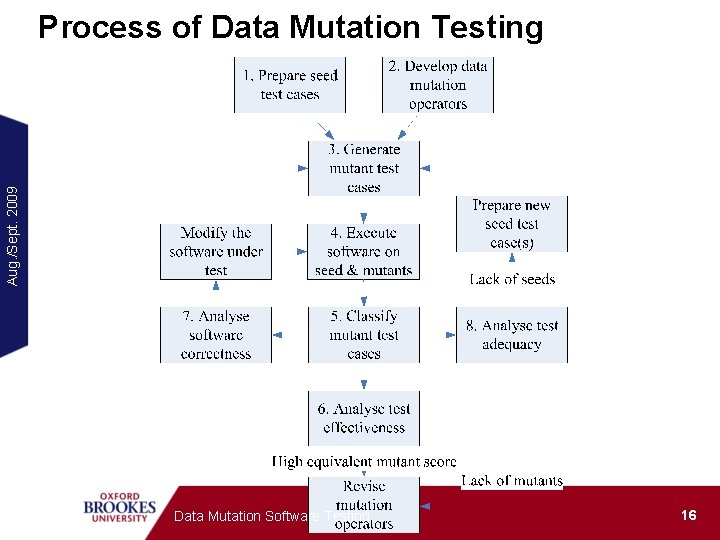

Basic Ideas of Data Mutation Testing

1. Preparing the seeds, i.e. a small set of test cases that
   - contain various types of elements and relationships between them
   - are highly close to the real input data
   - are easy to check for correctness
2. Generating mutant test cases by modifying the seeds slightly
   - Preserve the validity of the input
   - Change one place at a time unless imposed by the constraints (second-order or even higher-order mutants may also be used)
   - Make as many different mutants as possible
3. Executing the software under test on both seeds and their mutants
   - What to observe:
     - the program's correctness on both seeds and mutants
     - the differences in the program's behaviour on seeds and their mutants
   - Use of metrics and measurements to judge whether:
     - the seeds are sufficient
     - the mutations are effective and/or sufficient
   - Feedback to steps 1 and 2 if necessary, or to improve the observation

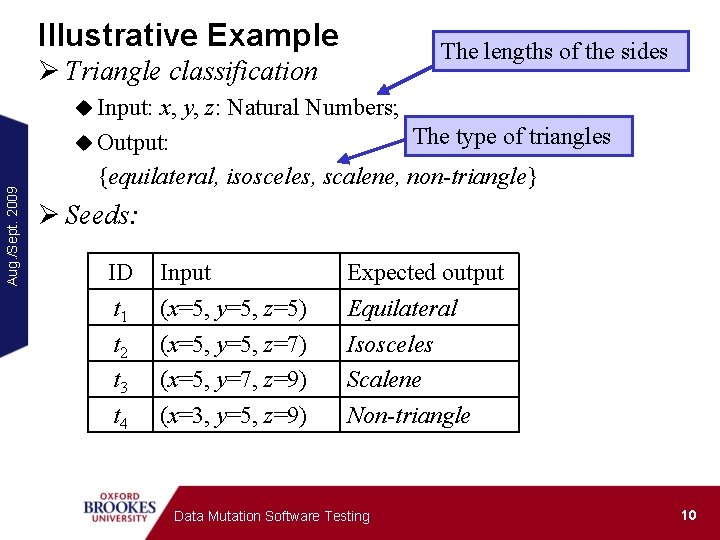

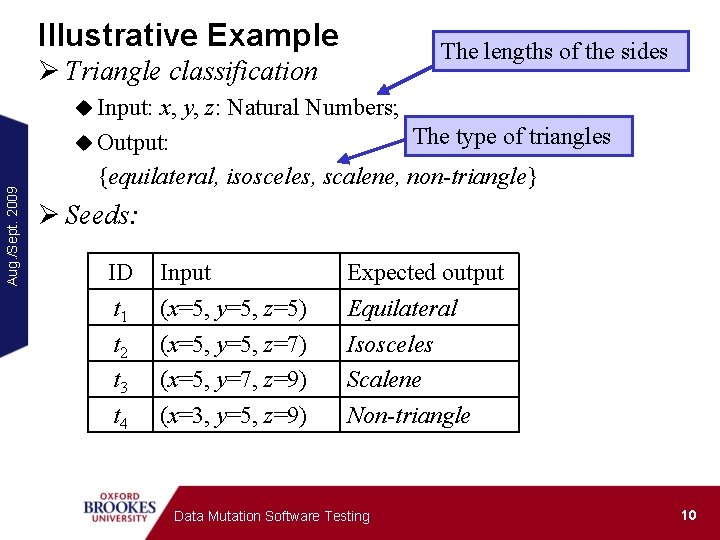

Illustrative Example

- Triangle classification
  - Input: x, y, z: natural numbers (the lengths of the sides)
  - Output: {equilateral, isosceles, scalene, non-triangle} (the type of triangle)
- Seeds:

| ID | Input | Expected output |
|----|-------|-----------------|
| t1 | (x=5, y=5, z=5) | Equilateral |
| t2 | (x=5, y=5, z=7) | Isosceles |
| t3 | (x=5, y=7, z=9) | Scalene |
| t4 | (x=3, y=5, z=9) | Non-triangle |
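A minimal sketch of the program under test and its four seeds, assuming a straightforward implementation; the function name `classify_triangle` and the `SEEDS` dictionary are illustrative and do not come from the slides.

```python
from typing import Dict, List, Tuple

def classify_triangle(x: int, y: int, z: int) -> str:
    """Classify a triangle by the lengths of its three sides."""
    # Non-positive sides or a violated triangle inequality cannot form a triangle.
    if min(x, y, z) <= 0 or x + y <= z or y + z <= x or x + z <= y:
        return "non-triangle"
    if x == y == z:
        return "equilateral"
    if x == y or y == z or x == z:
        return "isosceles"
    return "scalene"

# The four seeds from the slide, each with its expected output.
SEEDS: Dict[str, Tuple[Tuple[int, int, int], str]] = {
    "t1": ((5, 5, 5), "equilateral"),
    "t2": ((5, 5, 7), "isosceles"),
    "t3": ((5, 7, 9), "scalene"),
    "t4": ((3, 5, 9), "non-triangle"),
}

def failing_seeds() -> List[str]:
    """Return the IDs of seeds on which the program disagrees with the expected output."""
    return [name for name, (inputs, expected) in SEEDS.items()
            if classify_triangle(*inputs) != expected]

print(failing_seeds())
```

Because the seeds are few and simple, their expected outputs can be checked by hand, which is the property the method asks of seeds.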

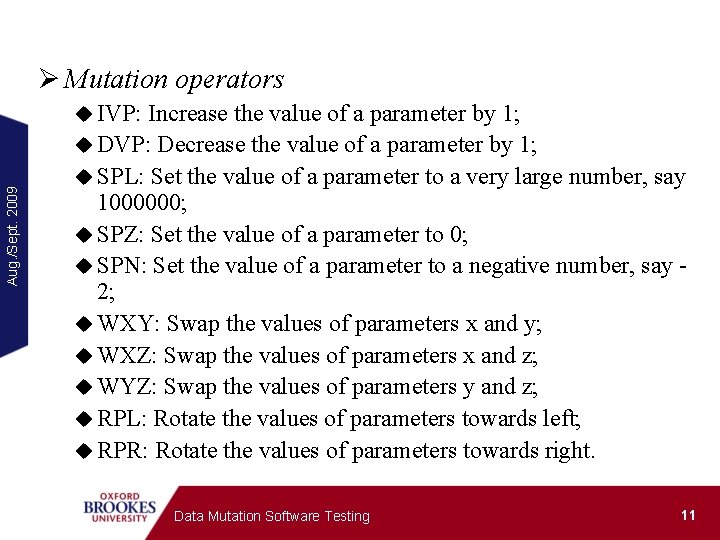

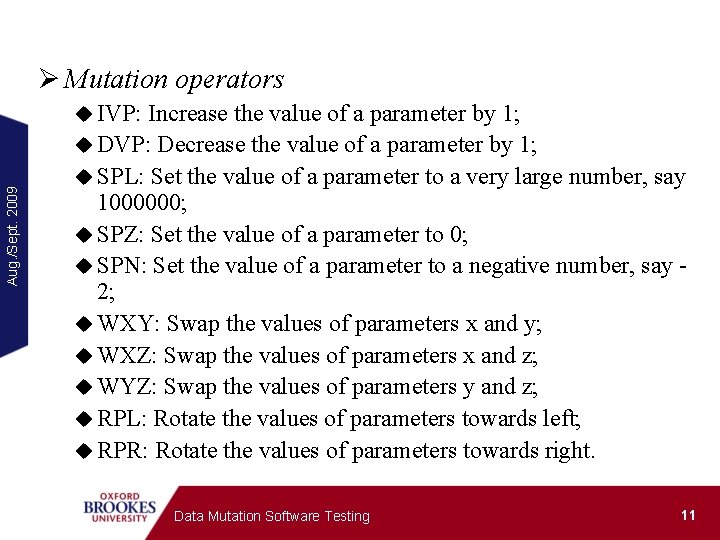

- Mutation operators
  - IVP: Increase the value of a parameter by 1;
  - DVP: Decrease the value of a parameter by 1;
  - SPL: Set the value of a parameter to a very large number, say 1000000;
  - SPZ: Set the value of a parameter to 0;
  - SPN: Set the value of a parameter to a negative number, say -2;
  - WXY: Swap the values of parameters x and y;
  - WXZ: Swap the values of parameters x and z;
  - WYZ: Swap the values of parameters y and z;
  - RPL: Rotate the values of the parameters towards the left;
  - RPR: Rotate the values of the parameters towards the right.

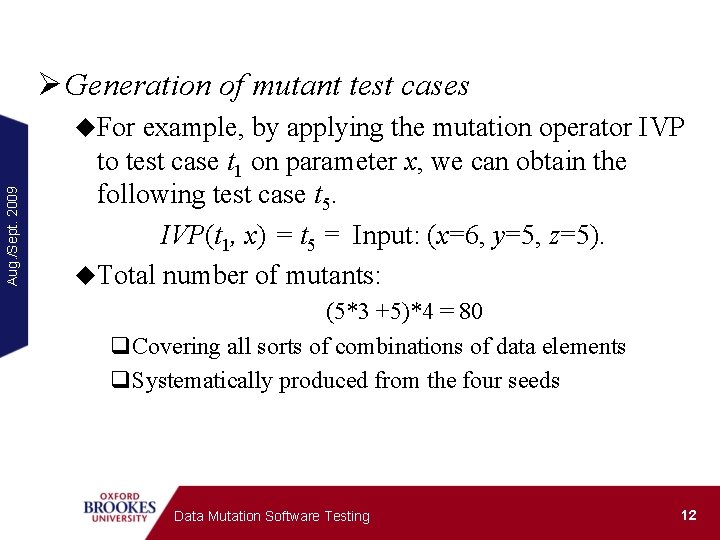

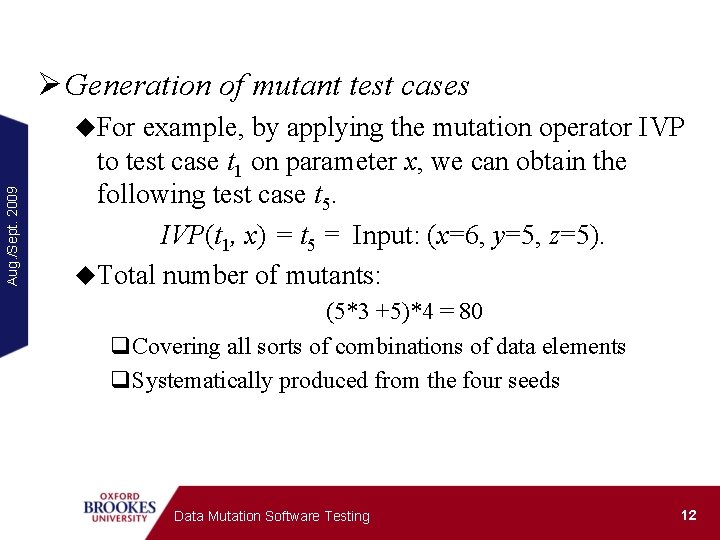

- Generation of mutant test cases
  - For example, by applying the mutation operator IVP to test case t1 on parameter x, we obtain the following test case t5: IVP(t1, x) = t5 = Input: (x=6, y=5, z=5).
  - Total number of mutants: (5*3 + 5)*4 = 80
    - Covering all sorts of combinations of data elements
    - Systematically produced from the four seeds
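The count of 80 can be reproduced with a short sketch: five operators act on one parameter at a time (3 parameters each) and five act on the whole triple, giving 20 mutants per seed. The helper names (`set_param`, `generate_mutants`) are illustrative, SPN is assumed to use -2, and rotation to the left is assumed to map (x, y, z) to (y, z, x), which agrees with RPL(t2) = (5, 7, 5) as used later in the slides.

```python
from typing import Callable, Dict, List, Tuple

Triple = Tuple[int, int, int]

def set_param(t: Triple, i: int, value: int) -> Triple:
    """Return a copy of t with the i-th parameter replaced by value."""
    return t[:i] + (value,) + t[i + 1:]

# Operators applied to a single parameter (x, y or z).
PARAM_OPERATORS: Dict[str, Callable[[int], int]] = {
    "IVP": lambda v: v + 1,
    "DVP": lambda v: v - 1,
    "SPL": lambda v: 1_000_000,
    "SPZ": lambda v: 0,
    "SPN": lambda v: -2,
}

# Operators applied to the whole triple.
WHOLE_OPERATORS: Dict[str, Callable[[Triple], Triple]] = {
    "WXY": lambda t: (t[1], t[0], t[2]),
    "WXZ": lambda t: (t[2], t[1], t[0]),
    "WYZ": lambda t: (t[0], t[2], t[1]),
    "RPL": lambda t: (t[1], t[2], t[0]),
    "RPR": lambda t: (t[2], t[0], t[1]),
}

def generate_mutants(seed: Triple) -> List[Tuple[str, Triple]]:
    """Apply every operator once to the seed: 5*3 + 5 = 20 first-order mutants."""
    mutants = []
    for name, change in PARAM_OPERATORS.items():
        for i, param in enumerate("xyz"):
            mutants.append((f"{name}({param})", set_param(seed, i, change(seed[i]))))
    for name, op in WHOLE_OPERATORS.items():
        mutants.append((name, op(seed)))
    return mutants

SEEDS: List[Triple] = [(5, 5, 5), (5, 5, 7), (5, 7, 9), (3, 5, 9)]
all_mutants = [m for seed in SEEDS for m in generate_mutants(seed)]
print(len(all_mutants))  # (5*3 + 5) * 4 = 80
```

Some of the 80 mutants coincide with their seed (for example any swap applied to t1); the sketch keeps them, since deciding which mutants are equivalent is part of the later analysis.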

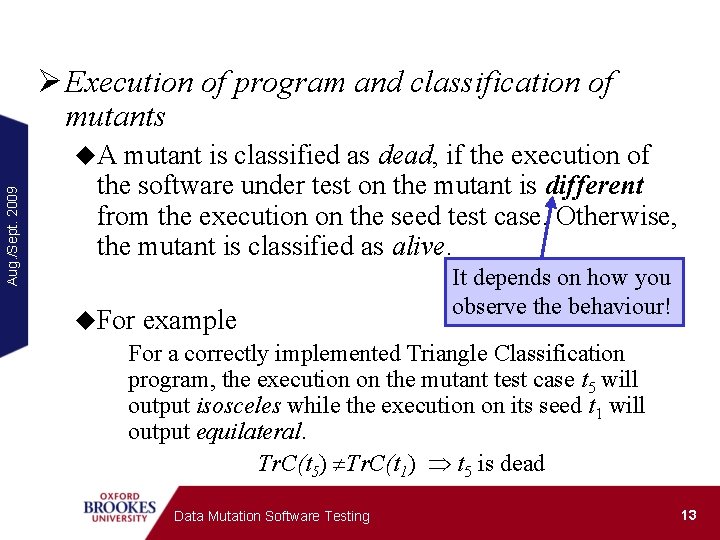

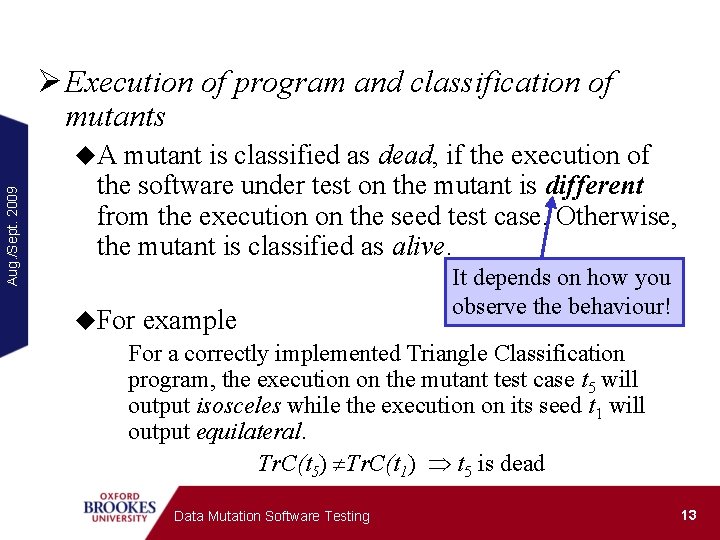

- Execution of program and classification of mutants
  - A mutant is classified as dead if the execution of the software under test on the mutant is different from the execution on the seed test case. Otherwise, the mutant is classified as alive. The classification depends on how you observe the behaviour!
  - For example, for a correctly implemented Triangle Classification program TrC, the execution on the mutant test case t5 will output isosceles, while the execution on its seed t1 will output equilateral. Since TrC(t5) ≠ TrC(t1), t5 is dead.

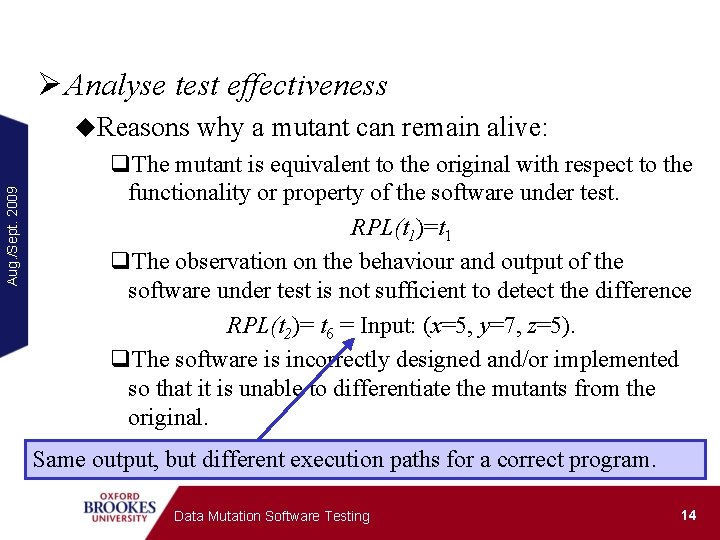

- Analysing test effectiveness
  - Reasons why a mutant can remain alive:
    - The mutant is equivalent to the original with respect to the functionality or property of the software under test. Example: RPL(t1) = t1.
    - The observation on the behaviour and output of the software under test is not sufficient to detect the difference. Example: RPL(t2) = t6 = Input: (x=5, y=7, z=5) gives the same output, but a different execution path, for a correct program.
    - The software is incorrectly designed and/or implemented, so that it is unable to differentiate the mutants from the original.
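The effect of the observation on the dead/alive verdict can be sketched as follows. This is an illustrative implementation, not the one used in the talk: `classify_with_trace` returns the branch it took alongside the output, and "equivalent" is narrowed here to the case where the mutant input is identical to the seed.

```python
from typing import Callable, Tuple

Triple = Tuple[int, int, int]
Result = Tuple[str, str]  # (output, branch taken)

def classify_with_trace(x: int, y: int, z: int) -> Result:
    """Triangle classifier that also reports which branch produced the output."""
    if min(x, y, z) <= 0 or x + y <= z or y + z <= x or x + z <= y:
        return "non-triangle", "invalid"
    if x == y == z:
        return "equilateral", "x==y==z"
    if x == y:
        return "isosceles", "x==y"
    if y == z:
        return "isosceles", "y==z"
    if x == z:
        return "isosceles", "x==z"
    return "scalene", "all-different"

def output_only(result: Result) -> str:
    """Weak observation: look at the output alone."""
    return result[0]

def output_and_path(result: Result) -> Result:
    """Stronger observation: look at the output and the execution path."""
    return result

def mutant_status(seed: Triple, mutant: Triple, observe: Callable) -> str:
    """Classify a mutant as equivalent, alive or dead under a given observation."""
    if mutant == seed:
        return "equivalent"
    same = observe(classify_with_trace(*seed)) == observe(classify_with_trace(*mutant))
    return "alive" if same else "dead"

t1, t2 = (5, 5, 5), (5, 5, 7)
print(mutant_status(t1, (6, 5, 5), output_only))      # t5 = IVP(t1, x)
print(mutant_status(t1, (5, 5, 5), output_only))      # RPL(t1) = t1
print(mutant_status(t2, (5, 7, 5), output_only))      # t6 = RPL(t2)
print(mutant_status(t2, (5, 7, 5), output_and_path))  # t6 with path observed
```

With the output alone, t6 stays alive even though the program is correct; once the execution path is observed as well, the same mutant is killed.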

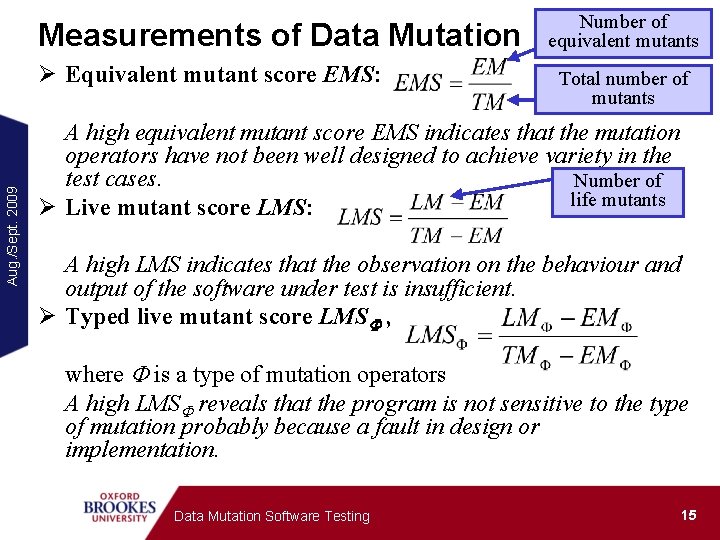

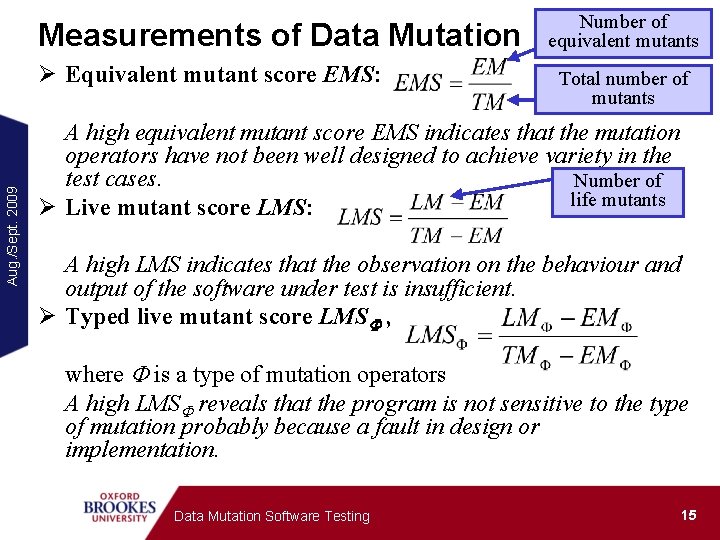

Measurements of Data Mutation

- Equivalent mutant score EMS = (number of equivalent mutants) / (total number of mutants). A high EMS indicates that the mutation operators have not been well designed to achieve variety in the test cases.
- Live mutant score LMS, based on the number of live mutants. A high LMS indicates that the observation on the behaviour and output of the software under test is insufficient.
- Typed live mutant score LMS_F, where F is a type of mutation operator. A high LMS_F reveals that the program is not sensitive to that type of mutation, probably because of a fault in design or implementation.

Process of Data Mutation Testing

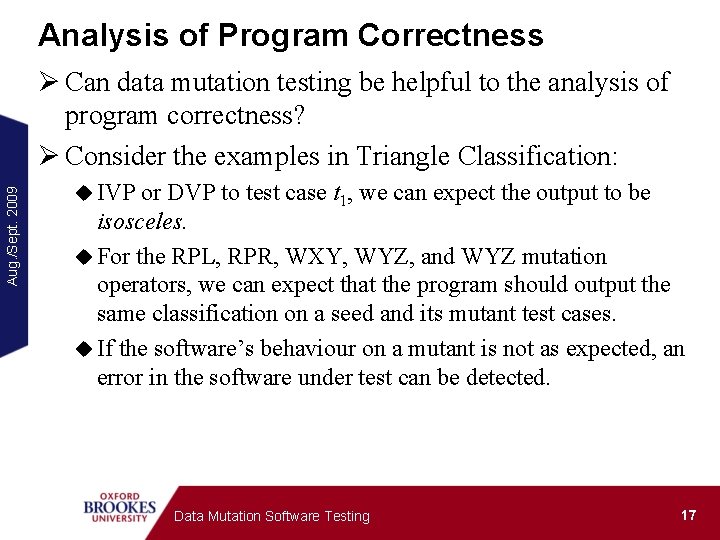

Analysis of Program Correctness

- Can data mutation testing be helpful to the analysis of program correctness?
- Consider the examples in Triangle Classification:
  - Applying IVP or DVP to test case t1, we can expect the output to be isosceles.
  - For the RPL, RPR, WXY, WXZ, and WYZ mutation operators, we can expect the program to output the same classification on a seed and on its mutant test cases.
  - If the software's behaviour on a mutant is not as expected, an error in the software under test has been detected.

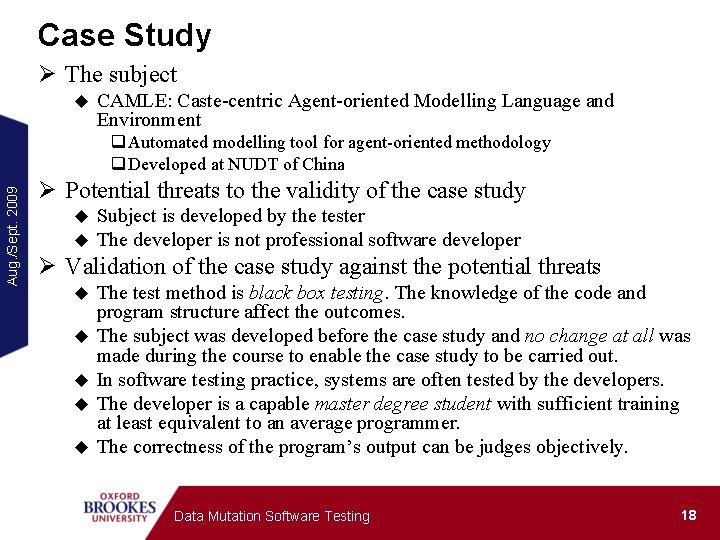

Case Study

- The subject
  - CAMLE: Caste-centric Agent-oriented Modelling Language and Environment
    - Automated modelling tool for agent-oriented methodology
    - Developed at NUDT of China
- Potential threats to the validity of the case study
  - The subject was developed by the tester
  - The developer is not a professional software developer
- Validation of the case study against the potential threats
  - The test method is black-box testing, so knowledge of the code and program structure should not affect the outcomes.
  - The subject was developed before the case study, and no change at all was made during the study to enable it to be carried out.
  - In software testing practice, systems are often tested by their developers.
  - The developer is a capable master's degree student with sufficient training, at least equivalent to an average programmer.
  - The correctness of the program's output can be judged objectively.

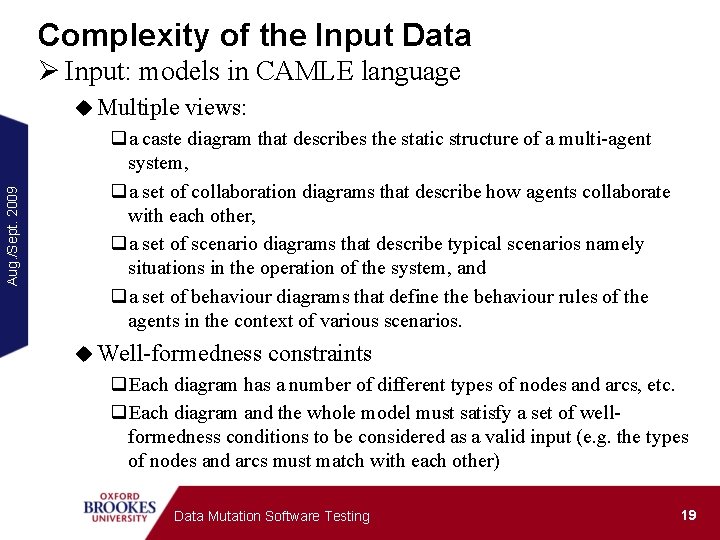

Complexity of the Input Data

- Input: models in the CAMLE language
  - Multiple views:
    - a caste diagram that describes the static structure of a multi-agent system,
    - a set of collaboration diagrams that describe how agents collaborate with each other,
    - a set of scenario diagrams that describe typical scenarios, namely situations in the operation of the system, and
    - a set of behaviour diagrams that define the behaviour rules of the agents in the context of various scenarios.
  - Well-formedness constraints
    - Each diagram has a number of different types of nodes and arcs, etc.
    - Each diagram and the whole model must satisfy a set of well-formedness conditions to be considered a valid input (e.g. the types of nodes and arcs must match each other)

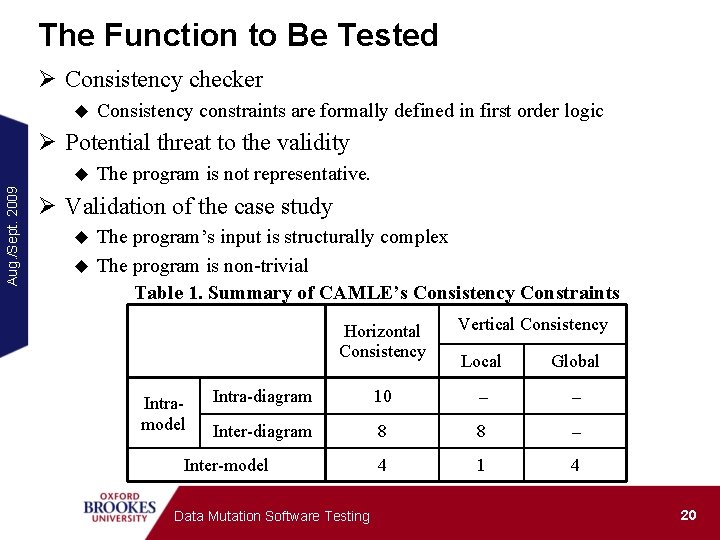

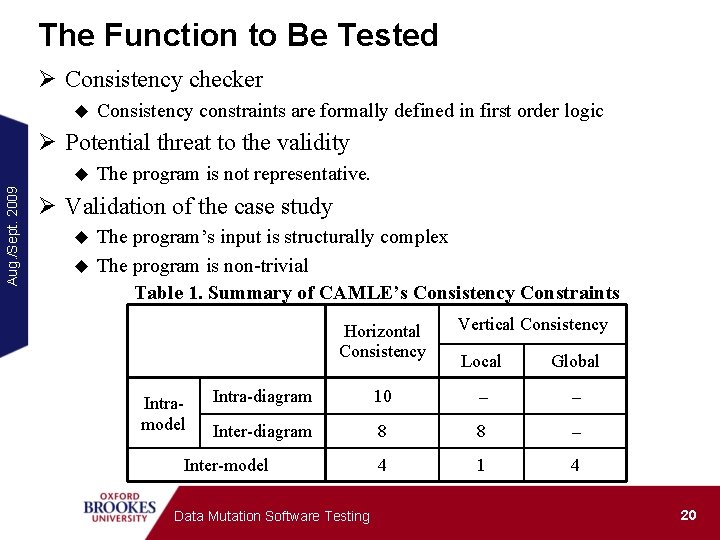

The Function to Be Tested

- Consistency checker
  - Consistency constraints are formally defined in first-order logic
- Potential threat to the validity
  - The program is not representative.
- Validation of the case study
  - The program's input is structurally complex
  - The program is non-trivial

Table 1. Summary of CAMLE's Consistency Constraints

| Scope | Horizontal consistency (local) | Horizontal consistency (global) | Vertical consistency |
|-------|------|------|------|
| Intra-model, intra-diagram | 10 | - | - |
| Intra-model, inter-diagram | 8 | 8 | - |
| Inter-model | 4 | 1 | 4 |

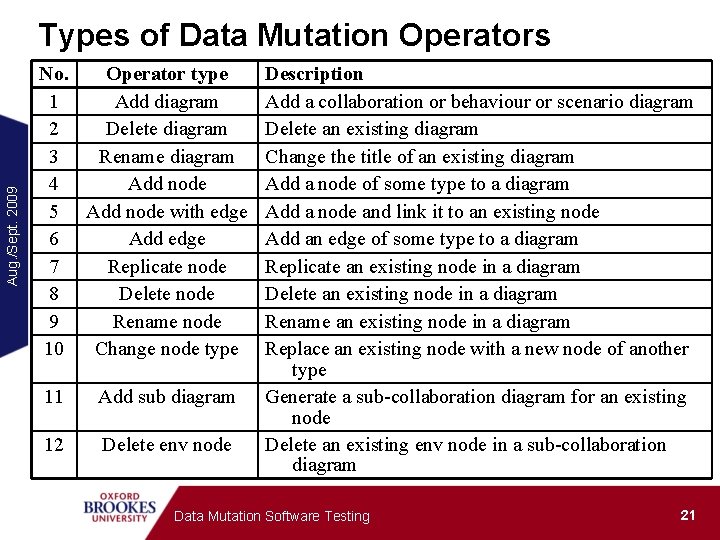

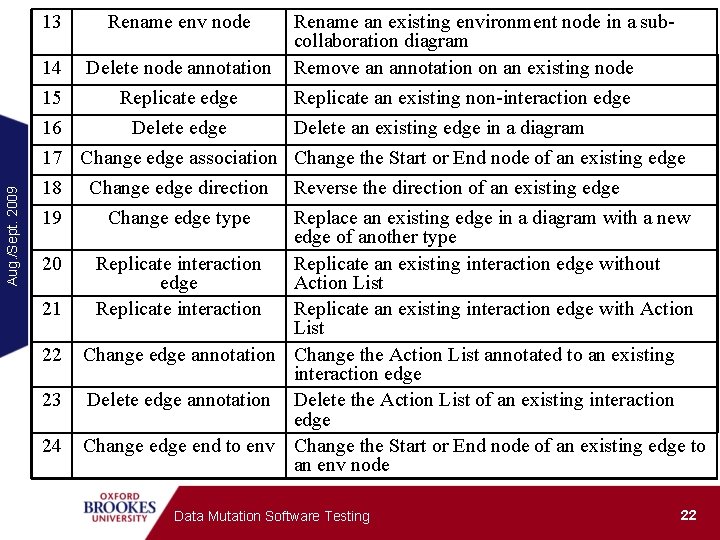

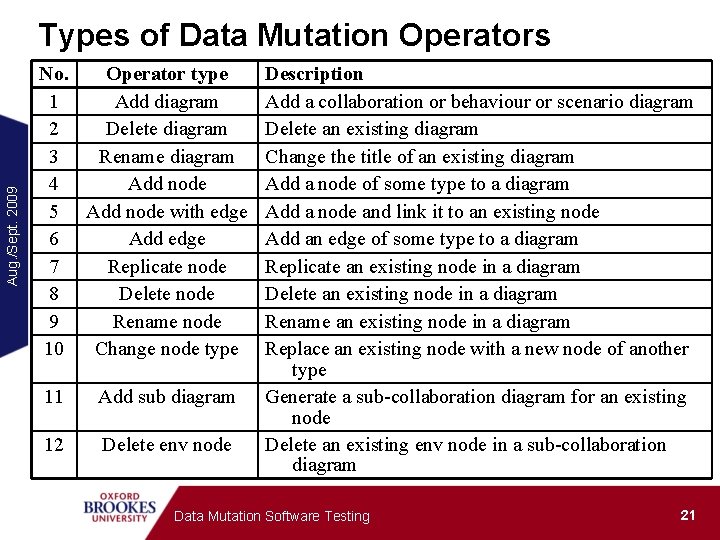

Types of Data Mutation Operators

| No. | Operator type | Description |
|-----|---------------|-------------|
| 1 | Add diagram | Add a collaboration or behaviour or scenario diagram |
| 2 | Delete diagram | Delete an existing diagram |
| 3 | Rename diagram | Change the title of an existing diagram |
| 4 | Add node | Add a node of some type to a diagram |
| 5 | Add node with edge | Add a node and link it to an existing node |
| 6 | Add edge | Add an edge of some type to a diagram |
| 7 | Replicate node | Replicate an existing node in a diagram |
| 8 | Delete node | Delete an existing node in a diagram |
| 9 | Rename node | Rename an existing node in a diagram |
| 10 | Change node type | Replace an existing node with a new node of another type |
| 11 | Add sub diagram | Generate a sub-collaboration diagram for an existing node |
| 12 | Delete env node | Delete an existing env node in a sub-collaboration diagram |

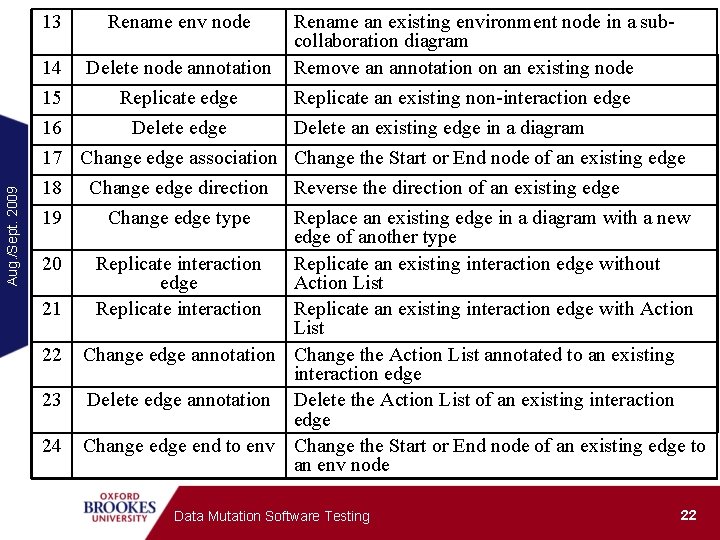

| No. | Operator type | Description |
|-----|---------------|-------------|
| 13 | Rename env node | Rename an existing environment node in a sub-collaboration diagram |
| 14 | Delete node annotation | Remove an annotation on an existing node |
| 15 | Replicate edge | Replicate an existing non-interaction edge |
| 16 | Delete edge | Delete an existing edge in a diagram |
| 17 | Change edge association | Change the Start or End node of an existing edge |
| 18 | Change edge direction | Reverse the direction of an existing edge |
| 19 | Change edge type | Replace an existing edge in a diagram with a new edge of another type |
| 20 | Replicate interaction edge | Replicate an existing interaction edge without Action List |
| 21 | Replicate interaction | Replicate an existing interaction edge with Action List |
| 22 | Change edge annotation | Change the Action List annotated to an existing interaction edge |
| 23 | Delete edge annotation | Delete the Action List of an existing interaction edge |
| 24 | Change edge end to env | Change the Start or End node of an existing edge to an env node |

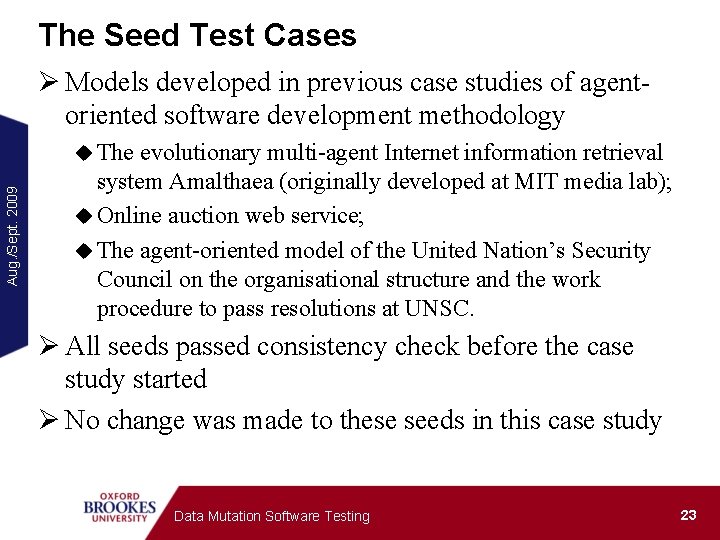

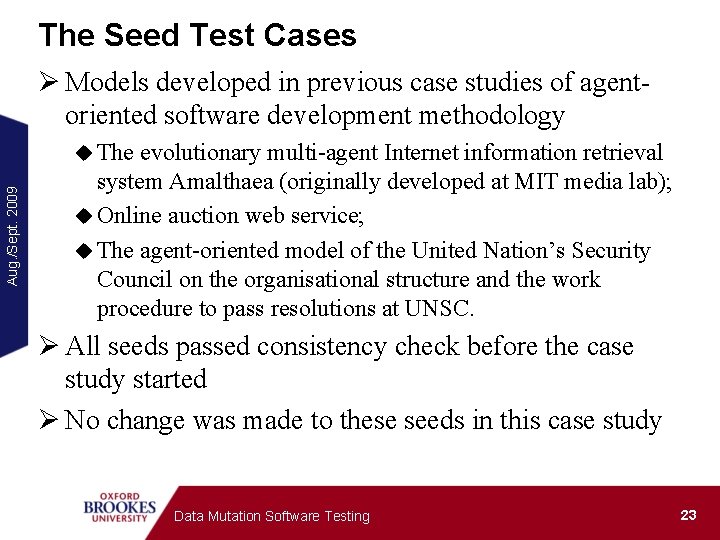

The Seed Test Cases

- Models developed in previous case studies of agent-oriented software development methodology
  - The evolutionary multi-agent Internet information retrieval system Amalthaea (originally developed at the MIT Media Lab);
  - An online auction web service;
  - The agent-oriented model of the United Nations Security Council (UNSC), covering its organisational structure and the work procedure to pass resolutions.
- All seeds passed the consistency check before the case study started
- No change was made to these seeds in this case study

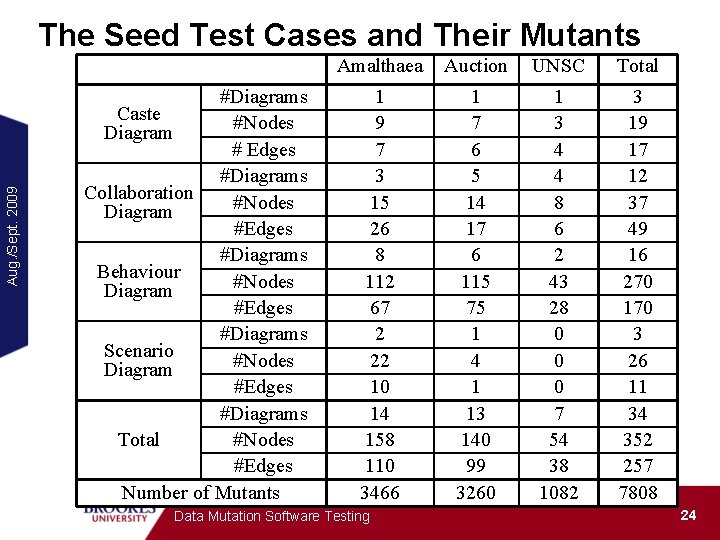

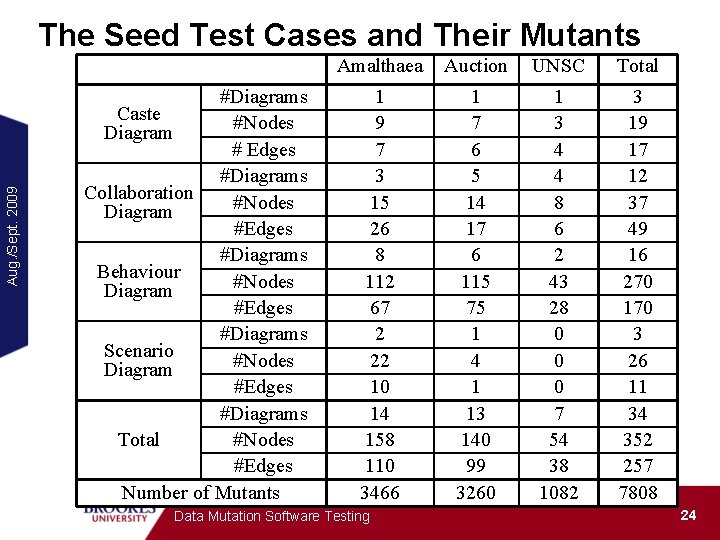

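The Seed Test Cases and Their Mutants

| Diagram type | Measure | Amalthaea | Auction | UNSC | Total |
|--------------|---------|-----------|---------|------|-------|
| Caste diagram | #Diagrams | 1 | 1 | 1 | 3 |
| | #Nodes | 9 | 7 | 3 | 19 |
| | #Edges | 7 | 6 | 4 | 17 |
| Collaboration diagram | #Diagrams | 3 | 5 | 4 | 12 |
| | #Nodes | 15 | 14 | 8 | 37 |
| | #Edges | 26 | 17 | 6 | 49 |
| Behaviour diagram | #Diagrams | 8 | 6 | 2 | 16 |
| | #Nodes | 112 | 115 | 43 | 270 |
| | #Edges | 67 | 75 | 28 | 170 |
| Scenario diagram | #Diagrams | 2 | 1 | 0 | 3 |
| | #Nodes | 22 | 4 | 0 | 26 |
| | #Edges | 10 | 1 | 0 | 11 |
| Total | #Diagrams | 14 | 13 | 7 | 34 |
| | #Nodes | 158 | 140 | 54 | 352 |
| | #Edges | 110 | 99 | 38 | 257 |
| Number of mutants | | 3466 | 3260 | 1082 | 7808 |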

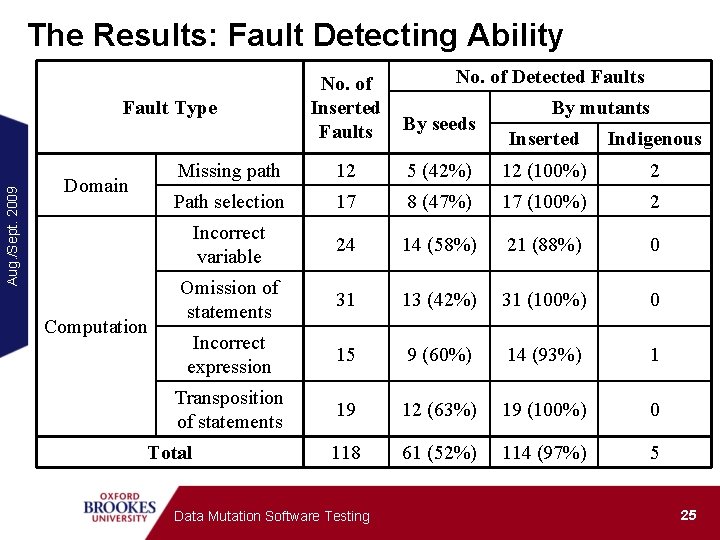

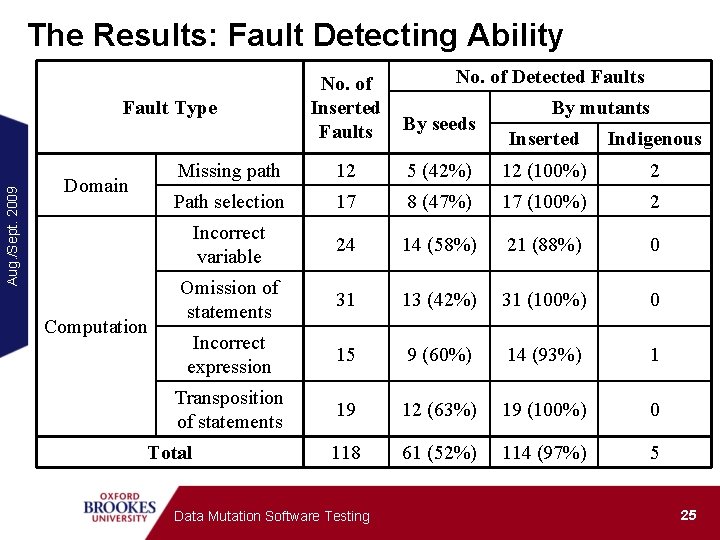

The Results: Fault Detecting Ability

| Fault type | | No. of inserted faults | Detected by seeds | Detected by mutants (inserted) | Detected by mutants (indigenous) |
|------------|---|------|------|------|------|
| Domain | Missing path | 12 | 5 (42%) | 12 (100%) | 2 |
| | Path selection | 17 | 8 (47%) | 17 (100%) | 2 |
| Computation | Incorrect variable | 24 | 14 (58%) | 21 (88%) | 0 |
| | Omission of statements | 31 | 13 (42%) | 31 (100%) | 0 |
| | Incorrect expression | 15 | 9 (60%) | 14 (93%) | 1 |
| | Transposition of statements | 19 | 12 (63%) | 19 (100%) | 0 |
| Total | | 118 | 61 (52%) | 114 (97%) | 5 |

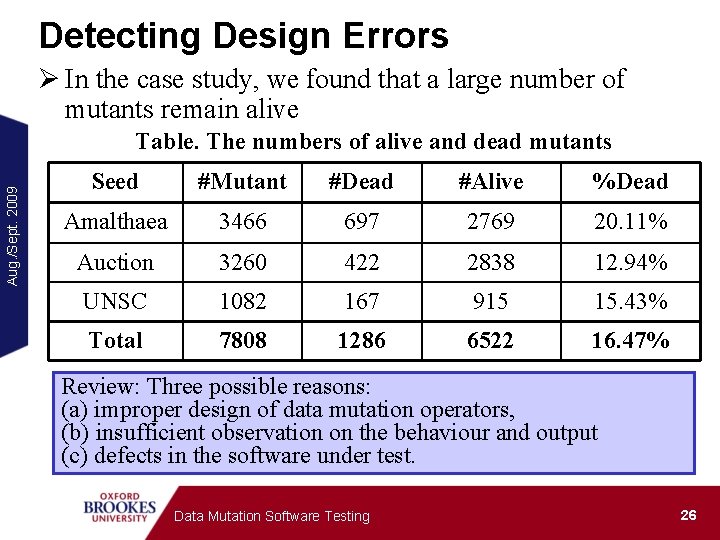

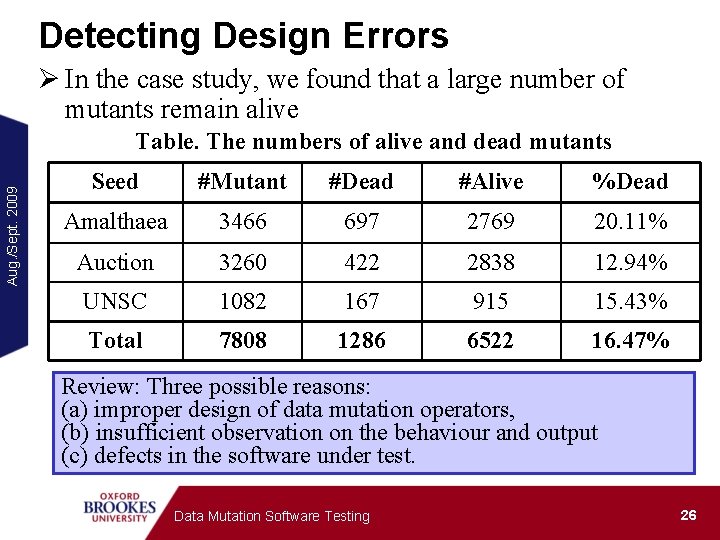

Detecting Design Errors

- In the case study, we found that a large number of mutants remain alive

Table. The numbers of alive and dead mutants

| Seed | #Mutant | #Dead | #Alive | %Dead |
|------|---------|-------|--------|-------|
| Amalthaea | 3466 | 697 | 2769 | 20.11% |
| Auction | 3260 | 422 | 2838 | 12.94% |
| UNSC | 1082 | 167 | 915 | 15.43% |
| Total | 7808 | 1286 | 6522 | 16.47% |

Review: three possible reasons: (a) improper design of the data mutation operators, (b) insufficient observation of the behaviour and output, (c) defects in the software under test.

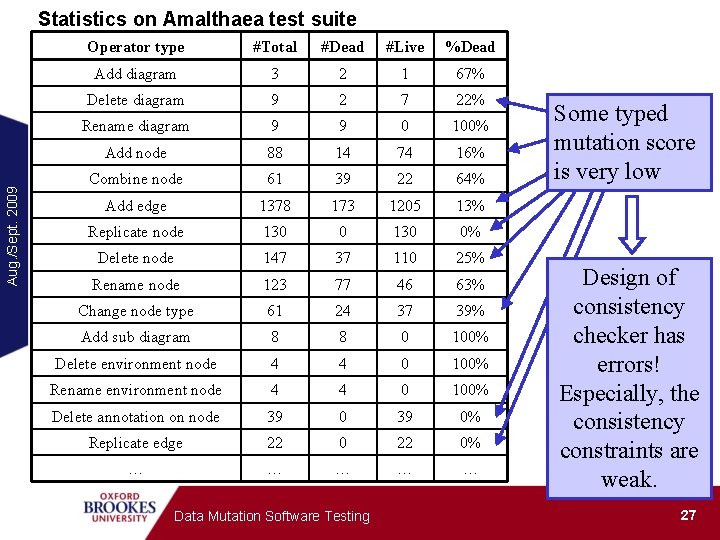

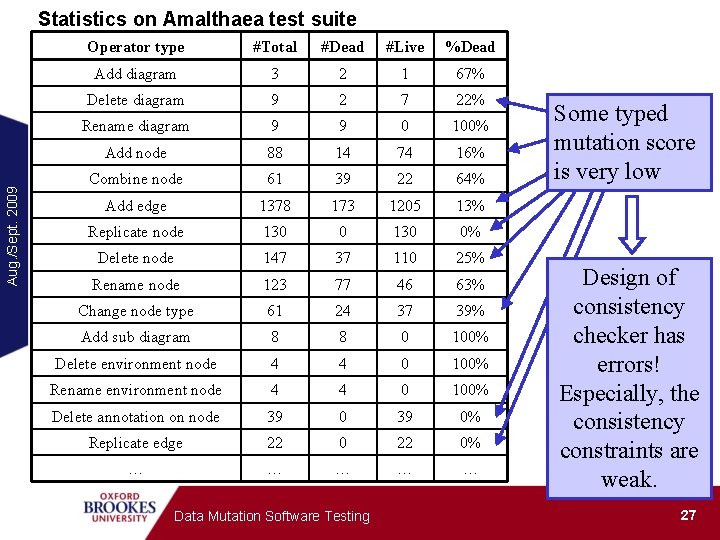

Statistics on Amalthaea test suite

| Operator type | #Total | #Dead | #Live | %Dead |
|---------------|--------|-------|-------|-------|
| Add diagram | 3 | 2 | 1 | 67% |
| Delete diagram | 9 | 2 | 7 | 22% |
| Rename diagram | 9 | 9 | 0 | 100% |
| Add node | 88 | 14 | 74 | 16% |
| Combine node | 61 | 39 | 22 | 64% |
| Add edge | 1378 | 173 | 1205 | 13% |
| Replicate node | 130 | 0 | 130 | 0% |
| Delete node | 147 | 37 | 110 | 25% |
| Rename node | 123 | 77 | 46 | 63% |
| Change node type | 61 | 24 | 37 | 39% |
| Add sub diagram | 8 | 8 | 0 | 100% |
| Delete environment node | 4 | 4 | 0 | 100% |
| Rename environment node | 4 | 4 | 0 | 100% |
| Delete annotation on node | 39 | 0 | 39 | 0% |
| Replicate edge | 22 | 0 | 22 | 0% |
| … | … | … | … | … |

Some typed mutation scores are very low, which suggests that the design of the consistency checker has errors. In particular, the consistency constraints are weak.
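The per-operator percentages can be recomputed from the counts, and operator types with an unusually low kill rate flagged for inspection. The sketch below uses a few rows of the Amalthaea table; the 25% threshold and the names `dead_ratio` and `weak_spots` are assumptions made for illustration, not values from the case study.

```python
from typing import Dict, List, Tuple

# (total mutants, dead mutants) for a few operator types from the Amalthaea suite.
ROWS: Dict[str, Tuple[int, int]] = {
    "Add diagram": (3, 2),
    "Delete diagram": (9, 2),
    "Rename diagram": (9, 9),
    "Add node": (88, 14),
    "Add edge": (1378, 173),
    "Delete node": (147, 37),
}

def dead_ratio(total: int, dead: int) -> float:
    """Fraction of mutants of one operator type that were killed."""
    return dead / total

def weak_spots(rows: Dict[str, Tuple[int, int]], threshold: float = 0.25) -> List[str]:
    """Operator types whose kill rate is below the (hypothetical) threshold."""
    return [name for name, (total, dead) in rows.items()
            if dead_ratio(total, dead) < threshold]

for name, (total, dead) in ROWS.items():
    print(f"{name}: {round(100 * dead_ratio(total, dead))}% dead")
print(weak_spots(ROWS))
```

A low kill rate for one operator type does not by itself locate a fault; as the slides note, it may equally point to equivalent mutants or to insufficient observation.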

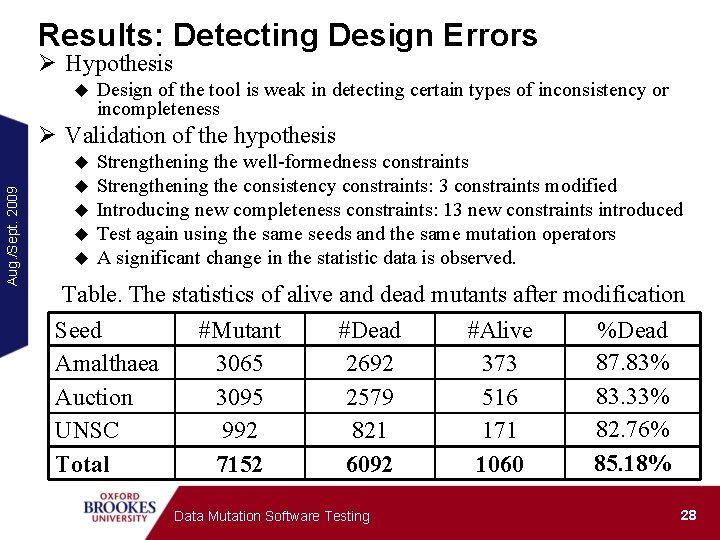

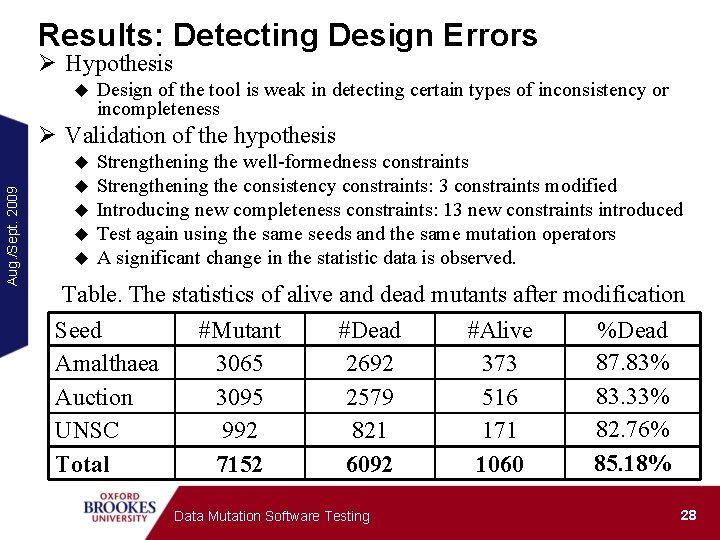

Results: Detecting Design Errors

- Hypothesis
  - The design of the tool is weak in detecting certain types of inconsistency or incompleteness
- Validation of the hypothesis
  - Strengthening the well-formedness constraints
  - Strengthening the consistency constraints: 3 constraints modified
  - Introducing new completeness constraints: 13 new constraints introduced
  - Testing again using the same seeds and the same mutation operators
  - A significant change in the statistical data is observed

Table. The statistics of alive and dead mutants after modification

| Seed | #Mutant | #Dead | #Alive | %Dead |
|------|---------|-------|--------|-------|
| Amalthaea | 3065 | 2692 | 373 | 87.83% |
| Auction | 3095 | 2579 | 516 | 83.33% |
| UNSC | 992 | 821 | 171 | 82.76% |
| Total | 7152 | 6092 | 1060 | 85.18% |

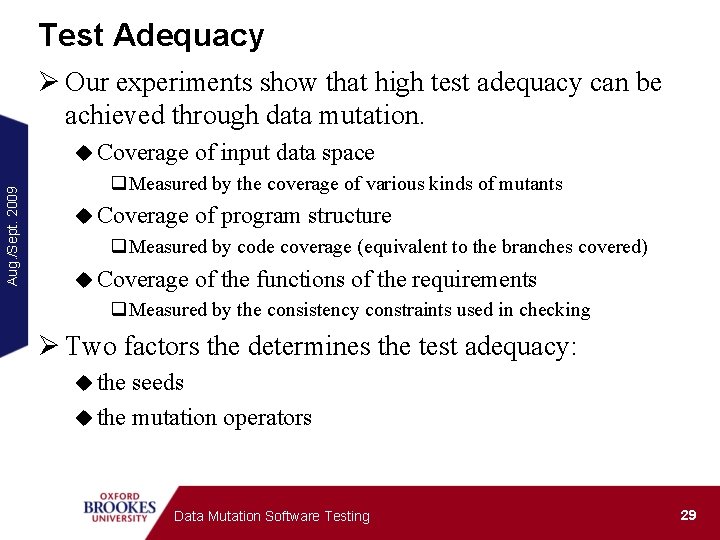

Test Adequacy

- Our experiments show that high test adequacy can be achieved through data mutation.
  - Coverage of input data space
    - Measured by the coverage of various kinds of mutants
  - Coverage of program structure
    - Measured by code coverage (equivalent to the branches covered)
  - Coverage of the functions of the requirements
    - Measured by the consistency constraints used in checking
- Two factors determine the test adequacy:
  - the seeds
  - the mutation operators

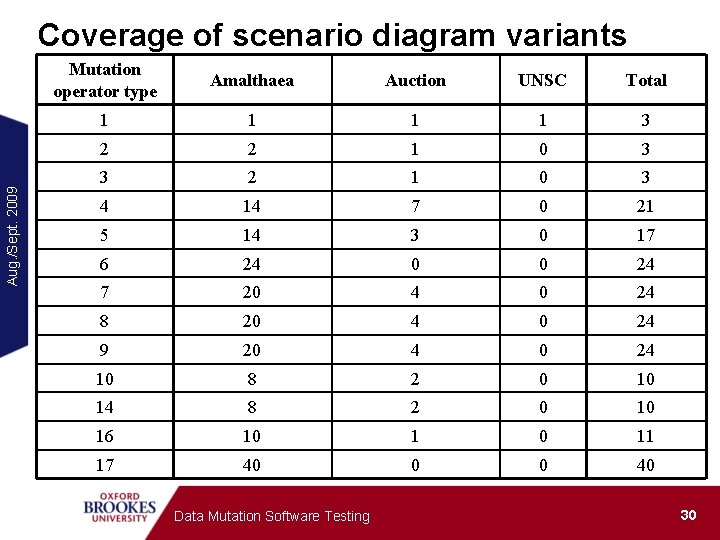

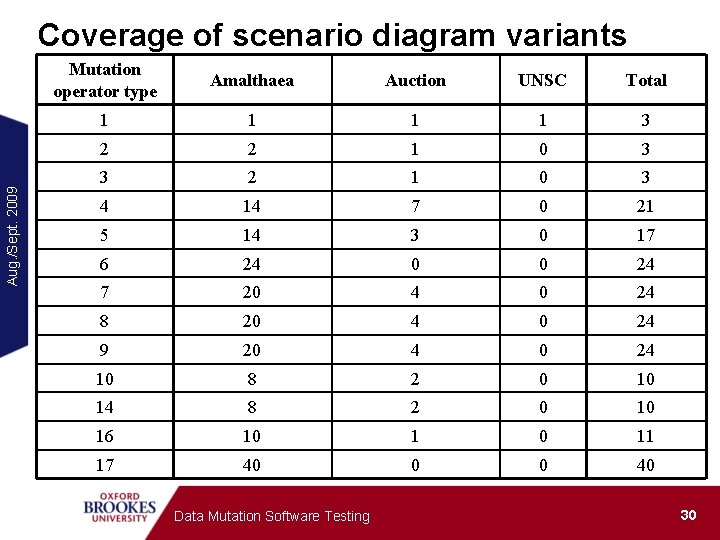

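Coverage of scenario diagram variants

| Mutation operator type | Amalthaea | Auction | UNSC | Total |
|------------------------|-----------|---------|------|-------|
| 1 | 1 | | | 3 |
| 2 | 2 | 1 | 0 | 3 |
| 3 | 2 | 1 | 0 | 3 |
| 4 | 14 | 7 | 0 | 21 |
| 5 | 14 | 3 | 0 | 17 |
| 6 | 24 | 0 | 0 | 24 |
| 7 | 20 | 4 | 0 | 24 |
| 8 | 20 | 4 | 0 | 24 |
| 9 | 20 | 4 | 0 | 24 |
| 10 | 8 | 2 | 0 | 10 |
| 14 | 8 | 2 | 0 | 10 |
| 16 | 10 | 1 | 0 | 11 |
| 17 | 40 | 0 | 0 | 40 |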

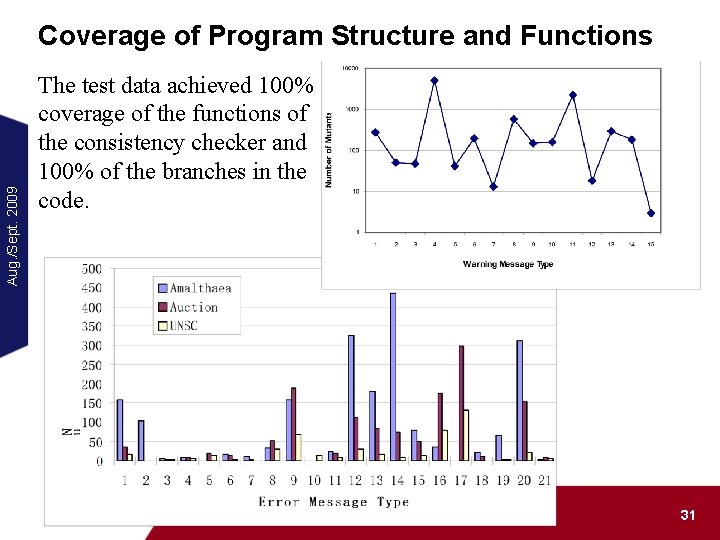

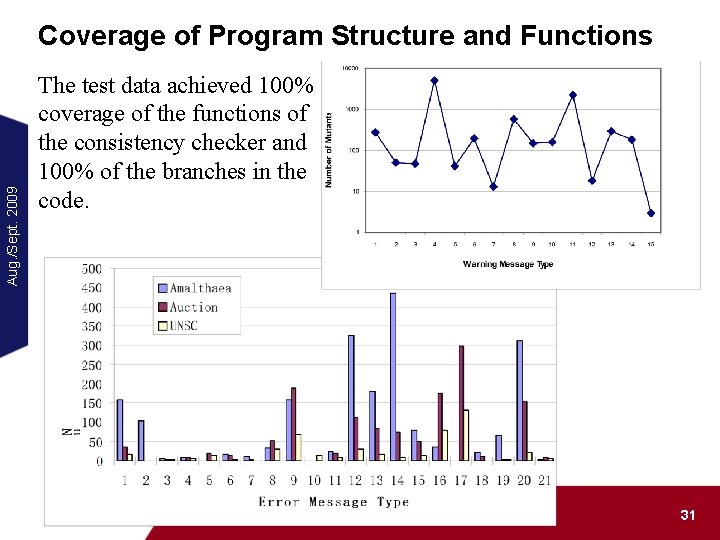

Coverage of Program Structure and Functions

The test data achieved 100% coverage of the functions of the consistency checker and 100% of the branches in the code.

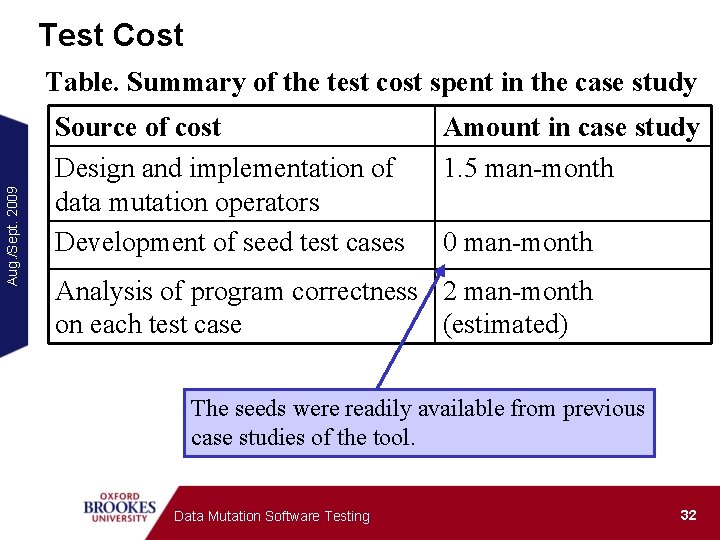

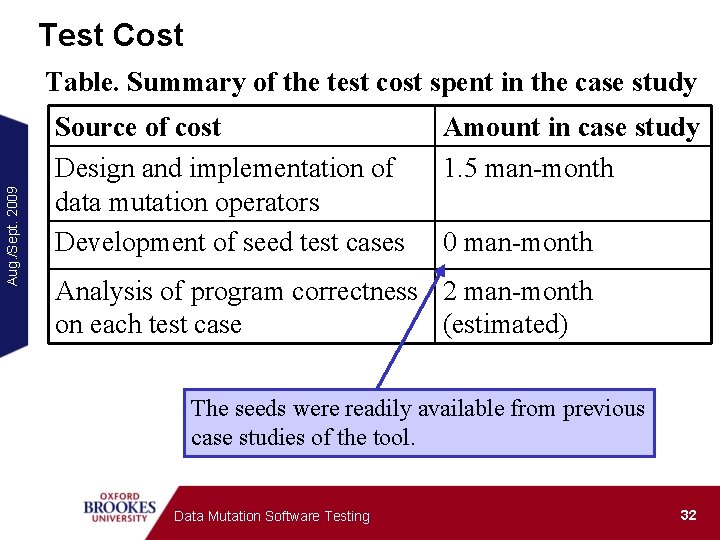

Test Cost

Table. Summary of the test cost spent in the case study

| Source of cost | Amount in case study |
|----------------|----------------------|
| Design and implementation of data mutation operators | 1.5 man-month |
| Development of seed test cases | 0 man-month |
| Analysis of program correctness | 2 man-month on each test case (estimated) |

The seeds were readily available from previous case studies of the tool.

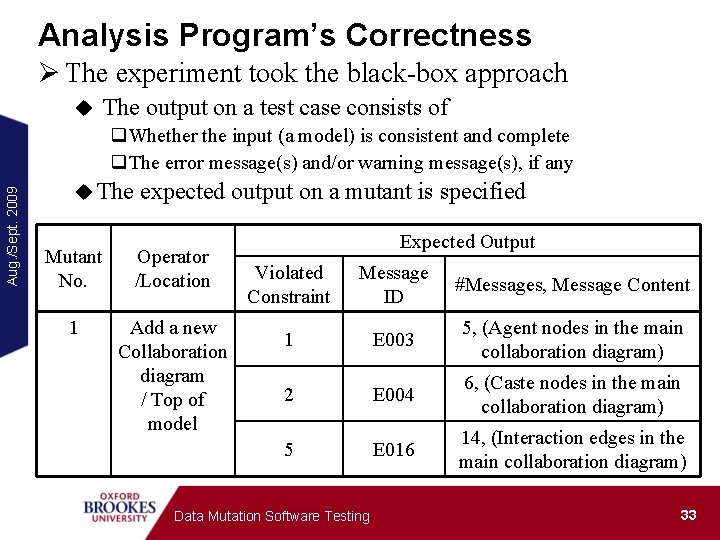

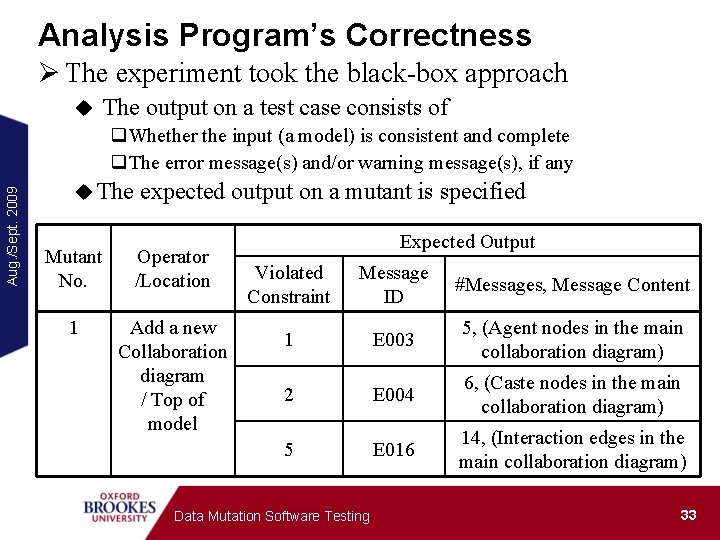

Analysis of the Program's Correctness

- The experiment took the black-box approach
  - The output on a test case consists of
    - whether the input (a model) is consistent and complete
    - the error message(s) and/or warning message(s), if any
  - The expected output on a mutant is specified

| Mutant No. | Operator / Location | Violated constraint | Message ID | #Messages | Message content |
|------------|---------------------|---------------------|------------|-----------|-----------------|
| 1 | Add a new collaboration diagram / Top of model | 1 | E003 | 5 | Agent nodes in the main collaboration diagram |
| | | 2 | E004 | 6 | Caste nodes in the main collaboration diagram |
| | | 5 | E016 | 14 | Interaction edges in the main collaboration diagram |

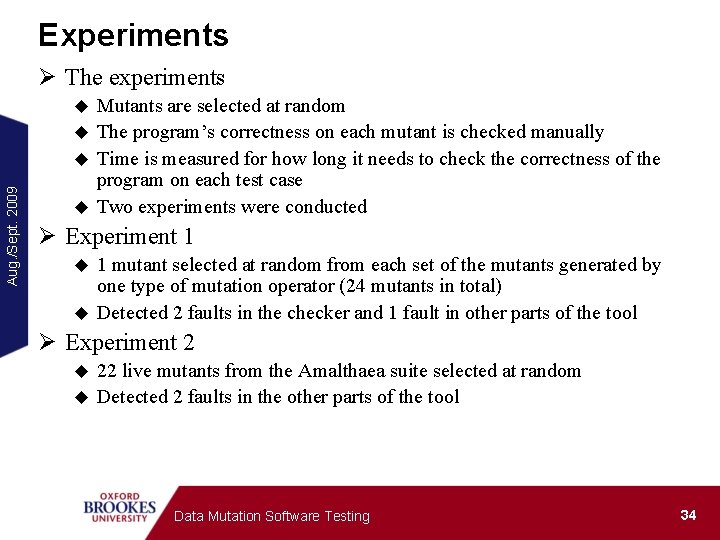

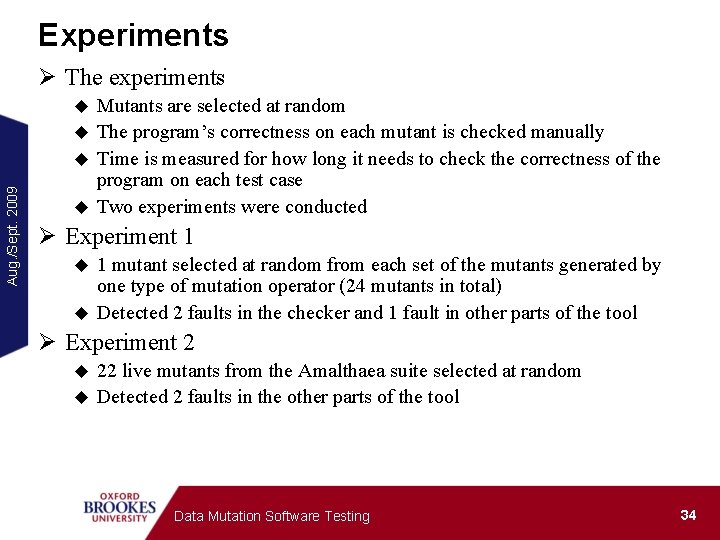

Experiments

- The experiments
  - Mutants are selected at random
  - The program's correctness on each mutant is checked manually
  - The time needed to check the correctness of the program on each test case is measured
  - Two experiments were conducted
- Experiment 1
  - 1 mutant selected at random from each set of mutants generated by one type of mutation operator (24 mutants in total)
  - Detected 2 faults in the checker and 1 fault in other parts of the tool
- Experiment 2
  - 22 live mutants selected at random from the Amalthaea suite
  - Detected 2 faults in other parts of the tool

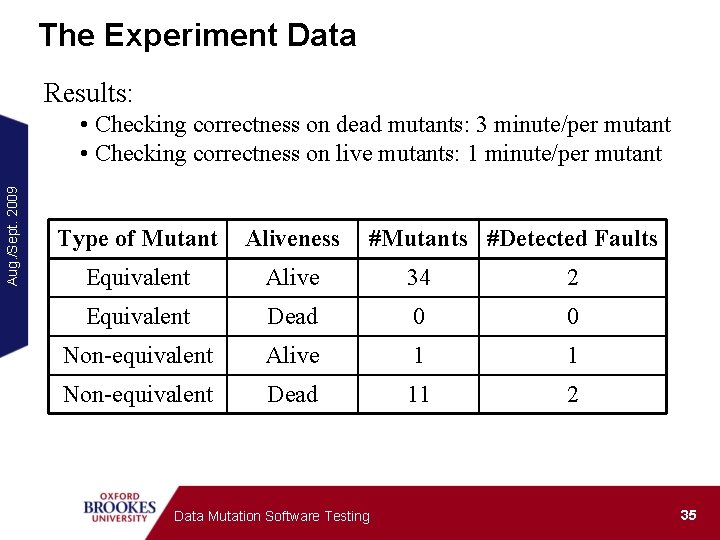

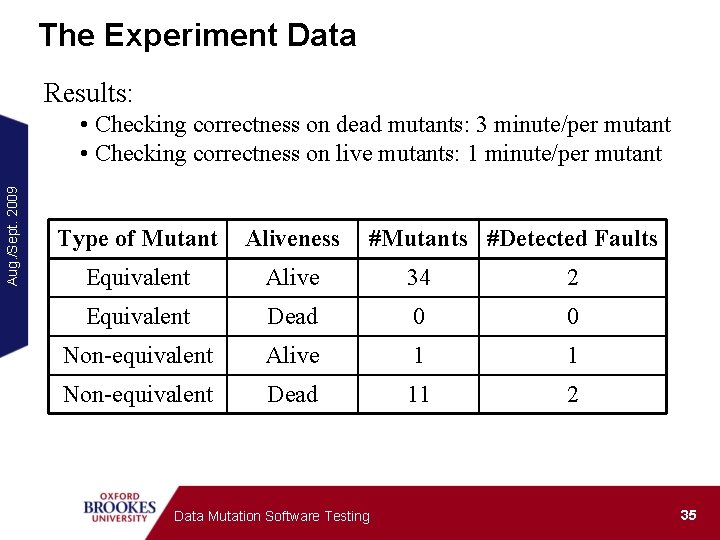

The Experiment Data

Results:

- Checking correctness on dead mutants: 3 minutes per mutant
- Checking correctness on live mutants: 1 minute per mutant

| Type of mutant | Aliveness | #Mutants | #Detected faults |
|----------------|-----------|----------|------------------|
| Equivalent | Alive | 34 | 2 |
| Equivalent | Dead | 0 | 0 |
| Non-equivalent | Alive | 1 | 1 |
| Non-equivalent | Dead | 11 | 2 |

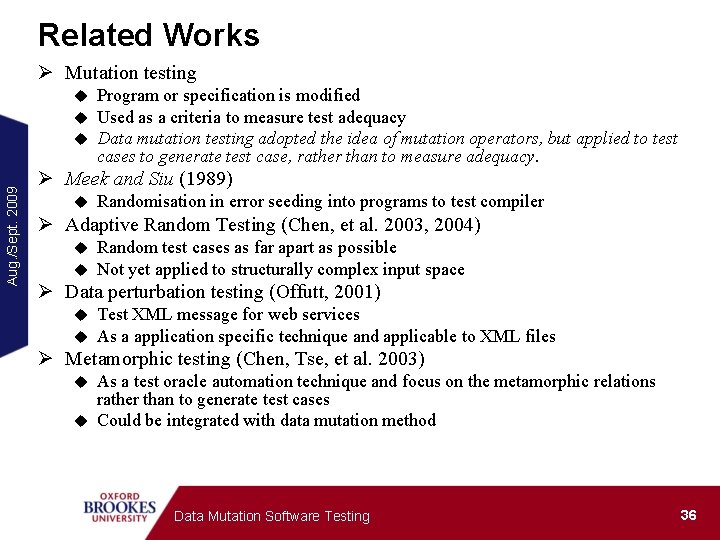

Related Work

- Mutation testing
  - The program or specification is modified
  - Used as a criterion to measure test adequacy
  - Data mutation testing adopts the idea of mutation operators, but applies them to test cases in order to generate test cases, rather than to measure adequacy.
- Meek and Siu (1989)
  - Randomisation in error seeding into programs to test compilers
- Adaptive Random Testing (Chen, et al. 2003, 2004)
  - Random test cases as far apart as possible
  - Not yet applied to structurally complex input spaces
- Data perturbation testing (Offutt, 2001)
  - Tests XML messages for web services
  - An application-specific technique, applicable to XML files
- Metamorphic testing (Chen, Tse, et al. 2003)
  - A test oracle automation technique that focuses on the metamorphic relations rather than on generating test cases
  - Could be integrated with the data mutation method

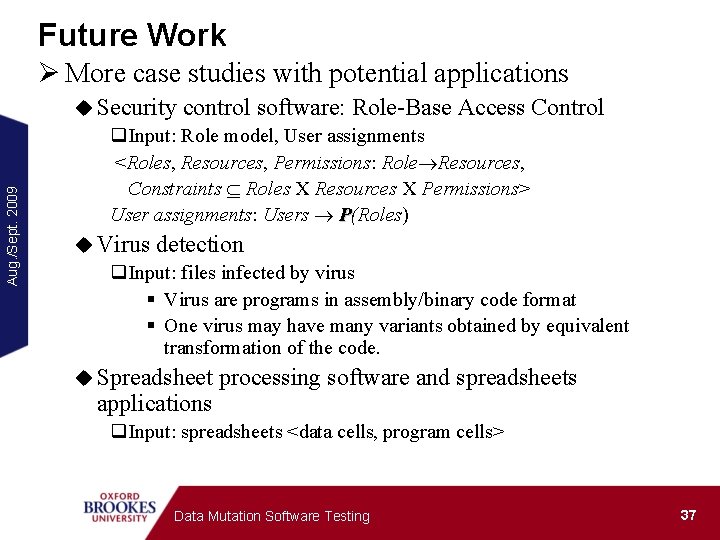

Future Work

- More case studies with potential applications
  - Security control software: Role-Based Access Control
    - Input: role model, user assignments
      - Role model: <Roles, Resources, Permissions: Role Resources, Constraints Roles × Resources × Permissions>
      - User assignments: Users → P(Roles)
  - Virus detection
    - Input: files infected by a virus
      - Viruses are programs in assembly/binary code format
      - One virus may have many variants obtained by equivalent transformation of the code.
  - Spreadsheet processing software and spreadsheet applications
    - Input: spreadsheets <data cells, program cells>

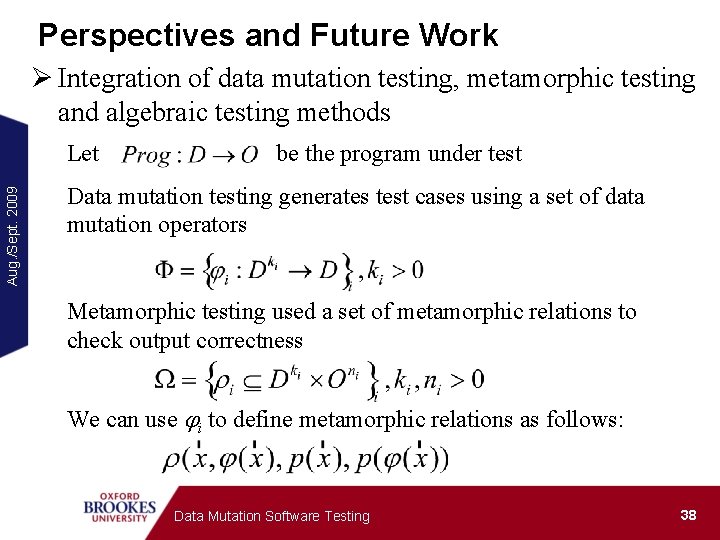

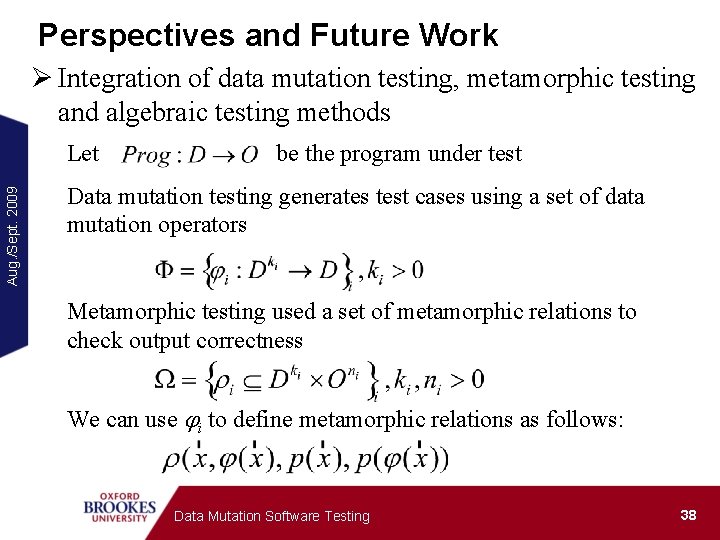

Perspectives and Future Work

- Integration of data mutation testing, metamorphic testing and algebraic testing methods
  - Consider a program under test.
  - Data mutation testing generates test cases using a set of data mutation operators.
  - Metamorphic testing uses a set of metamorphic relations to check output correctness.
  - The data mutation operators can be used to define metamorphic relations.

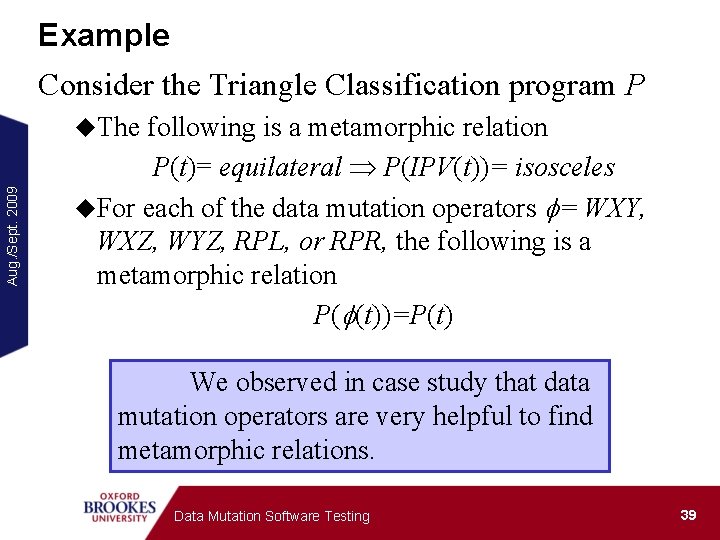

Example

Consider the Triangle Classification program P.

- The following is a metamorphic relation: P(t) = equilateral ⇒ P(IVP(t)) = isosceles
- For each of the data mutation operators f = WXY, WXZ, WYZ, RPL, or RPR, the following is a metamorphic relation: P(f(t)) = P(t)

We observed in the case study that data mutation operators are very helpful for finding metamorphic relations.
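The permutation relation P(f(t)) = P(t) can serve as an oracle without knowing the expected output of any mutant. The sketch below checks it against a deliberately faulty classifier; `buggy_classify` and its fault are hypothetical and only serve to show a violation being reported.

```python
from typing import Callable, Dict, List, Tuple

Triple = Tuple[int, int, int]

# The five operators that only reorder the parameters.
PERMUTATIONS: Dict[str, Callable[[Triple], Triple]] = {
    "WXY": lambda t: (t[1], t[0], t[2]),
    "WXZ": lambda t: (t[2], t[1], t[0]),
    "WYZ": lambda t: (t[0], t[2], t[1]),
    "RPL": lambda t: (t[1], t[2], t[0]),
    "RPR": lambda t: (t[2], t[0], t[1]),
}

def buggy_classify(x: int, y: int, z: int) -> str:
    """Hypothetical faulty program: the isosceles test only compares x and y."""
    if min(x, y, z) <= 0 or x + y <= z or y + z <= x or x + z <= y:
        return "non-triangle"
    if x == y == z:
        return "equilateral"
    if x == y:  # fault: y == z and x == z are never checked
        return "isosceles"
    return "scalene"

def permutation_violations(program: Callable[[int, int, int], str],
                           seeds: List[Triple]) -> List[Tuple[str, Triple]]:
    """Return (operator, seed) pairs for which P(f(t)) differs from P(t)."""
    found = []
    for seed in seeds:
        for name, op in PERMUTATIONS.items():
            if program(*op(seed)) != program(*seed):
                found.append((name, seed))
    return found

SEEDS: List[Triple] = [(5, 5, 5), (5, 5, 7), (5, 7, 9), (3, 5, 9)]
print(permutation_violations(buggy_classify, SEEDS))
```

Only the mutants of t2 expose the fault here, which illustrates why seeds should contain a variety of element relationships.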

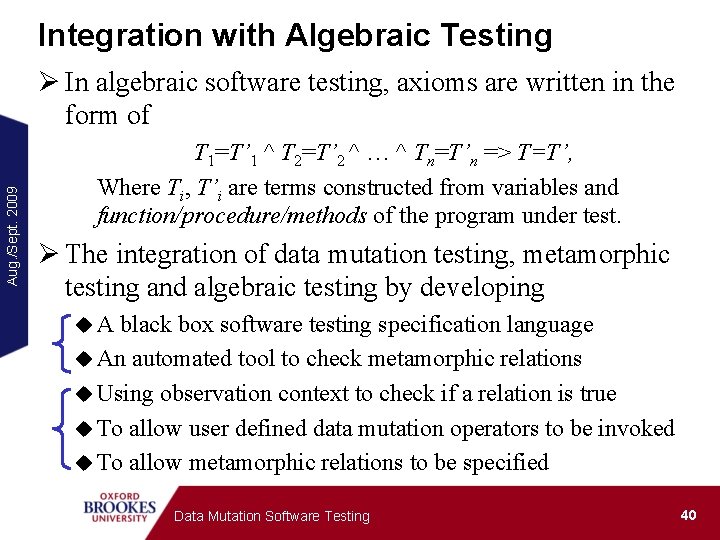

Integration with Algebraic Testing

- In algebraic software testing, axioms are written in the form T1 = T'1 ^ T2 = T'2 ^ … ^ Tn = T'n => T = T', where Ti and T'i are terms constructed from variables and the functions/procedures/methods of the program under test.
- The integration of data mutation testing, metamorphic testing and algebraic testing is pursued by developing
  - a black-box software testing specification language
  - an automated tool to check metamorphic relations
  - the use of observation contexts to check whether a relation is true
  - support for invoking user-defined data mutation operators
  - support for specifying metamorphic relations

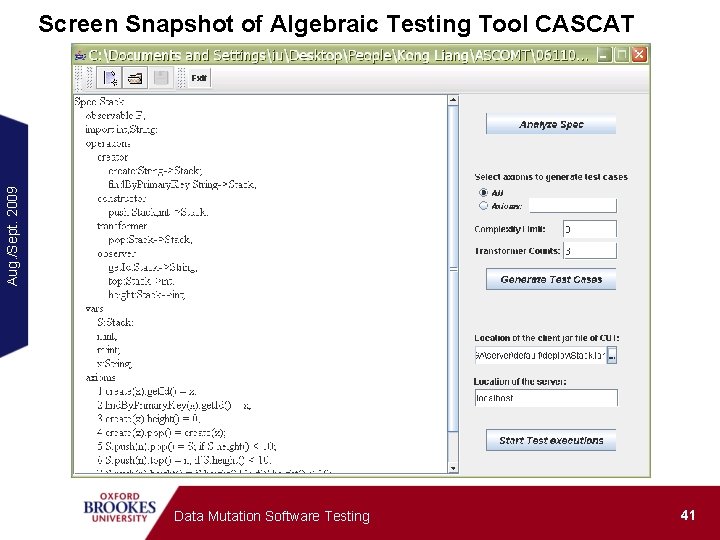

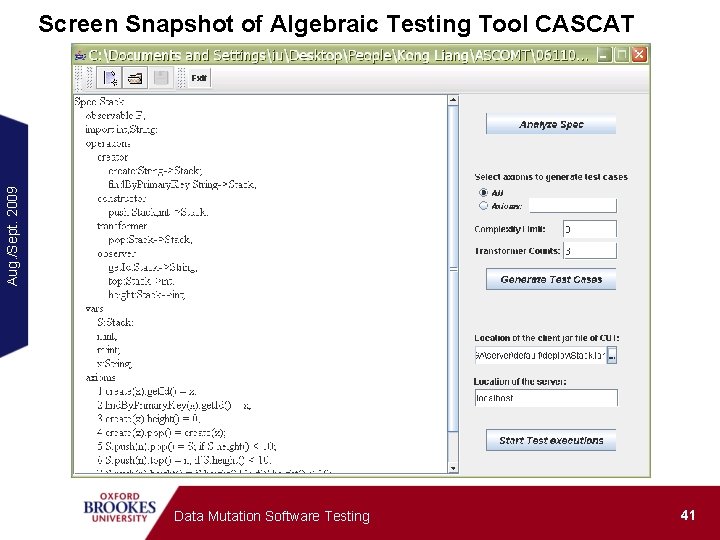

Screen Snapshot of Algebraic Testing Tool CASCAT

References

- Lijun Shan and Hong Zhu, Generating Structurally Complex Test Cases by Data Mutation: A Case Study of Testing an Automated Modelling Tool, Special Issue on Automation of Software Test, The Computer Journal (in press).
- Shan, L. and Zhu, H., Testing Software Modelling Tools Using Data Mutation, Proc. of AST'06, ACM Press, 2006, pp. 43-49.
- Zhu, H. and Shan, L., Caste-Centric Modelling of Multi-Agent Systems: The CAMLE Modelling Language and Automated Tools, in Beydeda, S. and Gruhn, V. (eds), Model-driven Software Development, Research and Practice in Software Engineering, Vol. II, Springer, 2005, pp. 57-89.
- Liang Kong, Hong Zhu and Bin Zhou, Automated Testing EJB Components Based on Algebraic Specifications, Proc. of TEST'07, IEEE CS Press, 2007.