DATA MINING PROCESS: Introduction

1. Look at the big picture.
2. Get the data.
3. Discover and visualize the data to gain insights.
4. Prepare the data for Machine Learning algorithms.
5. Select a model and train it.
6. Fine-tune your model.
7. Present your solution.
8. Launch, monitor, and maintain your system.

DATA MINING PROCESS: Environments

- Anaconda: https://anaconda.org (Jupyter Python notebooks)
- Matlab: https://www.mathworks.com (free trial available; student version can be purchased for $99)
- R: https://www.r-project.org (free software environment for statistical computing and graphics)
- WEKA: https://www.cs.waikato.ac.nz/ml/weka/ (the workbench for machine learning)

DATA MINING PROCESS: Environments

Anaconda is Python-based and hence the most flexible. Install these packages:

- matplotlib (graphing)
- numpy (library adding support for large, multi-dimensional arrays and matrices, along with a large collection of high-level mathematical functions to operate on these arrays)
- pandas (library for data manipulation and analysis)
- scipy (library for scientific and technical computing)
- scikit-learn (library for machine learning, providing a range of supervised and unsupervised learning algorithms)
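Before starting, it can help to confirm which of the packages listed above are already present. The following is a minimal sketch using only the standard library (Python 3.8 or later); the helper name `installed_versions` is hypothetical and not part of any of these packages.

```python
from importlib import metadata

# Distribution names as used by pip (taken from the package list above).
REQUIRED = ["matplotlib", "numpy", "pandas", "scipy", "scikit-learn"]


def installed_versions(names=REQUIRED):
    """Return {name: version string, or None if the package is not installed}."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    # Print one line per package so missing ones are easy to spot.
    for name, version in installed_versions().items():
        print(f"{name:15s} {version or 'NOT INSTALLED'}")
```

Any package reported as missing can then be installed with pip.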

DATA MINING PROCESS: Environments

- Matlab: https://www.mathworks.com (purchase any additional packages)
- R: https://www.r-project.org (install modules as needed)
- WEKA: https://www.cs.waikato.ac.nz/ml/weka/ (mostly self-contained, but can be extended with add-on packages)
- Excel or alternative spreadsheets!

DATA PREPARATION: Grab Some Data

Popular open data repositories:

- UC Irvine Machine Learning Repository: https://archive.ics.uci.edu/ml/index.php
- Kaggle datasets: https://www.kaggle.com
- Amazon's AWS datasets: https://registry.opendata.aws
- NASA Open Datasets: https://nasa.github.io/data-nasa-gov-frontpage/
- USGS Datasets: https://www.usgs.gov/products/data-and-tools/science-datasets
- NOAA Datasets: https://www.ncdc.noaa.gov/cdo-web/datasets

DATA PREPARATION: Dataset Portals

Meta portals (they list open data repositories):

- http://dataportals.org/
- http://opendatamonitor.eu/
- http://quandl.com/

Other pages listing many popular open data repositories:

- Wikipedia's list of Machine Learning datasets
- Quora.com question
- Datasets subreddit

Data about a specific problem: search for it!

DATA PREPARATION: Data cleaning

Anaconda: Getting Started

From the OS command line:

    python3 -m pip install matplotlib numpy pandas scipy scikit-learn

OR from within a Jupyter notebook:

    !python3 -m pip install matplotlib numpy pandas scipy scikit-learn

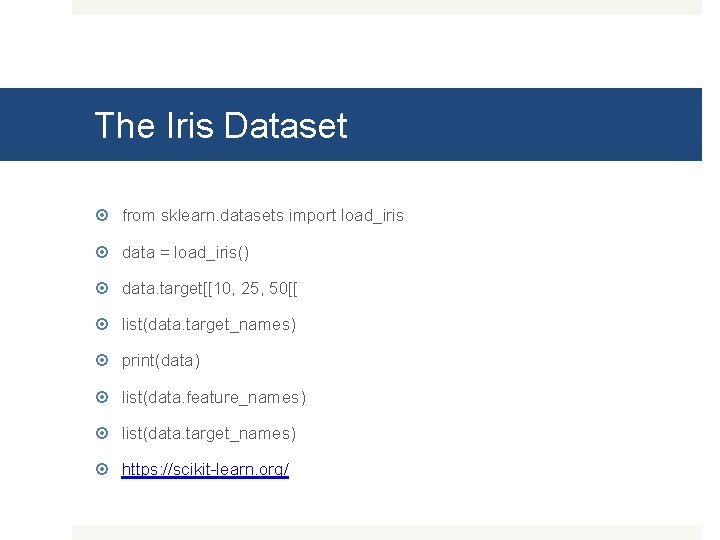

The Iris Dataset (https://scikit-learn.org/)

    from sklearn.datasets import load_iris

    data = load_iris()
    data.target[[10, 25, 50]]   # class labels of three selected samples
    list(data.target_names)     # names of the classes
    print(data)                 # the full dataset object
    list(data.feature_names)    # names of the measured features
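As an illustration of the "select a model and train it" step, the Iris dataset loaded above can be split into training and test sets and fed to a classifier. This is a minimal sketch, not a recommended model: the choice of k-nearest neighbors, the 80/20 split, and `random_state=42` are assumptions made for the example.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = load_iris()
X, y = iris.data, iris.target  # 150 samples, 4 features, 3 classes

# Hold out 20% of the samples for evaluation; stratify keeps the class balance.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Fit a simple classifier on the training portion only.
model = KNeighborsClassifier(n_neighbors=5)
model.fit(X_train, y_train)

# Evaluate on the held-out samples the model has not seen.
accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"Test accuracy: {accuracy:.2f}")
```

Evaluating on held-out data rather than on the training data gives a more honest estimate of how the model behaves on new samples.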

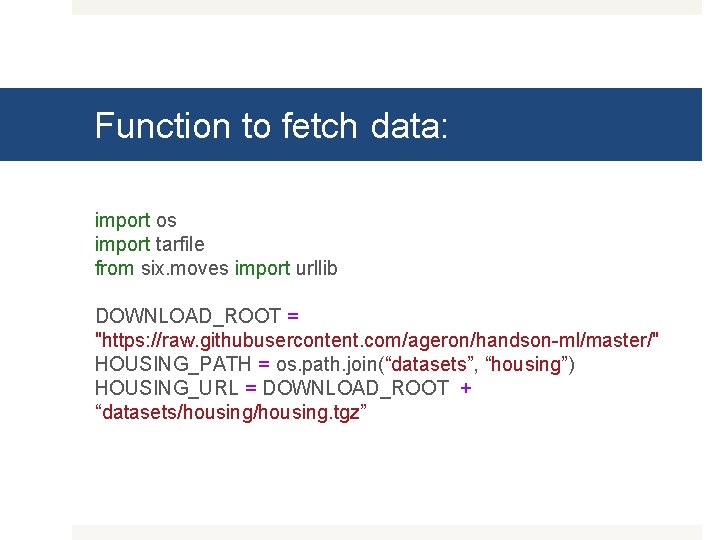

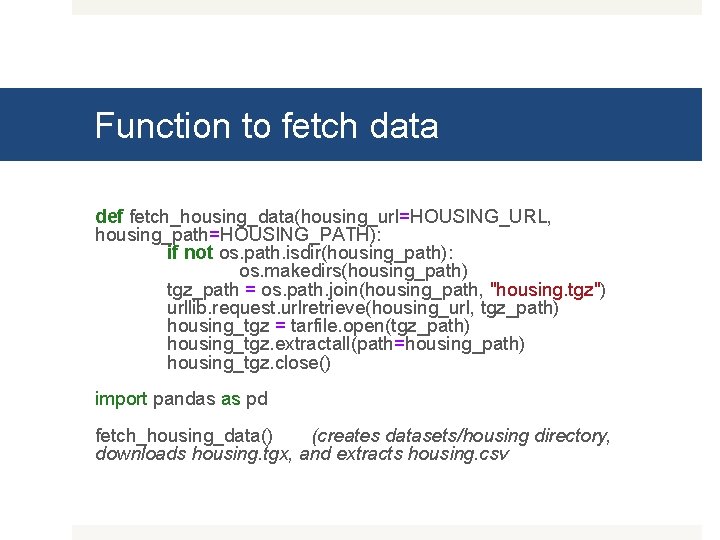

Function to fetch data:

    import os
    import tarfile
    import urllib.request  # Python 3 standard library; replaces six.moves

    DOWNLOAD_ROOT = "https://raw.githubusercontent.com/ageron/handson-ml/master/"
    HOUSING_PATH = os.path.join("datasets", "housing")
    HOUSING_URL = DOWNLOAD_ROOT + "datasets/housing.tgz"

Function to fetch data

    def fetch_housing_data(housing_url=HOUSING_URL, housing_path=HOUSING_PATH):
        # Create the target directory if it does not exist yet
        if not os.path.isdir(housing_path):
            os.makedirs(housing_path)
        tgz_path = os.path.join(housing_path, "housing.tgz")
        # Download the archive, then unpack it next to the download
        urllib.request.urlretrieve(housing_url, tgz_path)
        housing_tgz = tarfile.open(tgz_path)
        housing_tgz.extractall(path=housing_path)
        housing_tgz.close()

    fetch_housing_data()

Calling fetch_housing_data() creates the datasets/housing directory, downloads housing.tgz, and extracts housing.csv.
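The download step needs network access, but the unpacking step can be tried offline. The sketch below builds a tiny archive locally and extracts it with the same `tarfile` calls used above. The helper names `make_sample_tgz` and `extract_tgz` and the file `sample.csv` are hypothetical and exist only for this illustration.

```python
import os
import tarfile
import tempfile


def make_sample_tgz(tgz_path, csv_name="sample.csv"):
    """Write a tiny CSV and pack it into a .tgz (stand-in for a downloaded archive)."""
    with tempfile.TemporaryDirectory() as tmp:
        csv_path = os.path.join(tmp, csv_name)
        with open(csv_path, "w") as f:
            f.write("a,b\n1,2\n")
        with tarfile.open(tgz_path, "w:gz") as tgz:
            tgz.add(csv_path, arcname=csv_name)


def extract_tgz(tgz_path, target_dir):
    """Extract every member of the archive into target_dir and return their names."""
    os.makedirs(target_dir, exist_ok=True)
    with tarfile.open(tgz_path) as tgz:
        names = tgz.getnames()
        tgz.extractall(path=target_dir)
    return names


# Build the archive and unpack it inside a throwaway working directory.
with tempfile.TemporaryDirectory() as work:
    archive = os.path.join(work, "sample.tgz")
    make_sample_tgz(archive)
    extracted = extract_tgz(archive, os.path.join(work, "out"))
    extracted_exists = os.path.exists(os.path.join(work, "out", "sample.csv"))
    print(extracted, extracted_exists)
```

Opening the archive in a `with` block closes it automatically, even if extraction raises an error.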

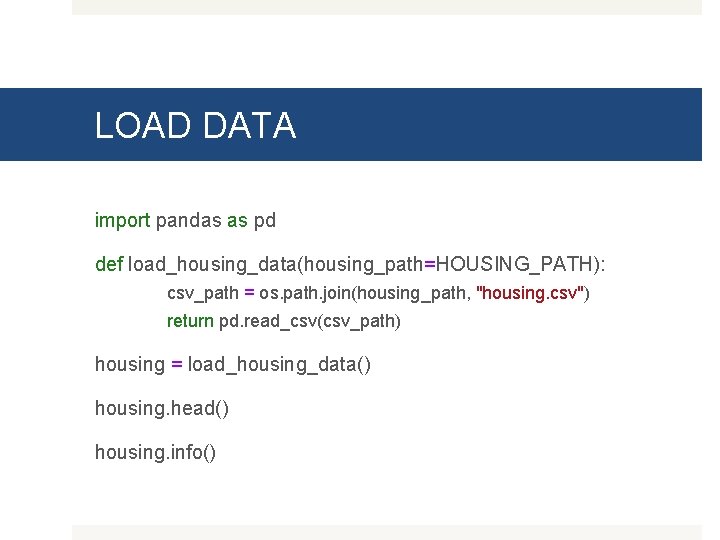

LOAD DATA

    import pandas as pd

    def load_housing_data(housing_path=HOUSING_PATH):
        csv_path = os.path.join(housing_path, "housing.csv")
        return pd.read_csv(csv_path)

    housing = load_housing_data()
    housing.head()   # first five rows
    housing.info()   # column types and non-null counts
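A few more pandas calls are commonly used for a first look at a freshly loaded table. The sketch below runs them on a tiny in-memory CSV so it works without the download. The rows and the column names (`rooms`, `price`, `region`) are made up for illustration and are not taken from housing.csv.

```python
import io

import pandas as pd

# Toy rows for illustration only; NOT taken from housing.csv.
TOY_CSV = """rooms,price,region
3,150000,north
4,200000,south
2,,north
5,320000,south
"""

df = pd.read_csv(io.StringIO(TOY_CSV))

print(df.head())                      # first rows of the table
df.info()                             # column types and non-null counts
print(df.describe())                  # summary statistics for numeric columns
print(df["region"].value_counts())    # frequency of each category

# Count missing values per column (the empty price field is read as NaN).
missing = df.isnull().sum()
print(missing)
```

The same calls can be applied to the `housing` DataFrame once it has been loaded.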

EXPLORING HAVE FUN!
