Data mining for pothole detection Pro gradu seminar

- Slides: 27

Data mining for pothole detection
Pro gradu (master's thesis) seminar, 10.2.2011
Hannu Hautakangas, Jukka Nieminen

Contents
- Introduction
- Related work
- Data preprocessing
- Feature extraction
- Feature selection
- Support vector machine
- Results
- Problems
- References

Introduction
- The purpose of the research is to detect anomalies on the road surface
  - Expansion joints
  - Potholes
  - Speed bumps
  - Etc.
- Supervisors
  - Tapani Ristaniemi
  - Fengyu Cong

Related work
- Accelerometer-based techniques
  - Pothole Patrol
  - Nericell
  - Terrain classification
- Other techniques
  - Image detection
  - Laser profilometer
  - Ground-penetrating radar

Data
- Acceleration data
  - Contains lateral, longitudinal and vertical axes
  - GPS position and timestamp for each measurement
  - Class label for each measurement
- Sampling rate is 38 Hz
- Data was collected using several different vehicles

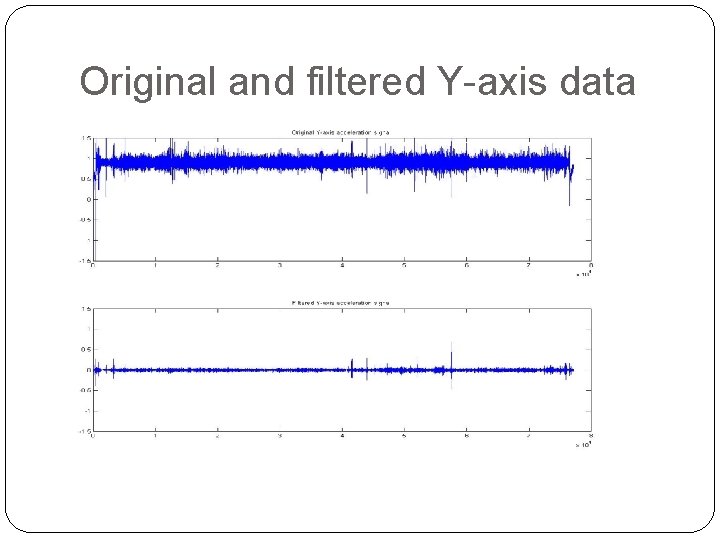

Data preprocessing
- Several filters were designed and tested using different passbands in the frequency range 0.5-6 Hz
- Data was windowed using a sliding window
- Different sliding window functions were tested
  - Chebyshev
  - Hamming
  - Taylor
  - Etc.
- Normalization to the range [0, 1]
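The preprocessing chain above (band-pass filtering, sliding-window tapering, normalization) could look roughly like the following. This is a minimal sketch, not the filters used in the work: the FFT-masking band-pass, the 38-sample window, the 19-sample step and all function names are assumptions made for illustration. Only the 38 Hz sampling rate, the 0.5-6 Hz range, the Hamming window and the [0, 1] normalization come from the slides.

```python
import numpy as np

FS = 38.0  # sampling rate in Hz, as stated in the slides


def bandpass_fft(x, fs=FS, lo=0.5, hi=6.0):
    """Crude band-pass: zero every FFT bin outside [lo, hi] Hz (assumed stand-in filter)."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def sliding_windows(x, size, step, window=np.hamming):
    """Cut x into overlapping segments and taper each one with the window function."""
    taper = window(size)
    starts = range(0, len(x) - size + 1, step)
    return np.array([x[s:s + size] * taper for s in starts])


def minmax_normalize(x):
    """Scale values to [0, 1]; a constant input maps to all zeros."""
    x = np.asarray(x, dtype=float)
    span = x.max() - x.min()
    return np.zeros_like(x) if span == 0 else (x - x.min()) / span


# Synthetic vertical-axis signal: 2 Hz component, 15 Hz disturbance, constant offset.
t = np.arange(380) / FS
raw = np.sin(2 * np.pi * 2 * t) + np.sin(2 * np.pi * 15 * t) + 3.0
filtered = bandpass_fft(raw)
windows = sliding_windows(filtered, size=38, step=19)  # hypothetical 1 s windows, 50 % overlap
normalized = minmax_normalize(filtered)
```

Swapping `np.hamming` for another taper only requires a function that takes the window length and returns the weights.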

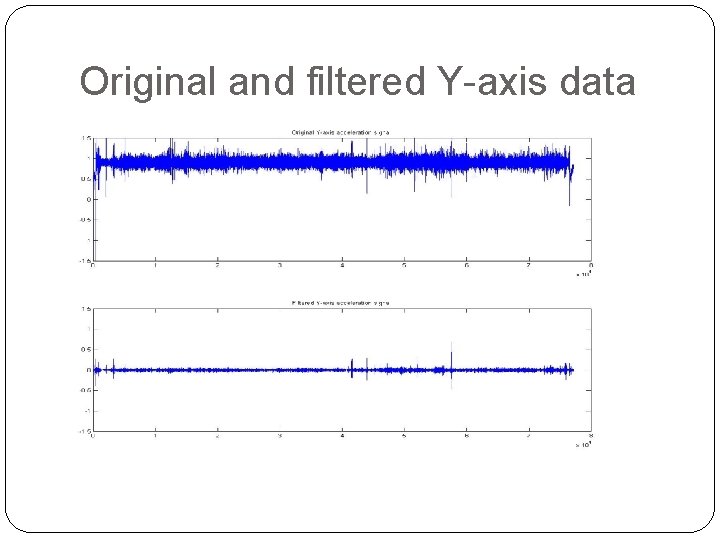

Original and filtered Y-axis data

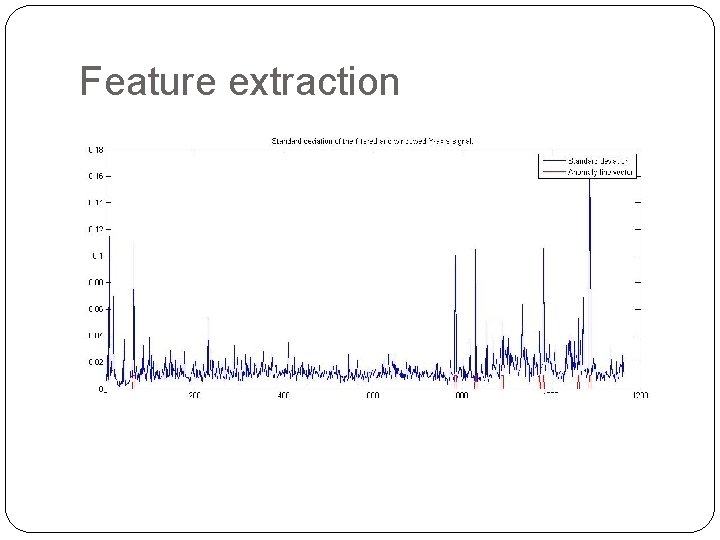

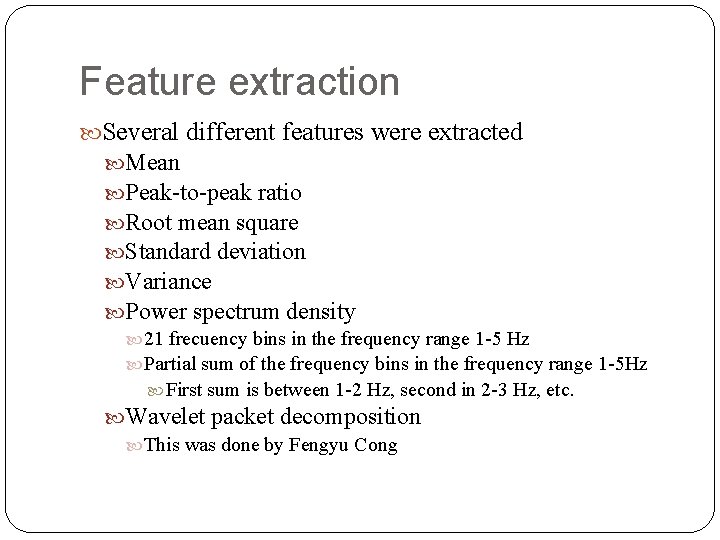

Feature extraction
- Several different features were extracted
  - Mean
  - Peak-to-peak ratio
  - Root mean square
  - Standard deviation
  - Variance
  - Power spectral density
    - 21 frequency bins in the frequency range 1-5 Hz
  - Partial sums of the frequency bins in the frequency range 1-5 Hz
    - The first sum covers 1-2 Hz, the second 2-3 Hz, etc.
  - Wavelet packet decomposition
    - This was done by Fengyu Cong
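As an illustration of the time-domain and spectral features listed above, the sketch below computes them for a single window. Several details are assumptions: the plain periodogram as the spectral estimate, the interpolation onto 21 evenly spaced frequencies between 1 and 5 Hz, and the use of peak-to-peak amplitude (max minus min) in place of the "peak-to-peak ratio", whose exact definition the slides do not give. Wavelet packet features are not covered.

```python
import numpy as np


def extract_features(window, fs=38.0, n_bins=21, f_lo=1.0, f_hi=5.0):
    """Return a dict of simple statistical and spectral features for one window."""
    window = np.asarray(window, dtype=float)
    feats = {
        "mean": window.mean(),
        "peak_to_peak": window.max() - window.min(),  # assumed definition
        "rms": np.sqrt(np.mean(window ** 2)),
        "std": window.std(),
        "var": window.var(),
    }

    # Periodogram estimate of the power spectral density (assumed estimator).
    power = np.abs(np.fft.rfft(window)) ** 2 / (fs * len(window))
    freqs = np.fft.rfftfreq(len(window), d=1.0 / fs)

    # 21 PSD values on an even grid from 1 to 5 Hz (0.2 Hz spacing).
    grid = np.linspace(f_lo, f_hi, n_bins)
    feats["psd_bins"] = np.interp(grid, freqs, power)

    # Partial sums over 1-2 Hz, 2-3 Hz, 3-4 Hz and 4-5 Hz.
    edges = np.arange(f_lo, f_hi + 1.0)
    feats["psd_partial_sums"] = np.array([
        power[(freqs >= a) & (freqs < b)].sum()
        for a, b in zip(edges[:-1], edges[1:])
    ])
    return feats


# Example: a 10 s window containing a 2.5 Hz oscillation.
t = np.arange(380) / 38.0
features = extract_features(np.sin(2 * np.pi * 2.5 * t))
```

For this example the second partial sum (2-3 Hz) dominates, since all signal energy sits at 2.5 Hz.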

Feature extraction

Feature selection
- Feature selection is used to reduce the number of features, which reduces the computational effort and makes classification faster and more accurate
- Different techniques were tested
  - Backward and forward selection
  - Genetic algorithm
  - Principal component analysis

Backward and forward selection
- Originally introduced by M. A. Efroymson in 1960
- Tries to find the best feature subset
  - The model includes only significant features
- Features are usually evaluated using an F-test
  - Based on linear regression
  - A feature is significant if its F-value exceeds a predetermined significance threshold

Backward and forward selection
- Backward selection
  - Starts with all features in the model
  - Removes features one by one, starting from the least significant
  - Continues until the model includes only significant features
- Forward selection
  - The opposite of backward selection
  - Starts with zero features in the model
  - Adds features one by one, starting from the most significant
  - Continues until all significant features are selected
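The forward variant described above can be sketched as follows. This is an illustrative implementation under stated assumptions, not the code used in the work: the partial F-statistic is computed from ordinary least-squares fits with an intercept, and the entry threshold `f_enter=4.0` and the function names are hypothetical choices. Backward selection would mirror it, starting from the full model and dropping the feature with the smallest F-value.

```python
import numpy as np


def residual_sum_of_squares(X, y, cols):
    """RSS of a least-squares fit of y on an intercept plus the given columns of X."""
    design = np.column_stack([np.ones(len(y))] + [X[:, j] for j in cols])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return float(resid @ resid)


def forward_selection(X, y, f_enter=4.0):
    """Add the most significant feature at each step until none exceeds f_enter."""
    n, p = X.shape
    selected = []
    current_rss = residual_sum_of_squares(X, y, selected)
    while len(selected) < p:
        best = None  # (F-value, feature index, RSS after adding it)
        for j in range(p):
            if j in selected:
                continue
            new_rss = residual_sum_of_squares(X, y, selected + [j])
            dof = n - len(selected) - 2  # residual degrees of freedom after adding j
            f_value = (current_rss - new_rss) / (new_rss / dof)
            if best is None or f_value > best[0]:
                best = (f_value, j, new_rss)
        if best[0] < f_enter:
            break  # no remaining feature is significant
        selected.append(best[1])
        current_rss = best[2]
    return selected


# Synthetic example: only features 1 and 3 influence the target.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 3.0 * X[:, 1] - 2.0 * X[:, 3] + 0.1 * rng.normal(size=200)
chosen = forward_selection(X, y)
```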

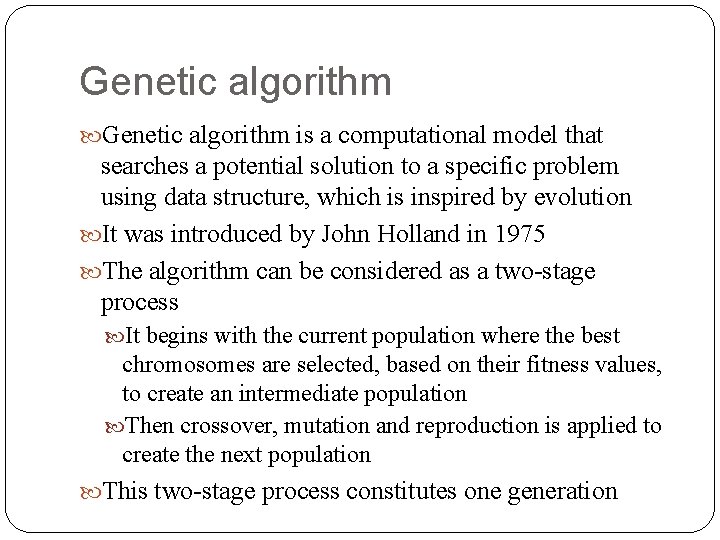

Genetic algorithm
- A genetic algorithm is a computational model inspired by evolution that searches for a potential solution to a specific problem using a chromosome-like data structure
- It was introduced by John Holland in 1975
- The algorithm can be considered a two-stage process
  - It begins with the current population, from which the best chromosomes are selected, based on their fitness values, to create an intermediate population
  - Then crossover, mutation and reproduction are applied to create the next population
- This two-stage process constitutes one generation

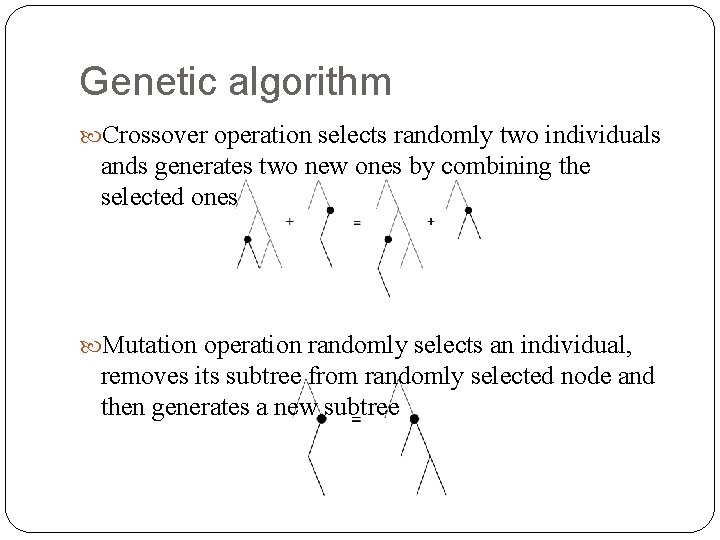

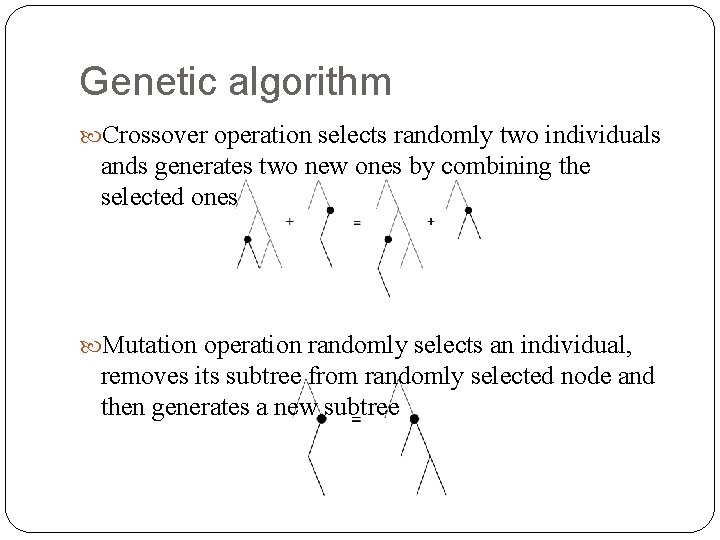

Genetic algorithm
- The crossover operation randomly selects two individuals and generates two new ones by combining the selected ones
- The mutation operation randomly selects an individual, removes its subtree from a randomly selected node and then generates a new subtree

Genetic algorithm
- The reproduction operation moves selected individuals to the next population without any change
- Each feature subset is represented as a binary vector of dimension m, where m is the number of features
  - Bit 1 means that the corresponding feature is part of the subset and bit 0 means that it is not
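A compact sketch of the two-stage generation loop with the binary encoding described above is given below. It is an illustration under assumptions: tournament selection, single-point crossover, bit-flip mutation (instead of the subtree mutation mentioned for tree-structured individuals), the population size, the mutation rate and the toy fitness function are all hypothetical choices. In practice the fitness would be something like the classification accuracy obtained with the selected features.

```python
import random


def genetic_feature_selection(fitness, m, pop_size=20, generations=60,
                              p_mut=0.05, seed=0):
    """Search for a binary feature mask of length m that maximizes fitness(mask)."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(m)] for _ in range(pop_size)]

    for _ in range(generations):
        # Stage 1: select fitter chromosomes into an intermediate population.
        intermediate = []
        for _ in range(pop_size):
            a, b = rng.sample(population, 2)
            intermediate.append(a if fitness(a) >= fitness(b) else b)

        # Stage 2: reproduction, crossover and mutation build the next population.
        best = max(population, key=fitness)
        next_population = [best[:]]  # reproduction: the best mask is copied unchanged
        while len(next_population) < pop_size:
            p1, p2 = rng.sample(intermediate, 2)
            cut = rng.randrange(1, m)  # single-point crossover position
            for child in (p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]):
                # Bit-flip mutation: each bit toggles with probability p_mut.
                mutated = [bit ^ 1 if rng.random() < p_mut else bit for bit in child]
                next_population.append(mutated)
        population = next_population[:pop_size]

    return max(population, key=fitness)


# Toy fitness: number of bits that agree with a hypothetical "ideal" subset.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0]


def toy_fitness(mask):
    return sum(int(a == b) for a, b in zip(mask, TARGET))


best_mask = genetic_feature_selection(toy_fitness, m=len(TARGET))
```

Because the best individual is always carried over unchanged, the best fitness in the population never decreases from one generation to the next.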

Principal component analysis
- PCA was introduced by Karl Pearson in 1901
  - The original method could not handle more than two or three variables
- In 1933 Harold Hotelling described methods for computing multivariate PCA

Principal component analysis
- The objective of PCA is to find uncorrelated principal components Z1, Z2, ..., Zp that describe the dependencies between the variables X1, X2, ..., Xp
- Principal components are ordered so that the first component Z1 accounts for the largest amount of variation in the data, the second component Z2 for the second largest amount, and so on
- Principal components are selected based on their eigenvalues
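The ordering and selection by eigenvalue described above can be sketched with an eigendecomposition of the sample covariance matrix. The function name and the synthetic data are assumptions for illustration, and the sketch does not reproduce the tooling used in the work.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its leading principal components.

    Returns the component scores Z, the eigenvalues of the kept components and
    the fraction of total variance each kept component explains.
    """
    centered = X - X.mean(axis=0)
    covariance = np.cov(centered, rowvar=False)
    eigenvalues, eigenvectors = np.linalg.eigh(covariance)  # ascending order
    order = np.argsort(eigenvalues)[::-1]                   # largest variance first
    eigenvalues = eigenvalues[order]
    eigenvectors = eigenvectors[:, order]
    scores = centered @ eigenvectors[:, :n_components]
    explained = eigenvalues[:n_components] / eigenvalues.sum()
    return scores, eigenvalues[:n_components], explained


# Synthetic example: the second variable is almost a multiple of the first.
rng = np.random.default_rng(0)
base = rng.normal(size=(100, 1))
X = np.hstack([base,
               2.0 * base + 0.1 * rng.normal(size=(100, 1)),
               rng.normal(size=(100, 1))])
Z, kept_eigenvalues, explained_ratio = pca(X, n_components=2)
```

The columns of `Z` are uncorrelated, and the eigenvalues give the variance captured by each component, which is the quantity used to decide how many components to keep.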

Support vector machine
- Vladimir Vapnik introduced SVM in 1995
- A binary classification tool
- Tries to find the optimal separating hyperplane that separates the classes from each other
- A basic SVM can classify only two classes, but it can be extended to multiclass classification

Support vector machine
- Creates a model based on training data
  - Each data sample has a class label
  - The model predicts to which class a specific data sample belongs
- The model is tested using testing data
  - Predicted labels are compared to known labels
- The LIBSVM library was used from Matlab as the SVM tool
  - LIBSVM implements most of the common SVM methods
  - Supports multiclass classification
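The train, predict and compare workflow above can be illustrated with a very small linear SVM. Note the substitution: the work used LIBSVM, whereas this sketch trains a linear soft-margin SVM by plain full-batch subgradient descent on the hinge loss, purely to keep the example self-contained. The regularization weight, learning rate, epoch count and toy data are assumptions.

```python
import numpy as np


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=500):
    """Minimize lam/2 * ||w||^2 + mean(hinge loss) for labels y in {-1, +1}."""
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        violating = margins < 1  # samples inside the margin or misclassified
        grad_w = lam * w - (y[violating][:, None] * X[violating]).sum(axis=0) / n
        grad_b = -y[violating].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(X, w, b):
    """Assign +1 or -1 depending on the side of the separating hyperplane."""
    return np.where(X @ w + b >= 0, 1, -1)


# Toy training data: two well-separated classes with known labels.
X_train = np.array([[2.0, 2.0], [3.0, 2.0], [2.0, 3.0],
                    [-2.0, -2.0], [-3.0, -2.0], [-2.0, -3.0]])
y_train = np.array([1, 1, 1, -1, -1, -1])
w, b = train_linear_svm(X_train, y_train)

# Testing: predicted labels are compared to the known labels.
X_test = np.array([[2.5, 2.5], [-2.5, -2.5]])
y_test = np.array([1, -1])
accuracy = float(np.mean(predict(X_test, w, b) == y_test))
```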

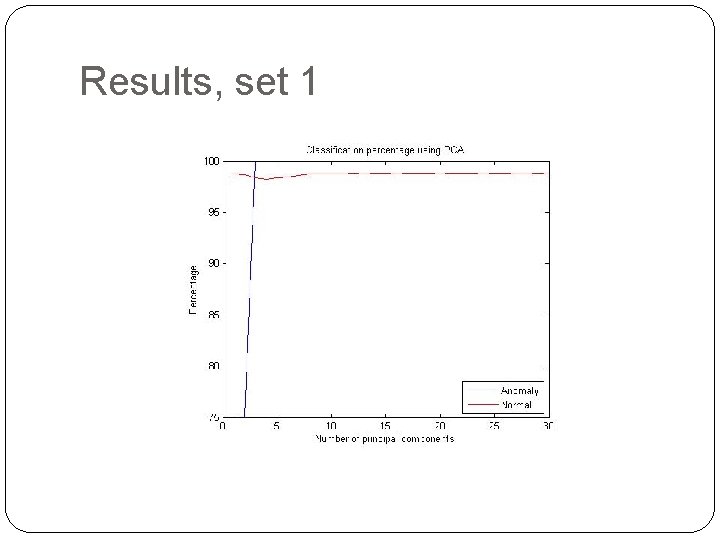

Results
- Data was classified with SVM using PCA
- Two data sets
  - Set 1 consists of 1779 normal and 12 anomaly samples
  - Set 2 consists of 1779 normal and 21 anomaly samples
  - Both sets have 30 features, which were generated with wavelet packet decomposition
- 70% of the normal samples were used to create the SVM model
  - The rest of the normal samples (534) were used to test the model
- PCA was used to select the features that represent most of the variation in the data

Results
- Set 1
  - With three or more principal components
    - All 12 anomalies were classified correctly
    - 6.93 normal samples out of 534 were classified incorrectly
- Set 2
  - With three principal components
    - 1.63 anomalies out of 21 were classified incorrectly
    - 7.26 normal samples out of 534 were classified incorrectly
  - With 10 or more principal components
    - 0.02 anomalies out of 21 were classified incorrectly
    - 6.53 normal samples out of 534 were classified incorrectly
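To make the counts above easier to compare, they can be converted into error rates. The snippet below only performs that arithmetic on the reported numbers; the dictionary layout and names are hypothetical. The fractional counts are taken at face value; they presumably are averages over repeated runs, but the slides do not say so, which makes this an assumption.

```python
# Reported (misclassified, total) counts per configuration and sample class.
REPORTED = {
    "set 1, >=3 PCs": {"anomaly": (0.0, 12), "normal": (6.93, 534)},
    "set 2, 3 PCs": {"anomaly": (1.63, 21), "normal": (7.26, 534)},
    "set 2, >=10 PCs": {"anomaly": (0.02, 21), "normal": (6.53, 534)},
}


def error_rate_percent(misclassified, total):
    """Share of misclassified samples, in percent."""
    return 100.0 * misclassified / total


def summarize(reported):
    """Map every configuration and class to its error rate, rounded to 2 decimals."""
    return {
        config: {cls: round(error_rate_percent(*counts), 2)
                 for cls, counts in classes.items()}
        for config, classes in reported.items()
    }


for config, rates in summarize(REPORTED).items():
    print(f"{config}: anomaly {rates['anomaly']:.2f} %, normal {rates['normal']:.2f} %")
```

For example, 6.93 misclassified normal samples out of 534 corresponds to an error rate of about 1.30 %, and 1.63 missed anomalies out of 21 to about 7.76 %.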

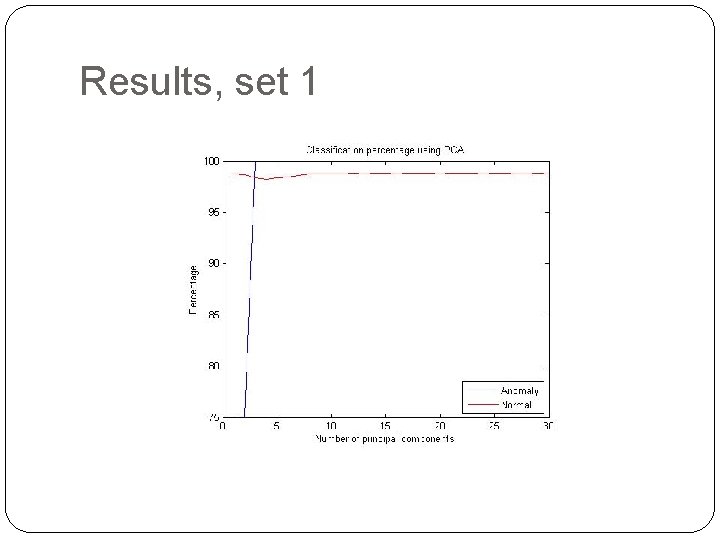

Results, set 1

Results, set 2

Problems
- Timestamps are not accurate, which affects the labeling of the classes
  - Some normal data samples are labeled as anomalies and vice versa
- Small number of anomaly data samples compared to normal data samples
  - For example, data set 1 has 12 anomaly and 1779 normal samples
- Multiclass classification is difficult because there are not enough anomaly samples to create a multiclass SVM model

References
- Backward and forward selection
  - N. R. Draper and H. Smith, Applied regression analysis, 2nd edition, John Wiley & Sons Inc, 1981.
  - M. A. Efroymson, Multiple regression analysis, in Mathematical Methods for Digital Computers, editors A. Ralston and H. S. Wilf, John Wiley & Sons Inc, 1960.
- Genetic algorithm
  - John Holland, Adaptation in natural and artificial systems: an introductory analysis with applications to biology, control, and artificial intelligence, University of Michigan Press, 1975.
  - L. B. Jack and Asoke K. Nandi, Genetic algorithms for feature selection in machine condition monitoring with vibration signals, in IEEE Signal Processing, Vol 147, No 3, June 2000.
  - Darrell Whitley, A genetic algorithm tutorial, in Statistics and Computing 4, pages 65-85, 1994.
- PCA
  - Harold Hotelling, Analysis of a complex of statistical variables into principal components, in Journal of Educational Psychology, volume 24, issue 7, pages 498-520, October 1933.
  - Ian T. Jolliffe, Principal Component Analysis, Springer-Verlag, New York, 2002.
  - Karl Pearson, On lines and planes of closest fit to a system of points in space, Philosophical Magazine, Vol. 2, pages 559-572, 1901.

References (continued)
- Related work
  - W. Dargie, Analysis of time and frequency domain features of accelerometer measurements, Proceedings of the 18th International Conference on Computer Communications and Networks, ICCCN 2009, pages 1-6.
  - Edmond DuPont, Carl Moore, Emmanuel Collins and Eric Coyle, Frequency response method for terrain classification in autonomous ground vehicles, in Autonomous Robots, vol. 24, pages 337-347, 2008.
  - J. Eriksson, L. Girod, B. Hull, R. Newton, S. Madden and H. Balakrishnan, The pothole patrol: using a mobile sensor network for road surface monitoring, in MobiSys 2008: Proceedings of the 6th international conference on Mobile systems, applications and services, ACM, New York, 2008, pages 29-39.
  - D. H. Kil and F. B. Shin, Automatic road-distress classification and identification using a combination of hierarchical classifiers and expert systems-subimage and object processing, Proceedings of International Conference on Image Processing, pages 414-417, vol 2, Santa Barbara, CA, USA, 1997.
  - J. Lin and Y. Liu, Potholes detection based on SVM in the pavement distress image, in Ninth International Symposium on Distributed Computing and Applications to Business, Engineering and Science, pages 544-547, Hong Kong, China, 2010.
  - P. Mohan, V. N. Padmanabhan and R. Ramjee, Nericell: Rich monitoring of road and traffic conditions using mobile smartphones, in SenSys 2008: Proceedings of the 6th ACM conference on Embedded network sensor systems, ACM, New York, 2008, pages 323-336.
- SVM
  - Corinna Cortes and Vladimir Vapnik, Support-Vector Networks, Machine Learning, Volume 20, pages 273-297, Kluwer Academic Publishers, Boston, 1995.
  - LIBSVM – A library for Support Vector Machines, http://www.csie.ntu.edu.tw/~cjlin/libsvm/, accessed 4.2.2011.

Thank you very much!