Data Mining for Information Retrieval ChunNan Hsu Institute

- Slides: 25

Data Mining for Information Retrieval Chun-Nan Hsu Institute of Information Science Academia Sinica, Taipei, TAIWAN Copyright © 1998 Chun-Nan Hsu, All right reserved Lab name TBA NTUST talk 1

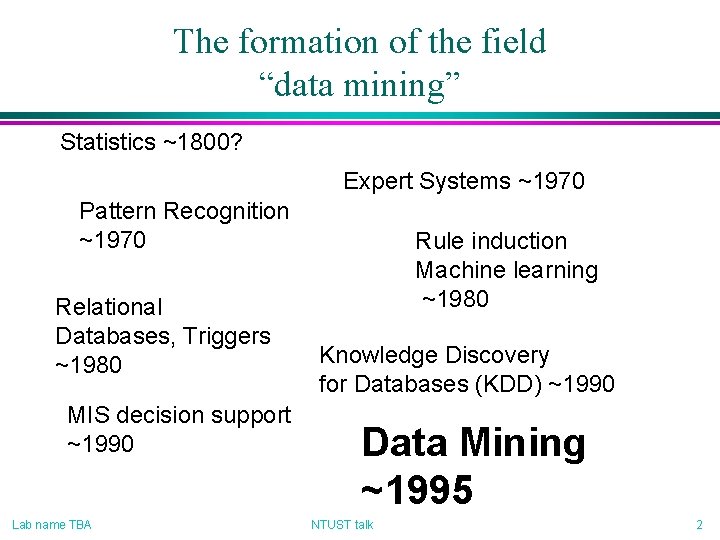

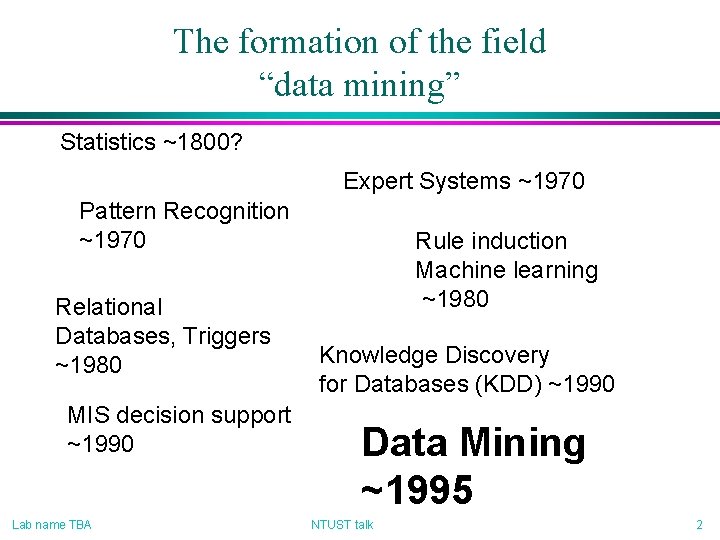

The formation of the field “data mining” Statistics ~1800? Expert Systems ~1970 Pattern Recognition ~1970 Relational Databases, Triggers ~1980 MIS decision support ~1990 Lab name TBA Rule induction Machine learning ~1980 Knowledge Discovery for Databases (KDD) ~1990 Data Mining ~1995 NTUST talk 2

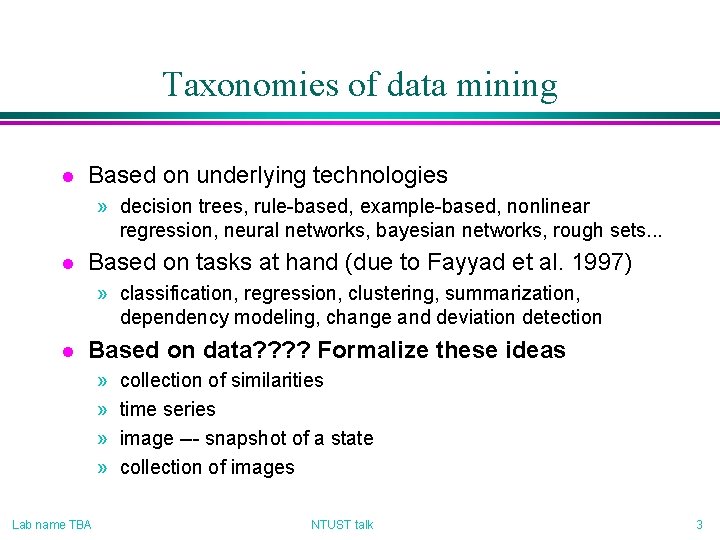

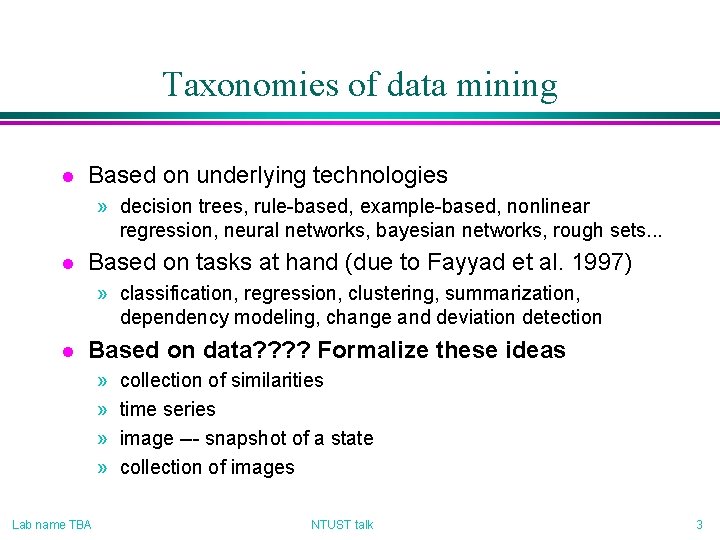

Taxonomies of data mining l Based on underlying technologies » decision trees, rule-based, example-based, nonlinear regression, neural networks, bayesian networks, rough sets. . . l Based on tasks at hand (due to Fayyad et al. 1997) » classification, regression, clustering, summarization, dependency modeling, change and deviation detection l Based on data? ? Formalize these ideas » » Lab name TBA collection of similarities time series image --- snapshot of a state collection of images NTUST talk 3

Collection of similarities l l l Characterize classes by generating classifiers (supervised learning)? ? ? Cluster objects into classes (clustering, unsupervised learning)? ? ? Many techniques available, most well understood Lab name TBA NTUST talk 4

Time series l l l Forecasting, predicting the next (few) states Characterizing the “trend” to detect changes and deviations Usually can be reformulated as a supervised learning problem Lab name TBA NTUST talk 5

Collection of images l l Extracting dependency, co-relations Example: a collection of shopping lists of supermarket customers Example: a collection of symptom lists of patients taking a new medicine? ? ? ? Techniques » Association rules » Bayesian networks and other probabilistic graphical models Lab name TBA NTUST talk 6

Image l l Summarization Key feature extraction Not much is known Example: a snapshot of an inventory database Lab name TBA NTUST talk 7

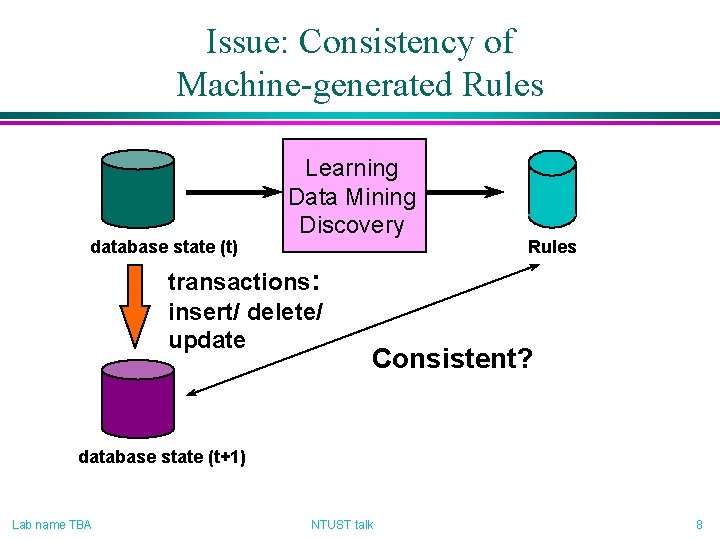

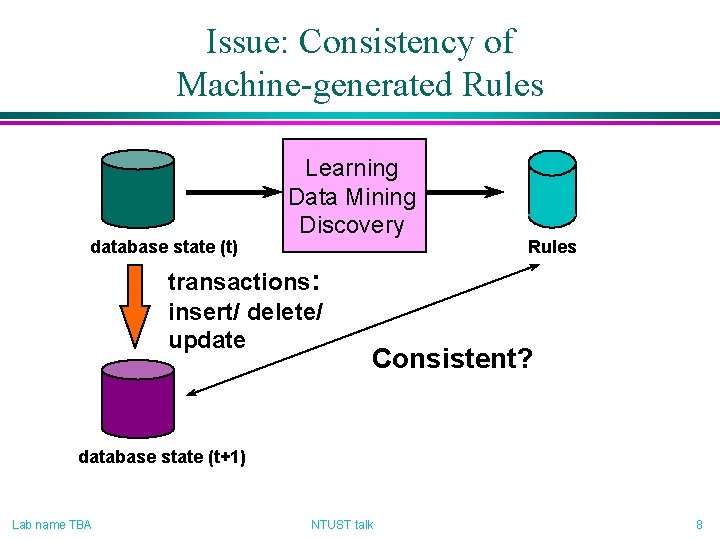

Issue: Consistency of Machine-generated Rules database state (t) Learning Data Mining Discovery transactions: insert/ delete/ update Rules Consistent? database state (t+1) Lab name TBA NTUST talk 8

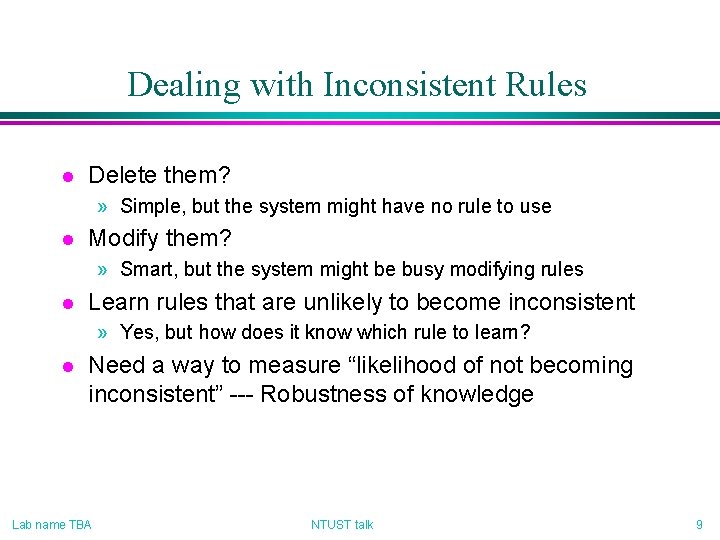

Dealing with Inconsistent Rules l Delete them? » Simple, but the system might have no rule to use l Modify them? » Smart, but the system might be busy modifying rules l Learn rules that are unlikely to become inconsistent » Yes, but how does it know which rule to learn? l Need a way to measure “likelihood of not becoming inconsistent” --- Robustness of knowledge Lab name TBA NTUST talk 9

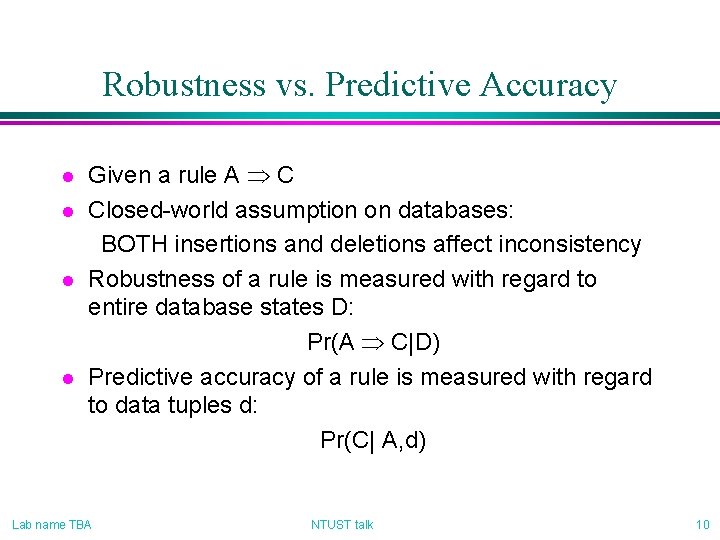

Robustness vs. Predictive Accuracy l l Given a rule A Þ C Closed-world assumption on databases: BOTH insertions and deletions affect inconsistency Robustness of a rule is measured with regard to entire database states D: Pr(A Þ C|D) Predictive accuracy of a rule is measured with regard to data tuples d: Pr(C| A, d) Lab name TBA NTUST talk 10

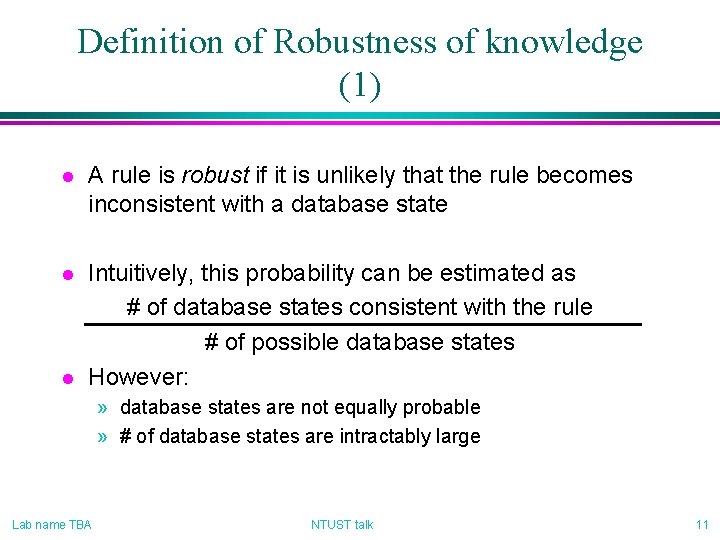

Definition of Robustness of knowledge (1) l A rule is robust if it is unlikely that the rule becomes inconsistent with a database state l Intuitively, this probability can be estimated as # of database states consistent with the rule # of possible database states However: l » database states are not equally probable » # of database states are intractably large Lab name TBA NTUST talk 11

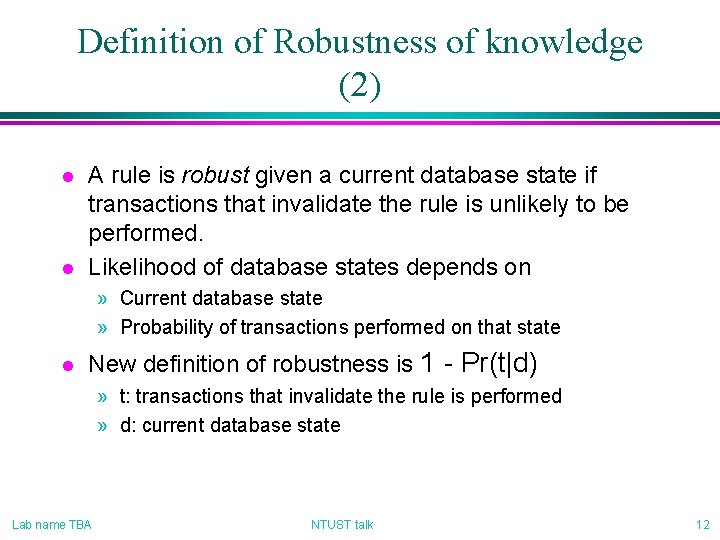

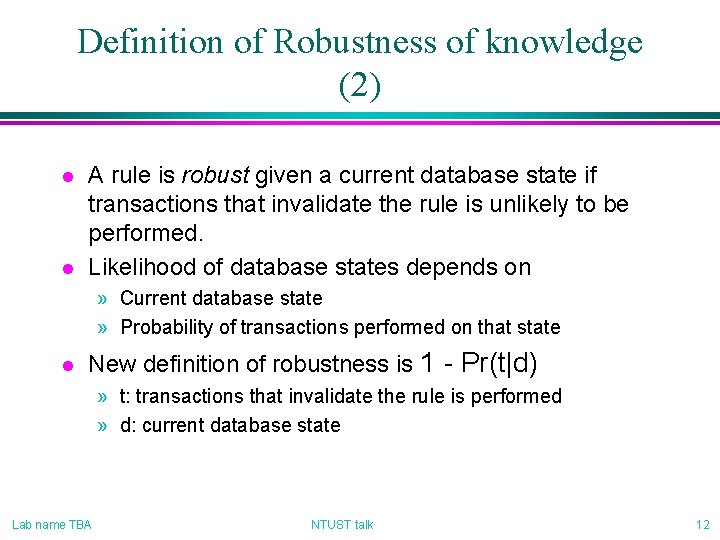

Definition of Robustness of knowledge (2) l l A rule is robust given a current database state if transactions that invalidate the rule is unlikely to be performed. Likelihood of database states depends on » Current database state » Probability of transactions performed on that state l New definition of robustness is 1 - Pr(t|d) » t: transactions that invalidate the rule is performed » d: current database state Lab name TBA NTUST talk 12

Robustness Estimation l l l Step 1: Find transactions that invalidate the input rule Step 2: Decompose the probabilities of invalidating transactions into local probabilities Step 3: Estimate local probabilities Lab name TBA NTUST talk 13

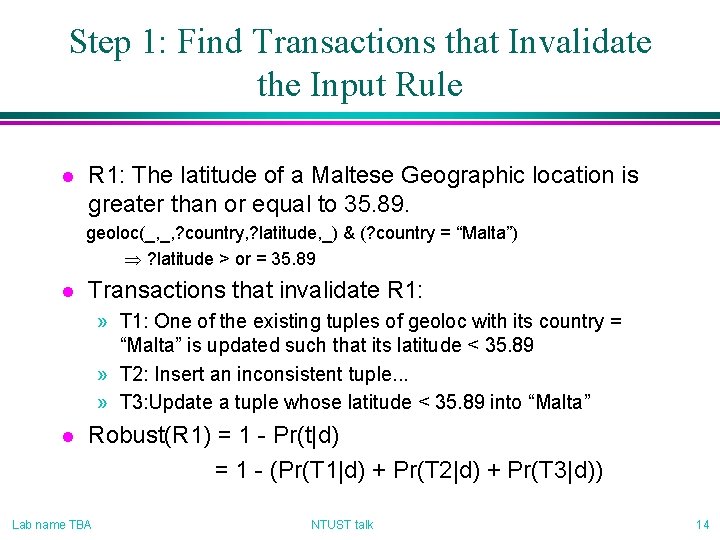

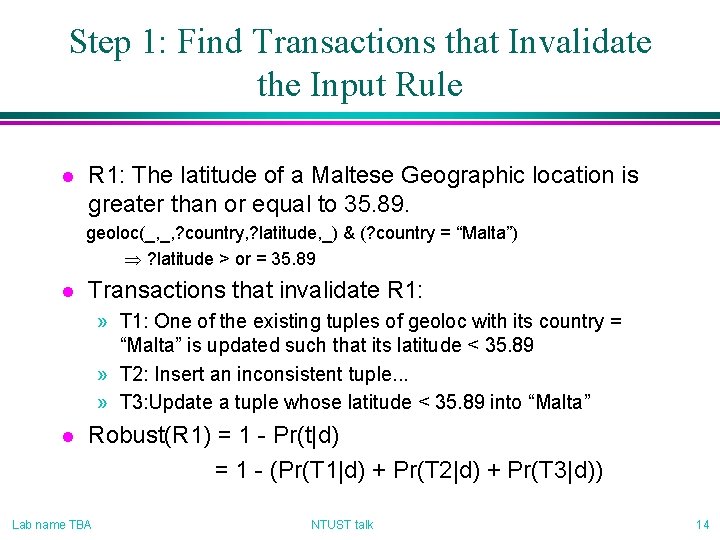

Step 1: Find Transactions that Invalidate the Input Rule l R 1: The latitude of a Maltese Geographic location is greater than or equal to 35. 89. geoloc(_, _, ? country, ? latitude, _) & (? country = “Malta”) Þ ? latitude > or = 35. 89 l Transactions that invalidate R 1: » T 1: One of the existing tuples of geoloc with its country = “Malta” is updated such that its latitude < 35. 89 » T 2: Insert an inconsistent tuple. . . » T 3: Update a tuple whose latitude < 35. 89 into “Malta” l Robust(R 1) = 1 - Pr(t|d) = 1 - (Pr(T 1|d) + Pr(T 2|d) + Pr(T 3|d)) Lab name TBA NTUST talk 14

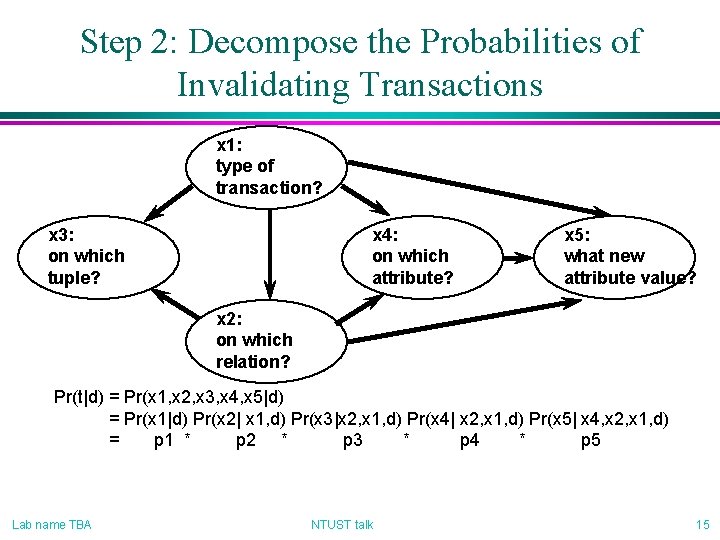

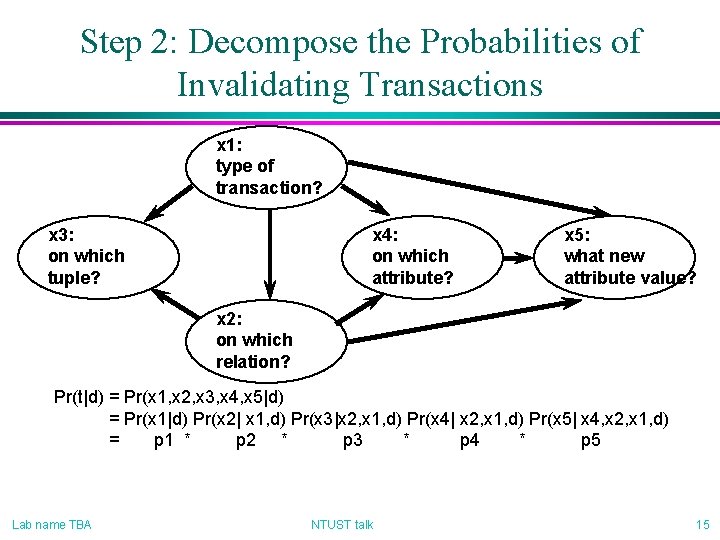

Step 2: Decompose the Probabilities of Invalidating Transactions x 1: type of transaction? x 3: on which tuple? x 4: on which attribute? x 5: what new attribute value? x 2: on which relation? Pr(t|d) = Pr(x 1, x 2, x 3, x 4, x 5|d) = Pr(x 1|d) Pr(x 2| x 1, d) Pr(x 3|x 2, x 1, d) Pr(x 4| x 2, x 1, d) Pr(x 5| x 4, x 2, x 1, d) = p 1 * p 2 * p 3 * p 4 * p 5 Lab name TBA NTUST talk 15

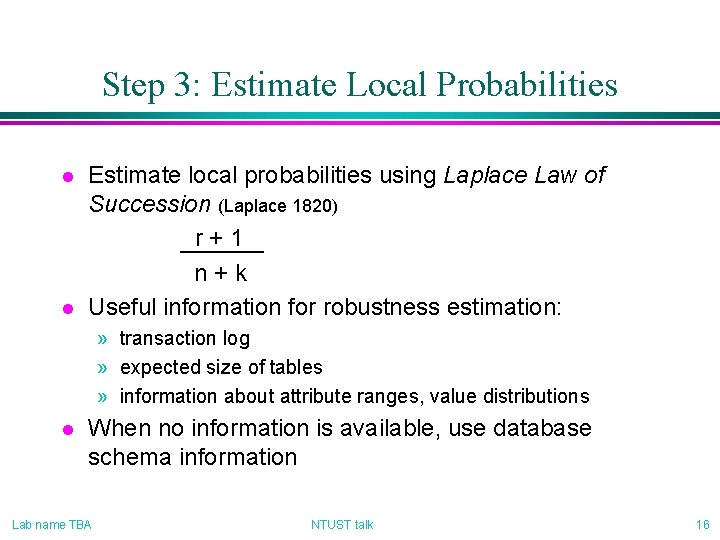

Step 3: Estimate Local Probabilities l l Estimate local probabilities using Laplace Law of Succession (Laplace 1820) r+1 n+k Useful information for robustness estimation: » transaction log » expected size of tables » information about attribute ranges, value distributions l When no information is available, use database schema information Lab name TBA NTUST talk 16

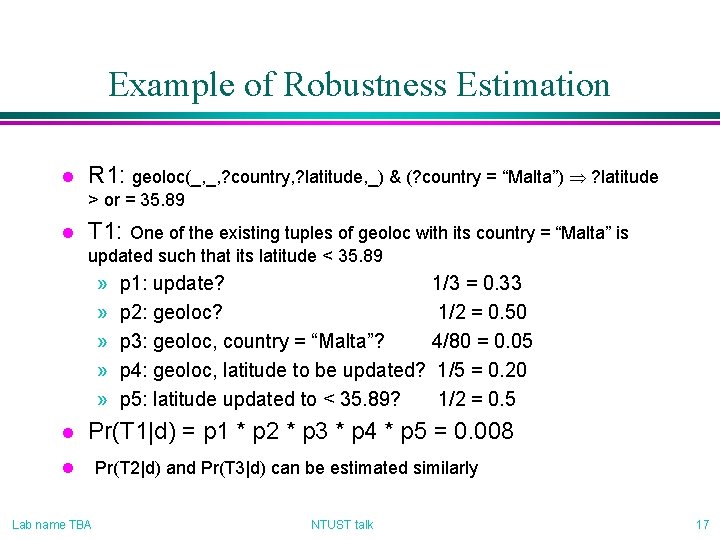

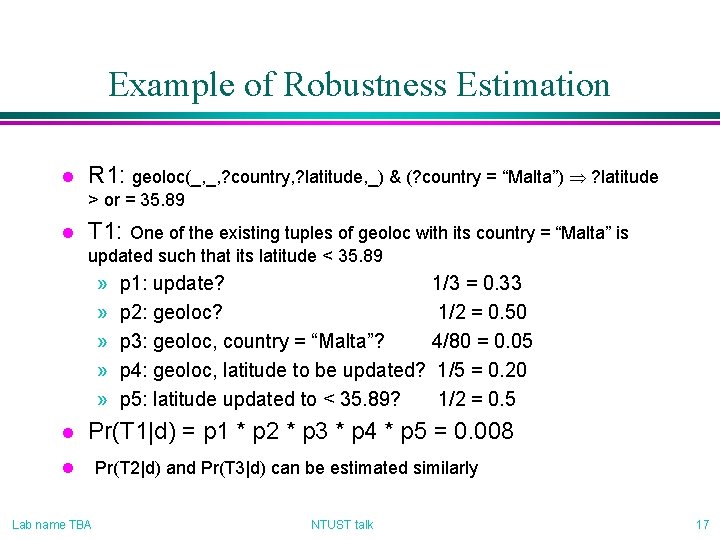

Example of Robustness Estimation l R 1: geoloc(_, _, ? country, ? latitude, _) & (? country = “Malta”) Þ ? latitude > or = 35. 89 l T 1: One of the existing tuples of geoloc with its country = “Malta” is updated such that its latitude < 35. 89 » » » l p 1: update? 1/3 = 0. 33 p 2: geoloc? 1/2 = 0. 50 p 3: geoloc, country = “Malta”? 4/80 = 0. 05 p 4: geoloc, latitude to be updated? 1/5 = 0. 20 p 5: latitude updated to < 35. 89? 1/2 = 0. 5 Pr(T 1|d) = p 1 * p 2 * p 3 * p 4 * p 5 = 0. 008 l Lab name TBA Pr(T 2|d) and Pr(T 3|d) can be estimated similarly NTUST talk 17

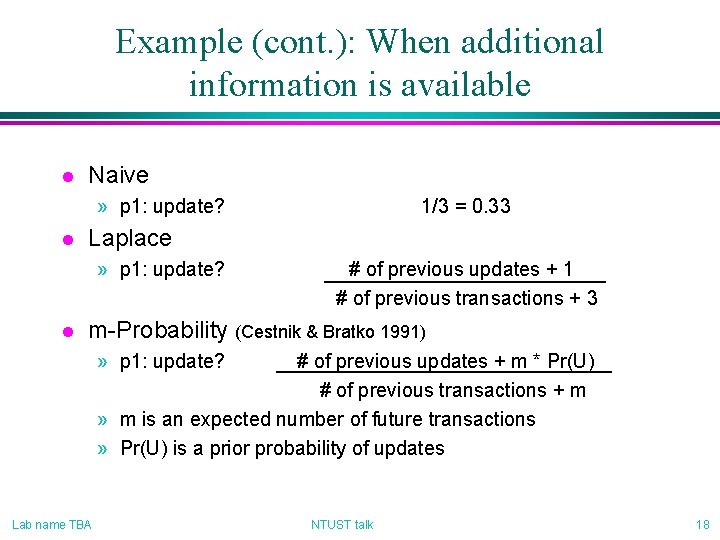

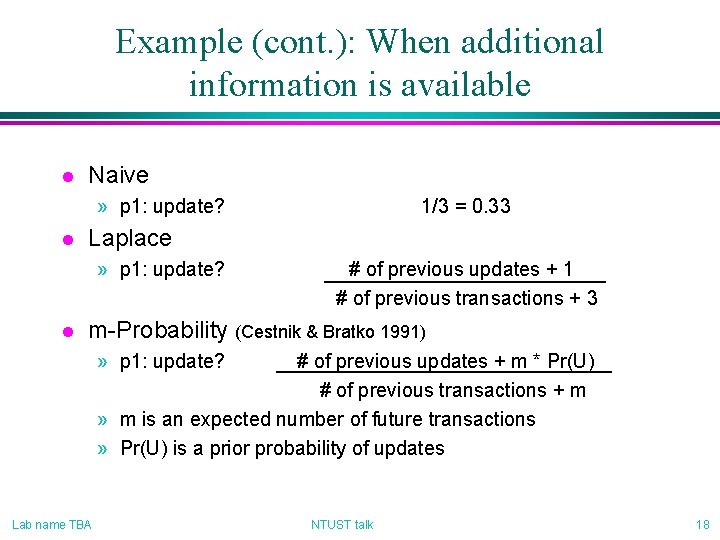

Example (cont. ): When additional information is available l Naive » p 1: update? l Laplace » p 1: update? l 1/3 = 0. 33 # of previous updates + 1 # of previous transactions + 3 m-Probability (Cestnik & Bratko 1991) » p 1: update? # of previous updates + m * Pr(U) # of previous transactions + m » m is an expected number of future transactions » Pr(U) is a prior probability of updates Lab name TBA NTUST talk 18

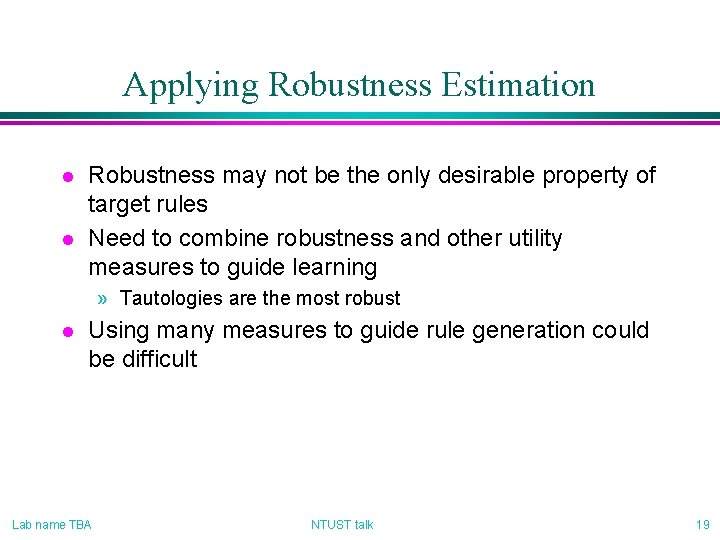

Applying Robustness Estimation l l Robustness may not be the only desirable property of target rules Need to combine robustness and other utility measures to guide learning » Tautologies are the most robust l Using many measures to guide rule generation could be difficult Lab name TBA NTUST talk 19

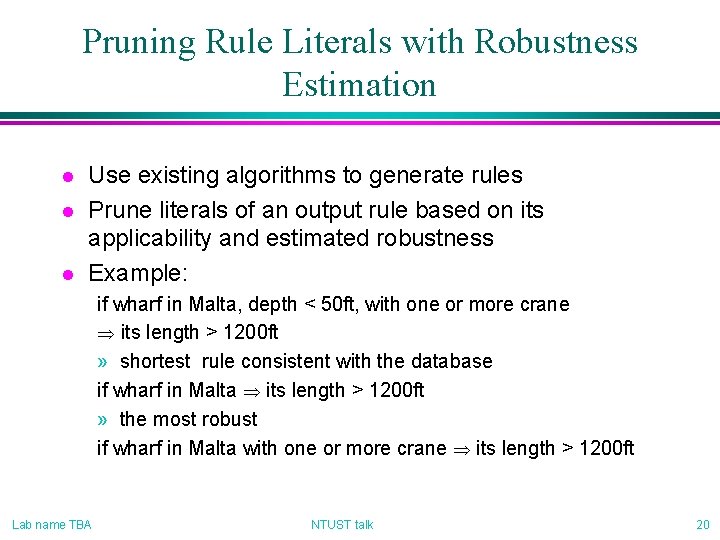

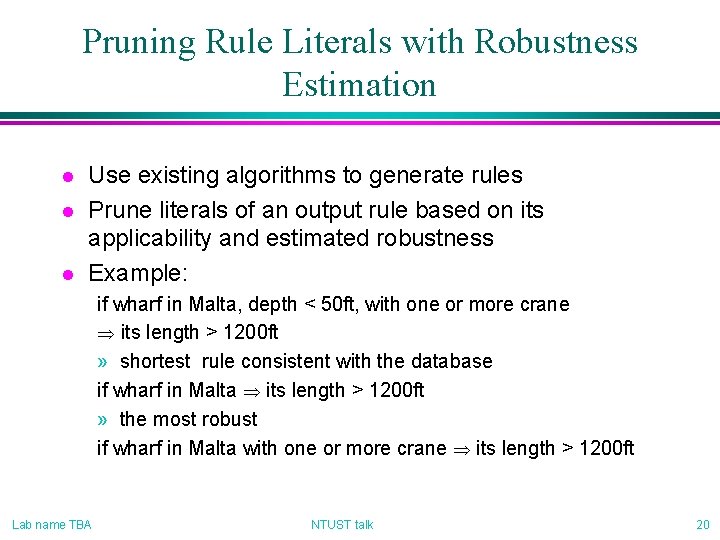

Pruning Rule Literals with Robustness Estimation l l l Use existing algorithms to generate rules Prune literals of an output rule based on its applicability and estimated robustness Example: if wharf in Malta, depth < 50 ft, with one or more crane Þ its length > 1200 ft » shortest rule consistent with the database if wharf in Malta Þ its length > 1200 ft » the most robust if wharf in Malta with one or more crane Þ its length > 1200 ft Lab name TBA NTUST talk 20

Applications l Learning rules for Semantic Query Optimization (Hsu & Knoblock ML 94, Siegel Boston U. thesis 89, Shekha et al. IEEE TKDE 94) l l Learning functional dependency (Mannila & Raiha KDD 94) Discovering models to reconcile/integrate heterogeneous databases (Dao, Son et al. KDD 95) Learning to answer intentional queries (Chu et al. 91) Discovering knowledge for decision suppor Lab name TBA NTUST talk 21

Summary l l l Data Mining from “Image” need to estimate the robustness of extracted knowledge Robustness can be defined based on the probability of invalidating transactions Robustness can be estimated efficiently Rule pruning guided by robustness and other utility measures may yield robust and interesting rules Discovering robust knowledge to enhance database functionalities Lab name TBA NTUST talk 22

Data Mining for IR? l l Different tasks need different ways to collect and prepare data Data preparation and cleaning are important Lab name TBA NTUST talk 23

Data Mining for IR? Issues l Potential Applications » » » l text categorization (a. k. a. classification, routing, filtering) fact extraction (a. k. a. template filling) clustering text summarization (a. k. a. abstracting, gisting) user profiling and modeling interactive query formulation Issues » scaling up to large volume of data » feature selection (a. k. a. dimensionality reduction) Lab name TBA NTUST talk 24

Projects l Recent projects » Template filling --- inducing information extractors from labeled semi-structured documents (J of Info Systems, 1999) » Feature Selection --- feature selection for backprop neural network (IEEE Tools with AI, 1998) l (to-be-proposed) projects » Alias-mining for digital library (NSC) » Classifying NL diagnosis records to ICD-9 -CM coding (NHI) l More projects…plans of collaboration much welcome! Lab name TBA NTUST talk 25