Data Mining Comp Sc and Inf Mgmt Asian


Data Mining
Comp. Sc. and Inf. Mgmt., Asian Institute of Technology
Instructor: Prof. Sumanta Guha
Slide sources: Han & Kamber, “Data Mining: Concepts and Techniques” (book); slides by Han, Han & Kamber, adapted and supplemented by Guha

Chapter 7: Cluster Analysis

What is Cluster Analysis?
- Cluster: a collection of data objects
  - similar to one another within the same cluster
  - dissimilar to the objects in other clusters
- Cluster analysis
  - Finding similarities between data according to the characteristics found in the data and grouping similar data objects into clusters
- Unsupervised learning: no predefined classes
- Typical applications
  - As a stand-alone tool to get insight into data distribution
  - As a preprocessing step for other algorithms

Clustering: Rich Applications and Multidisciplinary Efforts
- Pattern Recognition
- Spatial Data Mining
  - Create thematic maps in GIS by clustering feature spaces
  - Detect spatial clusters or use them for other spatial mining tasks
- Image Processing
- Economic Science (e.g., market research)
- WWW
  - Document classification
  - Cluster Weblog data to discover groups of similar access patterns

Examples of Clustering Applications
- Marketing: Help marketers discover distinct groups in their customer bases, and then use this knowledge to develop targeted marketing programs
- Land use: Identification of areas of similar land use in an earth observation database
- Insurance: Identifying groups of motor insurance policy holders with a high average claim cost. Fraud detection: look for outliers!
- City planning: Identifying groups of houses according to their house type, value, and geographical location
- Earthquake studies: Observed earthquake epicenters should be clustered along continental faults

Quality: What Is Good Clustering?
- A good clustering method will produce high-quality clusters with
  - high intra-class similarity
  - low inter-class similarity
- The quality of a clustering result depends on both the similarity measure used by the method and its implementation
- The quality of a clustering method is also measured by its ability to discover some or all of the hidden patterns
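
One simple way to check the two criteria, assuming average pairwise distance as a stand-in for (dis)similarity: compare the average distance between objects in the same cluster with the average distance between objects in different clusters. The helper names and the four values are illustrative, not a standard quality measure from the slides.

```python
from itertools import combinations

def intra_inter(points, labels, dist):
    """Return (average intra-cluster distance, average inter-cluster distance).

    Assumption: distance stands in for dissimilarity, so a good clustering
    has a small first value (high intra-class similarity) and a large
    second value (low inter-class similarity).
    """
    intra, inter = [], []
    for i, j in combinations(range(len(points)), 2):
        d = dist(points[i], points[j])
        # same label: the pair lies inside one cluster; otherwise it spans two
        (intra if labels[i] == labels[j] else inter).append(d)
    return sum(intra) / len(intra), sum(inter) / len(inter)

def absolute(a, b):
    return abs(a - b)

values = [0, 1, 10, 11]
print(intra_inter(values, [0, 0, 1, 1], absolute))  # (1.0, 10.0): natural grouping
print(intra_inter(values, [0, 1, 0, 1], absolute))  # (10.0, 5.5): poor grouping
```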

Measure the Quality of Clustering
- Dissimilarity/similarity metric: similarity is expressed in terms of a distance function, typically a metric d(i, j)
- There is a separate “quality” function that measures the “goodness” of a cluster.
- The definitions of distance functions are usually very different for numeric, boolean, categorical and ordinal variables.
  - Numeric: income, temperature, price, etc.
  - Boolean: yes/no, e.g., student? citizen?
  - Categorical: color (red, blue, green, …), nationality, etc.
  - Ordinal: Excellent/Very good/…, High/medium/low (i.e., with order)
- It is hard to define “similar enough” or “good enough”
  - the answer is typically highly subjective.
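
Minimal sketches of one common distance per variable type, assuming equally weighted attributes: Euclidean or Manhattan distance for numeric data, the fraction of mismatched attributes for boolean and categorical data, and ranks scaled to [0, 1] for ordinal data. The function names and sample values are illustrative choices, not the only definitions in use.

```python
import math

def euclidean(x, y):
    """Numeric attributes: straight-line distance."""
    return math.dist(x, y)

def manhattan(x, y):
    """Numeric attributes: sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(x, y))

def mismatch(x, y):
    """Boolean or categorical attributes: fraction of attributes that differ."""
    return sum(a != b for a, b in zip(x, y)) / len(x)

def ordinal(a, b, levels):
    """Ordinal attribute: map each value to its rank scaled to [0, 1], then compare."""
    span = len(levels) - 1
    return abs(levels.index(a) - levels.index(b)) / span

print(euclidean((0, 0), (3, 4)))                          # 5.0
print(manhattan((1, 2), (4, 0)))                          # 5
print(mismatch((True, False), (True, True)))              # 0.5  (student?, citizen?)
print(mismatch(("red", "Thai"), ("blue", "Thai")))        # 0.5  (color, nationality)
print(ordinal("low", "high", ["low", "medium", "high"]))  # 1.0
```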

Requirements of Clustering in Data Mining
- Scalability
- Ability to deal with different types of attributes
- Ability to handle dynamic data
- Discovery of clusters with arbitrary shape
- Minimal requirements for domain knowledge to determine input parameters
- Able to deal with noise and outliers
- Insensitive to order of input records
- High dimensionality
- Incorporation of user-specified constraints
- Interpretability and usability

Major Clustering Approaches
- Partitioning approach:
  - Given n objects in the database, a partitioning approach splits them into k groups.
  - Typical methods: k-means, k-medoids, CLARANS
- Hierarchical approach:
  - Create a hierarchical decomposition of the set of data (or objects) using one of two methods:
    - Agglomerative (bottom-up): start with each object as a separate group; successively merge groups that are close until a termination condition holds.
    - Divisive (top-down): start with all objects in one group; successively split groups that are not “tight” until a termination condition holds.
  - Typical methods: DIANA, AGNES, BIRCH, ROCK, CHAMELEON
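
A minimal sketch of the agglomerative (bottom-up) strategy, assuming 1-D values, single-link distance between groups (the closest pair of members) and "k groups remain" as the termination condition. These choices and the function name are illustrative; the methods listed above each define "close" and the stopping rule in their own way.

```python
def single_link_agglomerative(values, k):
    """Merge the two closest groups until only k groups remain."""
    clusters = [[v] for v in sorted(values)]  # start: every object is its own group
    while len(clusters) > k:  # assumed termination condition: k groups left
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # single link: distance between the closest members of the two groups
                d = min(abs(a - b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        _, i, j = best
        clusters[i] = sorted(clusters[i] + clusters[j])  # merge group j into group i
        del clusters[j]
    return clusters

print(single_link_agglomerative([1, 2, 3, 10, 11, 20], 3))  # [[1, 2, 3], [10, 11], [20]]
```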

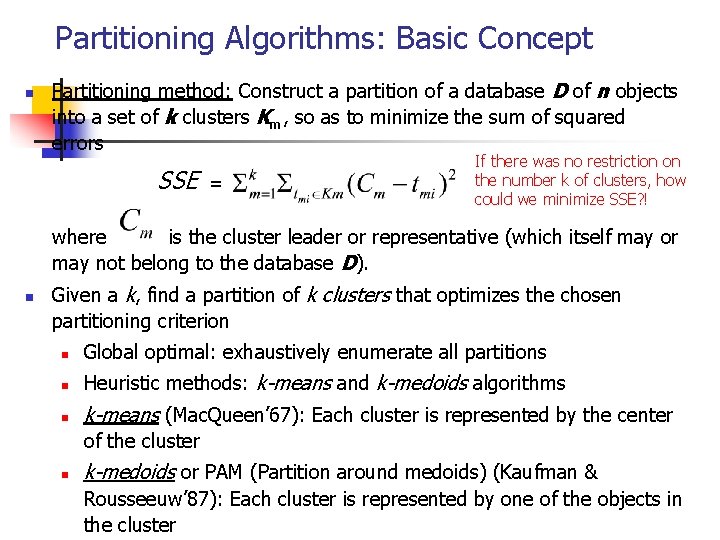

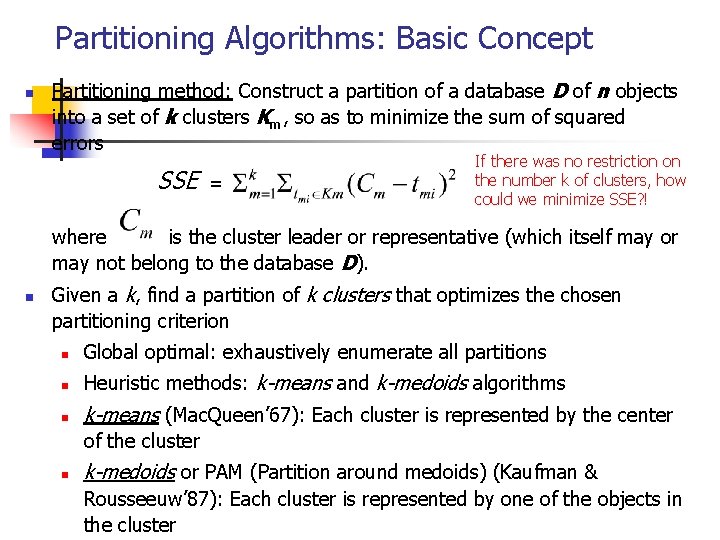

Partitioning Algorithms: Basic Concept
- Partitioning method: construct a partition of a database D of n objects into a set of k clusters K_m (m = 1, …, k) so as to minimize the sum of squared errors

  $$\mathrm{SSE} = \sum_{m=1}^{k} \sum_{t_{mi} \in K_m} \lVert t_{mi} - C_m \rVert^2$$

  where $C_m$ is the cluster leader or representative of $K_m$ (which itself may or may not belong to the database D).
- If there were no restriction on the number k of clusters, how could we minimize SSE?
- Given a k, find a partition of k clusters that optimizes the chosen partitioning criterion
  - Global optimum: exhaustively enumerate all partitions
  - Heuristic methods: k-means and k-medoids algorithms
    - k-means (MacQueen ’67): each cluster is represented by the center of the cluster
    - k-medoids or PAM (Partitioning Around Medoids) (Kaufman & Rousseeuw ’87): each cluster is represented by one of the objects in the cluster
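
A brute-force sketch of the "global optimum" option, assuming 1-D numeric data and the cluster mean as each cluster's leader. It tries every assignment of the n objects to k labels (k^n of them), so it is only feasible for tiny inputs; the function names and data are illustrative.

```python
from itertools import product

def sse(clusters):
    """Sum of squared errors, using each cluster's mean as its representative."""
    total = 0.0
    for members in clusters:
        c = sum(members) / len(members)
        total += sum((t - c) ** 2 for t in members)
    return total

def best_partition(data, k):
    """Exhaustively enumerate all partitions into k non-empty clusters."""
    best = None
    for labels in product(range(k), repeat=len(data)):  # k**n assignments
        if len(set(labels)) < k:  # skip assignments that leave a cluster empty
            continue
        clusters = [[x for x, lab in zip(data, labels) if lab == m] for m in range(k)]
        score = sse(clusters)
        if best is None or score < best[0]:
            best = (score, clusters)
    return best

print(best_partition([1, 2, 3, 10, 11, 12], 2))  # (4.0, [[1, 2, 3], [10, 11, 12]])
```

Putting every object in its own cluster makes every term zero (`sse([[x] for x in data])` is 0.0), which is why k has to be fixed in advance.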

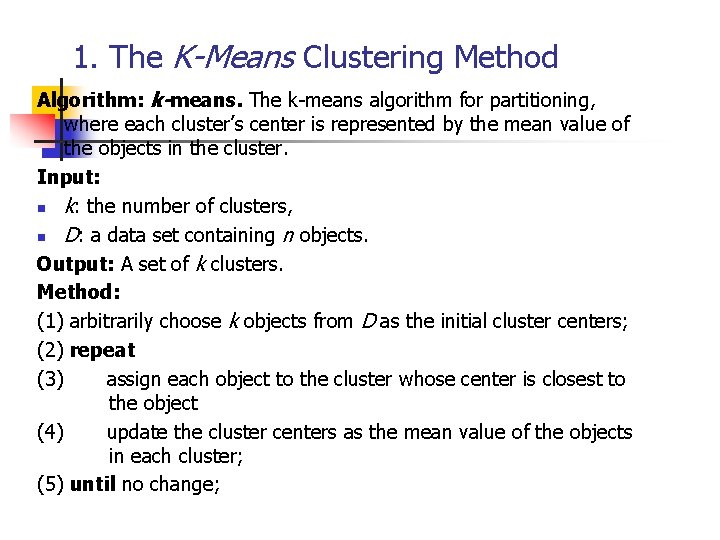

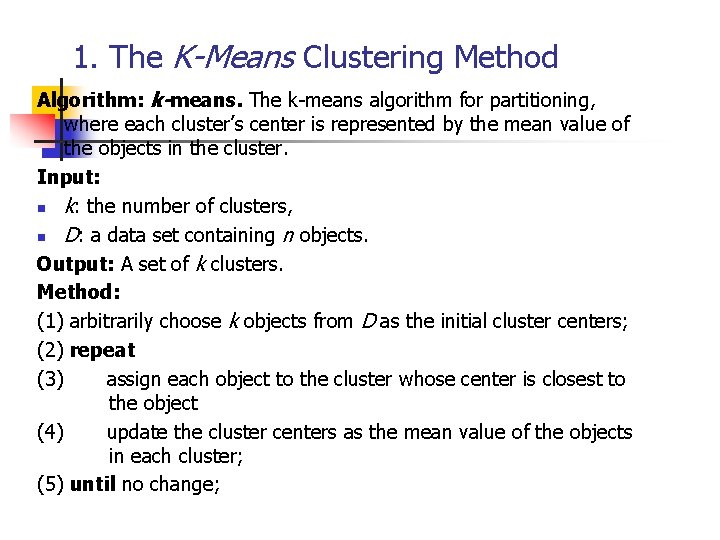

1. The K-Means Clustering Method
Algorithm: k-means. The k-means algorithm for partitioning, where each cluster’s center is represented by the mean value of the objects in the cluster.
Input:
- k: the number of clusters,
- D: a data set containing n objects.
Output: A set of k clusters.
Method:
(1) arbitrarily choose k objects from D as the initial cluster centers;
(2) repeat
(3)     assign each object to the cluster whose center is closest to the object;
(4)     update the cluster centers as the mean value of the objects in each cluster;
(5) until no change;
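
A minimal Python sketch of steps (1) to (5), assuming points are tuples of numbers and "closest" means Euclidean distance (the slide does not fix the distance). `kmeans`, its `init` and `seed` parameters, and the toy points are illustrative. If a cluster becomes empty, the sketch keeps its old center; that is one common convention, not part of the slide's algorithm.

```python
import math
import random

def kmeans(points, k, init=None, seed=0, max_iter=100):
    """k-means following steps (1)-(5); points are tuples of numbers."""
    rng = random.Random(seed)
    # (1) arbitrarily choose k objects from D as the initial cluster centers
    centers = list(init) if init is not None else rng.sample(points, k)
    labels = None
    for _ in range(max_iter):  # (2) repeat
        # (3) assign each object to the cluster whose center is closest (Euclidean)
        new_labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        if new_labels == labels:  # (5) until no change
            break
        labels = new_labels
        # (4) update each center to the mean of the objects assigned to it
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # assumption: an empty cluster keeps its previous center
                centers[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centers, labels

pts = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centers, labels = kmeans(pts, 2, init=[(1, 1), (1, 2)])
print(labels)  # [0, 0, 0, 1, 1, 1]
print(centers)
```

Calling `kmeans(pts, 2)` without `init` picks the starting objects with `random.sample`, so different seeds may end in different local optima.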

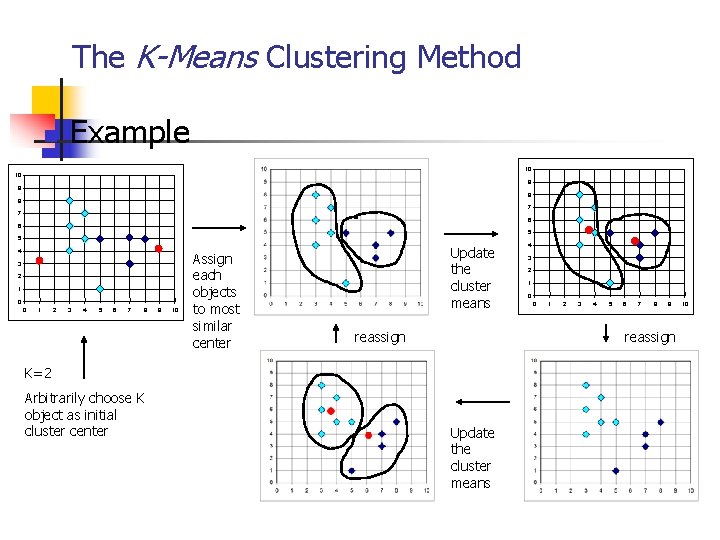

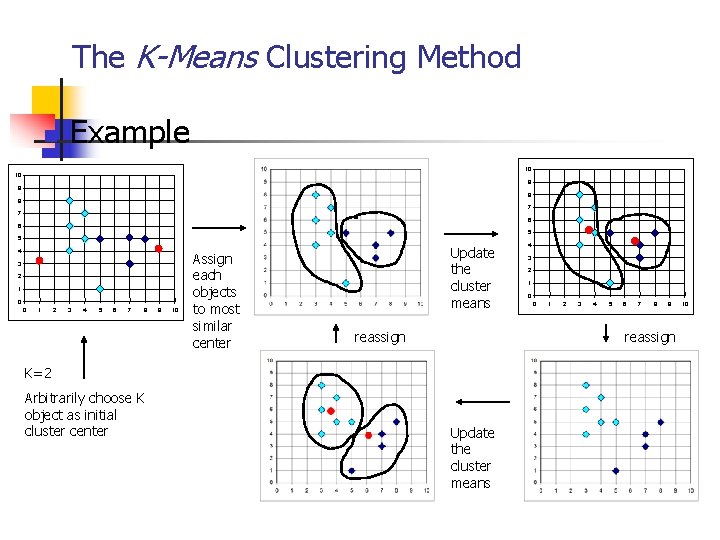

The K-Means Clustering Method
Example (figure, K = 2, points plotted on axes from 0 to 10): arbitrarily choose K objects as initial cluster centers; assign each object to the most similar center; update the cluster means; reassign; update the cluster means; reassign.

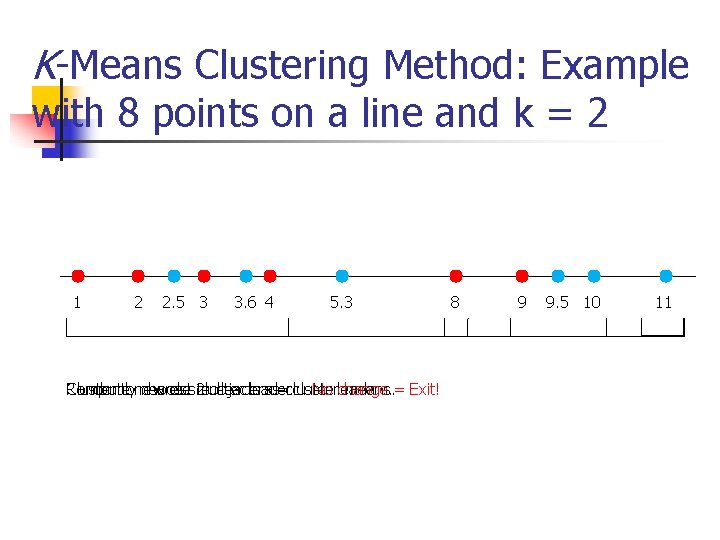

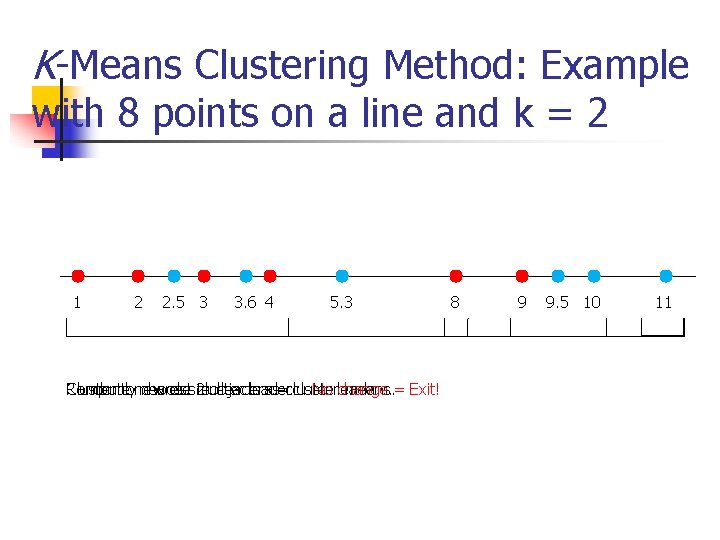

K-Means Clustering Method: Example with 8 points on a line and k = 2
(Figure: values marked on the line: 1, 2, 2.5, 3, 3.6, 4, 5.3, 8, 9, 9.5, 10, 11. Steps: randomly choose 2 objects as cluster leaders; cluster objects to the nearest cluster leader; compute new cluster leaders = cluster means; no change = exit!)

Comments on the K-Means Method
- Strength: relatively efficient: O(tkn), where n is # objects, k is # clusters, and t is # iterations. Normally, k, t << n.
  - Comparing: PAM: O(k(n−k)²), CLARA: O(ks² + k(n−k))
- Comment: often terminates at a local optimum. The global optimum may be found using techniques such as deterministic annealing
- Weakness
  - Applicable only when a mean is defined; what about categorical data?
  - Need to specify k, the number of clusters, in advance
  - Unable to handle noisy data and outliers
  - Not suitable to discover clusters with non-convex shapes
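
To see the local-optimum behavior, the sketch below runs the same 1-D k-means from two different sets of starting centers on a small hypothetical data set. Both runs stop when no assignment changes, but only one reaches the obvious three-group solution. The data and helper names are illustrative.

```python
def kmeans_1d(values, centers, max_iter=100):
    """1-D k-means from the given starting centers; returns (clusters, centers)."""
    centers = list(centers)
    clusters = None
    for _ in range(max_iter):
        new = [[] for _ in centers]
        for x in values:  # assign each value to its nearest center
            nearest = min(range(len(centers)), key=lambda c: abs(x - centers[c]))
            new[nearest].append(x)
        if new == clusters:  # no change: stop
            break
        clusters = new
        # move each center to the mean of its cluster (keep it if the cluster is empty)
        centers = [sum(g) / len(g) if g else c for g, c in zip(clusters, centers)]
    return clusters, centers

def sse(clusters, centers):
    return sum((x - c) ** 2 for g, c in zip(clusters, centers) for x in g)

data = [1, 2, 3, 10, 11, 12, 20, 21, 22]
for start in ([1, 2, 3], [1, 10, 20]):
    clusters, centers = kmeans_1d(data, start)
    print(start, clusters, sse(clusters, centers))
# [1, 2, 3] [[1], [2, 3], [10, 11, 12, 20, 21, 22]] 154.5   (local optimum)
# [1, 10, 20] [[1, 2, 3], [10, 11, 12], [20, 21, 22]] 6.0
```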

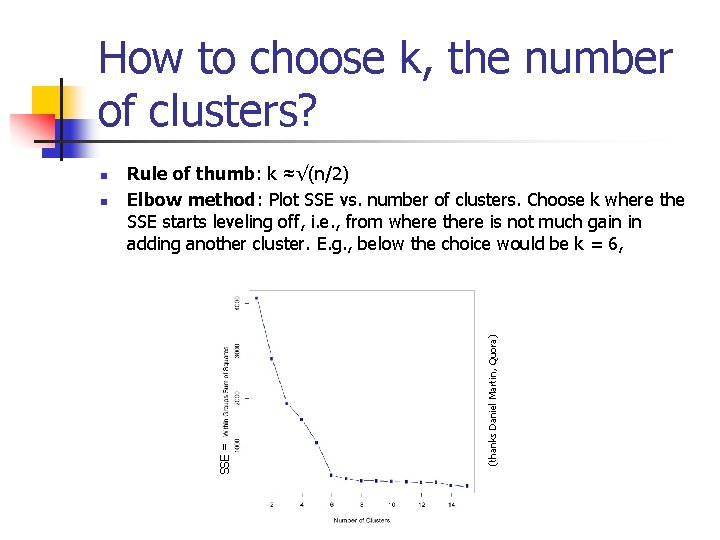

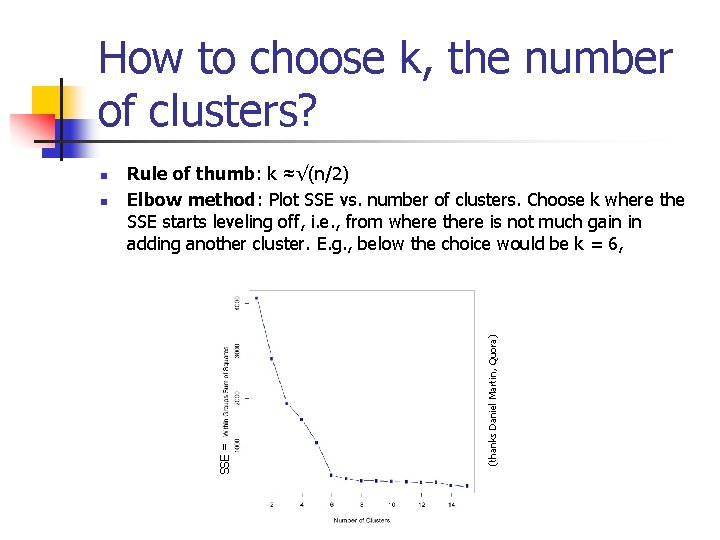

How to choose k, the number of clusters? (thanks Daniel Martin, Quora)
- Rule of thumb: k ≈ √(n/2)
- Elbow method: plot SSE vs. number of clusters. Choose k where the SSE starts leveling off, i.e., from where there is not much gain in adding another cluster. E.g., in the example plot the choice would be k = 6.
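
A minimal sketch for producing the SSE-vs-k curve, assuming 1-D data. Instead of running k-means for each k, it swaps in an exact dynamic program (for 1-D data the SSE-optimal clusters are contiguous runs of the sorted values), so the curve does not depend on random starts. The data are the 12 values of Ex. 1 from a later slide; `optimal_sse_curve` is a hypothetical name.

```python
import math

EX1 = [1, 3, 4, 6, 10, 12, 13, 17, 25, 27, 28, 30]

def optimal_sse_curve(values, k_max):
    """Minimum possible SSE for k = 1..k_max clusters of 1-D values."""
    xs = sorted(values)
    n = len(xs)
    # Prefix sums of x and x*x give the SSE of any contiguous run in O(1).
    s, s2 = [0.0] * (n + 1), [0.0] * (n + 1)
    for i, x in enumerate(xs):
        s[i + 1] = s[i] + x
        s2[i + 1] = s2[i] + x * x

    def run_sse(i, j):
        """SSE of the run xs[i:j] around its own mean."""
        total = s[j] - s[i]
        return (s2[j] - s2[i]) - total * total / (j - i)

    # best[k][j]: smallest SSE when the first j sorted values form k clusters
    best = [[math.inf] * (n + 1) for _ in range(k_max + 1)]
    best[0][0] = 0.0
    for k in range(1, k_max + 1):
        for j in range(k, n + 1):
            # the last cluster is xs[i:j]; the first i values form k - 1 clusters
            best[k][j] = min(best[k - 1][i] + run_sse(i, j) for i in range(k - 1, j))
    return [best[k][n] for k in range(1, k_max + 1)]

print("rule of thumb k ~", math.sqrt(len(EX1) / 2))
for k, value in enumerate(optimal_sse_curve(EX1, 6), start=1):
    print(k, round(value, 2))  # plot these pairs and look for the elbow
```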

Variations of the K-Means Method
- A few variants of k-means differ in
  - selection of the initial k means
  - dissimilarity calculations
  - strategies to calculate cluster means
- Handling categorical data: k-modes (Huang ’98)
  - Replacing means of clusters with modes
  - Using new dissimilarity measures to deal with categorical objects
  - Using a frequency-based method to update modes of clusters
  - A mixture of categorical and numerical data: k-prototype method
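
A simplified batch k-modes sketch, assuming the simple matching dissimilarity (number of mismatched attributes) and the most frequent value of each attribute as the cluster mode. Huang's method has its own initialization and update details, so this only illustrates the idea; `k_modes`, `mismatches` and the six records are hypothetical.

```python
from collections import Counter

def mismatches(x, y):
    """Simple matching dissimilarity: number of attributes that differ."""
    return sum(a != b for a, b in zip(x, y))

def k_modes(data, init_modes, max_iter=100):
    """Like k-means, but with modes instead of means and mismatches instead of distance."""
    modes = [tuple(m) for m in init_modes]
    labels = None
    for _ in range(max_iter):
        new_labels = [min(range(len(modes)), key=lambda c: mismatches(x, modes[c])) for x in data]
        if new_labels == labels:  # no record changed cluster: stop
            break
        labels = new_labels
        for c in range(len(modes)):
            members = [x for x, lab in zip(data, labels) if lab == c]
            if members:
                # new mode: the most frequent value of each attribute in the cluster
                modes[c] = tuple(Counter(col).most_common(1)[0][0] for col in zip(*members))
    return modes, labels

records = [
    ("red", "small", "round"), ("red", "small", "square"), ("red", "large", "round"),
    ("blue", "large", "square"), ("blue", "large", "round"), ("blue", "small", "square"),
]
modes, labels = k_modes(records, [records[0], records[3]])
print(labels)  # [0, 0, 0, 1, 1, 1]
print(modes)   # [('red', 'small', 'round'), ('blue', 'large', 'square')]
```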

What Is the Problem with the K-Means Method?
- The k-means algorithm is sensitive to outliers!
  - An object with an extremely large value may substantially distort the distribution of the data.
- Ex 1: Apply k-means with k = 3 to 1, 3, 4, 6, 10, 12, 13, 17, 25, 27, 28, 30.
- Ex 2: Apply k-means with k = 3 to 1, 3, 4, 6, 10, 12, 13, 18, 25, 27, 28, 30 (17 changed to 18 from Ex. 1).
- It seems k-means is not very stable!
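
A sketch for trying Ex 1 and Ex 2, assuming both runs start from the same arbitrarily chosen (hypothetical) initial centers 13, 27 and 30. From this start, changing the single value 17 to 18 leads k-means to a very different final partition. Other starting centers can give other results.

```python
def kmeans_1d(values, centers, max_iter=100):
    """1-D k-means from the given starting centers; returns (clusters, centers)."""
    centers = list(centers)
    clusters = None
    for _ in range(max_iter):
        new = [[] for _ in centers]
        for x in values:  # assign each value to its nearest center
            nearest = min(range(len(centers)), key=lambda c: abs(x - centers[c]))
            new[nearest].append(x)
        if new == clusters:  # no change: stop
            break
        clusters = new
        # move each center to the mean of its cluster (keep it if the cluster is empty)
        centers = [sum(g) / len(g) if g else c for g, c in zip(clusters, centers)]
    return clusters, centers

EX1 = [1, 3, 4, 6, 10, 12, 13, 17, 25, 27, 28, 30]
EX2 = [1, 3, 4, 6, 10, 12, 13, 18, 25, 27, 28, 30]  # 17 changed to 18
start = [13, 27, 30]  # hypothetical starting centers
print(kmeans_1d(EX1, start)[0])  # [[1, 3, 4, 6, 10, 12, 13, 17], [25, 27, 28], [30]]
print(kmeans_1d(EX2, start)[0])  # [[1, 3, 4, 6], [10, 12, 13, 18], [25, 27, 28, 30]]
```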

More Partitioning Clustering Algorithms
2: K-medoids (PAM = Partitioning Around Medoids). Try Ex. 1 from the k-means slides using k-medoids.
3: CLARA (Clustering LARge Applications)
4: CLARANS (Clustering Large Applications based on RANdomized Search)
Read about the above three clustering methods in the paper Efficient and Effective Clustering Methods for Spatial Data Mining, by Ng and Han, Intnl. Conf. on Very Large Data Bases (VLDB ’94), 1994, which proposes CLARANS but also has a good presentation of PAM and CLARA.
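
A minimal sketch of PAM's swap phase for trying Ex. 1 with k-medoids, assuming 1-D data, absolute difference as the dissimilarity, and the first three objects as (hypothetical) initial medoids; PAM's BUILD step for choosing initial medoids is omitted. Each round applies the single medoid/non-medoid swap that lowers the total cost the most and stops when no swap helps, so the result is a local optimum.

```python
def total_cost(data, medoids):
    """Sum of distances from every object to its nearest medoid."""
    return sum(min(abs(x - m) for m in medoids) for x in data)

def pam(data, medoids):
    """Swap phase: repeatedly apply the best cost-reducing swap."""
    medoids = list(medoids)
    current = total_cost(data, medoids)
    while True:
        best_swap, best_cost = None, current
        for i in range(len(medoids)):
            for o in data:
                if o in medoids:
                    continue
                candidate = medoids[:i] + [o] + medoids[i + 1:]  # swap medoid i with o
                cost = total_cost(data, candidate)
                if cost < best_cost:
                    best_swap, best_cost = candidate, cost
        if best_swap is None:  # no swap lowers the cost
            return sorted(medoids), current
        medoids, current = best_swap, best_cost

EX1 = [1, 3, 4, 6, 10, 12, 13, 17, 25, 27, 28, 30]
medoids, cost = pam(EX1, [1, 3, 4])
clusters = {m: [x for x in EX1 if min(medoids, key=lambda c: abs(x - c)) == m] for m in medoids}
print(medoids, cost)
print(clusters)
```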

Hierarchical Clustering Algorithms
5: ROCK: A Robust Clustering Algorithm for Categorical Data, by (Sudipto) Guha, Rastogi and Shim, Information Systems, 2000.
Main slides: ROCK slides by the authors
Related slides: ROCK slides by Olusegun et al.

Hierarchical Clustering Algorithms
6: DBSCAN
A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise, by Ester, Kriegel, Sander and Xu, Intnl. Conf. on Knowledge Discovery and Data Mining (KDD ’96), 1996.

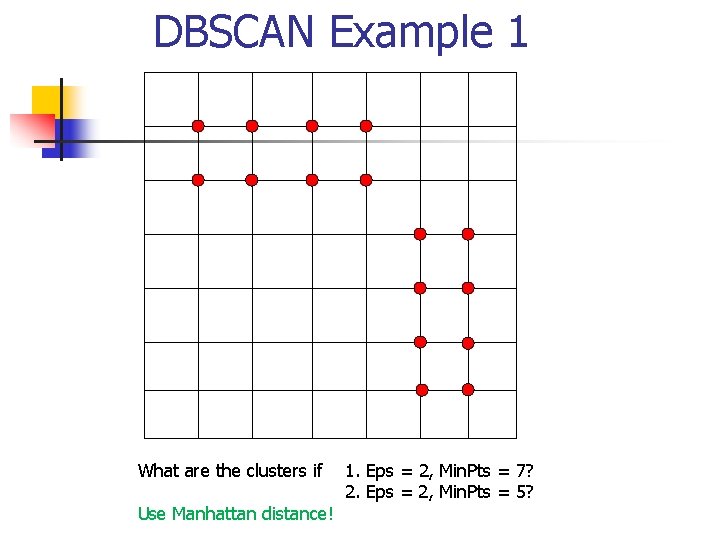

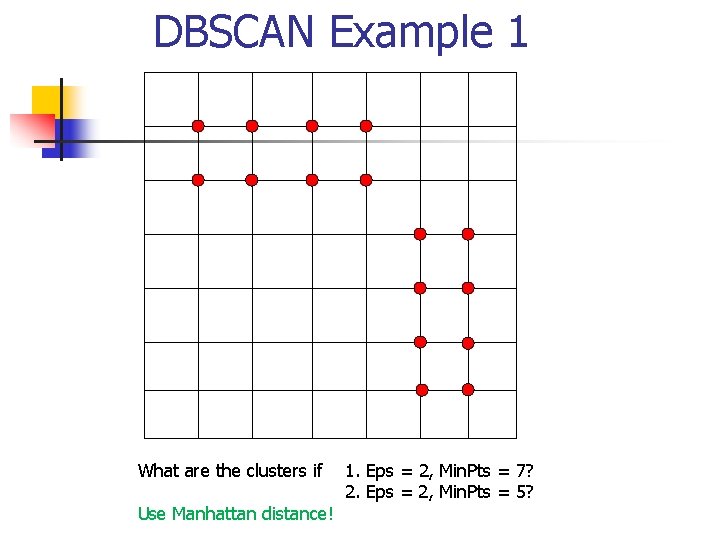

DBSCAN Example 1
Use Manhattan distance! What are the clusters if
1. Eps = 2, MinPts = 7?
2. Eps = 2, MinPts = 5?

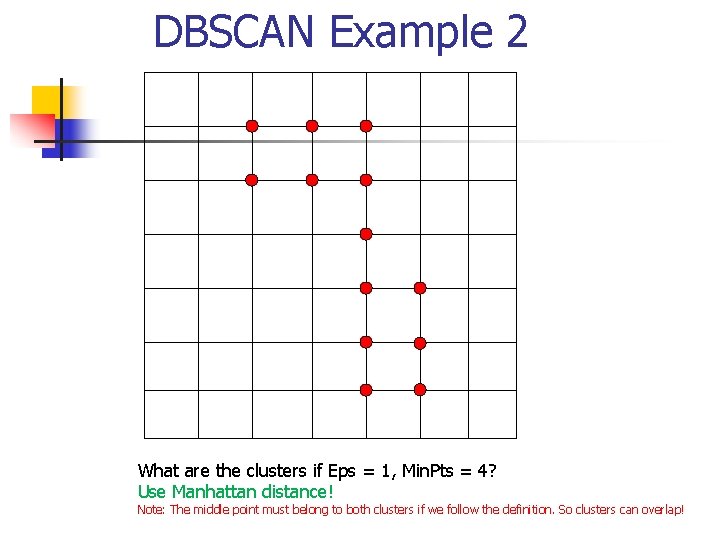

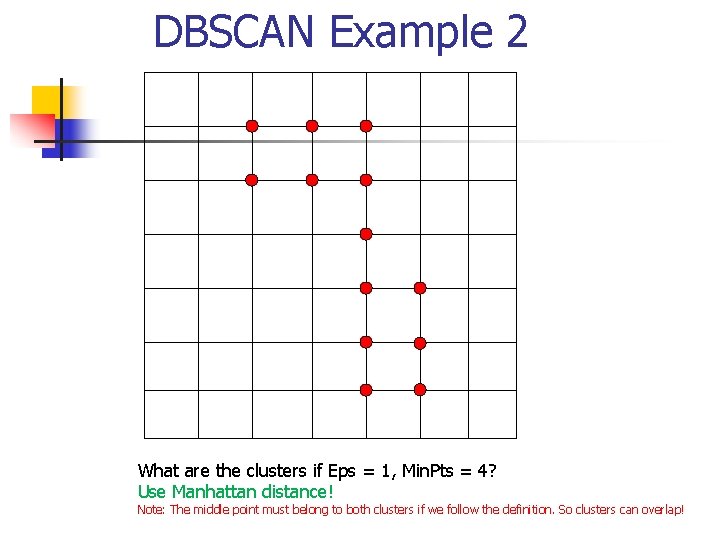

DBSCAN Example 2
What are the clusters if Eps = 1, MinPts = 4? Use Manhattan distance!
Note: If we follow the definition, the middle point must belong to both clusters. So clusters can overlap!
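
A minimal sketch of the DBSCAN cluster definition with Manhattan distance, assuming the paper's convention that a point's Eps-neighborhood includes the point itself and that a cluster is everything density-reachable from a core point. The original algorithm hands a border point to only one of the clusters that reach it; this version keeps it in every such cluster, matching the note about overlap. The nine grid points are hypothetical, not the figure's data.

```python
from collections import deque

def manhattan(p, q):
    return sum(abs(a - b) for a, b in zip(p, q))

def dbscan_overlapping(points, eps, min_pts):
    """Return (clusters as frozensets, noise set); border points may be shared."""
    # Eps-neighborhood of each point (includes the point itself)
    nbrs = {p: [q for q in points if manhattan(p, q) <= eps] for p in points}
    core = {p for p in points if len(nbrs[p]) >= min_pts}
    clusters, visited_core = [], set()
    for start in points:
        if start not in core or start in visited_core:
            continue
        members, queue = set(), deque([start])
        visited_core.add(start)
        while queue:  # expand only through core points
            p = queue.popleft()
            for q in nbrs[p]:
                members.add(q)  # every neighbor of a core point is density-reachable
                if q in core and q not in visited_core:
                    visited_core.add(q)
                    queue.append(q)
        clusters.append(frozenset(members))
    noise = set(points) - set().union(*clusters)
    return clusters, noise

POINTS = [(-1, 0), (0, 1), (0, 0), (0, -1), (1, 0), (2, 1), (2, 0), (2, -1), (3, 0)]
clusters, noise = dbscan_overlapping(POINTS, eps=1, min_pts=4)
for c in clusters:
    print(sorted(c))  # both clusters contain the border point (1, 0)
print(noise)          # set()
```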

Hierarchical Clustering Algorithms
7: CLIQUE
Automatic Subspace Clustering of High Dimensional Data for Data Mining Applications, by Agrawal, Gehrke, Gunopulos and Raghavan, ACM SIGMOD Intnl. Conf. on Management of Data (SIGMOD ’98), 1998.