Data Mining and machine learning ROC curves Rule

- Slides: 82

Data Mining (and machine learning) ROC curves Rule Induction Basics of Text Mining

Two classes is a common and special case

- Medical applications: cancer, or not?
- Computer Vision applications: landmine, or not?
- Security applications: terrorist, or not?
- Biotech applications: gene, or not?
- …

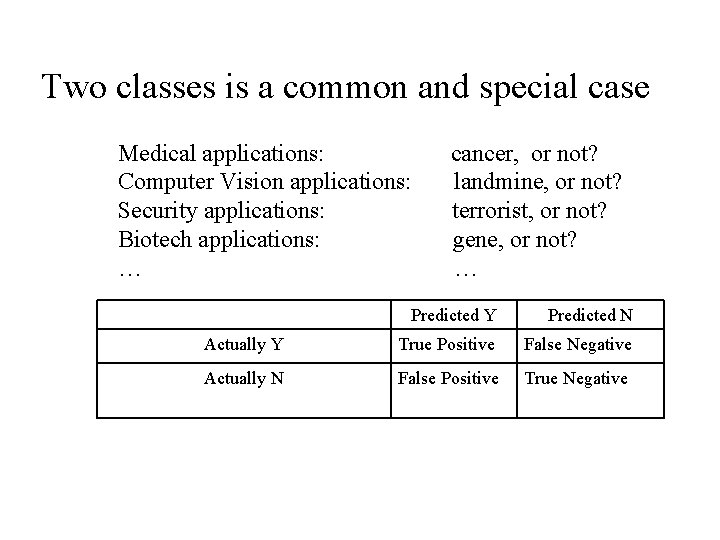

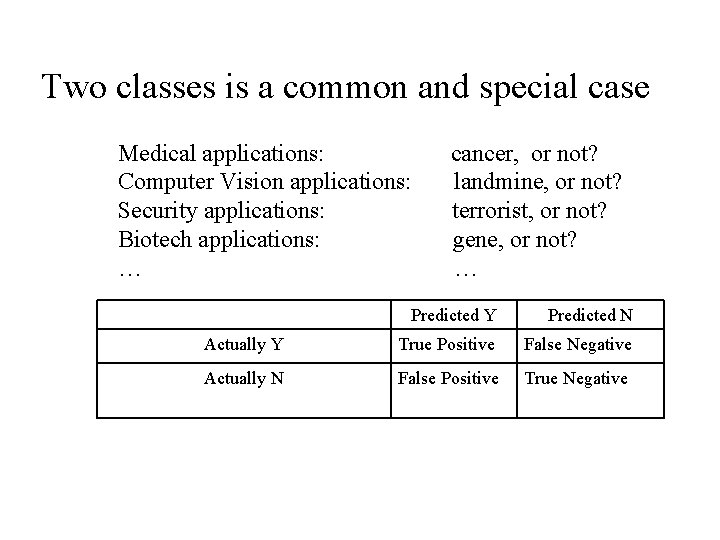

| | Predicted Y | Predicted N |
|---|---|---|
| Actually Y | True Positive | False Negative |
| Actually N | False Positive | True Negative |

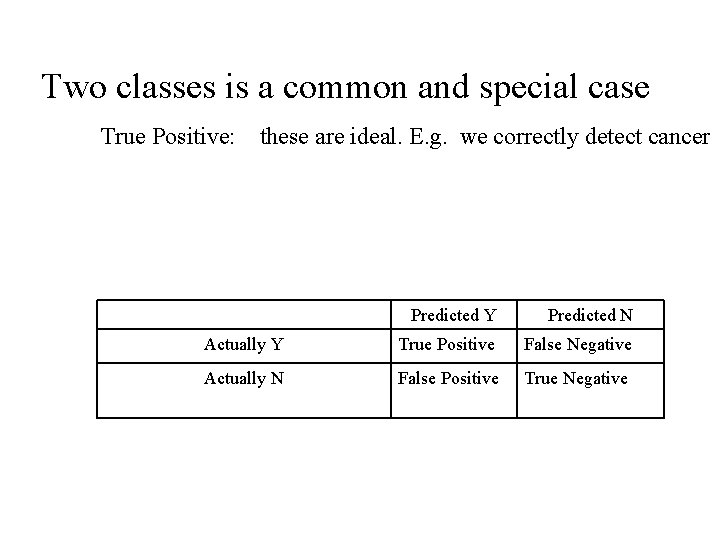

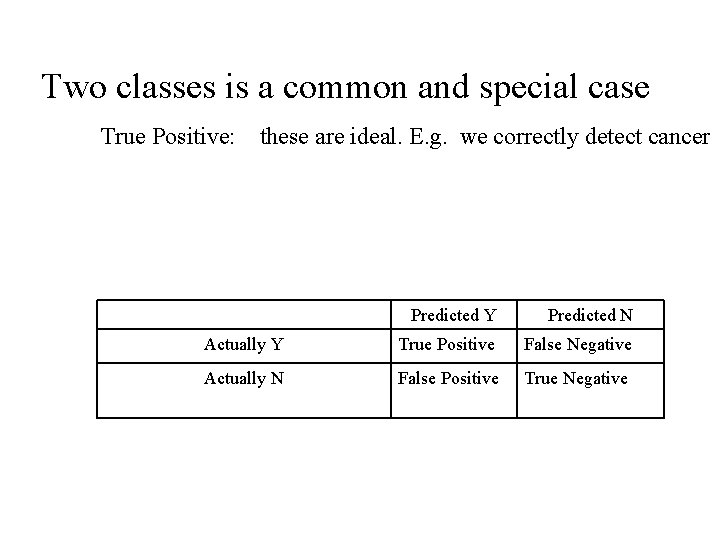


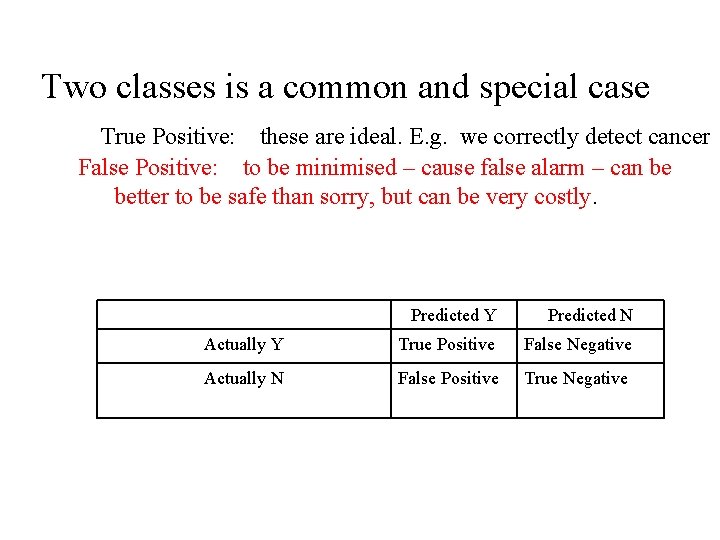

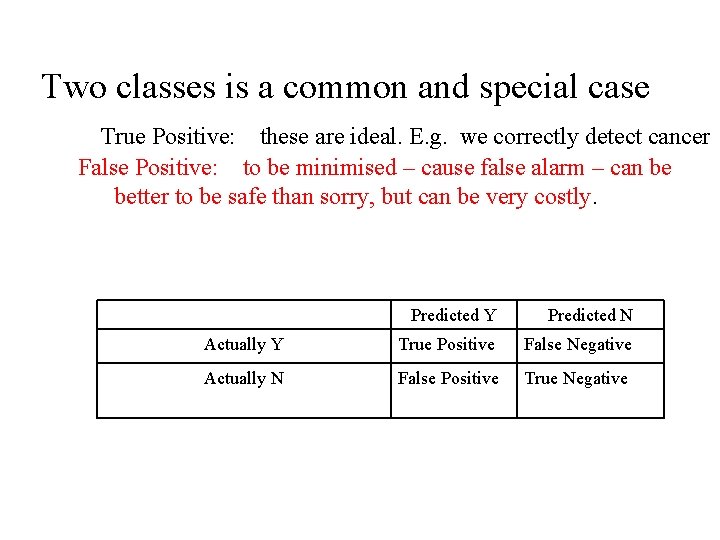


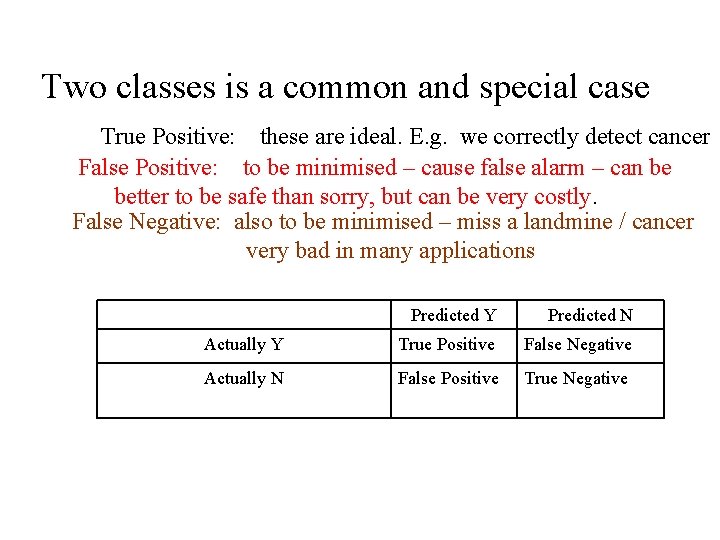

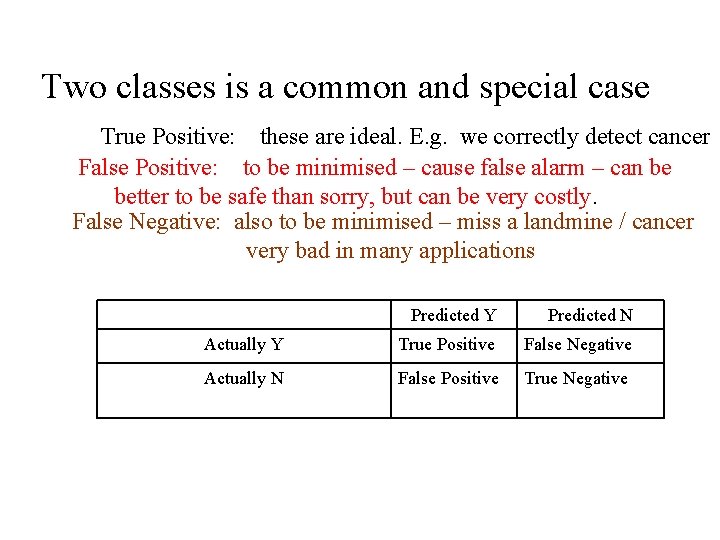


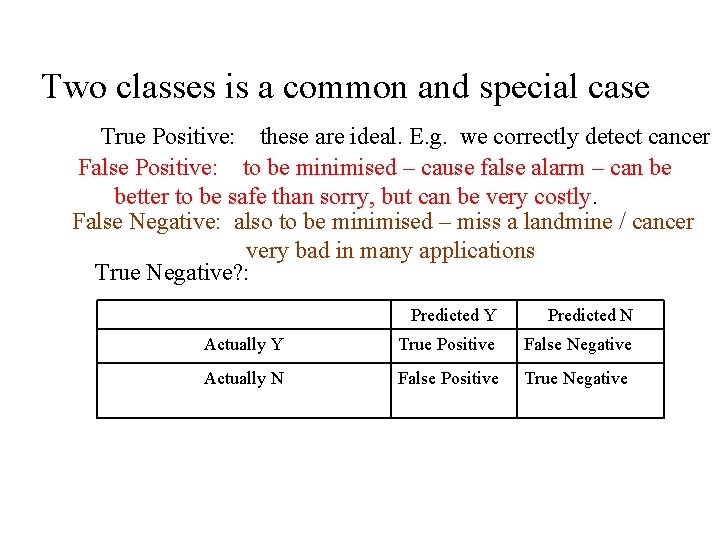

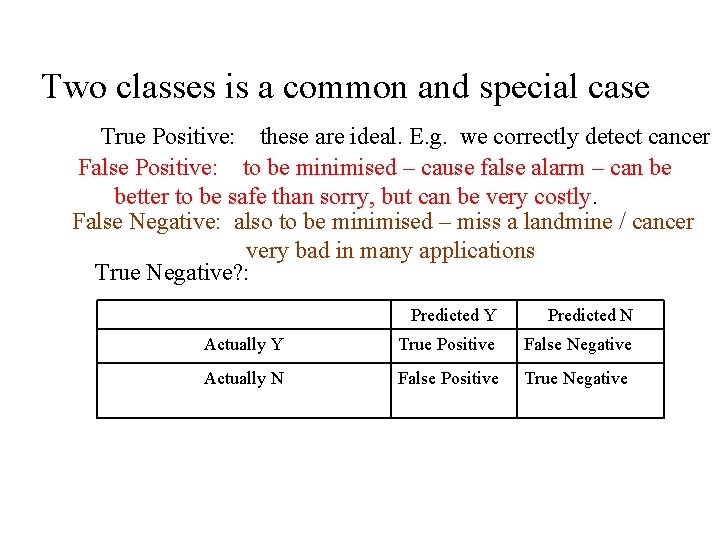

- True Positive: these are ideal. E.g. we correctly detect cancer.
- False Positive: to be minimised. These cause false alarms; it can be better to be safe than sorry, but false alarms can be very costly.
- False Negative: also to be minimised. Missing a landmine or a cancer is very bad in many applications.
- True Negative?

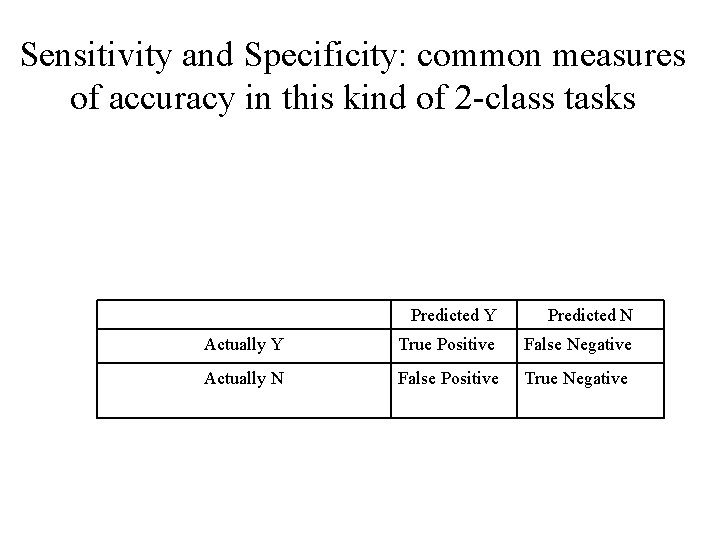

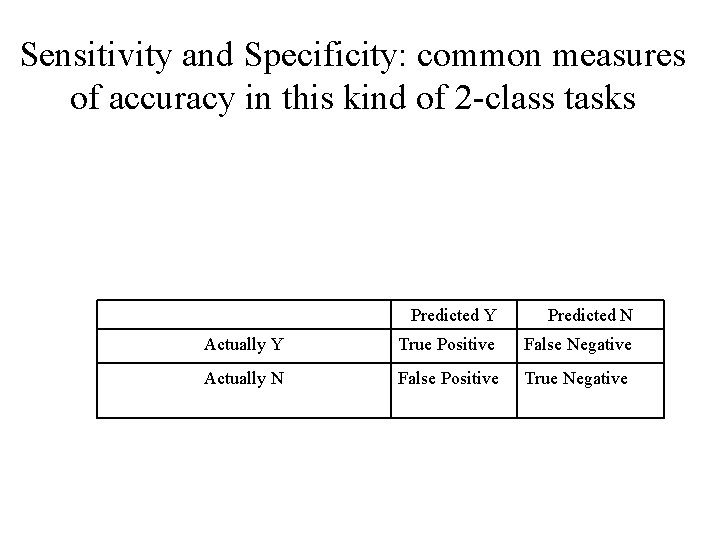


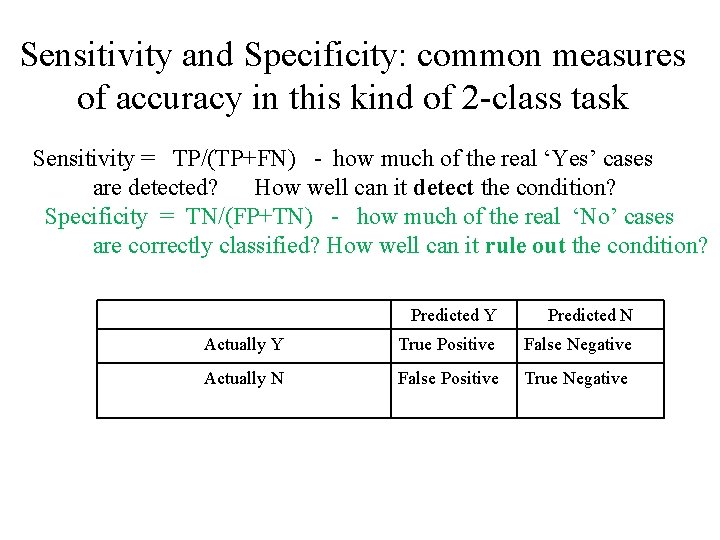

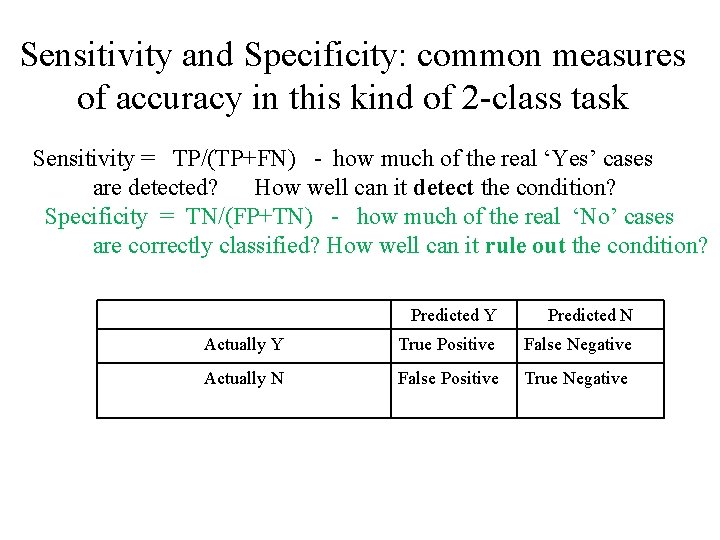

Sensitivity and Specificity: common measures of accuracy in this kind of 2-class task

- Sensitivity = TP/(TP+FN): how many of the real ‘Yes’ cases are detected? How well can it detect the condition?
- Specificity = TN/(FP+TN): how many of the real ‘No’ cases are correctly classified? How well can it rule out the condition?
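The two formulas above can be written directly as code. This is a minimal sketch: the function names and the example counts (16 actual ‘Yes’ cases, 12 actual ‘No’ cases) are illustrative assumptions, not taken from the slides.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Fraction of the real 'Yes' cases that are detected: TP / (TP + FN)."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Fraction of the real 'No' cases that are correctly classified: TN / (FP + TN)."""
    return tn / (fp + tn)


# Hypothetical confusion-matrix counts, chosen only for illustration.
tp, fn = 13, 3   # 16 actual 'Yes' cases
tn, fp = 10, 2   # 12 actual 'No' cases

sens = sensitivity(tp, fn)
spec = specificity(tn, fp)
print(f"sensitivity={sens:.4f} specificity={spec:.4f}")
```

Both measures use only one row of the confusion matrix each, so they do not depend on how many ‘Yes’ cases there are relative to ‘No’ cases.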

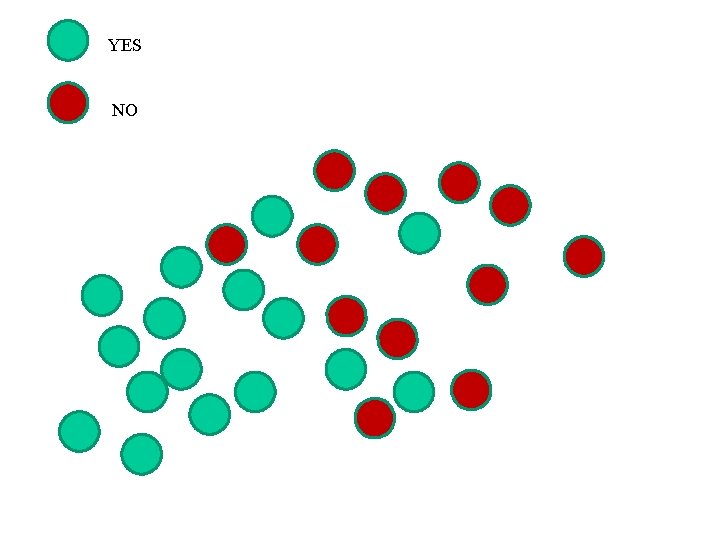

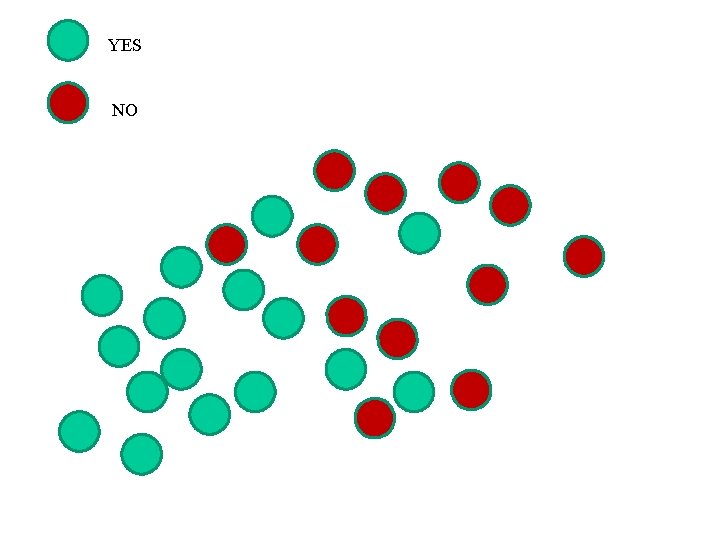


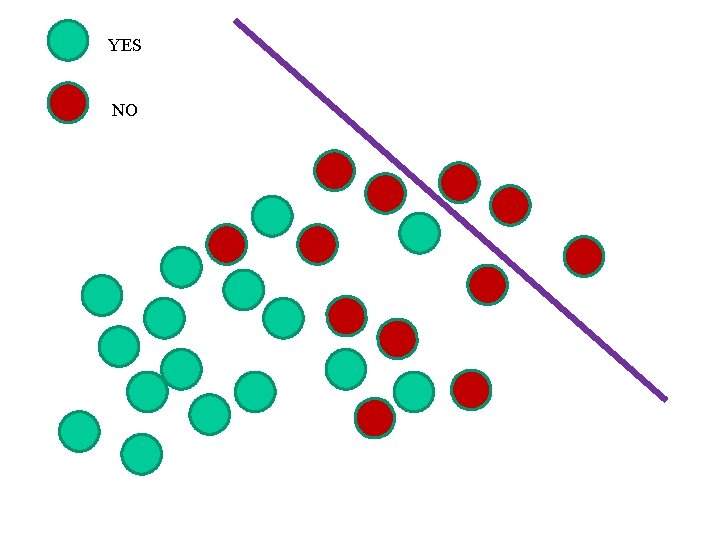

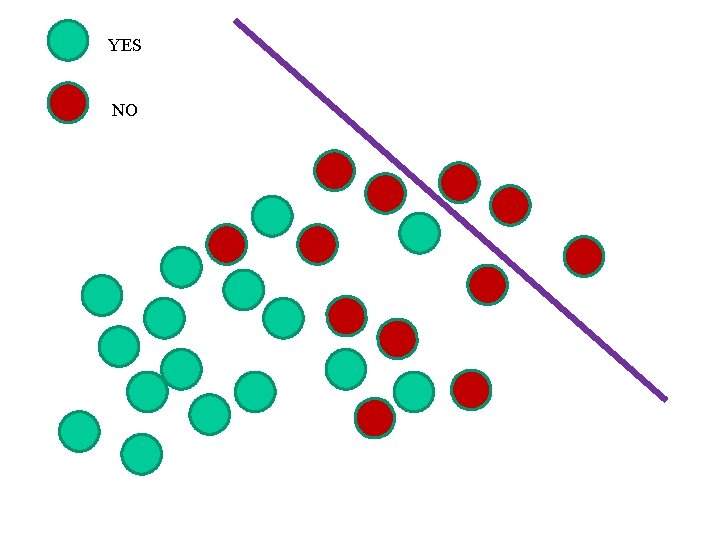


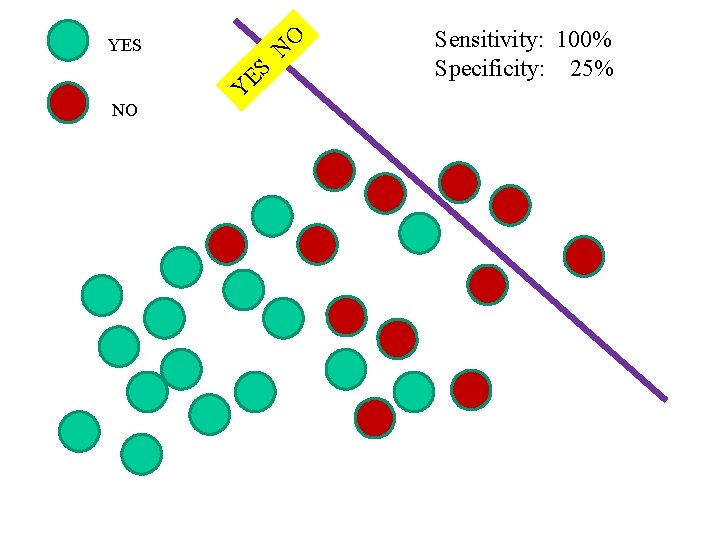

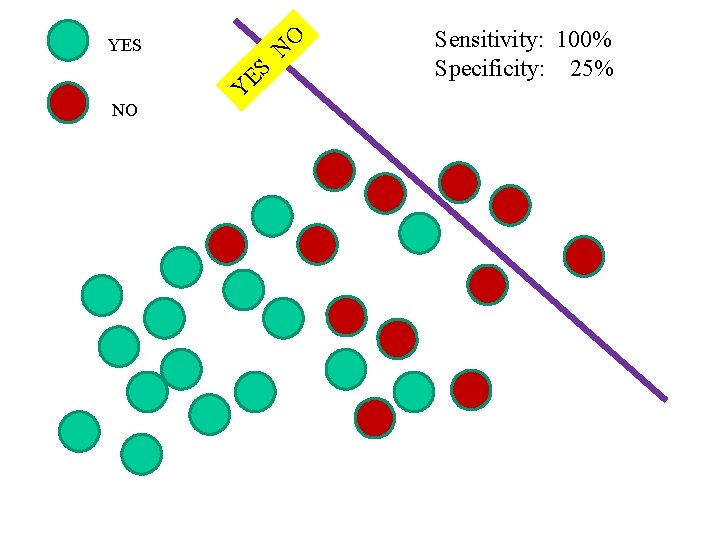

Sensitivity: 100%, Specificity: 25%

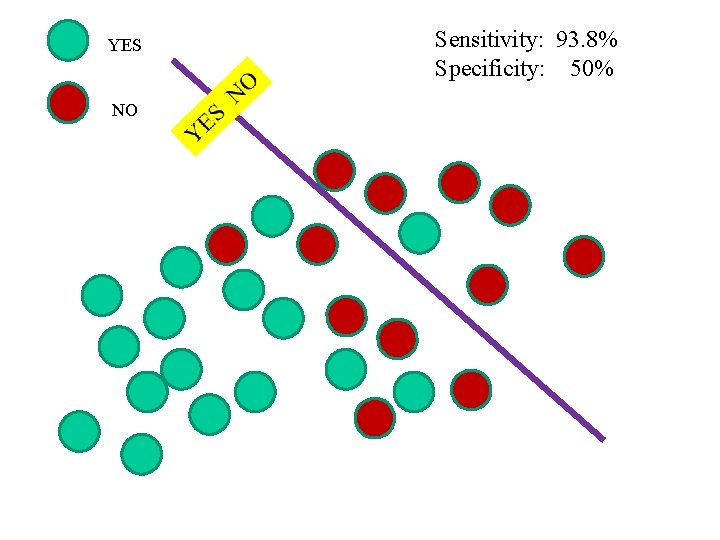

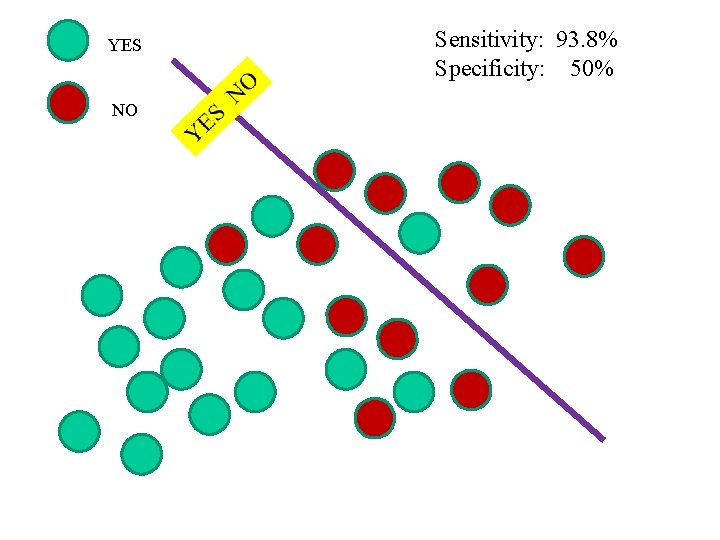

Sensitivity: 93.8%, Specificity: 50%

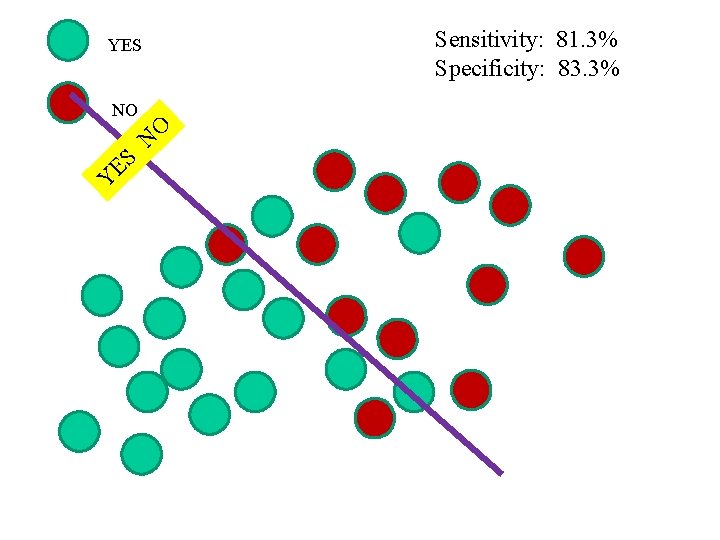

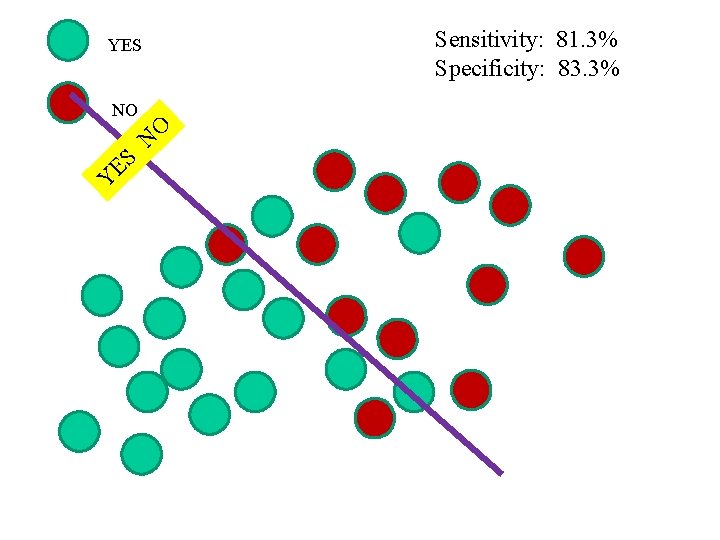

Sensitivity: 81.3%, Specificity: 83.3%

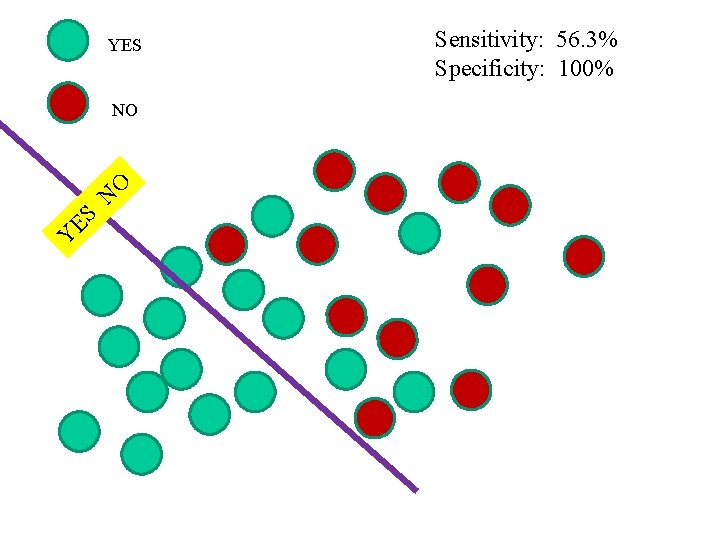

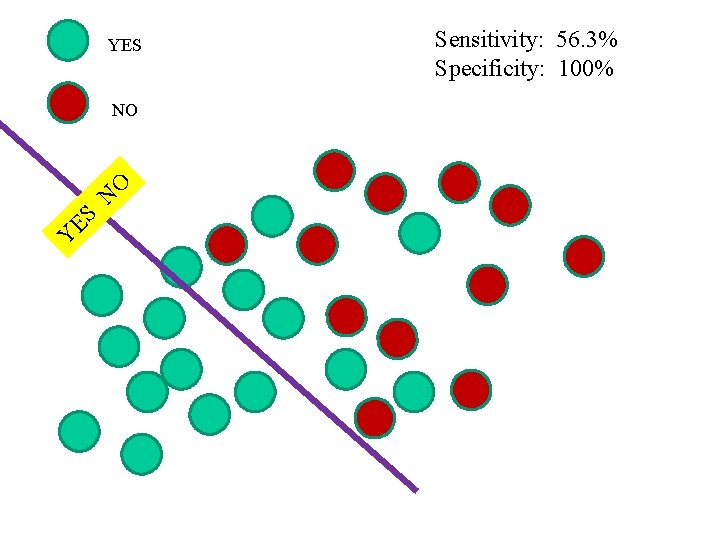

Sensitivity: 56.3%, Specificity: 100%

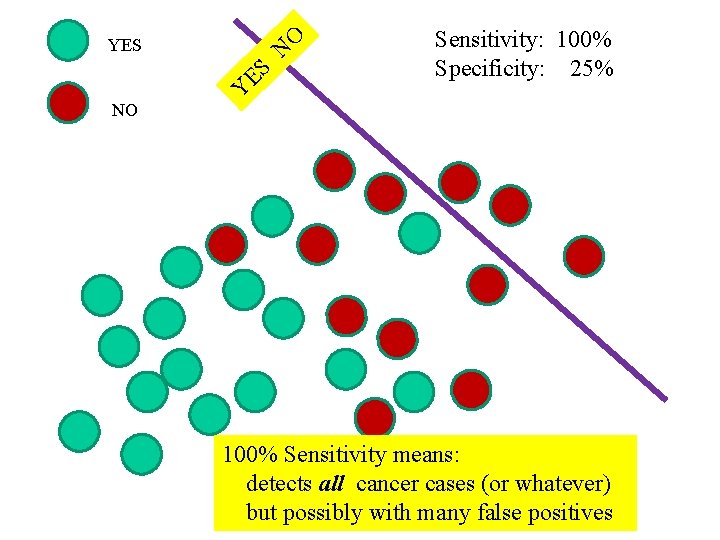

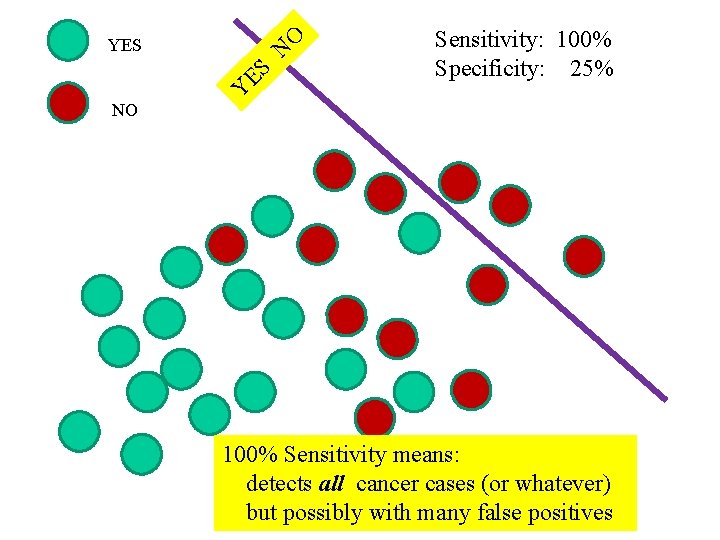

Sensitivity: 100%, Specificity: 25%

100% Sensitivity means: detects all cancer cases (or whatever), but possibly with many false positives.

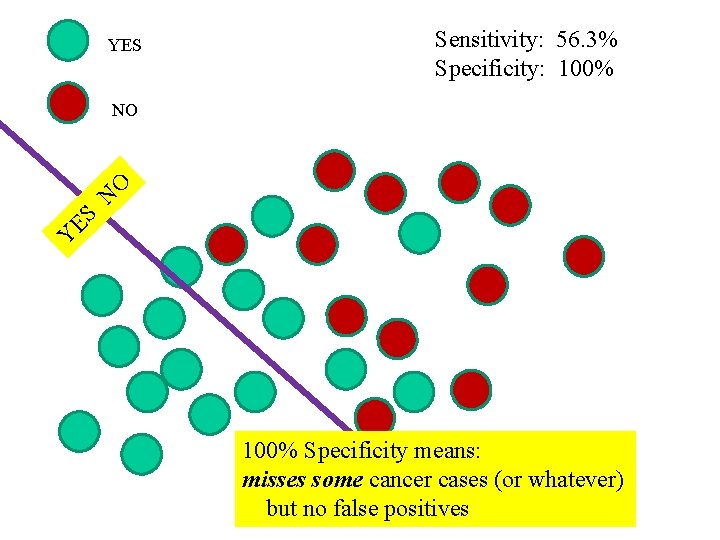

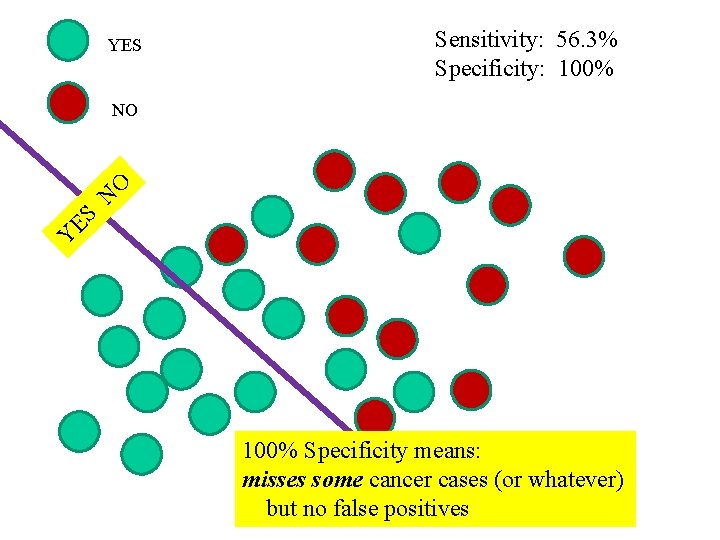

Sensitivity: 56.3%, Specificity: 100%

100% Specificity means: misses some cancer cases (or whatever), but no false positives.

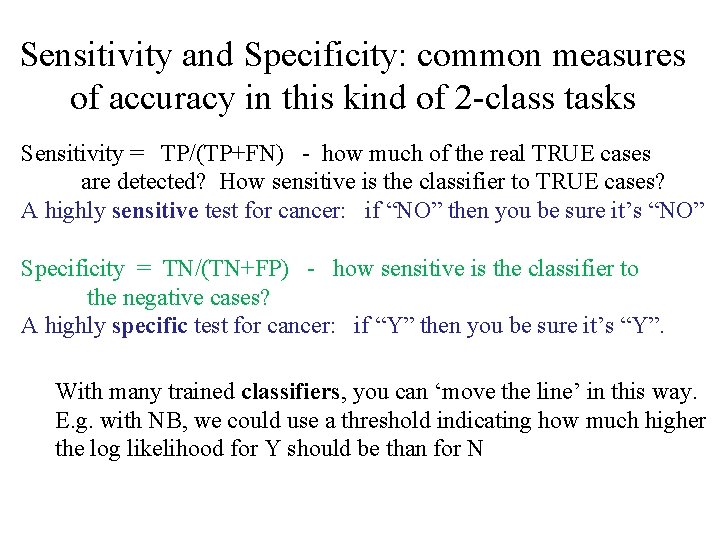

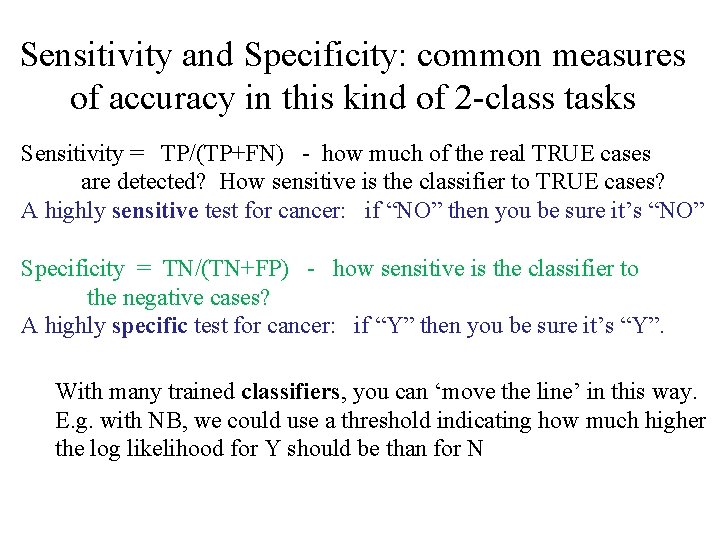

Sensitivity and Specificity: common measures of accuracy in this kind of 2-class task

- Sensitivity = TP/(TP+FN): how many of the real TRUE cases are detected? How sensitive is the classifier to TRUE cases? A highly sensitive test for cancer: if “NO” then you can be sure it’s “NO”.
- Specificity = TN/(TN+FP): how sensitive is the classifier to the negative cases? A highly specific test for cancer: if “Y” then you can be sure it’s “Y”.

With many trained classifiers, you can ‘move the line’ in this way. E.g. with NB, we could use a threshold indicating how much higher the log likelihood for Y should be than for N.
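‘Moving the line’ can be sketched as sweeping a threshold over a per-example score and recomputing sensitivity and specificity each time; the resulting pairs are the points of a ROC curve. The scores below stand in for a log-likelihood difference (Y minus N) and are made-up values; the function names are also assumptions for illustration.

```python
def confusion_at_threshold(scores, labels, threshold):
    """Predict Y when score > threshold; return (TP, FN, FP, TN)."""
    tp = fn = fp = tn = 0
    for score, is_yes in zip(scores, labels):
        predicted_yes = score > threshold
        if is_yes and predicted_yes:
            tp += 1
        elif is_yes:
            fn += 1
        elif predicted_yes:
            fp += 1
        else:
            tn += 1
    return tp, fn, fp, tn


def roc_points(scores, labels, thresholds):
    """Return (threshold, sensitivity, specificity) for each threshold."""
    points = []
    for threshold in thresholds:
        tp, fn, fp, tn = confusion_at_threshold(scores, labels, threshold)
        points.append((threshold, tp / (tp + fn), tn / (tn + fp)))
    return points


# Hypothetical scores: log-likelihood(Y) minus log-likelihood(N) per example.
scores = [2.5, 1.8, 0.9, 0.4, -0.2, 0.7, -0.5, -1.1, -1.9, -2.4]
labels = [True, True, True, True, True, False, False, False, False, False]

points = roc_points(scores, labels, thresholds=[-3.0, 0.0, 3.0])
for threshold, sens, spec in points:
    print(f"threshold={threshold:+.1f} sensitivity={sens:.2f} specificity={spec:.2f}")
```

A very low threshold labels everything Y (full sensitivity, no specificity); a very high threshold does the opposite; thresholds in between trade one measure against the other.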

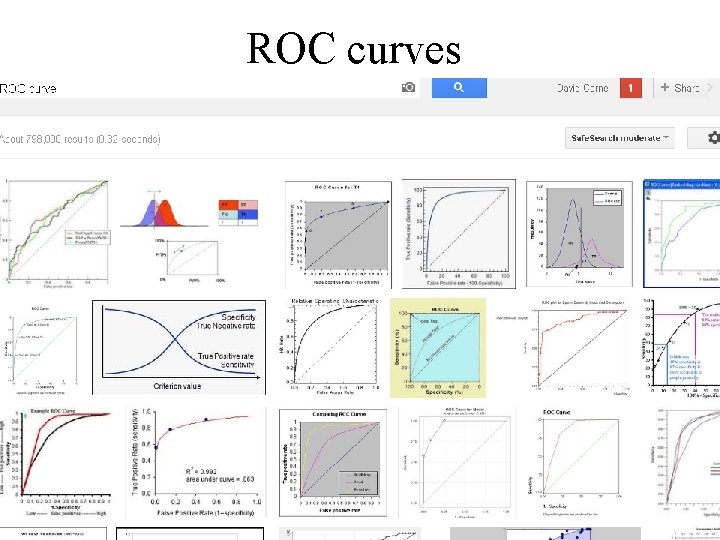

ROC curves

David Corne, and Nick Taylor, Heriot-Watt University - dwcorne@gmail.com

These slides and related resources: http://www.macs.hw.ac.uk/~dwcorne/Teaching/dmml.html

Rule Induction

• Rules are useful when you want to learn a clear / interpretable classifier, and are less worried about squeezing out as much accuracy as possible
• There are a number of different ways to ‘learn’ rules or rulesets.
• Before we go there, what is a rule / ruleset?

Rules IF Condition … Then Class Value is …

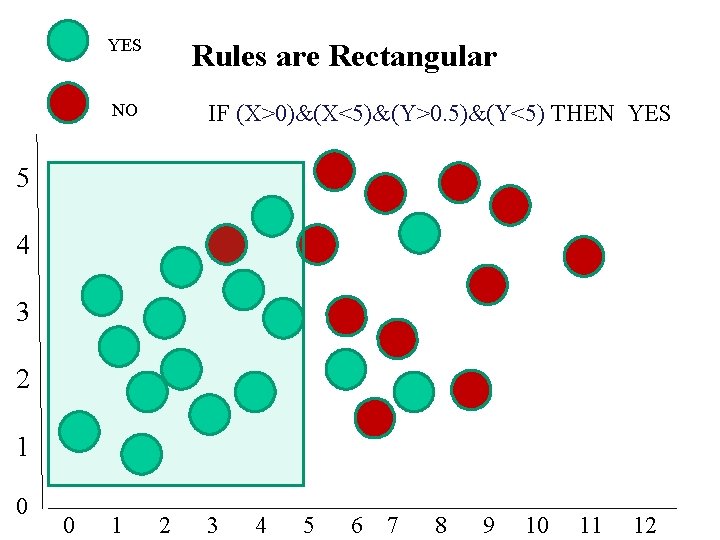

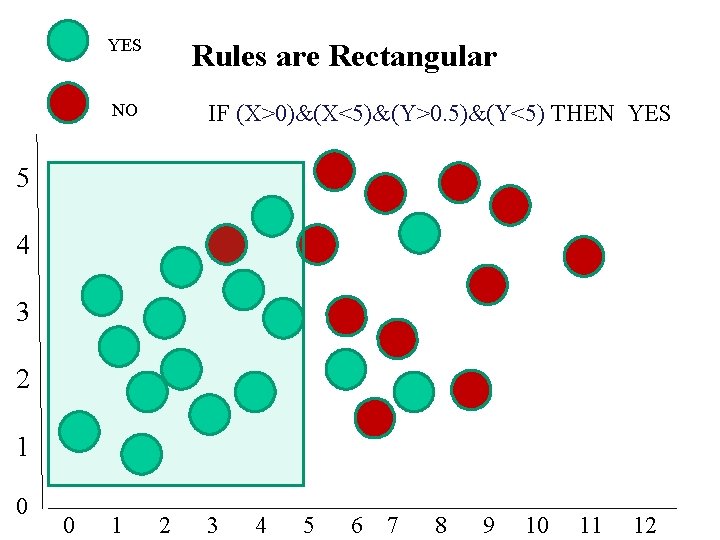

Rules are Rectangular

IF (X>0)&(X<5)&(Y>0.5)&(Y<5) THEN YES

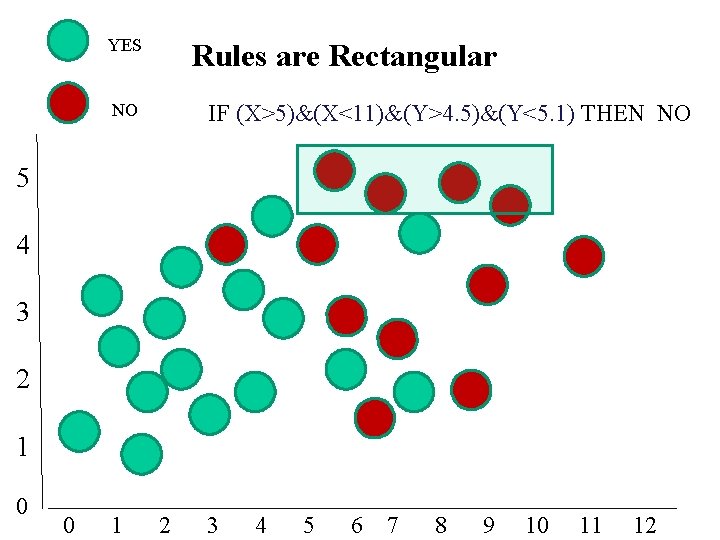

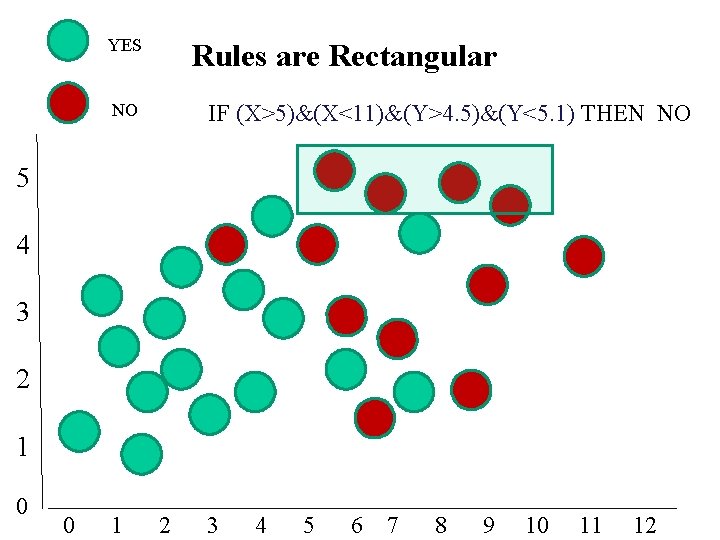

Rules are Rectangular

IF (X>5)&(X<11)&(Y>4.5)&(Y<5.1) THEN NO
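The two example rules can be represented as axis-aligned rectangles with a class label attached. This is a sketch only; the class name `RectRule` and its fields are hypothetical, and the strict inequalities follow the `>` and `<` used in the rules as written.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RectRule:
    """IF (X>x_min)&(X<x_max)&(Y>y_min)&(Y<y_max) THEN label."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    label: str

    def covers(self, x: float, y: float) -> bool:
        # Strict inequalities, matching the rule text.
        return self.x_min < x < self.x_max and self.y_min < y < self.y_max


rule_yes = RectRule(0, 5, 0.5, 5, "YES")
rule_no = RectRule(5, 11, 4.5, 5.1, "NO")

for point in [(2, 3), (8, 5.0), (5, 3)]:
    fired = [r.label for r in (rule_yes, rule_no) if r.covers(*point)]
    print(point, fired)
```

Each condition in a rule bounds one attribute, which is why a conjunction of such conditions always carves out a rectangle (or a box in more dimensions).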

A Ruleset

- IF Condition 1 … Then Class = A
- IF Condition 2 … Then Class = A
- IF Condition 3 … Then Class = B
- IF Condition 4 … Then Class = C
- …

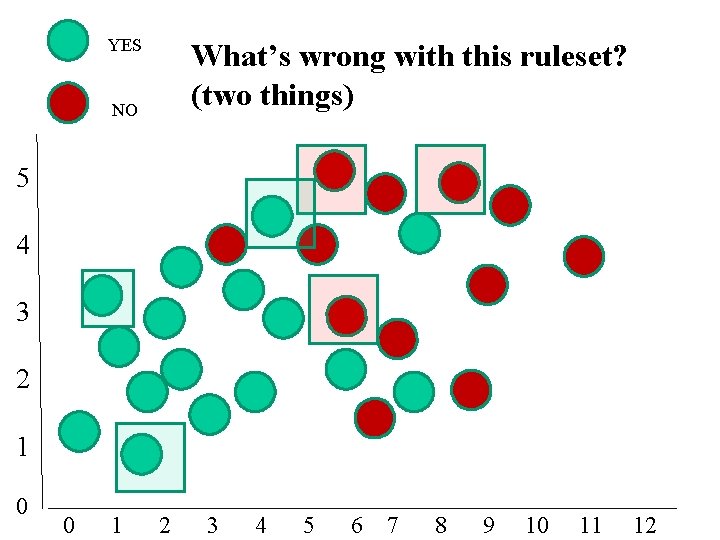

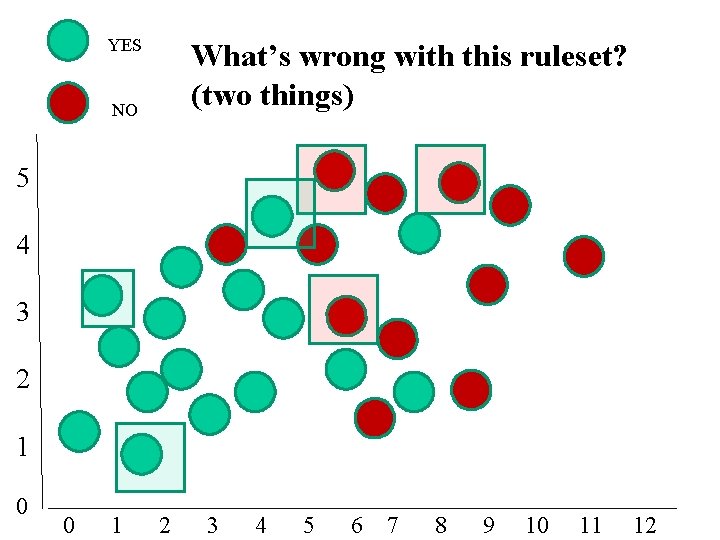

What’s wrong with this ruleset? (two things)

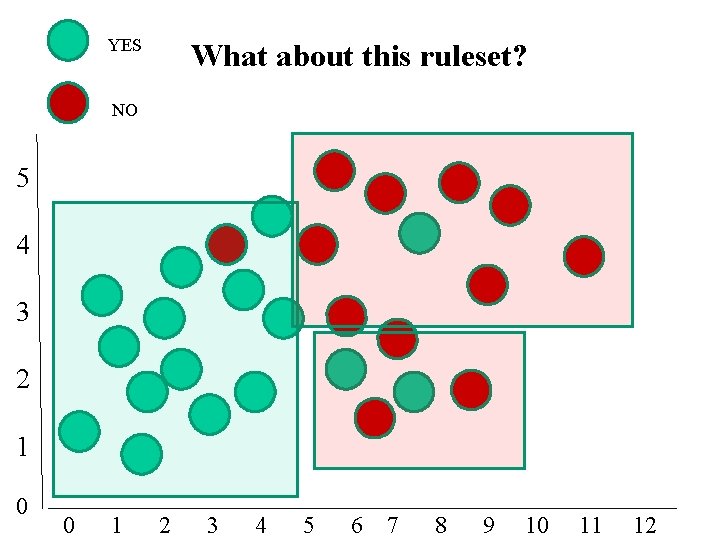

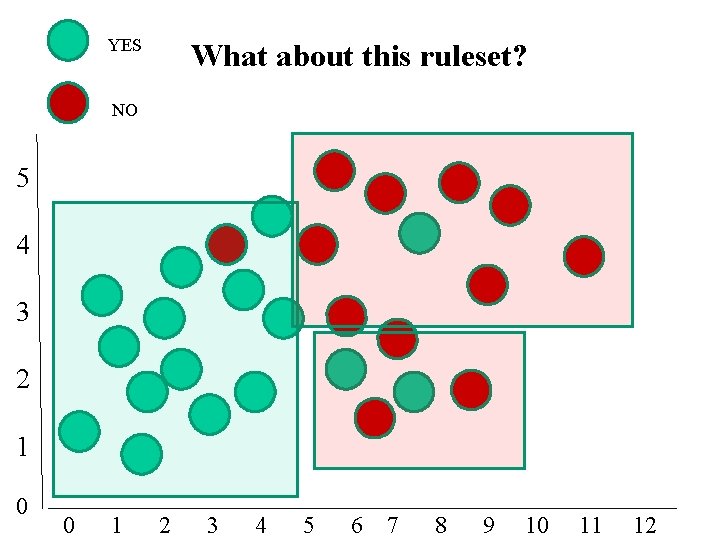

What about this ruleset?

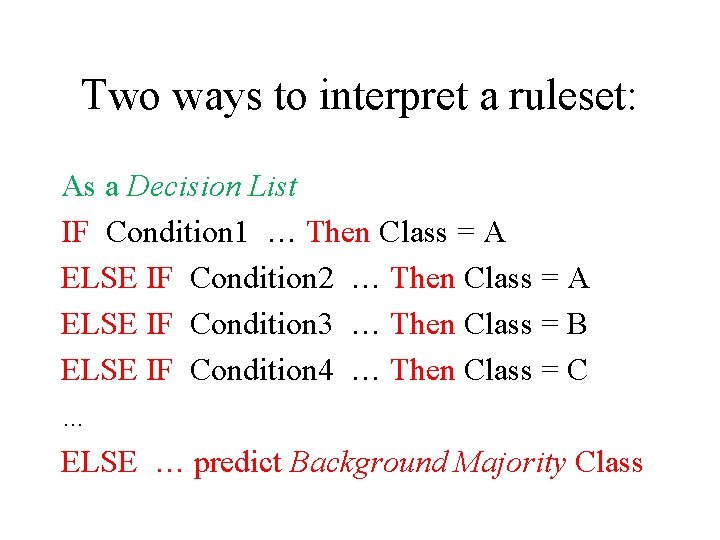

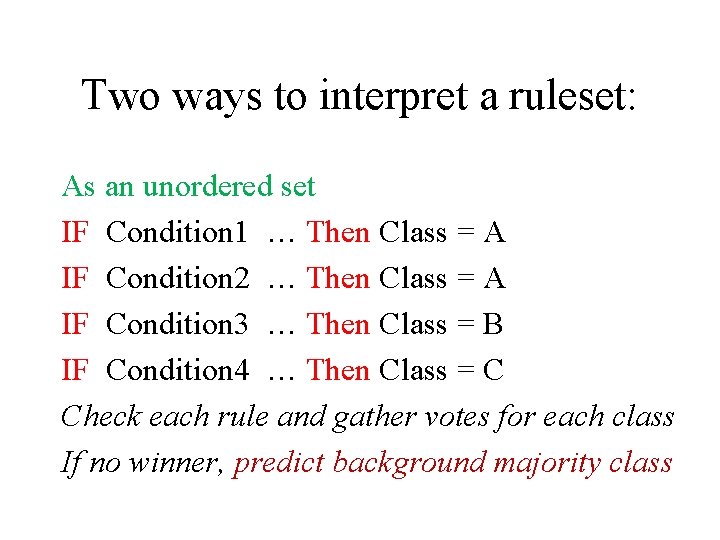

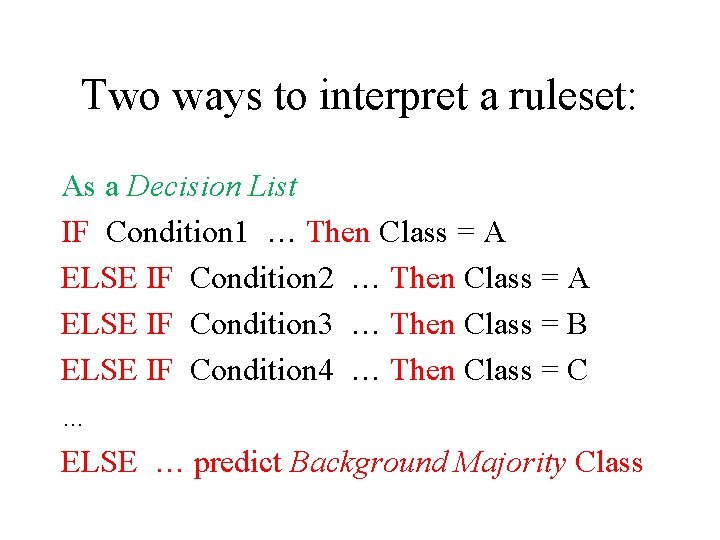

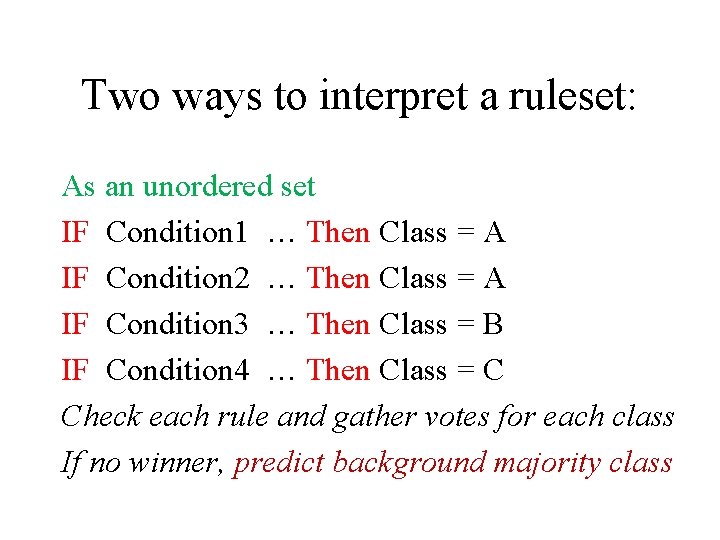

Two ways to interpret a ruleset:

As a Decision List

- IF Condition 1 … Then Class = A
- ELSE IF Condition 2 … Then Class = A
- ELSE IF Condition 3 … Then Class = B
- ELSE IF Condition 4 … Then Class = C
- …
- ELSE … predict Background Majority Class

As an unordered set

- IF Condition 1 … Then Class = A
- IF Condition 2 … Then Class = A
- IF Condition 3 … Then Class = B
- IF Condition 4 … Then Class = C

Check each rule and gather votes for each class. If no winner, predict background majority class.
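The difference between the two interpretations can be sketched in a few lines. The rules here are hypothetical (condition, class) pairs over a single number, and treating a tied vote as ‘no winner’ is an assumption about how ties are handled.

```python
from collections import Counter


def predict_decision_list(rules, default, x):
    """Ordered interpretation: the first rule whose condition holds decides."""
    for condition, label in rules:
        if condition(x):
            return label
    return default


def predict_unordered(rules, default, x):
    """Unordered interpretation: every matching rule votes for its class."""
    votes = Counter(label for condition, label in rules if condition(x))
    ranked = votes.most_common()
    if not ranked:
        return default
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return default  # tie: no winner, fall back to the majority class
    return ranked[0][0]


# Hypothetical ruleset; "C" plays the role of the background majority class.
rules = [
    (lambda x: x > 8, "A"),
    (lambda x: x > 5, "B"),
    (lambda x: x % 2 == 0, "B"),
]

for x in (10, 9, 4, 3):
    print(x, predict_decision_list(rules, "C", x), predict_unordered(rules, "C", x))
```

For the input 10, the decision list stops at the first rule while the vote counts two rules for the other class, so the same ruleset gives different predictions under the two readings.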

Three broad ways to learn rulesets

1. Just build a decision tree with ID3 (or something else) and you can translate the tree into rules!

2. Use any good search/optimisation algorithm. Evolutionary (genetic) algorithms are the most common. You will do this in coursework 3. This means simply guessing a ruleset at random, and then trying mutations and variants, gradually improving them over time.

3. A number of ‘old’ AI algorithms exist that still work well, and/or can be engineered to work with an evolutionary algorithm. The basic idea is: iterated coverage

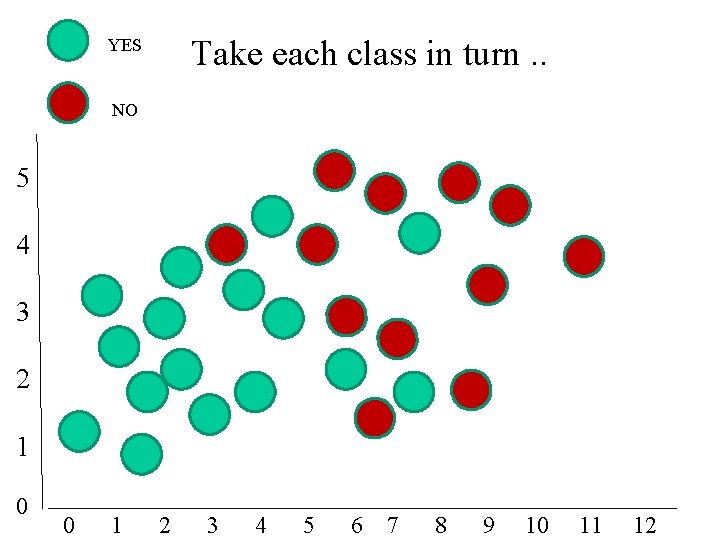

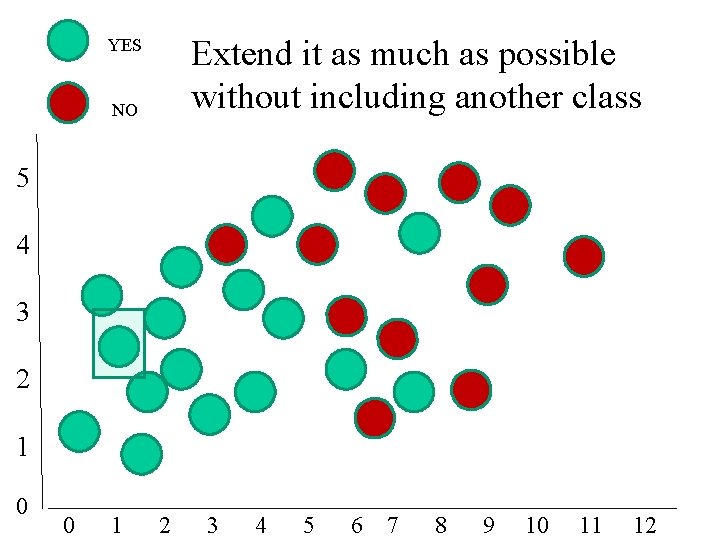

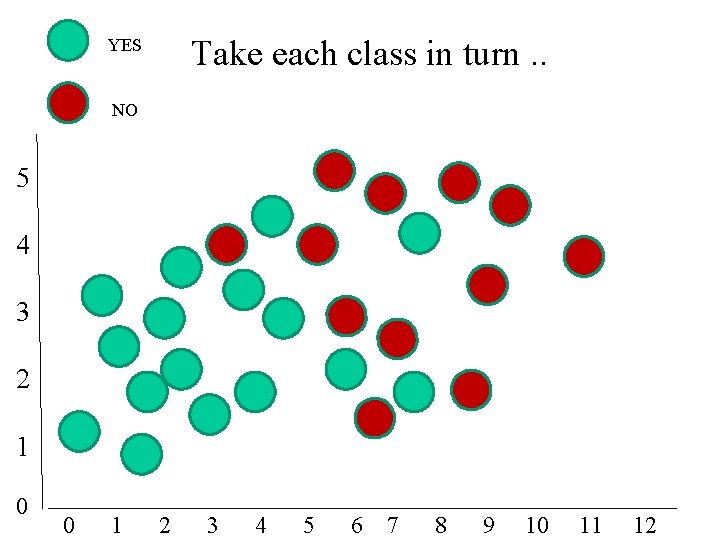

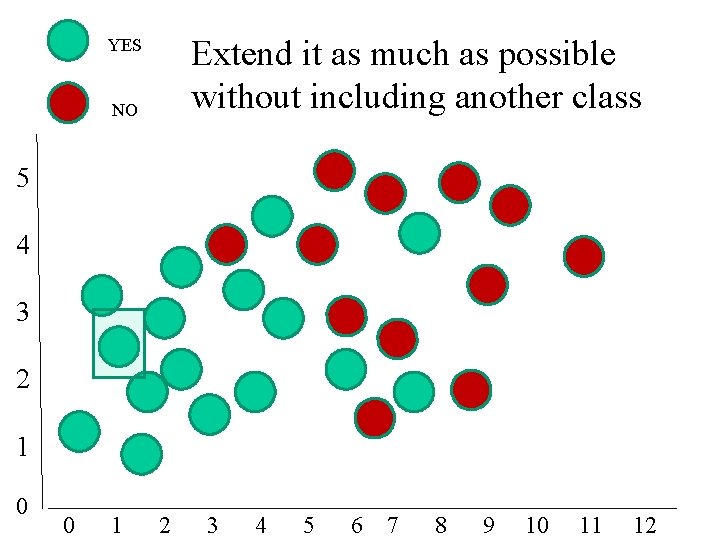

Take each class in turn...

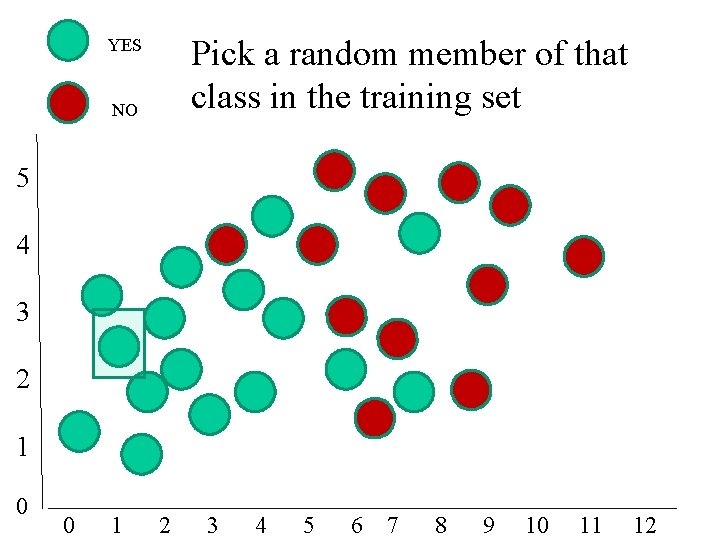

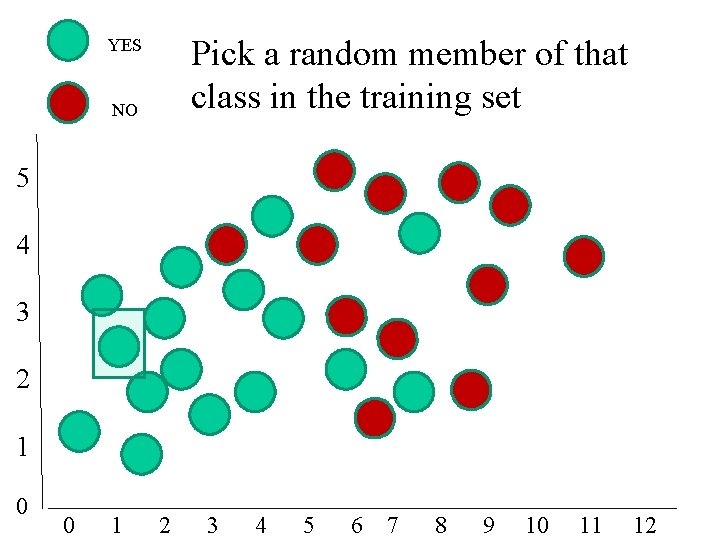

Pick a random member of that class in the training set

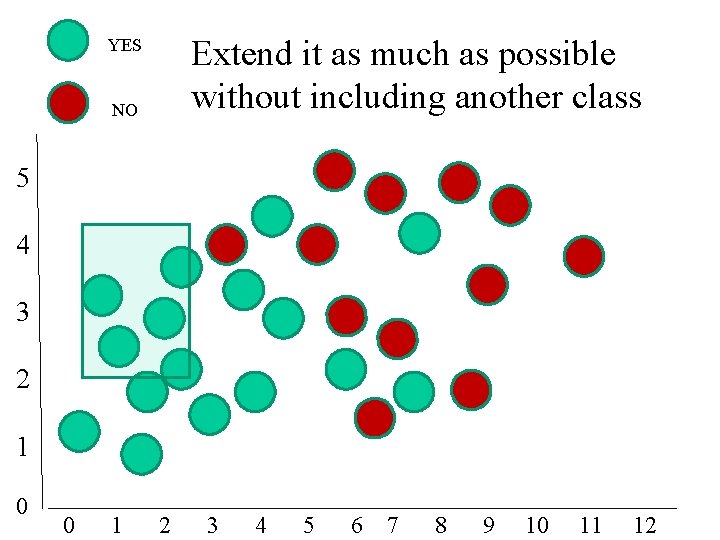

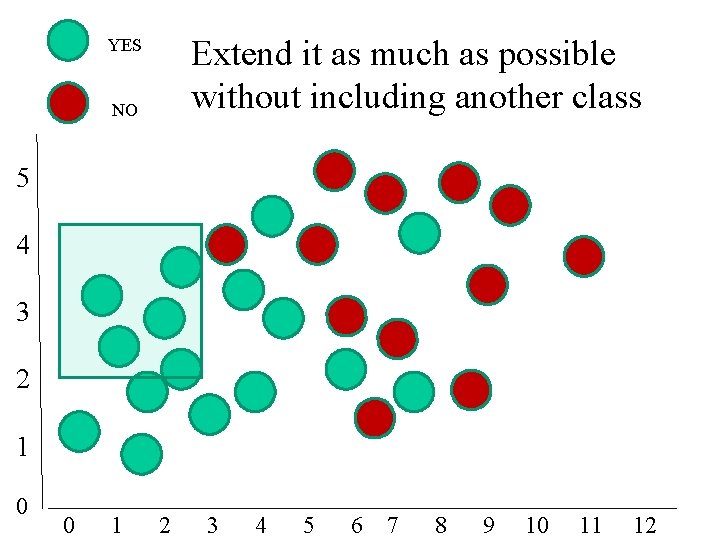

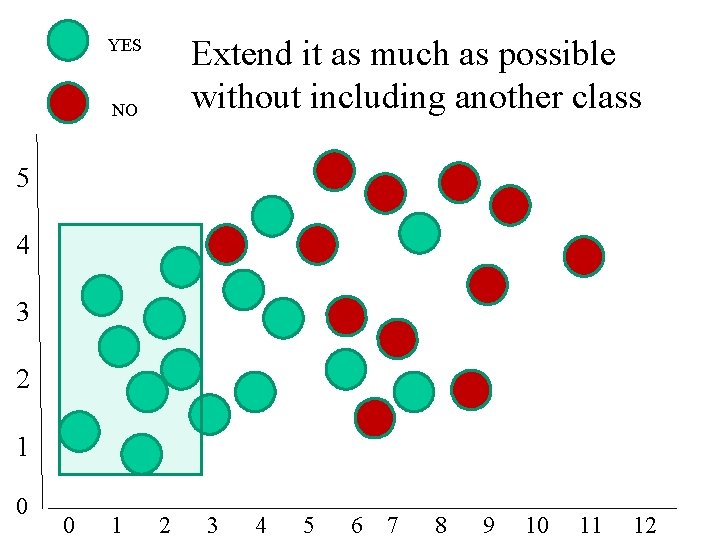

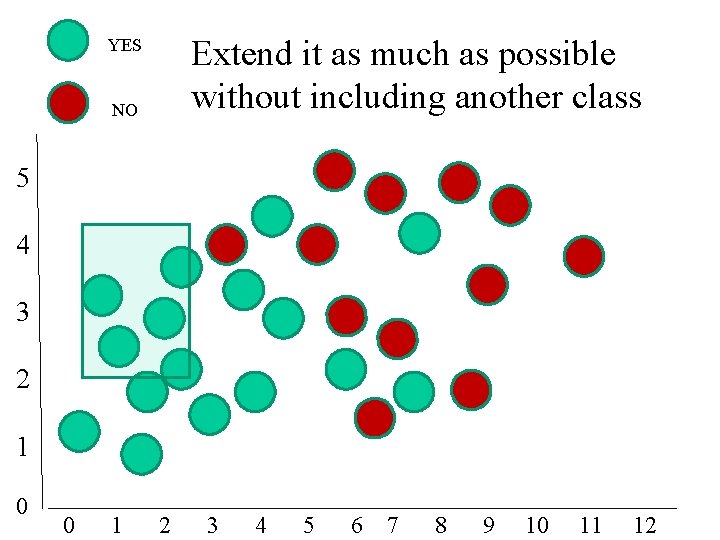

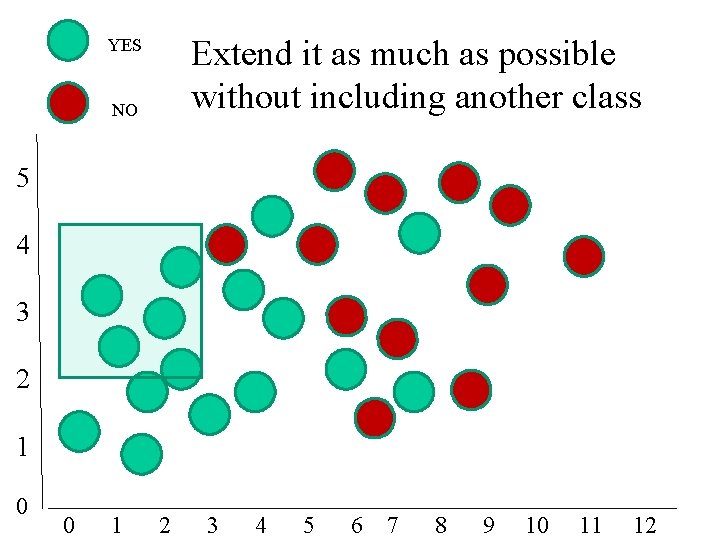

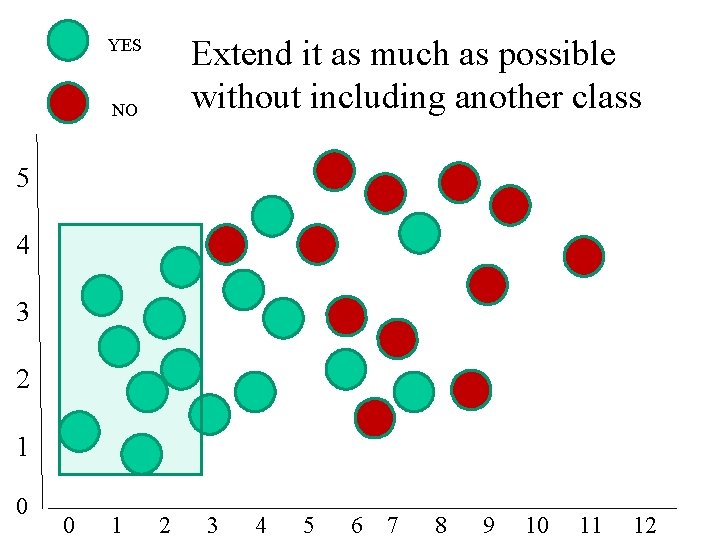

Extend it as much as possible without including another class

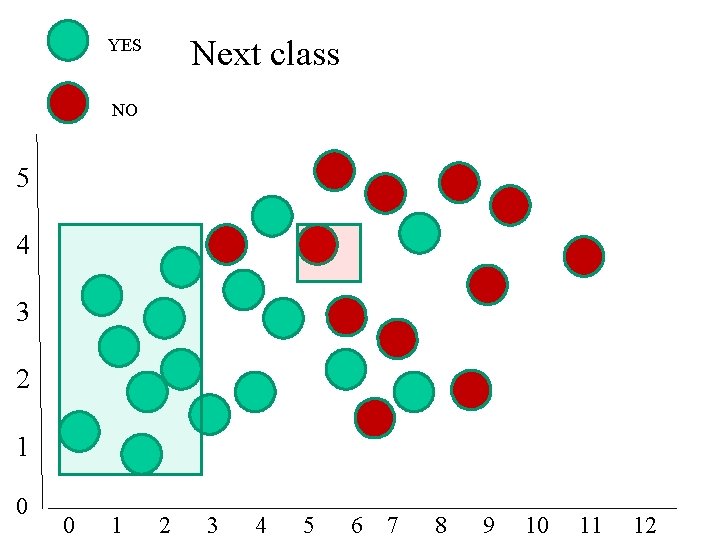

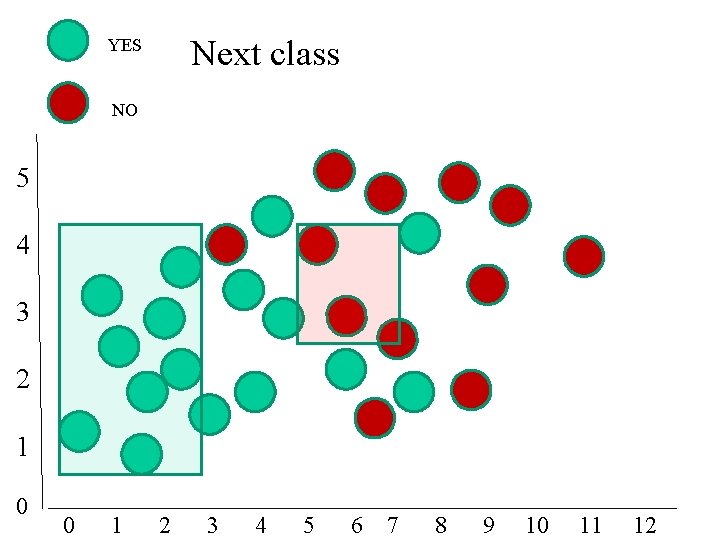

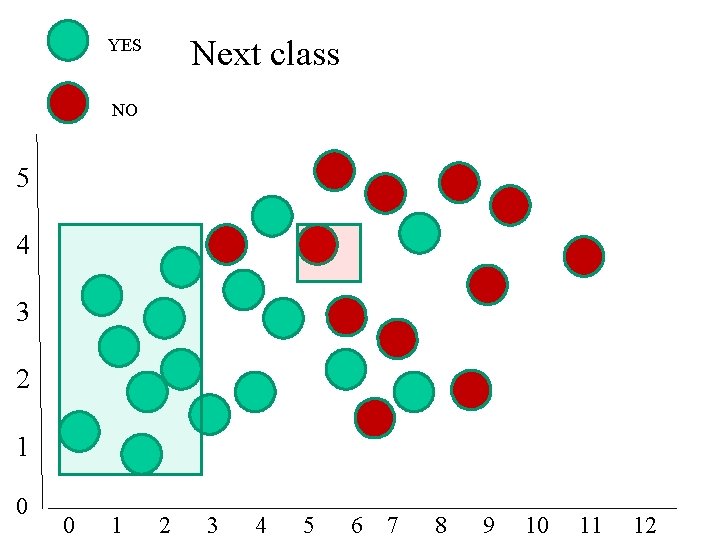

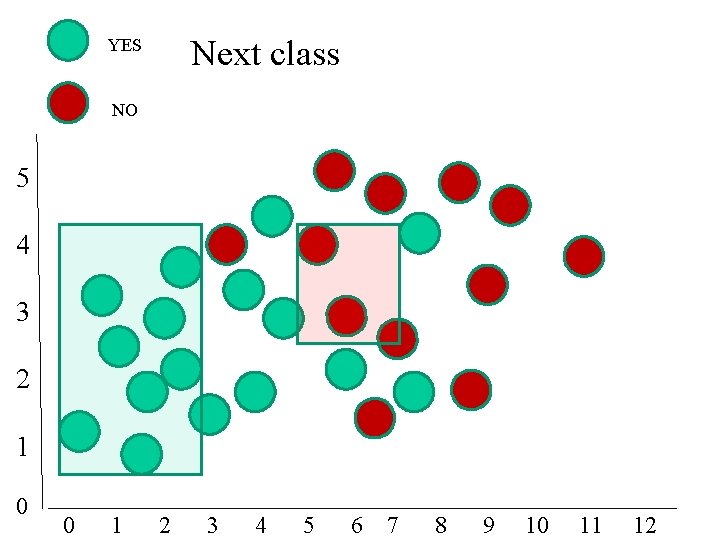

Next class

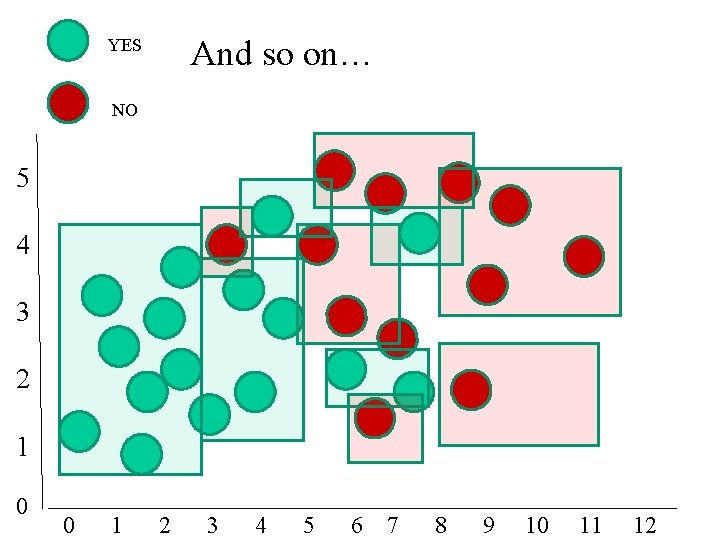

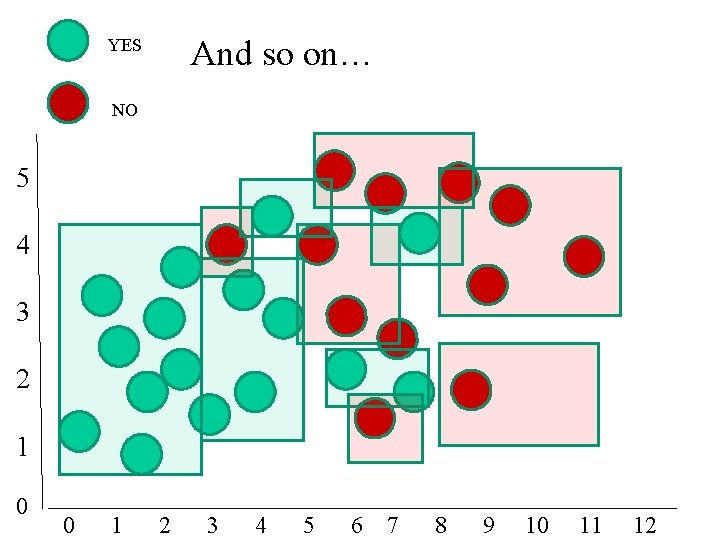

And so on…
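The iterated-coverage steps above (take each class in turn, pick a random member, extend a rectangle as far as possible without including another class, repeat) can be sketched as follows. This is one possible reading, not the specific algorithm from the lecture: the greedy ‘grow towards the nearest same-class points first’ strategy, the inclusive box bounds, and the toy data are all assumptions.

```python
import random


def box_contains(box, p):
    """box = (x_min, x_max, y_min, y_max), bounds inclusive."""
    x0, x1, y0, y1 = box
    return x0 <= p[0] <= x1 and y0 <= p[1] <= y1


def grow_box(seed, same, others):
    """Start at the seed point and greedily extend towards same-class points,
    rejecting any extension that would include a point of another class."""
    box = (seed[0], seed[0], seed[1], seed[1])
    by_distance = sorted(same, key=lambda q: (q[0] - seed[0]) ** 2 + (q[1] - seed[1]) ** 2)
    for p in by_distance:
        candidate = (min(box[0], p[0]), max(box[1], p[0]),
                     min(box[2], p[1]), max(box[3], p[1]))
        if not any(box_contains(candidate, o) for o in others):
            box = candidate
    return box


def iterated_coverage(data, rng):
    """data: list of ((x, y), label). Returns a list of (box, label) rules."""
    rules = []
    for label in sorted({lab for _, lab in data}):
        others = [p for p, lab in data if lab != label]
        uncovered = [p for p, lab in data if lab == label]
        while uncovered:
            seed = rng.choice(uncovered)          # random member of this class
            box = grow_box(seed, uncovered, others)
            rules.append((box, label))
            uncovered = [p for p in uncovered if not box_contains(box, p)]
    return rules


# Hypothetical 2-D training set.
data = [((1, 1), "YES"), ((2, 2), "YES"), ((1, 3), "YES"), ((6, 4), "YES"),
        ((8, 1), "NO"), ((9, 2), "NO"), ((3, 2.5), "NO")]
rules = iterated_coverage(data, random.Random(1))
for box, label in rules:
    print(label, box)
```

Each pass covers at least the seed point, so the loop terminates, and every training point ends up inside at least one rectangle of its own class.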

Text as Data: what and why?

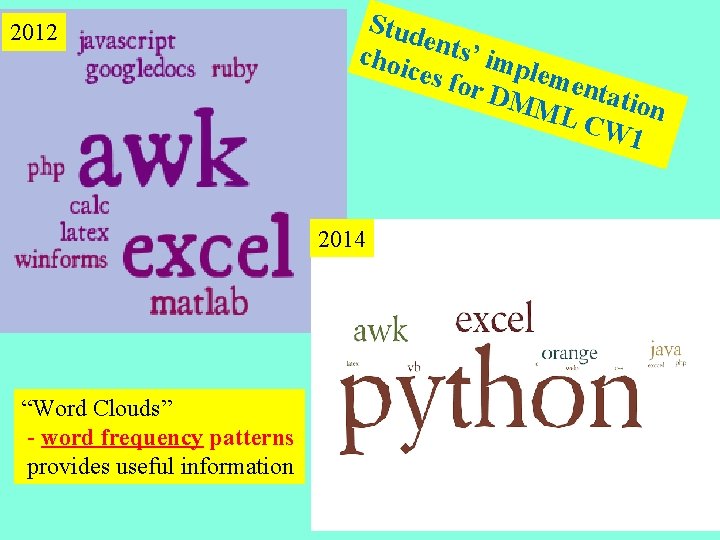

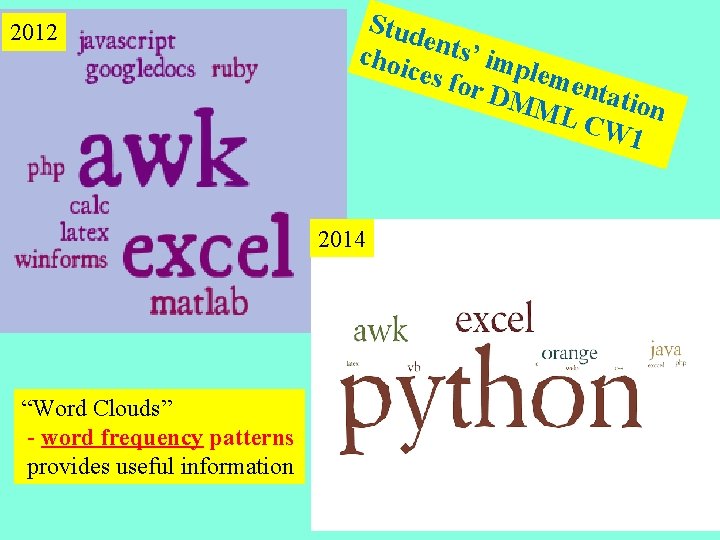

Students’ implementation choices for DMML CW1 (2012, 2014)

“Word Clouds”: word frequency patterns provide useful information

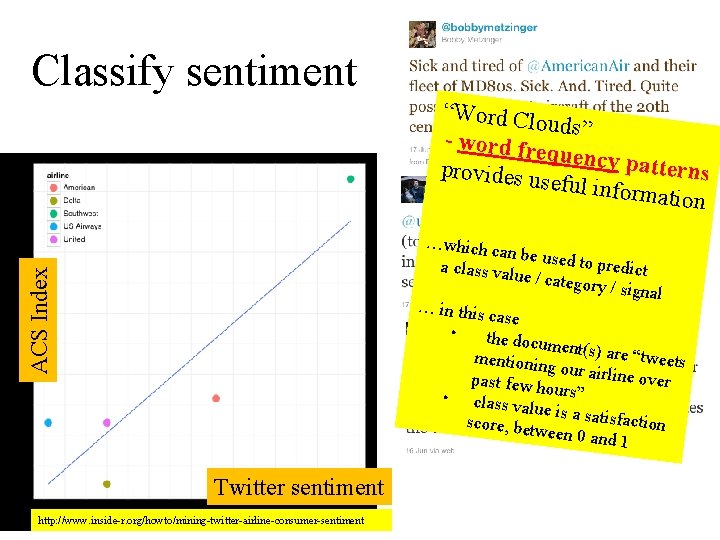

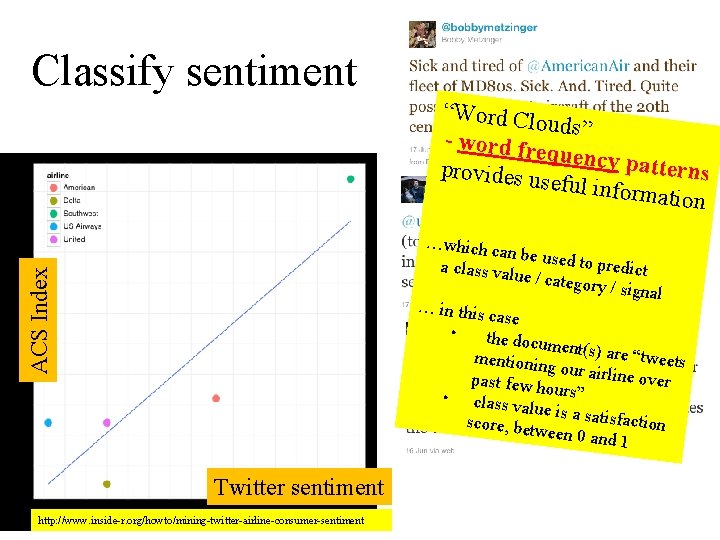

Classify sentiment

“Word Clouds”: word frequency patterns provide useful information …which can be used to predict a class value / category / signal … in this case:

• the document(s) are “tweets mentioning our airline over past few hours”
• class value is a satisfaction score, between 0 and 1

Figure labels: ACS Index, Twitter sentiment

http://www.inside-r.org/howto/mining-twitter-airline-consumer-sentiment

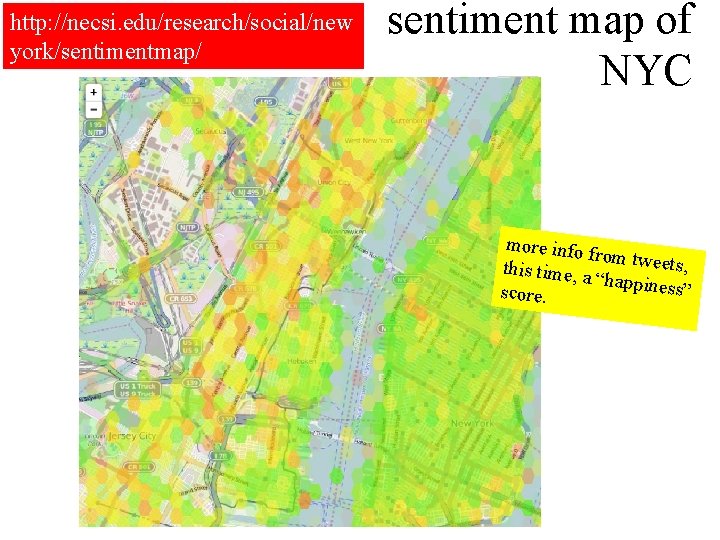

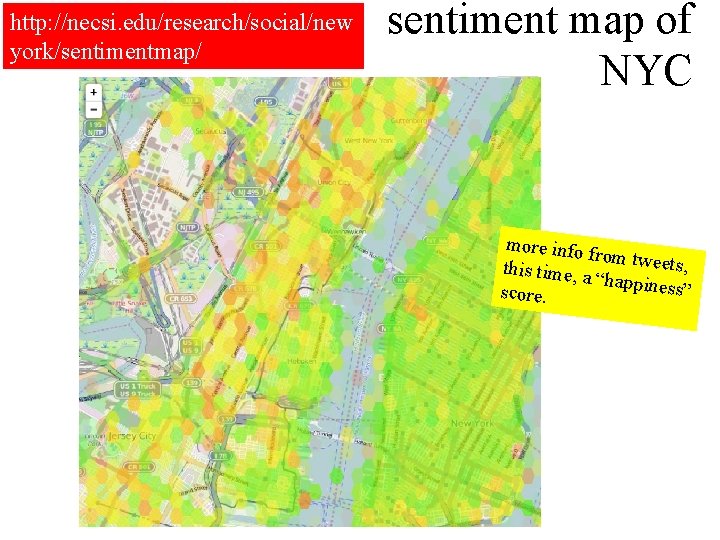

http://necsi.edu/research/social/newyork/sentimentmap/

Sentiment map of NYC: more info from tweets; this time, a “happiness” score.

“similar pages”: based on distances between word frequency patterns

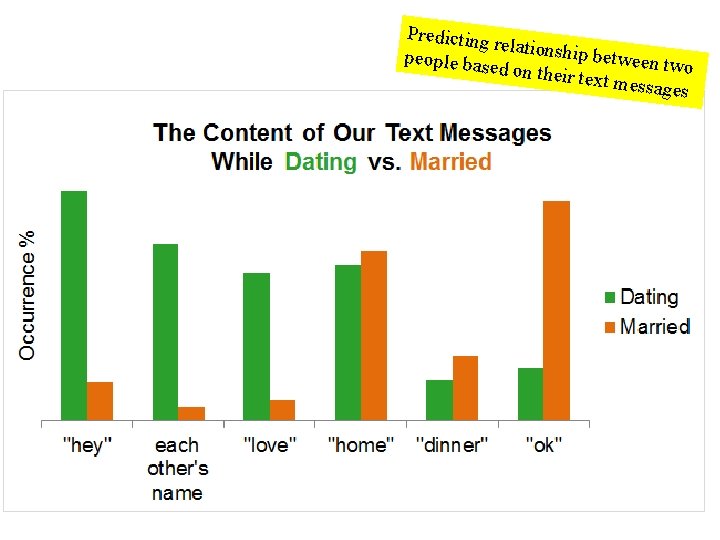

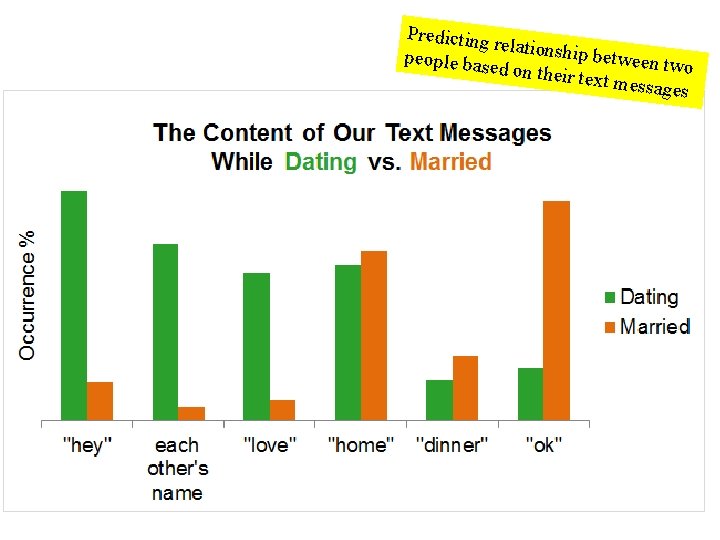

Predicting relationship between two people based on their text messages

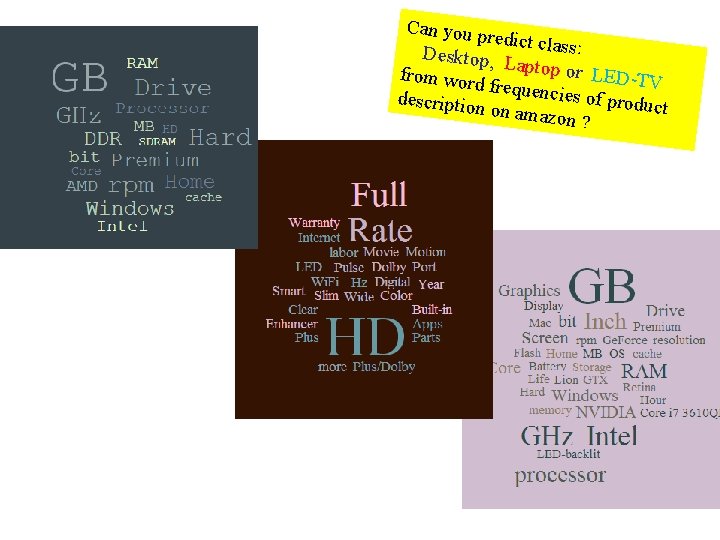

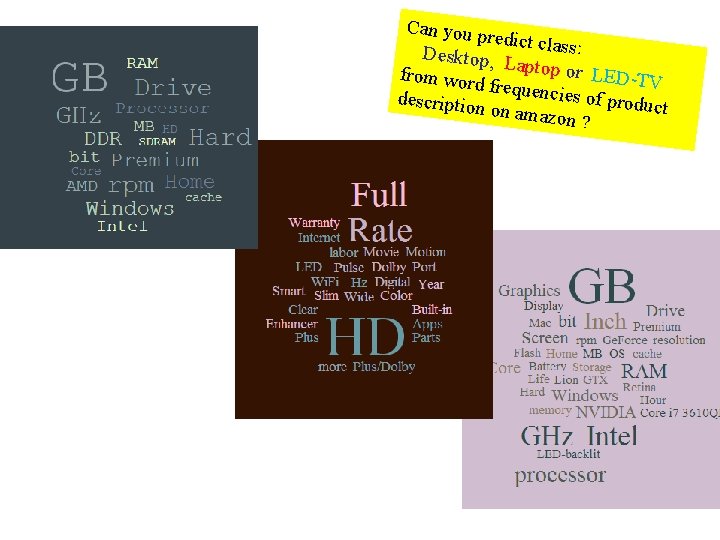

Can you predict class: Desktop, Laptop or LED-TV from word frequencies of product description on amazon?

So, word frequency is important – does this mean that the most frequent words in a text carry the most useful information about its content/category/meaning?

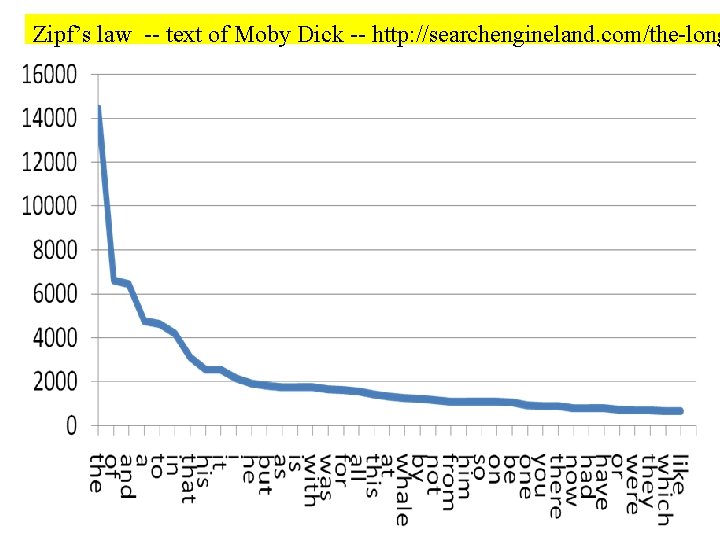

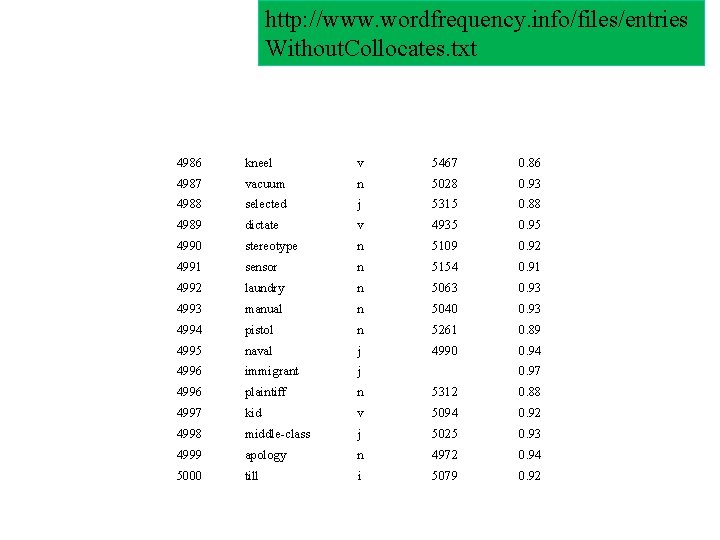

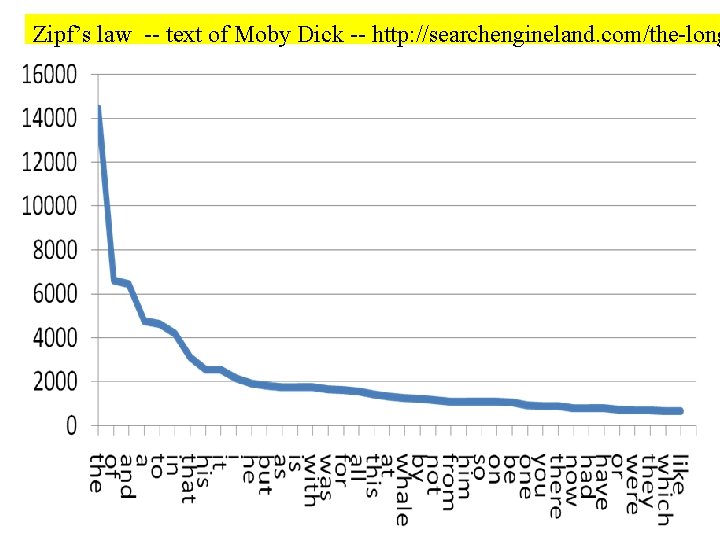

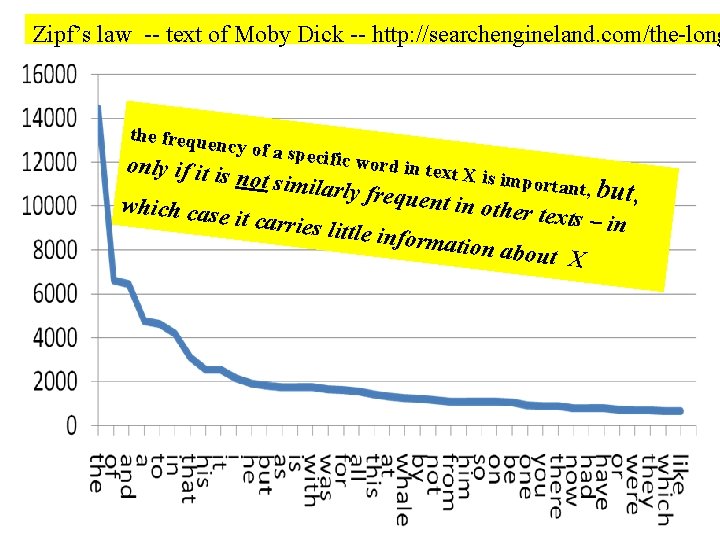

Zipf’s law -- text of Moby Dick -- http://searchengineland.com/the-long

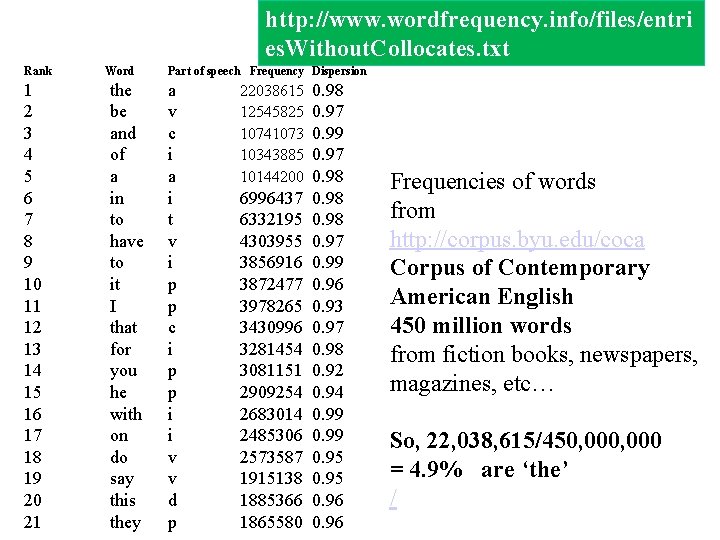

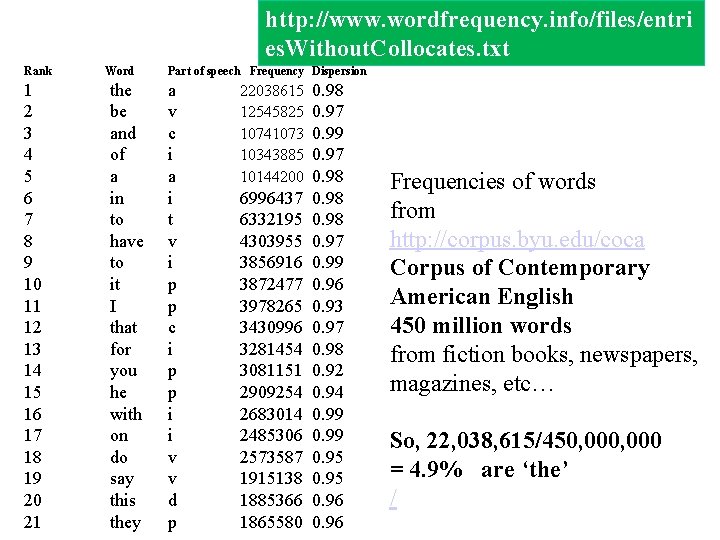

Frequencies of words from http://corpus.byu.edu/coca (Corpus of Contemporary American English): 450 million words from fiction books, newspapers, magazines, etc…

Source: http://www.wordfrequency.info/files/entriesWithoutCollocates.txt

| Rank | Word | Part of speech | Frequency | Dispersion |
|---|---|---|---|---|
| 1 | the | a | 22038615 | 0.98 |
| 2 | be | v | 12545825 | 0.97 |
| 3 | and | c | 10741073 | 0.99 |
| 4 | of | i | 10343885 | 0.97 |
| 5 | a | a | 10144200 | 0.98 |
| 6 | in | i | 6996437 | 0.98 |
| 7 | to | t | 6332195 | 0.98 |
| 8 | have | v | 4303955 | 0.97 |
| 9 | to | i | 3856916 | 0.99 |
| 10 | it | p | 3872477 | 0.96 |
| 11 | I | p | 3978265 | 0.93 |
| 12 | that | c | 3430996 | 0.97 |
| 13 | for | i | 3281454 | 0.98 |
| 14 | you | p | 3081151 | 0.92 |
| 15 | he | p | 2909254 | 0.94 |
| 16 | with | i | 2683014 | 0.99 |
| 17 | on | i | 2485306 | 0.99 |
| 18 | do | v | 2573587 | 0.95 |
| 19 | say | v | 1915138 | 0.95 |
| 20 | this | d | 1885366 | 0.96 |
| 21 | they | p | 1865580 | 0.96 |

So, 22,038,615/450,000,000 = 4.9% of the words are ‘the’.
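The share quoted for ‘the’ can be checked with a couple of lines of arithmetic, using the corpus size of 450 million words stated on the slide. The variable names are illustrative; the frequencies are the top five from the listing.

```python
CORPUS_SIZE = 450_000_000  # "450 million words", as stated for the corpus

# Frequencies of the five highest-ranked words in the listing.
top_frequencies = {
    "the": 22_038_615,
    "be": 12_545_825,
    "and": 10_741_073,
    "of": 10_343_885,
    "a": 10_144_200,
}

share_the = top_frequencies["the"] / CORPUS_SIZE
share_top5 = sum(top_frequencies.values()) / CORPUS_SIZE

print(f"'the' makes up {share_the:.1%} of the corpus")
print(f"the five most frequent words make up {share_top5:.1%}")
```

A handful of very common words accounts for a large fraction of all text, which is the pattern Zipf’s law describes.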

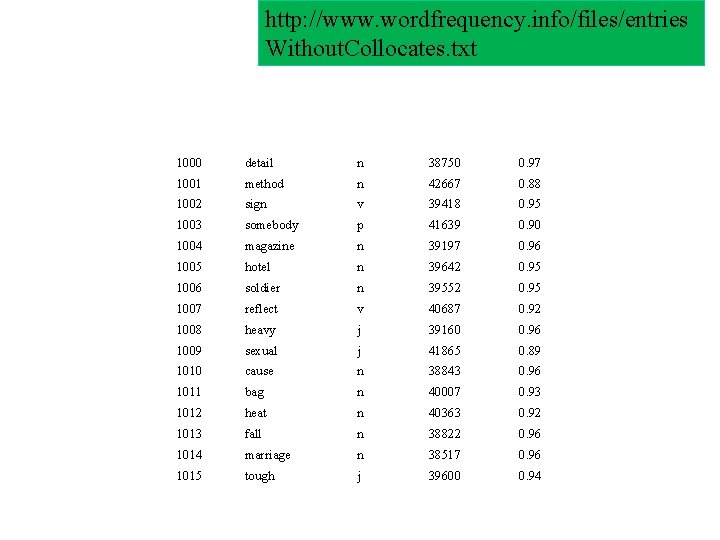

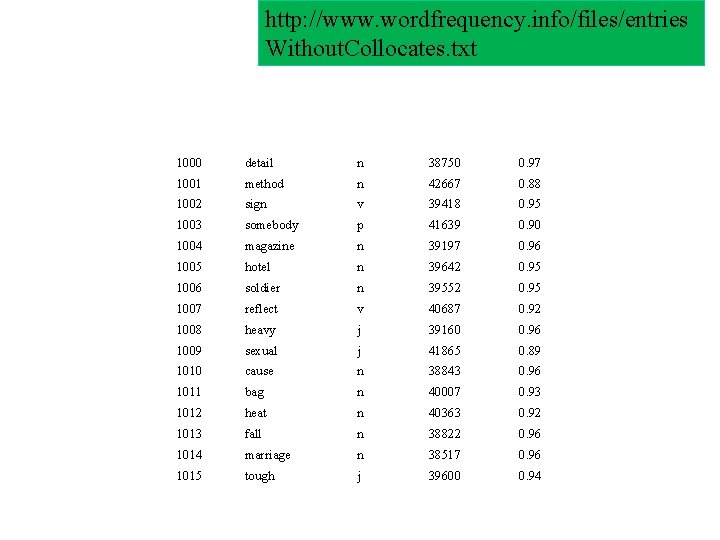

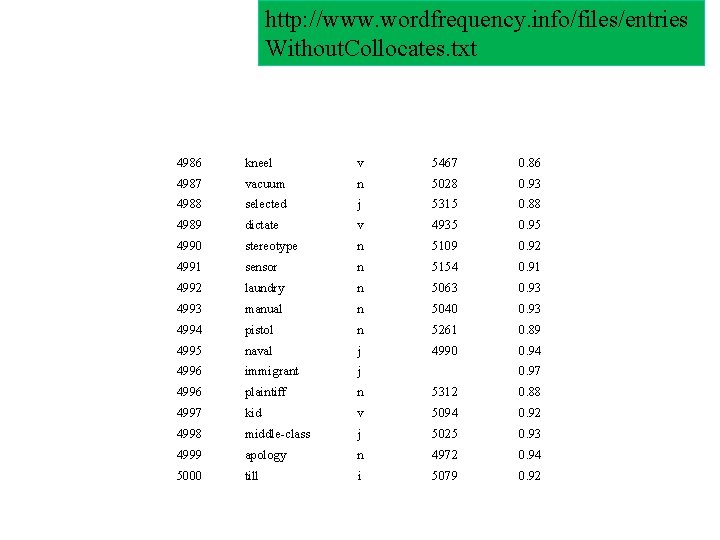

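Source: http://www.wordfrequency.info/files/entriesWithoutCollocates.txt

| Rank | Word | Part of speech | Frequency | Dispersion |
|---|---|---|---|---|
| 1000 | detail | n | 38750 | 0.97 |
| 1001 | method | n | 42667 | 0.88 |
| 1002 | sign | v | 39418 | 0.95 |
| 1003 | somebody | p | 41639 | 0.90 |
| 1004 | magazine | n | 39197 | 0.96 |
| 1005 | hotel | n | 39642 | 0.95 |
| 1006 | soldier | n | 39552 | 0.95 |
| 1007 | reflect | v | 40687 | 0.92 |
| 1008 | heavy | j | 39160 | 0.96 |
| 1009 | sexual | j | 41865 | 0.89 |
| 1010 | cause | n | 38843 | 0.96 |
| 1011 | bag | n | 40007 | 0.93 |
| 1012 | heat | n | 40363 | 0.92 |
| 1013 | fall | n | 38822 | 0.96 |
| 1014 | marriage | n | 38517 | 0.96 |
| 1015 | tough | j | 39600 | 0.94 |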

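Source: http://www.wordfrequency.info/files/entriesWithoutCollocates.txt

| Rank | Word | Part of speech | Frequency | Dispersion |
|---|---|---|---|---|
| 4986 | kneel | v | 5467 | 0.86 |
| 4987 | vacuum | n | 5028 | 0.93 |
| 4988 | selected | j | 5315 | 0.88 |
| 4989 | dictate | v | 4935 | 0.95 |
| 4990 | stereotype | n | 5109 | 0.92 |
| 4991 | sensor | n | 5154 | 0.91 |
| 4992 | laundry | n | 5063 | 0.93 |
| 4993 | manual | n | 5040 | 0.93 |
| 4994 | pistol | n | 5261 | 0.89 |
| 4995 | naval | j | 4990 | 0.94 |
| 4996 | immigrant | j | | 0.97 |
| 4996 | plaintiff | n | 5312 | 0.88 |
| 4997 | kid | v | 5094 | 0.92 |
| 4998 | middle-class | j | 5025 | 0.93 |
| 4999 | apology | n | 4972 | 0.94 |
| 5000 | till | i | 5079 | 0.92 |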

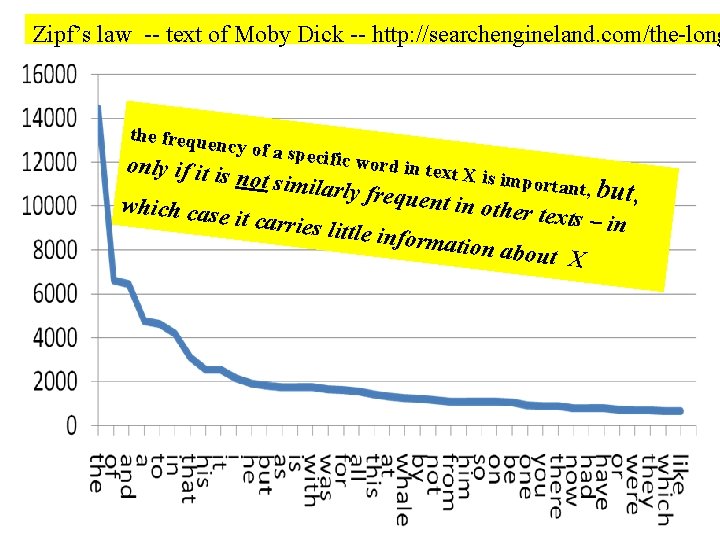

Zipf’s law -- text of Moby Dick -- http://searchengineland.com/the-long

The frequency of a specific word in text X is important, but only if it is not similarly frequent in other texts; in that case it carries little information about X.

Which leads us to TFIDF

We can do any kind of DMML with text, as soon as we convert text into numbers.

- This is usually done with a “TFIDF” encoding, and almost always done with either TFIDF or a close relation.
- TFIDF is basically word frequency, but takes into account ‘background frequency’, so words that are very common have their value reduced.

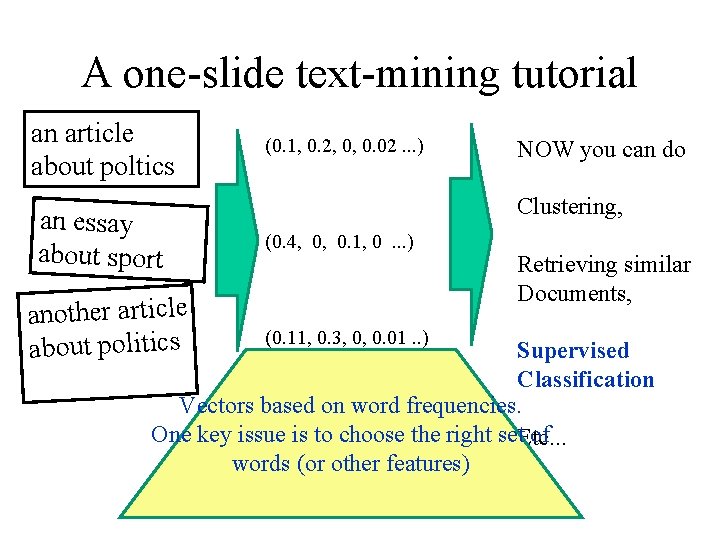

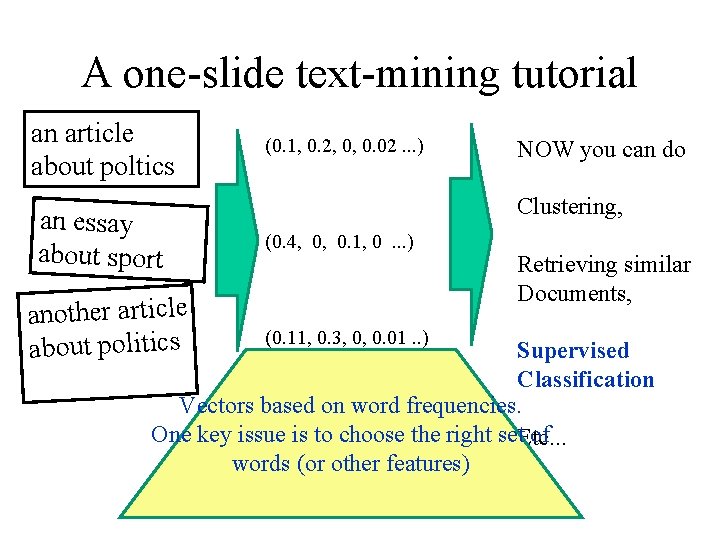

A one-slide text-mining tutorial

- an article about politics: (0.1, 0.2, 0, 0.02...)
- an essay about sport: (0.4, 0.1, 0 ...)
- another article about politics: (0.11, 0.3, 0, 0.01..)

Vectors based on word frequencies. One key issue is to choose the right set of words (or other features).

NOW you can do Clustering, Retrieving similar Documents, Supervised Classification, etc...

First, a quick illustration to show why word-frequency vectors are useful

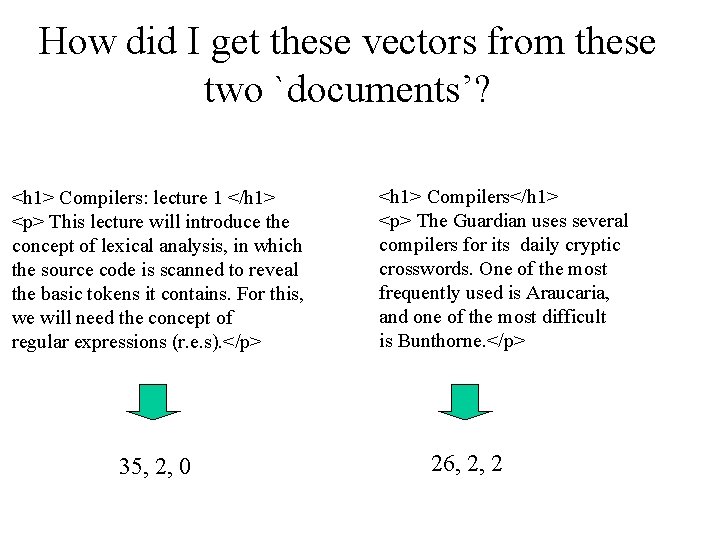

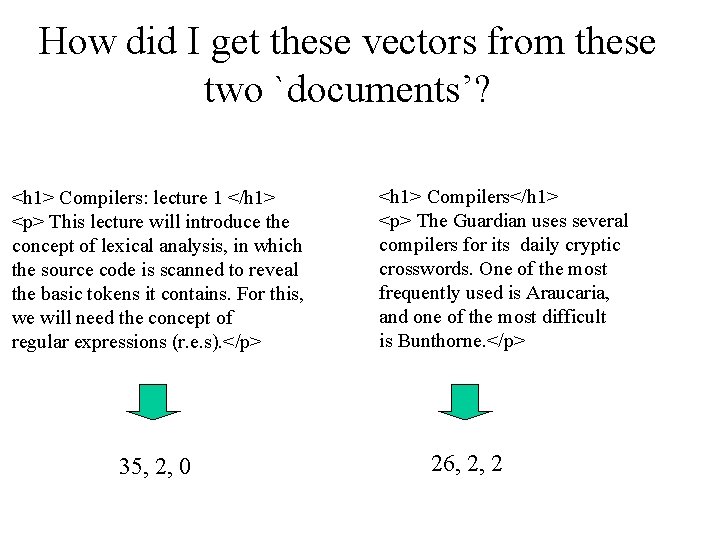

How did I get these vectors from these two ‘documents’?

Document 1, vector (35, 2, 0):

<h1> Compilers: lecture 1 </h1> <p> This lecture will introduce the concept of lexical analysis, in which the source code is scanned to reveal the basic tokens it contains. For this, we will need the concept of regular expressions (r.e.s). </p>

Document 2, vector (26, 2, 2):

<h1> Compilers</h1> <p> The Guardian uses several compilers for its daily cryptic crosswords. One of the most frequently used is Araucaria, and one of the most difficult is Bunthorne. </p>

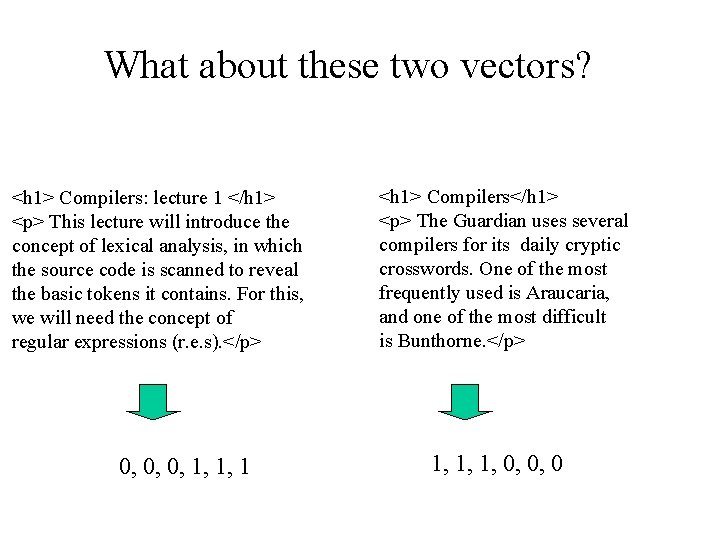

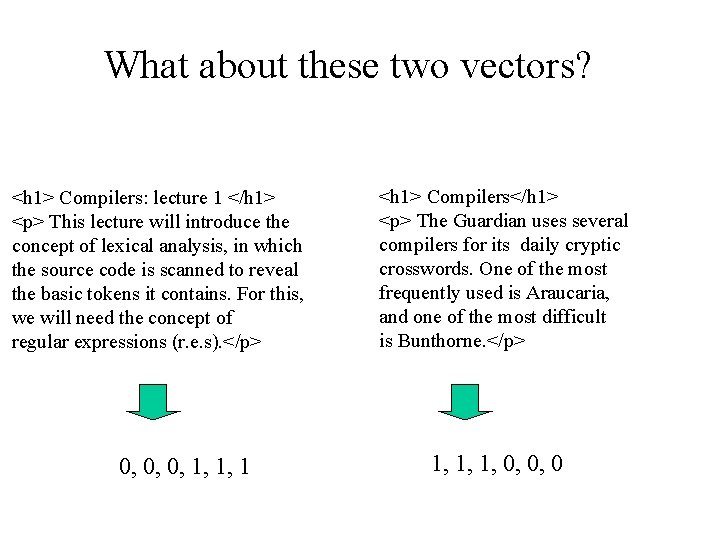

What about these two vectors?

Document 1, vector (0, 0, 0, 1, 1, 1):

<h1> Compilers: lecture 1 </h1> <p> This lecture will introduce the concept of lexical analysis, in which the source code is scanned to reveal the basic tokens it contains. For this, we will need the concept of regular expressions (r.e.s). </p>

Document 2, vector (1, 1, 1, 0, 0, 0):

<h1> Compilers</h1> <p> The Guardian uses several compilers for its daily cryptic crosswords. One of the most frequently used is Araucaria, and one of the most difficult is Bunthorne. </p>

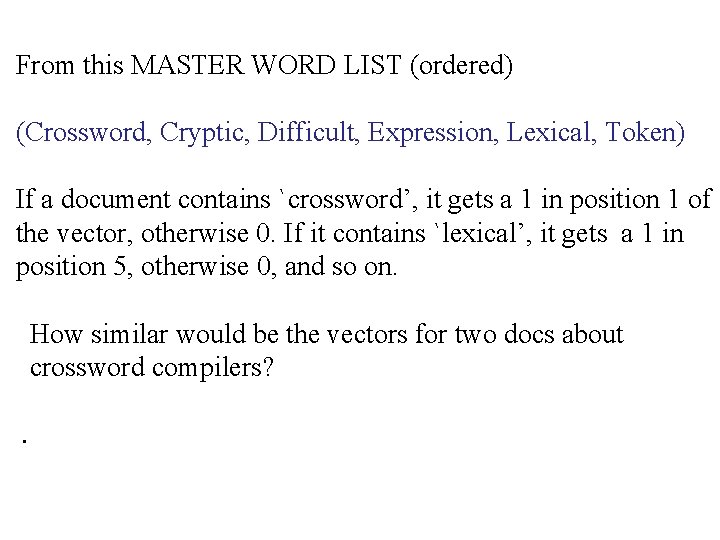

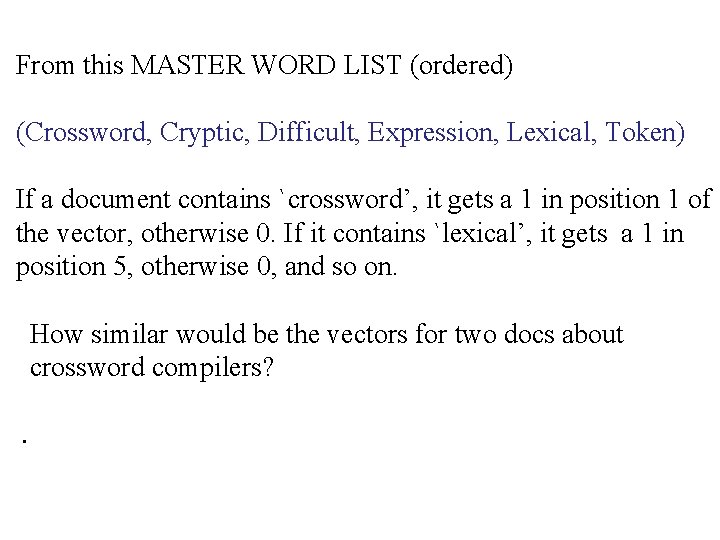

From this MASTER WORD LIST (ordered): (Crossword, Cryptic, Difficult, Expression, Lexical, Token)

If a document contains ‘crossword’, it gets a 1 in position 1 of the vector, otherwise 0. If it contains ‘lexical’, it gets a 1 in position 5, otherwise 0, and so on.

How similar would the vectors be for two docs about crossword compilers?
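The master-word-list encoding can be sketched as below, using the two ‘Compilers’ documents from the earlier slides with their HTML tags dropped. Matching by case-insensitive substring (so that ‘tokens’ counts as ‘token’) is an assumption made here for simplicity; the slides do not say how word forms are matched.

```python
MASTER_WORD_LIST = ["crossword", "cryptic", "difficult", "expression", "lexical", "token"]


def binary_vector(text, terms=MASTER_WORD_LIST):
    """1 in position i if term i occurs anywhere in the text, otherwise 0."""
    lowered = text.lower()
    return [1 if term in lowered else 0 for term in terms]


doc1 = (
    "Compilers: lecture 1. This lecture will introduce the concept of lexical "
    "analysis, in which the source code is scanned to reveal the basic tokens it "
    "contains. For this, we will need the concept of regular expressions (r.e.s)."
)
doc2 = (
    "Compilers. The Guardian uses several compilers for its daily cryptic "
    "crosswords. One of the most frequently used is Araucaria, and one of the "
    "most difficult is Bunthorne."
)

print(binary_vector(doc1))
print(binary_vector(doc2))
```

Both documents are titled ‘Compilers’, yet their vectors share no 1s, which is what lets a distance measure tell the two topics apart.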

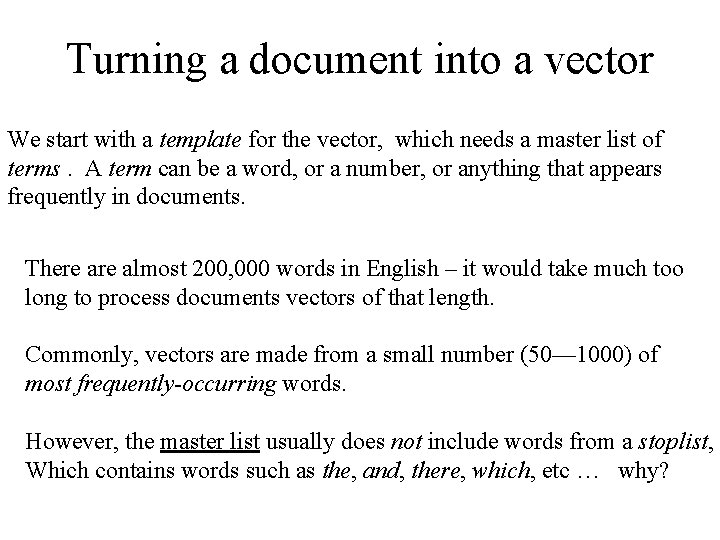

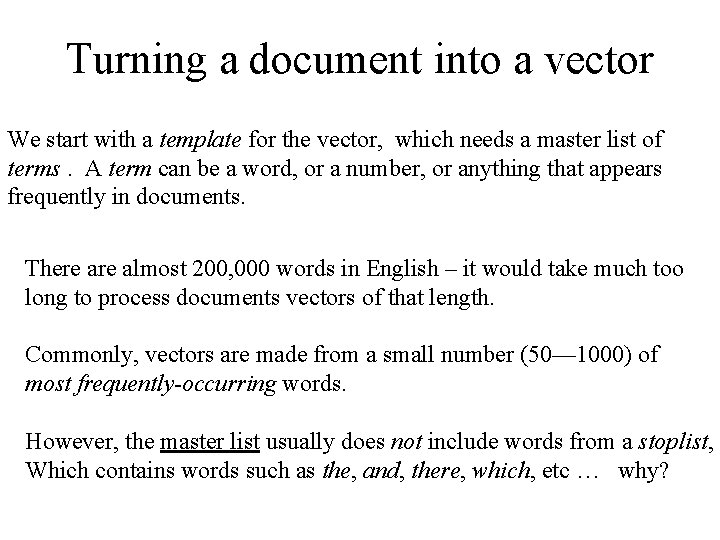

Turning a document into a vector

We start with a template for the vector, which needs a master list of terms. A term can be a word, or a number, or anything that appears frequently in documents.

There are almost 200,000 words in English; it would take much too long to process document vectors of that length. Commonly, vectors are made from a small number (50 to 1000) of the most frequently occurring words.

However, the master list usually does not include words from a stoplist, which contains words such as the, and, there, which, etc … why?

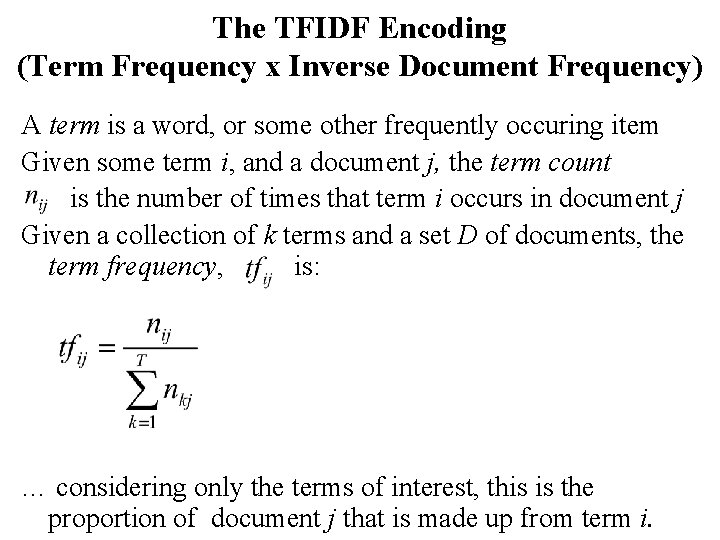

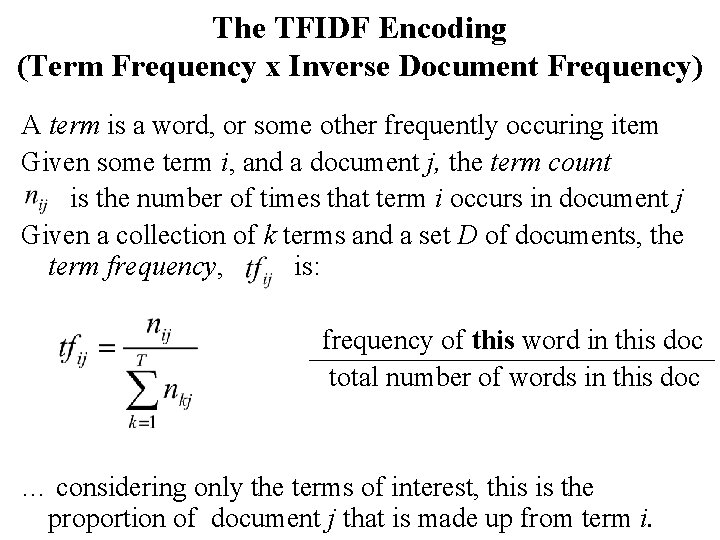

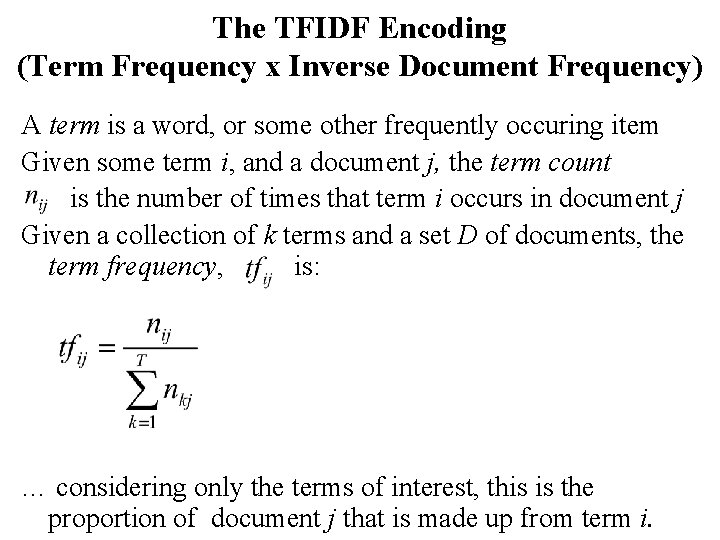

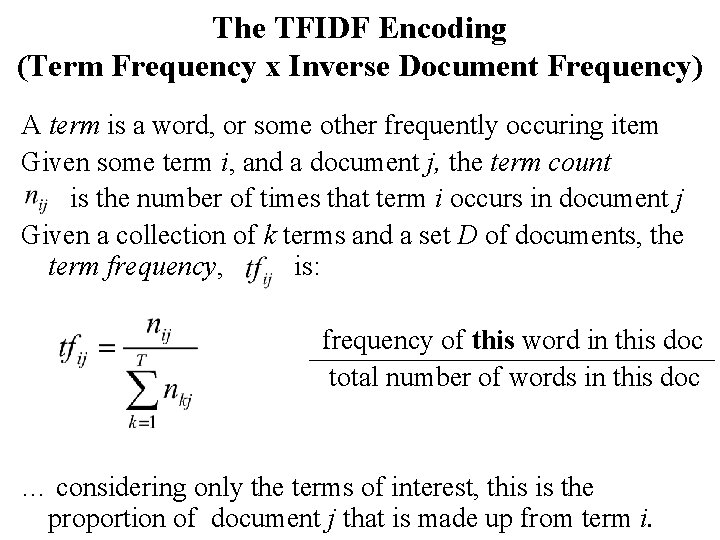


The TFIDF Encoding (Term Frequency x Inverse Document Frequency)

A term is a word, or some other frequently occurring item.

Given some term i, and a document j, the term count $n_{i,j}$ is the number of times that term i occurs in document j.

Given a collection of k terms and a set D of documents, the term frequency is:

$$tf_{i,j} = \frac{n_{i,j}}{\sum_{m=1}^{k} n_{m,j}} = \frac{\text{frequency of this word in this doc}}{\text{total number of words in this doc}}$$

… considering only the terms of interest, this is the proportion of document j that is made up from term i.

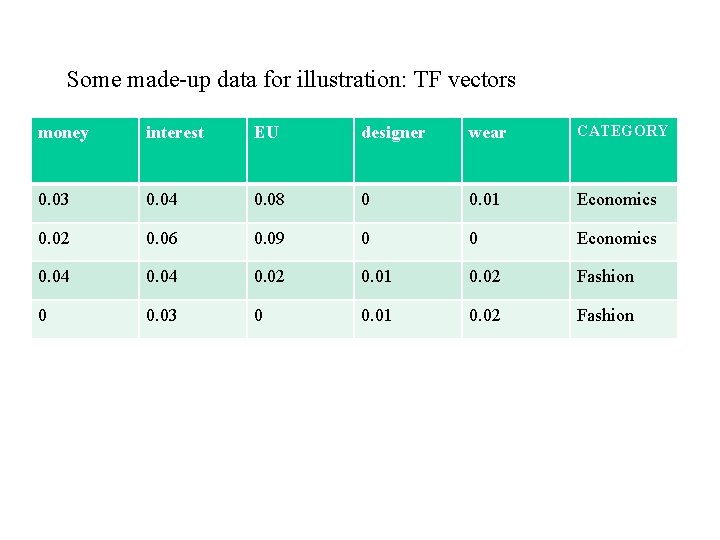

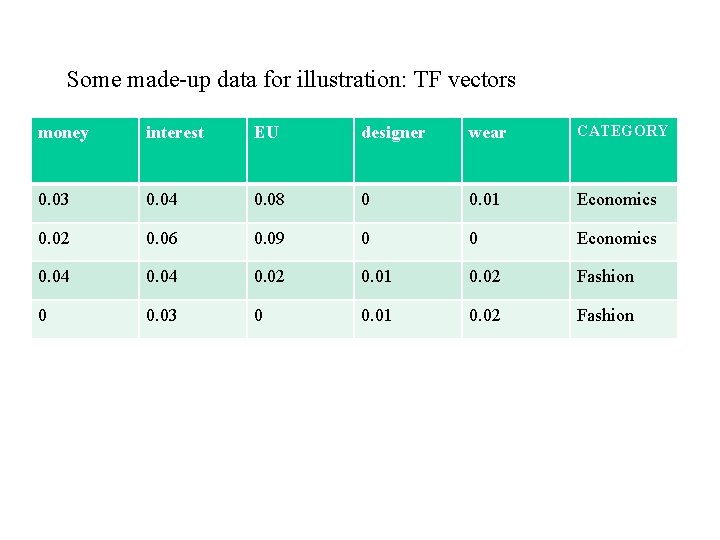

Some made-up data for illustration: TF vectors

| money | interest | EU | designer | wear | CATEGORY |
|---|---|---|---|---|---|
| 0.03 | 0.04 | 0.08 | 0 | 0.01 | Economics |
| 0.02 | 0.06 | 0.09 | 0 | 0 | Economics |
| 0.04 | 0.02 | | 0.01 | 0.02 | Fashion |
| 0 | 0.03 | 0 | 0.01 | 0.02 | Fashion |

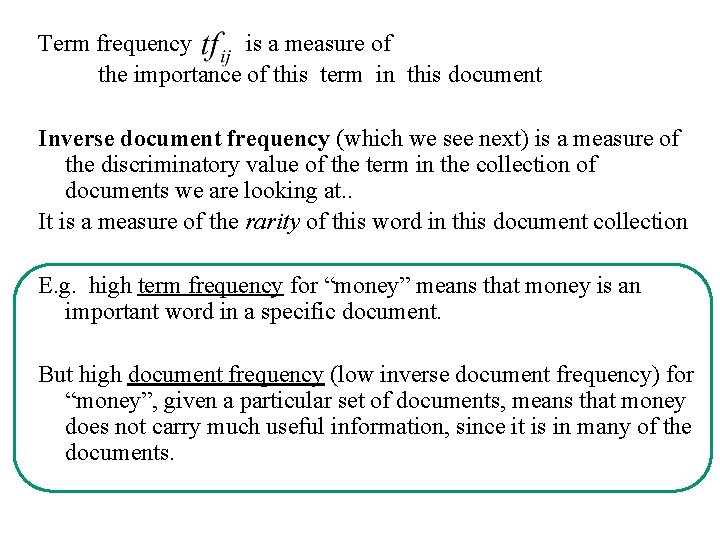

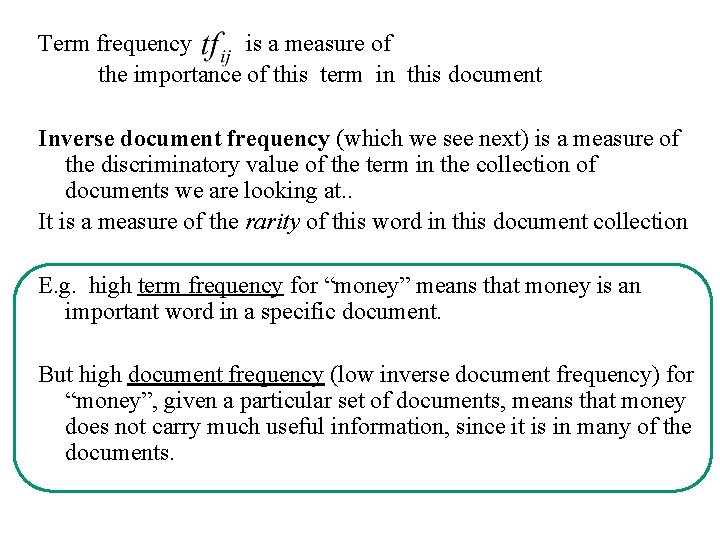

Term frequency is a measure of the importance of this term in this document.

Inverse document frequency (which we see next) is a measure of the discriminatory value of the term in the collection of documents we are looking at. It is a measure of the rarity of this word in this document collection.

E.g. high term frequency for “money” means that money is an important word in a specific document. But high document frequency (low inverse document frequency) for “money”, given a particular set of documents, means that money does not carry much useful information, since it is in many of the documents.

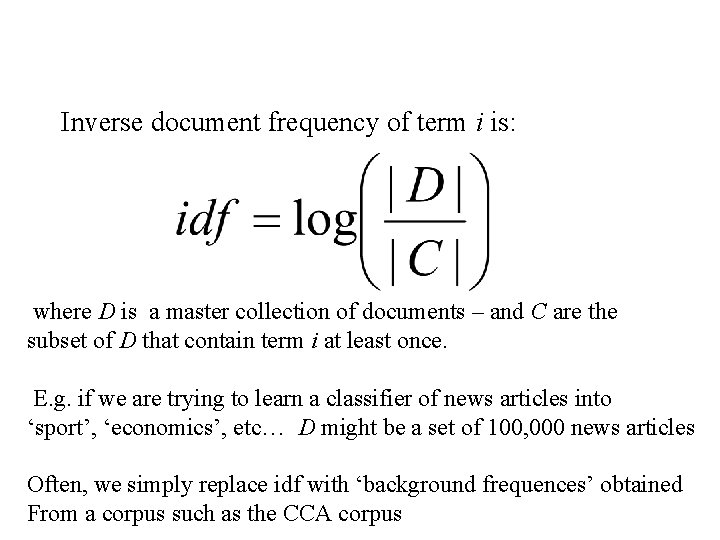

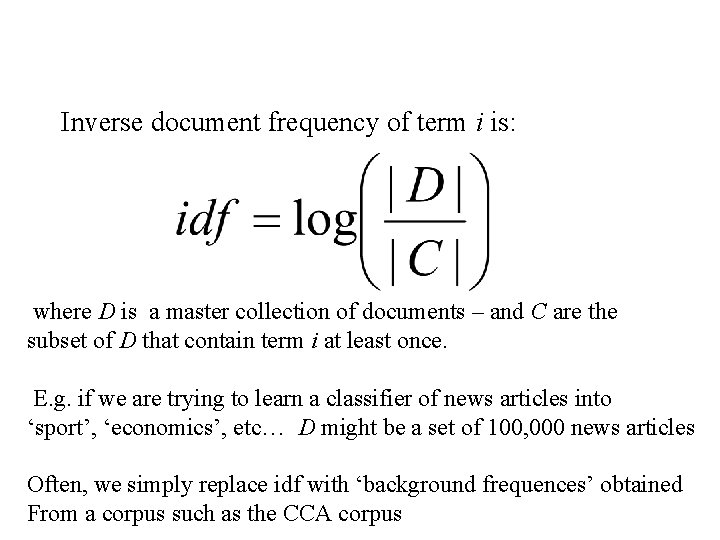

Inverse document frequency of term i is:

$$idf_i = \log \frac{|D|}{|C|}$$

where D is a master collection of documents, and C is the subset of documents in D that contain term i at least once.

E.g. if we are trying to learn a classifier of news articles into ‘sport’, ‘economics’, etc…, D might be a set of 100,000 news articles.

Often, we simply replace idf with ‘background frequencies’ obtained from a corpus such as the CCA corpus.

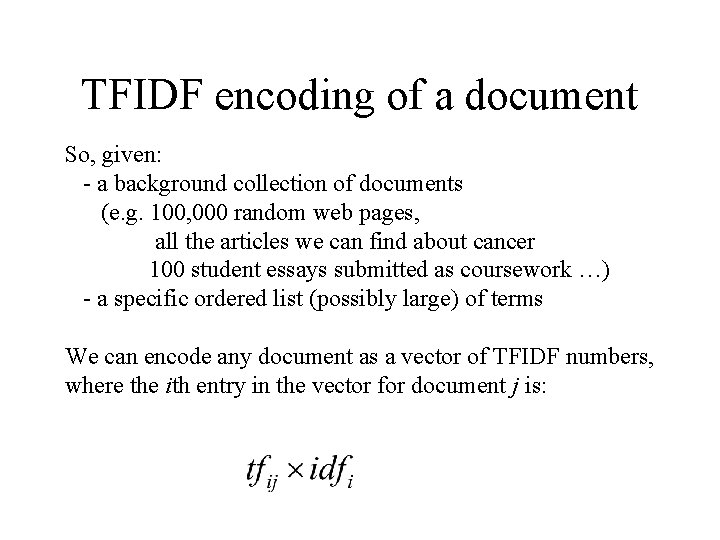

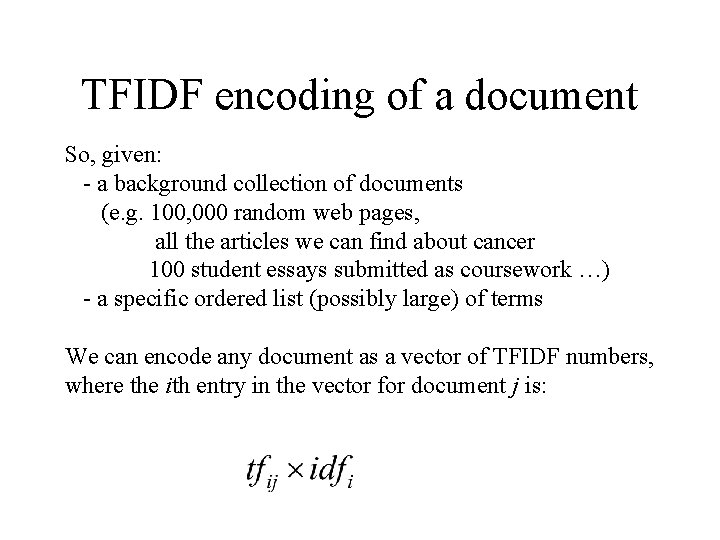

TFIDF encoding of a document

So, given:

- a background collection of documents (e.g. 100,000 random web pages, all the articles we can find about cancer, 100 student essays submitted as coursework, …)
- a specific ordered list (possibly large) of terms

We can encode any document as a vector of TFIDF numbers, where the ith entry in the vector for document j is:

$$tfidf_{i,j} = tf_{i,j} \times idf_i$$
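Putting the pieces together, a TFIDF vector can be computed as sketched below. The helper names, the tiny tokenised collection, and the use of the natural logarithm are assumptions for illustration; the term frequency is taken over the terms of interest only, as described on the term-frequency slide.

```python
import math
from collections import Counter


def term_frequencies(tokens, terms):
    """Proportion of the document (counting only the terms of interest)
    that is made up of each term."""
    counts = Counter(t for t in tokens if t in terms)
    total = sum(counts.values())
    return [counts[t] / total if total else 0.0 for t in terms]


def inverse_document_frequencies(collection, terms):
    """log(|D| / |C|), where C is the set of documents containing the term.
    Terms that occur in no document get 0.0 (a choice made for this sketch)."""
    n_docs = len(collection)
    idf = []
    for term in terms:
        containing = sum(1 for doc in collection if term in doc)
        idf.append(math.log(n_docs / containing) if containing else 0.0)
    return idf


def tfidf_vector(tokens, collection, terms):
    tf = term_frequencies(tokens, terms)
    idf = inverse_document_frequencies(collection, terms)
    return [t * w for t, w in zip(tf, idf)]


# Hypothetical background collection of already-tokenised documents.
terms = ["money", "interest", "designer"]
collection = [
    ["money", "interest", "money", "rates"],
    ["money", "designer", "wear"],
    ["money", "interest", "eu"],
    ["designer", "money", "designer"],
]

vec = tfidf_vector(collection[0], collection, terms)
print(vec)
```

In this toy collection ‘money’ appears in every document, so its idf is log(1) = 0 and its TFIDF value drops to zero even though it is the most frequent term in the first document.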

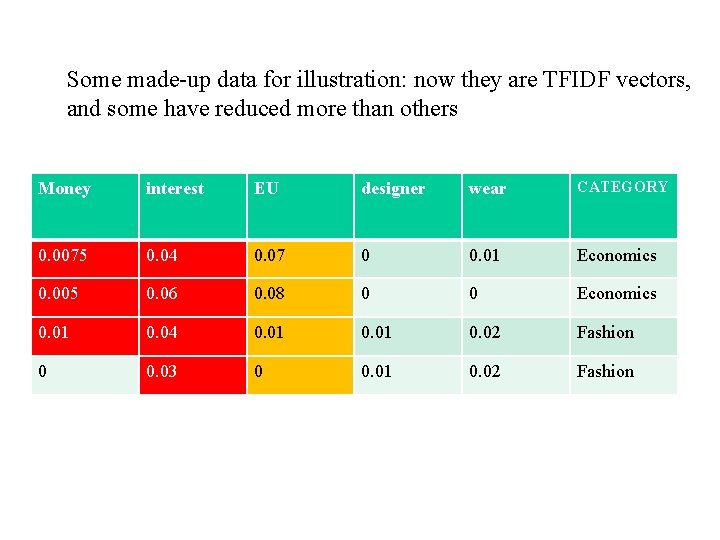

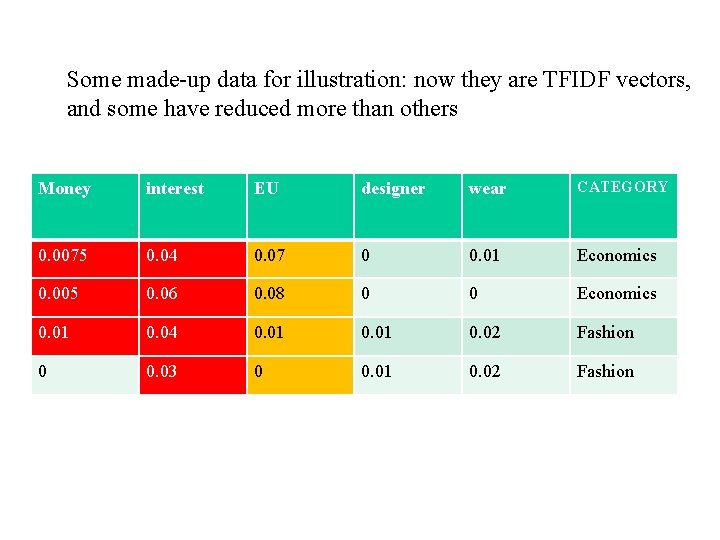

Some made-up data for illustration: now they are TFIDF vectors, and some have reduced more than others

| money | interest | EU | designer | wear | CATEGORY |
|---|---|---|---|---|---|
| 0.0075 | 0.04 | 0.07 | 0 | 0.01 | Economics |
| 0.005 | 0.06 | 0.08 | 0 | 0 | Economics |
| 0.01 | 0.04 | | 0.01 | 0.02 | Fashion |
| 0 | 0.03 | 0 | 0.01 | 0.02 | Fashion |

Vector representation of documents underpins many areas of automated document analysis, such as:

- automated classification of documents
- clustering and organising document collections
- building maps of the web, and of different web communities
- understanding the interactions between different scientific communities, which in turn will lead to helping with automated WWW-based scientific discovery.

Example / recent work of my PhD student Hamouda Chantar

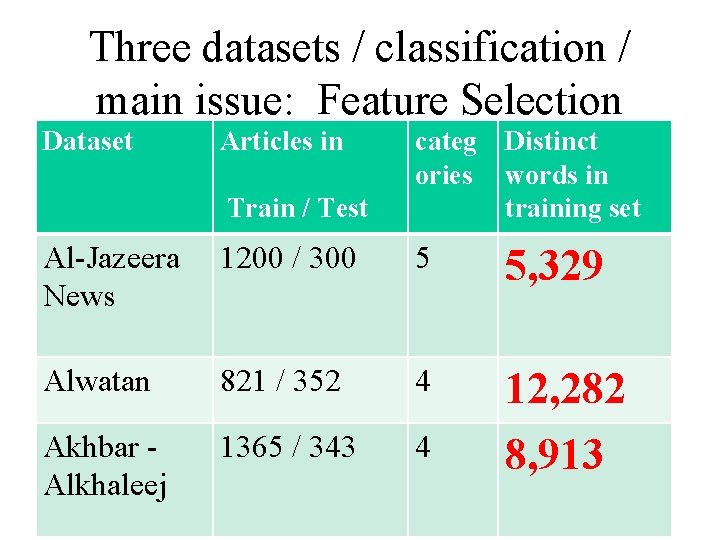

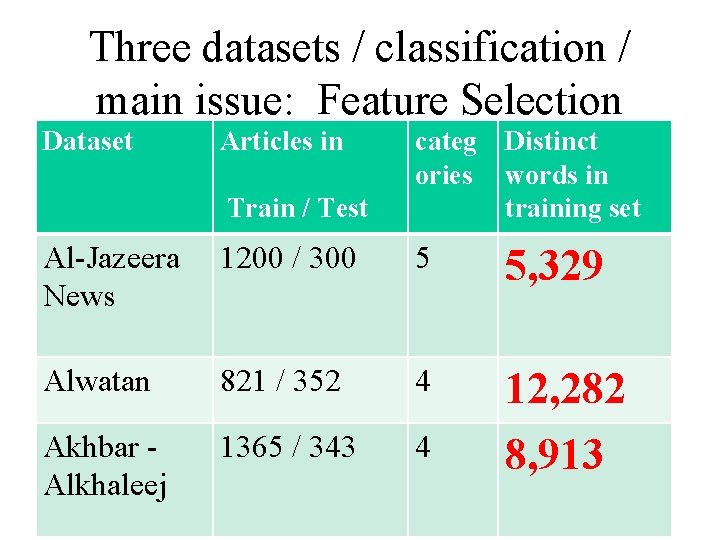

Three datasets / classification / main issue: Feature Selection

| Dataset | Articles in Train / Test | Categories | Distinct words in training set |
|---|---|---|---|
| Al-Jazeera News | 1200 / 300 | 5 | 5,329 |
| Alwatan | 821 / 352 | 4 | 12,282 |
| Akhbar Alkhaleej | 1365 / 343 | 4 | 8,913 |

Hamouda’s work

Focus on automated classification of an article (e.g. Finance, Economics, Sport, Culture, ...)

Emphasis on Feature Selection: which words or other features should constitute the vectors, to enable accurate classification?

Example categories: this is the Akhbar-Alkhaleej dataset

| Category | Train | Test | Total |
|---|---|---|---|
| International News | 228 | 58 | 286 |
| Local news | 576 | 144 | 720 |
| Sport | 343 | 86 | 429 |
| Economy | 218 | 55 | 273 |
| Total | 1365 | 343 | 1708 |

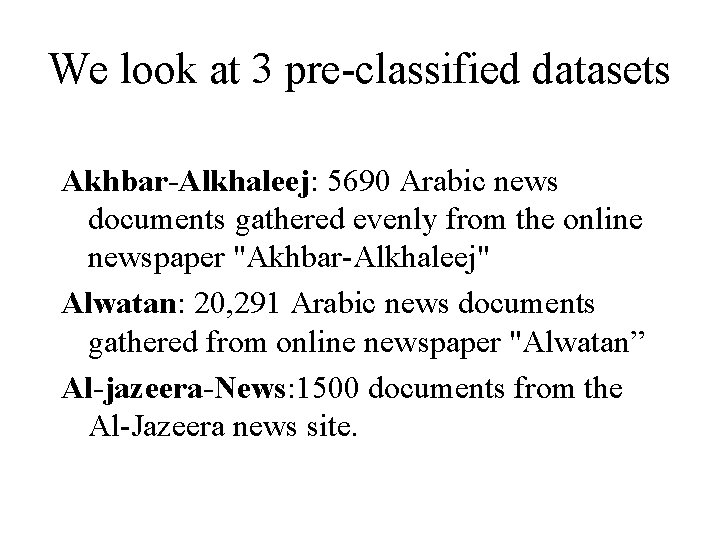

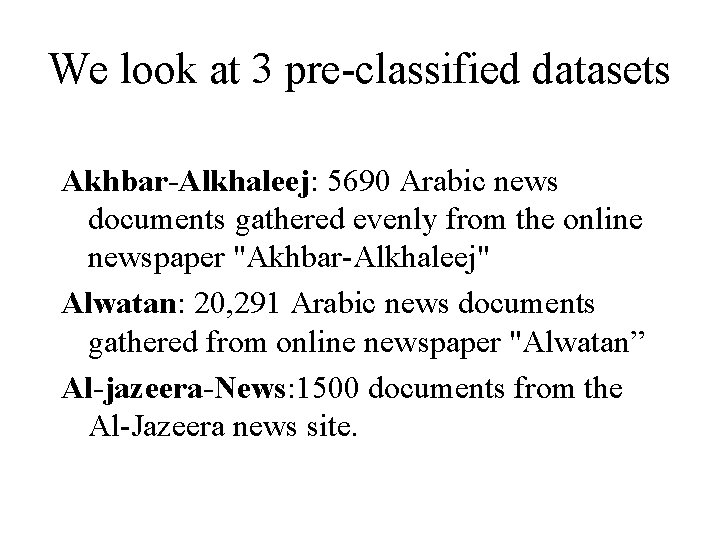

We look at 3 pre-classified datasets

- Akhbar-Alkhaleej: 5690 Arabic news documents gathered evenly from the online newspaper "Akhbar-Alkhaleej"
- Alwatan: 20,291 Arabic news documents gathered from online newspaper "Alwatan"
- Al-jazeera-News: 1500 documents from the Al-Jazeera news site.


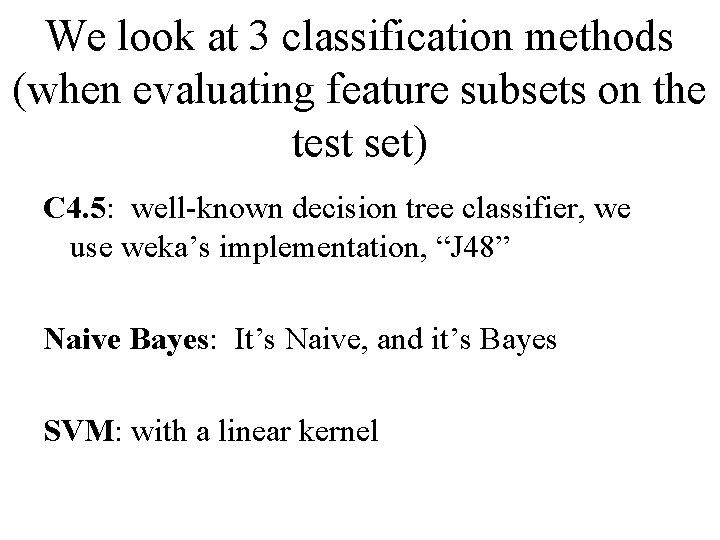

We look at 3 classification methods (when evaluating feature subsets on the test set)

- C4.5: well-known decision tree classifier; we use Weka’s implementation, “J48”
- Naive Bayes: It’s Naive, and it’s Bayes
- SVM: with a linear kernel

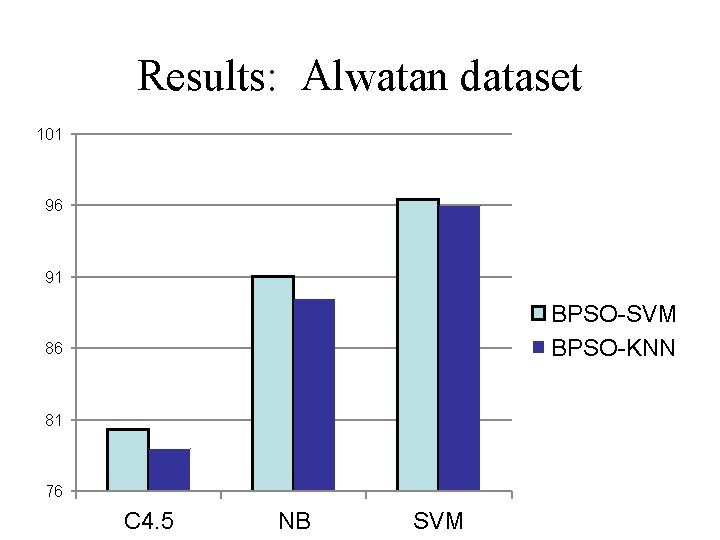

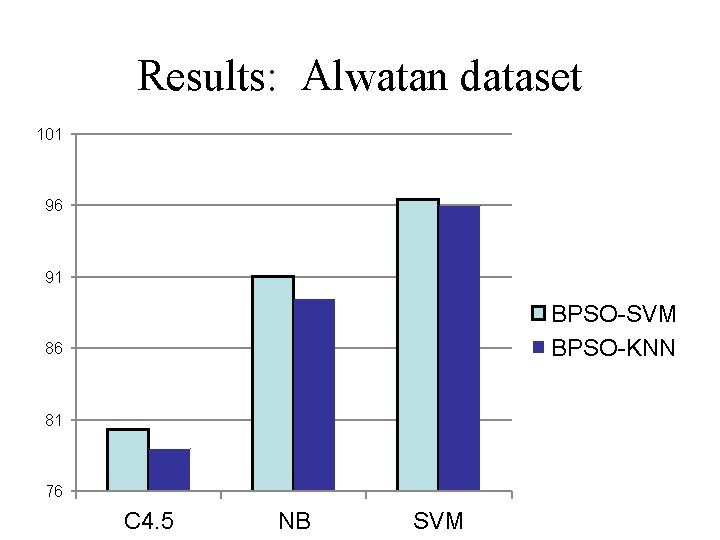

Results: Alwatan dataset (chart legend: BPSO-SVM, BPSO-KNN; x-axis: C4.5, NB, SVM)

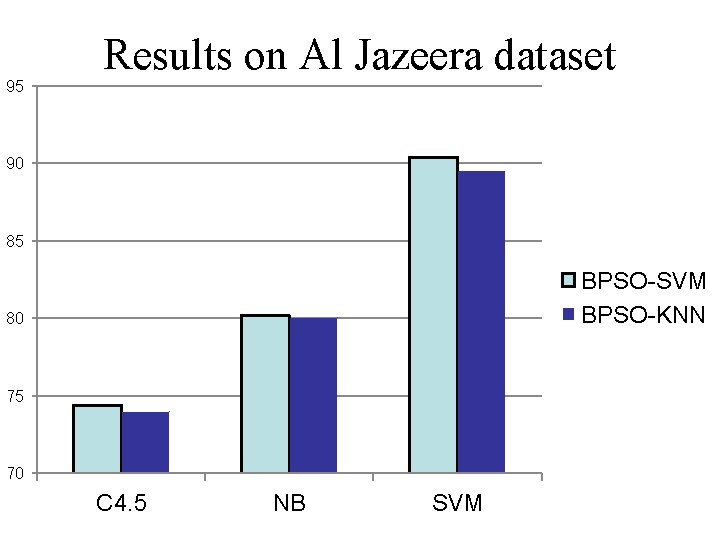

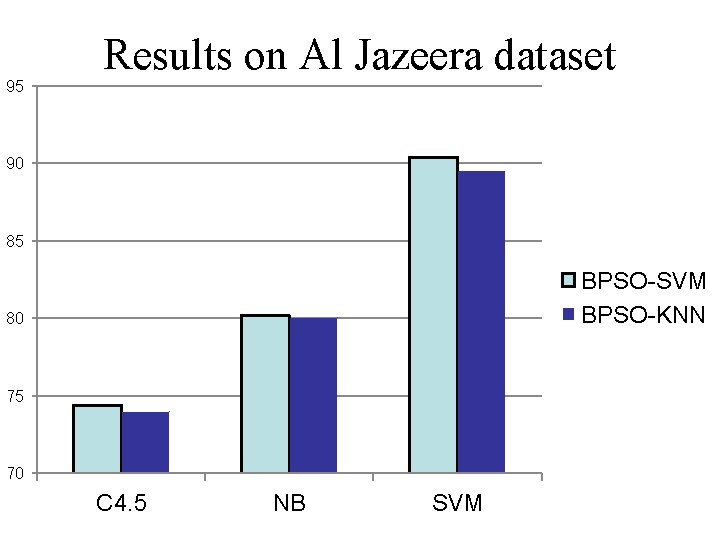

Results on Al Jazeera dataset (chart legend: BPSO-SVM, BPSO-KNN; x-axis: C4.5, NB, SVM)

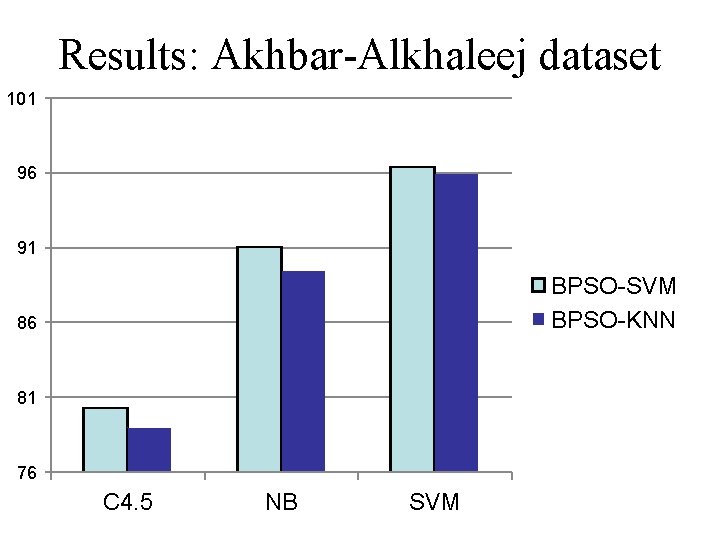

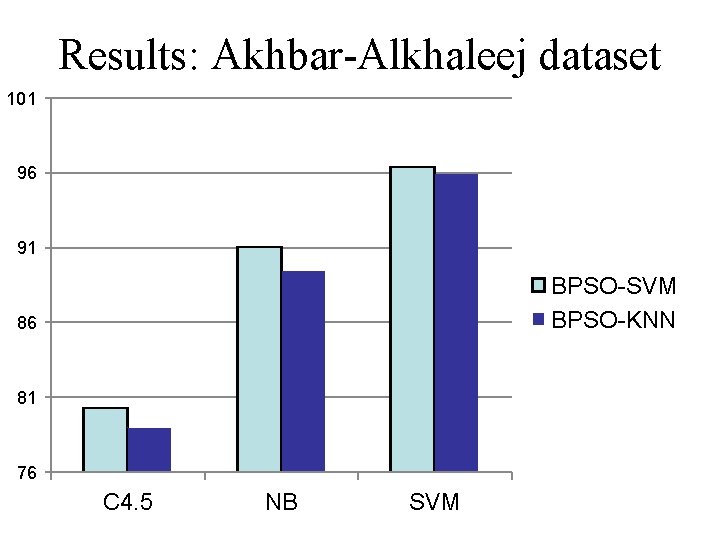

Results: Akhbar-Alkhaleej dataset (chart legend: BPSO-SVM, BPSO-KNN; x-axis: C4.5, NB, SVM)
