Data Mining and machine learning DM Lecture 6

- Slides: 35

Data Mining (and machine learning)
DM Lecture 6: Similarity and Distance

David Corne, and Nick Taylor, Heriot-Watt University - dwcorne@gmail.com
These slides and related resources: http://www.macs.hw.ac.uk/~dwcorne/Teaching/dmml.html

Today

- Similarity / distance between data records is used in:
  - Clustering
  - Many machine learning methods
  - Many, many practical applications
- More fundamentally:
  - Sometimes data records are entirely unstructured, e.g. free-text answers in a questionnaire, news articles, etc.
  - To do DM/ML they need to be structured somehow
  - Then we can cluster them, etc.
- Plus:
  - Notes about validation and overfitting

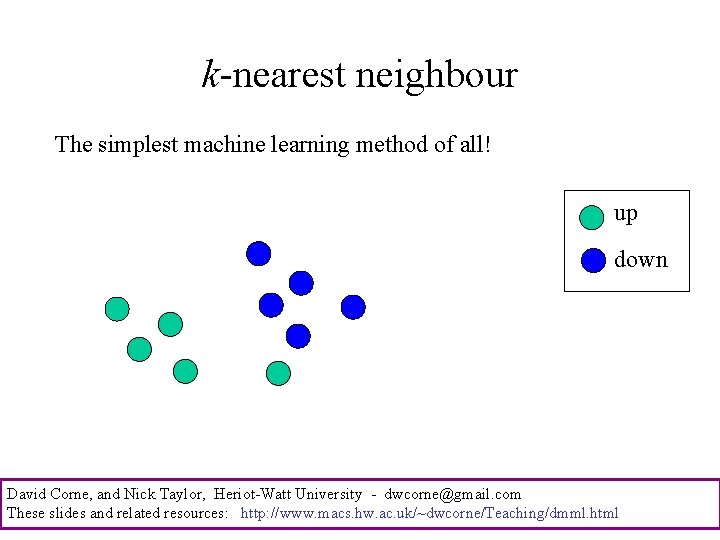

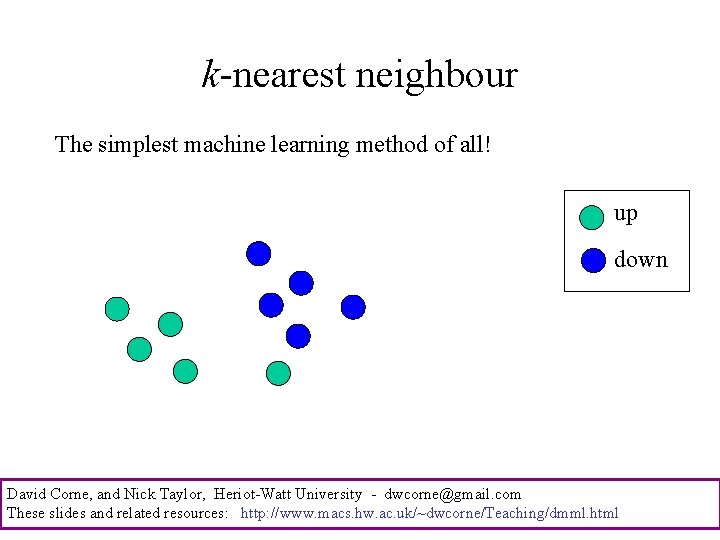

k-nearest neighbour

The simplest machine learning method of all! (Plot legend: up, down.)

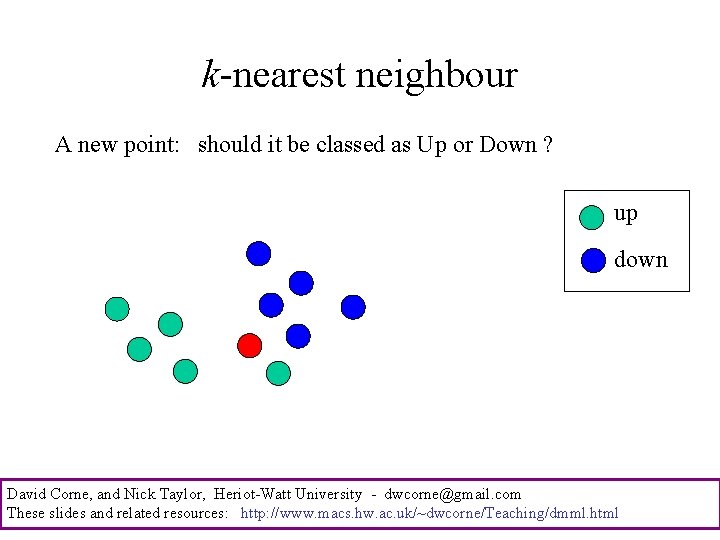

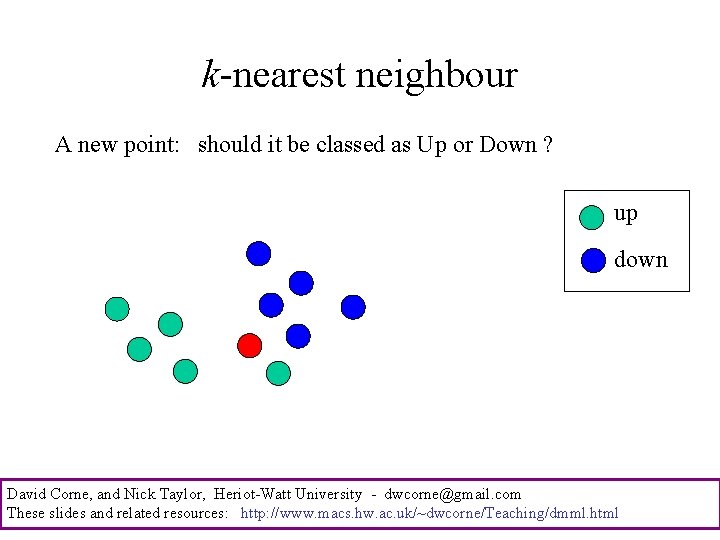

k-nearest neighbour

A new point: should it be classed as Up or Down? (Plot legend: up, down.)

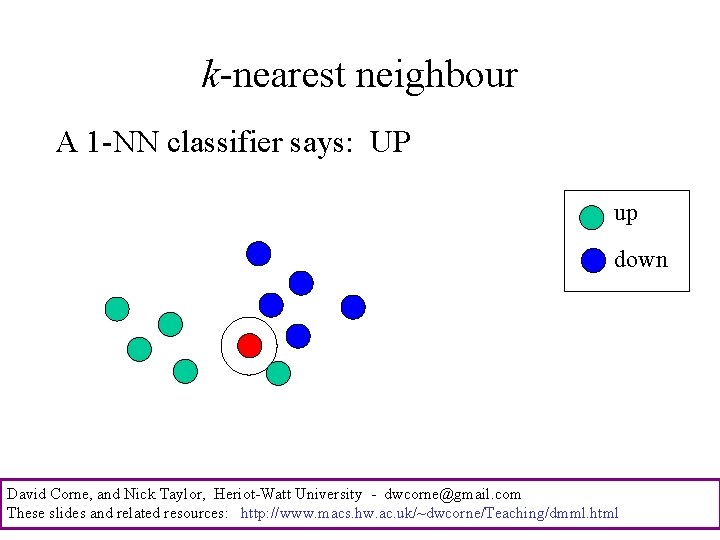

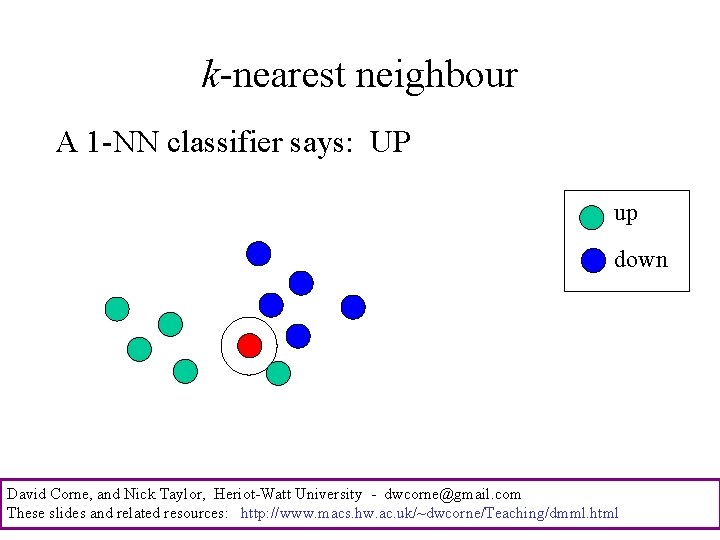

k-nearest neighbour

A 1-NN classifier says: UP. (Plot legend: up, down.)

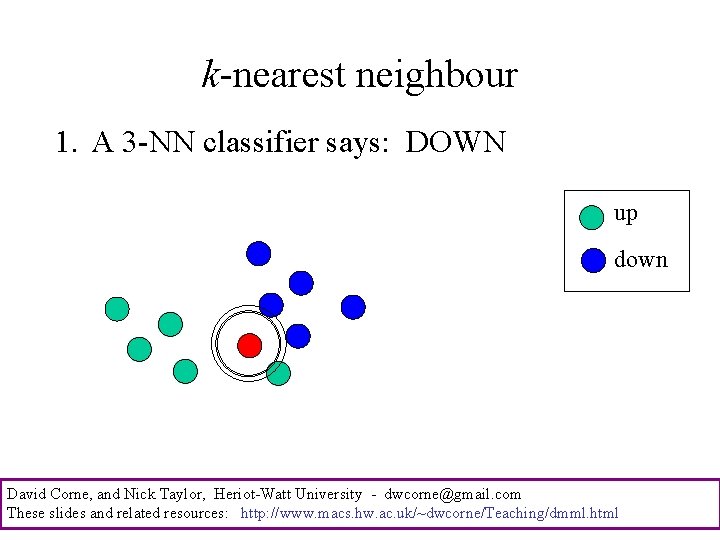

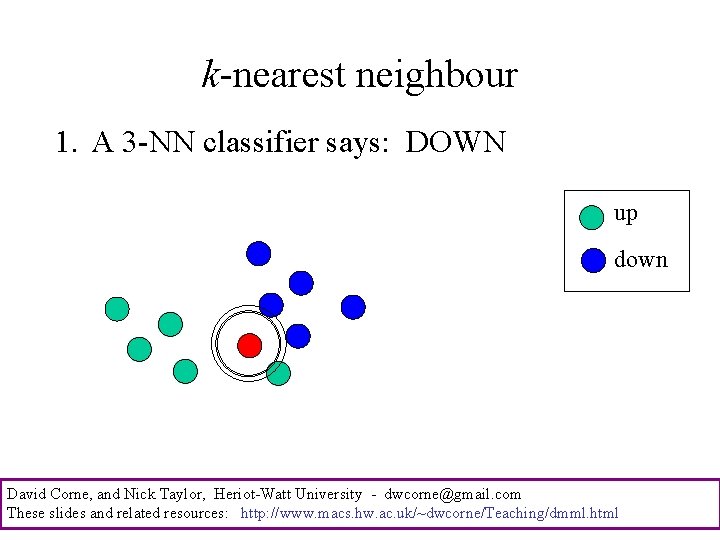

k-nearest neighbour

A 3-NN classifier says: DOWN. (Plot legend: up, down.)

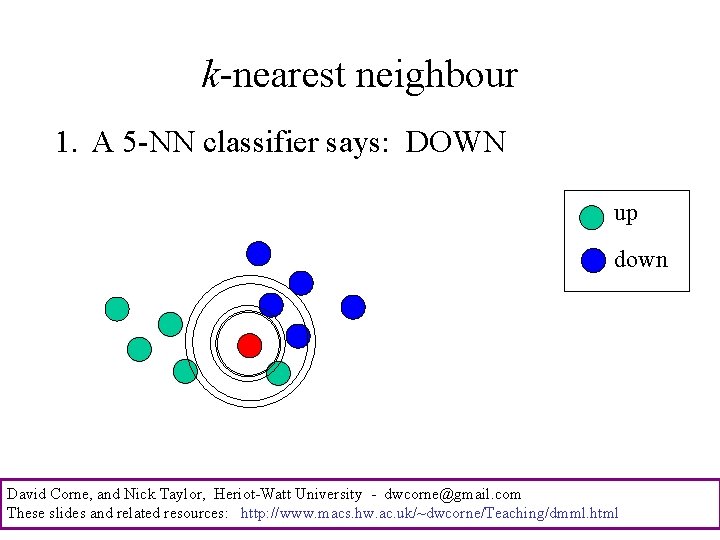

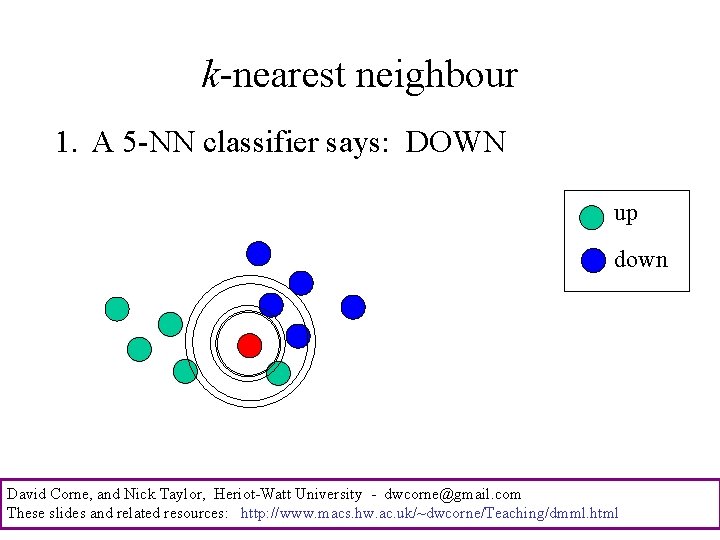

k-nearest neighbour

A 5-NN classifier says: DOWN. (Plot legend: up, down.)

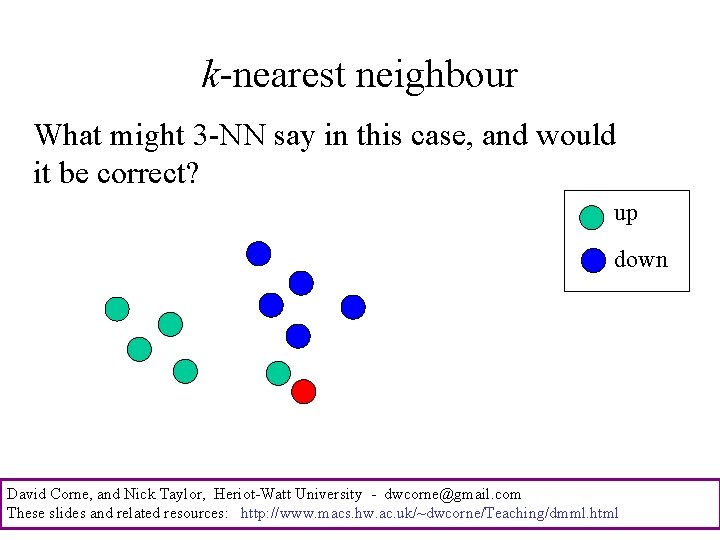

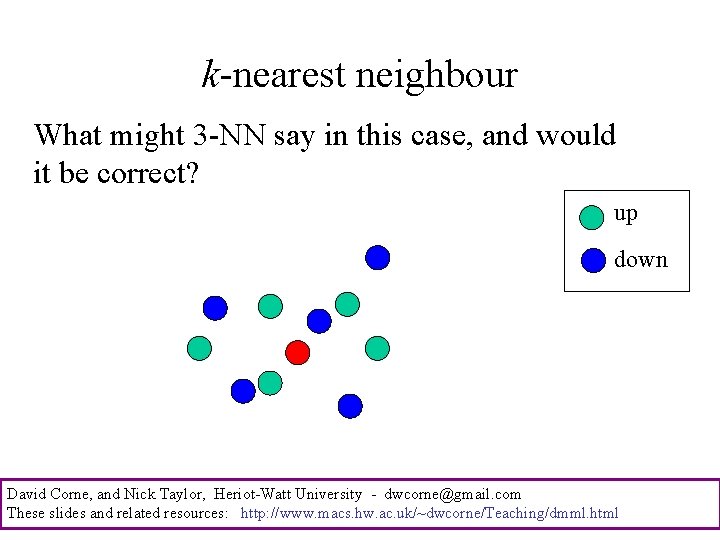

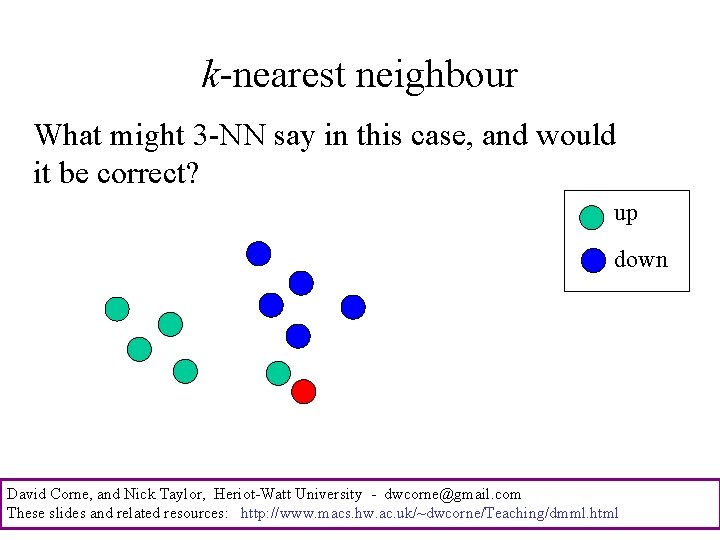

k-nearest neighbour

What might 3-NN say in this case, and would it be correct? (Plot legend: up, down.)

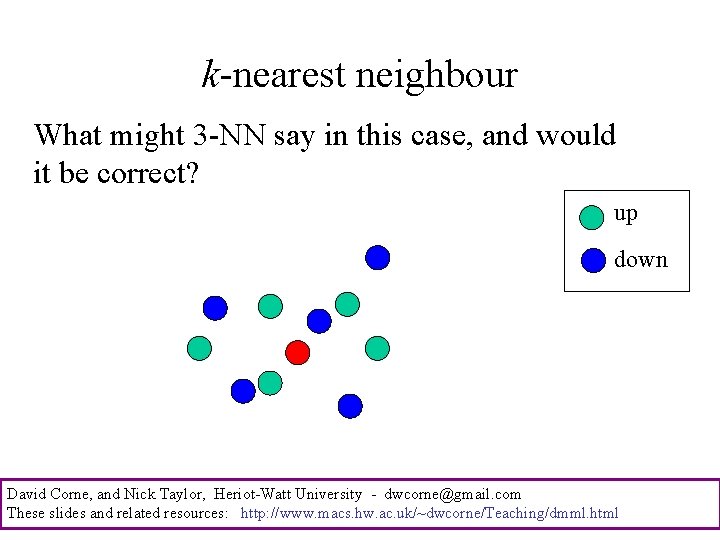


K-NN

- Extremely simple
- Often very good performance
- Most suitable for datasets where there are clear 'geographic' clusters
- Even on complex data, provides a good guess
- Like almost all DM/ML techniques, it relies exclusively on a distance measure: different ways to measure distance will give different results.
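The k-NN idea from the preceding slides can be sketched in a few lines of Python. This is only an illustration, not code from the course: the function name `knn_classify`, the use of Euclidean distance, and the five training points are all assumptions, with the points chosen so that 1-NN says "up" while 3-NN and 5-NN say "down".

```python
import math
from collections import Counter

def knn_classify(query, training, k=3):
    """Return the majority label among the k training points nearest to query.

    training is a list of (point, label) pairs; distance is Euclidean here,
    but any other distance measure could be substituted.
    """
    by_distance = sorted(training, key=lambda pair: math.dist(query, pair[0]))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical 2-D data, invented for this example.
training = [
    ((1.0, 1.0), "up"),
    ((3.0, 3.0), "down"),
    ((3.5, 2.5), "down"),
    ((3.0, 2.0), "down"),
    ((0.0, 3.0), "up"),
]
query = (1.8, 1.6)

for k in (1, 3, 5):
    print(k, knn_classify(query, training, k))
# 1 up
# 3 down
# 5 down
```

Using an odd k with two classes avoids tied votes; with more classes or an even k, a tie-breaking rule would have to be chosen.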

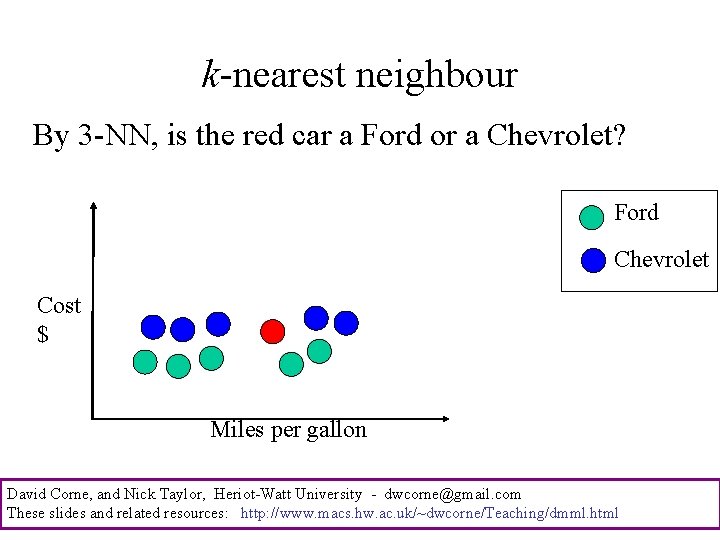

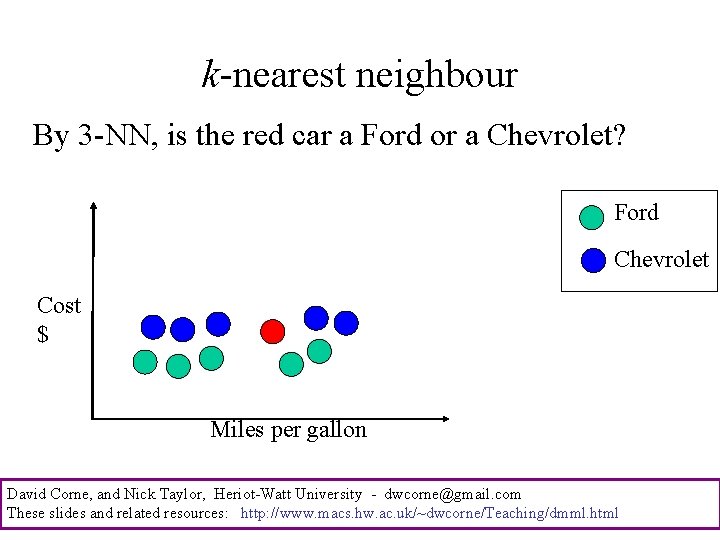

k-nearest neighbour

By 3-NN, is the red car a Ford or a Chevrolet? (Plot: cost in $ against miles per gallon; legend: Ford, Chevrolet.)

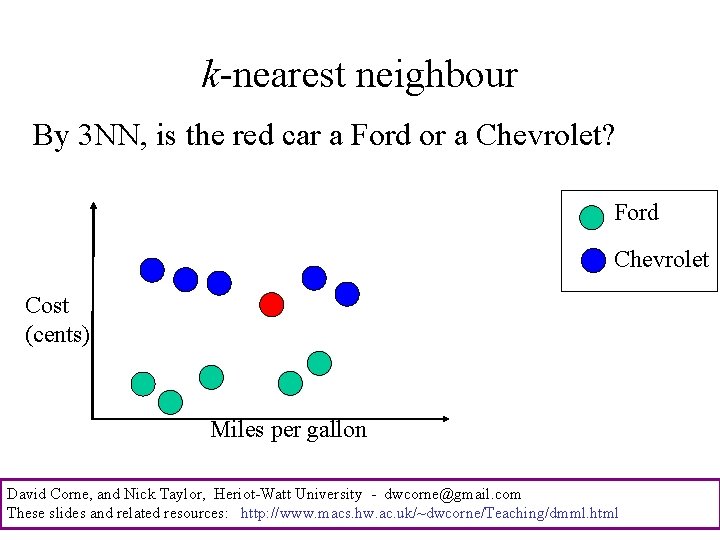

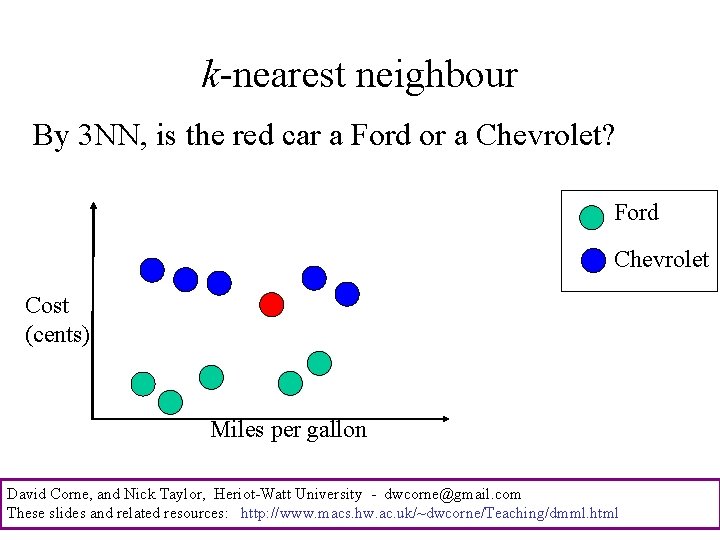

k-nearest neighbour

By 3-NN, is the red car a Ford or a Chevrolet? (Plot: cost in cents against miles per gallon; legend: Ford, Chevrolet.)

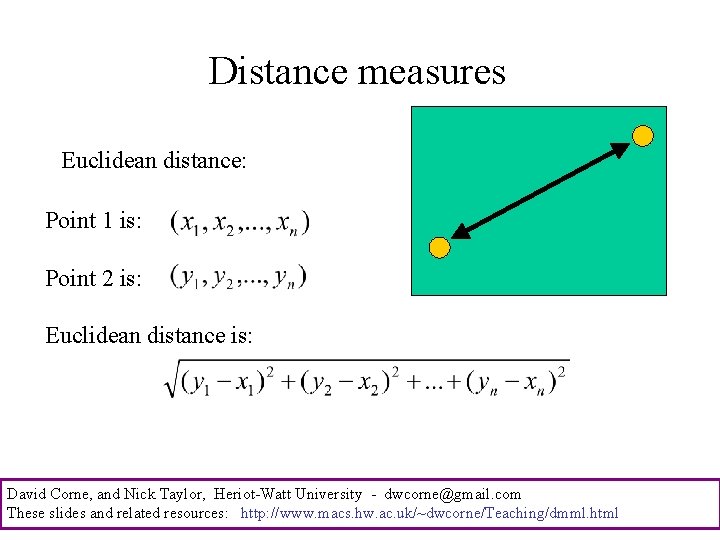

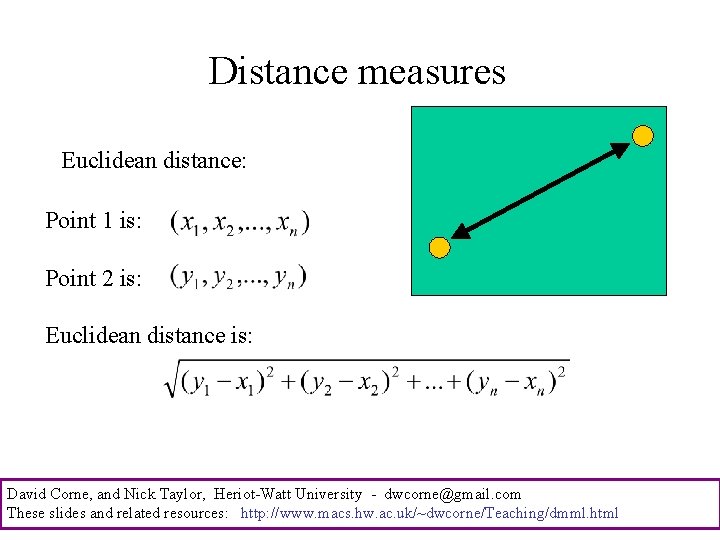

Distance measures

Euclidean distance:

Point 1 is: $(x_1, x_2, \dots, x_n)$

Point 2 is: $(y_1, y_2, \dots, y_n)$

Euclidean distance is: $\sqrt{(x_1 - y_1)^2 + (x_2 - y_2)^2 + \dots + (x_n - y_n)^2}$

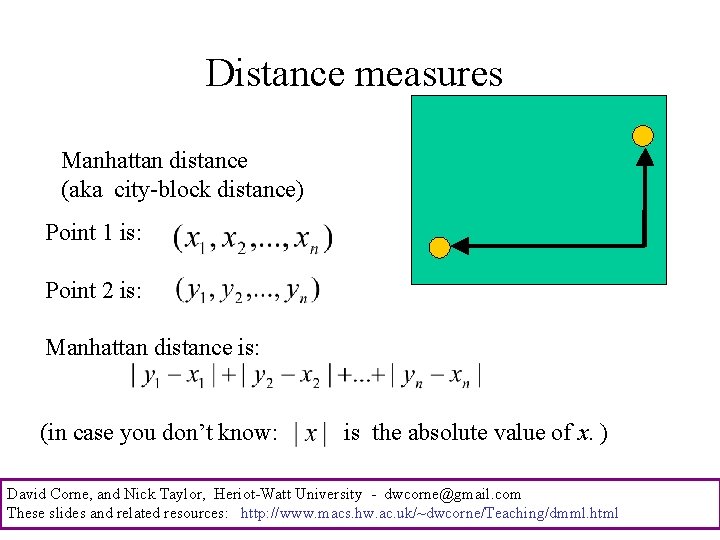

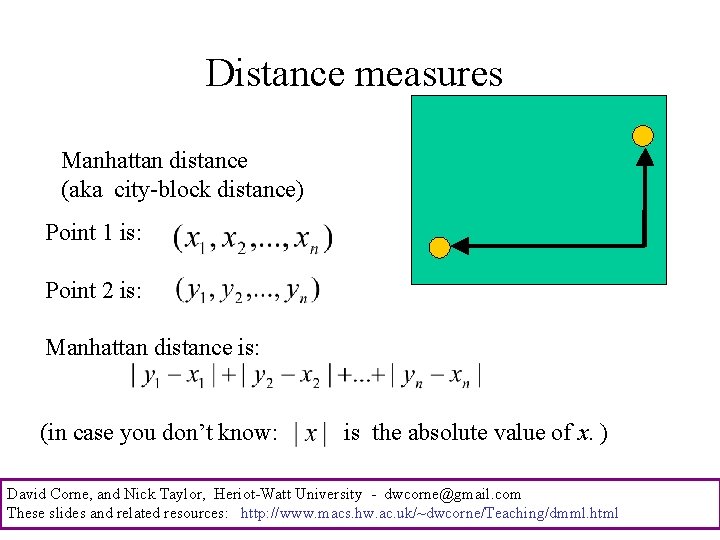

Distance measures

Manhattan distance (aka city-block distance):

Point 1 is: $(x_1, x_2, \dots, x_n)$

Point 2 is: $(y_1, y_2, \dots, y_n)$

Manhattan distance is: $|x_1 - y_1| + |x_2 - y_2| + \dots + |x_n - y_n|$

(In case you don't know: $|x|$ is the absolute value of $x$.)

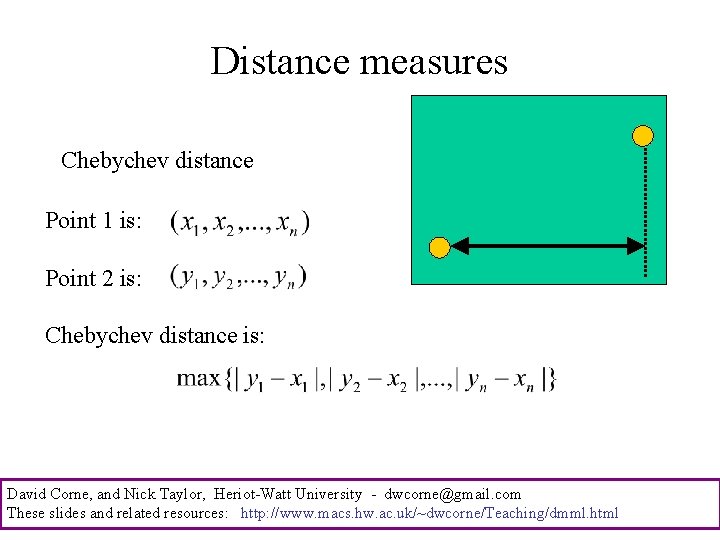

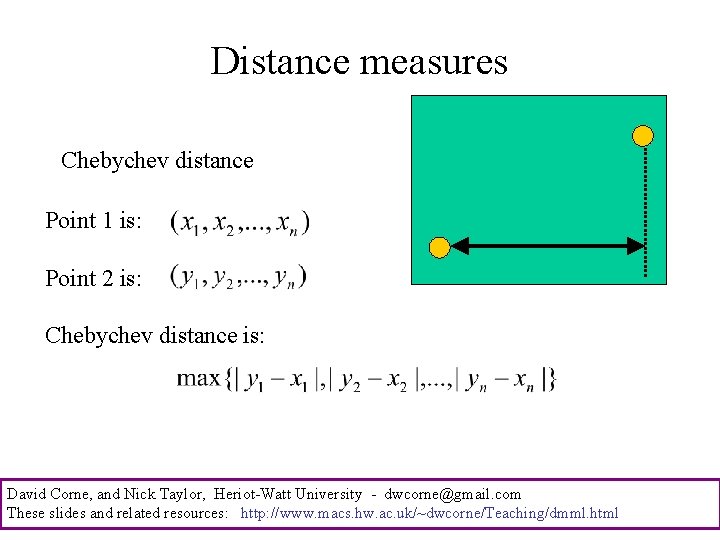

Distance measures

Chebychev distance:

Point 1 is: $(x_1, x_2, \dots, x_n)$

Point 2 is: $(y_1, y_2, \dots, y_n)$

Chebychev distance is: $\max_i |x_i - y_i|$, the largest difference in any single field.
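The three numeric distance measures can be compared side by side with a short Python sketch. The function names and the example points are assumptions made for illustration only.

```python
import math

def euclidean(p, q):
    """Square root of the sum of squared per-field differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def manhattan(p, q):
    """Sum of absolute per-field differences (city-block distance)."""
    return sum(abs(a - b) for a, b in zip(p, q))

def chebyshev(p, q):
    """Largest absolute per-field difference."""
    return max(abs(a - b) for a, b in zip(p, q))

# Hypothetical points; the per-field differences are 3, 4 and 0.
p, q = (1, 2, 3), (4, 6, 3)
print(euclidean(p, q))  # 5.0
print(manhattan(p, q))  # 7
print(chebyshev(p, q))  # 4
```

The same pair of points gets three different distances, which is why the choice of measure can change which neighbours count as nearest.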

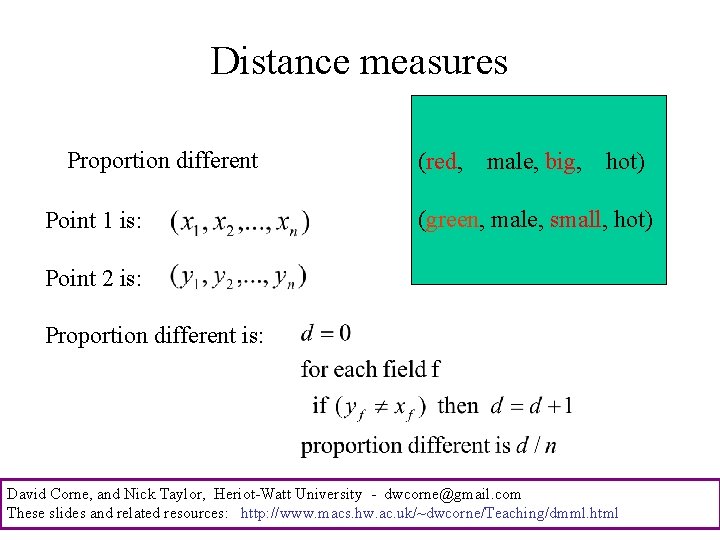

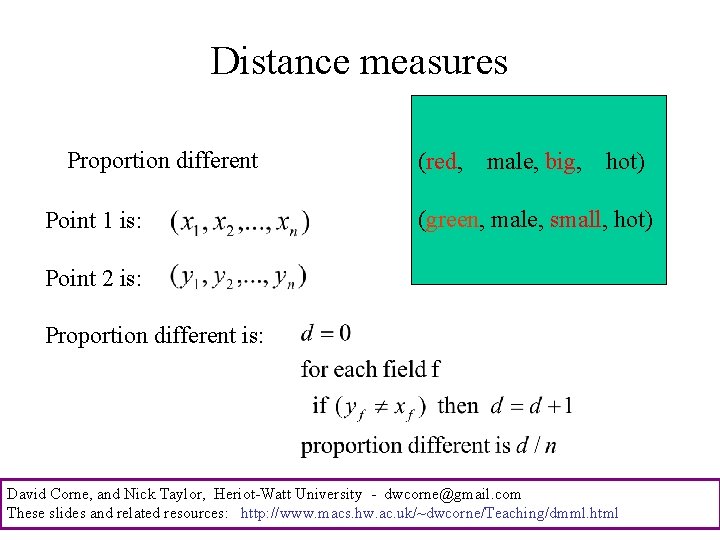

Distance measures

Proportion different:

Point 1 is: (red, male, big, hot)

Point 2 is: (green, male, small, hot)

Proportion different is: the number of fields whose values differ, divided by the number of fields. Here two of the four fields differ (colour and size), so the proportion different is 2/4 = 0.5.

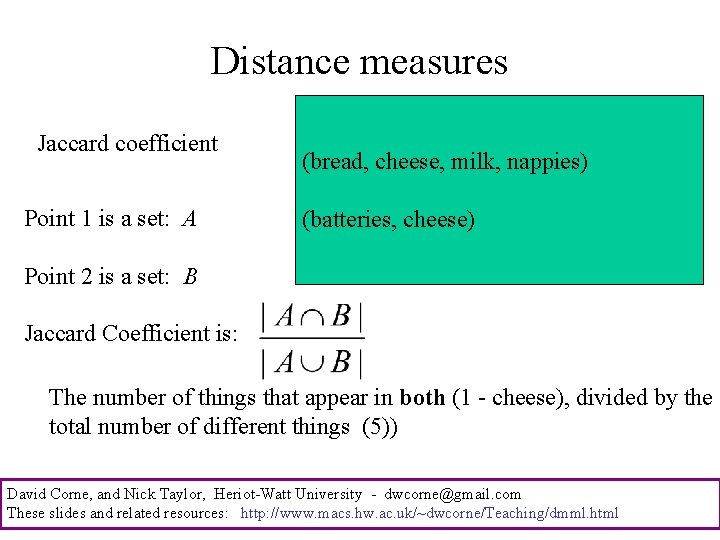

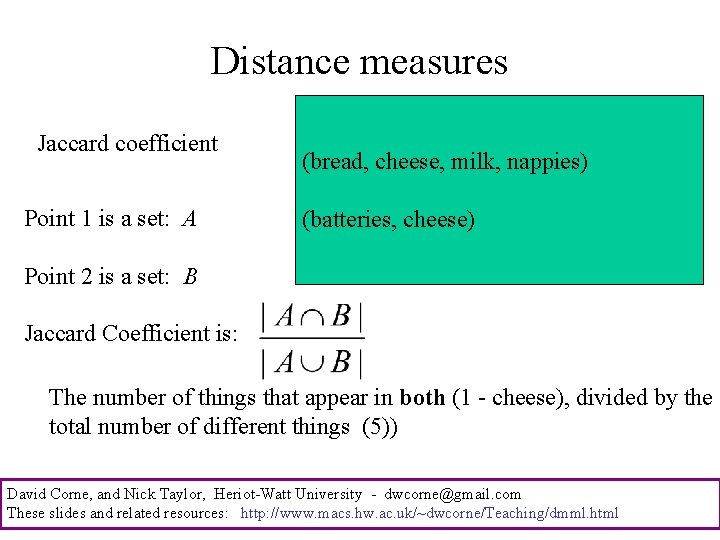

Distance measures

Jaccard coefficient:

Point 1 is a set A: (bread, cheese, milk, nappies)

Point 2 is a set B: (batteries, cheese)

Jaccard coefficient is: $|A \cap B| / |A \cup B|$, i.e. the number of things that appear in both (1: cheese), divided by the total number of different things (5), giving 1/5 = 0.2.
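Both measures for non-numeric data are easy to express in Python. The sketch below uses the example values from the slides; the function names are assumptions, and the final line (turning the coefficient into a distance by subtracting it from 1) is a common convention rather than something stated in the slides.

```python
def proportion_different(p, q):
    """Fraction of fields in which two equal-length records disagree."""
    mismatches = sum(1 for a, b in zip(p, q) if a != b)
    return mismatches / len(p)

def jaccard(a, b):
    """Size of the intersection divided by size of the union.

    Raises ZeroDivisionError if both sets are empty; a real application
    would need to decide what to return in that case.
    """
    a, b = set(a), set(b)
    return len(a & b) / len(a | b)

print(proportion_different(("red", "male", "big", "hot"),
                           ("green", "male", "small", "hot")))  # 0.5

basket_a = {"bread", "cheese", "milk", "nappies"}
basket_b = {"batteries", "cheese"}
print(jaccard(basket_a, basket_b))      # 0.2
print(1 - jaccard(basket_a, basket_b))  # 0.8, usable as a distance
```

Note that the Jaccard coefficient measures similarity (1 means identical sets), whereas proportion different measures distance (0 means identical records).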

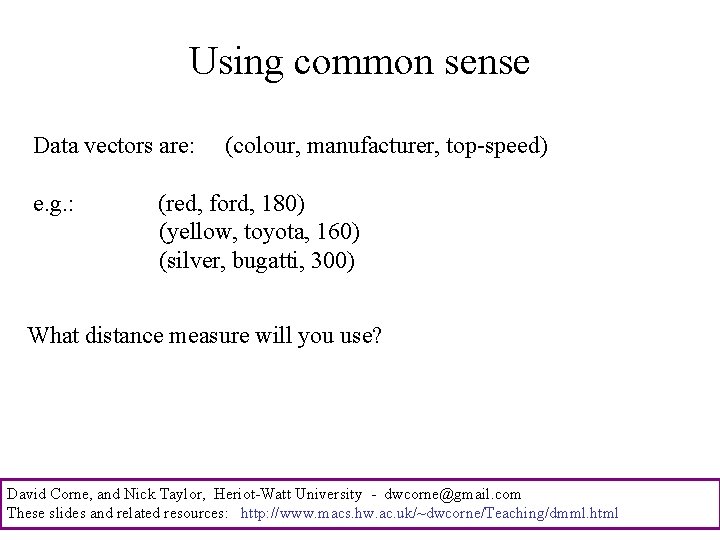

Using common sense

Data vectors are (colour, manufacturer, top-speed), e.g.:

- (red, ford, 180)
- (yellow, toyota, 160)
- (silver, bugatti, 300)

What distance measure will you use?

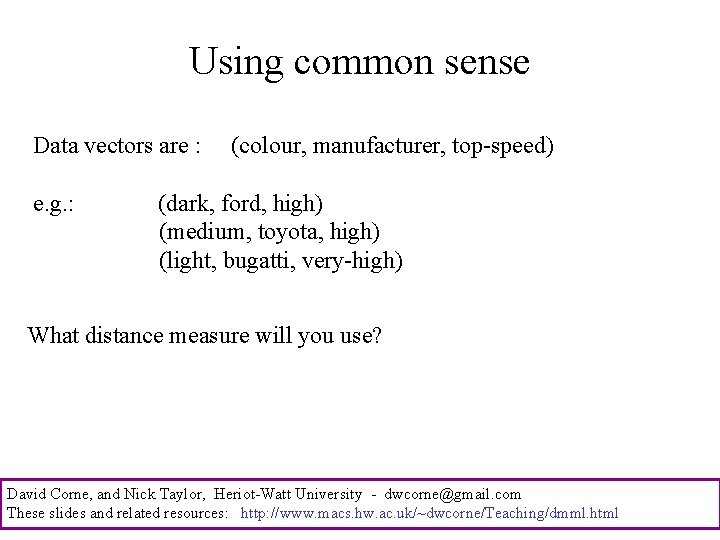

Using common sense

Data vectors are (colour, manufacturer, top-speed), e.g.:

- (dark, ford, high)
- (medium, toyota, high)
- (light, bugatti, very-high)

What distance measure will you use?

Using common sense

With different types of fields, e.g.

- p1 = (red, high, 0.5, UK, 12)
- p2 = (blue, high, 0.6, France, 15)

you could simply define a distance measure for each field individually, and add them up.

Similarly, you could divide the vectors into non-numeric (categorical or ordinal) and numeric parts:

- p1a = (red, high, UK), p1b = (0.5, 12)
- p2a = (blue, high, France), p2b = (0.6, 15)

and say that dist(p1, p2) = dist(p1a, p2a) + dist(p1b, p2b), using appropriate measures for the two kinds of vector.
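One way to read the split-and-add idea is sketched below in Python. The choice of measures is an assumption: a count of mismatching fields for the non-numeric part and Manhattan distance for the numeric part. The index tuples `NON_NUMERIC` and `NUMERIC` are hypothetical names that simply record which positions of the example vectors belong to each part.

```python
NON_NUMERIC = (0, 1, 3)  # colour, level, country
NUMERIC = (2, 4)         # the two numeric fields

def mismatch_count(a, b):
    """Number of fields in which two non-numeric vectors differ."""
    return sum(1 for x, y in zip(a, b) if x != y)

def manhattan(a, b):
    """Sum of absolute differences between two numeric vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))

def mixed_distance(p, q):
    """dist(p, q) = dist(non-numeric parts) + dist(numeric parts)."""
    pa, qa = [p[i] for i in NON_NUMERIC], [q[i] for i in NON_NUMERIC]
    pb, qb = [p[i] for i in NUMERIC], [q[i] for i in NUMERIC]
    return mismatch_count(pa, qa) + manhattan(pb, qb)

p1 = ("red", "high", 0.5, "UK", 12)
p2 = ("blue", "high", 0.6, "France", 15)
print(mixed_distance(p1, p2))  # roughly 5.1: 2 mismatches + 0.1 + 3
```

In this example the last field contributes 3 of the total and the third field only 0.1, so unscaled numeric fields can easily dominate the sum.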

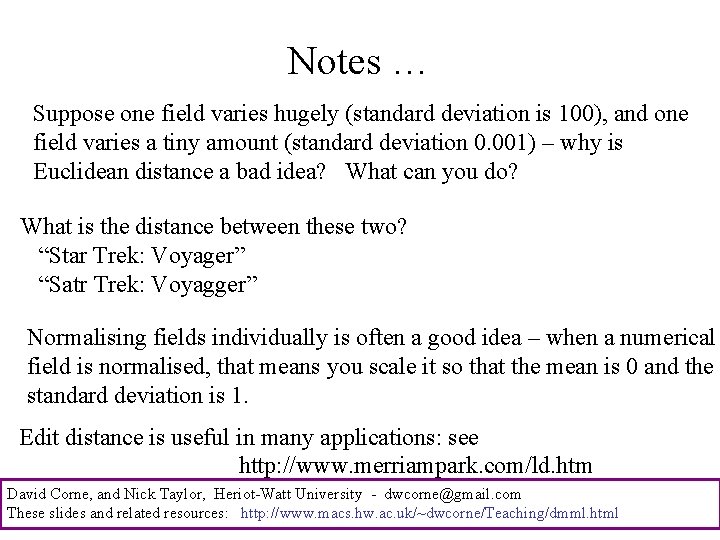

Notes

Suppose one field varies hugely (standard deviation is 100), and one field varies a tiny amount (standard deviation 0.001): why is Euclidean distance a bad idea? What can you do?

Normalising fields individually is often a good idea. When a numerical field is normalised, that means you scale it so that the mean is 0 and the standard deviation is 1.

What is the distance between these two?

- "Star Trek: Voyager"
- "Satr Trek: Voyagger"

Edit distance is useful in many applications: see http://www.merriampark.com/ld.htm
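Both ideas in these notes can be sketched briefly in Python. The function names are assumptions, the normalisation uses the population standard deviation (one of two common choices), and the edit distance is the plain Levenshtein variant, in which a swapped pair of letters counts as two edits rather than one.

```python
import statistics

def normalise(values):
    """Scale a numeric field to mean 0 and standard deviation 1.

    Divides by the population standard deviation; a constant field
    (standard deviation 0) would raise ZeroDivisionError.
    """
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]

def edit_distance(s, t):
    """Levenshtein distance: fewest single-character insertions,
    deletions and substitutions needed to turn s into t."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        curr = [i]
        for j, ct in enumerate(t, start=1):
            cost = 0 if cs == ct else 1
            curr.append(min(prev[j] + 1,          # delete cs
                            curr[j - 1] + 1,      # insert ct
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]

print(normalise([2, 4, 4, 4, 5, 5, 7, 9]))
print(edit_distance("Star Trek: Voyager", "Satr Trek: Voyagger"))  # 3
```

After normalisation, a field with standard deviation 100 and one with standard deviation 0.001 contribute on the same scale to a Euclidean distance, instead of the first swamping the second.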

Text: a prime example of unstructured data

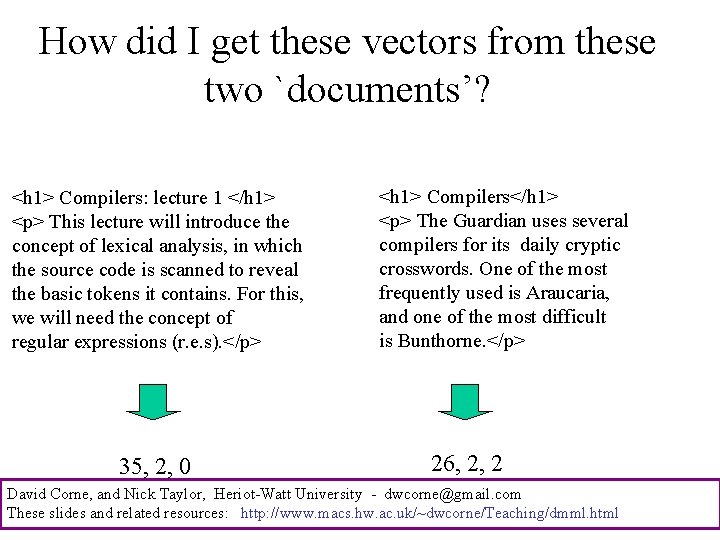

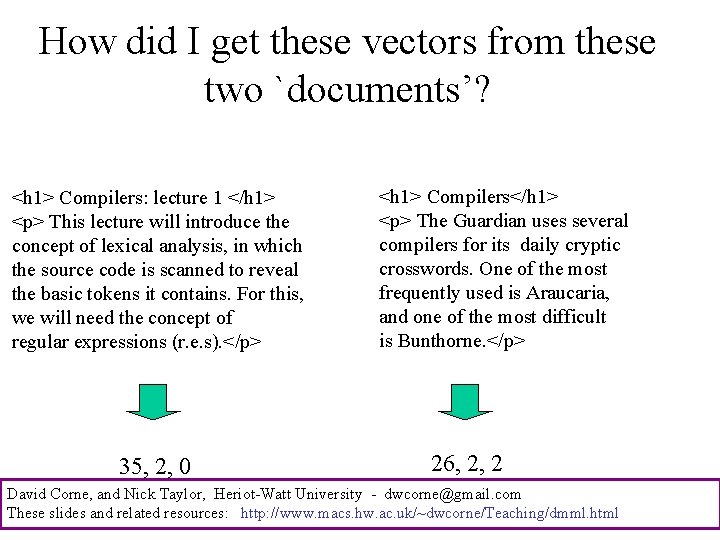

How did I get these vectors from these two 'documents'?

Document 1: `<h1>Compilers: lecture 1</h1> <p>This lecture will introduce the concept of lexical analysis, in which the source code is scanned to reveal the basic tokens it contains. For this, we will need the concept of regular expressions (r.e.s).</p>`

Vector: 35, 2, 0

Document 2: `<h1>Compilers</h1> <p>The Guardian uses several compilers for its daily cryptic crosswords. One of the most frequently used is Araucaria, and one of the most difficult is Bunthorne.</p>`

Vector: 26, 2, 2

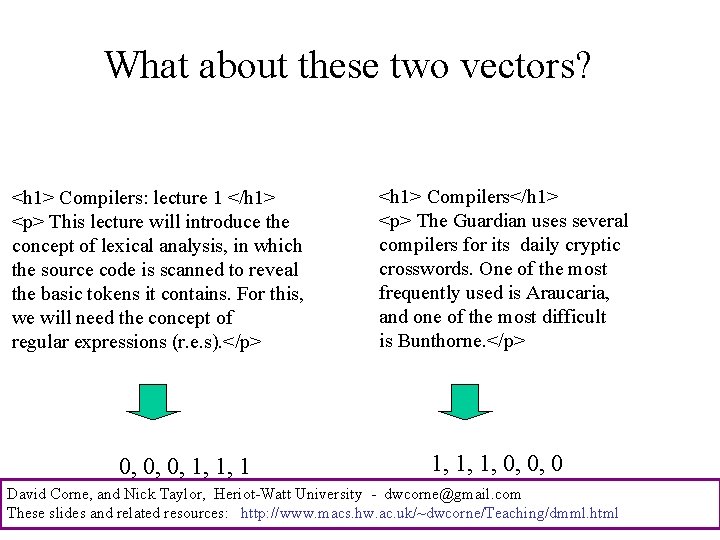

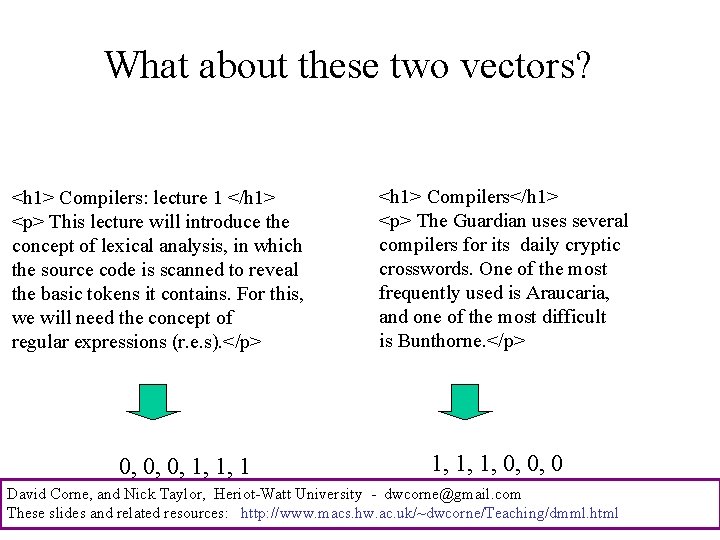

What about these two vectors?

Document 1: `<h1>Compilers: lecture 1</h1> <p>This lecture will introduce the concept of lexical analysis, in which the source code is scanned to reveal the basic tokens it contains. For this, we will need the concept of regular expressions (r.e.s).</p>`

Vector: 0, 0, 0, 1, 1, 1

Document 2: `<h1>Compilers</h1> <p>The Guardian uses several compilers for its daily cryptic crosswords. One of the most frequently used is Araucaria, and one of the most difficult is Bunthorne.</p>`

Vector: 1, 1, 1, 0, 0, 0

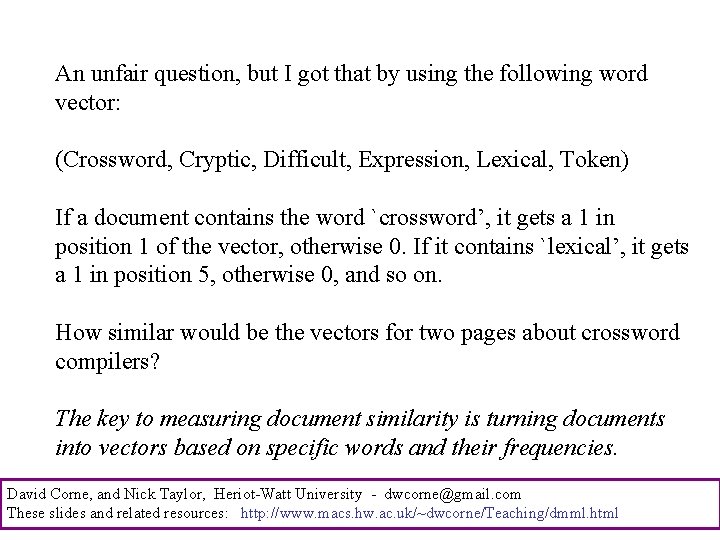

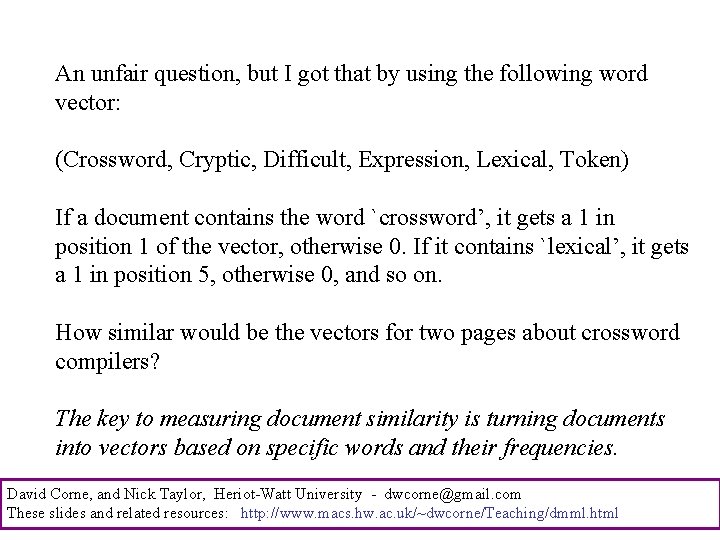

An unfair question, but I got that by using the following word vector:

(Crossword, Cryptic, Difficult, Expression, Lexical, Token)

If a document contains the word 'crossword', it gets a 1 in position 1 of the vector, otherwise 0. If it contains 'lexical', it gets a 1 in position 5, otherwise 0, and so on.

How similar would be the vectors for two pages about crossword compilers?

The key to measuring document similarity is turning documents into vectors based on specific words and their frequencies.

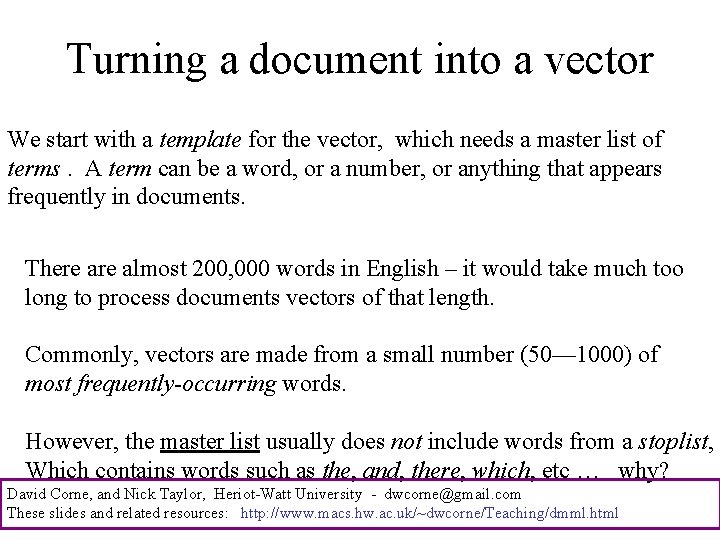

Turning a document into a vector

We start with a template for the vector, which needs a master list of terms. A term can be a word, or a number, or anything that appears frequently in documents.

There are almost 200,000 words in English; it would take much too long to process document vectors of that length. Commonly, vectors are made from a small number (50 to 1000) of the most frequently occurring words.

However, the master list usually does not include words from a stoplist, which contains words such as the, and, there, which, etc. Why?

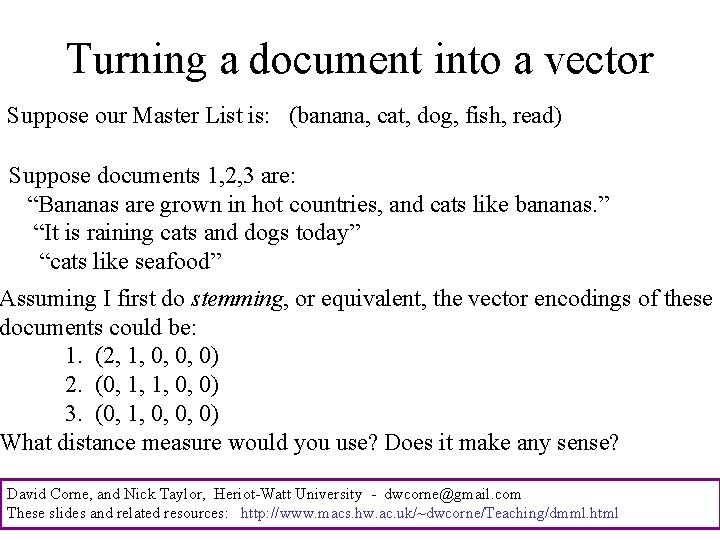

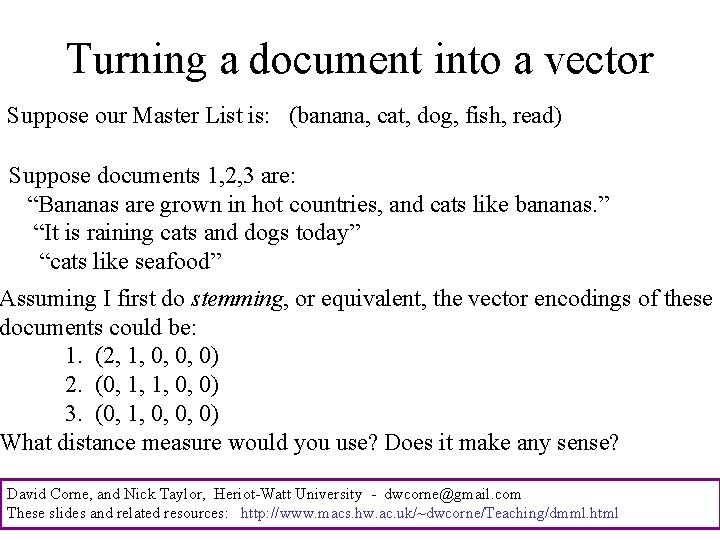

Turning a document into a vector

Suppose our master list is: (banana, cat, dog, fish, read)

Suppose documents 1, 2, 3 are:

1. "Bananas are grown in hot countries, and cats like bananas."
2. "It is raining cats and dogs today"
3. "cats like seafood"

Assuming I first do stemming, or equivalent, the vector encodings of these documents could be:

1. (2, 1, 0, 0, 0)
2. (0, 1, 1, 0, 0)
3. (0, 1, 0, 0, 0)

What distance measure would you use? Does it make any sense?
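The encoding above can be reproduced with a small Python sketch. The `crude_stem` helper is a deliberately naive stand-in for real stemming (it only strips a trailing "s" from words longer than three letters) and is an assumption of this example, as are the other function names.

```python
import re

MASTER_LIST = ("banana", "cat", "dog", "fish", "read")

def crude_stem(word):
    """Naive stand-in for stemming: strip one trailing 's' from longer words."""
    return word[:-1] if len(word) > 3 and word.endswith("s") else word

def to_vector(text, terms=MASTER_LIST):
    """Count how often each master-list term occurs in the text."""
    tokens = [crude_stem(w) for w in re.findall(r"[a-z]+", text.lower())]
    return tuple(tokens.count(term) for term in terms)

documents = [
    "Bananas are grown in hot countries, and cats like bananas.",
    "It is raining cats and dogs today",
    "cats like seafood",
]
for doc in documents:
    print(to_vector(doc))
# (2, 1, 0, 0, 0)
# (0, 1, 1, 0, 0)
# (0, 1, 0, 0, 0)
```

Document 3 mentions seafood but scores 0 for "fish", which shows how much the encoding depends on the exact terms in the master list.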

Text mining

Encoding documents as vectors is a hot topic, and there are many important and valuable applications, e.g.:

- Predicting 'sentiment': if a document describes a movie, how highly does it rate that movie, on a scale of 1 to 10?
- Which document(s) are the most appropriate for a search engine to retrieve for a search query?
- Is document A plagiarized from document B?

There are also better standard ways to encode documents, such as TF-IDF; I cover that in the Web Intelligence module.

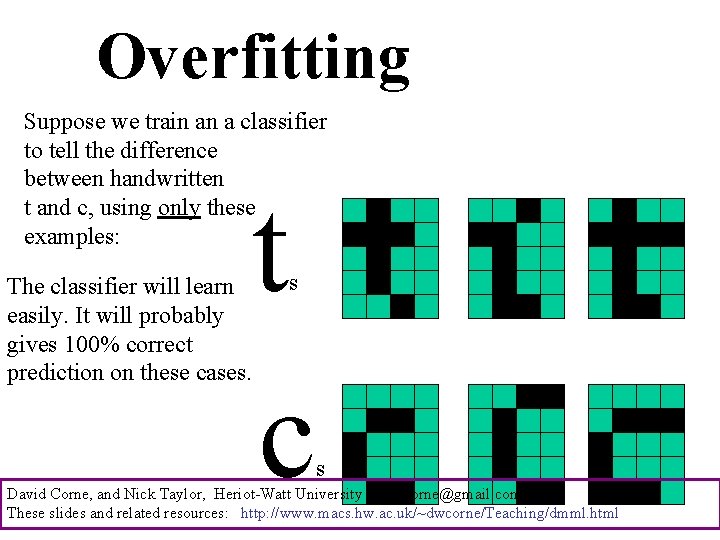

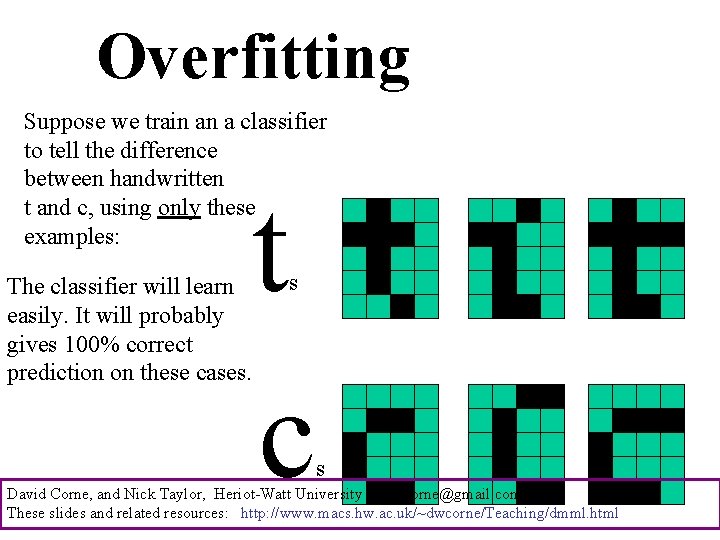

Overfitting

Suppose we train a classifier to tell the difference between handwritten t and c, using only these examples (the slide shows a few handwritten t's and c's).

The classifier will learn easily. It will probably give 100% correct predictions on these cases.

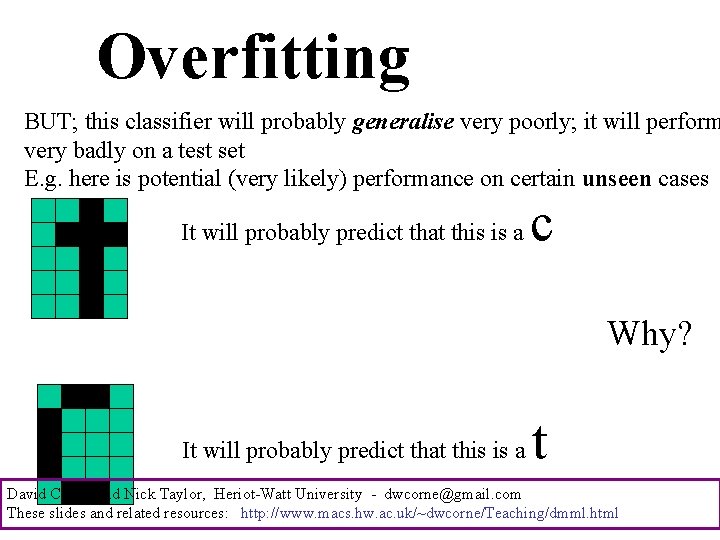

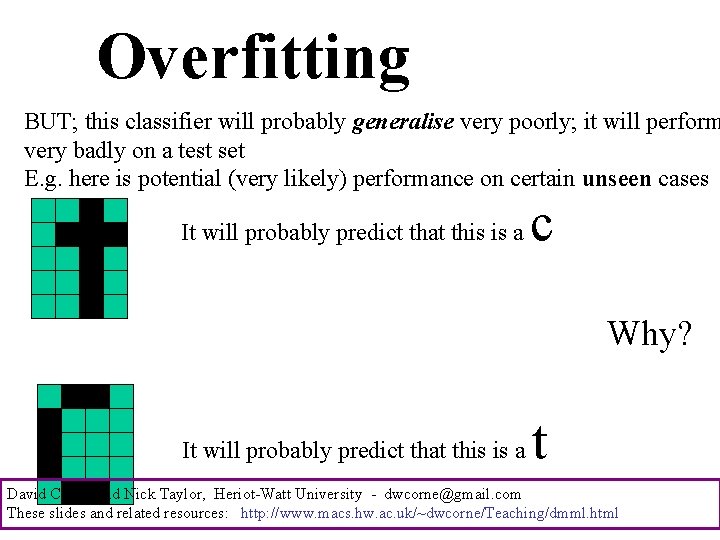

Overfitting

BUT this classifier will probably generalise very poorly; it will perform very badly on a test set.

E.g. here is potential (very likely) performance on certain unseen cases (shown as handwritten characters on the slide):

- It will probably predict that this is a c.
- It will probably predict that this is a t.

Why?

Avoiding Overfitting

It can be avoided by using as much training data as possible, ensuring as much diversity as possible in the data. This cuts down on the potential existence of features that might be discriminative in the training data, but are otherwise spurious.

It can be avoided by jittering (adding noise). During training, every time an input pattern is presented, it is randomly perturbed. The idea of this is that spurious features will be 'washed out' by the noise, but valid discriminatory features will remain. The problem with this approach is how to correctly choose the level of noise.

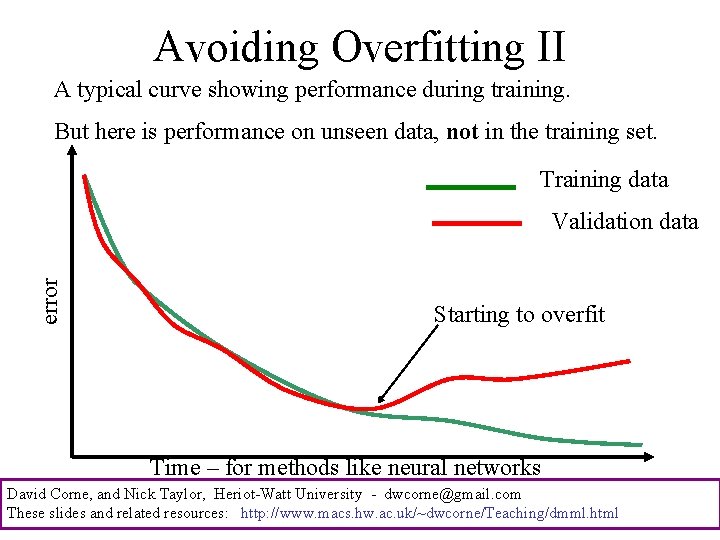

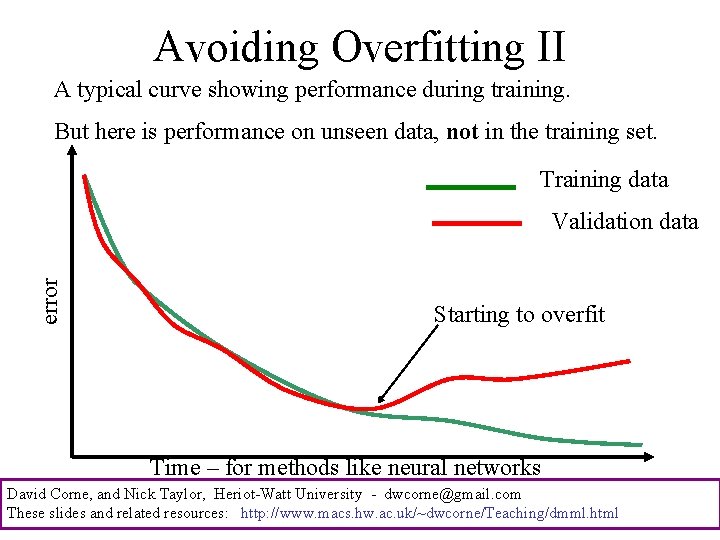

Avoiding Overfitting II

A typical curve showing performance during training. But here is performance on unseen data, not in the training set.

(Plot: error against time, for methods like neural networks; curves: training data, validation data; the point marked "starting to overfit" is on the validation curve.)

Avoiding Overfitting III

Another approach is early stopping. During training, keep track of the network's performance on a separate validation set of data. At the point where error continues to improve on the training set, but starts to get worse on the validation set, training should be stopped, since the network is starting to overfit the training data. The problem here is that this point is often far from clear cut.
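Early stopping can be sketched in Python as a rule applied to the sequence of validation errors recorded after each training step. Everything here is illustrative: the error values are invented, and the `patience` parameter (how many steps without improvement to tolerate before stopping) is an assumption, one common way of coping with the stopping point not being clear cut.

```python
def early_stopping_epoch(validation_errors, patience=3):
    """Return the index of the epoch whose model should be kept.

    Scans the validation errors in order and stops once `patience`
    epochs have passed without a new best validation error.
    """
    best_error = float("inf")
    best_epoch = -1
    for epoch, error in enumerate(validation_errors):
        if error < best_error:
            best_error, best_epoch = error, epoch
        elif epoch - best_epoch >= patience:
            break  # validation error has stopped improving
    return best_epoch

# Hypothetical validation errors: falling, with a small bump, then rising.
errors = [0.50, 0.40, 0.33, 0.30, 0.31, 0.29, 0.32, 0.34, 0.37, 0.41]
print(early_stopping_epoch(errors, patience=3))  # 5
print(early_stopping_epoch(errors, patience=1))  # 3
```

With `patience=1` training stops at the first small bump and misses the slightly better model at epoch 5, which illustrates why choosing the stopping point is hard in practice.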

Coursework C

Invent two different but simple ways to use the Jaccard coefficient for the problem of finding the distance between a document and a set of documents. Explain both within about 200 words (half a page).

Coursework C

Next week: feature selection