
Data Mining – Algorithms: OneR, Chapter 4, Section 4.1

Simplicity First

- Simple algorithms sometimes work surprisingly well
- It is worth trying simple approaches first
- Different approaches may work better for different data
- There is more than one simple approach
- First to be examined: OneR (or 1R)
  - learns one rule for the dataset
  - this is actually a bit of a misnomer: the result is a one-level decision tree (one branch per value of a single attribute)

OneR – Holte (1993)

- Simple, cheap method
- Often performs surprisingly well
- Many real datasets may not have complicated things going on
- Idea:
  - Make rules that test a single attribute and branch accordingly (each branch corresponds to a different value of that attribute)
  - The classification for a given branch is the “majority” class for that branch in the training data
  - Evaluate each attribute by the “error rate” of its rules on the training data
  - Choose the best attribute (the one with the lowest error rate)

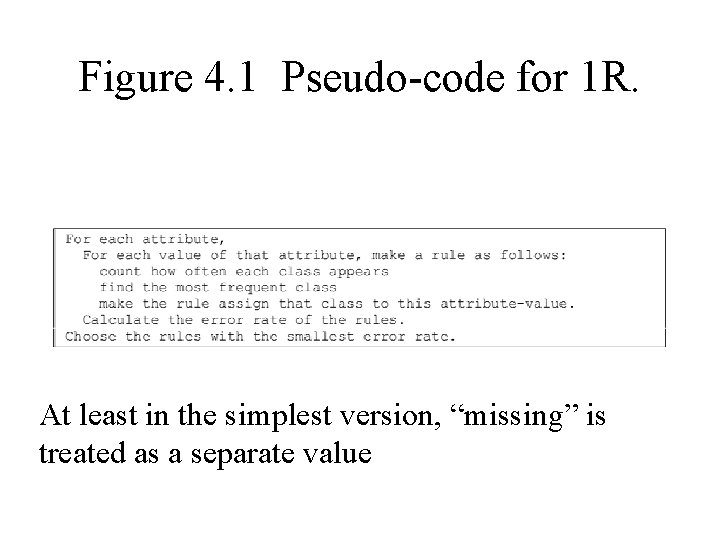

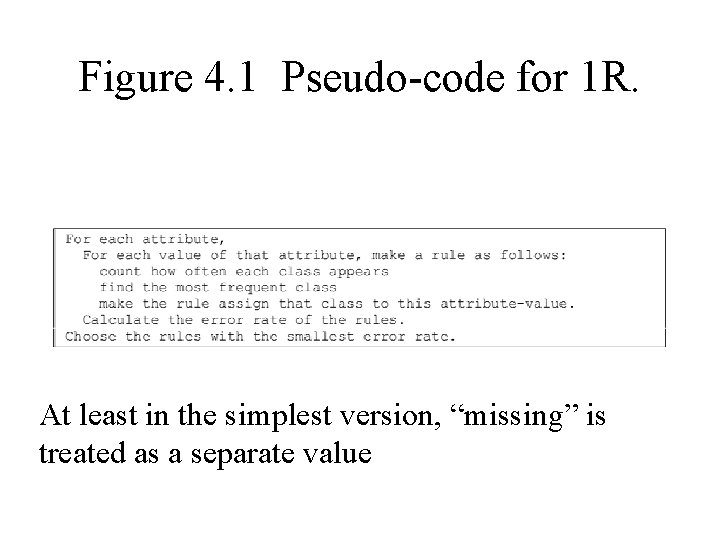

Figure 4.1 Pseudocode for 1R. At least in the simplest version, “missing” is treated as a separate attribute value.
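The procedure described above can be sketched in Python as follows. This is a minimal illustration, not WEKA's implementation: it assumes each instance is a dict of nominal attribute values, with `None` standing for a missing value (so “missing” becomes one more value, as in the simplest version). The function name `one_r` and the toy rows are hypothetical, not the slides' My Weather data.

```python
from collections import Counter, defaultdict


def one_r(rows, attributes, target):
    """Minimal 1R sketch for nominal attributes.

    rows: list of dicts mapping attribute name -> value; a missing value is
    stored as None and simply treated as one more value.
    Returns (best_attribute, rules, training_errors), where rules maps each
    value of best_attribute to its predicted class.
    """
    best = None
    for attr in attributes:
        # For each value of this attribute, count how often each class appears.
        counts = defaultdict(Counter)
        for row in rows:
            counts[row.get(attr)][row[target]] += 1
        rules, errors = {}, 0
        for value, class_counts in counts.items():
            # The rule predicts the most frequent class for this value
            # (ties go to the class seen first in the data).
            majority, hits = class_counts.most_common(1)[0]
            rules[value] = majority
            errors += sum(class_counts.values()) - hits
        # Keep the attribute whose rules make the fewest training errors;
        # on a tie, the attribute listed first is kept.
        if best is None or errors < best[2]:
            best = (attr, rules, errors)
    return best


# Hypothetical toy rows (not the My Weather table from the slides).
toy = [
    {"outlook": "sunny", "windy": "FALSE", "play": "no"},
    {"outlook": "sunny", "windy": "TRUE", "play": "no"},
    {"outlook": "overcast", "windy": "FALSE", "play": "yes"},
    {"outlook": "rainy", "windy": "FALSE", "play": "yes"},
    {"outlook": "rainy", "windy": "TRUE", "play": "no"},
]
best_attr, rules, errors = one_r(toy, ["outlook", "windy"], "play")
print(best_attr, rules, errors)
```

On the toy rows both attributes make 1 training error, so the tie goes to `outlook` because it is listed first; real tools may break such ties differently.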

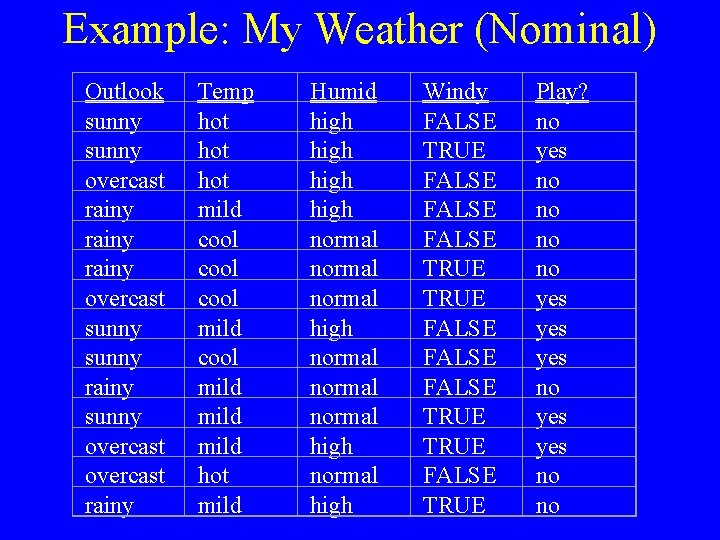

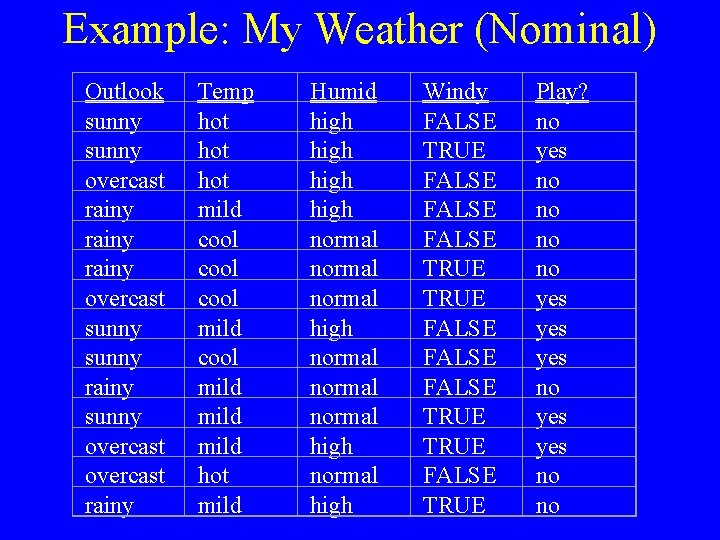

Example: My Weather (Nominal). Attributes: Outlook (sunny, overcast, rainy), Temp (hot, mild, cool), Humid (high, normal), Windy (TRUE, FALSE); class: Play? (yes, no). (Table of 14 instances shown on the slide.)

Let’s make this a little more realistic than the book does:

- Divide into training and test data
- Save the last record as the test instance

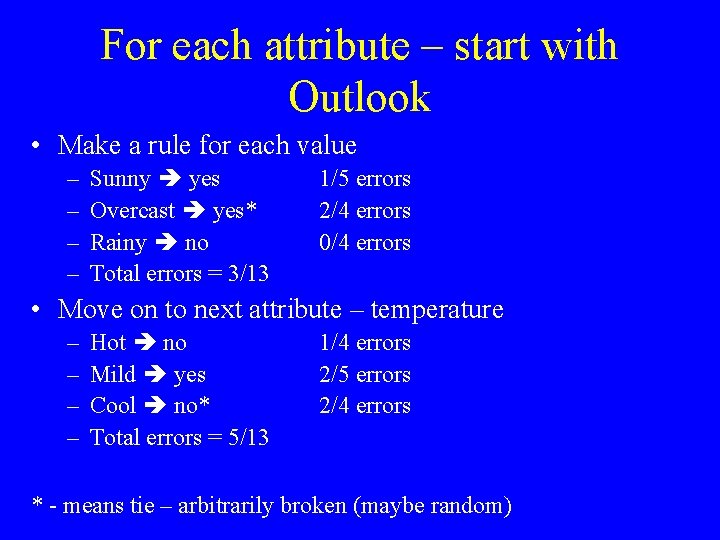

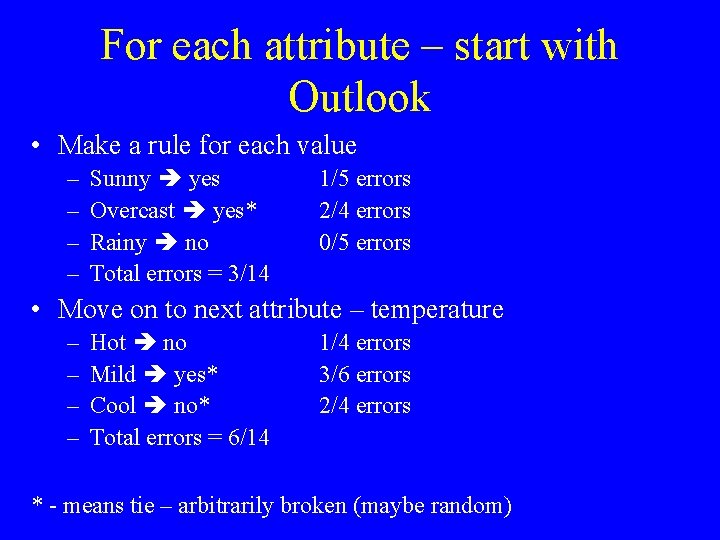

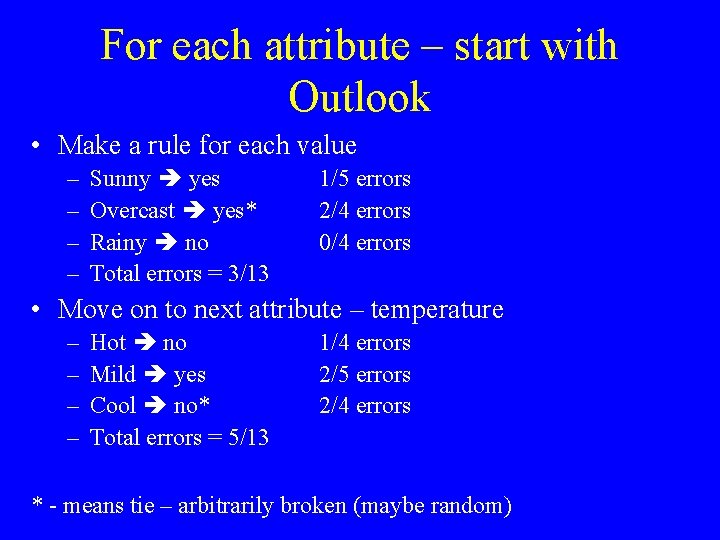

For each attribute, start with Outlook

- Make a rule for each value:
  - Sunny → yes (1/5 errors)
  - Overcast → yes* (2/4 errors)
  - Rainy → no (0/4 errors)
  - Total errors = 3/13
- Move on to the next attribute, Temperature:
  - Hot → no (1/4 errors)
  - Mild → yes (2/5 errors)
  - Cool → no* (2/4 errors)
  - Total errors = 5/13

An asterisk (*) marks a tie, broken arbitrarily (possibly at random).

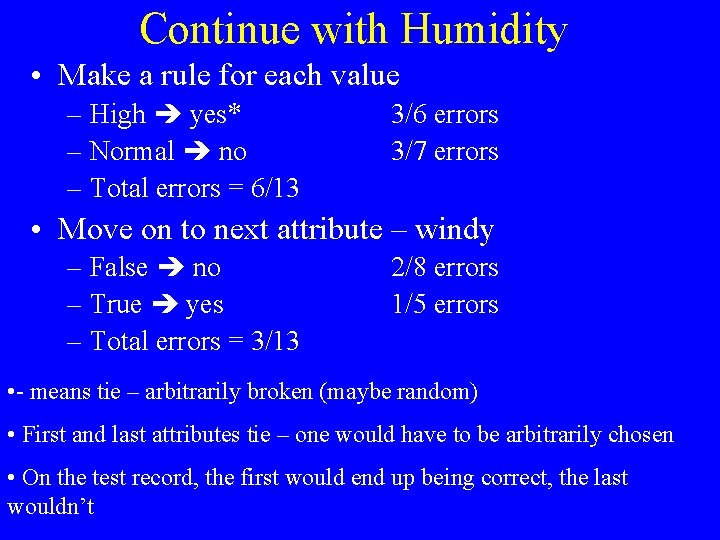

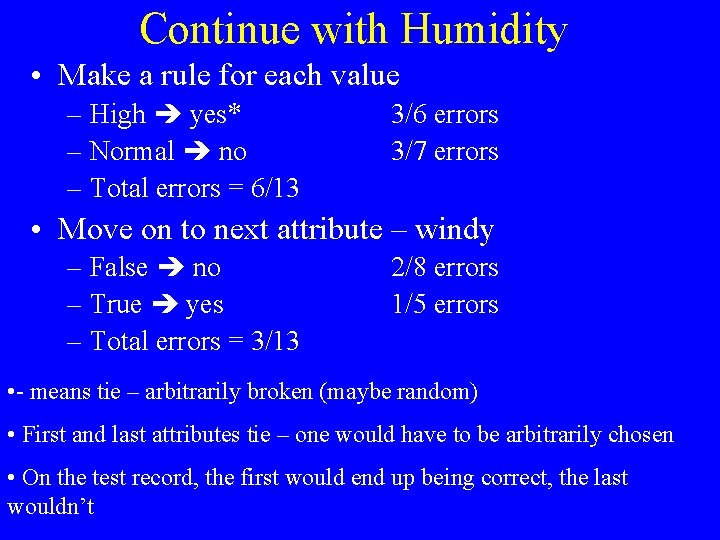

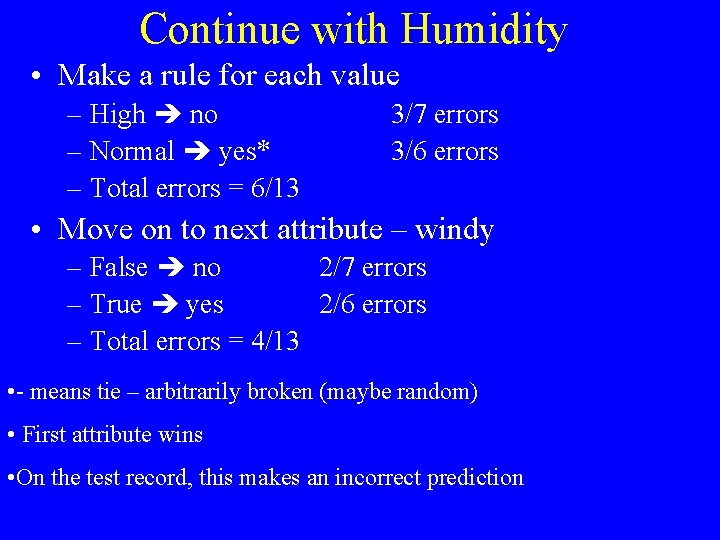

Continue with Humidity

- Make a rule for each value:
  - High → yes* (3/6 errors)
  - Normal → no (3/7 errors)
  - Total errors = 6/13
- Move on to the next attribute, Windy:
  - False → no (2/8 errors)
  - True → yes (1/5 errors)
  - Total errors = 3/13
- An asterisk (*) marks a tie, broken arbitrarily (possibly at random)
- The first and last attributes (Outlook and Windy) tie at 3/13, so one of them would have to be chosen arbitrarily
- On the test record, the first (Outlook) would turn out to be correct; the last (Windy) would not

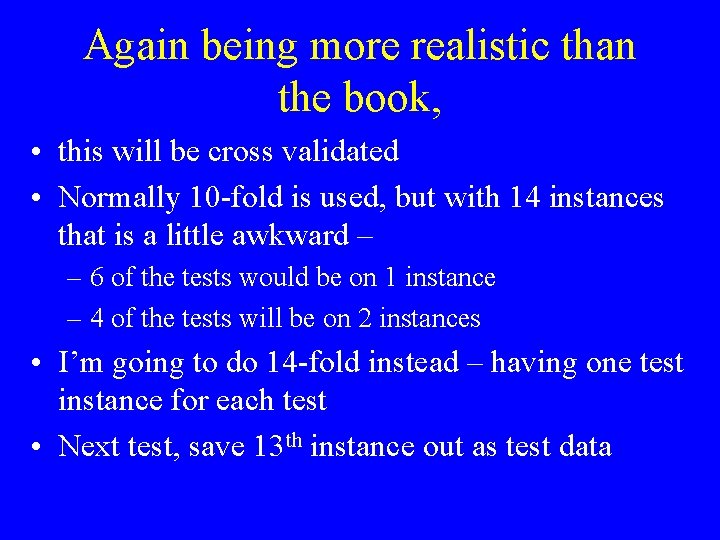

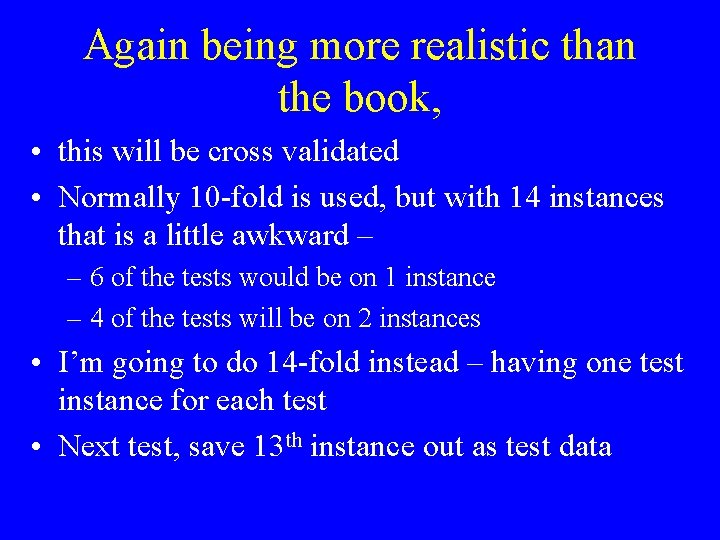

Again, to be more realistic than the book, this will be cross-validated

- Normally 10-fold cross-validation is used, but with 14 instances that is a little awkward:
  - 6 of the tests would be on 1 instance
  - 4 of the tests would be on 2 instances
- I’m going to do 14-fold instead, with one test instance in each test (i.e., leave-one-out)
- For the next test, hold out the 13th instance as the test data
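The fold sizes quoted above follow from splitting 14 instances as evenly as possible into k folds. A minimal sketch; `fold_sizes` is a hypothetical helper, and it only counts instances per fold. It says nothing about which instances WEKA actually places in each (stratified) fold.

```python
def fold_sizes(n_instances, k):
    """Split n instances into k folds whose sizes differ by at most one."""
    base, extra = divmod(n_instances, k)
    # The first `extra` folds each get one additional instance.
    return [base + 1 if i < extra else base for i in range(k)]


print(fold_sizes(14, 10))  # [2, 2, 2, 2, 1, 1, 1, 1, 1, 1]
print(fold_sizes(14, 14))  # fourteen folds of 1 instance (leave-one-out)
```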

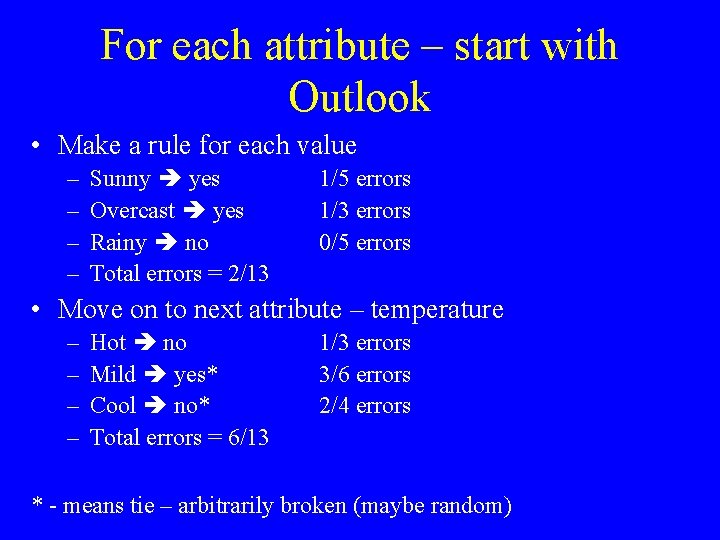

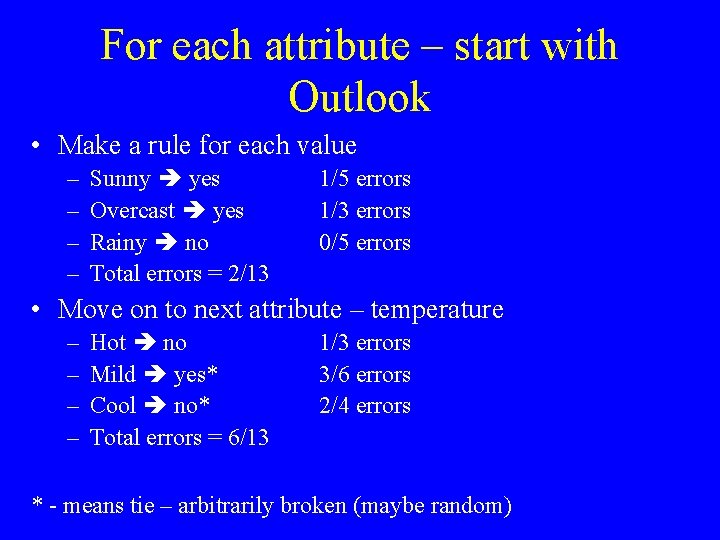

For each attribute, start with Outlook

- Make a rule for each value:
  - Sunny → yes (1/5 errors)
  - Overcast → yes (1/3 errors)
  - Rainy → no (0/5 errors)
  - Total errors = 2/13
- Move on to the next attribute, Temperature:
  - Hot → no (1/3 errors)
  - Mild → yes* (3/6 errors)
  - Cool → no* (2/4 errors)
  - Total errors = 6/13

An asterisk (*) marks a tie, broken arbitrarily (possibly at random).

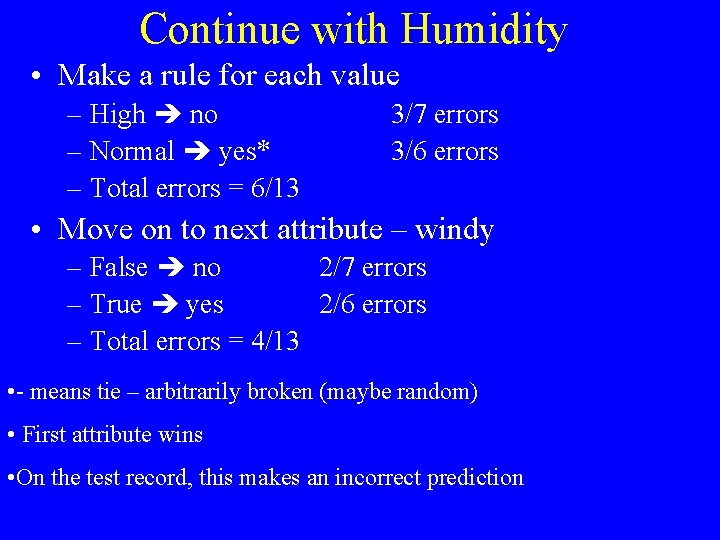

Continue with Humidity

- Make a rule for each value:
  - High → no (3/7 errors)
  - Normal → yes* (3/6 errors)
  - Total errors = 6/13
- Move on to the next attribute, Windy:
  - False → no (2/7 errors)
  - True → yes (2/6 errors)
  - Total errors = 4/13
- An asterisk (*) marks a tie, broken arbitrarily (possibly at random)
- The first attribute (Outlook, 2/13 errors) wins
- On the test record, this makes an incorrect prediction
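The attribute choice on the two folds above can be checked from the per-value class counts alone. The (yes, no) counts below are reconstructed from the rules and error counts on these slides (for example, “Sunny → yes, 1/5 errors” means 4 yes and 1 no). The helper names are hypothetical, and `min()` keeping the first of several equal totals is just one possible arbitrary tie-break.

```python
# (yes, no) class counts for each attribute value, read off the rules above.
FOLD_LAST_HELD_OUT = {
    "Outlook": {"sunny": (4, 1), "overcast": (2, 2), "rainy": (0, 4)},
    "Temperature": {"hot": (1, 3), "mild": (3, 2), "cool": (2, 2)},
    "Humidity": {"high": (3, 3), "normal": (3, 4)},
    "Windy": {"FALSE": (2, 6), "TRUE": (4, 1)},
}
FOLD_13TH_HELD_OUT = {
    "Outlook": {"sunny": (4, 1), "overcast": (2, 1), "rainy": (0, 5)},
    "Temperature": {"hot": (1, 2), "mild": (3, 3), "cool": (2, 2)},
    "Humidity": {"high": (3, 4), "normal": (3, 3)},
    "Windy": {"FALSE": (2, 5), "TRUE": (4, 2)},
}


def total_errors(value_counts):
    # Each value predicts its majority class, so every other instance is an error.
    return sum(sum(c) - max(c) for c in value_counts.values())


def choose_attribute(fold):
    totals = {attr: total_errors(vc) for attr, vc in fold.items()}
    # min() returns the first attribute with the lowest total, so a tie goes
    # to the attribute listed first.
    return min(totals, key=totals.get), totals


print(choose_attribute(FOLD_LAST_HELD_OUT))
print(choose_attribute(FOLD_13TH_HELD_OUT))
```

The totals reproduce the slides: 3, 5, 6 and 3 errors (Outlook and Windy tie) when the last record is held out, and 2, 6, 6 and 4 errors when the 13th is held out.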

In a 14-fold cross-validation, this would continue 12 more times

- Let’s run WEKA on this …
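The loop being repeated here (hold out one instance, learn 1R rules from the rest, test on the held-out one) can be sketched as a leave-one-out evaluation. This is a minimal sketch under stated assumptions: it uses a compact 1R for nominal attributes only, a value never seen in training falls back to the training majority class (an assumption; the slides do not say what happens then), and the toy rows are hypothetical. WEKA's stratified cross-validation and its tie-breaking may give different results.

```python
from collections import Counter, defaultdict


def one_r(rows, attributes, target):
    """Compact 1R for nominal attributes: returns (attribute, value -> class)."""
    best = None
    for attr in attributes:
        counts = defaultdict(Counter)
        for row in rows:
            counts[row[attr]][row[target]] += 1
        rules = {v: c.most_common(1)[0][0] for v, c in counts.items()}
        errors = sum(sum(c.values()) - c.most_common(1)[0][1] for c in counts.values())
        if best is None or errors < best[2]:  # ties keep the earlier attribute
            best = (attr, rules, errors)
    return best[0], best[1]


def leave_one_out(rows, attributes, target):
    """n-fold cross-validation with one test instance per fold."""
    correct = 0
    for i, test in enumerate(rows):
        train = rows[:i] + rows[i + 1:]
        attr, rules = one_r(train, attributes, target)
        # Assumption: an unseen value gets the training majority class.
        default = Counter(r[target] for r in train).most_common(1)[0][0]
        prediction = rules.get(test[attr], default)
        correct += prediction == test[target]
    return correct, len(rows)


# Hypothetical toy rows (not the My Weather table from the slides).
toy = [
    {"outlook": "sunny", "windy": "FALSE", "play": "no"},
    {"outlook": "sunny", "windy": "TRUE", "play": "no"},
    {"outlook": "overcast", "windy": "FALSE", "play": "yes"},
    {"outlook": "rainy", "windy": "FALSE", "play": "yes"},
    {"outlook": "rainy", "windy": "TRUE", "play": "no"},
]
print(leave_one_out(toy, ["outlook", "windy"], "play"))  # (1, 5)
```

On five instances the held-out accuracy is poor, which is the point of cross-validating: it measures performance on instances the rules never saw.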

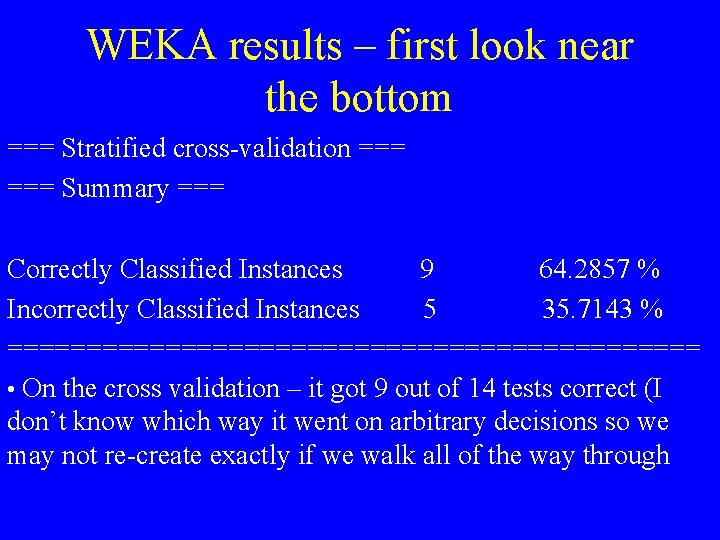

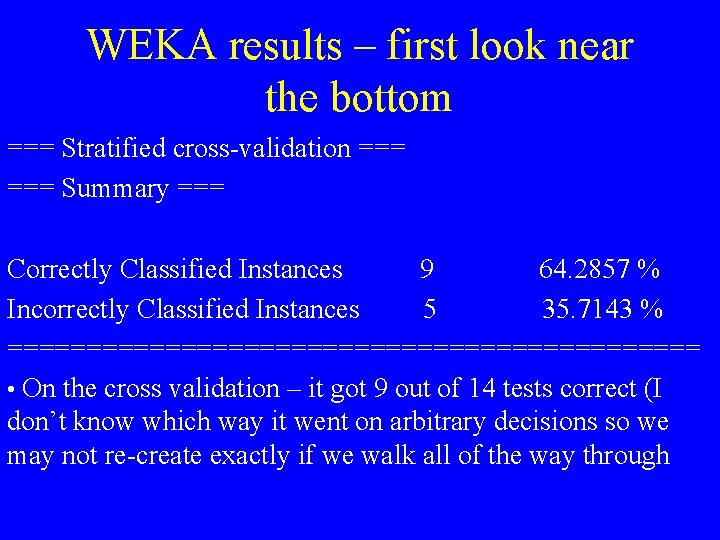

WEKA results: look first near the bottom

=== Stratified cross-validation ===
=== Summary ===
Correctly Classified Instances 9 (64.2857 %)
Incorrectly Classified Instances 5 (35.7143 %)

- In the cross-validation, it got 9 of the 14 tests correct. (I don’t know which way WEKA broke the ties, so we may not reproduce its result exactly if we walk all the way through.)

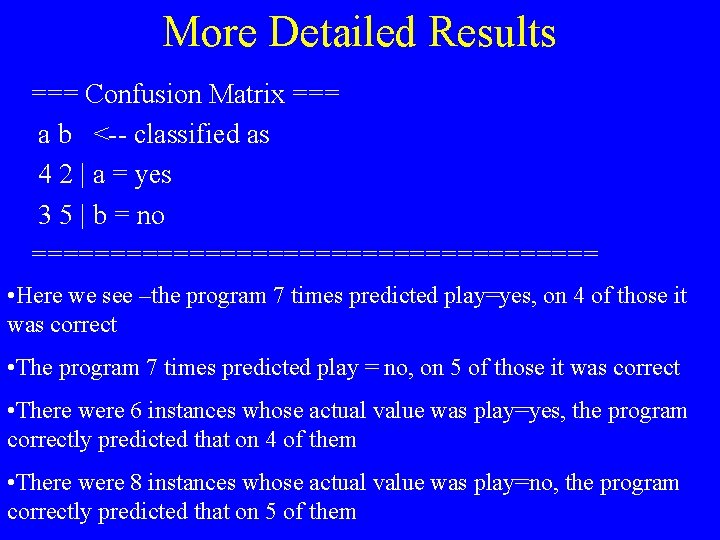

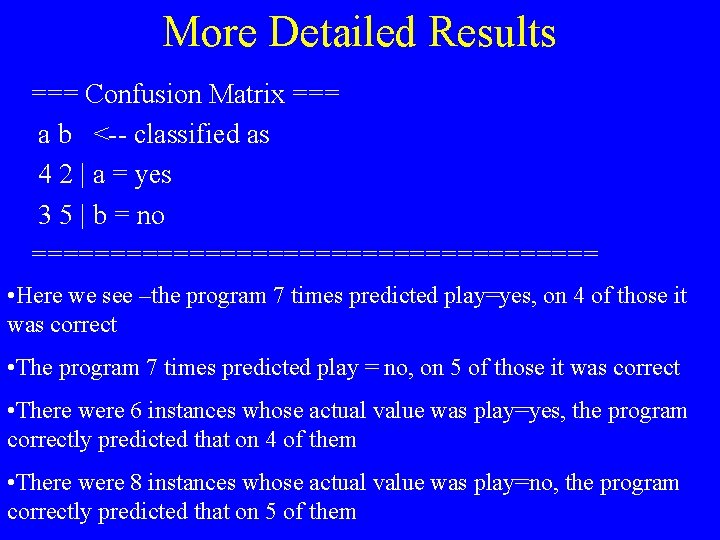

More Detailed Results

=== Confusion Matrix ===
a b <-- classified as
4 2 | a = yes
3 5 | b = no

- Here we see that the program predicted play = yes 7 times, and was correct on 4 of those
- The program predicted play = no 7 times, and was correct on 5 of those
- There were 6 instances whose actual value was play = yes; the program correctly predicted that on 4 of them
- There were 8 instances whose actual value was play = no; the program correctly predicted that on 5 of them
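The four statements above are the column totals, row totals and diagonal of the confusion matrix. A small sketch that recomputes them, assuming WEKA's layout (rows = actual class, columns = predicted class); `summarize` is a hypothetical helper name.

```python
def summarize(labels, matrix):
    """Read per-class totals and overall accuracy off a confusion matrix."""
    n = sum(map(sum, matrix))
    correct = sum(matrix[i][i] for i in range(len(labels)))
    per_class = {}
    for i, label in enumerate(labels):
        per_class[label] = {
            "predicted": sum(row[i] for row in matrix),  # column total
            "actual": sum(matrix[i]),                    # row total
            "correct": matrix[i][i],                     # diagonal cell
        }
    return correct, n, per_class


correct, n, per_class = summarize(["yes", "no"], [[4, 2], [3, 5]])
print(per_class)
print(f"accuracy = {correct}/{n} = {correct / n:.4%}")  # accuracy = 9/14 = 64.2857%
```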

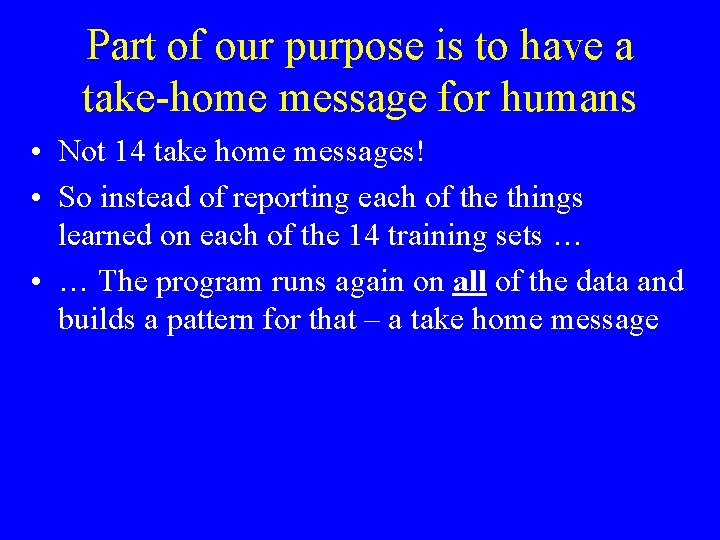

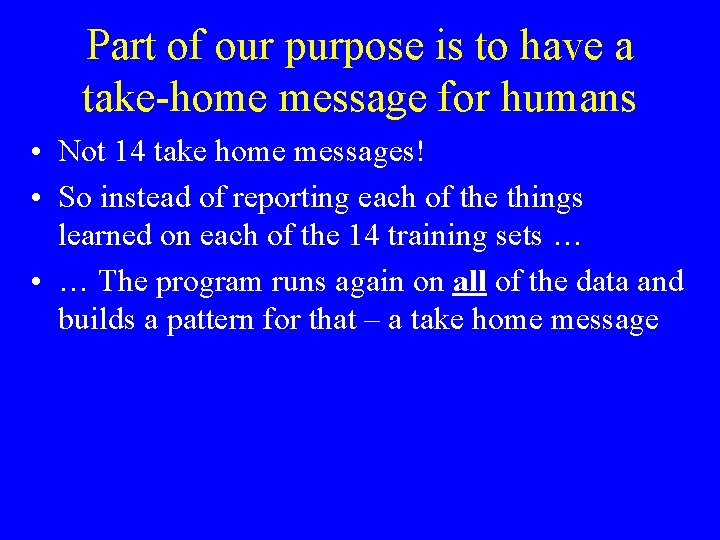

Part of our purpose is to have a take-home message for humans

- Not 14 take-home messages!
- So instead of reporting each of the things learned on each of the 14 training sets …
- … the program runs again on all of the data and builds a pattern for that: the take-home message

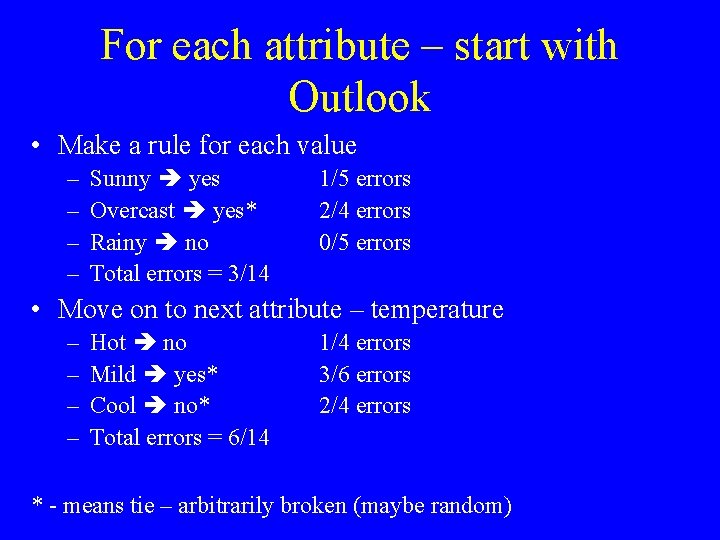

For each attribute, start with Outlook

- Make a rule for each value:
  - Sunny → yes (1/5 errors)
  - Overcast → yes* (2/4 errors)
  - Rainy → no (0/5 errors)
  - Total errors = 3/14
- Move on to the next attribute, Temperature:
  - Hot → no (1/4 errors)
  - Mild → yes* (3/6 errors)
  - Cool → no* (2/4 errors)
  - Total errors = 6/14

An asterisk (*) marks a tie, broken arbitrarily (possibly at random).

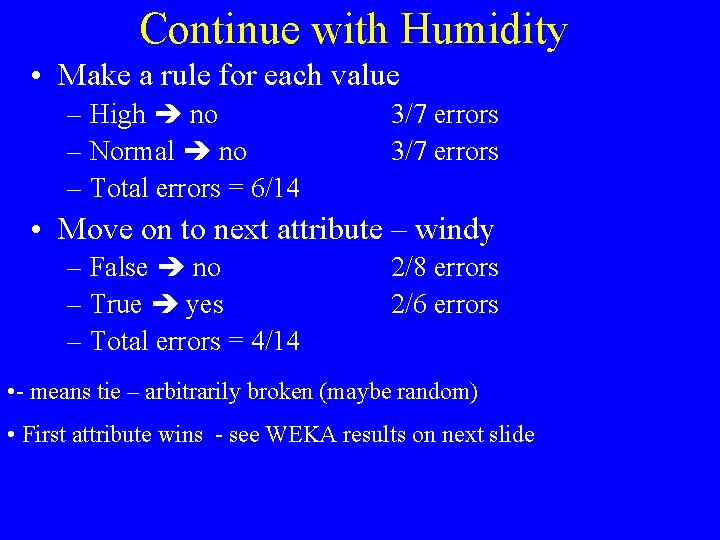

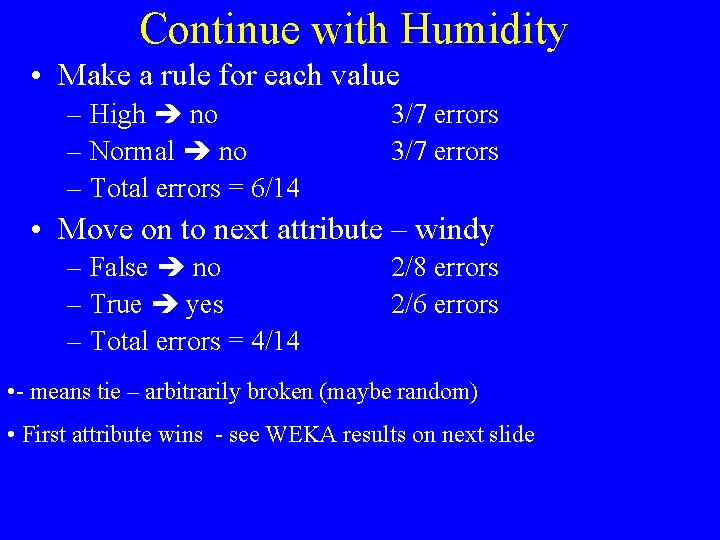

Continue with Humidity

- Make a rule for each value:
  - High → no (3/7 errors)
  - Normal → no (3/7 errors)
  - Total errors = 6/14
- Move on to the next attribute, Windy:
  - False → no (2/8 errors)
  - True → yes (2/6 errors)
  - Total errors = 4/14
- An asterisk (*) marks a tie, broken arbitrarily (possibly at random)
- The first attribute (Outlook, 3/14 errors) wins; see the WEKA results on the next slide

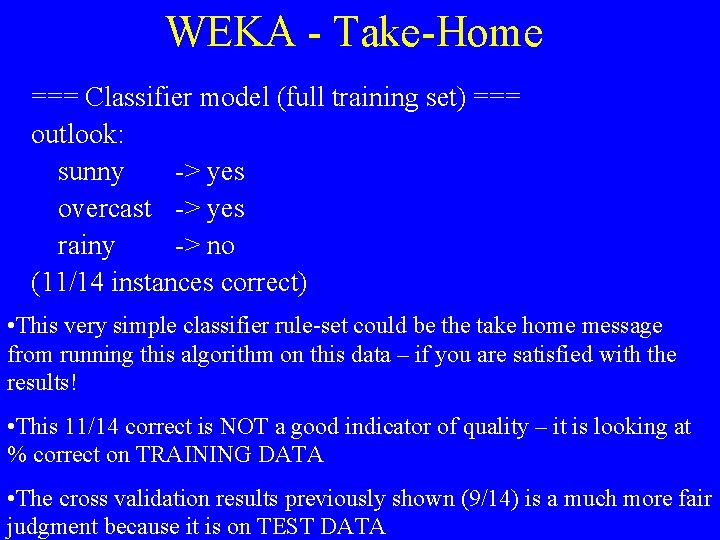

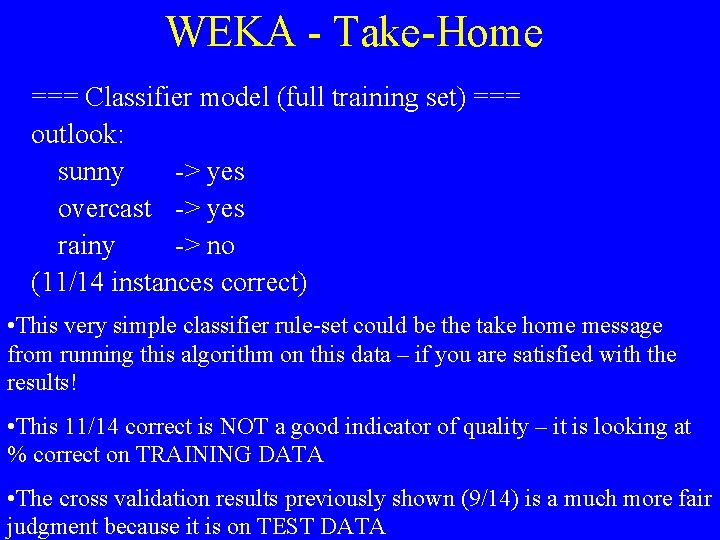

WEKA – Take-Home

=== Classifier model (full training set) ===
outlook:
sunny -> yes
overcast -> yes
rainy -> no
(11/14 instances correct)

- This very simple rule set could be the take-home message from running this algorithm on this data, if you are satisfied with the results!
- This 11/14 correct is NOT a good indicator of quality: it is the percentage correct on the TRAINING DATA
- The cross-validation result shown previously (9/14) is a much fairer judgment because it is measured on TEST DATA

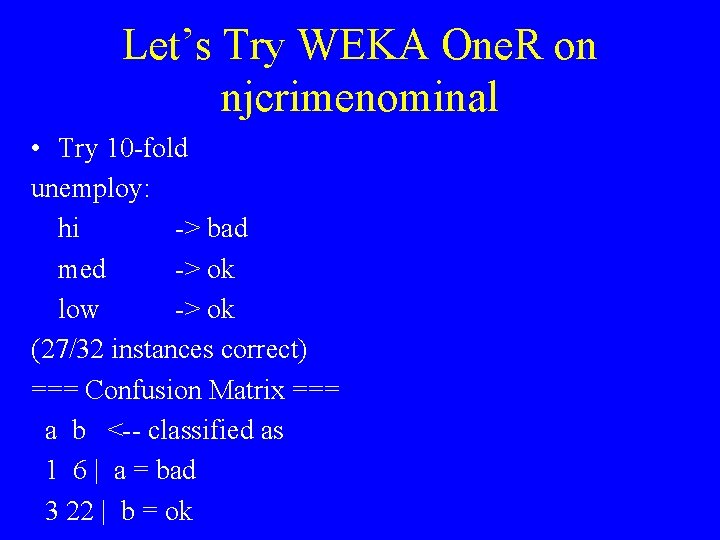

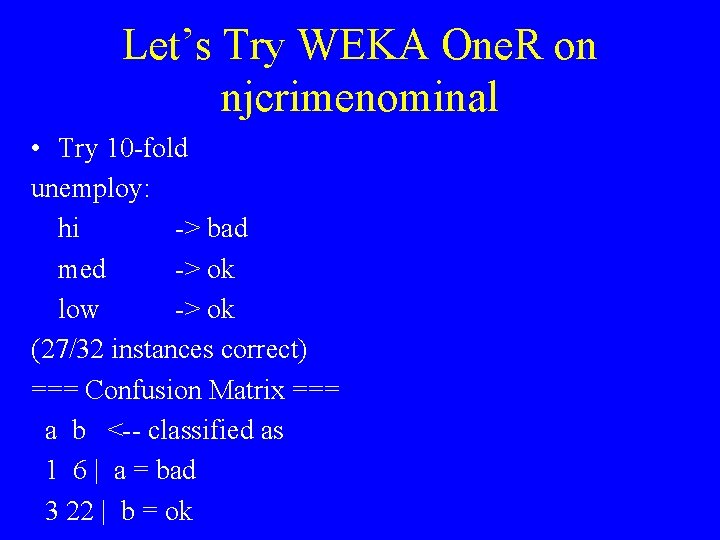

Let’s Try WEKA OneR on njcrimenominal

- Try 10-fold

unemploy:
hi -> bad
med -> ok
low -> ok
(27/32 instances correct)

=== Confusion Matrix ===
a b <-- classified as
1 6 | a = bad
3 22 | b = ok

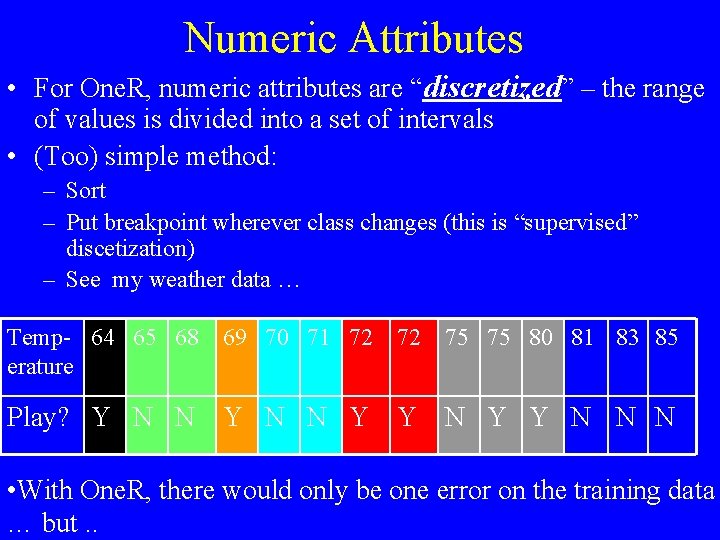

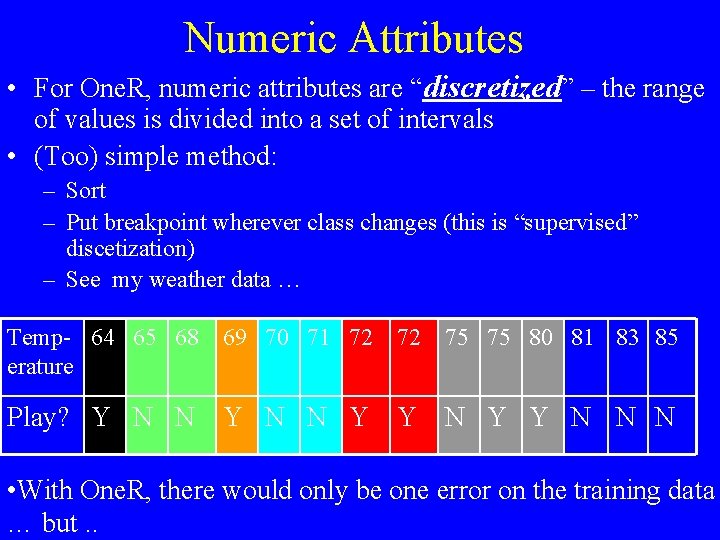

Numeric Attributes

- For OneR, numeric attributes are “discretized”: the range of values is divided into a set of intervals
- (Too) simple method:
  - Sort the values
  - Put a breakpoint wherever the class changes (this is “supervised” discretization)
  - See my weather data: Temperature = 64 65 68 69 70 71 72 72 75 75 80 81 83 85 (the Play? class of each instance is shown on the slide)
- With OneR, there would be only one error on the training data … but …
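The (too) simple method can be sketched as below. It is an illustration only: placing each breakpoint at the midpoint between the two neighbouring values, and refusing to split two equal values, are assumptions of this sketch, and the values and classes in the usage line are hypothetical.

```python
def naive_breakpoints(values, labels):
    """The (too) simple method: a breakpoint wherever the class changes."""
    # Sort by value only; instances with equal values keep their input order.
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    breaks = []
    for (v1, c1), (v2, c2) in zip(pairs, pairs[1:]):
        # A breakpoint cannot separate two equal values, so skip those pairs.
        if c1 != c2 and v1 != v2:
            breaks.append((v1 + v2) / 2)  # midpoint between the two values
    return breaks


# Hypothetical values and classes, for illustration only.
print(naive_breakpoints([64, 65, 68, 69, 70], ["Y", "N", "N", "Y", "Y"]))  # [64.5, 68.5]
```

If the classes alternate, every value ends up in its own interval, which is the overfitting problem discussed next.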

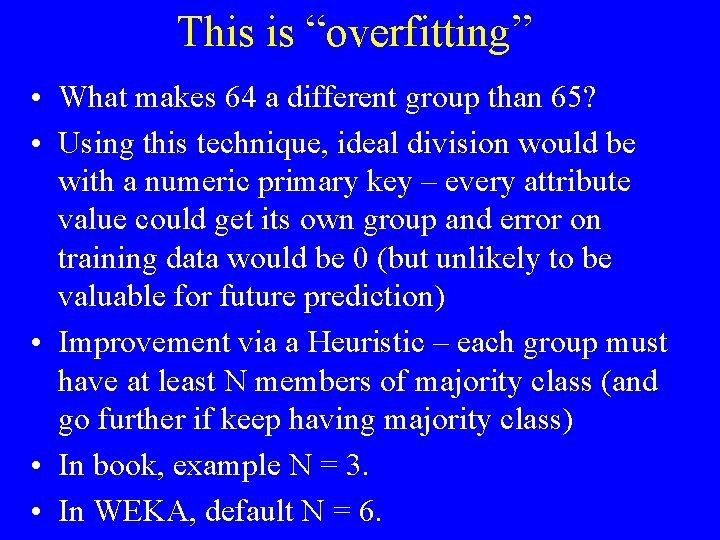

This is “overfitting”

- What makes 64 a different group than 65?
- Using this technique, the ideal division would be on a numeric primary key: every attribute value could get its own group, and the error on the training data would be 0 (but the result would be unlikely to be valuable for future prediction)
- Improvement via a heuristic: each group must have at least N members of its majority class (and the group extends further as long as the following values keep having the majority class)
- In the book, the example uses N = 3
- In WEKA, the default is N = 6
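A sketch of the minimum-bucket heuristic as the slides describe it: a group is closed once its majority class has at least N members, then extended while the next value has that same class. This is not WEKA's code. Two details are assumptions of this sketch: a group is never split between two equal values, and leftover values at the end form the last group even if they never reach N. The function name and test data are hypothetical.

```python
from collections import Counter


def one_r_groups(values, labels, n_min):
    """Group sorted values so each group has >= n_min members of its majority class.

    Returns a list of (lowest value, highest value, majority class).
    """
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    groups, current, i = [], [], 0
    while i < len(pairs):
        current.append(pairs[i])
        i += 1
        majority, count = Counter(c for _, c in current).most_common(1)[0]
        if count >= n_min:
            # Keep going while the next instance has the majority class, or has
            # the same value as the last one (assumption: no split between equals).
            while i < len(pairs) and (pairs[i][1] == majority or pairs[i][0] == current[-1][0]):
                current.append(pairs[i])
                i += 1
            groups.append(current)
            current = []
    if current:
        # Leftover values become the last group, even if they never reach n_min.
        groups.append(current)
    return [(g[0][0], g[-1][0], Counter(c for _, c in g).most_common(1)[0][0]) for g in groups]


# Hypothetical data, for illustration only.
print(one_r_groups(list(range(1, 10)), list("aabab" "bbba"), 3))
```

Here the first group closes when its 3rd “a” arrives at 4, the second absorbs 8 because it continues the majority class “b”, and 9 is left over as a final one-member group.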

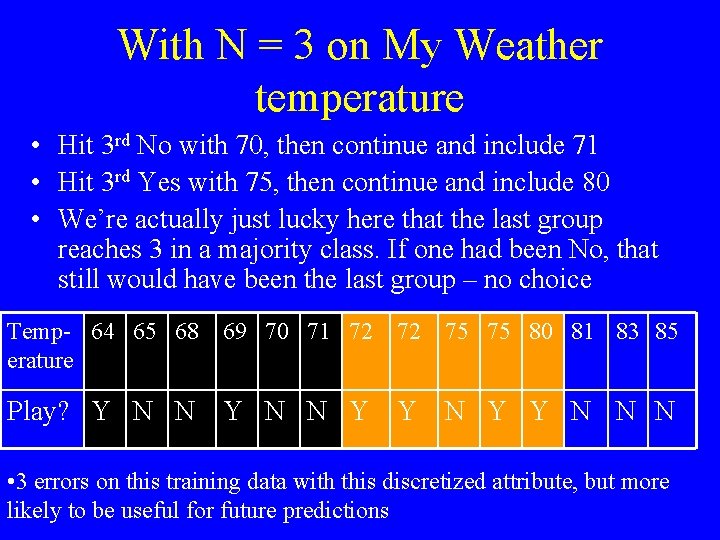

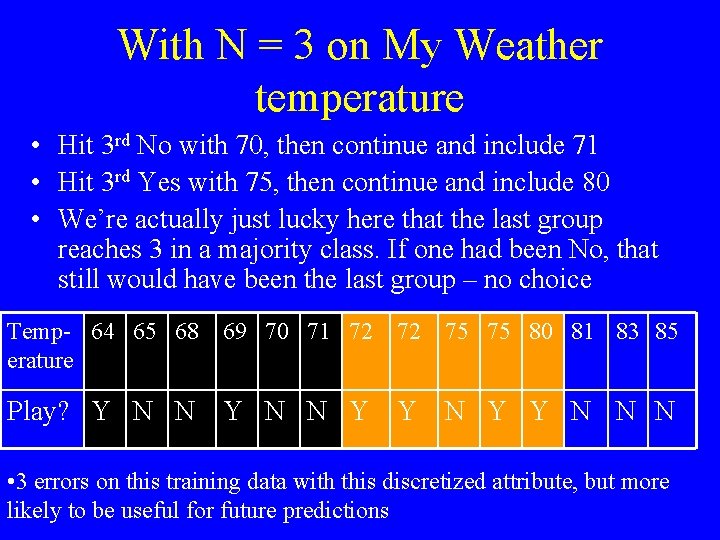

With N = 3 on My Weather temperature

- Hit the 3rd No at 70, then continue and include 71
- Hit the 3rd Yes at 75, then continue and include 80
- We’re actually just lucky here that the last group reaches 3 in a majority class. If one of those values had been in the other class, it still would have been the last group; there are no values left, so there is no choice
- Temperature = 64 65 68 69 70 71 72 72 75 75 80 81 83 85 (Play? values shown on the slide), giving the groups 64–71, 72–80 and 81–85
- 3 errors on this training data with this discretized attribute, but the groups are more likely to be useful for future predictions

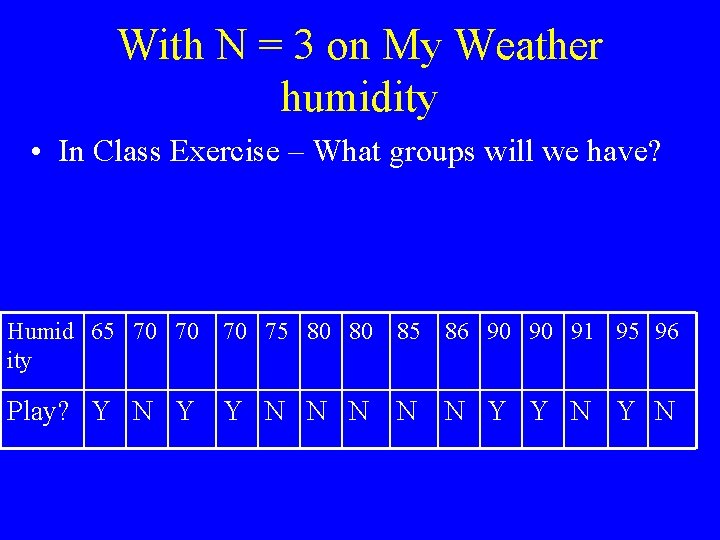

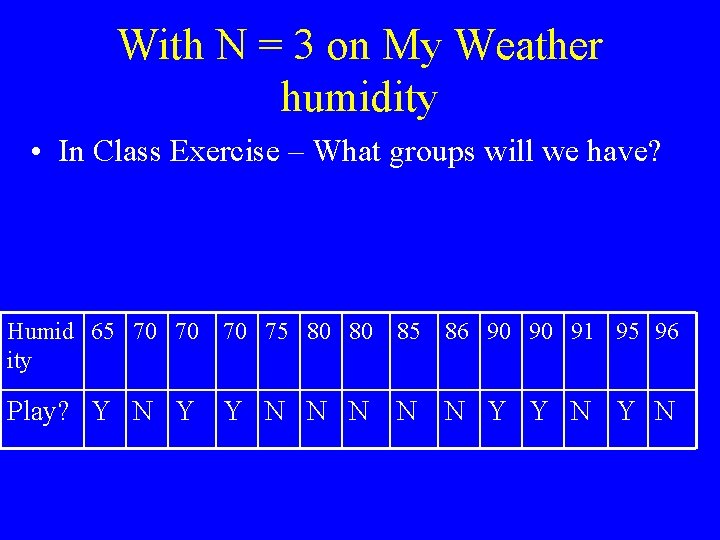

With N = 3 on My Weather humidity

- In-class exercise: what groups will we have?
- Humidity = 65 70 70 70 75 80 80 85 86 90 90 91 95 96 (Play? values shown on the slide)

Let’s run WEKA

- My Weather data
- First with the default options
- Next with N = 3 (double-click the option area; this is WEKA’s option B, minBucketSize)

Another Thing or Two

- Using this method, if two adjacent groups have the same majority class, they can be collapsed into one group
- (This doesn’t happen for temperature or humidity)
- We can’t do anything about missing values; they have to go in their own group
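Collapsing adjacent groups that predict the same class is a single pass over the groups in value order. A minimal sketch, assuming each group is represented as a (lowest value, highest value, majority class) tuple; the function name and sample groups are hypothetical.

```python
def merge_adjacent(groups):
    """Collapse neighbouring (low, high, majority) groups that predict the same class."""
    merged = []
    for low, high, cls in groups:
        if merged and merged[-1][2] == cls:
            # Same prediction as the previous group: stretch that group instead.
            merged[-1] = (merged[-1][0], high, cls)
        else:
            merged.append((low, high, cls))
    return merged


# Hypothetical groups, for illustration only.
print(merge_adjacent([(1, 4, "a"), (5, 8, "a"), (9, 9, "b")]))  # [(1, 8, 'a'), (9, 9, 'b')]
```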

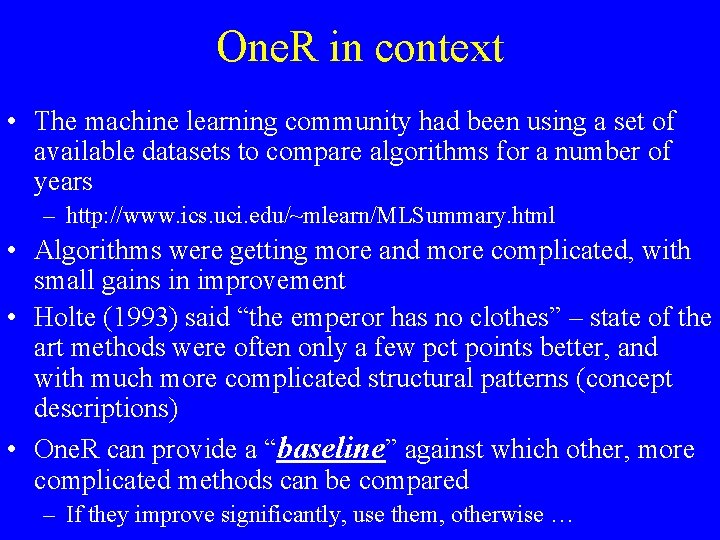

OneR in context

- The machine learning community had been using a set of available datasets to compare algorithms for a number of years
  - http://www.ics.uci.edu/~mlearn/MLSummary.html
- Algorithms were getting more and more complicated, with only small improvements
- Holte (1993) said “the emperor has no clothes”: state-of-the-art methods were often only a few percentage points better, and used much more complicated structural patterns (concept descriptions)
- OneR can provide a “baseline” against which other, more complicated methods can be compared
  - If they improve significantly, use them; otherwise …

Class Exercise

Let’s run WEKA OneR on japanbank

- B option = 3

We can actually discretize the data and save it for future use with WEKA’s Preprocess tab

- Click Choose and select Unsupervised > Attribute > Discretize
- Choose the options:
  - Attribute indices (the numbers of the attributes to be binned, e.g. 3-4)
  - FindNumBins: have WEKA find a good number of groups for this data
  - NumBins = the maximum number of groups to consider
- Click the Apply button
- Click the Save button to save to a permanent file
- Undo if necessary
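For intuition about what an unsupervised discretizer does, here is a sketch of equal-width binning, assuming the filter is used in its default equal-width mode. It is not WEKA's code and does not reproduce FindNumBins. Making intervals closed on the right (a value equal to a cut point goes to the lower bin) is an assumption of this sketch, and `equal_width_bins` is a hypothetical name. The usage line reuses the temperature values from the slides.

```python
def equal_width_bins(values, num_bins):
    """Split the range [min, max] into num_bins intervals of equal width."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / num_bins
    cut_points = [lo + width * k for k in range(1, num_bins)]
    # A value's bin is the number of cut points it lies strictly above, so a
    # value equal to a cut point falls in the lower bin.
    bins = [sum(x > c for c in cut_points) for x in values]
    return cut_points, bins


temperature = [64, 65, 68, 69, 70, 71, 72, 72, 75, 75, 80, 81, 83, 85]
print(equal_width_bins(temperature, 3))
```

Unlike the OneR grouping, the cut points here ignore the class entirely, which is what “unsupervised” means in the menu path.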

End Section 4.1