Data Mining Algorithms K Means Clustering Chapter 4

- Slides: 9

Data Mining – Algorithms: K Means Clustering Chapter 4, Section 4.8

K Means Clustering
• K is the number of clusters
• K must be specified in advance (as an option or parameter to the algorithm)
• Develops “cluster centers”
• Starts with random center points
• Puts each instance into the “closest” cluster, based on Euclidean distance
• Creates new centers from the instances assigned to each cluster
• Refines iteratively until no assignment changes
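As a rough illustration of one refinement step (assign each instance to its closest center, then recompute the centers), here is a minimal Python sketch. The toy `(age, children)` values and the helper names `euclidean`, `closest`, and `mean_center` are made up for this example and do not come from the spreadsheet used in the slides.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length numeric tuples."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def closest(instance, centers):
    """Index of the center nearest to the instance."""
    return min(range(len(centers)), key=lambda i: euclidean(instance, centers[i]))

def mean_center(members):
    """New center: the attribute-wise average of the assigned instances."""
    return tuple(sum(col) / len(members) for col in zip(*members))

# Hypothetical toy data: (age, children) pairs, invented for illustration.
instances = [(25, 0), (30, 1), (52, 3), (58, 2)]
centers = [(20, 0), (60, 3)]  # K = 2 starting centers

# Step 1: put every instance into its closest cluster.
assignment = [closest(p, centers) for p in instances]

# Step 2: create new centers from the instances in each cluster.
new_centers = [
    mean_center([p for p, c in zip(instances, assignment) if c == k])
    for k in range(len(centers))
]

print(assignment)   # [0, 0, 1, 1]
print(new_centers)  # [(27.5, 0.5), (55.0, 2.5)]
```

Repeating these two steps until `assignment` stops changing gives the full algorithm.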

Example
• See bankrawnumeric.KMeans.Version 2.xls

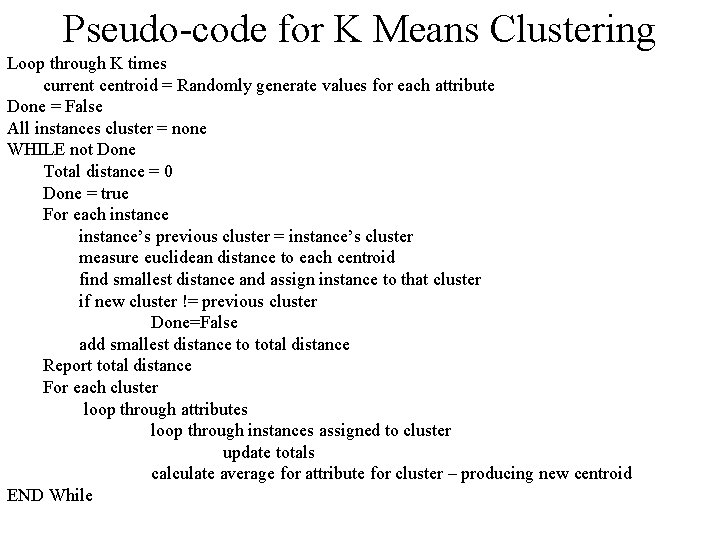

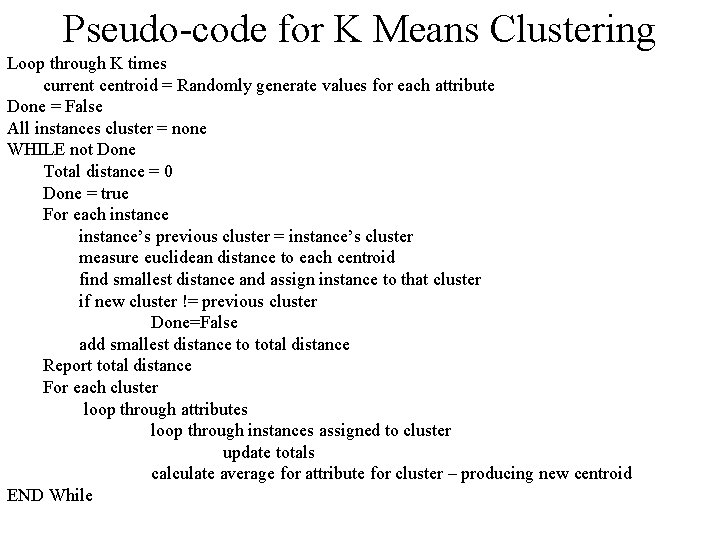

Pseudo-code for K Means Clustering

    Loop through K times
        current centroid = randomly generate values for each attribute
    Done = False
    All instances’ cluster = none
    WHILE not Done
        Total distance = 0
        Done = True
        For each instance
            previous cluster = instance’s cluster
            measure Euclidean distance to each centroid
            find smallest distance and assign instance to that cluster
            if new cluster != previous cluster
                Done = False
            add smallest distance to total distance
        Report total distance
        For each cluster
            loop through attributes
                loop through instances assigned to cluster
                    update totals
                calculate average for attribute for cluster, producing new centroid
    END WHILE
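The pseudo-code above could be translated into Python roughly as follows. This is only a sketch under two assumptions that the slide does not state: the random starting values are drawn from within each attribute's observed range, and a cluster that ends up with no instances keeps its previous centroid. The function name `kmeans` and the `seed` parameter are illustrative.

```python
import math
import random

def euclidean(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def kmeans(instances, k, seed=0):
    """Cluster numeric tuples; returns (centroids, clusters, total_distance)."""
    rng = random.Random(seed)
    columns = list(zip(*instances))
    # Assumption: random values are drawn within each attribute's observed range.
    centroids = [
        [rng.uniform(min(col), max(col)) for col in columns] for _ in range(k)
    ]
    clusters = [None] * len(instances)  # all instances start unassigned
    total_distance = 0.0
    done = False
    while not done:
        total_distance = 0.0
        done = True
        for i, inst in enumerate(instances):
            previous = clusters[i]
            distances = [euclidean(inst, c) for c in centroids]
            smallest = min(distances)
            clusters[i] = distances.index(smallest)
            if clusters[i] != previous:
                done = False  # something moved, so another pass is needed
            total_distance += smallest
        # Recompute each centroid as the attribute-wise average of its members.
        for c in range(k):
            members = [inst for inst, cl in zip(instances, clusters) if cl == c]
            if members:  # Assumption: an empty cluster keeps its old centroid.
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, clusters, total_distance

# Hypothetical two-attribute data, invented for illustration.
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters, total = kmeans(data, k=2, seed=1)
print(clusters, round(total, 3))
```

Because the starting centroids are random, a different `seed` may give a different final clustering, including one where a cluster stays empty.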

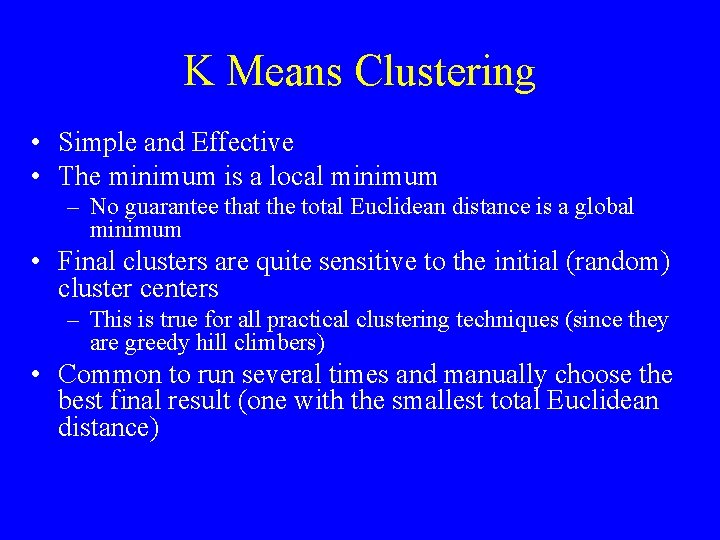

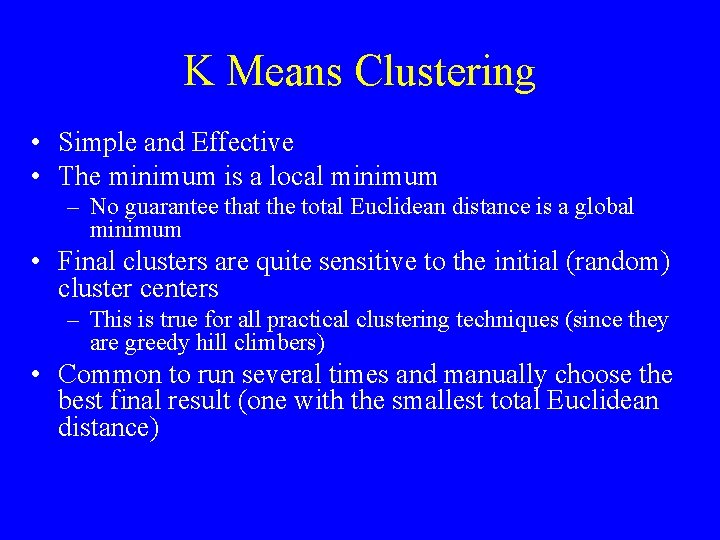

K Means Clustering
• Simple and effective
• The minimum found is a local minimum
  – No guarantee that the total Euclidean distance is a global minimum
• Final clusters are quite sensitive to the initial (random) cluster centers
  – This is true for all practical clustering techniques (since they are greedy hill climbers)
• It is common to run the algorithm several times and manually choose the best final result (the one with the smallest total Euclidean distance)
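The "run several times and keep the best" advice can be sketched in Python as below. Note one swapped-in detail: for brevity this compact version picks K random instances as the starting centers, a common variant, rather than generating random attribute values. The names `kmeans_once` and `best_of`, and the toy data, are illustrative assumptions.

```python
import math
import random

def dist(a, b):
    """Euclidean distance between two equal-length numeric sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def kmeans_once(data, k, rng):
    """One K-means run from random starting centers; returns (total_distance, labels)."""
    # Assumption: starting centers are k instances picked at random.
    centers = [list(p) for p in rng.sample(data, k)]
    labels = None
    while True:
        new_labels = [
            min(range(k), key=lambda c: dist(p, centers[c])) for p in data
        ]
        if new_labels == labels:  # no instance changed cluster: converged
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, l in zip(data, labels) if l == c]
            if members:
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    total = sum(dist(p, centers[l]) for p, l in zip(data, labels))
    return total, labels

def best_of(data, k, runs=10, seed=0):
    """Repeat K-means and keep the run with the smallest total distance."""
    rng = random.Random(seed)
    return min((kmeans_once(data, k, rng) for _ in range(runs)), key=lambda r: r[0])

# Hypothetical data with three loose groups, invented for illustration.
data = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9), (1, 9), (2, 8), (1, 8)]
best_total, best_labels = best_of(data, k=3, runs=10)
print(round(best_total, 3), best_labels)
```

Selecting by total distance automates the manual comparison described above, but it still only returns the best local minimum among the runs tried.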

• Let’s run WEKA on this …

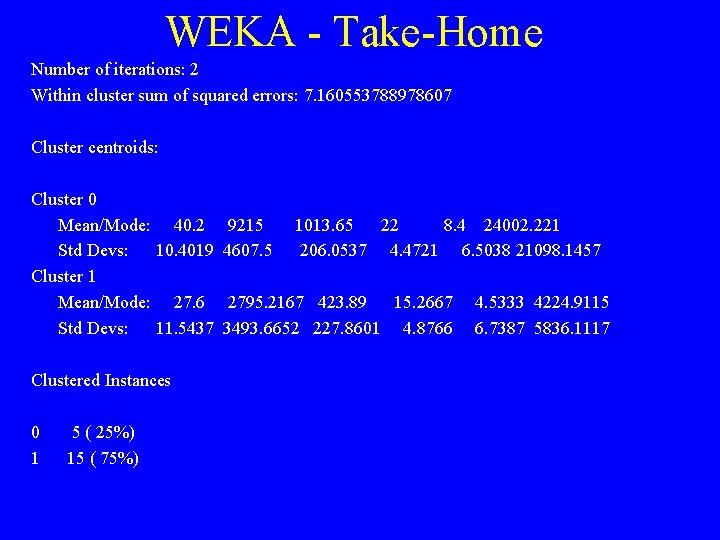

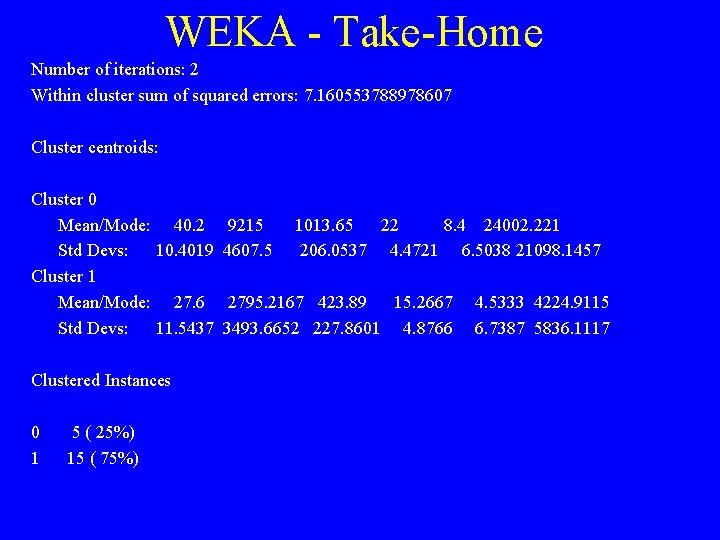

WEKA - Take-Home
Number of iterations: 2
Within cluster sum of squared errors: 7.160553788978607
Cluster centroids:
Cluster 0 Mean/Mode: 40.2 Std Devs: 10.4019
Cluster 1 Mean/Mode: 27.6 Std Devs: 11.5437
Clustered Instances
0  5 (25%)
1  15 (75%)
Remaining centroid values for the other attributes: 9215 4607.5 1013.65 22 8.4 24002.221 206.0537 4.4721 6.5038 21098.1457 2795.2167 423.89 15.2667 3493.6652 227.8601 4.8766 4.5333 4224.9115 6.7387 5836.1117

Numeric Attributes
• Simple K Means is designed for numeric attributes
• Nominal attributes
  – Similarity measurement has to be all or nothing (two values either match or they do not)
  – Centroid uses the mode instead of the mean
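One way to picture the nominal case is the Python sketch below: a nominal attribute adds 0 to the distance when the two values match and 1 when they differ, and the centroid takes the most common value. The helper names `mixed_distance` and `mixed_centroid`, the `nominal` index set, and the sample values are assumptions for illustration. In practice numeric attributes are usually rescaled first so that a 0/1 mismatch is comparable in size; that step is omitted here.

```python
from collections import Counter

def mixed_distance(a, b, nominal):
    """Euclidean-style distance where nominal attributes contribute 0 or 1."""
    total = 0.0
    for i, (x, y) in enumerate(zip(a, b)):
        if i in nominal:
            total += 0.0 if x == y else 1.0  # all or nothing
        else:
            total += (x - y) ** 2
    return total ** 0.5

def mixed_centroid(members, nominal):
    """Mean for numeric attributes, mode (most common value) for nominal ones."""
    centroid = []
    for i, col in enumerate(zip(*members)):
        if i in nominal:
            centroid.append(Counter(col).most_common(1)[0][0])
        else:
            centroid.append(sum(col) / len(col))
    return tuple(centroid)

# Hypothetical instances: (age, region); attribute index 1 is nominal.
members = [(30, "town"), (40, "rural"), (50, "town")]
centroid = mixed_centroid(members, nominal={1})

print(centroid)                                    # (40.0, 'town')
print(mixed_distance(members[1], centroid, {1}))   # 1.0
```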

End Section 4.8