
Data Mining: Algorithms and Principles
Data Mining and Collaborative Filtering
©Jiawei Han, Department of Computer Science, University of Illinois at Urbana-Champaign (www.cs.uiuc.edu/~hanj)
Additional contributors: Amomen (Spring 2004)
12/24/2021, Data Mining: Principles and Algorithms

Outline
- Motivation
- Systems in Action
- A Conceptual Framework
- User-User Methods
- Item-Item Methods
- Recent Advances and Open Problems

Motivation
- User perspective: there are lots of online products, books, movies, etc. "Reduce my choices… please…"
- Manager perspective: "If I have 3 million customers on the web, I should have 3 million stores on the web." (CEO of Amazon.com [SCH 01])

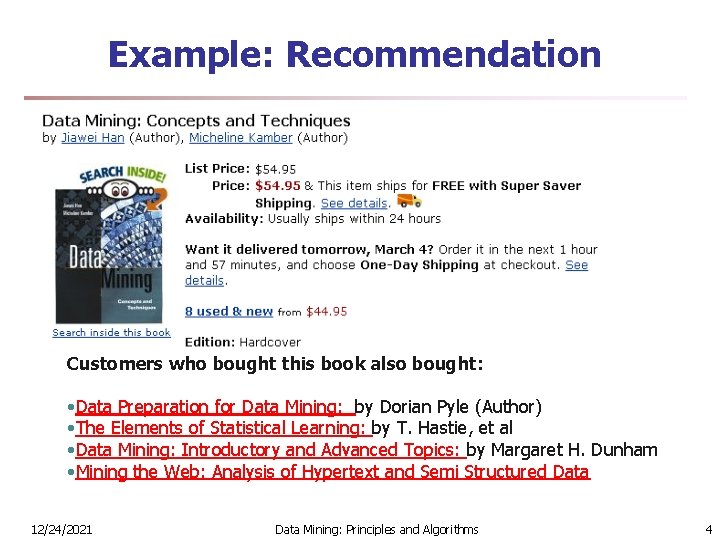

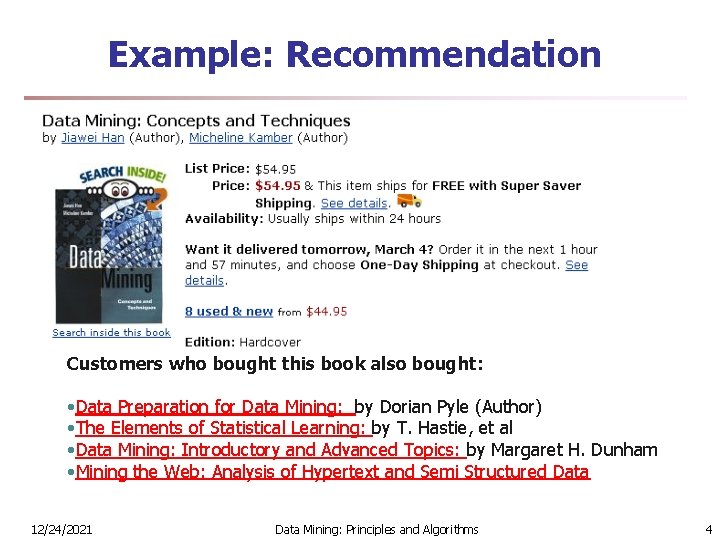

Example: Recommendation
Customers who bought this book also bought:
- Data Preparation for Data Mining, by Dorian Pyle
- The Elements of Statistical Learning, by T. Hastie, et al.
- Data Mining: Introductory and Advanced Topics, by Margaret H. Dunham
- Mining the Web: Analysis of Hypertext and Semi Structured Data

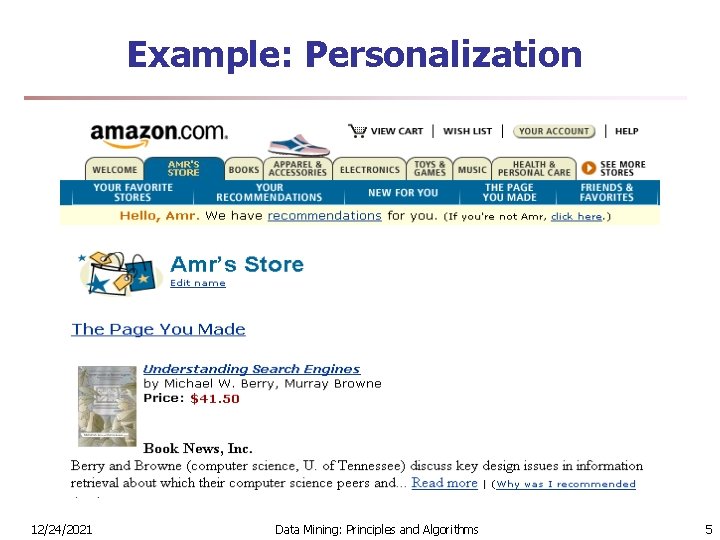

Example: Personalization

Other Examples
- MovieLens: movies
- Moviecritic: movies again
- My launch: music
- Gustos starrater: web pages
- Jester: jokes
- TV Recommender: TV shows
- Suggest 1.0: different products
- And much more…

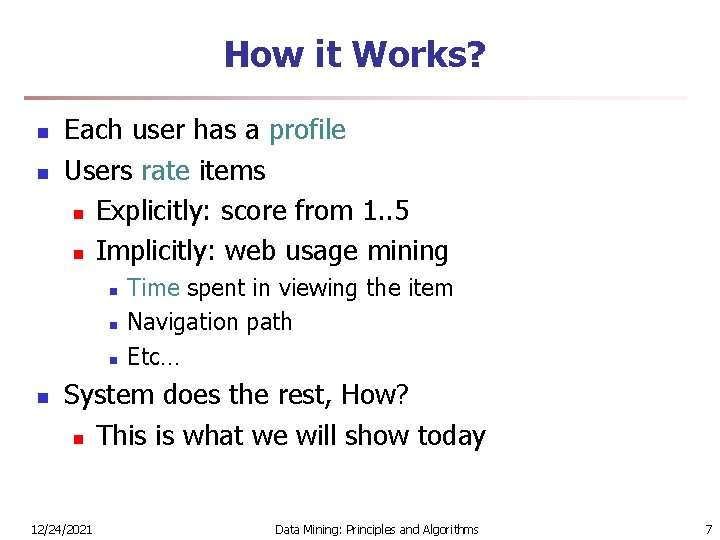

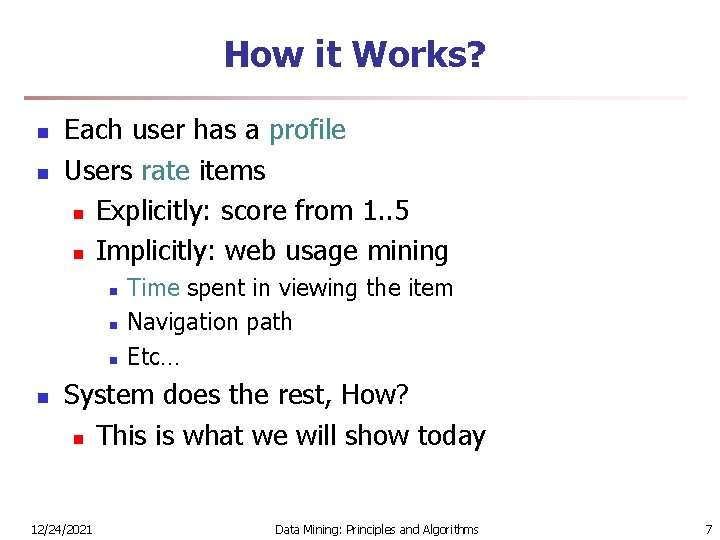

How Does It Work?
- Each user has a profile.
- Users rate items:
  - explicitly: a score from 1 to 5;
  - implicitly: through web usage mining (time spent viewing the item, navigation path, etc.).
- The system does the rest. How? That is what we will show today.

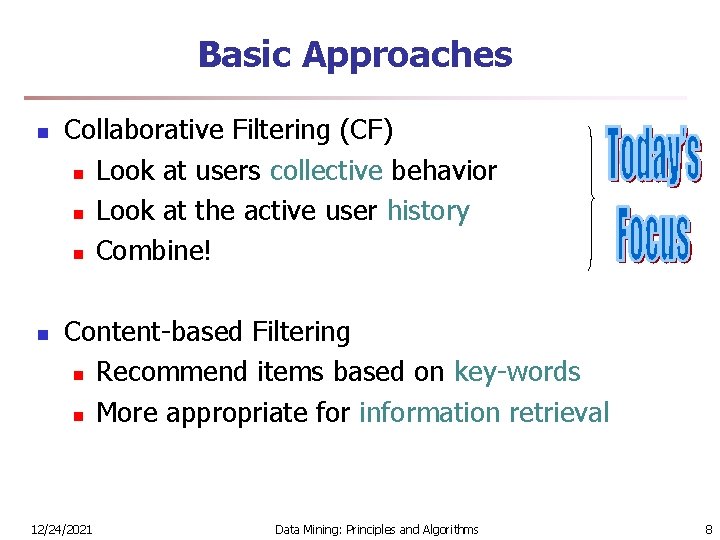

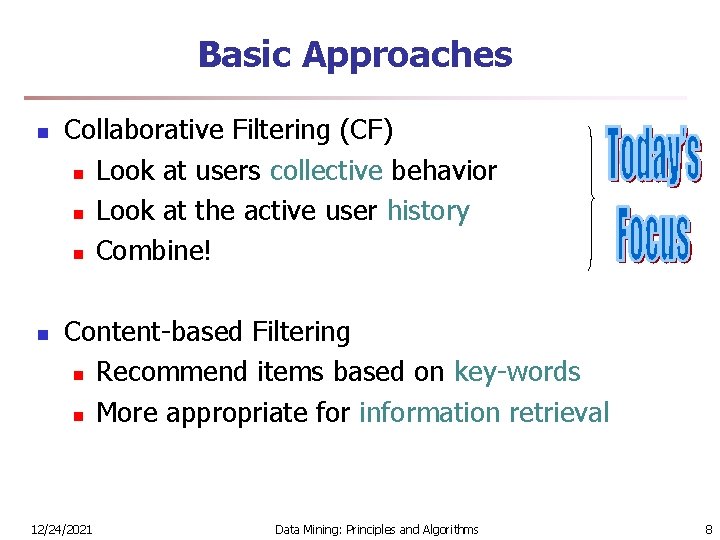

Basic Approaches
- Collaborative Filtering (CF):
  - look at users' collective behavior;
  - look at the active user's history;
  - combine!
- Content-based Filtering:
  - recommend items based on keywords;
  - more appropriate for information retrieval.

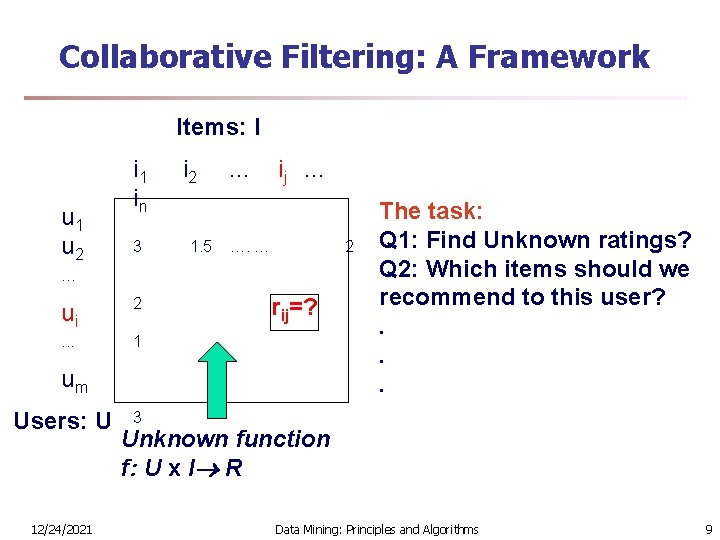

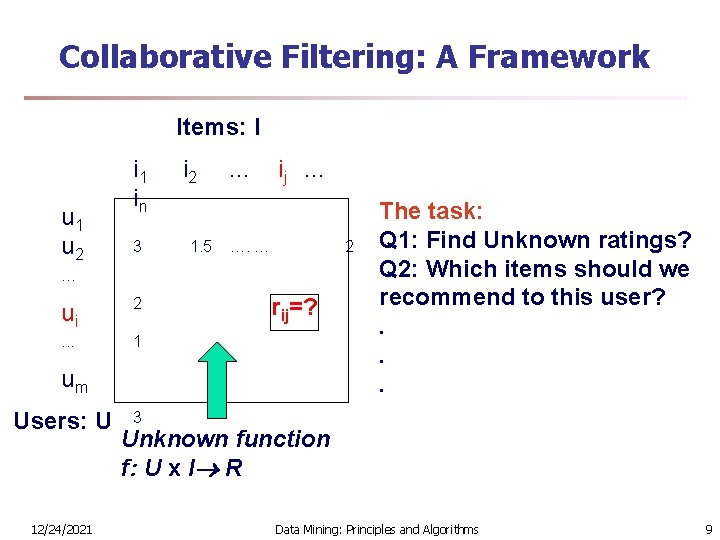

Collaborative Filtering: A Framework
- Users U = {u1, …, um}; items I = {i1, …, in}.
- (Figure: the user-item rating matrix, with a few known ratings such as 3, 1.5, 2, and 1, and an unknown entry r_ij = ?.)
- Unknown function $f: U \times I \rightarrow R$ mapping each user-item pair to a rating.
- The task:
  - Q1: Find the unknown ratings.
  - Q2: Which items should we recommend to this user?

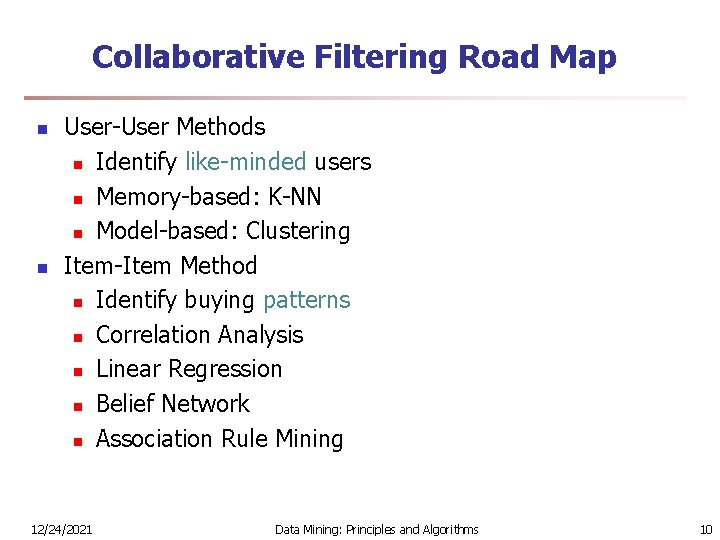

Collaborative Filtering Road Map
- User-User Methods
  - Identify like-minded users
  - Memory-based: K-NN
  - Model-based: Clustering
- Item-Item Methods
  - Identify buying patterns
  - Correlation Analysis
  - Linear Regression
  - Belief Network
  - Association Rule Mining

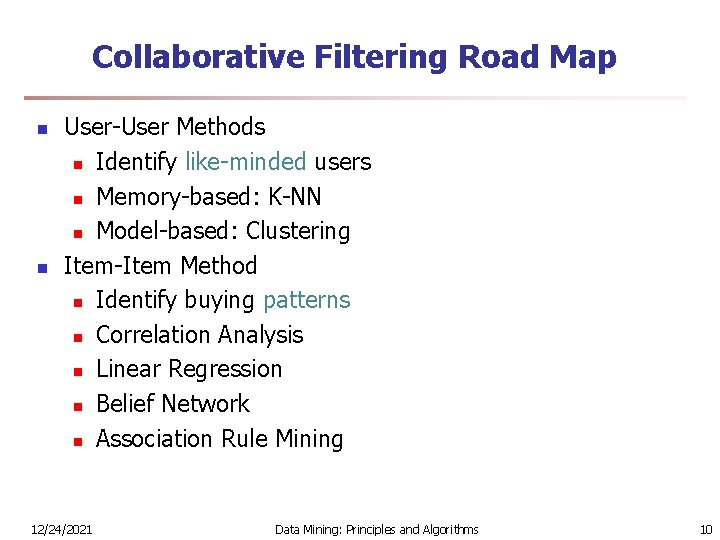

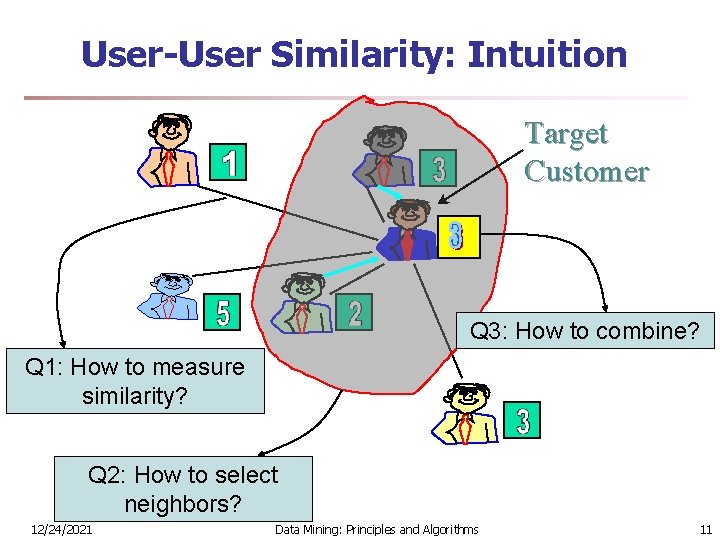

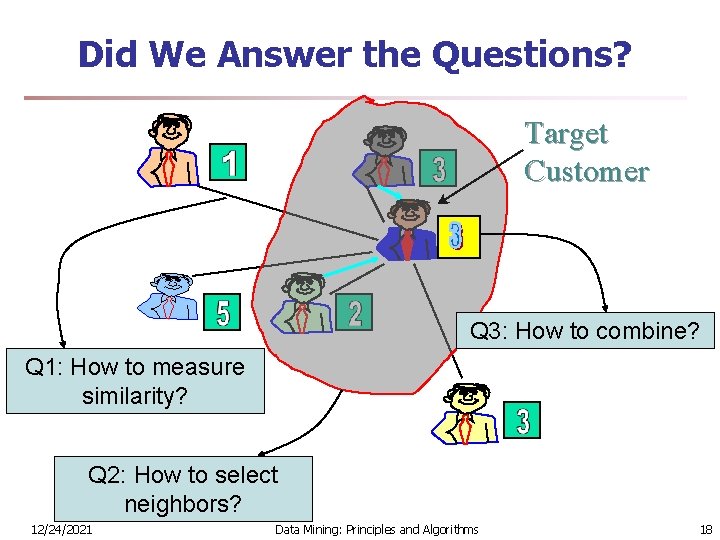

User-User Similarity: Intuition
For a target customer:
- Q1: How to measure similarity?
- Q2: How to select neighbors?
- Q3: How to combine?

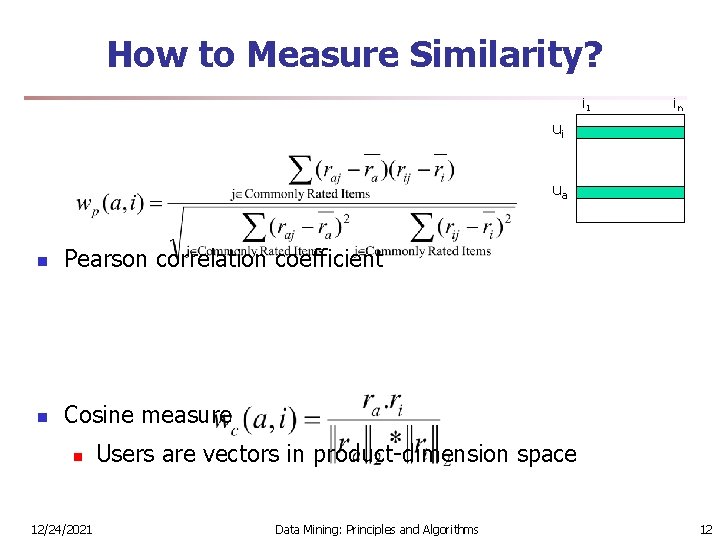

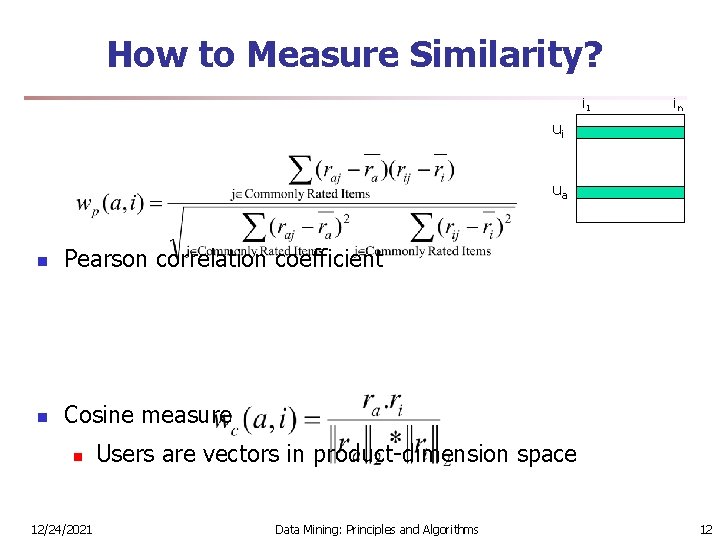

How to Measure Similarity?
- Users are vectors (u_i, u_a) in product-dimension space (items i1 … in).
- Pearson correlation coefficient
- Cosine measure
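
A minimal sketch of the two measures, assuming each user is stored as a {item: rating} dict. Computing the Pearson means over co-rated items only, and treating unrated items as 0 in the cosine norms, are choices made here; other variants exist.

```python
from math import sqrt

def co_rated(ra, ri):
    """Items rated by both users."""
    return set(ra) & set(ri)

def pearson(ra, ri):
    """Pearson correlation over the items both users rated."""
    common = co_rated(ra, ri)
    if len(common) < 2:
        return 0.0
    ma = sum(ra[j] for j in common) / len(common)
    mi = sum(ri[j] for j in common) / len(common)
    num = sum((ra[j] - ma) * (ri[j] - mi) for j in common)
    den = sqrt(sum((ra[j] - ma) ** 2 for j in common) *
               sum((ri[j] - mi) ** 2 for j in common))
    return num / den if den else 0.0

def cosine(ra, ri):
    """Cosine of the angle between the two rating vectors (missing = 0)."""
    num = sum(ra[j] * ri[j] for j in co_rated(ra, ri))
    den = sqrt(sum(v * v for v in ra.values())) * sqrt(sum(v * v for v in ri.values()))
    return num / den if den else 0.0

alice = {"i1": 5, "i2": 3, "i3": 4}
bob = {"i1": 4, "i2": 2, "i3": 3, "i4": 1}
print(pearson(alice, bob), cosine(alice, bob))
```

Bob rates every shared item one point lower than Alice, so Pearson sees a perfect correlation, while cosine is pulled below 1 by the rating offset and by Bob's extra item.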

Nearest Neighbor Approaches [SAR 00 a]
- Offline phase: do nothing… just store transactions.
- Online phase: identify users highly similar to the active one, either
  - the best K ones, or
  - all users whose similarity measure is greater than a threshold.
- Prediction: user a's estimated rating = user a's neutral (mean) rating + user a's estimated deviation, which is built from each neighbor i's deviation from their own mean.
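
A sketch of the prediction step, assuming the usual mean-centered form $p_{aj} = \bar r_a + \frac{\sum_i w_{ai}(r_{ij} - \bar r_i)}{\sum_i |w_{ai}|}$, where the sum runs over the selected neighbors i who rated j and $w_{ai}$ is their Pearson weight. The parameters `k` and `threshold` correspond to the two neighbor-selection rules above; their names are hypothetical.

```python
from math import sqrt

def mean(r):
    return sum(r.values()) / len(r)

def pearson(ra, ri):
    common = set(ra) & set(ri)
    if len(common) < 2:
        return 0.0
    ma = sum(ra[j] for j in common) / len(common)
    mi = sum(ri[j] for j in common) / len(common)
    num = sum((ra[j] - ma) * (ri[j] - mi) for j in common)
    den = sqrt(sum((ra[j] - ma) ** 2 for j in common) *
               sum((ri[j] - mi) ** 2 for j in common))
    return num / den if den else 0.0

def predict(ratings, a, j, k=None, threshold=None):
    """Predict user a's rating of item j from neighbours who rated j."""
    cands = [(pearson(ratings[a], ratings[i]), i)
             for i in ratings if i != a and j in ratings[i]]
    if threshold is not None:          # "all with a measure > threshold"
        cands = [c for c in cands if c[0] > threshold]
    cands.sort(reverse=True)
    if k is not None:                  # "best K ones"
        cands = cands[:k]
    base = mean(ratings[a])            # user a's neutral rating
    norm = sum(abs(w) for w, _ in cands)
    if norm == 0:
        return base
    dev = sum(w * (ratings[i][j] - mean(ratings[i])) for w, i in cands)
    return base + dev / norm           # neutral + estimated deviation

ratings = {
    "a": {"i1": 5, "i2": 3, "i3": 4},
    "b": {"i1": 4, "i2": 2, "i3": 3, "j": 4},
    "c": {"i1": 1, "i2": 5, "i3": 3, "j": 3},
}
print(predict(ratings, "a", "j"), predict(ratings, "a", "j", k=1))
```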

![Horting Method AGG 99 n n KNN is not transitive Horting takes advantage of Horting Method [AGG 99] n n K-NN is not transitive Horting takes advantage of](https://slidetodoc.com/presentation_image_h2/2bc34c96ac043ae4ff3c61e1aa905d6a/image-14.jpg)

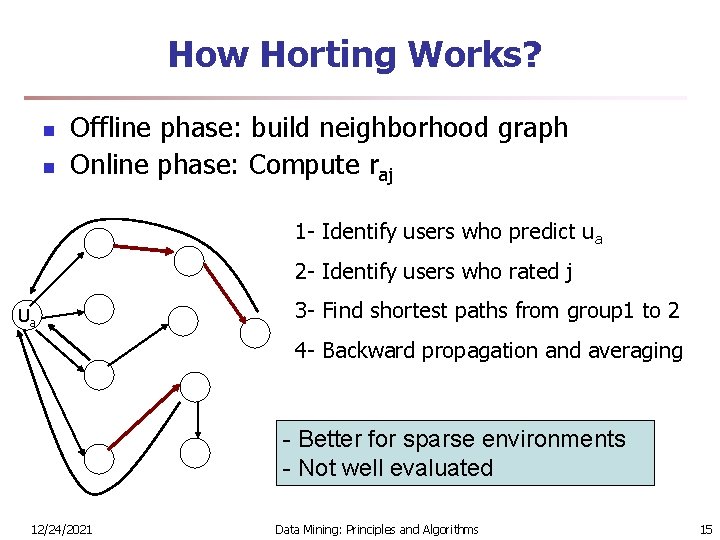

Horting Method [AGG 99]
- K-NN is not transitive; horting takes advantage of transitivity.
- It uses a new similarity measure, predictability. User i predicts user a if:
  - they have rated sufficiently many common items, and
  - there is an error-bounded linear transformation from user i's ratings to user a's.
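
A minimal sketch of the predictability test, assuming ratings stored as {item: rating} dicts. The thresholds `min_common` and `max_err` are hypothetical, and the unrestricted least-squares fit with a mean-absolute-error bound is a simplification chosen here; [AGG 99] defines its own family of transformations and error measure.

```python
def fit_linear(xs, ys):
    """Least-squares fit ys ~= s * xs + t."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return 0.0, my
    s = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return s, my - s * mx

def predicts(ri, ra, min_common=3, max_err=0.5):
    """Return (s, t) if user i predicts user a (r_a ~= s*r_i + t), else None."""
    common = sorted(set(ri) & set(ra))
    if len(common) < min_common:          # not enough co-rated items
        return None
    xs = [ri[j] for j in common]
    ys = [ra[j] for j in common]
    s, t = fit_linear(xs, ys)
    err = sum(abs(s * x + t - y) for x, y in zip(xs, ys)) / len(common)
    return (s, t) if err <= max_err else None   # error-bounded?

print(predicts({"x": 1, "y": 2, "z": 3}, {"x": 5, "y": 4, "z": 3}))
```

The two users above rate in opposite directions, yet one predicts the other exactly via $r_a = -r_i + 6$; that is the kind of relationship plain correlation-based K-NN does not exploit directly.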

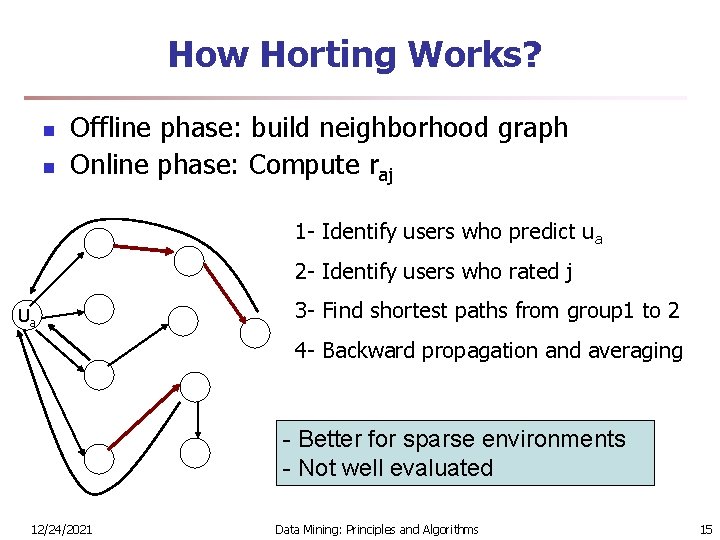

How Does Horting Work?
- Offline phase: build the neighborhood graph.
- Online phase, to compute r_aj:
  1. Identify users who predict u_a.
  2. Identify users who rated j.
  3. Find shortest paths from group 1 to group 2.
  4. Propagate backward along the paths and average.
- Better for sparse environments.
- Not well evaluated.
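
A sketch of the online phase, assuming a hypothetical graph layout `graph[u] = [(v, s, t), ...]` meaning "v predicts u via r_u ≈ s·r_v + t". Breadth-first search gives the shortest paths; each endpoint's rating of j is mapped back along its path and the results are averaged. Keeping only one path per endpoint is a simplification made here.

```python
from collections import deque

def horting_predict(graph, ratings, a, j):
    """Estimate r_aj by composing linear maps along shortest predictor paths."""
    parent = {a: None}
    frontier = deque([a])
    found = []
    while frontier and not found:          # expand one BFS level at a time
        level = list(frontier)
        frontier.clear()
        for u in level:
            for v, s, t in graph.get(u, []):
                if v in parent:
                    continue
                parent[v] = (u, s, t)
                if j in ratings.get(v, {}):
                    found.append(v)          # reached a user who rated j
                else:
                    frontier.append(v)
    if not found:
        return None
    preds = []
    for v in found:                          # backward propagation
        r, node = ratings[v][j], v
        while parent[node] is not None:
            u, s, t = parent[node]
            r = s * r + t                    # map node's rating onto u's scale
            node = u
        preds.append(r)
    return sum(preds) / len(preds)

graph = {"a": [("b", 1.0, 1.0)], "b": [("c", 2.0, 0.0)]}
print(horting_predict(graph, {"c": {"j": 2}}, "a", "j"))
```

Here user a has no direct neighbor who rated j, but the chain a ← b ← c still yields an estimate, which is why the method suits sparse data.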

![Clustering BRE 98 n n Offline phase n Build clusters kmean kmedoid etc Online Clustering [BRE 98] n n Offline phase: n Build clusters: k-mean, k-medoid, etc. Online](https://slidetodoc.com/presentation_image_h2/2bc34c96ac043ae4ff3c61e1aa905d6a/image-16.jpg)

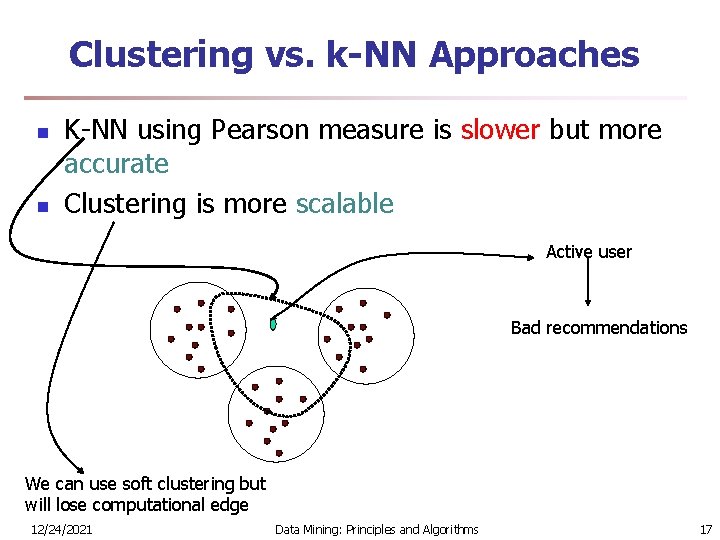

Clustering [BRE 98]
- Offline phase: build clusters (k-means, k-medoids, etc.).
- Online phase: identify the cluster nearest to the active user.
- Prediction:
  - use the center of the cluster (faster), or
  - take a weighted average over cluster members, with weights that depend on the active user (slower but a little more accurate).
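
A minimal sketch of the faster, cluster-center variant, assuming users are dense rating vectors (missing ratings already filled in somehow) and using plain k-means as the clustering step. The active user is matched on the items they actually rated (`known`, a hypothetical parameter name).

```python
import random

def dist2(x, y):
    """Squared Euclidean distance between equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(x, y))

def kmeans(vectors, k, iters=20, seed=0):
    """Offline phase: Lloyd's k-means over user rating vectors."""
    rng = random.Random(seed)
    centers = [list(v) for v in rng.sample(vectors, k)]
    for _ in range(iters):
        groups = [[] for _ in range(k)]
        for v in vectors:
            nearest = min(range(k), key=lambda c: dist2(v, centers[c]))
            groups[nearest].append(v)
        for c, members in enumerate(groups):
            if members:  # keep the old center if a cluster empties
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return centers

def predict_from_center(centers, user_vec, known, item):
    """Online phase: nearest center on the user's known items, read off `item`."""
    def d(center):
        return sum((user_vec[i] - center[i]) ** 2 for i in known)
    return min(centers, key=d)[item]

users = [[5, 5, 1, 1], [5, 4, 1, 2], [1, 1, 5, 5], [2, 1, 5, 4]]
centers = kmeans(users, 2)
print(predict_from_center(centers, [5, 5, 1, 0], [0, 1, 2], 3))
```

Only the k centers are consulted online, which is where the speed (and the loss of accuracy compared with K-NN) comes from.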

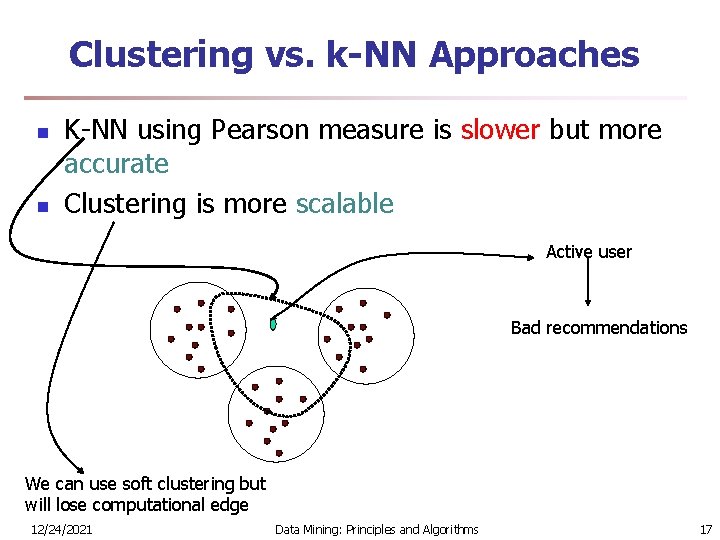

Clustering vs. k-NN Approaches
- K-NN using the Pearson measure is slower but more accurate.
- Clustering is more scalable.
- A hard cluster assignment can give the active user bad recommendations. We can use soft clustering, but we lose the computational edge.

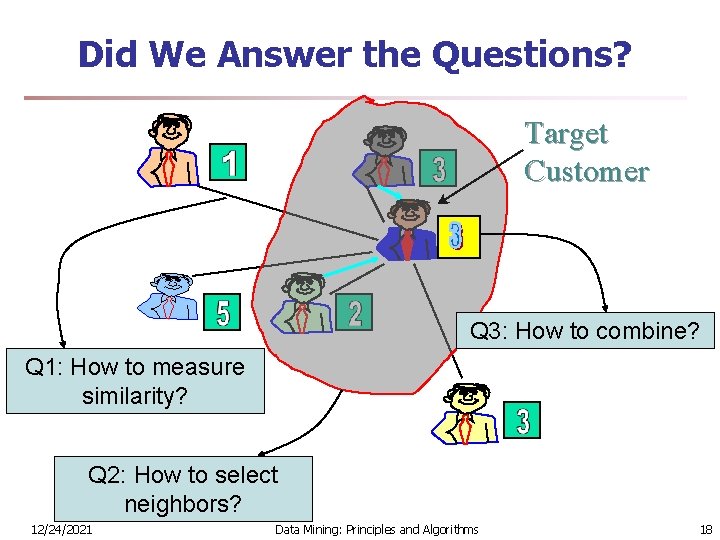

Did We Answer the Questions?
For a target customer:
- Q1: How to measure similarity?
- Q2: How to select neighbors?
- Q3: How to combine?

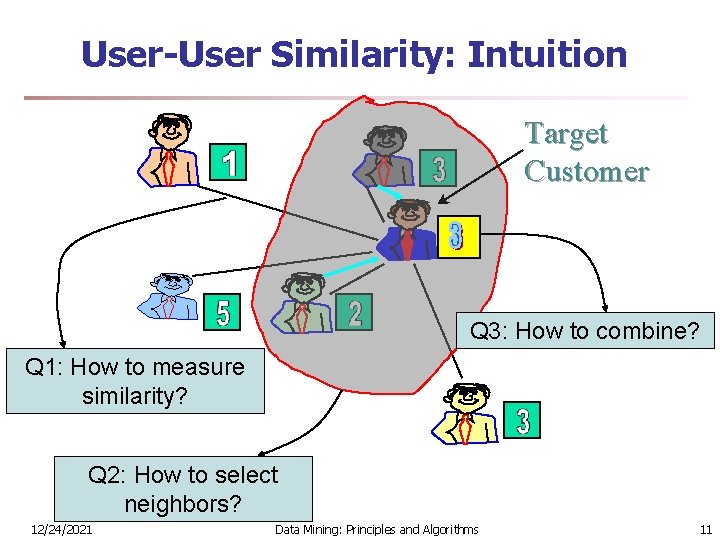

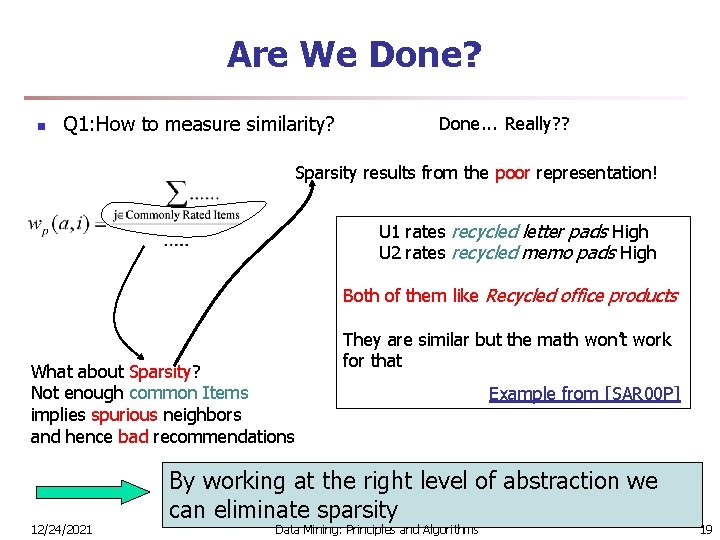

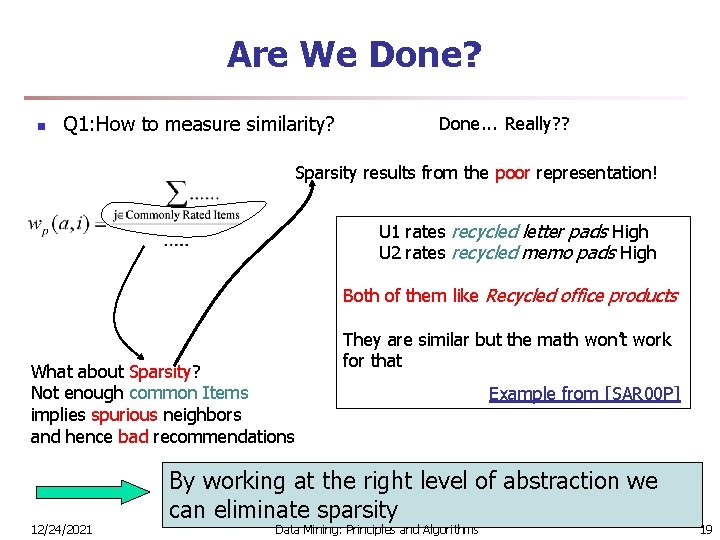

Are We Done?
- Q1: How to measure similarity? Done... really??
- What about sparsity? Not enough common items implies spurious neighbors, and hence bad recommendations.
- Sparsity results from the poor representation! Example from [SAR 00 P]:
  - U1 rates recycled letter pads high.
  - U2 rates recycled memo pads high.
  - Both of them like recycled office products. They are similar, but the math won't show it.
- By working at the right level of abstraction, we can eliminate sparsity.
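
A small illustration of the recycled-pads example, assuming a hypothetical taxonomy that maps both items to "recycled office products". At the item level the two users share no items, so their cosine similarity is 0; after averaging ratings per category, they become identical.

```python
from math import sqrt

def cosine(u, v):
    num = sum(u[k] * v[k] for k in set(u) & set(v))
    den = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return num / den if den else 0.0

def roll_up(ratings, taxonomy):
    """Average a user's item ratings within each category of the taxonomy."""
    sums, counts = {}, {}
    for item, r in ratings.items():
        c = taxonomy[item]
        sums[c] = sums.get(c, 0) + r
        counts[c] = counts.get(c, 0) + 1
    return {c: sums[c] / counts[c] for c in sums}

taxonomy = {  # hypothetical mapping, for illustration only
    "recycled letter pads": "recycled office products",
    "recycled memo pads": "recycled office products",
}
u1 = {"recycled letter pads": 5}
u2 = {"recycled memo pads": 5}
print(cosine(u1, u2), cosine(roll_up(u1, taxonomy), roll_up(u2, taxonomy)))
```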

The Power of Representation [UNG 98]
(Figure with the categories Action, Foreign, and Classic.)
- Q1-B: How can we formalize this intuition?

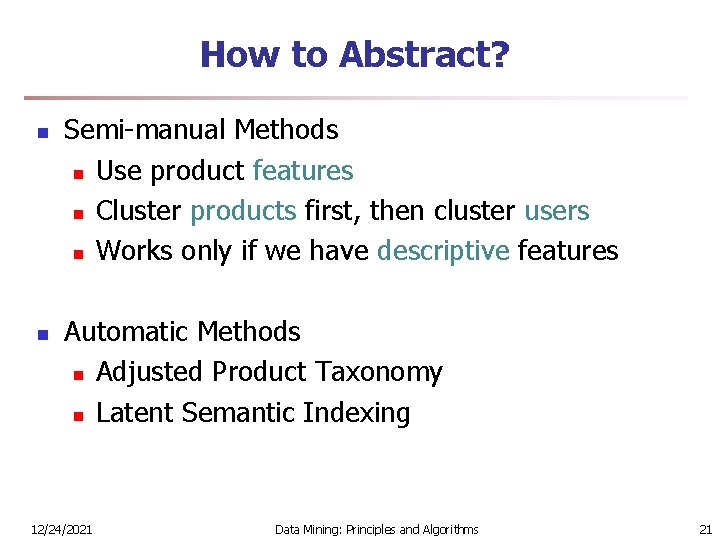

How to Abstract?
- Semi-manual methods:
  - use product features;
  - cluster products first, then cluster users;
  - works only if we have descriptive features.
- Automatic methods:
  - Adjusted Product Taxonomy
  - Latent Semantic Indexing

![Adjusted Product Taxonomy CHO 04 Input product taxonomy Output modified taxonomy Adjusted Product Taxonomy [CHO 04] • Input : product taxonomy • Output: modified taxonomy](https://slidetodoc.com/presentation_image_h2/2bc34c96ac043ae4ff3c61e1aa905d6a/image-22.jpg)

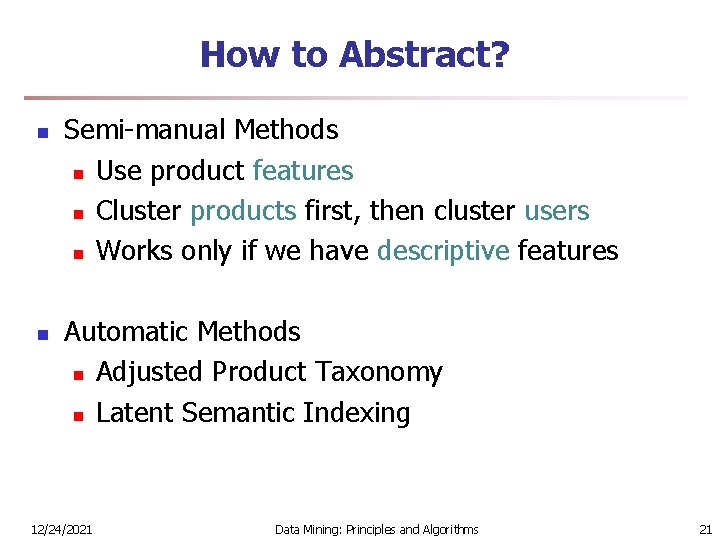

Adjusted Product Taxonomy [CHO 04]
- Input: product taxonomy
- Output: modified taxonomy with an even distribution of transactions across categories

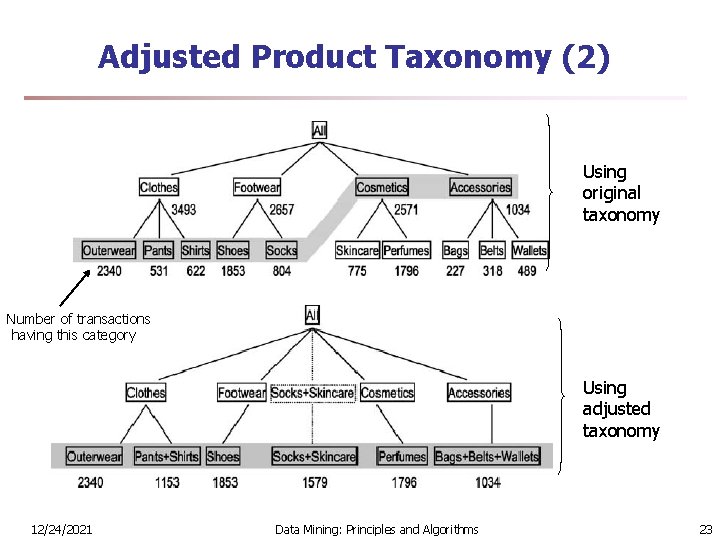

Adjusted Product Taxonomy (2)
(Figure: number of transactions having each category, using the original taxonomy vs. using the adjusted taxonomy.)

Latent Semantic Indexing [SAR 00 b]

$$R_{m \times n} = U_{m \times r}\, S_{r \times r}\, I'_{r \times n}, \qquad R_k = U_k\, S_k\, I_k' \quad (U_k: m \times k,\ S_k: k \times k,\ I_k': k \times n)$$

- The reconstructed matrix $R_k = U_k S_k I_k'$ is the closest rank-k matrix to the original matrix R (in the least-squares, i.e. Frobenius-norm, sense).
- Captures latent associations.
- The reduced space is less noisy.
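
A sketch of rank-k prediction with NumPy's SVD. Missing ratings (`np.nan`) must be filled before factorizing; filling with item averages and centering each user's row are preprocessing choices assumed here, not details taken from the slide.

```python
import numpy as np

def lsi_predict(R, k):
    """Rank-k reconstruction of a ratings matrix with np.nan for missing entries."""
    R = np.array(R, dtype=float)
    item_means = np.nanmean(R, axis=0)               # assumed fill: item averages
    filled = np.where(np.isnan(R), item_means, R)
    user_means = filled.mean(axis=1, keepdims=True)  # assumed: center each user
    U, s, Vt = np.linalg.svd(filled - user_means, full_matrices=False)
    Rk = U[:, :k] @ np.diag(s[:k]) @ Vt[:k, :]       # U_k S_k I_k'
    return Rk + user_means

R = [[5, 3, np.nan],
     [4, np.nan, 1],
     [1, 1, 5],
     [np.nan, 1, 4]]
print(np.round(lsi_predict(R, 1), 2))
```

With k equal to the full rank the filled matrix is reproduced exactly; smaller k keeps only the strongest latent associations, and the reconstructed entries at the missing positions serve as predictions.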

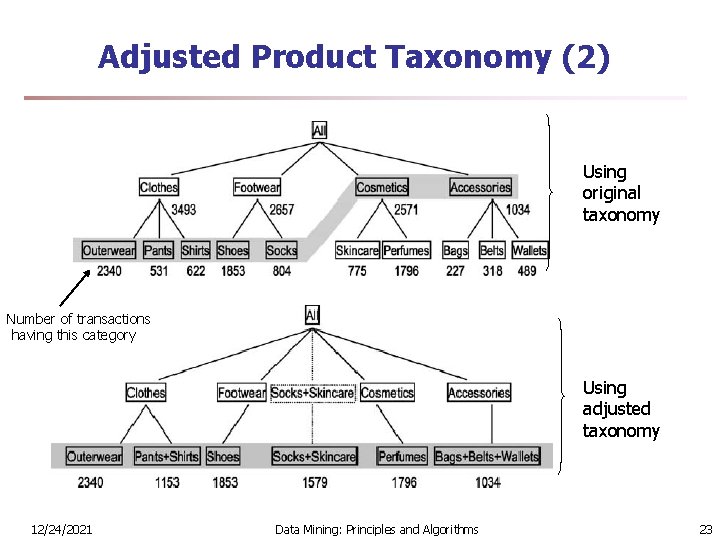

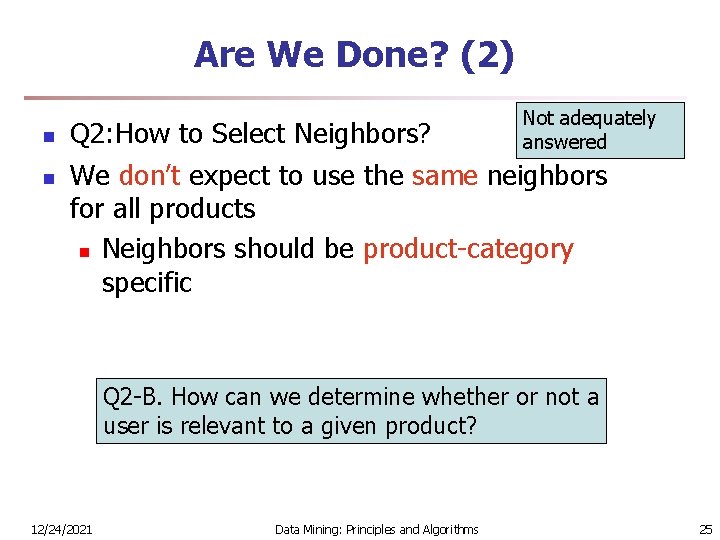

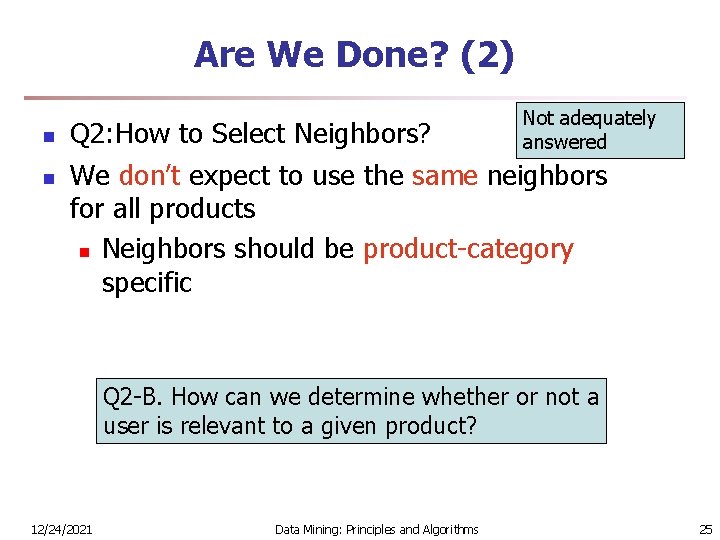

Are We Done? (2)
- Q2: How to select neighbors? Not adequately answered.
- We don't expect to use the same neighbors for all products: neighbors should be product-category specific.
- Q2-B: How can we determine whether or not a user is relevant to a given product?

Selecting Relevant Instances [YU 01]
- Goal: predict the Batman rating.
- Superman and Batman are correlated.
- Titanic and Batman are negatively correlated.
- "Dances with Wolves" has nothing to do with Batman's rating.
- Karen is not a good instance to consider.
- How can we formalize this? Mutual information: $MI(X;Y) = H(X) - H(X \mid Y)$
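
The formula can be computed directly from the ratings of two items by the users who rated both. The sketch below assumes discrete ratings (e.g. like/dislike) and uses base-2 logarithms, so the result is in bits.

```python
from collections import Counter
from math import log2

def entropy(xs):
    """H(X) of an empirical discrete distribution."""
    n = len(xs)
    return -sum(c / n * log2(c / n) for c in Counter(xs).values())

def conditional_entropy(xs, ys):
    """H(X | Y) = sum_y P(y) H(X | Y = y)."""
    n = len(xs)
    h = 0.0
    for y, cy in Counter(ys).items():
        h += cy / n * entropy([x for x, yy in zip(xs, ys) if yy == y])
    return h

def mutual_information(xs, ys):
    """MI(X; Y) = H(X) - H(X | Y)."""
    return entropy(xs) - conditional_entropy(xs, ys)

batman   = ["like", "like", "dislike", "dislike"]
superman = ["like", "like", "dislike", "dislike"]   # moves with Batman
dances   = ["like", "dislike", "like", "dislike"]   # unrelated to Batman
print(mutual_information(batman, superman), mutual_information(batman, dances))
```

Mutual information captures negative correlation too: a Titanic column that is always the opposite of Batman would score as high as Superman.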

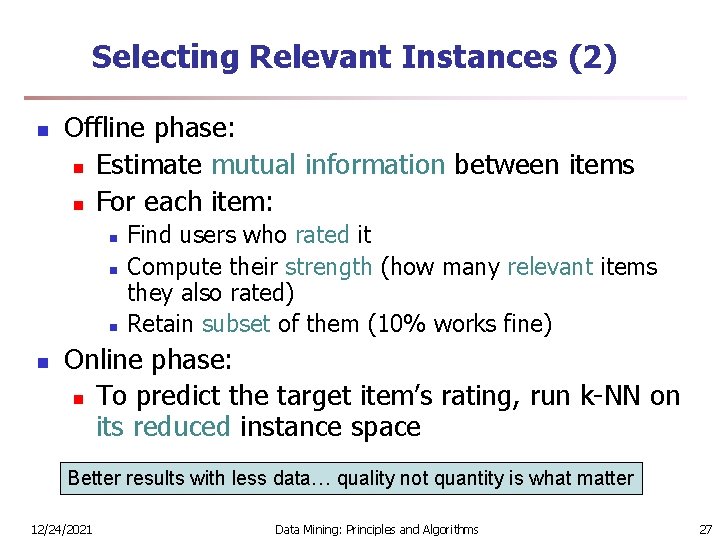

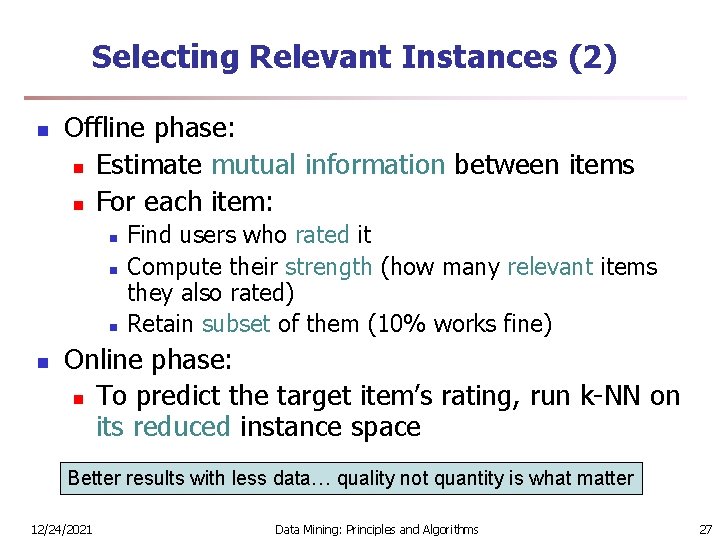

Selecting Relevant Instances (2)
- Offline phase:
  - Estimate the mutual information between items.
  - For each item: find the users who rated it; compute their strength (how many relevant items they also rated); retain a subset of them (10% works fine).
- Online phase: to predict the target item's rating, run k-NN on its reduced instance space.
- Better results with less data: quality, not quantity, is what matters.
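
A sketch of the offline instance selection for one target item. It assumes the mutual information between the target and every other item is already in `relevance`; `n_relevant` (how many top items count as "relevant") is a hypothetical parameter, and `keep=0.1` mirrors the 10% on the slide.

```python
from math import ceil

def select_instances(ratings, target, relevance, n_relevant=2, keep=0.1):
    """Keep the strongest fraction of the users who rated `target`.

    relevance: {item: mutual information with target}, computed offline.
    A user's strength = how many of the most relevant items they also rated.
    """
    relevant = set(sorted(relevance, key=relevance.get, reverse=True)[:n_relevant])
    raters = [u for u, row in ratings.items() if target in row]
    strength = {u: len(relevant & set(ratings[u])) for u in raters}
    raters.sort(key=lambda u: strength[u], reverse=True)
    return raters[:max(1, ceil(keep * len(raters)))]

ratings = {
    "u1": {"T": 5, "a": 4, "b": 3},
    "u2": {"T": 2, "c": 1},
    "u3": {"T": 4, "a": 5},
    "u4": {"a": 3},
}
relevance = {"a": 0.9, "b": 0.5, "c": 0.01}
print(select_instances(ratings, "T", relevance, keep=0.5))
```

At prediction time, k-NN for item T would then search only the returned users instead of everyone who rated T.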

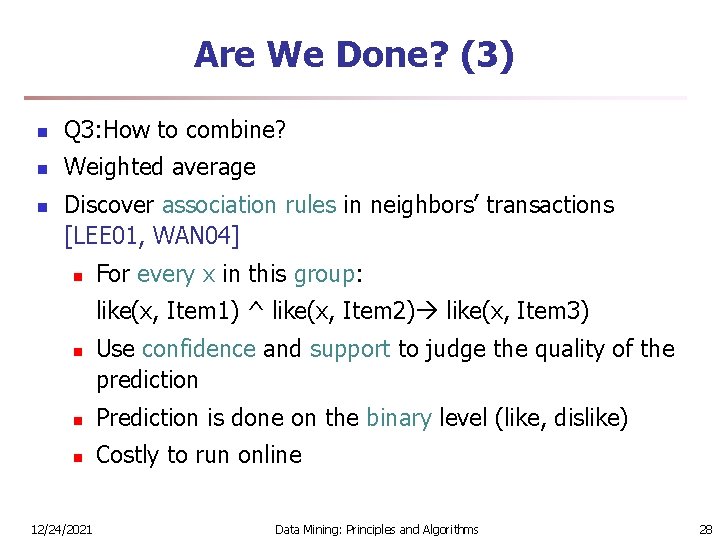

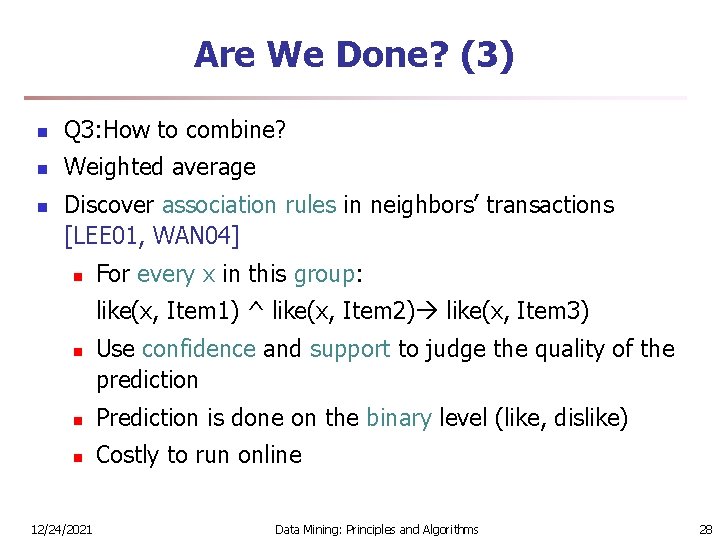

Are We Done? (3)
- Q3: How to combine?
  - Weighted average
  - Discover association rules in the neighbors' transactions [LEE 01, WAN 04]:
    - for every x in this group: like(x, Item1) ∧ like(x, Item2) → like(x, Item3);
    - use confidence and support to judge the quality of the prediction;
    - prediction is done at the binary level (like, dislike);
    - costly to run online.
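
The support and confidence used to judge such a rule can be computed over the neighbors' "liked" sets. A minimal sketch, assuming each neighbor is represented by the set of items they like (binary level):

```python
def support(baskets, itemset):
    """Fraction of baskets (neighbours' liked-item sets) containing `itemset`."""
    return sum(1 for b in baskets if itemset <= b) / len(baskets)

def confidence(baskets, lhs, rhs):
    """conf(lhs -> rhs) = support(lhs | rhs) / support(lhs)."""
    s_lhs = support(baskets, lhs)
    return support(baskets, lhs | rhs) / s_lhs if s_lhs else 0.0

neighbours = [{"Item1", "Item2", "Item3"}, {"Item1", "Item2", "Item3"},
              {"Item1", "Item2"}, {"Item3"}]
lhs, rhs = {"Item1", "Item2"}, {"Item3"}
print(support(neighbours, lhs | rhs))  # 0.5
print(confidence(neighbours, lhs, rhs))
```

Two of the three neighbors who like Item1 and Item2 also like Item3, so the rule has confidence 2/3.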

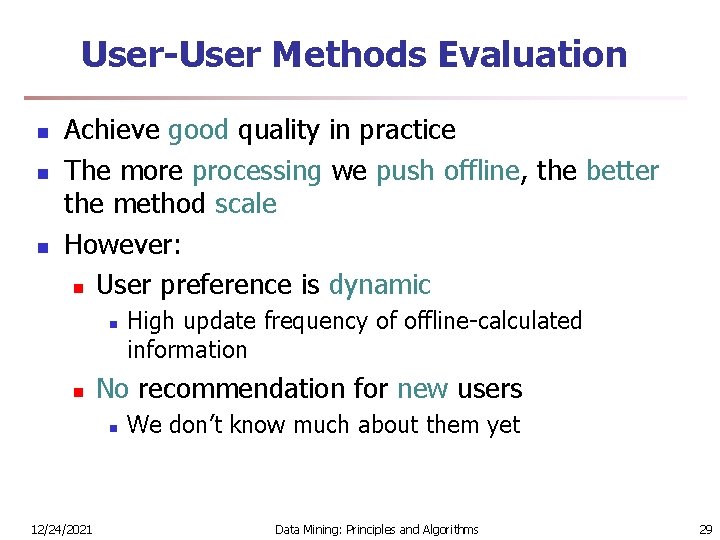

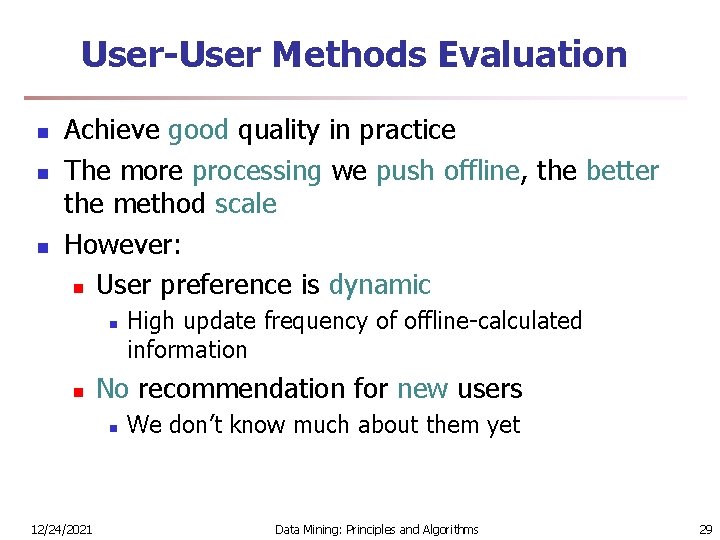

User-User Methods Evaluation
- They achieve good quality in practice.
- The more processing we push offline, the better the method scales.
- However:
  - User preference is dynamic, which requires a high update frequency for offline-calculated information.
  - There are no recommendations for new users: we don't know much about them yet.

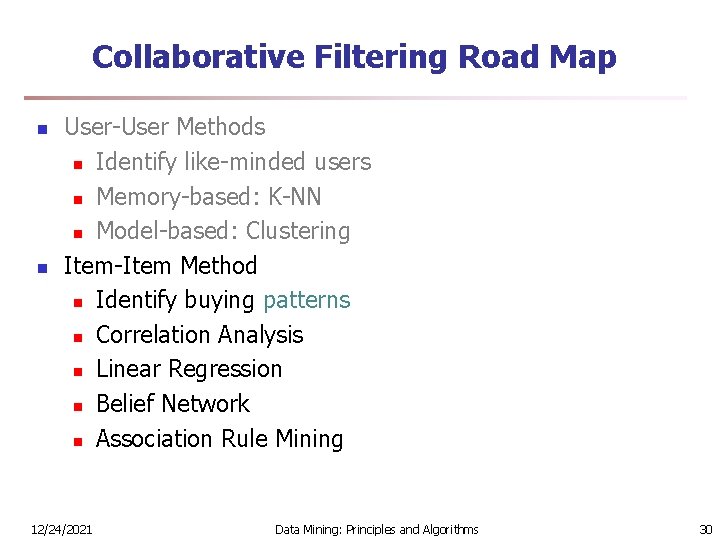

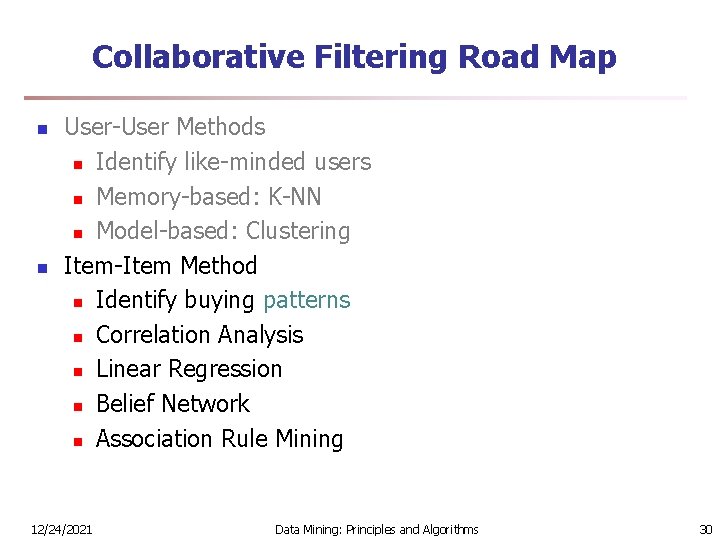

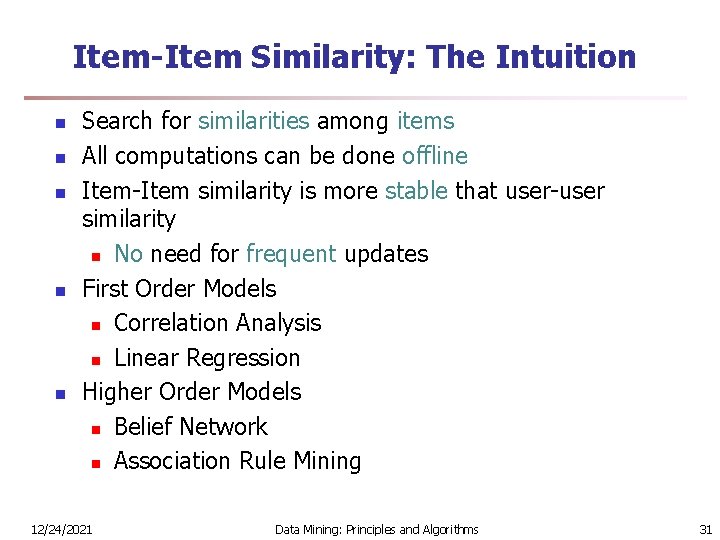

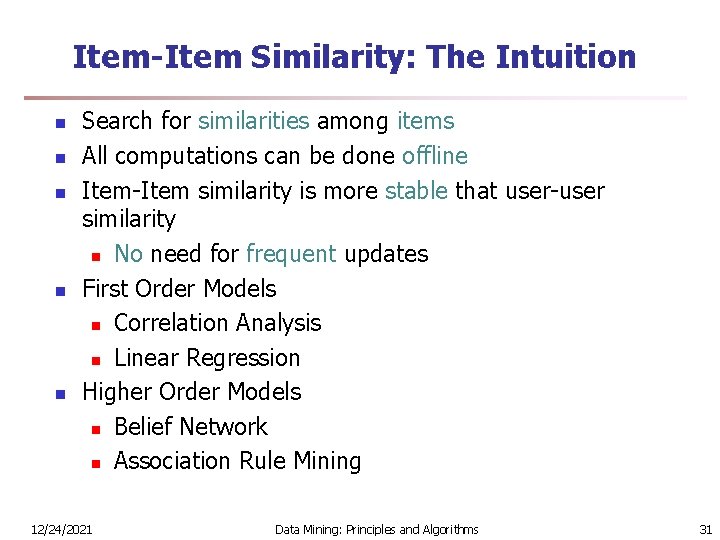

Item-Item Similarity: The Intuition
- Search for similarities among items.
- All computations can be done offline.
- Item-item similarity is more stable than user-user similarity: no need for frequent updates.
- First-order models:
  - Correlation Analysis
  - Linear Regression
- Higher-order models:
  - Belief Network
  - Association Rule Mining

Correlation-based Methods [SAR 01]
- Same as in user-user similarity, but on item vectors.
- Pearson correlation coefficient: look for users who rated both items.
- (Figure: the user-item matrix, comparing item columns i and j over users u1 … um.)

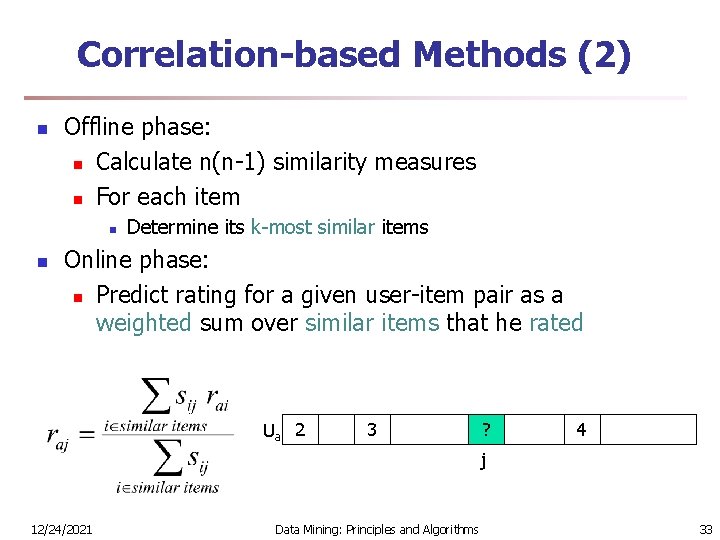

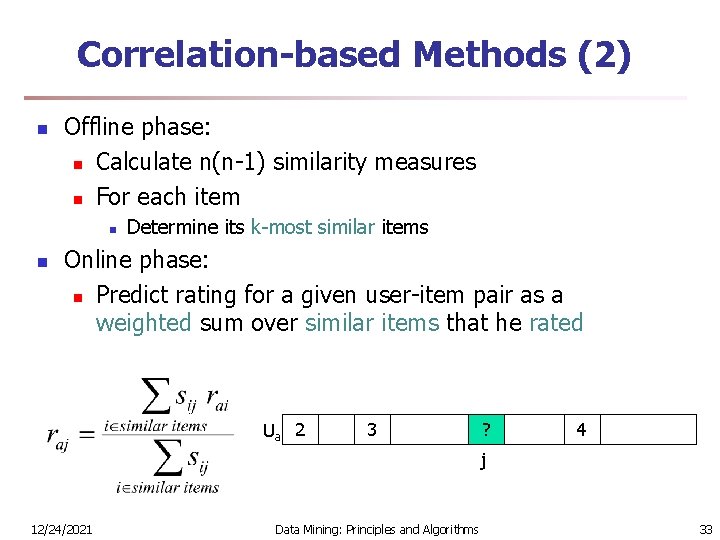

Correlation-based Methods (2)
- Offline phase:
  - calculate n(n-1) similarity measures;
  - for each item, determine its k most similar items.
- Online phase: predict the rating for a given user-item pair as a weighted sum over the similar items that the user rated.
- (Figure: the active user u_a's ratings 2, 3, and 4 on similar items are combined to estimate the unknown rating "?" on item j.)
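
A sketch of both phases, assuming ratings stored as {user: {item: rating}}. Similarity is Pearson over users who rated both items, as on the previous slide. Using only positively correlated neighbors in the weighted sum is a choice made here, so that the normalized sum stays on the rating scale.

```python
from math import sqrt

def item_vectors(ratings):
    """Transpose {user: {item: rating}} into {item: {user: rating}}."""
    items = {}
    for user, row in ratings.items():
        for item, r in row.items():
            items.setdefault(item, {})[user] = r
    return items

def pearson(x, y):
    """Pearson correlation over the users who rated both items."""
    common = set(x) & set(y)
    if len(common) < 2:
        return 0.0
    mx = sum(x[u] for u in common) / len(common)
    my = sum(y[u] for u in common) / len(common)
    num = sum((x[u] - mx) * (y[u] - my) for u in common)
    den = sqrt(sum((x[u] - mx) ** 2 for u in common) *
               sum((y[u] - my) ** 2 for u in common))
    return num / den if den else 0.0

def build_model(ratings, k=2):
    """Offline: keep the k most similar items for every item."""
    items = item_vectors(ratings)
    model = {}
    for j in items:
        sims = [(pearson(items[j], items[i]), i) for i in items if i != j]
        sims.sort(reverse=True)
        model[j] = sims[:k]
    return model

def predict(model, user_ratings, j):
    """Online: weighted sum over the similar items the user rated."""
    pairs = [(s, user_ratings[i]) for s, i in model[j]
             if i in user_ratings and s > 0]  # positive neighbours only
    norm = sum(s for s, _ in pairs)
    return sum(s * r for s, r in pairs) / norm if norm else None

ratings = {
    "u1": {"A": 5, "B": 4, "C": 1, "D": 5},
    "u2": {"A": 4, "B": 3, "C": 2, "D": 3},
    "u3": {"A": 1, "B": 2, "C": 5, "D": 1},
    "ua": {"B": 4, "C": 2, "D": 2},
}
model = build_model(ratings)
print(predict(model, ratings["ua"], "A"))
```

Only `predict` runs online, and it touches at most k stored neighbors per item, which is why this family responds quickly.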

Regression Based Methods [VUC 00]
- Offline phase: fit n(n-1) linear regressions, where F_ij(x) is a linear transformation of a user's rating on item i to their rating on item j.
- Online phase:
  - same as the previous method;
  - the weights are inversely proportional to the regression error rates.
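
A sketch of the regression approach, assuming ratings stored as {user: {item: rating}}. Each ordered pair (i, j) gets its own least-squares line, hence n(n-1) fits. Using mean squared error as the "error rate", the weight 1/(MSE + eps), and the `min_common` and `eps` parameters are assumptions made here.

```python
def fit(xs, ys):
    """Least-squares line ys ~= a*xs + b, plus its mean squared error."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx if sxx else 0.0
    b = my - a * mx
    mse = sum((a * x + b - y) ** 2 for x, y in zip(xs, ys)) / n
    return a, b, mse

def build_regressions(ratings, min_common=2):
    """Offline: fit F_ij for every ordered pair of items with enough co-raters."""
    items = sorted({i for row in ratings.values() for i in row})
    model = {}
    for i in items:
        for j in items:
            if i == j:
                continue
            users = [u for u, row in ratings.items() if i in row and j in row]
            if len(users) >= min_common:
                model[(i, j)] = fit([ratings[u][i] for u in users],
                                    [ratings[u][j] for u in users])
    return model

def predict(model, user_ratings, j, eps=1e-3):
    """Online: average F_ij(r_ai) over rated items i, weighted by 1/error."""
    num = den = 0.0
    for i, x in user_ratings.items():
        if (i, j) in model:
            a, b, mse = model[(i, j)]
            w = 1.0 / (mse + eps)
            num += w * (a * x + b)
            den += w
    return num / den if den else None

ratings = {"u1": {"A": 1, "B": 2}, "u2": {"A": 2, "B": 4},
           "u3": {"A": 3, "B": 6}, "ua": {"A": 4}}
model = build_regressions(ratings)
print(predict(model, ratings["ua"], "B"))
```

Unlike a correlation weight, F_ij also corrects for systematic offsets and scale differences between two items' ratings.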

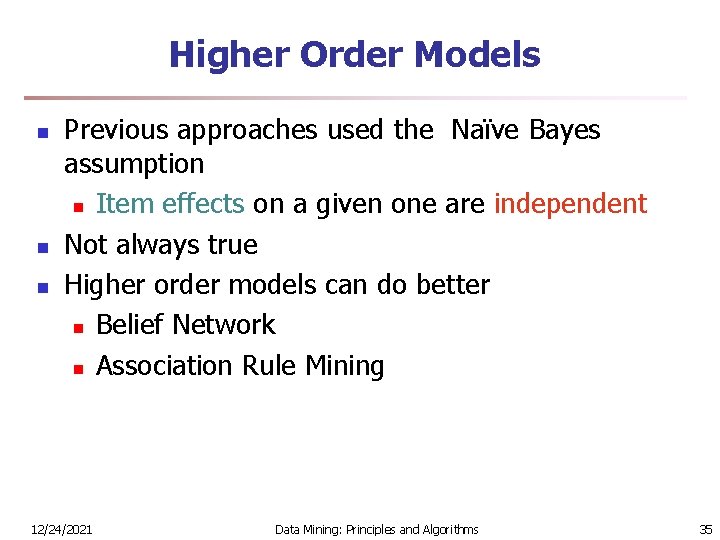

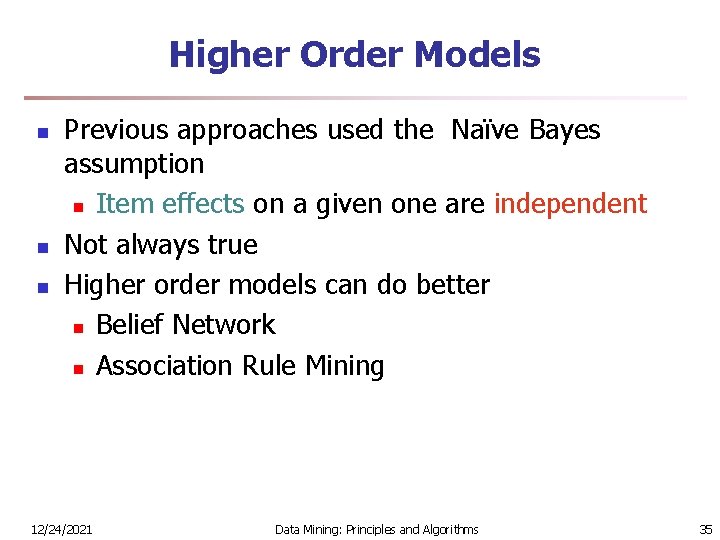

Higher Order Models
- Previous approaches used the naïve Bayes assumption: the effects of other items on a given item are independent.
- This is not always true.
- Higher-order models can do better:
  - Belief Network
  - Association Rule Mining

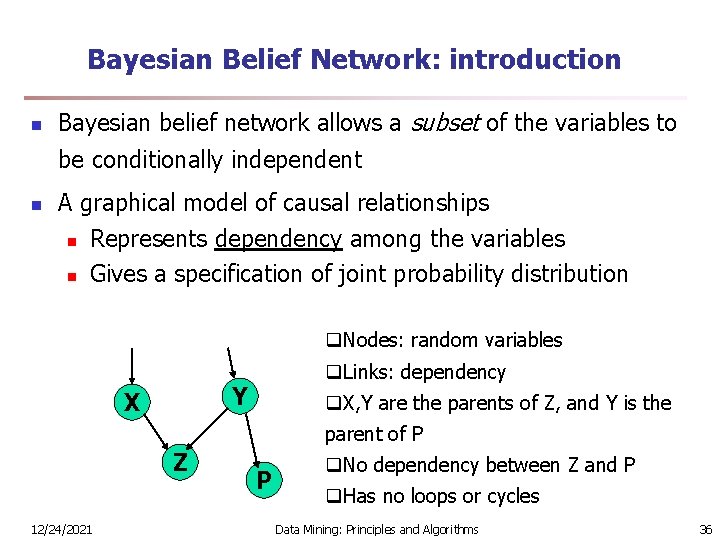

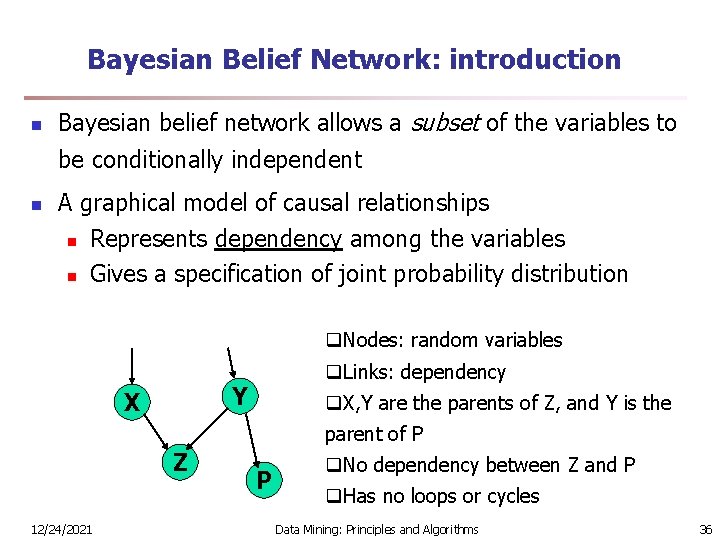

Bayesian Belief Network: Introduction
- A Bayesian belief network allows a subset of the variables to be conditionally independent.
- A graphical model of causal relationships:
  - represents dependency among the variables;
  - gives a specification of the joint probability distribution.
- Nodes: random variables. Links: dependency.
- Example graph: X and Y are the parents of Z, and Y is the parent of P. There is no direct dependency (link) between Z and P.
- The graph has no loops or cycles.
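
The "specification of the joint probability distribution" is the standard factorization below, where each variable depends only on its parents. Applied to the example graph (X and Y have no parents; the probability function is written $\Pr$ to avoid a clash with node P):

```latex
\Pr(x_1, \ldots, x_n) = \prod_{i=1}^{n} \Pr\big(x_i \mid \mathrm{Parents}(X_i)\big)

\Pr(X, Y, Z, P) = \Pr(X)\,\Pr(Y)\,\Pr(Z \mid X, Y)\,\Pr(P \mid Y)
```

This also makes the remark about Z and P precise: with no direct link, the two are conditionally independent given their shared parent Y.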

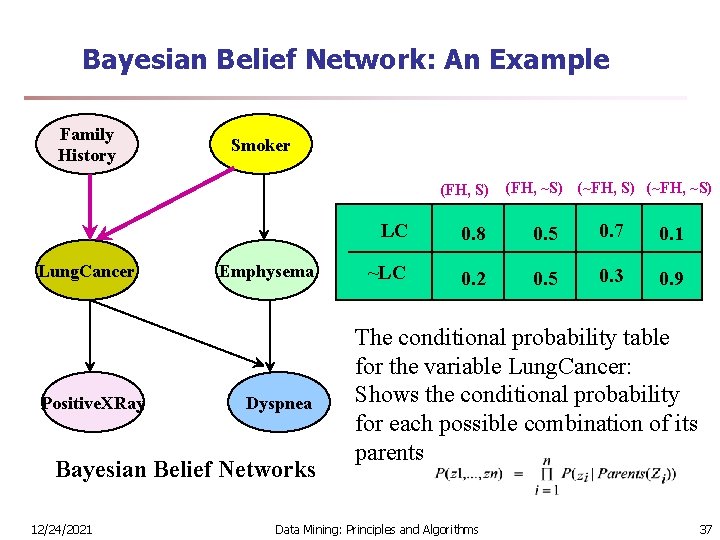

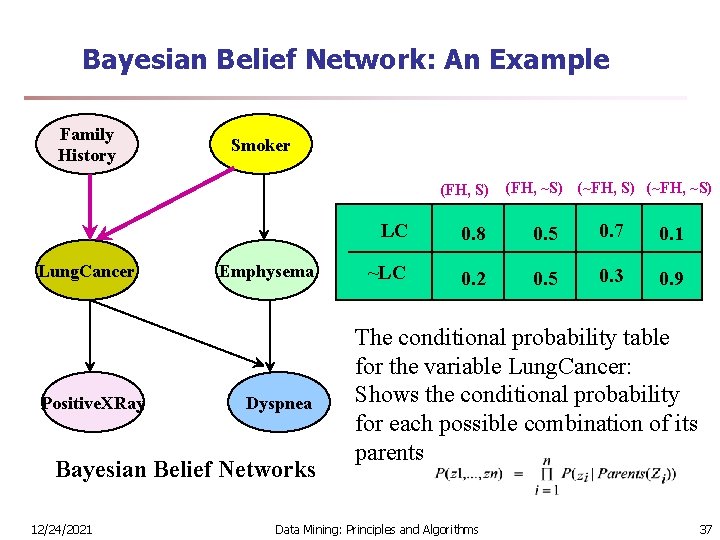

Bayesian Belief Network: An Example
(Figure: network with the nodes FamilyHistory (FH), Smoker (S), LungCancer (LC), Emphysema, PositiveXRay, and Dyspnea.)

The conditional probability table (CPT) for the variable LungCancer shows the conditional probability for each possible combination of its parents:

|     | (FH, S) | (FH, ~S) | (~FH, S) | (~FH, ~S) |
|-----|---------|----------|----------|-----------|
| LC  | 0.8     | 0.5      | 0.7      | 0.1       |
| ~LC | 0.2     | 0.5      | 0.3      | 0.9       |
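
A sketch of how the CPT is used: summing over the parent combinations gives the marginal P(LC). The priors P(FH) = 0.1 and P(S) = 0.3 are hypothetical values chosen for illustration; they are not on the slide. Because FH and S are root nodes, their joint prior is the product of the two priors.

```python
# CPT for LungCancer from the slide: P(LC = yes | FH, S)
CPT_LC = {
    (True, True): 0.8,    # (FH, S)
    (True, False): 0.5,   # (FH, ~S)
    (False, True): 0.7,   # (~FH, S)
    (False, False): 0.1,  # (~FH, ~S)
}

def p_lung_cancer(p_fh, p_s, cpt=CPT_LC):
    """Marginal P(LC = yes), summing P(LC | fh, s) * P(fh) * P(s) over parents."""
    total = 0.0
    for fh in (True, False):
        for s in (True, False):
            prior = (p_fh if fh else 1 - p_fh) * (p_s if s else 1 - p_s)
            total += prior * cpt[(fh, s)]
    return total

# Hypothetical priors, not from the slide
print(round(p_lung_cancer(0.1, 0.3), 3))  # prints 0.311
```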

![Belief Network for CF BRE 98 n n n Every item is a node Belief Network for CF [BRE 98] n n n Every item is a node](https://slidetodoc.com/presentation_image_h2/2bc34c96ac043ae4ff3c61e1aa905d6a/image-38.jpg)

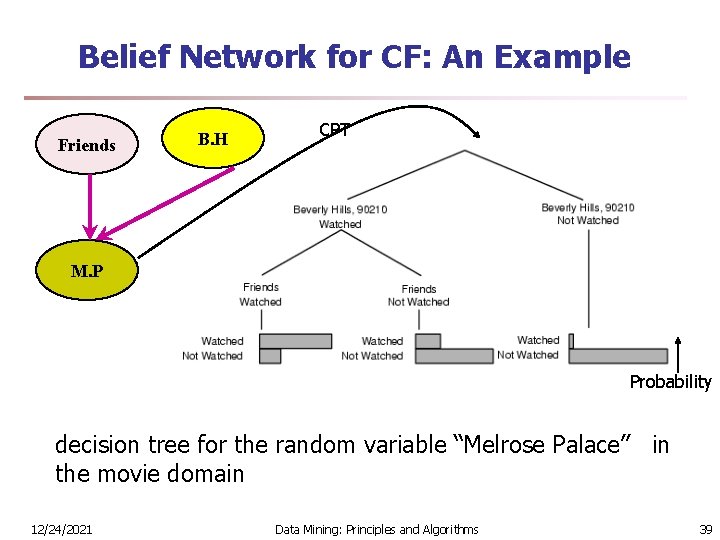

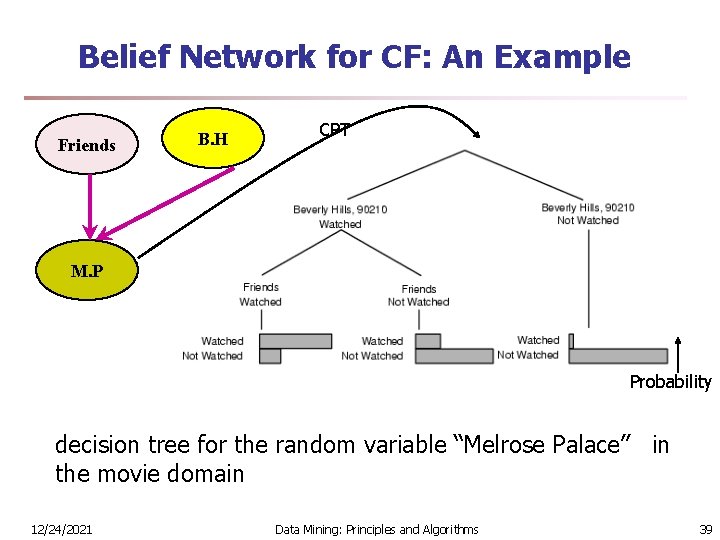

Belief Network for CF [BRE 98]
- Every item is a node.
- Binary rating (like, dislike).
- Learn a belief network offline over the training data.
- The CPT at each node is represented as a decision tree.
- Use greedy algorithms to determine the best network structure.
- Use probabilistic inference for online prediction.

Belief Network for CF: An Example
(Figure: nodes Friends, B.H, and M.P; the CPT of "Melrose Place" is shown as a probability decision tree for that random variable in the movie domain.)

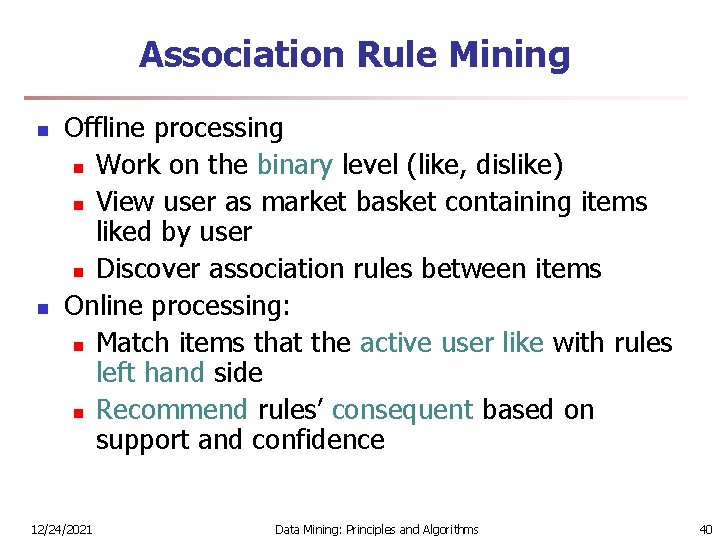

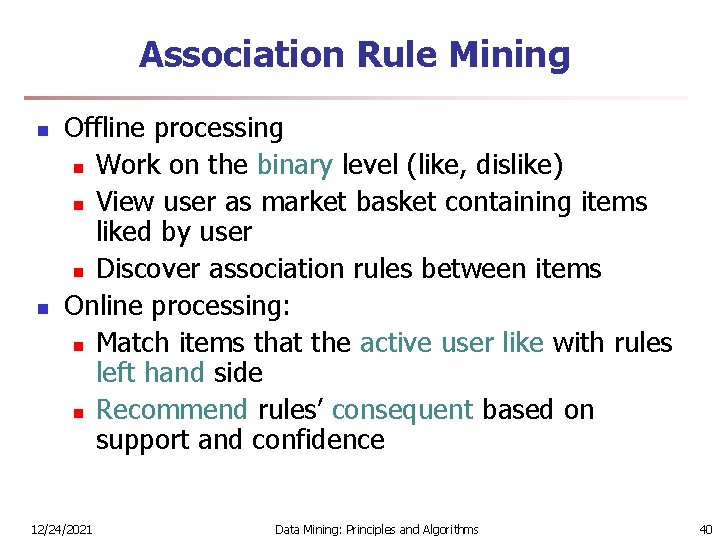

Association Rule Mining
- Offline processing:
  - work at the binary level (like, dislike);
  - view each user as a market basket containing the items the user liked;
  - discover association rules between items.
- Online processing:
  - match the items the active user likes with the rules' left-hand sides;
  - recommend the rules' consequents based on support and confidence.
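
A brute-force sketch of both phases, assuming each user is a set of liked items. Real miners (e.g. Apriori) prune the search instead of enumerating all candidates; limiting rules to at most `max_lhs` antecedent items, and ranking recommendations by the (confidence, support) of the best matching rule, are choices made here.

```python
from itertools import combinations

def mine_rules(baskets, min_support, min_conf, max_lhs=2):
    """Offline: rules lhs -> y with a single-item consequent."""
    n = len(baskets)
    items = sorted(set().union(*baskets))

    def supp(itemset):
        return sum(1 for b in baskets if itemset <= b) / n

    rules = []
    for size in range(1, max_lhs + 1):
        for combo in combinations(items, size):
            lhs = frozenset(combo)
            s_lhs = supp(lhs)
            if s_lhs < min_support:
                continue
            for y in items:
                if y in lhs:
                    continue
                s = supp(lhs | {y})
                if s >= min_support and s / s_lhs >= min_conf:
                    rules.append((lhs, y, s, s / s_lhs))
    return rules

def recommend(rules, liked):
    """Online: fire rules whose left-hand side the user likes; rank the
    consequents by (confidence, support) of the best rule recommending them."""
    best = {}
    for lhs, y, s, c in rules:
        if lhs <= liked and y not in liked:
            best[y] = max(best.get(y, (0.0, 0.0)), (c, s))
    return sorted(best, key=lambda y: best[y], reverse=True)

baskets = [{"A", "B", "C"}, {"A", "B"}, {"A", "C"}, {"B", "C"}, {"A", "B", "C"}]
rules = mine_rules(baskets, min_support=0.4, min_conf=0.7)
print(len(rules), recommend(rules, {"A", "B"}))
```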

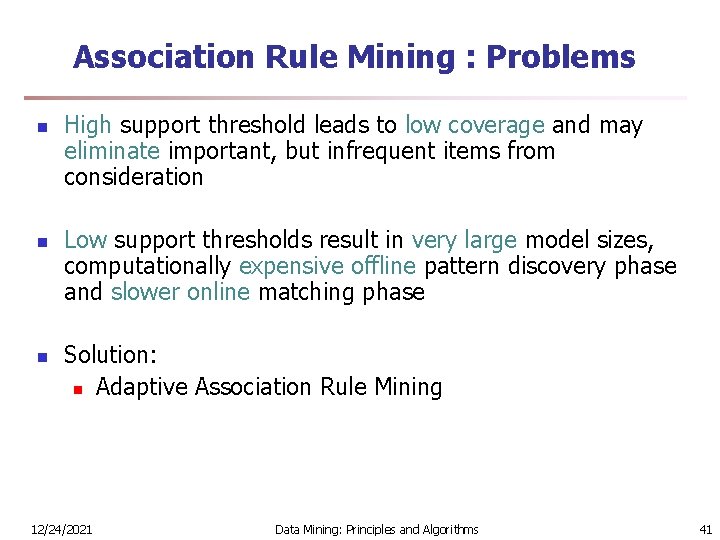

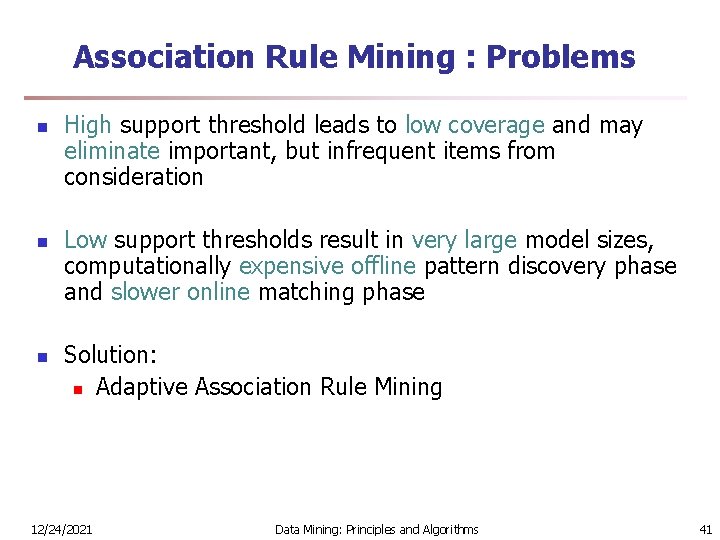

Association Rule Mining: Problems
- A high support threshold leads to low coverage and may eliminate important but infrequent items from consideration.
- Low support thresholds result in very large model sizes, a computationally expensive offline pattern-discovery phase, and a slower online matching phase.
- Solution: Adaptive Association Rule Mining

![Adaptive Association Rule Mining LIN 01 n Given Desired number min Confidence n transaction Adaptive Association Rule Mining [LIN 01] n Given: Desired number min. Confidence n transaction](https://slidetodoc.com/presentation_image_h2/2bc34c96ac043ae4ff3c61e1aa905d6a/image-42.jpg)

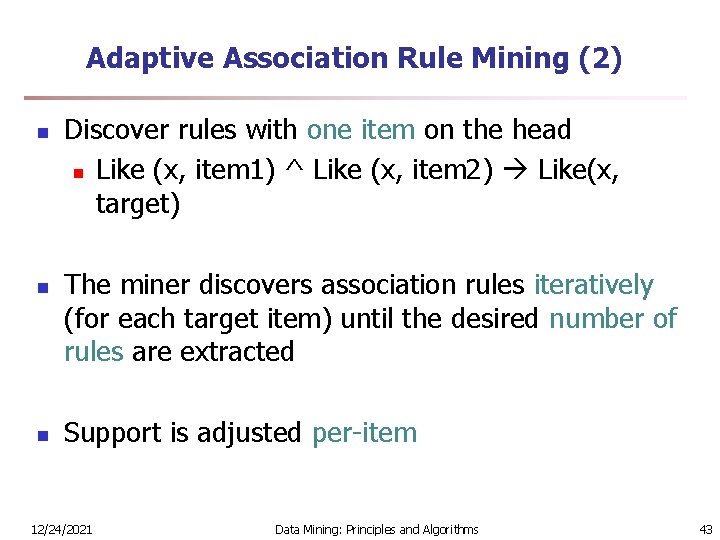

Adaptive Association Rule Mining [LIN 01]
- Given:
  - a transaction dataset;
  - a target item;
  - a desired range for the number of rules;
  - a specified minimum confidence.
- Find: a set S of association rules for the target item such that
  - the number of rules in S is in the given range;
  - the rules in S satisfy the minimum confidence constraint;
  - the rules in S have higher support than rules not in S that satisfy the above constraints.

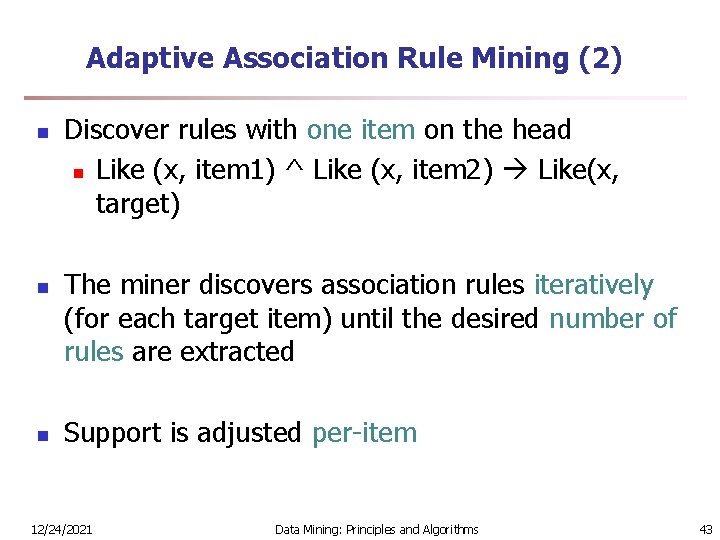

Adaptive Association Rule Mining (2)
- Discover rules with one item in the head: Like(x, item1) ∧ Like(x, item2) → Like(x, target)
- The miner discovers association rules iteratively (for each target item) until the desired number of rules is extracted.
- Support is adjusted per item.
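
A simplified sketch of per-item support adjustment: for one target item, the minimum support is bisected until the number of rules falls in the desired range [lo, hi]. The bisection strategy and the `start` and `max_iter` parameters are assumptions made here, not the exact procedure of [LIN 01]. Because all qualifying rules above a support threshold are kept, the returned rules have higher support than the excluded ones.

```python
from itertools import combinations

def rules_for_target(baskets, target, min_support, min_conf, max_lhs=2):
    """All rules lhs -> target meeting the support and confidence thresholds."""
    n = len(baskets)
    others = sorted(set().union(*baskets) - {target})

    def supp(itemset):
        return sum(1 for b in baskets if itemset <= b) / n

    rules = []
    for size in range(1, max_lhs + 1):
        for combo in combinations(others, size):
            lhs = frozenset(combo)
            s = supp(lhs | {target})
            if s == 0 or s < min_support:
                continue
            c = s / supp(lhs)
            if c >= min_conf:
                rules.append((lhs, s, c))
    return rules

def adaptive_rules(baskets, target, min_conf, lo, hi, start=0.5, max_iter=20):
    """Bisect the target's minimum support until lo <= #rules <= hi."""
    low, high, support = 0.0, 1.0, start
    rules = []
    for _ in range(max_iter):
        rules = rules_for_target(baskets, target, support, min_conf)
        if len(rules) < lo:
            high = support      # too few rules: lower the threshold
        elif len(rules) > hi:
            low = support       # too many rules: raise the threshold
        else:
            break
        support = (low + high) / 2
    return support, rules

baskets = [{"A", "T"}, {"A", "T"}, {"B", "T"}, {"A", "B", "T"}, {"C"}, {"C", "T"}]
support, rules = adaptive_rules(baskets, "T", min_conf=0.9, lo=2, hi=2)
print(support, sorted(sorted(lhs) for lhs, _, _ in rules))
```

If no threshold produces a rule count inside the range within `max_iter` steps, the sketch simply returns the last attempt.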

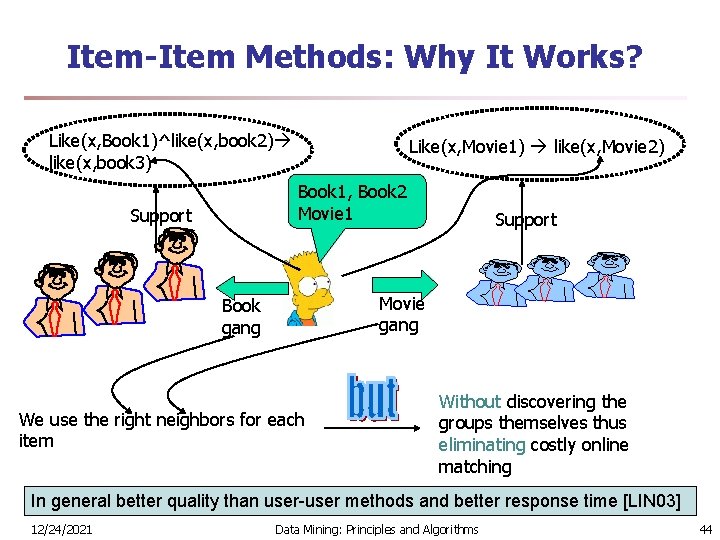

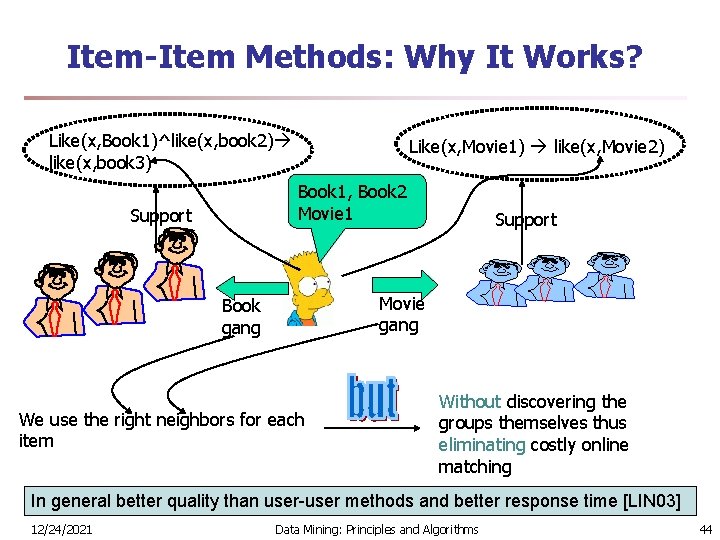

Item-Item Methods: Why Do They Work?
- Like(x, Book1) ∧ Like(x, Book2) → Like(x, Book3)
- Like(x, Movie1) → Like(x, Movie2)
- (Figure: a "book gang" supports the rules on Book1, Book2; a "movie gang" supports the rules on Movie1.)
- We use the right neighbors for each item without discovering the groups themselves, thus eliminating costly online matching.
- In general, item-item methods give better quality than user-user methods and better response time [LIN 03].

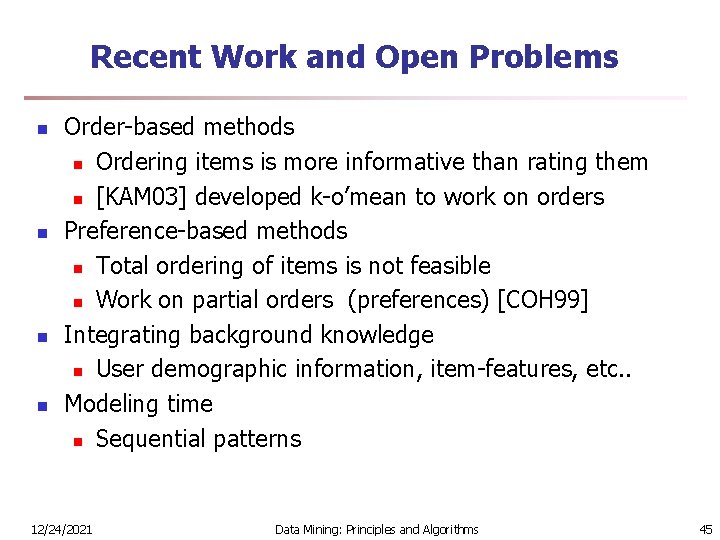

Recent Work and Open Problems
- Order-based methods:
  - ordering items is more informative than rating them;
  - [KAM 03] developed k-o'means to work on orders.
- Preference-based methods:
  - a total ordering of items is not feasible;
  - work on partial orders (preferences) [COH 99].
- Integrating background knowledge: user demographic information, item features, etc.
- Modeling time: sequential patterns.

References (1)
- Charu C. Aggarwal, Joel L. Wolf, Kun-Lung Wu, Philip S. Yu: Horting Hatches an Egg: A New Graph-Theoretic Approach to Collaborative Filtering. KDD 1999: 201-212.
- J. Breese, D. Heckerman, C. Kadie: Empirical Analysis of Predictive Algorithms for Collaborative Filtering. In Proc. 14th Conf. Uncertainty in Artificial Intelligence, Madison, July 1998.
- Yoon Ho Cho and Jae Kyeong Kim: Application of Web usage mining and product taxonomy to collaborative recommendations in e-commerce. Expert Systems with Applications, 26(2), 2003.
- William W. Cohen, Robert E. Schapire, and Yoram Singer: Learning to order things. In Advances in Neural Information Processing Systems 10, Denver, CO, 1997.
- Jiawei Han: Fall 2003 online course notes, available at: http://www-courses.cs.uiuc.edu/~cs397han/slides/05.ppt
- Toshihiro Kamishima: Nantonac collaborative filtering: recommendation based on order responses. KDD 2003: 583-588.
- C.-H. Lee, Y.-H. Kim, P.-K. Rhee: Web personalization expert with combining collaborative filtering and association rule mining technique. Expert Systems with Applications, 21(3), October 2001, pp. 131-137.

References (2)
- W. Lin: 2001 P, online presentation, available at: http://www.wiwi.hu-berlin.de/~myra/WEBKDD2000_ARCHIVE/LinAlvarezRuiz_WebKDD2000.ppt
- Weiyang Lin, Sergio A. Alvarez, and Carolina Ruiz: Efficient adaptive-support association rule mining for recommender systems. Data Mining and Knowledge Discovery, 6: 83-105, 2002.
- G. Linden, B. Smith, and J. York: Amazon.com Recommendations: Item-to-Item Collaborative Filtering. IEEE Internet Computing, Vol. 7, No. 1, pp. 76-80, Jan. 2003.
- Badrul M. Sarwar, George Karypis, Joseph A. Konstan, John Riedl: Analysis of recommendation algorithms for e-commerce. ACM Conf. Electronic Commerce 2000: 158-167.
- B. Sarwar, G. Karypis, J. Konstan, and J. Riedl: Application of dimensionality reduction in recommender systems: a case study. In ACM WebKDD 2000 Web Mining for E-Commerce Workshop, 2000.
- B. M. Sarwar, G. Karypis, J. A. Konstan, and J. Riedl: Item-based collaborative filtering recommendation algorithms. WWW'01.

References (3)
- B. Sarwar: 2000 P, online presentation, available at: http://www.wiwi.hu-berlin.de/~myra/WEBKDD2000_ARCHIVE/badrul.ppt
- J. Ben Schafer, Joseph A. Konstan, John Riedl: E-Commerce Recommendation Applications. Data Mining and Knowledge Discovery 5(1/2): 115-153, 2001.
- L. H. Ungar and D. P. Foster: Clustering Methods for Collaborative Filtering. AAAI Workshop on Recommendation Systems, 1998.
- Yi-Fan Wang, Yu-Liang Chuang, Mei-Hua Hsu and Huan-Chao Keh: A personalized recommender system for the cosmetic business. Expert Systems with Applications, 26(3), April 2004, pp. 427-434.
- S. Vucetic and Z. Obradovic: A regression-based approach for scaling-up personalized recommender systems in e-commerce. In ACM WebKDD 2000 Web Mining for E-Commerce Workshop, 2000.
- Kai Yu, Xiaowei Xu, Martin Ester, and Hans-Peter Kriegel: Selecting relevant instances for efficient and accurate collaborative filtering. In Proceedings of the 10th CIKM, pp. 239-246. ACM Press, 2001.
- Cheng Zhai: Spring 2003 online course notes, available at: http://sifaka.cs.uiuc.edu/course/2003-497CXZ/loc/cf.ppt

Thank you!
www.cs.uiuc.edu/~hanj