Data Intensive Super Computing

Randal E. Bryant, Carnegie Mellon University, http://www.cs.cmu.edu/~bryant

Data Intensive Super Scalable Computing

Examples of Big Data Sources
- Wal-Mart
  - 267 million items/day, sold at 6,000 stores
  - HP is building them a 4 PB data warehouse
  - Mine data to manage supply chain, understand market trends, formulate pricing strategies
- Sloan Digital Sky Survey
  - New Mexico telescope captures 200 GB of image data per day
  - Latest dataset release: 10 TB, 287 million celestial objects
  - SkyServer provides SQL access

Our Data-Driven World
- Science: databases from astronomy, genomics, natural languages, seismic modeling, …
- Humanities: scanned books, historic documents, …
- Commerce: corporate sales, stock market transactions, census, airline traffic, …
- Entertainment: Internet images, Hollywood movies, MP3 files, …
- Medicine: MRI & CT scans, patient records, …

Why So Much Data?
- We Can Get It
  - Automation + Internet
- We Can Keep It
  - Seagate Barracuda 1 TB @ $159 (16¢ / GB)
- We Can Use It
  - Scientific breakthroughs
  - Business process efficiencies
  - Realistic special effects
  - Better health care
- Could We Do More?
  - Apply more computing power to this data

Google’s Computing Infrastructure

Behind a single search query:
- 200+ processors
- 200+ terabyte database
- 10¹⁰ total clock cycles
- 0.1 second response time
- 5¢ average advertising revenue

Google’s Computing Infrastructure
- System
  - ~3 million processors in clusters of ~2000 processors each
- Commodity parts
  - x86 processors, IDE disks, Ethernet communications
  - Gain reliability through redundancy & software management
- Partitioned workload
  - Data: Web pages, indices distributed across processors
  - Function: crawling, index generation, index search, document retrieval, ad placement
- A Data-Intensive Scalable Computer (DISC)
  - Large-scale computer centered around data
    - Collecting, maintaining, indexing, computing
  - Similar systems at Microsoft & Yahoo

Barroso, Dean, Hölzle, “Web Search for a Planet: The Google Cluster Architecture”, IEEE Micro 2003

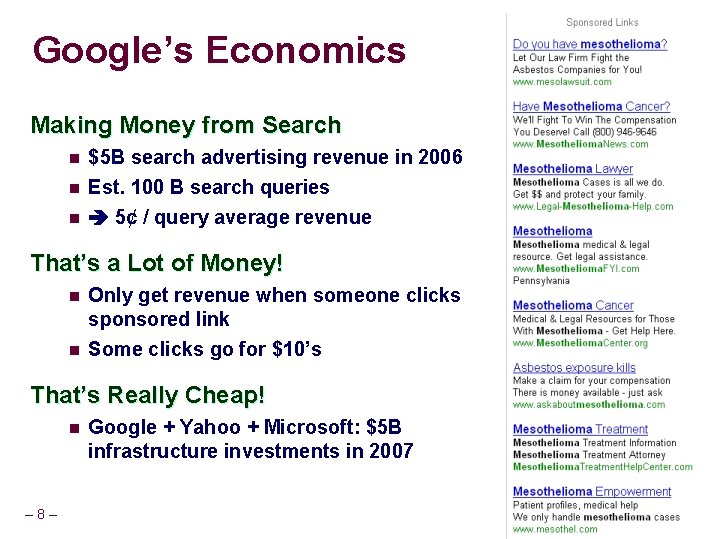

Google’s Economics
- Making Money from Search
  - $5B search advertising revenue in 2006
  - Est. 100B search queries
  - 5¢ / query average revenue
- That’s a Lot of Money!
  - Only get revenue when someone clicks a sponsored link
  - Some clicks go for $10’s
- That’s Really Cheap!
  - Google + Yahoo + Microsoft: $5B infrastructure investments in 2007

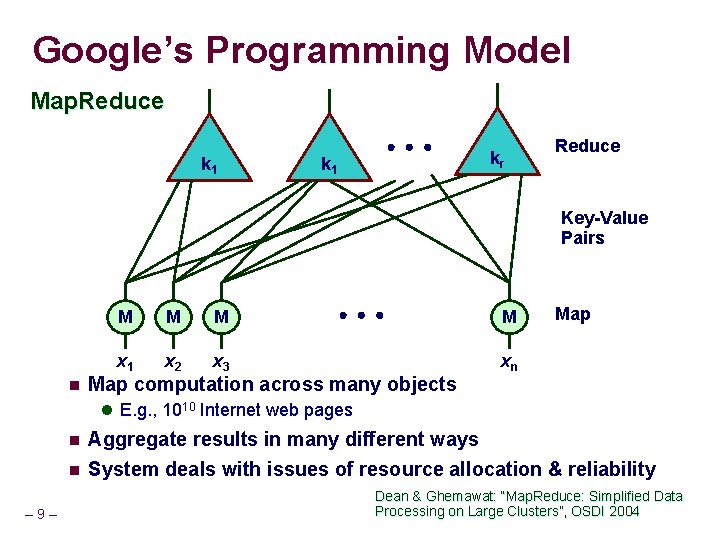

Google’s Programming Model: MapReduce

[Figure: Map tasks (M) applied to objects x1, x2, x3, …, xn emit key-value pairs; Reduce aggregates them by key (k1 … kr).]

- Map computation across many objects
  - E.g., 10¹⁰ Internet web pages
- Aggregate results in many different ways
- System deals with issues of resource allocation & reliability

Dean & Ghemawat: “MapReduce: Simplified Data Processing on Large Clusters”, OSDI 2004

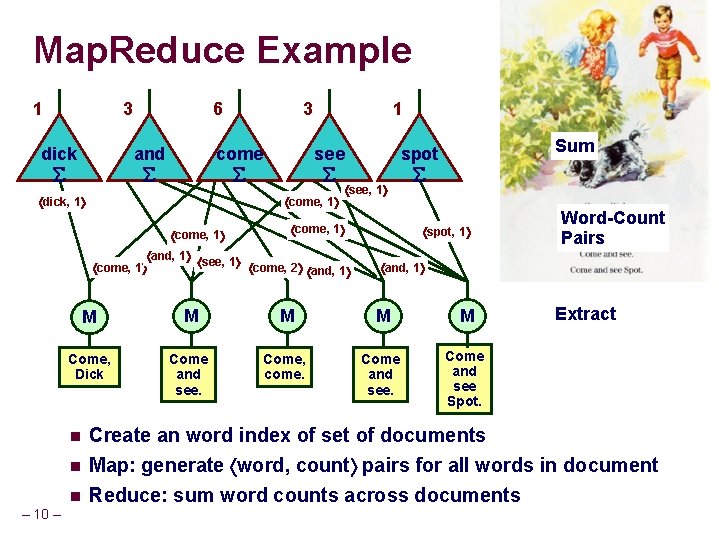
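A minimal in-memory sketch of the programming model, assuming a single Python process stands in for the cluster. `map_reduce`, `by_domain`, and the page records are hypothetical names and data, not Google’s API. It shows one Map function feeding two different Reduce functions, i.e., the same key-value pairs aggregated in different ways. The parts the slide assigns to the system (resource allocation, reliability) are deliberately absent.

```python
from collections import defaultdict

def map_reduce(inputs, mapper, reducer):
    """Toy single-process MapReduce: map every input, group pairs by key, reduce each group."""
    groups = defaultdict(list)
    for x in inputs:                      # Map computation across many objects
        for key, value in mapper(x):      # a mapper may emit any number of pairs
            groups[key].append(value)     # grouping by key plays the role of the shuffle
    return {key: reducer(key, values) for key, values in groups.items()}

# Hypothetical input records: (URL, page size in bytes).
pages = [("cmu.edu/a", 1200), ("cmu.edu/b", 800), ("google.com/x", 5000)]

def by_domain(page):
    url, size = page
    yield url.split("/")[0], size         # key = domain, value = page size

# The same key-value pairs, aggregated two different ways.
total_bytes = map_reduce(pages, by_domain, lambda key, sizes: sum(sizes))
largest_page = map_reduce(pages, by_domain, lambda key, sizes: max(sizes))
print(total_bytes)   # {'cmu.edu': 2000, 'google.com': 5000}
print(largest_page)  # {'cmu.edu': 1200, 'google.com': 5000}
```

In a real deployment the map calls and each key’s reduce call would run as separate tasks on different machines; here they are ordinary loops.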

MapReduce Example

[Figure: Map tasks (M) extract word-count pairs such as ⟨come, 1⟩, ⟨dick, 1⟩, ⟨and, 1⟩, ⟨see, 1⟩, ⟨spot, 1⟩ from documents including “Come, Dick”, “Come and see.”, “Come, come.”, “Come and see Spot.”; a Sum step combines the pairs for each word.]

- Create a word index of a set of documents
- Map: generate ⟨word, count⟩ pairs for all words in a document
- Reduce: sum word counts across documents
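The word-count example can be sketched with explicit map, shuffle, and reduce phases. This assumes the four document strings visible in the extracted figure text and that words are lower-cased with punctuation stripped; `map_words`, `shuffle`, and `reduce_counts` are illustrative names. Under those assumptions the counts come out as come 5, and 2, see 2, dick 1, spot 1; the original figure may contain more documents than survive in the text.

```python
import re
from collections import defaultdict

documents = ["Come, Dick", "Come and see.", "Come, come.", "Come and see Spot."]

def map_words(doc):
    # Map: emit a (word, 1) pair for every word; lower-case and drop punctuation.
    return [(word, 1) for word in re.findall(r"[a-z]+", doc.lower())]

def shuffle(pairs):
    # Group all counts emitted for the same word.
    groups = defaultdict(list)
    for word, count in pairs:
        groups[word].append(count)
    return groups

def reduce_counts(groups):
    # Reduce: sum word counts across documents.
    return {word: sum(counts) for word, counts in groups.items()}

intermediate = [pair for doc in documents for pair in map_words(doc)]
word_counts = reduce_counts(shuffle(intermediate))
print(sorted(word_counts.items()))
# [('and', 2), ('come', 5), ('dick', 1), ('see', 2), ('spot', 1)]
```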

DISC: Beyond Web Search
- Data-Intensive Application Domains
  - Rely on large, ever-changing data sets
    - Collecting & maintaining data is a major effort
  - Many possibilities
- Computational Requirements
  - From simple queries to large-scale analyses
  - Require parallel processing
  - Want to program at an abstract level
- Hypothesis
  - Can apply DISC to many other application domains

The Power of Data + Computation
- 2005 NIST Machine Translation Competition
  - Translate 100 news articles from Arabic to English
- Google’s Entry
  - First-time entry
    - Highly qualified researchers
    - No one on the research team knew Arabic
  - Purely statistical approach
    - Create most likely translations of words and phrases
    - Combine into most likely sentences
  - Trained using United Nations documents
    - 200 million words of high-quality translated text
    - 1 trillion words of monolingual text in the target language
  - During competition, ran on a 1000-processor cluster
    - One hour per sentence (it has gotten faster since)

2005 NIST Arabic-English Competition Results

[Figure: BLEU scores (axis 0.0 to 0.7) of the entries Google, ISI, IBM+CMU, UMD, JHU+CU, Edinburgh, Systran, Mitre, and FSC, against reference bands labeled Expert human translator, Usable translation, Human-editable translation, Topic identification, and Useless.]

- BLEU Score
  - Statistical comparison to expert human translators
  - Scale from 0.0 to 1.0
- Outcome
  - Google’s entry qualitatively better
  - Not the most sophisticated approach
  - But lots more training data and computer power

Oceans of Data, Skinny Pipes
- 1 Terabyte
  - Easy to store
  - Hard to move

| Disks | MB/s | Time to move 1 TB |
|---|---|---|
| Seagate Barracuda | 115 | 2.3 hours |
| Seagate Cheetah | 125 | 2.2 hours |

| Networks | MB/s | Time to move 1 TB |
|---|---|---|
| Home Internet | < 0.625 | > 18.5 days |
| Gigabit Ethernet | < 125 | > 2.2 hours |
| PSC Teragrid Connection | < 3,750 | > 4.4 minutes |

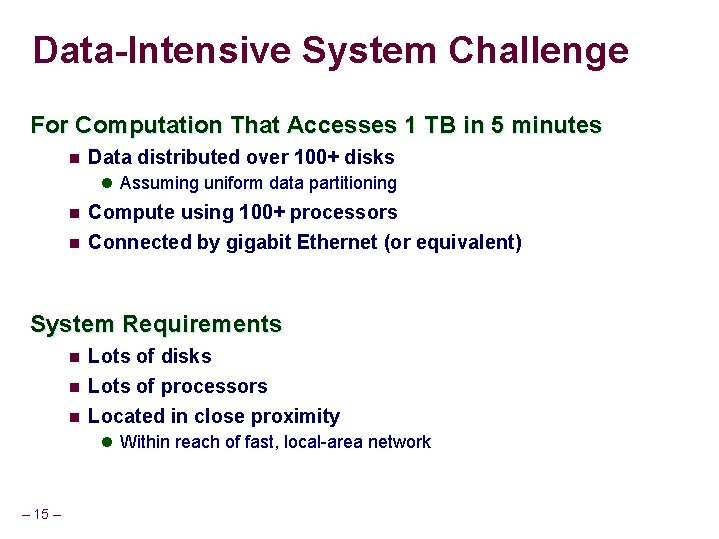
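The times in the table follow from dividing 1 TB by each transfer rate. A quick check, assuming decimal units (1 TB = 10¹² bytes, 1 MB = 10⁶ bytes) and a sustained rate with no overhead: it reproduces the Cheetah and Gigabit Ethernet (2.2 hours), home Internet (18.5 days), and Teragrid (4.4 minutes) rows, while the Barracuda row computes to about 2.4 hours rather than the listed 2.3. The helper names are illustrative.

```python
TB = 10**12  # bytes (decimal units assumed)
MB = 10**6   # bytes

def transfer_seconds(n_bytes, mb_per_s):
    """Time to move n_bytes at a sustained rate of mb_per_s."""
    return n_bytes / (mb_per_s * MB)

def describe(seconds):
    """Format a duration in the largest convenient unit."""
    if seconds >= 86400:
        return f"{seconds / 86400:.1f} days"
    if seconds >= 3600:
        return f"{seconds / 3600:.1f} hours"
    return f"{seconds / 60:.1f} minutes"

rates_mb_per_s = {
    "Seagate Barracuda": 115,
    "Seagate Cheetah": 125,
    "Home Internet": 0.625,
    "Gigabit Ethernet": 125,
    "PSC Teragrid Connection": 3750,
}

for name, rate in rates_mb_per_s.items():
    print(f"{name}: {describe(transfer_seconds(TB, rate))}")
```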

Data-Intensive System Challenge
- For Computation That Accesses 1 TB in 5 minutes
  - Data distributed over 100+ disks
    - Assuming uniform data partitioning
  - Compute using 100+ processors
  - Connected by gigabit Ethernet (or equivalent)
- System Requirements
  - Lots of disks
  - Lots of processors
  - Located in close proximity
    - Within reach of fast, local-area network

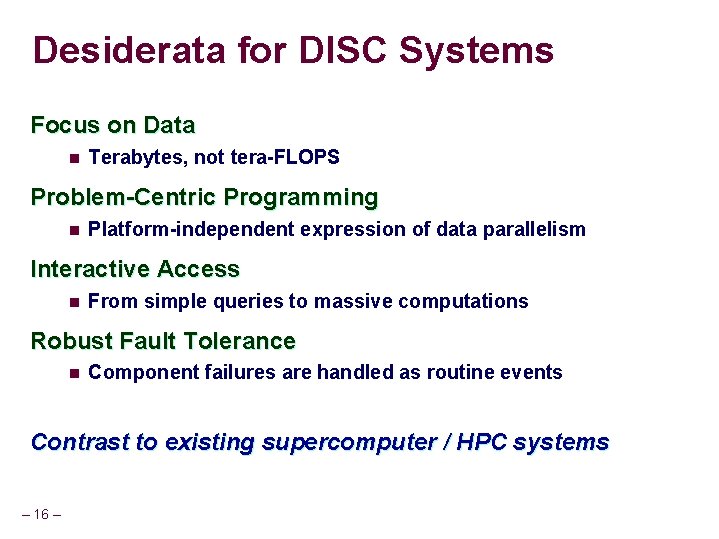
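A back-of-the-envelope check of the 5-minute target, assuming decimal units, perfectly uniform partitioning, and disks streaming at the 115 MB/s Barracuda rate with no seek or network overhead. The helper names are hypothetical.

```python
import math

TB = 10**12  # bytes, decimal units assumed
MB = 10**6

def aggregate_mb_per_s(total_bytes, seconds):
    """Total read bandwidth needed to scan total_bytes in the given time."""
    return total_bytes / MB / seconds

def disks_needed(total_bytes, seconds, disk_mb_per_s):
    """Minimum disk count at full streaming rate, assuming uniform partitioning."""
    return math.ceil(aggregate_mb_per_s(total_bytes, seconds) / disk_mb_per_s)

def per_disk_mb_per_s(total_bytes, seconds, n_disks):
    """Rate each of n_disks must sustain when the data is split evenly."""
    return aggregate_mb_per_s(total_bytes, seconds) / n_disks

print(round(aggregate_mb_per_s(TB, 5 * 60)))          # 3333
print(disks_needed(TB, 5 * 60, 115))                  # 29
print(round(per_disk_mb_per_s(TB, 5 * 60, 100), 1))   # 33.3
```

Reading 1 TB in 300 s needs about 3,333 MB/s in aggregate, so 29 disks would suffice in this idealized model. With 100 disks each supplies only about 33 MB/s, which leaves slack for the overheads the sketch ignores and stays well within a gigabit Ethernet link (about 125 MB/s) per node.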

Desiderata for DISC Systems
- Focus on Data
  - Terabytes, not tera-FLOPS
- Problem-Centric Programming
  - Platform-independent expression of data parallelism
- Interactive Access
  - From simple queries to massive computations
- Robust Fault Tolerance
  - Component failures are handled as routine events
- Contrast to existing supercomputer / HPC systems

System Comparison: Data

Conventional Supercomputers
- Data stored in separate repository
  - No support for collection or management
- Brought into system for computation
  - Time consuming
  - Limits interactivity

DISC
- System collects and maintains data
  - Shared, active data set
- Computation colocated with storage
  - Faster access

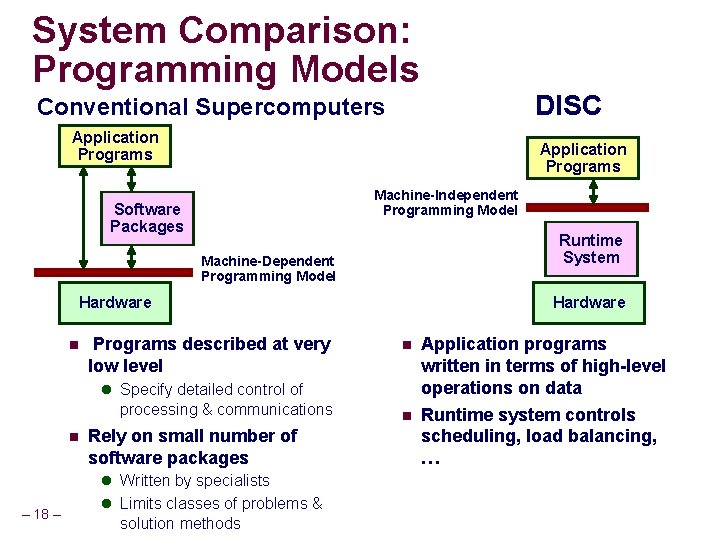

System Comparison: Programming Models

[Figure: software stacks. Conventional supercomputers: Software Packages, Machine-Dependent Programming Model, Hardware. DISC: Application Programs, Machine-Independent Programming Model, Runtime System, Hardware.]

Conventional Supercomputers
- Programs described at very low level
  - Specify detailed control of processing & communications
- Rely on small number of software packages
  - Written by specialists
  - Limits classes of problems & solution methods

DISC
- Application programs written in terms of high-level operations on data
- Runtime system controls scheduling, load balancing, …

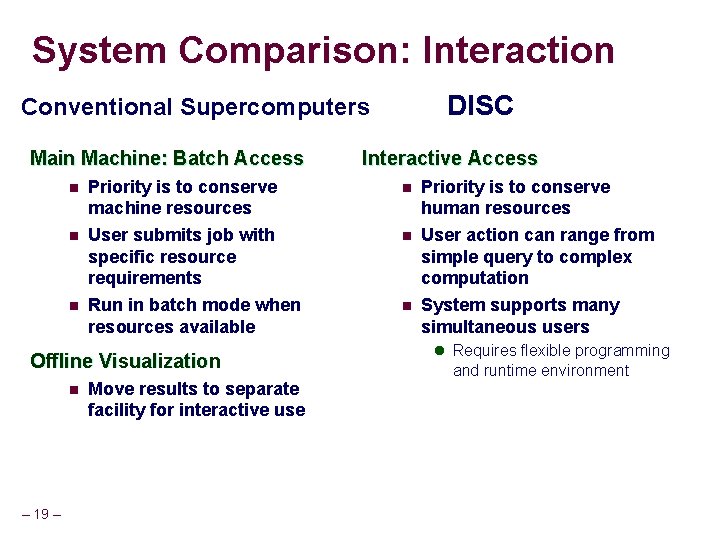

System Comparison: Interaction

Conventional Supercomputers
- Main Machine: Batch Access
  - Priority is to conserve machine resources
  - User submits job with specific resource requirements
  - Run in batch mode when resources available
- Offline Visualization
  - Move results to separate facility for interactive use

DISC
- Interactive Access
  - Priority is to conserve human resources
  - User action can range from simple query to complex computation
  - System supports many simultaneous users
    - Requires flexible programming and runtime environment

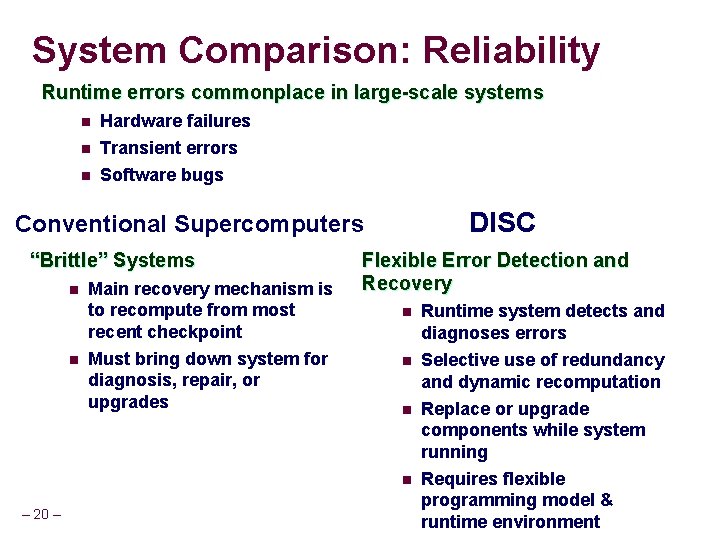

System Comparison: Reliability
- Runtime errors commonplace in large-scale systems
  - Hardware failures
  - Transient errors
  - Software bugs

Conventional Supercomputers: “Brittle” Systems
- Main recovery mechanism is to recompute from most recent checkpoint
- Must bring down system for diagnosis, repair, or upgrades

DISC: Flexible Error Detection and Recovery
- Runtime system detects and diagnoses errors
- Selective use of redundancy and dynamic recomputation
- Replace or upgrade components while system running
- Requires flexible programming model & runtime environment

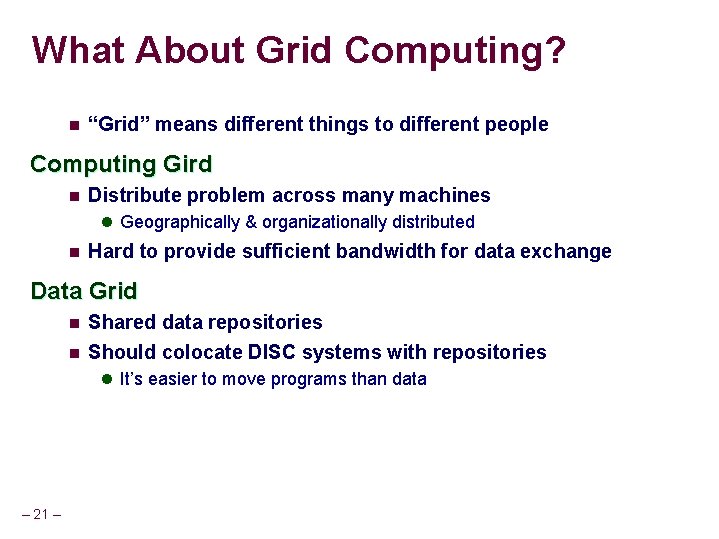

What About Grid Computing?
- “Grid” means different things to different people
- Computing Grid
  - Distribute problem across many machines
    - Geographically & organizationally distributed
  - Hard to provide sufficient bandwidth for data exchange
- Data Grid
  - Shared data repositories
  - Should colocate DISC systems with repositories
    - It’s easier to move programs than data

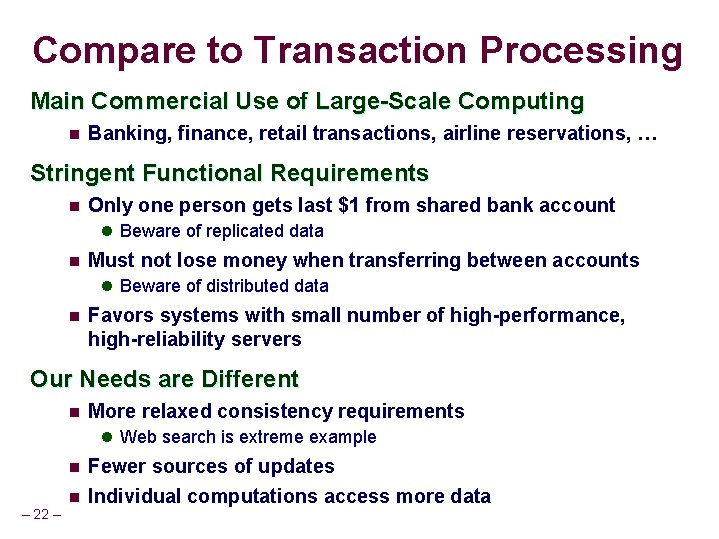

Compare to Transaction Processing
- Main Commercial Use of Large-Scale Computing
  - Banking, finance, retail transactions, airline reservations, …
- Stringent Functional Requirements
  - Only one person gets last $1 from shared bank account
    - Beware of replicated data
  - Must not lose money when transferring between accounts
    - Beware of distributed data
  - Favors systems with small number of high-performance, high-reliability servers
- Our Needs are Different
  - More relaxed consistency requirements
    - Web search is extreme example
  - Fewer sources of updates
  - Individual computations access more data

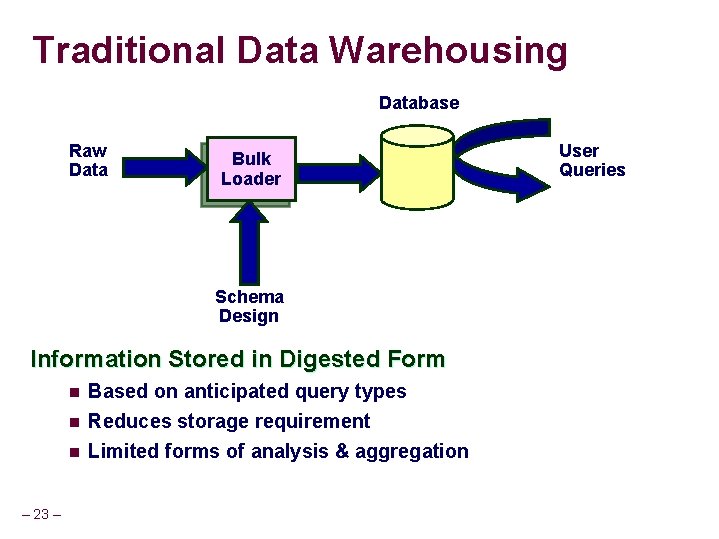
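The “last $1” requirement is an atomicity problem: checking the balance and debiting it must happen as one indivisible step. A single-machine sketch using a lock from Python’s `threading` module; the `Account` class is hypothetical, and it deliberately does not cover replicated or distributed data, where the same guarantee needs coordination across machines rather than a local lock.

```python
import threading

class Account:
    """Hypothetical shared bank account; a lock makes check-then-debit atomic."""

    def __init__(self, balance):
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount):
        with self._lock:  # no other thread can interleave between the check and the debit
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False

account = Account(balance=1)   # the "last $1"
results = []
results_lock = threading.Lock()

def customer():
    ok = account.withdraw(1)
    with results_lock:
        results.append(ok)

threads = [threading.Thread(target=customer) for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(results.count(True), account.balance)  # 1 0
```

Ten customers race for the same dollar and exactly one succeeds; without the lock, two threads could both pass the balance check before either debits.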

Traditional Data Warehousing

[Figure: Raw Data → Bulk Loader → Database (built from a Schema Design) → User Queries]

- Information Stored in Digested Form
  - Based on anticipated query types
  - Reduces storage requirement
- Limited forms of analysis & aggregation

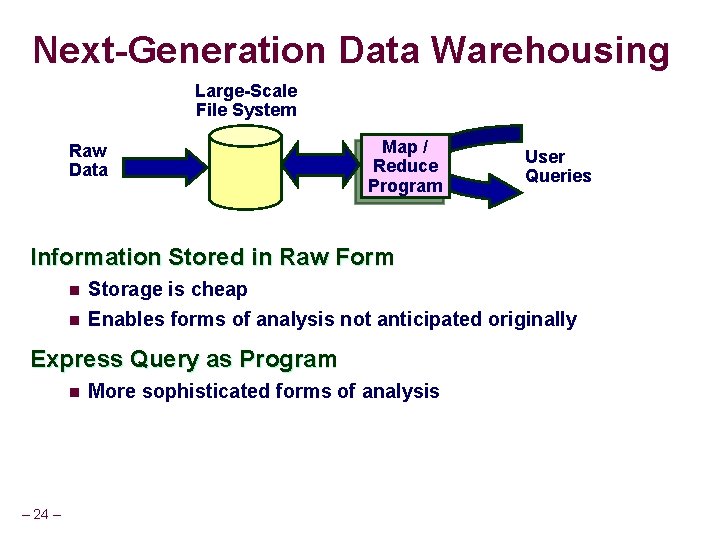

Next-Generation Data Warehousing

[Figure: Raw Data → Large-Scale File System → Map / Reduce Program → User Queries]

- Information Stored in Raw Form
  - Storage is cheap
  - Enables forms of analysis not anticipated originally
- Express Query as Program
  - More sophisticated forms of analysis
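Keeping raw records lets a query written as a program answer a question nobody planned for. A sketch assuming a hypothetical raw log of `basket_id,item` lines: the program first groups records by basket, then counts which item pairs are bought together. A warehouse that had stored only pre-digested totals per item could not recover this.

```python
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical raw sales log, stored as-is: one "basket_id,item" record per line.
raw_log = """b1,milk
b1,bread
b2,milk
b2,bread
b2,eggs
b3,eggs"""

def baskets(lines):
    # Stage 1: key each raw record by its basket id and group the items.
    groups = defaultdict(set)
    for line in lines:
        basket_id, item = line.split(",")
        groups[basket_id].add(item)
    return groups

def co_purchase_counts(groups):
    # Stage 2: emit every item pair within a basket, then sum per pair.
    counts = Counter()
    for items in groups.values():
        for pair in combinations(sorted(items), 2):
            counts[pair] += 1
    return counts

pairs = co_purchase_counts(baskets(raw_log.splitlines()))
print(pairs.most_common(1))  # [(('bread', 'milk'), 2)]
```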

Why University-Based Project(s)?
- Open
  - Forum for free exchange of ideas
  - Apply to societally important, possibly noncommercial problems
- Systematic
  - Careful study of design ideas and tradeoffs
- Creative
  - Get smart people working together
- Fulfill Our Educational Mission
  - Expose faculty & students to newest technology
  - Ensure faculty & PhD researchers addressing real problems

Designing a DISC System
- Inspired by Google’s Infrastructure
  - System with high performance & reliability
  - Carefully optimized capital & operating costs
  - Take advantage of their learning curve
- But, Must Adapt
  - More than web search
    - Wider range of data types & computing requirements
    - Less advantage to precomputing and caching information
    - Higher correctness requirements
  - 10²–10⁴ users, not 10⁶–10⁸
    - Don’t require massive infrastructure

Constructing General-Purpose DISC
- Hardware
  - Similar to that used in data centers and high-performance systems
  - Available off-the-shelf
- Hypothetical “Node”
  - 1–2 dual or quad core processors
  - 1 TB disk (2–3 drives)
  - ~$10K (including portion of routing network)

Possible System Sizes
- 100 Nodes: $1M
  - 100 TB storage
  - Deal with failures by stop & repair
  - Useful for prototyping
- 1,000 Nodes: $10M
  - 1 PB storage
  - Reliability becomes important issue
  - Enough for WWW caching & indexing
- 10,000 Nodes: $100M
  - 10 PB storage
  - National resource
  - Continuously dealing with failures
  - Utility?
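The cost and storage figures scale linearly from the hypothetical ~$10K, 1 TB node. A sketch of that arithmetic, assuming decimal units (1 PB = 1,000 TB) and ignoring economies of scale, power, and staffing; `system_size` is an illustrative name.

```python
NODE_COST_USD = 10_000   # ~$10K per hypothetical node, including a share of the network
NODE_STORAGE_TB = 1      # 1 TB of disk per node

def system_size(nodes):
    """Linear cost/storage estimate; ignores volume discounts, power, and staffing."""
    return {"cost_usd": nodes * NODE_COST_USD, "storage_tb": nodes * NODE_STORAGE_TB}

for n in (100, 1_000, 10_000):
    size = system_size(n)
    print(f"{n:>6} nodes: ${size['cost_usd'] / 1e6:.0f}M, {size['storage_tb']:,} TB")
```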

Implementing System Software
- Programming Support
  - Abstractions for computation & data representation
    - E.g., Google: MapReduce & BigTable
  - Usage models
- Runtime Support
  - Allocating processing and storage
  - Scheduling multiple users
  - Implementing programming model
- Error Handling
  - Detecting errors
  - Dynamic recovery
  - Identifying failed components

Getting Started
- Goal
  - Get faculty & students active in DISC
- Hardware: Rent from Amazon
  - Elastic Compute Cloud (EC2)
    - Generic Linux cycles for $0.10 / hour ($877 / yr)
  - Simple Storage Service (S3)
    - Network-accessible storage for $0.15 / GB / month ($1800 / TB / yr)
  - Example: maintain crawled copy of web (50 TB, 100 processors, 0.5 TB/day refresh)
    - ~$250K / year
- Software
  - Hadoop Project
    - Open source project providing file system and MapReduce
    - Supported and used by Yahoo
    - Prototype on single machine, map onto cluster
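The rental figures can be checked directly. A sketch assuming a 365.25-day year (8,766 hours) and decimal terabytes, using the prices quoted on the slide; the function names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365.25    # 8,766 hours (assumed year length)
EC2_USD_PER_HOUR = 0.10         # EC2 price quoted on the slide
S3_USD_PER_GB_MONTH = 0.15      # S3 price quoted on the slide
GB_PER_TB = 1000                # decimal units assumed

def ec2_per_year(processors):
    """Annual cost of keeping the given number of instances running."""
    return processors * EC2_USD_PER_HOUR * HOURS_PER_YEAR

def s3_per_year(terabytes):
    """Annual cost of storing the given number of terabytes."""
    return terabytes * GB_PER_TB * S3_USD_PER_GB_MONTH * 12

print(round(ec2_per_year(1)), round(s3_per_year(1)))              # 877 1800
compute = ec2_per_year(100)    # 100 processors
storage = s3_per_year(50)      # 50 TB crawl
print(round(compute), round(storage), round(compute + storage))   # 87660 90000 177660
```

Compute plus storage comes to about $178K, so the ~$250K estimate presumably also covers charges the slide does not itemize, such as transferring the 0.5 TB/day refresh.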

Rely on Kindness of Others
- Google setting up dedicated cluster for university use
- Loaded with open-source software
  - Including Hadoop
- IBM providing additional software support
- NSF will determine how facility should be used.

More Sources of Kindness
- Yahoo: Major supporter of Hadoop
- Yahoo plans to work with other universities

Beyond the U.S.

CS Research Issues
- Applications
  - Language translation, image processing, …
- Application Support
  - Machine learning over very large data sets
  - Web crawling
- Programming
  - Abstract programming models to support large-scale computation
  - Distributed databases
- System Design
  - Error detection & recovery mechanisms
  - Resource scheduling and load balancing
  - Distribution and sharing of data across system

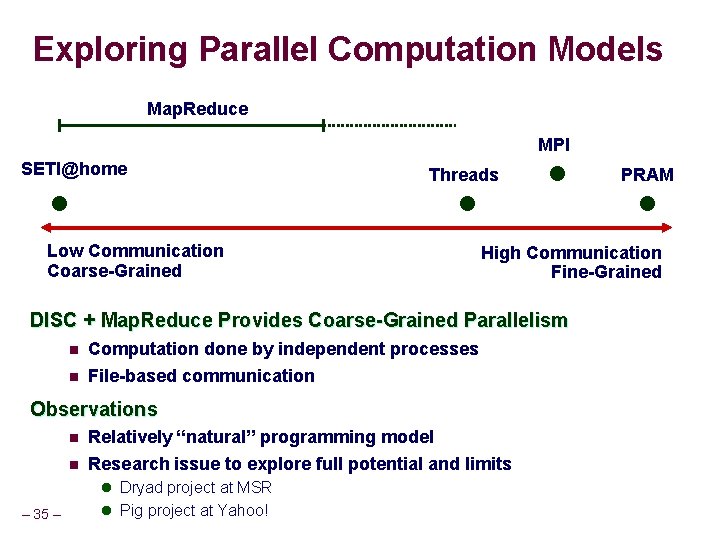

Exploring Parallel Computation Models

[Figure: a spectrum from low-communication, coarse-grained to high-communication, fine-grained parallelism, placing SETI@home, MapReduce, MPI, Threads, and PRAM along it.]

- DISC + MapReduce Provides Coarse-Grained Parallelism
  - Computation done by independent processes
  - File-based communication
- Observations
  - Relatively “natural” programming model
  - Research issue to explore full potential and limits
    - Dryad project at MSR
    - Pig project at Yahoo!

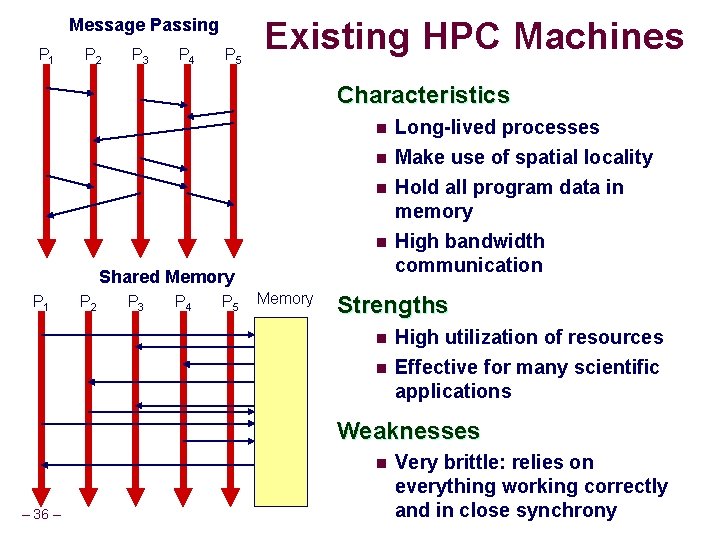

Existing HPC Machines

[Figure: two organizations, Message Passing among processes P1–P5, and Shared Memory with P1–P5 attached to a common Memory.]

- Characteristics
  - Long-lived processes
  - Make use of spatial locality
  - Hold all program data in memory
  - High bandwidth communication
- Strengths
  - High utilization of resources
  - Effective for many scientific applications
- Weaknesses
  - Very brittle: relies on everything working correctly and in close synchrony

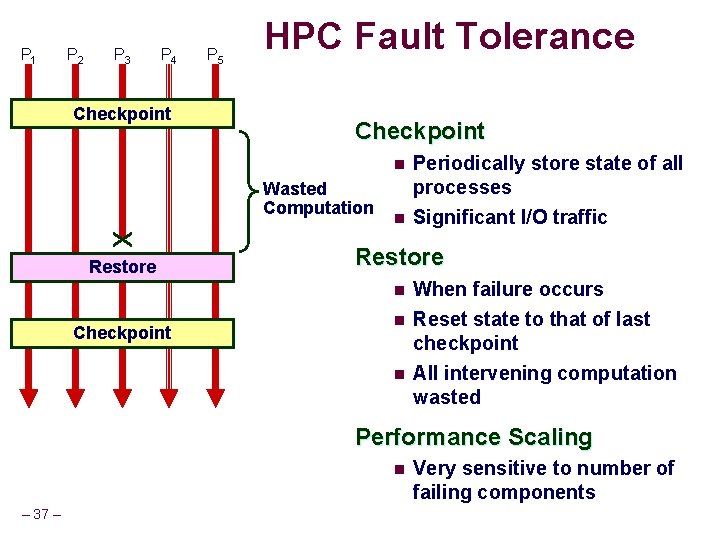

HPC Fault Tolerance

[Figure: timeline for processes P1–P5 showing Checkpoint, Wasted Computation, Restore, Checkpoint.]

- Checkpoint
  - Periodically store state of all processes
  - Significant I/O traffic
- Restore
  - When failure occurs
  - Reset state to that of last checkpoint
  - All intervening computation wasted
- Performance Scaling
  - Very sensitive to number of failing components
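Why scaling is so sensitive: if each of n components independently fails during a run with probability p, the chance that at least one failure forces a restore from the last checkpoint is 1 - (1 - p)^n. A sketch assuming independent failures and a hypothetical per-component p = 0.001.

```python
def p_any_failure(n_components, p_each):
    """P(at least one of n independent components fails during a run)."""
    return 1 - (1 - p_each) ** n_components

P_EACH = 0.001  # hypothetical per-component failure probability per run

for n in (10, 100, 1_000, 10_000):
    print(f"{n:>6} components: {p_any_failure(n, P_EACH):.4f}")
```

With 100 components about 9.5% of runs hit a failure; with 10,000 components nearly every run does, so all-or-nothing checkpoint/restore spends more and more of its time redoing wasted work.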

Map/Reduce Operation

[Figure: successive waves of short-lived Map and Reduce tasks.]

- Characteristics
  - Computation broken into many, short-lived tasks
    - Mapping, reducing
  - Use disk storage to hold intermediate results
- Strengths
  - Great flexibility in placement, scheduling, and load balancing
  - Handle failures by recomputation
  - Can access large data sets
- Weaknesses
  - Higher overhead
  - Lower raw performance

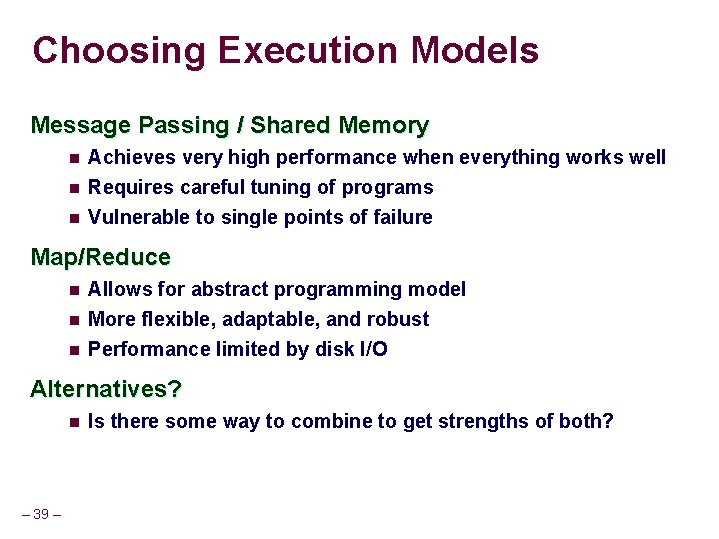
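Handling failures by recomputation works because each task is short-lived and independent: only the failed task is re-run, with no global checkpoint to restore. A sequential sketch; `run_with_retries`, `flaky_sum`, and the retry limit are hypothetical, and a real runtime would reschedule the task, possibly on another machine, rather than retry in place.

```python
def run_with_retries(tasks, run_task, max_attempts=4):
    """Run independent tasks; a task that fails is simply re-executed."""
    results, attempts = {}, {}
    for task_id, data in tasks.items():
        for attempt in range(1, max_attempts + 1):
            try:
                results[task_id] = run_task(data)
                attempts[task_id] = attempt
                break
            except RuntimeError:
                continue  # only this task is redone; finished tasks are untouched
        else:
            raise RuntimeError(f"task {task_id} failed {max_attempts} times")
    return results, attempts

failed_once = set()

def flaky_sum(chunk):
    # Hypothetical worker: crashes the first time it sees chunk (3, 4).
    key = tuple(chunk)
    if key == (3, 4) and key not in failed_once:
        failed_once.add(key)
        raise RuntimeError("simulated node failure")
    return sum(chunk)

tasks = {"a": [1, 2], "b": [3, 4], "c": [5]}
results, attempts = run_with_retries(tasks, flaky_sum)
print(results, attempts)
```

Task "b" is redone once while "a" and "c" run exactly once, in contrast to the checkpoint scheme, where one failure rolls back every process.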

Choosing Execution Models
- Message Passing / Shared Memory
  - Achieves very high performance when everything works well
  - Requires careful tuning of programs
  - Vulnerable to single points of failure
- Map/Reduce
  - Allows for abstract programming model
  - More flexible, adaptable, and robust
  - Performance limited by disk I/O
- Alternatives?
  - Is there some way to combine to get strengths of both?

Concluding Thoughts
- The World is Ready for a New Approach to Large-Scale Computing
  - Optimized for data-driven applications
  - Technology favoring centralized facilities
    - Storage capacity & computer power growing faster than network bandwidth
- University Researchers Eager to Get Involved
  - System designers
  - Applications in multiple disciplines
  - Across multiple institutions

More Information
- “Data-Intensive Supercomputing: The case for DISC”
  - Tech Report: CMU-CS-07-128
  - Available from http://www.cs.cmu.edu/~bryant
