Data Intensive and Cloud Computing Data Streams Lecture

- Slides: 30

Data Intensive and Cloud Computing: Data Streams, Lecture 10. Slides based on Chapter 4, "Mining Data Streams".

Data Streams
- In many data mining situations, we do not know the entire data set in advance.
- Stream management is important when the input rate is controlled externally:
  - Google queries
  - Twitter or Facebook status updates
- We can think of the data as infinite and non-stationary (the distribution changes over time).

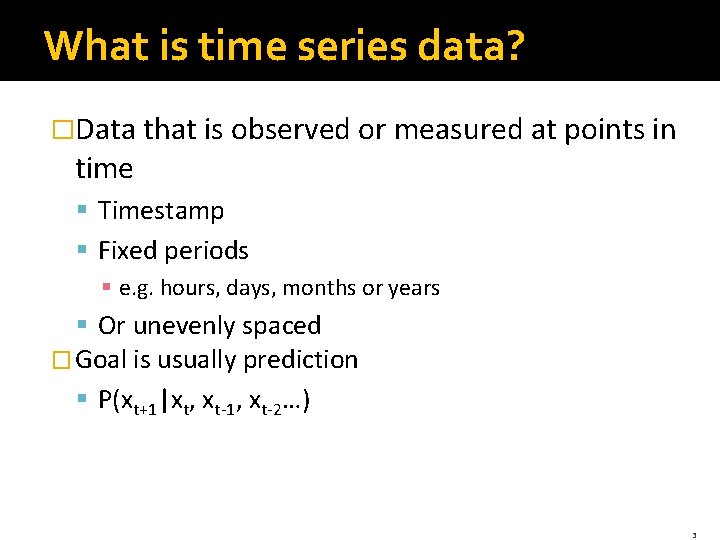

What is time series data?
- Data that is observed or measured at points in time:
  - Timestamped
  - At fixed periods, e.g., hours, days, months or years
  - Or unevenly spaced
- The goal is usually prediction:
  - $P(x_{t+1} \mid x_t, x_{t-1}, x_{t-2}, \ldots)$

Appears in Many Fields
- Robotics
- Finance, economics
- Marketing
- Science, e.g., ecology, earth science
- Medicine, e.g., neuroscience
- Internet of Things

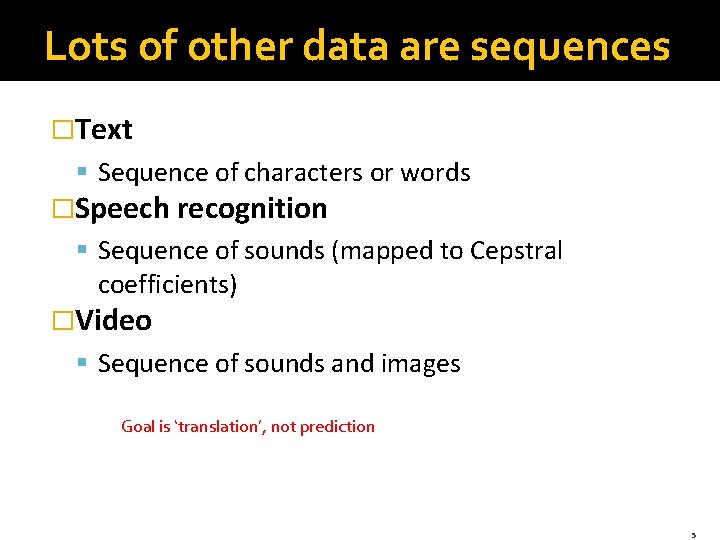

Lots of other data are sequences
- Text
  - Sequence of characters or words
- Speech recognition
  - Sequence of sounds (mapped to cepstral coefficients)
- Video
  - Sequence of sounds and images
- The goal is ‘translation’, not prediction.

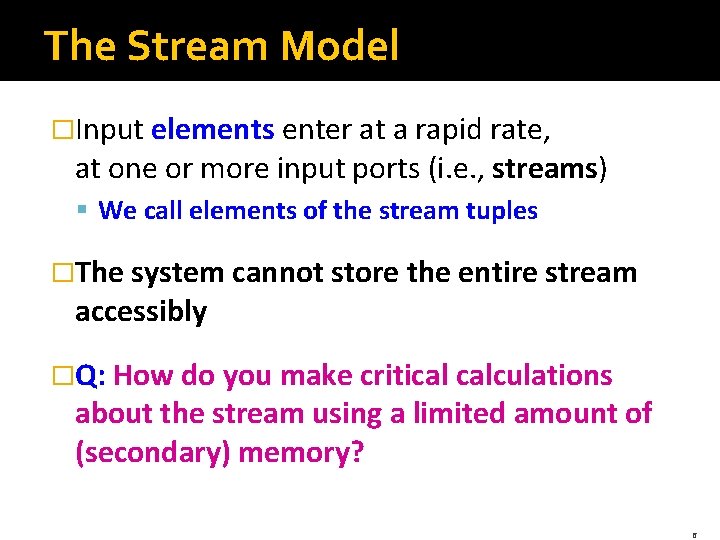

The Stream Model
- Input elements enter at a rapid rate, at one or more input ports (i.e., streams).
  - We call elements of the stream tuples.
- The system cannot store the entire stream accessibly.
- Q: How do you make critical calculations about the stream using a limited amount of (secondary) memory?

Side note: SGD is a Streaming Algorithm
- Stochastic Gradient Descent (SGD) is an example of a stream algorithm.
- In machine learning we call this online learning:
  - It allows modeling problems where we have a continuous stream of data.
  - We want an algorithm that learns from the stream and slowly adapts to changes in the data.
- Idea: make slow, incremental updates to the model.
  - SGD (e.g., for SVMs or the perceptron) makes small updates.
  - So: first train the classifier on training data.
  - Then, for every example from the stream, slightly update the model (using a small learning rate).
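A minimal sketch of the "train first, then nudge per stream example" idea, assuming a least-squares linear model rather than an SVM or perceptron. The data generator, `synthetic_stream`, and the learning rates are hypothetical choices for illustration; the stream's slope drifts from 2 to 3 to show the model adapting.

```python
import random

def sgd_step(w, b, x, y, lr):
    """One SGD step on the squared loss 0.5 * (w.x + b - y)**2 for a single example."""
    err = sum(wi * xi for wi, xi in zip(w, x)) + b - y
    w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w, b - lr * err

def synthetic_stream(n, slope, seed):
    """Hypothetical data: y = slope * x + 1 plus Gaussian noise, x uniform in [-1, 1]."""
    rng = random.Random(seed)
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        yield [x], slope * x + 1.0 + rng.gauss(0.0, 0.05)

# Phase 1: train on a stored training set (several passes).
w, b = [0.0], 0.0
training = list(synthetic_stream(500, slope=2.0, seed=1))
for _ in range(5):
    for x, y in training:
        w, b = sgd_step(w, b, x, y, lr=0.05)

# Phase 2: the stream drifts (slope 2 -> 3); one small update per arriving example.
for x, y in synthetic_stream(5000, slope=3.0, seed=2):
    w, b = sgd_step(w, b, x, y, lr=0.01)

print(w, b)  # w close to [3.0], b close to 1.0
```

Each stream element is seen once and then discarded, so memory does not grow with the stream. The small learning rate is what makes the adaptation "slow".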

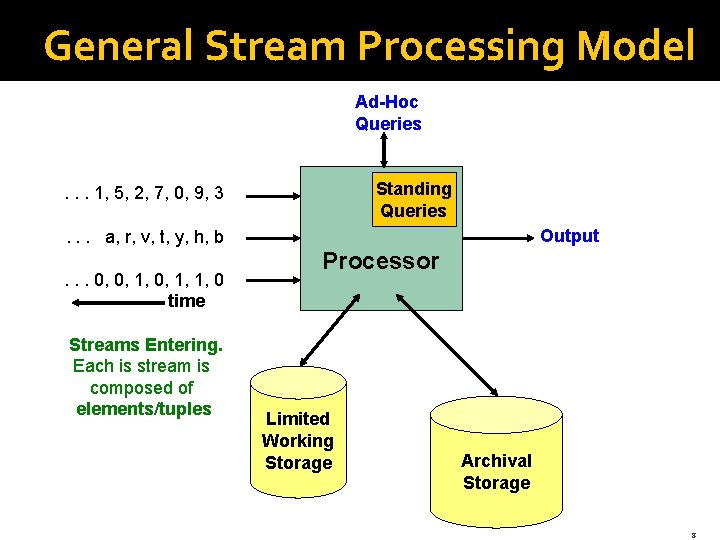

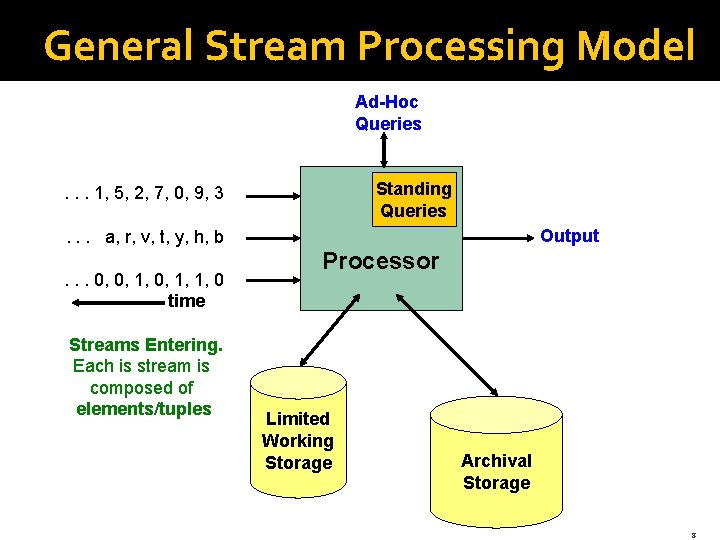

General Stream Processing Model (figure): several streams enter a processor over time, for example . . . 1, 5, 2, 7, 0, 9, 3; . . . a, r, v, t, y, h, b; and . . . 0, 0, 1, 1, 0. Each stream is composed of elements/tuples. The processor answers standing queries and ad-hoc queries and produces output. It uses limited working storage and has access to archival storage.

Problems on Data Streams
- Types of queries one wants to answer on a data stream:
  - Sampling data from a stream
    - Construct a random sample.
  - Queries over sliding windows
    - Number of items of type x in the last k elements of the stream
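To make the sliding-window query concrete, here is a sketch of an exact counter for "items of type x in the last k elements". It is only an illustration: the class name `WindowCounter` is hypothetical, and it stores the whole window, so it uses O(k) memory rather than the sublinear memory a true stream algorithm aims for.

```python
from collections import deque

class WindowCounter:
    """Exact count of elements equal to `target` among the last k stream elements (O(k) memory)."""

    def __init__(self, k, target):
        self.k = k
        self.target = target
        self.window = deque()
        self.count = 0

    def push(self, item):
        self.window.append(item)
        if item == self.target:
            self.count += 1
        if len(self.window) > self.k:          # evict the element that slid out of the window
            old = self.window.popleft()
            if old == self.target:
                self.count -= 1
        return self.count

wc = WindowCounter(k=4, target="x")
results = [wc.push(item) for item in ["x", "y", "x", "x", "z", "y", "y"]]
print(results)
```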

Problems on Data Streams
- Types of queries one wants to answer on a data stream:
  - Filtering a data stream
    - Select elements with property x from the stream.
  - Counting distinct elements
    - Number of distinct elements in the last k elements of the stream
  - Estimating moments
    - Estimate the avg./std. dev. of the last k elements.
  - Finding frequent elements
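For the moments query, a sketch that keeps a running sum and sum of squares over the last k elements makes the avg./std. dev. computation concrete. The name `WindowMoments` is hypothetical. This version is exact but stores the window (O(k) memory). It reports the population standard deviation. The sum-of-squares formula can lose precision for large values with small spread.

```python
import math
from collections import deque

class WindowMoments:
    """Mean and population standard deviation of the last k stream values."""

    def __init__(self, k):
        self.k = k
        self.window = deque()
        self.s = 0.0    # sum of values in the window
        self.s2 = 0.0   # sum of squared values in the window

    def push(self, v):
        self.window.append(v)
        self.s += v
        self.s2 += v * v
        if len(self.window) > self.k:          # drop the oldest value's contribution
            old = self.window.popleft()
            self.s -= old
            self.s2 -= old * old

    def mean(self):
        return self.s / len(self.window)

    def std(self):
        n = len(self.window)
        var = max(self.s2 / n - (self.s / n) ** 2, 0.0)   # clamp tiny negative rounding errors
        return math.sqrt(var)

wm = WindowMoments(k=4)
for v in range(1, 11):
    wm.push(v)
print(wm.mean(), wm.std())   # window is now 7, 8, 9, 10
```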

Applications (1)
- Mining query streams
  - Google wants to know what queries are more frequent today than yesterday.
- Mining click streams
  - Yahoo wants to know which of its pages are getting an unusual number of hits in the past hour.
- Mining social network news feeds
  - E.g., look for trending topics on Twitter, Facebook.

Applications (2)
- Sensor networks
  - Many sensors feeding into a central controller
- Telephone call records
  - Data feeds into customer bills as well as settlements between telephone companies.
- IP packets monitored at a switch
  - Gather information for optimal routing.
  - Detect denial-of-service attacks.

Sampling from a Data Stream: Sampling a Fixed Proportion. As the stream grows, the sample also gets bigger.

Sampling from a Data Stream
- Since we cannot store the entire stream, one obvious approach is to store a sample.
- Two different problems:
  - (1) Sample a fixed proportion of elements in the stream (say 1 in 10).
  - (2) Maintain a random sample of fixed size over a potentially infinite stream.
    - At any "time" k, we keep a random sample of s elements.
    - What property should the sample have? For all time steps k, each of the k elements seen so far has equal probability (s/k) of being in the sample.
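Problem (2) is commonly solved with reservoir sampling. The slide does not name this technique, so it is brought in here as a standard method, sketched under that assumption. Keep the first s elements. When element n > s arrives, keep it with probability s/n, and if it is kept, evict a uniformly chosen sampled element. After n elements, each one is in the sample with probability s/n.

```python
import random

def reservoir_sample(stream, s, rng):
    """Uniform random sample of s elements from a stream of unknown length (reservoir sampling)."""
    sample = []
    for n, item in enumerate(stream, start=1):
        if n <= s:
            sample.append(item)          # the first s elements are always kept
        else:
            j = rng.randrange(n)         # uniform in 0 .. n-1
            if j < s:                    # happens with probability s/n
                sample[j] = item         # replaces a uniformly chosen sampled element
    return sample

# Usage: each of 10 elements should land in a size-3 sample about 30% of the time.
rng = random.Random(42)
trials = 20000
counts = [0] * 10
for _ in range(trials):
    for item in reservoir_sample(range(10), 3, rng):
        counts[item] += 1
print([round(c / trials, 3) for c in counts])
```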

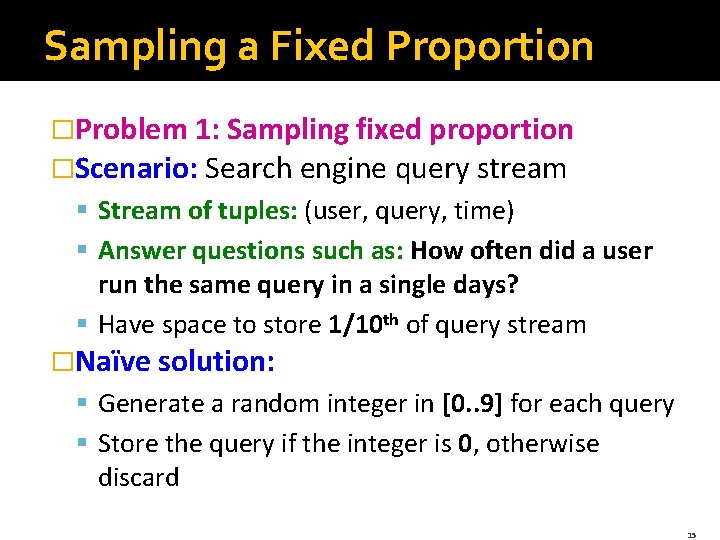

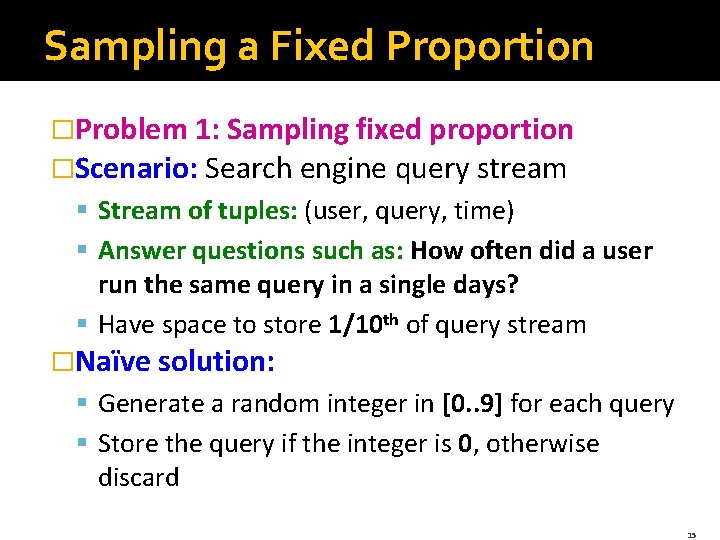

Sampling a Fixed Proportion
- Problem 1: Sampling a fixed proportion
- Scenario: search engine query stream
  - Stream of tuples: (user, query, time)
  - Answer questions such as: how often did a user run the same query in a single day?
  - We have space to store 1/10th of the query stream.
- Naïve solution:
  - Generate a random integer in [0..9] for each query.
  - Store the query if the integer is 0; otherwise discard it.
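The naïve solution takes only a few lines. In this sketch, the query stream is synthetic and hypothetical. Each tuple is kept independently with probability 1/10, so about a tenth of the stream survives.

```python
import random

def naive_sample(tuples, rng):
    """Keep each (user, query, time) tuple independently with probability 1/10."""
    for tup in tuples:
        if rng.randint(0, 9) == 0:   # random integer in [0..9]; store only when it is 0
            yield tup

# Usage with a synthetic query stream.
rng = random.Random(7)
stream = [(f"user{i % 100}", f"q{i % 37}", i) for i in range(100_000)]
kept = list(naive_sample(stream, rng))
print(len(kept) / len(stream))   # roughly 0.1
```

The decision is made per query, not per user. As a result, the queries of any one user are sampled independently of each other.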

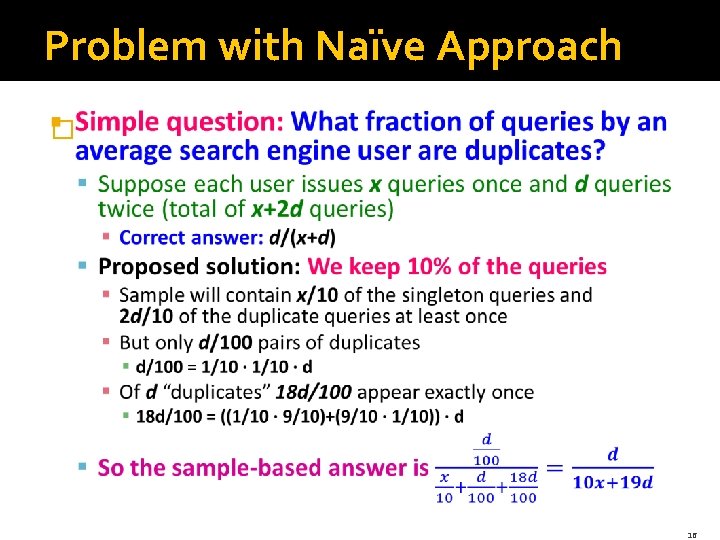

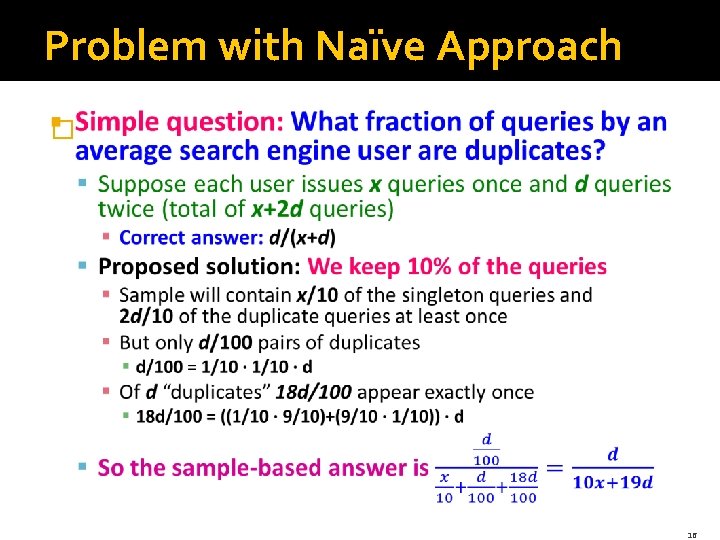

Problem with Naïve Approach

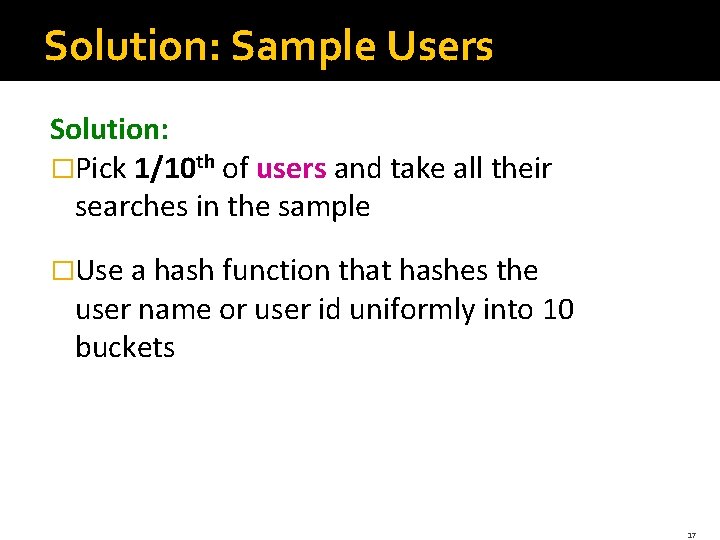

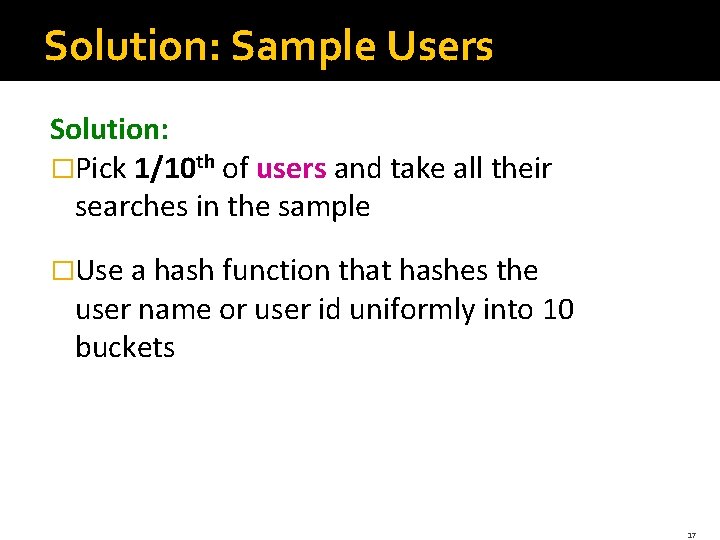

Solution: Sample Users
- Pick 1/10th of users and take all their searches in the sample.
- Use a hash function that hashes the user name or user id uniformly into 10 buckets, and keep the searches of the users that land in one chosen bucket.
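The following sketch applies user-based sampling to a synthetic stream. MD5 via `hashlib` is an arbitrary stand-in for "a hash function". Python's built-in `hash()` is avoided because it is randomized per process for strings. Bucket 0 is the chosen bucket. The helper names are hypothetical. The property to check is that every sampled user appears with all of their queries.

```python
import hashlib

def bucket(key, b):
    """Stable hash of a key into one of b buckets numbered 0 .. b-1."""
    digest = hashlib.md5(str(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % b

def sample_users(tuples, b=10):
    """Keep all queries of the users whose id hashes to bucket 0 (about 1/b of the users)."""
    for user, query, time in tuples:
        if bucket(user, b) == 0:
            yield (user, query, time)

# Usage: 1000 hypothetical users, 3 queries each.
stream = [(f"user{u}", f"query{q}", 3 * u + q) for u in range(1000) for q in range(3)]
kept = list(sample_users(stream))
kept_users = {u for u, _, _ in kept}
print(len(kept_users))   # roughly 100 of the 1000 users
```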

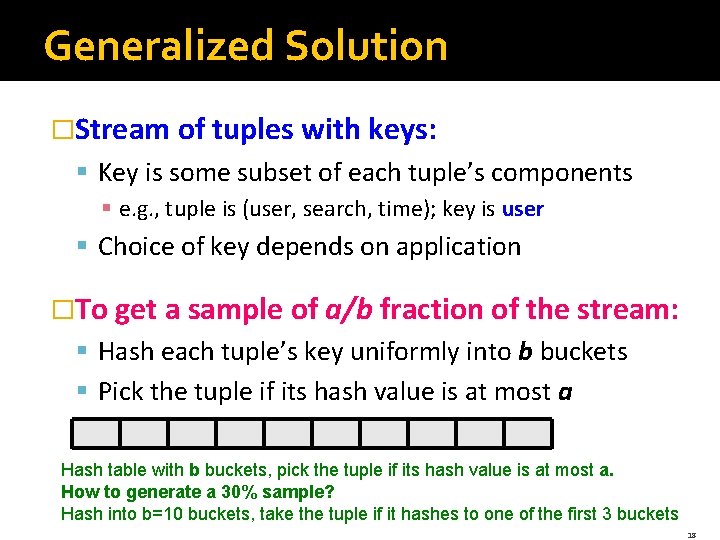

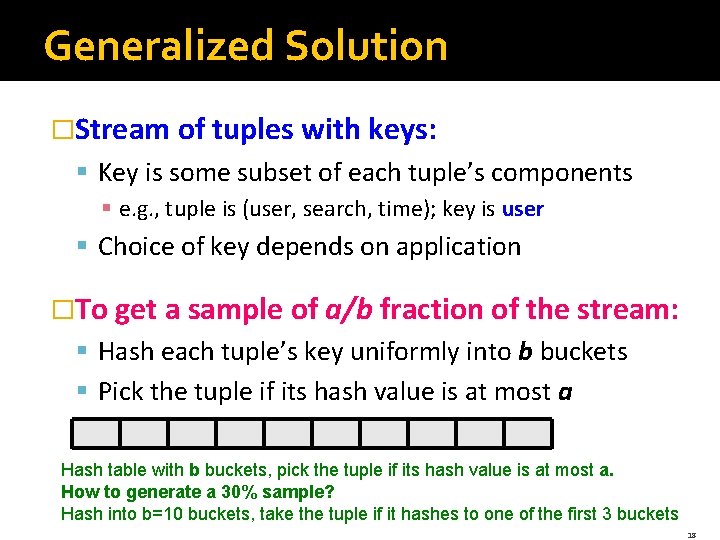

Generalized Solution
- Stream of tuples with keys:
  - The key is some subset of each tuple's components, e.g., the tuple is (user, search, time) and the key is user.
  - The choice of key depends on the application.
- To get a sample of an a/b fraction of the stream:
  - Hash each tuple's key uniformly into b buckets, numbered 1 to b.
  - Pick the tuple if its hash value is at most a, i.e., it falls in one of the first a buckets.
- Example: how do we generate a 30% sample? Hash into b = 10 buckets and take the tuple if it hashes to one of the first 3 buckets.
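The a/b rule can be sketched with a caller-supplied key function. In this sketch, buckets are numbered 0 to b-1, so "one of the first a buckets" becomes `bucket < a`. SHA-256 and the helper names are illustrative assumptions. The usage takes the 30% sample from the slide (a = 3, b = 10), keyed on user.

```python
import hashlib

def hash_bucket(key, b):
    """Stable hash of any key (via its repr) into buckets 0 .. b-1."""
    digest = hashlib.sha256(repr(key).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % b

def sample_fraction(tuples, key_fn, a, b):
    """Keep a tuple iff its key hashes into one of the first a of b buckets (fraction a/b)."""
    for tup in tuples:
        if hash_bucket(key_fn(tup), b) < a:
            yield tup

# 30% sample of a hypothetical (user, search, time) stream, keyed on user.
stream = [(f"user{i}", "some search", i) for i in range(10_000)]
sample = list(sample_fraction(stream, key_fn=lambda t: t[0], a=3, b=10))
print(len(sample) / len(stream))   # roughly 0.3
```

Because the decision depends only on the key, all tuples that share a key are either all in the sample or all out of it.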

Bloom Filters

Filtering Stream Content
- To motivate the Bloom-filter idea, consider a web crawler.
- It keeps, centrally, a list of all the URLs it has found so far.
- It assigns these URLs to any of a number of parallel tasks; these tasks stream back the URLs they find in the links they discover on a page.
- It needs to filter out those URLs it has seen before.

Role of the Bloom Filter
- A Bloom filter placed on the stream of URLs will declare that certain URLs have been seen before.
- Others will be declared new and added to the list of URLs that need to be crawled.
- Unfortunately, the Bloom filter can have false positives.
  - It can declare a URL seen before when it hasn't been.
- But if it says "never seen," then the URL is truly new.
- So we need to restart the filter periodically: every insertion sets more bits to 1, so the false-positive rate keeps growing as the crawl proceeds.

Example: Filtering Chunks
- Suppose we have a database relation stored in a DFS, spread over many chunks.
- We want to find a particular value v, looking at as few chunks as possible.
- A Bloom filter on each chunk will tell us that certain values are there and others aren't.
  - As before, false positives are possible.
- But now things are exactly right: if the filter says v is not in the chunk, it surely isn't.
  - Occasionally we retrieve a chunk we don't need, but we can't miss an occurrence of value v.

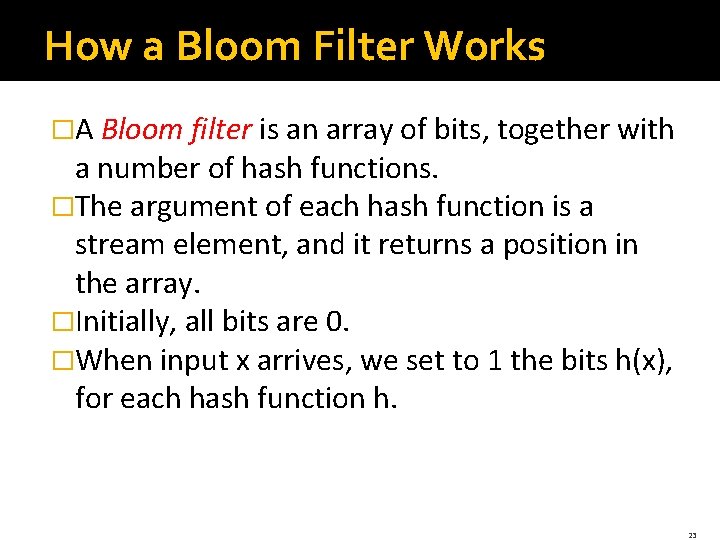

How a Bloom Filter Works
- A Bloom filter is an array of bits, together with a number of hash functions.
- The argument of each hash function is a stream element, and it returns a position in the array.
- Initially, all bits are 0.
- When input x arrives, we set to 1 the bits h(x), for each hash function h.

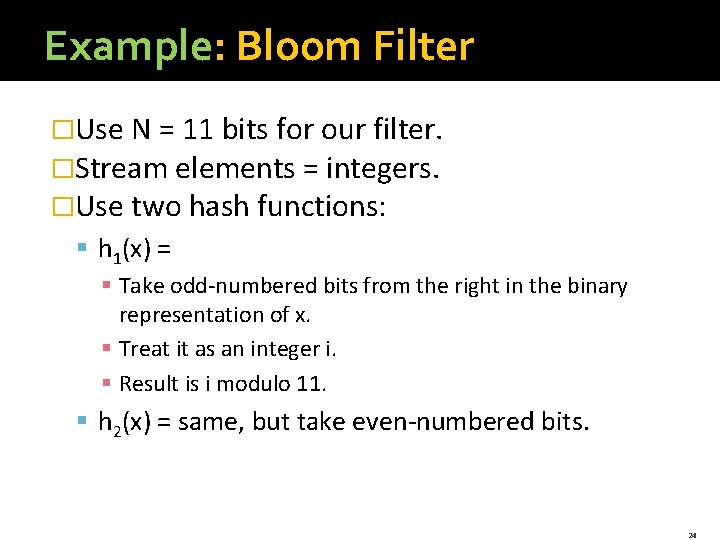

Example: Bloom Filter
- Use N = 11 bits for our filter.
- Stream elements = integers.
- Use two hash functions:
  - $h_1(x)$:
    - Take the odd-numbered bits, counting from the right, in the binary representation of x.
    - Treat them as an integer i.
    - The result is i modulo 11.
  - $h_2(x)$: the same, but take the even-numbered bits.

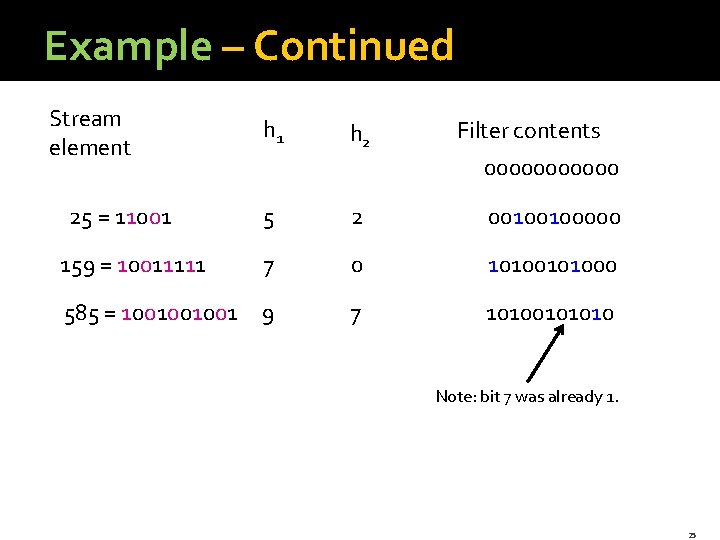

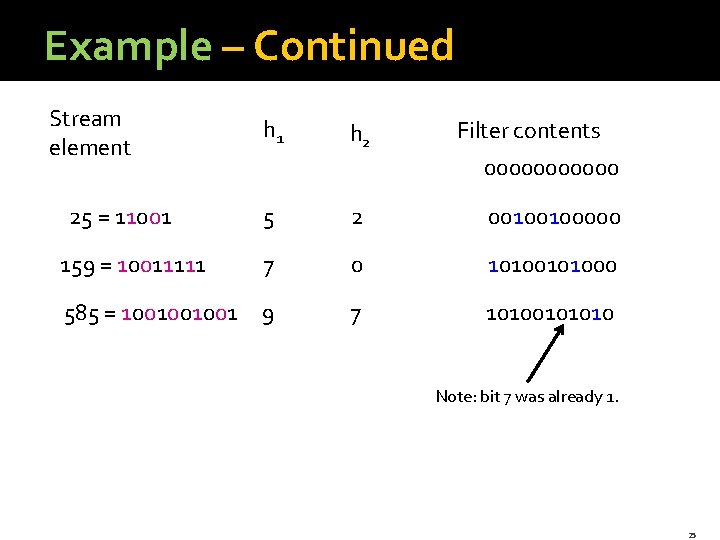

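Example – Continued

| Stream element | $h_1$ | $h_2$ | Filter contents |
|---|---|---|---|
| (initially) | | | 00000000000 |
| 25 = 11001 | 5 | 2 | 00100100000 |
| 159 = 10011111 | 7 | 0 | 10100101000 |
| 585 = 1001001001 | 9 | 7 | 10100101010 |

Filter bits are numbered 0 to 10 from the left. Note: bit 7 was already 1 when 585 arrived.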

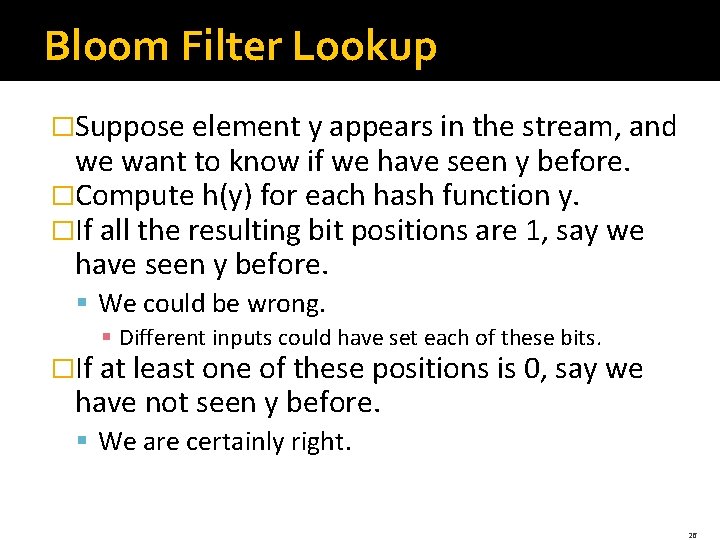

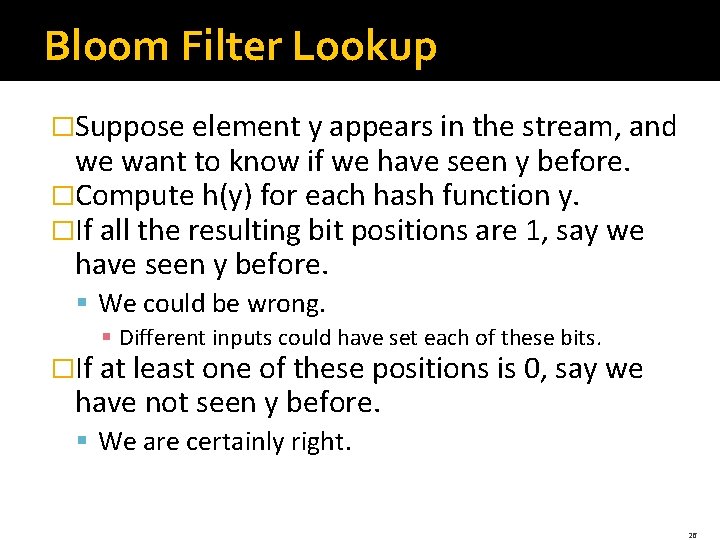

Bloom Filter Lookup
- Suppose element y appears in the stream, and we want to know if we have seen y before.
- Compute h(y) for each hash function h.
- If all the resulting bit positions are 1, say we have seen y before.
  - We could be wrong: different inputs could have set each of these bits.
- If at least one of these positions is 0, say we have not seen y before.
  - We are certainly right.
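This is a compact sketch of the insertion and lookup rules described above. The slides do not specify how to obtain k hash functions. This sketch assumes they come from salting SHA-256 with the function index, and it stores one byte per bit for readability. The class name and the example URLs (on the reserved `example.com` domain) are hypothetical. The usage checks the two guarantees: no false negatives, and a small false-positive rate.

```python
import hashlib

class BloomFilter:
    """Bit array plus k hash functions (simulated here by salting SHA-256 with the index)."""

    def __init__(self, n_bits, n_hashes):
        self.n_bits = n_bits
        self.n_hashes = n_hashes
        self.bits = bytearray(n_bits)               # all bits start at 0

    def _positions(self, x):
        for i in range(self.n_hashes):
            digest = hashlib.sha256(f"{i}:{x}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.n_bits

    def add(self, x):
        for p in self._positions(x):                # set bit h(x) for every hash function h
            self.bits[p] = 1

    def might_contain(self, x):
        # All bits 1 -> "seen before" (possibly a false positive);
        # any bit 0 -> x was certainly never added.
        return all(self.bits[p] for p in self._positions(x))

bf = BloomFilter(n_bits=10_000, n_hashes=5)
seen = [f"http://example.com/page{i}" for i in range(1000)]
for url in seen:
    bf.add(url)
unseen = [f"http://example.com/other{i}" for i in range(10_000)]
fp_rate = sum(bf.might_contain(u) for u in unseen) / len(unseen)
print(fp_rate)   # small, on the order of 1%
```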

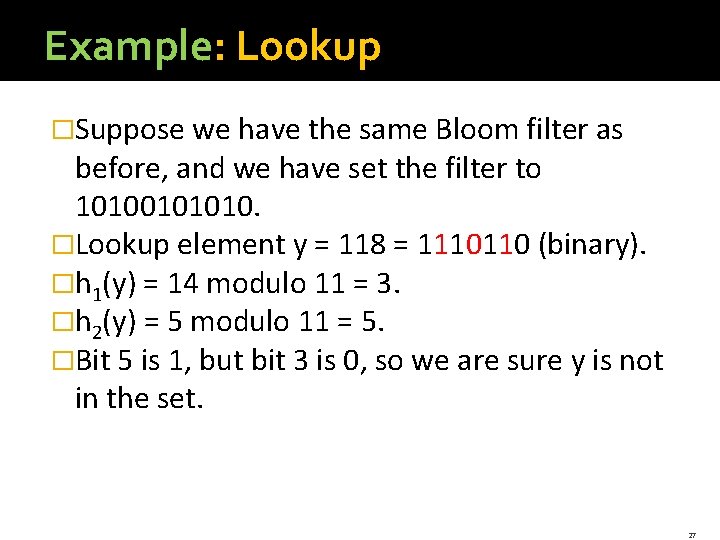

Example: Lookup
- Suppose we have the same Bloom filter as before, and the filter contents are 10100101010.
- Look up element y = 118 = 1110110 (binary).
- $h_1(y) = 14 \bmod 11 = 3$.
- $h_2(y) = 5 \bmod 11 = 5$.
- Bit 5 is 1, but bit 3 is 0, so we are sure y is not in the set.
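The worked example can be replayed in code: the odd/even-bit hash functions, the three insertions, and the lookup of 118. The helper names `split_bits` and `to_int` are hypothetical. Bit positions in `x` are counted from the right starting at 1, and `filt[0]` is the leftmost filter bit, matching the table.

```python
N = 11  # number of bits in the filter

def split_bits(x):
    """Return (odd, even): the binary digits of x at odd- and even-numbered positions,
    counting from the right starting at 1; each list is least significant first."""
    odd, even = [], []
    pos = 1
    while x:
        (odd if pos % 2 == 1 else even).append(x & 1)
        x >>= 1
        pos += 1
    return odd, even

def to_int(bits):
    # Reassemble the selected bits into an integer i (bits[0] is the least significant).
    return sum(bit << i for i, bit in enumerate(bits))

def h1(x):
    return to_int(split_bits(x)[0]) % N   # odd-numbered bits, modulo 11

def h2(x):
    return to_int(split_bits(x)[1]) % N   # even-numbered bits, modulo 11

filt = [0] * N  # filt[0] is the leftmost bit of the printed contents
for x in (25, 159, 585):
    filt[h1(x)] = 1
    filt[h2(x)] = 1
    print(x, format(x, "b"), h1(x), h2(x), "".join(map(str, filt)))

y = 118
maybe_seen = filt[h1(y)] == 1 and filt[h2(y)] == 1
print(y, format(y, "b"), h1(y), h2(y), maybe_seen)
```

The three printed insertion rows reproduce the table, ending at 10100101010. The lookup row reports `False` because bit 3 is 0.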

Performance of Bloom Filters
- The probability of a false positive depends on the density of 1's in the array and the number of hash functions:
  - $P(\text{false positive}) \approx (\text{fraction of 1's})^{\text{number of hash functions}}$
- The number of 1's is approximately the number of elements inserted times the number of hash functions.
  - But collisions lower that number slightly.

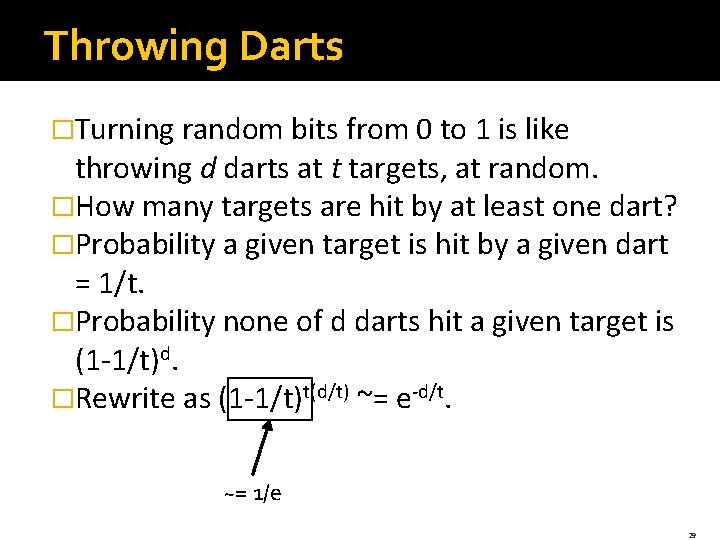

Throwing Darts
- Turning random bits from 0 to 1 is like throwing d darts at t targets, at random.
- How many targets are hit by at least one dart?
- The probability that a given target is hit by a given dart is $1/t$.
- The probability that none of the d darts hits a given target is $(1 - 1/t)^d$.
- Rewrite this as $\left((1 - 1/t)^{t}\right)^{d/t} \approx e^{-d/t}$, since $(1 - 1/t)^{t} \approx 1/e$ for large t.
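A quick Monte Carlo check of the dart-throwing approximation compares three values: the simulated fraction of untouched targets, the exact $(1 - 1/t)^d$, and $e^{-d/t}$. The sizes t = 100,000 and d = 50,000 are arbitrary choices for this sketch, picked so that $d/t = 1/2$.

```python
import math
import random

def fraction_not_hit(t, d, seed=0):
    """Throw d darts uniformly at t targets; return the fraction of targets never hit."""
    rng = random.Random(seed)
    hit = bytearray(t)
    for _ in range(d):
        hit[rng.randrange(t)] = 1
    return 1 - sum(hit) / t

t, d = 100_000, 50_000
simulated = fraction_not_hit(t, d)
exact = (1 - 1 / t) ** d          # probability a given target is missed by all d darts
approx = math.exp(-d / t)         # e^{-d/t}
print(simulated, exact, approx)   # all three close to 0.607
```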

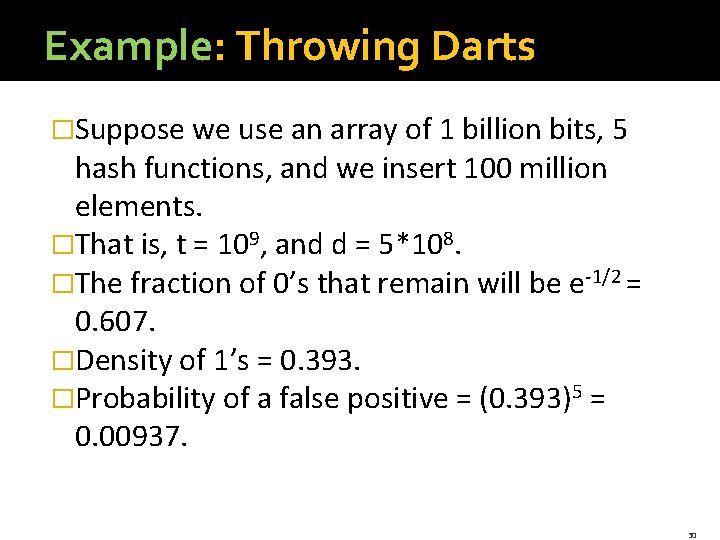

Example: Throwing Darts
- Suppose we use an array of 1 billion bits and 5 hash functions, and we insert 100 million elements.
- That is, $t = 10^9$ targets and $d = 5 \times 10^8$ darts (each of the $10^8$ elements throws one dart per hash function).
- The fraction of 0's that remain will be $e^{-1/2} = 0.607$.
- Density of 1's $= 0.393$.
- Probability of a false positive $= (0.393)^5 = 0.00937$.
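The arithmetic above can be reproduced with the variable names t, d, and k used on the slides. Without rounding the density first, the false-positive estimate comes out at about 0.0094. The slide's 0.00937 results from raising the rounded density 0.393 to the fifth power.

```python
import math

t = 10**9          # bits in the array (targets)
k = 5              # hash functions
d = k * 10**8      # darts: 100 million elements x 5 hash functions

frac_zeros = math.exp(-d / t)   # e^{-1/2}
density = 1 - frac_zeros        # fraction of 1's
fp = density ** k               # (fraction of 1's)^(number of hash functions)
print(round(frac_zeros, 3), round(density, 3), fp)
```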