Data and Storage Evolution in Run 2, Wahid Bhimji

- Slides: 20

Data and Storage Evolution in Run 2
Wahid Bhimji

Contributions / conversations / emails with many, e.g.: Brian Bockelman, Simone Campana, Philippe Charpentier, Fabrizio Furano, Vincent Garonne, Andrew Hanushevsky, Oliver Keeble, Sam Skipsey …

Introduction

- Already discussed some themes at the Copenhagen WLCG workshop:
  - Improve efficiency, flexibility and simplicity.
  - Interoperation with the wider ‘big-data’ world.
- Try to cover slightly different ground here, under similar areas:
  - WLCG technologies: activities since then.
  - ‘Wider world’ technologies.
- Caveats:
  - Not discussing networking.
  - Accepting some things as ‘done’ (on track), e.g. FTS3, commissioning of the xrootd federation, LFC migration.
- Told to ‘stimulate discussion’:
  - This time, discussion -> action: let’s agree on some things ;-).

Outline

- WLCG activities
  - Data federations / remote access
  - Operating at scale
  - Storage interfaces: SRM, WebDAV and xrootd
  - Benchmarking and I/O
- Wider world
  - Storage hardware technology
  - Storage systems, databases
  - ‘Data science’
- Discussion items

The LHC world

Storage Interfaces: SRM

- All WLCG experiments will allow non-SRM disk-only resources by or during Run 2.
- CMS already claim this (and ALICE don’t use SRM).
- ATLAS are validating in the coming months (after the Rucio migration):
  - WebDAV for deletion (a proto-service exists);
  - FTS3 non-SRM transfers;
  - alternative, namespace-based space reporting.
- LHCb are “testing the possibility to bypass SRM for most of the usages except tape-staging. … more work than anticipated... But for run 2, hopefully this will be all solved and tested.”
- The service must remain as stable/reliable when the alternative is used.
- Some sites also want VO reservations/quotas such as those provided by SRM space tokens; these should be covered by the alternative (but need not be user-definable as in SRM).
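As an illustration of what namespace-based space reporting could look like in place of SRM space tokens, here is a minimal Python sketch. It assumes, hypothetically, that each VO reservation maps to a top-level directory in the storage namespace and that the site publishes a quota per directory; the paths, quota figures and the `space_report` function are invented for illustration and do not describe any experiment's actual reporting format.

```python
from collections import defaultdict

def space_report(listing, quotas):
    """Namespace-based usage per quota root (hypothetical layout).

    listing: iterable of (path, size_bytes) pairs, e.g. from a namespace dump.
    quotas:  {directory_prefix: quota_bytes}; prefixes assumed not to nest.
    Returns {prefix: (used_bytes, quota_bytes, free_bytes)}.
    """
    used = defaultdict(int)
    for path, size in listing:
        for prefix in quotas:
            root = prefix.rstrip("/")
            # Match whole path components, so "/atlas/datadisk2/..." is not
            # counted under "/atlas/datadisk".
            if path == root or path.startswith(root + "/"):
                used[prefix] += size
                break
    return {p: (used[p], q, q - used[p]) for p, q in quotas.items()}

# Hypothetical paths and quotas, for illustration only.
listing = [("/atlas/datadisk/f1", 3_000),
           ("/atlas/scratchdisk/f2", 500),
           ("/atlas/datadisk2/f3", 100)]
quotas = {"/atlas/datadisk": 10_000, "/atlas/scratchdisk": 1_000}
print(space_report(listing, quotas))
# {'/atlas/datadisk': (3000, 10000, 7000), '/atlas/scratchdisk': (500, 1000, 500)}
```

Unlike an SRM space token, nothing here is user-definable: the quota roots are whatever the site configures, which matches the point that the alternative need not expose reservations to users.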

Xrootd data federations

- Xrootd-based data federations are in production.
- All LHC experiments are using a fallback to remote access.
- Need to incorporate the last sites …
- Being tested at scale: see the pre-GDB on data access and the SLAC federation workshop.

ATLAS failover usage over 12 weeks, example (R. Gardner):

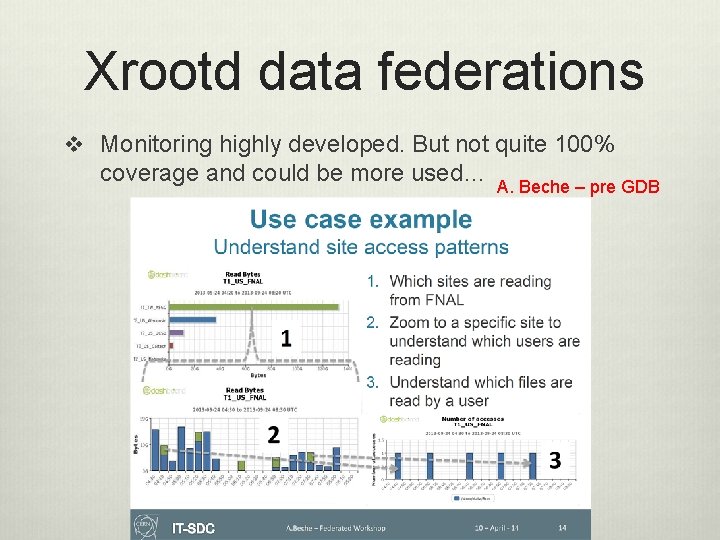

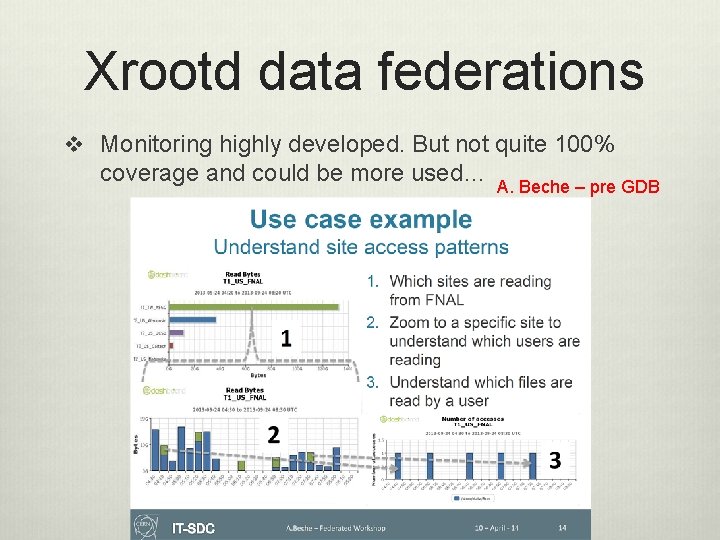
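The fallback-to-remote-access pattern can be sketched in a few lines of Python: try the site-local replica first and, if it cannot be opened, retry the same logical file name through a federation redirector. The redirector hostname, the local prefix, the `open_fn` callback and the `open_with_fallback` name are all hypothetical; experiment frameworks implement this inside their own I/O layers, not with this code.

```python
def open_with_fallback(lfn, open_fn, local_prefix, redirector):
    """Open a file locally, falling back to a federation redirector.

    lfn:          logical file name, e.g. "/store/data/f.root"
    open_fn:      callable taking a URL and returning a handle; it should
                  raise OSError when the open fails (hypothetical interface)
    local_prefix: site-local access prefix (hypothetical)
    redirector:   federation redirector host (hypothetical)
    Returns (handle, url_used).
    """
    local_url = f"{local_prefix}{lfn}"
    try:
        return open_fn(local_url), local_url
    except OSError:
        # Local replica missing or storage down: read remotely via the
        # federation, which redirects the client to a site holding a copy.
        # xrootd URLs put the absolute path after a double slash.
        remote_url = f"root://{redirector}/{lfn}"
        return open_fn(remote_url), remote_url

def fake_open(url):
    # Stand-in opener: pretend the local replica is missing.
    if url.startswith("file:"):
        raise OSError("no local replica")
    return f"<handle {url}>"

handle, used = open_with_fallback("/store/data/f.root", fake_open,
                                  "file:///mnt/storage", "redirector.example.org")
print(used)  # root://redirector.example.org//store/data/f.root
```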

Xrootd data federations

- Monitoring is highly developed, but coverage is not quite 100% and it could be used more …

A. Beche, pre-GDB:

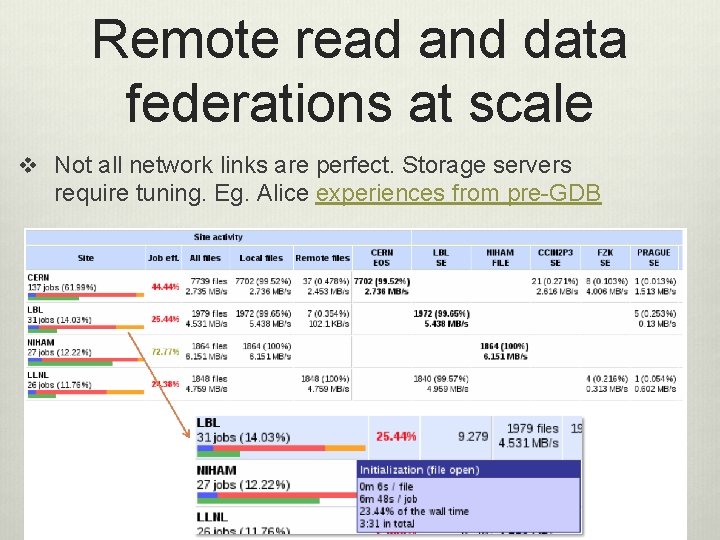

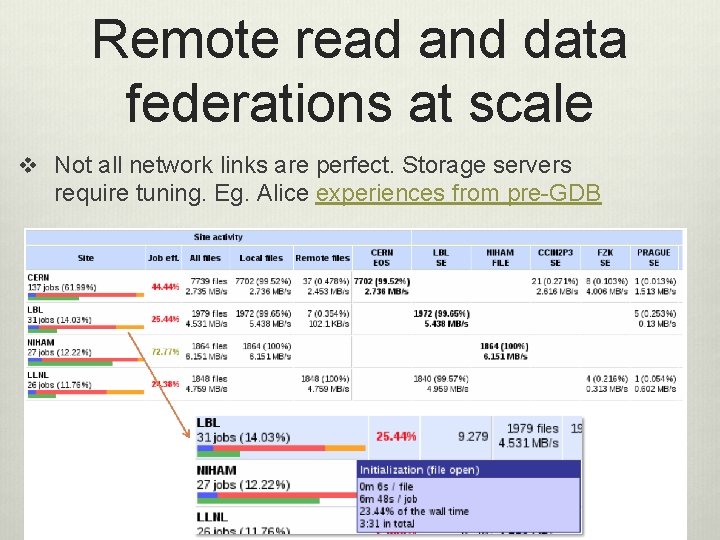

Remote read and data federations at scale

- Not all network links are perfect.
- Storage servers require tuning.
- E.g. ALICE experiences from the pre-GDB.

Remote read at scale

- Sharing between hungry VOs could be a challenge. Analysis jobs vary:
  - CMS quote < 1 MB/s;
  - ALICE average 2 MB/s;
  - the ATLAS H->WW HammerCloud benchmark needs 20 MB/s to be 100% CPU efficient.
- Sites can use their own network infrastructure to protect themselves. VOs shouldn’t try to micro-manage, but there is a strong desire for storage plugins (e.g. an xrootd throttling plugin).

E.g. ATLAS H->WW being throttled by a 1 Gb/s NAT, with a corresponding decrease in event rate.
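The per-job rates above translate directly into how many jobs a link can feed. A back-of-envelope Python sketch, assuming decimal units (1 Gb/s = 125 MB/s), a link shared evenly between jobs, and a simplified model in which CPU efficiency falls linearly once a job gets less bandwidth than it needs (real jobs also stall on latency, so this is optimistic):

```python
LINK_MB_PER_S = 1_000 / 8  # 1 Gb/s expressed in MB/s (decimal units) = 125.0

# Per-job read rates quoted above (MB/s); the CMS figure is an upper bound.
RATES = {"CMS": 1.0, "ALICE": 2.0, "ATLAS H->WW": 20.0}

def jobs_at_full_efficiency(link_mb_s, job_mb_s):
    """How many jobs can share the link without being I/O starved."""
    return int(link_mb_s // job_mb_s)

def cpu_efficiency(link_mb_s, n_jobs, job_mb_s):
    """Simplified model: efficiency scales with bandwidth share, capped at 1."""
    share = link_mb_s / n_jobs
    return min(1.0, share / job_mb_s)

for vo, rate in RATES.items():
    print(vo, jobs_at_full_efficiency(LINK_MB_PER_S, rate))
# CMS 125
# ALICE 62
# ATLAS H->WW 6

# 20 ATLAS H->WW jobs behind one 1 Gb/s NAT:
print(cpu_efficiency(LINK_MB_PER_S, 20, 20.0))  # 0.3125
```

Only about six of the 20 MB/s jobs fit on a 1 Gb/s link, which is consistent with the throttled event rate seen behind a 1 Gb/s NAT.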

HTTP / WebDAV

- XrdHTTP is done (in xrootd 4), giving xrootd sites the potential to offer an HTTP interface.
- DPM, dCache and StoRM also offer one, so HTTP will be universally available.
- Monitoring: much is available (e.g. in Apache) but not currently used in WLCG.

Fabrizio Furano, pre-GDB:

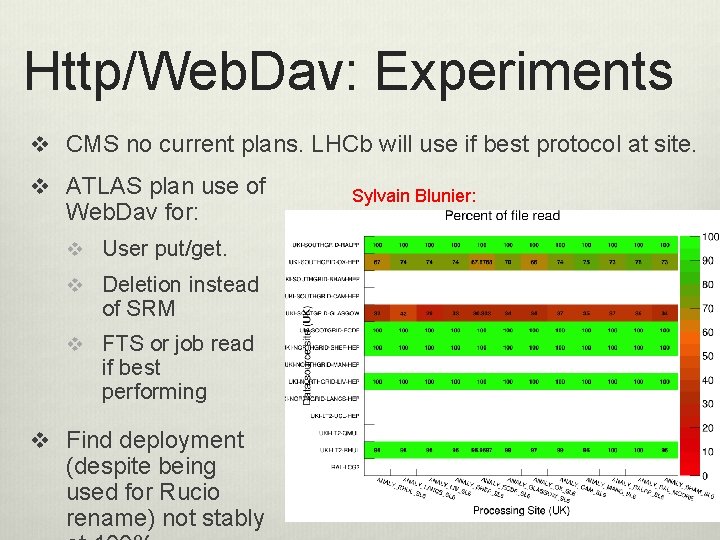

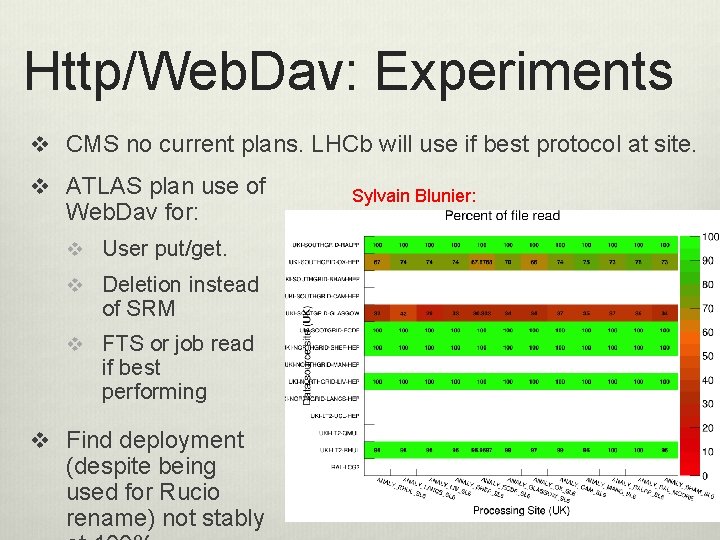
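With every major storage system offering WebDAV, listing a directory is a single PROPFIND request (typically with a `Depth: 1` header) that returns an XML ‘multistatus’ document as defined in RFC 4918. The Python sketch below parses such a response with the standard library to get file paths and sizes; the sample response is hand-written for illustration, not captured from DPM, dCache, StoRM or XrdHTTP, and real servers may return further properties.

```python
import xml.etree.ElementTree as ET

DAV = "{DAV:}"  # WebDAV XML namespace in ElementTree's {uri}tag notation

def parse_multistatus(xml_text):
    """Return {href: size_bytes} for entries reporting getcontentlength.

    Collections (directories) normally carry no getcontentlength and
    are skipped.
    """
    root = ET.fromstring(xml_text)
    sizes = {}
    for resp in root.iter(f"{DAV}response"):
        href = resp.findtext(f"{DAV}href")
        length = resp.find(f".//{DAV}getcontentlength")
        if href is not None and length is not None and length.text:
            sizes[href] = int(length.text)
    return sizes

# Hand-written example response (hypothetical paths).
SAMPLE = """<d:multistatus xmlns:d="DAV:">
  <d:response>
    <d:href>/atlas/data/</d:href>
    <d:propstat><d:prop><d:resourcetype><d:collection/></d:resourcetype></d:prop>
    <d:status>HTTP/1.1 200 OK</d:status></d:propstat>
  </d:response>
  <d:response>
    <d:href>/atlas/data/file1.root</d:href>
    <d:propstat><d:prop><d:getcontentlength>1048576</d:getcontentlength></d:prop>
    <d:status>HTTP/1.1 200 OK</d:status></d:propstat>
  </d:response>
</d:multistatus>"""

print(parse_multistatus(SAMPLE))  # {'/atlas/data/file1.root': 1048576}
```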

HTTP/WebDAV: experiments

- CMS: no current plans. LHCb will use it if it is the best protocol at a site.
- ATLAS plan to use WebDAV for:
  - user put/get;
  - deletion instead of SRM;
  - FTS or job reads, if best performing.
- ATLAS find the deployment not yet stable (despite WebDAV being used for the Rucio rename).

Sylvain Blunier:

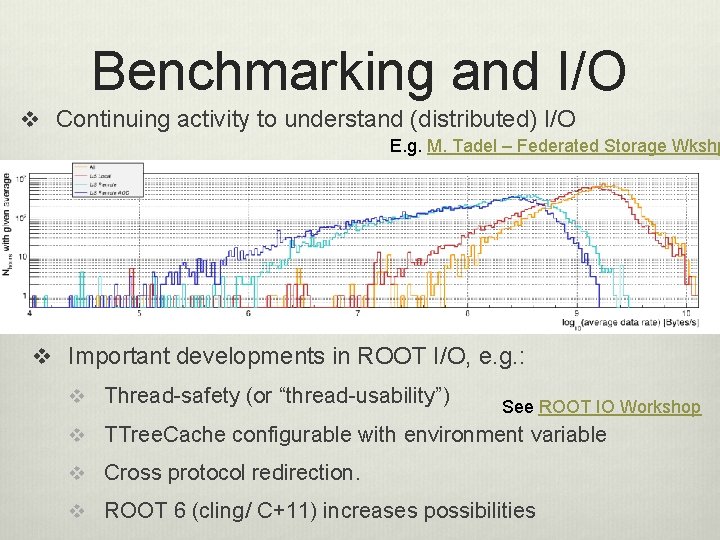

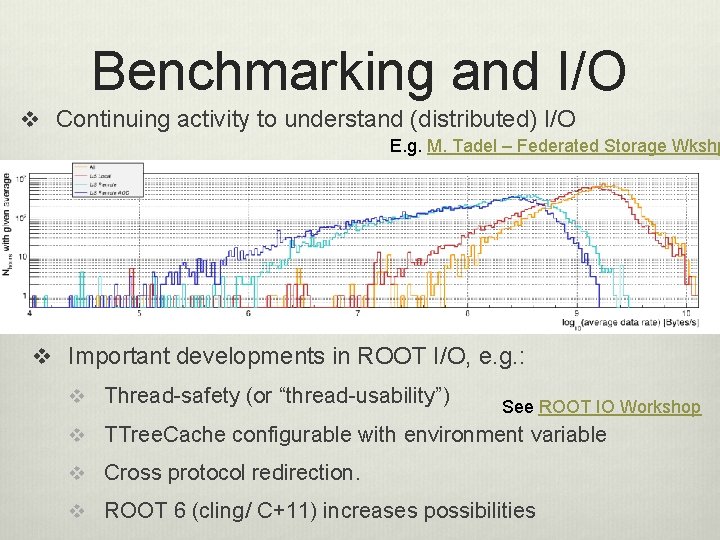
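Deletion over WebDAV, in place of an SRM call, is a plain HTTP DELETE on the file’s URL. A minimal Python sketch using only the standard library: it builds the request without sending it, since a real endpoint also needs X.509 or token authentication (omitted here). The endpoint URL is a placeholder, and treating 404 as success is an assumed design choice for an idempotent deletion service, not a documented ATLAS policy.

```python
import urllib.request

def build_delete(url):
    """Build an HTTP DELETE request for a file on a WebDAV endpoint.

    Sending is left to the caller, e.g. urllib.request.urlopen(req, context=ctx)
    with an SSL context carrying grid credentials (not shown).
    """
    return urllib.request.Request(url, method="DELETE")

def deletion_succeeded(status):
    # 200, 202 and 204 indicate a successful DELETE; 404 is treated here
    # as "already gone" so that retried deletions do not count as errors.
    return status in (200, 202, 204, 404)

# Placeholder endpoint and path, for illustration only.
req = build_delete("https://webdav.example.org/atlas/scratchdisk/file1.root")
print(req.get_method(), req.full_url)
# DELETE https://webdav.example.org/atlas/scratchdisk/file1.root
```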

Benchmarking and I/O

- Continuing activity to understand (distributed) I/O, e.g. M. Tadel, Federated Storage Workshop.
- Important developments in ROOT I/O, e.g.:
  - thread-safety (or “thread-usability”): see the ROOT I/O Workshop;
  - TTreeCache configurable with an environment variable;
  - cross-protocol redirection;
  - ROOT 6 (cling / C++11) increases the possibilities.
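Much of TTreeCache’s benefit over a remote protocol comes from replacing many small reads with a few large (vector) reads. The Python sketch below illustrates only that idea, merging requested byte ranges whose gap is below a threshold; it is not ROOT’s implementation, and the `coalesce` function and its `max_gap` parameter are hypothetical.

```python
def coalesce(ranges, max_gap):
    """Merge (offset, length) byte ranges separated by at most max_gap bytes.

    Reading a few wasted gap bytes is usually cheaper over a WAN than
    paying an extra round trip for each small read.
    """
    merged = []  # list of [start, end) pairs
    for off, length in sorted(ranges):
        end = off + length
        if merged and off - merged[-1][1] <= max_gap:
            # Close enough to (or overlapping) the previous range: extend it.
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([off, end])
    return [(start, end - start) for start, end in merged]

# Five scattered basket reads become two requests.
reqs = [(0, 100), (120, 50), (4000, 10), (180, 20), (4015, 5)]
print(coalesce(reqs, max_gap=64))  # [(0, 200), (4000, 20)]
```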

The rest of the world

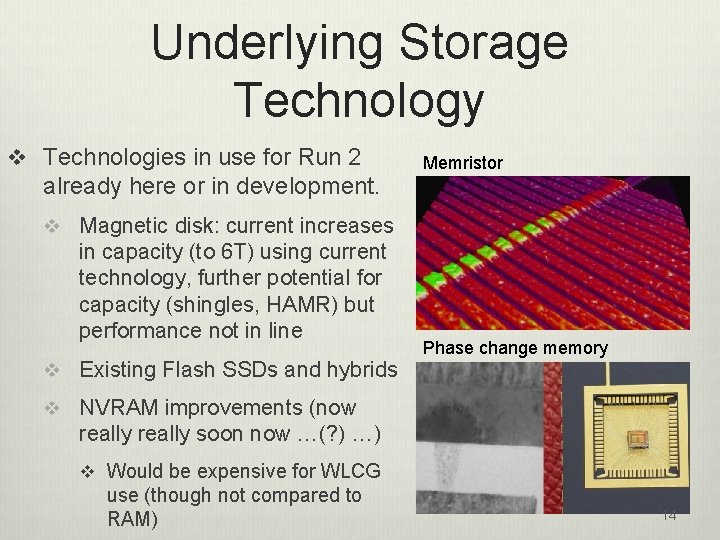

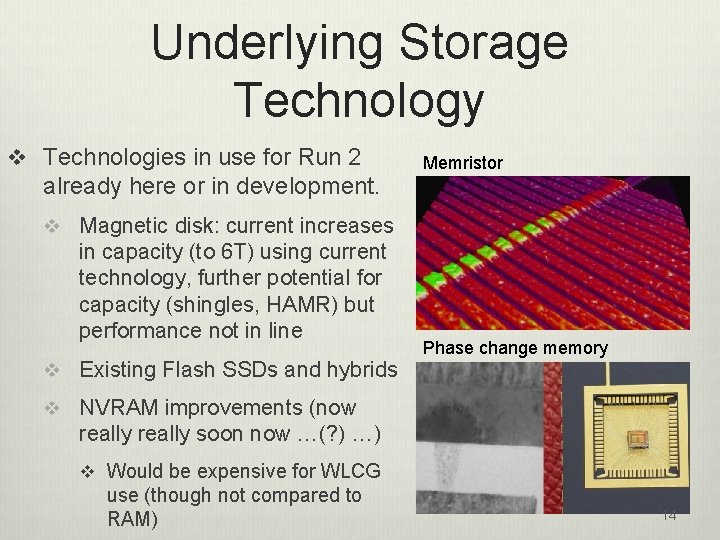

Underlying Storage Technology

- Technologies in use for Run 2 are already here or in development.
- Magnetic disk: capacity is currently increasing (to 6 TB) with current technology, with further potential for capacity (shingled recording, HAMR), but performance is not keeping pace.
- Existing flash SSDs and hybrids.
- NVRAM improvements (e.g. memristor, phase change memory), coming “real soon now” (?) …
  - Would be expensive for WLCG use (though not compared to RAM).
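A quick calculation shows why capacity growth without matching performance matters. Assuming, for illustration only (this figure is not from the slide), a sustained sequential rate of about 150 MB/s per disk that stays roughly constant as capacity grows:

```python
SEQ_MB_PER_S = 150  # assumed sustained rate of one HDD in MB/s (illustrative)

def full_read_hours(capacity_tb, rate_mb_s=SEQ_MB_PER_S):
    """Hours to stream an entire disk once (decimal units: 1 TB = 1e6 MB)."""
    return capacity_tb * 1e6 / rate_mb_s / 3600

def mb_s_per_tb(capacity_tb, rate_mb_s=SEQ_MB_PER_S):
    """Bandwidth available per TB stored; it falls as capacity rises."""
    return rate_mb_s / capacity_tb

for tb in (2, 4, 6):
    print(f"{tb} TB: {full_read_hours(tb):.1f} h to read, "
          f"{mb_s_per_tb(tb):.0f} MB/s per TB")
# last line: 6 TB: 11.1 h to read, 25 MB/s per TB
```

Under these assumptions, tripling capacity from 2 TB to 6 TB cuts the bandwidth per TB stored to a third.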

Storage Systems

- ‘Cloud’ (non-POSIX) scalable solutions.
- Algorithmic data placement.
- RAIN fault tolerance becoming common/standard.
- “Software-defined storage”.
- E.g. Ceph, HDFS + RAIN, ViPR.
- WLCG sites are interested in using such technologies, and we should be flexible enough to use them.

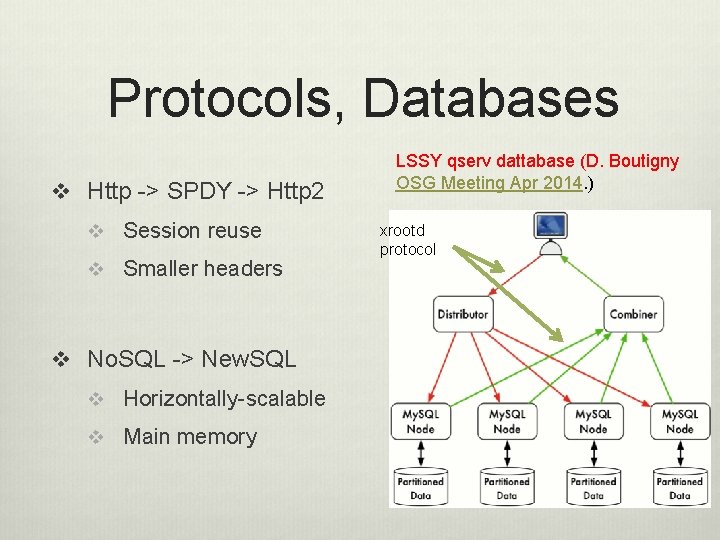

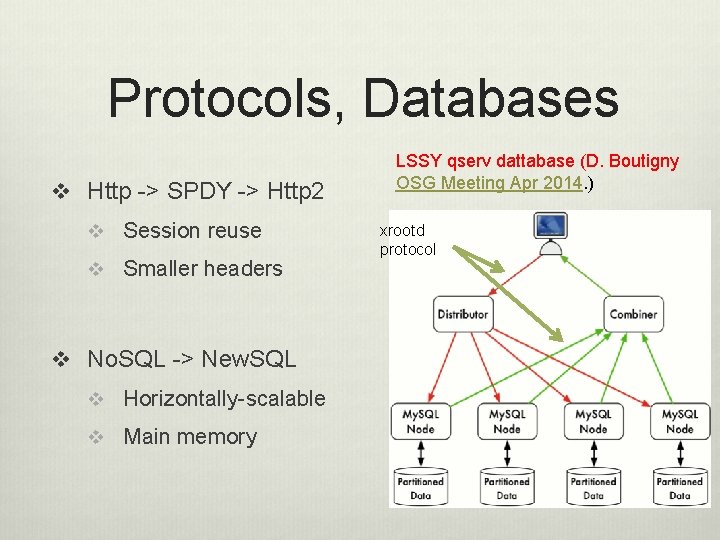
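Algorithmic data placement means any client can compute where an object lives from its name alone, without a central catalogue lookup. Ceph does this with its CRUSH algorithm; the Python sketch below swaps in a simpler technique, rendezvous (highest-random-weight) hashing, to show the principle. Server names and the `place` function are hypothetical.

```python
import hashlib

def _score(obj, server):
    # Deterministic pseudo-random weight for an (object, server) pair.
    digest = hashlib.sha256(f"{obj}|{server}".encode()).digest()
    return int.from_bytes(digest[:8], "big")

def place(obj, servers, replicas=2):
    """Pick `replicas` distinct servers for obj by highest hash score.

    Every client computing this gets the same answer, and removing a
    server only moves objects that had a replica on that server.
    """
    ranked = sorted(servers, key=lambda s: _score(obj, s), reverse=True)
    return ranked[:replicas]

# Hypothetical disk servers.
servers = [f"disk{i:02d}.example.org" for i in range(8)]
print(place("/atlas/data/file1.root", servers))
```

Placement is independent of the order in which servers are listed, which is what lets every client agree without coordination.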

Protocols, Databases

- HTTP -> SPDY -> HTTP/2:
  - session reuse;
  - smaller headers.
- NoSQL -> NewSQL:
  - horizontally scalable;
  - main memory.
- The LSST qserv database uses the xrootd protocol (D. Boutigny, OSG Meeting, Apr 2014).

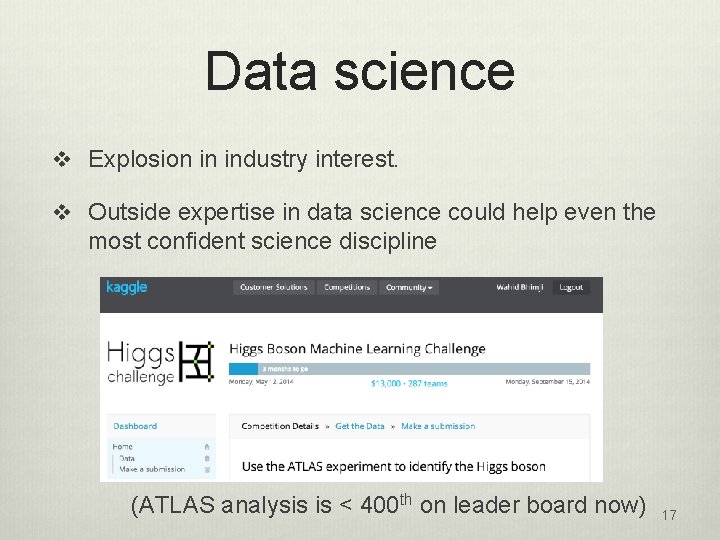

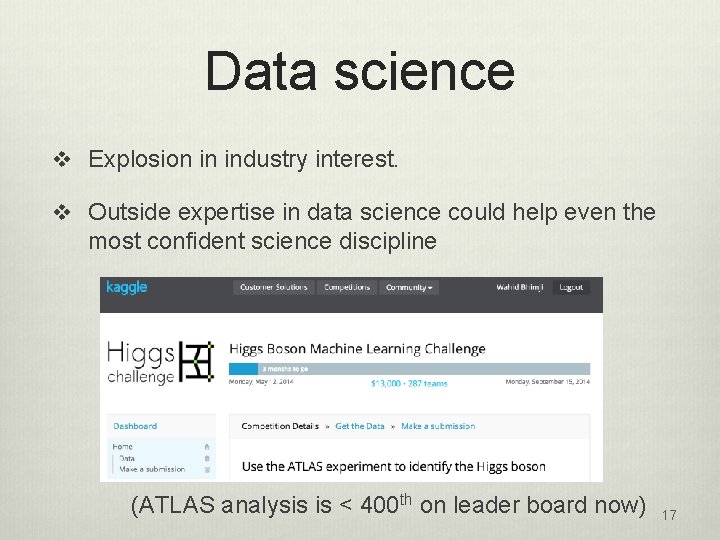
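The benefit of session reuse is easy to estimate. The Python sketch below assumes that a TCP handshake costs one round trip, a full TLS 1.2 handshake two more, and each request/response one round trip, ignoring bandwidth, slow start and TLS session resumption. The request count and the 50 ms round-trip time are illustrative, not measurements.

```python
def total_latency_ms(n_requests, rtt_ms, reuse, setup_rtts=3, request_rtts=1):
    """Round-trip cost of n sequential requests, in milliseconds.

    setup_rtts: connection setup (1 RTT TCP + 2 RTT TLS 1.2 full handshake).
    reuse=True pays setup once (a persistent connection or HTTP/2 session);
    reuse=False opens a fresh connection for every request.
    """
    setups = 1 if reuse else n_requests
    return rtt_ms * (setups * setup_rtts + n_requests * request_rtts)

# 100 small sequential requests over a 50 ms round-trip time.
print(total_latency_ms(100, 50, reuse=False))  # 20000
print(total_latency_ms(100, 50, reuse=True))   # 5150
```

HTTP/2 goes further than keep-alive by multiplexing concurrent requests over the one session, which this sequential model does not capture.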

Data science

- Explosion in industry interest.
- Outside expertise in data science could help even the most confident science discipline (the ATLAS analysis is ranked below 400th on the leaderboard now).

Discussion

Relaxing requirements …

- For example, having an appropriate level of protection for data readability:
  - Removing technical read protection would not change practical protection, as non-VO site admins can currently read the data anyway, and no one else can interpret it.
  - Storage developers should first demonstrate the gain (performance or simplification); then we could push for this.
- Similarly for other barriers, for example to object-store-like scaling and to integrating non-HEP resources …

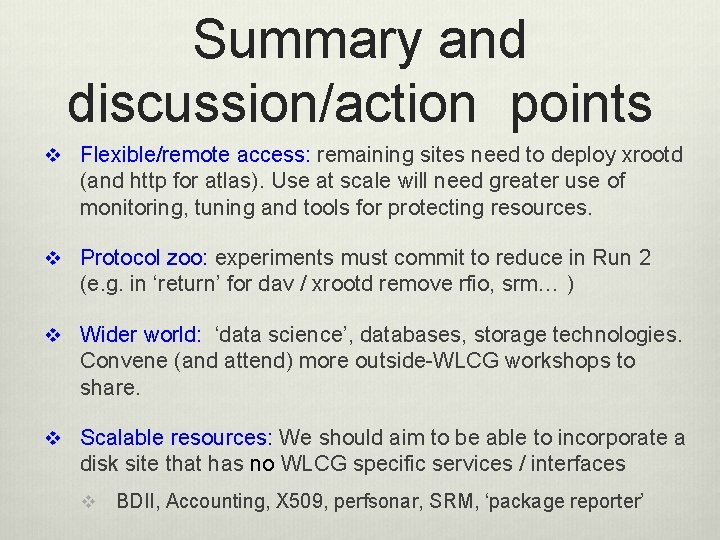

Summary and discussion/action points

- Flexible/remote access: remaining sites need to deploy xrootd (and HTTP for ATLAS). Use at scale will need greater use of monitoring, tuning and tools for protecting resources.
- Protocol zoo: experiments must commit to reducing it in Run 2 (e.g. in ‘return’ for DAV/xrootd, remove rfio, SRM …).
- Wider world: ‘data science’, databases, storage technologies. Convene (and attend) more outside-WLCG workshops to share.
- Scalable resources: we should aim to be able to incorporate a disk site that has no WLCG-specific services/interfaces:
  - BDII, accounting, X.509, perfSONAR, SRM, ‘package reporter’.