Data and Computation Grid Decoupling in STAR: An Analysis Scenario using SRM Technology

- Slides: 15

Data and Computation Grid Decoupling in STAR: An Analysis Scenario using SRM Technology

Eric Hjort (LBNL); Jérôme Lauret, Hajdu Levente (BNL); Alex Sim, Arie Shoshani, Doug Olson (LBNL)

CHEP ’06, February 13-17, 2006, T.I.F.R., Mumbai, India

CHEP ’06, Eric Hjort, Jérôme Lauret

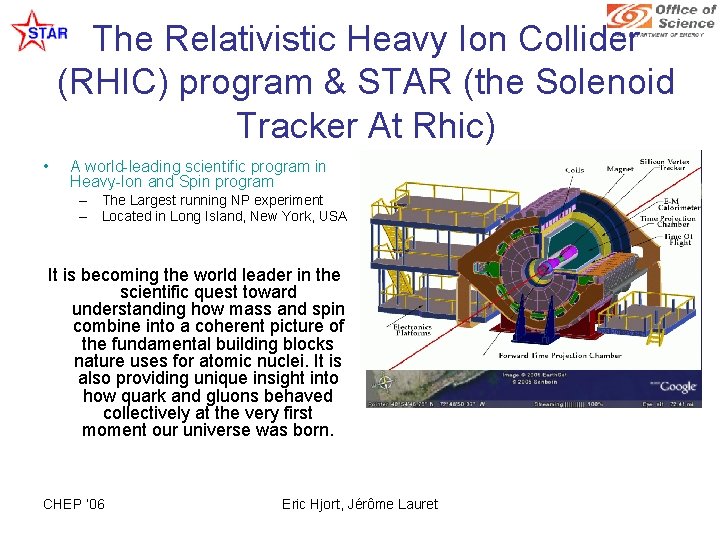

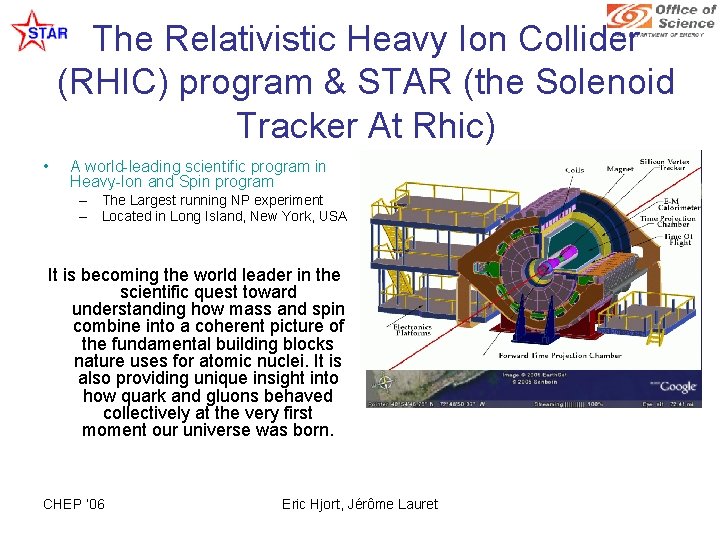

The Relativistic Heavy Ion Collider (RHIC) program & STAR (the Solenoid Tracker At RHIC)

- A world-leading scientific program in Heavy-Ion and Spin physics
  - The largest running nuclear physics (NP) experiment
  - Located on Long Island, New York, USA

RHIC is becoming the world leader in the scientific quest toward understanding how mass and spin combine into a coherent picture of the fundamental building blocks nature uses for atomic nuclei. It is also providing unique insight into how quarks and gluons behaved collectively in the very first moments of our universe.

A few relevant STAR Grid Computing Objectives

- Provide QoS for routine data production / data mining
- Provide user analysis on the Grid …
- Access Open Science Grid resources (reserved or opportunistic)
- Run grid-based production and user analysis jobs at distributed STAR institutions, with
  - Load balancing across sites
  - Convenient use of remote resources
- Extend to non-STAR grid sites and opportunistic computing
  - Increase the resources available to STAR
  - Jobs must be self-contained
  - Jobs must have the same "features" as normal batch …

Analysis Jobs method

- We will speak of batch-oriented jobs
- YESTERDAY: job submission based on the STAR Unified Meta-Scheduler (SUMS)
  - User specifies the job details in an XML file
  - SUMS includes a plug-and-play file catalog module: dataset to file list
  - Submits jobs to the local batch system (LSF, SGE, etc.)
- TODAY: extension to grid jobs
  - SUMS remains the gateway to job submission
- Grid: submits to remote sites with Condor-G (yet another dispatcher in the flexible framework)
  - Small files (scripts, apps, etc.) are moved via Condor-G to the SUMS "sandbox" (standard archives)
  - stdout and stderr are returned to the submission site by Condor with Grid Monitor
- Problem to resolve
  - Outputs (from production or Monte Carlo simulation) can be large (several GB)
  - Results from user analysis are numerous (sometimes several tens of thousands of files per day)
  - Requires reliable and efficient data movement and space management (failure requirements are less stringent for user analysis)

Input and output files are moved to and from the remote site through DRM-managed space.
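The SUMS flow above (a catalog module turns a dataset into a file list, which is then split into jobs for a dispatcher) can be sketched as below. This is a minimal illustration, not SUMS code: the catalog contents, `JobSpec`, `resolve_dataset`, and `split_into_jobs` are hypothetical, and the real SUMS reads its job description from the user's XML file.

```python
from dataclasses import dataclass, field

# Hypothetical stand-in for the SUMS file-catalog plug-in:
# maps a dataset name to the list of files it contains.
FAKE_CATALOG = {
    "example_dataset": [f"/star/data/file_{i:03d}.root" for i in range(7)],
}


@dataclass
class JobSpec:
    """One batch or grid job: a command plus the input files it processes."""
    job_id: int
    command: str
    inputs: list = field(default_factory=list)


def resolve_dataset(name: str) -> list:
    """Dataset -> file list (the role of the catalog module)."""
    return list(FAKE_CATALOG[name])


def split_into_jobs(command: str, files: list, max_files_per_job: int) -> list:
    """Chunk the file list so that each job gets at most max_files_per_job inputs."""
    if max_files_per_job < 1:
        raise ValueError("max_files_per_job must be at least 1")
    chunks = [files[i:i + max_files_per_job]
              for i in range(0, len(files), max_files_per_job)]
    return [JobSpec(job_id=n, command=command, inputs=chunk)
            for n, chunk in enumerate(chunks)]


if __name__ == "__main__":
    files = resolve_dataset("example_dataset")
    for job in split_into_jobs("run_analysis", files, max_files_per_job=3):
        # A dispatcher (local batch system or Condor-G) would submit each JobSpec.
        print(job.job_id, len(job.inputs))
```

Keeping the dispatcher out of the splitting step is what lets a grid dispatcher slot in beside the local batch one without changing how jobs are described.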

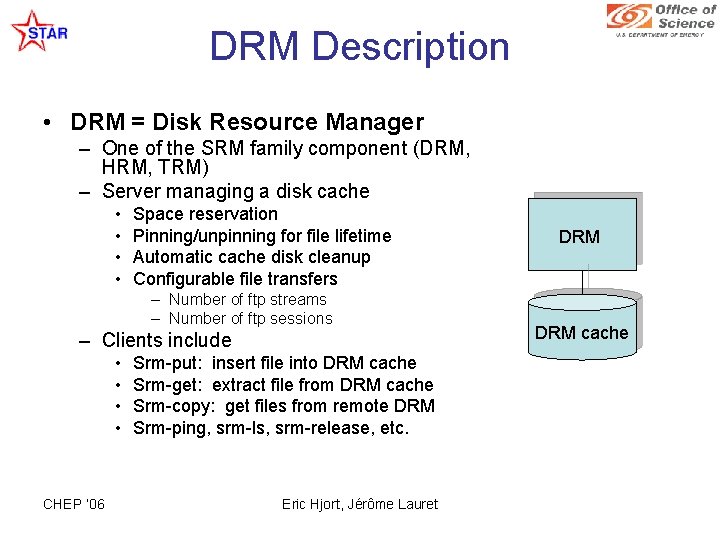

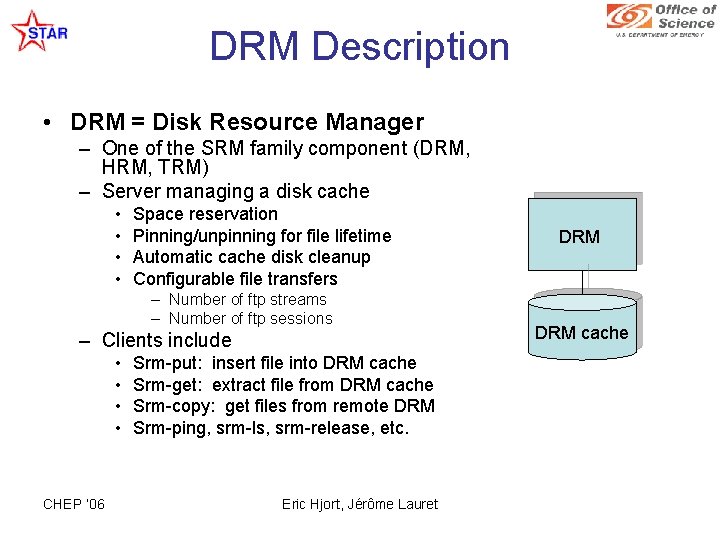

DRM Description

- DRM = Disk Resource Manager
  - One of the SRM family components (DRM, HRM, TRM)
  - Server managing a disk cache
    - Space reservation
    - Pinning/unpinning for file lifetime
    - Automatic cache disk cleanup
    - Configurable file transfers: number of FTP streams, number of FTP sessions
  - Clients include
    - srm-put: insert a file into the DRM cache
    - srm-get: extract a file from the DRM cache
    - srm-copy: get files from a remote DRM
    - srm-ping, srm-ls, srm-release, etc.
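The cache behaviour listed above (space reservation, pins with a lifetime, automatic cleanup) can be illustrated with a toy in-memory model. This is a sketch under stated assumptions, not the DRM implementation or its API: `ToyDiskCache`, its methods, and the eviction rule (only files whose pin has expired are dropped) are hypothetical. The clock is injectable so the behaviour can be tested without waiting.

```python
import time
from dataclasses import dataclass


@dataclass
class CachedFile:
    size: int            # any consistent unit (bytes, MB, ...)
    pin_expires: float   # time until which the file must not be evicted


class ToyDiskCache:
    """Toy managed disk cache: reserve space, pin files for a lifetime,
    and clean up files whose pins have expired. Hypothetical names only."""

    def __init__(self, capacity: int, clock=time.monotonic):
        self.capacity = capacity
        self.clock = clock
        self.files = {}  # name -> CachedFile

    def used(self) -> int:
        return sum(f.size for f in self.files.values())

    def cleanup(self) -> list:
        """Evict every file whose pin has expired; return the evicted names."""
        now = self.clock()
        expired = [n for n, f in self.files.items() if f.pin_expires <= now]
        for n in expired:
            del self.files[n]
        return expired

    def put(self, name: str, size: int, lifetime: float) -> bool:
        """Reserve space and pin the file for `lifetime` seconds.
        Returns False if no space can be found; the caller queues and retries."""
        if name in self.files:
            raise ValueError(f"{name} is already cached")
        if self.used() + size > self.capacity:
            self.cleanup()  # try to reclaim space held by expired pins
        if self.used() + size > self.capacity:
            return False
        self.files[name] = CachedFile(size, self.clock() + lifetime)
        return True

    def release(self, name: str) -> None:
        """Unpin: the file becomes eligible for the next cleanup."""
        if name in self.files:
            self.files[name].pin_expires = self.clock()
```

Returning `False` instead of blocking reflects the idea that transfers are queued when space is short. A real DRM's reservation and eviction policies may differ.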

Approach Requirements

- User authentication at remote sites
  - User gets a DOEgrids certificate
  - Registration in the STAR VO with VOMRS
  - Remote site policy allows STAR jobs via OSG
- DRM running at the remote site
  - Lightweight installation (binaries deployable on the fly)
  - Client is part of the OSG/VDT installation
  - Service details are published through the OSG framework
  - Minimal disk space needed (~100 GB) for the DRM cache
  - The intent is not to manage large amounts (TBs) of files

Benefits

- No STAR-specific remote installations needed
- SUMS interface similar to that for local jobs
  - Input files and catalog queries are local
  - Output files are returned to the submission site, owned by the user
- DRM-managed file transfers reduce gatekeeper load
  - The number of concurrent gridftp sessions is controlled
  - Files are queued for transfer as necessary

The gatekeeper is protected when many jobs start or stop simultaneously.
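The gatekeeper protection described on this slide comes from capping concurrent transfer sessions and queueing the rest. Below is a minimal sketch that assumes a limit of 3 sessions and uses a thread pool to simulate a burst of jobs finishing at once. `SessionLimiter` is hypothetical, and `time.sleep` stands in for a gridftp transfer.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class SessionLimiter:
    """Caps the number of simultaneous transfer sessions; extra requests wait."""

    def __init__(self, max_sessions: int):
        self._slots = threading.BoundedSemaphore(max_sessions)
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0  # highest number of sessions ever open at once

    def transfer(self, name: str) -> str:
        with self._slots:  # blocks (queues) while all slots are busy
            with self._lock:
                self.active += 1
                self.peak = max(self.peak, self.active)
            try:
                time.sleep(0.01)  # stand-in for moving the file
            finally:
                with self._lock:
                    self.active -= 1
        return name


def burst(limiter: SessionLimiter, n_jobs: int) -> list:
    """Simulate n_jobs finishing together, each requesting one output transfer."""
    names = [f"out_{i}.root" for i in range(n_jobs)]
    with ThreadPoolExecutor(max_workers=n_jobs) as pool:
        return list(pool.map(limiter.transfer, names))


if __name__ == "__main__":
    limiter = SessionLimiter(max_sessions=3)
    done = burst(limiter, 20)
    print(len(done), "transfers, peak concurrent sessions:", limiter.peak)
```

All 20 requests are accepted immediately, but no more than 3 are ever in flight. That is the sense in which a burst is buffered rather than passed straight through to the gatekeeper.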

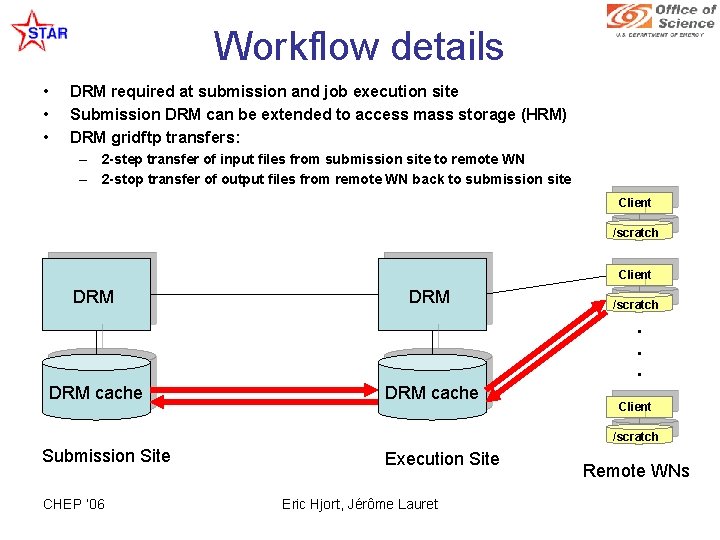

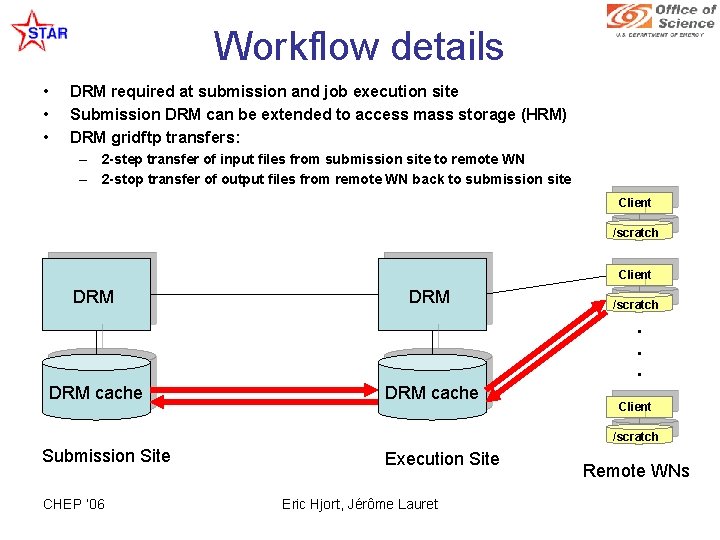

Workflow details

- DRM required at both the submission site and the job execution site
- The submission-site DRM can be extended to access mass storage (HRM)
- DRM gridftp transfers:
  - 2-step transfer of input files from the submission site to the remote worker node (WN)
  - 2-step transfer of output files from the remote WN back to the submission site

[Figure: submission site with its DRM; execution site with a DRM cache serving remote WNs, each running an SRM client with local /scratch]
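The 2-step-in / 2-step-out pattern can be written as an ordered list of transfers and checked for continuity: each step starts where the previous one ended, and the last step returns to the submission site. The location labels and the `Step` type are illustrative choices made for this sketch. The client commands are the ones named on the transfer slides.

```python
from typing import NamedTuple


class Step(NamedTuple):
    number: int
    client: str    # SRM client command named on the slides
    network: str   # "WAN" or "LAN"
    source: str
    dest: str


# Illustrative location labels; the application runs in WN /scratch
# between step 2 and step 3.
WORKFLOW = [
    Step(1, "srm-copy", "WAN", "submission site", "execution-site DRM cache"),
    Step(2, "srm-get", "LAN", "execution-site DRM cache", "WN /scratch"),
    Step(3, "srm-put", "LAN", "WN /scratch", "execution-site DRM cache"),
    Step(4, "srm-copy", "WAN", "execution-site DRM cache", "submission site"),
]


def is_closed_chain(steps: list) -> bool:
    """True if every step starts where the previous one ended and the
    last step brings data back to where the first one started."""
    consecutive = all(a.dest == b.source for a, b in zip(steps, steps[1:]))
    return consecutive and steps[-1].dest == steps[0].source


if __name__ == "__main__":
    for s in WORKFLOW:
        print(f"#{s.number} {s.client:8} {s.network}: {s.source} -> {s.dest}")
    print("closed round trip:", is_closed_chain(WORKFLOW))
```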

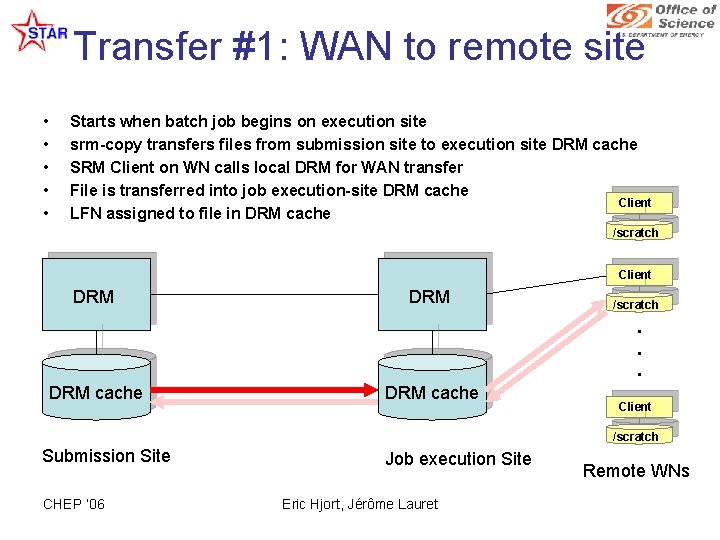

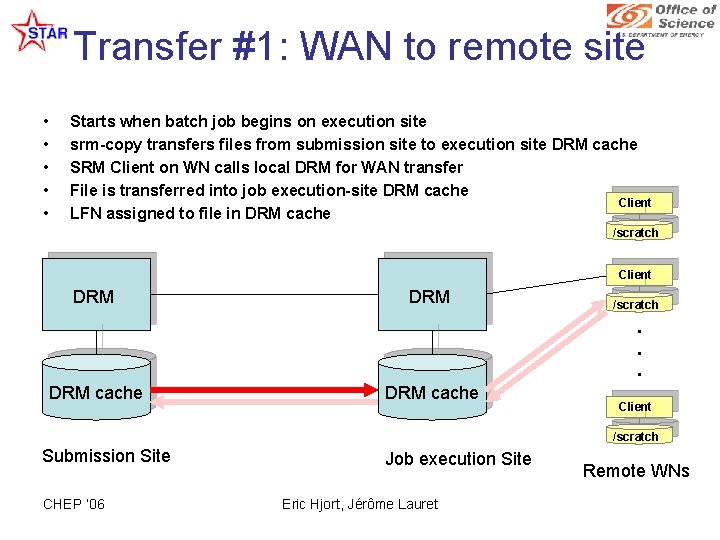

Transfer #1: WAN to remote site

- Starts when the batch job begins on the execution site
- srm-copy transfers files from the submission site to the execution-site DRM cache
  - The SRM client on the WN calls the local DRM for the WAN transfer
  - The file is transferred into the job execution-site DRM cache
- A logical file name (LFN) is assigned to the file in the DRM cache

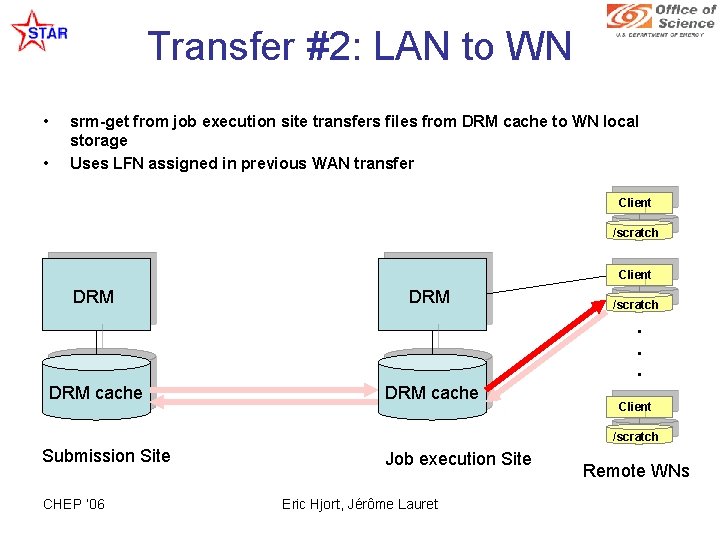

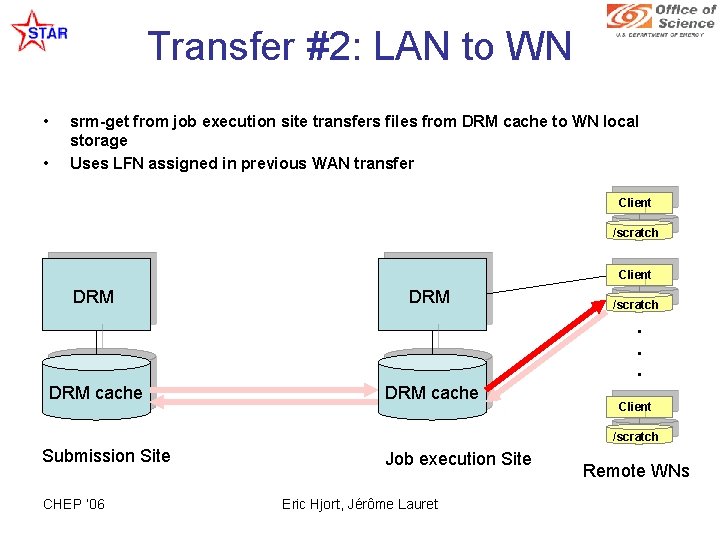

Transfer #2: LAN to WN

- srm-get at the job execution site transfers files from the DRM cache to WN local storage
- Uses the LFN assigned in the previous WAN transfer
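Transfers #1 and #2 hand off through the LFN: the WAN step returns a name for the cached copy, and the LAN step fetches the file by that name. Below is a local, single-machine analogue of that hand-off. It assumes directories stand in for the sites and `shutil.copy2` stands in for gridftp. `LocalCacheSim` and its UUID-based LFN scheme are hypothetical.

```python
import shutil
import tempfile
import uuid
from pathlib import Path


class LocalCacheSim:
    """Local stand-in for the execution-site DRM cache (hypothetical names)."""

    def __init__(self, cache_dir: Path):
        self.cache_dir = cache_dir
        self.index = {}  # LFN -> path of the cached copy

    def copy_in(self, source: Path) -> str:
        """Transfer #1 analogue: bring a file into the cache, return its LFN."""
        lfn = f"{uuid.uuid4().hex}/{source.name}"
        dest = self.cache_dir / lfn
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(source, dest)
        self.index[lfn] = dest
        return lfn

    def get(self, lfn: str, scratch_dir: Path) -> Path:
        """Transfer #2 analogue: copy a cached file to WN scratch by its LFN."""
        target = scratch_dir / Path(lfn).name
        shutil.copy2(self.index[lfn], target)
        return target


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for d in ("submission", "cache", "scratch"):
            (root / d).mkdir()
        src = root / "submission" / "input.daq"
        src.write_bytes(b"event data")
        cache = LocalCacheSim(root / "cache")
        lfn = cache.copy_in(src)              # WAN step, done once per file
        local = cache.get(lfn, root / "scratch")  # LAN step, uses only the LFN
        print(lfn, local.read_bytes())
```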

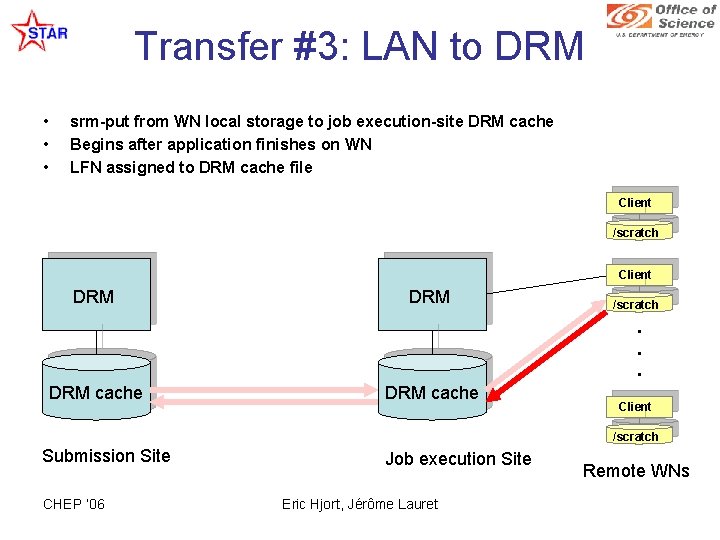

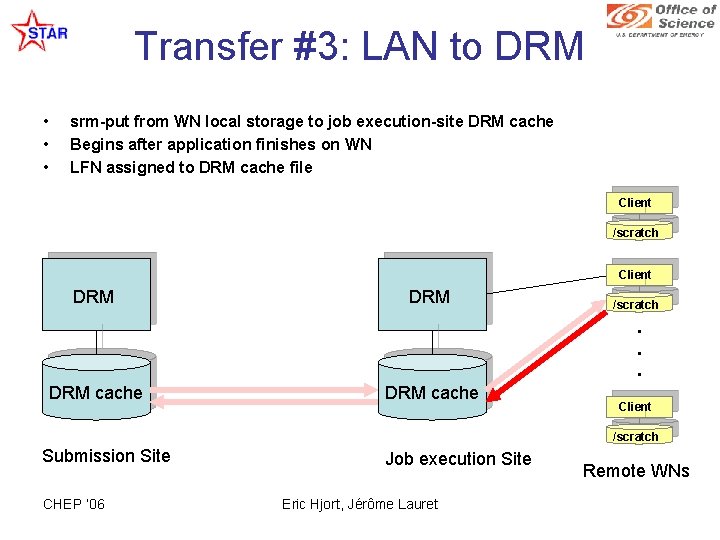

Transfer #3: LAN to DRM

- srm-put from WN local storage to the job execution-site DRM cache
- Begins after the application finishes on the WN
- An LFN is assigned to the DRM cache file
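Transfer #3 begins only after the application has finished on the WN. Below is a job-wrapper sketch of that ordering. It assumes outputs are `*.root` files in the scratch directory and that a non-zero exit code means nothing is staged out. `run_then_stage_out` and the `stage_out` callback, which stands in for srm-put, are hypothetical.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_then_stage_out(app_cmd: list, scratch: Path, stage_out,
                       pattern: str = "*.root") -> list:
    """Run the application in WN scratch space; only after it exits
    successfully, pass each output file to `stage_out` (srm-put stand-in).
    Returns the values stage_out produced (e.g. the assigned LFNs)."""
    result = subprocess.run(app_cmd, cwd=scratch)
    if result.returncode != 0:
        raise RuntimeError(f"application exited with code {result.returncode}")
    return [stage_out(p) for p in sorted(scratch.glob(pattern))]


if __name__ == "__main__":
    # Toy "application": writes two output files into the scratch directory.
    app = [sys.executable, "-c",
           "open('a.root', 'w').write('x'); open('b.root', 'w').write('y')"]
    with tempfile.TemporaryDirectory() as tmp:
        lfns = run_then_stage_out(app, Path(tmp), lambda p: f"lfn/{p.name}")
        print(lfns)
```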

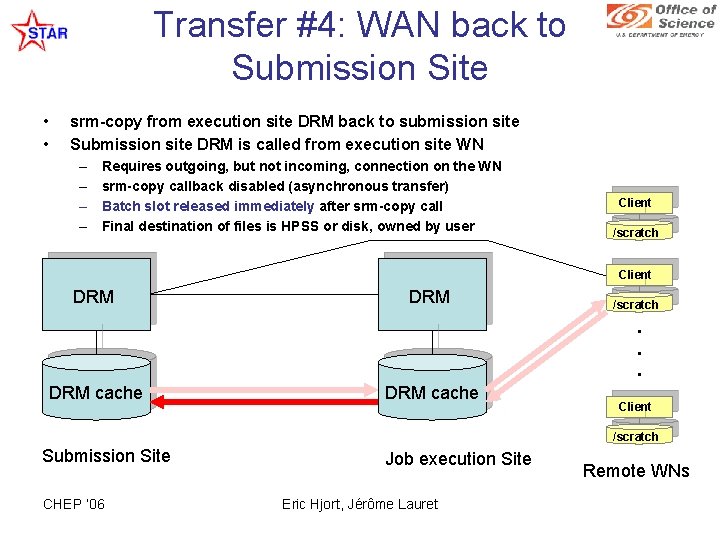

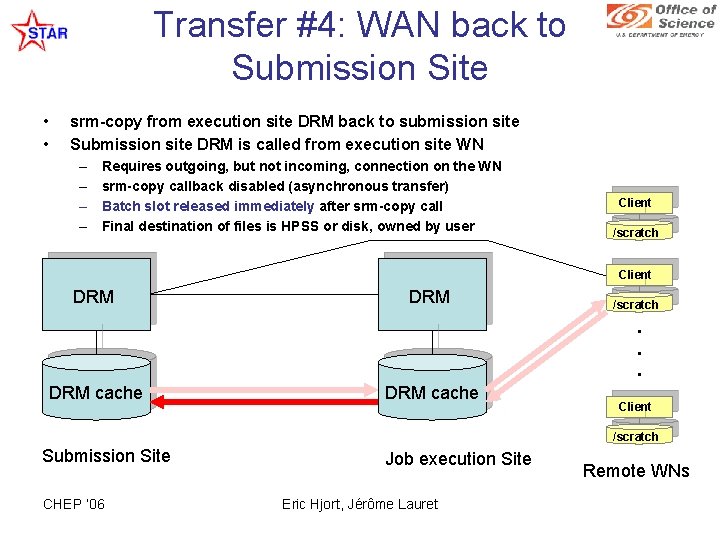

Transfer #4: WAN back to Submission Site

- srm-copy from the execution-site DRM back to the submission site
- The submission-site DRM is called from the execution-site WN
  - Requires an outgoing, but not an incoming, connection on the WN
  - srm-copy callback disabled (asynchronous transfer)
- The batch slot is released immediately after the srm-copy call
- The final destination of the files is HPSS or disk, owned by the user
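With the callback disabled, the job only has to hand its copy requests to the submission-site DRM. It can then exit, freeing the batch slot while the transfers proceed. Below is a toy sketch of that fire-and-forget pattern, assuming an in-process queue and worker thread in place of the remote DRM. `ToySubmissionDRM` and `finish_job` are hypothetical, and the real srm-copy client is not modelled.

```python
import queue
import threading
import time


class ToySubmissionDRM:
    """Accepts copy requests and performs them later on its own worker thread."""

    def __init__(self):
        self.requests = queue.Queue()
        self.completed = []
        threading.Thread(target=self._run, daemon=True).start()

    def request_copy(self, lfn: str) -> None:
        """Enqueue and return at once; no completion callback to the caller."""
        self.requests.put(lfn)

    def _run(self) -> None:
        while True:
            lfn = self.requests.get()
            time.sleep(0.05)           # stand-in for the WAN transfer
            self.completed.append(lfn)
            self.requests.task_done()


def finish_job(drm: ToySubmissionDRM, outputs: list) -> float:
    """Last step of a batch job: request the copies, then return (slot freed).
    Returns how long the job itself spent on this step, in seconds."""
    start = time.monotonic()
    for lfn in outputs:
        drm.request_copy(lfn)
    return time.monotonic() - start


if __name__ == "__main__":
    drm = ToySubmissionDRM()
    outputs = [f"job42/out_{i}.root" for i in range(5)]
    print(f"job returned after {finish_job(drm, outputs):.4f} s")
    drm.requests.join()  # demo only: wait until the toy DRM has finished
    print("copied:", drm.completed)
```

The job's cost is only the time to enqueue the requests, not the transfers themselves. That is why the batch slot can be released right after the srm-copy call.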

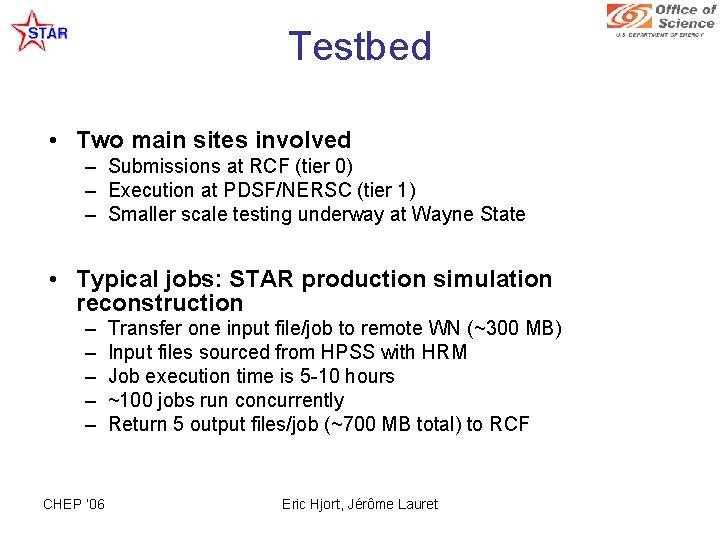

Testbed

- Two main sites involved
  - Submissions at RCF (Tier 0)
  - Execution at PDSF/NERSC (Tier 1)
  - Smaller-scale testing underway at Wayne State
- Typical jobs: STAR production, reconstruction of simulated data
  - Transfer one input file per job to the remote WN (~300 MB)
  - Input files sourced from HPSS with HRM
  - Job execution time is 5-10 hours
  - ~100 jobs run concurrently
  - Return 5 output files per job (~700 MB total) to RCF
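The testbed numbers can be compared with the ~100 GB DRM cache quoted in the approach requirements. As a rough upper bound, assume every concurrently running job holds both its input and its outputs in the execution-site cache at the same time, with no release in between. The peak is then concurrent jobs × (input + output size). This is an estimate under that assumption, not a measurement. If input files are released once copied to the WN, actual usage would be lower.

```python
def peak_cache_mb(concurrent_jobs: int, input_mb: float, output_mb: float) -> float:
    """Upper-bound cache occupancy if each running job's input and output
    files are all resident at once (no release or cleanup in between)."""
    return concurrent_jobs * (input_mb + output_mb)


if __name__ == "__main__":
    # Testbed figures from the slide: ~100 jobs, ~300 MB in, ~700 MB out per job.
    peak = peak_cache_mb(concurrent_jobs=100, input_mb=300, output_mb=700)
    print(f"worst case: {peak:,.0f} MB = {peak / 1000:.0f} GB (decimal units)")
```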

Performance

- Transfer performance limited by the WAN
  - 5 MB/s for a single gridftp session
  - Can improve with parallel gridftp sessions
  - Transfer time is still << job execution time
- Stable under "flood" conditions
  - Many jobs starting or stopping simultaneously (bursts of several hundred)
  - DRMs buffer input/output effectively: no overload of the gatekeeper or DRM server observed yet
  - Scalability limits not yet reached: load is limited by the duration of jobs, the number of available batch slots, the size of files to transfer, etc.
- In large-scale use by STAR for ~4 months
  - Thousands of jobs processed, ~1 TB transferred
  - DRM stability has been excellent: the problems encountered have been more general ones, e.g., CRL expiration, file system issues, gatekeeper hardware failure, etc. (typical grid problems)
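The claim that transfer time is much shorter than job execution time can be checked with the testbed figures. At 5 MB/s, ~300 MB in and ~700 MB out take about 200 s, against 5-10 hours of execution. The sketch below assumes a single gridftp session at the quoted rate and sequential input and output transfers.

```python
def transfer_fraction(input_mb: float, output_mb: float,
                      rate_mb_s: float, job_hours: float) -> float:
    """Fraction of a job's execution time matched by its own input+output
    transfer time, assuming one session at a fixed rate."""
    transfer_s = (input_mb + output_mb) / rate_mb_s
    return transfer_s / (job_hours * 3600)


if __name__ == "__main__":
    for hours in (5, 10):
        f = transfer_fraction(300, 700, rate_mb_s=5, job_hours=hours)
        print(f"{hours:>2} h job: transfers ~{f:.1%} of execution time")
```

Transfer #4 is asynchronous and runs after the batch slot is released, so only the input side actually occupies the slot. Under these assumptions the slot overhead is smaller still.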

Summary

- SRM technologies offer an attractive solution to STAR's grid computing needs (and beyond)
  - SRM clients are widely available (VDT) and mature
  - The lightweight install is attractive to admins and users
  - On-the-fly deployment of a storage element (SE) solution is unique
- STAR DRM-based method: uses managed transfers of input and output files
  - Controls the load on gatekeepers
  - Coupled with SUMS, conveniently puts remote resources within users' reach
  - Computation and storage are decoupled: no need to wait for transfers
- Scalability and performance have been excellent
  - Scalability sufficient for the present load
  - DRM stable under high-load situations
- Future plans
  - Full workflow implementation in SUMS (one job starts the DRM server)
  - Running on OSG sites with DRM installations