Dagstuhl Seminar 08111 on Ranked XML Querying

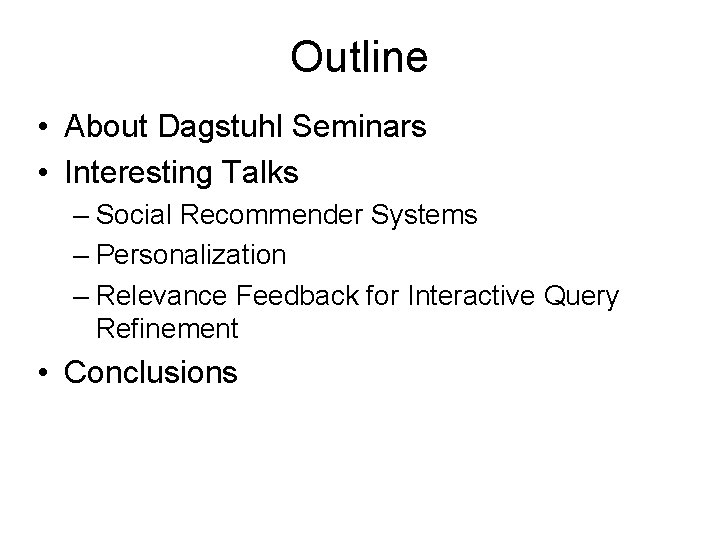

Outline
• About Dagstuhl Seminars
• Interesting Talks
  – Social Recommender Systems
  – Personalization
  – Relevance Feedback for Interactive Query Refinement
• Conclusions

About Dagstuhl Seminars
• Participants: renowned researchers of international standing and promising young scholars.
• Report on current, as yet unconcluded research work and ideas, and conduct in-depth discussions.
• Almost every week throughout the year.
  – http://www.dagstuhl.de/en/program/dagstuhlseminars/

Outline
• About Dagstuhl Seminars
• Interesting Talks
  – Social Recommender Systems
    • Making DB and IR (Socially) Meaningful
  – Personalization
  – Relevance Feedback for Interactive Query Refinement
• Conclusions

Making DB and IR (Socially) Meaningful Sihem Amer-Yahia (Yahoo Research - New York)

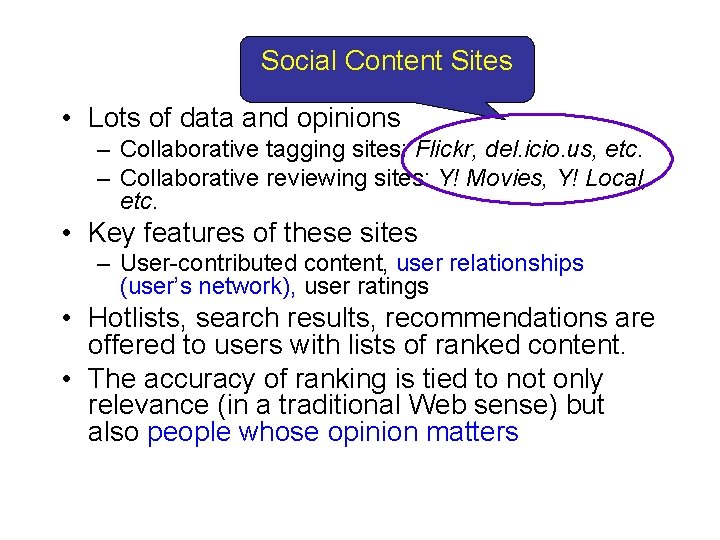

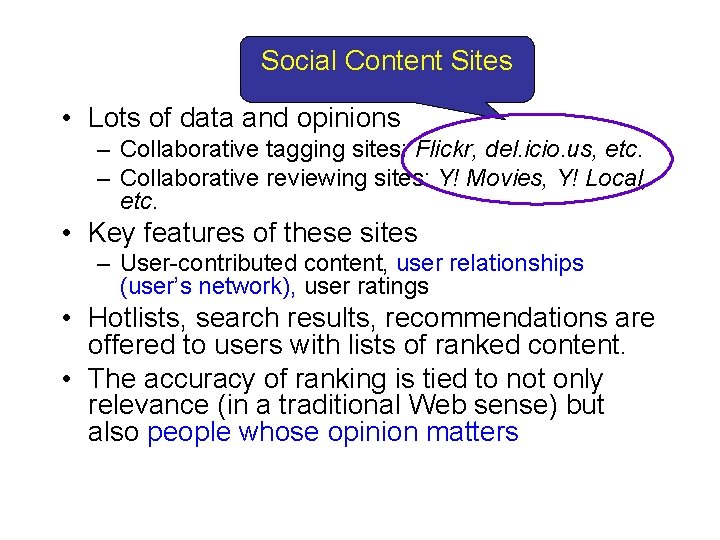

Social Content Sites: Motivation
• Lots of data and opinions
  – Collaborative tagging sites: Flickr, del.icio.us, etc.
  – Collaborative reviewing sites: Y! Movies, Y! Local, etc.
• Key features of these sites
  – User-contributed content, user relationships (the user's network), user ratings
• Hotlists, search results, and recommendations are offered to users as lists of ranked content.
• The accuracy of ranking is tied not only to relevance (in the traditional Web sense) but also to the people whose opinions matter.

Recommendations (Amazon): But who are these people?

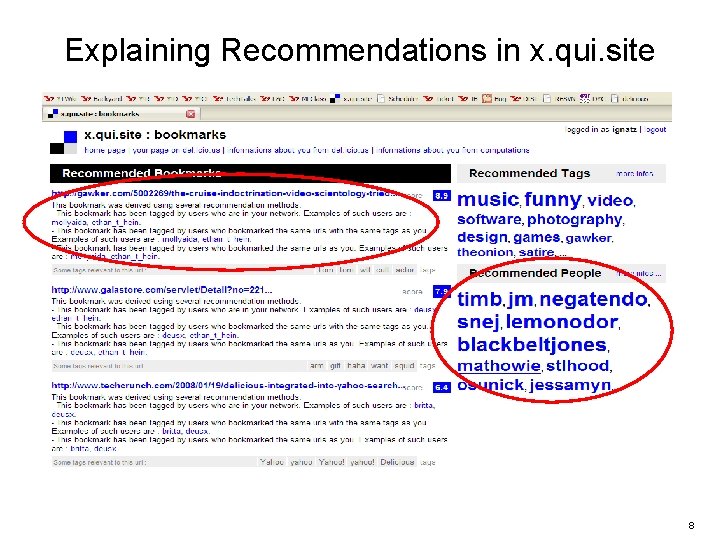

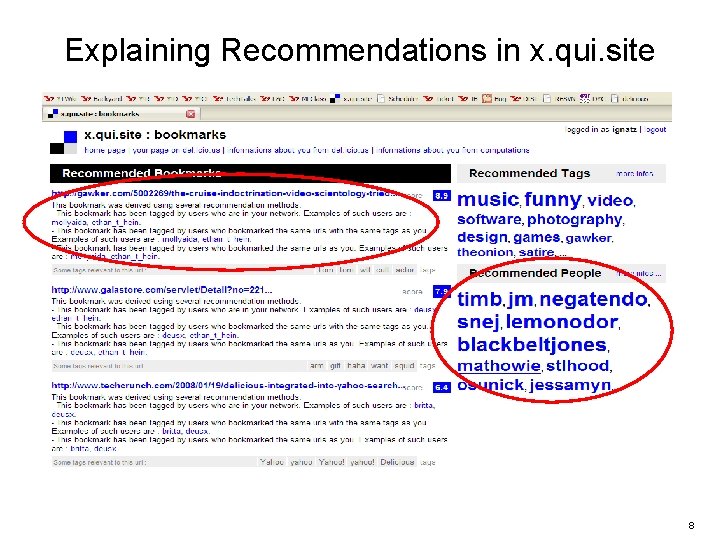

Explaining Recommendations in x.qui.site

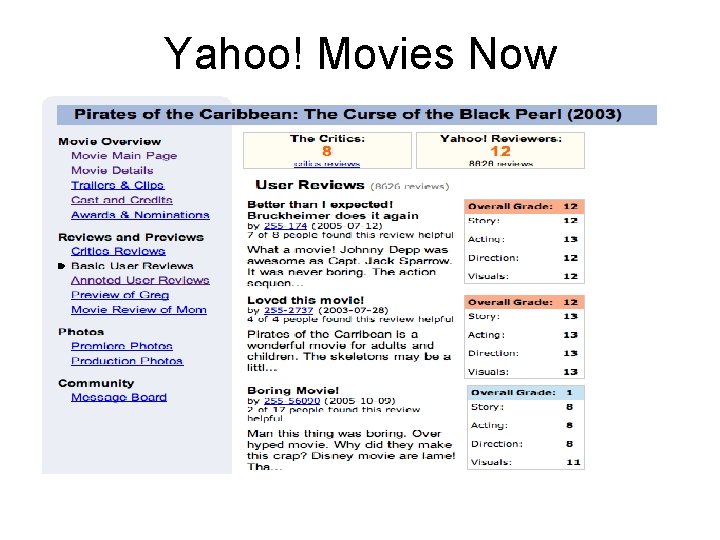

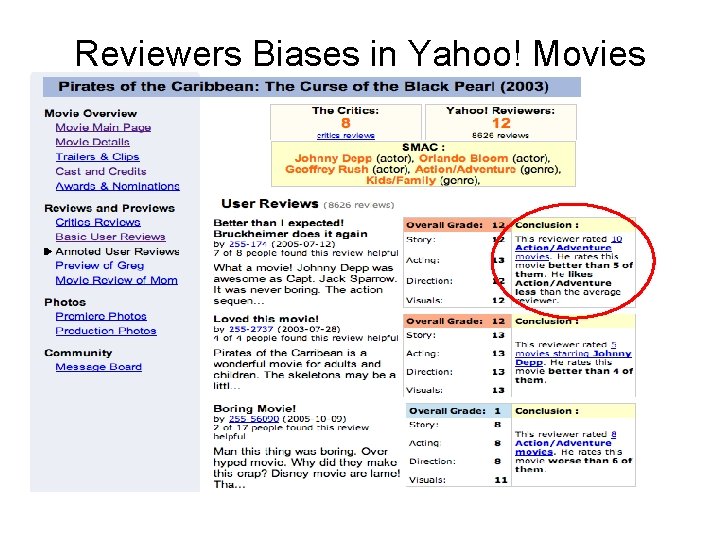

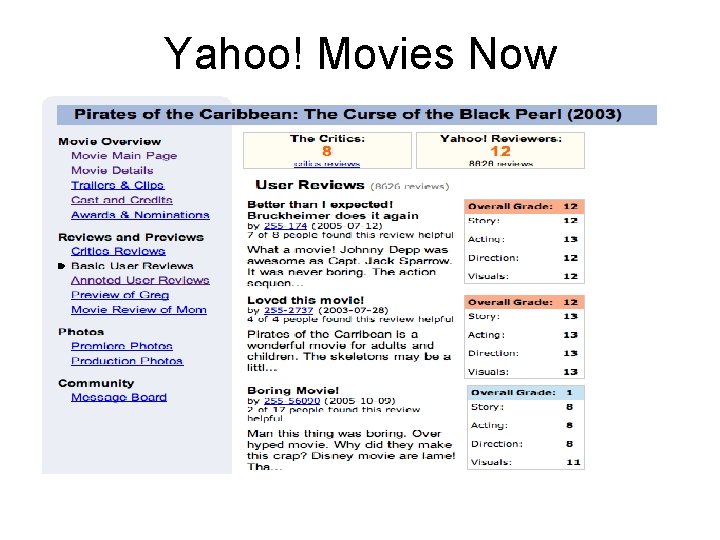

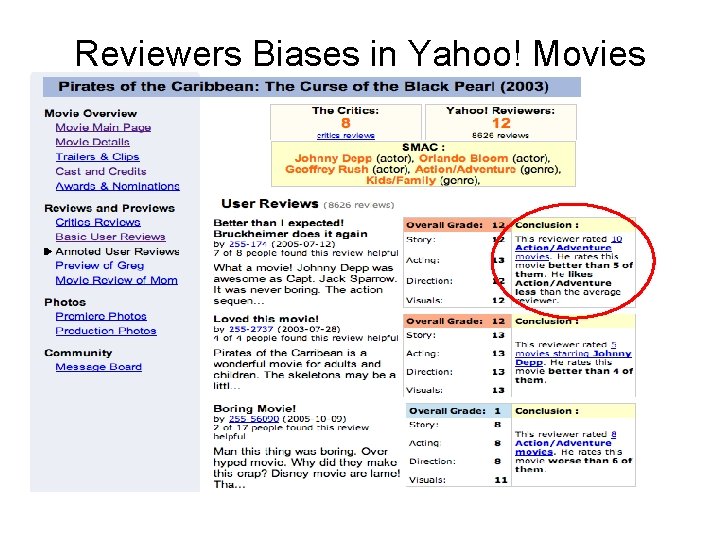

Yahoo! Movies Now

Reviewer Biases in Yahoo! Movies

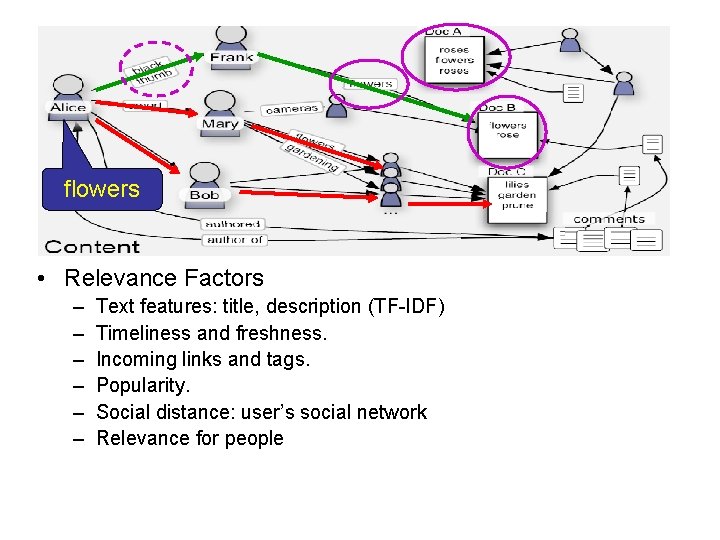

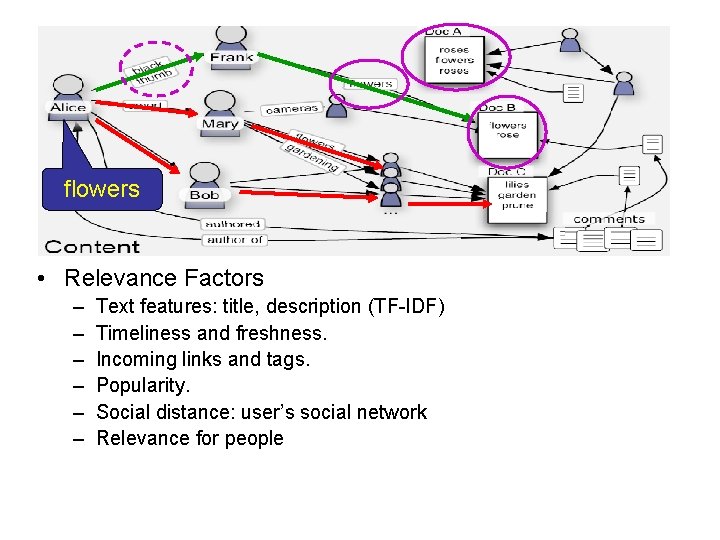

New Ranking Semantics
• Not only relevance (in the traditional Web sense), but also the people whose opinions matter.
• Take social connection information into account.
• Relevance factors:
  – Text features: title, description (TF-IDF)
  – Timeliness and freshness
  – Incoming links and tags
  – Popularity
  – Social distance: the user's social network
• Relevance for people
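To make the list of relevance factors concrete, here is a minimal Python sketch that folds them into a single score. The item fields (`tfidf`, `age_days`, `links`, `tags`, `popularity`, `author`), the 30-day freshness decay, and the weights are all hypothetical; the talk names the factors but not how they are combined. Social distance is taken as the hop count in the user's network, computed by breadth-first search.

```python
import math
from collections import deque


def social_distance(network, src, dst):
    """Hop count from src to dst in a friendship graph (None if unreachable)."""
    if src == dst:
        return 0
    seen, frontier = {src}, deque([(src, 0)])
    while frontier:
        user, dist = frontier.popleft()
        for friend in network.get(user, ()):
            if friend == dst:
                return dist + 1
            if friend not in seen:
                seen.add(friend)
                frontier.append((friend, dist + 1))
    return None


def score(item, user, network, weights):
    """Weighted sum of per-factor scores (weights and decay are hypothetical)."""
    dist = social_distance(network, user, item["author"])
    factors = {
        "text": item["tfidf"],                                # precomputed TF-IDF of title/description
        "freshness": math.exp(-item["age_days"] / 30.0),      # assumed 30-day decay
        "links_tags": math.log1p(item["links"] + item["tags"]),
        "popularity": item["popularity"],
        "social": 0.0 if dist is None else 1.0 / (1 + dist),  # closer people count more
    }
    return sum(weights[name] * value for name, value in factors.items())


network = {"ann": ["bob"], "bob": ["ann", "carl"], "carl": ["bob"]}
weights = {"text": 1.0, "freshness": 0.5, "links_tags": 0.2, "popularity": 0.3, "social": 2.0}
base = {"tfidf": 1.2, "age_days": 10, "links": 3, "tags": 5, "popularity": 0.4}
by_friend, by_stranger = dict(base, author="bob"), dict(base, author="zoe")
print(score(by_friend, "ann", network, weights) > score(by_stranger, "ann", network, weights))  # True
```

With a large weight on `social`, an item from a direct friend outranks an otherwise identical item from a stranger. A linear combination is only one possible design; the factors could equally feed a learned ranking function.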

Conclusions
• Efficient and effective recommendation platform
  – Serve (socially!) relevant content to users
  – Better recommendations
    • relevance determined by people who matter to you!
    • social context, explanation, diversity, temporality, etc.
  – Characterize users' interests and connections
    • I enjoy watching Schindler's List with my parents
    • and very different movies with my friends!

Outline
• About Dagstuhl Seminars
• Interesting Talks
  – Social Recommender Systems
  – Personalization
    • Personalizing XML Search with PIMENTO
    • Multidimensional Search for Personal Information Systems
  – Relevance Feedback for Interactive Query Refinement
• Conclusions

Personalizing XML Search with PIMENTO Irini Fundulaki (ICS-FORTH, Greece)

Motivation
• XML search has become popular.
• Personalization is becoming important
  – Large number of users, with focused and different needs.
• XML personalization is essential!

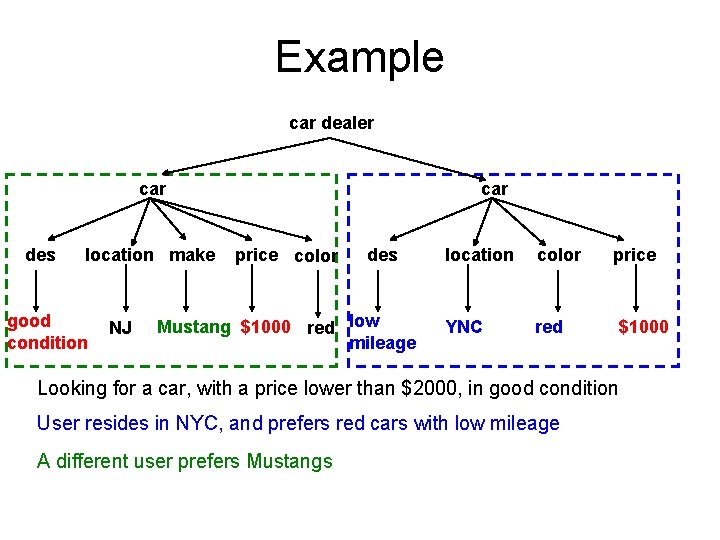

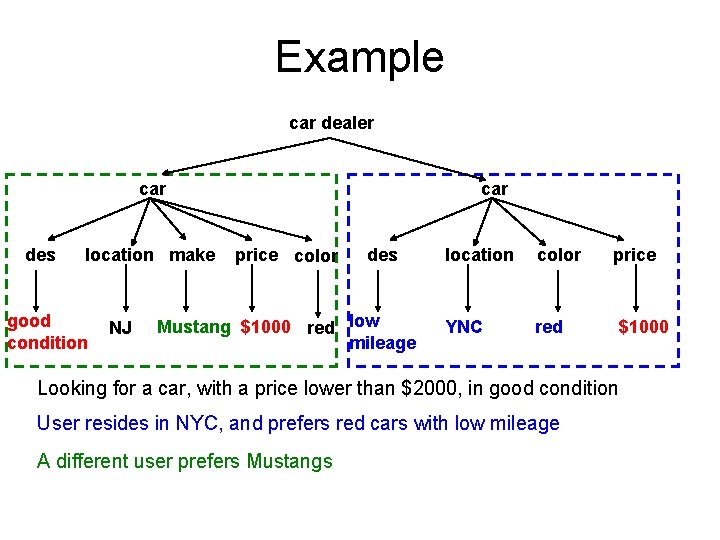

Example
[Figure: a car dealer's XML listings; car elements with des, location, make, price, and color children, holding values such as "good condition", NJ, NYC, Mustang, $1000, red, and "low mileage".]
• Query: looking for a car with a price lower than $2000, in good condition
• User profile: the user resides in NYC and prefers red cars with low mileage
• A different user prefers Mustangs

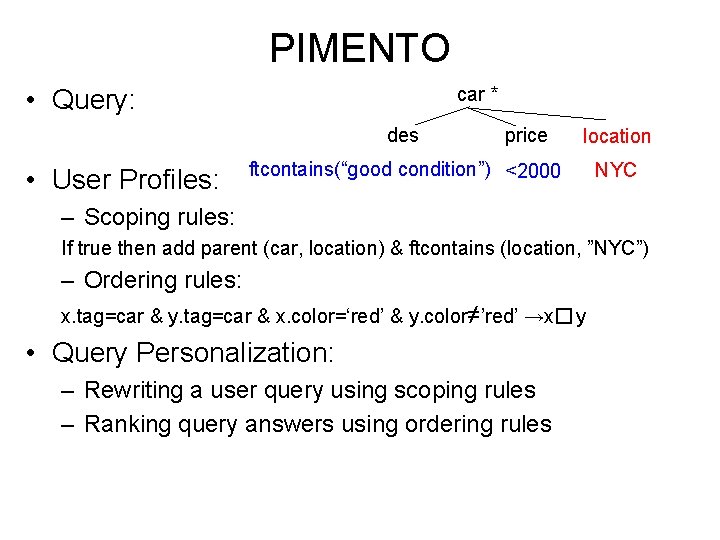

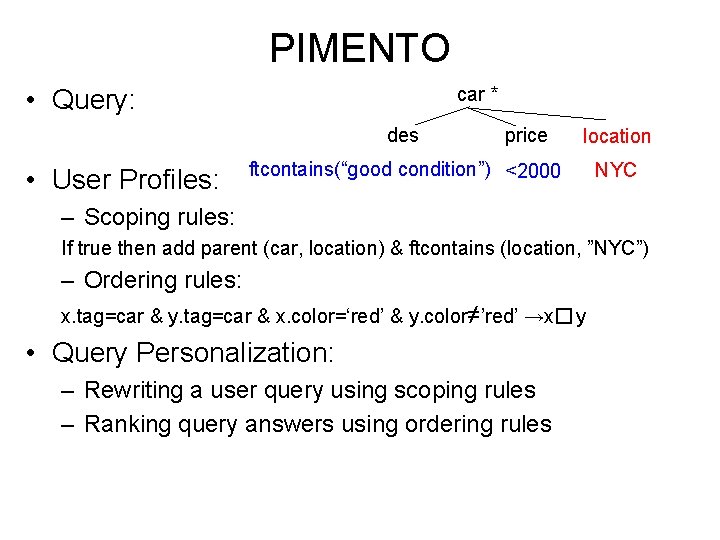

Summary
• XML queries are on both structure and content.
• Given a user interest:
  – Customize the query context: modify the candidate set of answers using conditions on both structure and keywords
  – Customize the ranking of answers
• Adapt top-k processing to account for user interests.

PIMENTO
• Query: car with a des child satisfying ftcontains("good condition") and a price child < 2000
• User profiles:
  – Scoping rules: if true then add parent(car, location) & ftcontains(location, "NYC")
  – Ordering rules: x.tag = car & y.tag = car & x.color = 'red' & y.color ≠ 'red' → x ≻ y (rank x above y)
• Query personalization:
  – Rewriting a user query using scoping rules
  – Ranking query answers using ordering rules
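A minimal Python sketch of the two rule types applied to the car example, using `xml.etree.ElementTree`. The listing data and the helper names (`matches_query`, `scoping_rule`, `preferred`, `personalized_search`) are hypothetical, and the final ordering is a simple count of pairwise preference wins, not PIMENTO's own ranking algorithm.

```python
import xml.etree.ElementTree as ET

# Hypothetical listings shaped like the car-dealer example.
DEALER = """<dealer>
  <car><des>good condition</des><price>1500</price><color>blue</color><location>NYC</location></car>
  <car><des>good condition, low mileage</des><price>1800</price><color>red</color><location>NYC</location></car>
  <car><des>good condition</des><price>1000</price><color>red</color><location>NJ</location></car>
  <car><des>needs work</des><price>900</price><color>red</color><location>NYC</location></car>
</dealer>"""


def matches_query(car):
    """User query: des contains "good condition" and price < 2000."""
    return ("good condition" in car.findtext("des", "")
            and float(car.findtext("price", "inf")) < 2000)


def scoping_rule(car):
    """Scoping rule: also require a location child containing "NYC"."""
    return "NYC" in car.findtext("location", "")


def preferred(x, y):
    """Ordering rule: x is preferred over y if x is red and y is not."""
    return x.findtext("color") == "red" and y.findtext("color") != "red"


def personalized_search(root):
    # Query rewriting: the scoping rule narrows the candidate set.
    answers = [c for c in root.iter("car") if matches_query(c) and scoping_rule(c)]
    # Ranking: sort by how many other answers each one is preferred over.
    return sorted(answers, key=lambda c: -sum(preferred(c, o) for o in answers))


for car in personalized_search(ET.fromstring(DEALER)):
    print(car.findtext("price"), car.findtext("color"))  # 1800 red, then 1500 blue
```

The NJ car drops out because of the scoping rule, and the red NYC car moves ahead of the blue one because of the ordering rule, even though the original query alone would not distinguish them.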

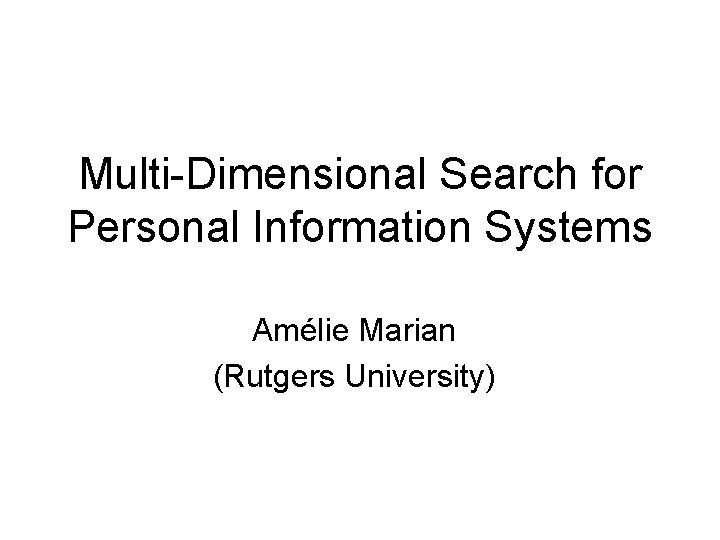

Multi-Dimensional Search for Personal Information Systems Amélie Marian (Rutgers University)

Motivation
• Large collections of heterogeneous data.
• Need a simple and efficient search approach.
• Typical desktop search tools use
  – keyword search for ranking
  – possibly some additional conditions (e.g., metadata, structure) for filtering
• E.g., find a PDF file created on March 21, 2007 that contains the words "proposal draft"
  – Filtering conditions: *.pdf, 03/21/2007
  – Ranking expression: "proposal draft"
• Such tools miss some relevant files: *.txt documents created on 03/21/2007 that contain the words "proposal draft".

Multi-Dimensional Search
• Allow users to provide fuzzy structure and metadata conditions in addition to keyword conditions.
• Three query dimensions: content, structure, metadata.
• Example:
  for $i in /File[FileSysMetadata/FileDate = '03/21/07']
  for $j in /File[ContentSummary/WordInfo/Term = 'proposal' and ContentSummary/WordInfo/Term = 'draft']
  for $m in /File[FileSysMetadata/FileType = 'pdf']
  where $i/@fileID = $j/@fileID and $i/@fileID = $m/@fileID
  return $i/fileName
• Score each dimension individually, then integrate the three dimension scores into a meaningful unified score.
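A minimal Python sketch of the idea, under stated assumptions: the file records, the partial credit of 0.5 for a `txt` or `doc` file when `pdf` was requested, the date decay `1/(1 + days off)`, and the equal-weight average are all hypothetical. The structure dimension is left out because the example query has no structural condition. The point is the contrast: strict filtering drops the `.txt` file, while per-dimension scoring keeps it, ranked just below the exact match.

```python
from datetime import date

# Hypothetical file records for the "proposal draft" example.
FILES = [
    {"name": "a.pdf", "type": "pdf", "date": date(2007, 3, 21), "terms": {"proposal", "draft"}},
    {"name": "b.txt", "type": "txt", "date": date(2007, 3, 21), "terms": {"proposal", "draft"}},
    {"name": "c.pdf", "type": "pdf", "date": date(2007, 1, 5), "terms": {"budget"}},
]
WANTED_TYPE, WANTED_DATE, KEYWORDS = "pdf", date(2007, 3, 21), ("proposal", "draft")


def strict_filter(files):
    """Typical desktop search: exact type/date filters; keywords only rank."""
    return [f for f in files if f["type"] == WANTED_TYPE and f["date"] == WANTED_DATE]


def content_score(f):
    """Fraction of query keywords the file contains."""
    return sum(k in f["terms"] for k in KEYWORDS) / len(KEYWORDS)


def metadata_score(f):
    """Relaxed metadata match: partial credit for related types and nearby dates."""
    if f["type"] == WANTED_TYPE:
        type_score = 1.0
    elif f["type"] in {"txt", "doc"}:
        type_score = 0.5
    else:
        type_score = 0.0
    date_score = 1.0 / (1 + abs((f["date"] - WANTED_DATE).days))
    return (type_score + date_score) / 2


def unified_score(f):
    """Equal-weight average of the dimension scores (structure omitted)."""
    return (content_score(f) + metadata_score(f)) / 2


print([f["name"] for f in strict_filter(FILES)])                            # ['a.pdf']
print([f["name"] for f in sorted(FILES, key=unified_score, reverse=True)])  # ['a.pdf', 'b.txt', 'c.pdf']
```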

Relevance Feedback in the TopX Search Engine Ralf Schenkel

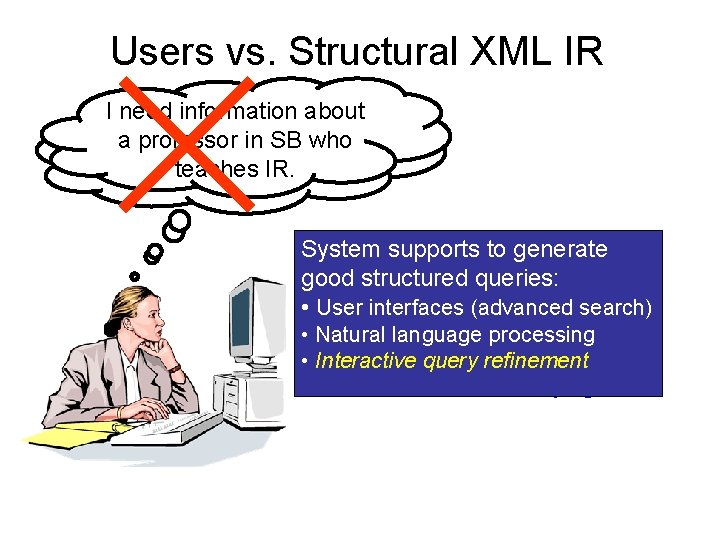

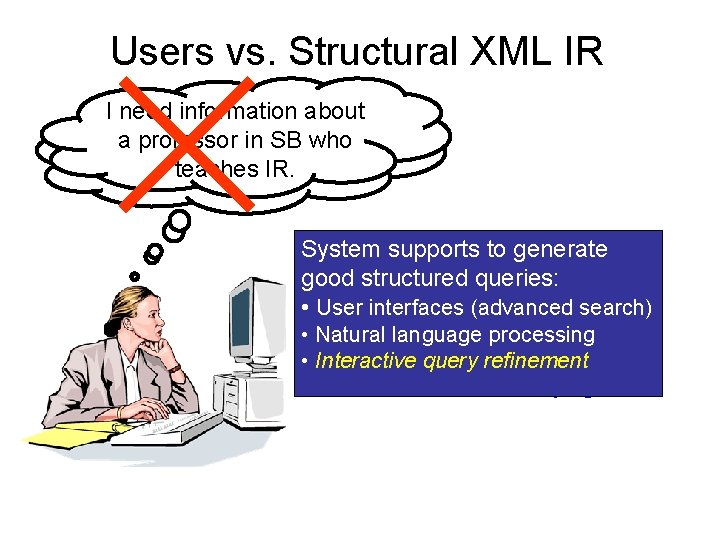

Users vs. Structural XML IR
• User need: "I need information about a professor in SB who teaches IR."
  – As a structural query: //professor[contains(., "SB") and contains(.//course, "IR")]
• Structural query languages do not work in practice:
  – Schema is unknown or heterogeneous
  – Language is too complex
  – Results often unsatisfying
• System support to generate good structured queries:
  – User interfaces (advanced search)
  – Natural language processing
  – Interactive query refinement

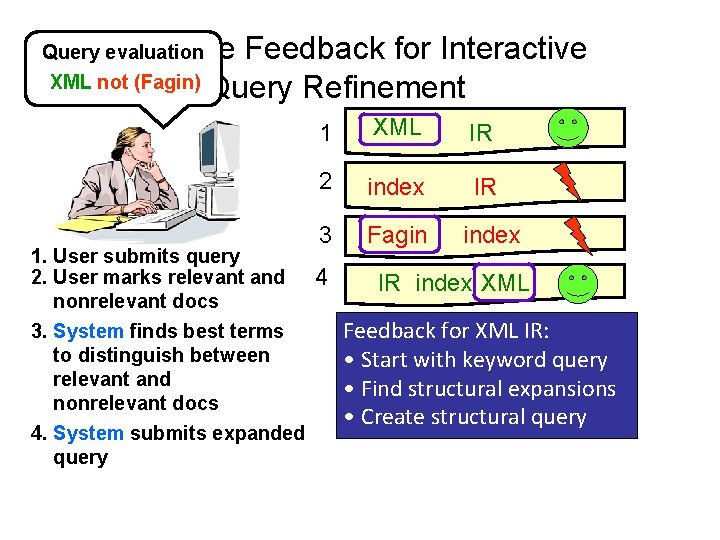

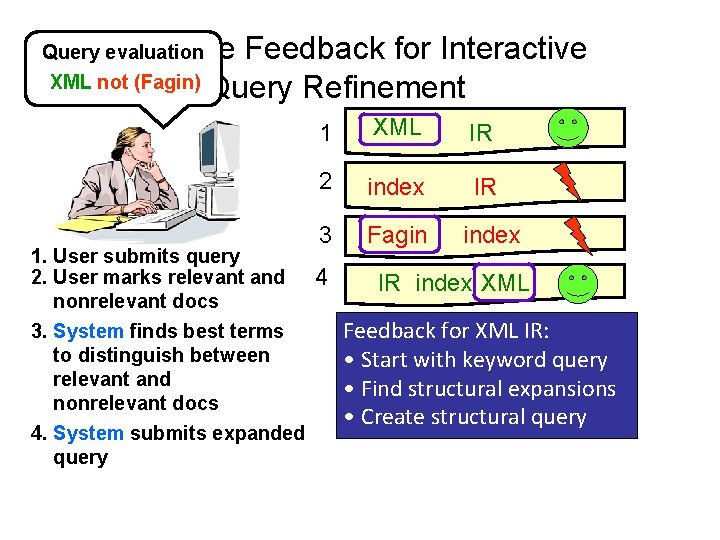

Relevance Feedback for Interactive XML Query Refinement
[Figure: feedback loop between the user, query evaluation, and the index; the example keyword query "XML IR" is expanded with "not (Fagin)".]
1. User submits query
2. User marks relevant and nonrelevant docs
3. System finds best terms to distinguish between relevant and nonrelevant docs
4. System submits expanded query
Feedback for XML IR:
• Start with keyword query
• Find structural expansions
• Create structural query …
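Step 3 (finding terms that separate relevant from nonrelevant documents) can be sketched in Python with a simple document-frequency heuristic: a term's score is the fraction of relevant documents containing it minus the fraction of nonrelevant documents containing it. This heuristic, the `not(term)` syntax for negative terms, and the sample documents are assumptions for illustration; TopX's actual feedback weighting is not reproduced here.

```python
from collections import Counter


def doc_freq(docs):
    """Number of documents each lower-cased whitespace token occurs in."""
    return Counter(t for d in docs for t in set(d.lower().split()))


def best_terms(query, relevant, nonrelevant, k=1):
    """Top-k expansion terms and top-k exclusion terms (simple df heuristic)."""
    rel, non = doc_freq(relevant), doc_freq(nonrelevant)

    def s(t):
        # Share of relevant docs with t minus share of nonrelevant docs with t.
        return rel[t] / max(len(relevant), 1) - non[t] / max(len(nonrelevant), 1)

    vocab = sorted((set(rel) | set(non)) - set(query.lower().split()))  # alphabetical tie-break
    positive = sorted((t for t in vocab if s(t) > 0), key=lambda t: -s(t))[:k]
    negative = sorted((t for t in vocab if s(t) < 0), key=s)[:k]
    return positive, negative


def expand(query, positive, negative):
    """Append positive terms and negated terms to the original keyword query."""
    return " ".join([query, *positive, *(f"not({t})" for t in negative)])


relevant = ["XML retrieval ranking", "XML IR ranking structure"]
nonrelevant = ["IR Fagin threshold algorithm", "Fagin top-k aggregation"]
pos, neg = best_terms("XML IR", relevant, nonrelevant)
print(expand("XML IR", pos, neg))  # XML IR ranking not(fagin)
```

Query terms are excluded from the candidates so that expansion only adds new information; "IR" appears in both sets equally and would score zero anyway.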

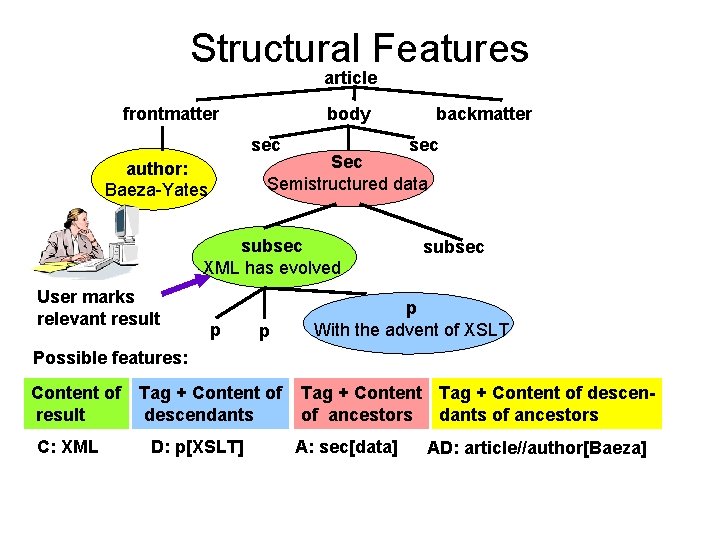

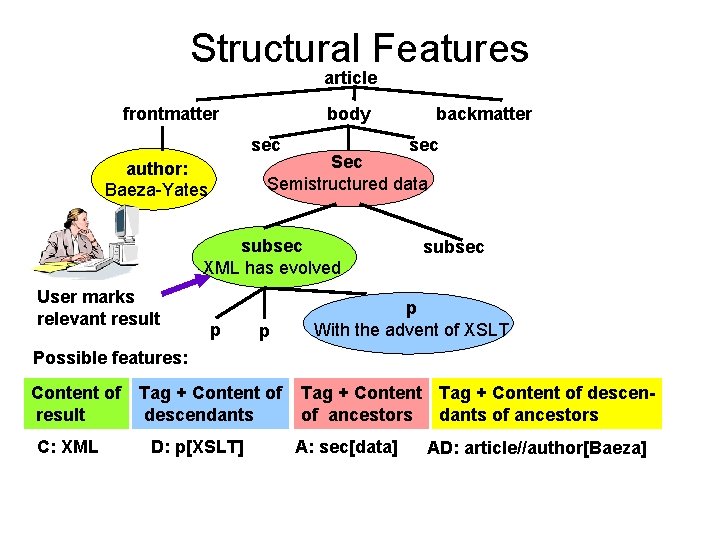

Structural Features
[Figure: an example document tree with article, frontmatter, body, sec, subsec, p, and backmatter elements; values include author: Baeza-Yates, a sec titled "Semistructured data", and p texts "XML has evolved" and "With the advent of XSLT"; the user marks one result as relevant.]
Possible features:
• C: content of the result, e.g. XML
• D: tag + content of descendants, e.g. p[XSLT]
• A: tag + content of ancestors, e.g. sec[data]
• AD: tag + content of descendants of ancestors, e.g. article//author[Baeza]
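A minimal Python sketch of collecting candidate C, D, A, and AD features around a result element the user marked relevant, using `xml.etree.ElementTree` (which has no parent pointers, so a child-to-parent map is built first). Whitespace tokenization, lower-casing, and the tiny document are assumptions; choosing which features to keep, the hard part, is not shown.

```python
import xml.etree.ElementTree as ET

# Hypothetical miniature of the slide's example document.
DOC = """<article>
<frontmatter><author>Baeza-Yates</author></frontmatter>
<body><sec>Semistructured data
<subsec><p>XML has evolved</p><p>With the advent of XSLT</p></subsec>
</sec></body>
<backmatter/>
</article>"""


def terms(text):
    """Lower-cased whitespace tokens of one text node (None-safe)."""
    return (text or "").lower().split()


def structural_features(root, result):
    """Candidate C/D/A/AD features around a result element marked relevant."""
    parent = {child: p for p in root.iter() for child in p}  # ElementTree has no parent links
    ancestors, node = [], result
    while node in parent:
        node = parent[node]
        ancestors.append(node)
    inside = set(result.iter())  # the result and all its descendants
    skip = inside | set(ancestors)
    return {
        "C": {t for s in result.itertext() for t in terms(s)},
        "D": {f"{d.tag}[{t}]" for d in inside if d is not result for t in terms(d.text)},
        "A": {f"{a.tag}[{t}]" for a in ancestors for t in terms(a.text)},
        "AD": {f"{a.tag}//{d.tag}[{t}]" for a in ancestors for d in a.iter()
               if d not in skip for t in terms(d.text)},
    }


root = ET.fromstring(DOC)
features = structural_features(root, root.find(".//subsec"))
print(sorted(features["AD"]))  # ['article//author[baeza-yates]']
```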

![Query Construction](https://slidetodoc.com/presentation_image/9279a41e3b3f0ce3215df9719f10d62b/image-28.jpg)

Query Construction
• Initial query: query evaluation
• Features from the marked result: C: XML, D: p[XSLT], A: sec[data], AD: article//author[Baeza]
• These are combined into a structural query built around *[query evaluation XML], with p[XSLT] below the result and sec[data] and article//author[Baeza] above it.
• The descendant-or-self axis needs schema information!
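A minimal Python sketch of assembling selected features into a query string in the slide's own shorthand, where `tag[terms]` denotes a content condition rather than executable XPath. The function name `build_query` and its argument layout are hypothetical. Every step is joined with `//`, since without schema information the exact intermediate path between, say, `sec` and the result element is unknown.

```python
def build_query(keywords, content_terms=(), descendant_feats=(), ancestor_steps=()):
    """Assemble feedback features into a path-style query in the slide's shorthand.

    keywords / content_terms: initial query terms and C features (result content)
    descendant_feats: (tag, term) pairs for D features (below the result)
    ancestor_steps: outermost-first (tag, condition or None) pairs for A/AD features
    """
    path = "".join(f"//{tag}" + (f"[{cond}]" if cond else "") for tag, cond in ancestor_steps)
    target = "//*[" + " ".join([*keywords, *content_terms]) + "]"
    below = "".join(f"[.//{tag}[{term}]]" for tag, term in descendant_feats)
    return path + target + below


print(build_query(
    ["query", "evaluation"],
    content_terms=["XML"],
    descendant_feats=[("p", "XSLT")],
    ancestor_steps=[("article", ".//author[Baeza]"), ("sec", "data")],
))
# //article[.//author[Baeza]]//sec[data]//*[query evaluation XML][.//p[XSLT]]
```

Here the AD feature article//author[Baeza] becomes a condition on the article step, and the A feature sec[data] becomes its own step; with no features at all, the result degrades gracefully to the plain keyword condition `//*[...]`.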

Conclusions
• Queries with structural constraints to improve result quality
• Relevance feedback to create such queries
• Structure of the collection matters a lot

Conclusions (1)
• XQuery and exact matches for querying XML documents are not likely to be sufficient.
• Techniques based on approximate matching of query content and structure, scoring potential answers, and returning a ranked list of answers are more appropriate.
• Trend: blending together the techniques addressed by the DB, IR, and Web/Applications communities.

Conclusions (2)
• Opinions on ranking:
  – Ranking for XML search should take structure into account (not only content)
  – Add scoring to ranking with preferences
  – Declare properties of scoring functions/processes so they can be matched against application needs
  – The accuracy of ranking in social networks is tied to users' behavior
  – Data uncertainty presents interesting issues in uncertain top-k queries

Thank you! Questions?

Access module 2: querying a database

Access module 2: querying a database Access module 2 querying a database

Access module 2 querying a database Seminar presentation outline

Seminar presentation outline Ap seminar part b outline

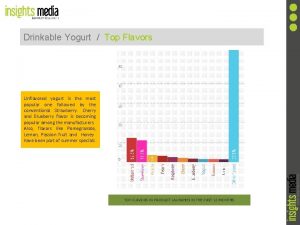

Ap seminar part b outline Danubio yogurt

Danubio yogurt Risk identification process

Risk identification process Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Meat quality chart

Meat quality chart Concentration risk

Concentration risk Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Information asset classification worksheet

Information asset classification worksheet Interu

Interu Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet What were the military duties of ranked officers in othello

What were the military duties of ranked officers in othello Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Sku rationalization analytics

Sku rationalization analytics Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Ranked vulnerability risk worksheet

Ranked vulnerability risk worksheet Flavorful sentence

Flavorful sentence Marc xml

Marc xml Twitter api xml

Twitter api xml Xml accelerator

Xml accelerator Xml extensible markup language

Xml extensible markup language Advantages of xml

Advantages of xml Excel xml mapping repeating elements

Excel xml mapping repeating elements Microsoft schemas xml

Microsoft schemas xml Xml file reader

Xml file reader What is xul

What is xul Language detector

Language detector /showthread.php xml

/showthread.php xml Xml

Xml