
dRMT: Disaggregated Programmable Switching

Sharad Chole, Andrew Fingerhut, Sha Ma, Anirudh Sivaraman, Shay Vargaftik, Alon Berger, Gal Mendelson, Mohammad Alizadeh, Shang-Tse Chuang, Isaac Keslassy, Ariel Orda, and Tom Edsall

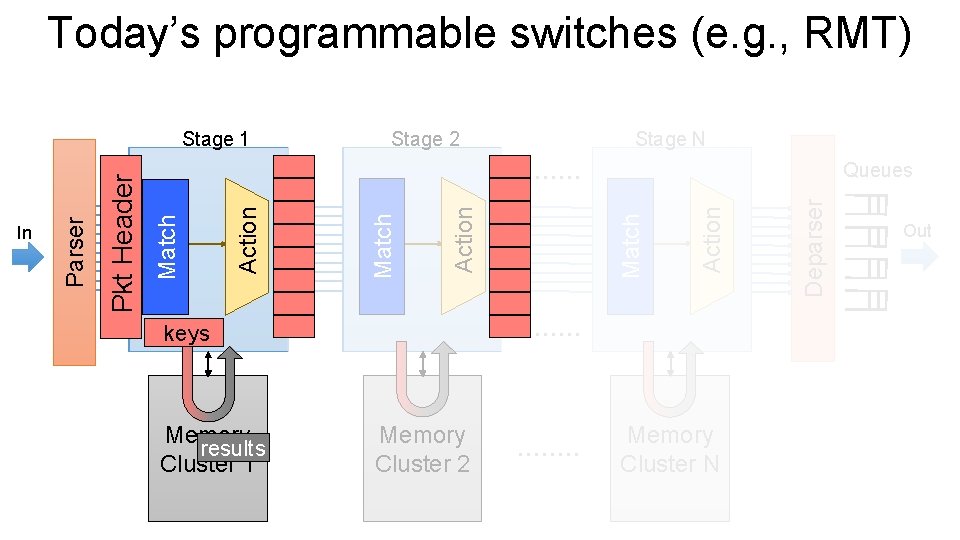

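Today’s programmable switches (e.g., RMT)

[Figure: RMT pipeline. In → Parser → Stage 1, Stage 2, …, Stage N → Deparser → Queues → Out. Each stage has Match and Action units and its own memory cluster (Memory Cluster 1 … N). Packet headers flow from stage to stage; keys go to memory and results come back.]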

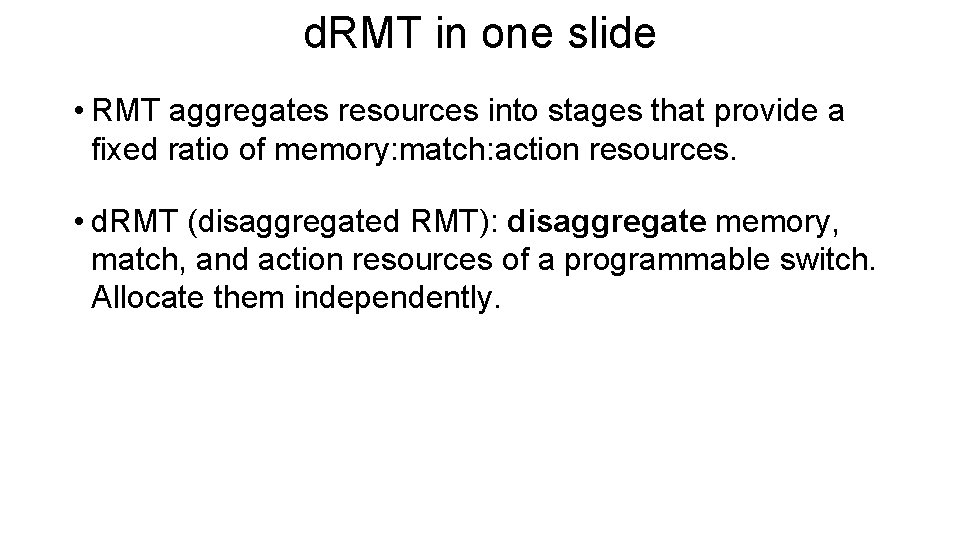

dRMT in one slide

• RMT aggregates resources into stages that provide a fixed ratio of memory : match : action resources.
• dRMT (disaggregated RMT): disaggregate the memory, match, and action resources of a programmable switch. Allocate them independently.

The dRMT architecture

[Figure: In → Parser → Stage 1, Stage 2, …, Stage N (Match, Action) → Deparser → Queues → Out, with Memory Clusters 1 … N.]

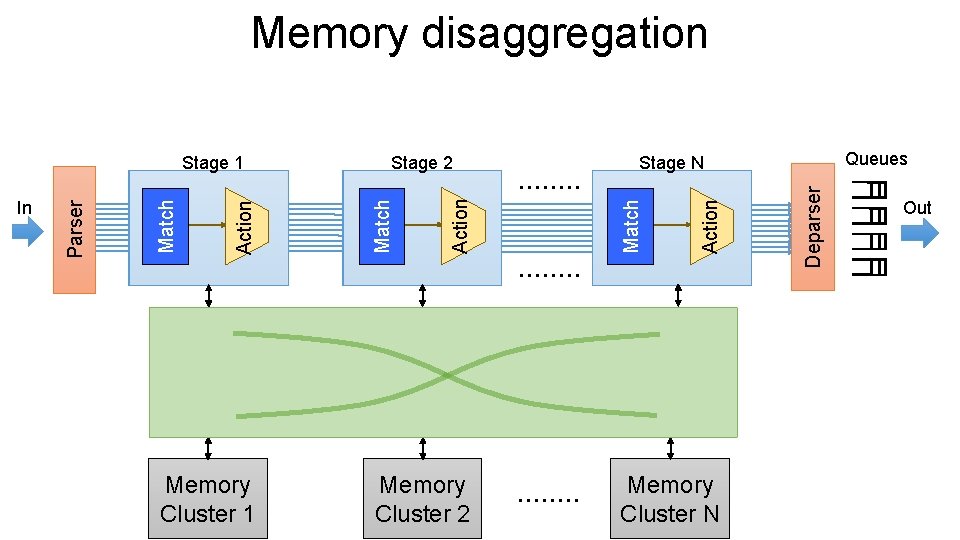

Memory disaggregation

[Figure: In → Parser → Stage 1, Stage 2, …, Stage N (each with Match and Action) → Deparser → Queues → Out, with Memory Clusters 1 … N drawn apart from the stages.]

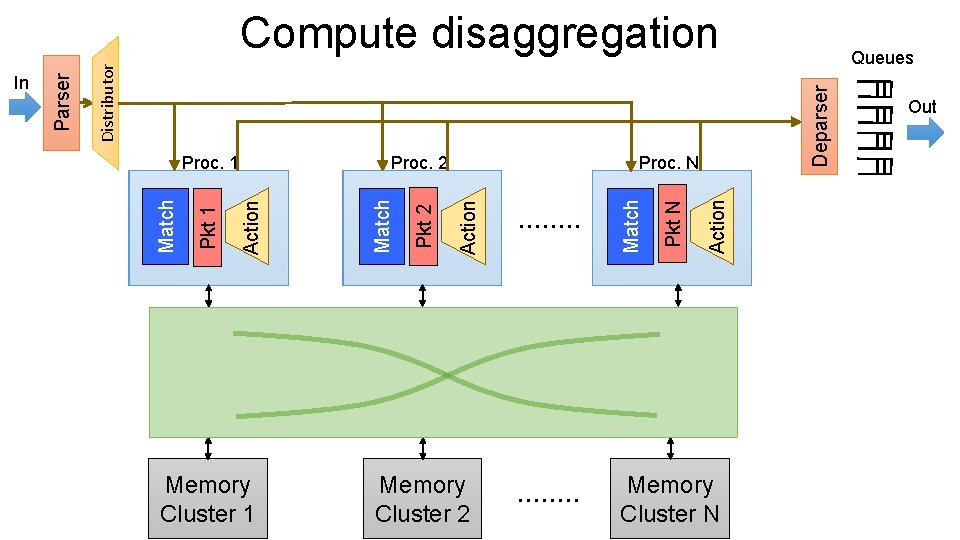

Compute disaggregation

[Figure: In → Parser → Distributor → Proc. 1, Proc. 2, …, Proc. N → Deparser → Queues → Out. Each processor runs Match and Action on its own packet (Pkt 1 on Proc. 1, Pkt 2 on Proc. 2, …, Pkt N on Proc. N), with Memory Clusters 1 … N alongside.]

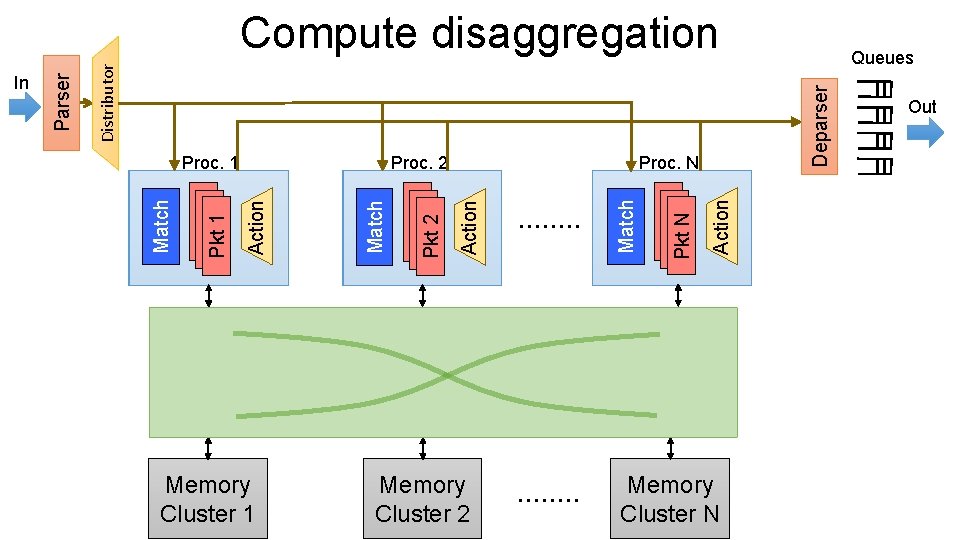


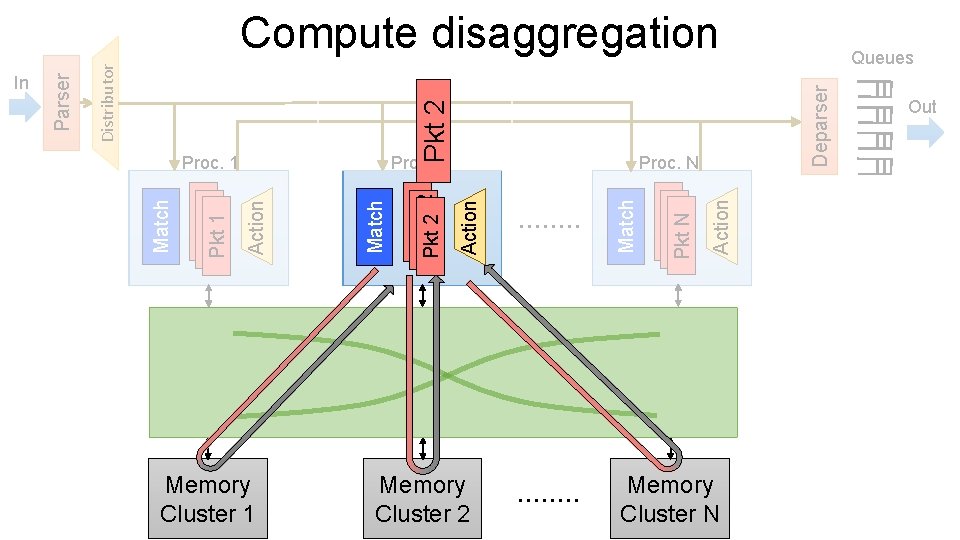

Compute disaggregation (continued)

[Figure: the same Parser → Distributor → Proc. 1 … Proc. N → Deparser → Queues diagram at a later animation step; packet labels now include Pkt 1, Pkt 2, Pkt N, and Pkt N+2.]

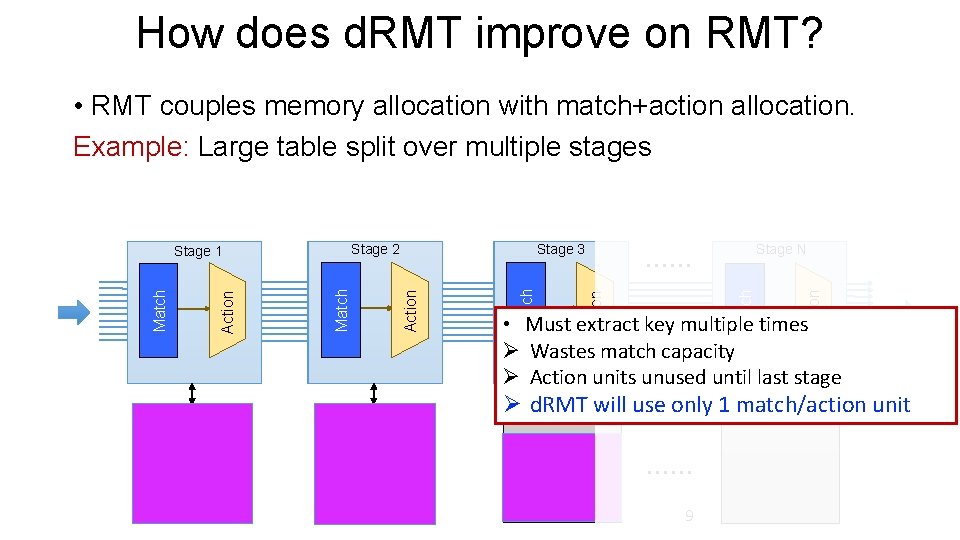

How does dRMT improve on RMT?

• RMT couples memory allocation with match+action allocation.
• Example: a large table split over multiple stages.
  ➢ Must extract the key multiple times.
  ➢ Wastes match capacity.
  ➢ Action units go unused until the last stage.
  ➢ dRMT will use only 1 match/action unit.

[Figure: RMT Stages 1, 2, 3, …, N, each with Match and Action, next to Memory Clusters 1, 2, 3, …, N.]
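The cost of splitting a table can be illustrated with a small count. This is only a sketch under simplifying assumptions that are not in the slides: every RMT stage holds the same number of table entries (`entries_per_stage`, a hypothetical parameter), and a table that exceeds it is spread over consecutive stages, each of which extracts the key again.

```python
import math


def rmt_match_units_for_table(table_entries: int, entries_per_stage: int) -> int:
    """Match units (one per stage) an RMT pipeline spends on a single table.

    Assumption: a stage can hold `entries_per_stage` entries, so a larger
    table occupies ceil(table_entries / entries_per_stage) stages, and each
    of those stages extracts the same key again.
    """
    return math.ceil(table_entries / entries_per_stage)


def drmt_match_units_for_table() -> int:
    """Per the slide, dRMT uses a single match/action unit for the table."""
    return 1


if __name__ == "__main__":
    stages = rmt_match_units_for_table(table_entries=4000, entries_per_stage=1000)
    print("RMT match units:", stages)
    print("dRMT match units:", drmt_match_units_for_table())
```

In this toy model the RMT count grows with table size while the dRMT count stays at one, which is the waste the bullets describe.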

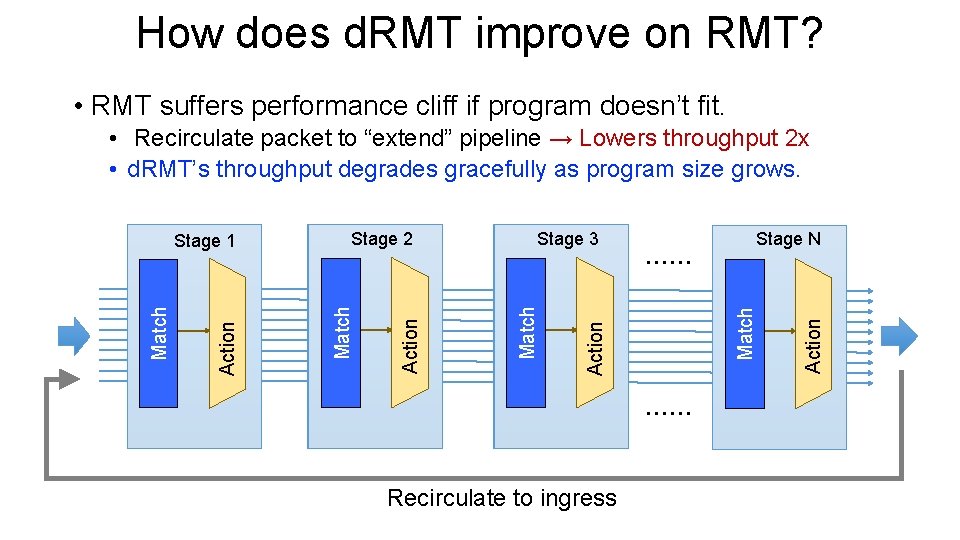

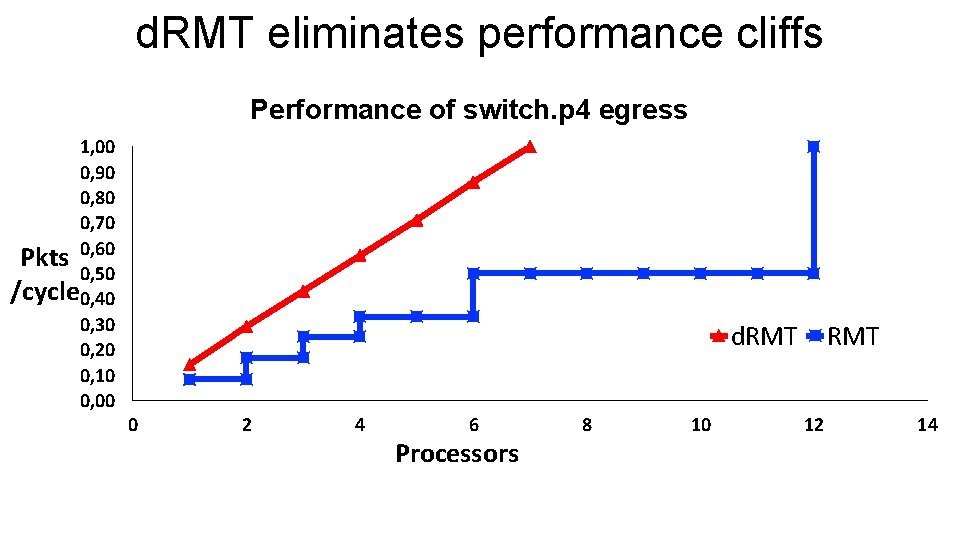

How does dRMT improve on RMT?

• RMT suffers a performance cliff if the program doesn’t fit.
• Recirculate the packet to “extend” the pipeline → lowers throughput 2x.
• dRMT’s throughput degrades gracefully as program size grows.

[Figure: RMT Stages 1, 2, 3, …, N, each with Match and Action, and a “Recirculate to ingress” path.]
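The difference between a cliff and graceful degradation can be sketched with a toy throughput model. Both formulas are assumptions made for illustration, not taken from the slides: RMT throughput is modeled as one over the number of pipeline passes, and dRMT throughput as growing linearly with the number of processors until it reaches one packet per cycle.

```python
import math


def rmt_throughput(stages_needed: int, stages_available: int) -> float:
    """Packets/cycle for RMT, assuming each recirculation pass uses a full slot."""
    passes = math.ceil(stages_needed / stages_available)
    return 1.0 / passes


def drmt_throughput(procs_needed: int, procs_available: int) -> float:
    """Packets/cycle for dRMT, assuming throughput scales with processor count."""
    return min(1.0, procs_available / procs_needed)


if __name__ == "__main__":
    # Hypothetical program that needs 12 RMT stages or 7 dRMT processors.
    for n in (6, 7, 11, 12):
        print(n, rmt_throughput(12, n), round(drmt_throughput(7, n), 2))
```

Under these assumptions, removing a single stage from a pipeline that exactly fits the program halves RMT throughput, while removing a single dRMT processor only costs a proportional share.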

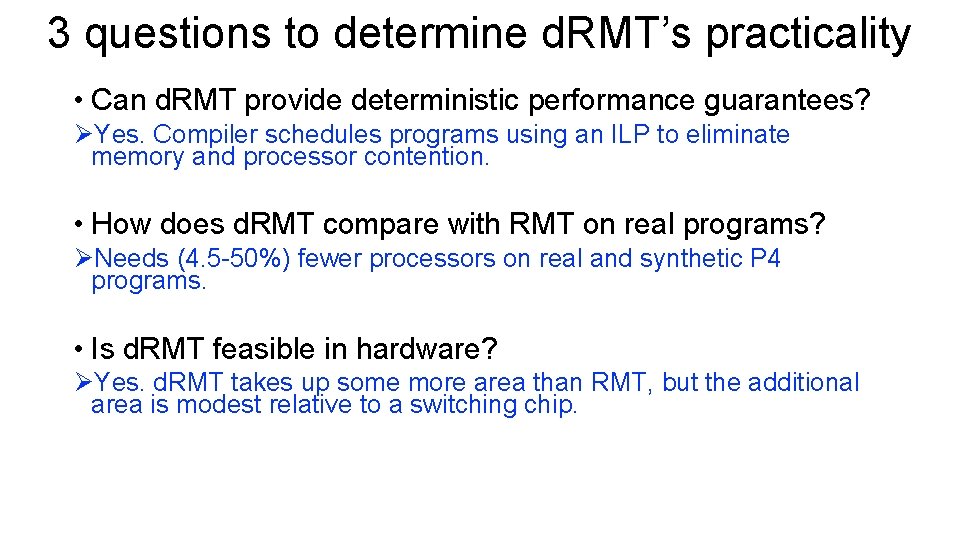

3 questions to determine dRMT’s practicality

• Can dRMT provide deterministic performance guarantees?
  ➢ Yes. The compiler schedules programs using an ILP to eliminate memory and processor contention.
• How does dRMT compare with RMT on real programs?
  ➢ Needs 4.5% to 50% fewer processors on real and synthetic P4 programs.
• Is dRMT feasible in hardware?
  ➢ Yes. dRMT takes up somewhat more area than RMT, but the additional area is modest relative to a switching chip.

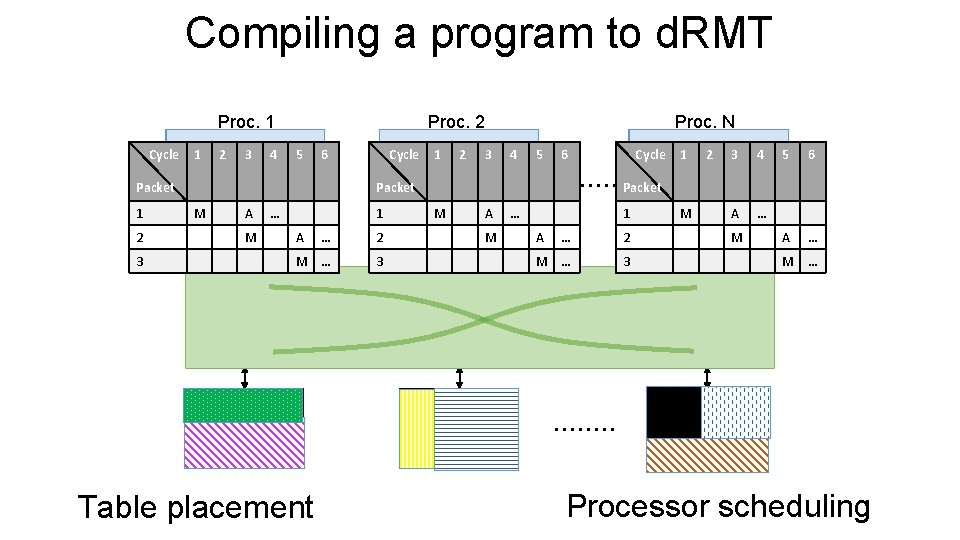

Compiling a program to dRMT

[Figure: two compilation steps. Table placement assigns tables to Memory Clusters 1 … N. Processor scheduling gives each processor (Proc. 1 … Proc. N) a cycle-by-cycle schedule of match (M) and action (A) operations for its packet.]

Crossbar decouples scheduling and placement

• RMT couples scheduling and placement.
• In dRMT, the crossbar decouples them if both scheduling and placement respect crossbar constraints.
• Focus on scheduling alone. Prior work handles table placement (Jose et al., NSDI 2015).

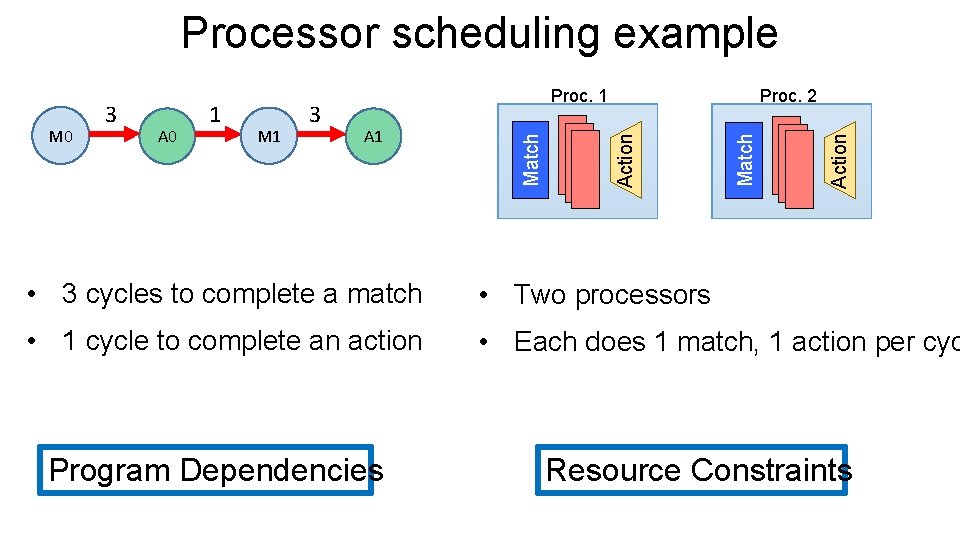

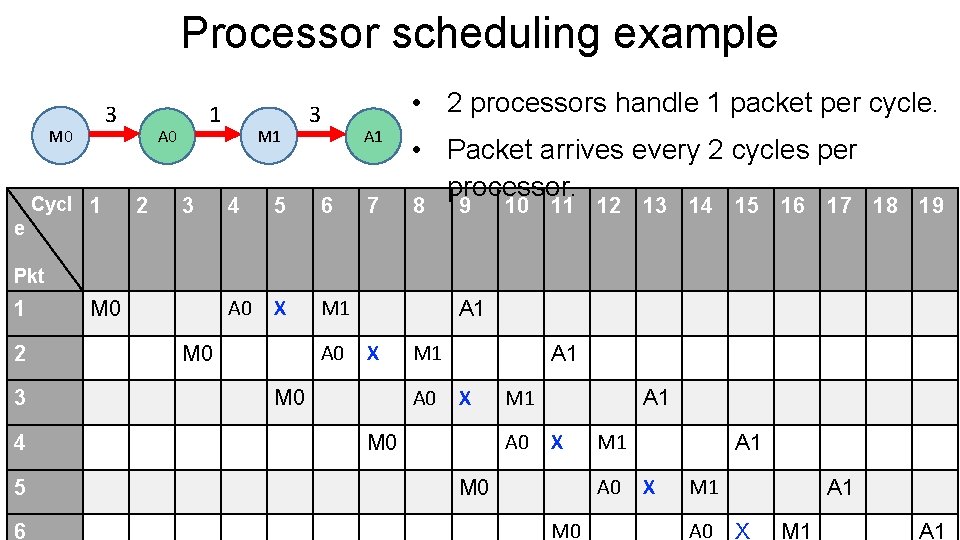

Processor scheduling example

Program dependencies: Match M0 → Action A0 → Match M1 → Action A1 (edge labels 3, 1, 3)
• 3 cycles to complete a match
• 1 cycle to complete an action

Resource constraints:
• Two processors
• Each does 1 match, 1 action per cycle
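Ignoring resource constraints for a moment, the dependencies alone fix the earliest cycle at which each operation can start. The sketch below assumes the graph is the chain M0 → A0 → M1 → A1 and that M0 is issued in cycle 1; the function name is hypothetical.

```python
MATCH_LATENCY = 3   # cycles to complete a match (from the slide)
ACTION_LATENCY = 1  # cycles to complete an action (from the slide)

# Assumed reading of the dependency graph: a simple chain.
CHAIN = ["M0", "A0", "M1", "A1"]


def earliest_start_times(chain, first_cycle=1):
    """Start each operation as soon as its predecessor has completed."""
    times = {}
    cycle = first_cycle
    for op in chain:
        times[op] = cycle
        # Operations named M* are matches, all others are actions.
        cycle += MATCH_LATENCY if op.startswith("M") else ACTION_LATENCY
    return times


if __name__ == "__main__":
    print(earliest_start_times(CHAIN))
```

With these latencies the chain starts M0, A0, M1, A1 in cycles 1, 4, 5, and 8.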

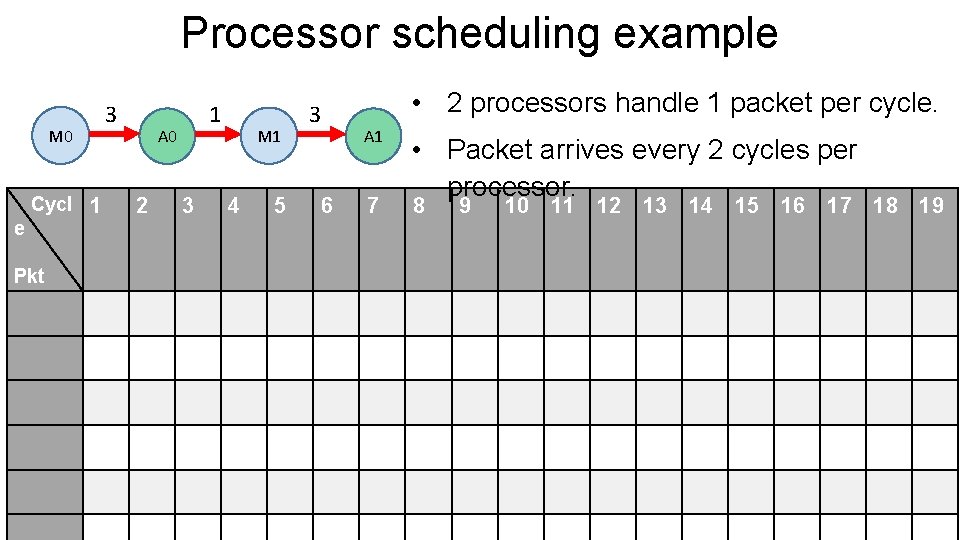

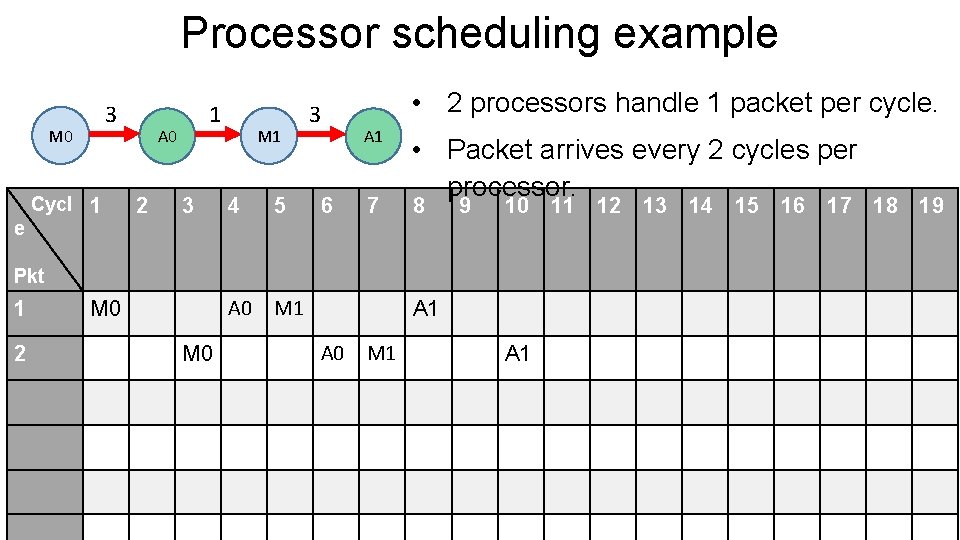

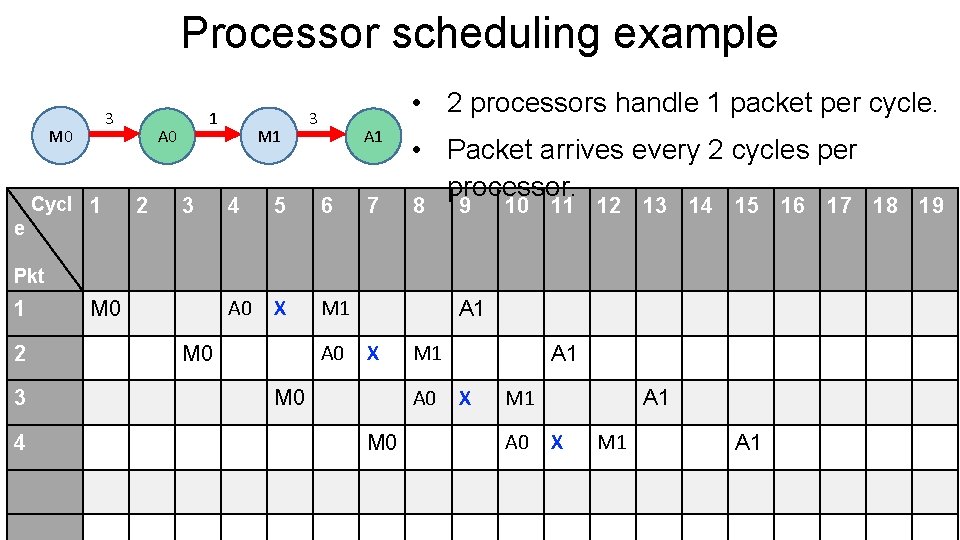

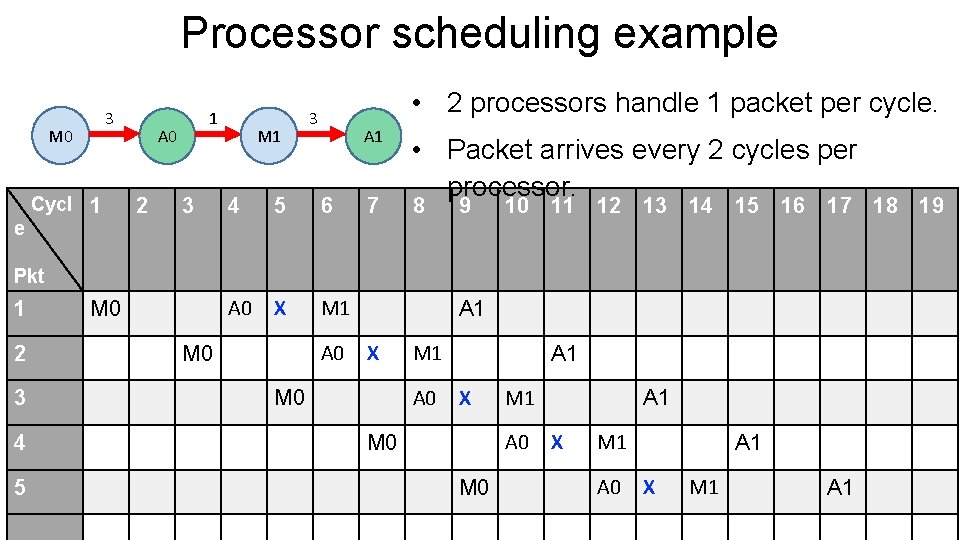

Processor scheduling example

[Figure: the dependency chain M0 → A0 → M1 → A1 beside an empty schedule grid with a Cycle axis (1 to 19) and a Pkt axis.]

• 2 processors handle 1 packet per cycle.
• Packet arrives every 2 cycles per processor.

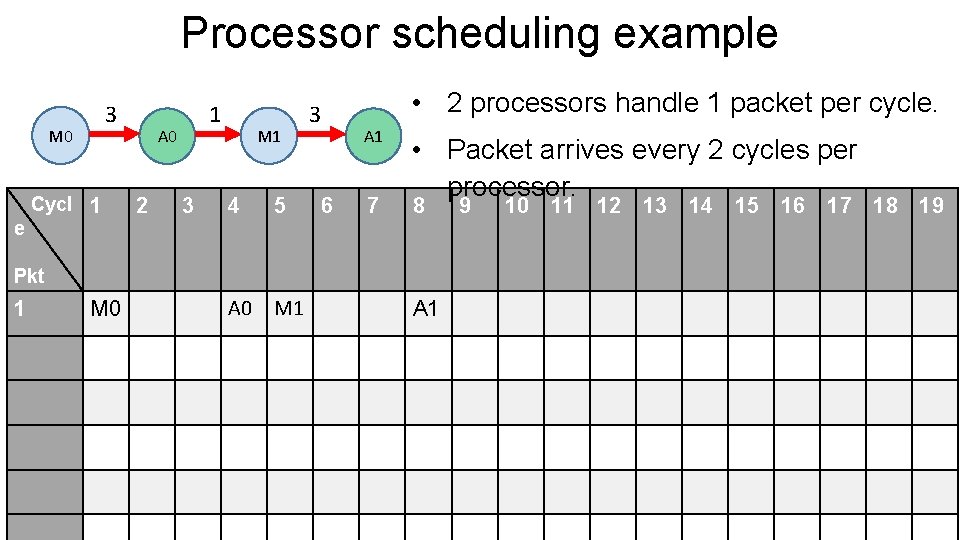

Processor scheduling example (continued)

[Figure: schedule grid with packet 1’s operations M0, A0, M1, A1 placed.]

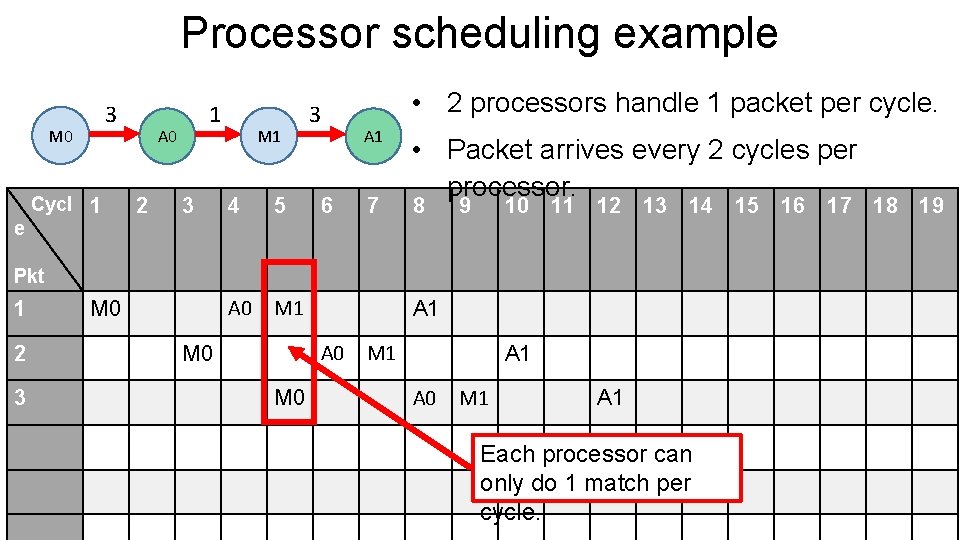

Processor scheduling example (continued)

[Figure: schedule grid with the operations of packets 1 and 2 placed.]

Processor scheduling example (continued)

[Figure: schedule grid with packets 1, 2, and 3 placed; two match operations land in the same cycle.]

Each processor can only do 1 match per cycle.

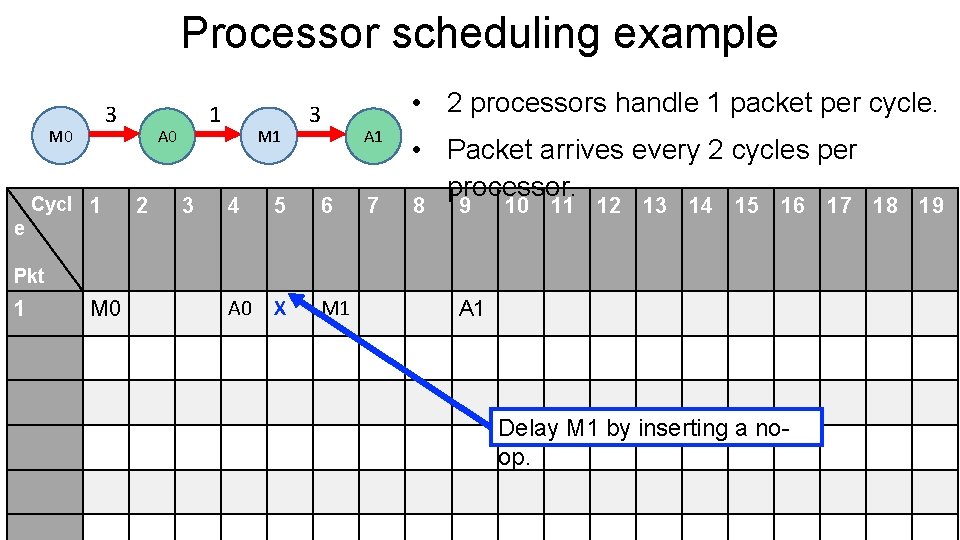

Processor scheduling example (continued)

[Figure: schedule grid for packet 1 with a no-op (X) inserted between A0 and M1.]

Delay M1 by inserting a no-op.

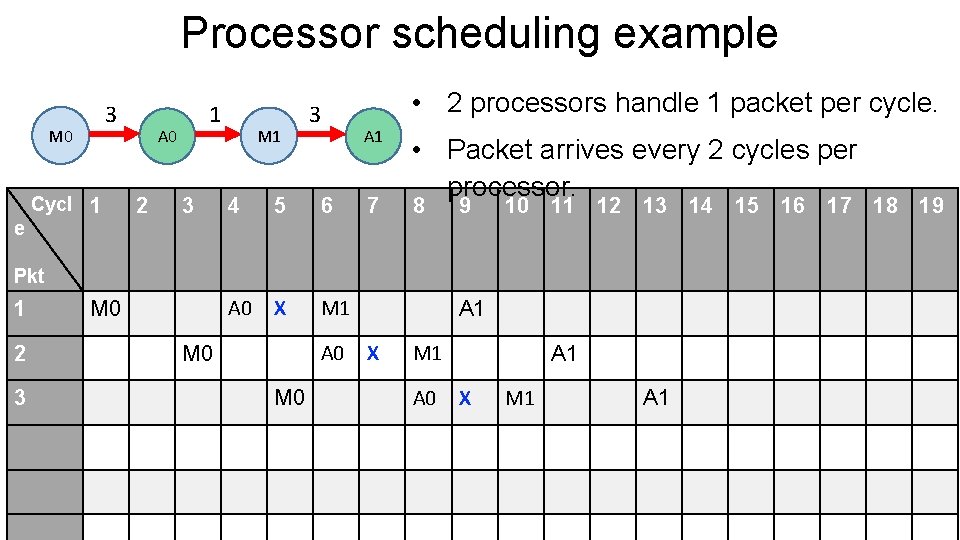

Processor scheduling example (continued)

[Figure: schedule grid with packets 1, 2, and 3, each following the schedule M0, A0, no-op (X), M1, A1.]

Processor scheduling example (continued)

[Figure: schedule grid with packets 1 to 6, each following the schedule M0, A0, no-op (X), M1, A1.]
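Because every packet on a processor follows the same schedule shifted by 2 cycles, two operations collide exactly when their start cycles agree modulo 2. The sketch below checks this for the example. The concrete cycle numbers are assumptions derived from the latencies (M0 in cycle 1, a match takes 3 cycles, an action takes 1), and `conflicts` is a hypothetical helper.

```python
from collections import Counter

PERIOD = 2  # a packet arrives at each processor every 2 cycles


def conflicts(schedule, period=PERIOD, matches_per_cycle=1, actions_per_cycle=1):
    """Return the oversubscribed (kind, cycle mod period) slots of a schedule."""
    load = Counter()
    for op, start in schedule.items():
        kind = "match" if op.startswith("M") else "action"
        load[(kind, start % period)] += 1
    limit = {"match": matches_per_cycle, "action": actions_per_cycle}
    return sorted(slot for slot, count in load.items() if count > limit[slot[0]])


# Back-to-back schedule implied by the dependencies alone (assumed cycle numbers).
naive = {"M0": 1, "A0": 4, "M1": 5, "A1": 8}
# Same schedule with one no-op before M1, which also shifts A1 by one cycle.
with_noop = {"M0": 1, "A0": 4, "M1": 6, "A1": 9}

if __name__ == "__main__":
    print("naive:", conflicts(naive))
    print("with no-op:", conflicts(with_noop))
```

Under these assumptions the back-to-back schedule places M0 and M1 in the same residue class, which is the match collision the slide points out; it also places A0 and A1 in the same class. The single no-op moves M1 and A1 into the other class and removes both collisions.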

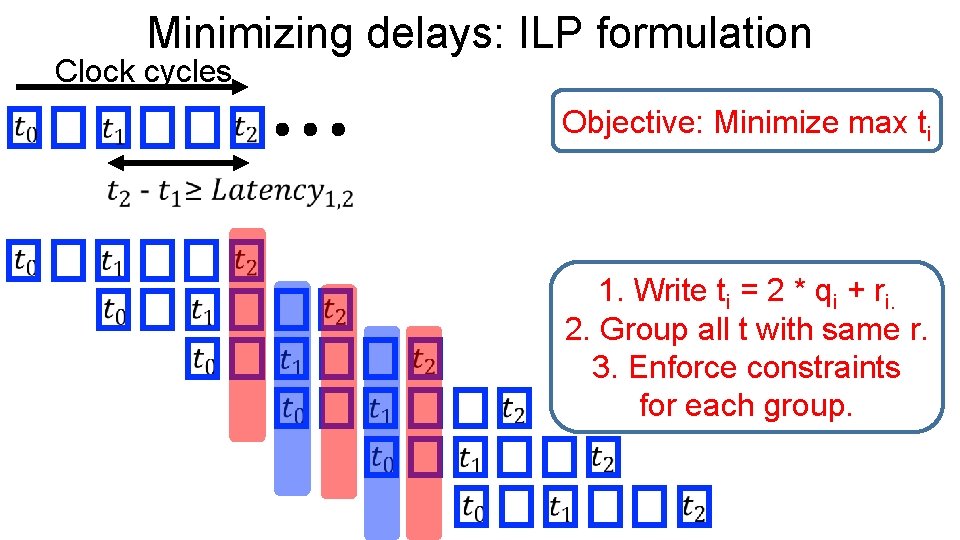

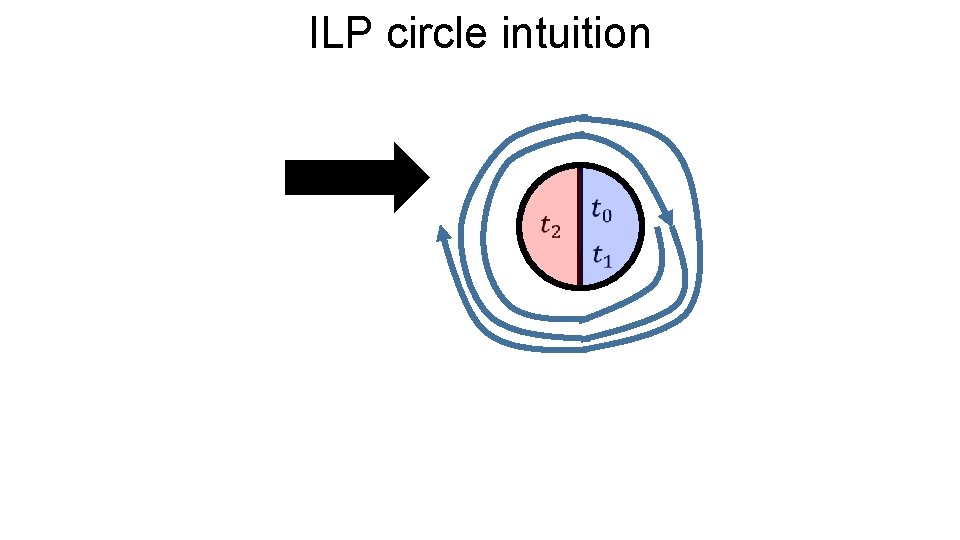

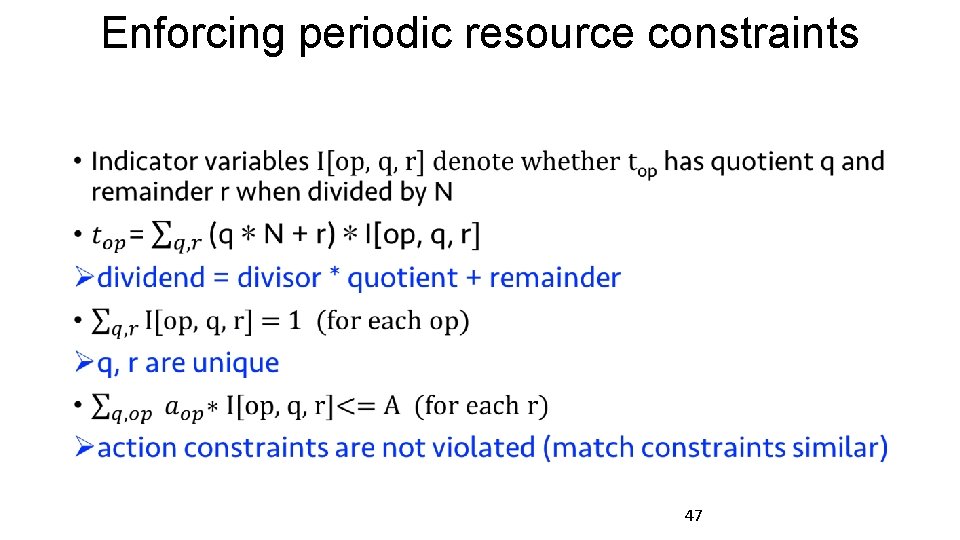

Minimizing delays: ILP formulation

Objective: minimize $\max_i t_i$ (the $t_i$ are clock cycles).
1. Write $t_i = 2 q_i + r_i$.
2. Group all $t_i$ with the same $r_i$.
3. Enforce constraints for each group.
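The three steps can be tried on the running example with an exhaustive search standing in for the ILP solver. This is not the ILP itself, only a brute-force sketch under stated assumptions: cycles are numbered from 0 so that $r_i = t_i \bmod 2$, the dependency graph is the chain M0 → A0 → M1 → A1 with latencies 3 and 1, and each residue group may contain at most one match and one action.

```python
from itertools import product

PERIOD = 2
LATENCY = {"M": 3, "A": 1}       # match and action latencies from the example
OPS = ["M0", "A0", "M1", "A1"]   # assumed dependency chain


def feasible(times):
    """Check dependency constraints and per-residue resource constraints."""
    for prev, nxt in zip(OPS, OPS[1:]):
        if times[nxt] - times[prev] < LATENCY[prev[0]]:
            return False
    for kind in "MA":
        # Group operations of one kind by r = t mod PERIOD; at most one per group.
        residues = [times[op] % PERIOD for op in OPS if op[0] == kind]
        if len(residues) != len(set(residues)):
            return False
    return True


def best_schedule(horizon=12):
    """Exhaustively minimize max t_i with M0 fixed at cycle 0."""
    best = None
    for rest in product(range(horizon), repeat=len(OPS) - 1):
        times = dict(zip(OPS, (0,) + rest))
        if feasible(times) and (best is None or max(times.values()) < max(best.values())):
            best = times
    return best


if __name__ == "__main__":
    print(best_schedule())
```

The search returns M0, A0, M1, A1 at cycles 0, 3, 5, and 8: one cycle longer than the dependency-only schedule, which corresponds to a single no-op before M1.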

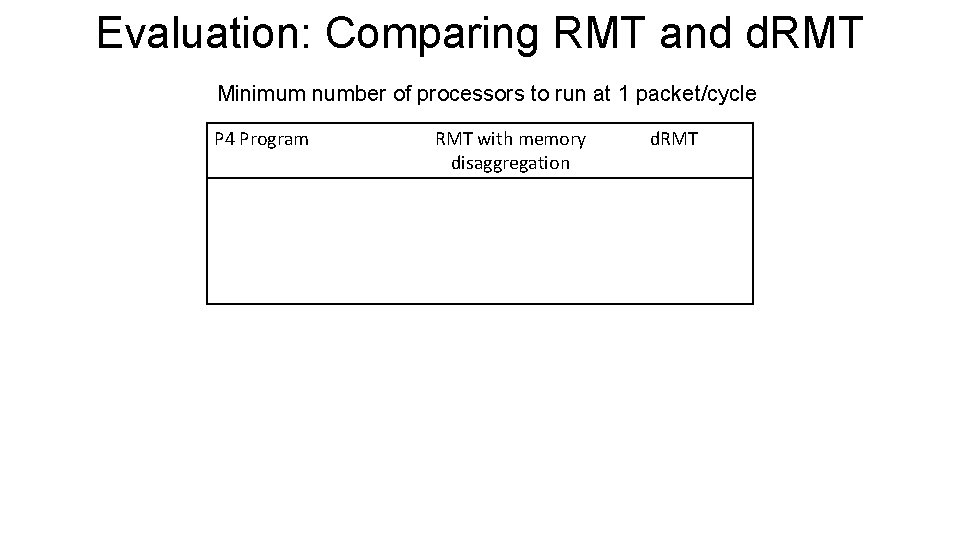

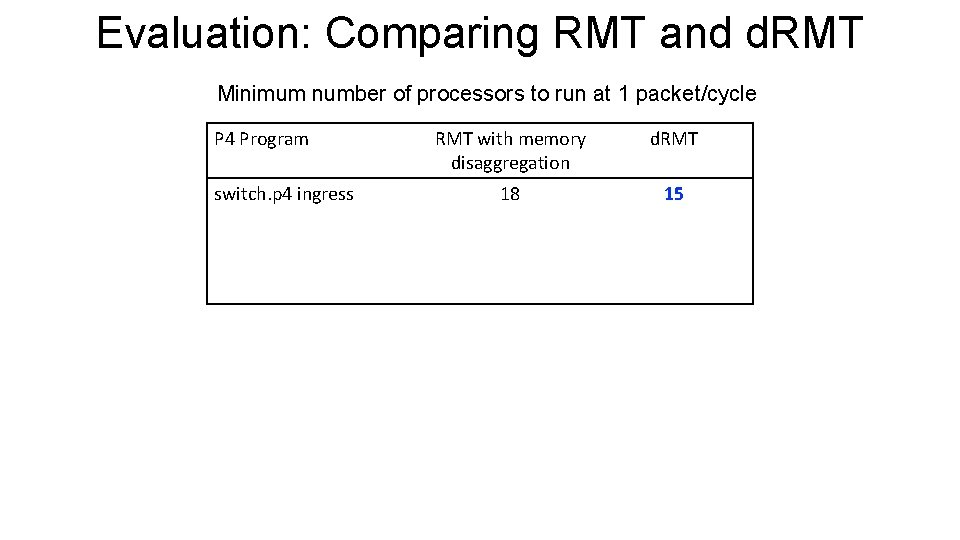

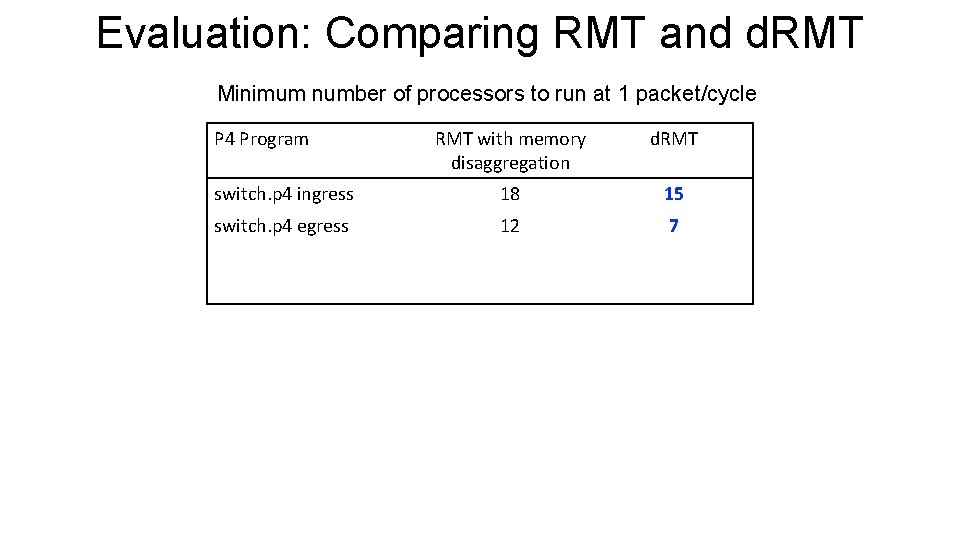



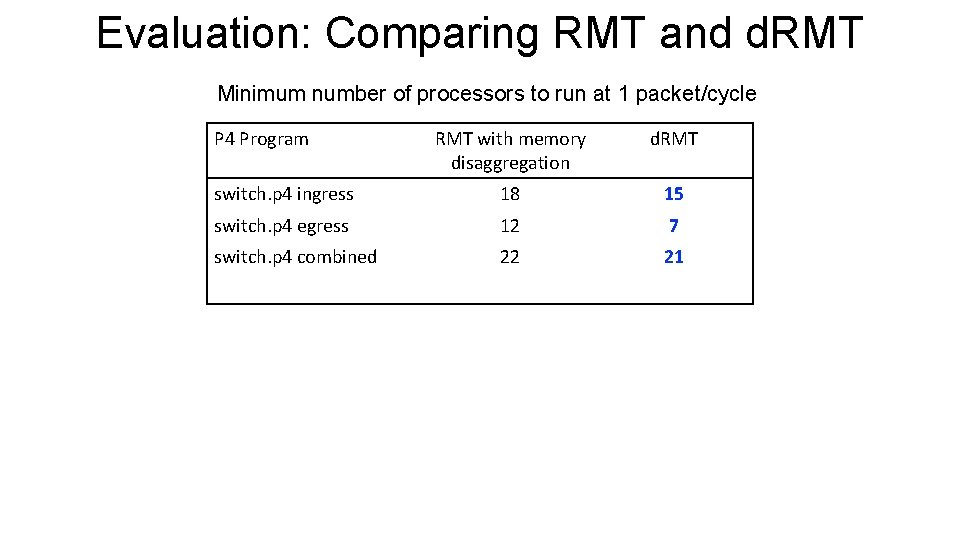



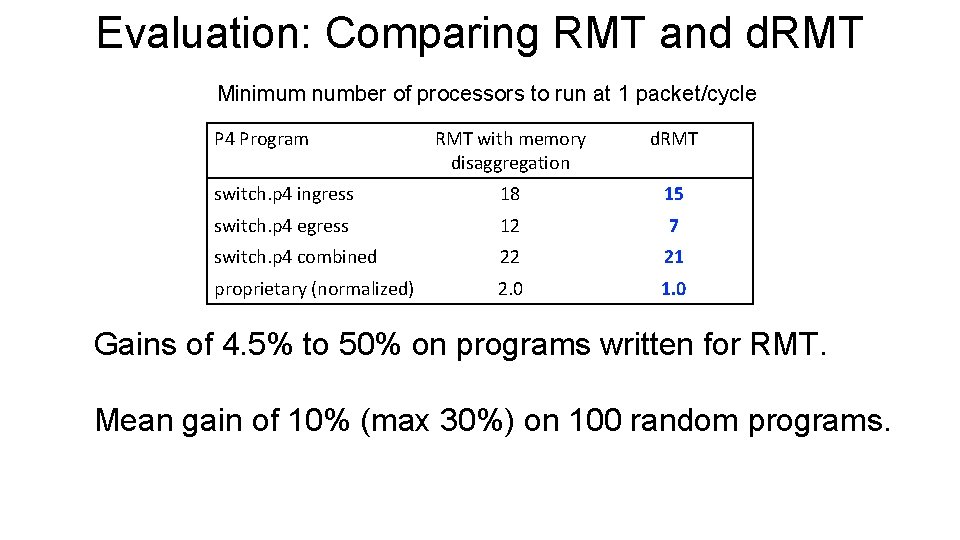

Evaluation: Comparing RMT and dRMT

Minimum number of processors to run at 1 packet/cycle

| P4 program | RMT with memory disaggregation | dRMT |
|---|---|---|
| switch.p4 ingress | 18 | 15 |
| switch.p4 egress | 12 | 7 |
| switch.p4 combined | 22 | 21 |
| proprietary (normalized) | 2.0 | 1.0 |

Gains of 4.5% to 50% on programs written for RMT. Mean gain of 10% (max 30%) on 100 random programs.
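The quoted range of gains can be recomputed from the table. The sketch below assumes that a gain means the relative reduction in processor count, (RMT − dRMT) / RMT; the names are illustrative.

```python
# (RMT with memory disaggregation, dRMT) processor counts from the table.
ROWS = {
    "switch.p4 ingress": (18, 15),
    "switch.p4 egress": (12, 7),
    "switch.p4 combined": (22, 21),
    "proprietary (normalized)": (2.0, 1.0),
}


def gain_percent(rmt, drmt):
    """Relative reduction in processors when moving from RMT to dRMT."""
    return 100.0 * (rmt - drmt) / rmt


gains = {name: round(gain_percent(*pair), 1) for name, pair in ROWS.items()}

if __name__ == "__main__":
    for name, gain in gains.items():
        print(f"{name}: {gain}%")
```

The smallest gain comes from the combined switch.p4 program and the largest from the proprietary program, matching the 4.5% and 50% endpoints on the slide.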

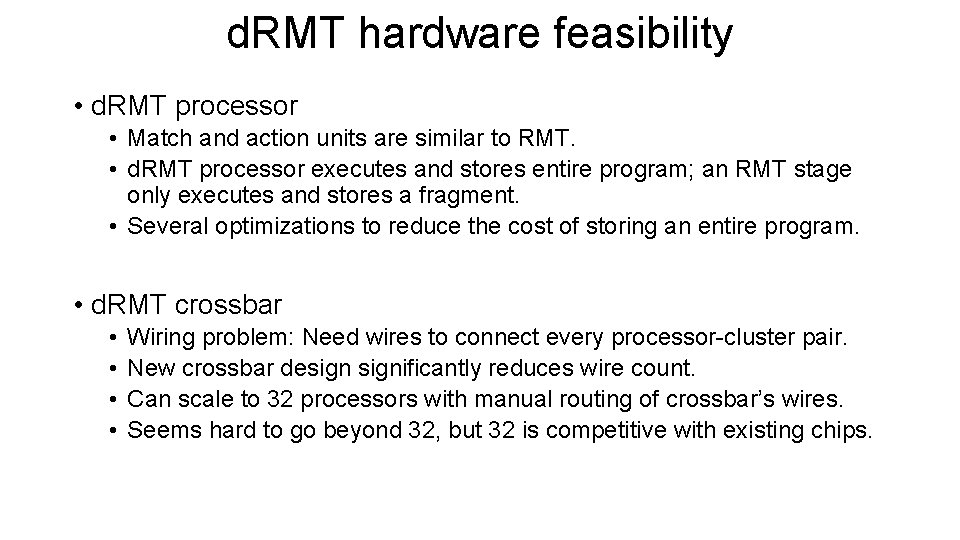

dRMT hardware feasibility

• dRMT processor
  • Match and action units are similar to RMT.
  • A dRMT processor executes and stores the entire program; an RMT stage only executes and stores a fragment.
  • Several optimizations reduce the cost of storing an entire program.
• dRMT crossbar
  • Wiring problem: need wires to connect every processor-cluster pair.
  • New crossbar design significantly reduces wire count.
  • Can scale to 32 processors with manual routing of the crossbar’s wires.
  • Seems hard to go beyond 32, but 32 is competitive with existing chips.

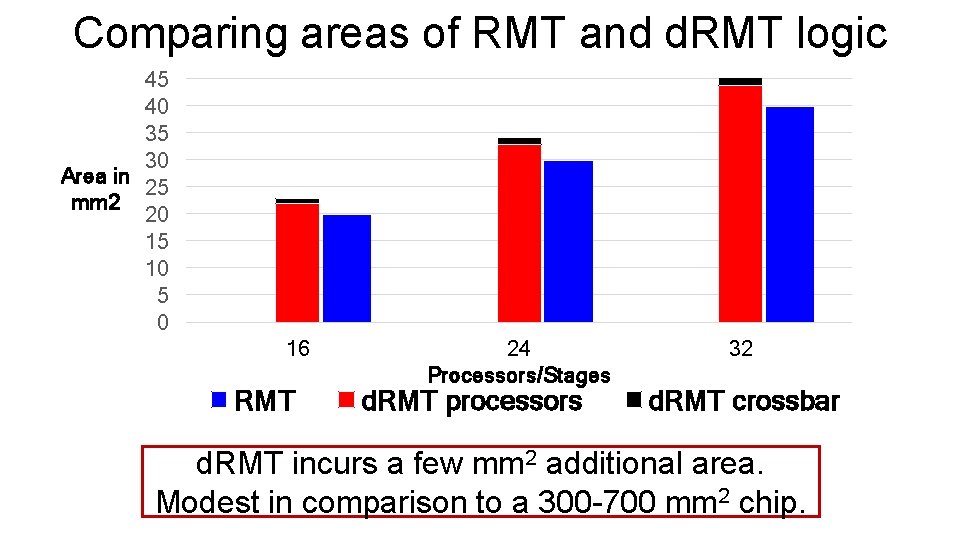

Comparing areas of RMT and dRMT logic

[Figure: bar chart of area in mm² (0 to 45) versus number of processors/stages (16, 24, 32); series: RMT, dRMT processors, dRMT crossbar.]

dRMT incurs a few mm² of additional area. Modest in comparison to a 300-700 mm² chip.

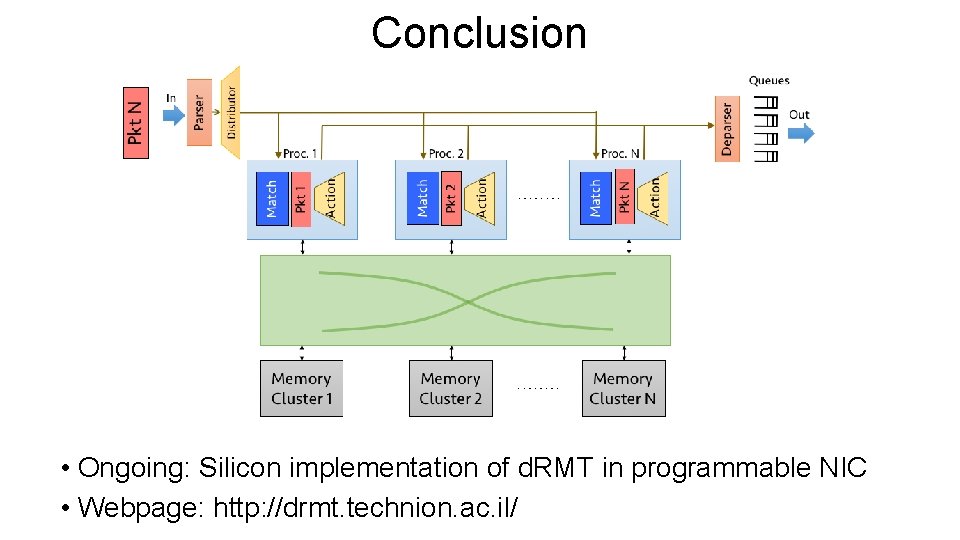

Conclusion

• Ongoing: silicon implementation of dRMT in a programmable NIC
• Webpage: http://drmt.technion.ac.il/

Backup slides

Processor scheduling example

[Figure: schedule grid (cycles 1 to 19) with packets 1 to 4, using the schedule M0, A0, no-op (X), M1, A1.]

• 2 processors handle 1 packet per cycle.
• Packet arrives every 2 cycles per processor.

Processor scheduling example (continued)

[Figure: schedule grid (cycles 1 to 19) with packets 1 to 5, using the schedule M0, A0, no-op (X), M1, A1.]

dRMT eliminates performance cliffs

[Figure: “Performance of switch.p4 egress”. Line chart of packets/cycle (0.00 to 1.00) versus number of processors (0 to 14); series: dRMT and RMT.]

ILP circle intuition

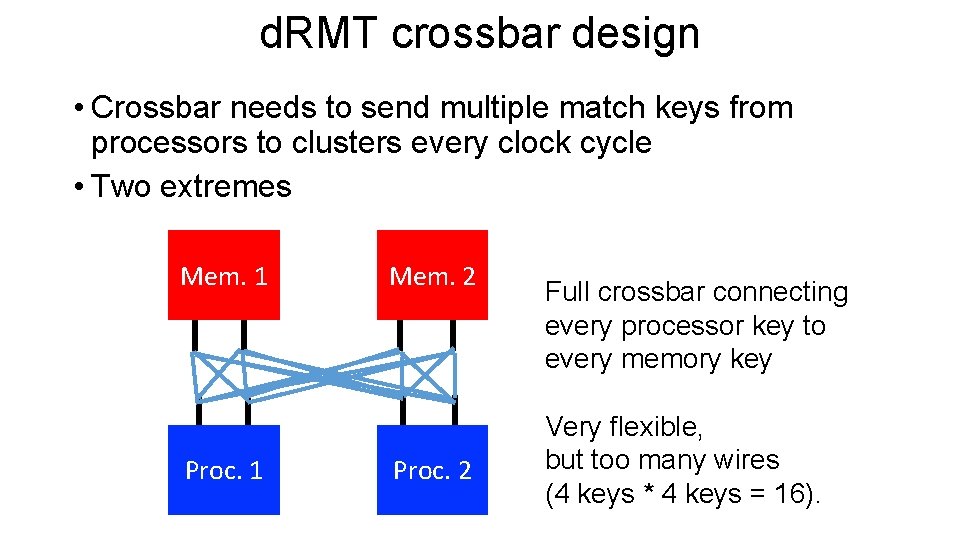

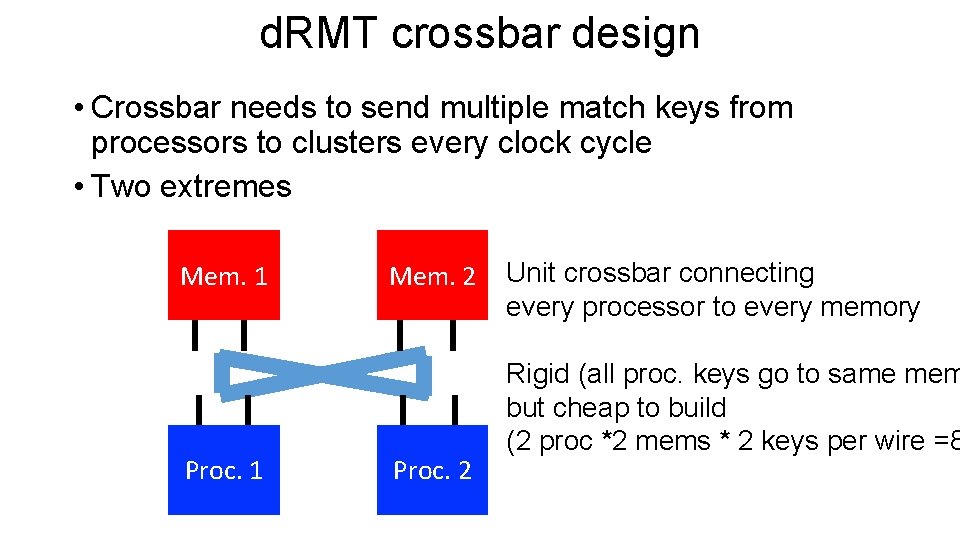

dRMT crossbar design

• Crossbar needs to send multiple match keys from processors to clusters every clock cycle.
• Two extremes:
  • Full crossbar connecting every processor key to every memory key. Very flexible, but too many wires (4 keys * 4 keys = 16).

[Figure: Proc. 1 and Proc. 2 connected to Mem. 1 and Mem. 2 by a full crossbar.]

dRMT crossbar design (continued)

• Two extremes:
  • Unit crossbar connecting every processor to every memory. Rigid (all of a processor’s keys go to the same memory) but cheap to build (2 procs * 2 mems * 2 keys per wire = 8).

[Figure: Proc. 1 and Proc. 2 connected to Mem. 1 and Mem. 2 by a unit crossbar.]

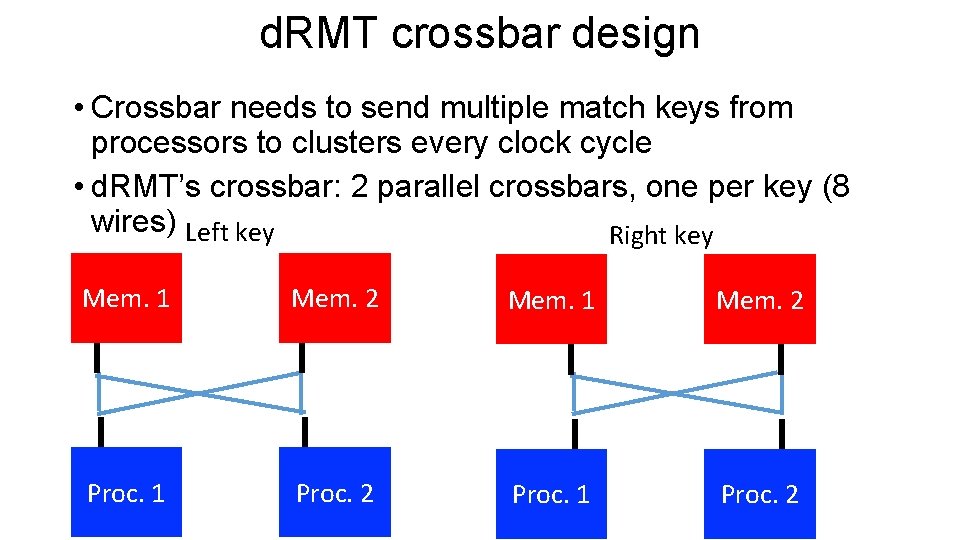

dRMT crossbar design (continued)

• dRMT’s crossbar: 2 parallel crossbars, one per key (8 wires).

[Figure: a left-key crossbar and a right-key crossbar, each connecting Proc. 1 and Proc. 2 to Mem. 1 and Mem. 2.]
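The wire counts quoted on these slides follow from simple products. The sketch below reproduces them for the 2-processor, 2-memory, 2-key example; the function names are illustrative, and the counts are logical key-wide links as on the slides, not physical wires.

```python
def full_crossbar_wires(procs: int, mems: int, keys: int) -> int:
    """Every processor key connects to every memory key."""
    return (procs * keys) * (mems * keys)


def unit_crossbar_wires(procs: int, mems: int, keys: int) -> int:
    """Every processor connects to every memory; all of its keys travel together."""
    return procs * mems * keys


def parallel_crossbar_wires(procs: int, mems: int, keys: int) -> int:
    """One procs-by-mems crossbar per key position, as in dRMT's design."""
    return keys * (procs * mems)


if __name__ == "__main__":
    p, m, k = 2, 2, 2
    print("full:", full_crossbar_wires(p, m, k))
    print("unit:", unit_crossbar_wires(p, m, k))
    print("parallel:", parallel_crossbar_wires(p, m, k))
```

For the example this gives 16, 8, and 8. The parallel design grows like the unit crossbar (linearly in the number of keys), whereas the full crossbar grows quadratically in it.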

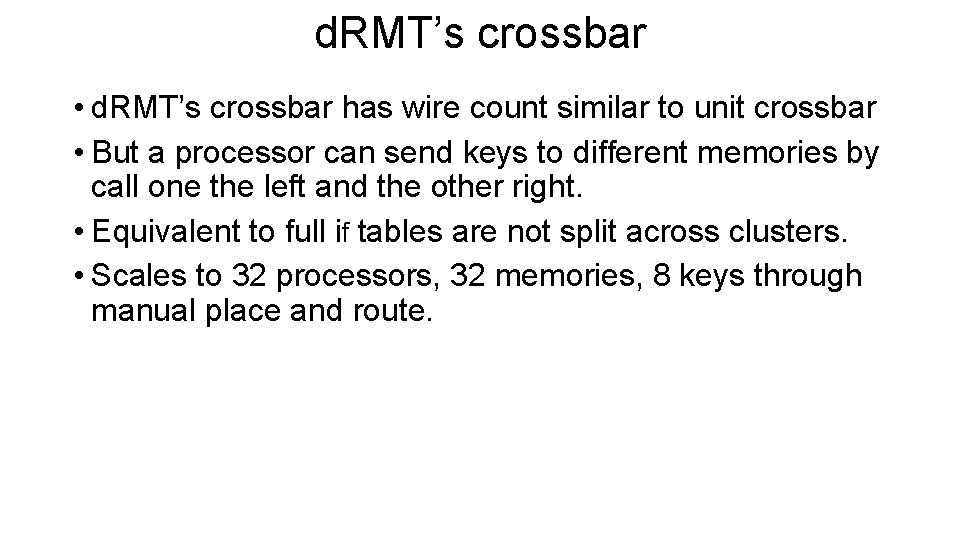

dRMT’s crossbar

• dRMT’s crossbar has a wire count similar to the unit crossbar.
• But a processor can send keys to different memories by calling one the left key and the other the right key.
• Equivalent to a full crossbar if tables are not split across clusters.
• Scales to 32 processors, 32 memories, and 8 keys through manual place and route.

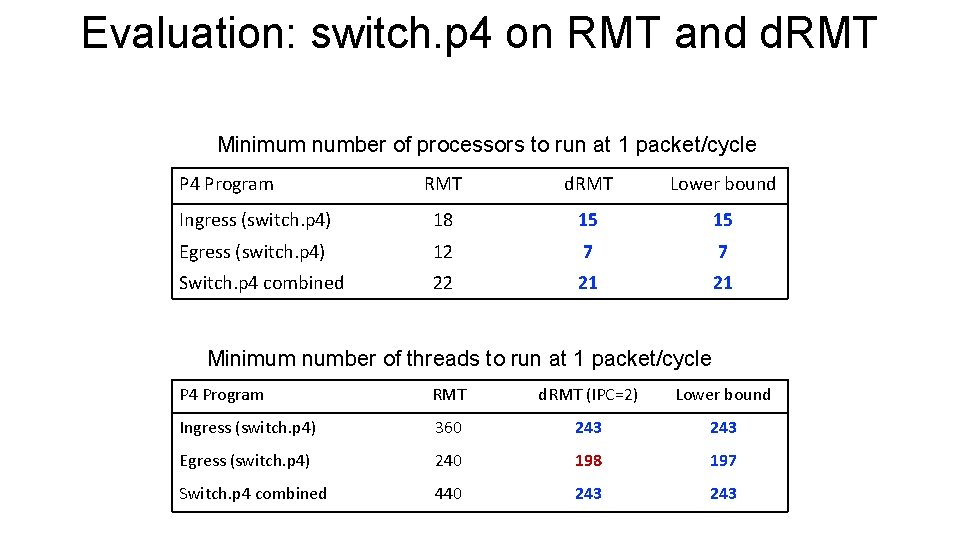

Evaluation: switch.p4 on RMT and dRMT

Minimum number of processors to run at 1 packet/cycle

| P4 program | RMT | dRMT | Lower bound |
|---|---|---|---|
| Ingress (switch.p4) | 18 | 15 | 15 |
| Egress (switch.p4) | 12 | 7 | 7 |
| switch.p4 combined | 22 | 21 | 21 |

Minimum number of threads to run at 1 packet/cycle (columns: P4 program, RMT, dRMT (IPC=2), Lower bound)

Ingress (switch.p4): 360, 243
Egress (switch.p4): 240, 198, 197
switch.p4 combined: 440, 243

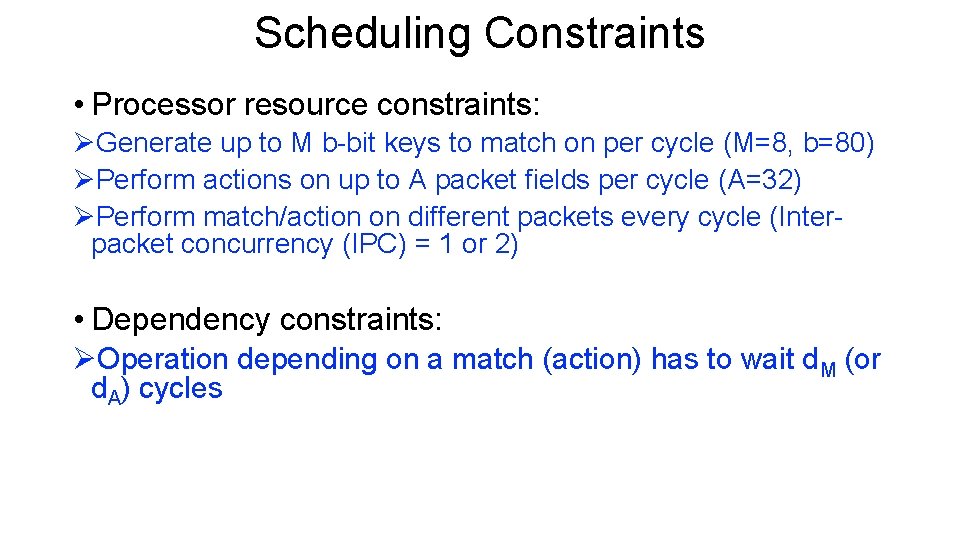

Scheduling Constraints

• Processor resource constraints:
  ➢ Generate up to M b-bit keys to match on per cycle (M=8, b=80)
  ➢ Perform actions on up to A packet fields per cycle (A=32)
  ➢ Perform match/action on different packets every cycle (inter-packet concurrency (IPC) = 1 or 2)
• Dependency constraints:
  ➢ An operation depending on a match (action) has to wait dM (or dA) cycles
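The processor resource constraints can be checked mechanically for a candidate schedule. The sketch below is one possible reading, not the compiler's actual check: operations whose start cycles share a residue modulo the arrival period run in the same cycle, operations with different quotients belong to different packets, and the tuple layout and `violations` helper are hypothetical.

```python
from collections import defaultdict

M_KEYS = 8     # keys a processor can generate per cycle (from the slide)
A_FIELDS = 32  # packet fields a processor can act on per cycle (from the slide)


def violations(ops, period, ipc=1):
    """List constraint violations for one processor's per-packet schedule.

    ops: iterable of (kind, start_cycle, units), where kind is "match"
    (units = keys generated) or "action" (units = fields modified).
    """
    used = defaultdict(int)
    packets = defaultdict(set)
    for kind, start, units in ops:
        q, r = divmod(start, period)
        used[(kind, r)] += units   # resources consumed in residue class r
        packets[(kind, r)].add(q)  # distinct packets active in that class
    capacity = {"match": M_KEYS, "action": A_FIELDS}
    found = []
    for (kind, r), total in sorted(used.items()):
        if total > capacity[kind]:
            found.append((kind, r, "capacity"))
        if len(packets[(kind, r)]) > ipc:
            found.append((kind, r, "ipc"))
    return found


if __name__ == "__main__":
    schedule = [("match", 0, 4), ("match", 2, 4), ("action", 3, 10)]
    print(violations(schedule, period=2, ipc=1))
    print(violations(schedule, period=2, ipc=2))
```

In the usage example the two matches fit within the 8-key budget but belong to two different packets in the same cycle, so the schedule is rejected for IPC = 1 and accepted for IPC = 2.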

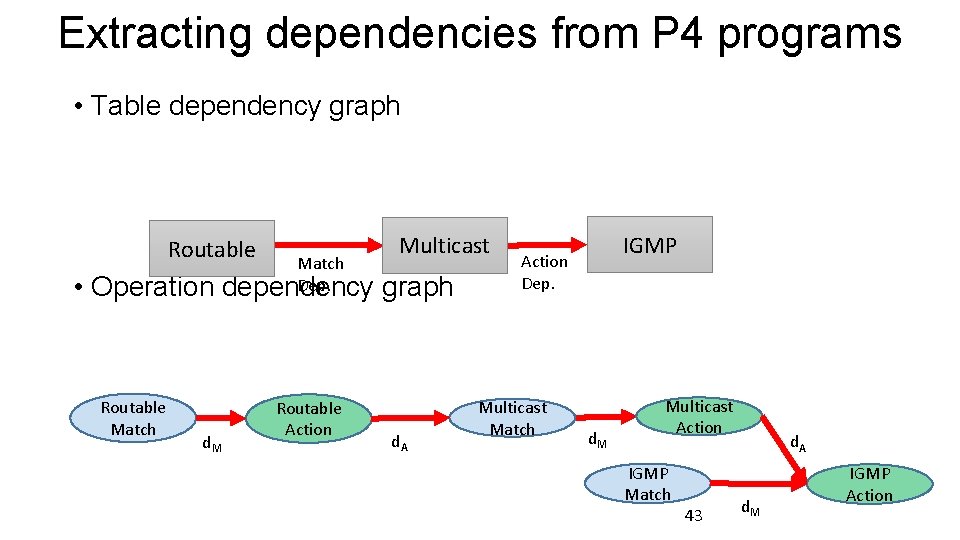

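Extracting dependencies from P4 programs

• Table dependency graph
• Operation dependency graph

[Figure: a table dependency graph over the tables Routable, Multicast, and IGMP, with edges labeled Match Dep. and Action Dep., and the corresponding operation dependency graph over Routable Match, Routable Action, Multicast Match, Multicast Action, IGMP Match, and IGMP Action, with edges labeled dM and dA.]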

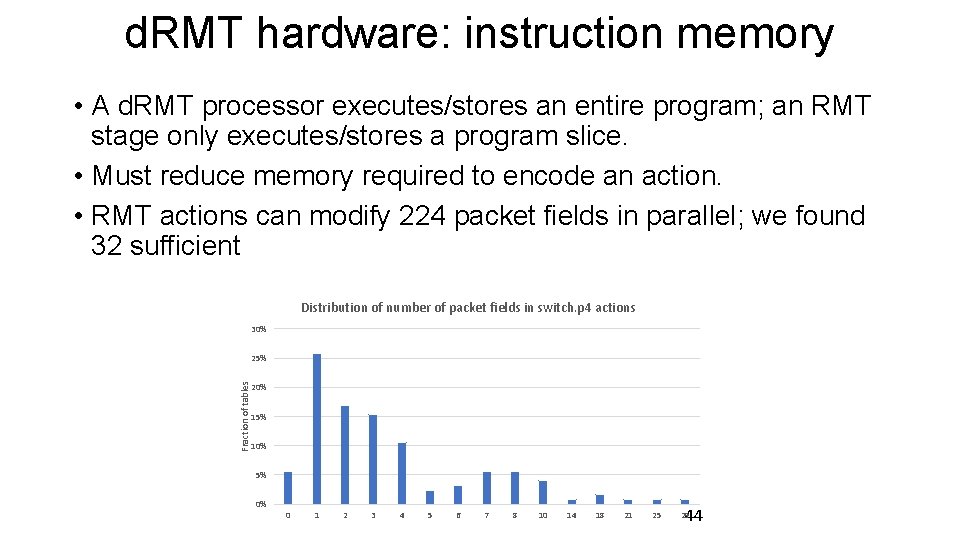

dRMT hardware: instruction memory

• A dRMT processor executes/stores an entire program; an RMT stage only executes/stores a program slice.
• Must reduce the memory required to encode an action.
• RMT actions can modify 224 packet fields in parallel; we found 32 sufficient.

[Figure: histogram, “Distribution of number of packet fields in switch.p4 actions”. Y-axis: fraction of tables (0% to 30%); x-axis: number of packet fields (0 to 29).]

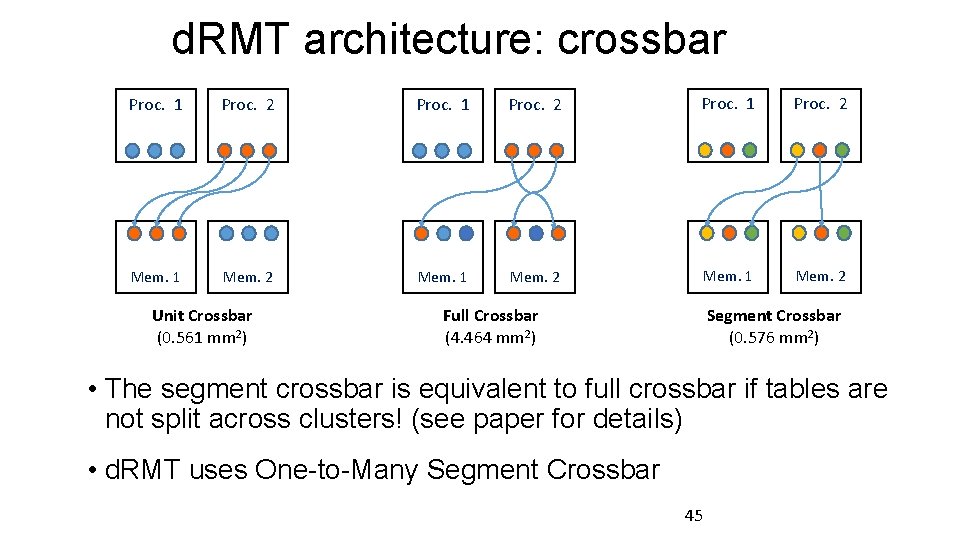

dRMT architecture: crossbar

[Figure: three crossbar designs connecting Proc. 1 and Proc. 2 to Mem. 1 and Mem. 2: Unit Crossbar (0.561 mm²), Full Crossbar (4.464 mm²), Segment Crossbar (0.576 mm²).]

• The segment crossbar is equivalent to a full crossbar if tables are not split across clusters (see paper for details).
• dRMT uses a One-to-Many Segment Crossbar.

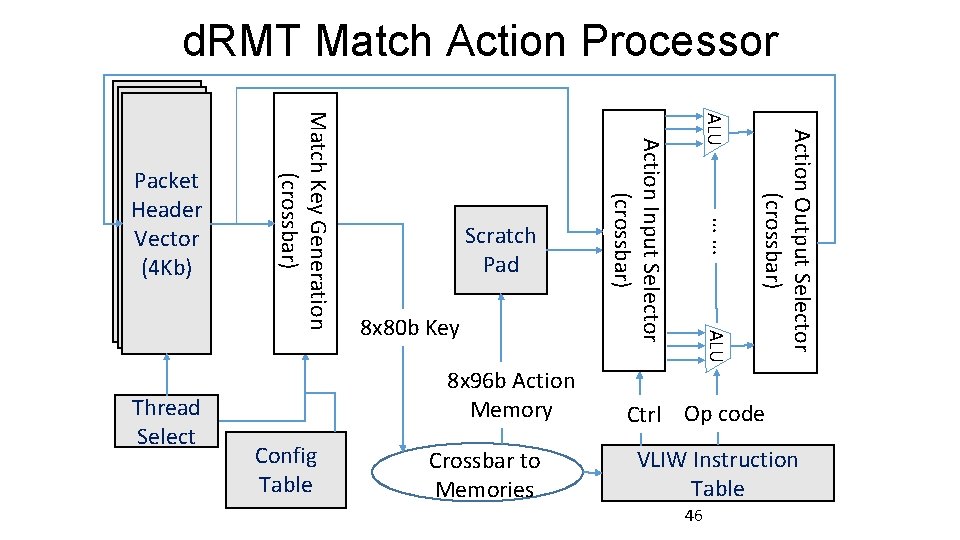

dRMT Match Action Processor

[Figure: block diagram of a dRMT match-action processor. Labels: Packet Header Vector (4 Kb), Thread Select, Match Key Generation (crossbar), 8 x 80b Key, Crossbar to Memories, 8 x 96b, Action Memory, Config Table, Action Input Selector (crossbar), ALU …… ALU, Scratch Pad, Action Output Selector (crossbar), VLIW Instruction Table, Ctrl, Op code.]

Enforcing periodic resource constraints

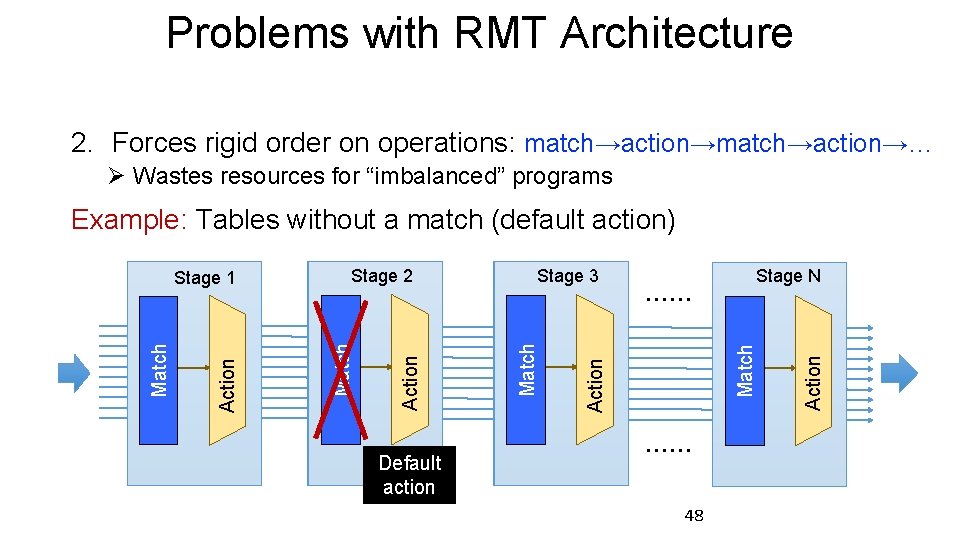

Problems with RMT Architecture

2. Forces a rigid order on operations: match → action → …
  ➢ Wastes resources for “imbalanced” programs.
  ➢ Example: tables without a match (default action).

[Figure: RMT Stages 1, 2, 3, …, N, each with Match followed by Action; one stage is labeled “Default action”.]
