D Online Monitoring And Automatic DAQ Recovery Monitor

- Slides: 1

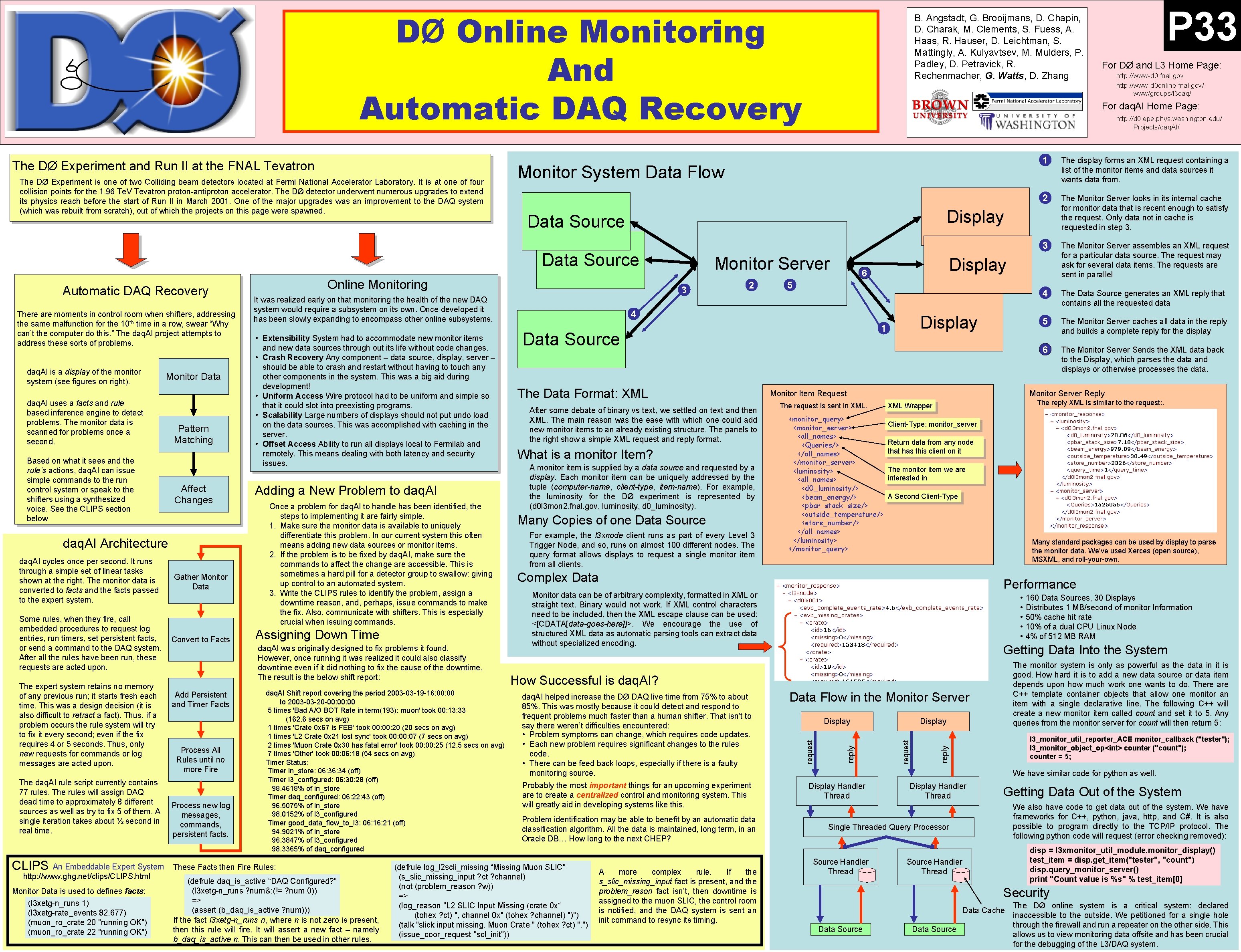

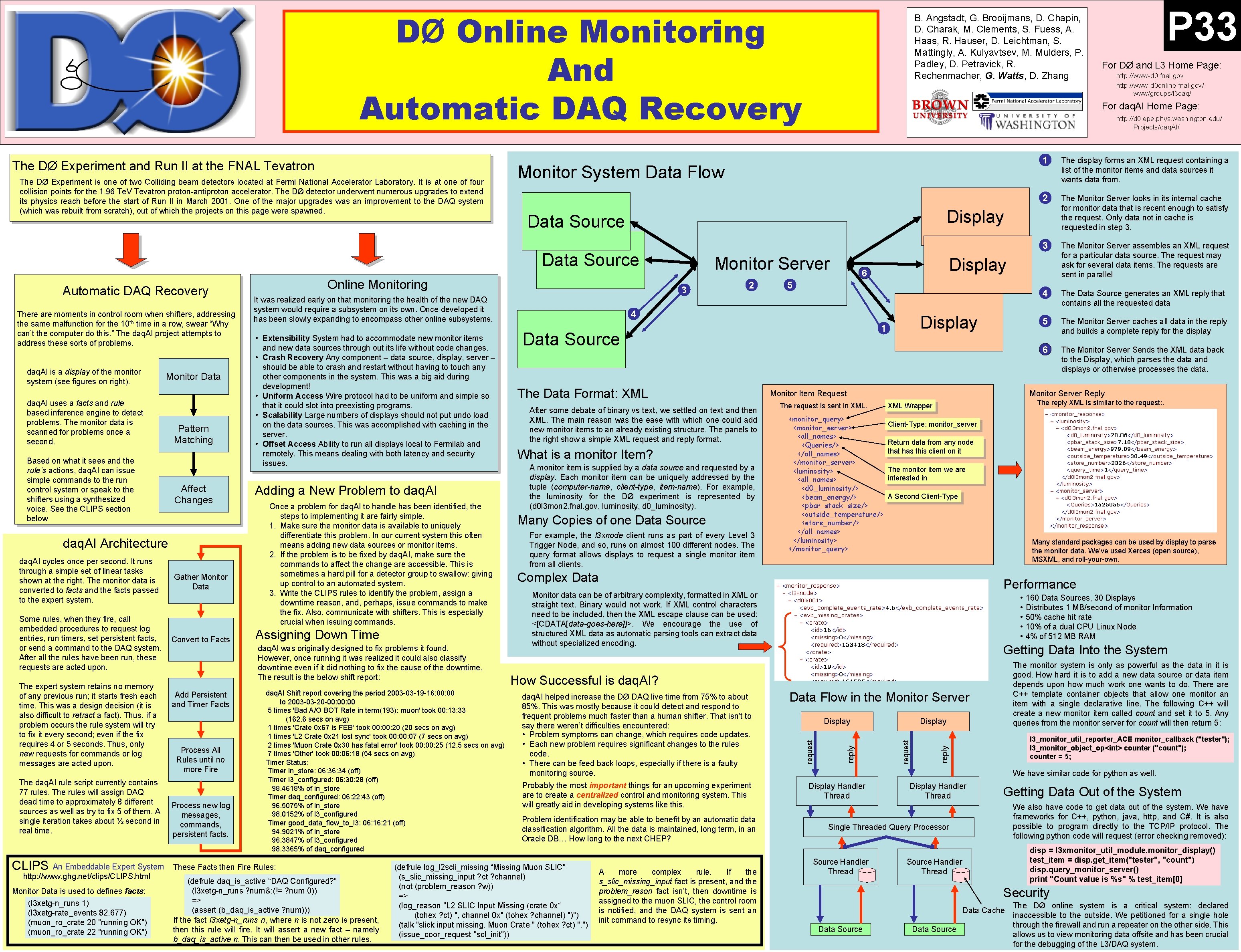

DØ Online Monitoring And Automatic DAQ Recovery Monitor System Data Flow daq. AI is a display of the monitor system (see figures on right). Monitor Data daq. AI uses a facts and rule based inference engine to detect problems. The monitor data is scanned for problems once a second. Based on what it sees and the rule’s actions, daq. AI can issue simple commands to the run control system or speak to the shifters using a synthesized voice. See the CLIPS section below Pattern Matching Affect Changes daq. AI Architecture daq. AI cycles once per second. It runs through a simple set of linear tasks shown at the right. The monitor data is converted to facts and the facts passed to the expert system. Gather Monitor Data Some rules, when they fire, call embedded procedures to request log entries, run timers, set persistent facts, or send a command to the DAQ system. After all the rules have been run, these requests are acted upon. Convert to Facts The expert system retains no memory of any previous run; it starts fresh each time. This was a design decision (it is also difficult to retract a fact). Thus, if a problem occurs the rule system will try to fix it every second; even if the fix requires 4 or 5 seconds. Thus, only new requests for commands or log messages are acted upon. The daq. AI rule script currently contains 77 rules. The rules will assign DAQ dead time to approximately 8 different sources as well as try to fix 5 of them. A single iteration takes about ½ second in real time. CLIPS An Embeddable Expert System http: //www. ghg. net/clips/CLIPS. html Monitor Data is used to defines facts: (l 3 xetg-n_runs 1) (l 3 xetg-rate_events 82. 677) (muon_ro_crate 20 "running OK") (muon_ro_crate 22 "running OK") Add Persistent and Timer Facts Process All Rules until no more Fire Process new log messages, commands, persistent facts. 3 It was realized early on that monitoring the health of the new DAQ system would require a subsystem on its own. Once developed it has been slowly expanding to encompass other online subsystems. • Extensibility System had to accommodate new monitor items and new data sources through out its life without code changes. • Crash Recovery Any component – data source, display, server – should be able to crash and restart without having to touch any other components in the system. This was a big aid during development! • Uniform Access Wire protocol had to be uniform and simple so that it could slot into preexisting programs. • Scalability Large numbers of displays should not put undo load on the data sources. This was accomplished with caching in the server. • Offset Access Ability to run all displays local to Fermilab and remotely. This means dealing with both latency and security issues. Adding a New Problem to daq. AI Once a problem for daq. AI to handle has been identified, the steps to implementing it are fairly simple. 1. Make sure the monitor data is available to uniquely differentiate this problem. In our current system this often means adding new data sources or monitor items. 2. If the problem is to be fixed by daq. AI, make sure the commands to affect the change are accessible. This is sometimes a hard pill for a detector group to swallow: giving up control to an automated system. 3. Write the CLIPS rules to identify the problem, assign a downtime reason, and, perhaps, issue commands to make the fix. Also, communicate with shifters. This is especially crucial when issuing commands. Assigning Down Time daq. AI was originally designed to fix problems it found. However, once running it was realized it could also classify downtime even if it did nothing to fix the cause of the downtime. The result is the below shift report: daq. AI Shift report covering the period 2003 -03 -19 -16: 00 to 2003 -03 -20 -00: 00 5 times 'Bad A/O BOT Rate in term(193): muon' took 00: 13: 33 (162. 6 secs on avg) 1 times 'Crate 0 x 67 is FEB' took 00: 20 (20 secs on avg) 1 times 'L 2 Crate 0 x 21 lost sync' took 00: 07 (7 secs on avg) 2 times 'Muon Crate 0 x 30 has fatal error' took 00: 25 (12. 5 secs on avg) 7 times 'Other' took 00: 06: 18 (54 secs on avg) Timer Status: Timer in_store: 06: 34 (off) Timer l 3_configured: 06: 30: 28 (off) 98. 4618% of in_store Timer daq_configured: 06: 22: 43 (off) 96. 5075% of in_store 98. 0152% of l 3_configured Timer good_data_flow_to_l 3: 06: 16: 21 (off) 94. 9021% of in_store 96. 3847% of l 3_configured 98. 3365% of daq_configured These Facts then Fire Rules: (defrule daq_is_active “DAQ Configured? " (l 3 xetg-n_runs ? num&: (!= ? num 0)) => (assert (b_daq_is_active ? num))) If the fact l 3 xetg-n_runs n, where n is not zero is present, then this rule will fire. It will assert a new fact – namely b_daq_is_active n. This can then be used in other rules. 2 5 4 The Data Format: XML After some debate of binary vs text, we settled on text and then XML. The main reason was the ease with which one could add new monitor items to an already existing structure. The panels to the right show a simple XML request and reply format. What is a monitor Item? A monitor item is supplied by a data source and requested by a display. Each monitor item can be uniquely addressed by the tuple (computer-name, client-type, item-name). For example, the luminosity for the DØ experiment is represented by (d 0 l 3 mon 2. fnal. gov, luminosity, d 0_luminosity). Many Copies of one Data Source For example, the l 3 xnode client runs as part of every Level 3 Trigger Node, and so, runs on almost 100 different nodes. The query format allows displays to request a single monitor item from all clients. Display 1 Data Source 2 The Monitor Server looks in its internal cache for monitor data that is recent enough to satisfy the request. Only data not in cache is requested in step 3. 3 The Monitor Server assembles an XML request for a particular data source. The request may ask for several data items. The requests are sent in parallel 4 The Data Source generates an XML reply that contains all the requested data 5 The Monitor Server caches all data in the reply and builds a complete reply for the display 6 The Monitor Server Sends the XML data back to the Display, which parses the data and displays or otherwise processes the data. Monitor Server Reply Monitor Item Request The request is sent in XML. <monitor_query> <monitor_server> <all_names> <Queries/> </all_names> </monitor_server> <luminosity> <all_names> <d 0_luminosity/> <beam_energy/> <pbar_stack_size/> <outside_temperature/> <store_number/> </all_names> </luminosity> </monitor_query> The reply XML is similar to the request: . XML Wrapper Client-Type: monitor_server Return data from any node that has this client on it The monitor item we are interested in A Second Client-Type Many standard packages can be used by display to parse the monitor data. We’ve used Xerces (open source), MSXML, and roll-your-own. Complex Data Performance Monitor data can be of arbitrary complexity, formatted in XML or straight text. Binary would not work. If XML control characters need to be included, then the XML escape clause can be used: <[CDATA[data-goes-here]]>. We encourage the use of structured XML data as automatic parsing tools can extract data without specialized encoding. • • • daq. AI helped increase the DØ DAQ live time from 75% to about 85%. This was mostly because it could detect and respond to frequent problems much faster than a human shifter. That isn’t to say there weren’t difficulties encountered: • Problem symptoms can change, which requires code updates. • Each new problem requires significant changes to the rules code. • There can be feed back loops, especially if there is a faulty monitoring source. Probably the most important things for an upcoming experiment are to create a centralized control and monitoring system. This will greatly aid in developing systems like this. Problem identification may be able to benefit by an automatic data classification algorithm. All the data is maintained, long term, in an Oracle DB… How long to the next CHEP? A more complex rule. If the s_slic_missing_input fact is present, and the problem_reson fact isn’t, then downtime is assigned to the muon SLIC, the control room is notified, and the DAQ system is sent an init command to resync its timing. 160 Data Sources, 30 Displays Distributes 1 MB/second of monitor Information 50% cache hit rate 10% of a dual CPU Linux Node 4% of 512 MB RAM Getting Data Into the System The monitor system is only as powerful as the data in it is good. How hard it is to add a new data source or data item depends upon how much work one wants to do. There are C++ template container objects that allow one monitor an item with a single declarative line. The following C++ will create a new monitor item called count and set it to 5. Any queries from the monitor server for count will then return 5: How Successful is daq. AI? (defrule log_l 2 scli_missing “Missing Muon SLIC" (s_slic_missing_input ? channel) (not (problem_reason ? w)) => (log_reason "L 2 SLIC Input Missing (crate 0 x“ (tohex ? ct) ", channel 0 x" (tohex ? channel) ")") (talk "slick input missing. Muon Crate " (tohex ? ct) ". ") (issue_coor_request "scl_init")) The display forms an XML request containing a list of the monitor items and data sources it wants data from. Display 6 Data Flow in the Monitor Server Display l 3_monitor_util_reporter_ACE monitor_callback ("tester"); l 3_monitor_object_op<int> counter ("count"); counter = 5; reply There are moments in control room when shifters, addressing the same malfunction for the 10 th time in a row, swear “Why can’t the computer do this. ” The daq. AI project attempts to address these sorts of problems. Monitor Server request Automatic DAQ Recovery Online Monitoring http: //www-d 0. fnal. gov http: //www-d 0 online. fnal. gov/ www/groups/l 3 daq/ 1 Display Data Source For DØ and L 3 Home Page: http: //d 0. epe. phys. washington. edu/ Projects/daq. AI/ reply The DØ Experiment is one of two Colliding beam detectors located at Fermi National Accelerator Laboratory. It is at one of four collision points for the 1. 96 Te. V Tevatron proton-antiproton accelerator. The DØ detector underwent numerous upgrades to extend its physics reach before the start of Run II in March 2001. One of the major upgrades was an improvement to the DAQ system (which was rebuilt from scratch), out of which the projects on this page were spawned. P 33 For daq. AI Home Page: request The DØ Experiment and Run II at the FNAL Tevatron B. Angstadt, G. Brooijmans, D. Chapin, D. Charak, M. Clements, S. Fuess, A. Haas, R. Hauser, D. Leichtman, S. Mattingly, A. Kulyavtsev, M. Mulders, P. Padley, D. Petravick, R. Rechenmacher, G. Watts, D. Zhang We have similar code for python as well. Display Handler Thread Getting Data Out of the System We also have code to get data out of the system. We have frameworks for C++, python, java, http, and C#. It is also possible to program directly to the TCP/IP protocol. The following python code will request (error checking removed): Single Threaded Query Processor Source Handler Thread disp = l 3 xmonitor_util_module. monitor_display() test_item = disp. get_item("tester", "count") disp. query_monitor_server() print "Count value is %s" % test_item[0] Source Handler Thread Security Data Cache Data Source The DØ online system is a critical system: declared inaccessible to the outside. We petitioned for a single hole through the firewall and run a repeater on the other side. This allows us to view monitoring data offsite and has been crucial for the debugging of the L 3/DAQ system.