
Curious Characters in Multiuser Games: A Study in Motivated Reinforcement Learning for Creative Behavior Policies*
Mary Lou Maher, University of Sydney
AAAI AI and Fun Workshop, July 2010
* Based on Merrick, K. and Maher, M. L. (2009) Motivated Reinforcement Learning: Curious Characters for Multiuser Games, Springer.

Outline
- Curiosity and Fun
- Motivation
- Motivated Reinforcement Learning
- An Agent Model of a Curious Character
- Evaluation of Behavior Policies

Can AI Model Fun?
Claim: An agent motivated by curiosity to learn patterns is a model of fun.

Games try to achieve flow: a function of the player's skill and performance.
J. Chen, Flow in games (and everything else), Communications of the ACM 50(4): 31-34, 2007.

Why Motivated Reinforcement Learning?
- More efficient learning:
  - External reward not known at design time
  - Complement external reward with internal reward
  - Design tasks
  - Real-world scenarios: robotics
  - Virtual-world scenarios: NPCs in computer games
- More autonomy in determining learning tasks:
  - Robotics
  - NPCs in computer games
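The slide does not say how internal and external rewards are combined. As a minimal sketch, assuming a simple weighted sum (the weight `w_int` and the helper names are hypothetical) fed into a standard tabular Q-learning update, "complementing" an absent external reward with an internal one looks like this:

```python
from collections import defaultdict
from typing import DefaultDict, Hashable, List, Tuple

QTable = DefaultDict[Tuple[Hashable, Hashable], float]


def combined_reward(r_ext: float, r_int: float, w_int: float = 0.5) -> float:
    # Assumption: external reward plus a weighted internal (motivation) reward.
    return r_ext + w_int * r_int


def q_update(q: QTable, state, action, next_state, actions: List[Hashable],
             r_ext: float, r_int: float,
             alpha: float = 0.1, gamma: float = 0.9, w_int: float = 0.5) -> float:
    # One tabular Q-learning step whose reward is the combined reward above.
    r = combined_reward(r_ext, r_int, w_int)
    best_next = max(q[(next_state, a)] for a in actions)
    q[(state, action)] += alpha * (r + gamma * best_next - q[(state, action)])
    return q[(state, action)]


q: QTable = defaultdict(float)
actions = ["move", "pick_up"]
# No external reward is available (0.0), but the internal reward still drives learning.
v = q_update(q, "s0", "pick_up", "s1", actions, r_ext=0.0, r_int=1.0)
```

With `r_ext=0.0` the Q-value still moves (here to 0.05), which is the point of the slide: learning proceeds even when no task reward was specified at design time.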

Models of Motivation
- Cognitive: interest, competency, challenge
- Biological: stasis variables (energy, blood pressure, etc.)
- Social: conformity, peer pressure

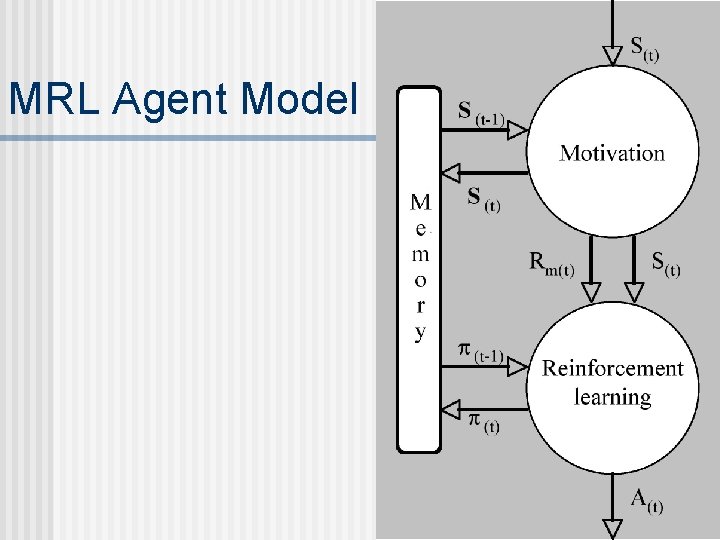

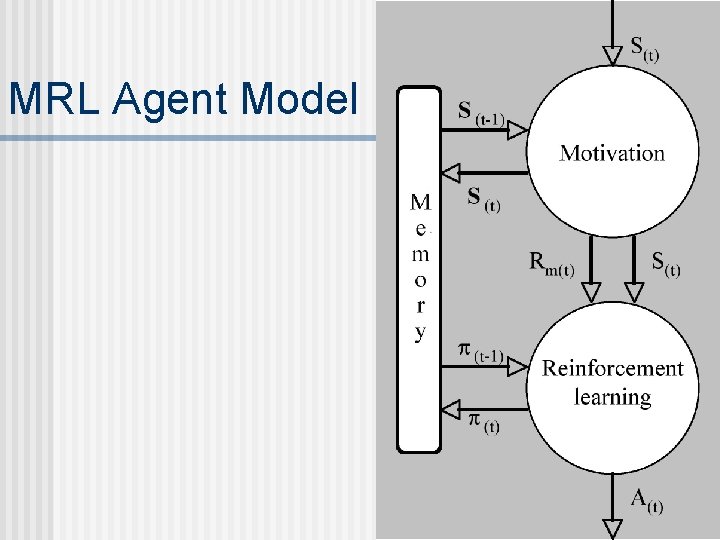

MRL Agent Model

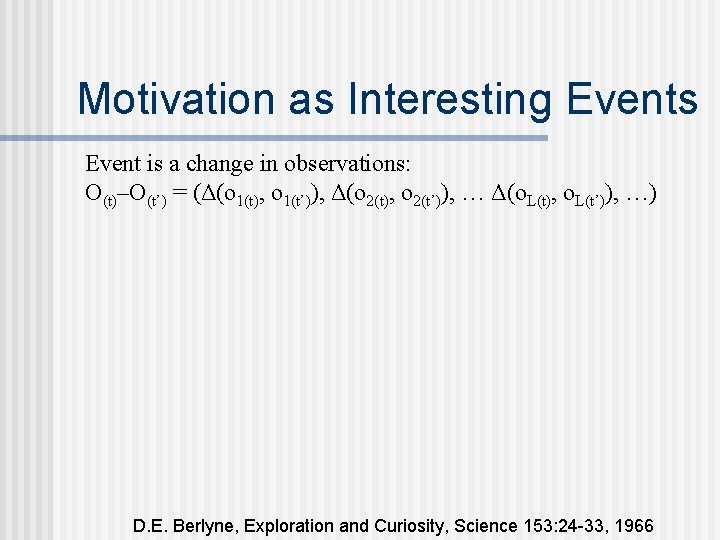

Motivation as Interesting Events
An event is a change in observations:
$$O(t) - O(t') = \left(\Delta(o_1(t), o_1(t')),\ \Delta(o_2(t), o_2(t')),\ \ldots,\ \Delta(o_L(t), o_L(t')),\ \ldots\right)$$
D. E. Berlyne, Exploration and Curiosity, Science 153: 24-33, 1966.
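The slide leaves the difference function $\Delta$ unspecified. A minimal sketch, assuming fixed-length observations of numeric sensations and taking $\Delta$ to be plain subtraction (both assumptions, as are the helper names), computes an event component by component:

```python
from typing import Sequence, Tuple


def delta(new: float, old: float) -> float:
    # Assumed difference function for numeric sensations: plain subtraction.
    return new - old


def event(o_t: Sequence[float], o_prev: Sequence[float]) -> Tuple[float, ...]:
    # E = O(t) - O(t'): apply delta to each pair of corresponding sensations.
    if len(o_t) != len(o_prev):
        raise ValueError("this sketch assumes observations of equal length")
    return tuple(delta(a, b) for a, b in zip(o_t, o_prev))


def is_event(e: Tuple[float, ...]) -> bool:
    # Hypothetical helper: an event occurred if at least one sensation changed.
    return any(d != 0 for d in e)


e = event((3, 5, 1), (3, 4, 1))  # (0, 1, 0): only the second sensation changed
```

Variable-length observations (the trailing "…" in the formula) would need a rule for sensations that appear or disappear; that rule is not given here and is left out of the sketch.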

Sensed States: Context-Free Grammar (CFG)
CFG = $(V_S, \Gamma_S, \Psi_S, S)$, where:
- $V_S$ is a set of variables or syntactic categories,
- $\Gamma_S$ is a finite set of terminals such that $V_S \cap \Gamma_S = \emptyset$,
- $\Psi_S$ is a set of productions $V \rightarrow v$, where $V$ is a variable and $v$ is a string of terminals and variables,
- $S$ is the start symbol.

Thus, the general form of a sensed state is:
- S -> <sensations>
- <sensations> -> <P_iSensations><sensations> | ε
- <P_iSensations> -> <s_L><P_iSensations> | ε
- <s_L> -> <number> | <string>
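One way to make the grammar concrete, assuming a sensed state is stored as a list of lists (one inner list per $P_i$, each holding number or string sensations), is a small validator. The representation and function names are hypothetical, and rejecting booleans is a design choice of this sketch:

```python
from numbers import Number
from typing import Any


def is_sensation(x: Any) -> bool:
    # <s_L> -> <number> | <string>; bool is excluded because Python counts it as a Number.
    return (isinstance(x, Number) and not isinstance(x, bool)) or isinstance(x, str)


def is_sensed_state(s: Any) -> bool:
    # S -> <sensations>: a (possibly empty, i.e. epsilon) sequence of <P_iSensations>,
    # each itself a (possibly empty) sequence of <s_L> terminals.
    if not isinstance(s, (list, tuple)):
        return False
    return all(
        isinstance(p, (list, tuple)) and all(is_sensation(x) for x in p)
        for p in s
    )


# Two groups of sensations, e.g. one about a tree and one about an axe.
state = [["tree", 12, 4], ["axe"]]
```

Because each level is just a list, the recursive productions with ε reduce to "any number of items, including zero".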

MRL for Non-Player Characters

Habituated Self-Organizing Map
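The slide gives only the name of the component. A minimal sketch, assuming a self-organizing map whose neurons each carry a habituation value updated by Stanley's habituation model, $\tau \frac{dN}{dt} = \alpha(N_0 - N) - S(t)$, discretized with one Euler step per input, shows how repeated stimuli become less novel. The class name, the parameter values (`tau=3.3`, `alpha=1.05`), and the winner-only weight update (no neighborhood) are illustrative assumptions, not the authors' settings:

```python
import numpy as np


class HabituatedSOM:
    """Sketch: SOM neurons with a habituating novelty value per neuron."""

    def __init__(self, n_neurons: int, dim: int, lr: float = 0.2,
                 tau: float = 3.3, alpha: float = 1.05, n0: float = 1.0,
                 seed: int = 0) -> None:
        rng = np.random.default_rng(seed)
        self.w = rng.random((n_neurons, dim))    # prototype vectors
        self.h = np.full(n_neurons, n0)          # habituation (novelty), starts at n0
        self.lr, self.tau, self.alpha, self.n0 = lr, tau, alpha, n0

    def step(self, x) -> tuple:
        x = np.asarray(x, dtype=float)
        # Best-matching neuron by Euclidean distance.
        winner = int(np.argmin(np.linalg.norm(self.w - x, axis=1)))
        novelty = float(self.h[winner])          # novelty before this exposure
        # Move only the winner toward the input (a full SOM also moves neighbors).
        self.w[winner] += self.lr * (x - self.w[winner])
        # Euler step of tau * dN/dt = alpha * (n0 - N) - S with S = 1 for the
        # winner (habituation) and S = 0 for all other neurons (recovery).
        s = np.zeros_like(self.h)
        s[winner] = 1.0
        self.h += (self.alpha * (self.n0 - self.h) - s) / self.tau
        return winner, novelty


som = HabituatedSOM(n_neurons=4, dim=2)
for _ in range(3):
    winner, novelty = som.step([0.5, 0.5])  # novelty falls on each repeat
```

Repeatedly presenting the same input drives the winner's value toward a floor of $N_0 - 1/\alpha$, while neurons that stop winning recover toward $N_0$; an interest function could then be applied to this novelty signal.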

Behavioral Variety
- Behavioral variety measures the number of events for which a near-optimal policy is learned.
- We characterize the level of optimality of a policy learned to achieve the event $E(t)$ in terms of its structural stability.
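The slide does not define the structural-stability test. A minimal sketch, assuming the agent logs, per event, how many actions each repetition of that event took, and calling a policy stable when its last `window` repetitions all lie within a relative `tolerance` of their mean (hypothetical names and thresholds), counts behavioral variety as:

```python
from statistics import mean
from typing import Dict, List


def is_stable(lengths: List[int], window: int = 3, tolerance: float = 0.1) -> bool:
    # Hypothetical stability test: the last `window` repetitions each took a number
    # of actions within `tolerance` (relative) of their mean.
    if len(lengths) < window:
        return False
    recent = lengths[-window:]
    m = mean(recent)
    return all(abs(n - m) <= tolerance * m for n in recent)


def behavioral_variety(history: Dict[str, List[int]],
                       window: int = 3, tolerance: float = 0.1) -> int:
    # Number of events whose learned policy passes the stability test.
    return sum(is_stable(lengths, window, tolerance) for lengths in history.values())


# Actions taken per repetition of each (hypothetical) event.
history = {
    "cut_tree": [9, 6, 4, 4, 4],   # settled at 4 actions: stable
    "pick_up_axe": [3, 2, 2, 2],   # settled at 2 actions: stable
    "forge_sword": [12, 7],        # too few repetitions to judge
}
variety = behavioral_variety(history)
```

Under these assumptions `variety` is 2; a different stability criterion would change which events count.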

Behavioral Complexity
- The complexity of a policy can be measured by averaging the mean number of actions $\bar{a}_{E(t)}$ required to repeat $E(t)$ at any time when the current behavior is stable.
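Read as a formula over the set $\mathcal{E}_s$ of events with stable behavior, this is $C = \frac{1}{|\mathcal{E}_s|}\sum_{E \in \mathcal{E}_s} \bar{a}_E$. A minimal sketch, assuming the caller passes only the action counts recorded while each event's behavior was stable (filtered by whatever stability test is used; the names here are hypothetical):

```python
from statistics import mean
from typing import Dict, List


def mean_actions(lengths: List[int]) -> float:
    # a-bar_E: mean number of actions taken to repeat event E.
    return mean(lengths)


def behavioral_complexity(stable_history: Dict[str, List[int]]) -> float:
    # Average a-bar_E over events whose behavior is stable; 0.0 if there are none.
    if not stable_history:
        return 0.0
    return mean(mean_actions(lengths) for lengths in stable_history.values())


complexity = behavioral_complexity({"cut_tree": [4, 4, 4], "pick_up_axe": [2, 2, 2]})
```

Here the per-event means are 4 and 2, so the complexity is 3 actions.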

Research Directions
- Scalability and dynamics: different RL approaches, such as decision trees and neural network (NN) function approximation
- Motivation functions: competence, optimal challenge, social models

Relevance to AI and Fun
- Is it more fun to play with curious NPCs?
- Can a curious agent play a game to test how fun the game is?