CUDA Taking Advantage of Lockstep Execution Martin Kruli

![Synchronization � Example of __syncwarp() unsigned tid = thread. Idx. x; shmem[tid] shmem[tid] += Synchronization � Example of __syncwarp() unsigned tid = thread. Idx. x; shmem[tid] shmem[tid] +=](https://slidetodoc.com/presentation_image_h/235bcfc4be7766504682082bcf7fdf1d/image-14.jpg)

- Slides: 31

CUDA: Taking Advantage of Lock-step Execution Martin Kruliš by Martin Kruliš (v 2. 0) 4. 11. 2020 1

SIMT Revision � Single ◦ ◦ Instruction Multiple Threads Rather new concept in Flynn’s Taxonomy Similar to SIMD Threads in a block (group) execute in virtual SIMT Smaller groups (warps) are executed in physical SIMT (sometimes called lock-step) by Martin Kruliš (v 2. 0) 4. 11. 2020 2

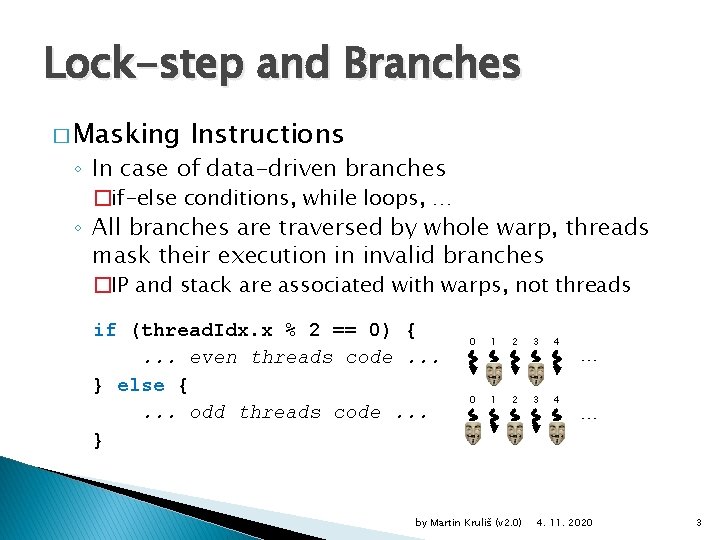

Lock-step and Branches � Masking Instructions ◦ In case of data-driven branches �if-else conditions, while loops, … ◦ All branches are traversed by whole warp, threads mask their execution in invalid branches �IP and stack are associated with warps, not threads if (thread. Idx. x % 2 == 0) {. . . even threads code. . . } else {. . . odd threads code. . . } 0 1 2 3 4 by Martin Kruliš (v 2. 0) … … 4. 11. 2020 3

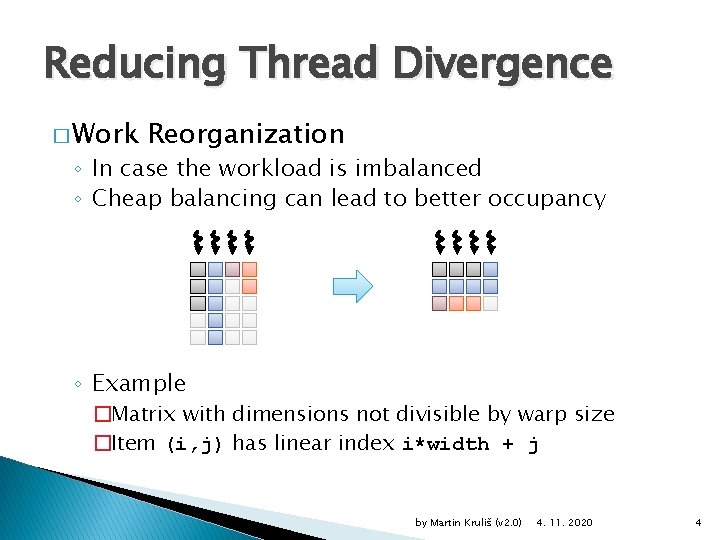

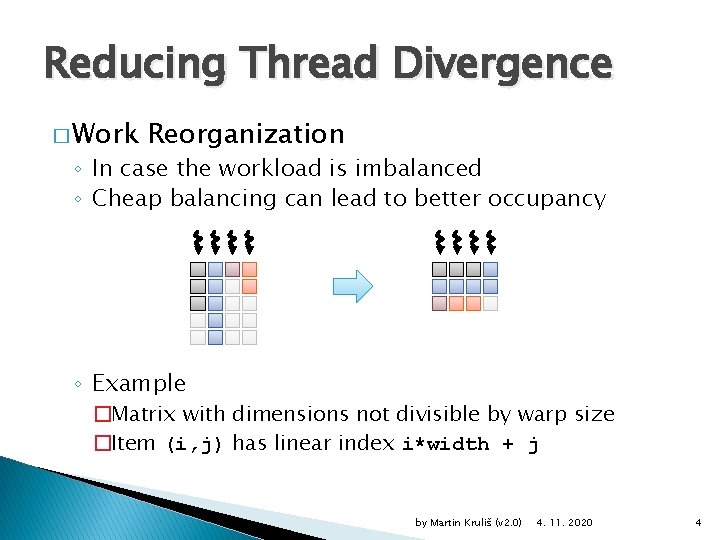

Reducing Thread Divergence � Work Reorganization ◦ In case the workload is imbalanced ◦ Cheap balancing can lead to better occupancy ◦ Example �Matrix with dimensions not divisible by warp size �Item (i, j) has linear index i*width + j by Martin Kruliš (v 2. 0) 4. 11. 2020 4

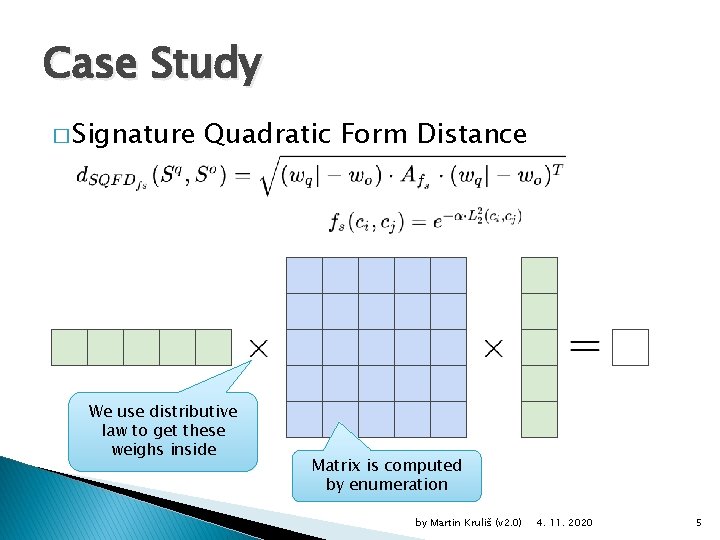

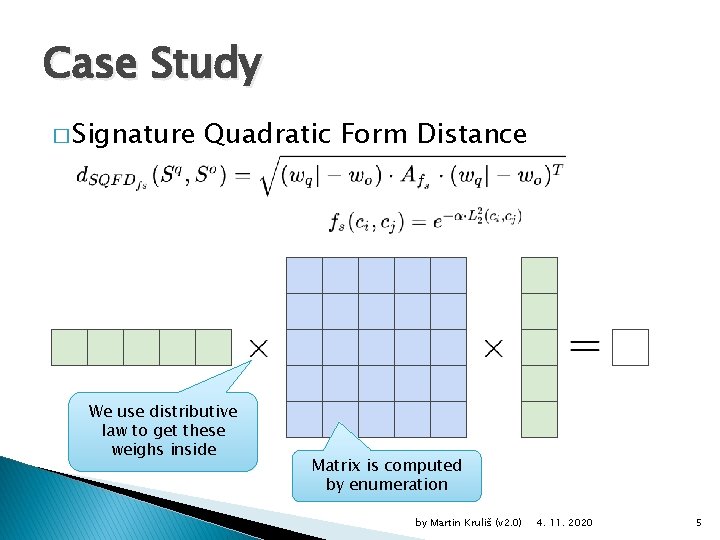

Case Study � Signature Quadratic Form Distance We use distributive law to get these weighs inside Matrix is computed by enumeration by Martin Kruliš (v 2. 0) 4. 11. 2020 5

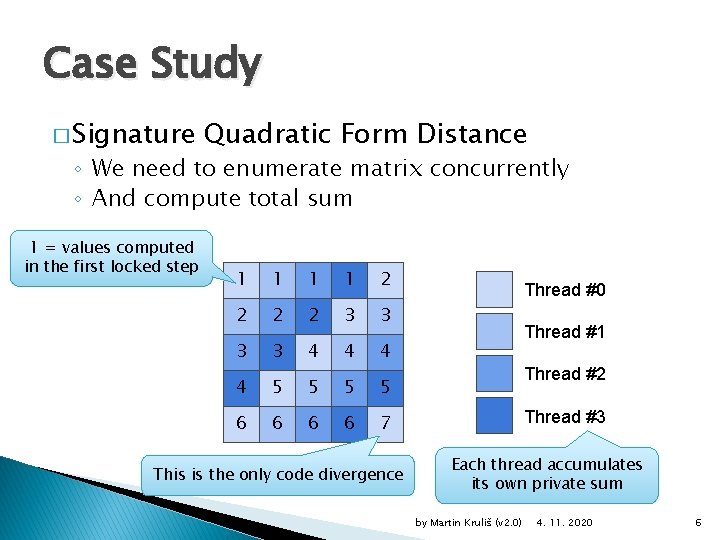

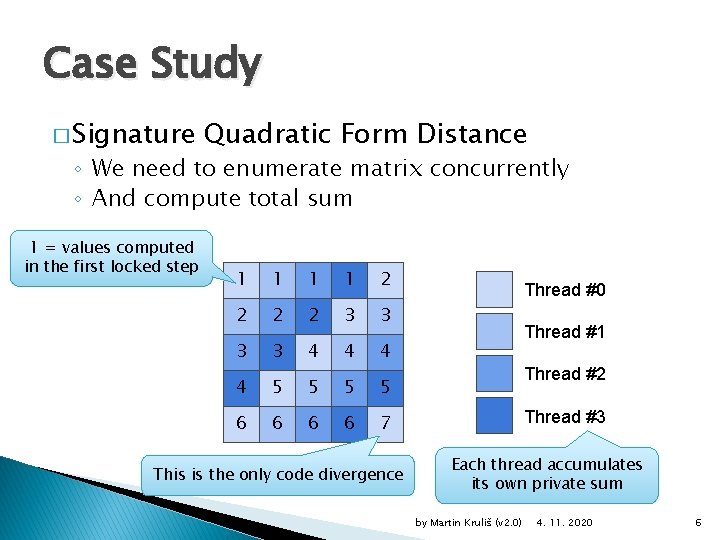

Case Study � Signature Quadratic Form Distance ◦ We need to enumerate matrix concurrently ◦ And compute total sum 1 = values computed in the first locked step 1 1 2 2 3 3 4 4 5 5 6 6 7 This is the only code divergence Thread #0 Thread #1 Thread #2 Thread #3 Each thread accumulates its own private sum by Martin Kruliš (v 2. 0) 4. 11. 2020 6

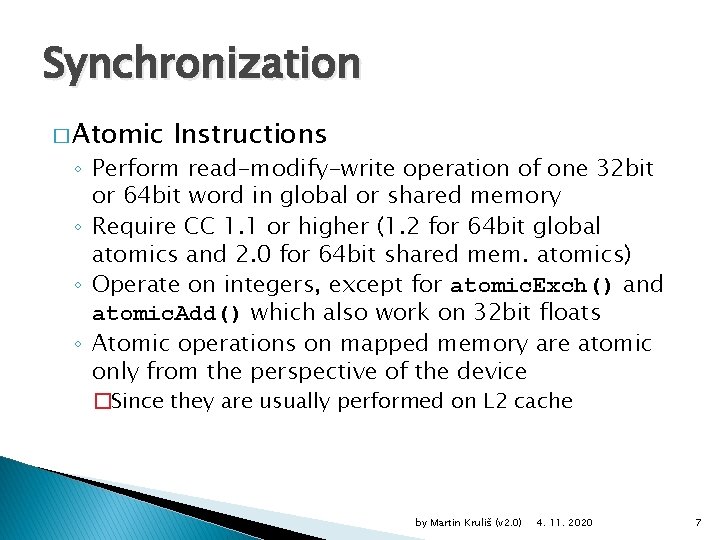

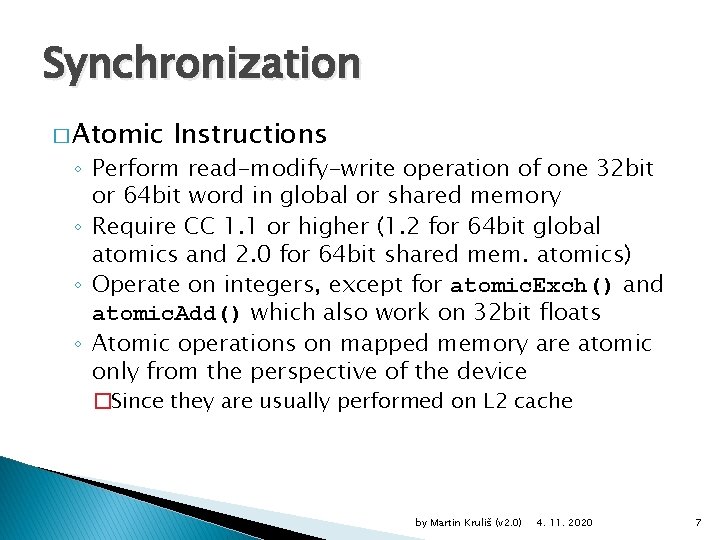

Synchronization � Atomic Instructions ◦ Perform read-modify-write operation of one 32 bit or 64 bit word in global or shared memory ◦ Require CC 1. 1 or higher (1. 2 for 64 bit global atomics and 2. 0 for 64 bit shared mem. atomics) ◦ Operate on integers, except for atomic. Exch() and atomic. Add() which also work on 32 bit floats ◦ Atomic operations on mapped memory are atomic only from the perspective of the device �Since they are usually performed on L 2 cache by Martin Kruliš (v 2. 0) 4. 11. 2020 7

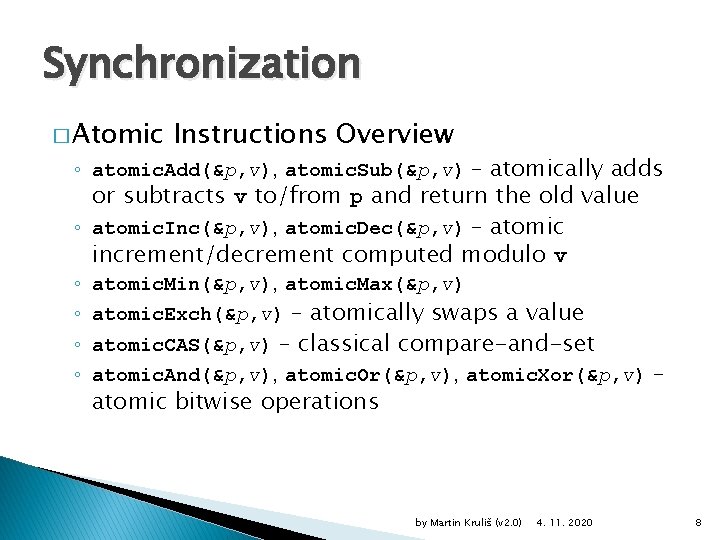

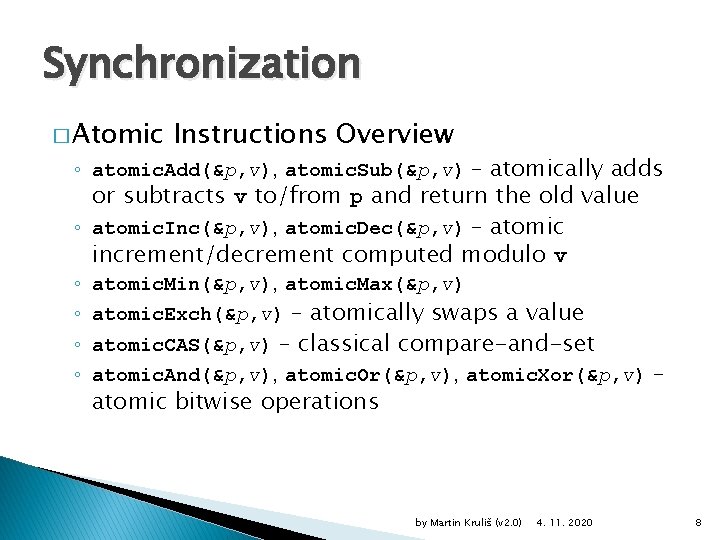

Synchronization � Atomic Instructions Overview ◦ atomic. Add(&p, v), atomic. Sub(&p, v) – atomically adds or subtracts v to/from p and return the old value ◦ atomic. Inc(&p, v), atomic. Dec(&p, v) – atomic increment/decrement computed modulo v ◦ atomic. Min(&p, v), atomic. Max(&p, v) ◦ atomic. Exch(&p, v) – atomically swaps a value ◦ atomic. CAS(&p, v) – classical compare-and-set ◦ atomic. And(&p, v), atomic. Or(&p, v), atomic. Xor(&p, v) – atomic bitwise operations by Martin Kruliš (v 2. 0) 4. 11. 2020 8

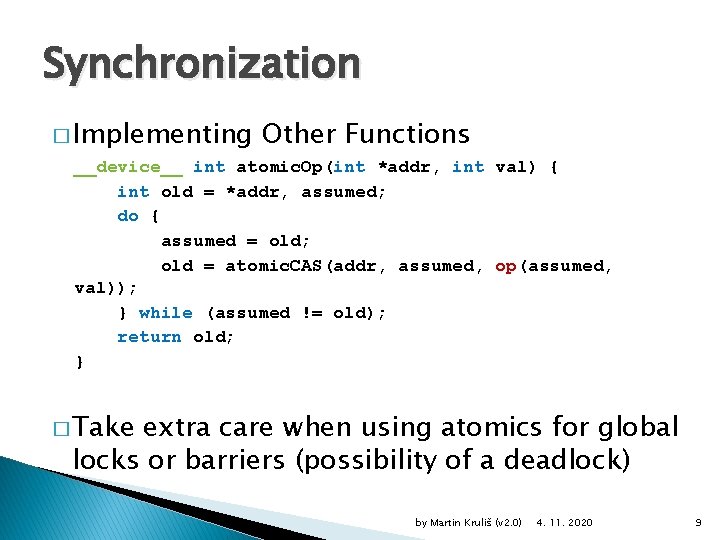

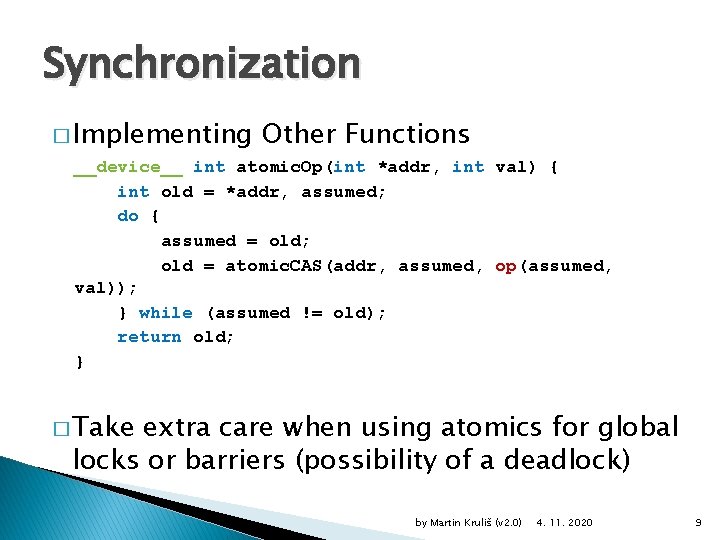

Synchronization � Implementing Other Functions __device__ int atomic. Op(int *addr, int val) { int old = *addr, assumed; do { assumed = old; old = atomic. CAS(addr, assumed, op(assumed, val)); } while (assumed != old); return old; } � Take extra care when using atomics for global locks or barriers (possibility of a deadlock) by Martin Kruliš (v 2. 0) 4. 11. 2020 9

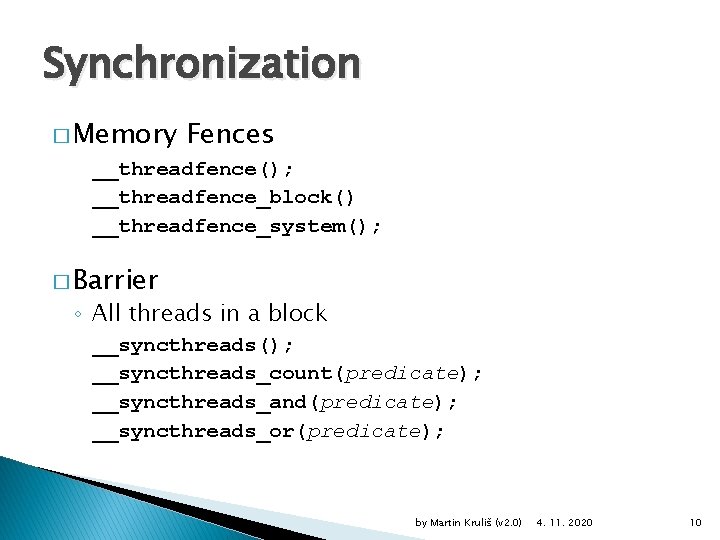

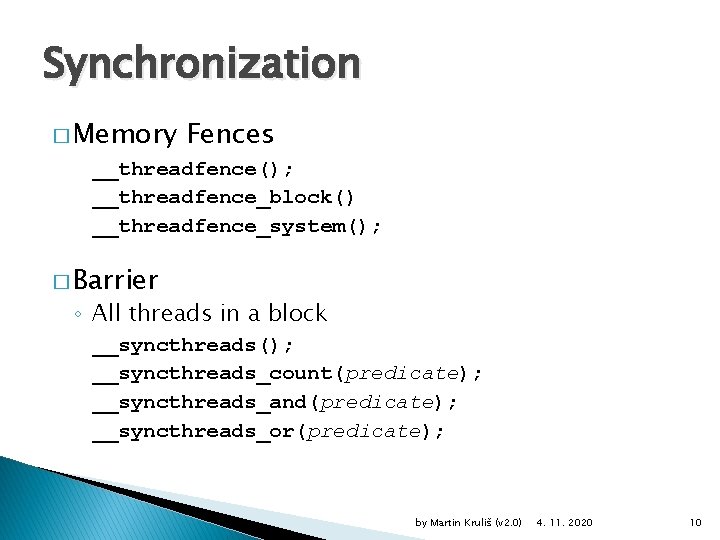

Synchronization � Memory Fences __threadfence(); __threadfence_block() __threadfence_system(); � Barrier ◦ All threads in a block __syncthreads(); __syncthreads_count(predicate); __syncthreads_and(predicate); __syncthreads_or(predicate); by Martin Kruliš (v 2. 0) 4. 11. 2020 10

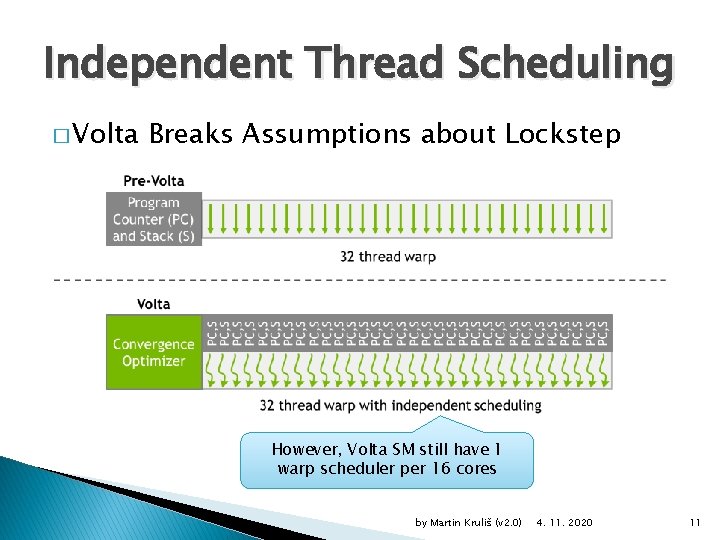

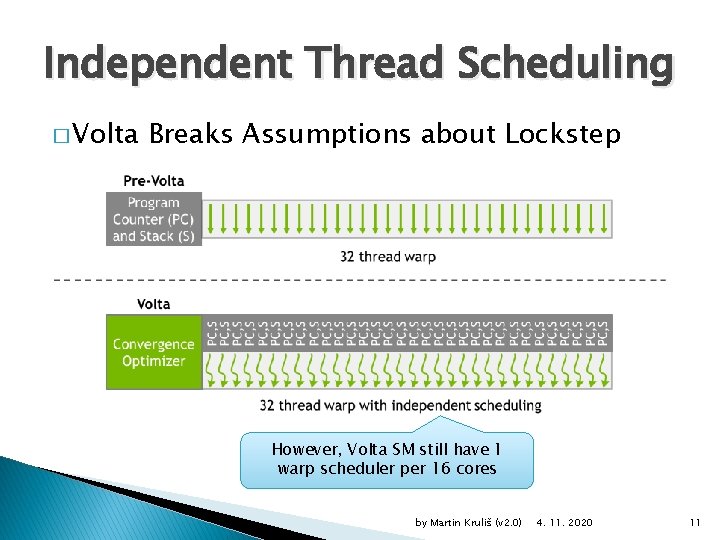

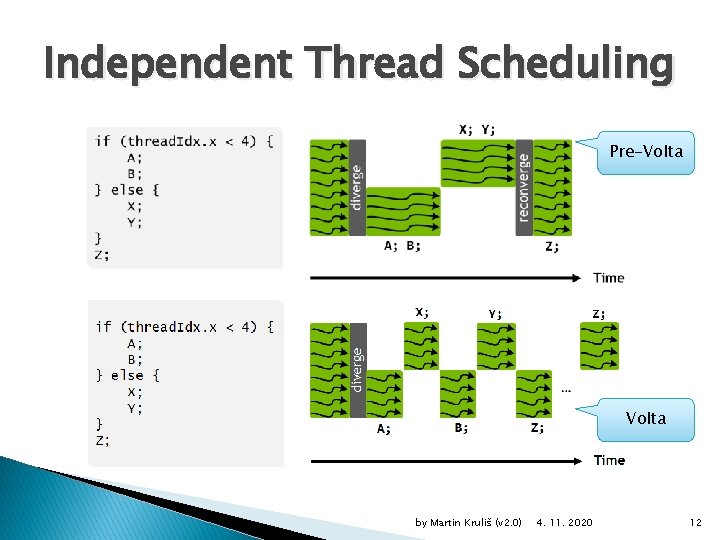

Independent Thread Scheduling � Volta Breaks Assumptions about Lockstep However, Volta SM still have 1 warp scheduler per 16 cores by Martin Kruliš (v 2. 0) 4. 11. 2020 11

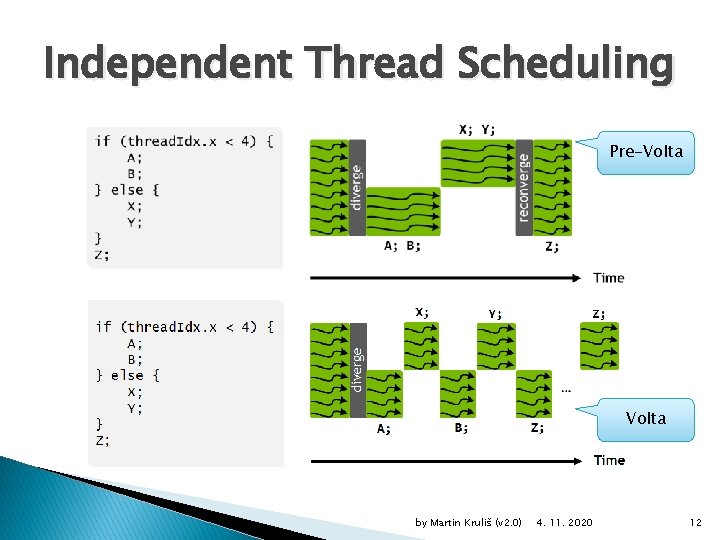

Independent Thread Scheduling Pre-Volta by Martin Kruliš (v 2. 0) 4. 11. 2020 12

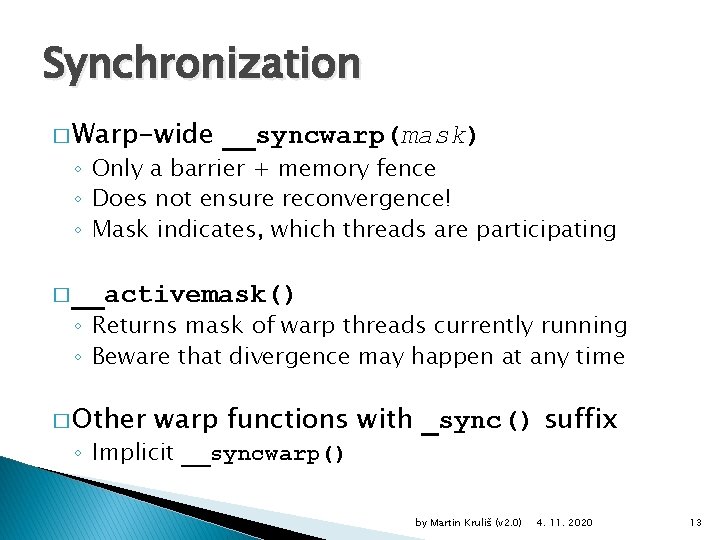

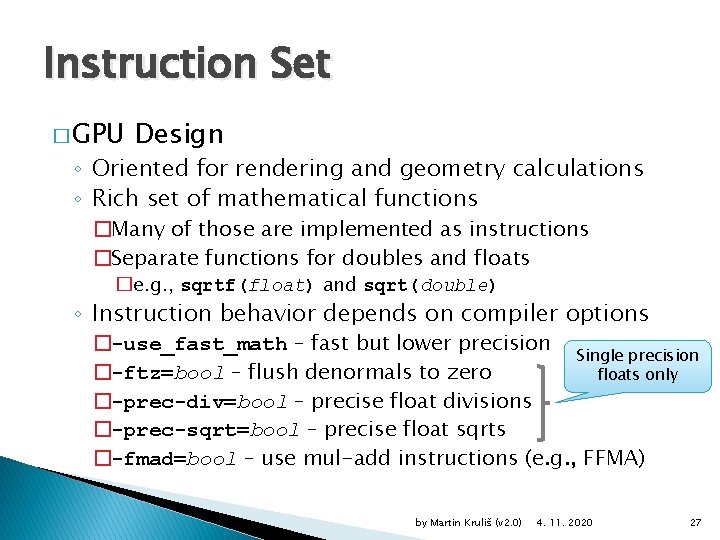

Synchronization � Warp-wide __syncwarp(mask) ◦ Only a barrier + memory fence ◦ Does not ensure reconvergence! ◦ Mask indicates, which threads are participating � __activemask() ◦ Returns mask of warp threads currently running ◦ Beware that divergence may happen at any time � Other warp functions with _sync() suffix ◦ Implicit __syncwarp() by Martin Kruliš (v 2. 0) 4. 11. 2020 13

![Synchronization Example of syncwarp unsigned tid thread Idx x shmemtid shmemtid Synchronization � Example of __syncwarp() unsigned tid = thread. Idx. x; shmem[tid] shmem[tid] +=](https://slidetodoc.com/presentation_image_h/235bcfc4be7766504682082bcf7fdf1d/image-14.jpg)

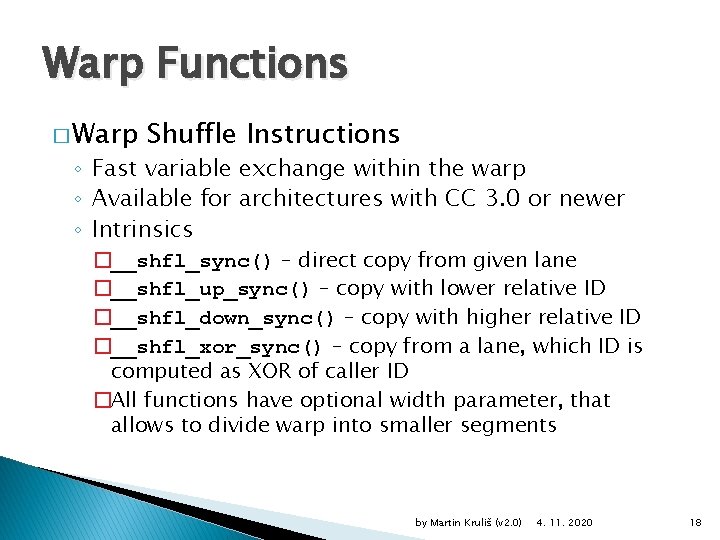

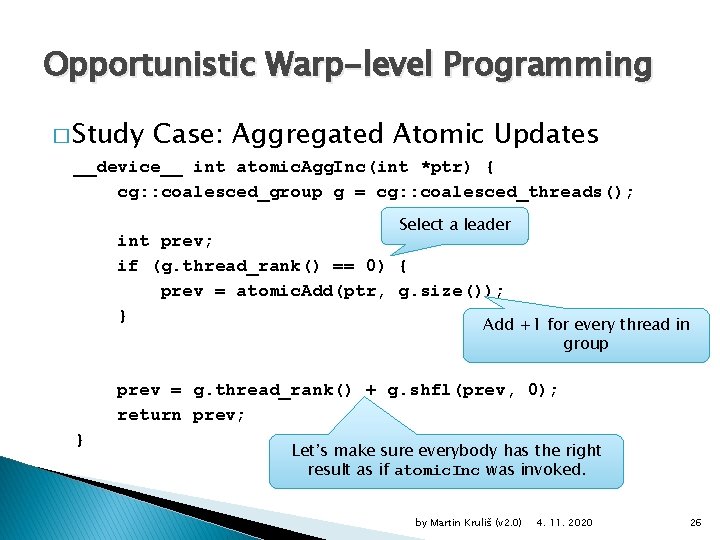

Synchronization � Example of __syncwarp() unsigned tid = thread. Idx. x; shmem[tid] shmem[tid] += += += shmem[tid+16]; shmem[tid+8]; shmem[tid+4]; shmem[tid+2]; shmem[tid+1]; Tree sum reduction __syncwarp(); __syncwarp(); Incorrect. We need to place __syncwarp() also between reads and writes! by Martin Kruliš (v 2. 0) 4. 11. 2020 14

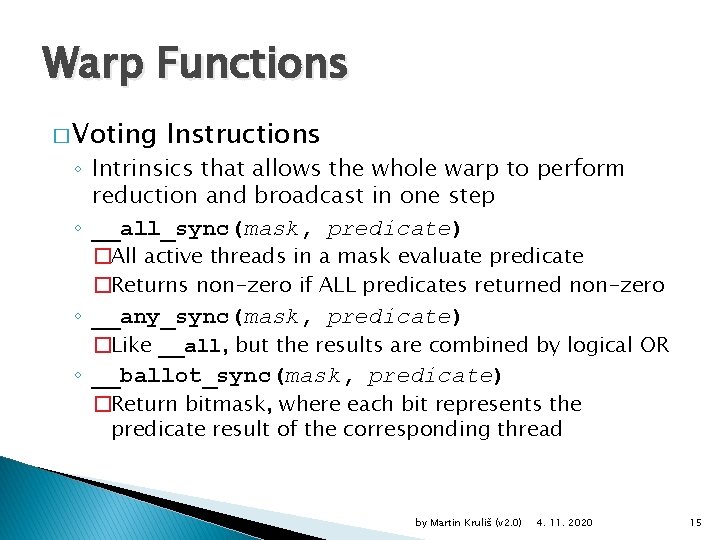

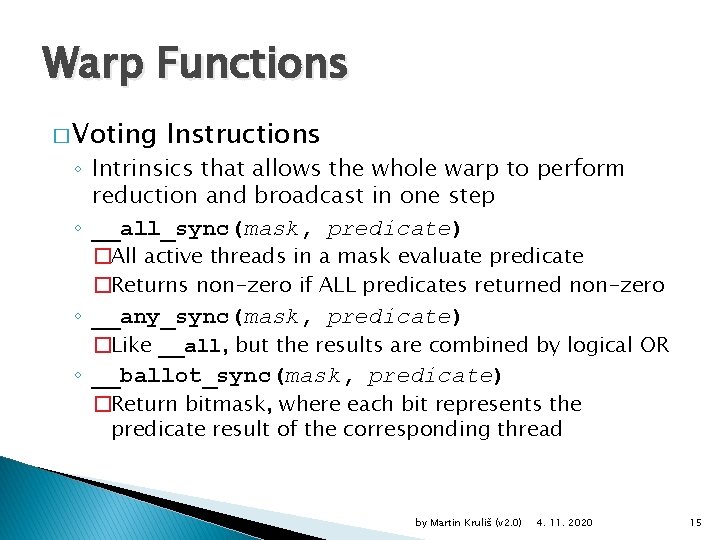

Warp Functions � Voting Instructions ◦ Intrinsics that allows the whole warp to perform reduction and broadcast in one step ◦ __all_sync(mask, predicate) �All active threads in a mask evaluate predicate �Returns non-zero if ALL predicates returned non-zero ◦ __any_sync(mask, predicate) �Like __all, but the results are combined by logical OR ◦ __ballot_sync(mask, predicate) �Return bitmask, where each bit represents the predicate result of the corresponding thread by Martin Kruliš (v 2. 0) 4. 11. 2020 15

Warp Functions � Example – Filtering and Compacting Array constexpr FULL_MASK = 0 xffff; unsigned my. Mask = (1 << (thread. Idx. x % warp. Size)) - 1; for (unsigned i = thread. Idx. x; i < N; i += warp. Size) { bool keep = filtering_predicate(input[i]); unsigned who. Keeps = __ballot_sync(FULL_MASK, keep); if (keep) { unsigned line. Idx = __popc(who. Keeps & my. Mask); output[line. Idx] = input[i]; } output += __popc(who. Keeps); } by Martin Kruliš (v 2. 0) 4. 11. 2020 16

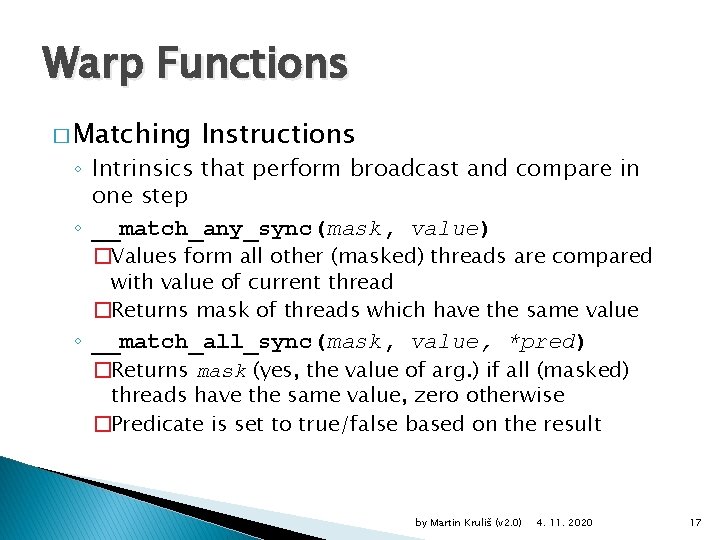

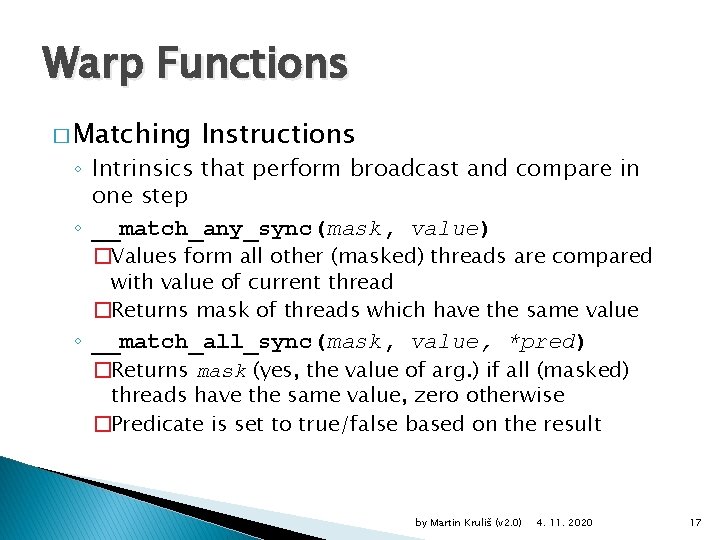

Warp Functions � Matching Instructions ◦ Intrinsics that perform broadcast and compare in one step ◦ __match_any_sync(mask, value) �Values form all other (masked) threads are compared with value of current thread �Returns mask of threads which have the same value ◦ __match_all_sync(mask, value, *pred) �Returns mask (yes, the value of arg. ) if all (masked) threads have the same value, zero otherwise �Predicate is set to true/false based on the result by Martin Kruliš (v 2. 0) 4. 11. 2020 17

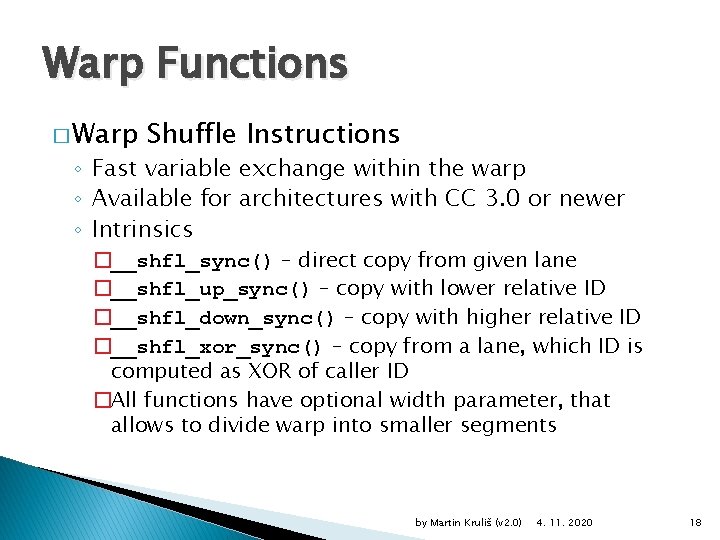

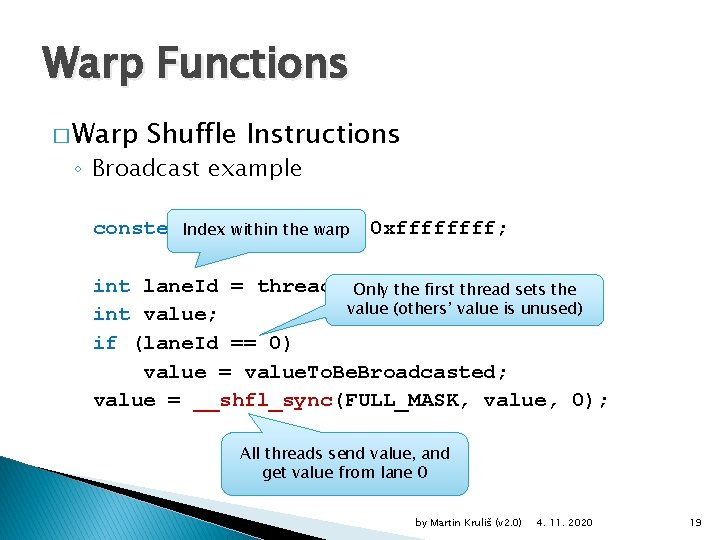

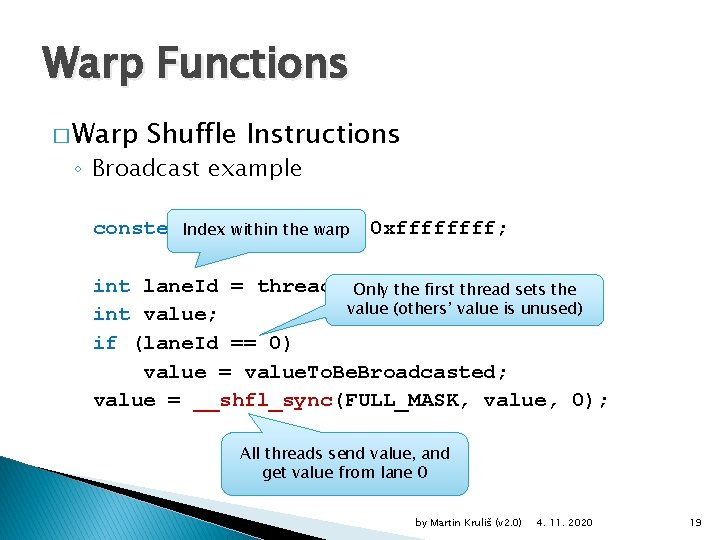

Warp Functions � Warp Shuffle Instructions ◦ Fast variable exchange within the warp ◦ Available for architectures with CC 3. 0 or newer ◦ Intrinsics �__shfl_sync() – direct copy from given lane �__shfl_up_sync() – copy with lower relative ID �__shfl_down_sync() – copy with higher relative ID �__shfl_xor_sync() – copy from a lane, which ID is computed as XOR of caller ID �All functions have optional width parameter, that allows to divide warp into smaller segments by Martin Kruliš (v 2. 0) 4. 11. 2020 18

Warp Functions � Warp Shuffle Instructions ◦ Broadcast example constexpr Index. FULL_MASK within the warp= 0 xffff; int lane. Id = thread. Idx. x % first warp. Size; Only the thread sets the value (others’ value is unused) int value; if (lane. Id == 0) value = value. To. Be. Broadcasted; value = __shfl_sync(FULL_MASK, value, 0); All threads send value, and get value from lane 0 by Martin Kruliš (v 2. 0) 4. 11. 2020 19

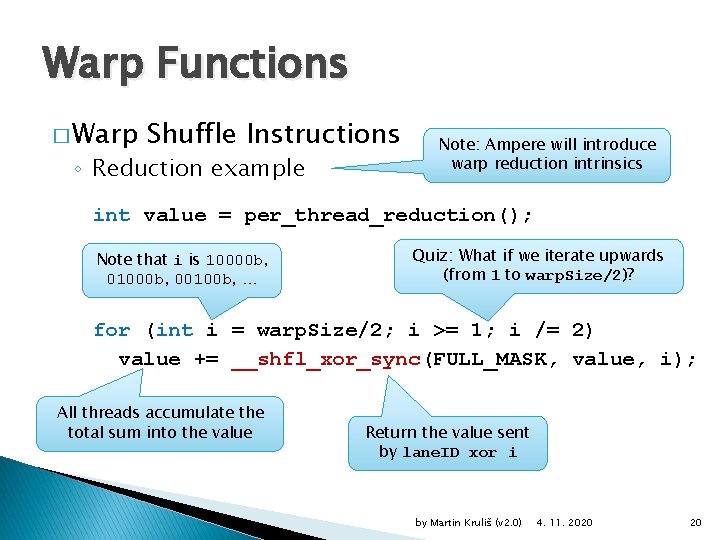

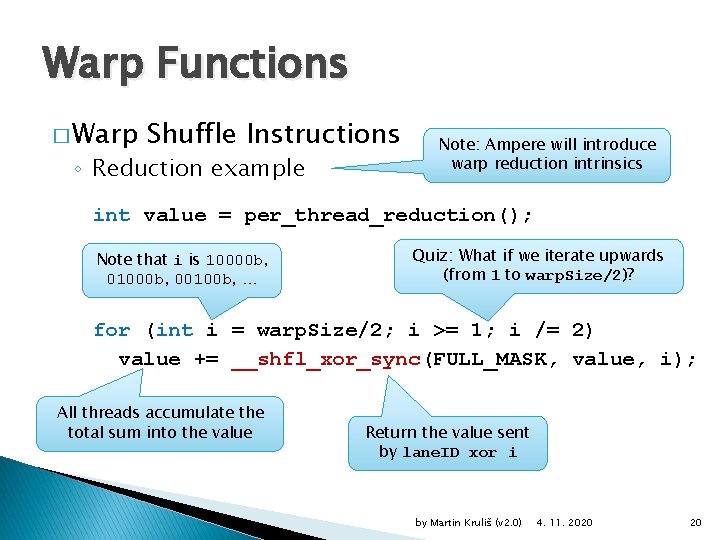

Warp Functions � Warp Shuffle Instructions ◦ Reduction example Note: Ampere will introduce warp reduction intrinsics int value = per_thread_reduction(); Note that i is 10000 b, 01000 b, 00100 b, … Quiz: What if we iterate upwards (from 1 to warp. Size/2)? for (int i = warp. Size/2; i >= 1; i /= 2) value += __shfl_xor_sync(FULL_MASK, value, i); All threads accumulate the total sum into the value Return the value sent by lane. ID xor i by Martin Kruliš (v 2. 0) 4. 11. 2020 20

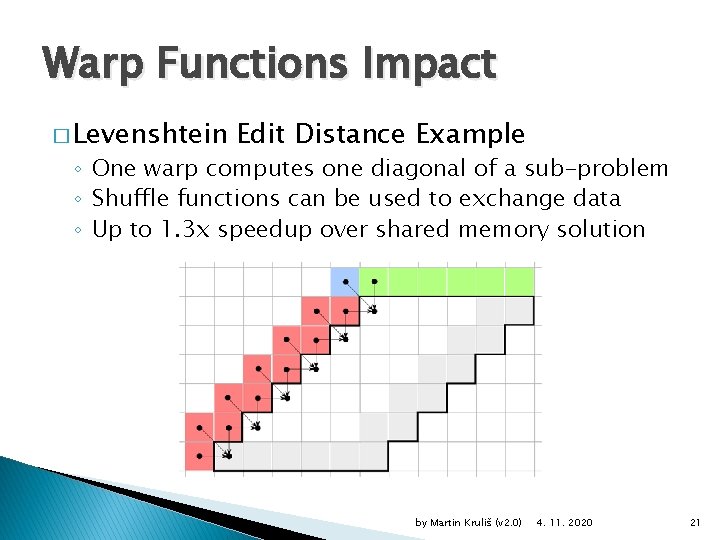

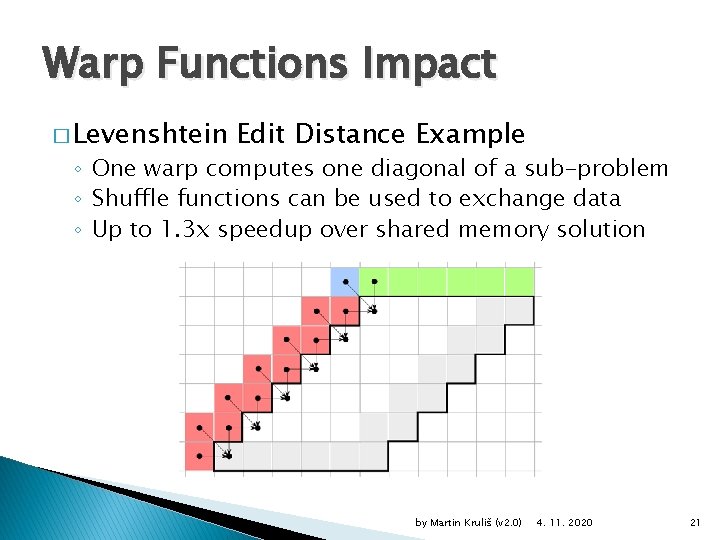

Warp Functions Impact � Levenshtein Edit Distance Example ◦ One warp computes one diagonal of a sub-problem ◦ Shuffle functions can be used to exchange data ◦ Up to 1. 3 x speedup over shared memory solution by Martin Kruliš (v 2. 0) 4. 11. 2020 21

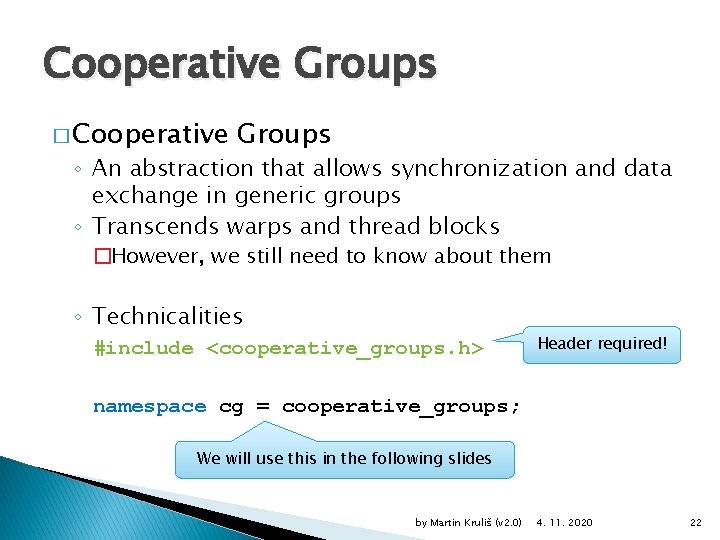

Cooperative Groups � Cooperative Groups ◦ An abstraction that allows synchronization and data exchange in generic groups ◦ Transcends warps and thread blocks �However, we still need to know about them ◦ Technicalities #include <cooperative_groups. h> Header required! namespace cg = cooperative_groups; We will use this in the following slides by Martin Kruliš (v 2. 0) 4. 11. 2020 22

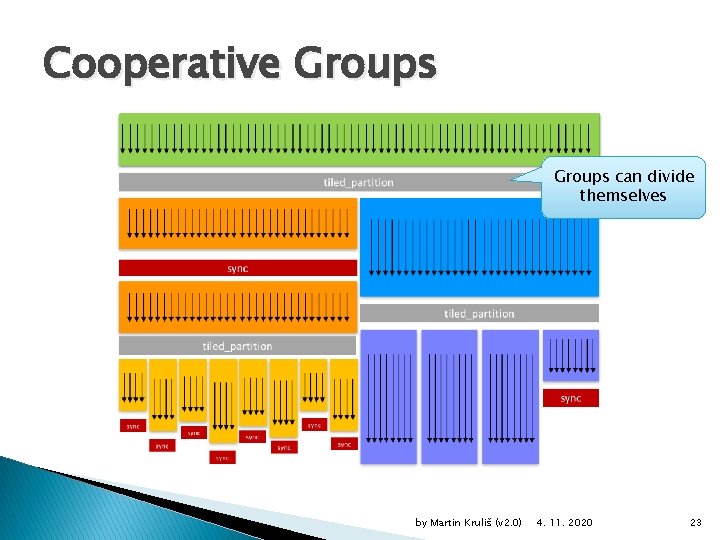

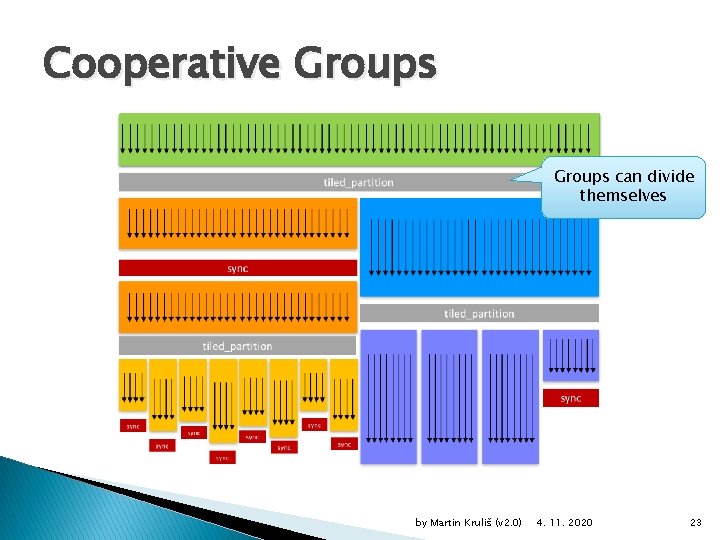

Cooperative Groups can divide themselves by Martin Kruliš (v 2. 0) 4. 11. 2020 23

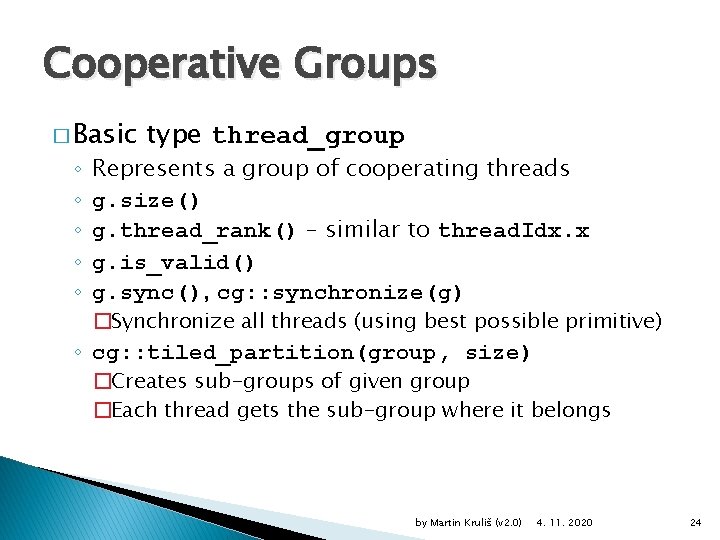

Cooperative Groups � Basic ◦ ◦ ◦ type thread_group Represents a group of cooperating threads g. size() g. thread_rank() – similar to thread. Idx. x g. is_valid() g. sync(), cg: : synchronize(g) �Synchronize all threads (using best possible primitive) ◦ cg: : tiled_partition(group, size) �Creates sub-groups of given group �Each thread gets the sub-group where it belongs by Martin Kruliš (v 2. 0) 4. 11. 2020 24

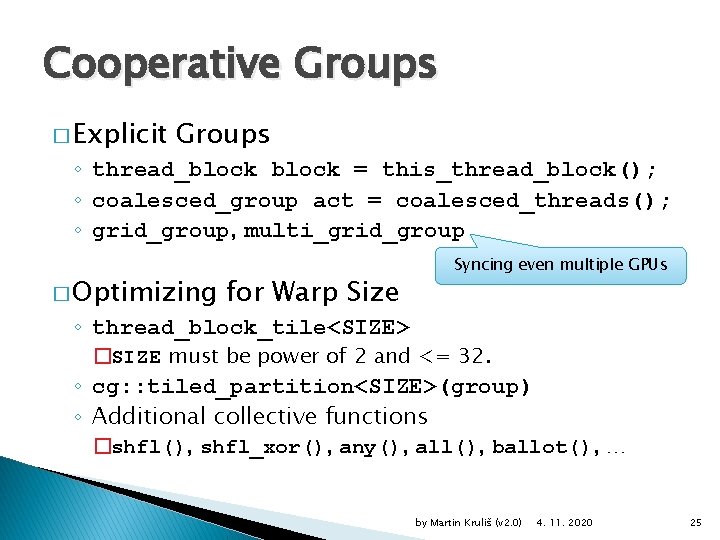

Cooperative Groups � Explicit Groups ◦ thread_block = this_thread_block(); ◦ coalesced_group act = coalesced_threads(); ◦ grid_group, multi_grid_group � Optimizing for Warp Size Syncing even multiple GPUs ◦ thread_block_tile<SIZE> �SIZE must be power of 2 and <= 32. ◦ cg: : tiled_partition<SIZE>(group) ◦ Additional collective functions �shfl(), shfl_xor(), any(), all(), ballot(), … by Martin Kruliš (v 2. 0) 4. 11. 2020 25

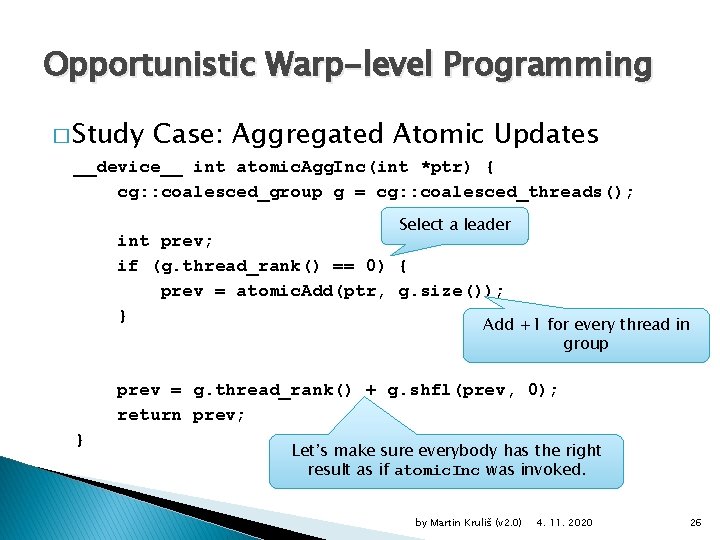

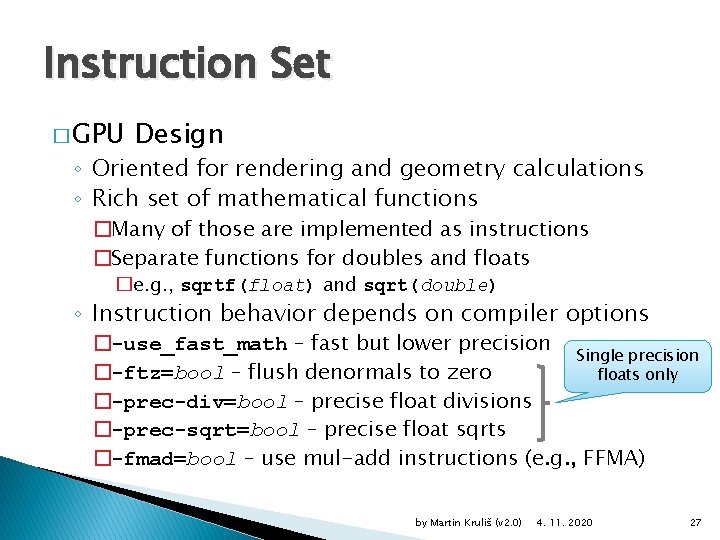

Opportunistic Warp-level Programming � Study Case: Aggregated Atomic Updates __device__ int atomic. Agg. Inc(int *ptr) { cg: : coalesced_group g = cg: : coalesced_threads(); Select a leader int prev; if (g. thread_rank() == 0) { prev = atomic. Add(ptr, g. size()); } Add +1 for every thread in group prev = g. thread_rank() + g. shfl(prev, 0); return prev; } Let’s make sure everybody has the right result as if atomic. Inc was invoked. by Martin Kruliš (v 2. 0) 4. 11. 2020 26

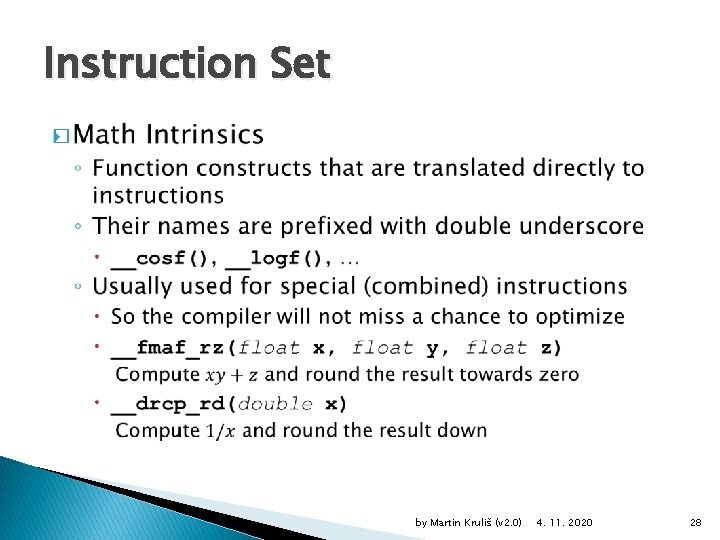

Instruction Set � GPU Design ◦ Oriented for rendering and geometry calculations ◦ Rich set of mathematical functions �Many of those are implemented as instructions �Separate functions for doubles and floats �e. g. , sqrtf(float) and sqrt(double) ◦ Instruction behavior depends on compiler options �-use_fast_math – fast but lower precision Single precision �-ftz=bool – flush denormals to zero floats only �-prec-div=bool – precise float divisions �-prec-sqrt=bool – precise float sqrts �-fmad=bool – use mul-add instructions (e. g. , FFMA) by Martin Kruliš (v 2. 0) 4. 11. 2020 27

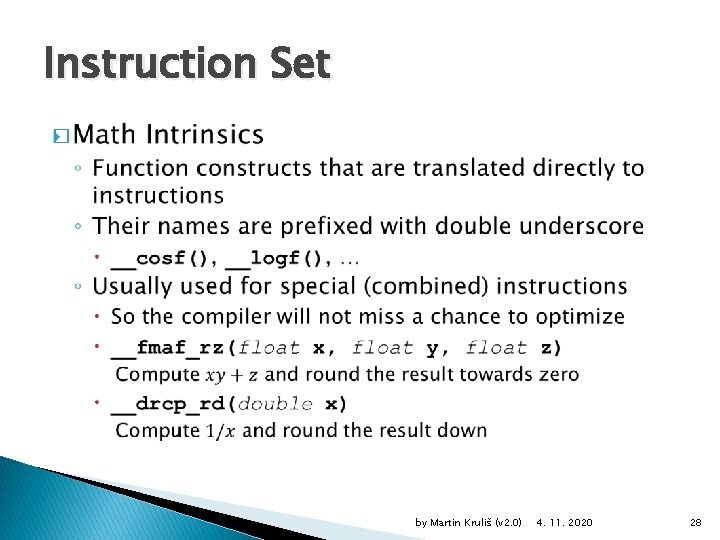

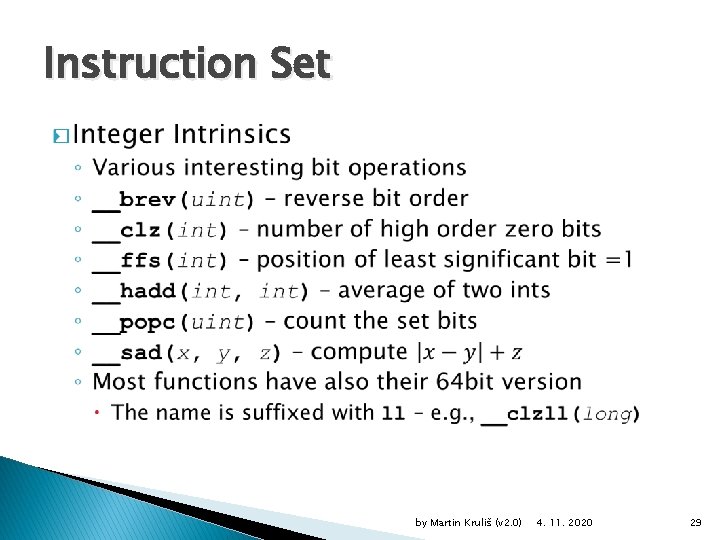

Instruction Set � by Martin Kruliš (v 2. 0) 4. 11. 2020 28

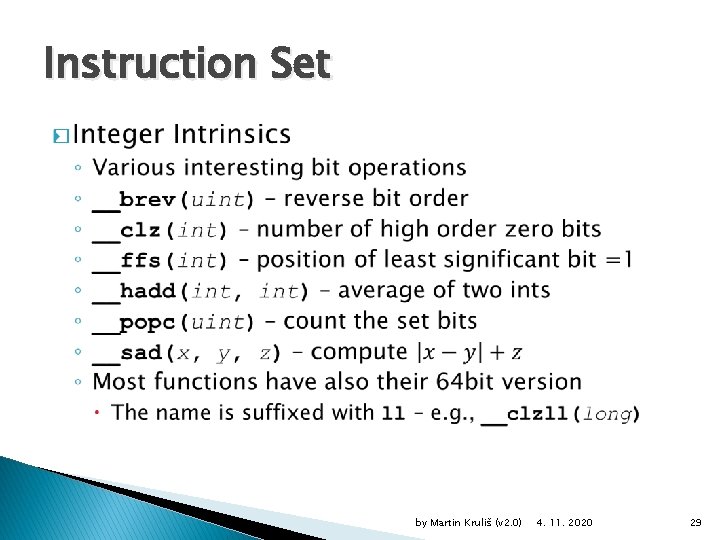

Instruction Set � by Martin Kruliš (v 2. 0) 4. 11. 2020 29

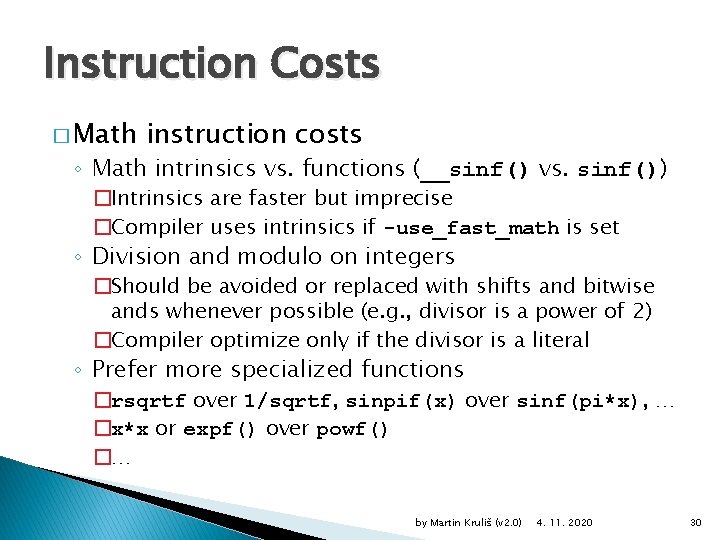

Instruction Costs � Math instruction costs ◦ Math intrinsics vs. functions (__sinf() vs. sinf()) �Intrinsics are faster but imprecise �Compiler uses intrinsics if -use_fast_math is set ◦ Division and modulo on integers �Should be avoided or replaced with shifts and bitwise ands whenever possible (e. g. , divisor is a power of 2) �Compiler optimize only if the divisor is a literal ◦ Prefer more specialized functions �rsqrtf over 1/sqrtf, sinpif(x) over sinf(pi*x), … �x*x or expf() over powf() �… by Martin Kruliš (v 2. 0) 4. 11. 2020 30

Discussion by Martin Kruliš (v 2. 0) 4. 11. 2020 31