CUDA Programming Overview CUDA Programming Model Integrated host

- Slides: 37

CUDA Programming Overview

CUDA Programming Model Integrated host and device application C program - serial or modestly parallel parts in host C code - highly parallel parts in device C extension code . . . 2

CUDA Programming Model What is the computed device? - coprocessor to the host part - have its own device memory space - run many active threads in parallel What is the difference between CPU and GPU threads? - GPU threads are extremely lightweight - GPU threads have almost no creating overhead - GPU needs more than 1000 threads for full occupancy - multiple core CPU can execute or create only a little threads 3

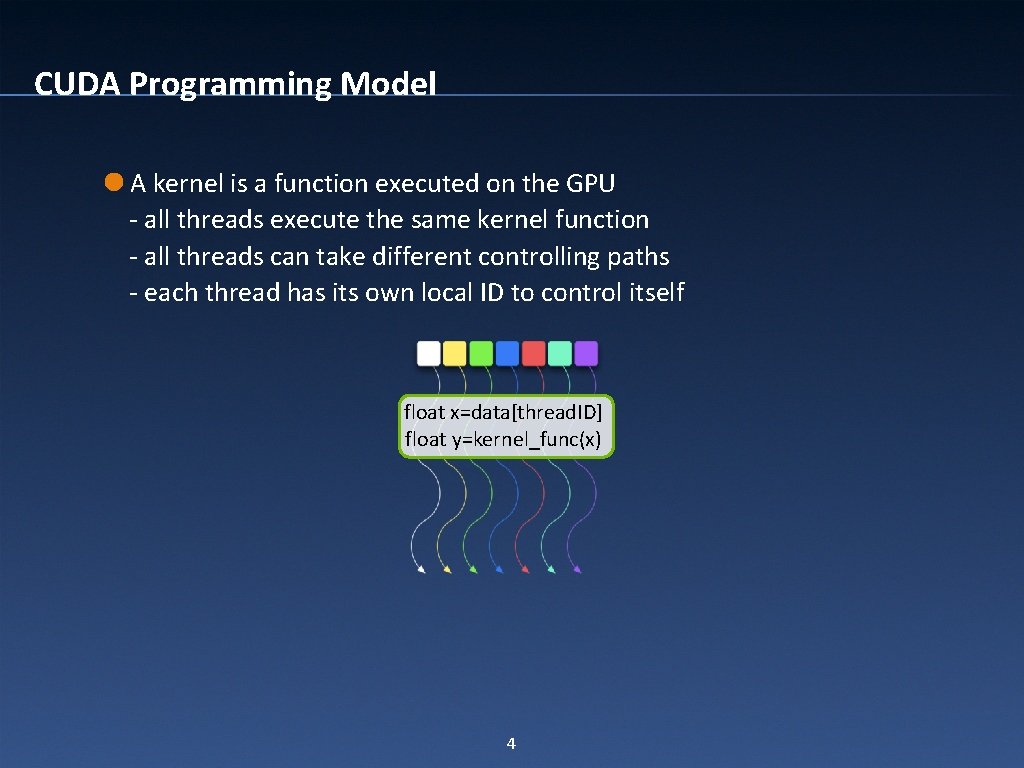

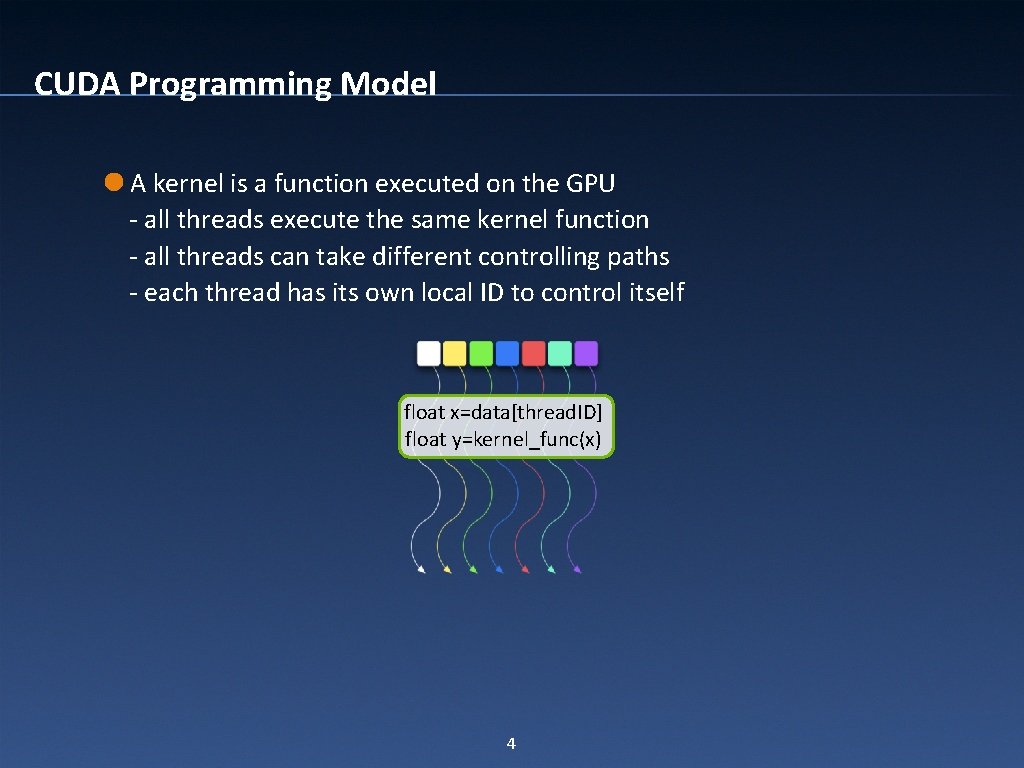

CUDA Programming Model A kernel is a function executed on the GPU - all threads execute the same kernel function - all threads can take different controlling paths - each thread has its own local ID to control itself float x=data[thread. ID] float y=kernel_func(x) 4

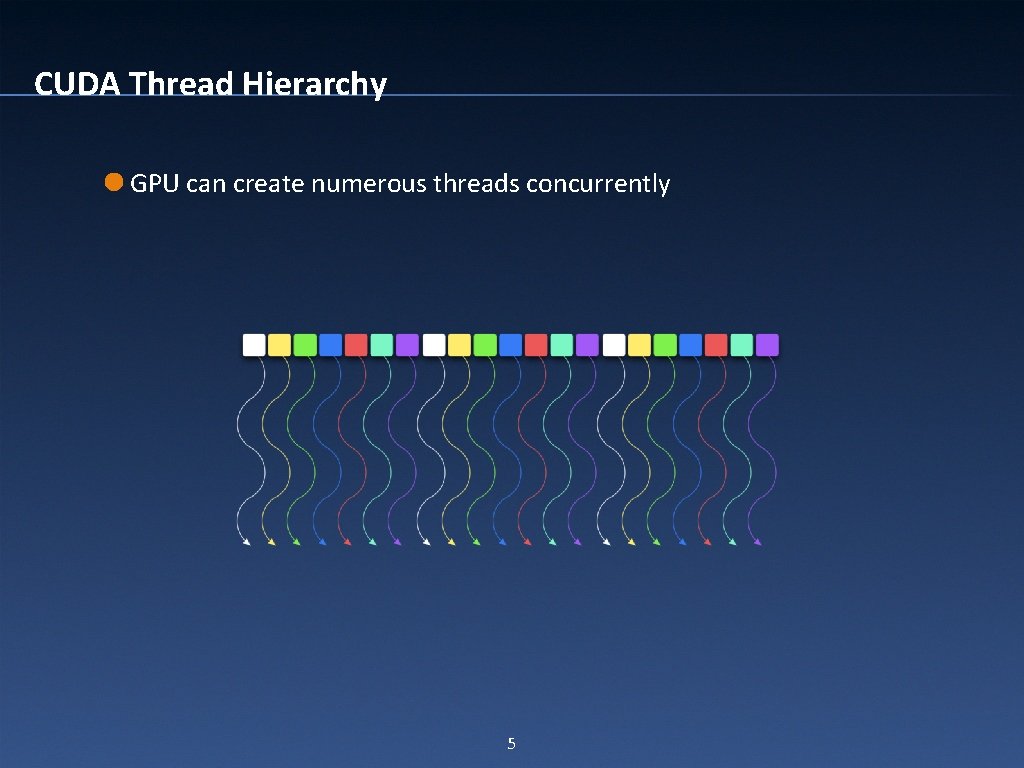

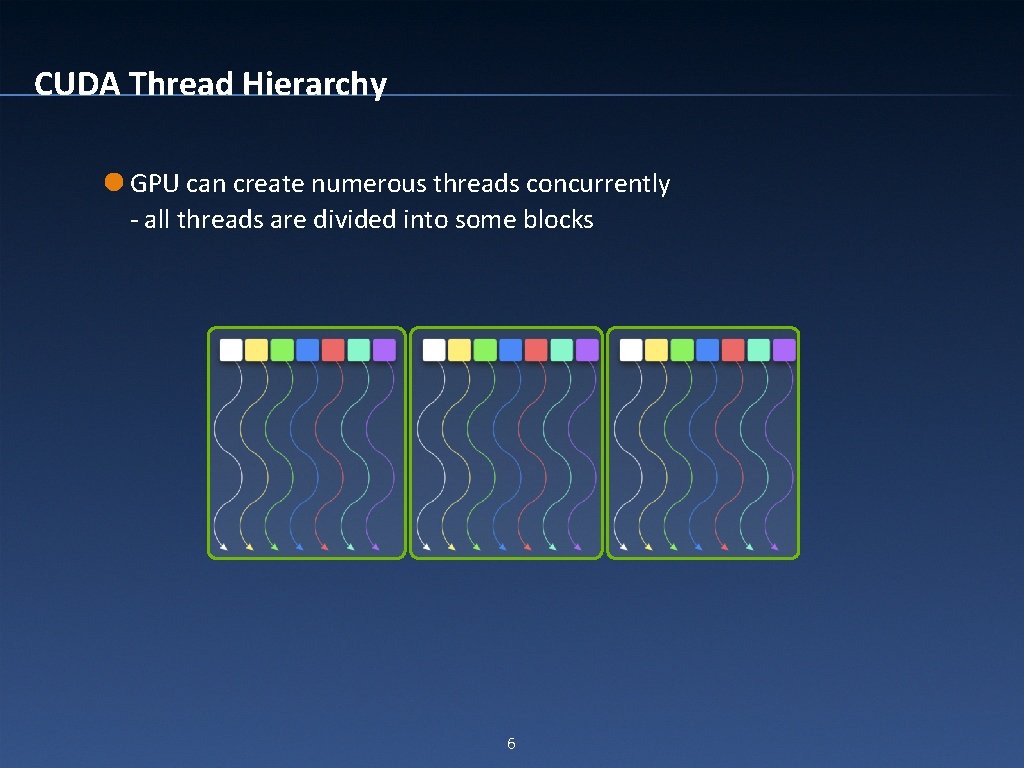

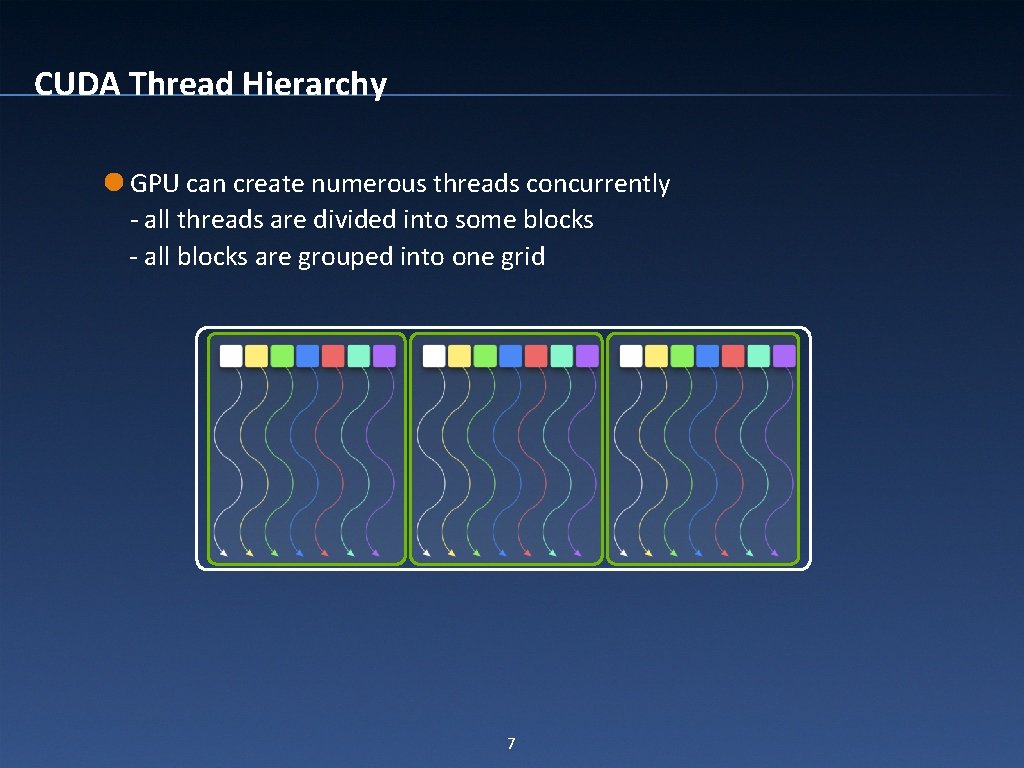

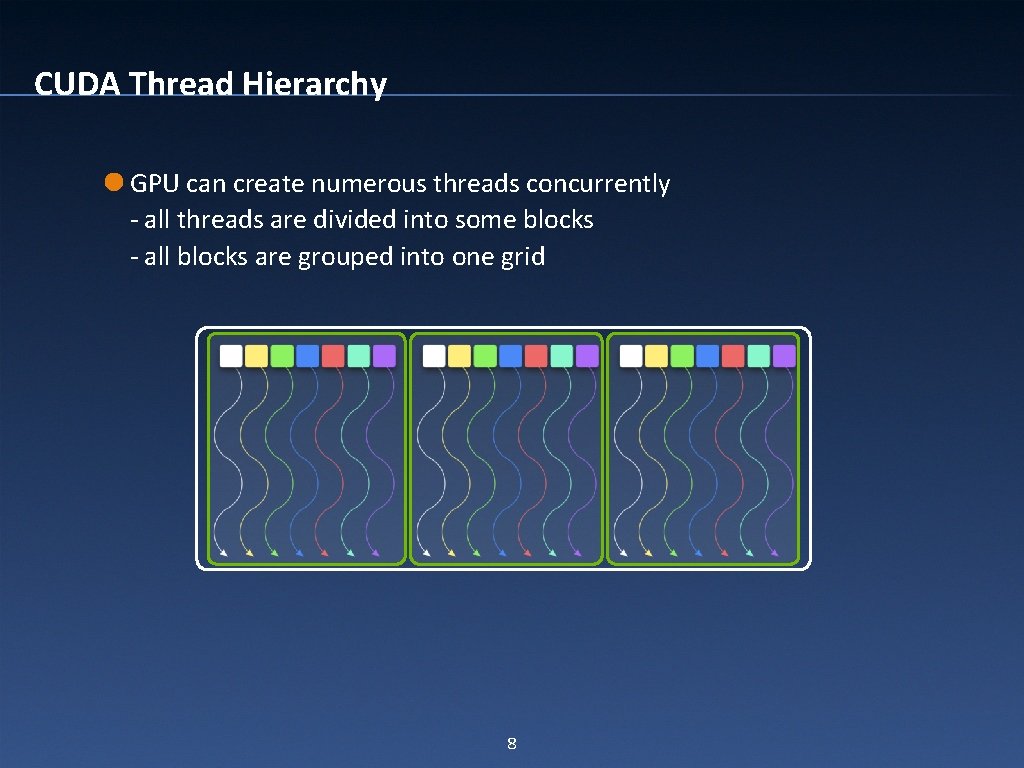

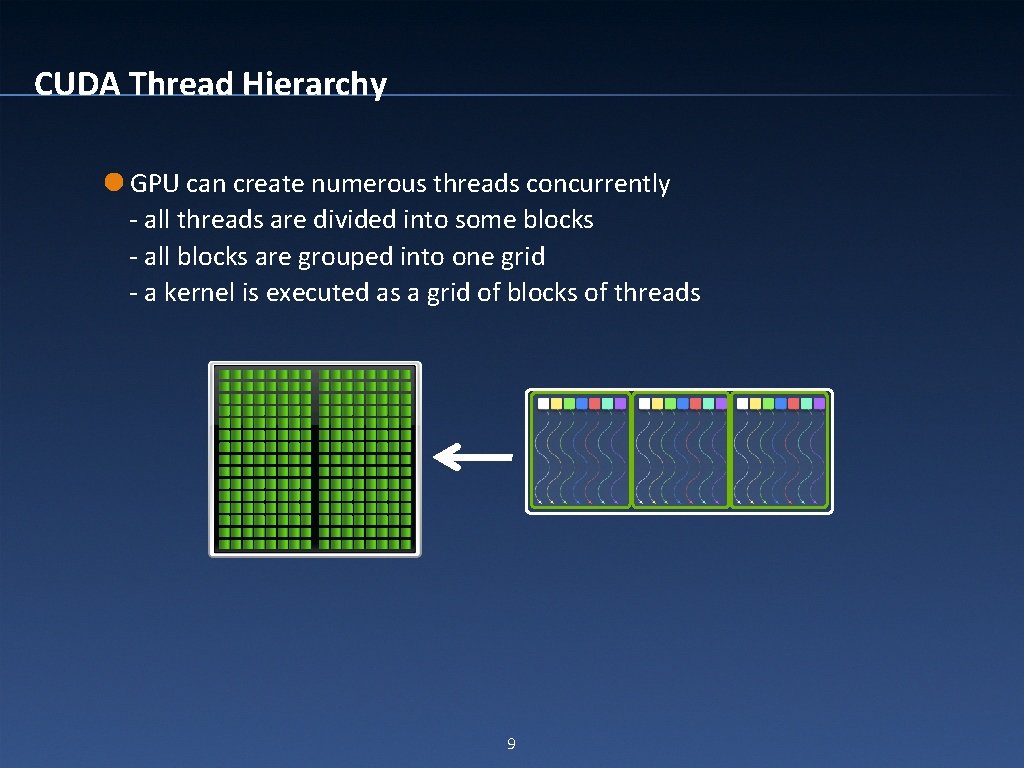

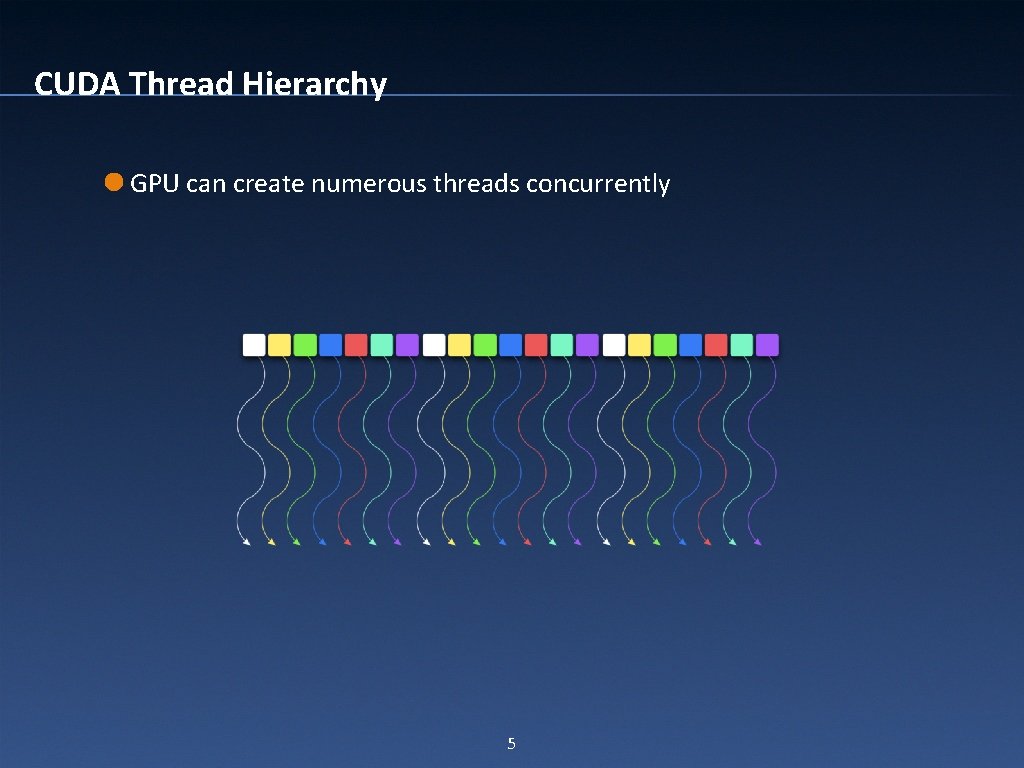

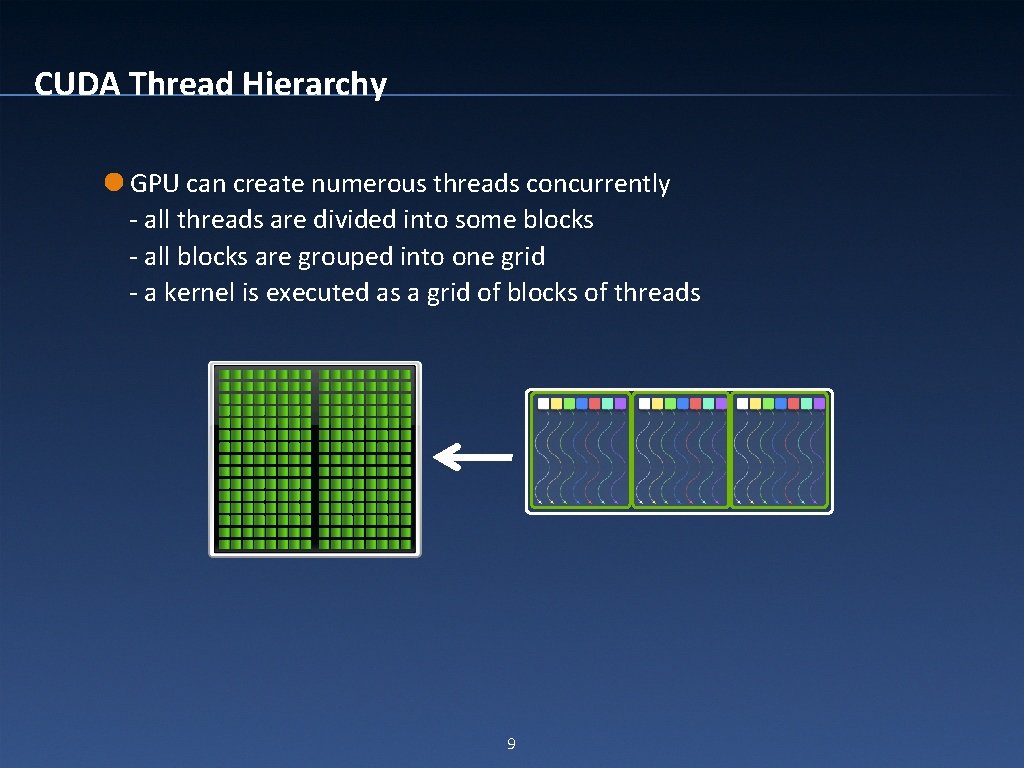

CUDA Thread Hierarchy GPU can create numerous threads concurrently 5

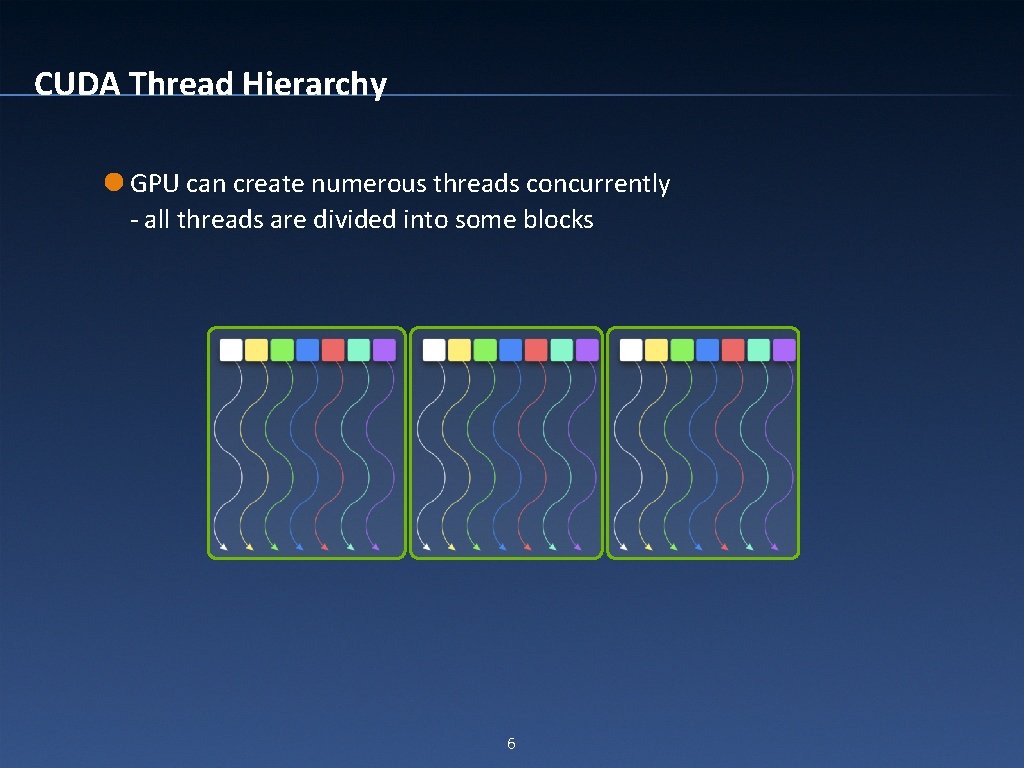

CUDA Thread Hierarchy GPU can create numerous threads concurrently - all threads are divided into some blocks 6

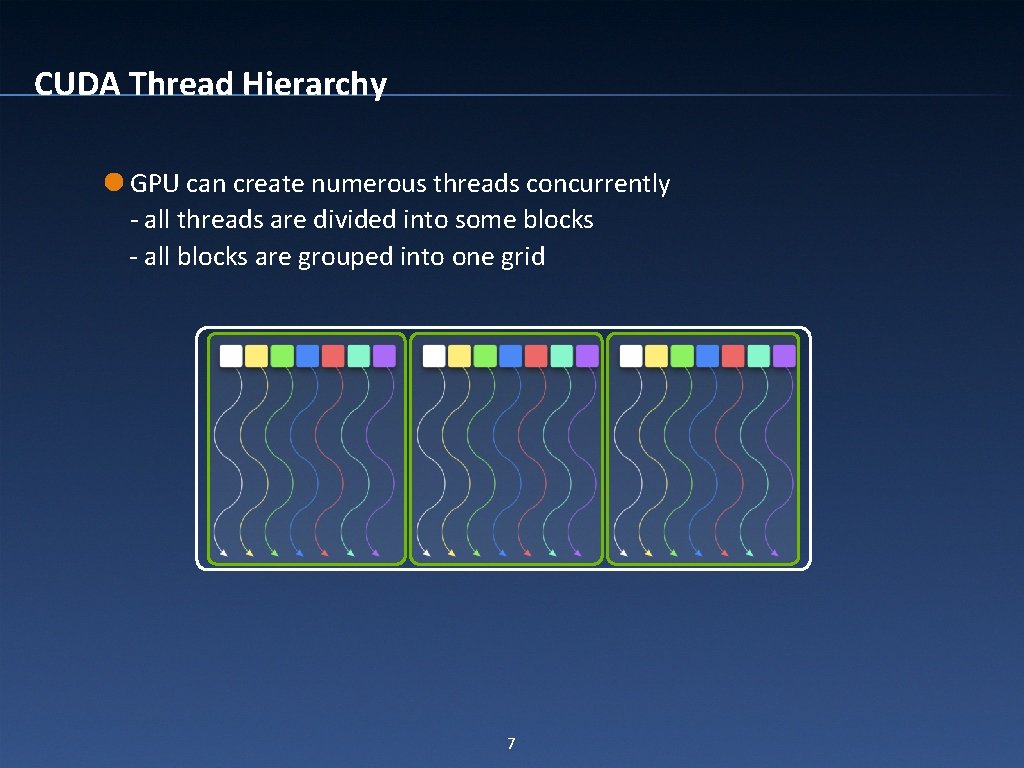

CUDA Thread Hierarchy GPU can create numerous threads concurrently - all threads are divided into some blocks - all blocks are grouped into one grid 7

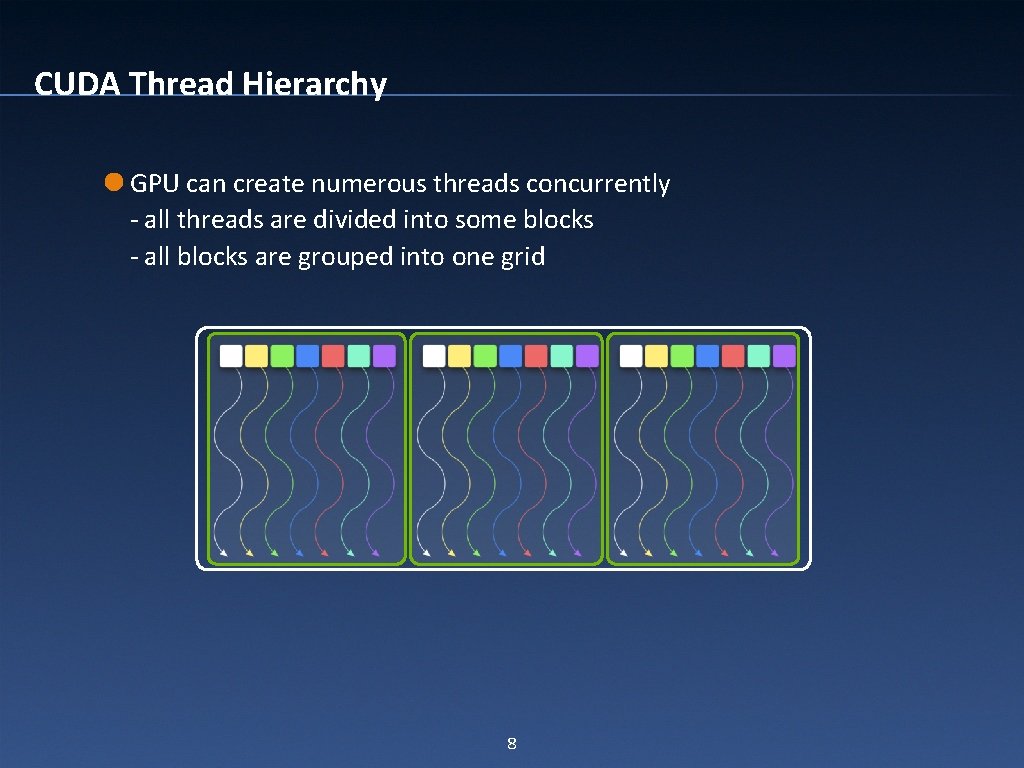

CUDA Thread Hierarchy GPU can create numerous threads concurrently - all threads are divided into some blocks - all blocks are grouped into one grid 8

CUDA Thread Hierarchy GPU can create numerous threads concurrently - all threads are divided into some blocks - all blocks are grouped into one grid - a kernel is executed as a grid of blocks of threads 9

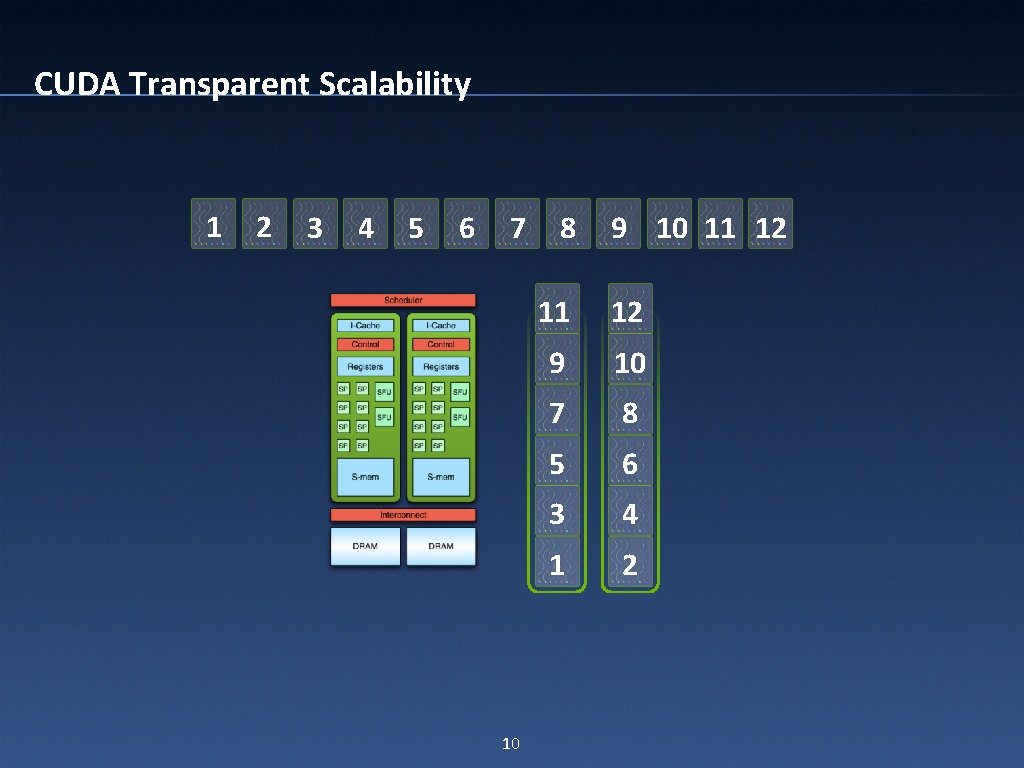

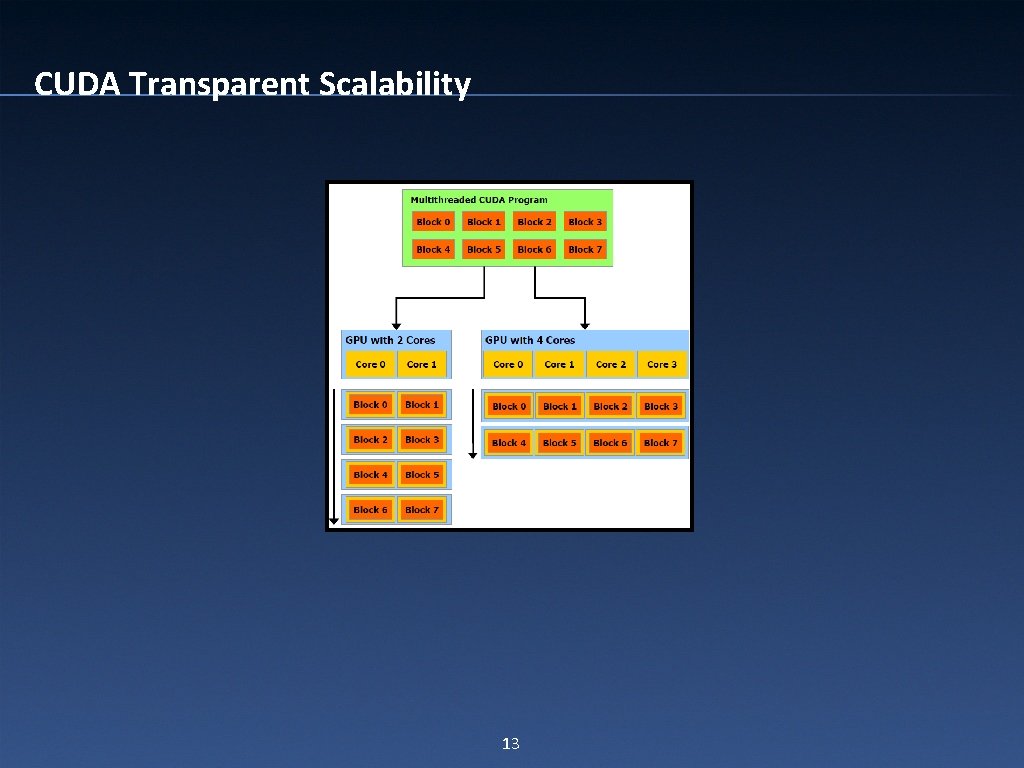

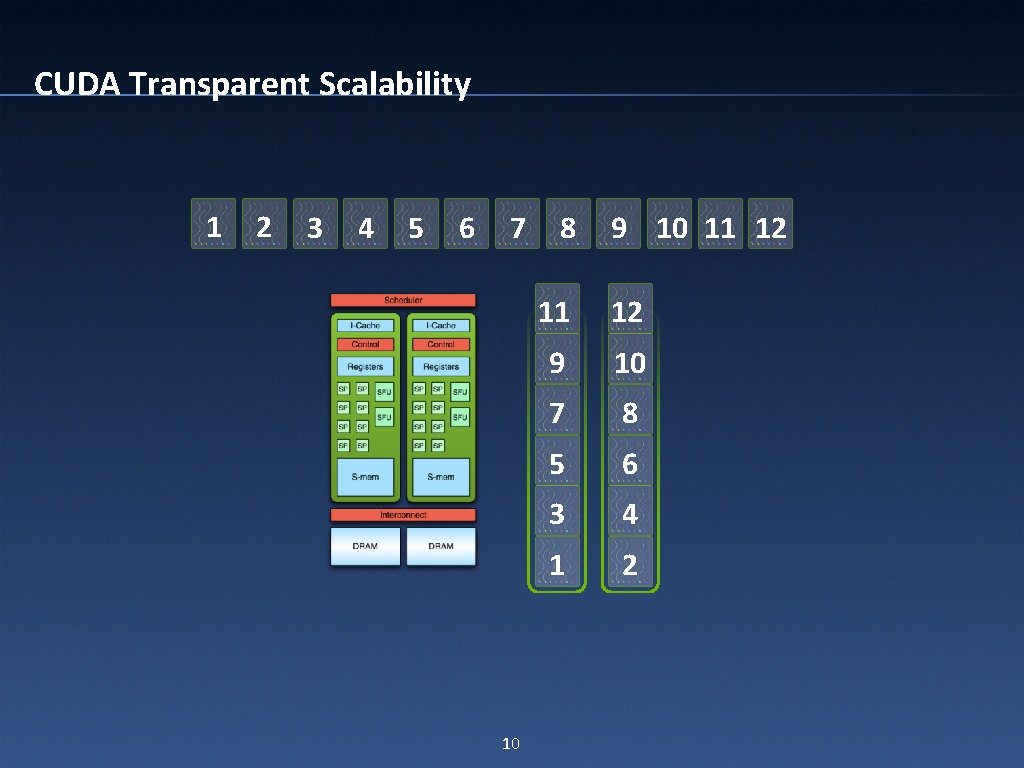

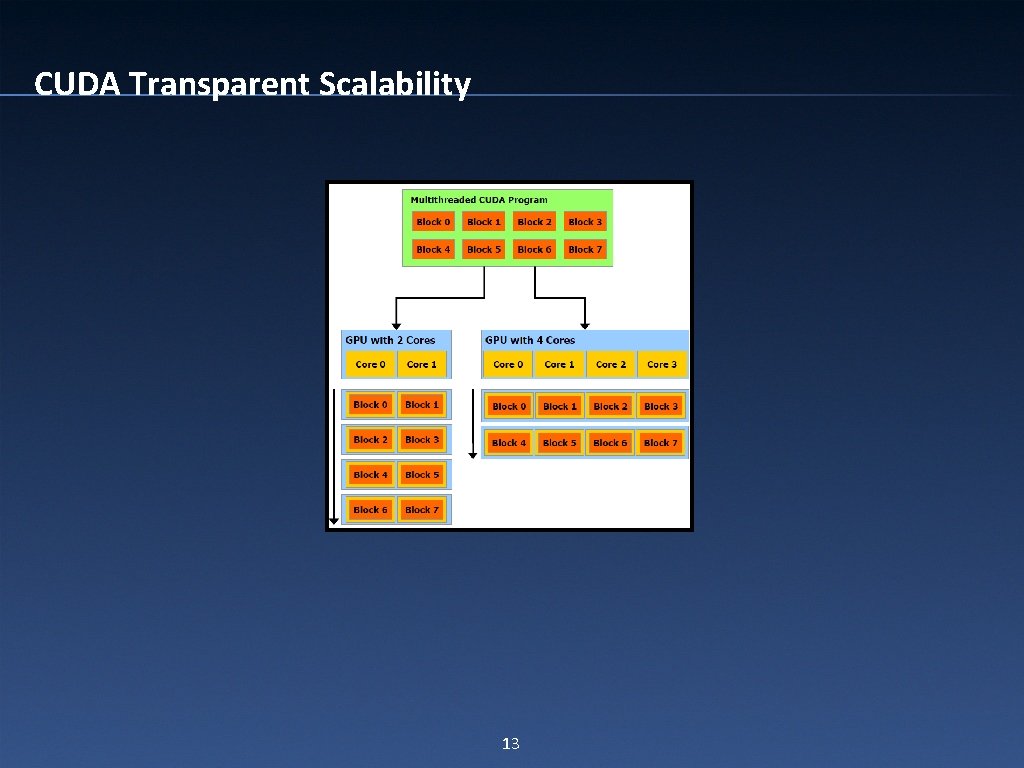

CUDA Transparent Scalability 1 2 3 4 5 6 7 10 8 9 10 11 12 9 10 7 8 5 6 3 4 1 2

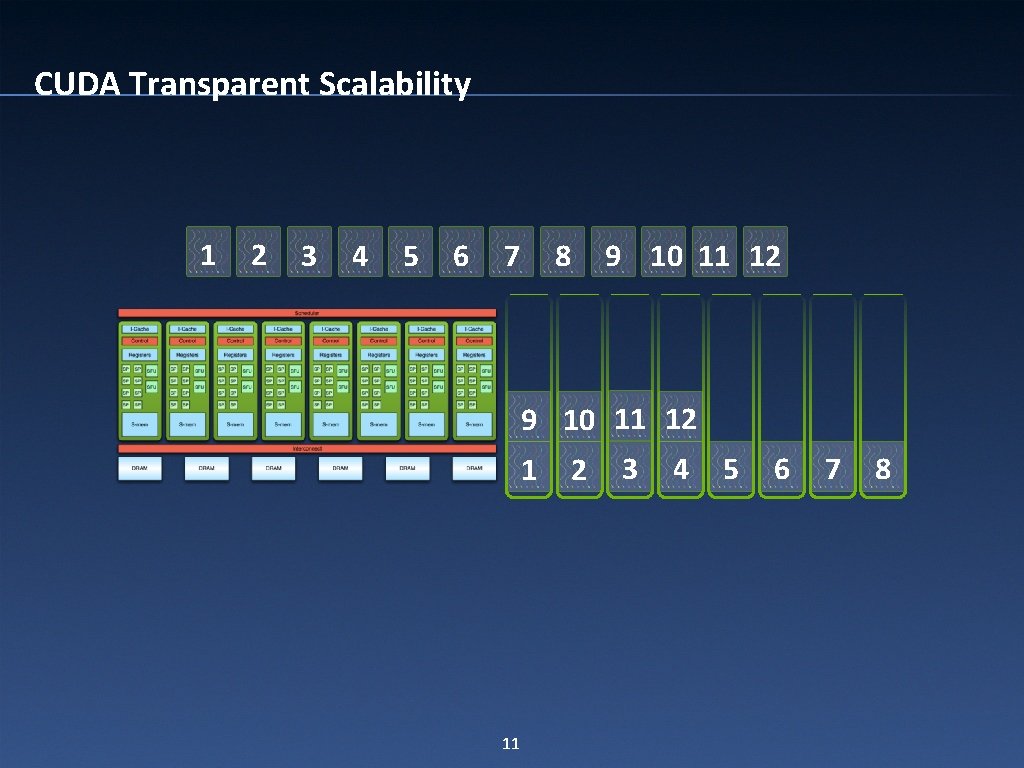

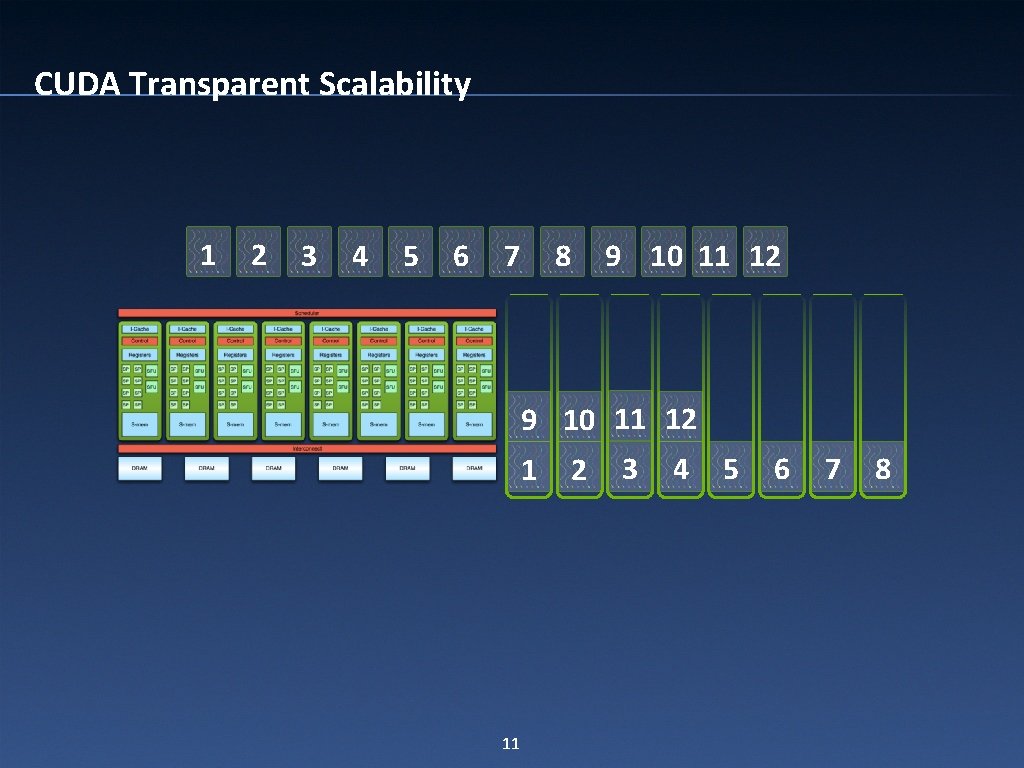

CUDA Transparent Scalability 1 2 3 4 5 6 7 8 9 10 11 12 1 11 2 3 4 5 6 7 8

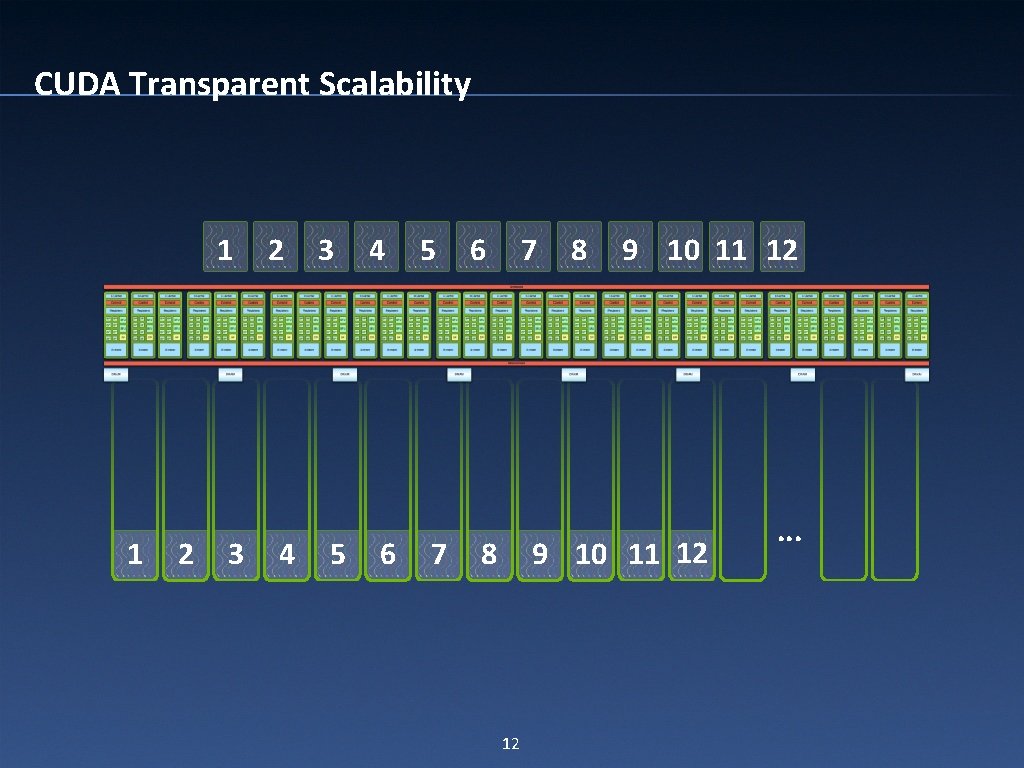

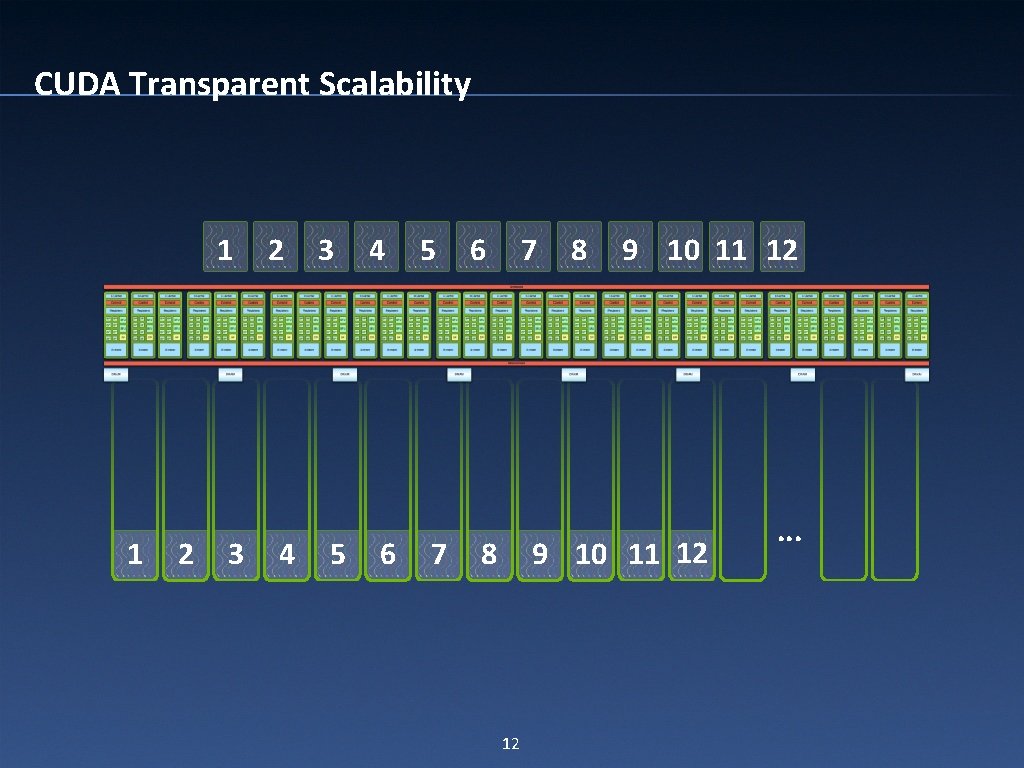

CUDA Transparent Scalability 1 1 2 3 2 4 3 5 4 6 5 7 6 7 8 9 10 11 12 8 12 . . .

CUDA Transparent Scalability 13

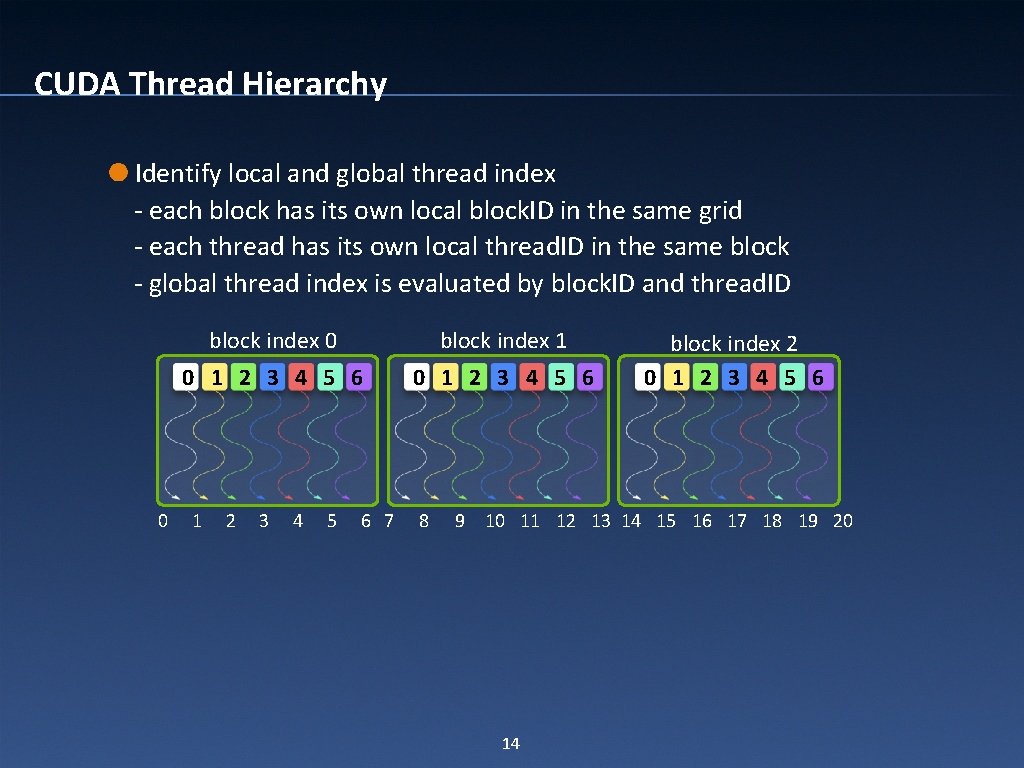

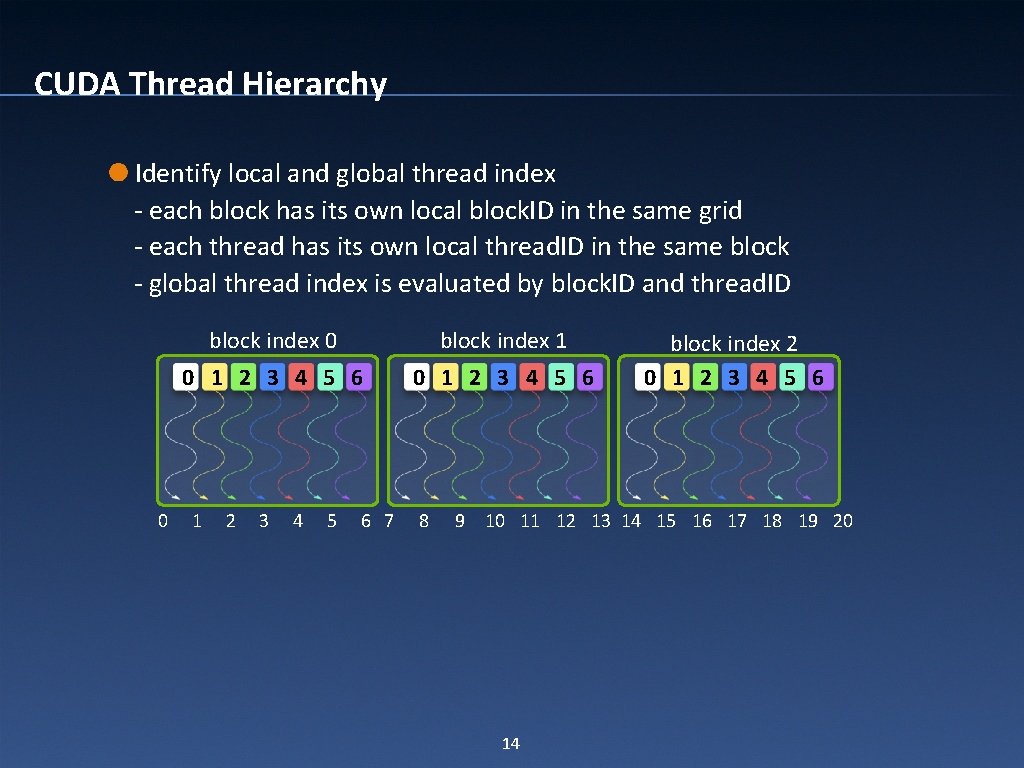

CUDA Thread Hierarchy Identify local and global thread index - each block has its own local block. ID in the same grid - each thread has its own local thread. ID in the same block - global thread index is evaluated by block. ID and thread. ID 0 block index 1 0 1 2 3 4 5 6 7 8 9 block index 2 0 1 2 3 4 5 6 10 11 12 13 14 15 16 17 18 19 20 14

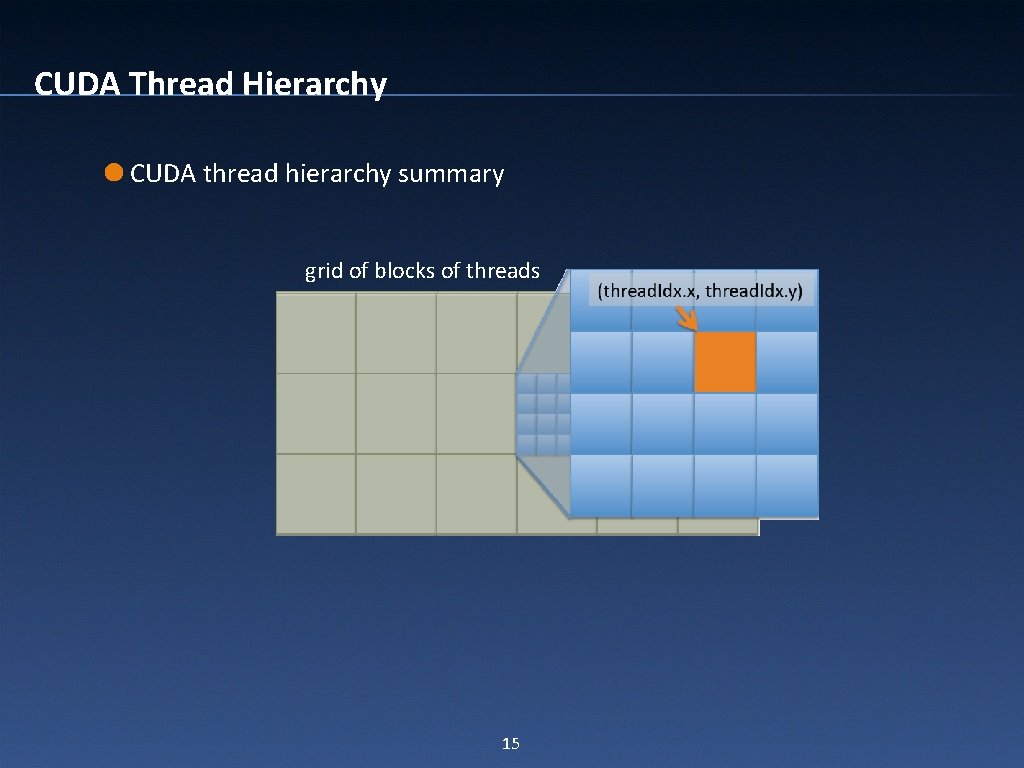

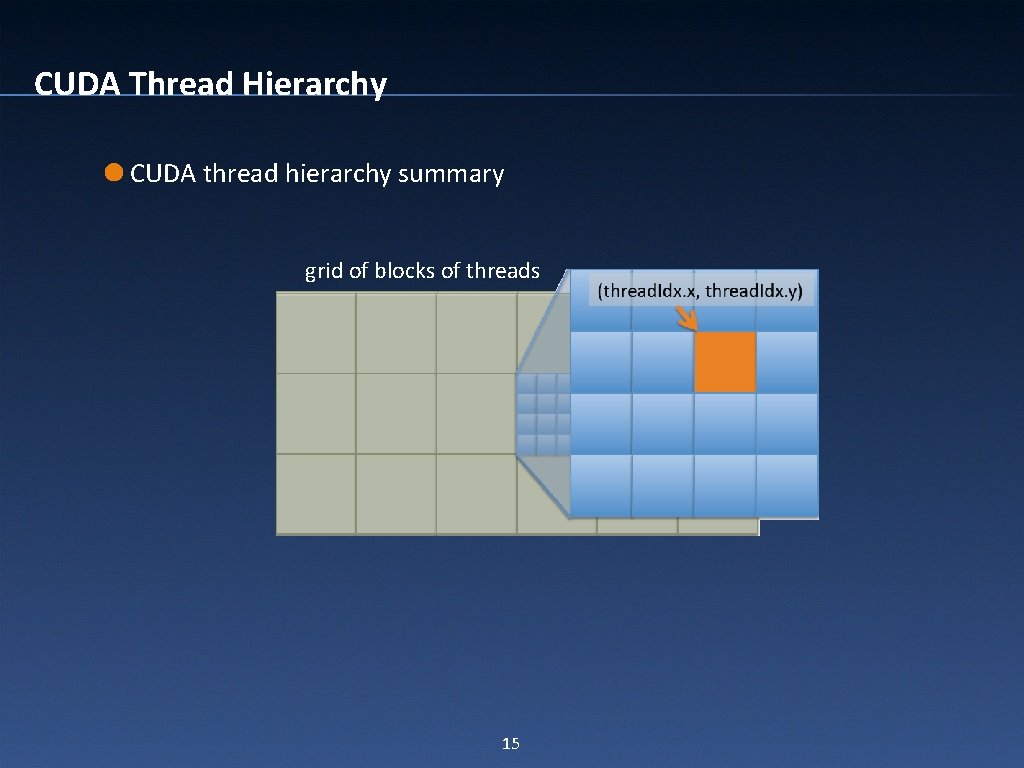

CUDA Thread Hierarchy CUDA thread hierarchy summary grid of blocks of threads 15

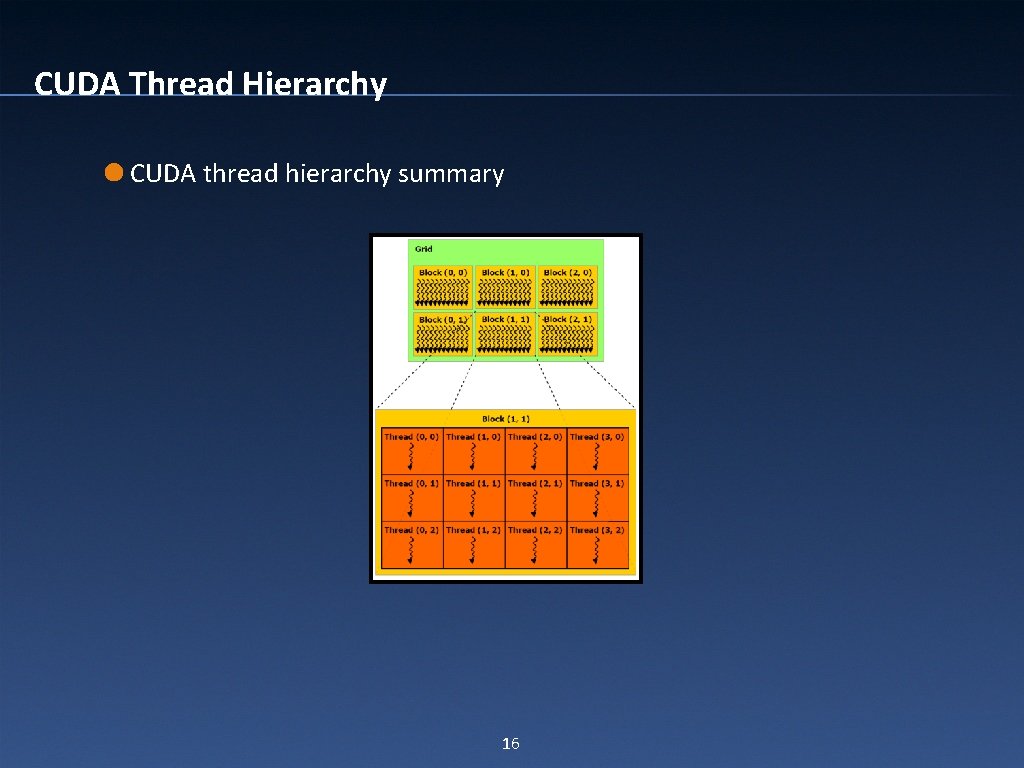

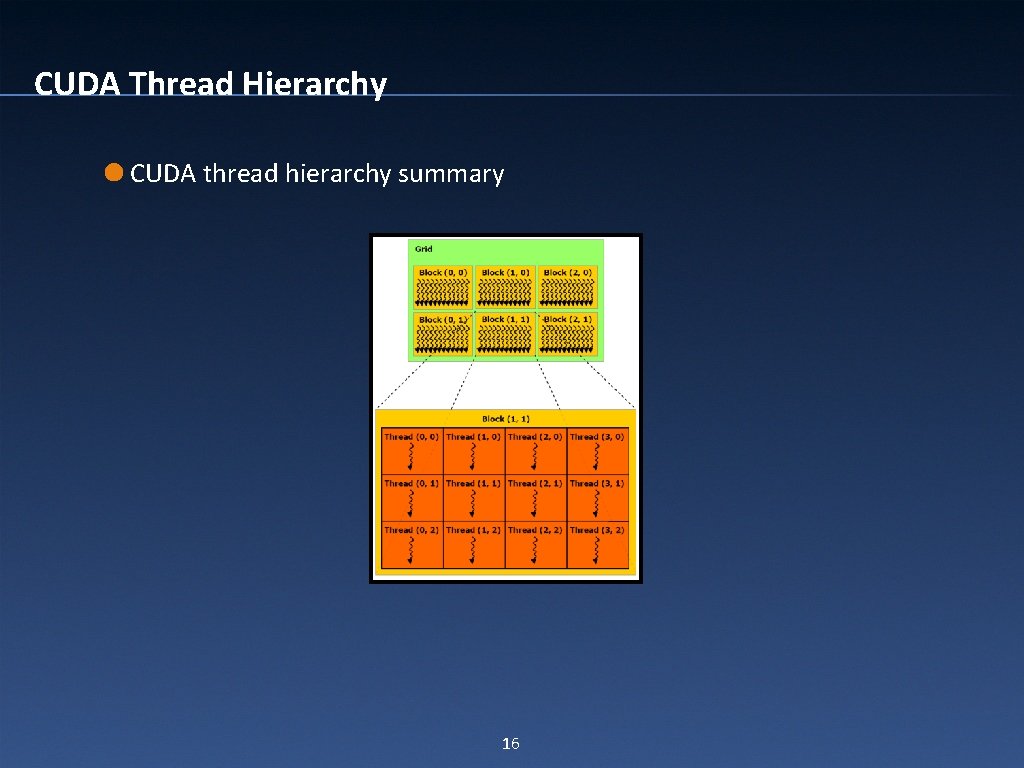

CUDA Thread Hierarchy CUDA thread hierarchy summary 16

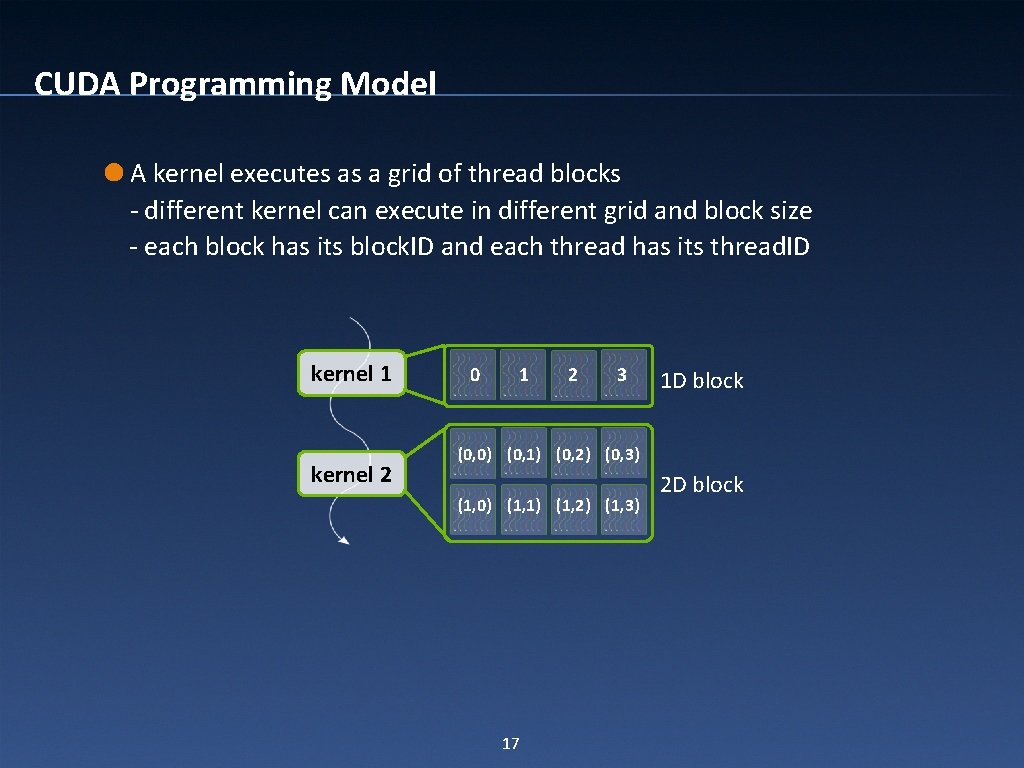

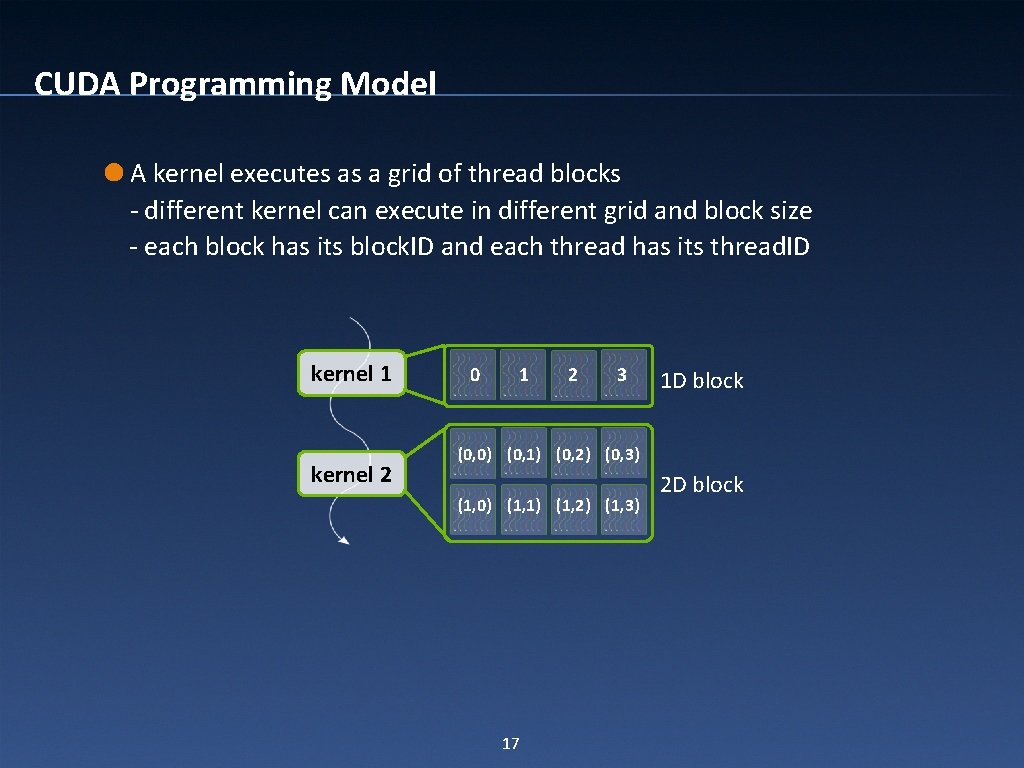

CUDA Programming Model A kernel executes as a grid of thread blocks - different kernel can execute in different grid and block size - each block has its block. ID and each thread has its thread. ID kernel 1 kernel 2 0 1 2 3 1 D block (0, 0) (0, 1) (0, 2) (0, 3) (1, 0) (1, 1) (1, 2) (1, 3) 17 2 D block

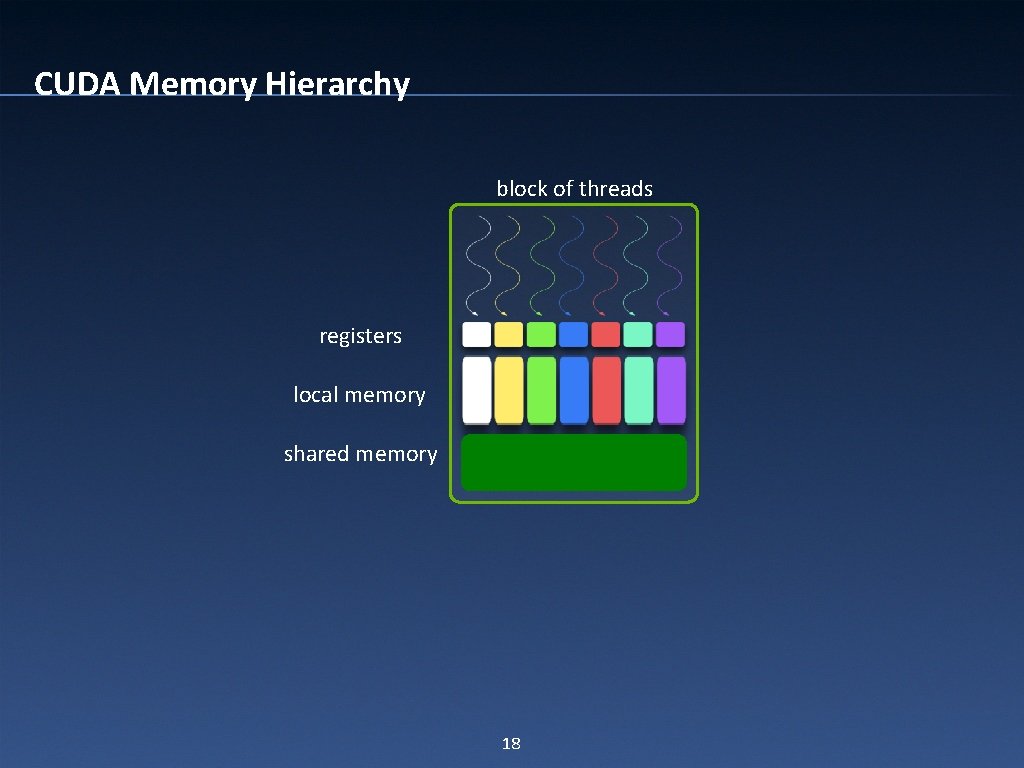

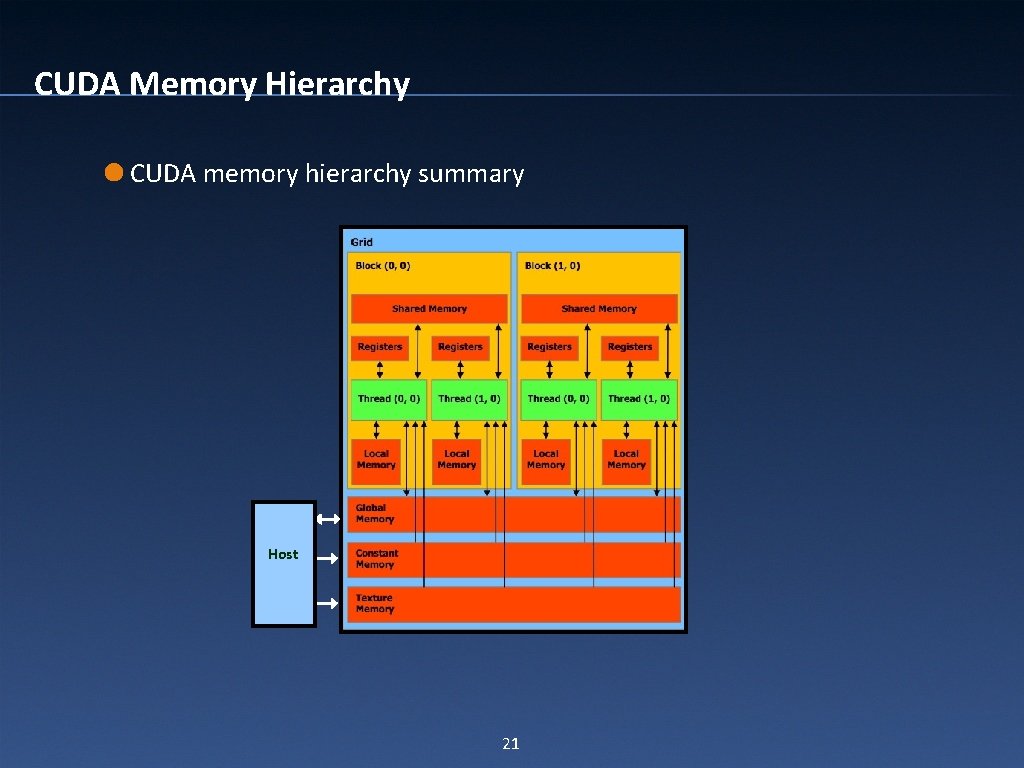

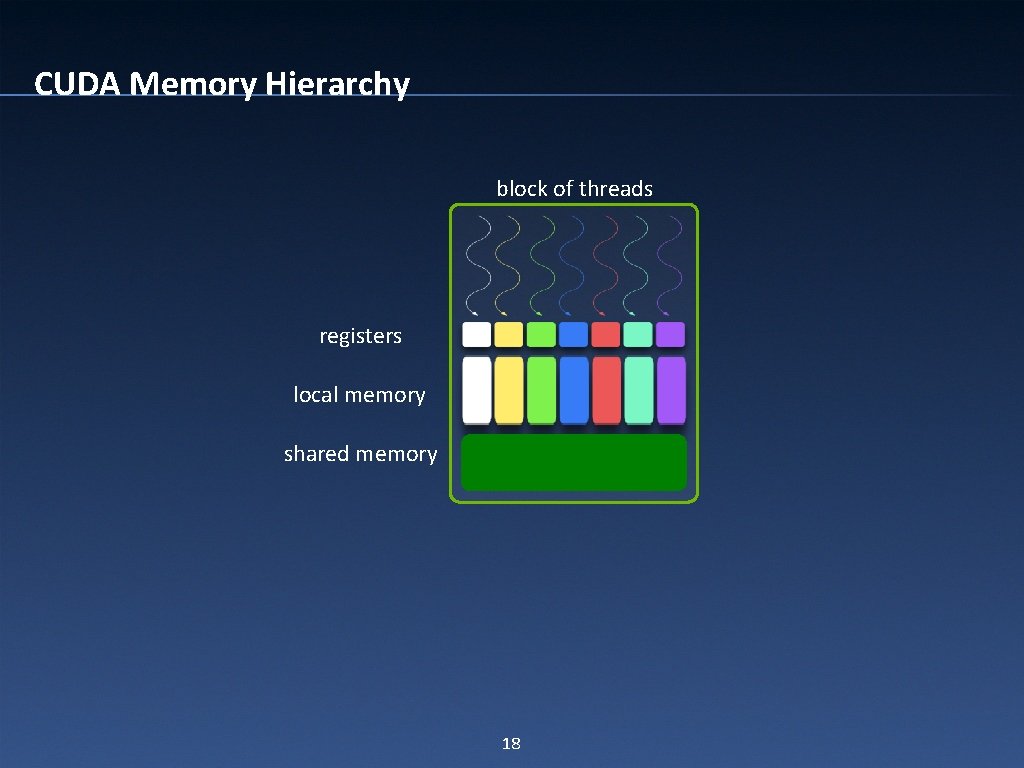

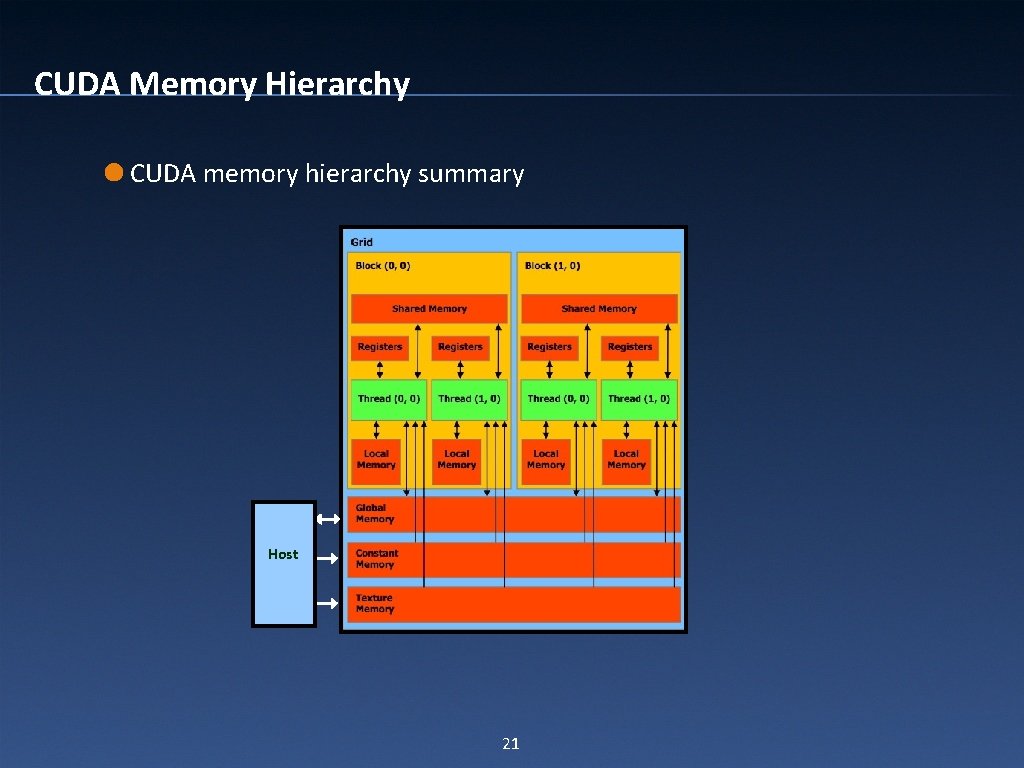

CUDA Memory Hierarchy block of threads registers local memory shared memory 18

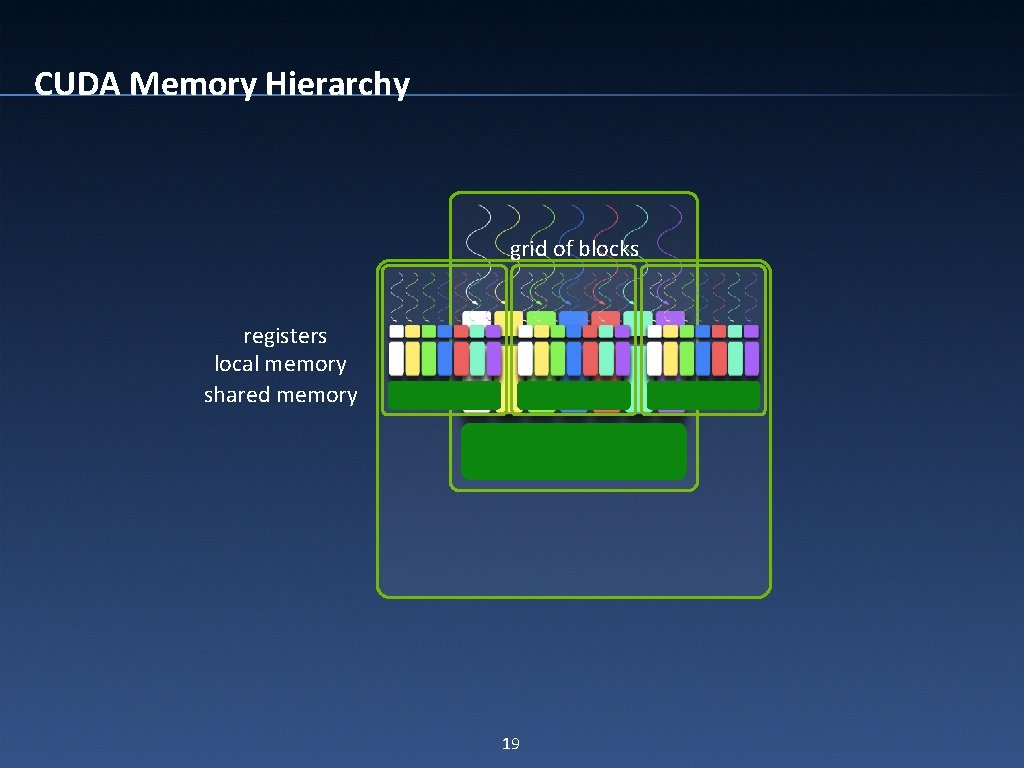

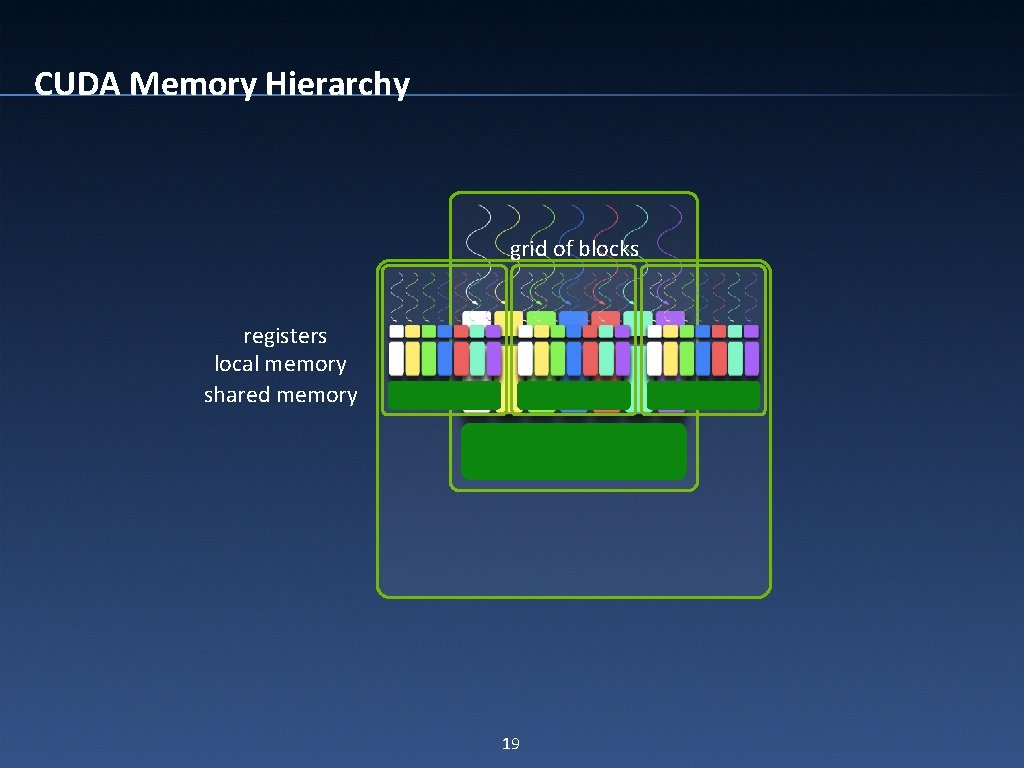

CUDA Memory Hierarchy grid of blocks registers local memory shared memory 19

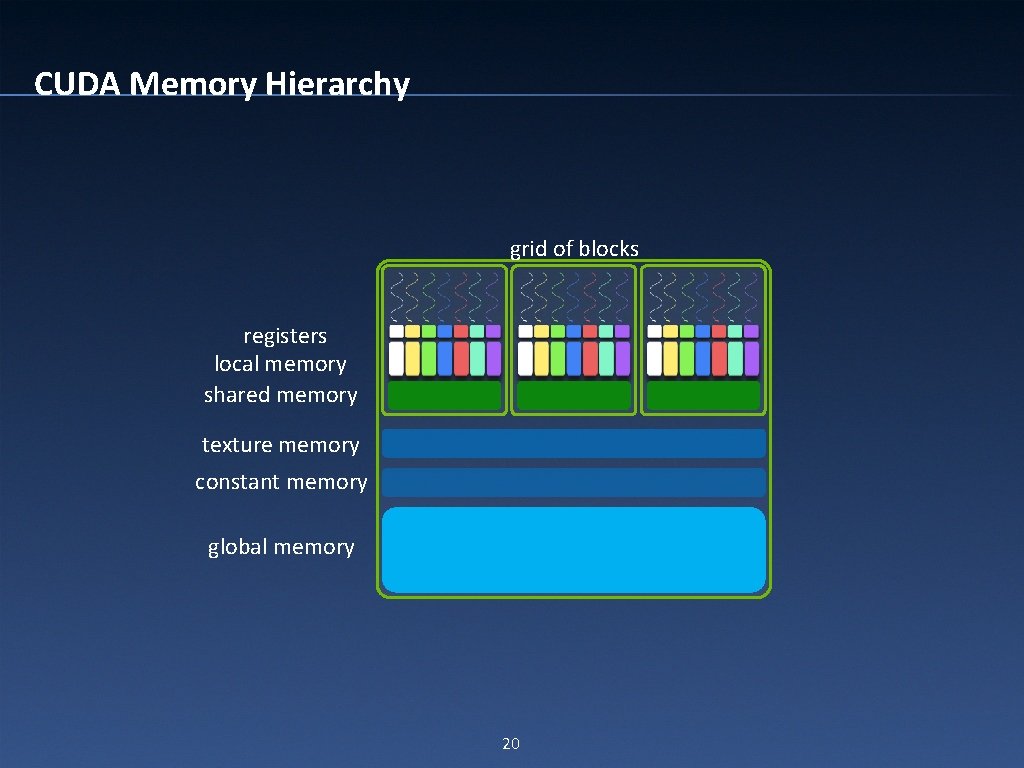

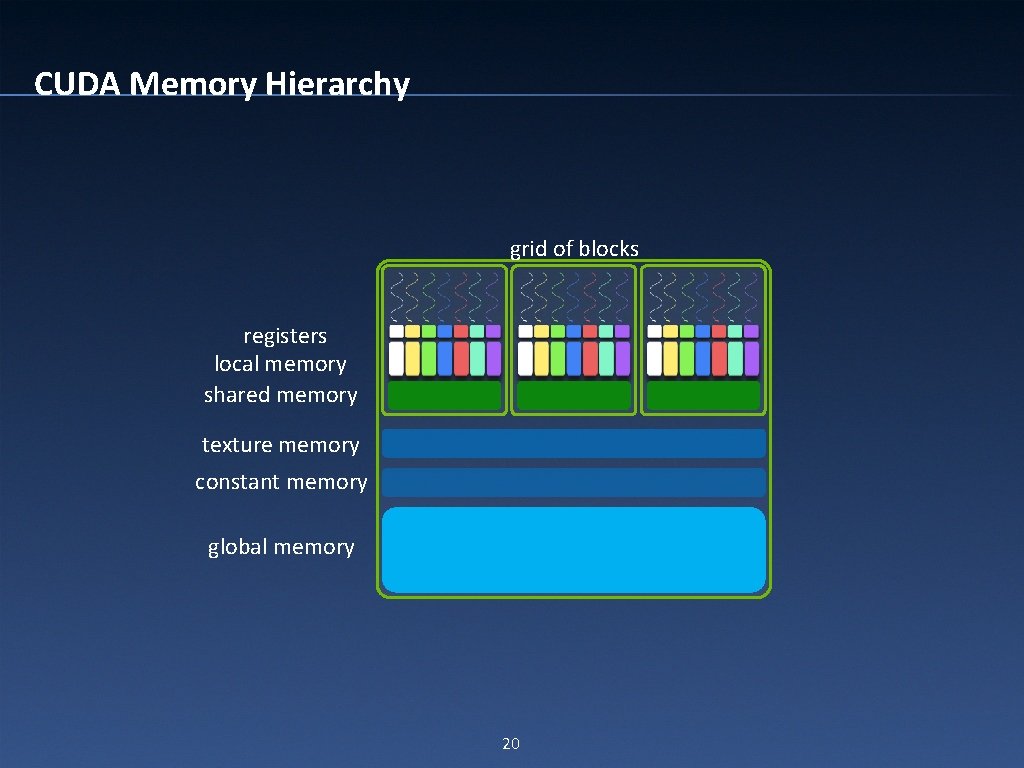

CUDA Memory Hierarchy grid of blocks registers local memory shared memory texture memory constant memory global memory 20

CUDA Memory Hierarchy CUDA memory hierarchy summary Host 21

Start from a Vector-Addition Simple Example

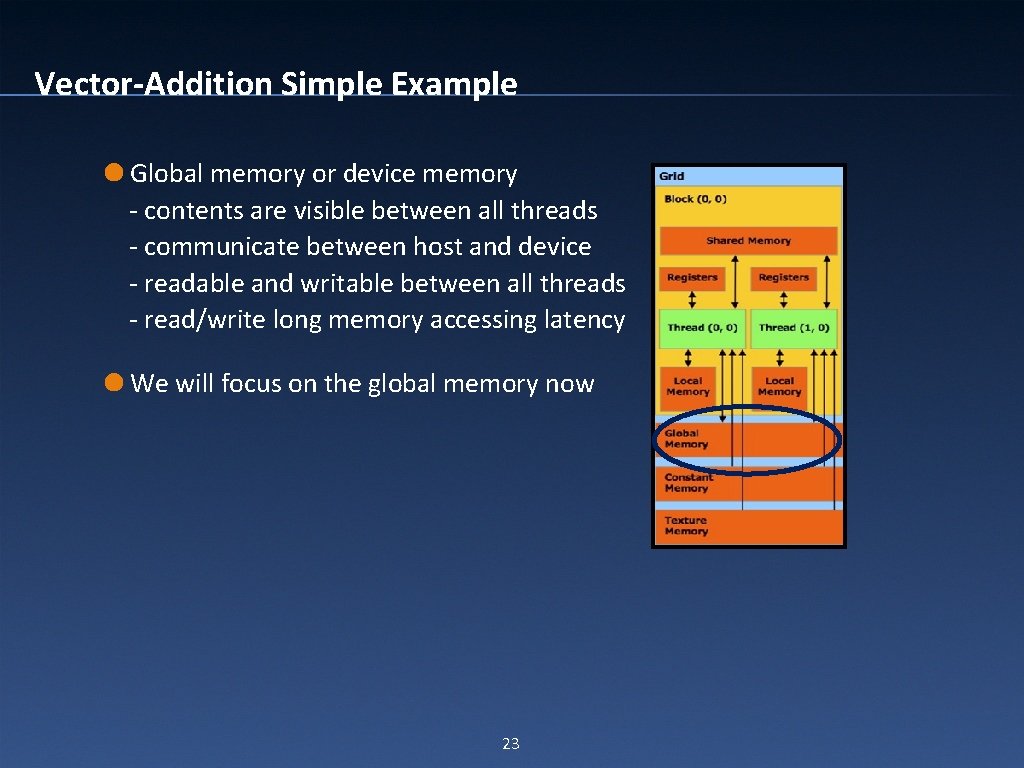

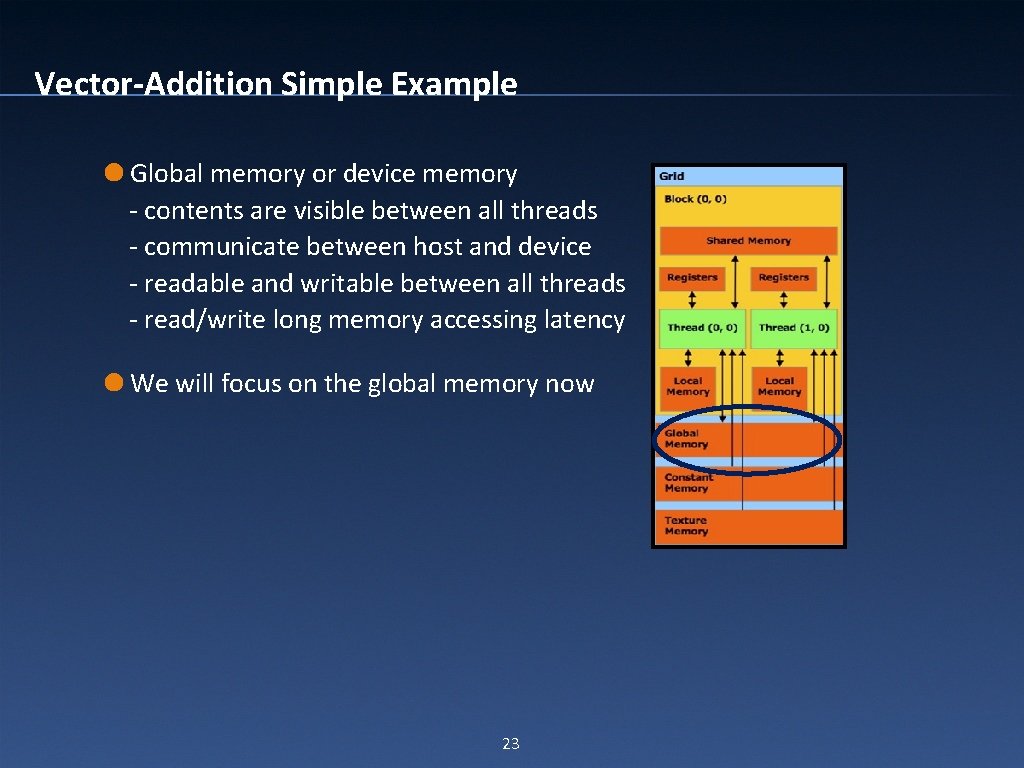

Vector-Addition Simple Example Global memory or device memory - contents are visible between all threads - communicate between host and device - readable and writable between all threads - read/write long memory accessing latency We will focus on the global memory now 23

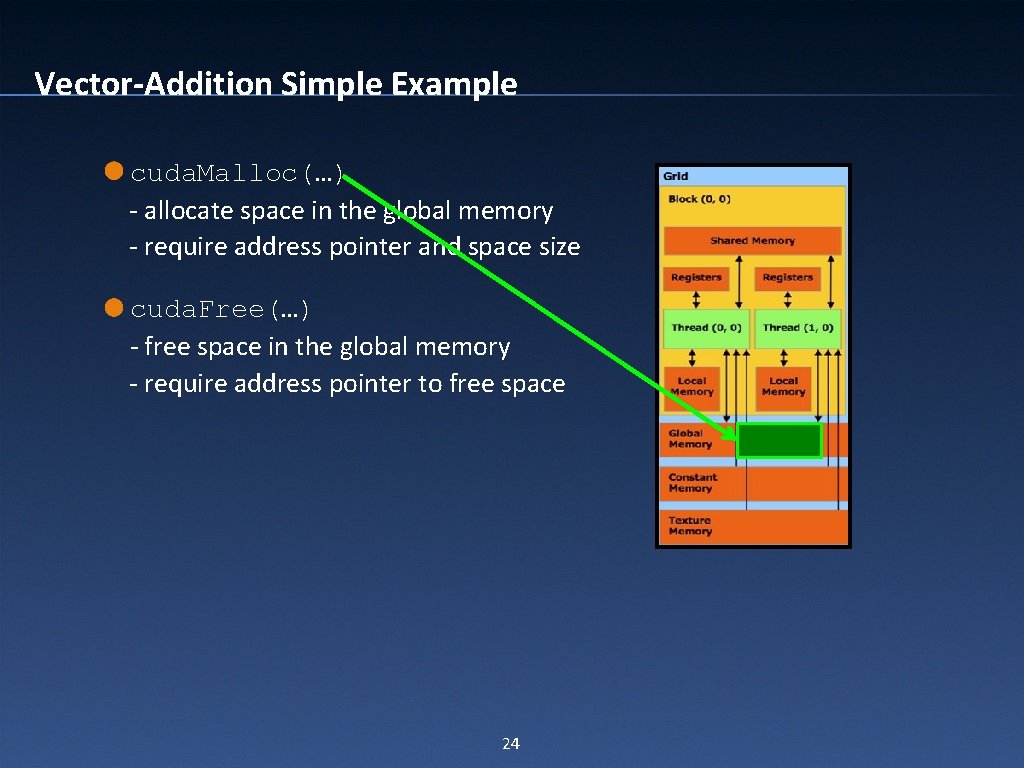

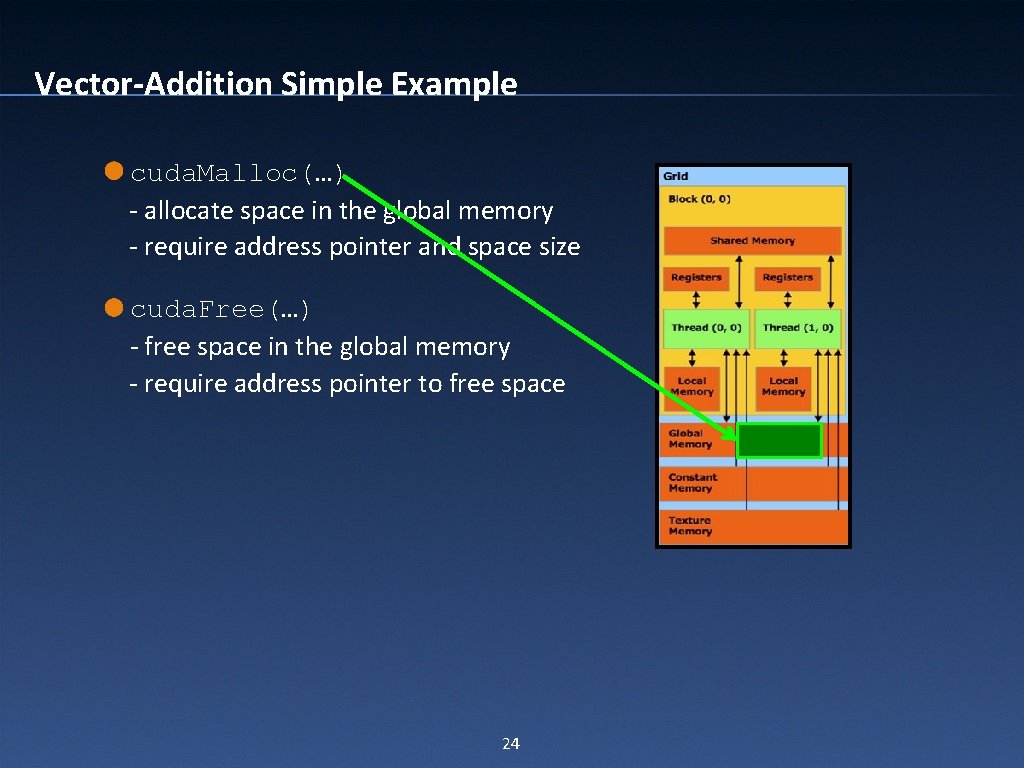

Vector-Addition Simple Example cuda. Malloc(…) - allocate space in the global memory - require address pointer and space size cuda. Free(…) - free space in the global memory - require address pointer to free space 24

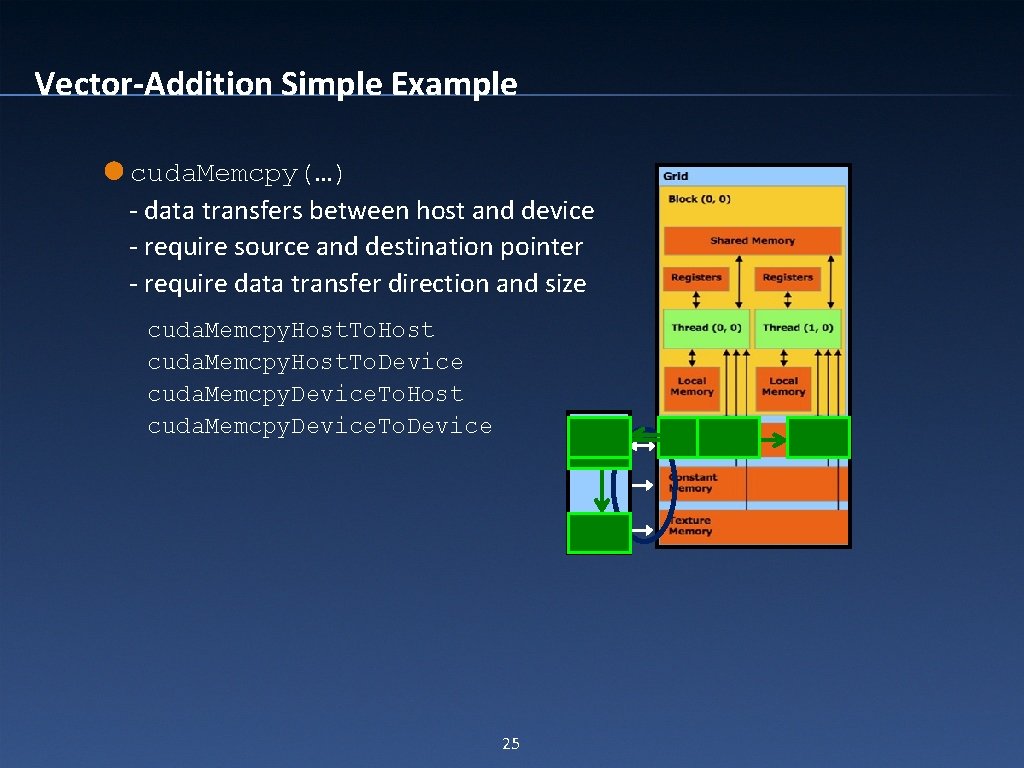

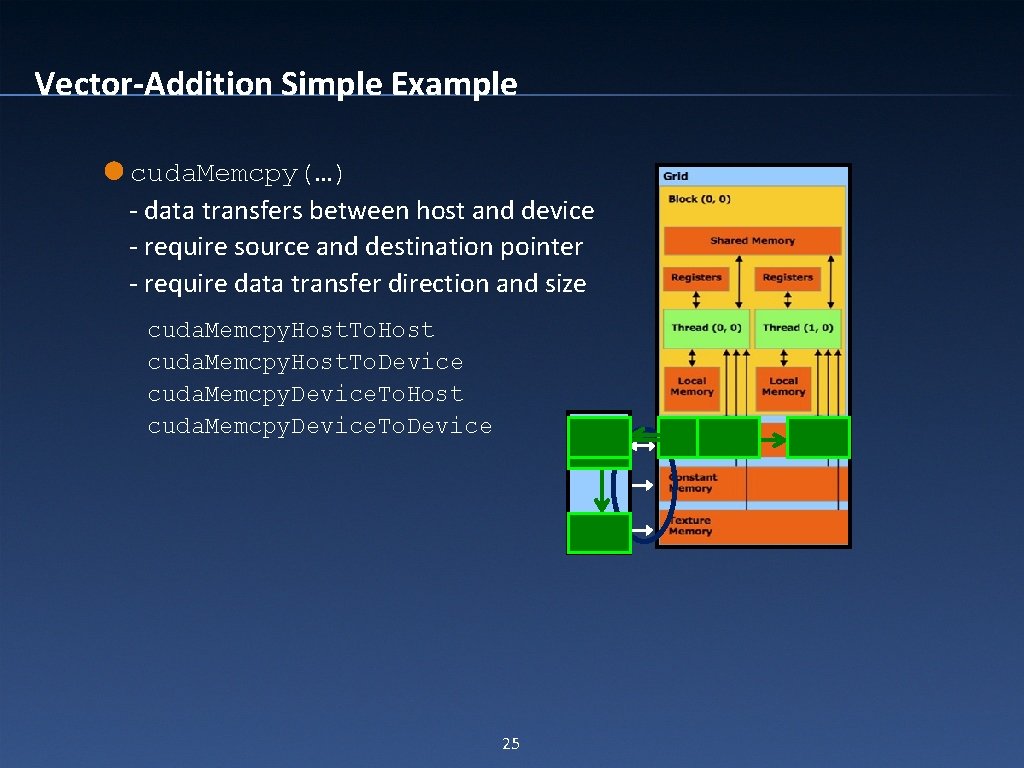

Vector-Addition Simple Example cuda. Memcpy(…) - data transfers between host and device - require source and destination pointer - require data transfer direction and size cuda. Memcpy. Host. To. Host cuda. Memcpy. Host. To. Device cuda. Memcpy. Device. To. Host cuda. Memcpy. Device. To. Device Host 25

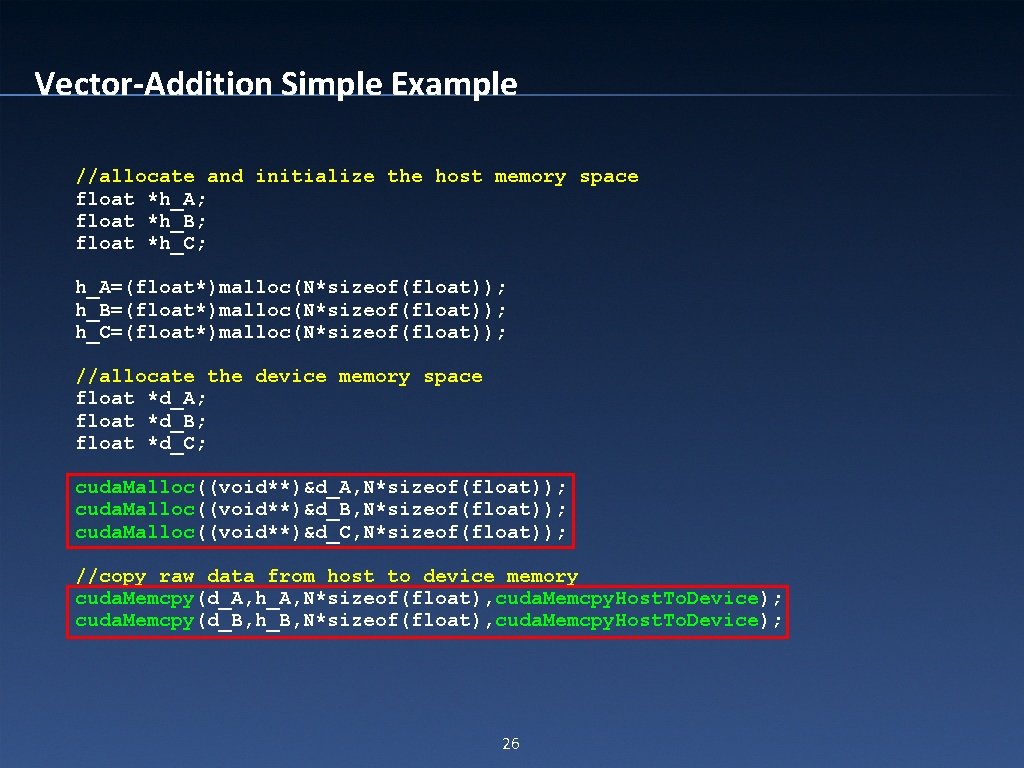

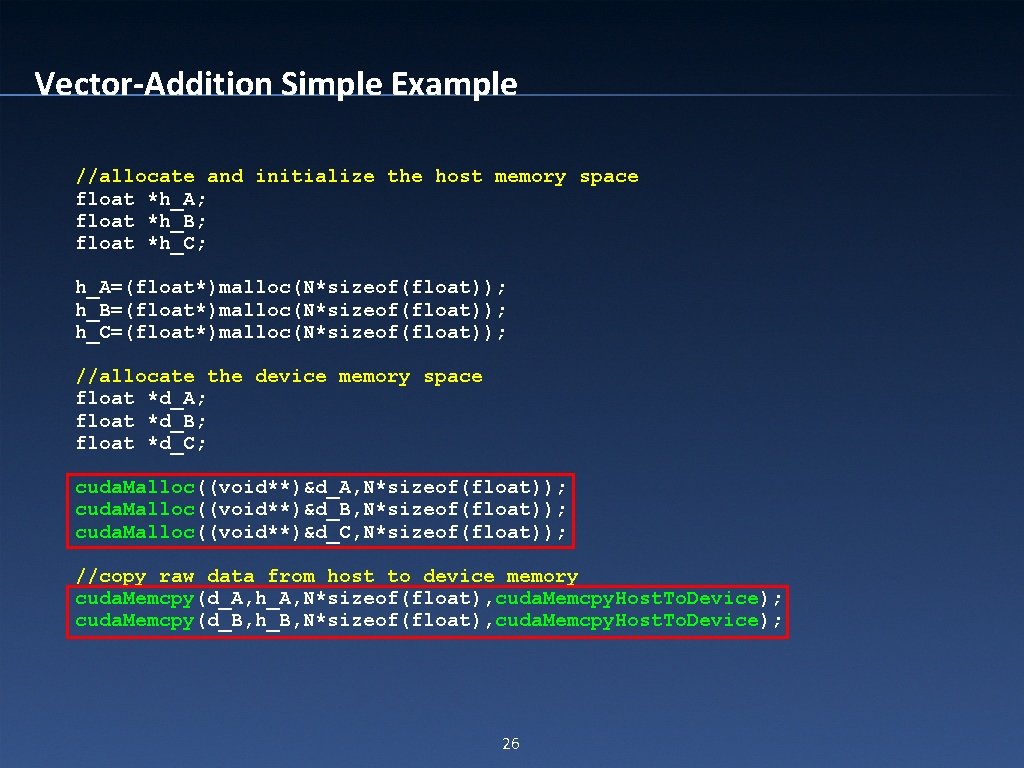

Vector-Addition Simple Example //allocate and initialize the host memory space float *h_A; float *h_B; float *h_C; h_A=(float*)malloc(N*sizeof(float)); h_B=(float*)malloc(N*sizeof(float)); h_C=(float*)malloc(N*sizeof(float)); //allocate the device memory space float *d_A; float *d_B; float *d_C; cuda. Malloc((void**)&d_A, N*sizeof(float)); cuda. Malloc((void**)&d_B, N*sizeof(float)); cuda. Malloc((void**)&d_C, N*sizeof(float)); //copy raw data from host to device memory cuda. Memcpy(d_A, h_A, N*sizeof(float), cuda. Memcpy. Host. To. Device); cuda. Memcpy(d_B, h_B, N*sizeof(float), cuda. Memcpy. Host. To. Device); 26

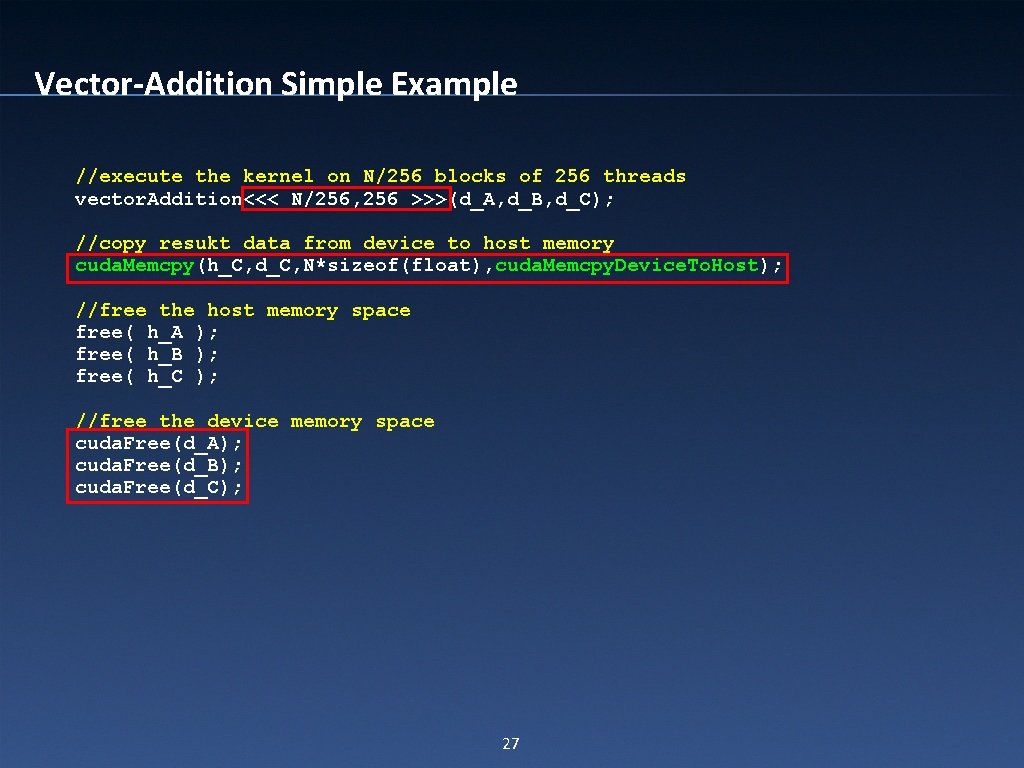

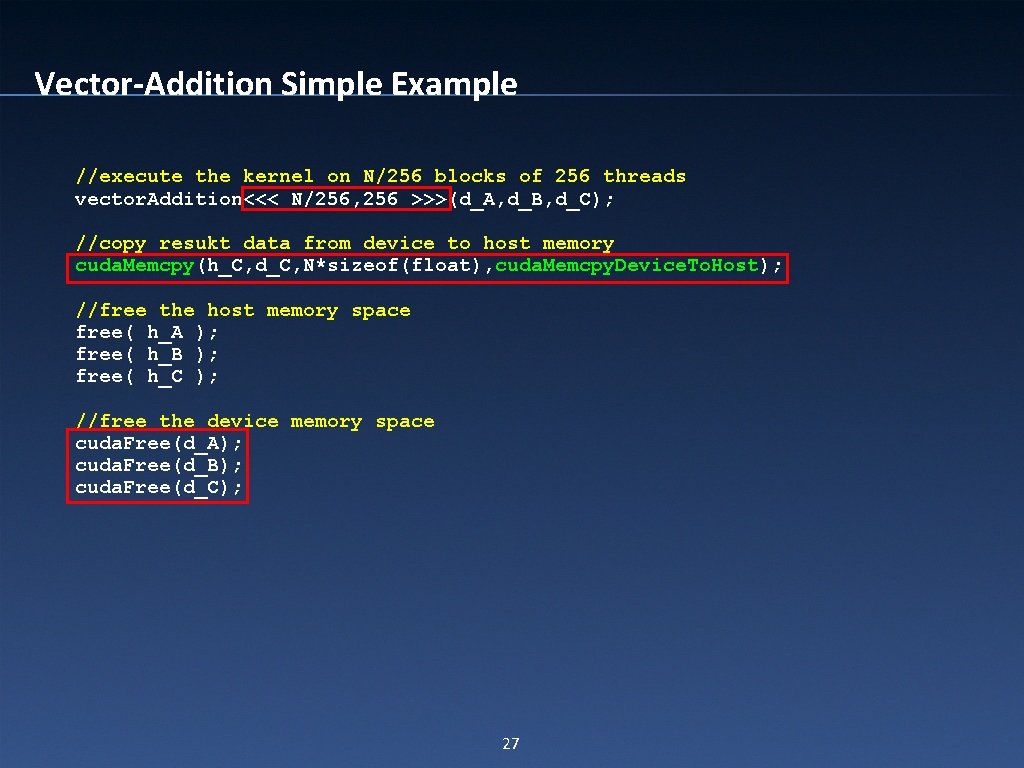

Vector-Addition Simple Example //execute the kernel on N/256 blocks of 256 threads vector. Addition<<< N/256, 256 >>>(d_A, d_B, d_C); //copy resukt data from device to host memory cuda. Memcpy(h_C, d_C, N*sizeof(float), cuda. Memcpy. Device. To. Host); //free the host memory space free( h_A ); free( h_B ); free( h_C ); //free the device memory space cuda. Free(d_A); cuda. Free(d_B); cuda. Free(d_C); 27

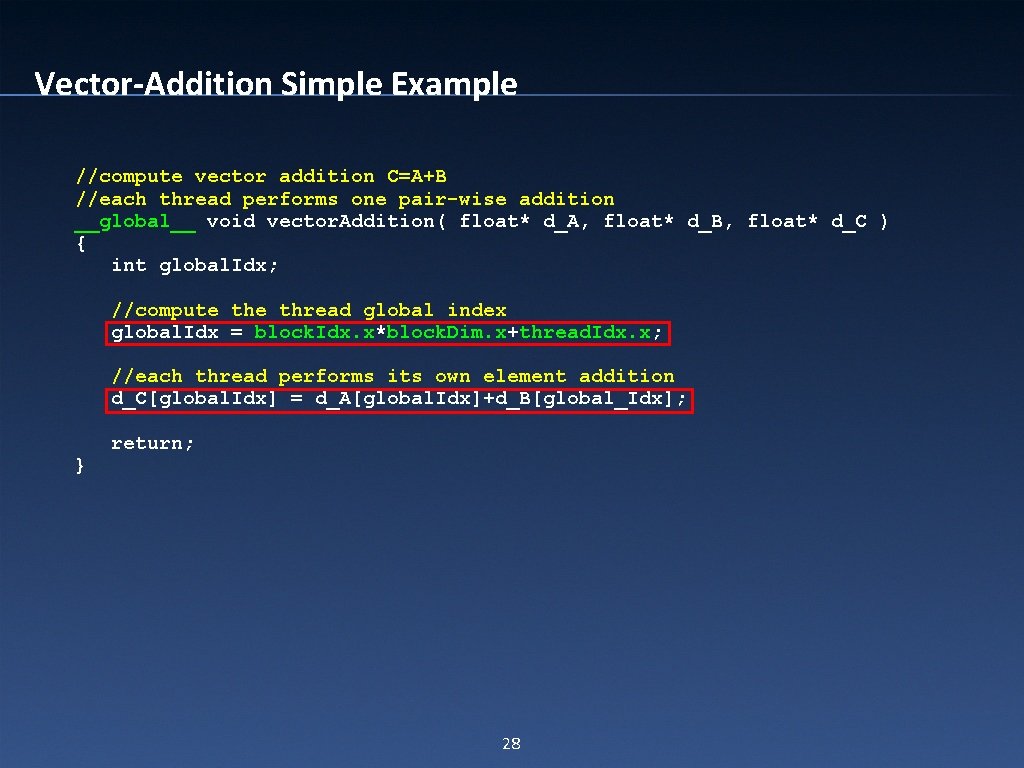

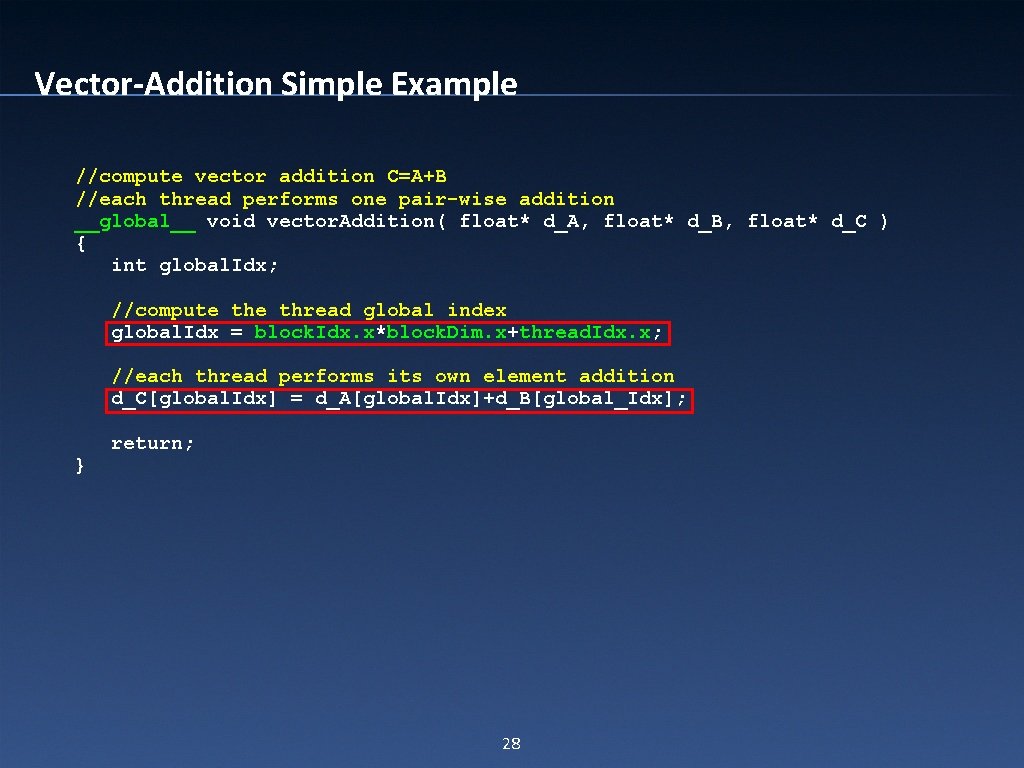

Vector-Addition Simple Example //compute vector addition C=A+B //each thread performs one pair-wise addition __global__ void vector. Addition( float* d_A, float* d_B, float* d_C ) { int global. Idx; //compute thread global index global. Idx = block. Idx. x*block. Dim. x+thread. Idx. x; //each thread performs its own element addition d_C[global. Idx] = d_A[global. Idx]+d_B[global_Idx]; } return; 28

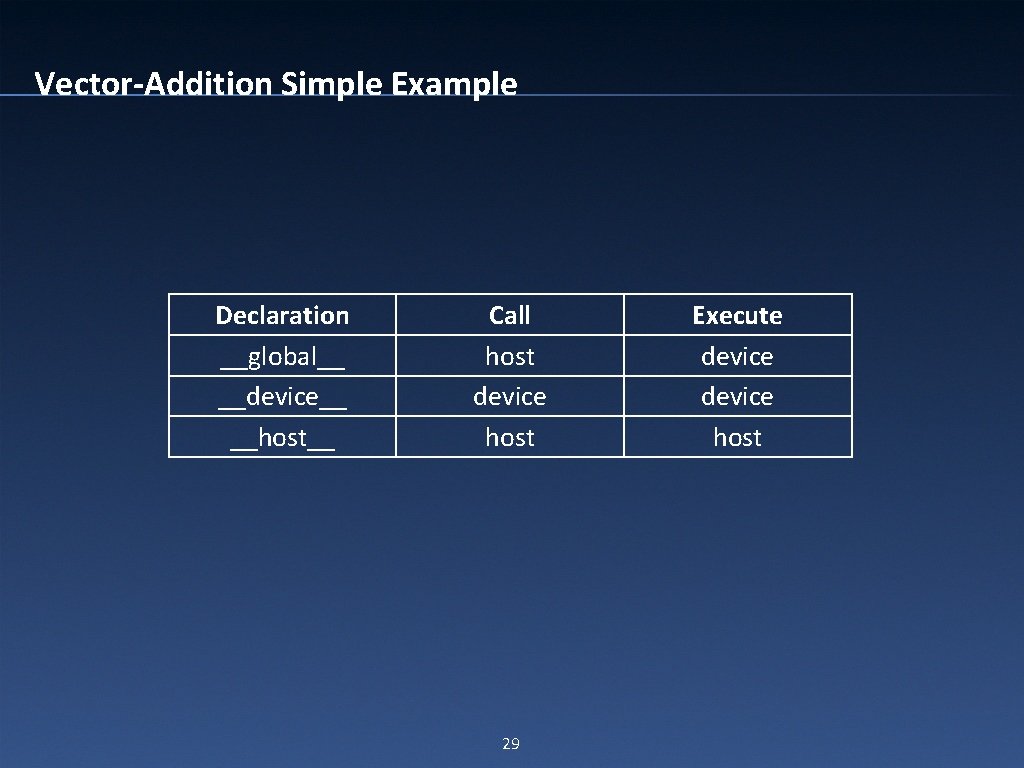

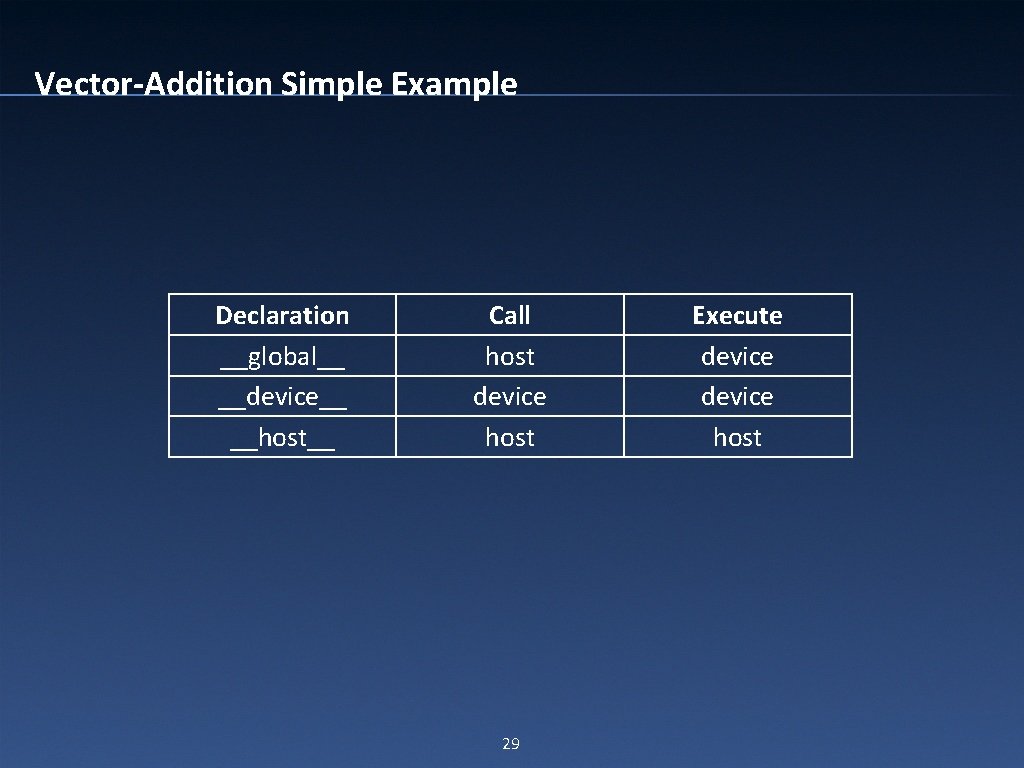

Vector-Addition Simple Example Declaration __global__ __device__ __host__ Call host device host 29 Execute device host

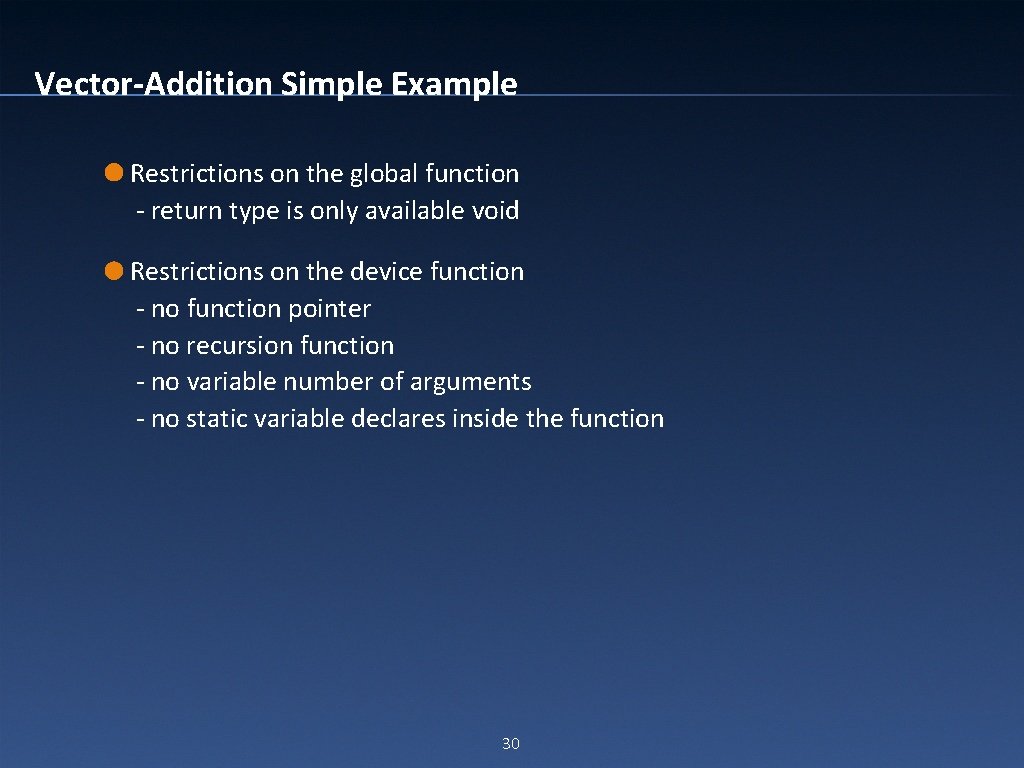

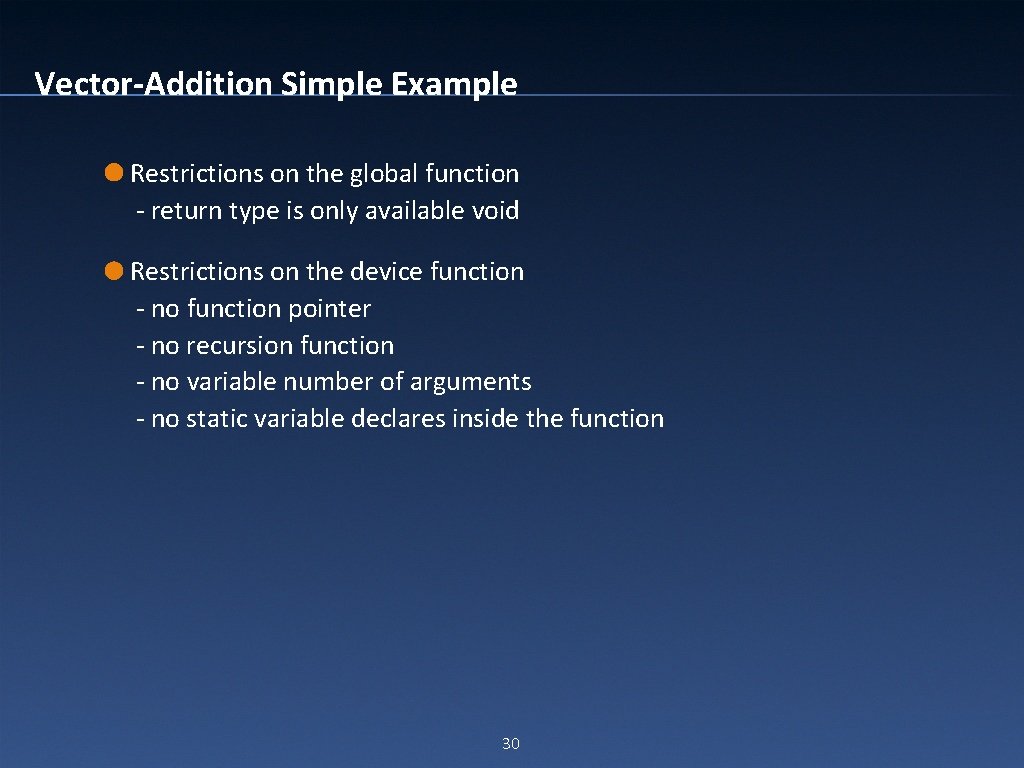

Vector-Addition Simple Example Restrictions on the global function - return type is only available void Restrictions on the device function - no function pointer - no recursion function - no variable number of arguments - no static variable declares inside the function 30

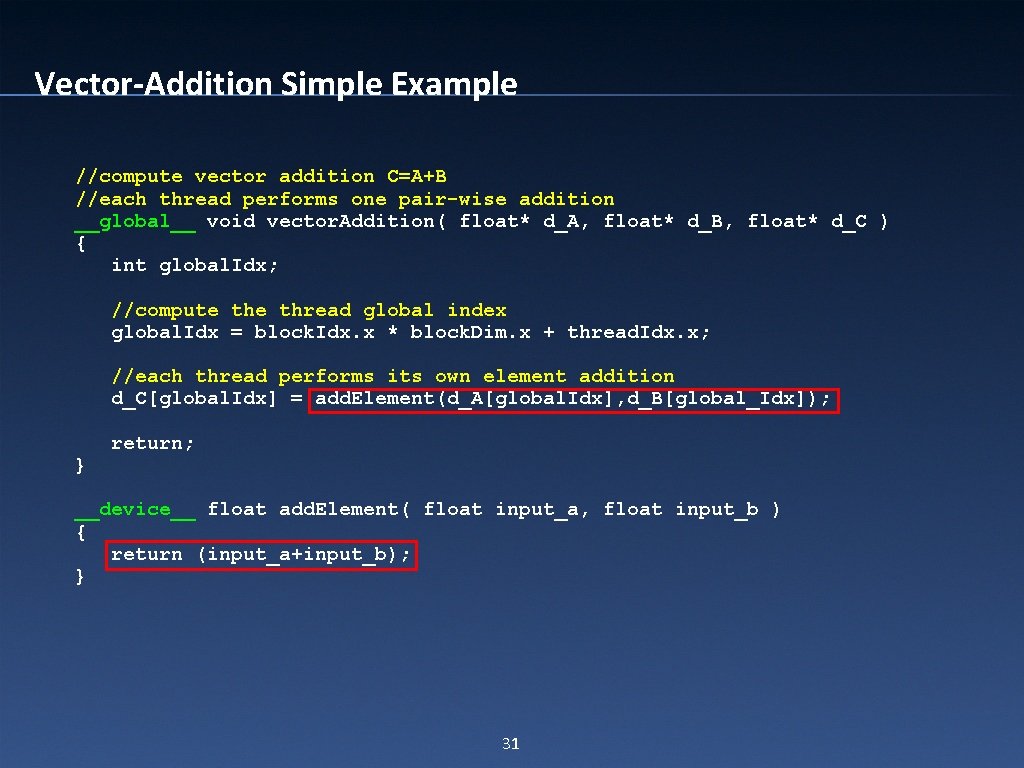

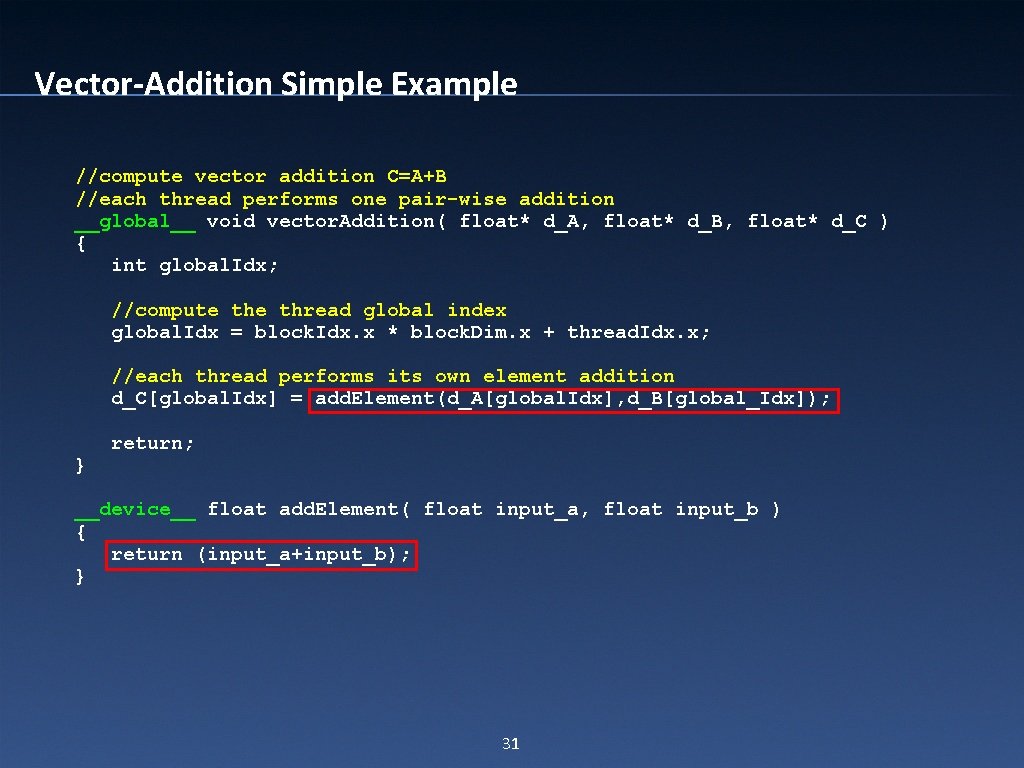

Vector-Addition Simple Example //compute vector addition C=A+B //each thread performs one pair-wise addition __global__ void vector. Addition( float* d_A, float* d_B, float* d_C ) { int global. Idx; //compute thread global index global. Idx = block. Idx. x * block. Dim. x + thread. Idx. x; //each thread performs its own element addition d_C[global. Idx] = add. Element(d_A[global. Idx], d_B[global_Idx]); } return; __device__ float add. Element( float input_a, float input_b ) { return (input_a+input_b); } 31

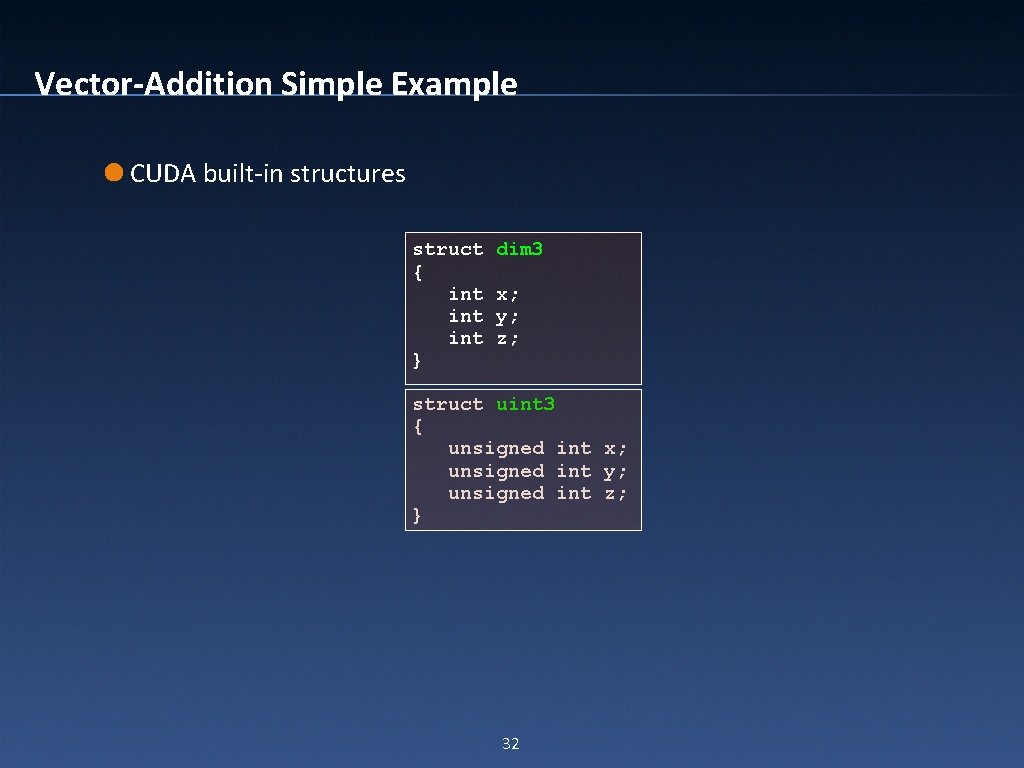

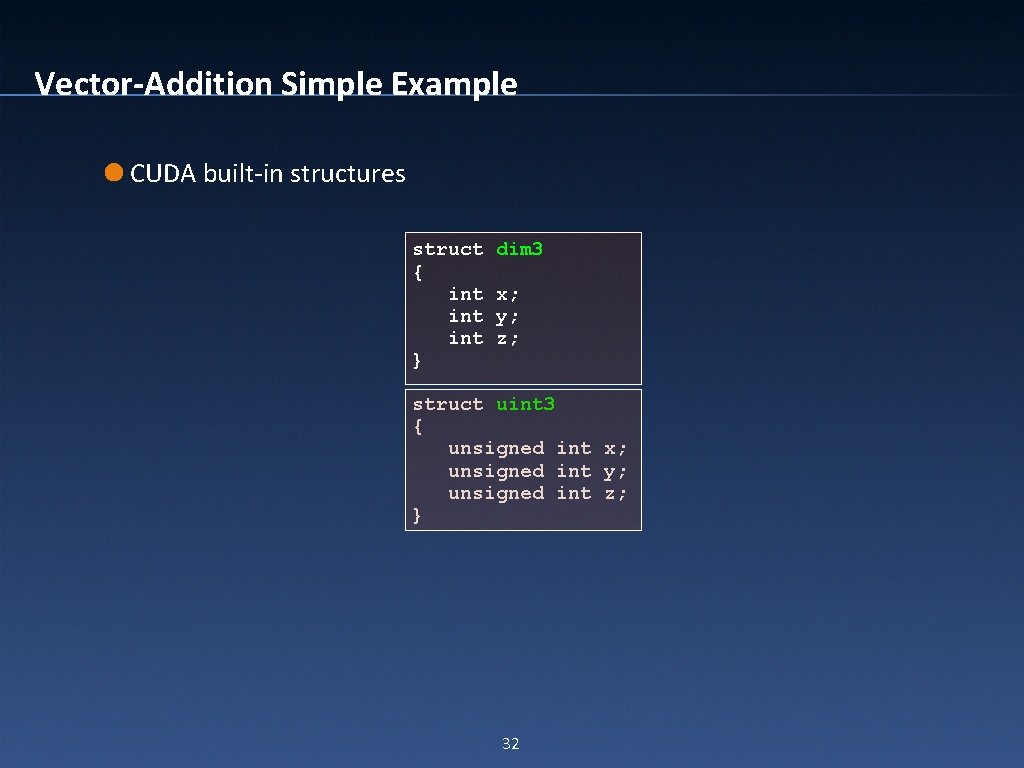

Vector-Addition Simple Example CUDA built-in structures struct { int int } dim 3 x; y; z; struct uint 3 { unsigned int x; unsigned int y; unsigned int z; } 32

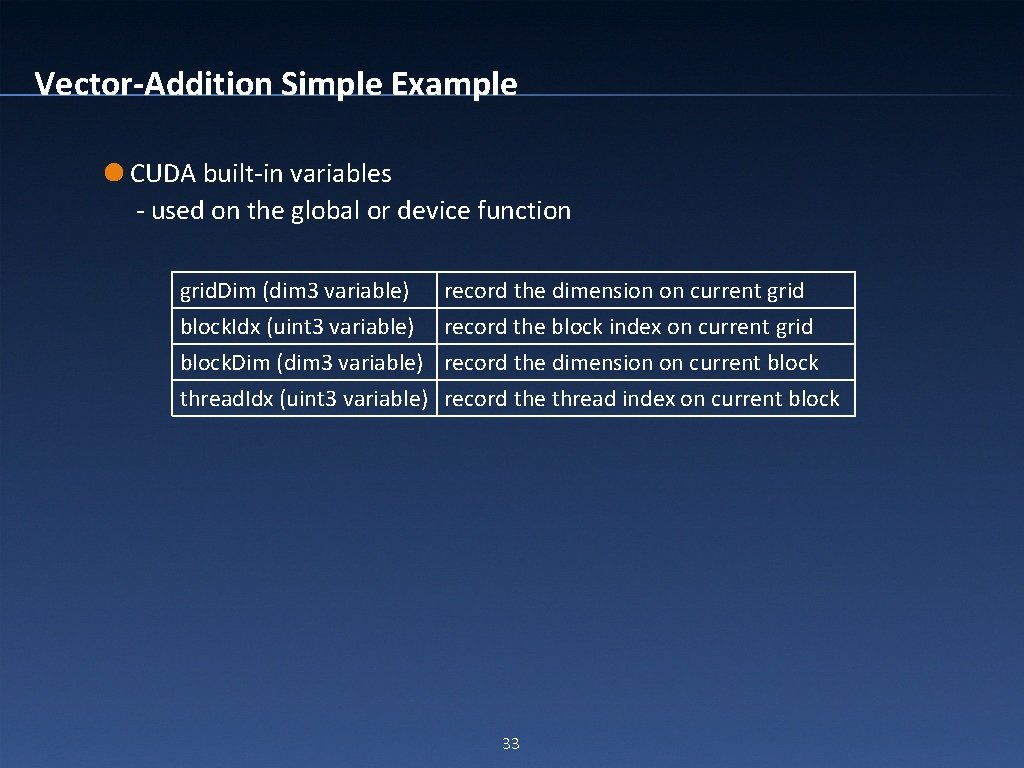

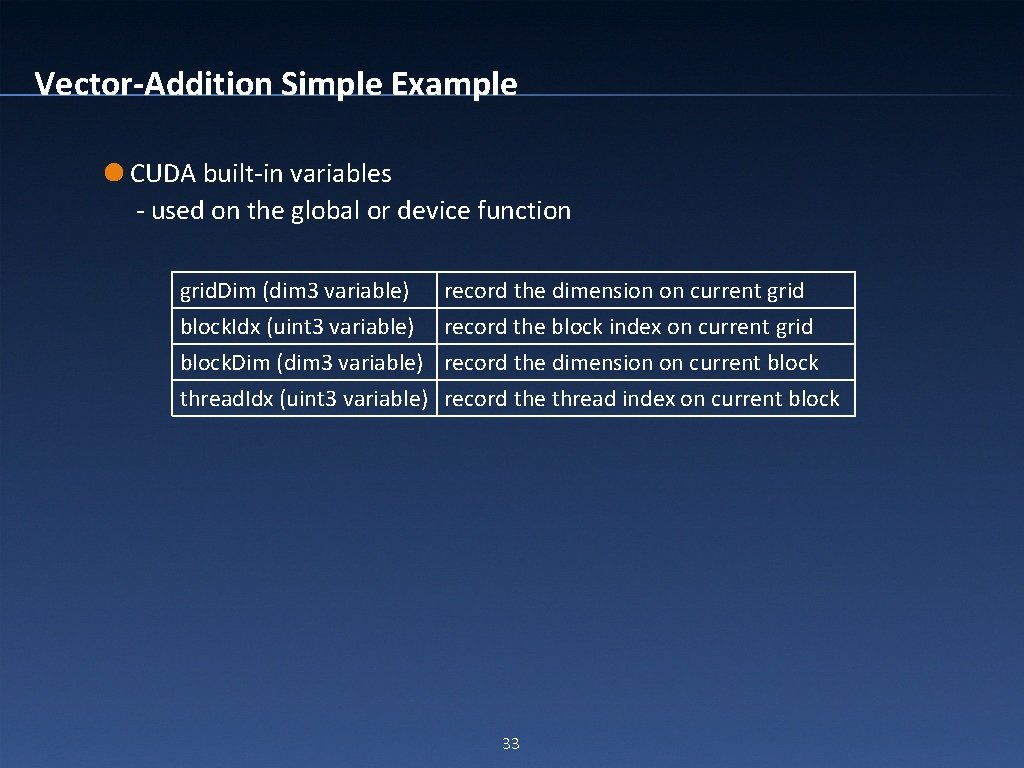

Vector-Addition Simple Example CUDA built-in variables - used on the global or device function grid. Dim (dim 3 variable) block. Idx (uint 3 variable) block. Dim (dim 3 variable) thread. Idx (uint 3 variable) record the dimension on current grid record the block index on current grid record the dimension on current block record the thread index on current block 33

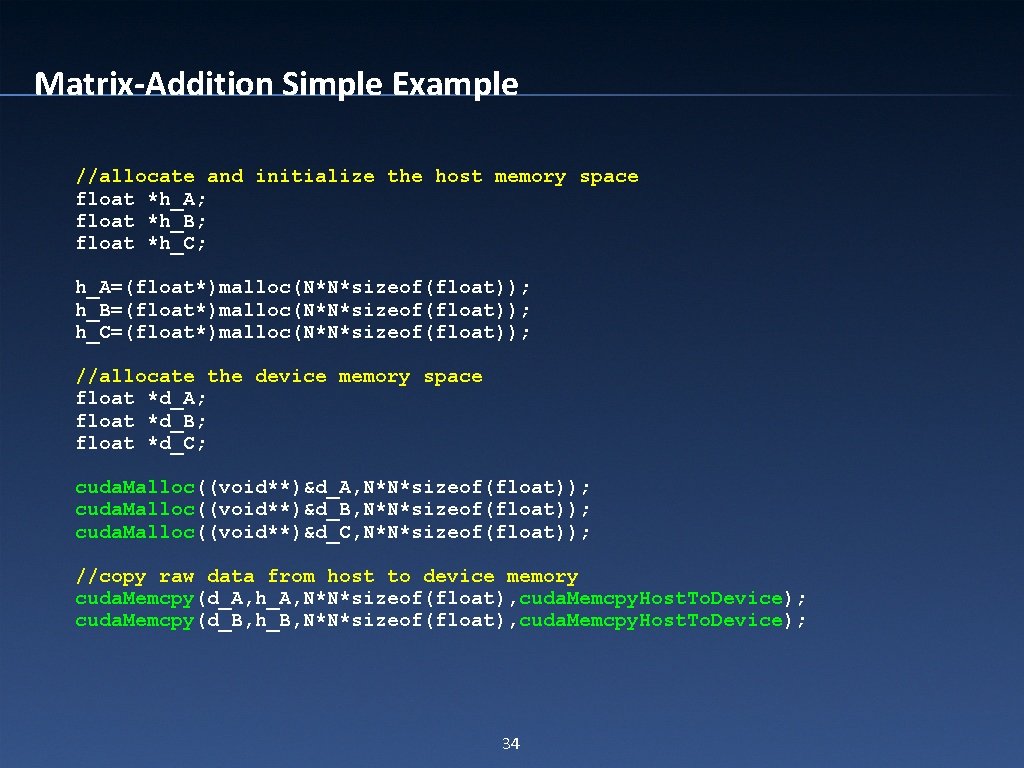

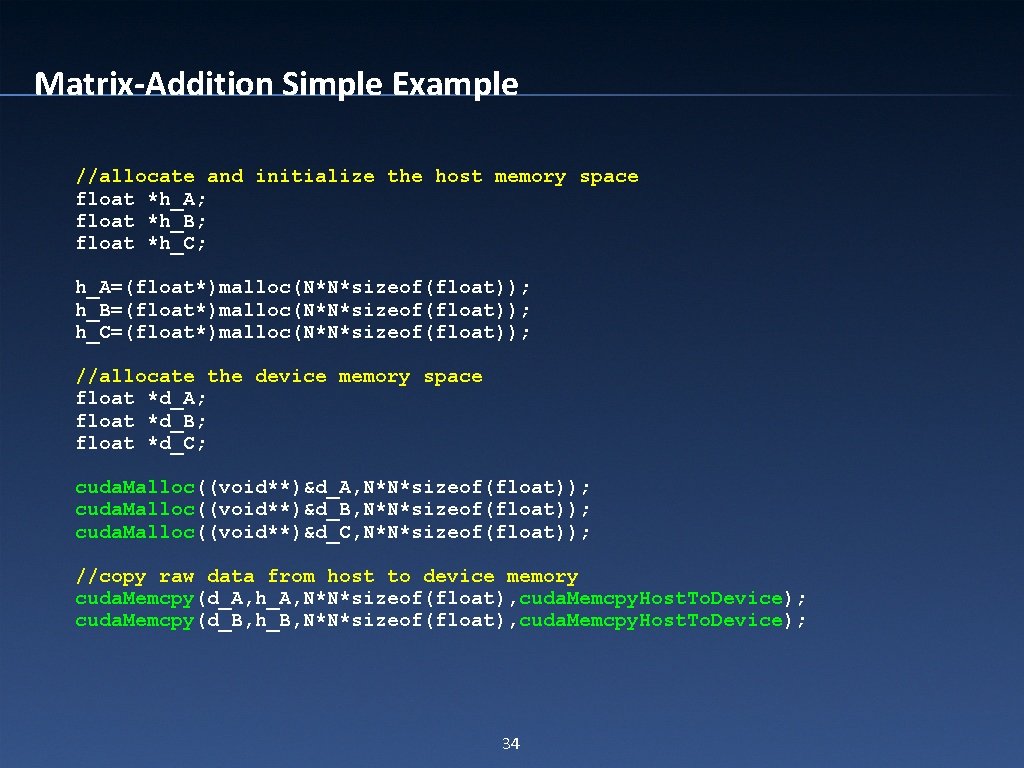

Matrix-Addition Simple Example //allocate and initialize the host memory space float *h_A; float *h_B; float *h_C; h_A=(float*)malloc(N*N*sizeof(float)); h_B=(float*)malloc(N*N*sizeof(float)); h_C=(float*)malloc(N*N*sizeof(float)); //allocate the device memory space float *d_A; float *d_B; float *d_C; cuda. Malloc((void**)&d_A, N*N*sizeof(float)); cuda. Malloc((void**)&d_B, N*N*sizeof(float)); cuda. Malloc((void**)&d_C, N*N*sizeof(float)); //copy raw data from host to device memory cuda. Memcpy(d_A, h_A, N*N*sizeof(float), cuda. Memcpy. Host. To. Device); cuda. Memcpy(d_B, h_B, N*N*sizeof(float), cuda. Memcpy. Host. To. Device); 34

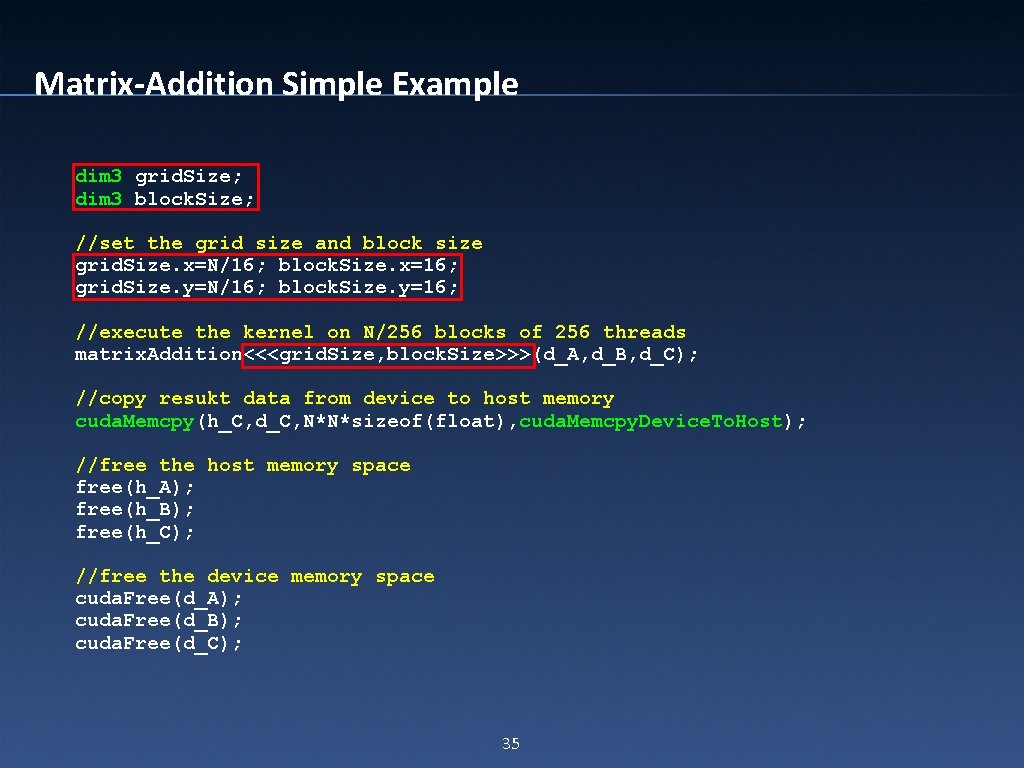

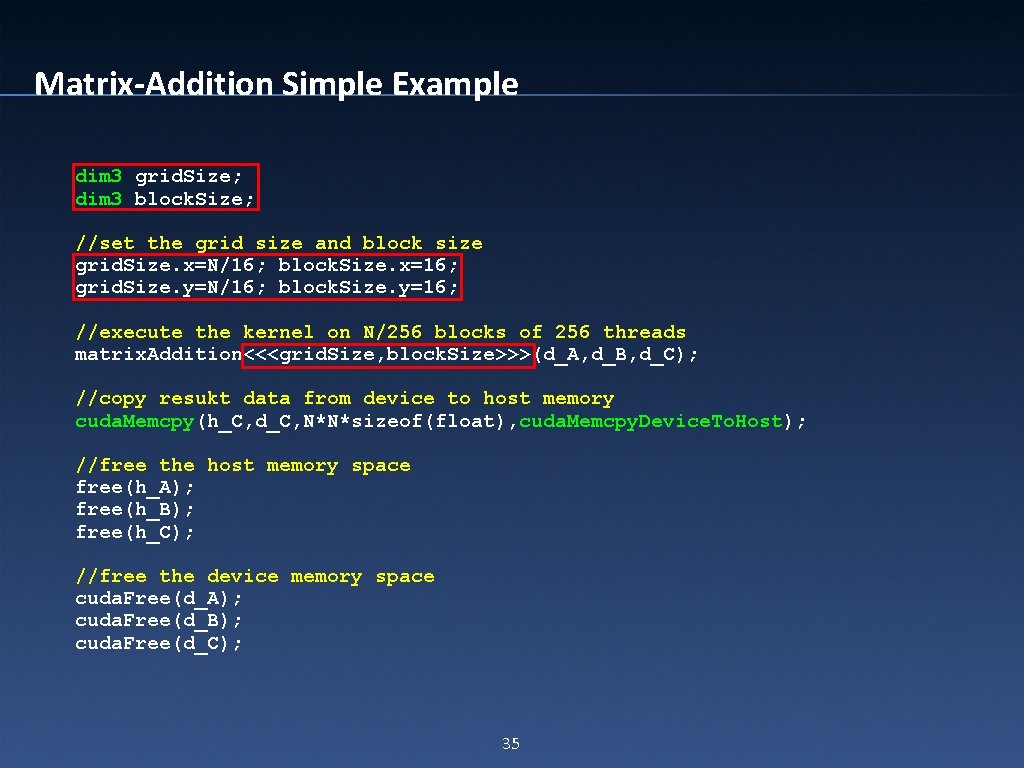

Matrix-Addition Simple Example dim 3 grid. Size; dim 3 block. Size; //set the grid size and block size grid. Size. x=N/16; block. Size. x=16; grid. Size. y=N/16; block. Size. y=16; //execute the kernel on N/256 blocks of 256 threads matrix. Addition<<<grid. Size, block. Size>>>(d_A, d_B, d_C); //copy resukt data from device to host memory cuda. Memcpy(h_C, d_C, N*N*sizeof(float), cuda. Memcpy. Device. To. Host); //free the host memory space free(h_A); free(h_B); free(h_C); //free the device memory space cuda. Free(d_A); cuda. Free(d_B); cuda. Free(d_C); 35

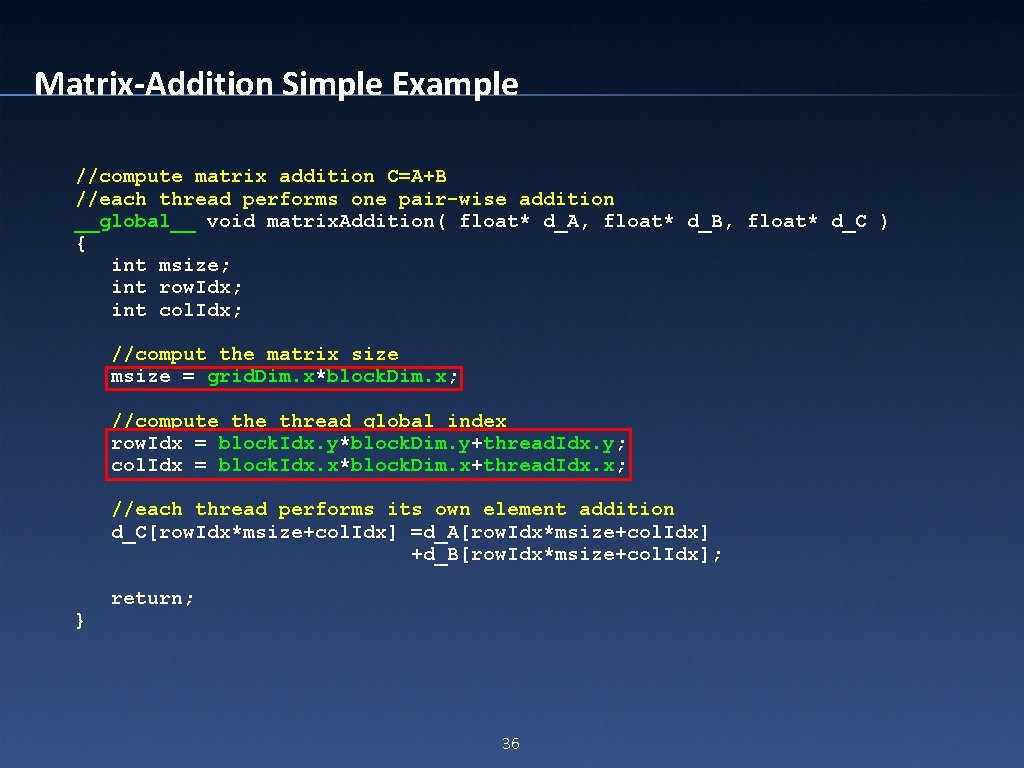

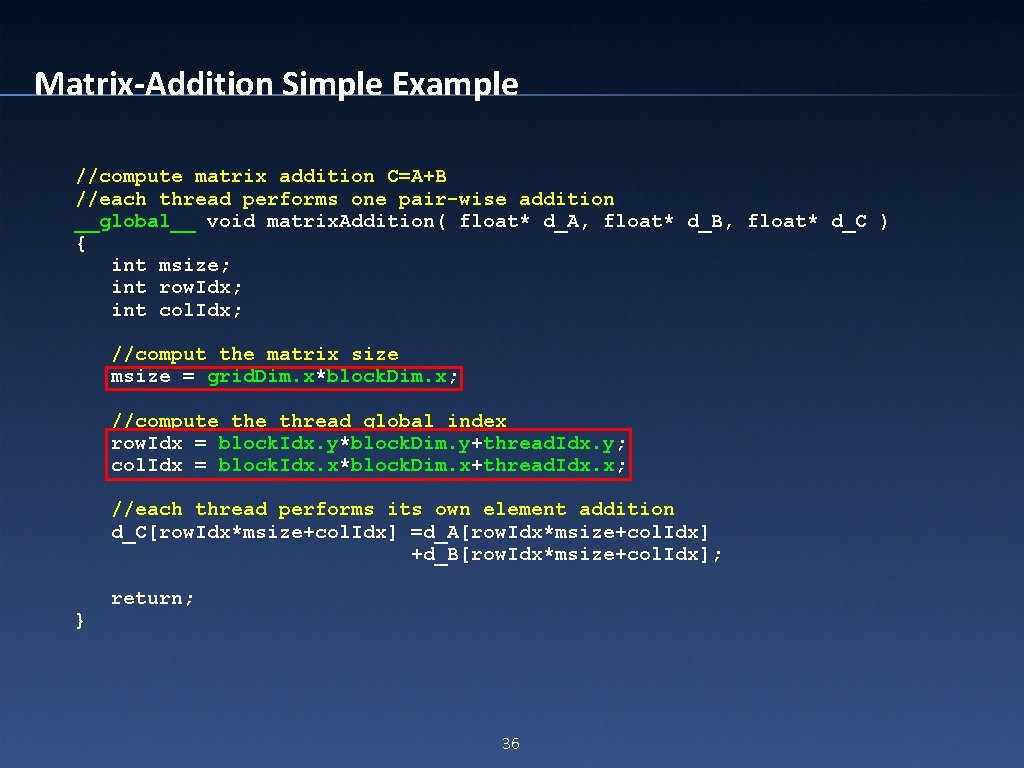

Matrix-Addition Simple Example //compute matrix addition C=A+B //each thread performs one pair-wise addition __global__ void matrix. Addition( float* d_A, float* d_B, float* d_C ) { int msize; int row. Idx; int col. Idx; //comput the matrix size msize = grid. Dim. x*block. Dim. x; //compute thread global index row. Idx = block. Idx. y*block. Dim. y+thread. Idx. y; col. Idx = block. Idx. x*block. Dim. x+thread. Idx. x; //each thread performs its own element addition d_C[row. Idx*msize+col. Idx] =d_A[row. Idx*msize+col. Idx] +d_B[row. Idx*msize+col. Idx]; } return; 36

www. cuda. scormedu. com Email: cuda@scormedu. com