CUDA Programming Model Xing Zeng, Dongyue Mou




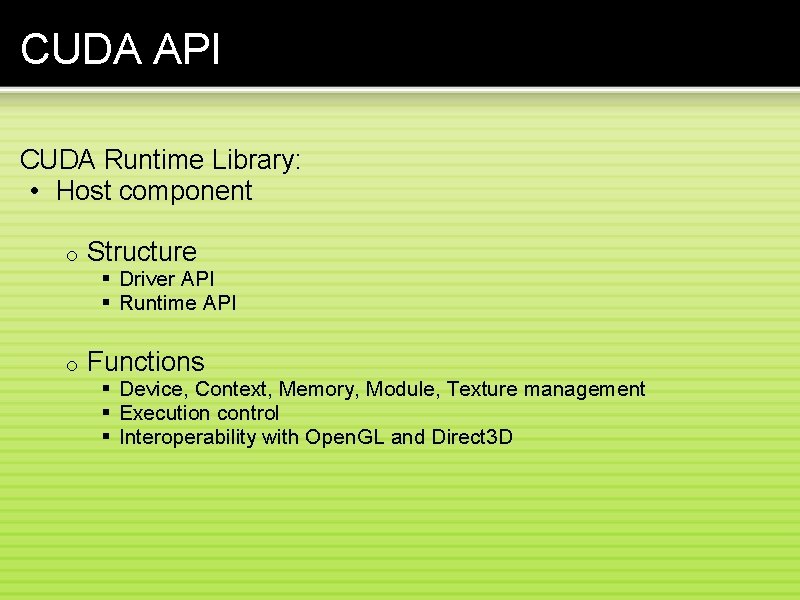

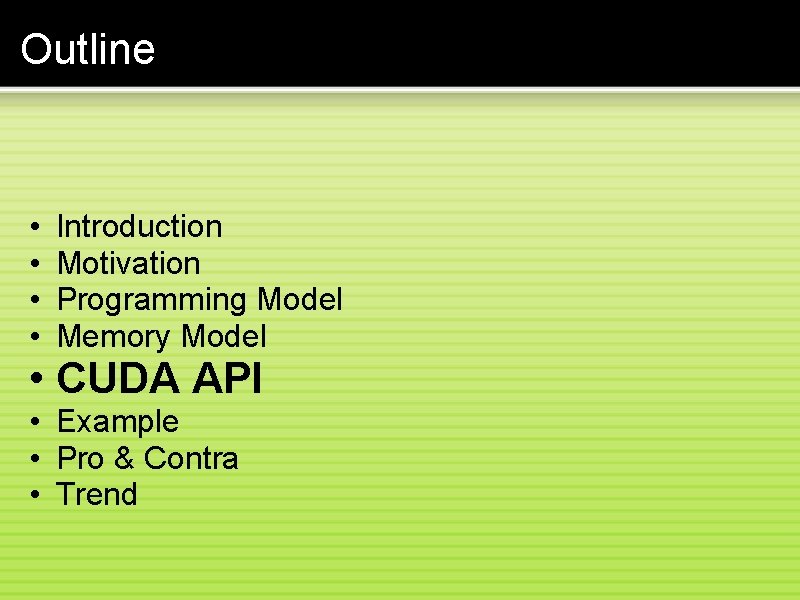

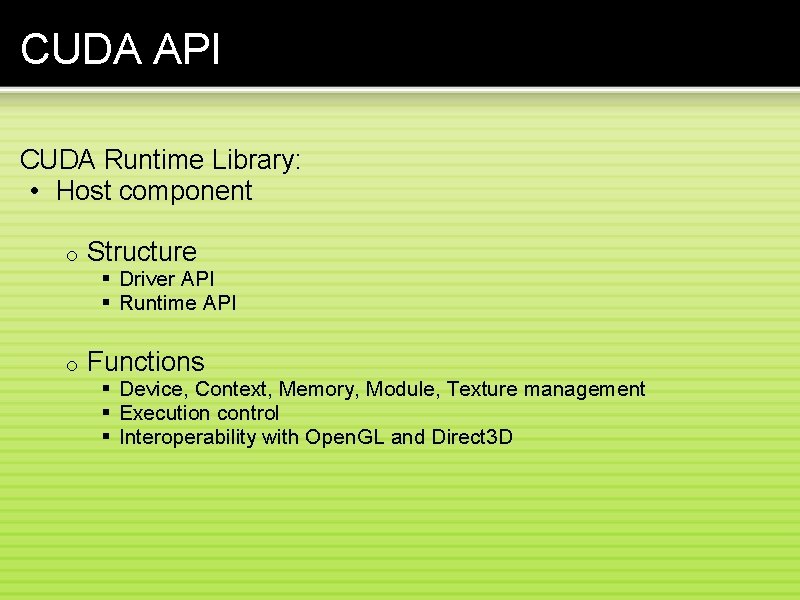

Outline • Introduction • Motivation • Programming Model • Memory Model • CUDA API • Example • Pro & Contra • Trend


Introduction What is CUDA? - Compute Unified Device Architecture. - A powerful parallel programming model for issuing and managing computations on the GPU without mapping them to a graphics API. • Heterogeneous: mixed serial-parallel programming • Scalable: hierarchical thread execution model • Accessible: minimal but expressive changes to C

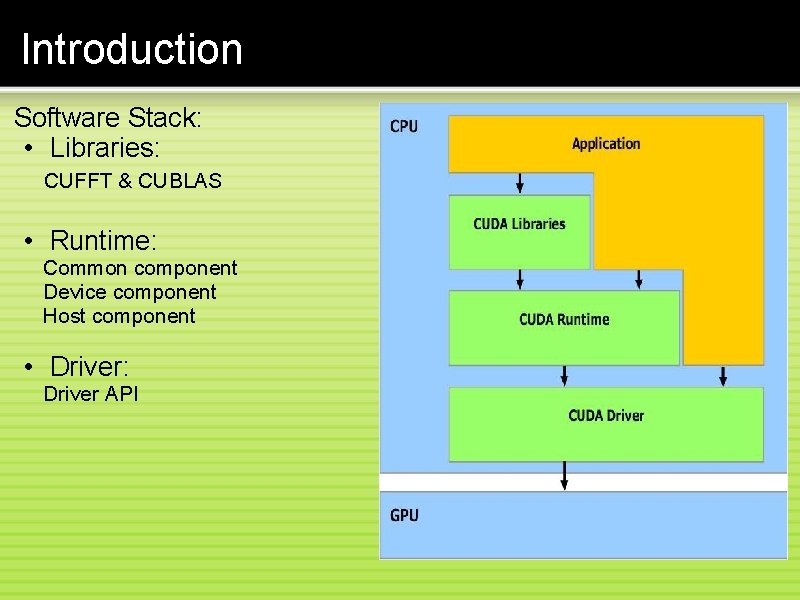

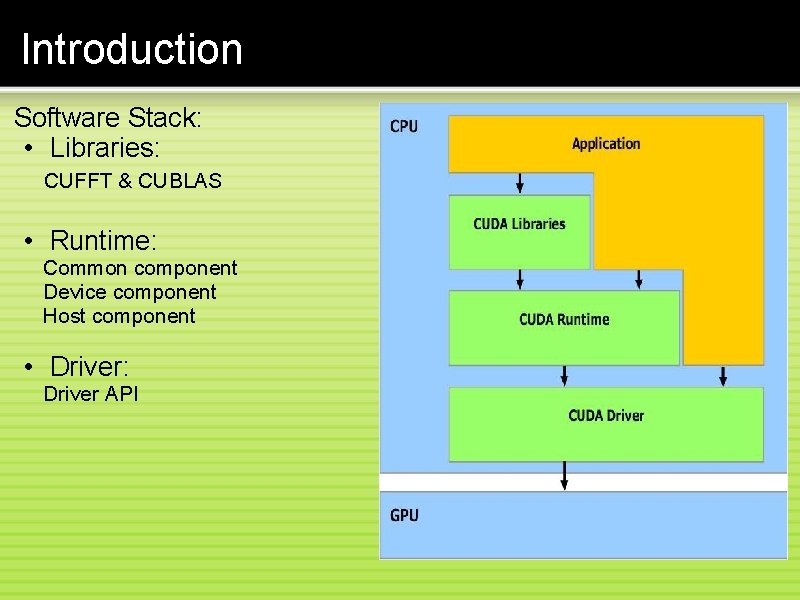

Introduction Software Stack: • Libraries: CUFFT & CUBLAS • Runtime: common, device, and host components • Driver: Driver API

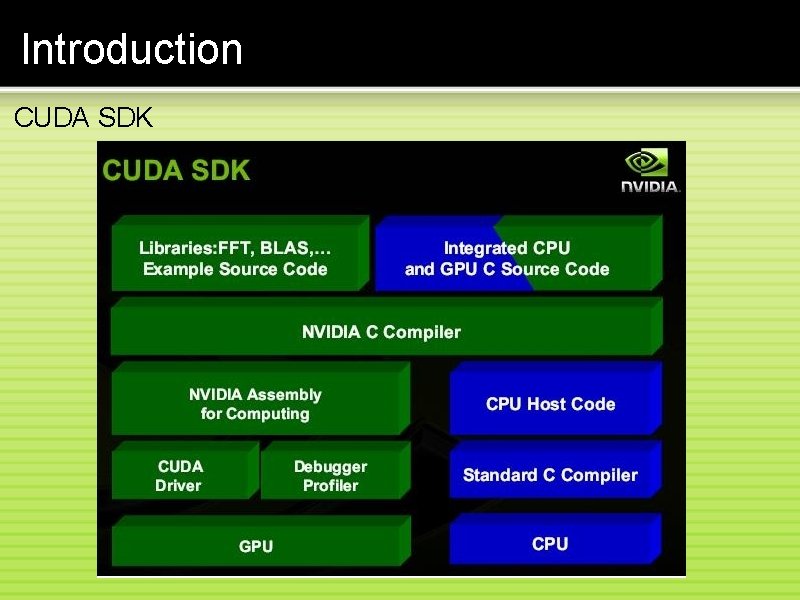

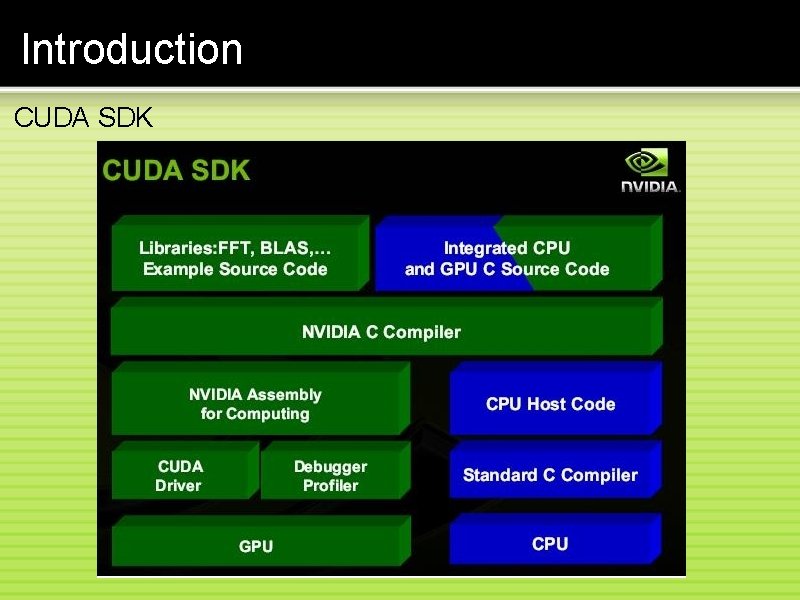

Introduction CUDA SDK


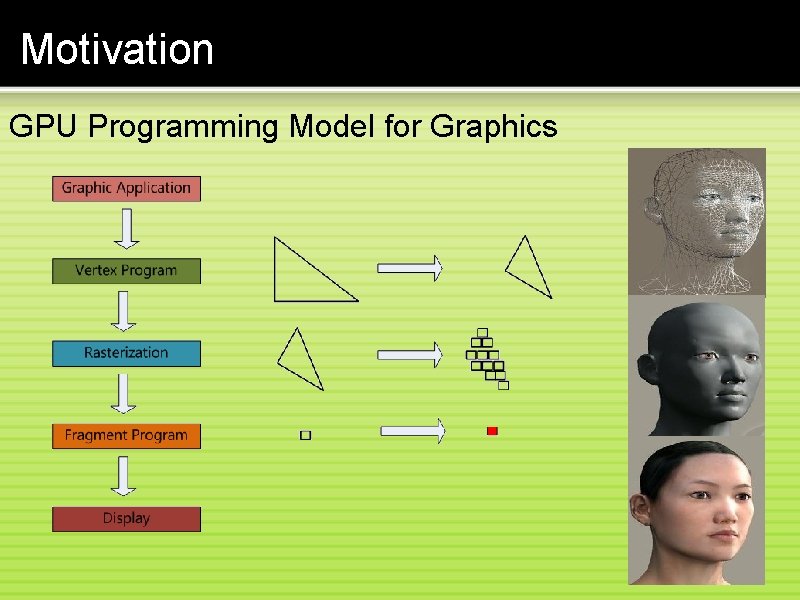

Motivation GPU Programming Model GPGPU Programming Model CUDA Programming Model


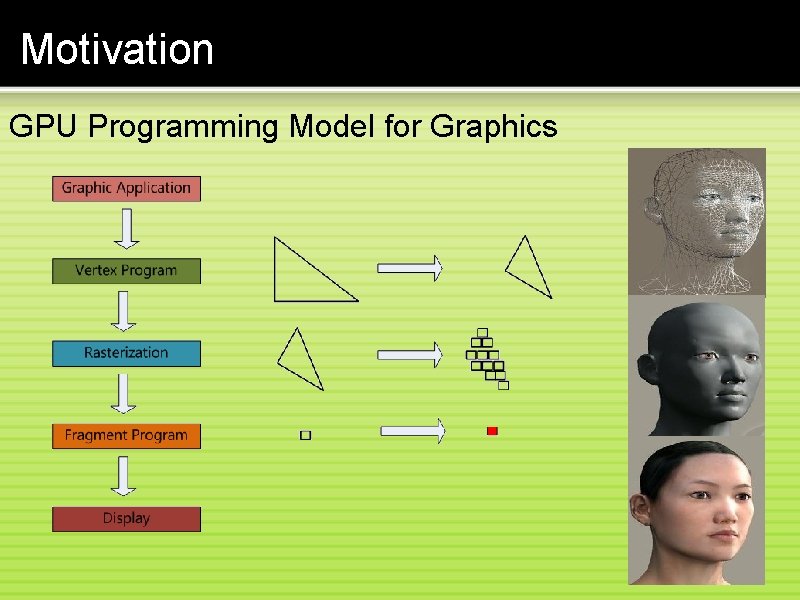

Motivation GPU Programming Model for Graphics


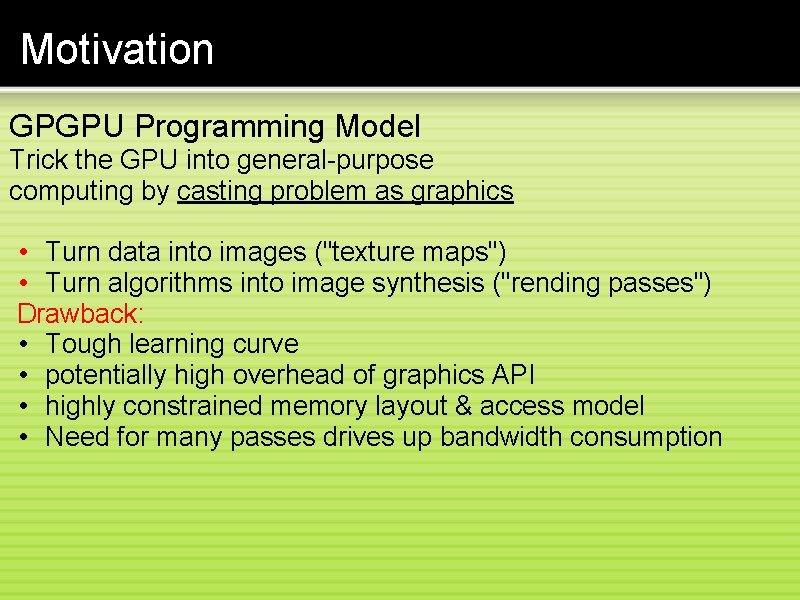

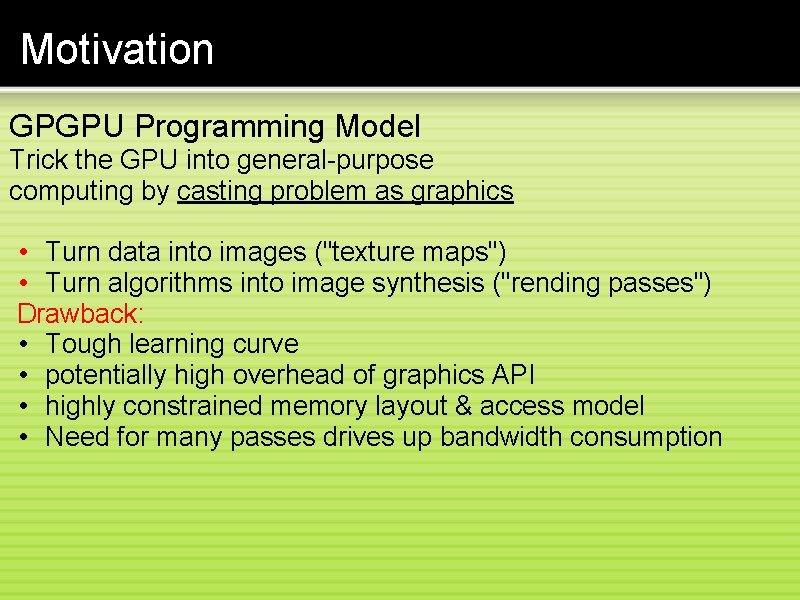

Motivation GPGPU Programming Model Trick the GPU into general-purpose computing by casting the problem as graphics • Turn data into images ("texture maps") • Turn algorithms into image synthesis ("rendering passes") Drawbacks: • Steep learning curve • Potentially high overhead of the graphics API • Highly constrained memory layout & access model • The need for many passes drives up bandwidth consumption

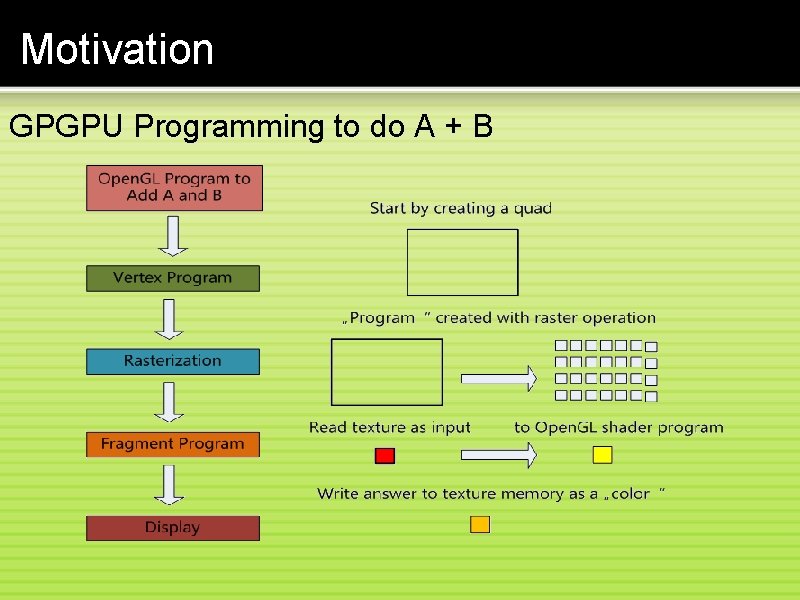

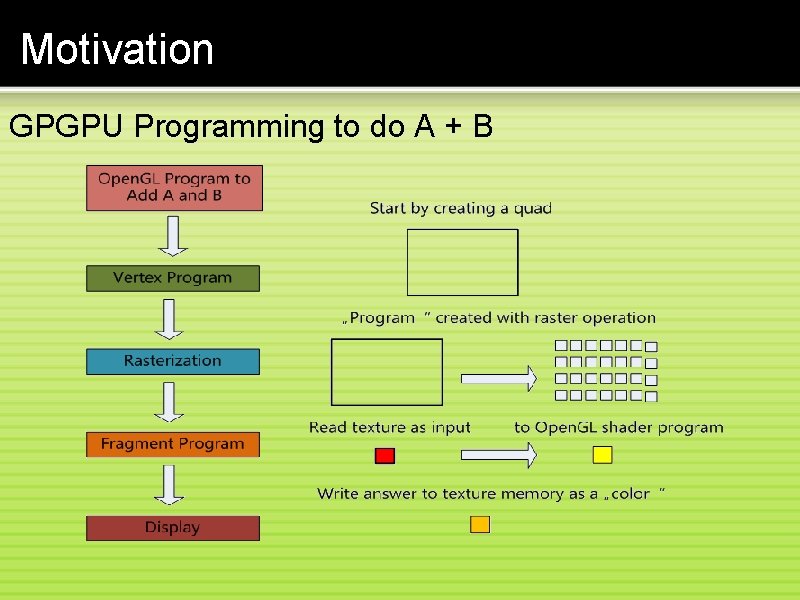

Motivation GPGPU programming to compute A + B

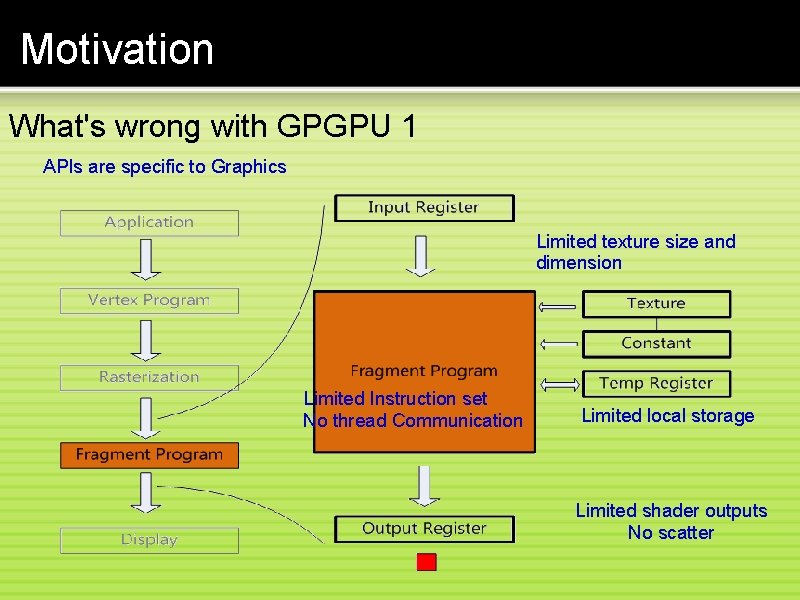

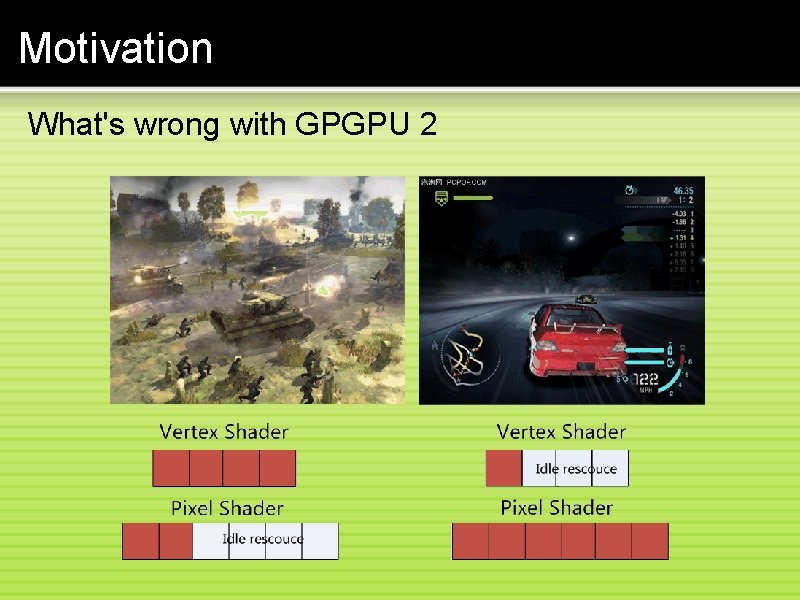

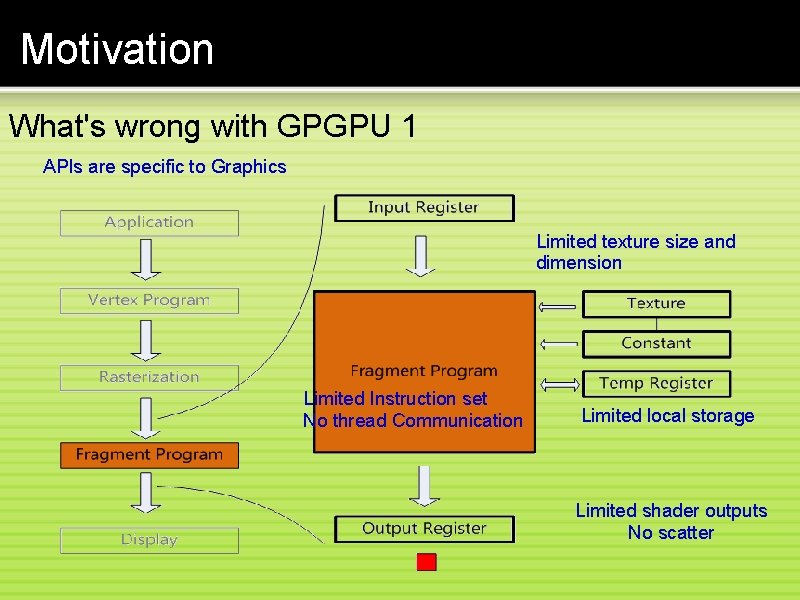

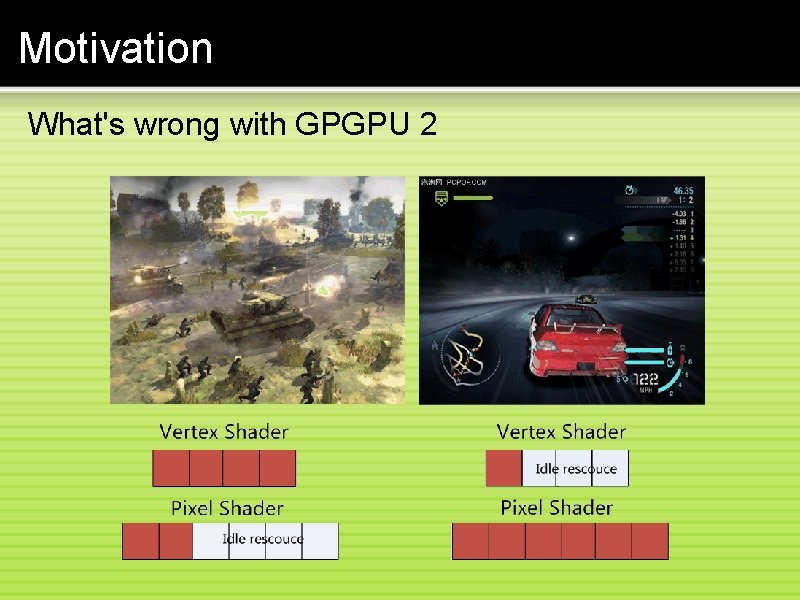
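For contrast with the texture-and-rendering-pass approach shown above, the same A + B can be written directly as a CUDA kernel. This is a minimal sketch, assuming a CUDA-capable device; the names `vecAdd` and `addOnGpu` are illustrative, and error checking is omitted for brevity.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Each thread computes one element: no textures, no rendering passes.
__global__ void vecAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global thread index
    if (i < n) c[i] = a[i] + b[i];                  // guard the partially filled last block
}

// Host side: copy A and B to the device, launch, copy C back.
std::vector<float> addOnGpu(const std::vector<float>& a, const std::vector<float>& b) {
    int n = static_cast<int>(a.size());
    size_t bytes = n * sizeof(float);
    float *dA, *dB, *dC;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, a.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, b.data(), bytes, cudaMemcpyHostToDevice);

    int threads = 256;
    int blocks = (n + threads - 1) / threads;       // round up so every element is covered
    vecAdd<<<blocks, threads>>>(dA, dB, dC, n);

    std::vector<float> c(n);
    cudaMemcpy(c.data(), dC, bytes, cudaMemcpyDeviceToHost);  // waits for the kernel
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return c;
}
```

One pass, plain pointers, and an arbitrary output index replace the texture setup and render-to-texture steps.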

Motivation What's wrong with GPGPU 1 • APIs are specific to graphics • Limited texture size and dimension • Limited instruction set • No thread communication • Limited local storage • Limited shader outputs • No scatter

Motivation What's wrong with GPGPU 2



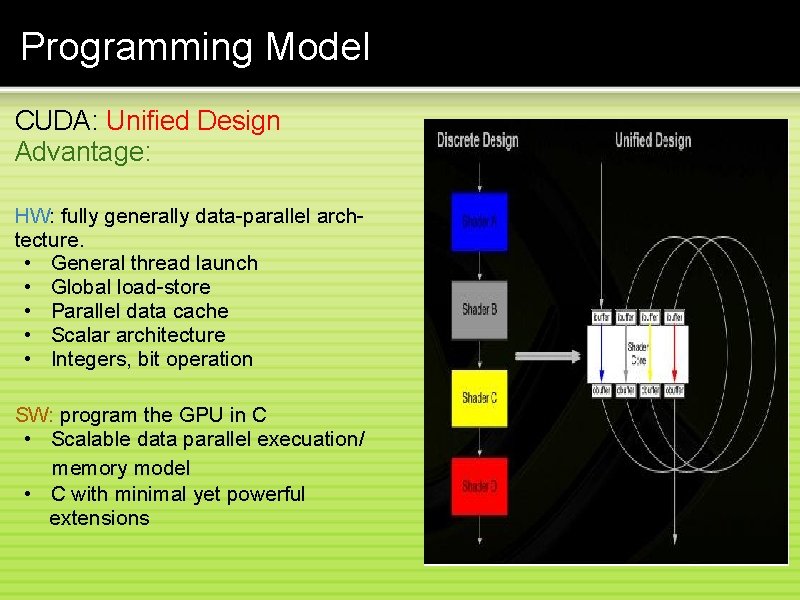

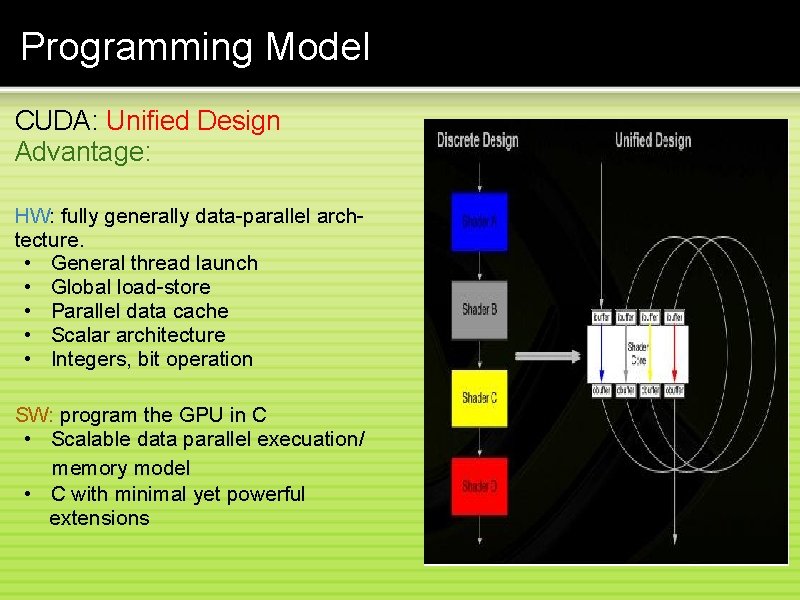

Programming Model CUDA: Unified Design Advantage: HW: fully general data-parallel architecture • General thread launch • Global load-store • Parallel data cache • Scalar architecture • Integers, bit operations SW: program the GPU in C • Scalable data-parallel execution/memory model • C with minimal yet powerful extensions

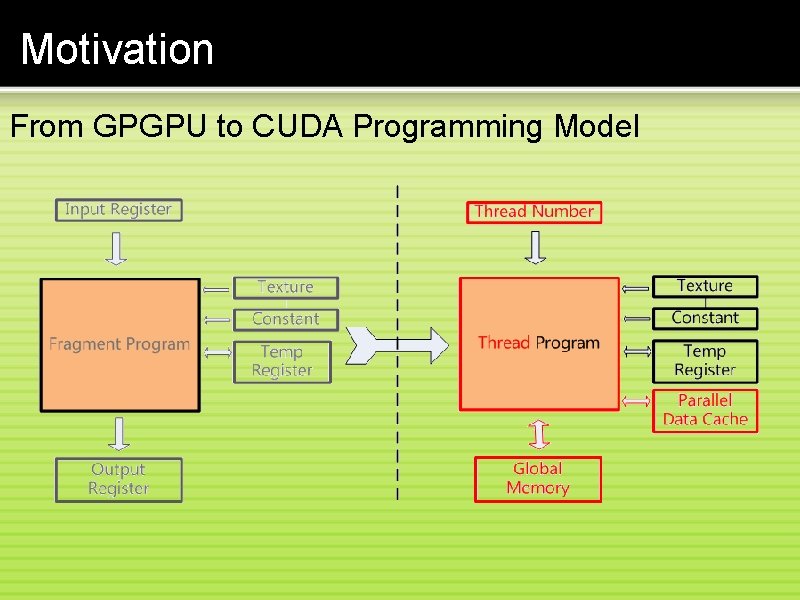

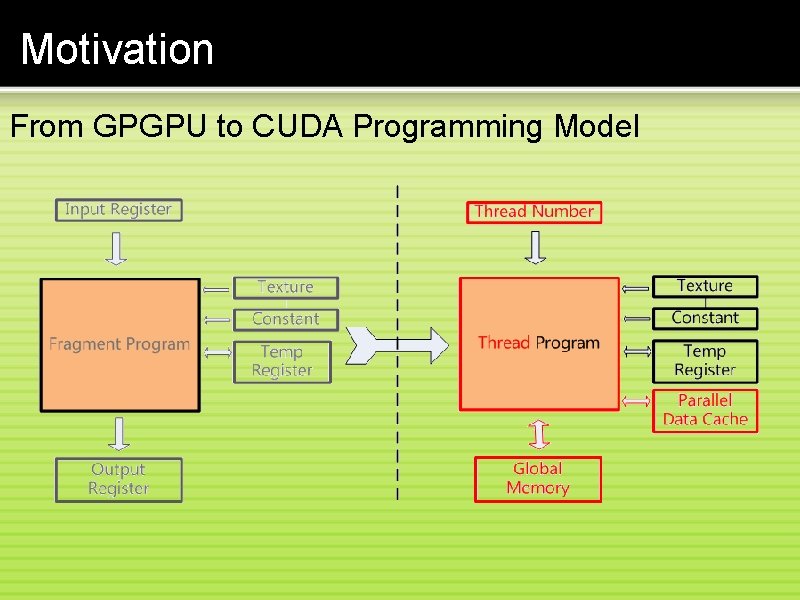

Motivation From GPGPU to CUDA Programming Model

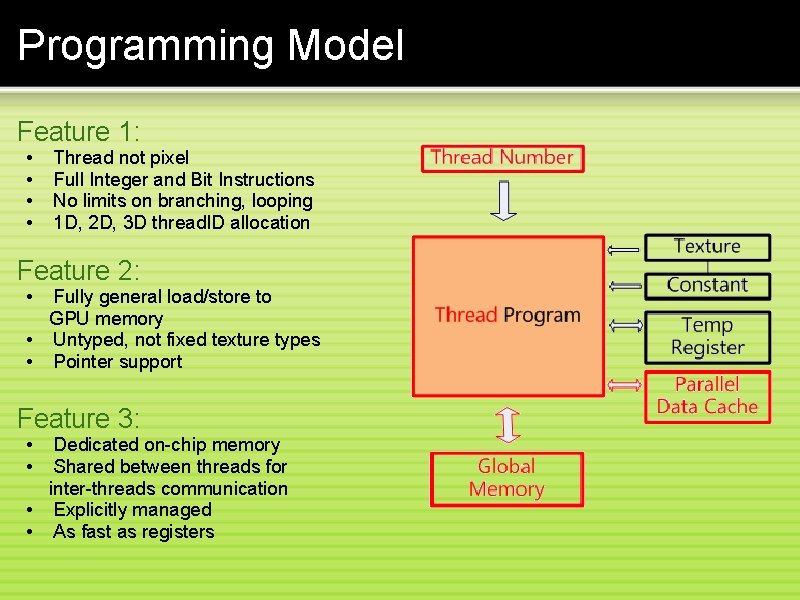

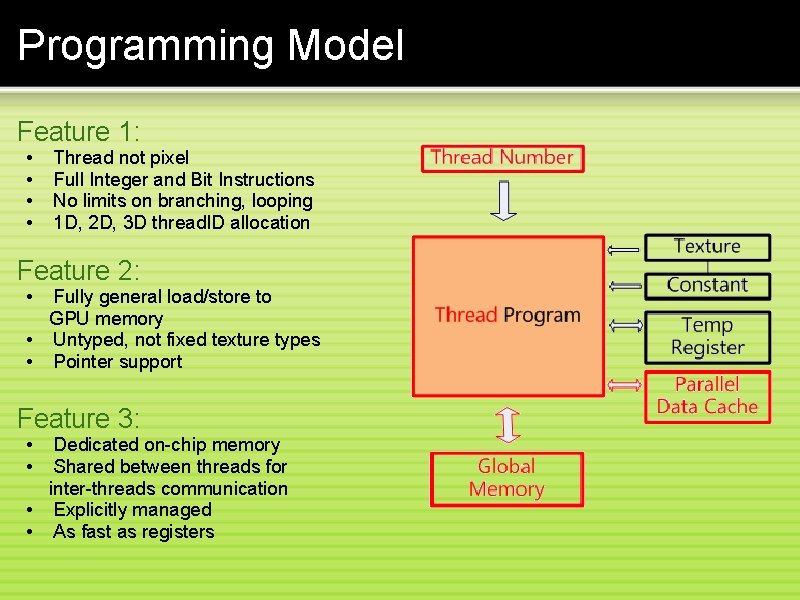

Programming Model Feature 1: • Threads, not pixels • Full integer and bit instructions • No limits on branching, looping • 1D, 2D, 3D thread ID allocation Feature 2: • Fully general load/store to GPU memory • Untyped, not fixed texture types • Pointer support Feature 3: • Dedicated on-chip memory • Shared between threads for inter-thread communication • Explicitly managed • As fast as registers
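Features 1 and 2 together make "scatter" possible: a thread can compute an address with integer and bit operations and write through a pointer to it, which the GPGPU model above could not do. The sketch below is a hypothetical 16-bin histogram; `histogram` and `histogramOnGpu` are illustrative names. It uses global-memory `atomicAdd`, which requires compute capability 1.1 or later, so it would not run on the original G80 (compute capability 1.0).

```cuda
#include <cuda_runtime.h>
#include <vector>

#define NUM_BINS 16

__global__ void histogram(const unsigned int* data, int n, unsigned int* bins) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        unsigned int bin = data[i] & (NUM_BINS - 1);  // integer/bit instruction picks the bin
        atomicAdd(&bins[bin], 1u);                    // scatter: data-dependent write address
    }
}

std::vector<unsigned int> histogramOnGpu(const std::vector<unsigned int>& data) {
    int n = static_cast<int>(data.size());
    unsigned int *dData, *dBins;
    cudaMalloc(&dData, n * sizeof(unsigned int));
    cudaMalloc(&dBins, NUM_BINS * sizeof(unsigned int));
    cudaMemcpy(dData, data.data(), n * sizeof(unsigned int), cudaMemcpyHostToDevice);
    cudaMemset(dBins, 0, NUM_BINS * sizeof(unsigned int));  // start every bin at zero

    int threads = 128;
    histogram<<<(n + threads - 1) / threads, threads>>>(dData, n, dBins);

    std::vector<unsigned int> bins(NUM_BINS);
    cudaMemcpy(bins.data(), dBins, NUM_BINS * sizeof(unsigned int), cudaMemcpyDeviceToHost);
    cudaFree(dData);
    cudaFree(dBins);
    return bins;
}
```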

Programming Model Important Concepts: • Device: the GPU, viewed as a co-processor • Host: the CPU • Kernel: data-parallel, compute-intensive portions of the application running on the device

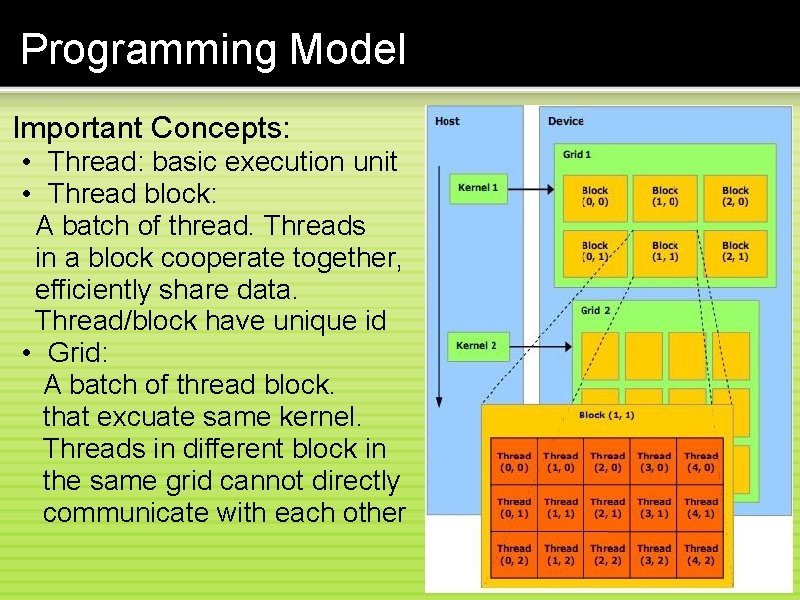

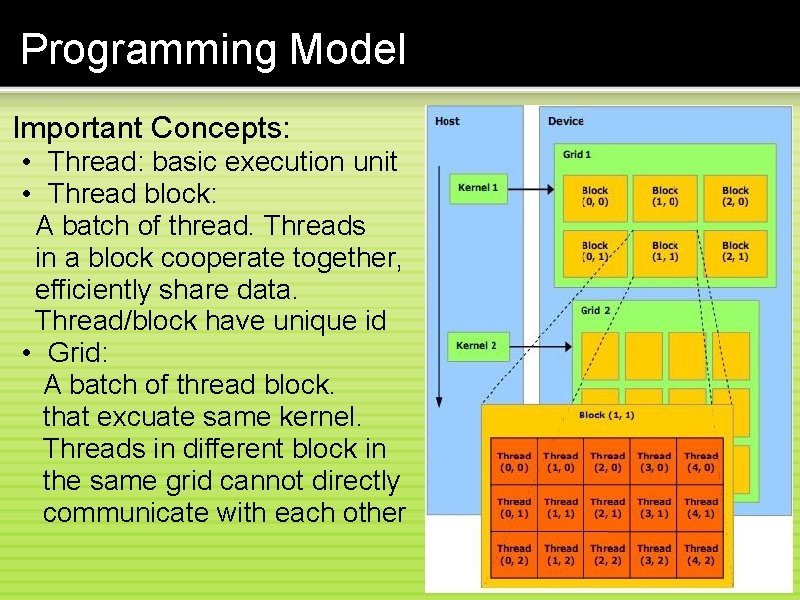

Programming Model Important Concepts: • Thread: basic execution unit • Thread block: a batch of threads. Threads in a block cooperate and efficiently share data. Each thread and each block has a unique ID • Grid: a batch of thread blocks that execute the same kernel. Threads in different blocks of the same grid cannot directly communicate with each other

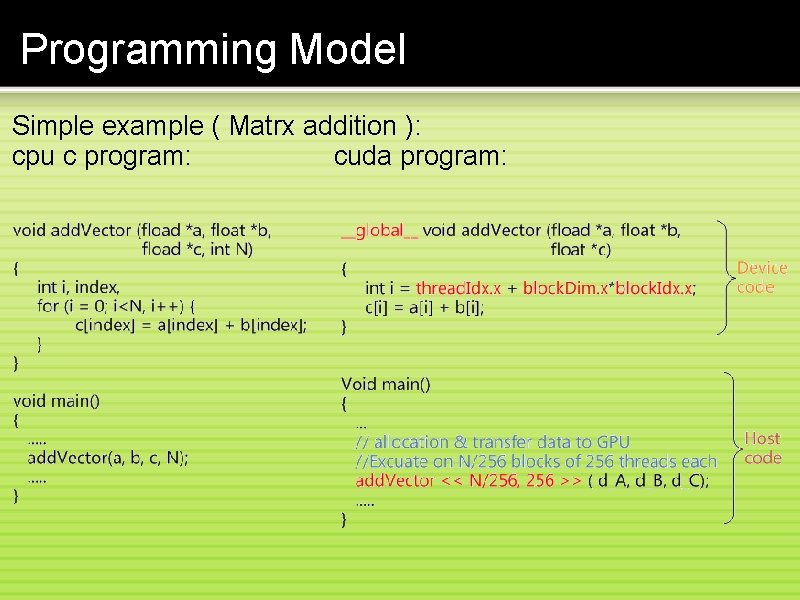

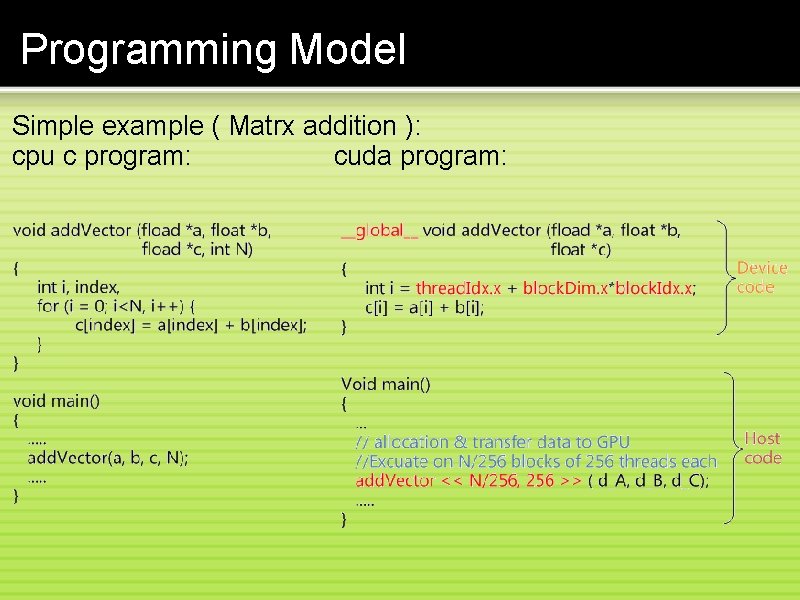

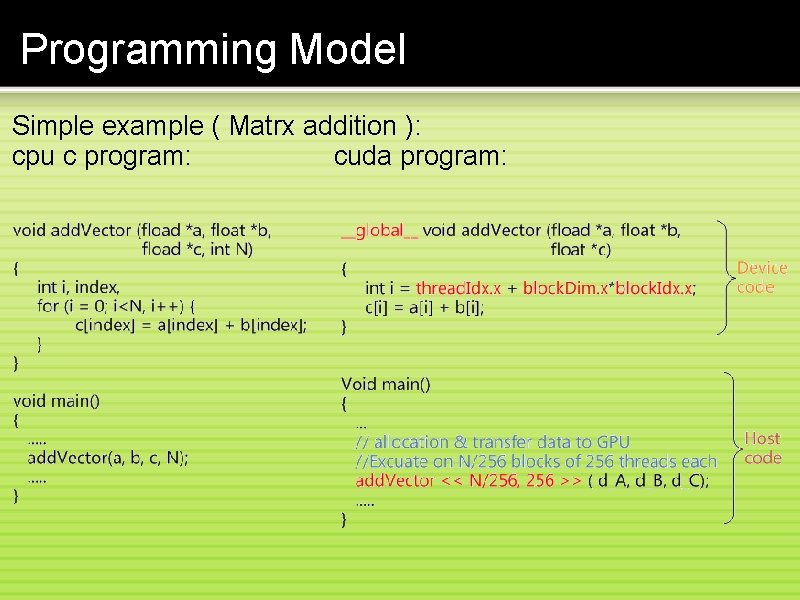

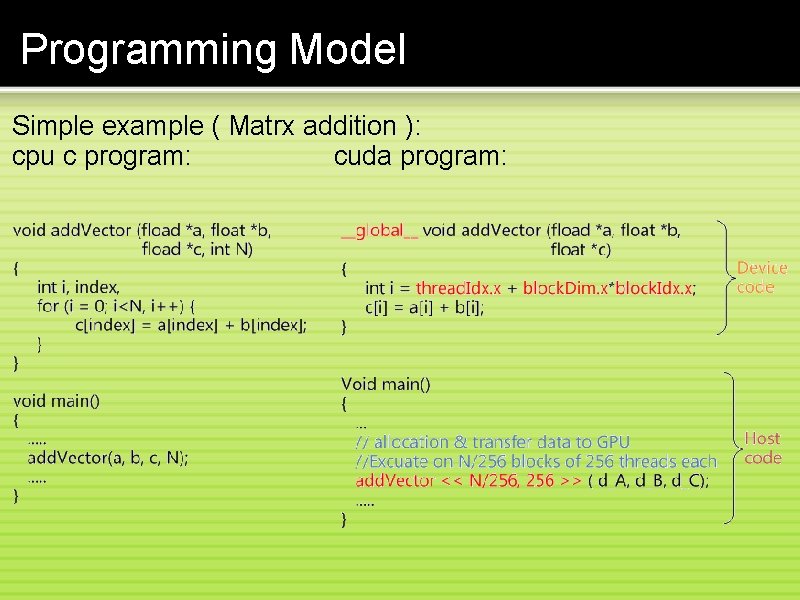
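The thread/block/grid hierarchy can be observed directly. In this sketch (the names `whoAmI` and `launchWhoAmI` are illustrative), each thread writes `blockIdx.x * 1000 + threadIdx.x` at its global position, so the output shows which block and which thread within that block handled each element. The grid size is rounded up so that `n` need not be a multiple of the block size.

```cuda
#include <cuda_runtime.h>
#include <vector>

__global__ void whoAmI(int* out, int n) {
    int global = blockIdx.x * blockDim.x + threadIdx.x;  // unique index across the grid
    if (global < n) out[global] = blockIdx.x * 1000 + threadIdx.x;
}

std::vector<int> launchWhoAmI(int n, int threadsPerBlock) {
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // enough blocks to cover n
    int* dOut;
    cudaMalloc(&dOut, n * sizeof(int));
    whoAmI<<<blocks, threadsPerBlock>>>(dOut, n);
    std::vector<int> out(n);
    cudaMemcpy(out.data(), dOut, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dOut);
    return out;
}
```

With `n = 10` and 4 threads per block, three blocks are launched. Element 5 is handled by block 1, thread 1, and the last two threads of block 2 fail the `global < n` guard and do nothing.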

Programming Model Simple example (matrix addition): CPU C program vs. CUDA program

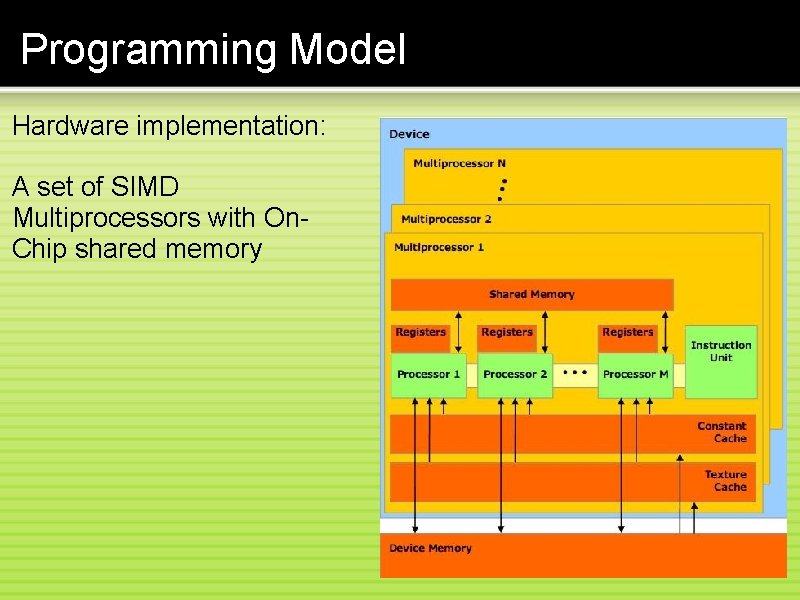

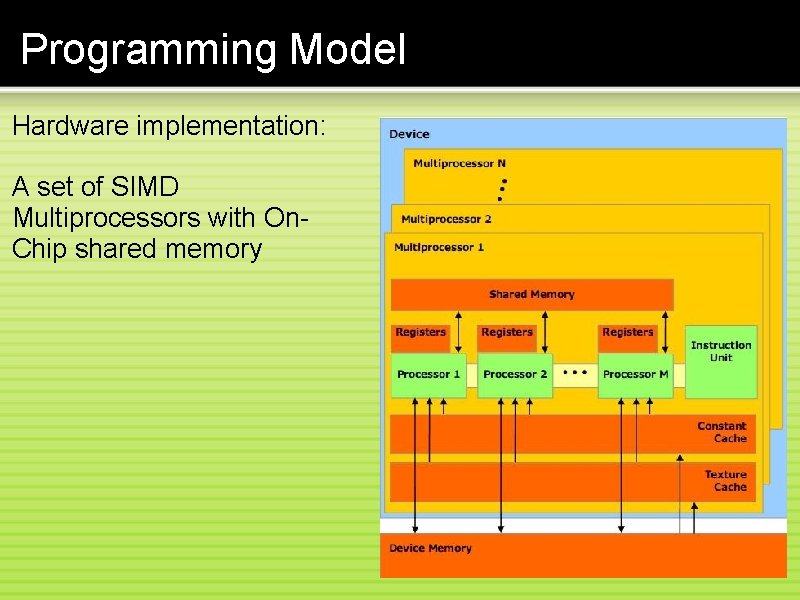
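The slide's code is only available as images, so what follows is a reconstruction of the idea and not the authors' code: a CPU loop nest next to a CUDA kernel in which each thread of a 2D grid handles one (row, column) element. The flat row-major layout, the 16×16 block shape, and all function names are assumptions made for illustration.

```cuda
#include <cuda_runtime.h>
#include <vector>

// CPU version: two nested loops over rows and columns.
void matAddCpu(const float* A, const float* B, float* C, int rows, int cols) {
    for (int r = 0; r < rows; ++r)
        for (int c = 0; c < cols; ++c)
            C[r * cols + c] = A[r * cols + c] + B[r * cols + c];
}

// CUDA version: the loops disappear; each thread handles one (row, col).
__global__ void matAddGpu(const float* A, const float* B, float* C, int rows, int cols) {
    int c = blockIdx.x * blockDim.x + threadIdx.x;  // column from the x dimension
    int r = blockIdx.y * blockDim.y + threadIdx.y;  // row from the y dimension
    if (r < rows && c < cols)
        C[r * cols + c] = A[r * cols + c] + B[r * cols + c];
}

std::vector<float> matAddOnGpu(const std::vector<float>& A, const std::vector<float>& B,
                               int rows, int cols) {
    size_t bytes = static_cast<size_t>(rows) * cols * sizeof(float);
    float *dA, *dB, *dC;
    cudaMalloc(&dA, bytes);
    cudaMalloc(&dB, bytes);
    cudaMalloc(&dC, bytes);
    cudaMemcpy(dA, A.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dB, B.data(), bytes, cudaMemcpyHostToDevice);

    dim3 block(16, 16);                                  // 256 threads per block
    dim3 grid((cols + block.x - 1) / block.x,            // round up in both dimensions
              (rows + block.y - 1) / block.y);
    matAddGpu<<<grid, block>>>(dA, dB, dC, rows, cols);

    std::vector<float> C(static_cast<size_t>(rows) * cols);
    cudaMemcpy(C.data(), dC, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return C;
}
```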

Programming Model Hardware implementation: a set of SIMD multiprocessors with on-chip shared memory

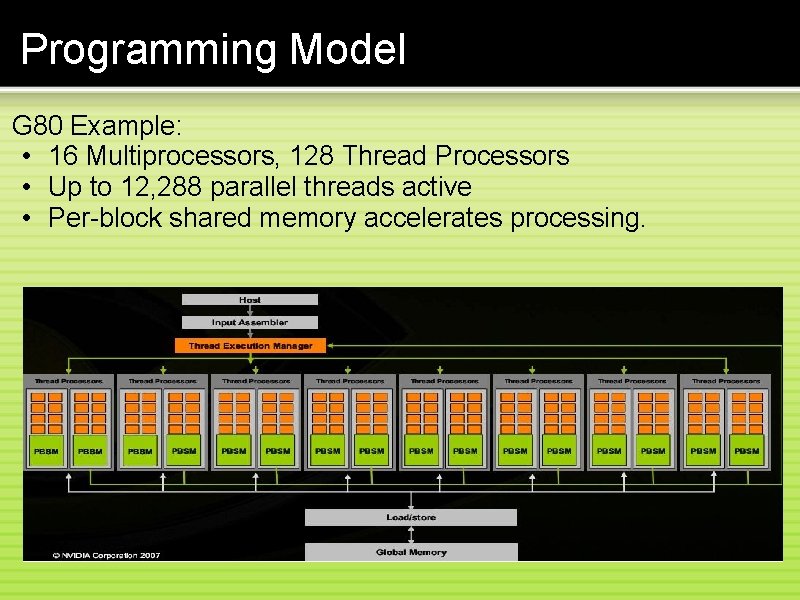

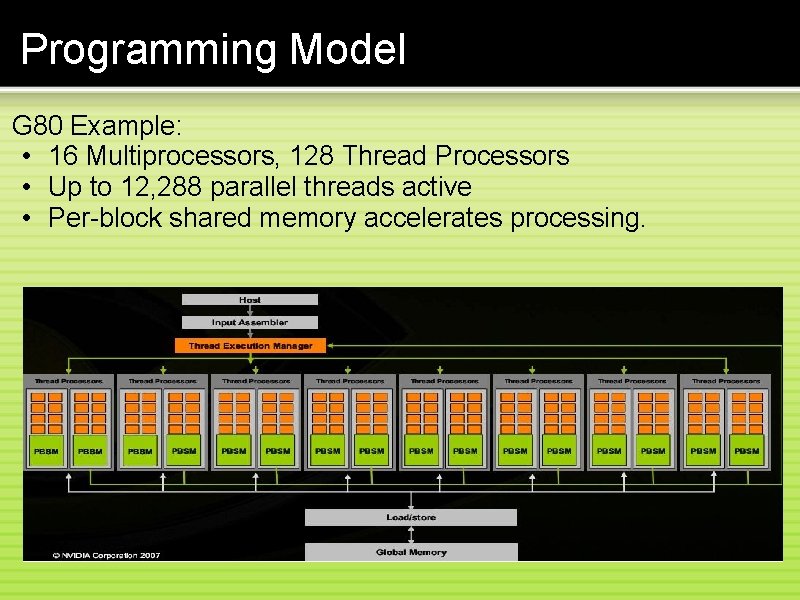

Programming Model G80 Example: • 16 multiprocessors, 128 thread processors • Up to 12,288 parallel threads active • Per-block shared memory accelerates processing.

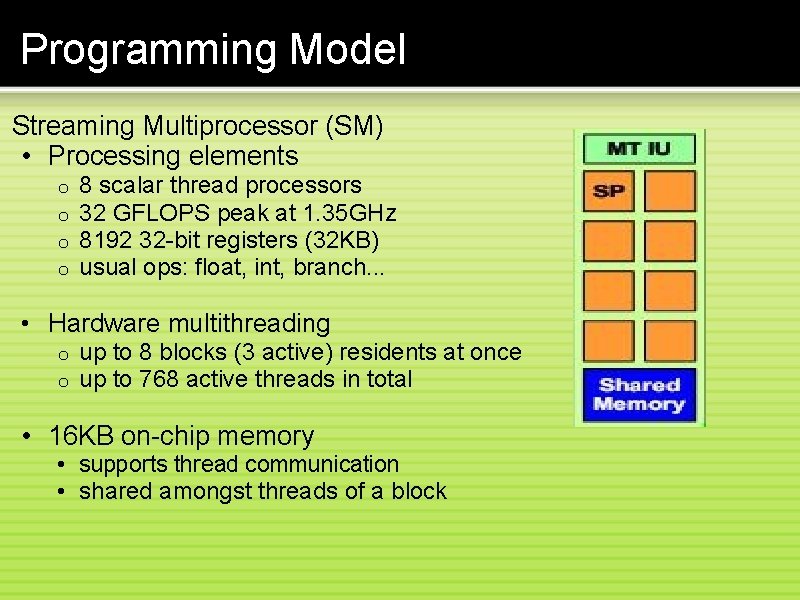

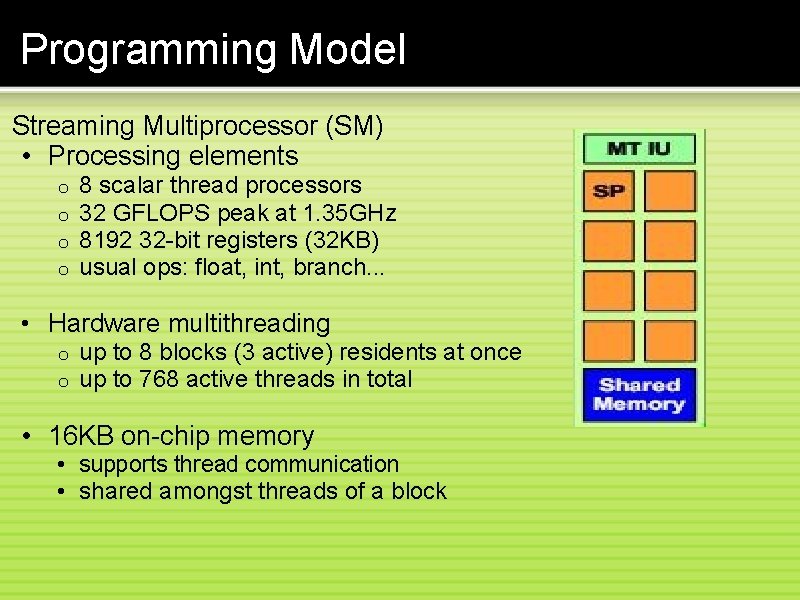

Programming Model Streaming Multiprocessor (SM) • Processing elements o 8 scalar thread processors o 32 GFLOPS peak at 1.35 GHz o 8192 32-bit registers (32 KB) o usual ops: float, int, branch, ... • Hardware multithreading o up to 8 blocks resident at once (3 active) o up to 768 active threads in total • 16 KB on-chip memory o supports thread communication o shared amongst threads of a block

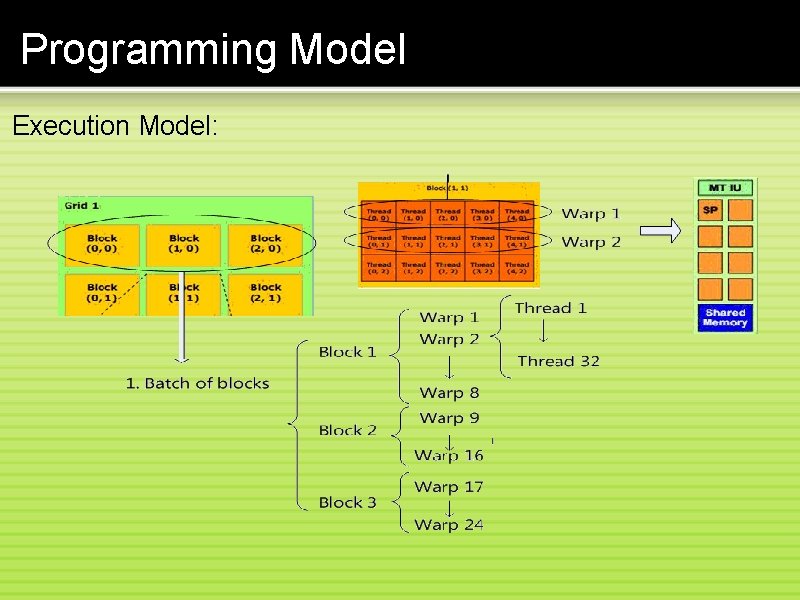

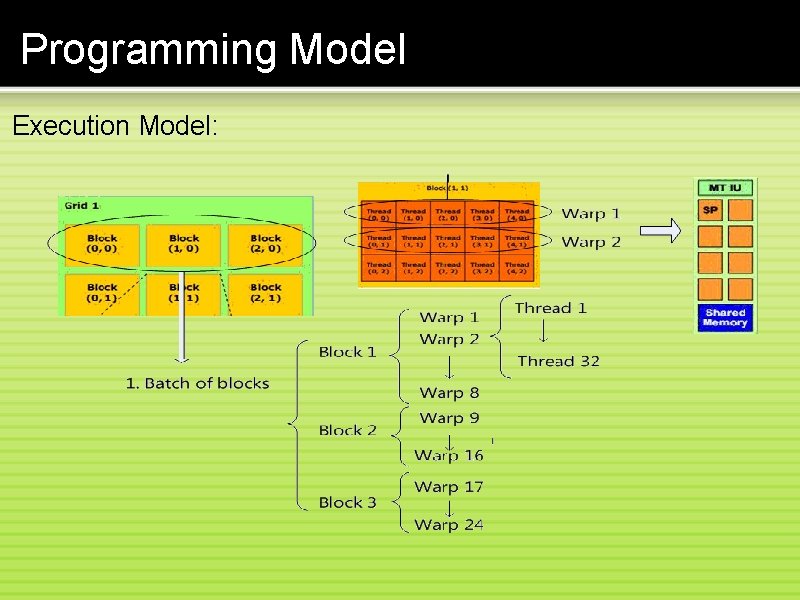
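The G80 and SM figures quoted on these slides are consistent with one another, and the worked check below shows how. Two assumptions are involved. First, 3 flops per clock per thread processor (a multiply-add plus a separate multiply), which is the figure commonly quoted for G80. Second, the register estimate ignores the hardware's register allocation granularity.

$$
\begin{aligned}
\text{warps per SM} &= \frac{768\ \text{threads}}{32\ \text{threads/warp}} = 24 \\
\text{active threads on G80} &= 16\ \text{SMs} \times 768 = 12{,}288 \\
\text{register file per SM} &= 8192 \times 4\ \text{B} = 32\ \text{KB} \\
\text{registers per thread at 768 threads} &= \left\lfloor \frac{8192}{768} \right\rfloor = 10 \\
\text{peak per SM} &= 8 \times 1.35\ \text{GHz} \times 3\ \text{flops} \approx 32.4\ \text{GFLOPS}
\end{aligned}
$$

The 24 warps per SM is the same pool that the SIMT scheduler picks from on each instruction slot. It also means that a kernel using more than about 10 registers per thread cannot keep all 768 thread slots occupied.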

Programming Model Execution Model:

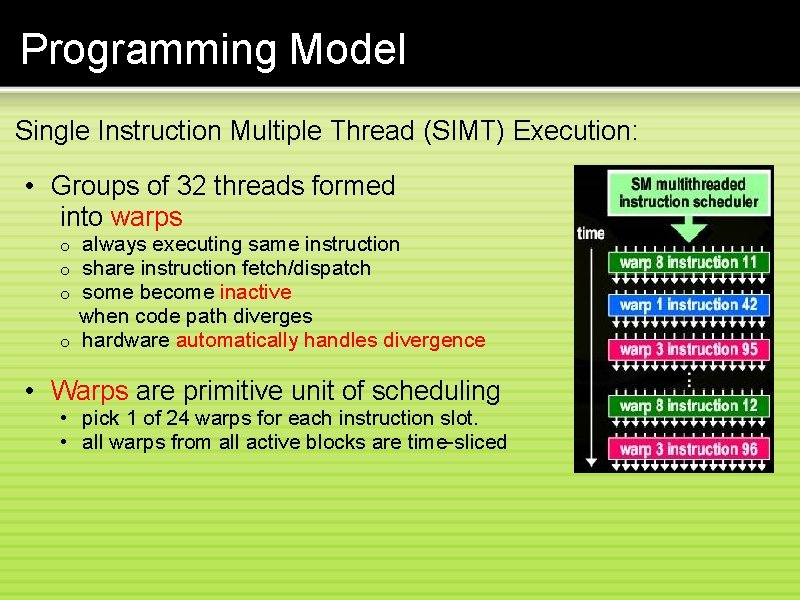

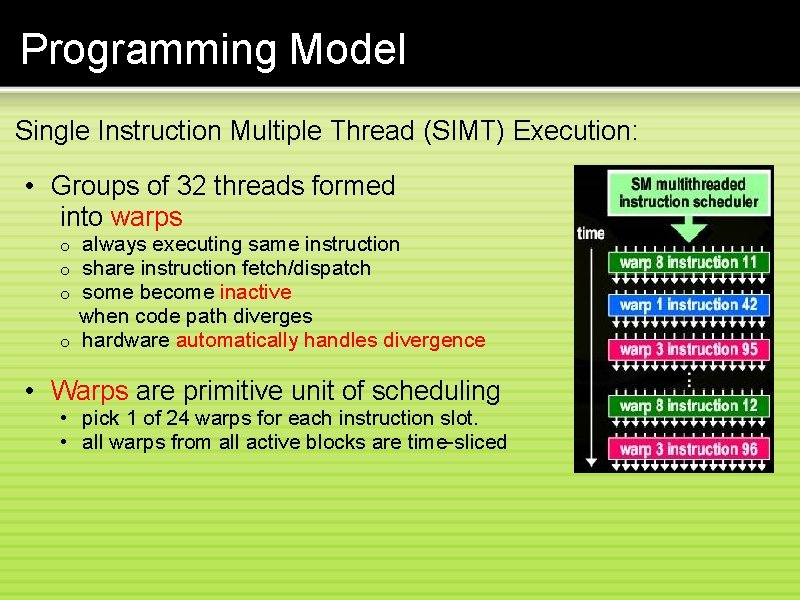

Programming Model Single Instruction Multiple Thread (SIMT) Execution: • Groups of 32 threads are formed into warps o always executing the same instruction o sharing instruction fetch/dispatch o some threads become inactive when the code path diverges o hardware automatically handles divergence • Warps are the primitive unit of scheduling o pick 1 of 24 warps for each instruction slot o all warps from all active blocks are time-sliced
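Divergence only costs time when threads of the same warp disagree on a branch. The sketch below contrasts two branch shapes; the kernels are hypothetical and intentionally compute different results, so the point of comparison is the branch condition. In `divergent`, even and odd lanes of every warp take different paths, and the warp runs both paths with lanes masked off. In `warpAligned`, the condition is uniform within each 32-thread warp (`warpSize` is the built-in warp width), so no warp executes both paths.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Divergent: the condition differs between neighbouring lanes of one warp.
__global__ void divergent(int* out) {
    int t = threadIdx.x;
    if (t % 2 == 0) out[t] = t * 2;
    else            out[t] = t * 3;
}

// Warp-aligned: every lane of a warp evaluates the condition the same way.
__global__ void warpAligned(int* out) {
    int t = threadIdx.x;
    if ((t / warpSize) % 2 == 0) out[t] = t * 2;
    else                         out[t] = t * 3;
}

std::vector<int> runBranchDemo(bool aligned, int threads) {
    int* d;
    cudaMalloc(&d, threads * sizeof(int));
    if (aligned) warpAligned<<<1, threads>>>(d);
    else         divergent<<<1, threads>>>(d);
    std::vector<int> h(threads);
    cudaMemcpy(h.data(), d, threads * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);
    return h;
}
```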


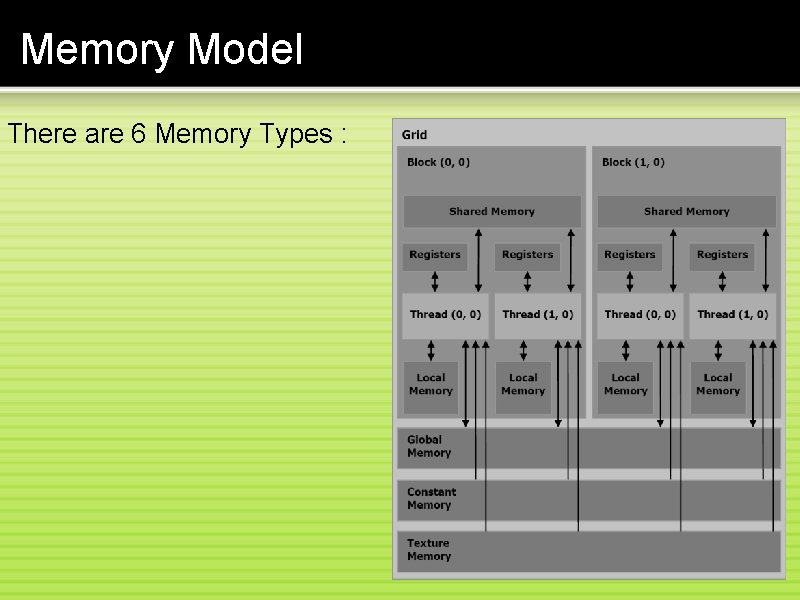

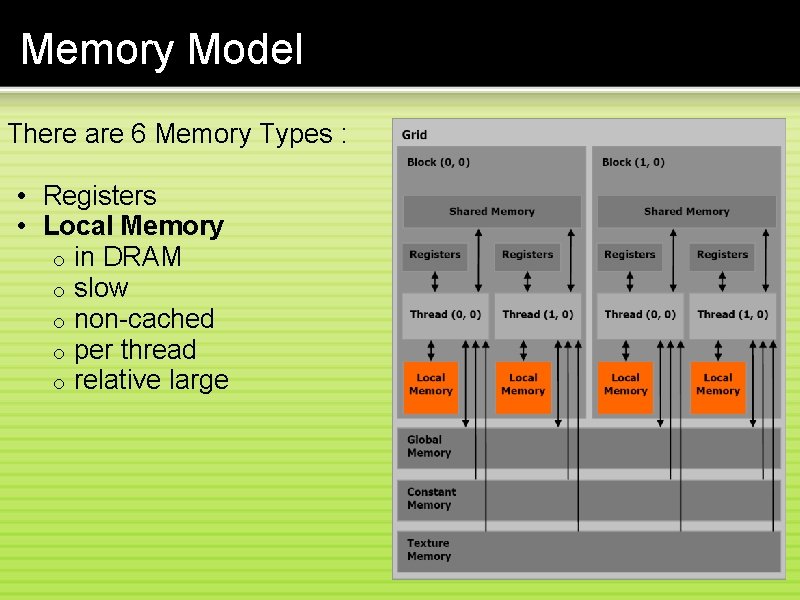

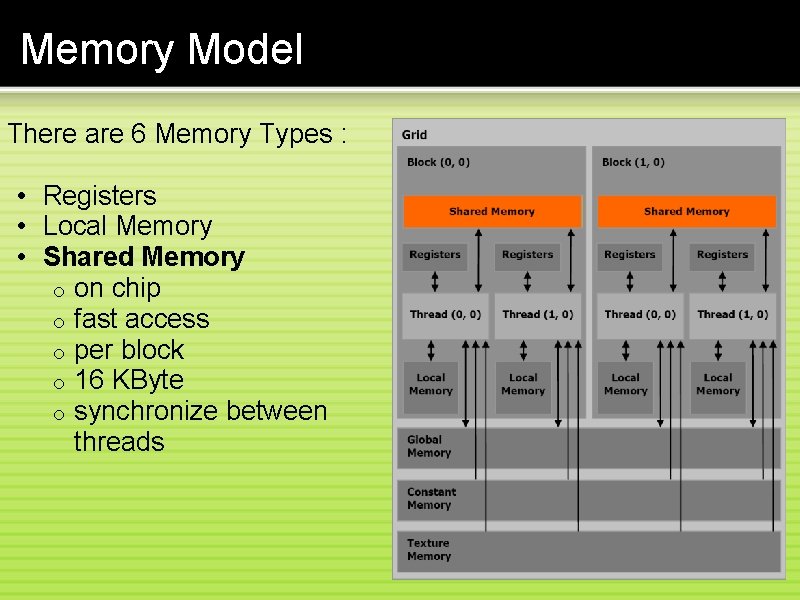

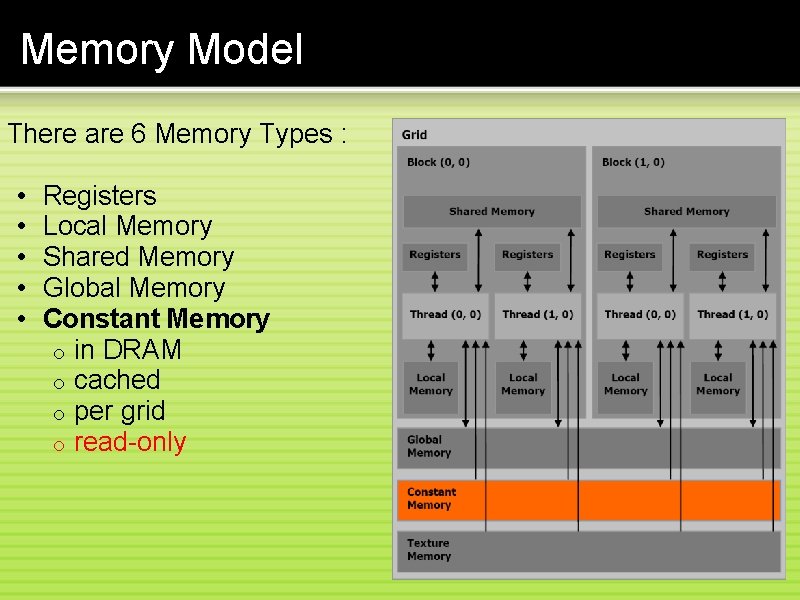

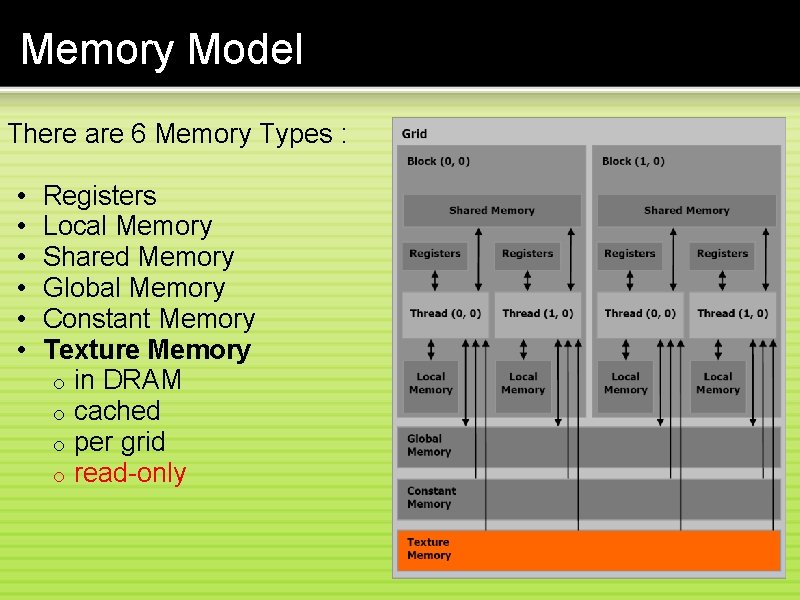

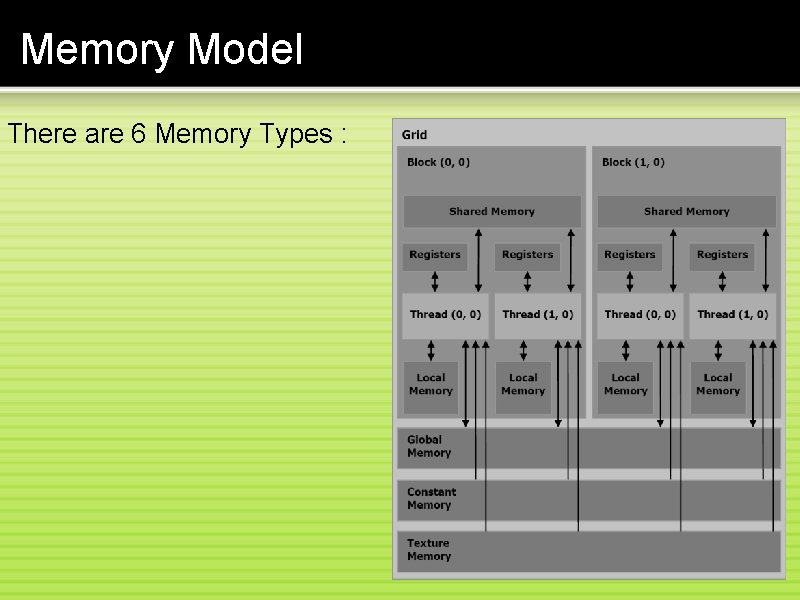

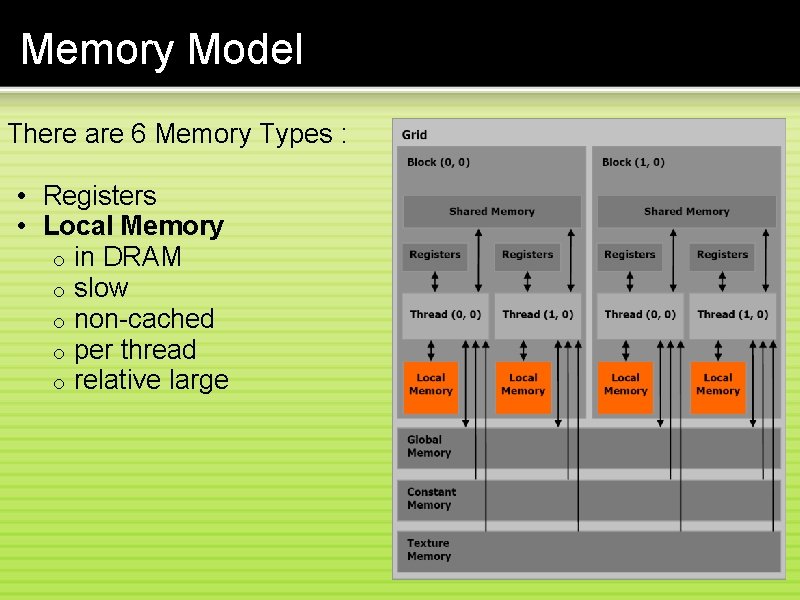

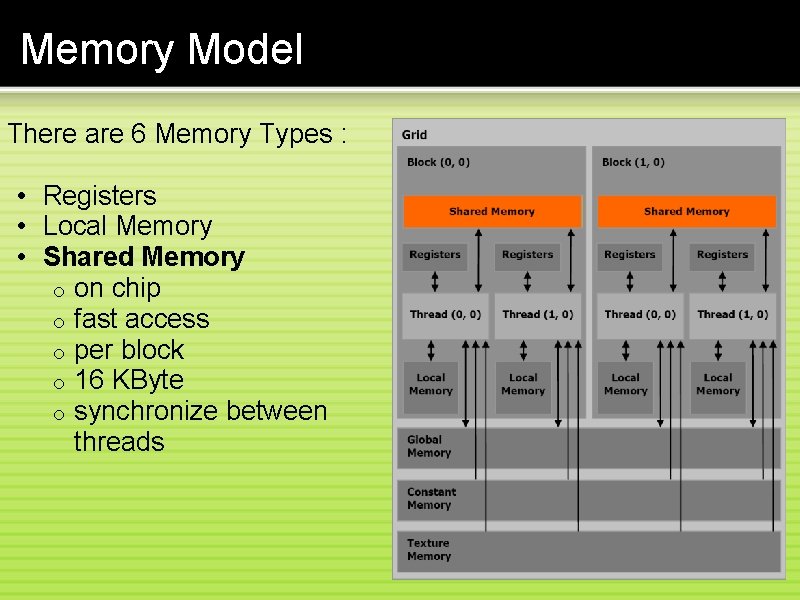

Memory Model There are 6 Memory Types :

Memory Model There are 6 Memory Types : • Registers o on chip o fast access o per thread o limited amount o 32 bit

Memory Model There are 6 Memory Types : • Registers • Local Memory o in DRAM o slow o non-cached o per thread o relative large

Memory Model There are 6 Memory Types : • Registers • Local Memory • Shared Memory o on chip o fast access o per block o 16 KByte o synchronize between threads

Memory Model There are 6 Memory Types : • Registers • Local Memory • Shared Memory • Global Memory o in DRAM o slow o non-cached o per grid o communicate between grids

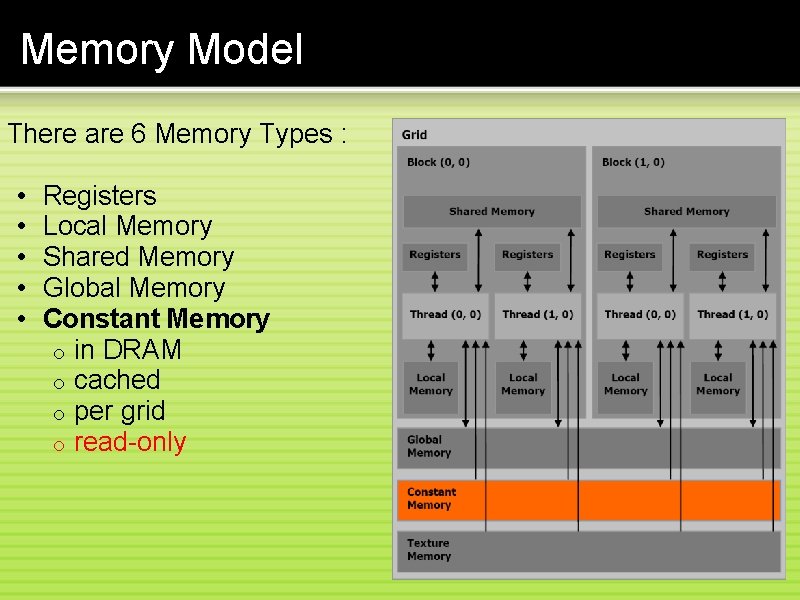

Memory Model There are 6 Memory Types : • Registers • Local Memory • Shared Memory • Global Memory • Constant Memory o in DRAM o cached o per grid o read-only

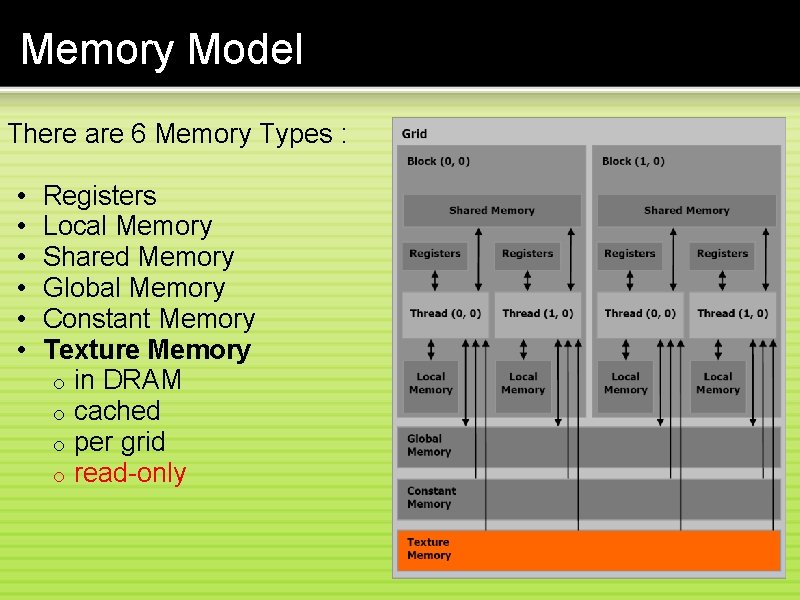

Memory Model There are 6 Memory Types : • Registers • Local Memory • Shared Memory • Global Memory • Constant Memory • Texture Memory o in DRAM o cached o per grid o read-only

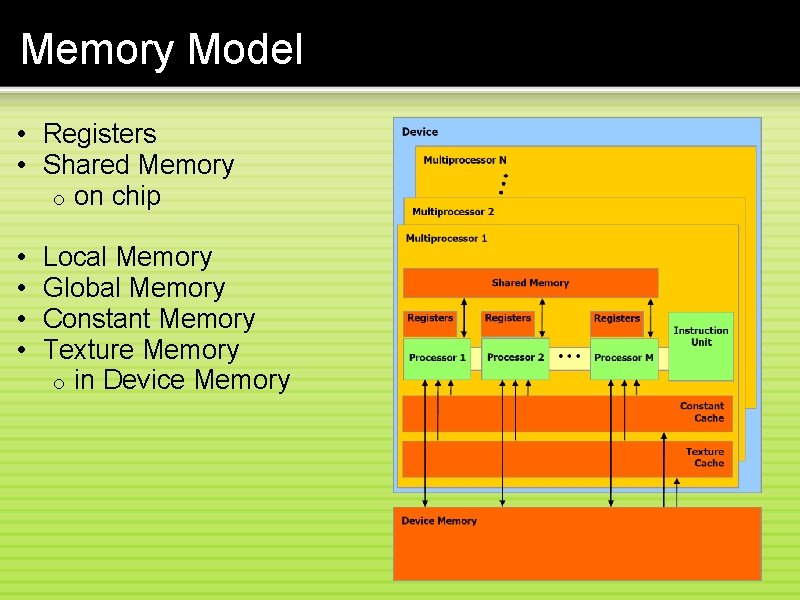

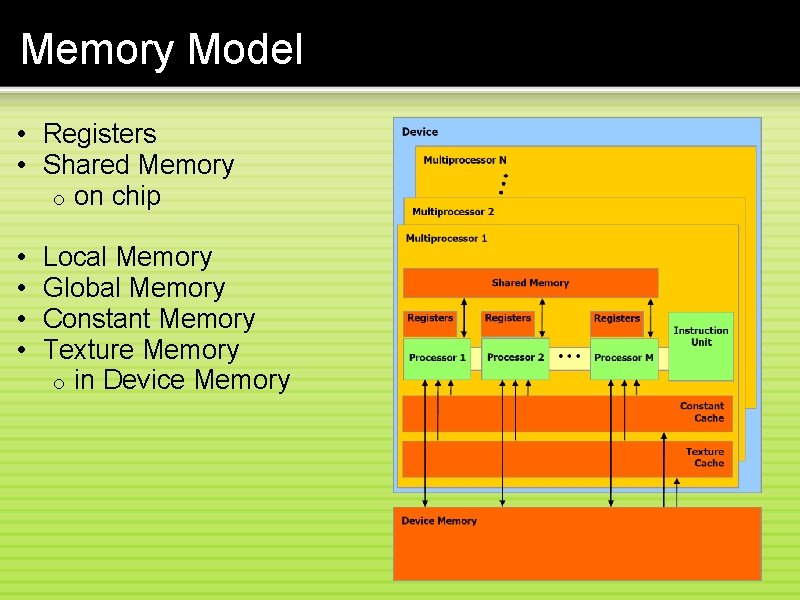
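Several of these memory spaces can appear in a single small kernel. In the sketch below, each block multiplies its 256 inputs by a scale factor held in constant memory and then sums them in shared memory. The input and output live in global memory, and loop variables such as `t` and `stride` normally live in registers. Local memory is not declared explicitly; the compiler uses it for register spills and some per-thread arrays. Texture memory is left out. The block size of 256 and all names are assumptions of this example.

```cuda
#include <cuda_runtime.h>
#include <vector>

__constant__ float scale;                    // constant memory: cached, read-only in kernels

// Requires blockDim.x == 256 (a power of two) to match the shared array.
__global__ void scaledBlockSum(const float* in, float* out) {  // in/out: global memory
    __shared__ float partial[256];           // shared memory: on chip, one copy per block
    int t = threadIdx.x;                     // per-thread value, normally held in a register
    partial[t] = in[blockIdx.x * blockDim.x + t] * scale;
    __syncthreads();                         // all writes to partial[] are now visible
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (t < stride) partial[t] += partial[t + stride];
        __syncthreads();
    }
    if (t == 0) out[blockIdx.x] = partial[0];  // one result per block
}

std::vector<float> scaledBlockSums(const std::vector<float>& in, float s) {
    const int threads = 256;
    int blocks = static_cast<int>(in.size()) / threads;  // assumes size is a multiple of 256
    float *dIn, *dOut;
    cudaMalloc(&dIn, in.size() * sizeof(float));
    cudaMalloc(&dOut, blocks * sizeof(float));
    cudaMemcpy(dIn, in.data(), in.size() * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpyToSymbol(scale, &s, sizeof(float));        // host writes constant memory
    scaledBlockSum<<<blocks, threads>>>(dIn, dOut);
    std::vector<float> out(blocks);
    cudaMemcpy(out.data(), dOut, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dOut);
    return out;
}
```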

Memory Model • Registers, Shared Memory o on chip • Local, Global, Constant, Texture Memory o in device memory

Memory Model • Global Memory • Constant Memory • Texture Memory o managed by host code o persistent across kernels
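"Managed by host code, persistent across kernels" can be illustrated with two launches that share state without any host round trip in between. In this sketch, the host allocates a global buffer and sets a constant once. The first kernel fills the buffer, and the second reads what the first left behind. The names `addend`, `fillIndices`, `squareInPlace`, and `fillThenSquare` are hypothetical.

```cuda
#include <cuda_runtime.h>
#include <vector>

__constant__ int addend;                     // set once by the host, read by every later launch

__global__ void fillIndices(int* buf, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) buf[i] = i + addend;
}

__global__ void squareInPlace(int* buf, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) buf[i] = buf[i] * buf[i];     // reads what fillIndices left in global memory
}

std::vector<int> fillThenSquare(int n, int add) {
    int* d;
    cudaMalloc(&d, n * sizeof(int));                // host code allocates global memory
    cudaMemcpyToSymbol(addend, &add, sizeof(int));  // host code initializes constant memory
    int threads = 128;
    int blocks = (n + threads - 1) / threads;
    fillIndices<<<blocks, threads>>>(d, n);
    squareInPlace<<<blocks, threads>>>(d, n);       // same stream: starts after fillIndices ends
    std::vector<int> h(n);
    cudaMemcpy(h.data(), d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);
    return h;
}
```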


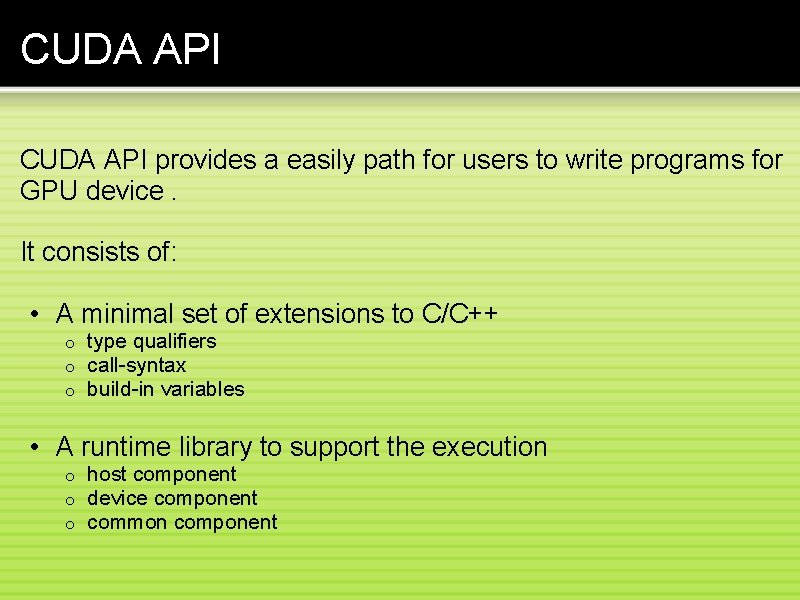

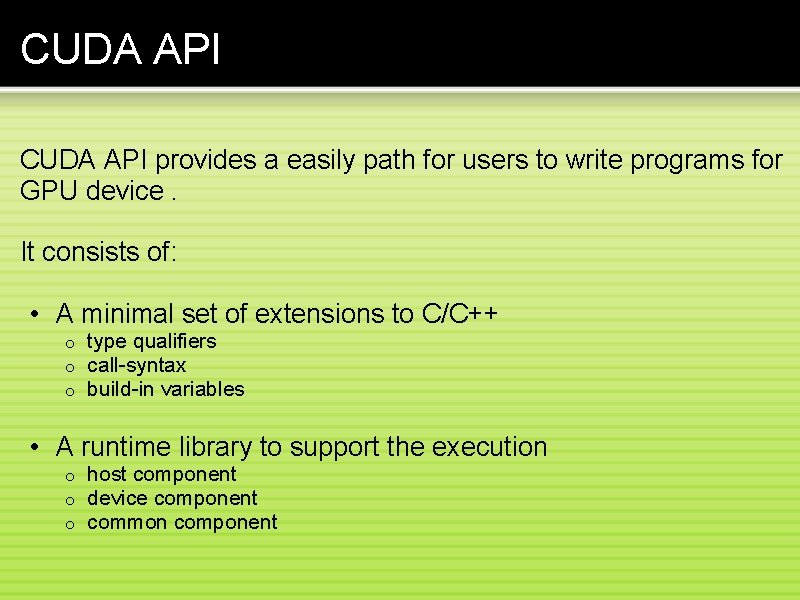

CUDA API provides an easy path for users to write programs for the GPU device. It consists of: • A minimal set of extensions to C/C++ o type qualifiers o call syntax o built-in variables • A runtime library to support the execution o host component o device component o common component

CUDA API CUDA C/C++ Extensions: • New function type qualifiers o `__host__ void HostFunc(...); //executable on host` o `__global__ void KernelFunc(...); //callable from host` o `__device__ void DeviceFunc(...); //callable from device only` o Restrictions for device code (`__global__` / `__device__`) § no recursive calls § no static variables § no function pointers § `__global__` functions are invoked asynchronously § `__global__` functions must have a void return type

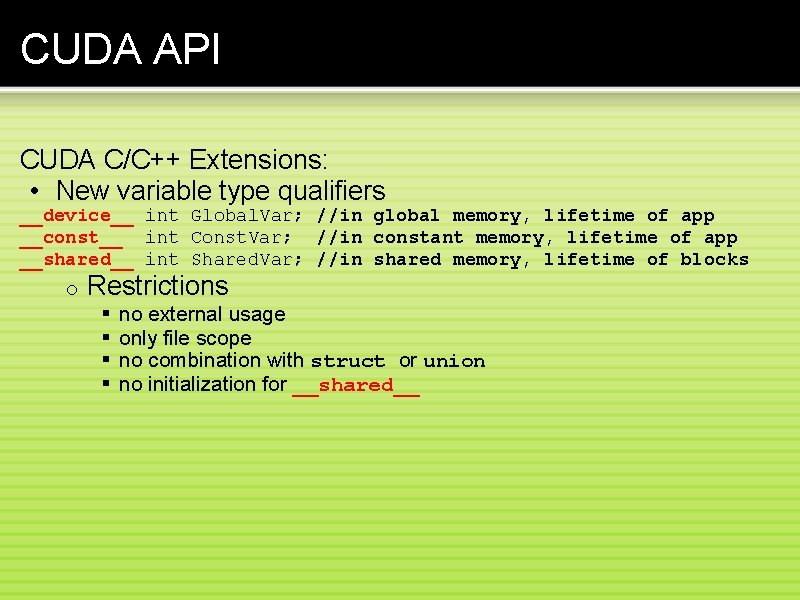

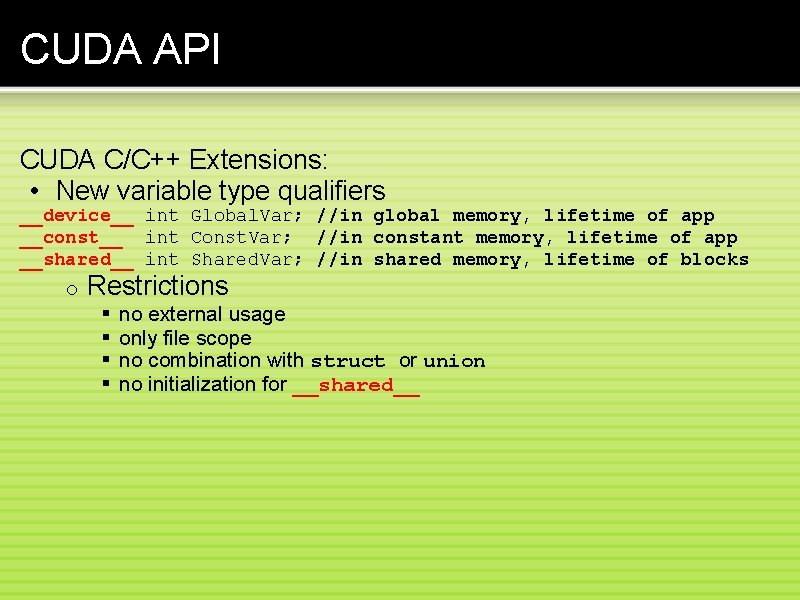
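The three function qualifiers can be combined in one small program. In the sketch below, a `__host__ __device__` helper is compiled for both sides, a `__device__` function is callable only from device code, and the `__global__` kernel is launched from the host and returns `void`. The pixel-brightening task and all names are illustrative assumptions.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Compiled for both host and device, so either side may call it.
__host__ __device__ int clampToByte(int v) {
    return v < 0 ? 0 : (v > 255 ? 255 : v);
}

// Device-only helper: callable from kernels, not from host code.
__device__ int brighten(int v) {
    return clampToByte(v + 50);
}

// Kernel: launched from the host, runs on the device, returns void.
__global__ void brightenAll(int* px, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) px[i] = brighten(px[i]);
}

std::vector<int> brightenOnGpu(const std::vector<int>& px) {
    int n = static_cast<int>(px.size());
    int* d;
    cudaMalloc(&d, n * sizeof(int));
    cudaMemcpy(d, px.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    brightenAll<<<(n + 127) / 128, 128>>>(d, n);   // asynchronous launch
    std::vector<int> out(n);
    cudaMemcpy(out.data(), d, n * sizeof(int), cudaMemcpyDeviceToHost);  // waits for the kernel
    cudaFree(d);
    return out;
}
```

Because the launch is asynchronous, the host only sees the results after the blocking `cudaMemcpy` back.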

CUDA API CUDA C/C++ Extensions: • New variable type qualifiers o `__device__ int GlobalVar; //in global memory, lifetime of the application` o `__constant__ int ConstVar; //in constant memory, lifetime of the application` o `__shared__ int SharedVar; //in shared memory, lifetime of the block` o Restrictions § no `extern` declarations (except dynamically sized `__shared__` arrays) § `__device__` and `__constant__` variables only at file scope § not allowed on struct or union members § no initialization for `__shared__`

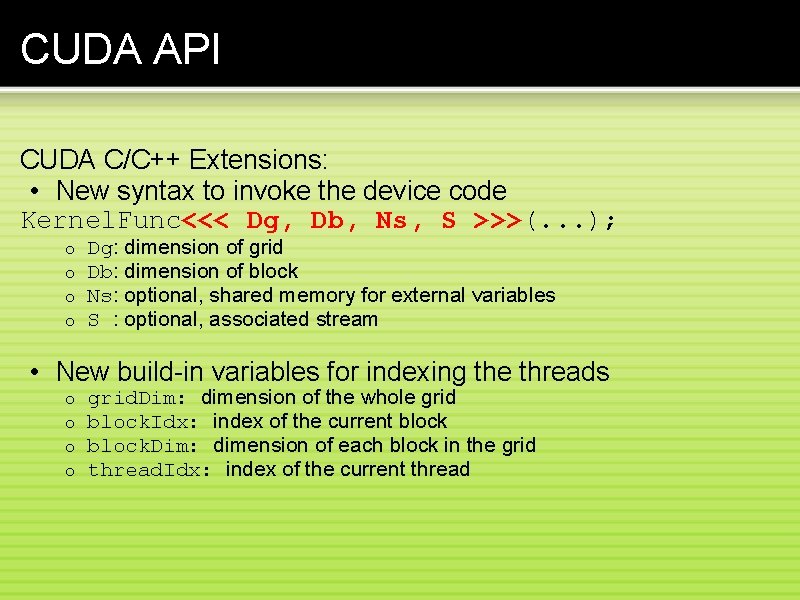

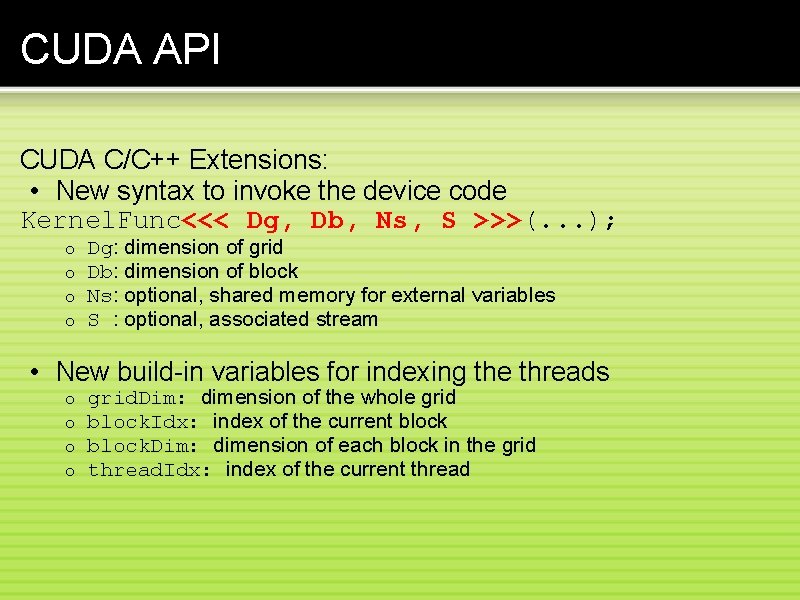
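All three variable qualifiers can appear together in one kernel. In the sketch below, a hypothetical kernel counts elements above a threshold. The threshold sits in `__constant__` memory, each block accumulates into a `__shared__` counter (assigned at run time, since `__shared__` variables cannot be initialized), and the per-block counts are added into a `__device__` variable that the host reads back. Shared-memory atomics require compute capability 1.2 or later; the names are illustrative.

```cuda
#include <cuda_runtime.h>
#include <vector>

__device__ int hitCount;      // global memory, lifetime of the application
__constant__ int threshold;   // constant memory, read-only inside kernels

__global__ void countAbove(const int* data, int n) {
    __shared__ int blockHits;                   // shared memory, lifetime of the block
    if (threadIdx.x == 0) blockHits = 0;        // no initializer allowed, so assign instead
    __syncthreads();
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n && data[i] > threshold) atomicAdd(&blockHits, 1);
    __syncthreads();                            // every thread of the block has counted
    if (threadIdx.x == 0) atomicAdd(&hitCount, blockHits);
}

int countAboveOnGpu(const std::vector<int>& data, int thr) {
    int n = static_cast<int>(data.size());
    int* d;
    cudaMalloc(&d, n * sizeof(int));
    cudaMemcpy(d, data.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    int zero = 0;
    cudaMemcpyToSymbol(hitCount, &zero, sizeof(int));   // reset the __device__ counter
    cudaMemcpyToSymbol(threshold, &thr, sizeof(int));
    int threads = 128;
    countAbove<<<(n + threads - 1) / threads, threads>>>(d, n);
    int result = 0;
    cudaMemcpyFromSymbol(&result, hitCount, sizeof(int));  // blocks until the kernel is done
    cudaFree(d);
    return result;
}
```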

CUDA API CUDA C/C++ Extensions: • New syntax to invoke the device code: `KernelFunc<<< Dg, Db, Ns, S >>>(...);` o Dg: dimensions of the grid o Db: dimensions of each block o Ns: optional, number of bytes of shared memory dynamically allocated per block for `extern __shared__` arrays o S: optional, associated stream • New built-in variables for indexing the threads o `gridDim`: dimensions of the whole grid o `blockIdx`: index of the current block o `blockDim`: dimensions of each block in the grid o `threadIdx`: index of the current thread

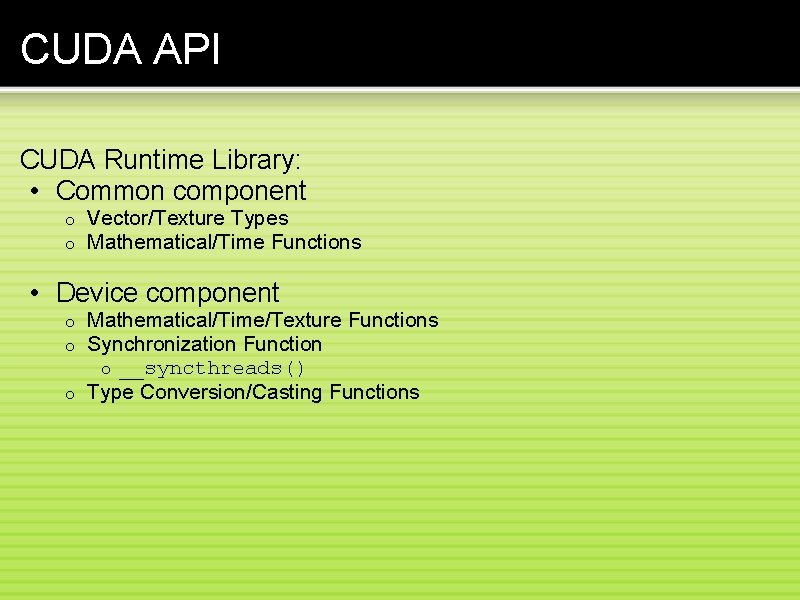

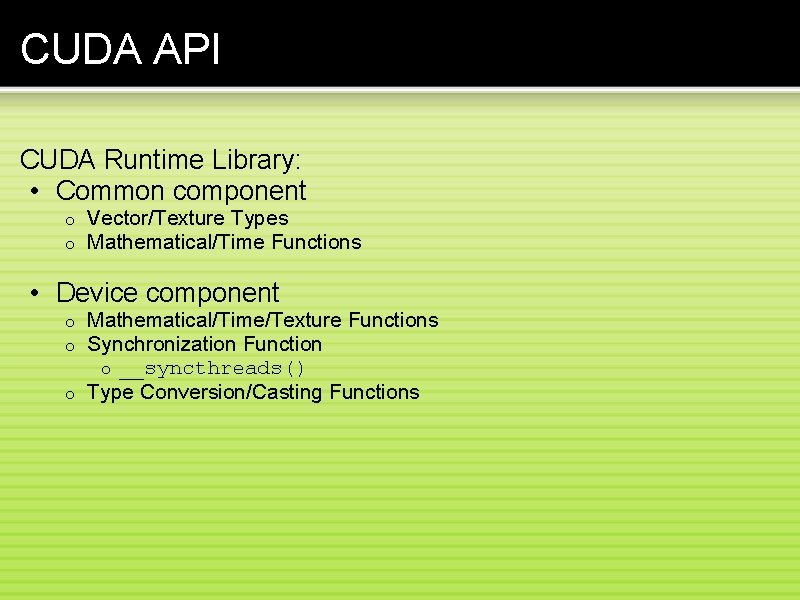
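The sketch below uses all four launch parameters. `Dg` and `Db` are `dim3` values, `Ns` sizes an unsized `extern __shared__` array, and `S` is a stream created with `cudaStreamCreate`. The copies are issued on the same stream, so the copy, the kernel, and the copy back run in order. The kernel reverses each block-sized segment of its input; the task and the names are illustrative assumptions, and `n` is assumed to be a multiple of `segment`.

```cuda
#include <cuda_runtime.h>
#include <vector>

// Unsized extern array: its size in bytes is the Ns launch argument.
extern __shared__ float tile[];

__global__ void reverseSegments(const float* in, float* out) {
    int t = threadIdx.x;
    int base = blockIdx.x * blockDim.x;
    tile[t] = in[base + t];
    __syncthreads();                              // whole segment is now in shared memory
    out[base + t] = tile[blockDim.x - 1 - t];
}

std::vector<float> reverseSegmentsOnGpu(const std::vector<float>& in, int segment) {
    int n = static_cast<int>(in.size());          // assumed to be a multiple of segment
    size_t bytes = n * sizeof(float);
    float *dIn, *dOut;
    cudaMalloc(&dIn, bytes);
    cudaMalloc(&dOut, bytes);
    std::vector<float> out(n);

    cudaStream_t S;
    cudaStreamCreate(&S);
    dim3 Dg(n / segment);                         // grid: one block per segment
    dim3 Db(segment);                             // block: one thread per element
    size_t Ns = segment * sizeof(float);          // dynamic shared memory per block

    cudaMemcpyAsync(dIn, in.data(), bytes, cudaMemcpyHostToDevice, S);
    reverseSegments<<<Dg, Db, Ns, S>>>(dIn, dOut);
    cudaMemcpyAsync(out.data(), dOut, bytes, cudaMemcpyDeviceToHost, S);
    cudaStreamSynchronize(S);                     // wait for all three operations on S

    cudaStreamDestroy(S);
    cudaFree(dIn);
    cudaFree(dOut);
    return out;
}
```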

CUDA API CUDA Runtime Library: • Common component o Vector/Texture types o Mathematical/Time functions • Device component o Mathematical/Time/Texture functions o Synchronization function: `__syncthreads()` o Type conversion/casting functions
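The device component's type conversion and casting functions differ from a plain C cast. `__float2int_rn` converts with round-to-nearest (ties to even). A C cast truncates toward zero. `__float_as_int` reinterprets the 32 bits without converting the value. The sketch below compares the three; the kernel, the `Converted` struct, and the wrapper are illustrative names.

```cuda
#include <cuda_runtime.h>
#include <vector>

__global__ void convertAll(const float* in, int* rounded, int* truncated, int* bits, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        rounded[i]   = __float2int_rn(in[i]);  // conversion: round to nearest, ties to even
        truncated[i] = (int)in[i];             // plain C cast: round toward zero
        bits[i]      = __float_as_int(in[i]);  // casting: same 32 bits viewed as an int
    }
}

struct Converted { std::vector<int> rounded, truncated, bits; };

Converted convertOnGpu(const std::vector<float>& in) {
    int n = static_cast<int>(in.size());
    float* dIn;
    int *dR, *dT, *dB;
    cudaMalloc(&dIn, n * sizeof(float));
    cudaMalloc(&dR, n * sizeof(int));
    cudaMalloc(&dT, n * sizeof(int));
    cudaMalloc(&dB, n * sizeof(int));
    cudaMemcpy(dIn, in.data(), n * sizeof(float), cudaMemcpyHostToDevice);
    convertAll<<<(n + 127) / 128, 128>>>(dIn, dR, dT, dB, n);

    Converted c{std::vector<int>(n), std::vector<int>(n), std::vector<int>(n)};
    cudaMemcpy(c.rounded.data(), dR, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaMemcpy(c.truncated.data(), dT, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaMemcpy(c.bits.data(), dB, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dR);
    cudaFree(dT);
    cudaFree(dB);
    return c;
}
```

For inputs 2.5, -1.5, and 3.7, rounding gives 2, -2, and 4, while truncation gives 2, -1, and 3. The bit pattern of 1.0f is 0x3f800000.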

CUDA API CUDA Runtime Library: • Host component o Structure § Driver API § Runtime API o Functions § Device, context, memory, module, texture management § Execution control § Interoperability with OpenGL and Direct3D

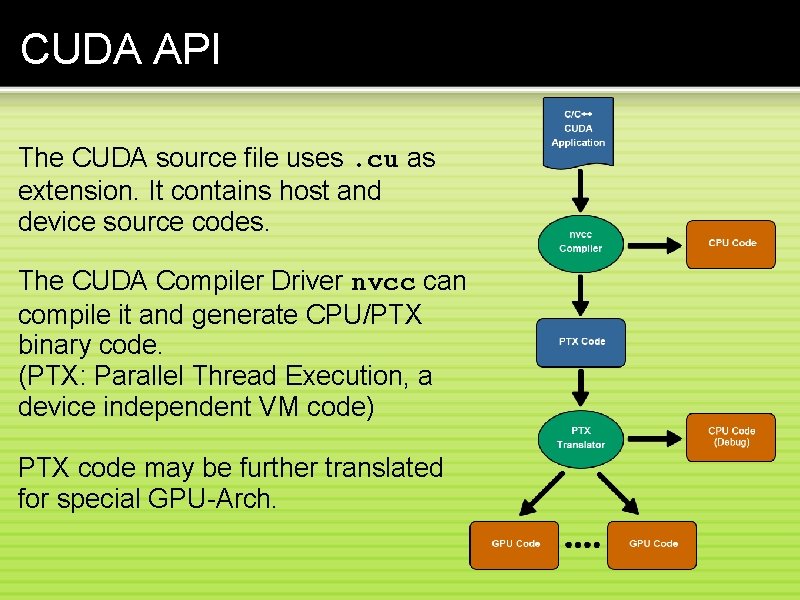

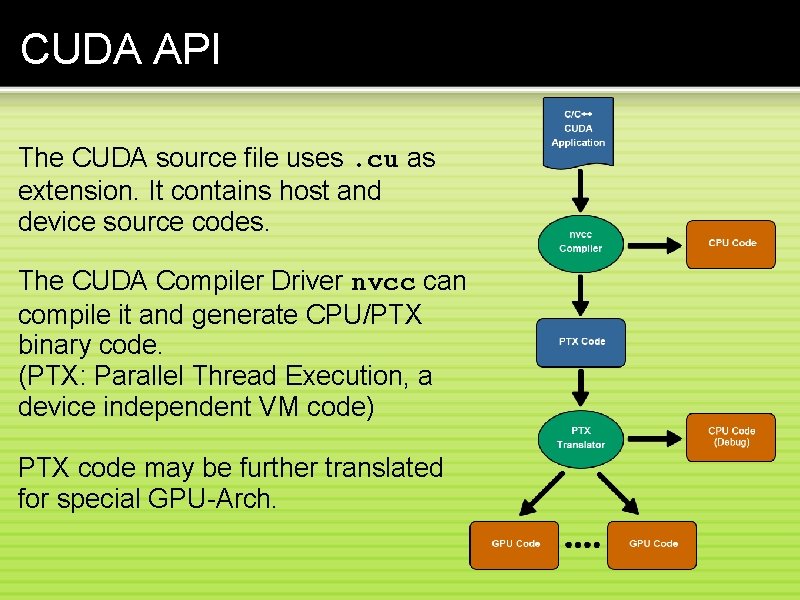
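Host-side device management and execution control through the runtime API might look like the following sketch. It enumerates devices with `cudaGetDeviceCount` and `cudaGetDeviceProperties`. Kernel launches return no status, so launch errors are read back with `cudaGetLastError` and execution errors with `cudaDeviceSynchronize`. The function names are illustrative, and the oversized-block test relies on current GPUs allowing at most 1024 threads per block.

```cuda
#include <cuda_runtime.h>
#include <cstdio>

// Returns the number of CUDA devices and prints a one-line summary of each.
int listDevices() {
    int count = 0;
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        std::printf("cudaGetDeviceCount failed: %s\n", cudaGetErrorString(err));
        return 0;
    }
    for (int dev = 0; dev < count; ++dev) {
        cudaDeviceProp prop;
        cudaGetDeviceProperties(&prop, dev);
        std::printf("device %d: %s, compute %d.%d, %d SMs, %zu B shared/block, warp %d\n",
                    dev, prop.name, prop.major, prop.minor, prop.multiProcessorCount,
                    prop.sharedMemPerBlock, prop.warpSize);
    }
    return count;
}

__global__ void noop() {}

// Launch errors (e.g. a bad configuration) appear via cudaGetLastError();
// errors during execution appear via cudaDeviceSynchronize().
bool launchIsOk(int threadsPerBlock) {
    noop<<<1, threadsPerBlock>>>();
    if (cudaGetLastError() != cudaSuccess) return false;
    return cudaDeviceSynchronize() == cudaSuccess;
}
```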

CUDA API A CUDA source file uses .cu as its extension and contains both host and device source code. The CUDA compiler driver nvcc compiles it and generates CPU binary code and PTX code (PTX: Parallel Thread Execution, a device-independent virtual machine code). PTX code may be further translated for a specific GPU architecture.
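A single .cu file holding both halves might look like the sketch below. The file name `saxpy.cu` is hypothetical. The build commands in the header comment use standard nvcc options (`-o`, `-ptx`, `-arch`), and `sm_70` is only an example target.

```cuda
// saxpy.cu (hypothetical file name): host and device code in one source file.
// Example nvcc invocations:
//   nvcc -o saxpy saxpy.cu              host executable with embedded device code
//   nvcc -ptx saxpy.cu                  writes saxpy.ptx, the device-independent PTX
//   nvcc -arch=sm_70 -o saxpy saxpy.cu  target a specific GPU architecture
#include <cuda_runtime.h>
#include <vector>

// Device code: nvcc lowers this to PTX, which is then translated for the target GPU.
__global__ void saxpy(int n, float a, const float* x, float* y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) y[i] = a * x[i] + y[i];
}

// Host code: nvcc hands this part to the host C++ compiler.
std::vector<float> saxpyOnGpu(float a, const std::vector<float>& x, std::vector<float> y) {
    int n = static_cast<int>(x.size());
    size_t bytes = n * sizeof(float);
    float *dx, *dy;
    cudaMalloc(&dx, bytes);
    cudaMalloc(&dy, bytes);
    cudaMemcpy(dx, x.data(), bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dy, y.data(), bytes, cudaMemcpyHostToDevice);
    saxpy<<<(n + 255) / 256, 256>>>(n, a, dx, dy);
    cudaMemcpy(y.data(), dy, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dx);
    cudaFree(dy);
    return y;
}
```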




Pro & Contra CUDA allows • massively parallel computing • at a relatively low price • a highly integrated solution • personal supercomputing • eco-friendly production • easy learning

Pro & Contra Problems... • slightly lower precision • limited support for IEEE-754 • no recursive function calls • hard to use for irregular join/fork logic • no concurrency between jobs


Trend • More cores on-chip • Better support for floating point • More flexible configuration & control/data flow • Lower price • Support for higher-level programming languages



Question?