CUDA Programming continued Revised ITCS 41455145 Nov 24

- Slides: 18

CUDA Programming continued Revised ITCS 4145/5145 Nov 24, 2010 © Barry Wilkinson

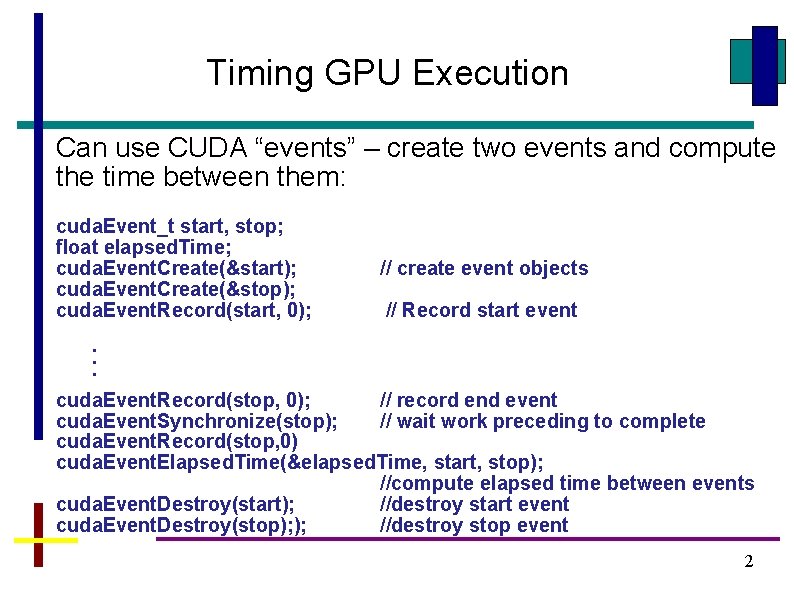

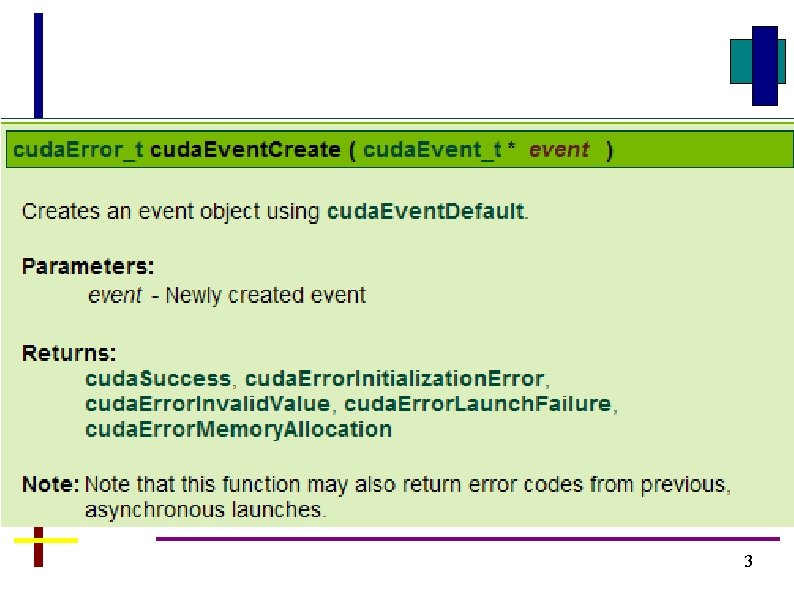

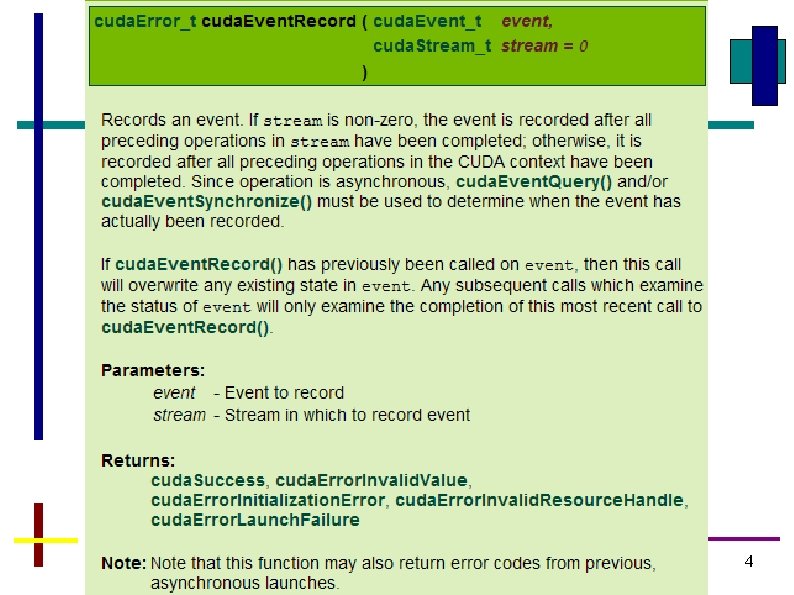

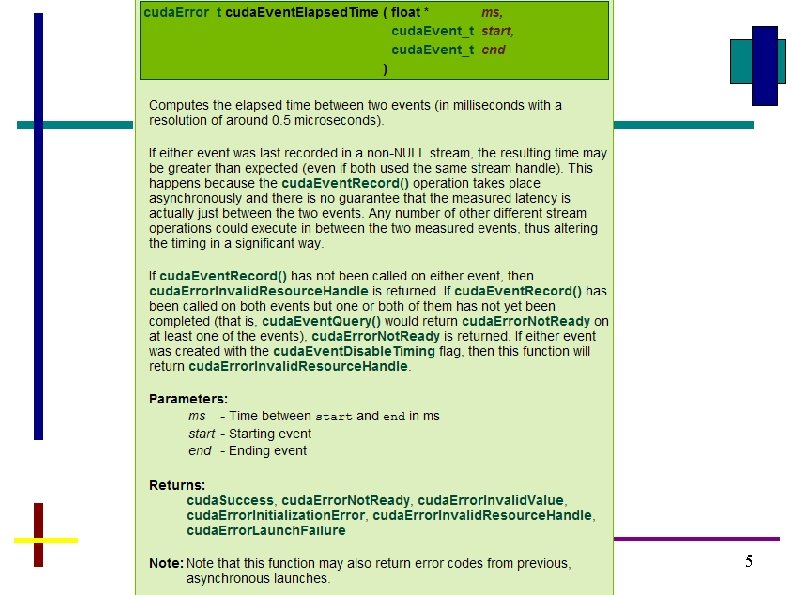

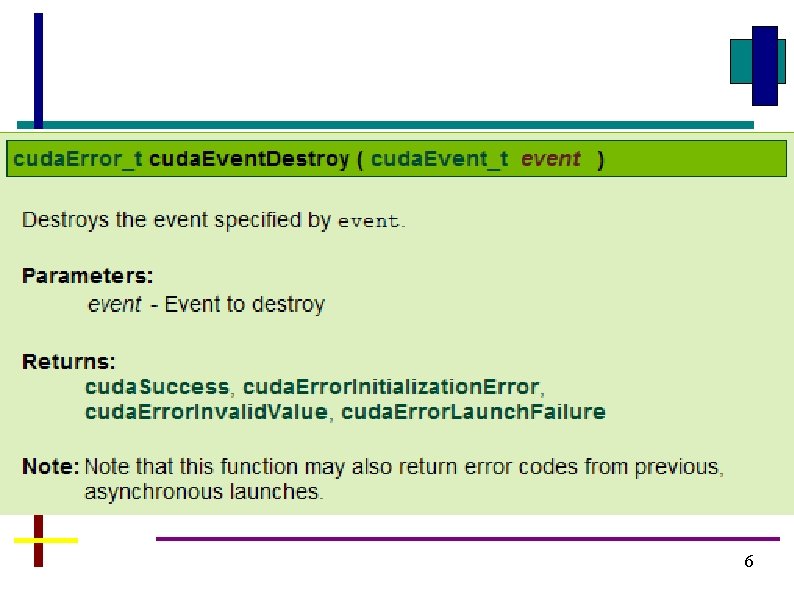

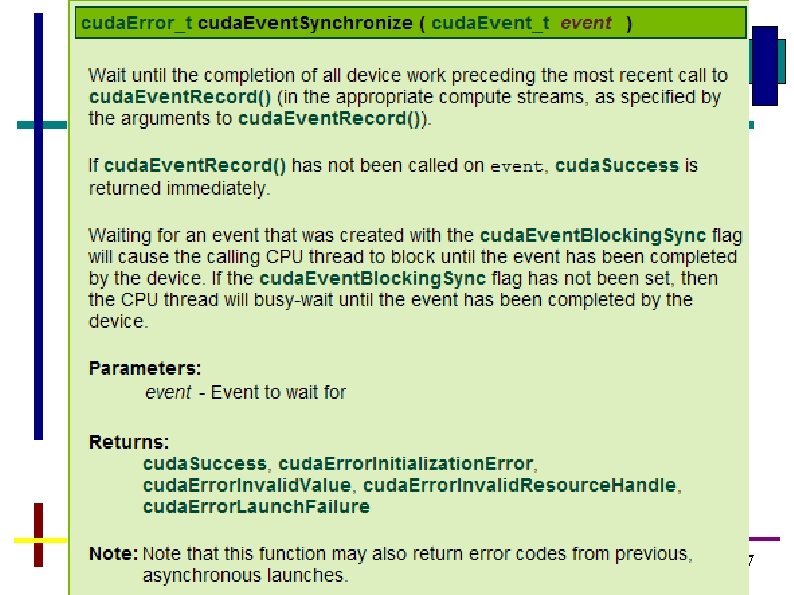

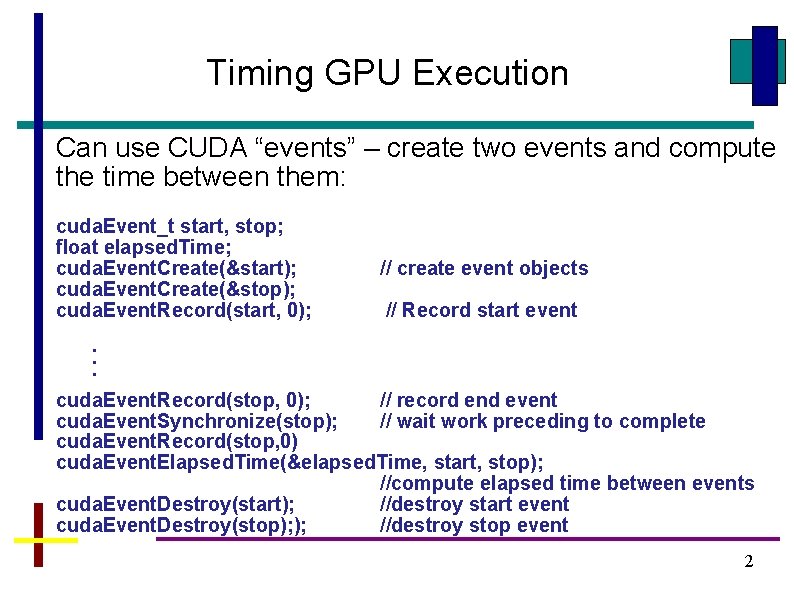

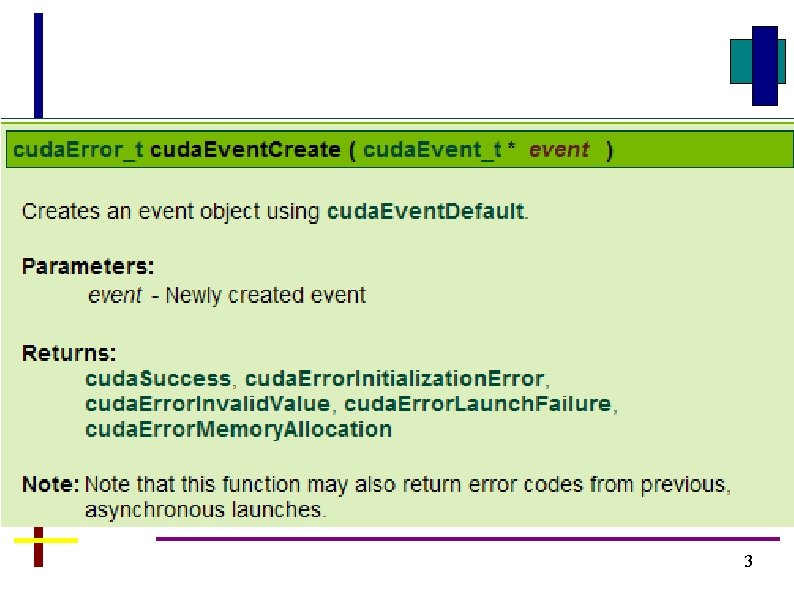

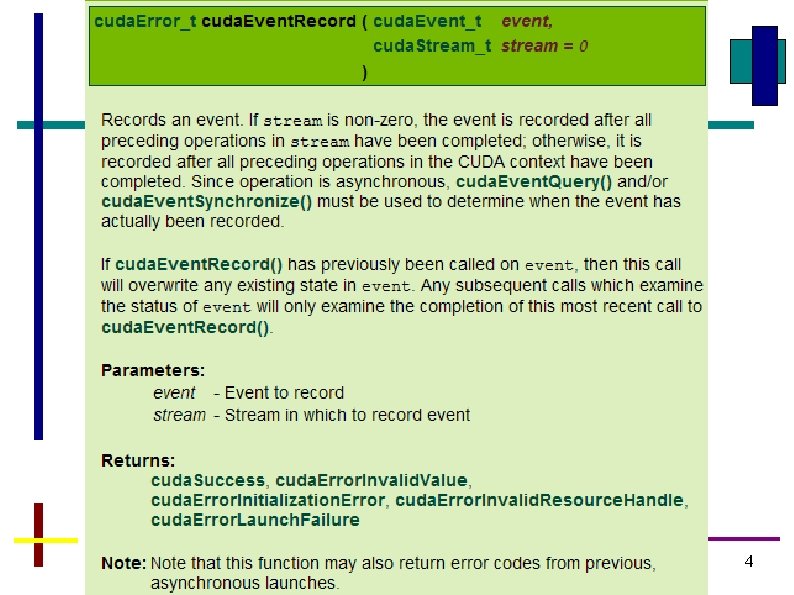

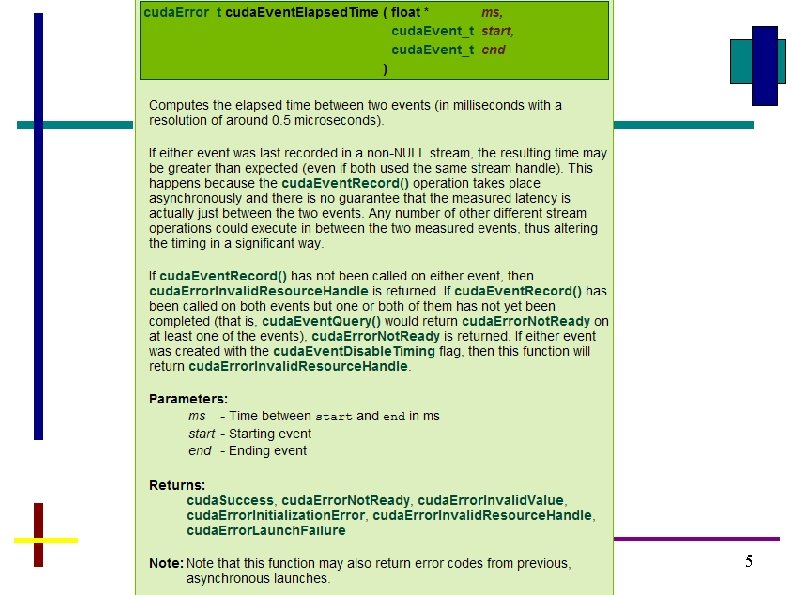

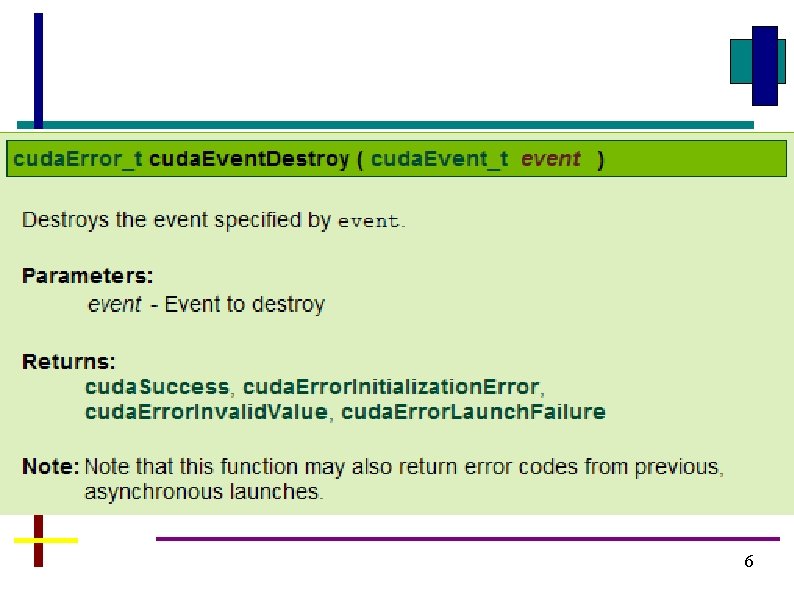

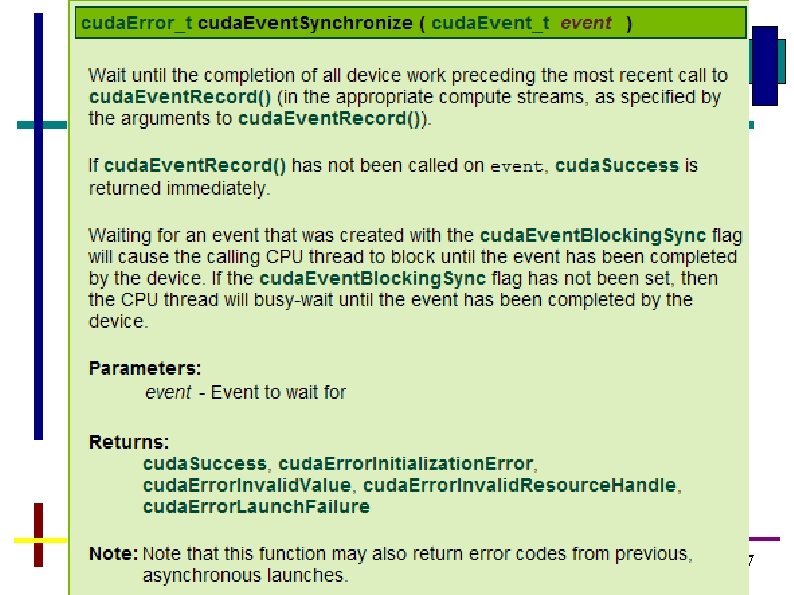

Timing GPU Execution Can use CUDA “events” – create two events and compute the time between them: cuda. Event_t start, stop; float elapsed. Time; cuda. Event. Create(&start); cuda. Event. Create(&stop); cuda. Event. Record(start, 0); // create event objects // Record start event . . . cuda. Event. Record(stop, 0); // record end event cuda. Event. Synchronize(stop); // wait work preceding to complete cuda. Event. Record(stop, 0) cuda. Event. Elapsed. Time(&elapsed. Time, start, stop); //compute elapsed time between events cuda. Event. Destroy(start); //destroy start event cuda. Event. Destroy(stop); ); //destroy stop event 2

3

4

5

6

7

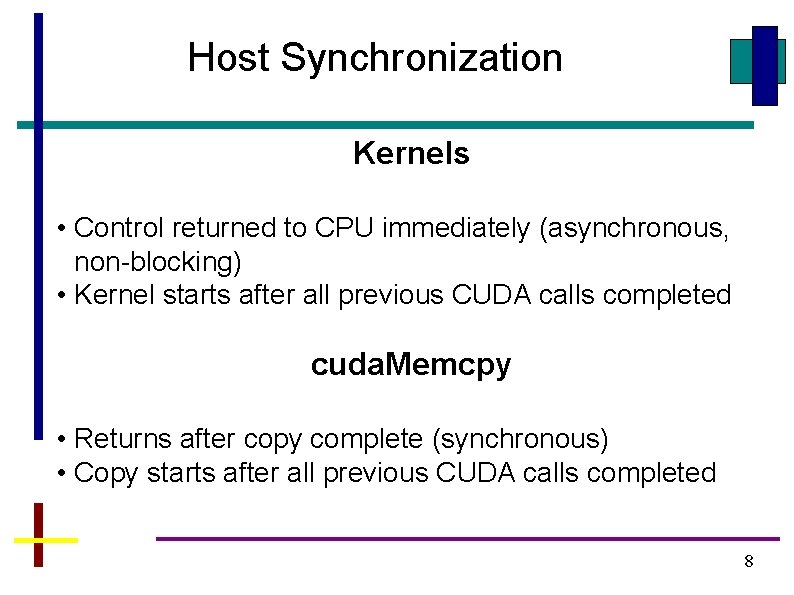

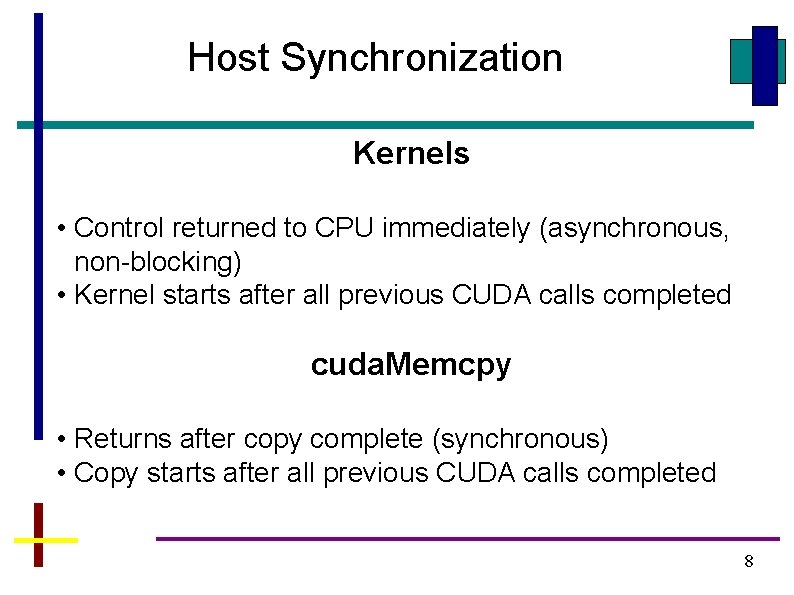

Host Synchronization Kernels • Control returned to CPU immediately (asynchronous, non-blocking) • Kernel starts after all previous CUDA calls completed cuda. Memcpy • Returns after copy complete (synchronous) • Copy starts after all previous CUDA calls completed 8

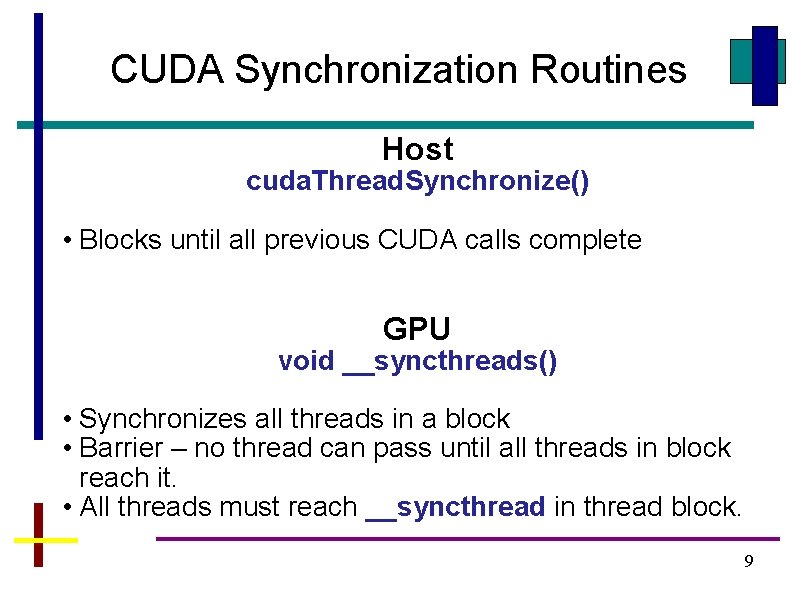

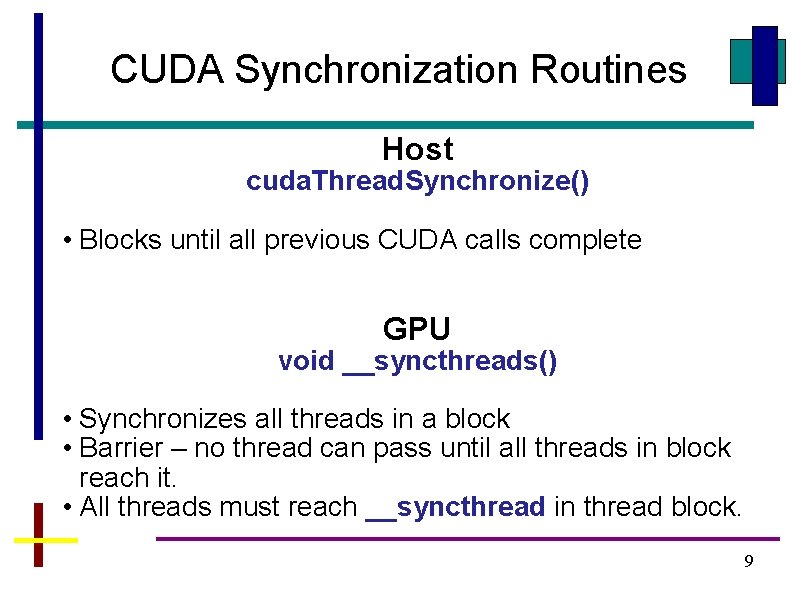

CUDA Synchronization Routines Host cuda. Thread. Synchronize() • Blocks until all previous CUDA calls complete GPU void __syncthreads() • Synchronizes all threads in a block • Barrier – no thread can pass until all threads in block reach it. • All threads must reach __syncthread in thread block. 9

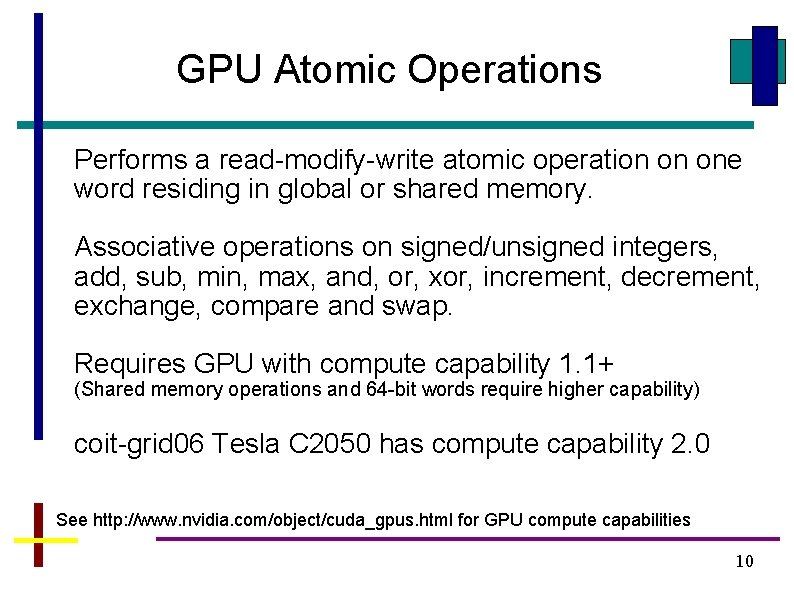

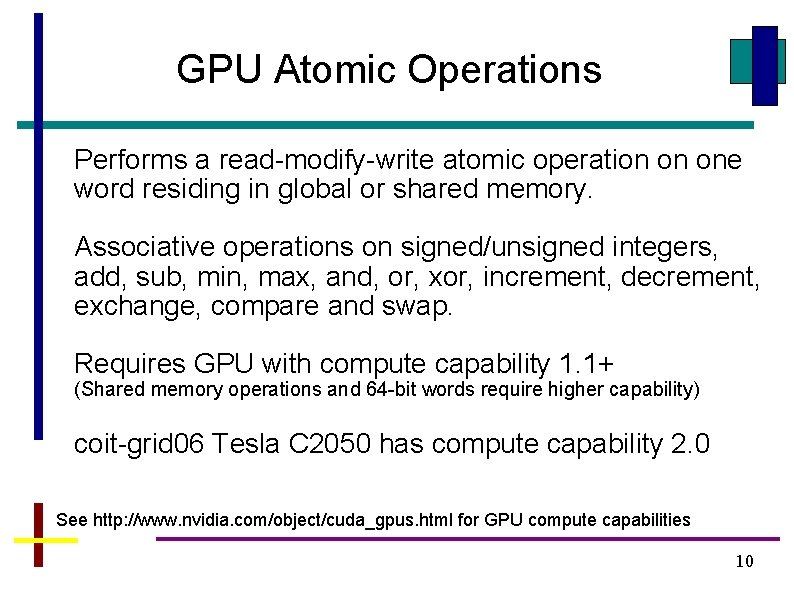

GPU Atomic Operations Performs a read-modify-write atomic operation on one word residing in global or shared memory. Associative operations on signed/unsigned integers, add, sub, min, max, and, or, xor, increment, decrement, exchange, compare and swap. Requires GPU with compute capability 1. 1+ (Shared memory operations and 64 -bit words require higher capability) coit-grid 06 Tesla C 2050 has compute capability 2. 0 See http: //www. nvidia. com/object/cuda_gpus. html for GPU compute capabilities 10

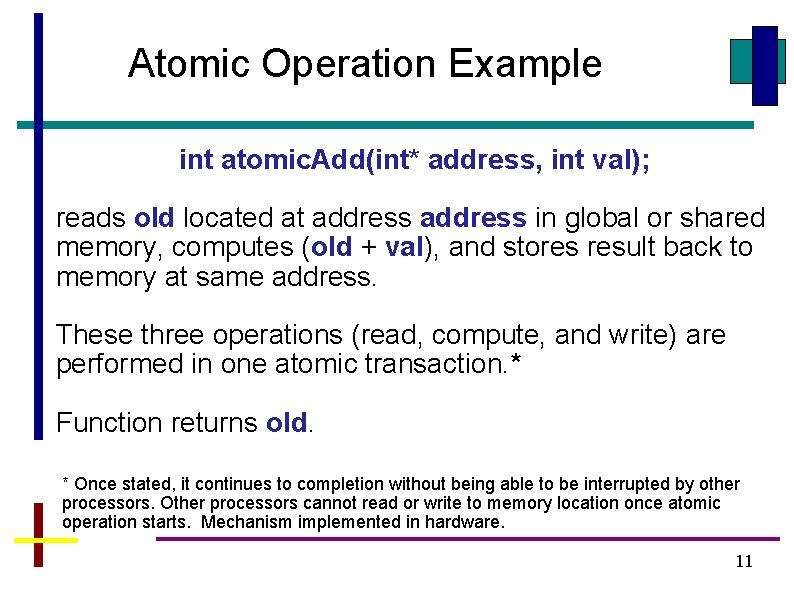

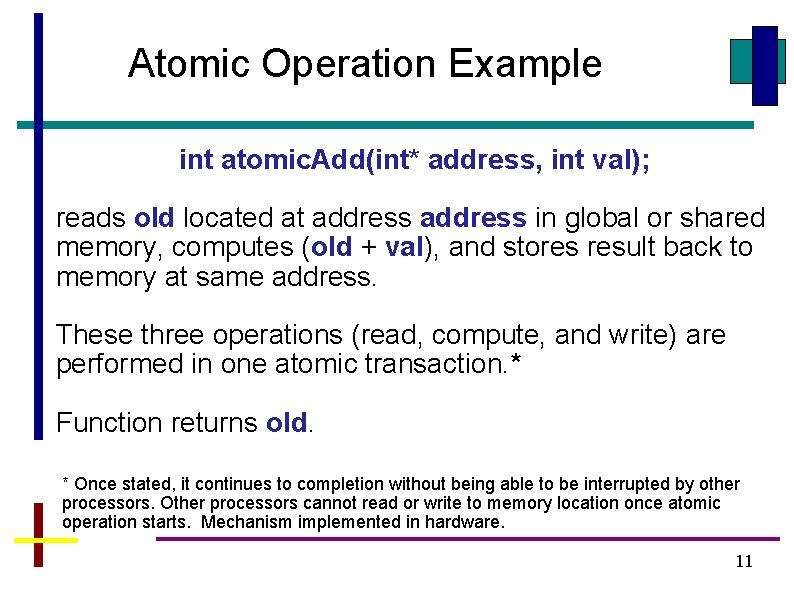

Atomic Operation Example int atomic. Add(int* address, int val); reads old located at address in global or shared memory, computes (old + val), and stores result back to memory at same address. These three operations (read, compute, and write) are performed in one atomic transaction. * Function returns old. * Once stated, it continues to completion without being able to be interrupted by other processors. Other processors cannot read or write to memory location once atomic operation starts. Mechanism implemented in hardware. 11

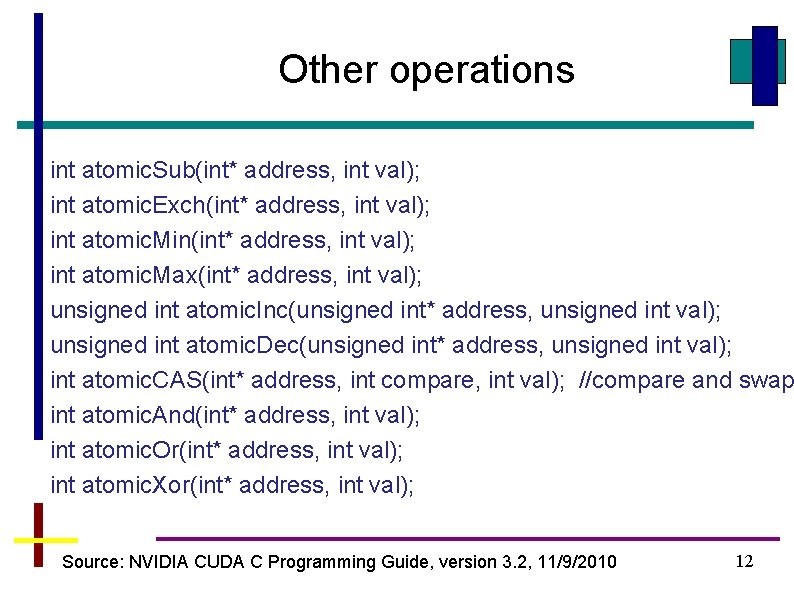

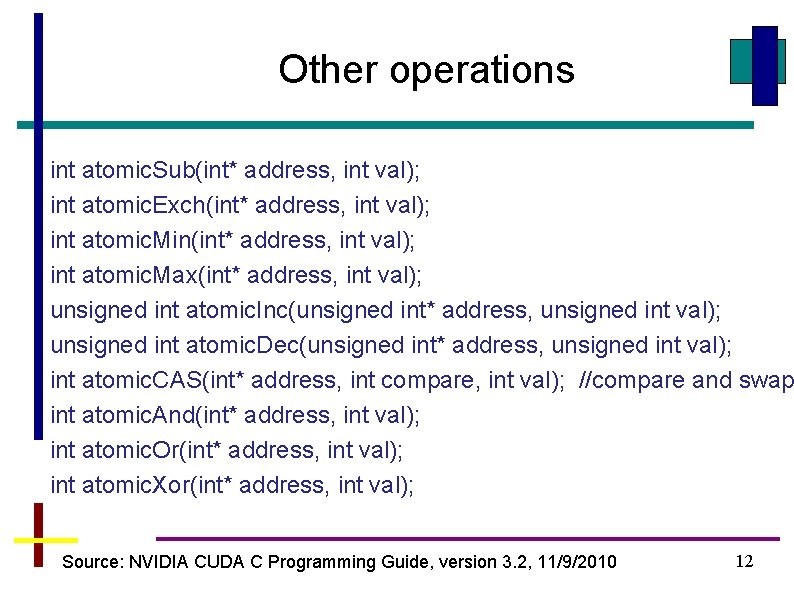

Other operations int atomic. Sub(int* address, int val); int atomic. Exch(int* address, int val); int atomic. Min(int* address, int val); int atomic. Max(int* address, int val); unsigned int atomic. Inc(unsigned int* address, unsigned int val); unsigned int atomic. Dec(unsigned int* address, unsigned int val); int atomic. CAS(int* address, int compare, int val); //compare and swap int atomic. And(int* address, int val); int atomic. Or(int* address, int val); int atomic. Xor(int* address, int val); Source: NVIDIA CUDA C Programming Guide, version 3. 2, 11/9/2010 12

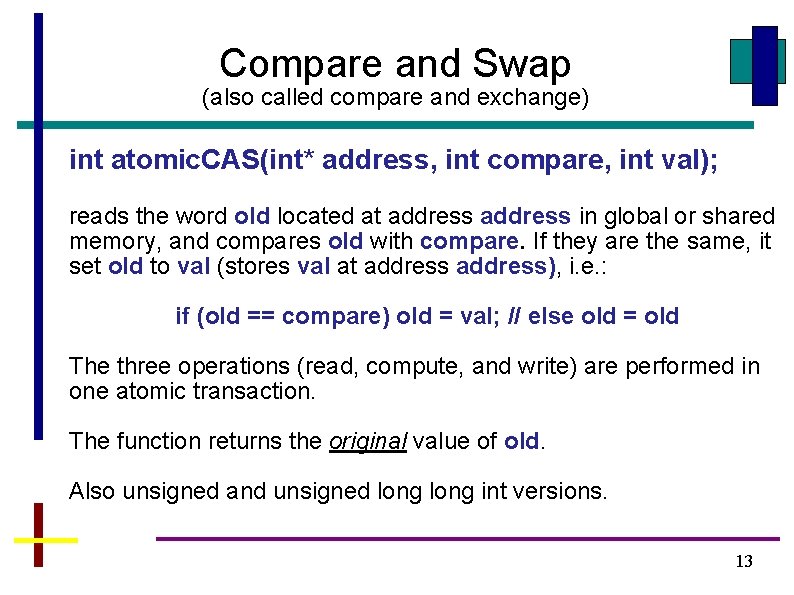

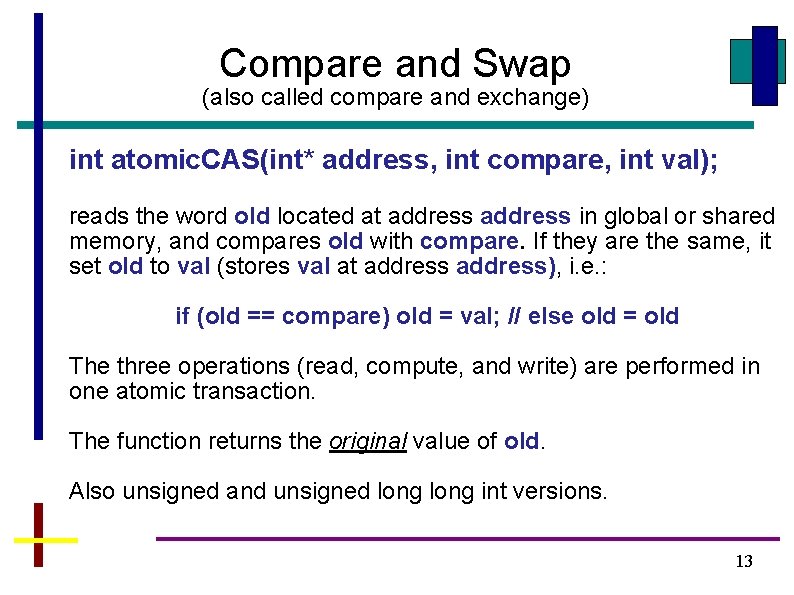

Compare and Swap (also called compare and exchange) int atomic. CAS(int* address, int compare, int val); reads the word old located at address in global or shared memory, and compares old with compare. If they are the same, it set old to val (stores val at address), i. e. : if (old == compare) old = val; // else old = old The three operations (read, compute, and write) are performed in one atomic transaction. The function returns the original value of old. Also unsigned and unsigned long int versions. 13

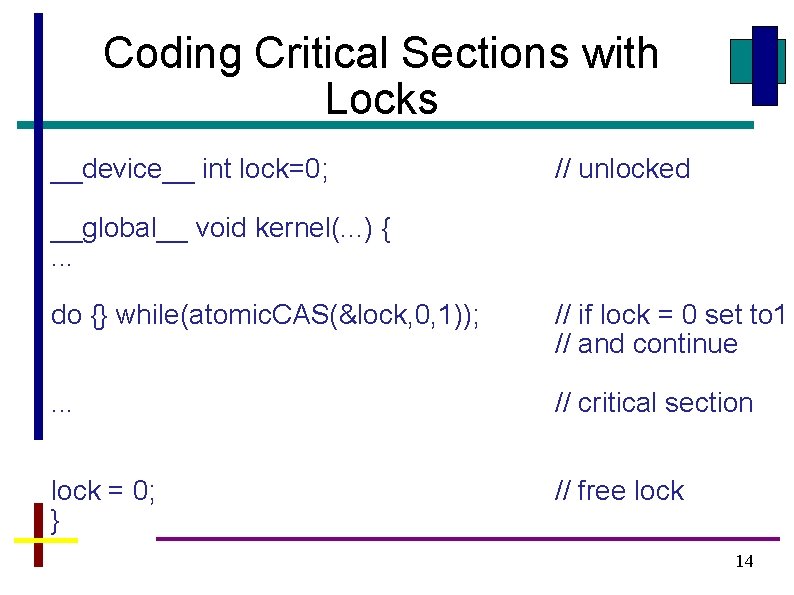

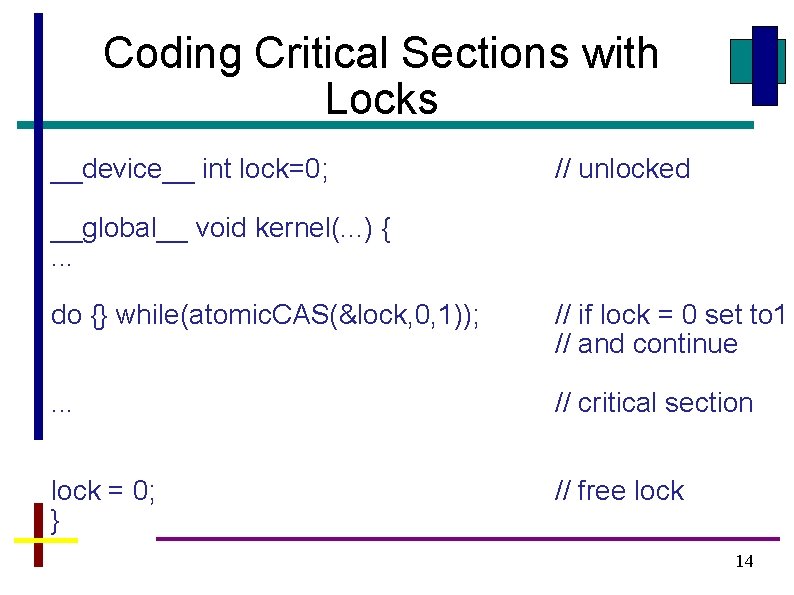

Coding Critical Sections with Locks __device__ int lock=0; // unlocked __global__ void kernel(. . . ) {. . . do {} while(atomic. CAS(&lock, 0, 1)); // if lock = 0 set to 1 // and continue . . . // critical section lock = 0; } // free lock 14

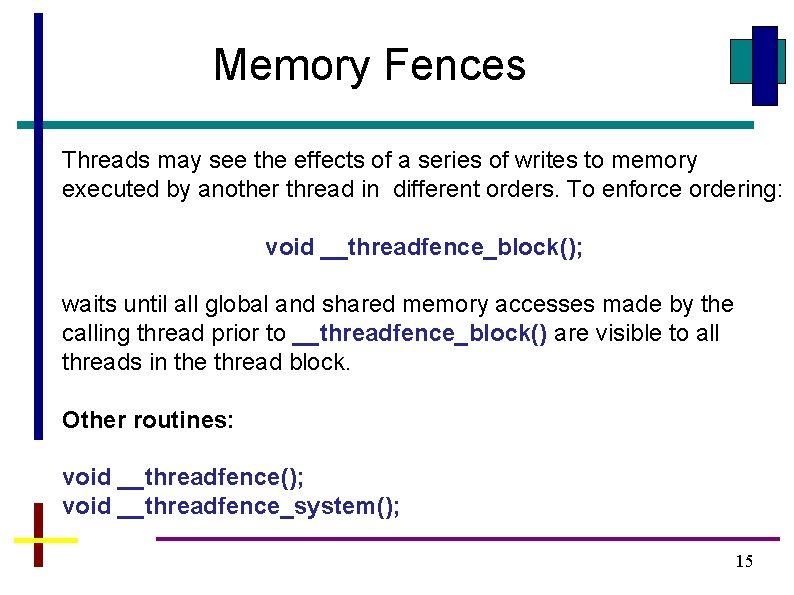

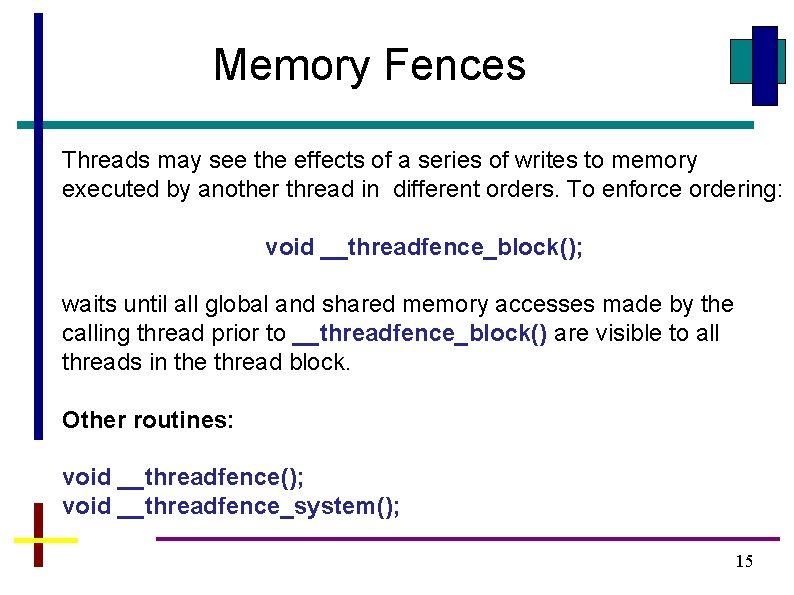

Memory Fences Threads may see the effects of a series of writes to memory executed by another thread in different orders. To enforce ordering: void __threadfence_block(); waits until all global and shared memory accesses made by the calling thread prior to __threadfence_block() are visible to all threads in the thread block. Other routines: void __threadfence(); void __threadfence_system(); 15

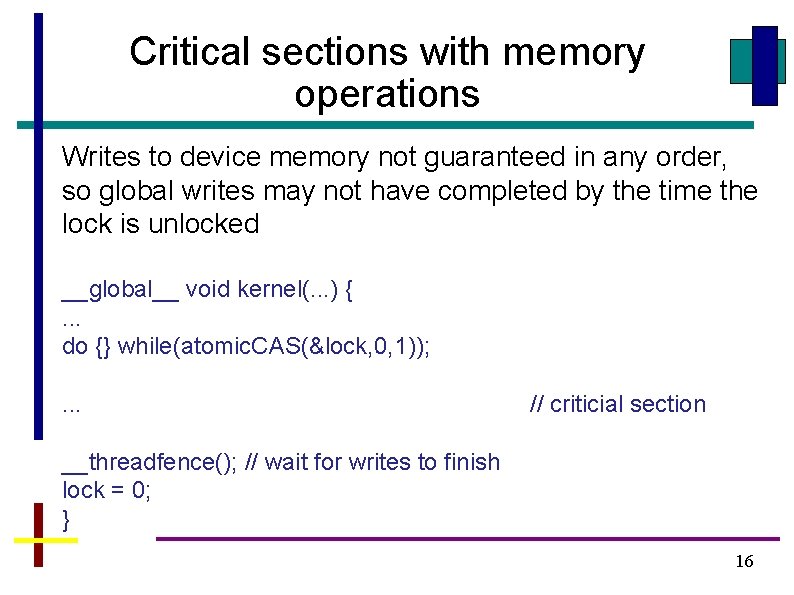

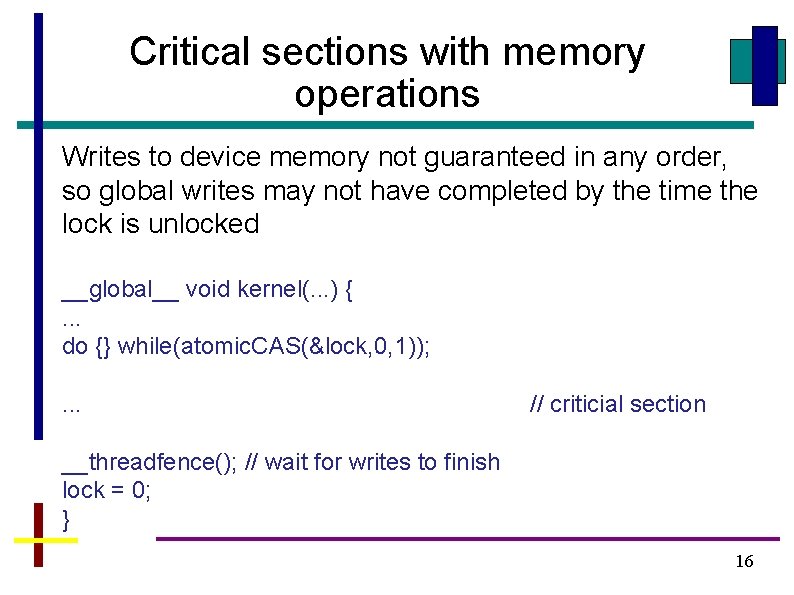

Critical sections with memory operations Writes to device memory not guaranteed in any order, so global writes may not have completed by the time the lock is unlocked __global__ void kernel(. . . ) {. . . do {} while(atomic. CAS(&lock, 0, 1)); . . . // criticial section __threadfence(); // wait for writes to finish lock = 0; } 16

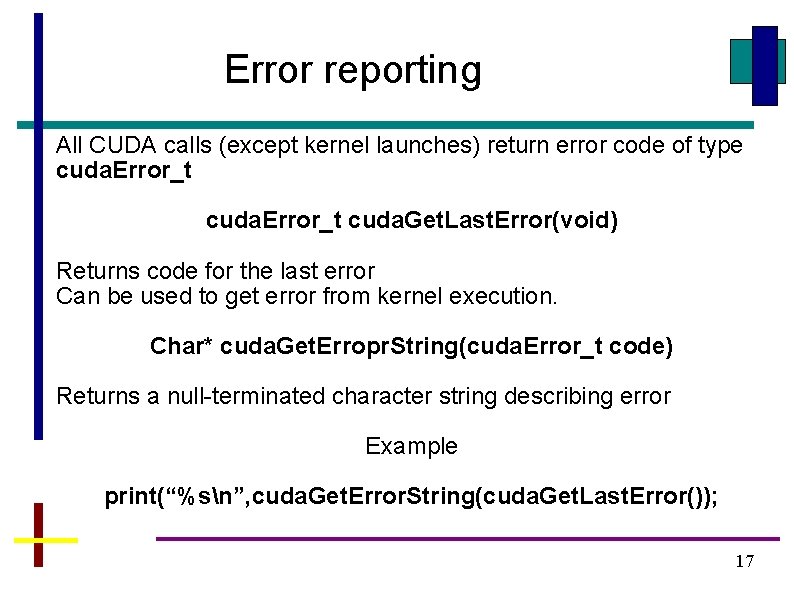

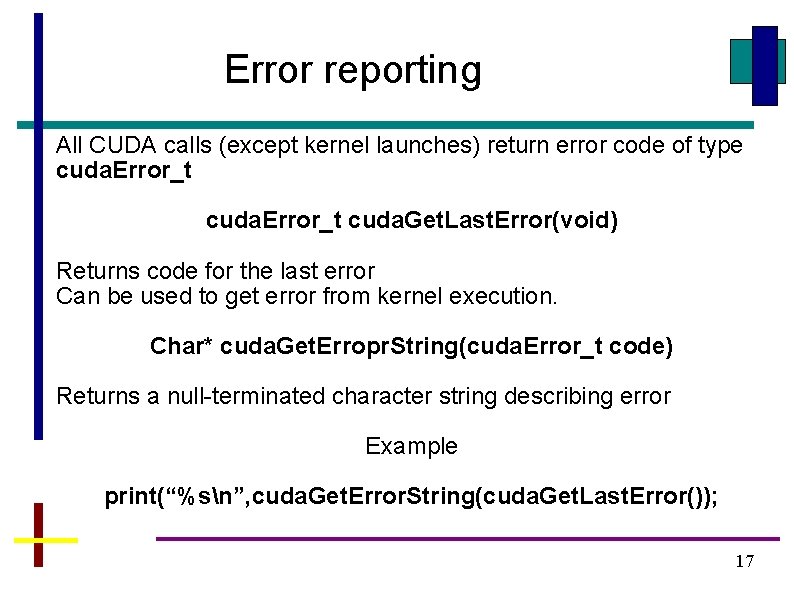

Error reporting All CUDA calls (except kernel launches) return error code of type cuda. Error_t cuda. Get. Last. Error(void) Returns code for the last error Can be used to get error from kernel execution. Char* cuda. Get. Erropr. String(cuda. Error_t code) Returns a null-terminated character string describing error Example print(“%sn”, cuda. Get. Error. String(cuda. Get. Last. Error()); 17

Questions