CUDA ITK WonKi Jeong SCI Institute University of

![Median filter • Viola et al. [VIS 03] – Finding median by bisection of Median filter • Viola et al. [VIS 03] – Finding median by bisection of](https://slidetodoc.com/presentation_image_h2/d7a94654c00d0107d5e06f6a7a040a74/image-11.jpg)

- Slides: 20

CUDA ITK Won-Ki Jeong SCI Institute University of Utah

NVIDIA G 80 • New architecture for computing on the GPU – GPU as massively parallel multithreaded machine • One step further from streaming model – New hardware features • Unified shaders (ALUs) • Flexible memory access (scatter) • Fast user-controllable on-chip memory • Integer, bitwise operations

NVIDIA CUDA • C-extension NVIDIA GPU programming language – – – No graphics API overhead Easy to learn Support development tools – – Function type : __global__, __device__, __host__ Variable type : __shared__, __constant__ cuda. Malloc(), cuda. Free(), cuda. Memcpy(), … __syncthread(), atomic. Add(), … • Extensions / API • Program types – Device program (kernel) : run on the GPU – Host program : run on the CPU to call device programs

CUDA ITK • ITK powered by CUDA – Many registration / image processing functions are still computationally expensive and parallelizable – Current ITK parallelization is bound by # of CPUs (cores) • Our approach – Implement several well-known ITK image filters using NVIDIA CUDA – Focus on 3 D volume processing • CT / MRI datasets are mostly 3 D volume

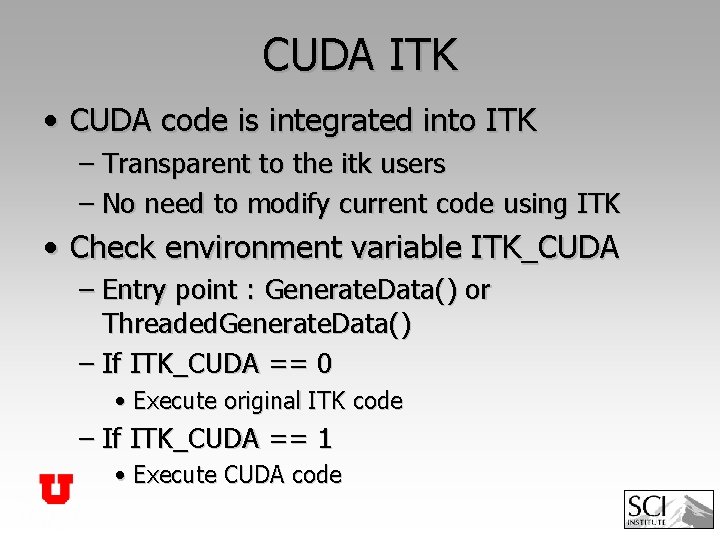

CUDA ITK • CUDA code is integrated into ITK – Transparent to the itk users – No need to modify current code using ITK • Check environment variable ITK_CUDA – Entry point : Generate. Data() or Threaded. Generate. Data() – If ITK_CUDA == 0 • Execute original ITK code – If ITK_CUDA == 1 • Execute CUDA code

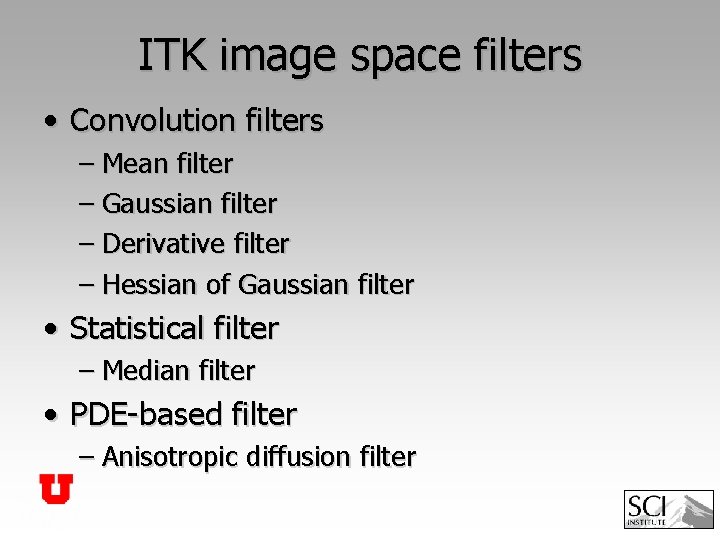

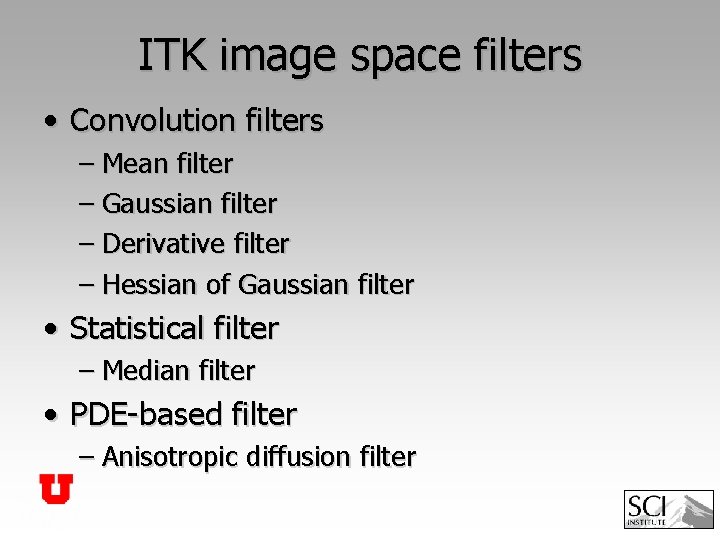

ITK image space filters • Convolution filters – Mean filter – Gaussian filter – Derivative filter – Hessian of Gaussian filter • Statistical filter – Median filter • PDE-based filter – Anisotropic diffusion filter

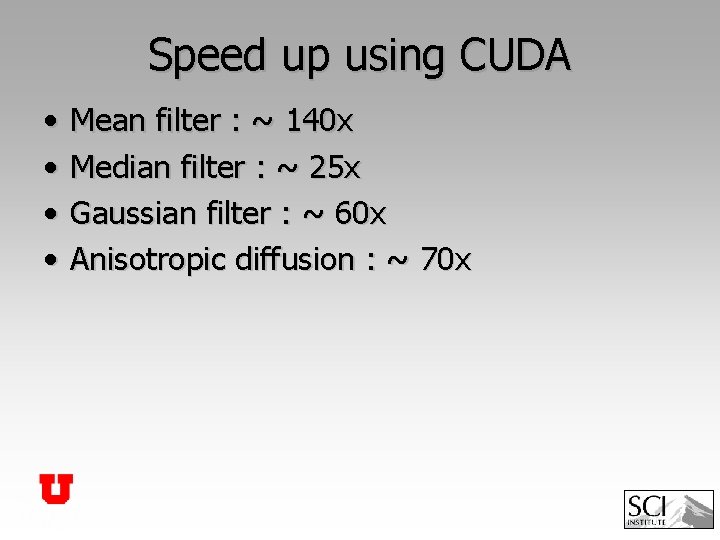

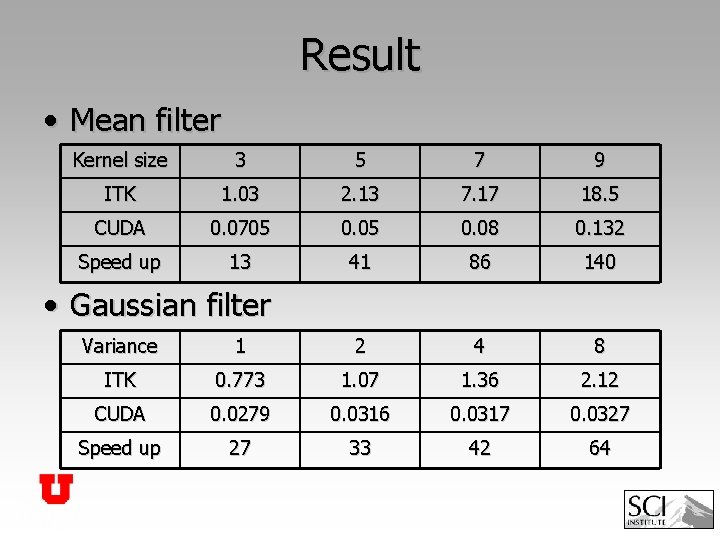

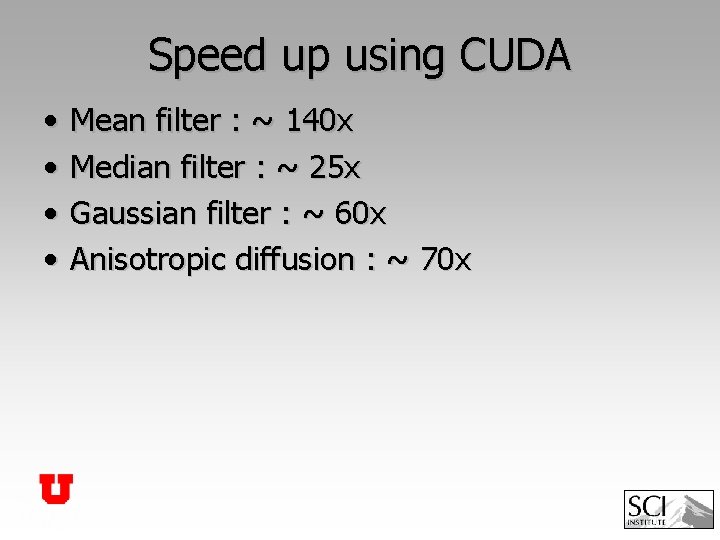

Speed up using CUDA • • Mean filter : ~ 140 x Median filter : ~ 25 x Gaussian filter : ~ 60 x Anisotropic diffusion : ~ 70 x

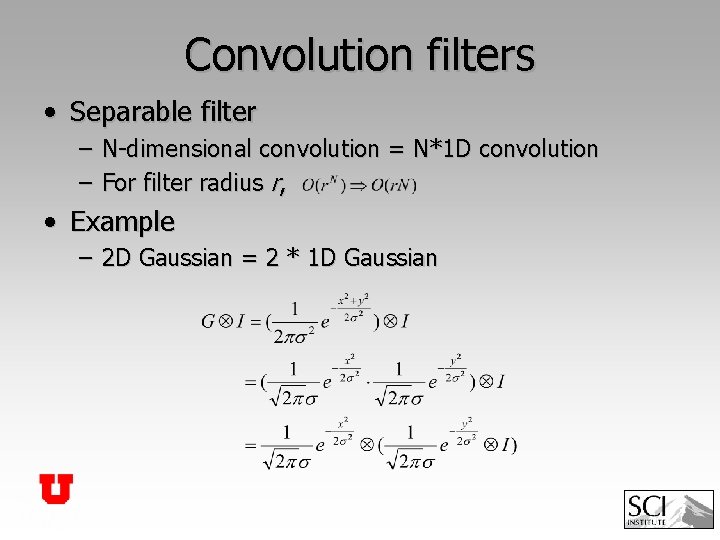

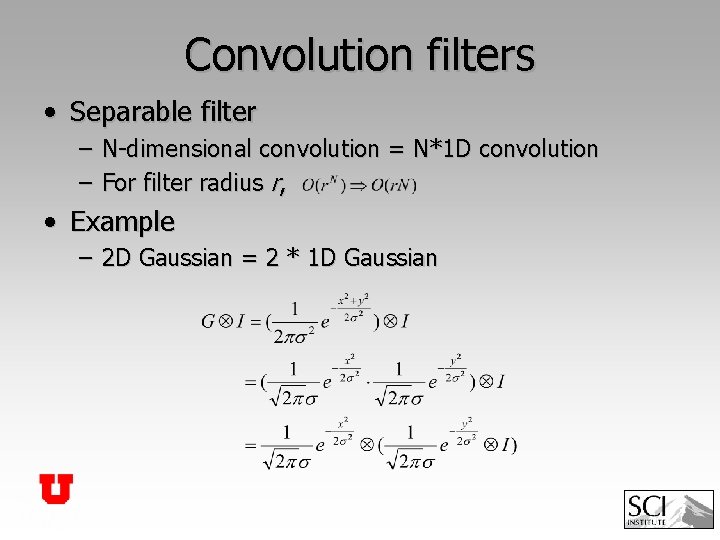

Convolution filters • Separable filter – N-dimensional convolution = N*1 D convolution – For filter radius r, • Example – 2 D Gaussian = 2 * 1 D Gaussian

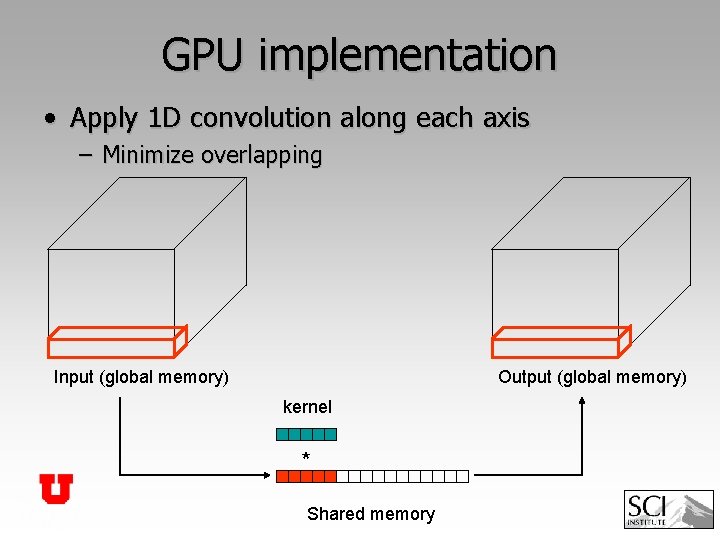

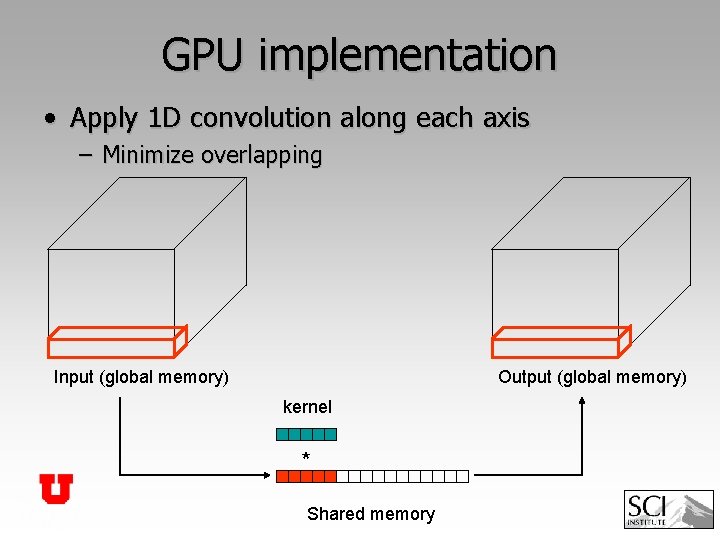

GPU implementation • Apply 1 D convolution along each axis – Minimize overlapping Input (global memory) Output (global memory) kernel * Shared memory

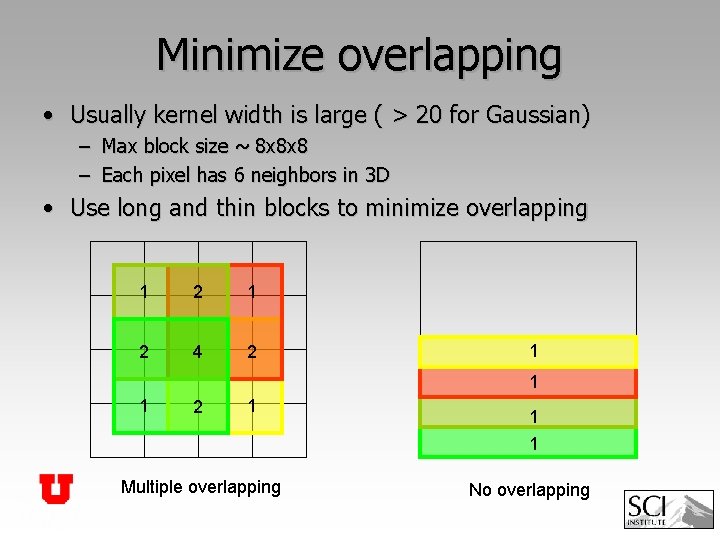

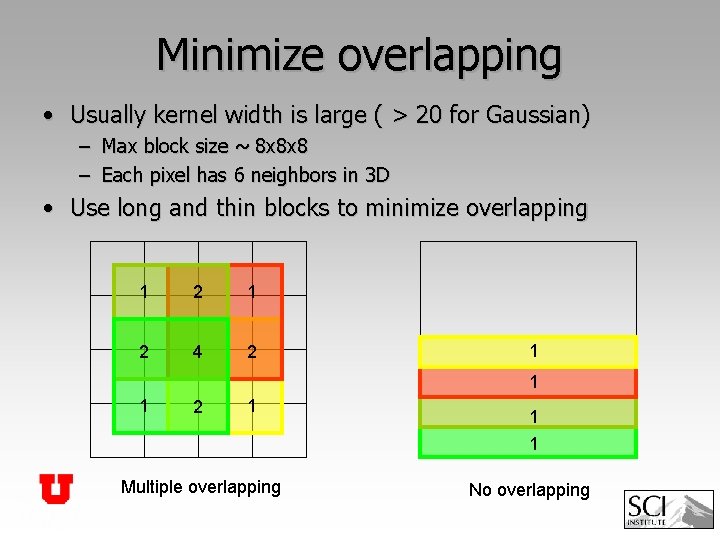

Minimize overlapping • Usually kernel width is large ( > 20 for Gaussian) – Max block size ~ 8 x 8 x 8 – Each pixel has 6 neighbors in 3 D • Use long and thin blocks to minimize overlapping 1 2 4 2 1 1 1 2 1 Multiple overlapping 1 1 No overlapping

![Median filter Viola et al VIS 03 Finding median by bisection of Median filter • Viola et al. [VIS 03] – Finding median by bisection of](https://slidetodoc.com/presentation_image_h2/d7a94654c00d0107d5e06f6a7a040a74/image-11.jpg)

Median filter • Viola et al. [VIS 03] – Finding median by bisection of histogram bins – Log(# bins) iterations (e. g. , 8 -bit pixel : 8 iterations) Intensity : 0 1. 1 2 3 5 6 7 4 1 3 8 1 2 0 1 16 2. 4 4 1 3 8 1 2 0 1 5 3. 11

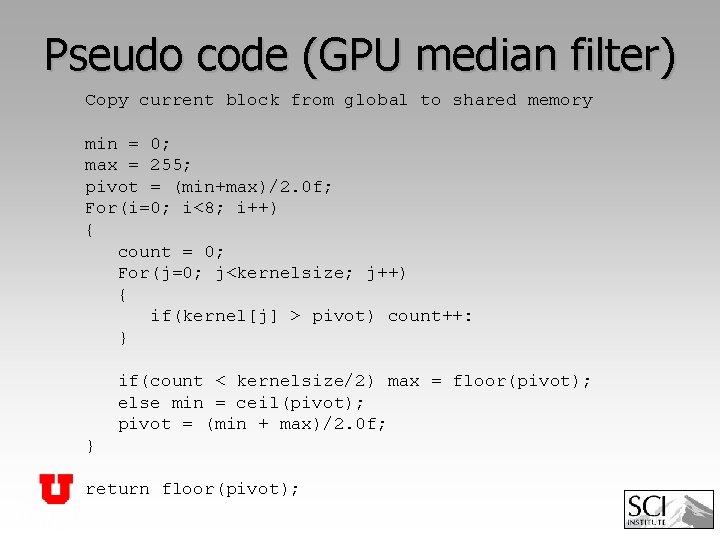

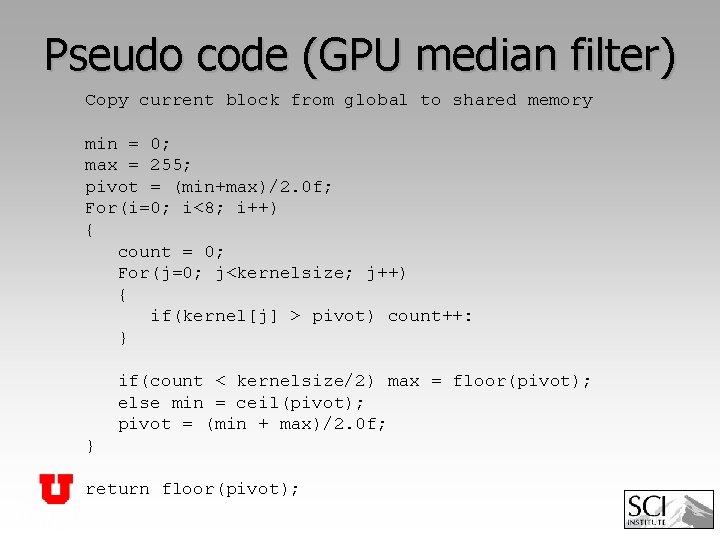

Pseudo code (GPU median filter) Copy current block from global to shared memory min = 0; max = 255; pivot = (min+max)/2. 0 f; For(i=0; i<8; i++) { count = 0; For(j=0; j<kernelsize; j++) { if(kernel[j] > pivot) count++: } if(count < kernelsize/2) max = floor(pivot); else min = ceil(pivot); pivot = (min + max)/2. 0 f; } return floor(pivot);

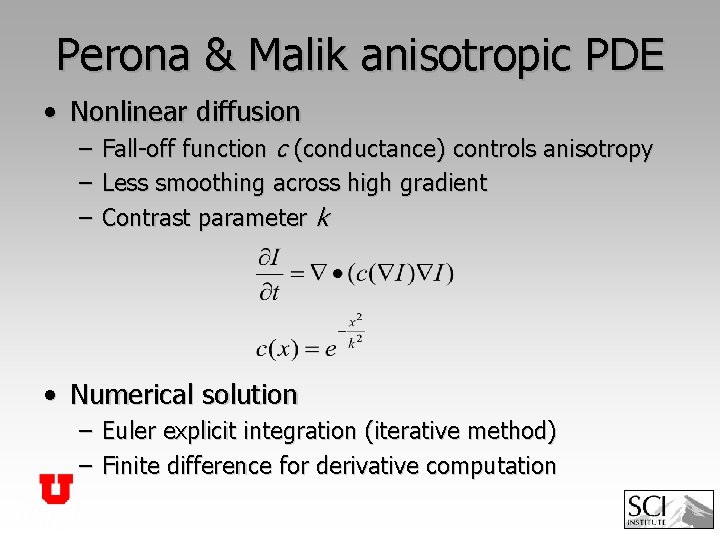

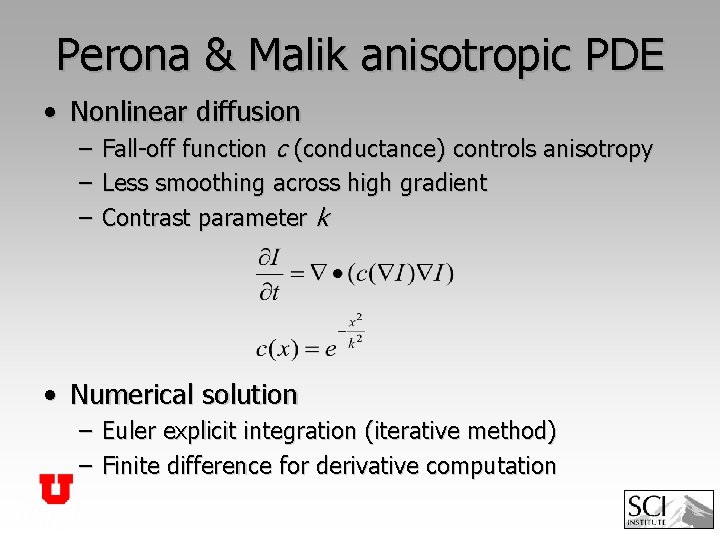

Perona & Malik anisotropic PDE • Nonlinear diffusion – – – Fall-off function c (conductance) controls anisotropy Less smoothing across high gradient Contrast parameter k • Numerical solution – Euler explicit integration (iterative method) – Finite difference for derivative computation

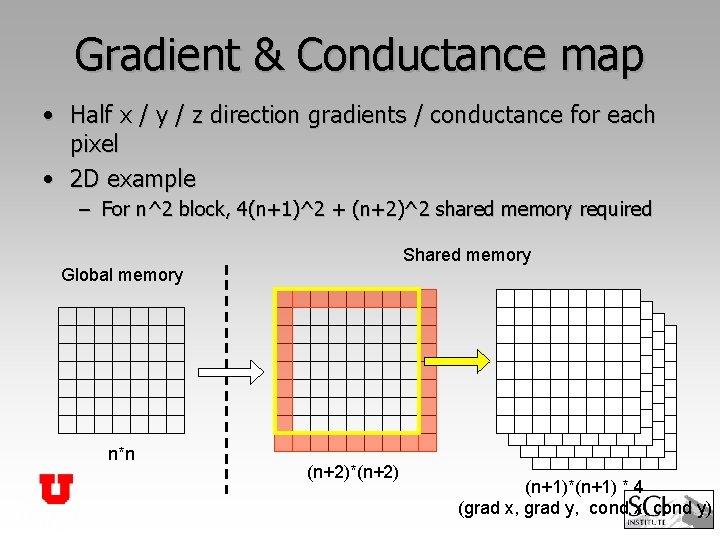

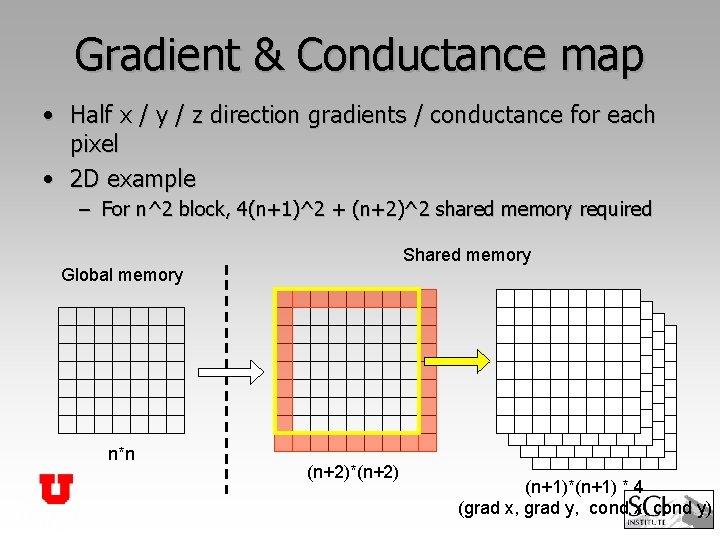

Gradient & Conductance map • Half x / y / z direction gradients / conductance for each pixel • 2 D example – For n^2 block, 4(n+1)^2 + (n+2)^2 shared memory required Shared memory Global memory n*n (n+2)*(n+2) (n+1)*(n+1) * 4 (grad x, grad y, cond x, cond y)

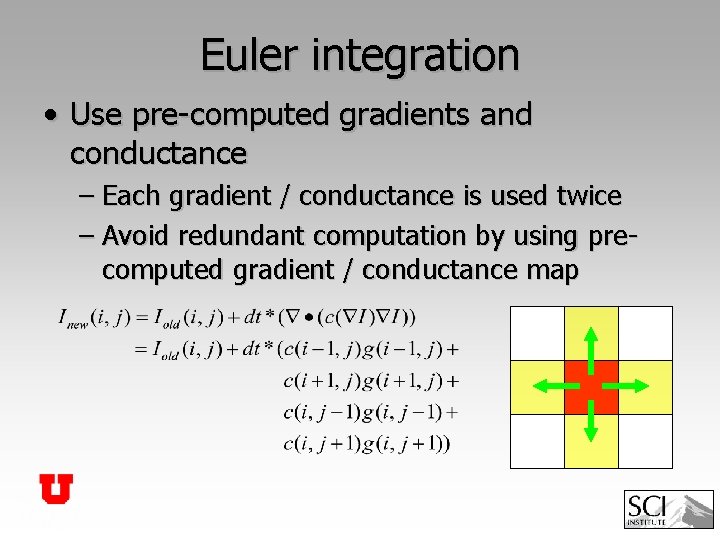

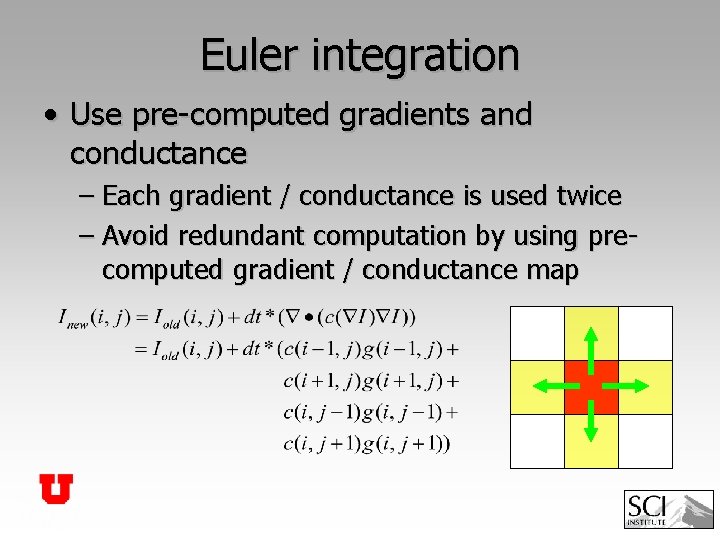

Euler integration • Use pre-computed gradients and conductance – Each gradient / conductance is used twice – Avoid redundant computation by using precomputed gradient / conductance map

Experiments • Test environment – CPU : AMD Opteron Dual Core 1. 8 GHz – GPU : Tesla C 870 • Input volume is 128^3

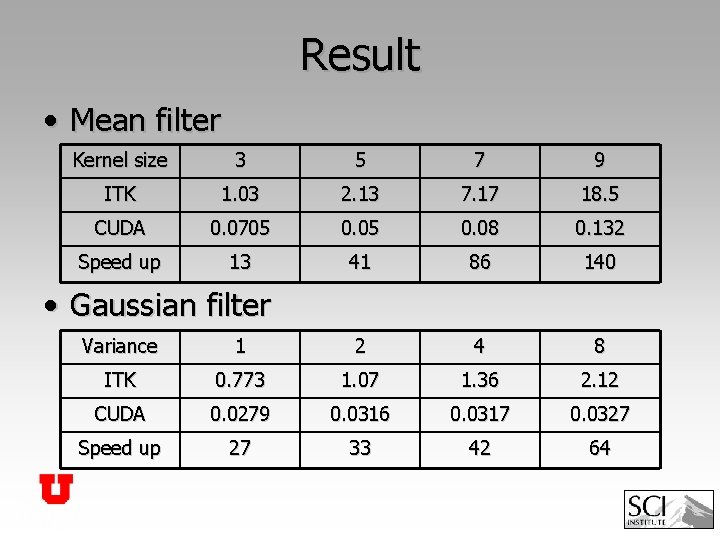

Result • Mean filter Kernel size 3 5 7 9 ITK 1. 03 2. 13 7. 17 18. 5 CUDA 0. 0705 0. 08 0. 132 Speed up 13 41 86 140 • Gaussian filter Variance 1 2 4 8 ITK 0. 773 1. 07 1. 36 2. 12 CUDA 0. 0279 0. 0316 0. 0317 0. 0327 Speed up 27 33 42 64

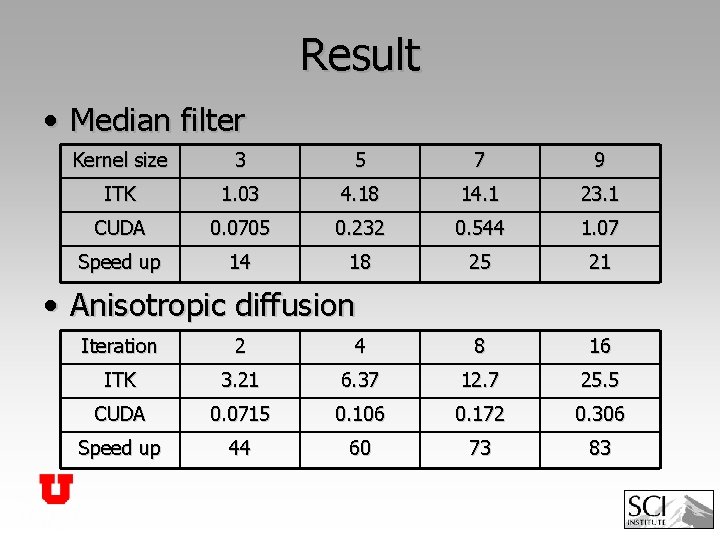

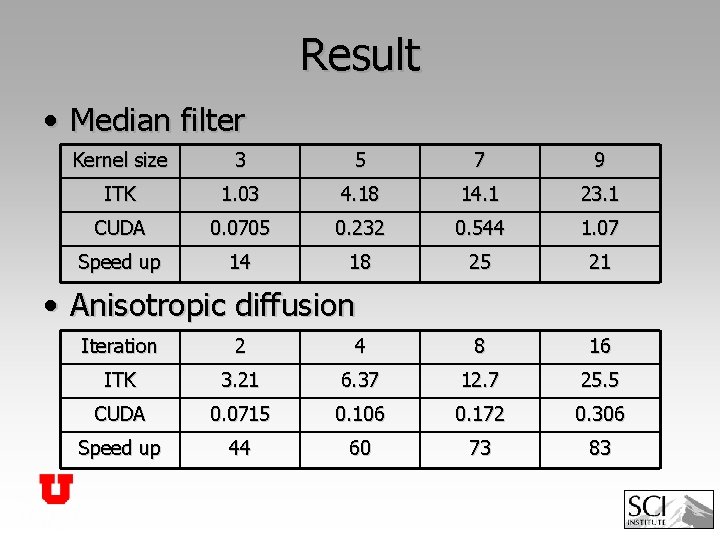

Result • Median filter Kernel size 3 5 7 9 ITK 1. 03 4. 18 14. 1 23. 1 CUDA 0. 0705 0. 232 0. 544 1. 07 Speed up 14 18 25 21 • Anisotropic diffusion Iteration 2 4 8 16 ITK 3. 21 6. 37 12. 7 25. 5 CUDA 0. 0715 0. 106 0. 172 0. 306 Speed up 44 60 73 83

Summary • ITK powered by CUDA – Image space filters using CUDA – Up to 140 x speed up • Future work – GPU image class for ITK • Reduce CPU to GPU memory I/O • Pipelining support – – – Image registration Numerical library (vnl) Out-of-GPU-core processing • Seismic volumes (~10 s to 100 s GB)

Questions?