CUDA and GPU Training Workshop April 21 2014

- Slides: 42

CUDA and GPU Training Workshop April 21, 2014 University of Georgia CUDA Teaching Center

UGA CUDA Teaching Center

- UGA, through the efforts of Professor Thiab Taha, was selected by NVIDIA as a CUDA Teaching Center (est. 2011).
- Presenters: Jennifer Rouan and Sayali Kale
- Visit us at http://cuda.uga.edu

Workshop Outline

- Introduction to GPUs and CUDA
- CUDA Programming Concepts
- Current GPU Research at UGA
- GPU Computing Resources at UGA
- “My First CUDA Program”: hands-on programming project

A Little Bit of GPU Background

- A GPU is a computer chip that performs rapid mathematical calculations in parallel, primarily for the purpose of rendering images.
- NVIDIA introduced the first GPU, the GeForce 256, in 1999 and remains one of the major players in the market.
- Using CUDA, GPUs can also be used for general-purpose processing; this approach is known as GPGPU.

Question

- What are the different ways hardware designers make computers run faster?
  1. Higher clock speeds
  2. More work per clock cycle
  3. More processors

What is CUDA?

- Compute Unified Device Architecture
- It is a parallel computing platform and programming model created by NVIDIA and implemented by the GPUs (Graphics Processing Units) that they produce.
- The CUDA compiler uses a variation of C, with future support for C++.
- CUDA was released for PC on February 15, 2007, and a beta version for Mac OS X followed on August 19, 2008.

Why CUDA?

- CUDA makes it possible to program the GPU in high-level languages.
- GPUs allow the creation of a very large number of concurrently executing threads at very low system resource cost.
- CUDA also exposes fast shared memory (16 KB) that can be shared between threads.

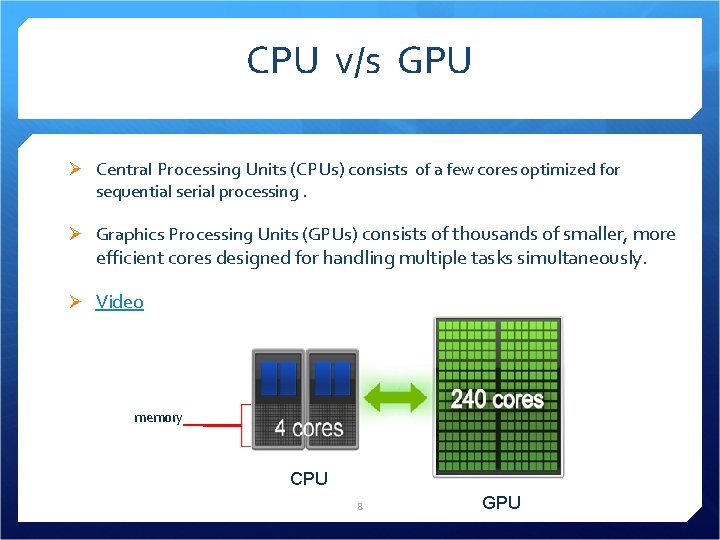

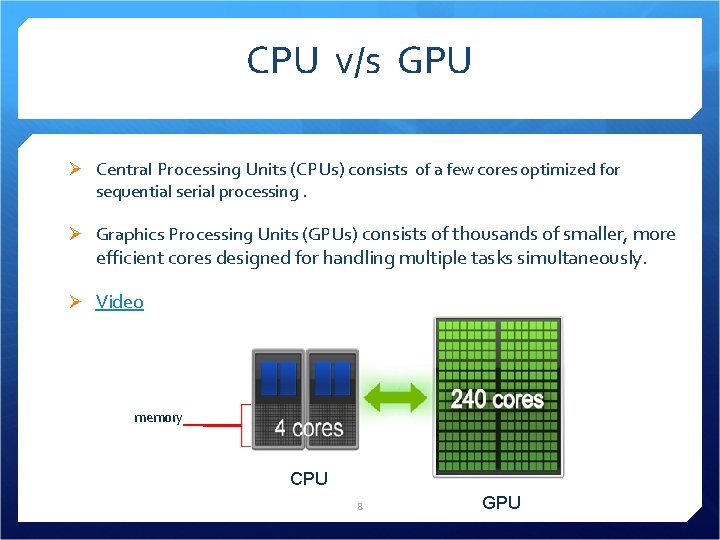

CPU vs. GPU

- Central Processing Units (CPUs) consist of a few cores optimized for sequential, serial processing.
- Graphics Processing Units (GPUs) consist of thousands of smaller, more efficient cores designed for handling multiple tasks simultaneously.
- Video memory

[Figure labels: CPU, GPU]

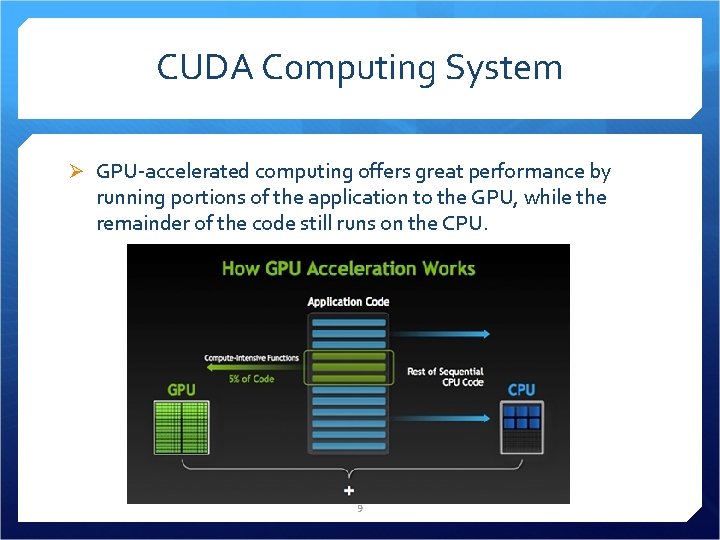

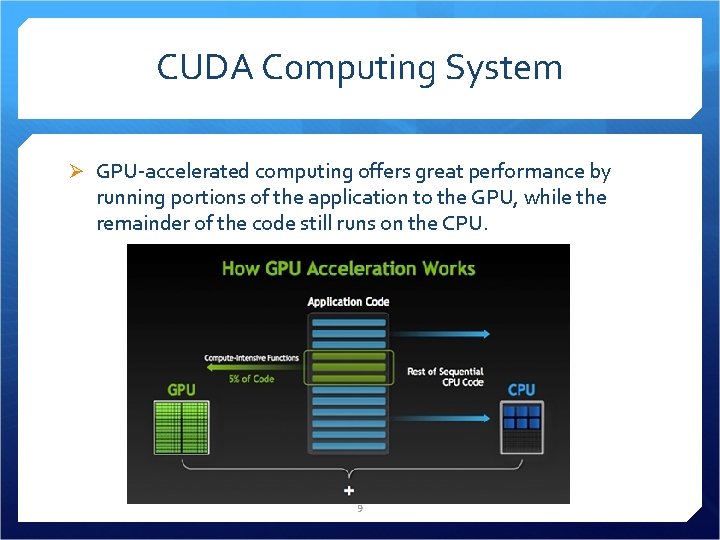

CUDA Computing System

- GPU-accelerated computing offers great performance by offloading portions of the application to the GPU, while the remainder of the code still runs on the CPU.

CUDA Computing System

- A CUDA computing system consists of a host (CPU) and one or more devices (GPUs).
- The portions of the program that can be evaluated in parallel are executed on the device. The host handles the serial portions and the transfer of execution and data to and from the device.

CUDA Program Source Code

- A CUDA program is a unified source code encompassing both host and device code. Convention: program_name.cu
- NVIDIA’s compiler (nvcc) separates the host and device code at compilation.
- The host code is compiled by the host’s standard C compilers. The device code is further compiled by nvcc for execution on the GPU.

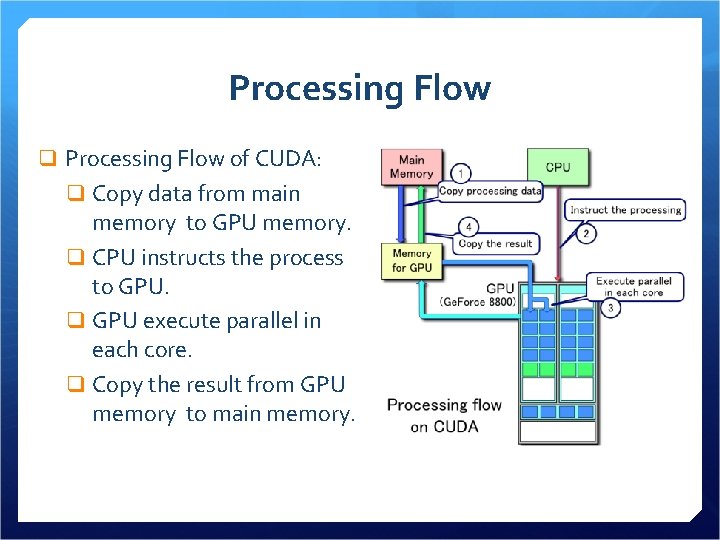

CUDA Program Execution steps:

1. The CPU allocates storage on the GPU (cudaMalloc).
2. The CPU copies input data from the CPU to the GPU (cudaMemcpy).
3. The CPU launches a kernel (the invoked program) on the GPU to process the data (kernel launch).
4. The CPU copies the results back from the GPU to the CPU (cudaMemcpy).

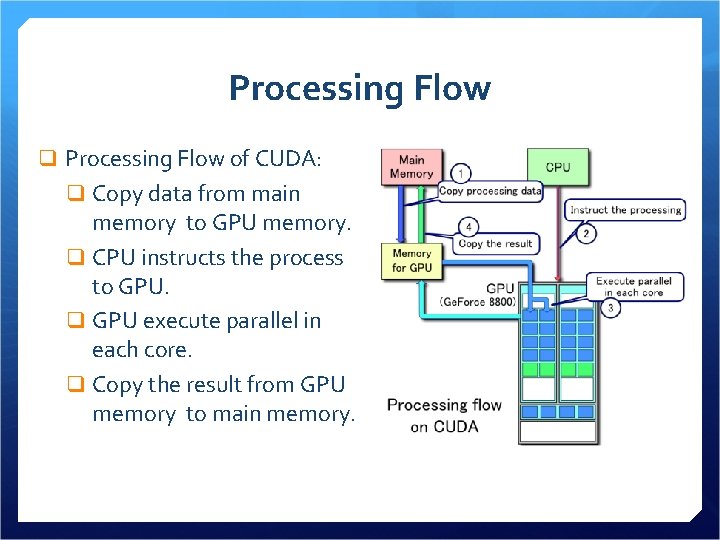

Processing Flow

Processing flow of CUDA:

- Copy data from main memory to GPU memory.
- The CPU instructs the GPU to start processing.
- The GPU executes in parallel on each core.
- Copy the result from GPU memory to main memory.

CUDA Program Execution

- Execution of a CUDA program begins on the host CPU.
- When a kernel function (or simply “kernel”) is launched, execution is transferred to the device and a massive “grid” of lightweight threads is spawned.
- When all threads of a kernel have finished executing, the grid terminates and control of the program returns to the host until another kernel is launched.

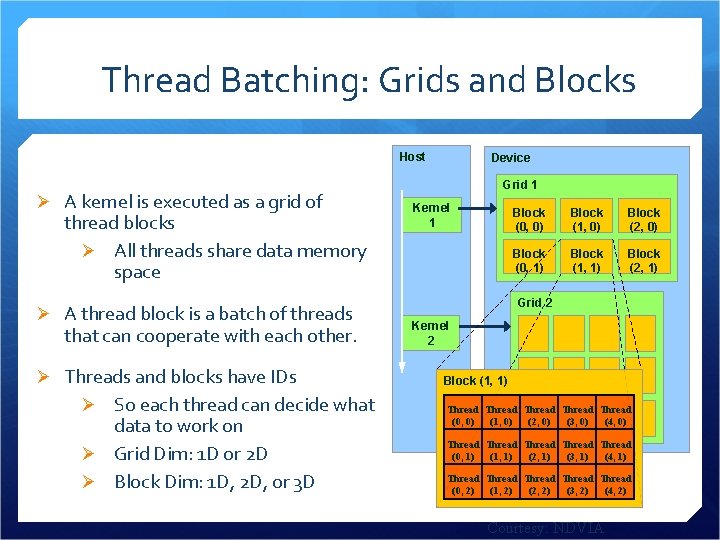

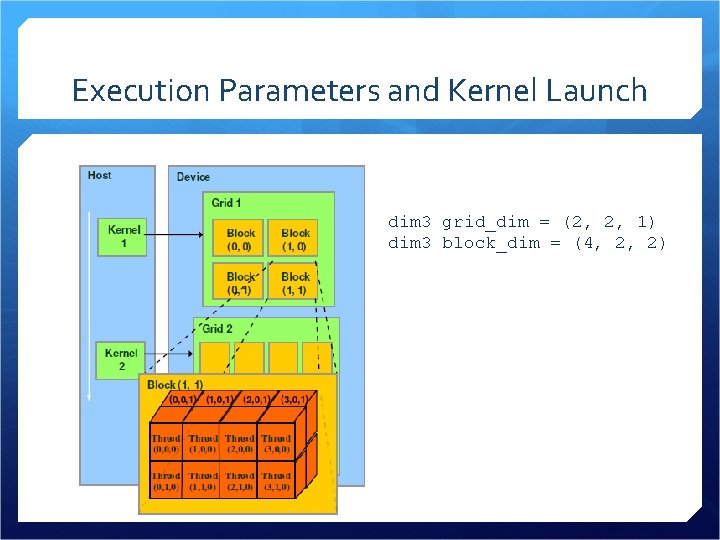

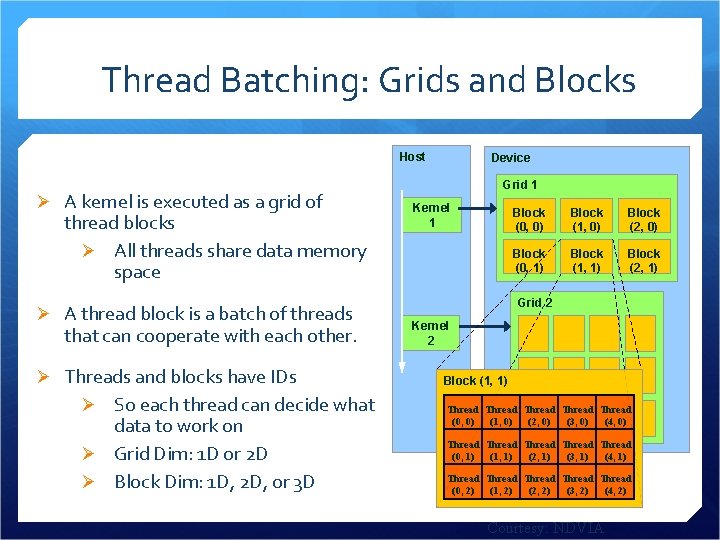

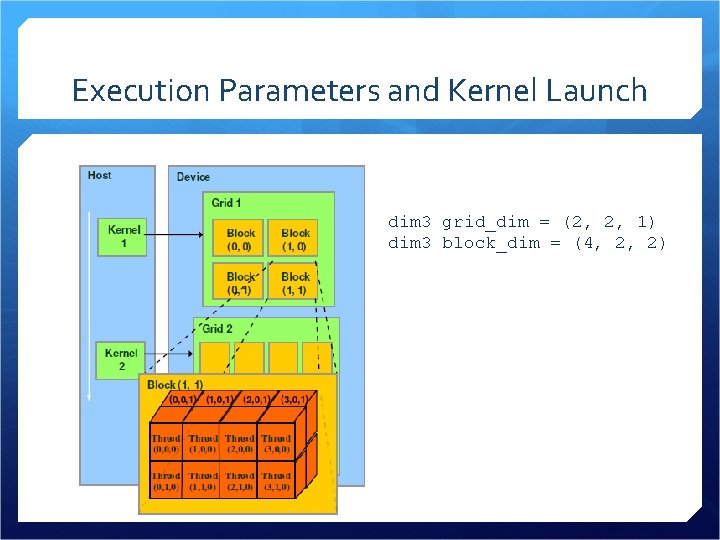

Thread Batching: Grids and Blocks

- A kernel is executed as a grid of thread blocks.
- All threads share data memory space.
- A thread block is a batch of threads that can cooperate with each other.
- Threads and blocks have IDs, so each thread can decide what data to work on.
- Grid dimensions: 1D or 2D
- Block dimensions: 1D, 2D, or 3D

[Figure: the host launches Kernel 1 and Kernel 2; on the device each kernel runs as its own grid (Grid 1, Grid 2). Grid 1 is a 3 x 2 array of blocks, Block (0, 0) through Block (2, 1). Block (1, 1) is expanded to show a 5 x 3 array of threads, Thread (0, 0) through Thread (4, 2). Courtesy: NVIDIA]

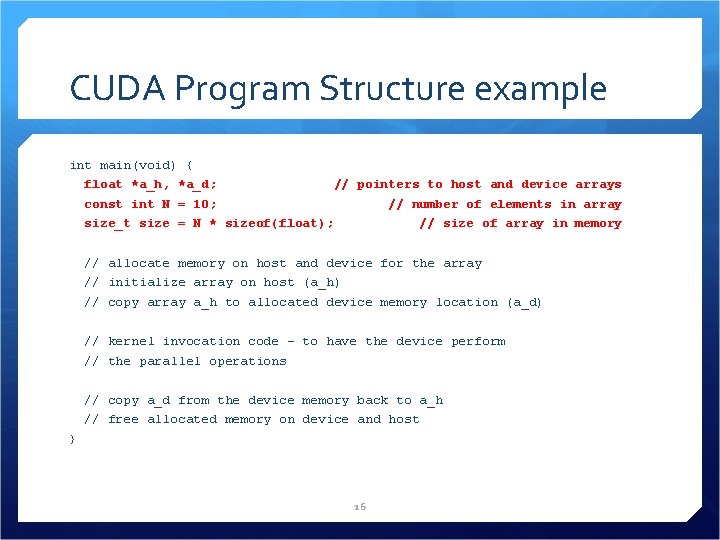

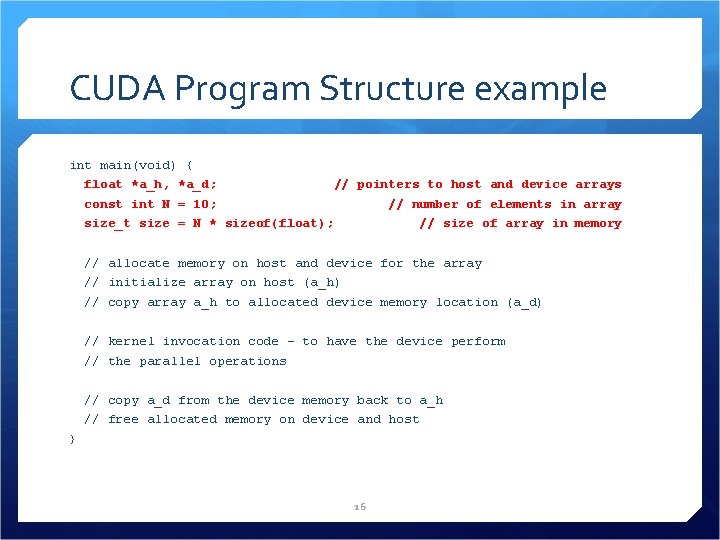

CUDA Program Structure example

    int main(void) {
        float *a_h, *a_d;                 // pointers to host and device arrays
        const int N = 10;                 // number of elements in array
        size_t size = N * sizeof(float);  // size of array in memory

        // allocate memory on host and device for the array
        // initialize array on host (a_h)
        // copy array a_h to allocated device memory location (a_d)
        // kernel invocation code - to have the device perform
        //   the parallel operations
        // copy a_d from the device memory back to a_h
        // free allocated memory on device and host
    }

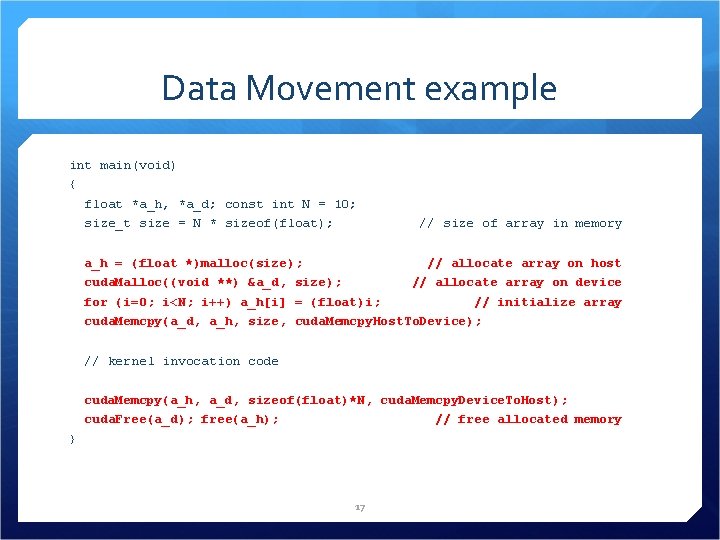

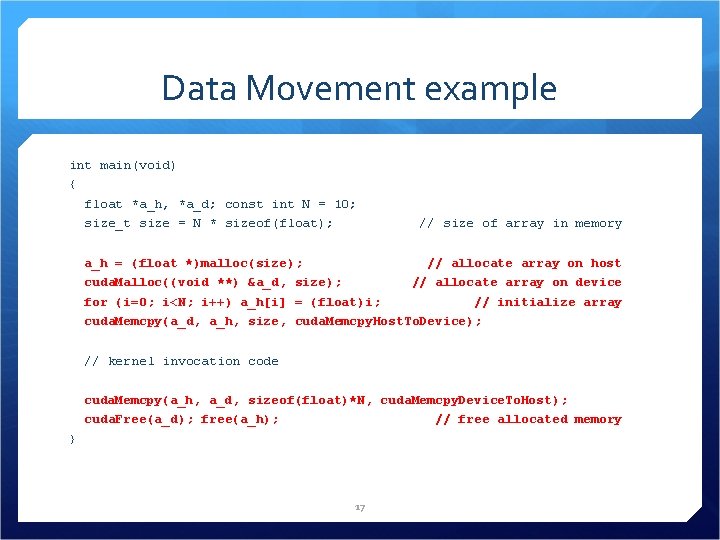

Data Movement example

    int main(void) {
        float *a_h, *a_d;
        const int N = 10;
        size_t size = N * sizeof(float);       // size of array in memory

        a_h = (float *)malloc(size);           // allocate array on host
        cudaMalloc((void **) &a_d, size);      // allocate array on device
        for (int i = 0; i < N; i++)            // initialize array
            a_h[i] = (float)i;
        cudaMemcpy(a_d, a_h, size, cudaMemcpyHostToDevice);

        // kernel invocation code

        cudaMemcpy(a_h, a_d, sizeof(float)*N, cudaMemcpyDeviceToHost);
        cudaFree(a_d); free(a_h);              // free allocated memory
    }

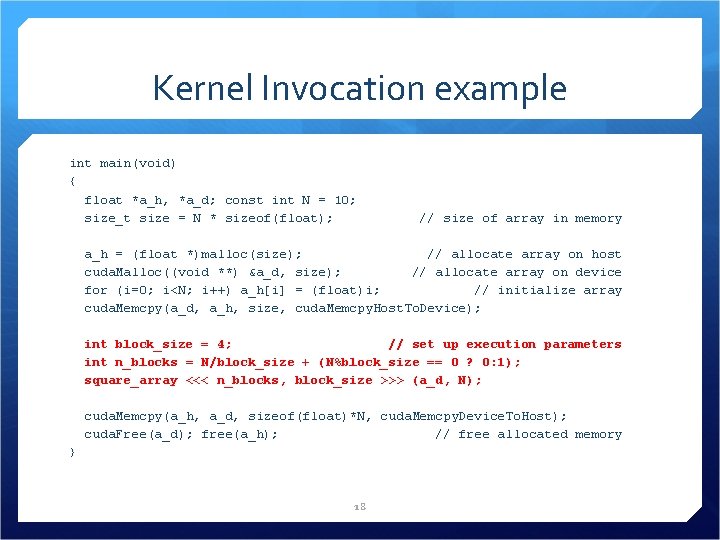

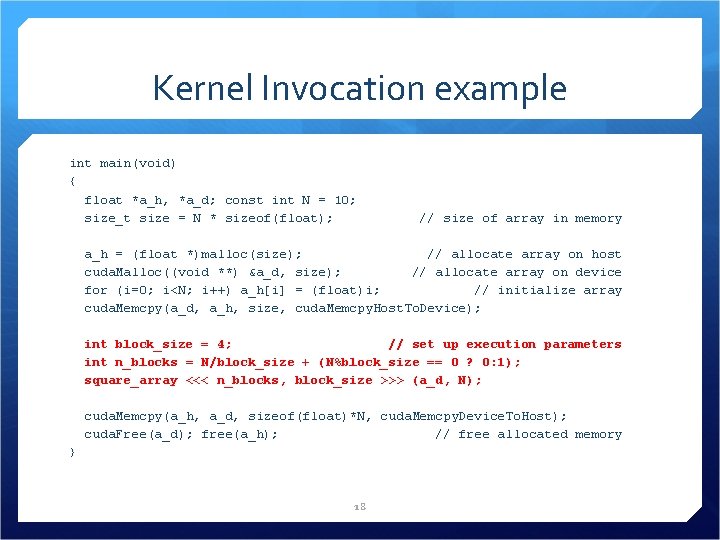

Kernel Invocation example

    int main(void) {
        float *a_h, *a_d;
        const int N = 10;
        size_t size = N * sizeof(float);       // size of array in memory

        a_h = (float *)malloc(size);           // allocate array on host
        cudaMalloc((void **) &a_d, size);      // allocate array on device
        for (int i = 0; i < N; i++)            // initialize array
            a_h[i] = (float)i;
        cudaMemcpy(a_d, a_h, size, cudaMemcpyHostToDevice);

        int block_size = 4;                    // set up execution parameters
        int n_blocks = N/block_size + (N%block_size == 0 ? 0 : 1);
        square_array<<<n_blocks, block_size>>>(a_d, N);

        cudaMemcpy(a_h, a_d, sizeof(float)*N, cudaMemcpyDeviceToHost);
        cudaFree(a_d); free(a_h);              // free allocated memory
    }

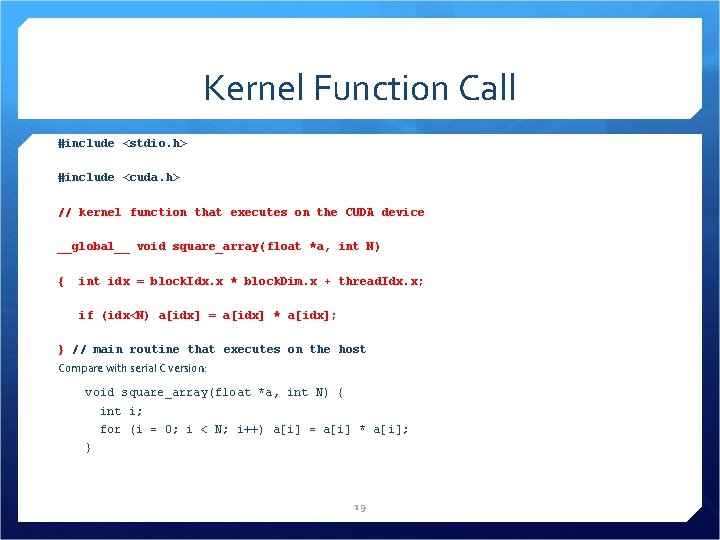

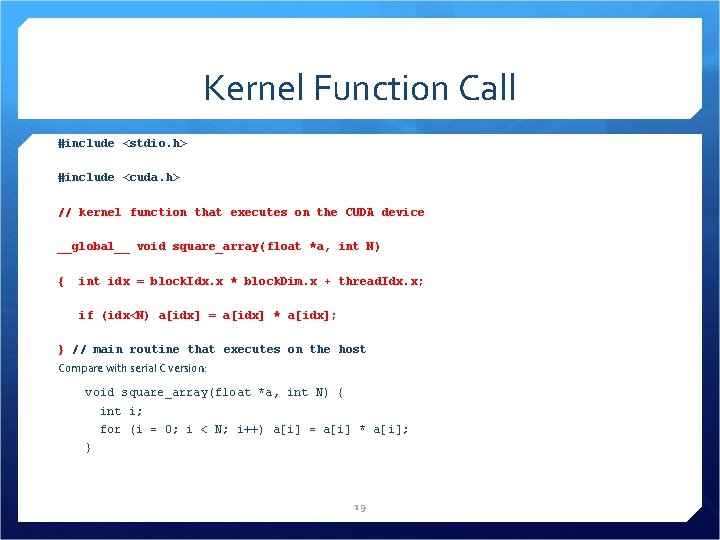

Kernel Function Call

    #include <stdio.h>
    #include <cuda.h>

    // kernel function that executes on the CUDA device
    __global__ void square_array(float *a, int N)
    {
        int idx = blockIdx.x * blockDim.x + threadIdx.x;
        if (idx < N) a[idx] = a[idx] * a[idx];
    }

    // main routine that executes on the host

Compare with serial C version:

    void square_array(float *a, int N)
    {
        int i;
        for (i = 0; i < N; i++) a[i] = a[i] * a[i];
    }
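The fragments from the preceding slides can be assembled into one file, for example square.cu, and built with nvcc. The following is a minimal sketch, not the workshop's official solution: the CHECK macro and the square_on_device wrapper are hypothetical names added here for illustration, and the block count uses a ceiling division that is equivalent to the expression on the Kernel Invocation slide.

```cuda
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>

// Hypothetical helper (not from the slides): stop with a message on any CUDA error.
#define CHECK(call)                                                  \
    do {                                                             \
        cudaError_t err = (call);                                    \
        if (err != cudaSuccess) {                                    \
            fprintf(stderr, "CUDA error: %s (line %d)\n",            \
                    cudaGetErrorString(err), __LINE__);              \
            exit(EXIT_FAILURE);                                      \
        }                                                            \
    } while (0)

// Kernel from the slides: each thread squares one element.
__global__ void square_array(float *a, int N)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < N) a[idx] = a[idx] * a[idx];
}

// Hypothetical wrapper: squares the N floats in a_h in place on the device.
void square_on_device(float *a_h, int N)
{
    float *a_d = NULL;
    size_t size = N * sizeof(float);

    CHECK(cudaMalloc((void **)&a_d, size));                      // step 1: allocate
    CHECK(cudaMemcpy(a_d, a_h, size, cudaMemcpyHostToDevice));   // step 2: copy in

    int block_size = 4;
    int n_blocks = (N + block_size - 1) / block_size;            // round up
    square_array<<<n_blocks, block_size>>>(a_d, N);              // step 3: launch
    CHECK(cudaGetLastError());                                   // report launch errors

    CHECK(cudaMemcpy(a_h, a_d, size, cudaMemcpyDeviceToHost));   // step 4: copy out
    CHECK(cudaFree(a_d));
}

int main(void)
{
    const int N = 10;
    float a_h[N];
    for (int i = 0; i < N; i++) a_h[i] = (float)i;

    square_on_device(a_h, N);

    for (int i = 0; i < N; i++) {
        assert(a_h[i] == (float)(i * i));
        printf("%d %f\n", i, a_h[i]);
    }
    return 0;
}
```

With N = 10 and block_size = 4, three blocks (12 threads) are launched, and the `idx < N` test in the kernel keeps the two surplus threads from writing past the end of the array.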

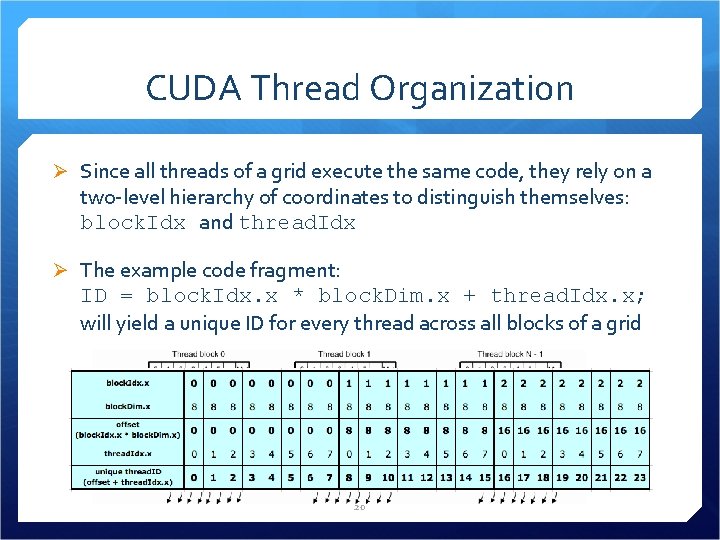

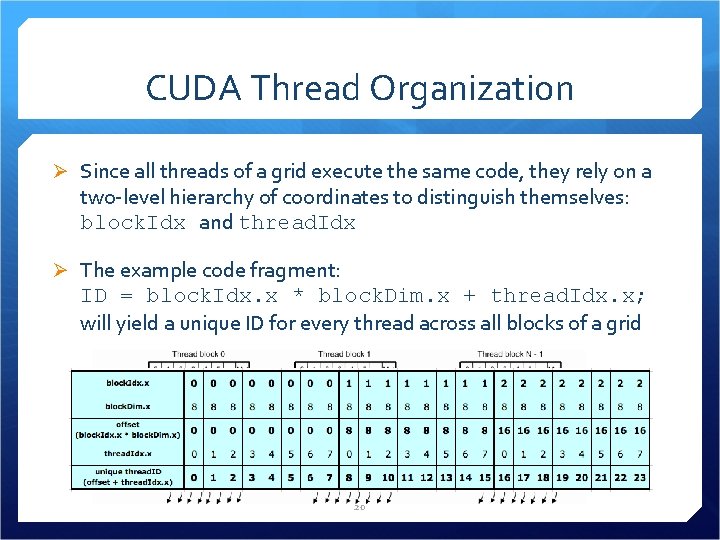

CUDA Thread Organization

- Since all threads of a grid execute the same code, they rely on a two-level hierarchy of coordinates to distinguish themselves: blockIdx and threadIdx.
- The example code fragment:

      ID = blockIdx.x * blockDim.x + threadIdx.x;

  will yield a unique ID for every thread across all blocks of a grid.
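To see the ID formula at work, each thread can store its own ID in the array slot that the ID selects. This is a small sketch for one-dimensional grids and blocks only; the names record_ids and collect_ids are hypothetical, and error checking is omitted for brevity.

```cuda
#include <assert.h>
#include <stdio.h>
#include <cuda_runtime.h>

// Each thread computes its global ID and writes it into the slot with that index.
__global__ void record_ids(int *ids, int n)
{
    int id = blockIdx.x * blockDim.x + threadIdx.x;
    if (id < n) ids[id] = id;
}

// Hypothetical host helper: launch n_blocks blocks of block_size threads
// and copy the recorded IDs back into ids_h.
void collect_ids(int *ids_h, int n_blocks, int block_size)
{
    int n = n_blocks * block_size;
    int *ids_d = NULL;
    cudaMalloc((void **)&ids_d, n * sizeof(int));
    cudaMemset(ids_d, 0xFF, n * sizeof(int));   // every byte 0xFF, so each int starts as -1
    record_ids<<<n_blocks, block_size>>>(ids_d, n);
    cudaMemcpy(ids_h, ids_d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(ids_d);
}

int main(void)
{
    int ids[12];
    collect_ids(ids, 3, 4);                     // 3 blocks of 4 threads each
    for (int i = 0; i < 12; i++) {
        assert(ids[i] == i);                    // no slot was left at -1
        printf("block %d, thread %d -> ID %d\n", i / 4, i % 4, ids[i]);
    }
    return 0;
}
```

Because every slot ends up holding its own index, the 12 threads together produced each ID from 0 to 11.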

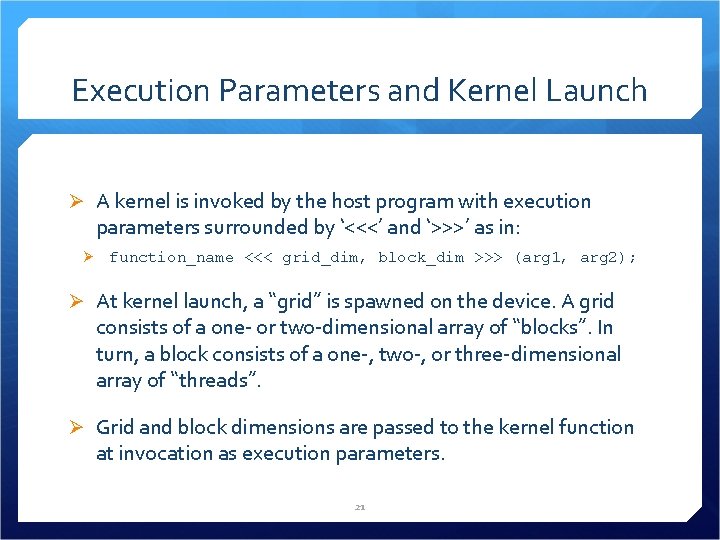

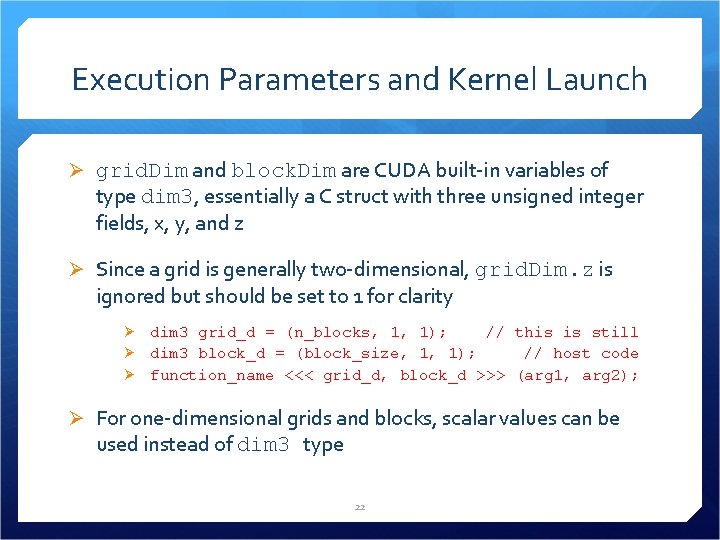

Execution Parameters and Kernel Launch

- A kernel is invoked by the host program with execution parameters surrounded by ‘<<<’ and ‘>>>’, as in:

      function_name<<<grid_dim, block_dim>>>(arg1, arg2);

- At kernel launch, a “grid” is spawned on the device. A grid consists of a one- or two-dimensional array of “blocks”. In turn, a block consists of a one-, two-, or three-dimensional array of “threads”.
- Grid and block dimensions are passed to the kernel function at invocation as execution parameters.

Execution Parameters and Kernel Launch

- gridDim and blockDim are CUDA built-in variables of type dim3, essentially a C struct with three unsigned integer fields: x, y, and z.
- Since a grid is generally two-dimensional, gridDim.z is ignored but should be set to 1 for clarity:

      dim3 grid_d(n_blocks, 1, 1);      // this is still
      dim3 block_d(block_size, 1, 1);   // host code
      function_name<<<grid_d, block_d>>>(arg1, arg2);

- For one-dimensional grids and blocks, scalar values can be used instead of the dim3 type.

Execution Parameters and Kernel Launch

    dim3 grid_dim(2, 2, 1);    // 2 x 2 x 1 = 4 blocks in the grid
    dim3 block_dim(4, 2, 2);   // 4 x 2 x 2 = 16 threads per block
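For a grid of (2, 2, 1) blocks with (4, 2, 2) threads per block, the launch creates 4 x 16 = 64 threads. The sketch below shows one possible way to flatten the multi-dimensional coordinates into a single index and confirms that each of the 64 indices is produced exactly once. The names mark_threads and count_unique_threads are hypothetical, and the helper assumes that total matches the launch size.

```cuda
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>

// Each thread flattens its block and thread coordinates into one global
// index and increments the counter stored at that index.
__global__ void mark_threads(int *hits)
{
    int threads_per_block = blockDim.x * blockDim.y * blockDim.z;
    int block_id  = blockIdx.y * gridDim.x + blockIdx.x;        // 2D grid -> block number
    int thread_id = (threadIdx.z * blockDim.y + threadIdx.y) * blockDim.x
                    + threadIdx.x;                              // 3D block -> thread number
    atomicAdd(&hits[block_id * threads_per_block + thread_id], 1);
}

// Hypothetical helper: launch the kernel and return how many of the `total`
// slots were incremented exactly once. Assumes total == blocks * threads per block.
int count_unique_threads(dim3 grid_dim, dim3 block_dim, int total)
{
    int *hits_h = (int *)malloc(total * sizeof(int));
    int *hits_d = NULL;
    cudaMalloc((void **)&hits_d, total * sizeof(int));
    cudaMemset(hits_d, 0, total * sizeof(int));
    mark_threads<<<grid_dim, block_dim>>>(hits_d);
    cudaMemcpy(hits_h, hits_d, total * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(hits_d);

    int once = 0;
    for (int i = 0; i < total; i++)
        if (hits_h[i] == 1) once++;
    free(hits_h);
    return once;
}

int main(void)
{
    dim3 grid_dim(2, 2, 1);
    dim3 block_dim(4, 2, 2);
    int total = (2 * 2 * 1) * (4 * 2 * 2);                      // 4 blocks x 16 threads
    int once = count_unique_threads(grid_dim, block_dim, total);
    assert(once == total);
    printf("%d of %d global indices were produced exactly once\n", once, total);
    return 0;
}
```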

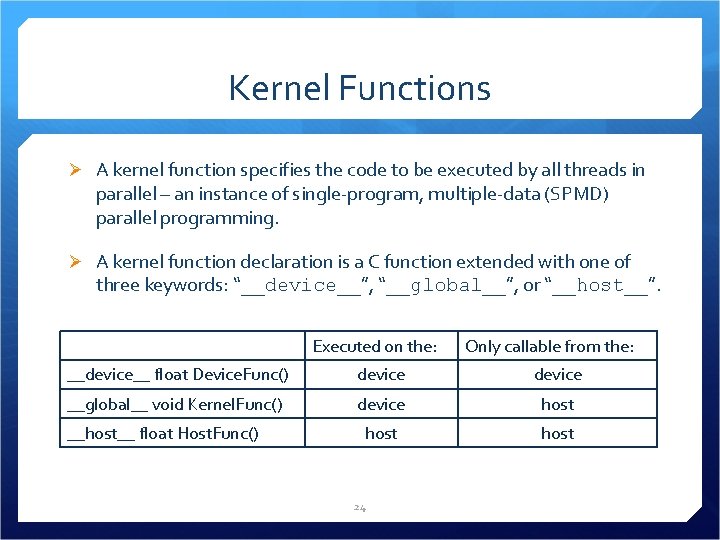

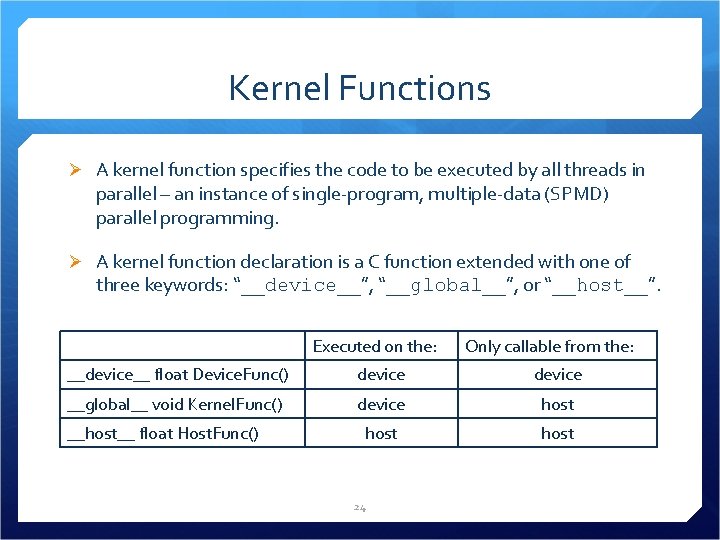

Kernel Functions

- A kernel function specifies the code to be executed by all threads in parallel, an instance of single-program, multiple-data (SPMD) parallel programming.
- A CUDA function declaration is a C function declaration extended with one of three keywords: “__device__”, “__global__”, or “__host__”. Only “__global__” declares a kernel function.

                                       Executed on the:   Only callable from the:
    __device__ float DeviceFunc()      device             device
    __global__ void  KernelFunc()      device             host
    __host__   float HostFunc()        host               host
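The three qualifiers can be seen side by side in a short program. This is an illustrative sketch with hypothetical function names (device_square, square_kernel, square_with_gpu); error checking is left out to keep the focus on the qualifiers.

```cuda
#include <assert.h>
#include <stdio.h>
#include <cuda_runtime.h>

// __device__: executed on the device, callable only from device code.
__device__ float device_square(float x)
{
    return x * x;
}

// __global__: a kernel, executed on the device and launched from the host.
__global__ void square_kernel(float *a, int n)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n) a[idx] = device_square(a[idx]);
}

// __host__: executed on the host, callable only from host code.
// This is also the default when no qualifier is written.
__host__ void square_with_gpu(float *a_h, int n)
{
    float *a_d = NULL;
    size_t size = n * sizeof(float);
    cudaMalloc((void **)&a_d, size);
    cudaMemcpy(a_d, a_h, size, cudaMemcpyHostToDevice);
    square_kernel<<<(n + 3) / 4, 4>>>(a_d, n);   // enough 4-thread blocks to cover n
    cudaMemcpy(a_h, a_d, size, cudaMemcpyDeviceToHost);
    cudaFree(a_d);
}

int main(void)
{
    float a[5] = {1.0f, 2.0f, 3.0f, 4.0f, 5.0f};
    square_with_gpu(a, 5);
    for (int i = 0; i < 5; i++) {
        assert(a[i] == (float)((i + 1) * (i + 1)));
        printf("%f\n", a[i]);
    }
    return 0;
}
```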

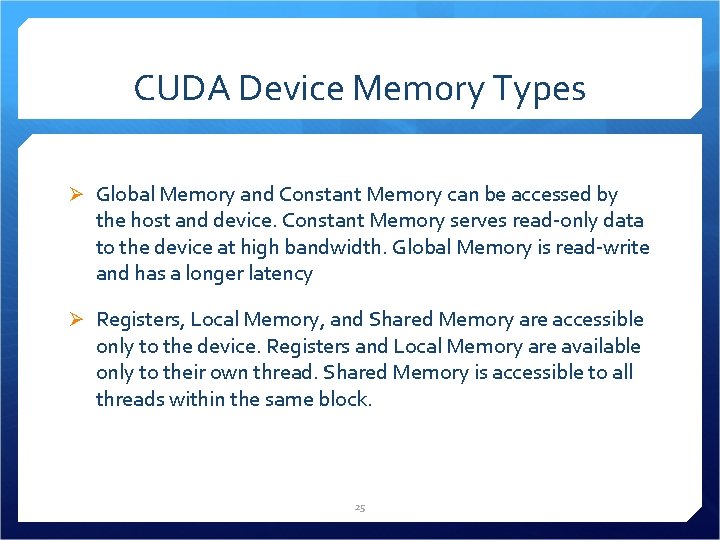

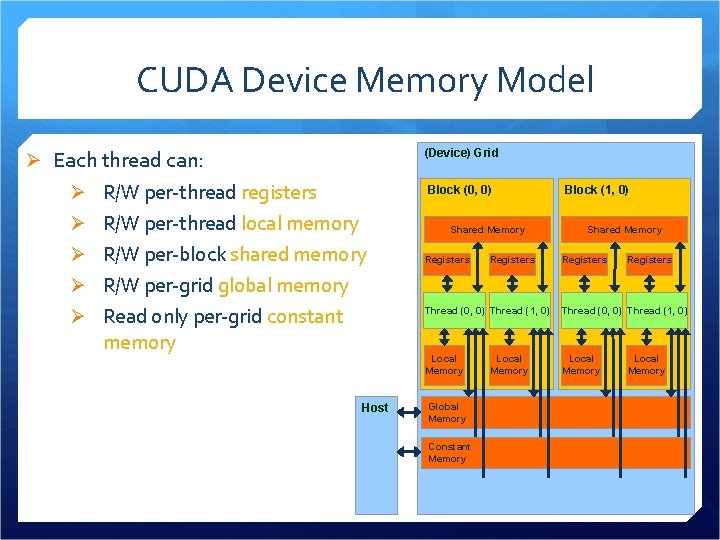

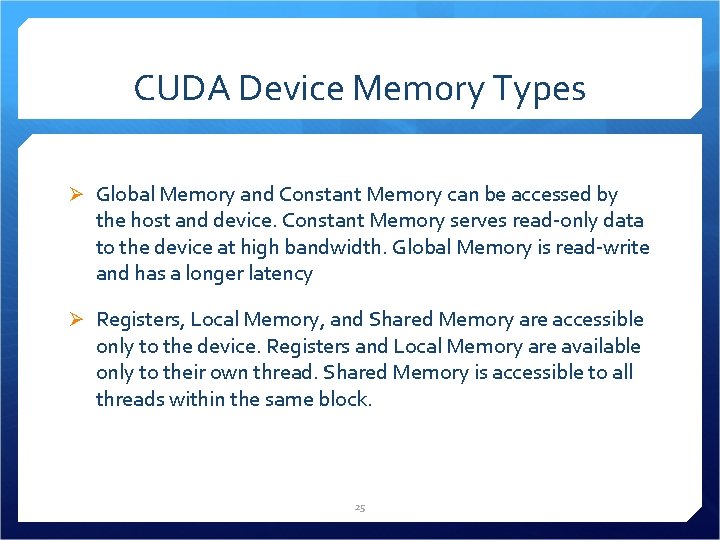

CUDA Device Memory Types

- Global Memory and Constant Memory can be accessed by the host and device. Constant Memory serves read-only data to the device at high bandwidth. Global Memory is read-write and has a longer latency.
- Registers, Local Memory, and Shared Memory are accessible only to the device. Registers and Local Memory are available only to their own thread. Shared Memory is accessible to all threads within the same block.
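Several of these memory types can appear in one small kernel. In the sketch below, which uses hypothetical names throughout, a scale factor lives in constant memory, each block stages its data in shared memory, and the input and output arrays live in global memory. Local memory is managed by the compiler and is not shown. The sketch assumes the array length is a multiple of BLOCK_SIZE and omits error checking.

```cuda
#include <assert.h>
#include <stdio.h>
#include <cuda_runtime.h>

#define BLOCK_SIZE 4

// Constant memory: written by the host, read-only for the device.
__constant__ float scale;

// Each block scales its BLOCK_SIZE elements and writes them out in reverse order.
__global__ void scale_and_reverse(const float *in, float *out)
{
    __shared__ float tile[BLOCK_SIZE];          // shared memory: one copy per block
    int t = threadIdx.x;                        // scalars like these normally sit in registers
    int base = blockIdx.x * blockDim.x;

    tile[t] = in[base + t] * scale;             // global -> shared, scaled by the constant
    __syncthreads();                            // wait until the whole tile is filled
    out[base + t] = tile[blockDim.x - 1 - t];   // read a value stored by another thread
}

// Hypothetical host helper; n must be a multiple of BLOCK_SIZE.
void scale_and_reverse_on_device(const float *in_h, float *out_h, int n, float s)
{
    assert(n % BLOCK_SIZE == 0);
    float *in_d = NULL, *out_d = NULL;
    size_t size = n * sizeof(float);
    cudaMalloc((void **)&in_d, size);
    cudaMalloc((void **)&out_d, size);
    cudaMemcpy(in_d, in_h, size, cudaMemcpyHostToDevice);
    cudaMemcpyToSymbol(scale, &s, sizeof(float));      // host writes constant memory
    scale_and_reverse<<<n / BLOCK_SIZE, BLOCK_SIZE>>>(in_d, out_d);
    cudaMemcpy(out_h, out_d, size, cudaMemcpyDeviceToHost);
    cudaFree(in_d);
    cudaFree(out_d);
}

int main(void)
{
    float in[8], out[8];
    for (int i = 0; i < 8; i++) in[i] = (float)i;
    scale_and_reverse_on_device(in, out, 8, 2.0f);

    const float expected[8] = {6, 4, 2, 0, 14, 12, 10, 8};
    for (int i = 0; i < 8; i++) {
        assert(out[i] == expected[i]);
        printf("%f\n", out[i]);
    }
    return 0;
}
```

The reversal stays inside each block because shared memory is visible only to the threads of the block that owns it.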

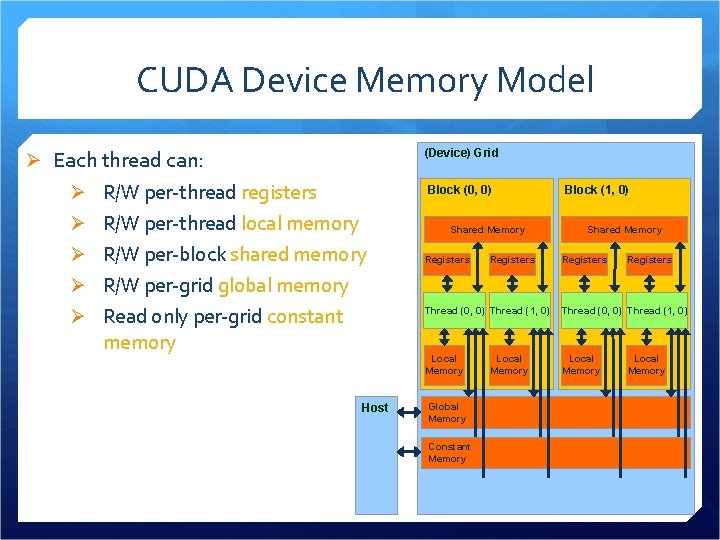

CUDA Device Memory Model

- Each thread can:
  - R/W per-thread registers
  - R/W per-thread local memory
  - R/W per-block shared memory
  - R/W per-grid global memory
  - Read only per-grid constant memory

[Figure: a (Device) Grid containing Block (0, 0) and Block (1, 0). Each block has its own Shared Memory, and each of its threads, Thread (0, 0) and Thread (1, 0), has its own Registers and Local Memory. Global Memory and Constant Memory serve the whole grid and connect to the Host.]

CUDA Advantages

- Huge increase in processing power over conventional CPU processing. Early reports suggest speed increases of 10x to 200x over CPU processing speed.
- Researchers can use several GPUs to perform the same number of operations as many servers in less time, thus saving money, time, and space.
- The C language is widely used, so it is easy for developers to learn how to program for CUDA.
- All graphics cards in the G80 series and beyond support CUDA.
- Harnesses the power of the GPU by using parallel processing, running thousands of simultaneous threads.

Disadvantages

- Limited user base: only NVIDIA G80 and later video cards can use CUDA, thus isolating all ATI users.
- Speeds may be bottlenecked at the bus between CPU and GPU.
- Mainly developed for researchers; not many uses for average users.
- System is still in development.

Current GPU Research at UGA
Institute of Bioinformatics

- “Solving Nonlinear Systems of First Order Ordinary Differential Equations Using a Galerkin Finite Element Method”, Al-Omari, A., Schuttler, H.-B., Arnold, J., and Taha, T. R. IEEE Access, 1, 408-417 (2013).
- “Solving Large Nonlinear Systems of First-Order Ordinary Differential Equations With Hierarchical Structure Using Multi-GPGPUs and an Adaptive Runge Kutta ODE Solver”, Al-Omari, A., Arnold, J., Taha, T. R., Schuttler, H.-B. IEEE Access, 1, 770-777 (2013).

Current GPU Research at UGA
Institute of Bioinformatics

GTC Poster: http://cuda.uga.edu/docs/GPGPU_runge-kutta.pdf

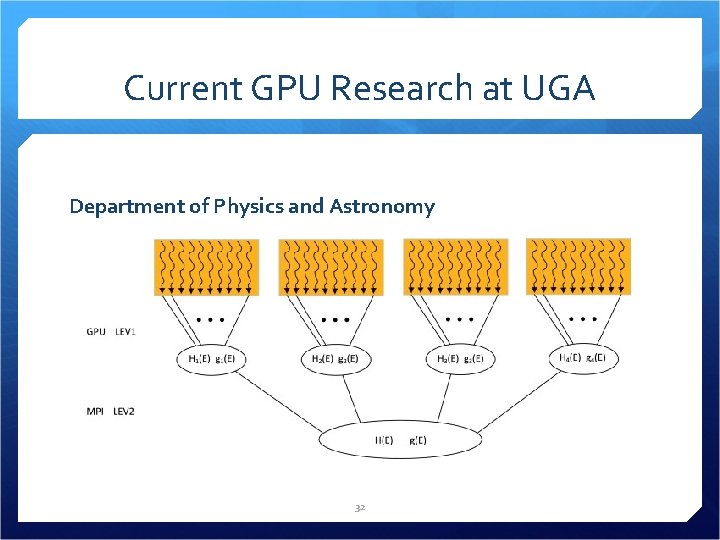

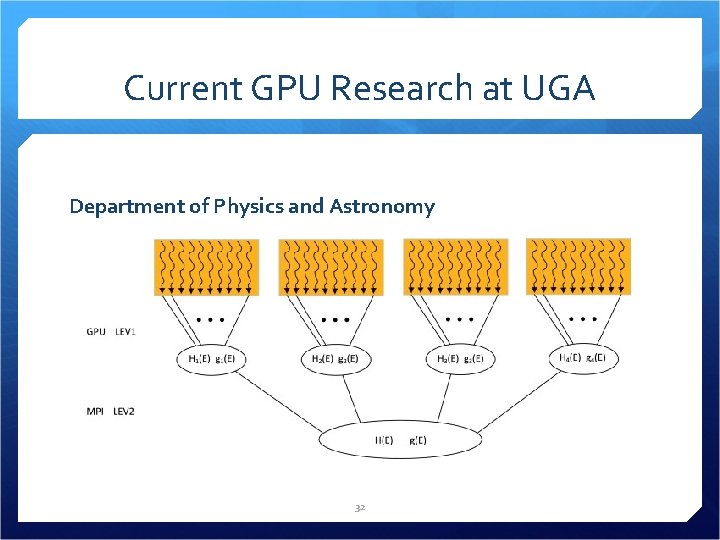

Current GPU Research at UGA
Department of Physics and Astronomy

- “A generic, hierarchical framework for massively parallel Wang-Landau sampling”, T. Vogel, Y. W. Li, T. Wüst, and D. P. Landau, Phys. Rev. Lett. 110, 210603 (2013).
- “Massively parallel Wang-Landau Sampling on Multiple GPUs”, J. Yin and D. P. Landau, Comput. Phys. Commun. 183, 1568-1573 (2012).

Current GPU Research at UGA
Department of Physics and Astronomy

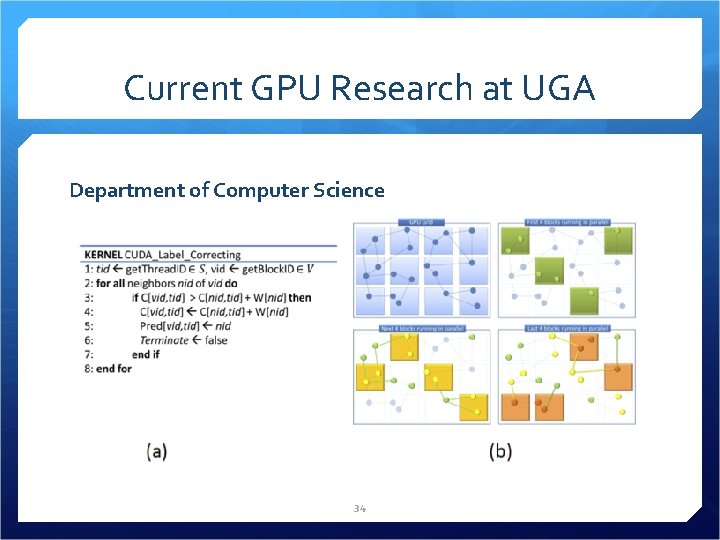

Current GPU Research at UGA
Department of Computer Science

- “Analysis of Surface Folding Patterns of DICCCOLS Using the GPU-Optimized Geodesic Field Estimate”, Mukhopadhyay, A., Lim, C.-W., Bhandarkar, S. M., Chen, H., New, A., Liu, T., Rasheed, K. M., Taha, T. R. Nagoya: Proceedings of MICCAI Workshop on Mesh Processing in Medical Image Analysis (2013).

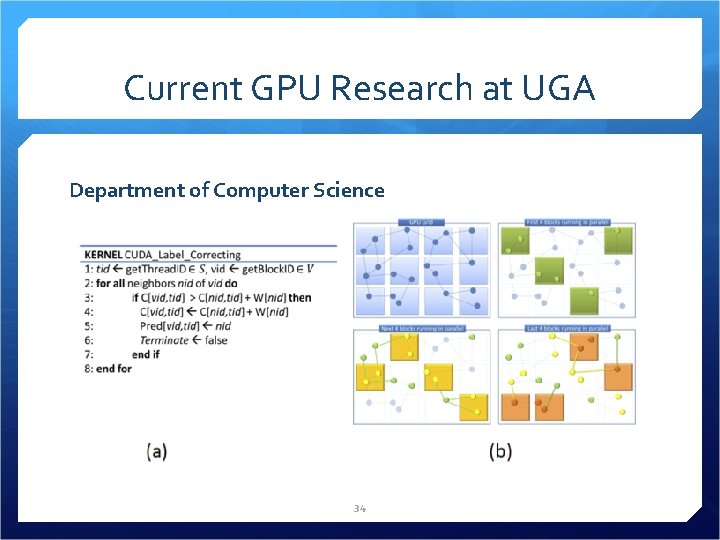

Current GPU Research at UGA
Department of Computer Science

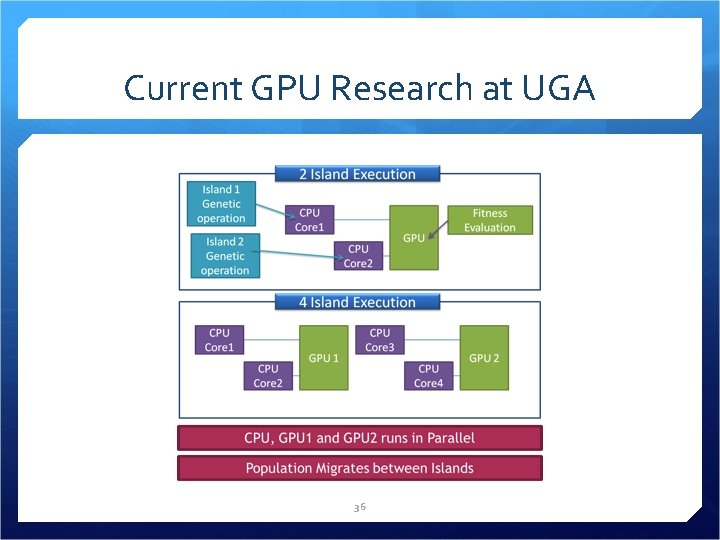

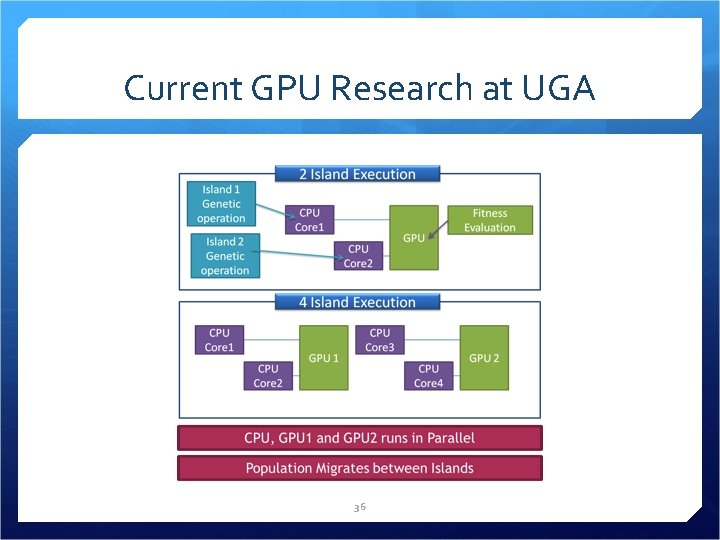

Current GPU Research at UGA
Department of Computer Science

- “Using Massively Parallel Evolutionary Computation on GPUs for Biological Circuit Reconstruction”, Cholwoo Lim, master's thesis under the direction of Dr. Khaled Rasheed (2013).


Current GPU Research at UGA
Department of Computer Science

- “GPU Acceleration of High-Dimensional k-Nearest Neighbor Search for Face Recognition using EigenFaces”, Jennifer Rouan, master's thesis under the direction of Dr. Thiab Taha (2014).
- “Using CUDA for GPUs over MPI to solve Nonlinear Evolution Equations”, Jennifer Rouan and Thiab Taha, presented at: The Eighth IMACS International Conference on Nonlinear Evolution Equations and Wave Phenomena: Computation and Theory (2013).

Current GPU Research at UGA
Department of Computer Science

- Research Day 2014 Poster: http://cuda.uga.edu/docs/J_Rouan_Research_Day_poster.pdf
- Waves 2013 Poster: http://cuda.uga.edu/docs/waves_Poster_Rouan.pdf

More CUDA Training Resources

- University of Georgia CUDA Teaching Center: http://cuda.uga.edu
- NVIDIA training and education site: http://developer.nvidia.com/cuda-education-training
- Stanford University course on iTunes U: http://itunes.apple.com/us/itunes-u/programming-massively-parallel/id384233322
- University of Illinois: http://courses.engr.illinois.edu/ece498/al/Syllabus.html
- University of California, Davis: https://smartsite.ucdavis.edu/xsl-portal/site/1707812c-4009-4d91-a80e-271bde5c8fac/page/de40f2cc-40d9-4b0f-a2d3-e8518bd0266a
- University of Wisconsin: http://sbel.wisc.edu/Courses/ME964/2011/me964Spring2011.pdf
- University of North Carolina at Charlotte: http://coitweb.uncc.edu/~abw/ITCS6010S11/index.html

GPUs Available at UGA

- CUDA Teaching Center lab in 207A
- Twelve NVIDIA GeForce GTX 480 GPUs
- Six Linux hosts on the cs.uga.edu domain: cuda01, cuda02, cuda03, cuda04, cuda05, and cuda06
- SSH from nike.cs.uga.edu with your CS login and password
- More GPUs are available on the Z-cluster; visit http://gacrc.uga.edu for an account and more information

References

- Kirk, D., & Hwu, W. (2010). Programming Massively Parallel Processors: A Hands-on Approach, 1-75
- Tarjan, D. (2010). Introduction to CUDA, Stanford University on iTunes U
- Atallah, M. J. (Ed.), (1998). Algorithms and theory of computation handbook. Boca Raton, FL: CRC Press
- von Neumann, J. (1945). First draft of a report on the EDVAC. Contract No. W-670-ORD-4926, U.S. Army Ordnance Department and University of Pennsylvania
- Sutter, H., & Larus, J. (2005). Software and the concurrency revolution. ACM Queue, 3(7), 54-62
- Stratton, J. A., Stone, S. S., & Hwu, W. W. (2008). MCUDA: An efficient implementation of CUDA kernels for multi-core CPUs. Edmonton, Canada
- Vandenbout, Dave (2008). My First Cuda Program, http://llpanorama.wordpress.com/2008/05/21/my-first-cuda-program/

References

- “Using Massively Parallel Evolutionary Computation on GPUs for Biological Circuit Reconstruction”, Cholwoo Lim, master's thesis under the direction of Dr. Khaled Rasheed (2013); Prof. Taha is one of the Advisory Committee members
- “Solving large Nonlinear Systems of ODE with Hierarchical Structure Using Multi-GPGPUs and an Adaptive Runge Kutta”, Ahmad Al-Omari, Thiab Taha, B. Schuttler, Jonathan Arnold, presented at: GPU Technology Conference, March 2014
- “Using CUDA for GPUs over MPI to solve Nonlinear Evolution Equations”, Jennifer Rouan and Thiab Taha, presented at: The Eighth IMACS International Conference on Nonlinear Evolution Equations and Wave Phenomena: Computation and Theory, March 2013
- “GPU Acceleration of High-Dimensional k-Nearest Neighbor Search for Face Recognition using EigenFaces”, Jennifer Rouan and Thiab Taha, presented at: UGA Department of Computer Science Research Day, April 2014