CSI 5388 Data Sets Running Proper Comparative Studies

- Slides: 15

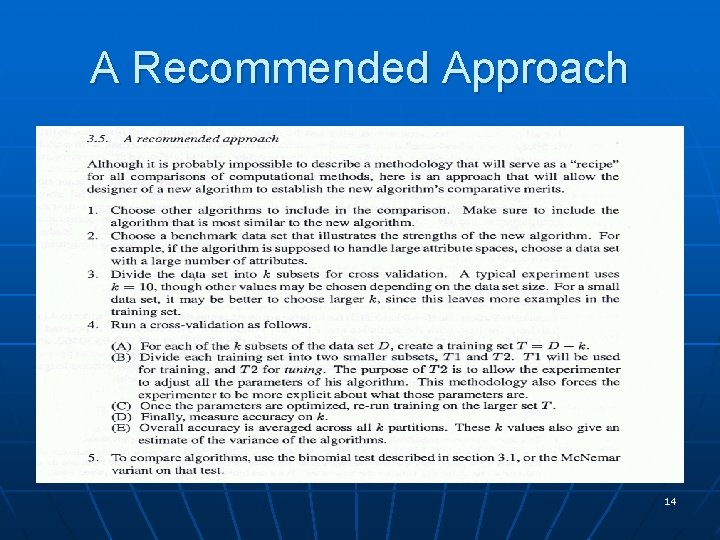

CSI 5388 Data Sets: Running Proper Comparative Studies with Large Data Repositories

[Based on Salzberg, S. L., 1997, “On Comparing Classifiers: Pitfalls to Avoid and a Recommended Approach”]

Advantages of Large Data Repositories

- The researcher can easily experiment with real-world data sets (rather than artificial data).
  - New algorithms can be tested in real-world settings.
  - Since many researchers use the same data sets, comparing new and old classifiers is easy.
  - Problems arising in real-world settings can be identified and focused on.

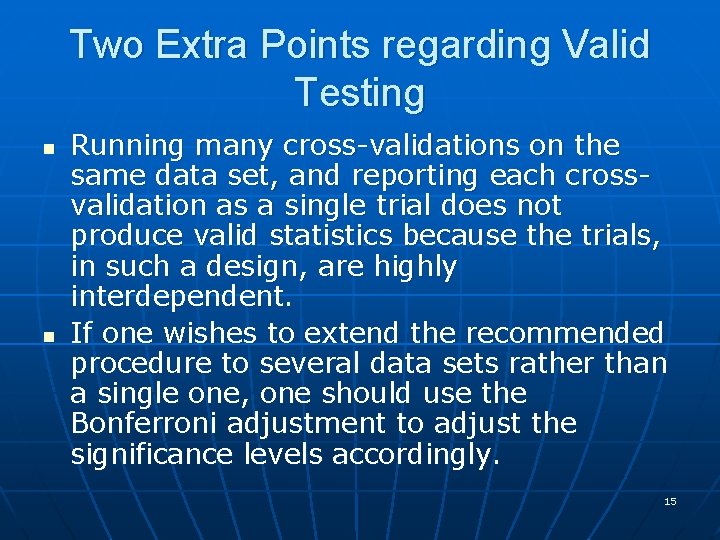

Disadvantages of Large Data Repositories

- The Multiplicity Effect: when running a large number of experiments, more stringent requirements are needed to establish statistical significance than when only a few experiments are considered.
- Community Experiments Problem: if many researchers run the same experiments, some of them will, by chance alone, obtain statistically significant results. These are the people who will publish their results (even though the results may have been obtained only by chance).
- Repeated Tuning Problem: to be valid, all tuning should be done before the test set is seen. This is seldom the case.
- The Problem of Generalizing Results: it is not necessarily correct to generalize from the UCI Repository to other data sets.

The Multiplicity Effect: An Example

- 14 different algorithms are compared to a default classifier on 11 data sets. Differences are reported as significant if a two-tailed, paired t-test produces a p-value smaller than 0.05.
- This is not stringent enough: by running 14 × 11 = 154 experiments, one has 154 chances to find significance. The expected number of significant results obtained by chance alone is therefore 154 × 0.05 = 7.7. This is not desirable. In such a setting, the acceptable p-value for each test must be much smaller than 0.05 to obtain a true overall significance level of 0.05.
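
The arithmetic on this slide can be checked with a few lines of Python. This is only a sketch: the function name `expected_false_positives` is made up for illustration, and it assumes that every null hypothesis is true (no algorithm really differs from the default classifier).

```python
def expected_false_positives(n_algorithms: int, n_datasets: int, alpha: float) -> float:
    """Expected number of tests that reach significance by chance alone.

    Assumption (hypothetical helper): every null hypothesis is true, so each
    test has probability `alpha` of being reported as significant.
    """
    n_tests = n_algorithms * n_datasets  # one test per (algorithm, data set) pair
    return n_tests * alpha


# The slide's setting: 14 algorithms, 11 data sets, threshold 0.05.
print(round(expected_false_positives(14, 11, 0.05), 2))  # 7.7
```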

The Multiplicity Effect: More Formally

- Let the acceptable significance level for each of our experiments be $\alpha^*$. Then the chance of reaching the right conclusion for one experiment is $1 - \alpha^*$.
- If we conduct $n$ independent experiments, the chance of getting them all right is $(1 - \alpha^*)^n$.
- Suppose there is no real difference among the algorithms being tested. Then the chance that we make at least one mistake is $\alpha = 1 - (1 - \alpha^*)^n$.
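
As a rough illustration, the formula for the chance of at least one mistake can be written as a small function. The name `familywise_error_rate` is an assumption introduced here, and the computation is only meaningful under the slide's assumption that the experiments are independent.

```python
def familywise_error_rate(alpha_star: float, n: int) -> float:
    """Chance of at least one false positive in n independent tests.

    Implements alpha = 1 - (1 - alpha_star)^n from the slide.
    Assumption: the n tests are independent and all null hypotheses are true.
    """
    return 1.0 - (1.0 - alpha_star) ** n


# With a single test the overall level equals the per-test level;
# it grows quickly as more tests are run at the same threshold.
for n in (1, 10, 50, 154):
    print(n, round(familywise_error_rate(0.05, n), 4))
```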

The Multiplicity Effect: The Example Continued

- We assume that there is no real difference among the 14 algorithms being compared.
- If our acceptable significance level were set to $\alpha^* = 0.05$, then the probability of making at least one mistake in our 154 experiments is $1 - (1 - 0.05)^{154} = 0.9996$. That is, we are 99.96% certain that at least one of our conclusions will incorrectly reach significance at the 0.05 level.
- If we wanted to reach a true significance level of 0.05, we would need to set $1 - (1 - \alpha^*)^{154} \le 0.05$, i.e., $\alpha^* \le 0.0003$. This is called the Bonferroni adjustment.
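
The per-test threshold can be recovered numerically. The sketch below inverts the slide's formula exactly and, for comparison, also computes the simpler value α/n that is commonly used as the Bonferroni threshold (the exact inversion is often called the Šidák correction). Both function names are hypothetical, and independence of the 154 tests is assumed.

```python
def per_test_alpha_exact(alpha: float, n: int) -> float:
    """Solve 1 - (1 - a)^n = alpha for the per-test level a.

    Assumption: the n tests are independent.
    """
    return 1.0 - (1.0 - alpha) ** (1.0 / n)


def per_test_alpha_bonferroni(alpha: float, n: int) -> float:
    """The simpler alpha / n threshold; slightly more conservative than the exact value."""
    return alpha / n


exact = per_test_alpha_exact(0.05, 154)
simple = per_test_alpha_bonferroni(0.05, 154)
# Both values are roughly 0.0003, matching the slide.
print(f"exact: {exact:.6f}  alpha/n: {simple:.6f}")
```

The two thresholds differ only in the fifth decimal place here, so either supports the slide's figure of about 0.0003.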

Experiment Independence

- The Bonferroni adjustment is valid as long as the experiments are independent. However:
  - If different algorithms are compared on the same test data, then the tests are not independent.
  - If the training and testing data are drawn from the same data set, then the experiments are not independent.
- In these cases, it is even more likely that the statistical tests will find significance when none exists, even if the Bonferroni adjustment is used.
- In conclusion, the t-test, often used by researchers, is the wrong test to use in this particular experimental setting.

Alternative Statistical Tests to Deal with the Multiplicity Effect I

- The first approach suggested for dealing with the multiplicity effect is the following:
  - When comparing only two algorithms, A and B:
    - Count the number of examples that A got right and B got wrong (A>B), and the number of examples that B got right and A got wrong (B>A).
    - Compare the proportion of times A>B with the proportion of times B>A, throwing out the ties.
    - Use a binomial test (or the McNemar test, which is nearly identical and easier to compute) for the comparison, with the Bonferroni adjustment for multiple tests (see Salzberg, 1997).
- However, the binomial test does not capture quantitative differences between algorithms, does not extend to more than two algorithms, and does not consider how often the two algorithms agree.
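
A minimal sketch of the comparison described above, using only the Python standard library. The function names and the choice of a two-sided test are assumptions made for illustration; `a_wins` and `b_wins` stand for the counts A>B and B>A after ties are discarded. The McNemar statistic shown uses the usual continuity correction.

```python
from math import comb


def binomial_two_sided_p(a_wins: int, b_wins: int) -> float:
    """Exact two-sided binomial (sign) test p-value, ties already discarded.

    Null hypothesis: each example on which A and B disagree is equally
    likely to favor A or B.
    """
    n = a_wins + b_wins
    if n == 0:
        return 1.0  # no disagreements, hence no evidence of a difference
    k = min(a_wins, b_wins)
    # Probability of a split at least as lopsided in one direction ...
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    # ... doubled for the two-sided test, capped at 1.
    return min(1.0, 2.0 * tail)


def mcnemar_statistic(a_wins: int, b_wins: int) -> float:
    """McNemar chi-square statistic (1 degree of freedom) with continuity correction."""
    n = a_wins + b_wins
    if n == 0:
        return 0.0
    return (abs(a_wins - b_wins) - 1) ** 2 / n


# Hypothetical counts: A alone is right on 15 examples, B alone on 5.
print(binomial_two_sided_p(15, 5), mcnemar_statistic(15, 5))
```

When several such comparisons are made, the resulting p-value would still be compared against a Bonferroni-adjusted threshold, as the slide notes.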

Alternative Statistical Tests to Deal with the Multiplicity Effect II

- The other approaches suggested for dealing with the multiplicity effect are the following:
  - Use random, distinct samples of the data to test each algorithm, and use an analysis of variance (ANOVA) to compare the results.
  - Use the following randomization testing approach:
    - For each trial, the data set is copied and the class labels are replaced with random class labels.
    - An algorithm is used to find the most accurate classifier it can, using the same methodology that is used with the original data.
    - Any estimate of accuracy greater than random for the copied data reflects the bias in the methodology, and this reference distribution can then be used to adjust the estimates on the real data.
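
The randomization procedure above can be sketched as follows. Everything here is illustrative: `reference_accuracies` and `best_threshold_accuracy` are hypothetical names, the labels are randomized by shuffling (an assumption that keeps the class proportions fixed), and the toy "methodology" deliberately scores a threshold rule on the same data it was chosen on, so that its optimistic bias is visible.

```python
import random
from typing import Callable, List, Sequence


def reference_accuracies(
    features: Sequence[float],
    labels: Sequence[int],
    evaluate: Callable[[Sequence[float], Sequence[int]], float],
    n_trials: int = 100,
    seed: int = 0,
) -> List[float]:
    """Accuracy estimates obtained on copies of the data with randomized labels."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_trials):
        shuffled = list(labels)   # copy, so the real labels are left untouched
        rng.shuffle(shuffled)     # assumption: randomize by permuting the labels
        results.append(evaluate(features, shuffled))
    return results


def best_threshold_accuracy(xs: Sequence[float], ys: Sequence[int]) -> float:
    """Toy methodology: best accuracy of any threshold rule, scored on the
    same data it was selected on (deliberately optimistic)."""
    n = len(xs)
    best = 0.0
    for t in xs:
        correct = sum((x >= t) == bool(y) for x, y in zip(xs, ys))
        # Allow the rule to predict in either direction.
        best = max(best, correct / n, 1 - correct / n)
    return best


xs = [i / 20 for i in range(20)]
ys = [0] * 10 + [1] * 10
real = best_threshold_accuracy(xs, ys)
reference = reference_accuracies(xs, ys, best_threshold_accuracy)
# How far the real estimate sits above the accuracy reached on random labels.
print(real, sum(reference) / len(reference))
```

The mean of the reference distribution shows what this methodology reports when there is nothing to learn; the real estimate is then judged against that baseline rather than against 50%.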

Community Experiments

- The multiplicity effect is not the only problem plaguing the current experimental process. Another problem, which can be referred to as the community experiments effect, occurs even if all the statistical tests are conducted properly.
- Suppose that 100 different people are trying to compare the accuracy of algorithms A and B, which we assume have the same mean accuracy on a very large population of data sets.
- If these 100 people are studying these algorithms and looking for a significance level of 0.05, then we can expect 5 of them to find, by chance, a significant difference between A and B. One of these 5 people may publish their results, while the others will move on to different experiments.
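
A small simulation can make the "5 out of 100" figure concrete. This is a sketch under a stated assumption: when A and B truly have the same accuracy, each researcher's p-value is modeled as a uniform draw on [0, 1]. The function name `simulate_community` is hypothetical.

```python
import random
from typing import List


def simulate_community(
    n_researchers: int, alpha: float, n_repeats: int, seed: int = 0
) -> List[int]:
    """For each repeat, count the researchers who find 'significance' by chance.

    Assumption: under the null hypothesis each researcher's p-value is
    uniformly distributed on [0, 1], independently of the others.
    """
    rng = random.Random(seed)
    counts = []
    for _ in range(n_repeats):
        counts.append(sum(rng.random() < alpha for _ in range(n_researchers)))
    return counts


counts = simulate_community(n_researchers=100, alpha=0.05, n_repeats=2000)
# The average should be close to 100 * 0.05 = 5 chance findings per community.
print(sum(counts) / len(counts))
```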

How to Deal with Community Experiments

- The way to guard against the community experiments effect is to duplicate the results. Proper duplication requires drawing a new random sample from the population and repeating the study.
- Unfortunately, since benchmark databases are static and small, it is not possible to draw new random samples of the same data sets. Using a different partitioning of the data into training and test sets does not help with this problem either.
- Should we rely on artificial data sets?

Repeated Tuning

- Most experiments need tuning. In many cases, the algorithms themselves need tuning, and in most cases, various data representations need to be tried.
- If the results of all this tuning are tested on the same data set used to report the final results, then each adjustment should be counted as a separate experiment. E.g., if 10 different combinations of parameters are tried, then we would need to use a significance level of 0.005 in order to truly reach one of 0.05.
- The solution to this problem is to do all parameter tuning, algorithmic tweaking, and so on before seeing the test set. Once a result has been produced from the test set, it is not possible to go back to it.
- This is a problem because it makes exploratory research impossible if one wants to report statistically significant results. Conversely, it makes reporting statistically significant results impossible if one wants to do exploratory research.
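
One common way to keep tuning away from the test set is to carve out a separate validation portion and touch the test portion only once. The sketch below is an assumption about how this could be organized, not a procedure taken from the slides; `three_way_split` and its default fractions are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def three_way_split(
    examples: Sequence[T],
    val_fraction: float = 0.2,
    test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle once and split into disjoint train, validation, and test parts.

    Intended use (assumption): tune parameters and representations on the
    validation part only, then evaluate on the test part exactly once.
    """
    rng = random.Random(seed)
    shuffled = list(examples)
    rng.shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


train, val, test = three_way_split(range(100))
print(len(train), len(val), len(test))  # 60 20 20
```

If tuning is nonetheless done against the reporting data, the slide's rule applies: 10 tried configurations call for a per-test level of 0.05 / 10 = 0.005.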

Generalizing Results

- It is often believed that if some effect concerning a learning algorithm is shown to hold on a random subset of the UCI data sets, then this effect should also hold on other data sets.
- This is not necessarily the case because the UCI Repository, as was shown by Holte (and others), represents only a very limited sample of problems, many of which are easy for a classifier. In other words, the repository is not an unbiased sample of classification problems.
- A second problem with too much reliance on community data sets such as the UCI Repository is that, consciously or not, researchers start developing algorithms tailored to those data sets. [E.g., they may develop algorithms for, say, missing data because the repository contains such problems, even if in reality this turns out not to be a very prevalent problem.]

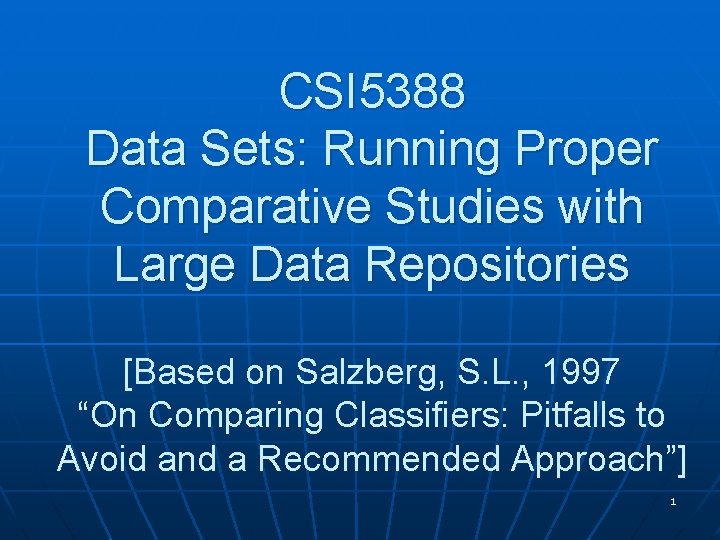

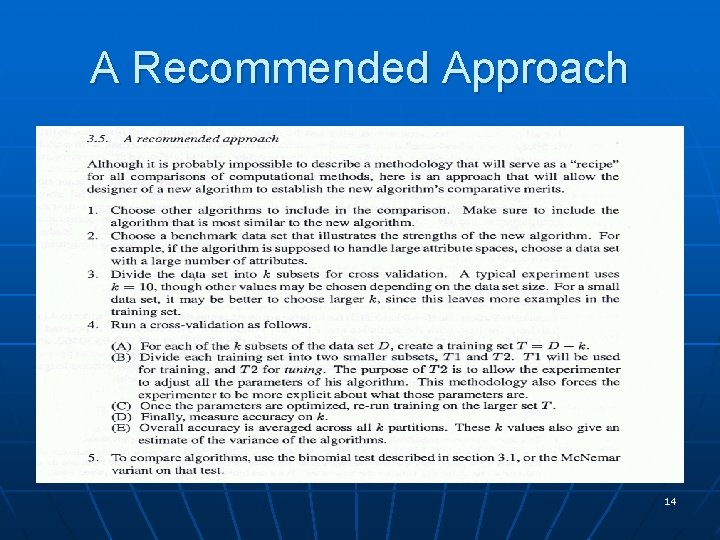

A Recommended Approach

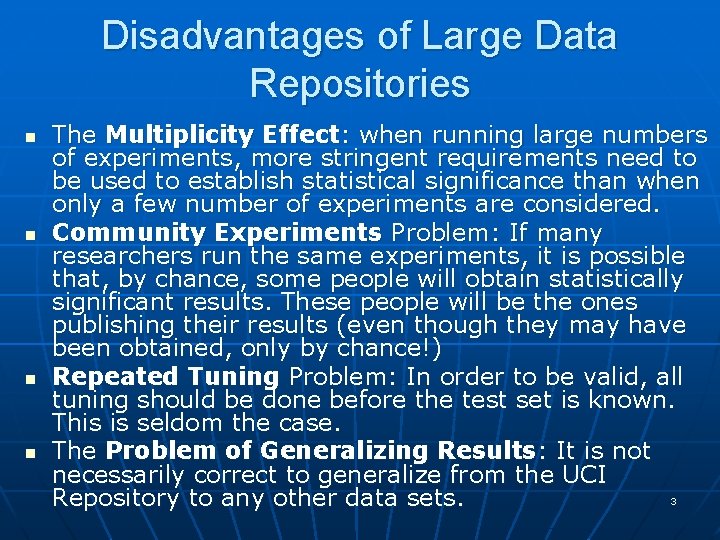

Two Extra Points regarding Valid Testing

- Running many cross-validations on the same data set and reporting each cross-validation as a single trial does not produce valid statistics, because the trials in such a design are highly interdependent.
- If one wishes to extend the recommended procedure to several data sets rather than a single one, one should use the Bonferroni adjustment to adjust the significance levels accordingly.