CSE 5331/7331 Fall 2007 Machine Learning Margaret H

- Slides: 42

CSE 5331/7331 Fall 2007
Machine Learning
Margaret H. Dunham
Department of Computer Science and Engineering
Southern Methodist University

Some slides extracted from Data Mining: Introductory and Advanced Topics, Prentice Hall, 2002. Other slides from CS 545 at Colorado State University, Chuck Anderson.

CSE 5331/7331 F'07 © Prentice Hall 1

Table of Contents
- Introduction (Chuck Anderson)
- Statistical Machine Learning Examples
  - Estimation
  - EM
  - Bayes Theorem
- Decision Tree Learning
- Neural Network Learning

The slides in this introductory section are from CS 545: Machine Learning, by Chuck Anderson, Department of Computer Science, Colorado State University, Fall 2006.

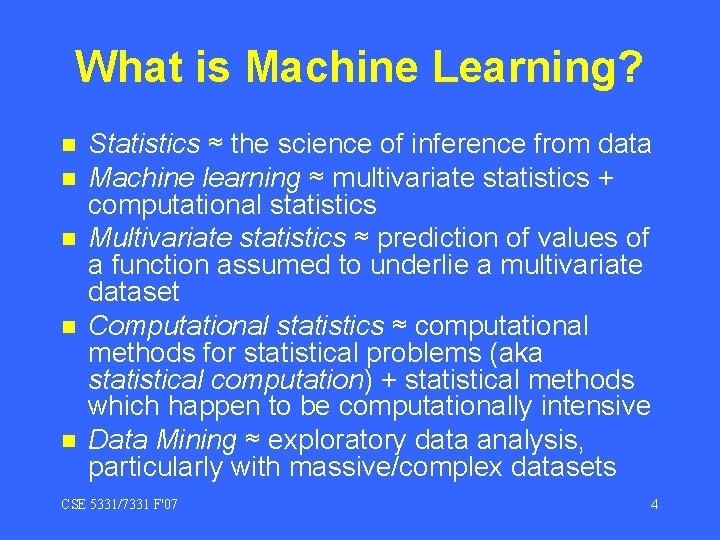

What is Machine Learning?
- Statistics ≈ the science of inference from data
- Machine learning ≈ multivariate statistics + computational statistics
- Multivariate statistics ≈ prediction of values of a function assumed to underlie a multivariate dataset
- Computational statistics ≈ computational methods for statistical problems (aka statistical computation) + statistical methods which happen to be computationally intensive
- Data Mining ≈ exploratory data analysis, particularly with massive/complex datasets

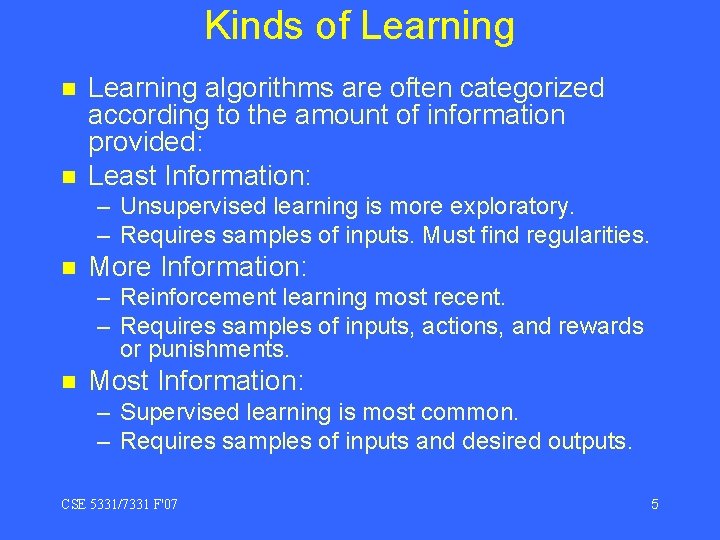

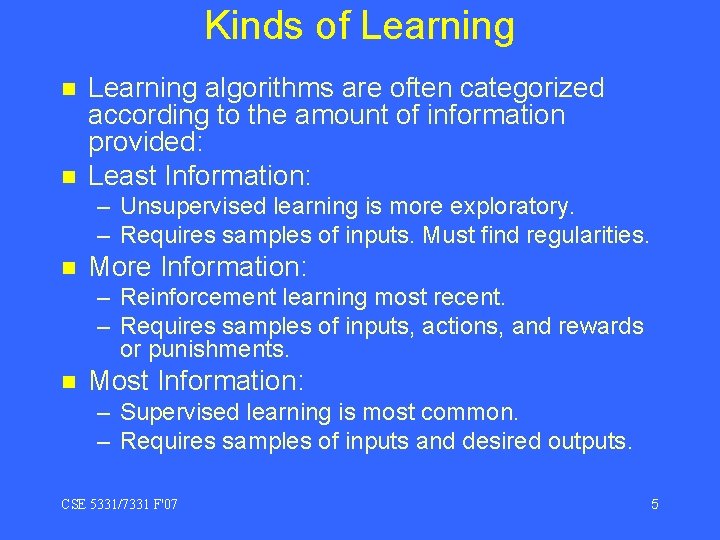

Kinds of Learning
- Learning algorithms are often categorized according to the amount of information provided:
- Least information:
  - Unsupervised learning is more exploratory.
  - Requires samples of inputs. Must find regularities.
- More information:
  - Reinforcement learning is the most recent.
  - Requires samples of inputs, actions, and rewards or punishments.
- Most information:
  - Supervised learning is the most common.
  - Requires samples of inputs and desired outputs.

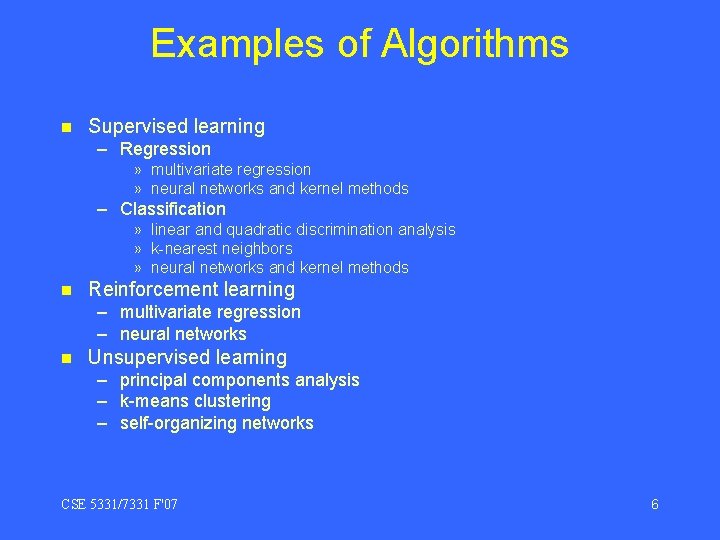

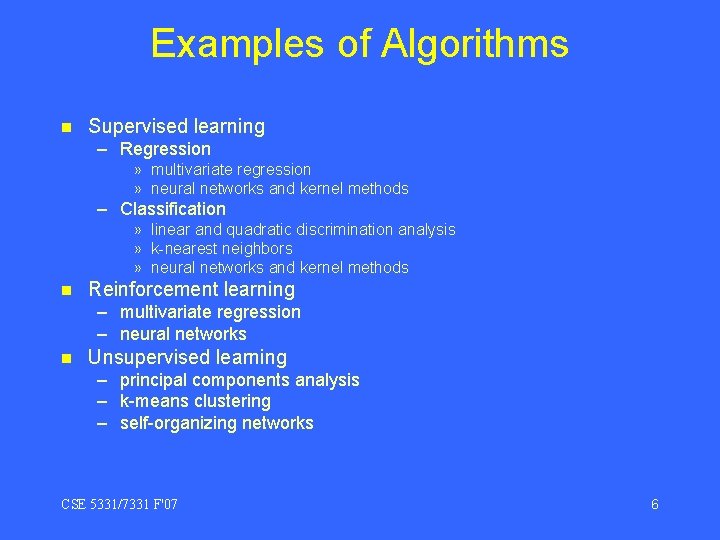

Examples of Algorithms
- Supervised learning
  - Regression
    - multivariate regression
    - neural networks and kernel methods
  - Classification
    - linear and quadratic discriminant analysis
    - k-nearest neighbors
    - neural networks and kernel methods
- Reinforcement learning
  - multivariate regression
  - neural networks
- Unsupervised learning
  - principal components analysis
  - k-means clustering
  - self-organizing networks


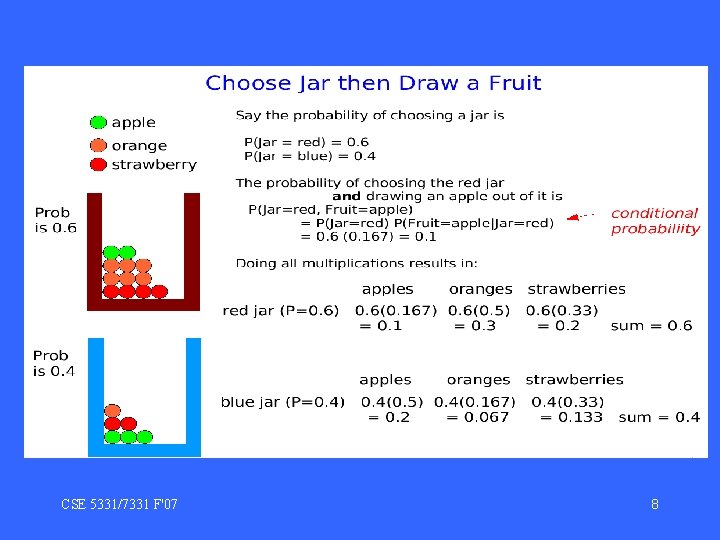


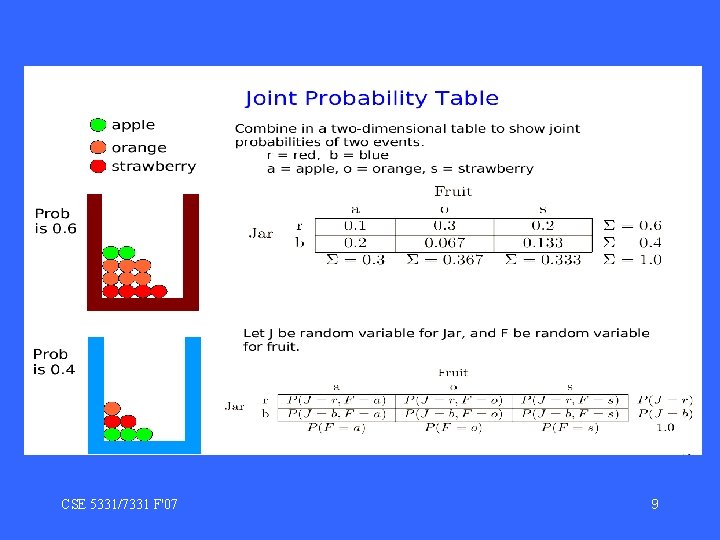

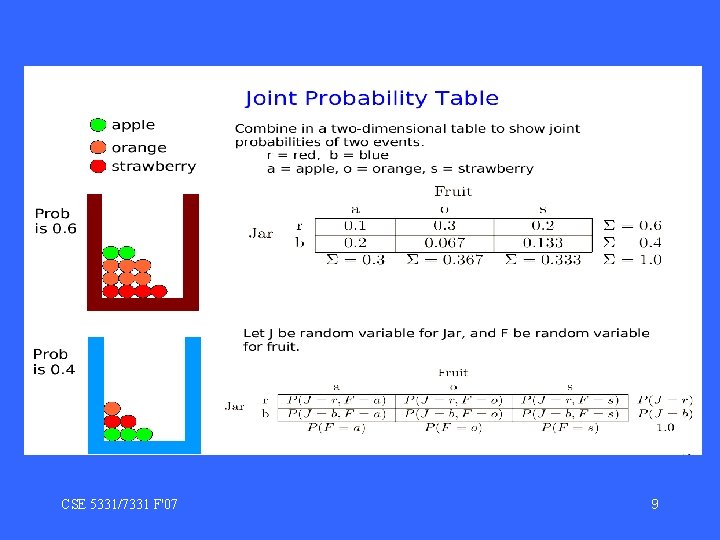


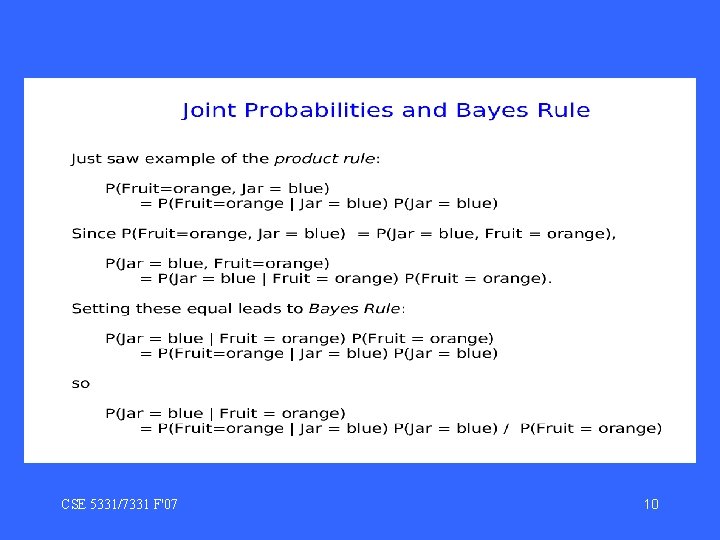

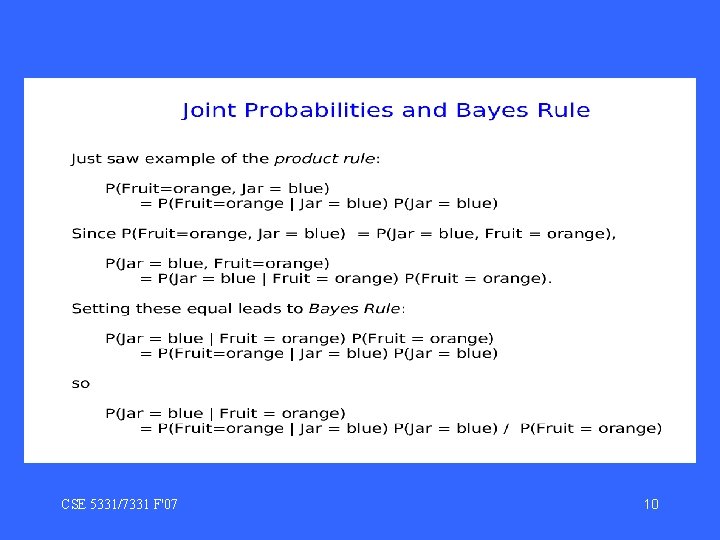



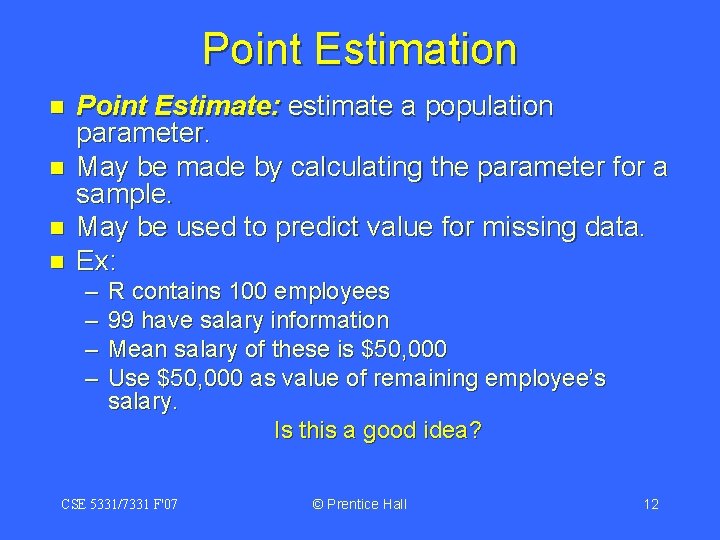

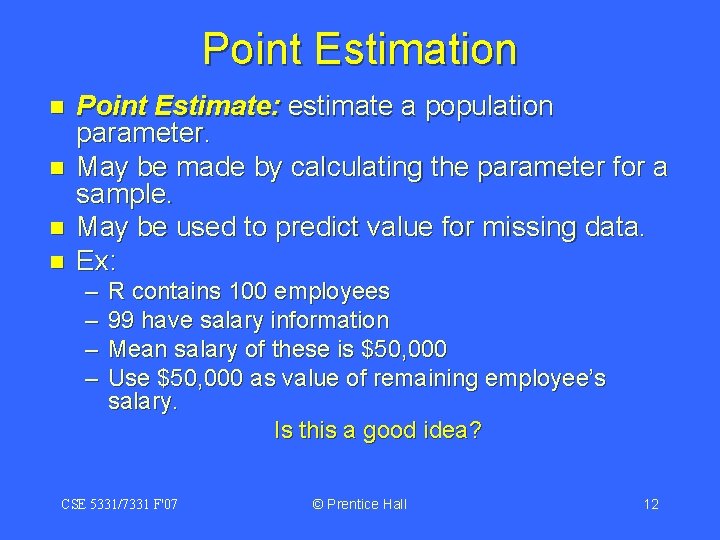

Point Estimation
- Point estimate: an estimate of a population parameter.
- May be made by calculating the parameter for a sample.
- May be used to predict the value of missing data.
- Ex:
  - R contains 100 employees.
  - 99 have salary information.
  - The mean salary of these is $50,000.
  - Use $50,000 as the value of the remaining employee's salary.
  - Is this a good idea?
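A minimal sketch of the idea in the example: use the sample mean of the known values as a point estimate and substitute it for a missing value. The salary figures below are hypothetical (not the 99 values behind the slide), and `impute_with_mean` is an illustrative helper name.

```python
from statistics import mean
from typing import Optional


def impute_with_mean(values: list[Optional[float]]) -> list[float]:
    """Replace each missing entry (None) with the mean of the known entries."""
    known = [v for v in values if v is not None]
    estimate = mean(known)  # point estimate of the population mean
    return [estimate if v is None else v for v in values]


# Hypothetical salaries; the last employee's salary is missing.
salaries = [48_000.0, 52_000.0, 50_000.0, None]
print(impute_with_mean(salaries))
```

Every missing entry receives the same value, and nothing else known about the employee (for example, job title) is used, which is one reason to ask whether this is a good idea.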

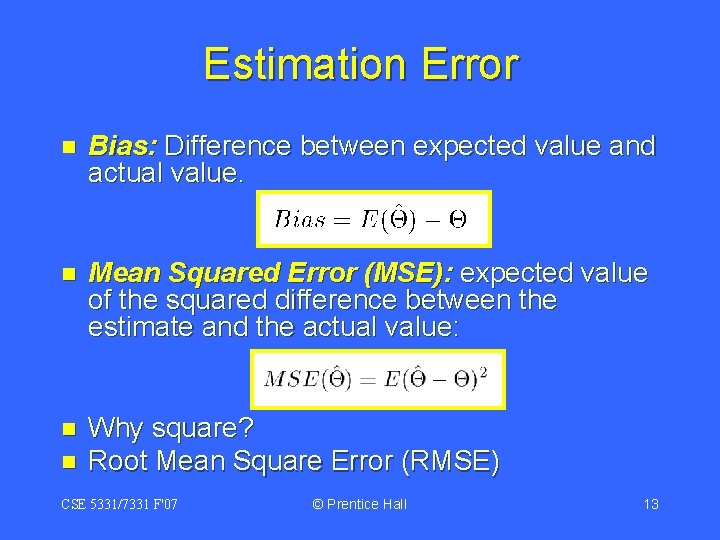

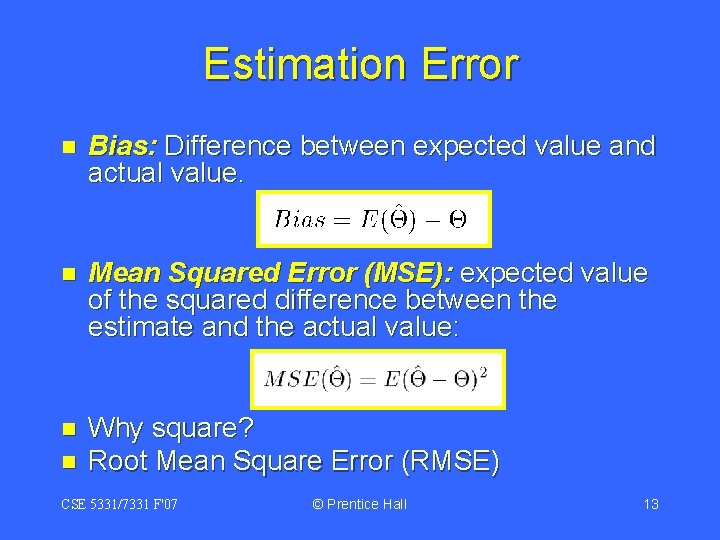

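Estimation Error
- Bias: difference between the expected value of the estimate and the actual value of the parameter:
  $$\text{Bias} = E[\hat{\Theta}] - \Theta$$
- Mean Squared Error (MSE): expected value of the squared difference between the estimate and the actual value:
  $$\text{MSE}(\hat{\Theta}) = E\big[(\hat{\Theta} - \Theta)^2\big]$$
- Why square?
- Root Mean Square Error (RMSE): $\text{RMSE} = \sqrt{\text{MSE}}$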
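The definitions above can be made concrete with empirical versions: given several estimates of a parameter whose true value is known, average the errors for the bias and the squared errors for the MSE. This is a sketch under that assumption; `estimation_error` is a hypothetical helper and the numbers are illustrative.

```python
import math


def estimation_error(estimates: list[float], true_value: float) -> tuple[float, float, float]:
    """Empirical bias, MSE and RMSE of a set of estimates of one known parameter."""
    n = len(estimates)
    bias = sum(estimates) / n - true_value  # average estimate minus the true value
    mse = sum((e - true_value) ** 2 for e in estimates) / n  # average squared error
    rmse = math.sqrt(mse)  # back in the same units as the parameter
    return bias, mse, rmse


# Hypothetical estimates of a parameter whose true value is 10.
print(estimation_error([9.0, 11.0, 12.0, 8.0], 10.0))
```

Here the bias is 0 because the positive and negative errors cancel, while the MSE is 2.5; squaring prevents that cancellation and weights large errors more heavily.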

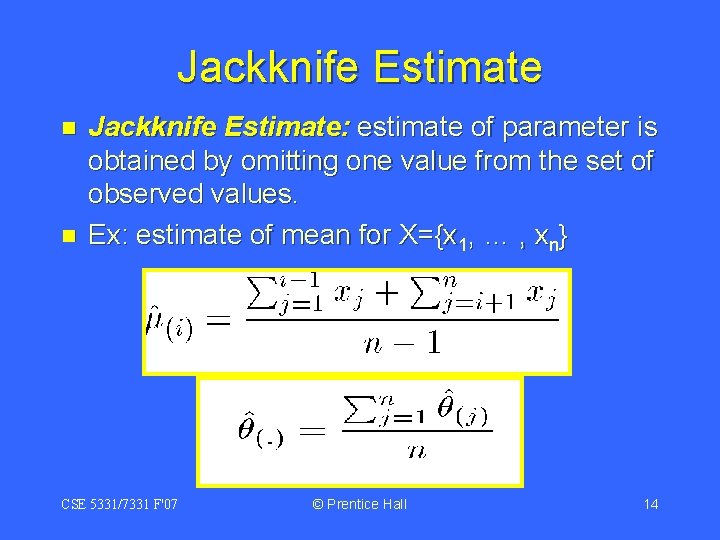

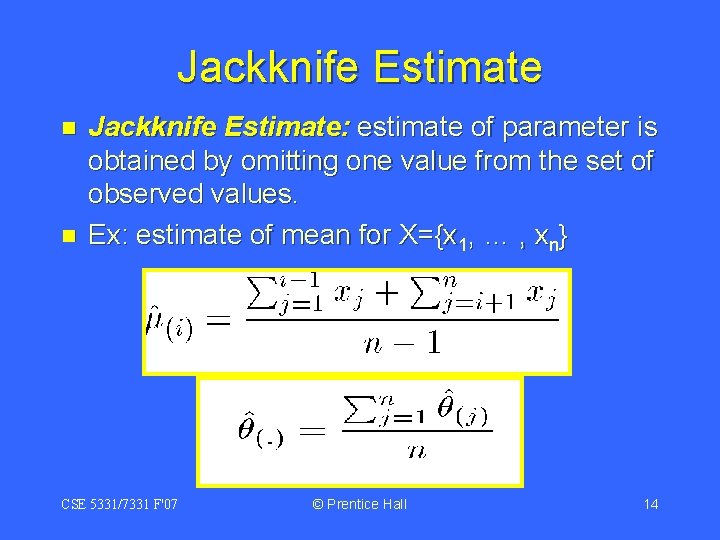

Jackknife Estimate
- Jackknife estimate: an estimate of a parameter obtained by omitting one value from the set of observed values.
- Ex: estimate of the mean for $X = \{x_1, \ldots, x_n\}$ with $x_i$ omitted:
  $$\hat{\mu}_{(i)} = \frac{1}{n-1}\left(\sum_{j=1}^{i-1} x_j + \sum_{j=i+1}^{n} x_j\right)$$
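A minimal sketch of the leave-one-out computation: apply an estimator to the data with each value omitted in turn, then average the results (one common way to combine them). The names `jackknife` and `sample_mean` and the data are hypothetical.

```python
from typing import Callable, Sequence


def jackknife(
    data: Sequence[float], estimator: Callable[[Sequence[float]], float]
) -> tuple[list[float], float]:
    """Return the n leave-one-out estimates and their average."""
    n = len(data)
    if n < 2:
        raise ValueError("need at least two observations")
    # Estimate i uses every value except data[i].
    leave_one_out = [estimator(list(data[:i]) + list(data[i + 1:])) for i in range(n)]
    return leave_one_out, sum(leave_one_out) / n


def sample_mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


estimates, combined = jackknife([1.0, 2.0, 3.0, 4.0], sample_mean)
print(estimates, combined)
```

For the mean, the average of the leave-one-out means always equals the ordinary sample mean (here 2.5); the jackknife becomes more informative for estimators where that identity does not hold.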

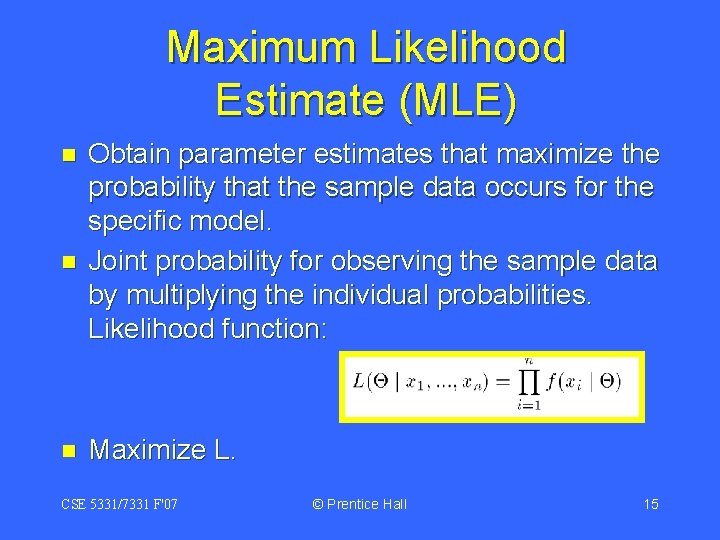

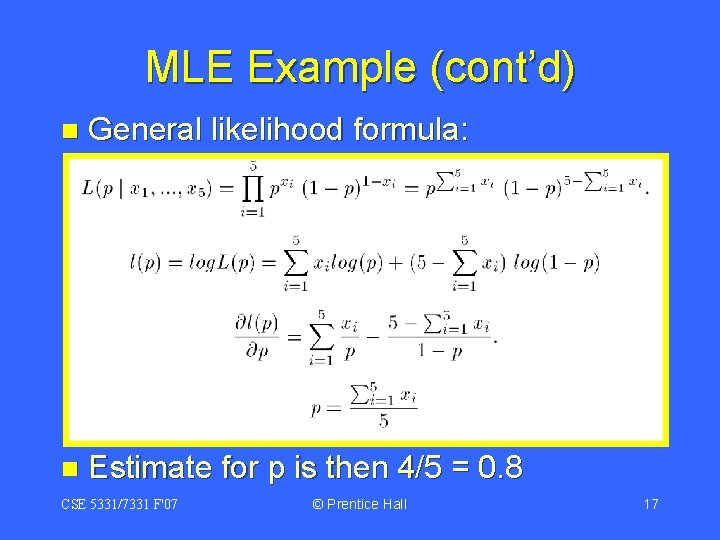

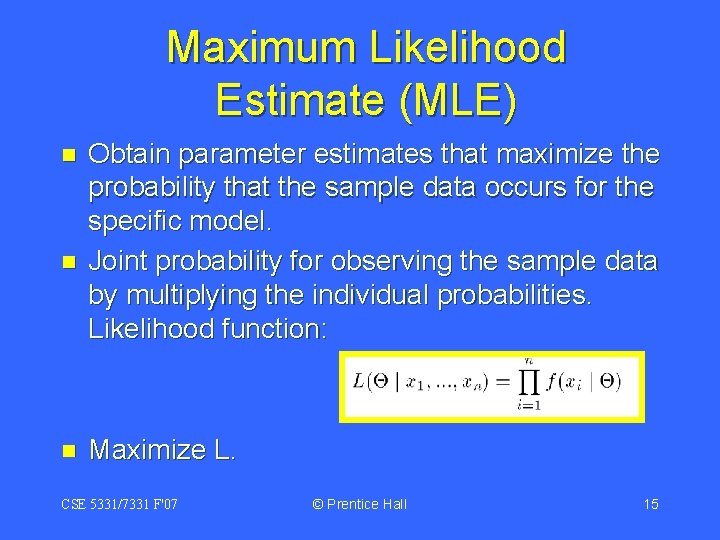

Maximum Likelihood Estimate (MLE)
- Obtain parameter estimates that maximize the probability that the sample data occurs for the specific model.
- The joint probability of observing the sample data is found by multiplying the individual probabilities.
- Likelihood function:
  $$L(\Theta \mid x_1, \ldots, x_n) = \prod_{i=1}^{n} f(x_i \mid \Theta)$$
- Maximize $L$.
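A sketch of maximizing a likelihood numerically. It assumes a model the slide does not specify: i.i.d. normal observations with known standard deviation 1 and unknown mean. It works with the log-likelihood, a sum of logs that has the same maximizer as the product, and searches a grid instead of using calculus. The data and function names are hypothetical.

```python
import math


def normal_log_likelihood(mu: float, data: list[float], sigma: float = 1.0) -> float:
    """log L(mu | data) for i.i.d. normal observations with known sigma."""
    return sum(
        -0.5 * math.log(2 * math.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)
        for x in data
    )


def mle_by_grid(data: list[float], lo: float, hi: float, steps: int = 10_000) -> float:
    """Return the grid point in [lo, hi] with the largest log-likelihood."""
    grid = [lo + (hi - lo) * k / steps for k in range(steps + 1)]
    return max(grid, key=lambda mu: normal_log_likelihood(mu, data))


data = [4.8, 5.1, 5.3, 4.6, 5.2]  # hypothetical sample; its mean is 5.0
print(mle_by_grid(data, 0.0, 10.0))
```

For this model the maximizer is the sample mean, so the grid search should land on (or next to) 5.0.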

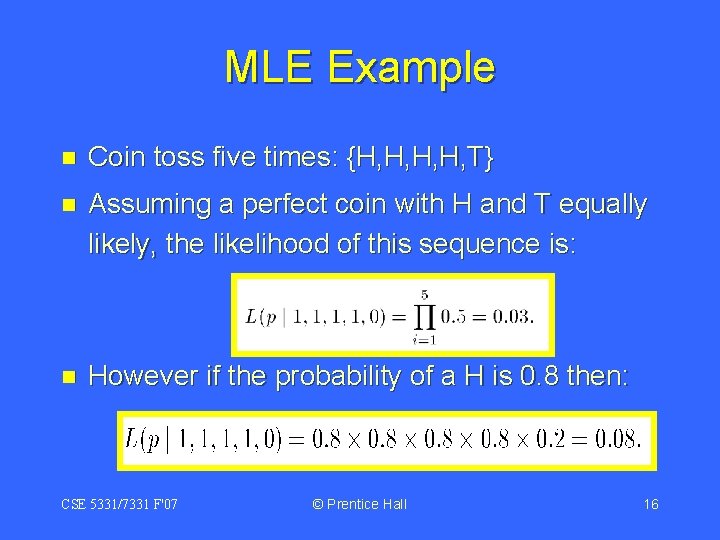

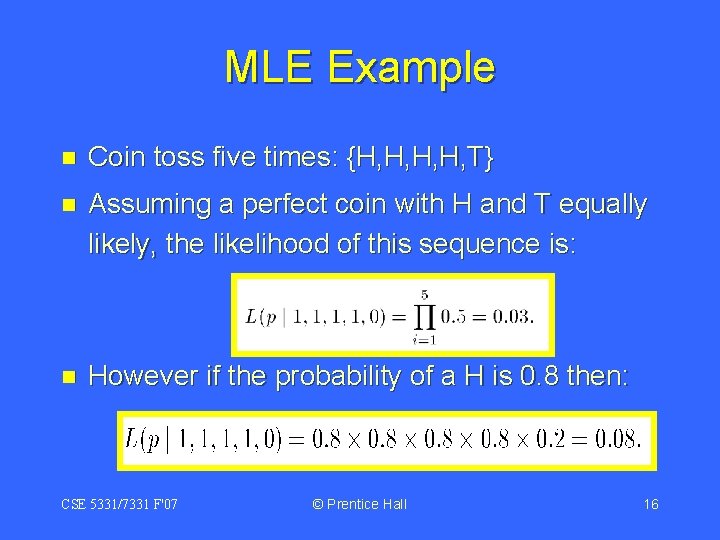

MLE Example
- Coin tossed five times: {H, H, H, H, T}
- Assuming a perfect coin with H and T equally likely, the likelihood of this sequence is:
  $$L(p \mid x_1, \ldots, x_5) = \left(\tfrac{1}{2}\right)^5 = 0.03125$$
- However, if the probability of an H is 0.8, then:
  $$L(p \mid x_1, \ldots, x_5) = (0.8)^4 (0.2) = 0.08192$$
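The two likelihoods above can be reproduced by multiplying the per-toss probabilities, assuming the tosses are independent. A minimal sketch using exact rational arithmetic; `sequence_likelihood` is a hypothetical helper.

```python
from fractions import Fraction


def sequence_likelihood(tosses: str, p_heads: Fraction) -> Fraction:
    """Probability of an exact H/T sequence, assuming independent tosses."""
    result = Fraction(1)
    for t in tosses:
        if t not in "HT":
            raise ValueError(f"unexpected toss {t!r}")
        result *= p_heads if t == "H" else 1 - p_heads
    return result


tosses = "HHHHT"
print(sequence_likelihood(tosses, Fraction(1, 2)))  # 1/32
print(sequence_likelihood(tosses, Fraction(4, 5)))  # 256/3125
```

As decimals, 1/32 = 0.03125 and 256/3125 = 0.08192, so $p = 0.8$ makes the observed sequence more likely than a fair coin does.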

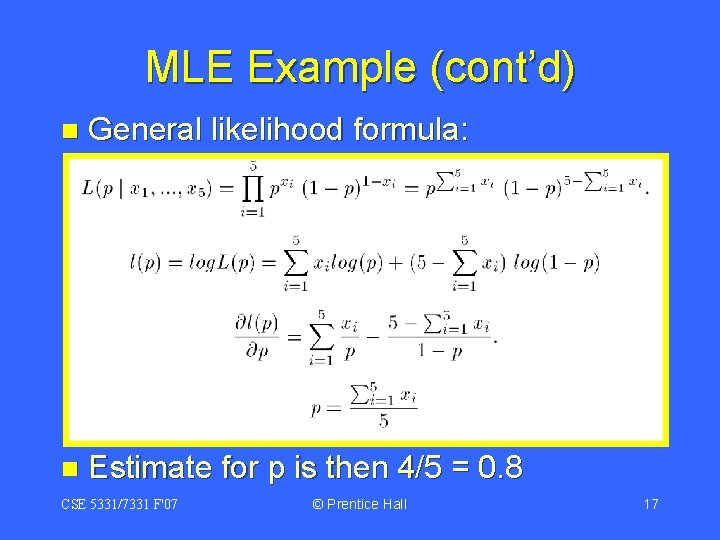

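MLE Example (cont’d)
- General likelihood formula, with $x_i = 1$ for H and $x_i = 0$ for T:
  $$L(p \mid x_1, \ldots, x_5) = \prod_{i=1}^{5} p^{x_i}(1-p)^{1-x_i} = p^{\sum_{i=1}^{5} x_i}\,(1-p)^{5-\sum_{i=1}^{5} x_i}$$
- The estimate for $p$ is then $4/5 = 0.8$.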
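The step from the general likelihood formula to the estimate $4/5$ can be checked by maximizing the log-likelihood, which has the same maximizer because $\log$ is increasing. With four heads ($\sum x_i = 4$) in five tosses:

$$
\begin{aligned}
\log L(p) &= 4\log p + \log(1-p) \\
\frac{d}{dp}\log L(p) &= \frac{4}{p} - \frac{1}{1-p} = 0 \\
4(1-p) &= p \quad\Longrightarrow\quad \hat{p} = \frac{4}{5} = 0.8
\end{aligned}
$$

The second derivative, $-4/p^2 - 1/(1-p)^2$, is negative on $(0, 1)$, so this stationary point is a maximum. The same argument with $k$ heads in $n$ tosses gives $\hat{p} = k/n$.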

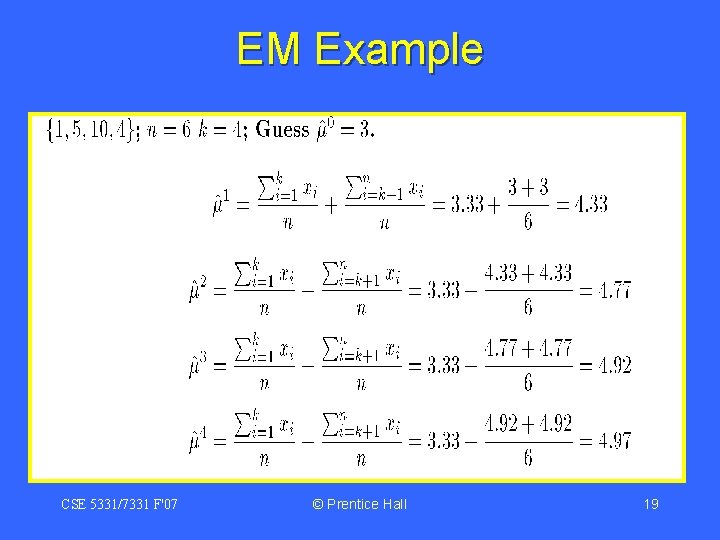

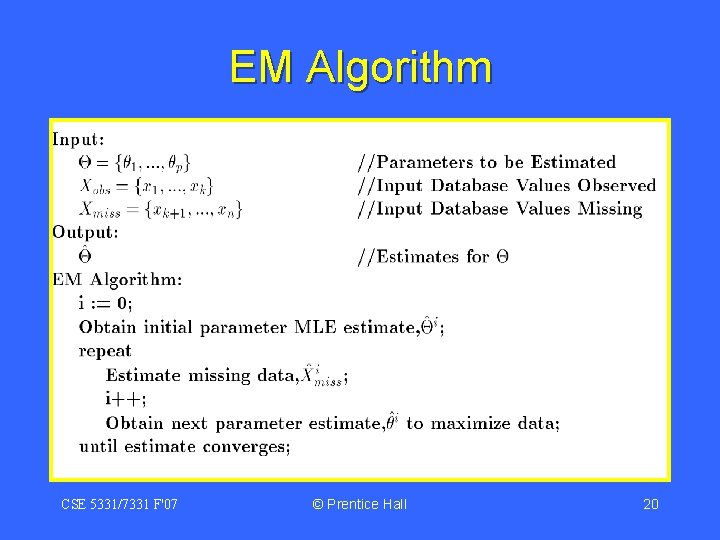

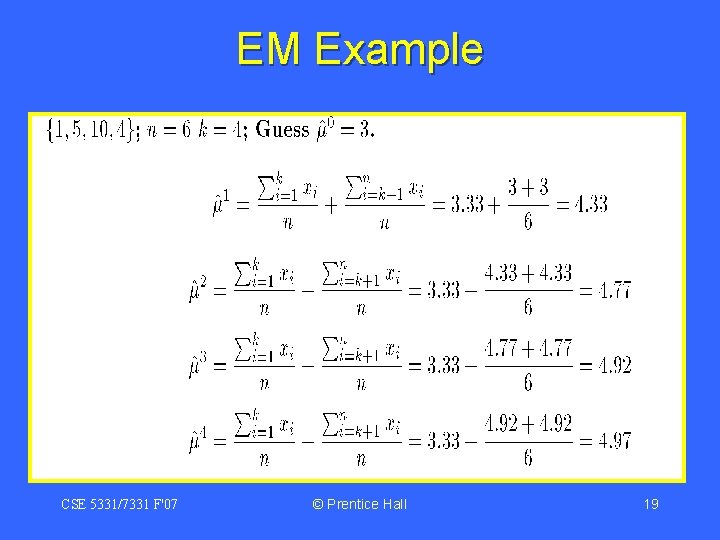

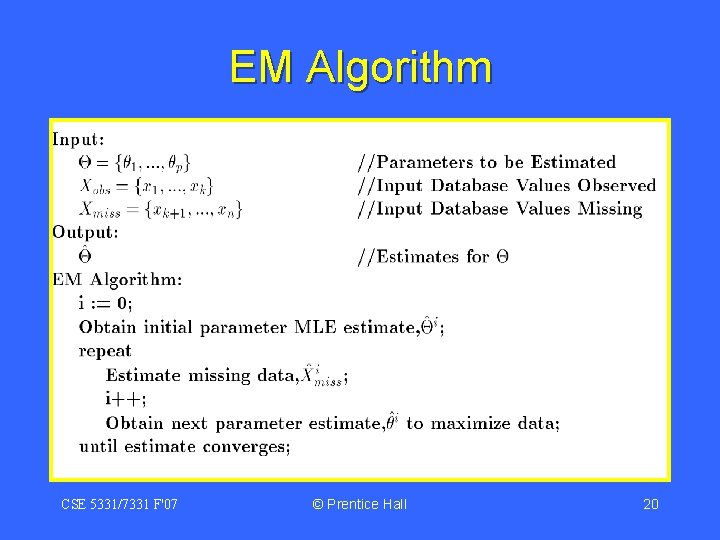

Expectation-Maximization (EM)
- Solves estimation with incomplete data.
- Obtain initial estimates for the parameters.
- Iteratively use the current estimates to fill in the missing data, re-estimate the parameters, and continue until convergence.
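A minimal sketch of the EM loop for the simplest case, assumed here for illustration: estimating a mean when some values are missing. The E-step fills each missing value with the current estimate and the M-step recomputes the mean over observed and filled-in values. The data, the initial guess, and the name `em_mean` are hypothetical.

```python
def em_mean(
    observed: list[float],
    n_missing: int,
    mu0: float,
    tol: float = 1e-6,
    max_iter: int = 1000,
) -> tuple[float, int]:
    """EM-style estimate of a mean when n_missing values are unobserved.

    E-step: replace each missing value by the current mean estimate.
    M-step: recompute the mean over observed + imputed values.
    Stops when the estimate changes by less than tol.
    """
    n = len(observed) + n_missing
    total_observed = sum(observed)
    mu = mu0
    for iteration in range(1, max_iter + 1):
        new_mu = (total_observed + n_missing * mu) / n  # E-step and M-step combined
        if abs(new_mu - mu) < tol:
            return new_mu, iteration
        mu = new_mu
    return mu, max_iter


estimate, iterations = em_mean([2.0, 6.0, 7.0], n_missing=2, mu0=1.0)
print(estimate, iterations)
```

In this simple model the iteration converges to the mean of the observed values (5.0 here), whatever the initial guess; EM earns its keep in models where no such closed form is available.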

EM Example

EM Algorithm

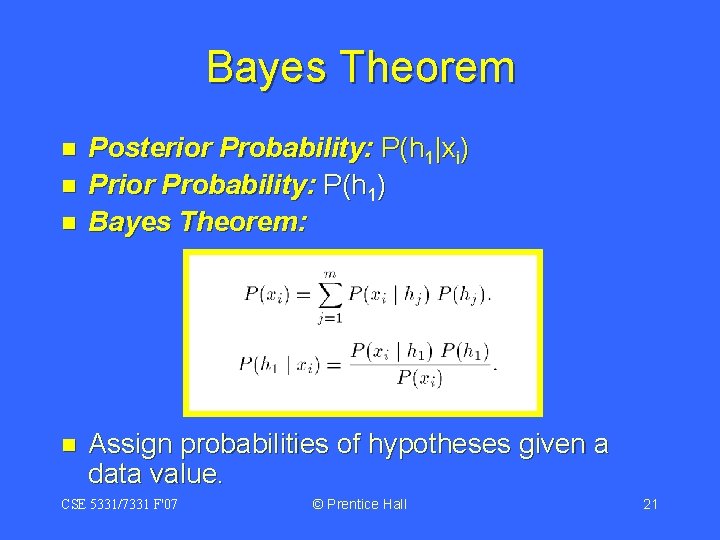

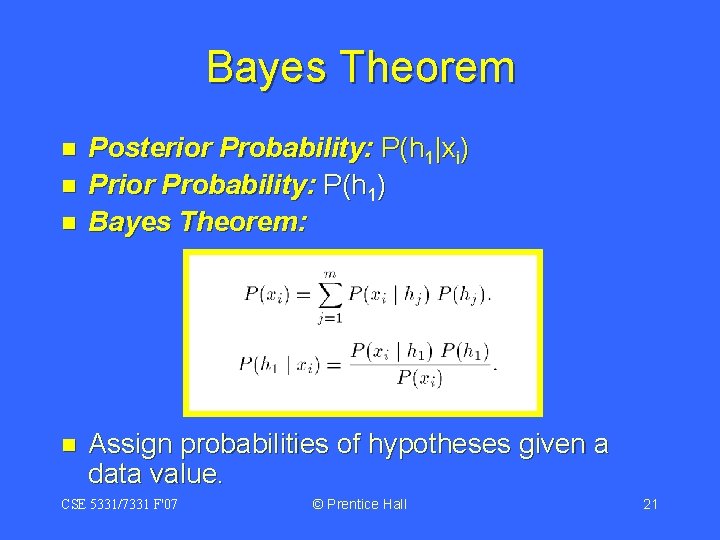

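Bayes Theorem
- Posterior probability: $P(h_1 \mid x_i)$
- Prior probability: $P(h_1)$
- Bayes Theorem:
  $$P(h_1 \mid x_i) = \frac{P(x_i \mid h_1)\,P(h_1)}{P(x_i)}$$
  where, for mutually exclusive and exhaustive hypotheses, $P(x_i) = \sum_j P(x_i \mid h_j)\,P(h_j)$.
- Assign probabilities of hypotheses given a data value.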
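A minimal sketch of Bayes' theorem applied to every hypothesis at once, assuming the hypotheses are mutually exclusive and exhaustive so that $P(x)$ follows from the law of total probability. `posteriors` is a hypothetical helper and the numbers are illustrative only.

```python
def posteriors(likelihoods: dict[str, float], priors: dict[str, float]) -> dict[str, float]:
    """P(h | x) for every hypothesis h.

    likelihoods[h] = P(x | h) and priors[h] = P(h). The evidence P(x) is the
    sum of P(x | h) P(h) over all hypotheses.
    """
    evidence = sum(likelihoods[h] * priors[h] for h in priors)
    if evidence == 0:
        raise ValueError("P(x) is zero; the posterior is undefined")
    return {h: likelihoods[h] * priors[h] / evidence for h in priors}


# Hypothetical two-hypothesis example.
post = posteriors({"h1": 0.2, "h2": 0.5}, {"h1": 0.7, "h2": 0.3})
print(post)
print(max(post, key=post.get))  # hypothesis with the largest posterior
```

Although `h1` has the larger prior, `h2` has the larger posterior because its likelihood outweighs the difference in priors.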

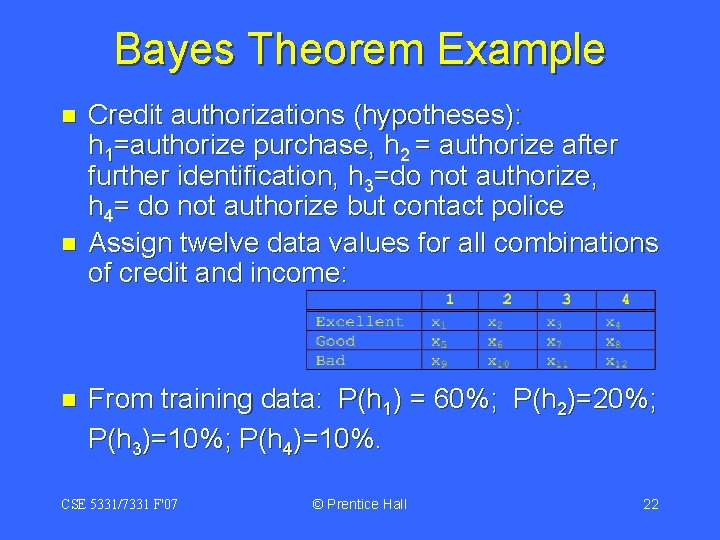

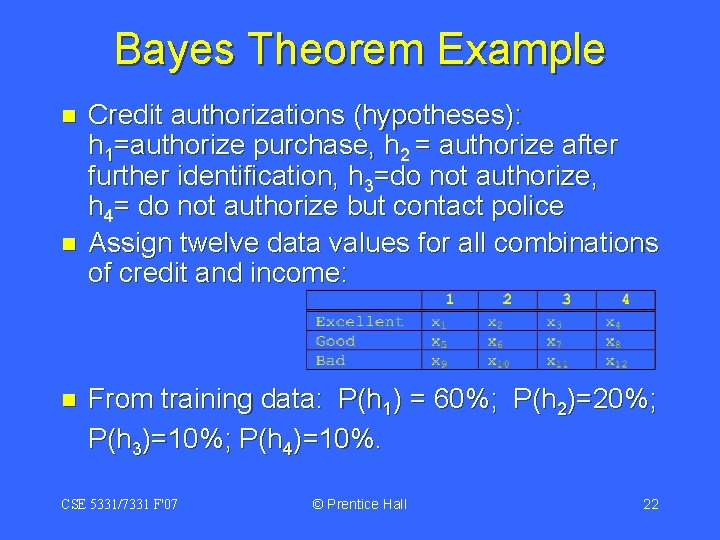

Bayes Theorem Example
- Credit authorizations (hypotheses): $h_1$ = authorize purchase, $h_2$ = authorize after further identification, $h_3$ = do not authorize, $h_4$ = do not authorize but contact police
- Assign twelve data values for all combinations of credit and income:
- From training data: $P(h_1) = 60\%$; $P(h_2) = 20\%$; $P(h_3) = 10\%$; $P(h_4) = 10\%$.

Bayes Example (cont’d)
- Training data:

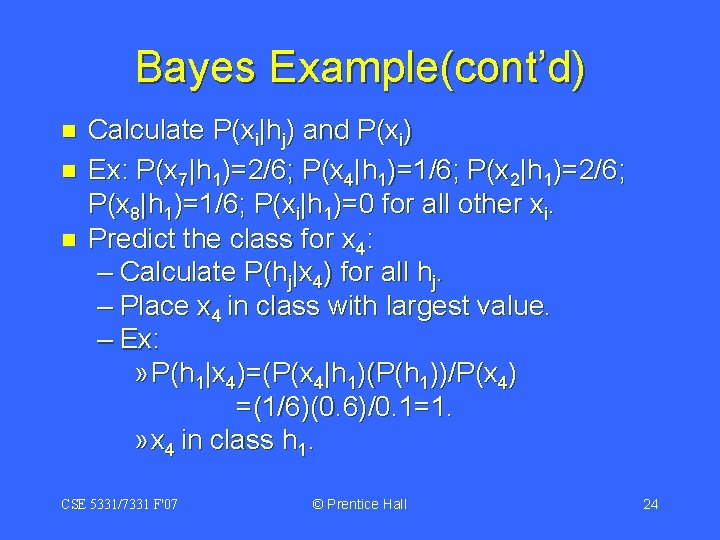

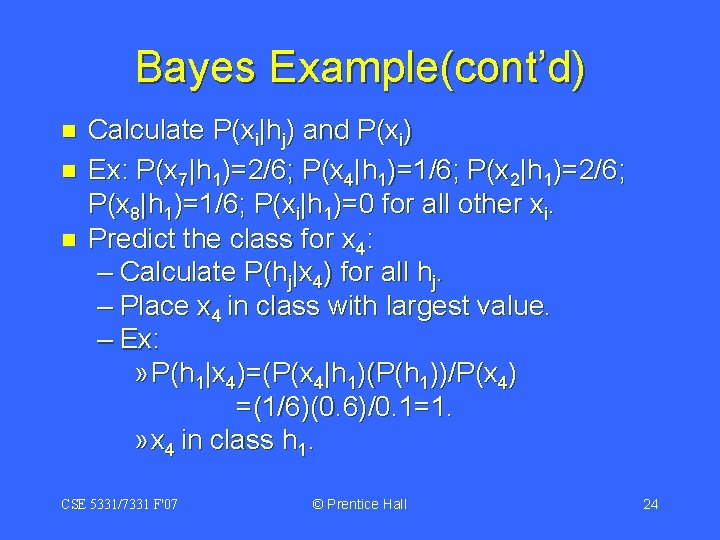

Bayes Example (cont’d)
- Calculate $P(x_i \mid h_j)$ and $P(x_i)$.
- Ex: $P(x_7 \mid h_1) = 2/6$; $P(x_4 \mid h_1) = 1/6$; $P(x_2 \mid h_1) = 2/6$; $P(x_8 \mid h_1) = 1/6$; $P(x_i \mid h_1) = 0$ for all other $x_i$.
- Predict the class for $x_4$:
  - Calculate $P(h_j \mid x_4)$ for all $h_j$.
  - Place $x_4$ in the class with the largest value.
  - Ex:
    - $P(h_1 \mid x_4) = \dfrac{P(x_4 \mid h_1)\,P(h_1)}{P(x_4)} = \dfrac{(1/6)(0.6)}{0.1} = 1$
    - $x_4$ is in class $h_1$.
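The calculation for $x_4$ can be checked with exact rational arithmetic, which avoids floating-point rounding in the final value. The three inputs are the values from the slide; `posterior` is a hypothetical helper.

```python
from fractions import Fraction


def posterior(likelihood: Fraction, prior: Fraction, evidence: Fraction) -> Fraction:
    """Bayes' theorem: P(h | x) = P(x | h) P(h) / P(x)."""
    return likelihood * prior / evidence


p_x4_given_h1 = Fraction(1, 6)  # P(x4 | h1)
p_h1 = Fraction(6, 10)          # P(h1) = 60%
p_x4 = Fraction(1, 10)          # P(x4)
print(posterior(p_x4_given_h1, p_h1, p_x4))  # 1
```

Because the posteriors over $h_1, \ldots, h_4$ sum to 1, $P(h_1 \mid x_4) = 1$ forces the other three to be 0, so $h_1$ is necessarily the class with the largest value.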


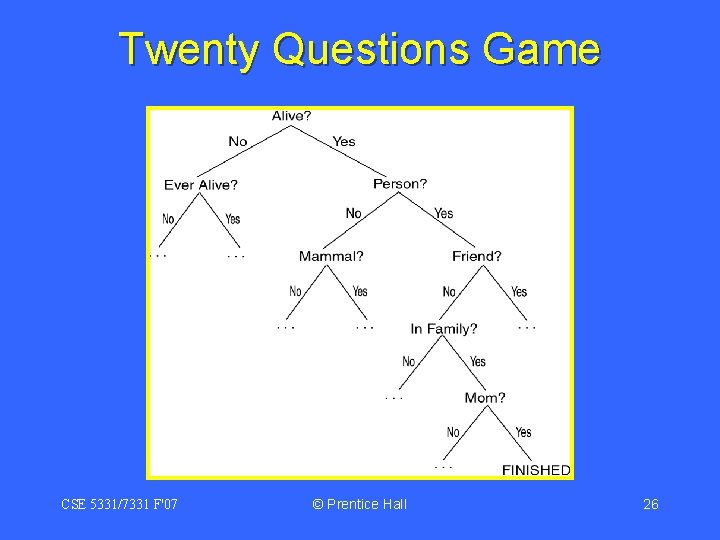

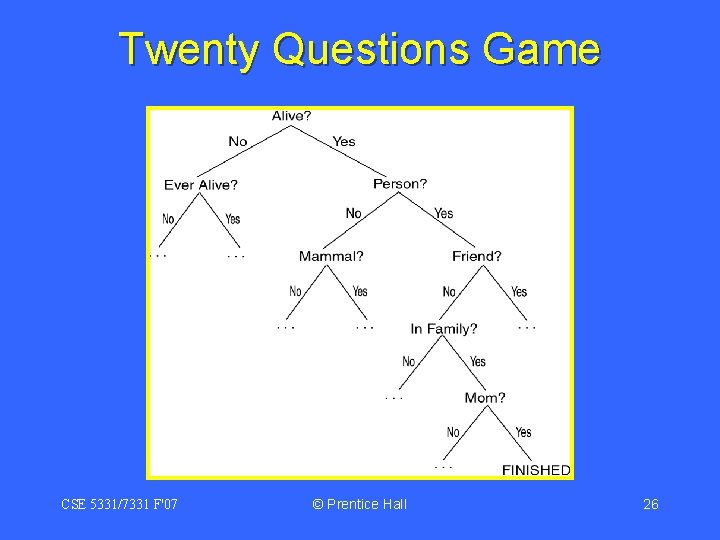

Twenty Questions Game

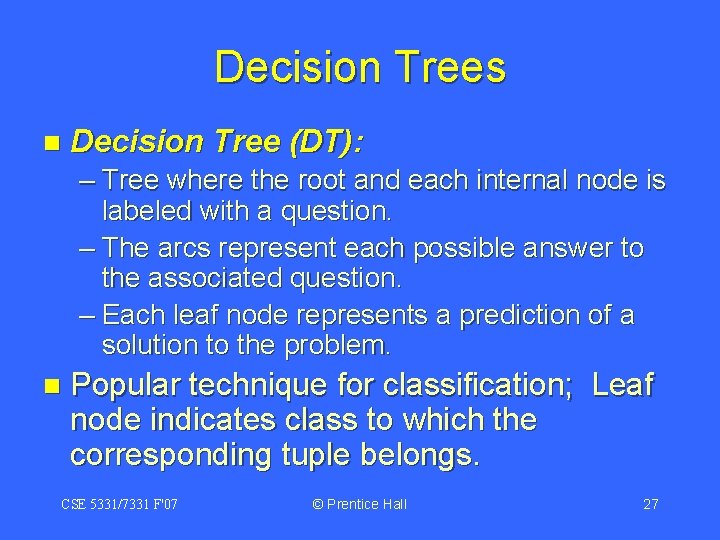

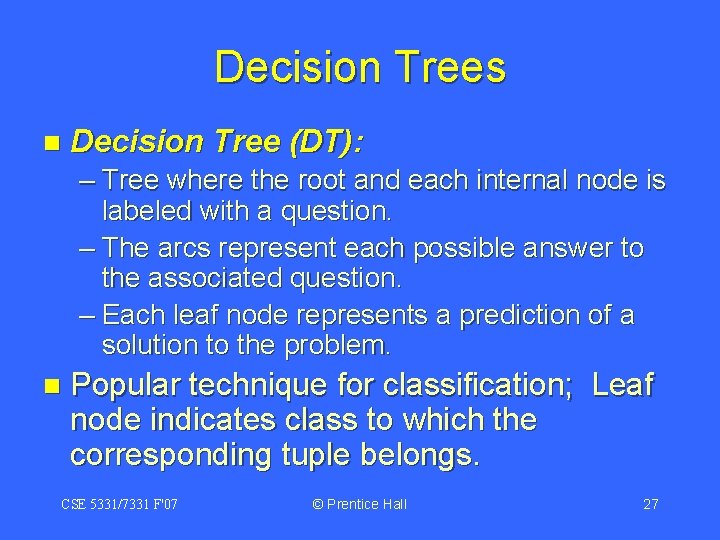

Decision Trees
- Decision Tree (DT):
  - A tree where the root and each internal node are labeled with a question.
  - The arcs represent each possible answer to the associated question.
  - Each leaf node represents a prediction of a solution to the problem.
- Popular technique for classification; the leaf node indicates the class to which the corresponding tuple belongs.
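A minimal sketch of the definition above as a data structure: internal nodes hold a question, arcs are keyed by the possible answers, and leaves hold a class label. Classifying a tuple means following arcs from the root to a leaf. The class names, the height thresholds, and the function names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Union


@dataclass
class Leaf:
    label: str  # predicted class


@dataclass
class Node:
    question: Callable[[dict], str]  # maps a tuple to one of the possible answers
    branches: dict[str, Tree] = field(default_factory=dict)  # one arc per answer


Tree = Union[Leaf, Node]


def classify(tree: Tree, tuple_: dict) -> str:
    """Follow the arc for each answer from the root down to a leaf."""
    while isinstance(tree, Node):
        tree = tree.branches[tree.question(tuple_)]
    return tree.label


# Hypothetical tree: classify people as short / medium / tall by height in metres.
tree = Node(
    question=lambda t: "< 1.6" if t["height"] < 1.6 else ">= 1.6",
    branches={
        "< 1.6": Leaf("short"),
        ">= 1.6": Node(
            question=lambda t: "< 1.8" if t["height"] < 1.8 else ">= 1.8",
            branches={"< 1.8": Leaf("medium"), ">= 1.8": Leaf("tall")},
        ),
    },
)
print(classify(tree, {"height": 1.75}))  # medium
```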

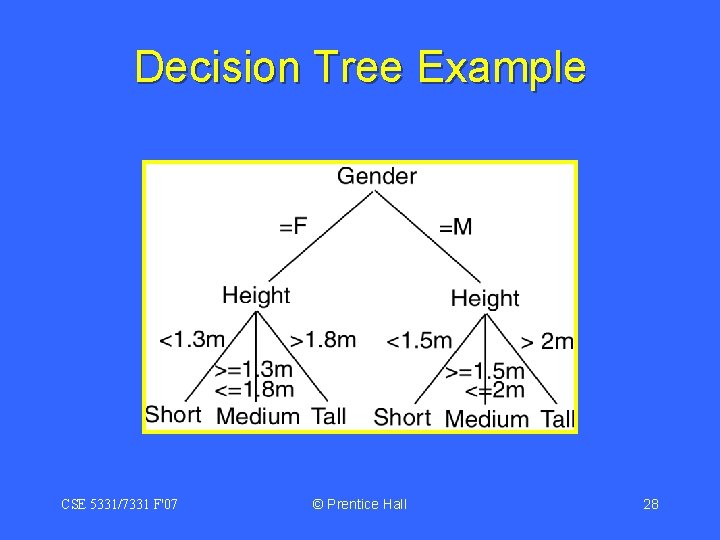

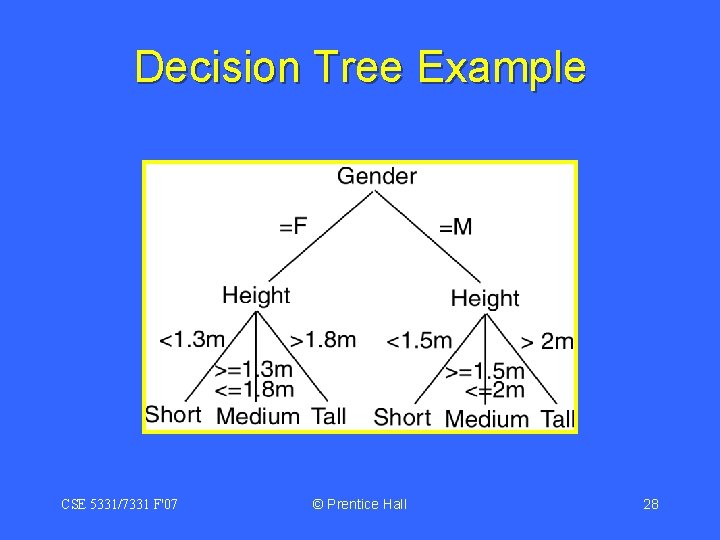

Decision Tree Example

Decision Trees
- How do you build a good DT?
- What is a good DT?
- Ans: Supervised Learning

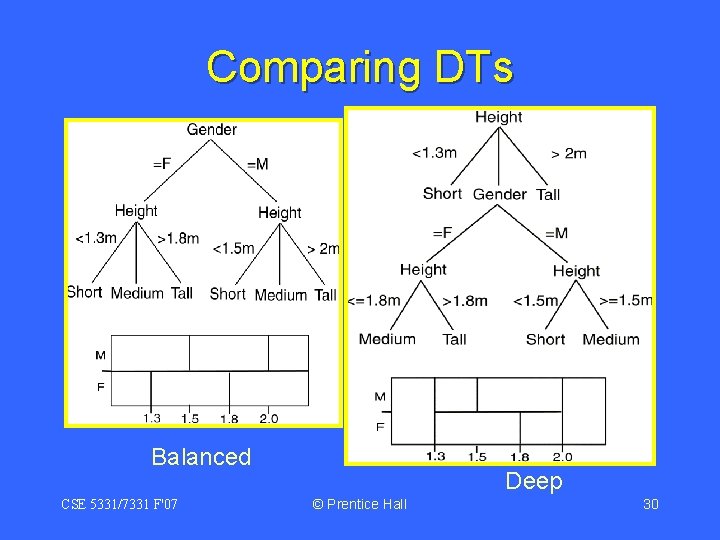

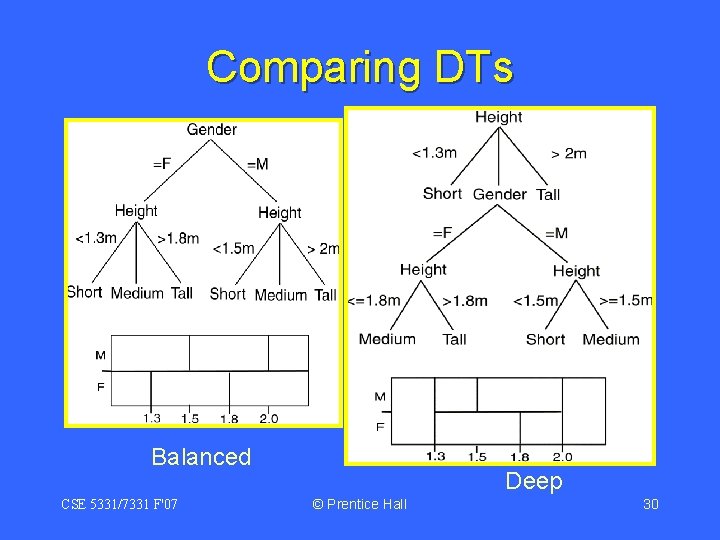

Comparing DTs: Balanced vs. Deep

Decision Tree Induction is often based on Information Theory. So...

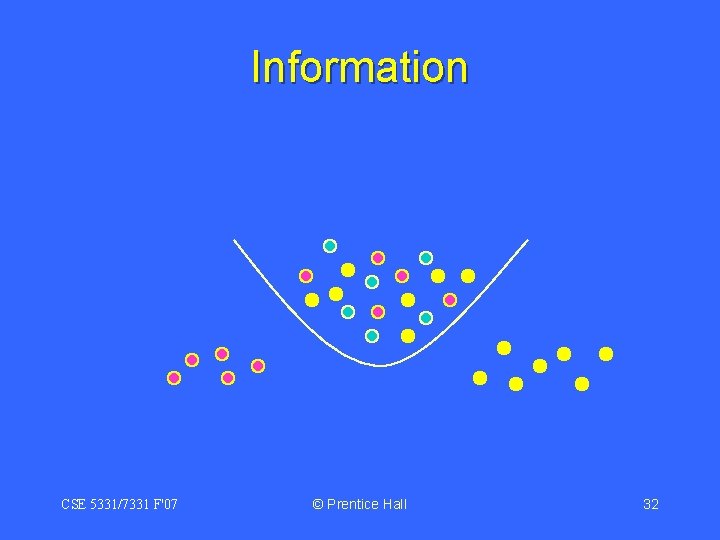

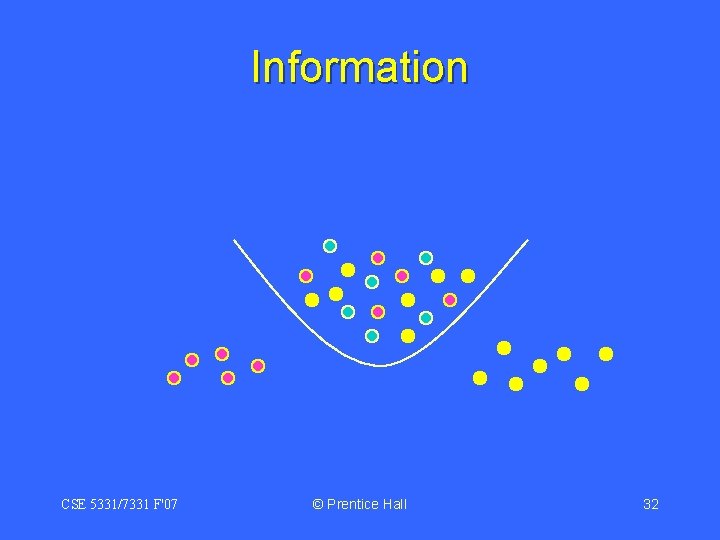

Information

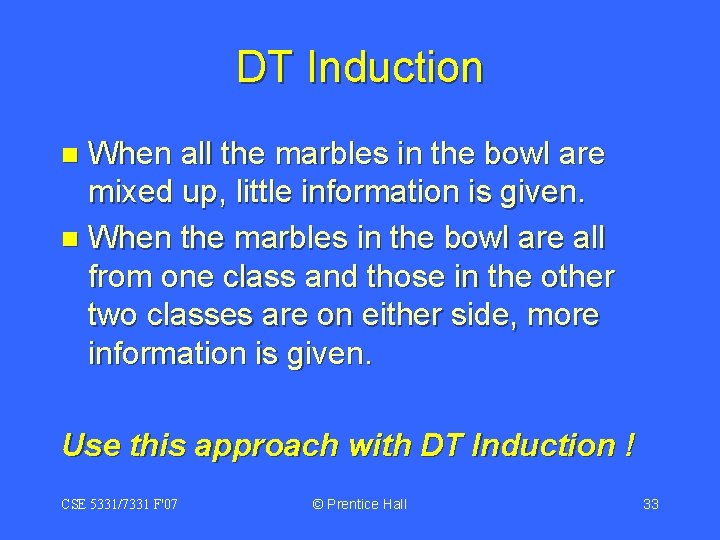

DT Induction
- When all the marbles in the bowl are mixed up, little information is given.
- When the marbles in the bowl are all from one class and those in the other two classes are on either side, more information is given.
- Use this approach with DT Induction!

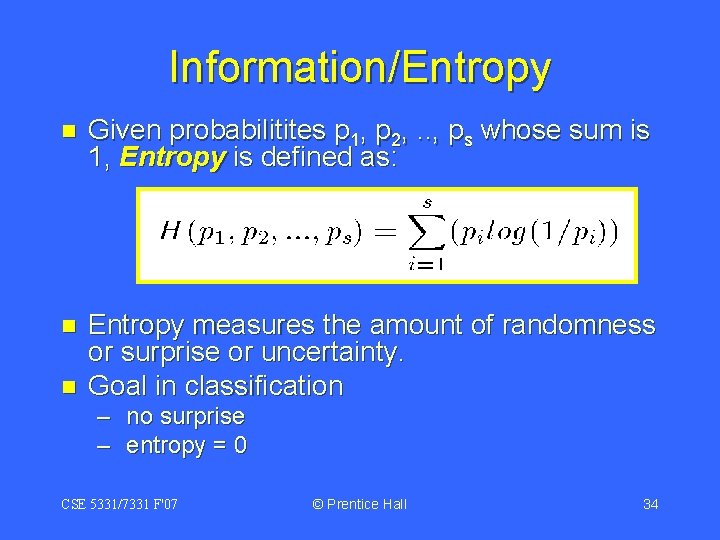

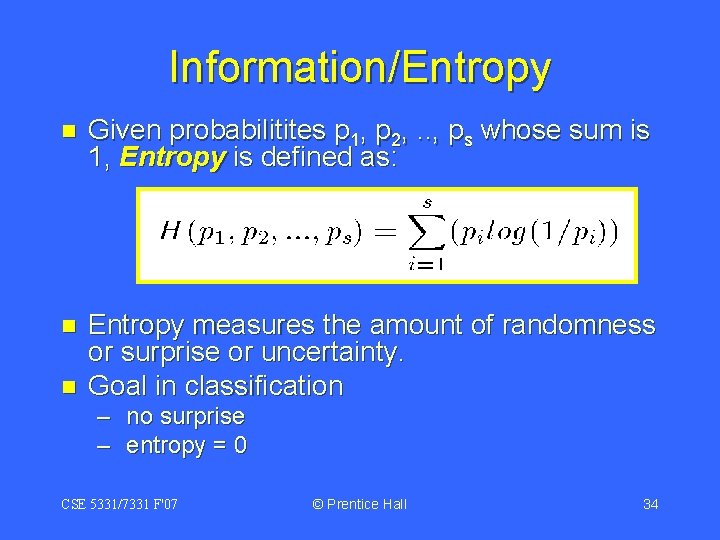

Information/Entropy
- Given probabilities $p_1, p_2, \ldots, p_s$ whose sum is 1, entropy is defined as:
  $$H(p_1, p_2, \ldots, p_s) = \sum_{i=1}^{s} p_i \log\left(\frac{1}{p_i}\right)$$
- Entropy measures the amount of randomness, surprise, or uncertainty.
- Goal in classification:
  - no surprise
  - entropy = 0
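A minimal sketch of the entropy formula, applied to the class mix of a bowl of marbles as in the DT induction slide. The logarithm base is not fixed by the formula; base 2 (bits) is assumed here, and other bases only rescale the result. The bowls and function names are hypothetical.

```python
import math
from collections import Counter


def entropy(probabilities: list[float]) -> float:
    """H(p_1, ..., p_s) = sum of p_i * log2(1 / p_i); terms with p_i = 0 contribute 0."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


def entropy_of_labels(labels: list[str]) -> float:
    """Entropy of the class distribution in a collection (e.g. marbles in a bowl)."""
    counts = Counter(labels)
    n = len(labels)
    return entropy([c / n for c in counts.values()])


mixed_bowl = ["red", "green", "blue"] * 4  # hypothetical: evenly mixed, three classes
pure_bowl = ["red"] * 12                   # hypothetical: a single class
print(entropy_of_labels(mixed_bowl))       # log2(3), about 1.585 bits
print(entropy_of_labels(pure_bowl))        # 0.0: no surprise
```

The evenly mixed bowl has the maximum entropy for three classes, while the single-class bowl reaches the classification goal of entropy 0.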


Neural Networks
- Based on the observed functioning of the human brain.
- Artificial Neural Networks (ANN)
- Our view of neural networks is very simplistic.
- We view a neural network (NN) from a graphical viewpoint.
- Alternatively, a NN may be viewed from the perspective of matrices.
- Used in pattern recognition, speech recognition, computer vision, and classification.

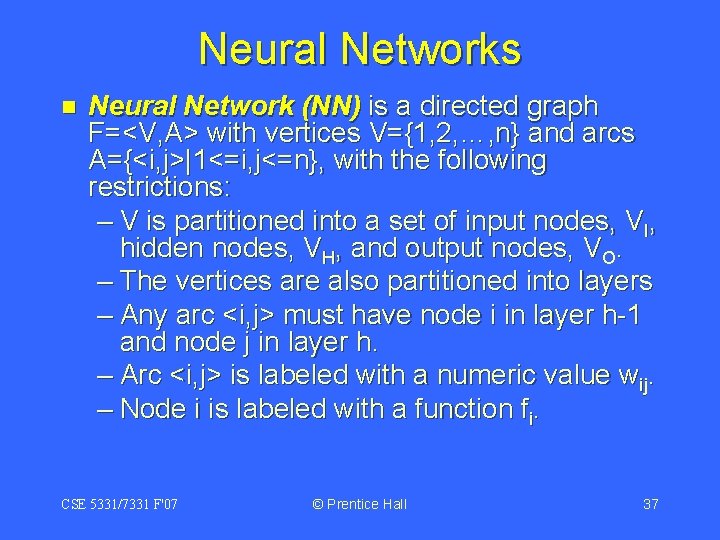

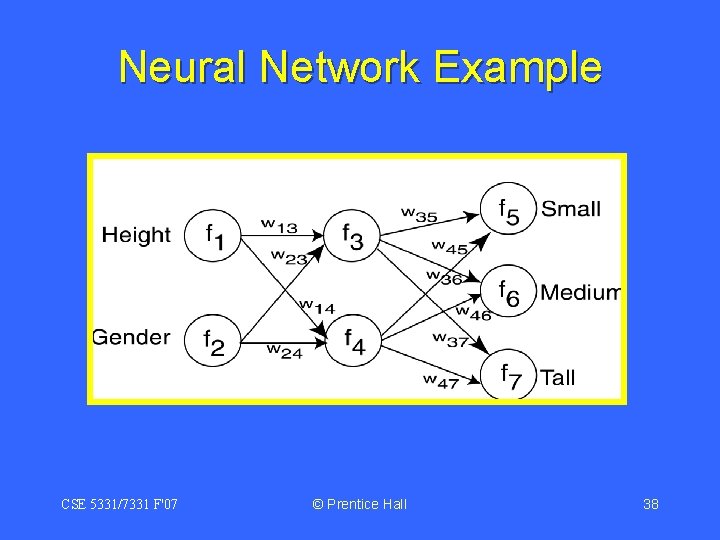

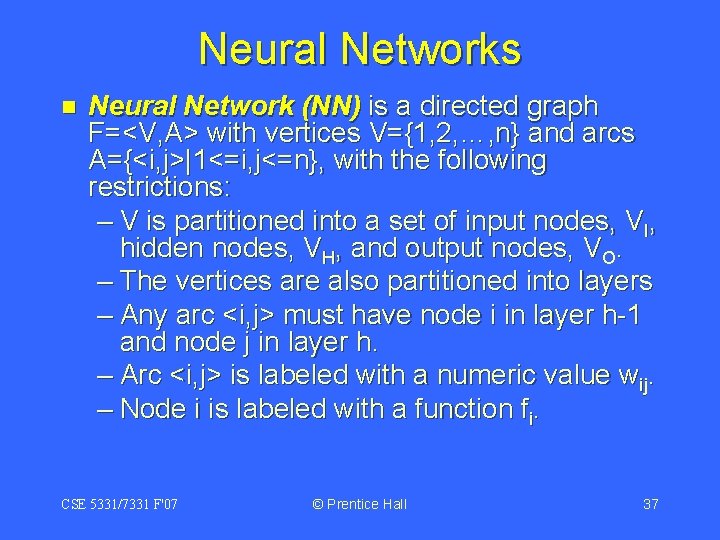

Neural Networks
- A Neural Network (NN) is a directed graph $F = \langle V, A \rangle$ with vertices $V = \{1, 2, \ldots, n\}$ and arcs $A = \{\langle i, j \rangle \mid 1 \le i, j \le n\}$, with the following restrictions:
  - $V$ is partitioned into a set of input nodes $V_I$, hidden nodes $V_H$, and output nodes $V_O$.
  - The vertices are also partitioned into layers.
  - Any arc $\langle i, j \rangle$ must have node $i$ in layer $h-1$ and node $j$ in layer $h$.
  - Arc $\langle i, j \rangle$ is labeled with a numeric value $w_{ij}$.
  - Node $i$ is labeled with a function $f_i$.
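A minimal sketch of the graph definition in code, under two assumptions not stated on the slide: input nodes pass their values through unchanged, and each non-input node $j$ applies $f_j$ to the weighted sum $\sum_i w_{ij} \cdot \text{value}_i$ over the previous layer. Arcs absent from `weights` are treated as weight 0. The layout, weights, and function names are hypothetical.

```python
import math
from typing import Callable


def forward(
    layers: list[list[int]],
    weights: dict[tuple[int, int], float],
    functions: dict[int, Callable[[float], float]],
    inputs: dict[int, float],
) -> dict[int, float]:
    """Propagate values layer by layer through the graph F = <V, A>.

    layers[0] holds the input nodes; every arc (i, j) in `weights` goes from a
    node i in layer h-1 to a node j in layer h.
    """
    values = dict(inputs)
    for prev, layer in zip(layers, layers[1:]):
        for j in layer:
            total = sum(weights.get((i, j), 0.0) * values[i] for i in prev)
            values[j] = functions[j](total)  # node j is labeled with f_j
    return values


def sigmoid(s: float) -> float:
    return 1 / (1 + math.exp(-s))


def identity(s: float) -> float:
    return s


# Hypothetical 2-1-1 network: inputs 1 and 2, hidden node 3, output node 4.
out = forward(
    layers=[[1, 2], [3], [4]],
    weights={(1, 3): 0.5, (2, 3): -0.5, (3, 4): 2.0},
    functions={3: sigmoid, 4: identity},
    inputs={1: 1.0, 2: 1.0},
)
print(out[4])  # hidden sum is 0, sigmoid(0) = 0.5, so the output is 1.0
```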

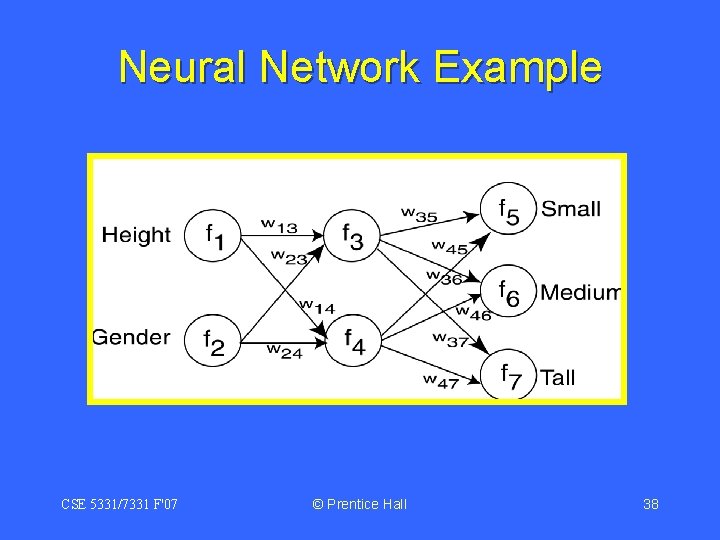

Neural Network Example

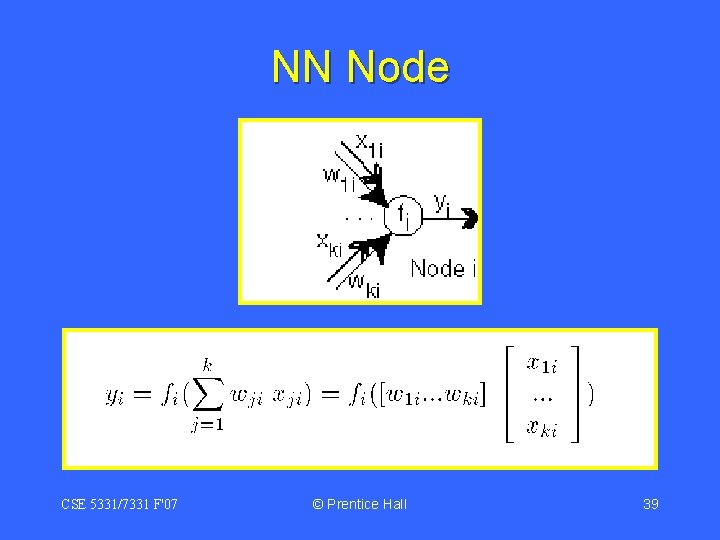

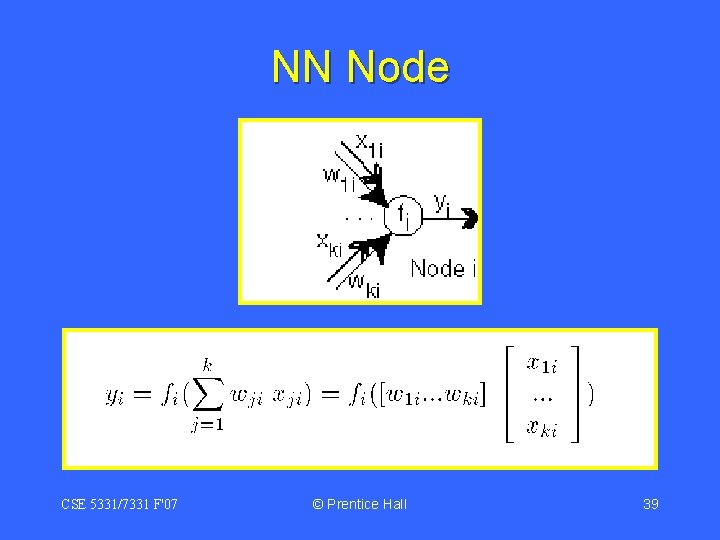

NN Node

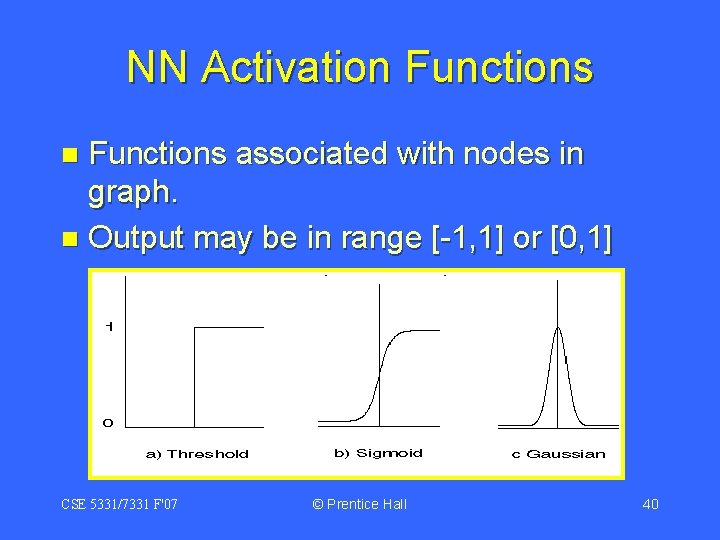

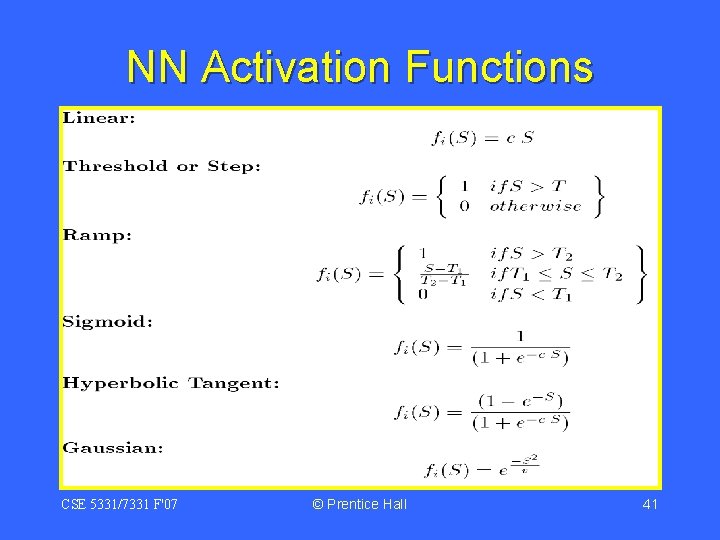

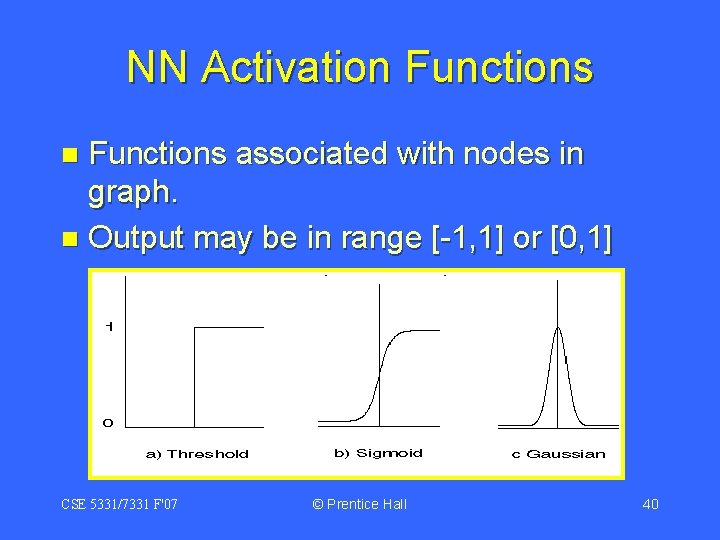

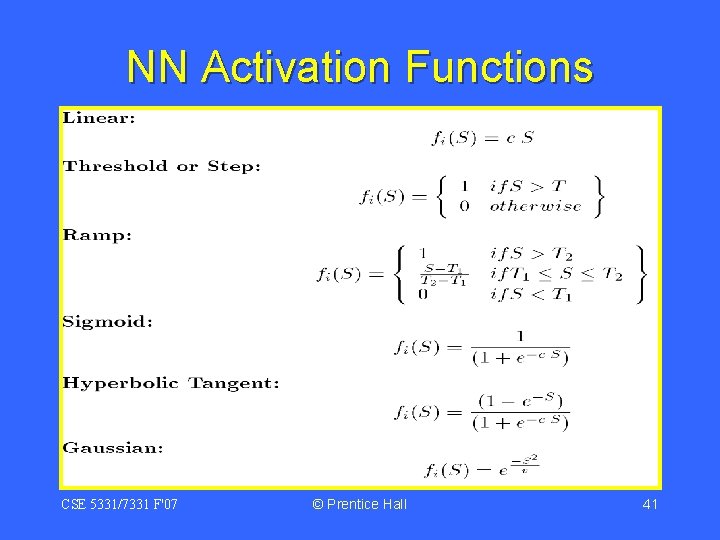

NN Activation Functions
- Functions associated with the nodes in the graph.
- Output may be in the range [-1, 1] or [0, 1].
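A sketch of three commonly used activation functions covering the two output ranges mentioned above; these are common choices, not necessarily the ones pictured on the next slide. The sigmoid is written in a numerically stable form so that very negative inputs do not overflow `math.exp`.

```python
import math


def step(s: float, threshold: float = 0.0) -> float:
    """Threshold function: output is 0 or 1."""
    return 1.0 if s >= threshold else 0.0


def sigmoid(s: float) -> float:
    """Logistic sigmoid: output in [0, 1] (strictly between them mathematically)."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)  # avoids exp(-s) overflowing for very negative s
    return e / (1.0 + e)


def hyperbolic_tangent(s: float) -> float:
    """tanh: output in [-1, 1]."""
    return math.tanh(s)


for s in (-2.0, 0.0, 2.0):
    print(s, step(s), round(sigmoid(s), 3), round(hyperbolic_tangent(s), 3))
```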

NN Activation Functions

NN Learning
- Propagate input values through the graph.
- Compare the output to the desired output.
- Adjust the weights in the graph accordingly.
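The three steps can be seen in the simplest case, a single threshold node trained with the perceptron learning rule. This is one specific technique chosen for illustration; the slide does not name a rule, and multilayer networks are usually trained with backpropagation instead. The logical-AND training set and the function names are hypothetical.

```python
def step(s: float) -> int:
    """Threshold activation: 1 if s >= 0, else 0."""
    return 1 if s >= 0 else 0


def predict(weights: list[float], x: tuple[float, ...]) -> int:
    xb = list(x) + [1.0]  # constant input 1 so the last weight acts as a bias
    return step(sum(w * xi for w, xi in zip(weights, xb)))


def train_perceptron(
    samples: list[tuple[tuple[float, ...], int]],
    learning_rate: float = 1.0,
    max_epochs: int = 100,
) -> tuple[list[float], int]:
    """Perceptron rule: w <- w + rate * (target - output) * x. Returns (weights, epochs)."""
    weights = [0.0] * (len(samples[0][0]) + 1)
    for epoch in range(1, max_epochs + 1):
        errors = 0
        for x, target in samples:
            output = predict(weights, x)  # 1. propagate the input
            error = target - output       # 2. compare with the desired output
            if error != 0:                # 3. adjust the weights
                errors += 1
                xb = list(x) + [1.0]
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, xb)]
        if errors == 0:  # a full pass with no mistakes: training has converged
            return weights, epoch
    return weights, max_epochs


AND = [((0.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 0.0), 0), ((1.0, 1.0), 1)]
weights, epochs = train_perceptron(AND)
print(weights, epochs)
print([predict(weights, x) for x, _ in AND])
```

Because AND is linearly separable, the perceptron convergence theorem guarantees that this loop stops after finitely many mistakes; for data that are not linearly separable (for example XOR) a single node cannot succeed and hidden layers are needed.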