CSE 482 Lecture 27 (Spark)


Motivation

Spark began as a research project at UC Berkeley (Spark: Cluster Computing with Working Sets, Zaharia et al., 2010). It was designed to overcome limitations of MapReduce/Hadoop:

- Iterative jobs
  - Many algorithms (e.g., k-means or gradient descent) repeatedly apply the same function to the same data to optimize parameters.
  - MapReduce and Hadoop are slow for these because each iteration is treated as a separate job that must reload its data from disk.
- Interactive analysis
  - Pig and Hive can run ad hoc exploratory queries on large datasets, but each query may incur significant latency because it runs as a separate job and must read its data from disk.

Motivation

To overcome these limitations, Spark introduces the concept of a resilient distributed dataset (RDD):

- An RDD is a read-only collection of objects, partitioned across a set of machines, that can be rebuilt if a partition is lost.
- Users can explicitly cache an RDD in memory across machines and reuse it in multiple MapReduce-like parallel operations.
- RDDs achieve fault tolerance through lineage: if a partition of an RDD is lost, it can be rebuilt from the RDDs it was derived from.

These features make Spark run faster than Hadoop, especially for iterative and interactive workloads.
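To make lineage concrete, here is a toy model in plain Python (not PySpark). It assumes each partition remembers its source data and the list of transformations applied to it. A lost in-memory copy is then recomputed by replaying those steps rather than restored from a replica. Real Spark tracks lineage as dependencies between RDDs, and the `Partition` class here is hypothetical.

```python
from typing import Callable, List, Optional


class Partition:
    """Hypothetical partition that remembers how it was derived (its lineage)."""

    def __init__(self, source: List[int], steps: List[Callable[[int], int]]):
        self.source = source            # parent data (e.g. one block of the input)
        self.steps = steps              # transformations applied, in order
        self.cached: Optional[List[int]] = self._compute()  # in-memory copy

    def _compute(self) -> List[int]:
        # Replay the lineage: apply every recorded step to the source data
        records = list(self.source)
        for step in self.steps:
            records = [step(r) for r in records]
        return records

    def lose(self) -> None:
        """Simulate losing the in-memory copy (e.g. a worker crash)."""
        self.cached = None

    def get(self) -> List[int]:
        # Rebuild from lineage only if the cached copy is gone
        if self.cached is None:
            self.cached = self._compute()
        return self.cached


steps = [lambda x: x * 10, lambda x: x + 1]   # e.g. two map() transformations
partitions = [Partition([1, 2, 3], steps), Partition([4, 5, 6], steps)]

partitions[1].lose()                   # only this partition is lost
print([p.get() for p in partitions])   # [[11, 21, 31], [41, 51, 61]]
```

Only the lost partition is recomputed. The other partition's cached copy is used as is.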

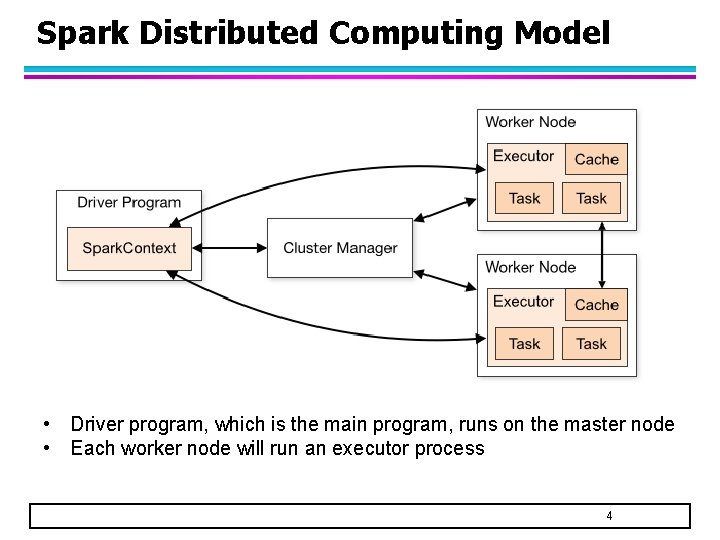

Spark Distributed Computing Model

- The driver program, which is the main program, runs on the master node.
- Each worker node runs an executor process.

Spark Programming Model

- SparkContext: used to connect to the cluster manager (e.g., YARN).
- SparkConf: contains the Spark cluster configuration settings.

These are analogous to the Job/Context and Conf objects in Hadoop programs. Spark programs are often written in Scala, Python, or Java.

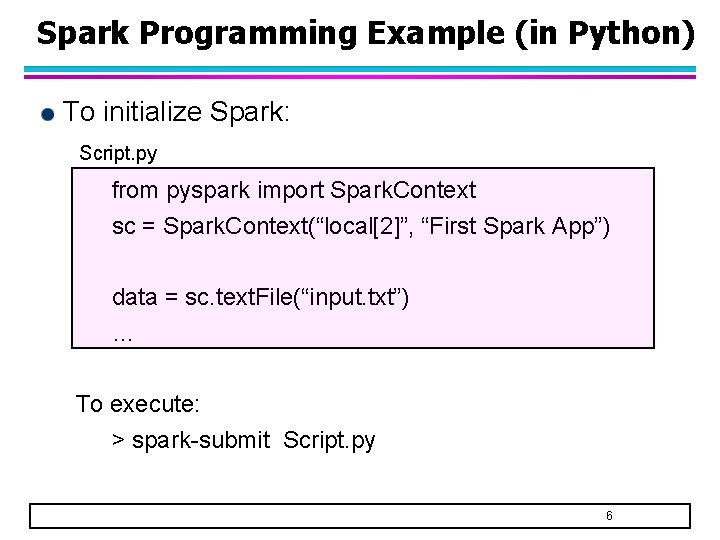

Spark Programming Example (in Python)

To initialize Spark in `Script.py`:

    from pyspark import SparkContext

    # "local[2]": run Spark locally with 2 worker threads
    sc = SparkContext("local[2]", "First Spark App")
    data = sc.textFile("input.txt")
    …

To execute: `spark-submit Script.py`

PySpark

A Spark interactive shell for Python. It automatically initializes the SparkContext object (`sc`), so you can enter and execute commands directly.

Resilient Distributed Datasets (RDD)

An RDD is a collection of records that is distributed, or partitioned, across many nodes in a cluster. An RDD is fault-tolerant: if one of the nodes fails, the RDD can be reconstructed automatically on the remaining nodes and the job still completes.

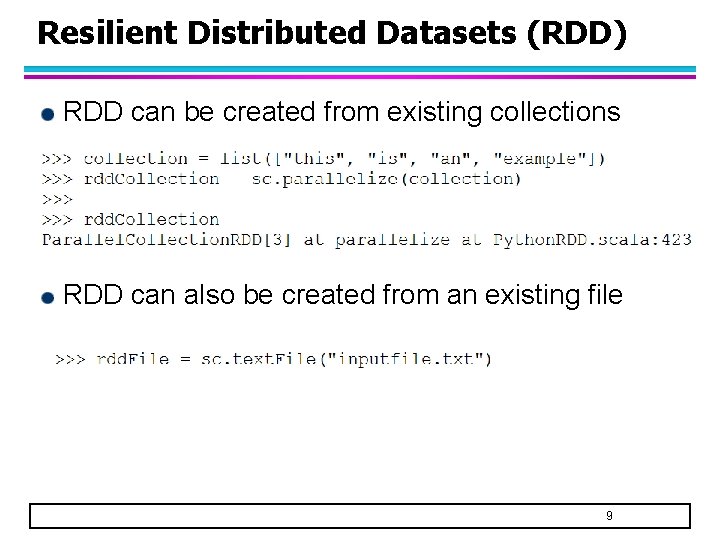

Resilient Distributed Datasets (RDD)

- An RDD can be created from an existing collection.
- An RDD can also be created from an existing file.
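When an RDD is created from a local collection, the records are split into partitions. In PySpark the call is `sc.parallelize(data, 3)`, and `rdd.glom().collect()` returns the records grouped by partition. The plain-Python sketch below shows one simple way to cut a list into contiguous, near-equal partitions. The `partition` helper is hypothetical, and Spark's exact split may differ.

```python
from typing import List, TypeVar

T = TypeVar("T")


def partition(records: List[T], num_partitions: int) -> List[List[T]]:
    """Split records into num_partitions contiguous, near-equal slices."""
    n = len(records)
    return [
        records[(i * n) // num_partitions : ((i + 1) * n) // num_partitions]
        for i in range(num_partitions)
    ]


parts = partition(list(range(10)), 3)
print(parts)  # [[0, 1, 2], [3, 4, 5], [6, 7, 8, 9]]
```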

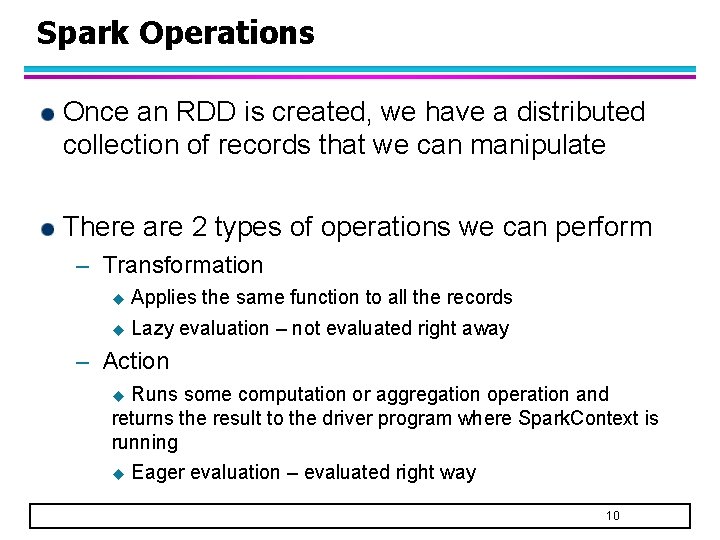

Spark Operations

Once an RDD is created, we have a distributed collection of records that we can manipulate. There are two types of operations we can perform:

- Transformation
  - Applies the same function to all the records and produces a new RDD.
  - Lazy evaluation: not evaluated right away.
- Action
  - Runs some computation or aggregation and returns the result to the driver program, where the SparkContext is running.
  - Eager evaluation: evaluated right away.
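Python 3's built-in `map()` gives a small analogy for lazy versus eager evaluation. This is an analogy only, not PySpark. Building the pipeline runs nothing. Consuming it, as an action would, forces every record through the function.

```python
calls = []  # records every time the mapped function actually runs


def square(x: int) -> int:
    calls.append(x)
    return x * x


data = [1, 2, 3, 4]

# "Transformation": map() returns a lazy iterator; square() has not run yet
pipeline = map(square, data)
print(calls)   # []

# "Action": consuming the iterator forces evaluation, like collect()
result = list(pipeline)
print(result)  # [1, 4, 9, 16]
print(calls)   # [1, 2, 3, 4]
```

In the same way, `rdd.map(f)` returns a new RDD immediately. A call such as `rdd.count()` or `rdd.collect()` is what launches the job.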

Examples of Spark Transformations

- map(): analogous to FOREACH in Pig
- flatMap(): analogous to FOREACH with GENERATE FLATTEN in Pig
- filter(): analogous to FILTER in Pig
- sample()
- union(): analogous to UNION in Pig
- intersection()
- distinct(): analogous to DISTINCT in Pig
- join(): analogous to JOIN in Pig

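Examples of Spark Actions

- collect(): analogous to DUMP in Pig
- count()
- first()
- take(): analogous to LIMIT in Pig
- reduceByKey(): strictly speaking, this is a transformation rather than an action, because it returns a new RDD and is evaluated lazily
- saveAsTextFile(): analogous to STORE in Pig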

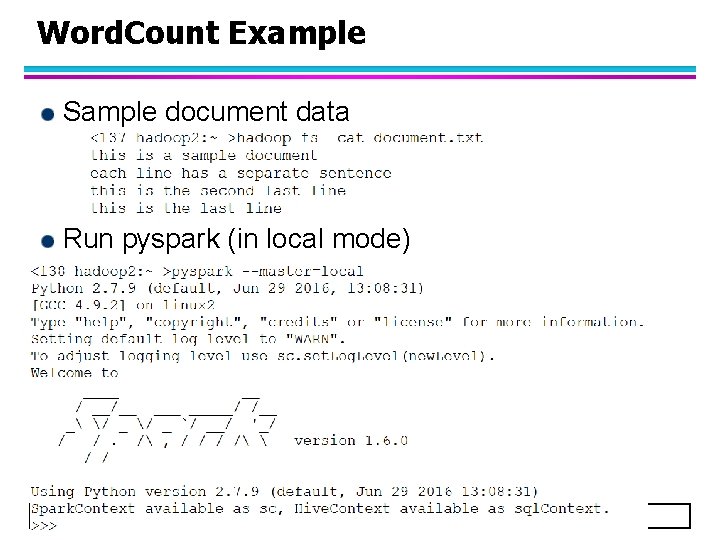

WordCount Example

- Sample document data
- Run pyspark (in local mode)

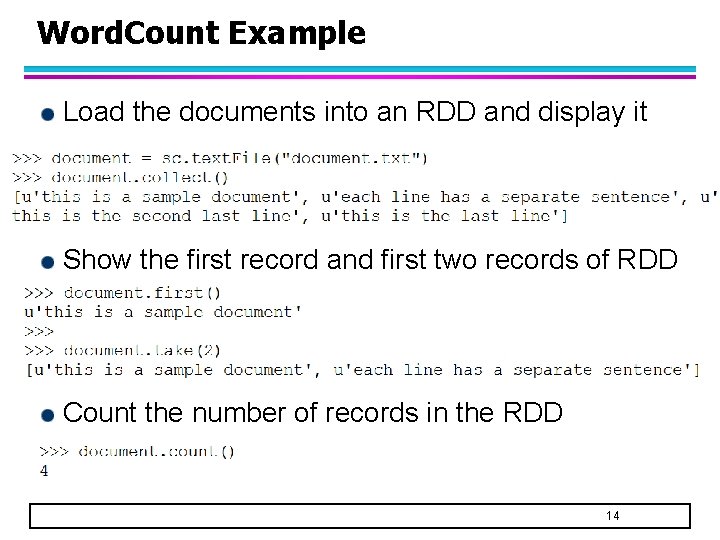

WordCount Example

- Load the documents into an RDD and display it
- Show the first record and the first two records of the RDD
- Count the number of records in the RDD

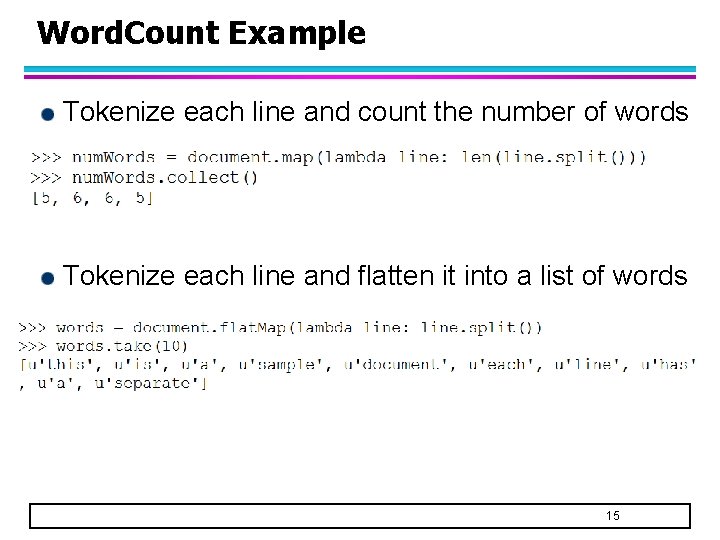

WordCount Example

- Tokenize each line and count the number of words
- Tokenize each line and flatten it into a list of words

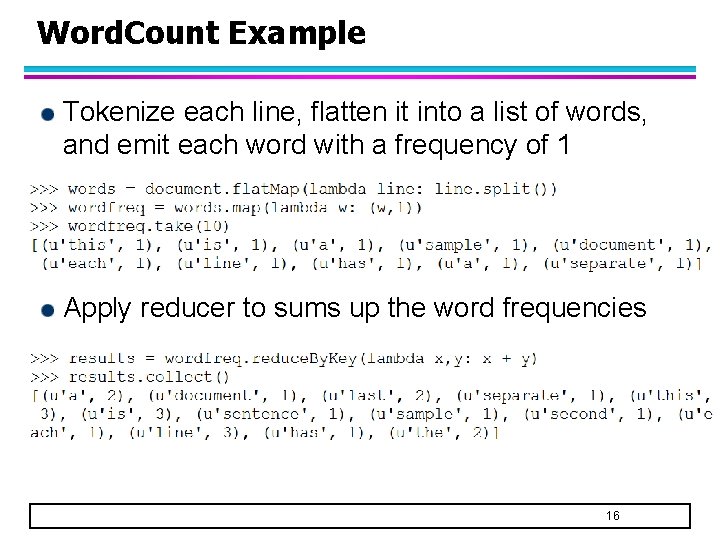

WordCount Example

- Tokenize each line, flatten it into a list of words, and emit each word with a frequency of 1
- Apply a reducer to sum up the word frequencies
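In PySpark the WordCount steps are usually chained as `sc.textFile(path).flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`. The plain-Python sketch below walks through the same pipeline one step at a time, with each step commented with the RDD operation it stands in for. The two input lines are made up.

```python
from collections import defaultdict
from typing import Dict, List

lines: List[str] = [          # hypothetical stand-in for sc.textFile(...)
    "the quick brown fox",
    "the lazy dog",
]

# flatMap(lambda line: line.split()): one output record per word
words = [w for line in lines for w in line.split()]

# map(lambda w: (w, 1)): emit each word with a frequency of 1
pairs = [(w, 1) for w in words]

# reduceByKey(lambda a, b: a + b): sum the 1s for each distinct word
counts: Dict[str, int] = defaultdict(int)
for word, one in pairs:
    counts[word] += one

print(sorted(counts.items()))
# [('brown', 1), ('dog', 1), ('fox', 1), ('lazy', 1), ('quick', 1), ('the', 2)]
```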

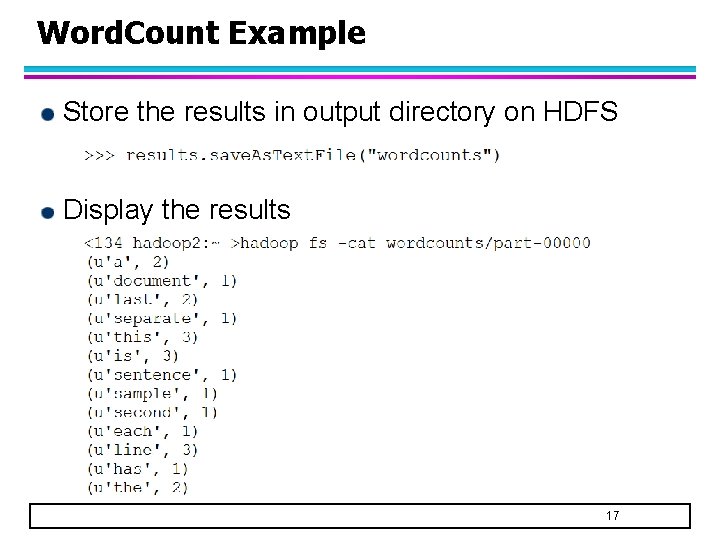

WordCount Example

- Store the results in an output directory on HDFS
- Display the results

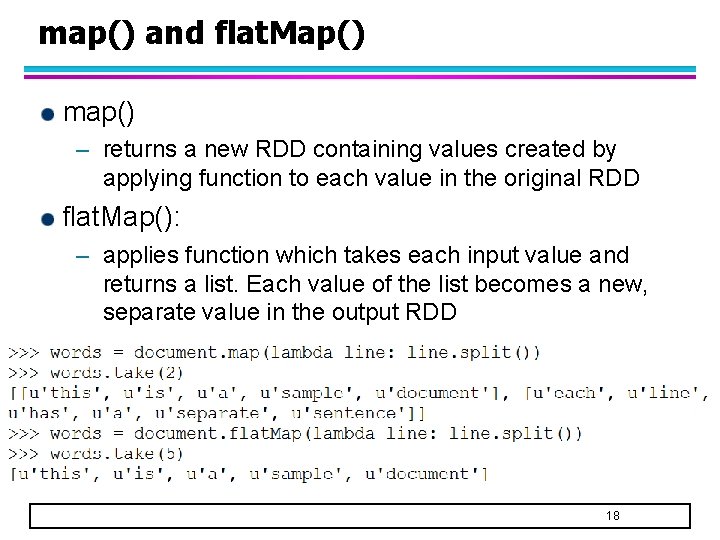

map() and flatMap()

- map(): returns a new RDD containing the values created by applying a function to each value in the original RDD.
- flatMap(): applies a function that takes each input value and returns a list. Each value of the list becomes a new, separate value in the output RDD.
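The difference between the two shows up clearly when the function returns a list. The sketch below uses list comprehensions to mimic the two operations. `rdd_map` and `rdd_flat_map` are hypothetical helpers, not PySpark functions.

```python
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def rdd_map(records: List[T], f: Callable[[T], U]) -> List[U]:
    # Exactly one output record per input record
    return [f(r) for r in records]


def rdd_flat_map(records: List[T], f: Callable[[T], Iterable[U]]) -> List[U]:
    # Each element returned by f(r) becomes its own output record
    return [u for r in records for u in f(r)]


lines = ["a b", "c d e"]
print(rdd_map(lines, str.split))       # [['a', 'b'], ['c', 'd', 'e']]
print(rdd_flat_map(lines, str.split))  # ['a', 'b', 'c', 'd', 'e']
```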

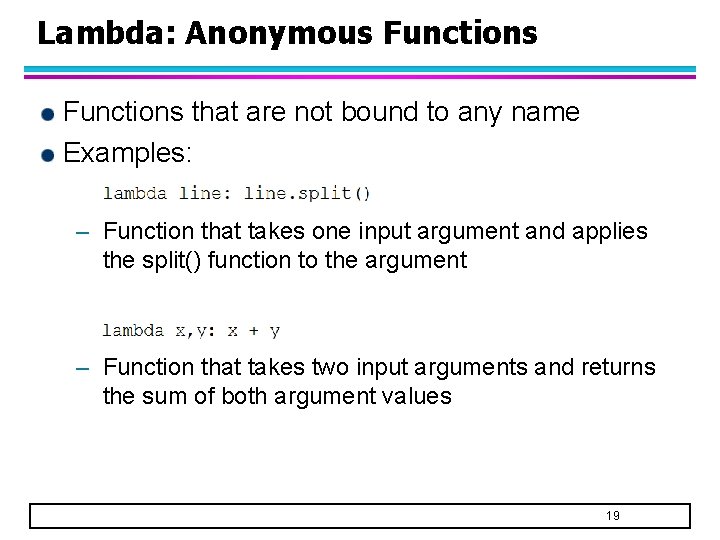

Lambda: anonymous functions that are not bound to any name

Examples:

- A function that takes one input argument and applies the split() function to it
- A function that takes two input arguments and returns the sum of both argument values
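Here are the two example lambdas written inline, the way they are usually passed to Spark operations. Python's built-in `map()` and `functools.reduce()` stand in for the RDD calls.

```python
from functools import reduce

# One argument: tokenize a string (the kind of function passed to flatMap)
tokens = list(map(lambda line: line.split(), ["to be", "or not"]))
print(tokens)  # [['to', 'be'], ['or', 'not']]

# Two arguments: return their sum (the kind of function passed to reduceByKey)
total = reduce(lambda a, b: a + b, [3, 4, 5])
print(total)   # 12
```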

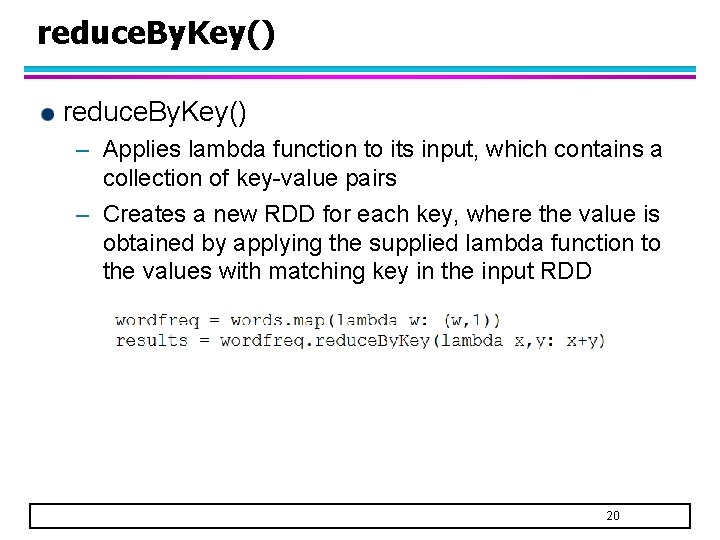

reduceByKey()

- Applies a lambda function to its input, which contains a collection of key-value pairs.
- Creates a new RDD with one record per key, where the value is obtained by applying the supplied lambda function to the values with the matching key in the input RDD.
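The semantics can be sketched in plain Python. Values that share a key are combined pairwise with the supplied two-argument function, giving one output pair per key. `reduce_by_key` is a hypothetical helper. Spark itself combines values within each partition before merging across partitions, so the function should be associative and commutative (as `+` and `max` are).

```python
from functools import reduce
from typing import Callable, Dict, Iterable, List, Tuple, TypeVar

K = TypeVar("K")
V = TypeVar("V")


def reduce_by_key(pairs: Iterable[Tuple[K, V]],
                  func: Callable[[V, V], V]) -> List[Tuple[K, V]]:
    # Group values by key, keeping first-seen key order
    groups: Dict[K, List[V]] = {}
    for key, value in pairs:
        groups.setdefault(key, []).append(value)
    # Fold each group's values with func: one output pair per distinct key
    return [(key, reduce(func, values)) for key, values in groups.items()]


pairs = [("a", 1), ("b", 4), ("a", 3), ("b", 2), ("c", 5)]
print(reduce_by_key(pairs, lambda x, y: x + y))  # [('a', 4), ('b', 6), ('c', 5)]
print(reduce_by_key(pairs, max))                 # [('a', 3), ('b', 4), ('c', 5)]
```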

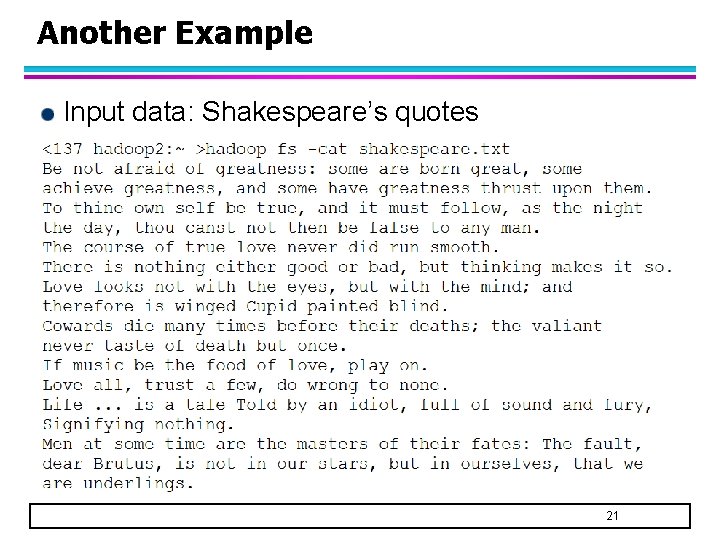

Another Example

Input data: Shakespeare’s quotes

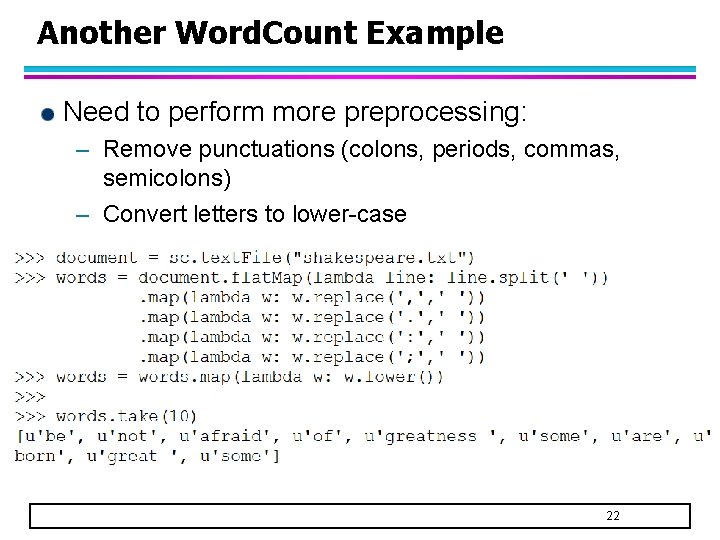

Another WordCount Example

We need to perform more preprocessing:

- Remove punctuation (colons, periods, commas, semicolons)
- Convert letters to lowercase

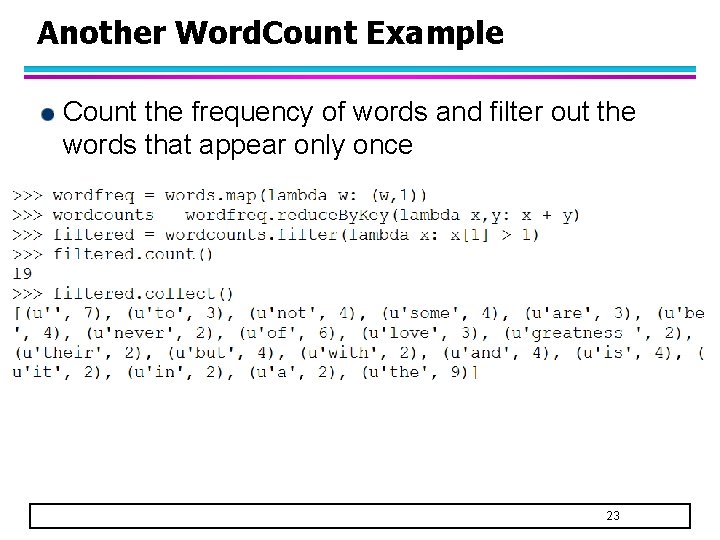

Another WordCount Example

Count the frequency of words and filter out the words that appear only once.
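The sketch below shows the extra preprocessing and the "appears more than once" filter in plain Python. In PySpark the final step would be a filter such as `counts.filter(lambda kv: kv[1] > 1)`. The two input lines are sample text chosen for illustration, not the lecture's data file. Without lowercasing, "The" and "the" would be counted separately.

```python
from collections import Counter

lines = [
    "To be, or not to be: that is the question.",
    "The lady doth protest too much, methinks.",
]

# Delete colons, periods, commas, and semicolons: the third argument of
# str.maketrans lists characters that translate() should remove
strip_punct = str.maketrans("", "", ":.,;")

# Clean and lowercase each line, then flatten it into words
words = [w for line in lines for w in line.translate(strip_punct).lower().split()]

# Count each word (map to (w, 1) + reduceByKey), then keep counts above 1
counts = Counter(words)
frequent = {w: c for w, c in counts.items() if c > 1}
print(frequent)  # {'to': 2, 'be': 2, 'the': 2}
```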

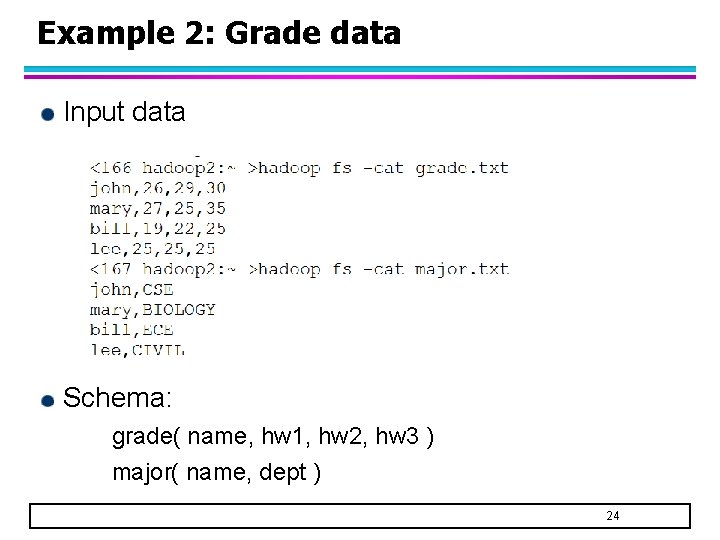

Example 2: Grade data

Input data schema:

- grade(name, hw1, hw2, hw3)
- major(name, dept)

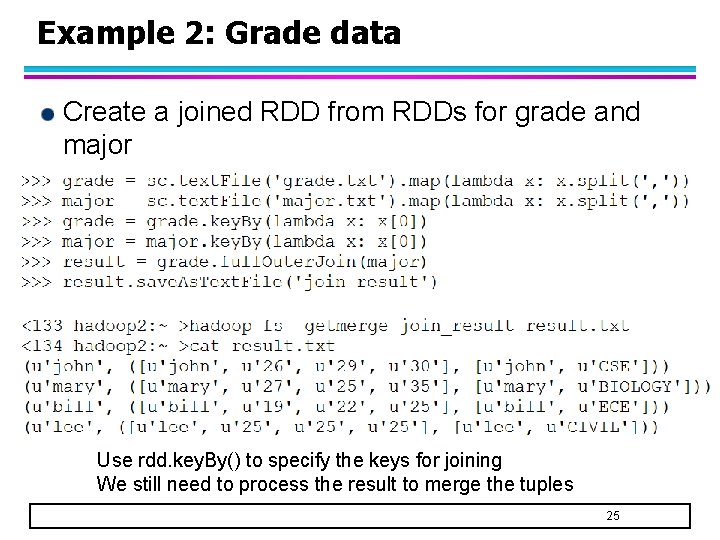

Example 2: Grade data

- Create a joined RDD from the RDDs for grade and major
- Use rdd.keyBy() to specify the keys for joining
- We still need to process the result to merge the tuples
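The plain-Python sketch below shows what `keyBy()` followed by `join()` produces for the two schemas above, and why the result still needs merging. `keyBy(f)` pairs each record `x` with its key as `(f(x), x)`. `join()` then yields `(key, (left_record, right_record))`, so the name appears three times. The student records are hypothetical.

```python
from typing import Dict, List

grade = [("alice", 80, 90, 70), ("bob", 60, 75, 85)]  # grade(name, hw1, hw2, hw3)
major = [("alice", "CSE"), ("bob", "MTH")]            # major(name, dept)

# keyBy(lambda r: r[0]): pair each record with its join key
grade_kv = [(r[0], r) for r in grade]
major_kv = [(r[0], r) for r in major]

# join(): inner join on the key -> (key, (grade_record, major_record))
major_by_name: Dict[str, List[tuple]] = {}
for k, r in major_kv:
    major_by_name.setdefault(k, []).append(r)
joined = [(k, (g, m)) for k, g in grade_kv for m in major_by_name.get(k, [])]
print(joined[0])  # ('alice', (('alice', 80, 90, 70), ('alice', 'CSE')))

# Merge the nested tuples into flat rows: (name, hw1, hw2, hw3, dept)
merged = [g + m[1:] for _, (g, m) in joined]
print(merged)  # [('alice', 80, 90, 70, 'CSE'), ('bob', 60, 75, 85, 'MTH')]
```

In PySpark the merge step would be a `map()` over the joined RDD. Spark does not guarantee the output order of `join()`.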

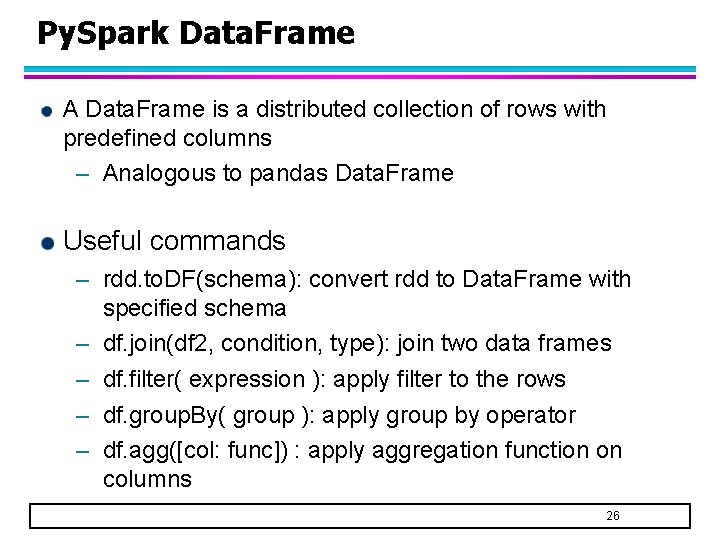

PySpark DataFrame

A DataFrame is a distributed collection of rows with predefined columns, analogous to a pandas DataFrame. Useful commands:

- rdd.toDF(schema): convert an RDD to a DataFrame with the specified schema
- df.join(df2, condition, type): join two DataFrames
- df.filter(expression): apply a filter to the rows
- df.groupBy(group): apply the group-by operator
- df.agg({col: func}): apply an aggregation function to columns

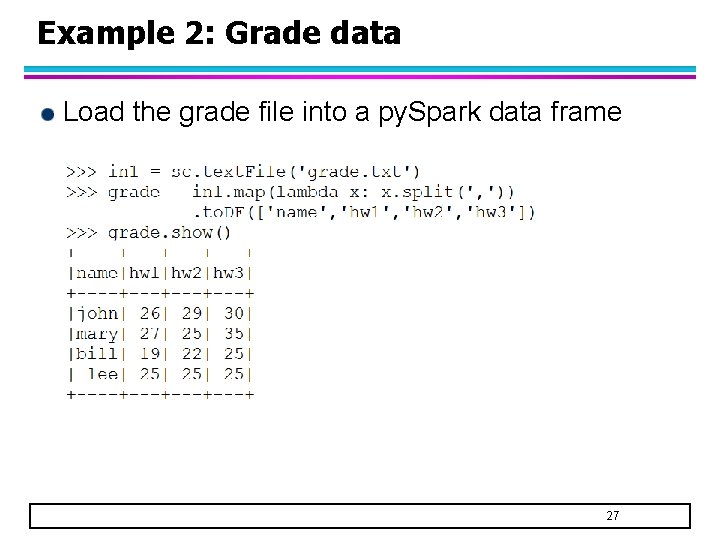

Example 2: Grade data

Load the grade file into a PySpark DataFrame

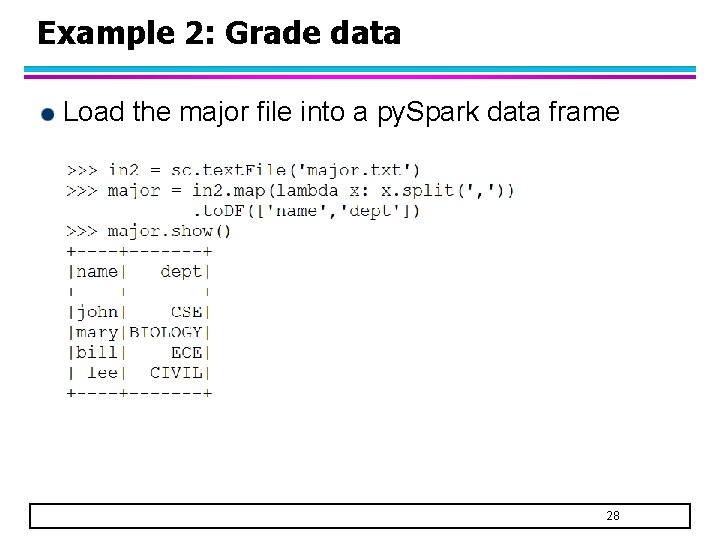

Example 2: Grade data

Load the major file into a PySpark DataFrame

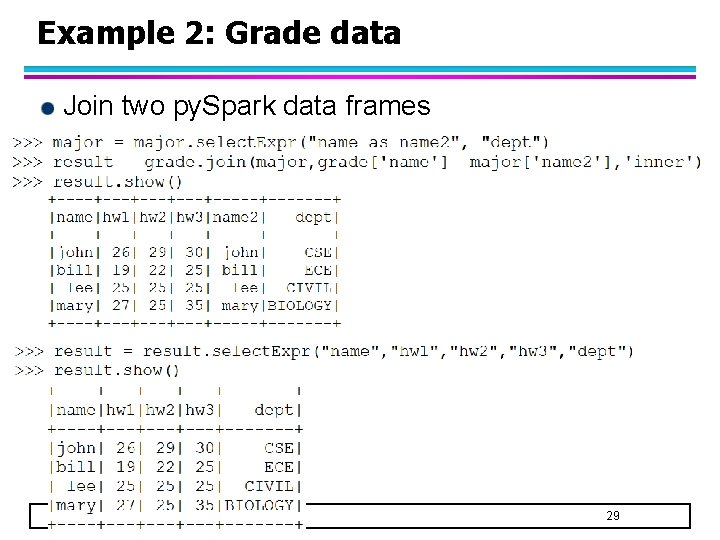

Example 2: Grade data

Join two PySpark DataFrames

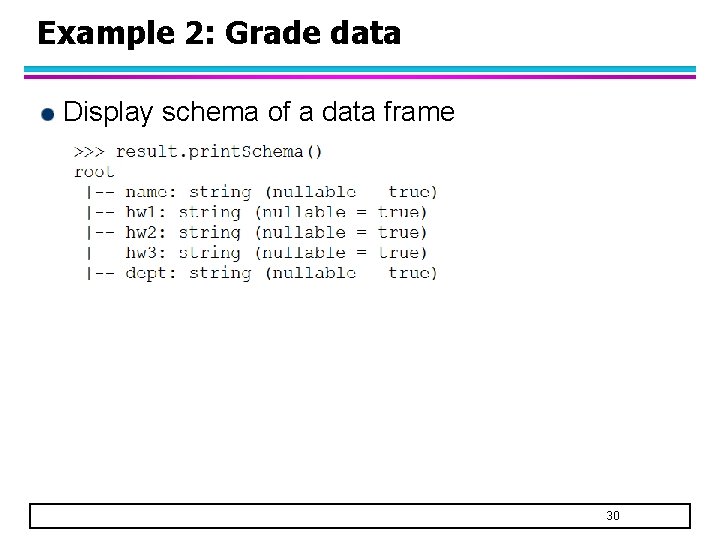

Example 2: Grade data

Display the schema of a DataFrame

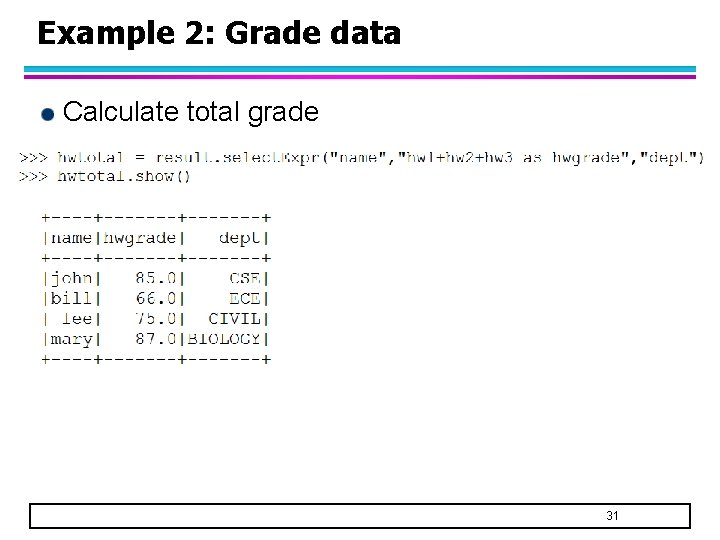

Example 2: Grade data

Calculate the total grade

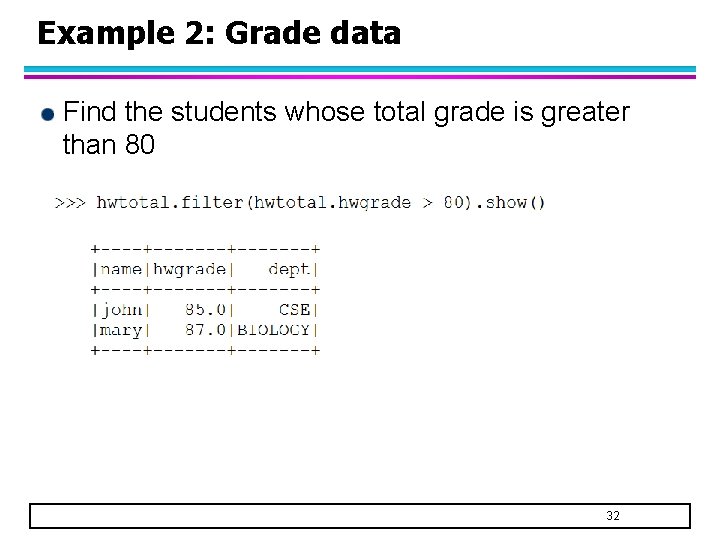

Example 2: Grade data

Find the students whose total grade is greater than 80

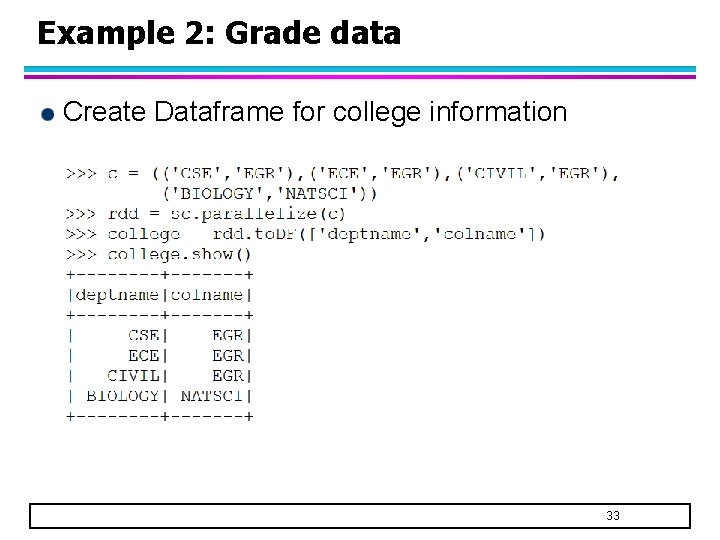

Example 2: Grade data

Create a DataFrame for college information

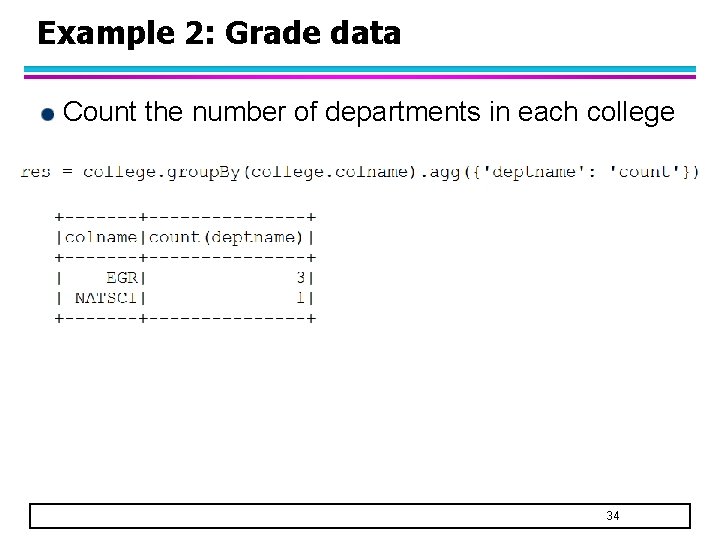

Example 2: Grade data

Count the number of departments in each college

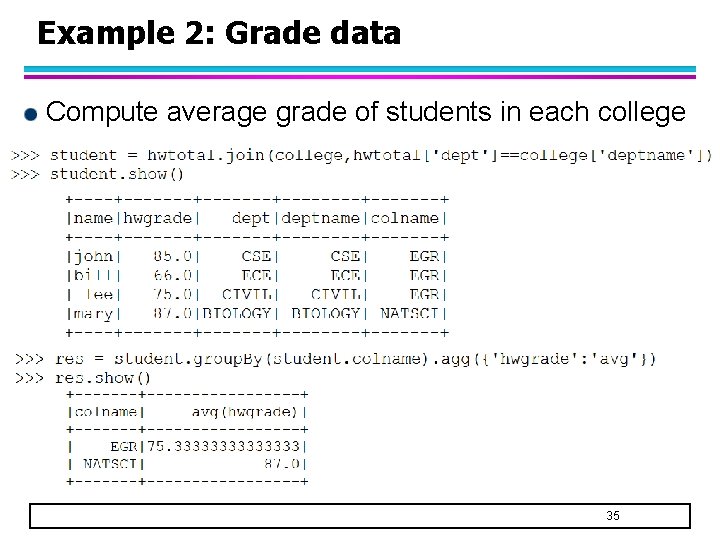

Example 2: Grade data

Compute the average grade of students in each college

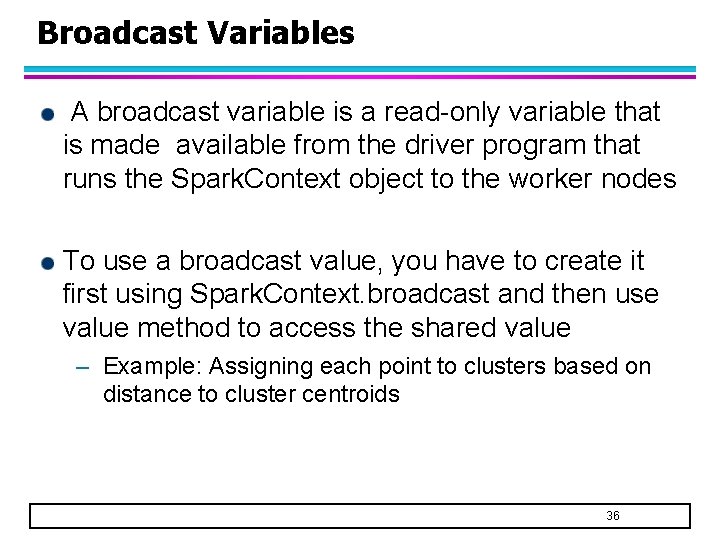

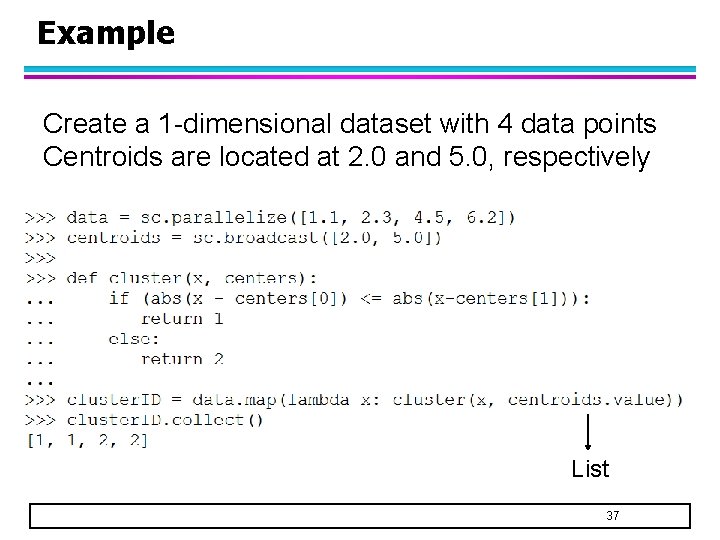
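The per-college average can be sketched in plain Python to show roughly what a call such as `df.groupBy("college").agg({"total": "avg"})` computes. The column names `college` and `total` and the rows below are assumptions, not the lecture's schema or data. The sketch carries a (sum, count) pair per group instead of averaging directly. Averages of partial averages are not correct in general, whereas sums and counts can be merged across partitions in any order.

```python
from typing import Dict, List, Tuple

rows: List[Tuple[str, str, float]] = [   # hypothetical (name, college, total)
    ("alice", "Engineering", 85.0),
    ("bob", "Engineering", 75.0),
    ("carol", "Natural Science", 90.0),
]

# Accumulate (sum, count) per college
acc: Dict[str, Tuple[float, int]] = {}
for _, college, total in rows:
    s, c = acc.get(college, (0.0, 0))
    acc[college] = (s + total, c + 1)

# Divide only at the end, once all partial sums and counts are combined
averages = {college: s / c for college, (s, c) in acc.items()}
print(averages)  # {'Engineering': 80.0, 'Natural Science': 90.0}
```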

Broadcast Variables

A broadcast variable is a read-only variable that the driver program (which runs the SparkContext object) makes available to the worker nodes. To use a broadcast value, first create it with SparkContext.broadcast, then read the shared value through its value attribute.

- Example: assigning each point to a cluster based on its distance to the cluster centroids

Example

Create a 1-dimensional dataset with 4 data points. The two centroids are located at 2.0 and 5.0.
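The per-point work in this example can be sketched in plain Python. In PySpark the centroids would be shared once with `bc = sc.broadcast(centroids)` and read inside the mapped lambda as `bc.value`. Here a plain tuple plays the role of the broadcast value. The four data points are hypothetical (the slide's values are not reproduced here), and `closest` is a hypothetical helper.

```python
from typing import Sequence, Tuple

centroids: Tuple[float, ...] = (2.0, 5.0)  # read-only, like a broadcast value
points = [1.0, 3.0, 4.0, 6.0]              # hypothetical 1-D data points


def closest(point: float, centers: Sequence[float]) -> int:
    """Return the index of the centroid nearest to point (1-D distance)."""
    return min(range(len(centers)), key=lambda i: abs(point - centers[i]))


# Stand-in for rdd.map(lambda p: (p, closest(p, bc.value))).collect()
assignments = [(p, closest(p, centroids)) for p in points]
print(assignments)  # [(1.0, 0), (3.0, 0), (4.0, 1), (6.0, 1)]
```

Broadcasting ships the centroids to each worker once, instead of sending a copy with every task that uses them.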
