CSE 482: Big Data Analysis
Lecture 19 (Hadoop I/O)

Outline of the Lecture
- Hadoop configuration, generic options, and parameter passing
- InputFormat and OutputFormat
- Sequence files
- Data compression

Configuration
- The properties of Hadoop resources are stored in Hadoop configuration files, which are XML files:

      <configuration>
        <property>
          <name>…</name>
          <value>…</value>
          <description>…</description>
        </property>
        …
      </configuration>

- Default properties are defined in core-default.xml, hdfs-default.xml, and mapred-default.xml
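To make the file layout above concrete, here is a minimal sketch that reads `<property>` entries into a map using only the Java standard library. It is not Hadoop's own `Configuration` loader; the class name `ConfigXmlSketch` and the method `parse` are hypothetical names chosen for illustration.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper (not part of Hadoop): reads name/value pairs
// from a Hadoop-style configuration document.
final class ConfigXmlSketch {

    /** Parses <property><name/><value/></property> entries into an ordered map. */
    static Map<String, String> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Map<String, String> props = new LinkedHashMap<>();
            NodeList nodes = doc.getElementsByTagName("property");
            for (int i = 0; i < nodes.getLength(); i++) {
                Element p = (Element) nodes.item(i);
                String name = p.getElementsByTagName("name").item(0).getTextContent().trim();
                String value = p.getElementsByTagName("value").item(0).getTextContent().trim();
                props.put(name, value);
            }
            return props;
        } catch (Exception e) {
            // Wrap parser errors so callers do not need checked exceptions.
            throw new IllegalArgumentException("not a valid configuration document", e);
        }
    }

    public static void main(String[] args) {
        String xml = "<configuration>"
                + "<property><name>mapreduce.job.reduces</name><value>1</value></property>"
                + "<property><name>mapreduce.task.timeout</name><value>600000</value></property>"
                + "</configuration>";
        parse(xml).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```

In a real Hadoop program the `Configuration` class does this loading for you; the sketch only shows how the XML maps to key/value properties.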

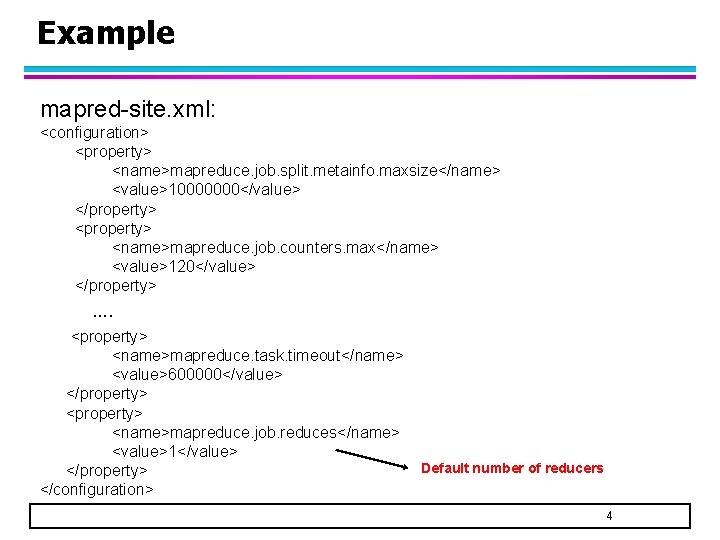

Example mapred-site.xml:

    <configuration>
      <property>
        <name>mapreduce.job.split.metainfo.maxsize</name>
        <value>10000000</value>
      </property>
      <property>
        <name>mapreduce.job.counters.max</name>
        <value>120</value>
      </property>
      …
      <property>
        <name>mapreduce.task.timeout</name>
        <value>600000</value>
      </property>
      <property>
        <name>mapreduce.job.reduces</name>
        <value>1</value>  <!-- default number of reducers -->
      </property>
    </configuration>

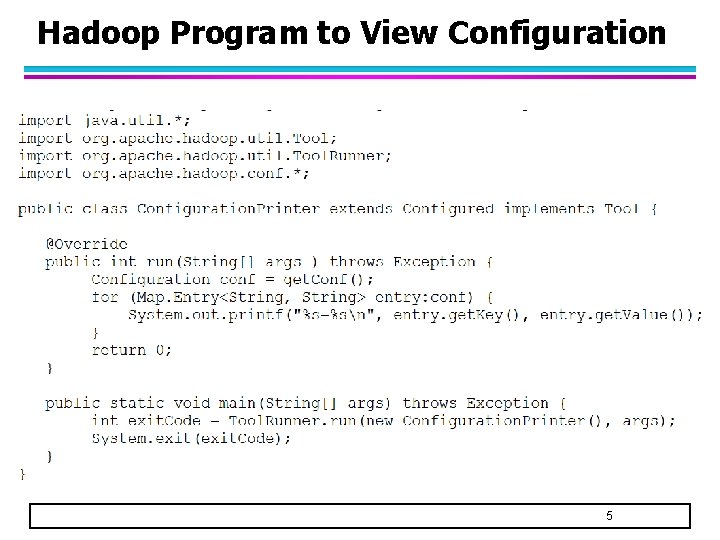

Hadoop Program to View Configuration

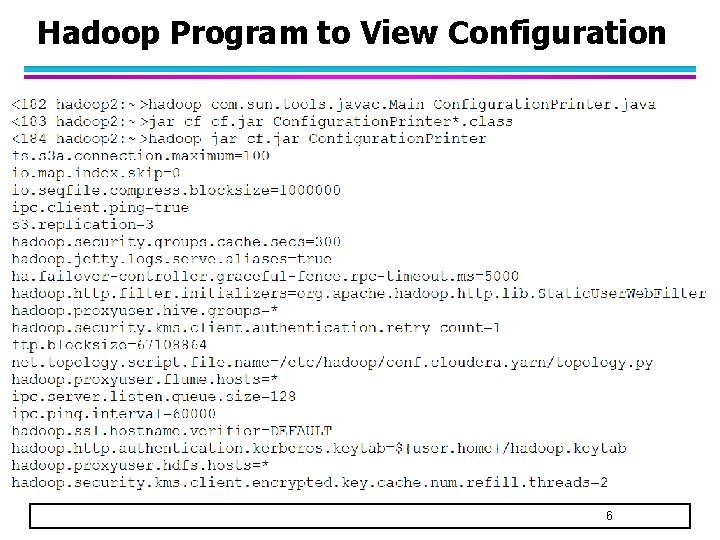

Hadoop Program to View Configuration (continued)

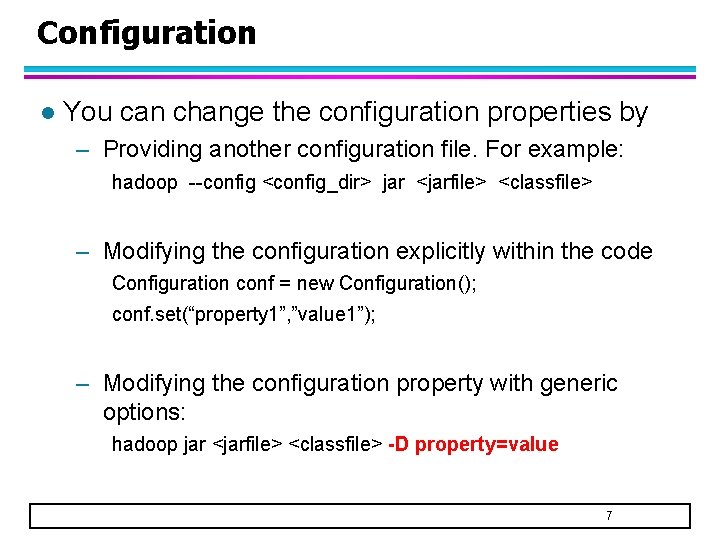

Configuration
- You can change the configuration properties by:
  - Providing another set of configuration files. For example:

        hadoop --config <config_dir> jar <jarfile> <classfile>

  - Modifying the configuration explicitly within the code:

        Configuration conf = new Configuration();
        conf.set("property1", "value1");

  - Modifying the configuration property with generic options:

        hadoop jar <jarfile> <classfile> -D property=value

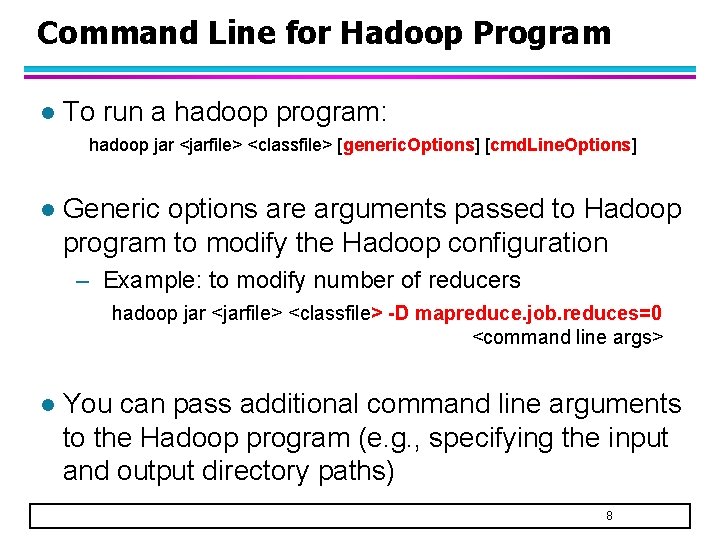

Command Line for Hadoop Program
- To run a Hadoop program:

      hadoop jar <jarfile> <classfile> [genericOptions] [cmdLineOptions]

- Generic options are arguments passed to a Hadoop program to modify the Hadoop configuration
  - Example: to modify the number of reducers

        hadoop jar <jarfile> <classfile> -D mapreduce.job.reduces=0 <command line args>

- You can pass additional command line arguments to the Hadoop program (e.g., specifying the input and output directory paths)
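The split between generic options and ordinary command line arguments can be illustrated with a small standard-library sketch. This is not Hadoop's own option parser. It only mimics the `-D property=value` form shown above, and `GenericOptionsSketch` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration: separates "-D key=value" pairs from the
// remaining program arguments (e.g. input and output paths).
final class GenericOptionsSketch {
    final Map<String, String> properties = new LinkedHashMap<>();
    final List<String> remainingArgs = new ArrayList<>();

    GenericOptionsSketch(String[] args) {
        for (int i = 0; i < args.length; i++) {
            if (args[i].equals("-D") && i + 1 < args.length) {
                String pair = args[++i];          // consume the "key=value" token
                int eq = pair.indexOf('=');
                if (eq > 0) {
                    properties.put(pair.substring(0, eq), pair.substring(eq + 1));
                }
                // A token without '=' is silently ignored in this sketch.
            } else {
                remainingArgs.add(args[i]);       // ordinary command line argument
            }
        }
    }

    public static void main(String[] args) {
        GenericOptionsSketch parsed = new GenericOptionsSketch(
                new String[] {"-D", "mapreduce.job.reduces=0", "input", "output"});
        System.out.println("properties: " + parsed.properties);
        System.out.println("remaining:  " + parsed.remainingArgs);
    }
}
```

In an actual job, the properties would end up in the job's `Configuration`, and only the remaining arguments would reach the program's own argument handling.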

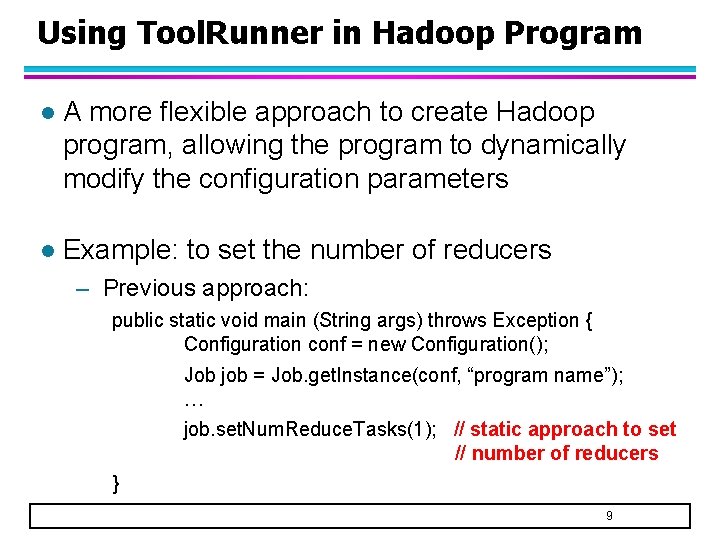

Using ToolRunner in Hadoop Program
- A more flexible approach to creating a Hadoop program, allowing the program to modify the configuration parameters dynamically
- Example: to set the number of reducers
  - Previous approach:

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "program name");
            …
            job.setNumReduceTasks(1); // static approach to set the number of reducers
        }

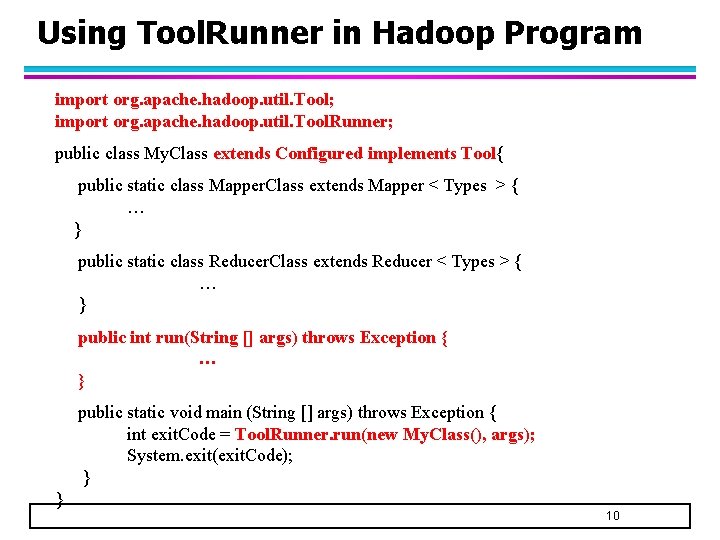

Using ToolRunner in Hadoop Program

    import org.apache.hadoop.conf.Configured;
    import org.apache.hadoop.util.Tool;
    import org.apache.hadoop.util.ToolRunner;

    public class MyClass extends Configured implements Tool {
        public static class MapperClass extends Mapper<Types> { … }
        public static class ReducerClass extends Reducer<Types> { … }

        public int run(String[] args) throws Exception { … }

        public static void main(String[] args) throws Exception {
            int exitCode = ToolRunner.run(new MyClass(), args);
            System.exit(exitCode);
        }
    }

Main Program

    public int run(String[] args) throws Exception {
        if (args.length != 2) {
            System.err.printf("Usage: %s [generic options] <input> <output>\n",
                    getClass().getSimpleName());
            ToolRunner.printGenericCommandUsage(System.err);
            return -1;
        }
        Job job = Job.getInstance(getConf());                    // create a MapReduce job
        job.setJarByClass(getClass());                           // specify the source program
        job.setJobName("Name of the class/program");             // give the program a name
        job.setMapperClass(MapperClass.class);                   // set the mapper class
        job.setReducerClass(ReducerClass.class);                 // set the reducer class
        job.setOutputKeyClass(<ClassType>);                      // type of output key
        job.setOutputValueClass(<ClassType>);                    // type of output value
        FileInputFormat.addInputPath(job, new Path(args[0]));    // input data directory
        FileOutputFormat.setOutputPath(job, new Path(args[1]));  // output directory
        return job.waitForCompletion(true) ? 0 : 1;
    }

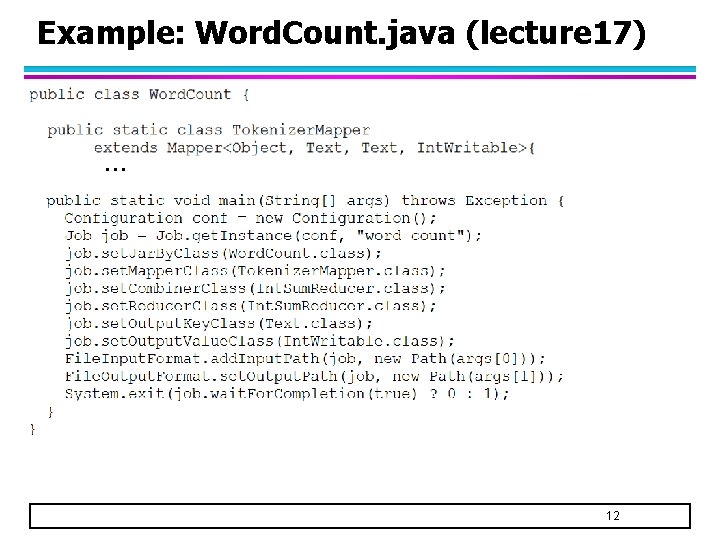

Example: WordCount.java (Lecture 17) …

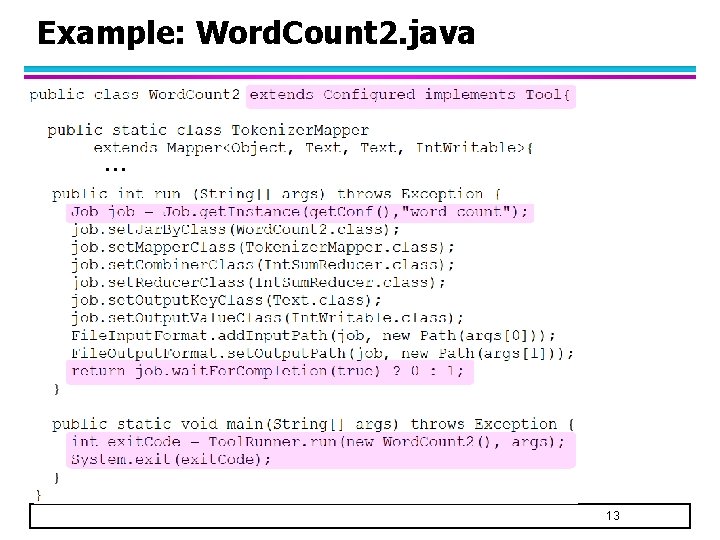

Example: WordCount2.java …

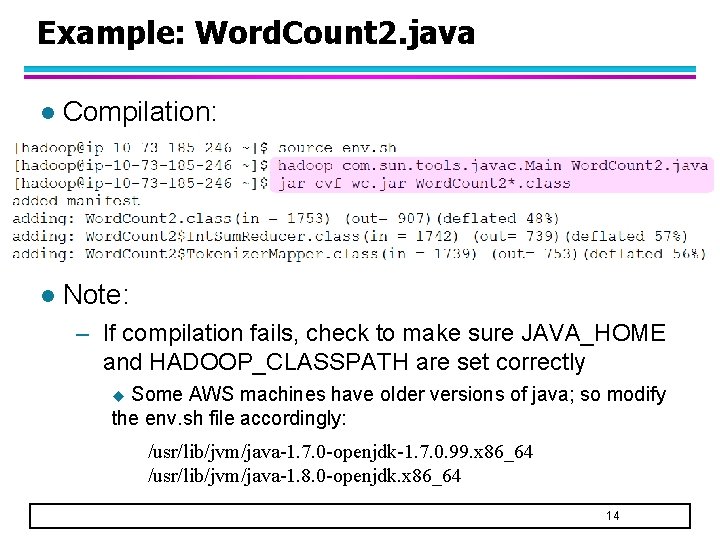

Example: WordCount2.java
- Compilation:
- Note:
  - If compilation fails, check that JAVA_HOME and HADOOP_CLASSPATH are set correctly
  - Some AWS machines have older versions of Java, so modify the env.sh file accordingly:
    - /usr/lib/jvm/java-1.7.0-openjdk-1.7.0.99.x86_64 → /usr/lib/jvm/java-1.8.0-openjdk.x86_64

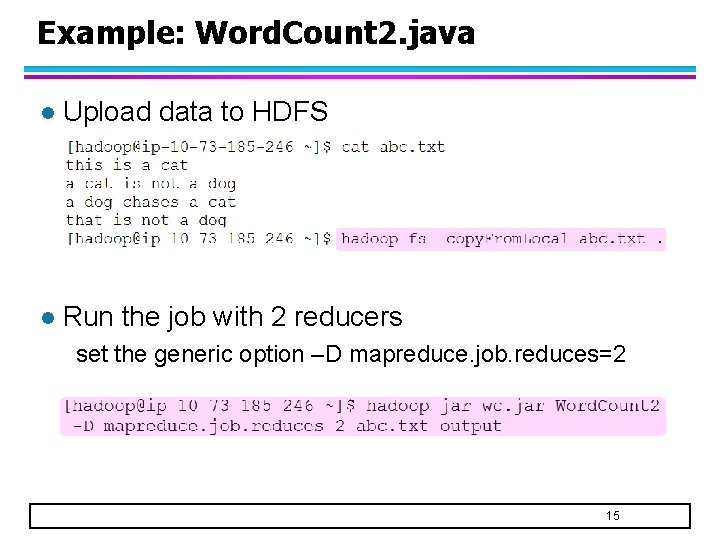

Example: WordCount2.java
- Upload data to HDFS
- Run the job with 2 reducers by setting the generic option -D mapreduce.job.reduces=2

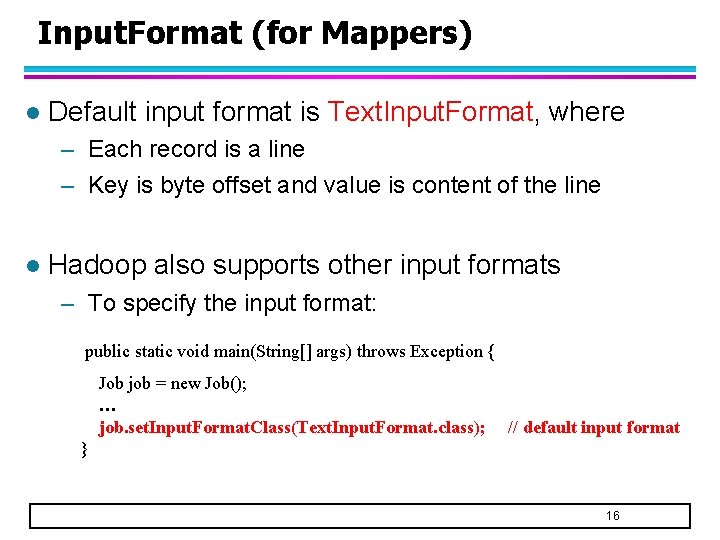

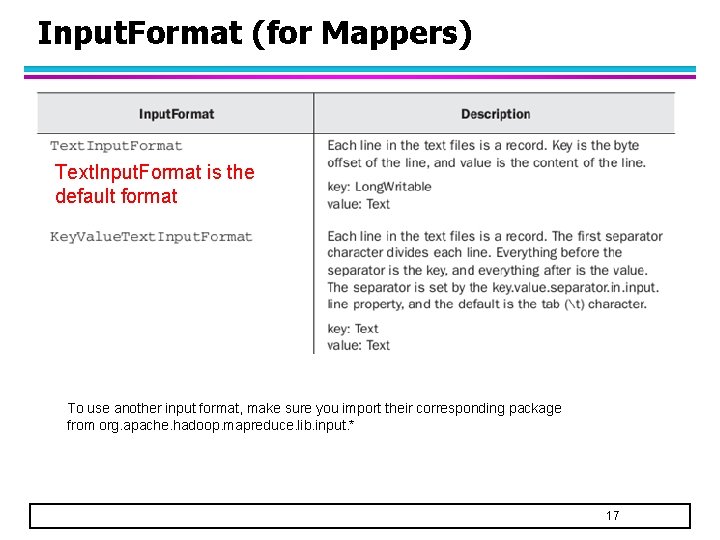

InputFormat (for Mappers)
- Default input format is TextInputFormat, where
  - Each record is a line
  - Key is the byte offset and value is the content of the line
- Hadoop also supports other input formats
  - To specify the input format:

        public static void main(String[] args) throws Exception {
            Job job = new Job();
            …
            job.setInputFormatClass(TextInputFormat.class); // default input format
        }
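The (byte offset, line) records described above can be reproduced on a small in-memory input. The sketch below uses only the Java standard library and does not call Hadoop. `TextRecordsSketch` is a hypothetical name, and the input is assumed to use `\n` line endings.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of the key/value pairs a line-oriented
// input format hands to the mapper: key = byte offset, value = line text.
final class TextRecordsSketch {

    /** Returns byte offset -> line content, assuming '\n' line endings. */
    static Map<Long, String> records(byte[] data) {
        Map<Long, String> out = new LinkedHashMap<>();
        int start = 0;
        for (int i = 0; i <= data.length; i++) {
            boolean atEnd = (i == data.length);
            // Emit a record at each newline, and at end of input if a
            // final line without a trailing newline is still pending.
            if (atEnd ? start < data.length : data[i] == '\n') {
                out.put((long) start,
                        new String(data, start, i - start, StandardCharsets.UTF_8));
                start = i + 1;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        byte[] data = "hello world\nbye\n".getBytes(StandardCharsets.UTF_8);
        records(data).forEach((offset, line) ->
                System.out.println("key=" + offset + " value=" + line));
    }
}
```

Note that the keys are byte offsets, not line numbers, so they depend on the encoded length of the preceding lines.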

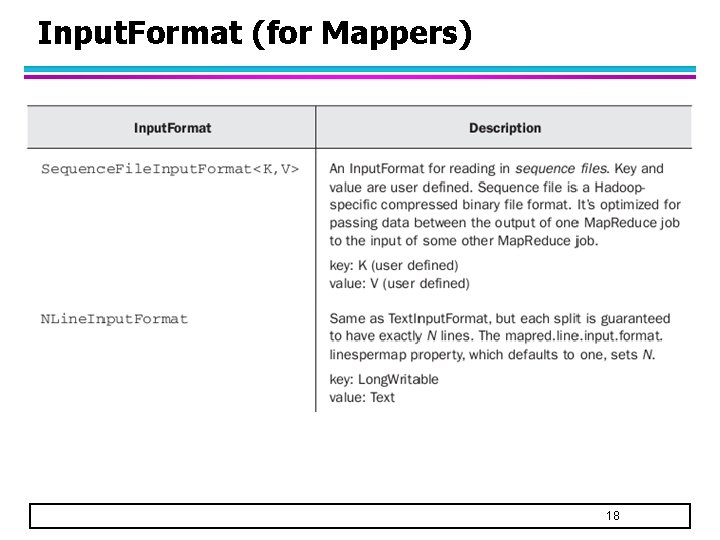

InputFormat (for Mappers)
TextInputFormat is the default format. To use another input format, make sure you import the corresponding class from the package org.apache.hadoop.mapreduce.lib.input.*

InputFormat (for Mappers)

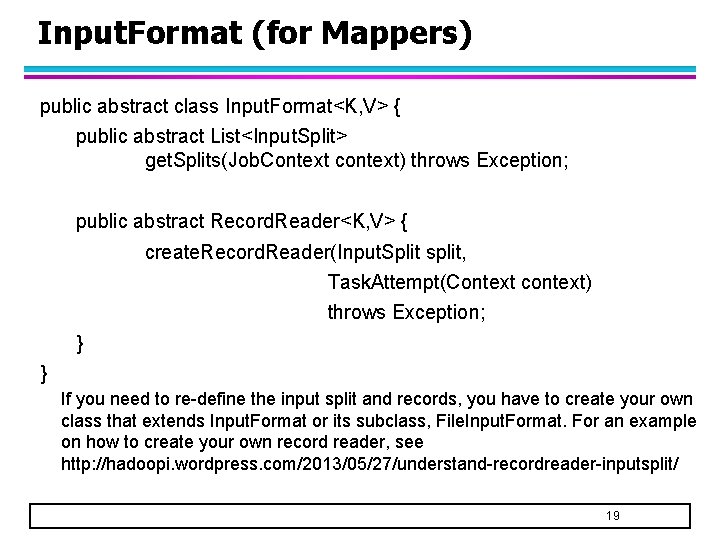

InputFormat (for Mappers)

    public abstract class InputFormat<K, V> {
        public abstract List<InputSplit> getSplits(JobContext context)
                throws IOException, InterruptedException;

        public abstract RecordReader<K, V> createRecordReader(InputSplit split,
                TaskAttemptContext context)
                throws IOException, InterruptedException;
    }

If you need to redefine the input splits and records, you have to create your own class that extends InputFormat or its subclass, FileInputFormat. For an example of how to create your own record reader, see http://hadoopi.wordpress.com/2013/05/27/understand-recordreader-inputsplit/

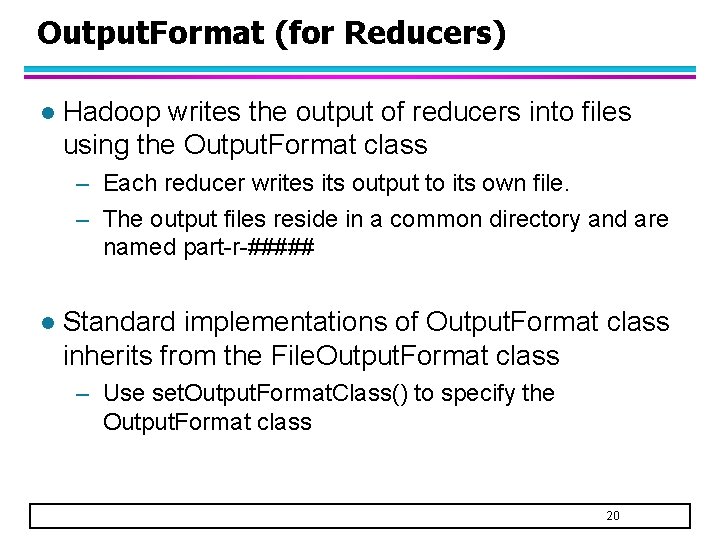

OutputFormat (for Reducers)
- Hadoop writes the output of reducers into files using the OutputFormat class
  - Each reducer writes its output to its own file
  - The output files reside in a common directory and are named part-r-#####
- Standard implementations of the OutputFormat class inherit from the FileOutputFormat class
  - Use setOutputFormatClass() to specify the OutputFormat class
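As a rough sketch of how keys end up in the per-reducer files described above, the code below applies the formula used by Hadoop's default hash partitioner and formats the matching `part-r-#####` file name. Plain Java `String` keys stand in for Hadoop's `Text` keys (whose hash codes differ), and the class name `ReducerOutputSketch` is hypothetical.

```java
import java.util.Locale;

// Hypothetical illustration: which reducer (and output file) a key goes to.
final class ReducerOutputSketch {

    /** Same formula as Hadoop's default hash partitioner. */
    static int partition(Object key, int numReduceTasks) {
        // Mask the sign bit so the result is never negative.
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    /** Output file name for a given reducer index, e.g. part-r-00000. */
    static String partFileName(int partition) {
        return String.format(Locale.ROOT, "part-r-%05d", partition);
    }

    public static void main(String[] args) {
        int numReducers = 2;
        for (String word : new String[] {"hadoop", "big", "data"}) {
            int p = partition(word, numReducers);
            System.out.println(word + " -> " + partFileName(p));
        }
    }
}
```

With a single reducer every key maps to partition 0, which is why a default job produces just one output file.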

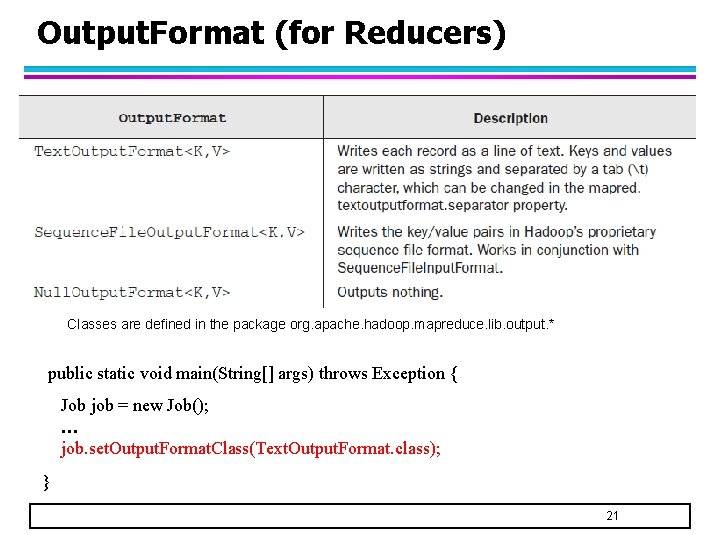

OutputFormat (for Reducers)
Classes are defined in the package org.apache.hadoop.mapreduce.lib.output.*

    public static void main(String[] args) throws Exception {
        Job job = new Job();
        …
        job.setOutputFormatClass(TextOutputFormat.class);
    }

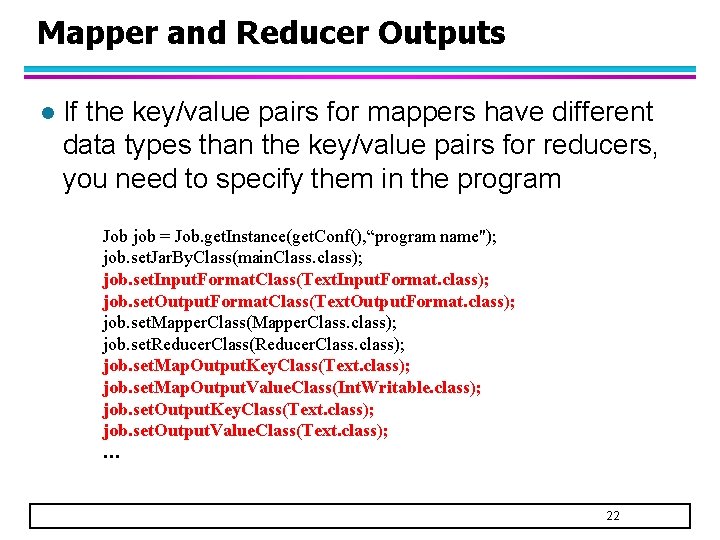

Mapper and Reducer Outputs
- If the key/value pairs for mappers have different data types than the key/value pairs for reducers, you need to specify them in the program

      Job job = Job.getInstance(getConf(), "program name");
      job.setJarByClass(mainClass.class);
      job.setInputFormatClass(TextInputFormat.class);
      job.setOutputFormatClass(TextOutputFormat.class);
      job.setMapperClass(MapperClass.class);
      job.setReducerClass(ReducerClass.class);
      job.setMapOutputKeyClass(Text.class);
      job.setMapOutputValueClass(IntWritable.class);
      job.setOutputKeyClass(Text.class);
      job.setOutputValueClass(Text.class);
      …

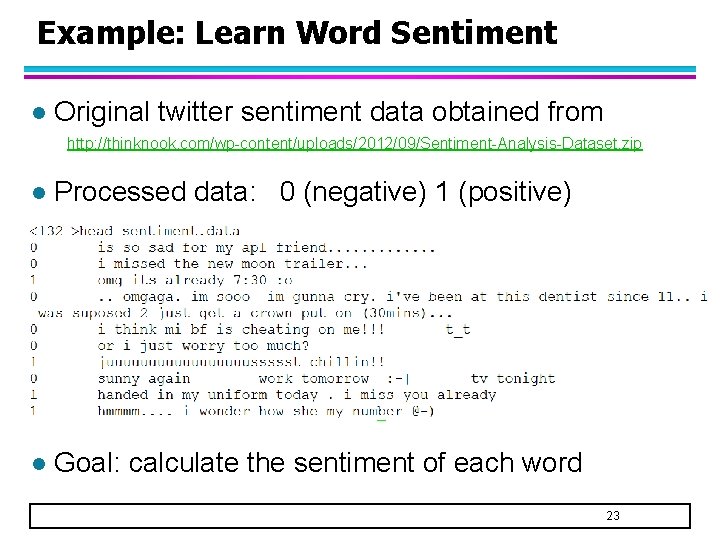

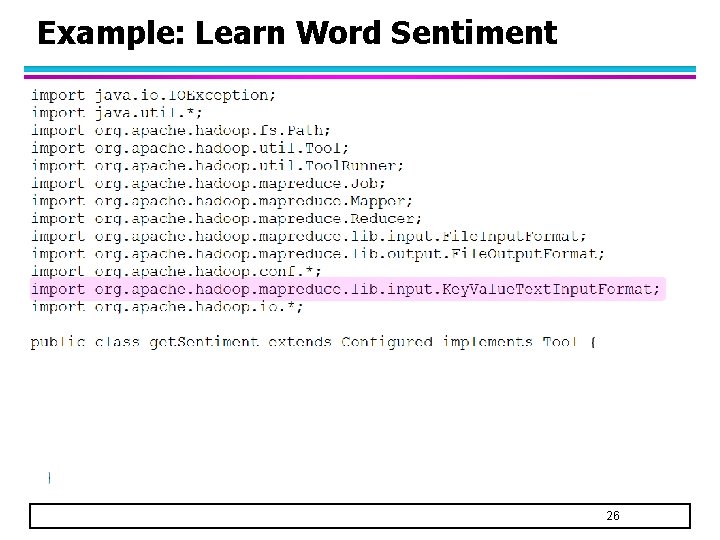

Example: Learn Word Sentiment
- Original Twitter sentiment data obtained from http://thinknook.com/wp-content/uploads/2012/09/Sentiment-Analysis-Dataset.zip
- Processed data: 0 (negative), 1 (positive)
- Goal: calculate the sentiment of each word

Example: Learn Word Sentiment
- Thresholds are set dynamically

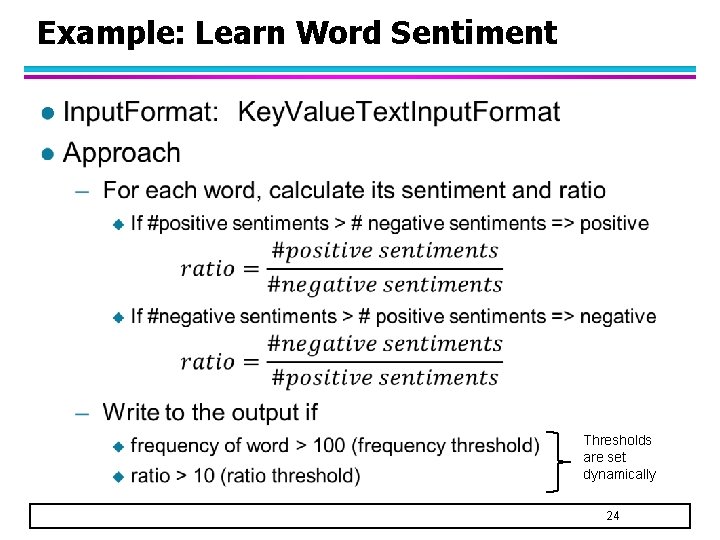

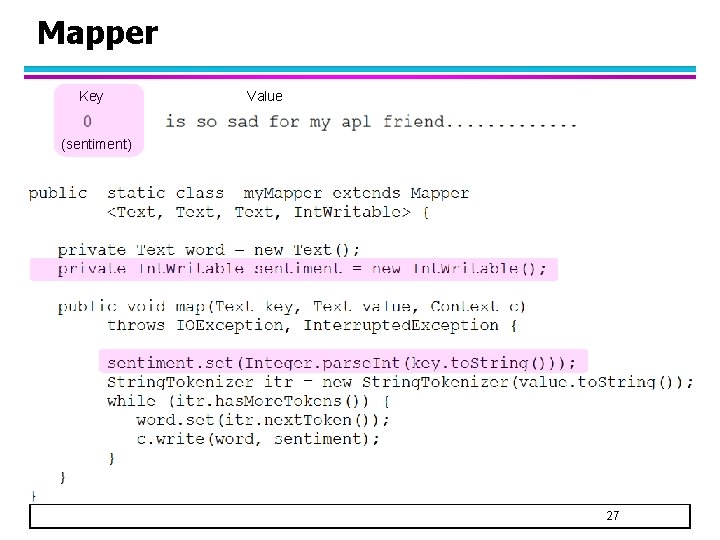

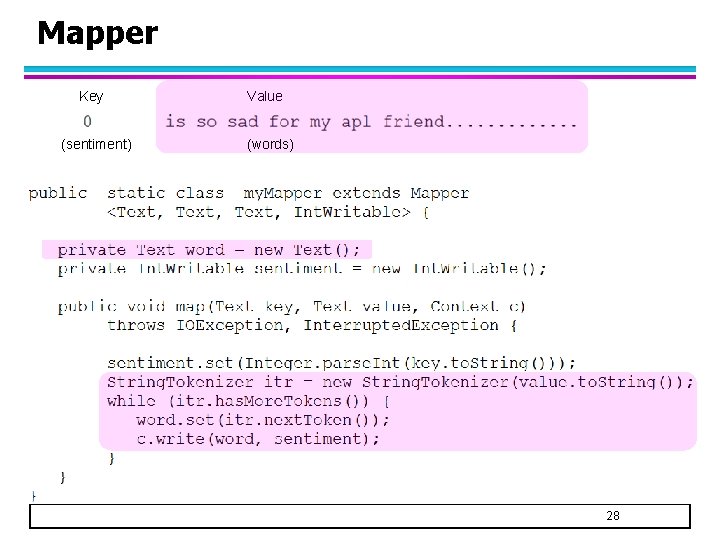

Pass Parameters to Mapper/Reducer
- How to pass parameters to the mapper/reducer?

      public static class mapperClass extends Mapper<Types> {
          public void map(Type key, Type value, Context context)
                  throws IOException, InterruptedException {
              …
          }
      }

      public static class IntSumReducer extends Reducer<Types> {
          public void reduce(Type key, Iterable<Type> values, Context context)
                  throws IOException, InterruptedException {
              …
          }
      }

- Context can be used to obtain generic option parameters (for example, through context.getConfiguration())

Example: Learn Word Sentiment

Mapper
- Key
- Value (sentiment)

Mapper
- Key (sentiment)
- Value (words)

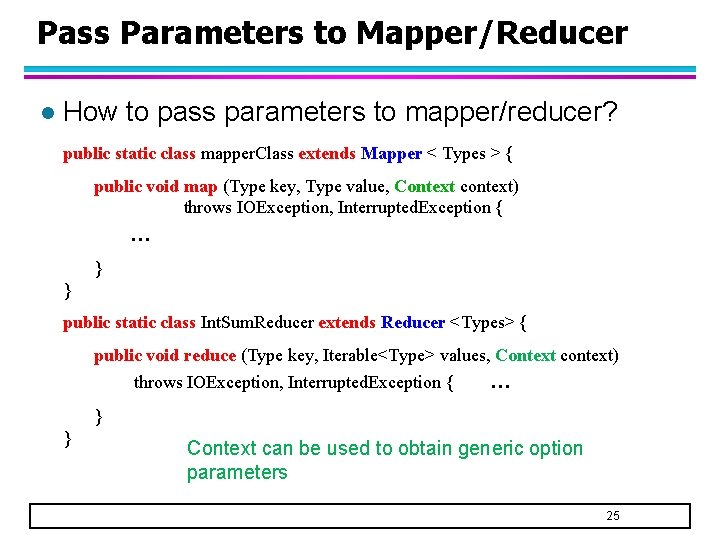

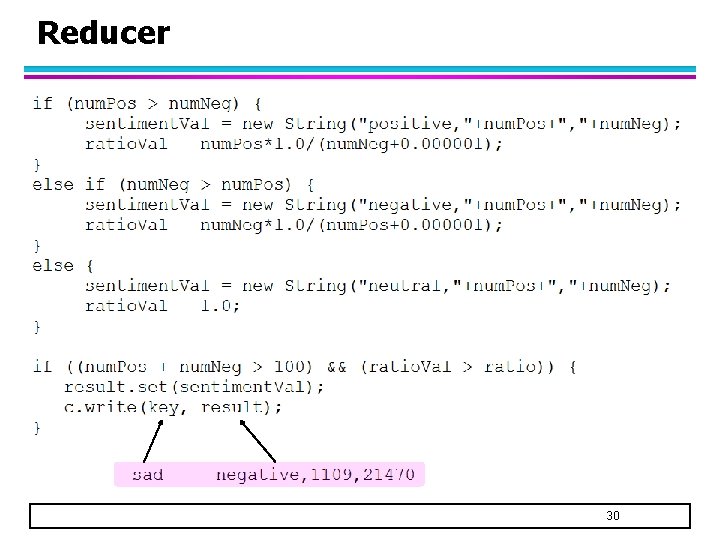

Reducer
sad [0 0 0 1 0 0 0]
Program execution:

    hadoop jar sent.jar getSentiment -D threshold=100 -D ratio=10 …

Reducer

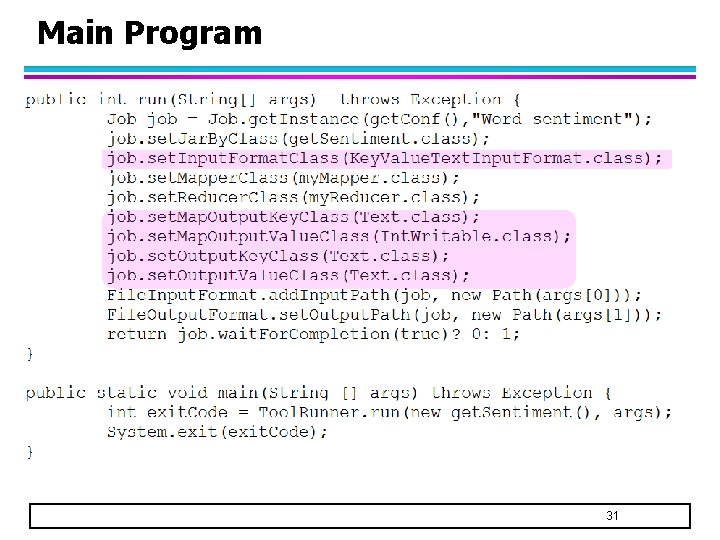

Main Program

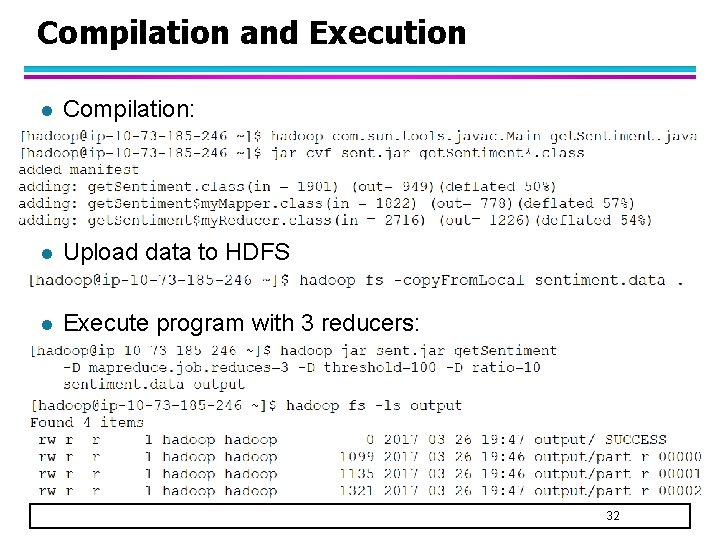

Compilation and Execution
- Compilation:
- Upload data to HDFS
- Execute program with 3 reducers:

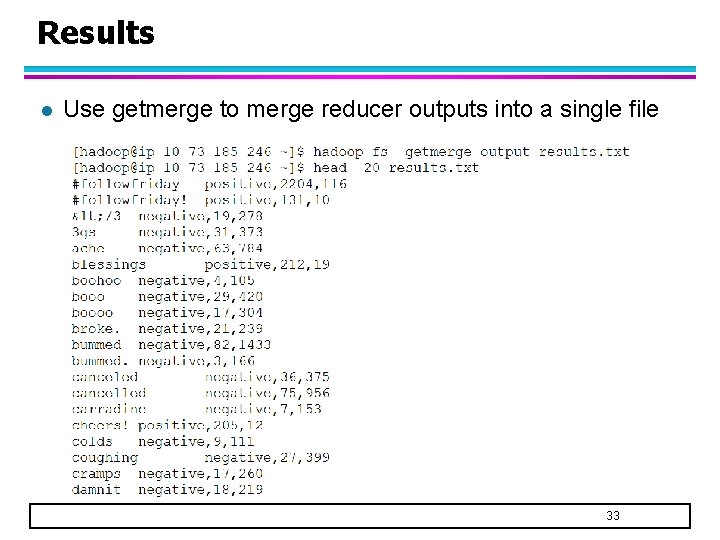

Results
- Use getmerge to merge reducer outputs into a single file

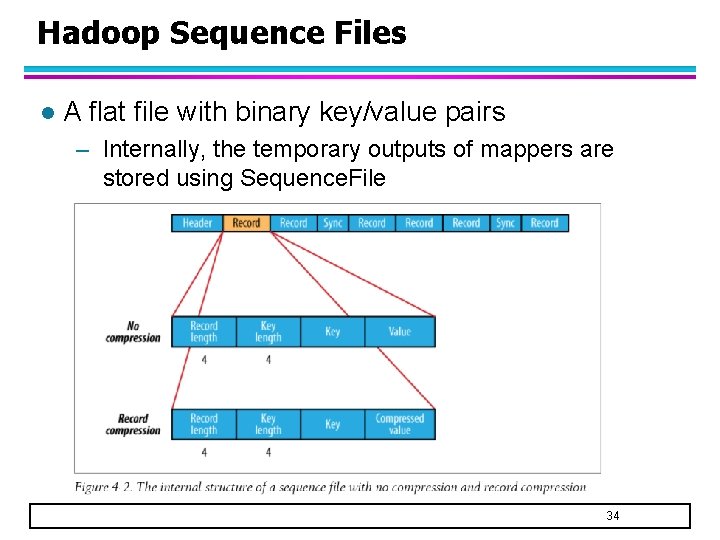

Hadoop Sequence Files
- A flat file with binary key/value pairs
  - Internally, the temporary outputs of mappers are stored using SequenceFile

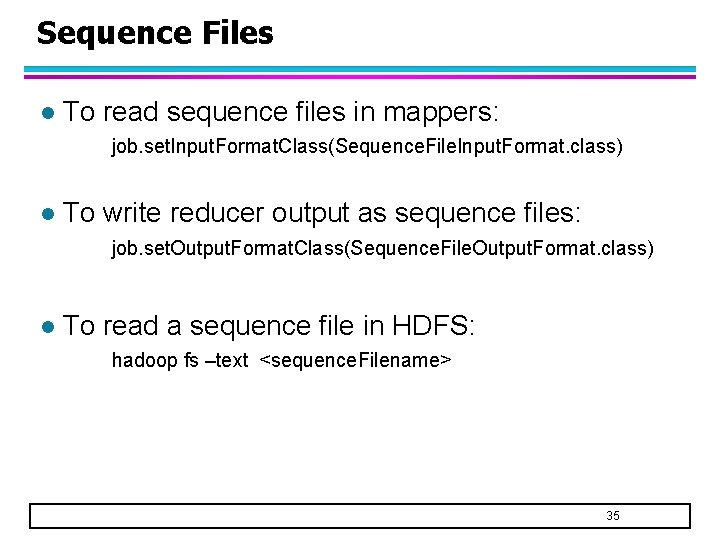

Sequence Files
- To read sequence files in mappers:

      job.setInputFormatClass(SequenceFileInputFormat.class)

- To write reducer output as sequence files:

      job.setOutputFormatClass(SequenceFileOutputFormat.class)

- To read a sequence file in HDFS:

      hadoop fs -text <sequenceFilename>

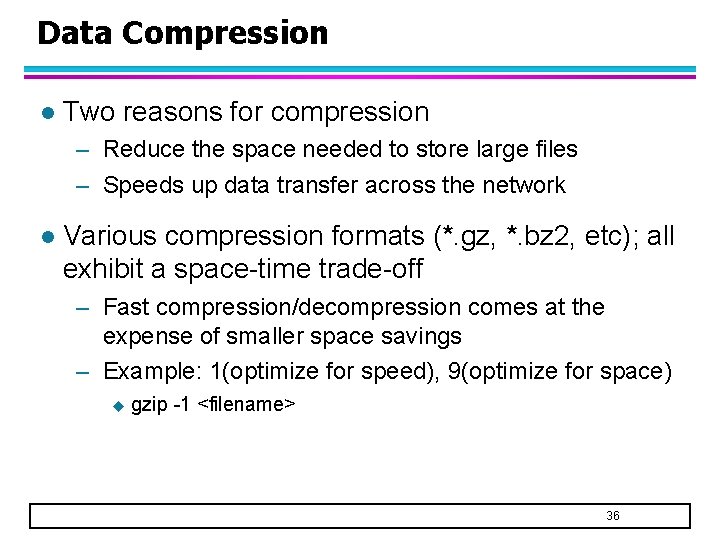

Data Compression
- Two reasons for compression
  - Reduce the space needed to store large files
  - Speed up data transfer across the network
- Various compression formats (*.gz, *.bz2, etc.); all exhibit a space-time trade-off
  - Fast compression/decompression comes at the expense of smaller space savings
  - Example: -1 (optimize for speed), -9 (optimize for space)

        gzip -1 <filename>
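The speed/space levels above can be tried out from Java with `java.util.zip.Deflater`, which implements the same DEFLATE algorithm that gzip uses (the sketch produces zlib-framed data, not a `.gz` file). The class name `CompressionLevelSketch` and the sample input are hypothetical; actual sizes depend on the data.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

// Hypothetical illustration of the 1 (speed) vs 9 (space) trade-off.
final class CompressionLevelSketch {

    /** Compresses input at the given level (1 = fastest, 9 = smallest). */
    static byte[] compress(byte[] input, int level) {
        Deflater deflater = new Deflater(level);
        deflater.setInput(input);
        deflater.finish();
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        while (!deflater.finished()) {
            int n = deflater.deflate(buf);
            out.write(buf, 0, n);
        }
        deflater.end();
        return out.toByteArray();
    }

    /** Restores the original bytes from compress() output. */
    static byte[] decompress(byte[] compressed) {
        Inflater inflater = new Inflater();
        inflater.setInput(compressed);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buf = new byte[4096];
        try {
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new IllegalArgumentException("truncated or unsupported input");
                }
                out.write(buf, 0, n);
            }
        } catch (DataFormatException e) {
            throw new IllegalArgumentException(e);
        } finally {
            inflater.end();
        }
        return out.toByteArray();
    }

    public static void main(String[] args) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 1000; i++) sb.append("big data analysis ");
        byte[] input = sb.toString().getBytes(StandardCharsets.UTF_8);
        System.out.println("original: " + input.length + " bytes");
        System.out.println("level 1:  " + compress(input, Deflater.BEST_SPEED).length + " bytes");
        System.out.println("level 9:  " + compress(input, Deflater.BEST_COMPRESSION).length + " bytes");
    }
}
```

On large, less repetitive inputs the difference in both running time and output size between the two levels is usually more visible than on this toy string.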

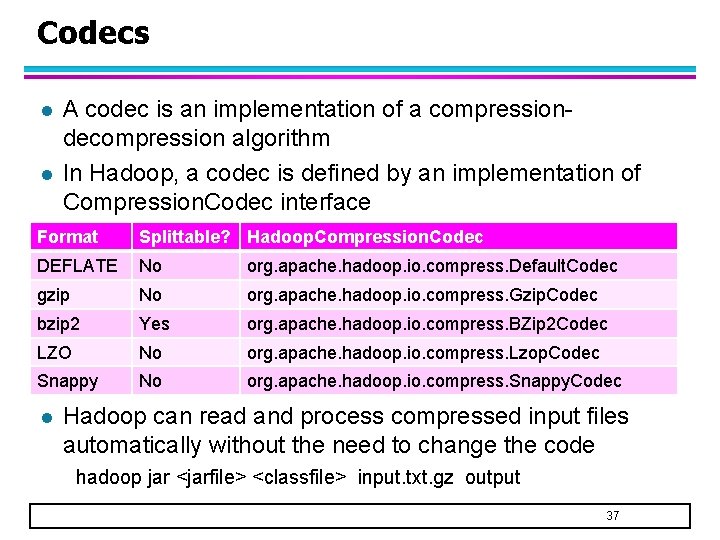

Codecs
- A codec is an implementation of a compression-decompression algorithm
- In Hadoop, a codec is defined by an implementation of the CompressionCodec interface

| Format | Splittable? | Hadoop CompressionCodec |
|---|---|---|
| DEFLATE | No | org.apache.hadoop.io.compress.DefaultCodec |
| gzip | No | org.apache.hadoop.io.compress.GzipCodec |
| bzip2 | Yes | org.apache.hadoop.io.compress.BZip2Codec |
| LZO | No | com.hadoop.compression.lzo.LzopCodec |
| Snappy | No | org.apache.hadoop.io.compress.SnappyCodec |

- Hadoop can read and process compressed input files automatically without the need to change the code

      hadoop jar <jarfile> <classfile> input.txt.gz output
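Hadoop chooses the codec for a compressed input file from the file name extension, which is why no code change is needed. The sketch below mimics that lookup with a plain map and does not call Hadoop. `CodecLookupSketch` is a hypothetical name, and only codecs bundled with Hadoop are listed, with their default extensions.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical illustration of extension-based codec selection.
final class CodecLookupSketch {
    private static final Map<String, String> CODEC_BY_SUFFIX = new LinkedHashMap<>();
    static {
        CODEC_BY_SUFFIX.put(".deflate", "org.apache.hadoop.io.compress.DefaultCodec");
        CODEC_BY_SUFFIX.put(".gz", "org.apache.hadoop.io.compress.GzipCodec");
        CODEC_BY_SUFFIX.put(".bz2", "org.apache.hadoop.io.compress.BZip2Codec");
        CODEC_BY_SUFFIX.put(".snappy", "org.apache.hadoop.io.compress.SnappyCodec");
    }

    /** Returns the codec class name for a file, or null if it is read uncompressed. */
    static String codecFor(String fileName) {
        for (Map.Entry<String, String> e : CODEC_BY_SUFFIX.entrySet()) {
            if (fileName.endsWith(e.getKey())) {
                return e.getValue();
            }
        }
        return null;
    }

    public static void main(String[] args) {
        for (String f : new String[] {"input.txt", "input.txt.gz", "input.txt.bz2"}) {
            System.out.println(f + " -> " + codecFor(f));
        }
    }
}
```

Because only bzip2 in the table is splittable, a large `.gz` input is still read correctly but is processed by a single mapper.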

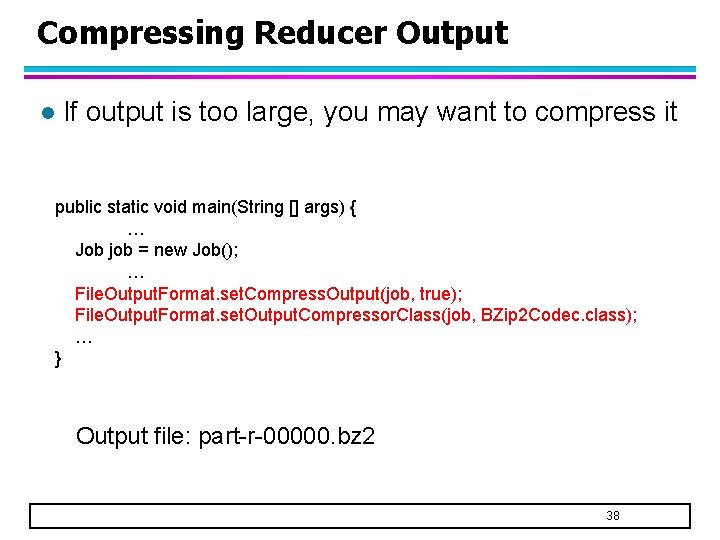

Compressing Reducer Output
- If the output is too large, you may want to compress it

      public static void main(String[] args) {
          …
          Job job = new Job();
          …
          FileOutputFormat.setCompressOutput(job, true);
          FileOutputFormat.setOutputCompressorClass(job, BZip2Codec.class);
          …
      }

- Output file: part-r-00000.bz2

Compressing Mapper Output
- Useful to compress mapper output

      public static void main(String[] args) {
          …
          Configuration conf = new Configuration();
          conf.setBoolean("mapreduce.map.output.compress", true);
          conf.setClass("mapreduce.map.output.compress.codec",
                  BZip2Codec.class, CompressionCodec.class);
          Job job = new Job(conf, "Job name");
          …
      }

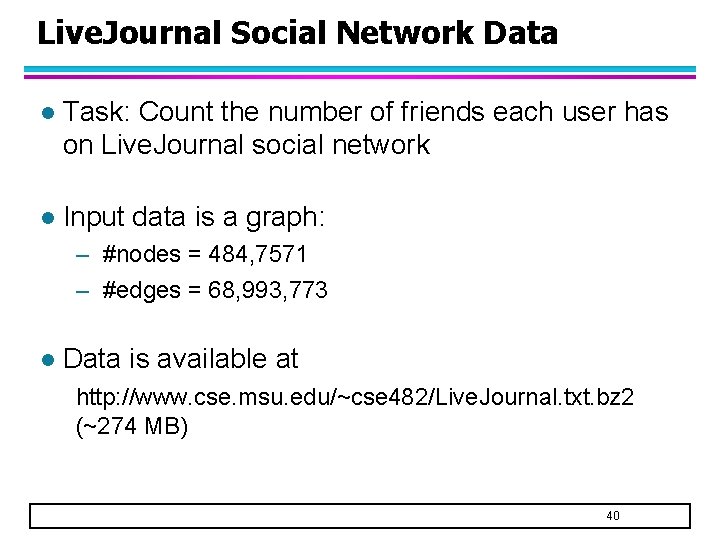

LiveJournal Social Network Data
- Task: count the number of friends each user has on the LiveJournal social network
- Input data is a graph:
  - #nodes = 4,847,571
  - #edges = 68,993,773
- Data is available at http://www.cse.msu.edu/~cse482/LiveJournal.txt.bz2 (~274 MB)

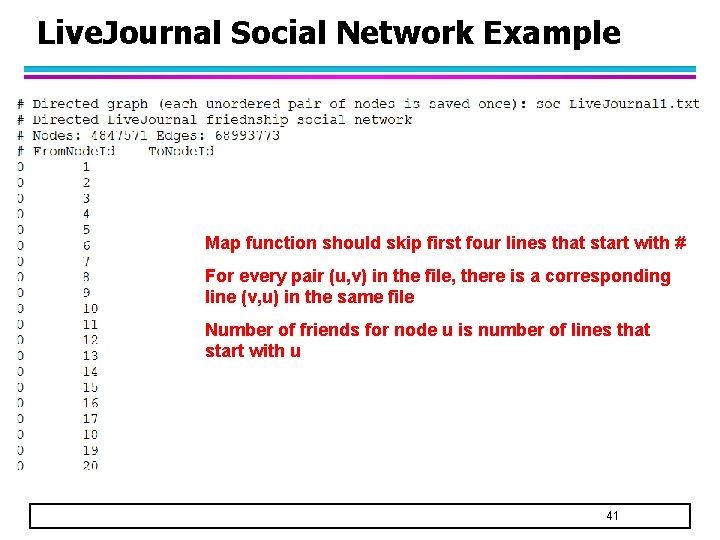

LiveJournal Social Network Example
- The map function should skip the first four lines, which start with #
- For every pair (u, v) in the file, there is a corresponding line (v, u) in the same file
- The number of friends for node u is the number of lines that start with u
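The counting logic above can be sketched in plain Java before it is written as a mapper and reducer. This is an in-memory illustration, not the course's Graph_Compressed.java. The class name `FriendCountSketch` is hypothetical, and each edge line is assumed to hold two whitespace-separated node ids.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory version of the map and reduce steps.
final class FriendCountSketch {

    /** Counts, for each node u, the number of edge lines that start with u. */
    static Map<String, Integer> countFriends(List<String> lines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String line : lines) {
            String trimmed = line.trim();
            // "Map" step: skip header lines that start with '#'.
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;
            }
            // "Map" step: the first field is u; conceptually emit (u, 1).
            String u = trimmed.split("\\s+")[0];
            // "Reduce" step: sum the 1s emitted for each u.
            counts.merge(u, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "# header line",
                "0\t1",
                "0\t2",
                "1\t0",
                "2\t0");
        countFriends(lines).forEach((u, n) -> System.out.println(u + "\t" + n));
    }
}
```

Because every edge appears in both directions, counting only the first field is enough; the second field never needs to be emitted.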

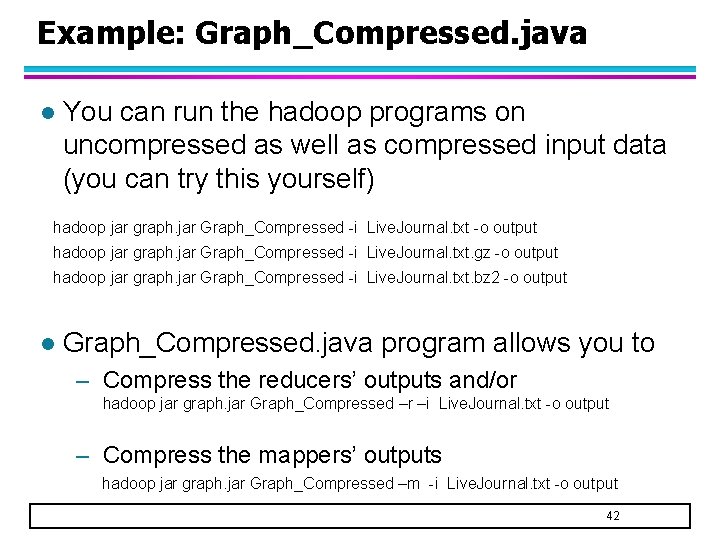

Example: Graph_Compressed.java
- You can run the Hadoop programs on uncompressed as well as compressed input data (you can try this yourself)

      hadoop jar graph.jar Graph_Compressed -i LiveJournal.txt -o output
      hadoop jar graph.jar Graph_Compressed -i LiveJournal.txt.gz -o output
      hadoop jar graph.jar Graph_Compressed -i LiveJournal.txt.bz2 -o output

- The Graph_Compressed.java program allows you to
  - Compress the reducers' outputs and/or

        hadoop jar graph.jar Graph_Compressed -r -i LiveJournal.txt -o output

  - Compress the mappers' outputs

        hadoop jar graph.jar Graph_Compressed -m -i LiveJournal.txt -o output
