CSE 431 Computer Architecture Fall 2005 Lecture 10&11

- Slides: 34

CSE 431 Computer Architecture Fall 2005 Lecture 10&11: A Superscalar Pipeline

Mary Jane Irwin (www.cse.psu.edu/~mji)

www.cse.psu.edu/~cg431

[Adapted from Computer Organization and Design, Patterson & Hennessy, © 2005 and Instruction Issue Logic, IEEETC, 39:3, Sohi, 1990]

CSE 431 L10&11 SS Model.1 Irwin, PSU, 2005

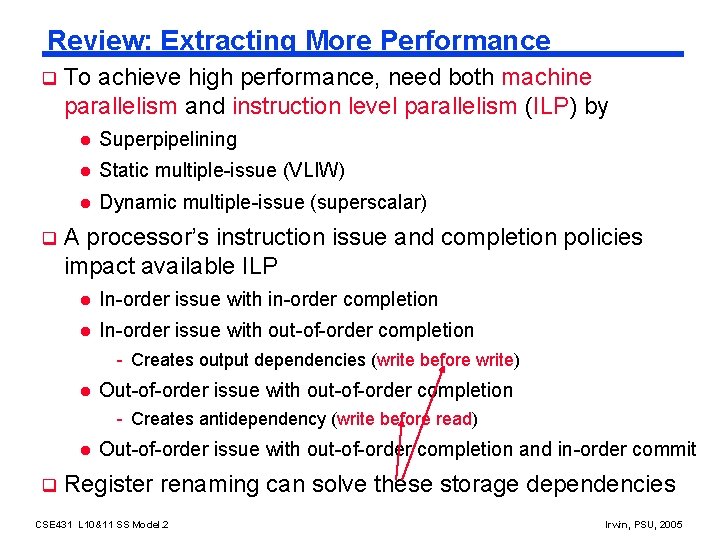

Review: Extracting More Performance

- To achieve high performance, need both machine parallelism and instruction level parallelism (ILP) by
  - Superpipelining
  - Static multiple-issue (VLIW)
  - Dynamic multiple-issue (superscalar)
- A processor’s instruction issue and completion policies impact available ILP
  - In-order issue with in-order completion
  - In-order issue with out-of-order completion: creates output dependencies (write before write)
  - Out-of-order issue with out-of-order completion: creates antidependency (write before read)
  - Out-of-order issue with out-of-order completion and in-order commit
- Register renaming can solve these storage dependencies

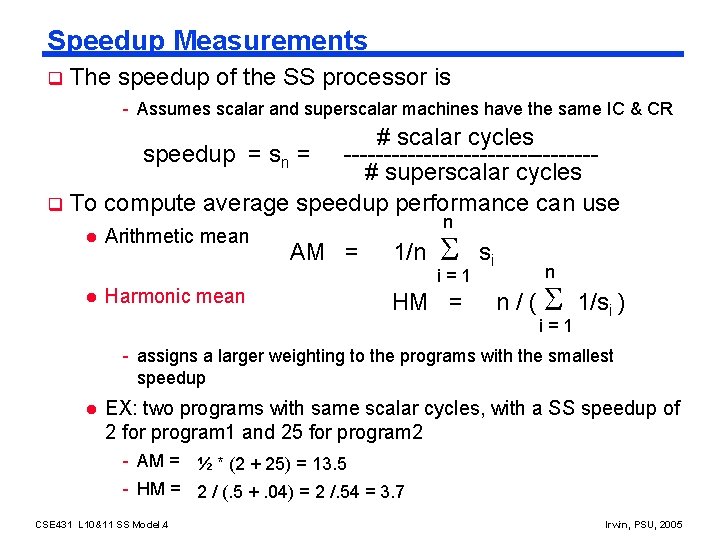

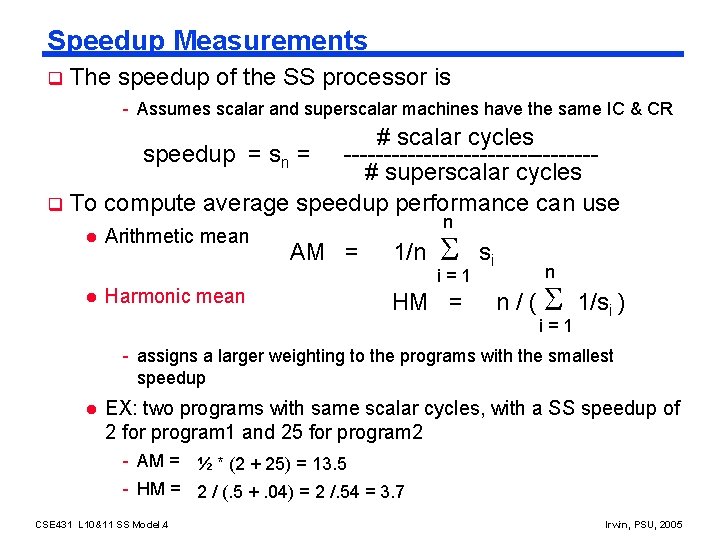

Speedup Measurements

- The speedup of the SS processor is

  $$\text{speedup} = s_n = \frac{\#\ \text{scalar cycles}}{\#\ \text{superscalar cycles}}$$

  - Assumes scalar and superscalar machines have the same instruction count (IC) and clock rate (CR)
- To compute average speedup performance can use
  - Arithmetic mean: $AM = \frac{1}{n} \sum_{i=1}^{n} s_i$
  - Harmonic mean: $HM = n \Big/ \sum_{i=1}^{n} \frac{1}{s_i}$, which assigns a larger weighting to the programs with the smallest speedup
  - EX: two programs with same scalar cycles, with a SS speedup of 2 for program 1 and 25 for program 2
    - AM = ½ * (2 + 25) = 13.5
    - HM = 2 / (0.5 + 0.04) = 2 / 0.54 = 3.7
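The two averages can be checked with a few lines of Python. This is only a sketch of the arithmetic on the slide; the function names `arithmetic_mean` and `harmonic_mean` are illustrative and not part of the lecture.

```python
def arithmetic_mean(speedups):
    # AM = (1/n) * sum of the per-program speedups
    return sum(speedups) / len(speedups)


def harmonic_mean(speedups):
    # HM = n / sum of the reciprocals of the per-program speedups
    return len(speedups) / sum(1.0 / s for s in speedups)


# The slide's example: SS speedups of 2 (program 1) and 25 (program 2)
speedups = [2, 25]
am = arithmetic_mean(speedups)
hm = harmonic_mean(speedups)
print(f"AM = {am:.1f}, HM = {hm:.1f}")
```

The harmonic mean stays close to the smaller speedup because the slow program dominates the total run time when both programs have the same scalar cycle count.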

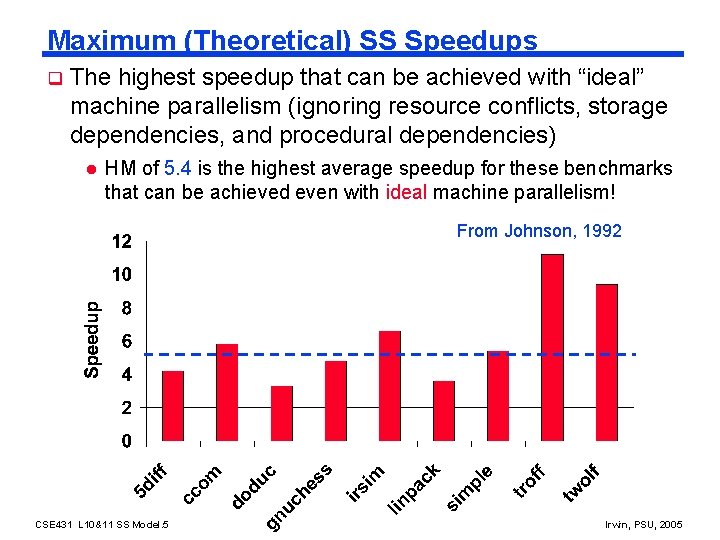

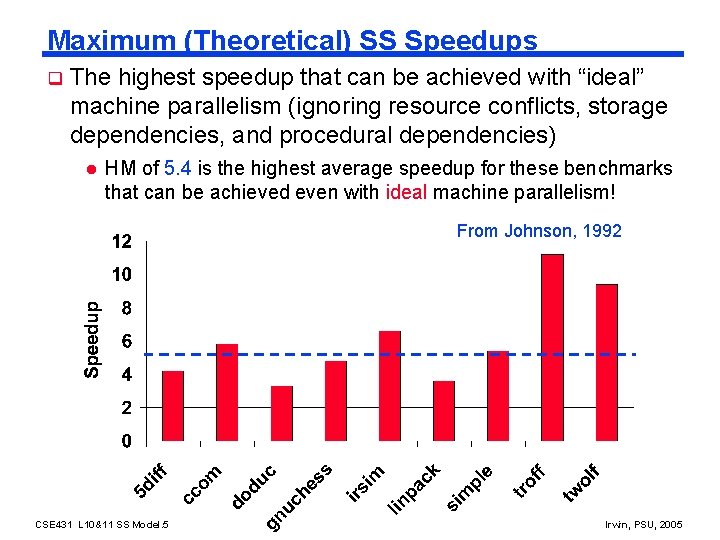

Maximum (Theoretical) SS Speedups

- The highest speedup that can be achieved with “ideal” machine parallelism (ignoring resource conflicts, storage dependencies, and procedural dependencies)
  - HM of 5.4 is the highest average speedup for these benchmarks that can be achieved even with ideal machine parallelism!

From Johnson, 1992

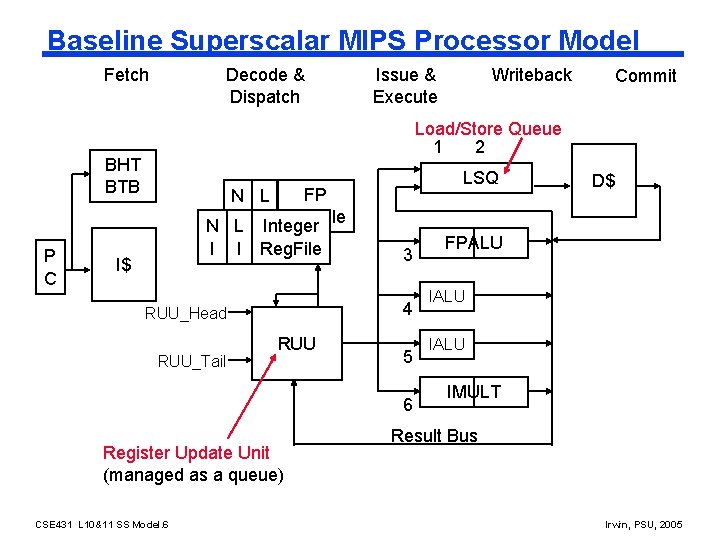

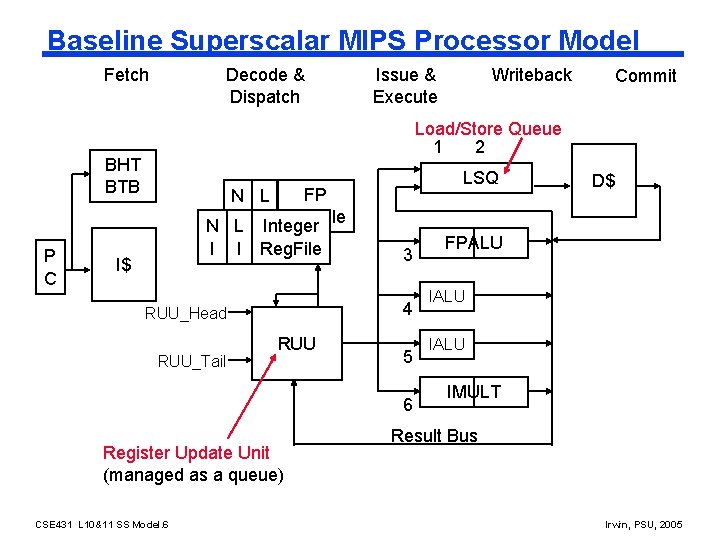

Baseline Superscalar MIPS Processor Model

[Figure: block diagram of the baseline superscalar pipeline. Stages: Fetch, Decode & Dispatch, Issue & Execute, Writeback, Commit. Components: PC, BHT, BTB, I$, Integer Reg. File and FP Reg. File (each with NI and LI fields), Register Update Unit (RUU, managed as a queue with RUU_Head and RUU_Tail), Load/Store Queue (LSQ), D$, FPALU, IALU, IMULT, and the Result Bus.]

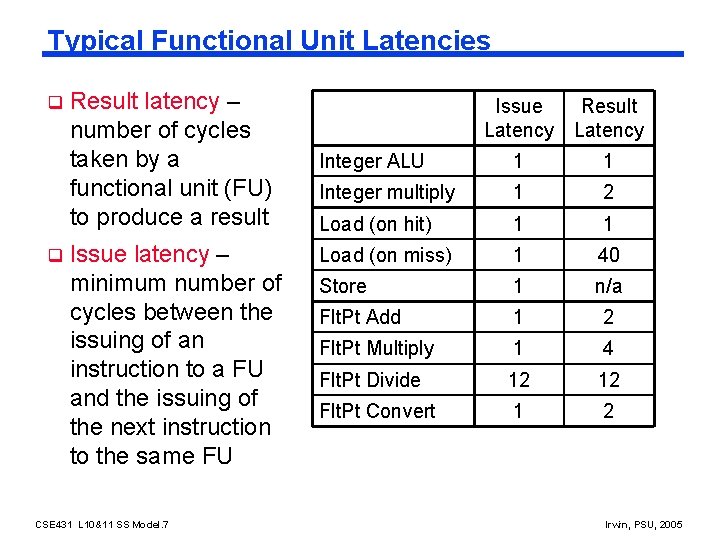

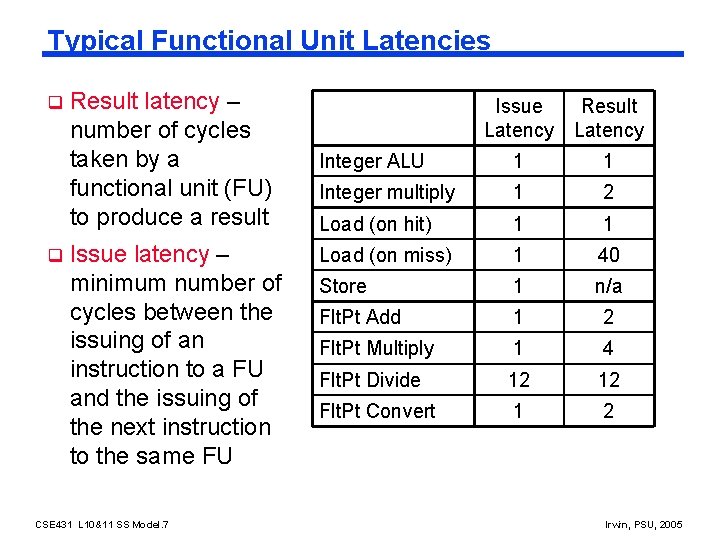

Typical Functional Unit Latencies

- Result latency: number of cycles taken by a functional unit (FU) to produce a result
- Issue latency: minimum number of cycles between the issuing of an instruction to a FU and the issuing of the next instruction to the same FU

| Functional unit | Issue latency | Result latency |
|---|---|---|
| Integer ALU | 1 | 1 |
| Integer multiply | 1 | 2 |
| Load (on hit) | 1 | 1 |
| Load (on miss) | 1 | 40 |
| Store | 1 | n/a |
| Flt. Pt Add | 1 | 2 |
| Flt. Pt Multiply | 1 | 4 |
| Flt. Pt Divide | 12 | 12 |
| Flt. Pt Convert | 1 | 2 |

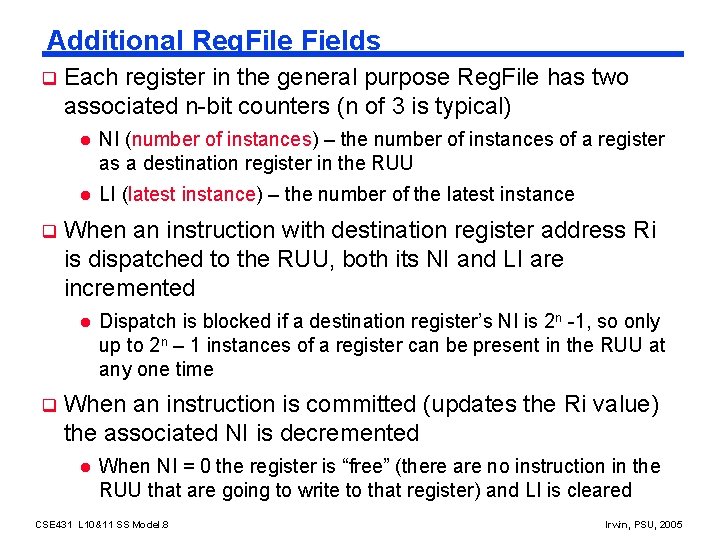

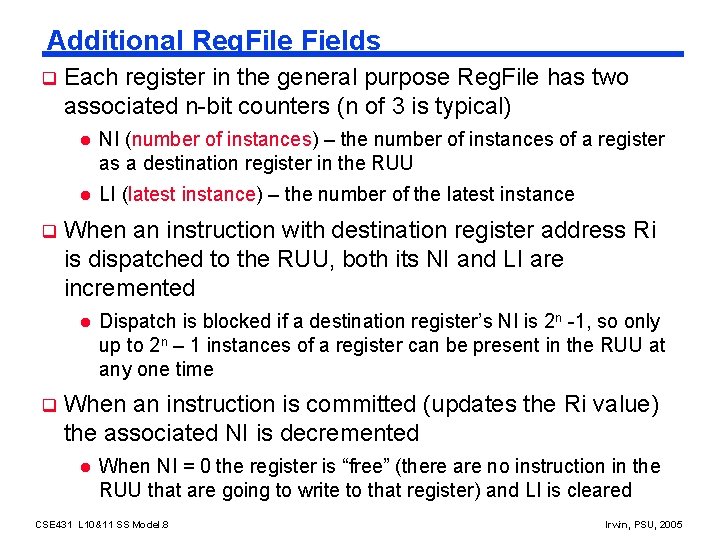

Additional Reg. File Fields

- Each register in the general purpose Reg. File has two associated n-bit counters (n of 3 is typical)
  - NI (number of instances): the number of instances of a register as a destination register in the RUU
  - LI (latest instance): the number of the latest instance
- When an instruction with destination register address Ri is dispatched to the RUU, both its NI and LI are incremented
  - Dispatch is blocked if a destination register’s NI is $2^n - 1$, so only up to $2^n - 1$ instances of a register can be present in the RUU at any one time
- When an instruction is committed (updates the Ri value) the associated NI is decremented
  - When NI = 0 the register is “free” (there are no instructions in the RUU that are going to write to that register) and LI is cleared
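The counter rules above can be sketched as a small Python class. The class name `RegCounters` and its methods are hypothetical, and wrapping LI modulo $2^n$ is an assumption made here so that LI fits in n bits; the slide only says LI is incremented.

```python
class RegCounters:
    """NI/LI counters for one Reg. File register (illustrative model)."""

    def __init__(self, n_bits=3):
        self.n_bits = n_bits
        self.max_instances = 2 ** n_bits - 1  # dispatch blocks when NI reaches this
        self.ni = 0  # number of instances of this register as a destination in the RUU
        self.li = 0  # number of the latest instance

    def can_dispatch(self):
        return self.ni < self.max_instances

    def dispatch(self):
        """A new instruction writing this register enters the RUU; returns its LI."""
        if not self.can_dispatch():
            raise RuntimeError("dispatch blocked: NI is at 2^n - 1")
        self.ni += 1
        # Assumption: LI wraps around modulo 2^n
        self.li = (self.li + 1) % (2 ** self.n_bits)
        return self.li

    def commit(self):
        """An instruction writing this register commits."""
        self.ni -= 1
        if self.ni == 0:
            self.li = 0  # register is "free": no pending writers remain


r1 = RegCounters(n_bits=3)
instances = [r1.dispatch() for _ in range(7)]
print(instances, r1.can_dispatch())
```

With n = 3 the eighth dispatch to the same destination register would be blocked until at least one earlier instance commits.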

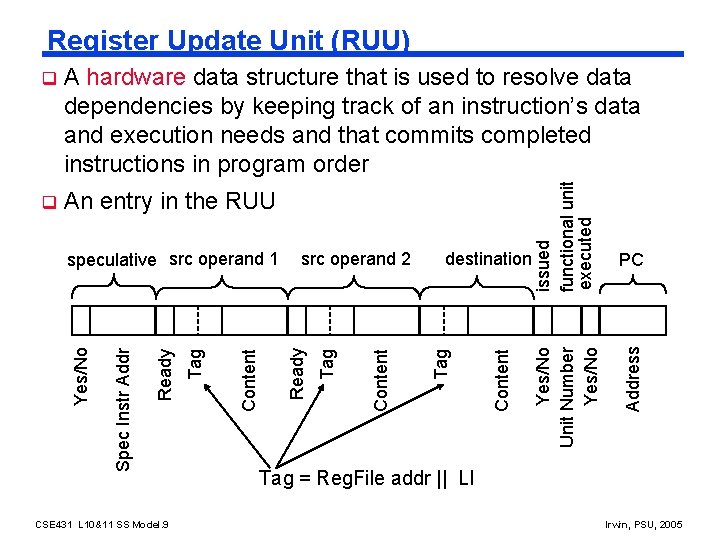

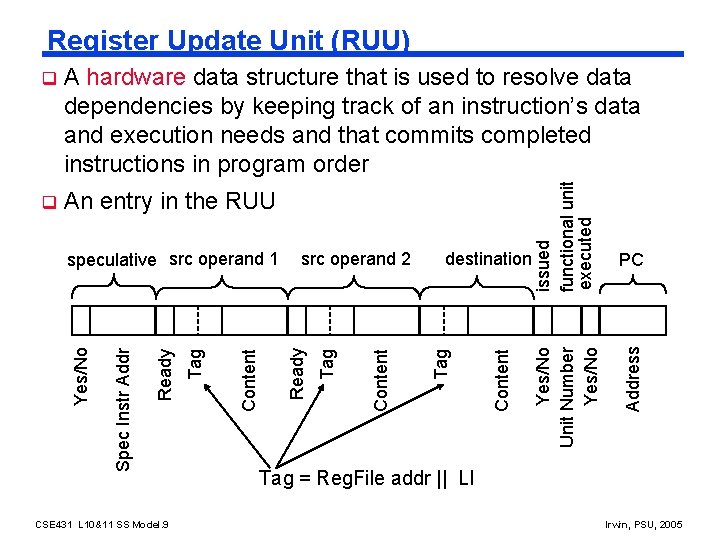

Register Update Unit (RUU)

A hardware data structure that is used to resolve data dependencies by keeping track of an instruction’s data and execution needs and that commits completed instructions in program order

- An entry in the RUU

| Field | Contents |
|---|---|
| src operand 1 | Ready, Tag, Content |
| src operand 2 | Ready, Tag, Content |
| destination | Tag, Content |
| issued | Yes/No |
| functional unit | Unit Number |
| executed | Yes/No |
| PC Address | Instr Addr |
| speculative | Yes/No |

- Tag = Reg. File addr || LI

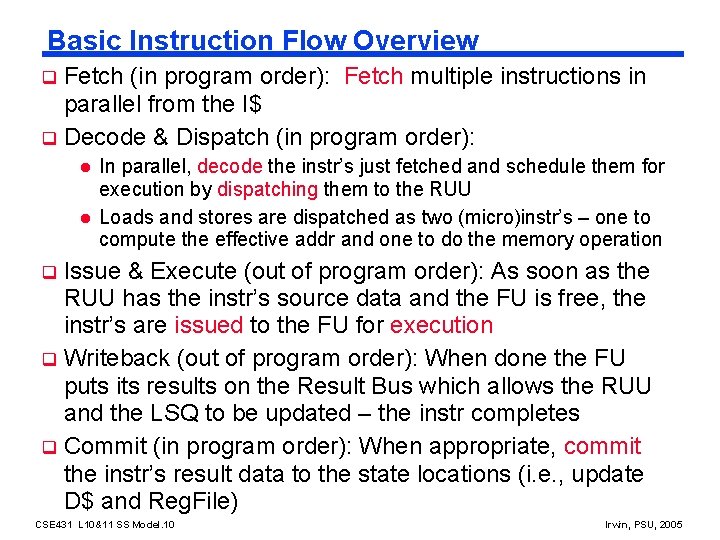

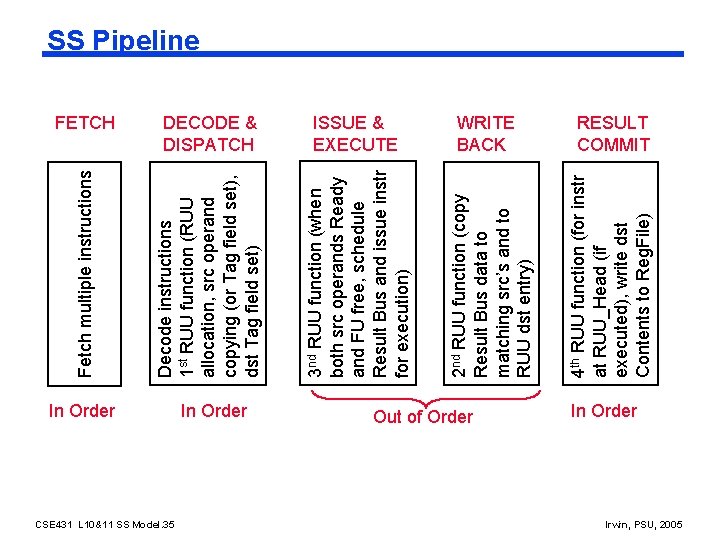

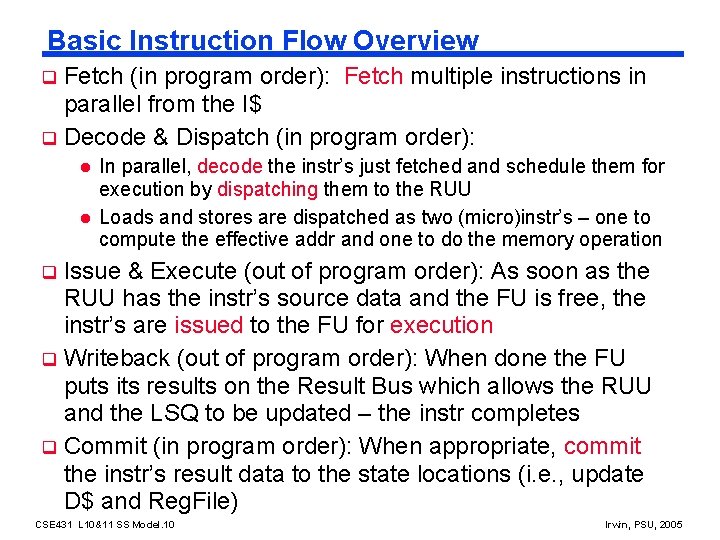

Basic Instruction Flow Overview

- Fetch (in program order): Fetch multiple instructions in parallel from the I$
- Decode & Dispatch (in program order):
  - In parallel, decode the instr’s just fetched and schedule them for execution by dispatching them to the RUU
  - Loads and stores are dispatched as two (micro)instr’s: one to compute the effective addr and one to do the memory operation
- Issue & Execute (out of program order): As soon as the RUU has the instr’s source data and the FU is free, the instr’s are issued to the FU for execution
- Writeback (out of program order): When done the FU puts its results on the Result Bus, which allows the RUU and the LSQ to be updated; the instr completes
- Commit (in program order): When appropriate, commit the instr’s result data to the state locations (i.e., update D$ and Reg. File)

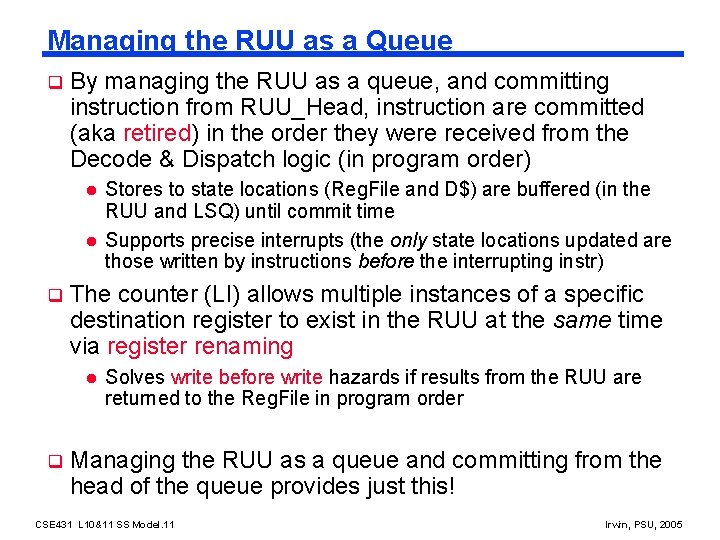

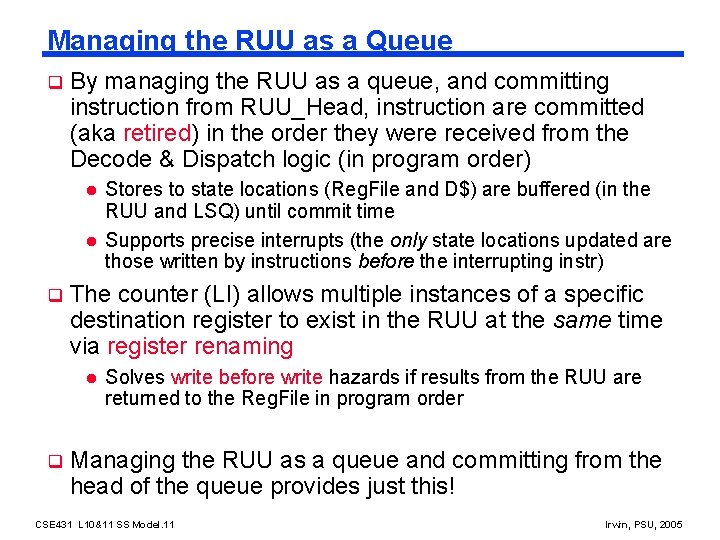

Managing the RUU as a Queue

- By managing the RUU as a queue, and committing instructions from RUU_Head, instructions are committed (aka retired) in the order they were received from the Decode & Dispatch logic (in program order)
  - Stores to state locations (Reg. File and D$) are buffered (in the RUU and LSQ) until commit time
  - Supports precise interrupts (the only state locations updated are those written by instructions before the interrupting instr)
- The counter (LI) allows multiple instances of a specific destination register to exist in the RUU at the same time via register renaming
  - Solves write before write hazards if results from the RUU are returned to the Reg. File in program order
- Managing the RUU as a queue and committing from the head of the queue provides just this!
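A minimal Python sketch of the queue discipline may help: entries are allocated at the tail, may finish executing in any order, and leave only from the head. The class `RUUQueue` is hypothetical, and the explicit `count` field is an assumption used here to tell a full queue from an empty one.

```python
class RUUQueue:
    """Circular queue: allocate at RUU_Tail, commit from RUU_Head (illustrative)."""

    def __init__(self, size):
        self.entries = [None] * size
        self.head = 0   # RUU_Head: oldest instruction
        self.tail = 0   # RUU_Tail: next free entry
        self.count = 0  # assumption: occupancy counter to detect full/empty

    def dispatch(self, name):
        if self.count == len(self.entries):
            return None  # RUU full: dispatch stalls
        index = self.tail
        self.entries[index] = {"name": name, "executed": False}
        self.tail = (self.tail + 1) % len(self.entries)
        self.count += 1
        return index

    def writeback(self, index):
        # Results may arrive out of program order
        self.entries[index]["executed"] = True

    def commit(self):
        """Retire executed instructions from the head, in program order."""
        retired = []
        while self.count and self.entries[self.head]["executed"]:
            retired.append(self.entries[self.head]["name"])
            self.entries[self.head] = None
            self.head = (self.head + 1) % len(self.entries)
            self.count -= 1
        return retired


ruu = RUUQueue(size=4)
a, b, c = ruu.dispatch("a"), ruu.dispatch("b"), ruu.dispatch("c")
ruu.writeback(c)
ruu.writeback(b)
early = ruu.commit()   # nothing retires: "a" at the head has not executed
ruu.writeback(a)
late = ruu.commit()    # all three retire, in dispatch order
print(early, late)
```

Even though "c" and "b" finish first, no state is updated until "a" at the head completes, which is what makes precise interrupts possible.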

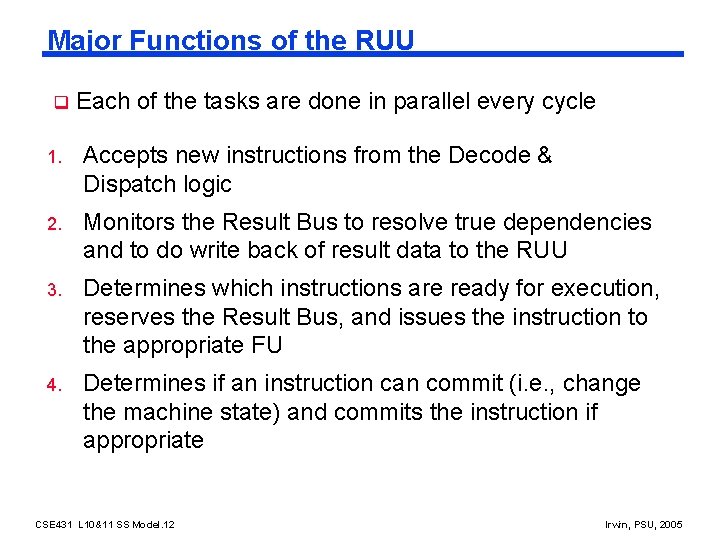

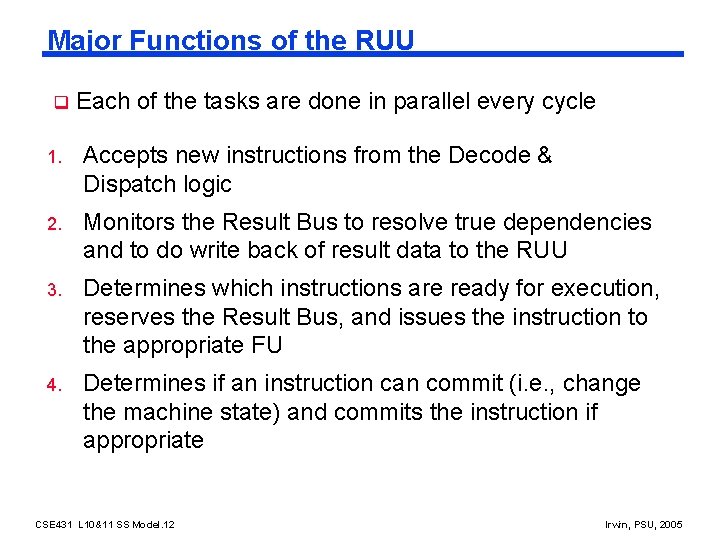

Major Functions of the RUU

- Each of the tasks is done in parallel every cycle
  1. Accepts new instructions from the Decode & Dispatch logic
  2. Monitors the Result Bus to resolve true dependencies and to do write back of result data to the RUU
  3. Determines which instructions are ready for execution, reserves the Result Bus, and issues the instruction to the appropriate FU
  4. Determines if an instruction can commit (i.e., change the machine state) and commits the instruction if appropriate

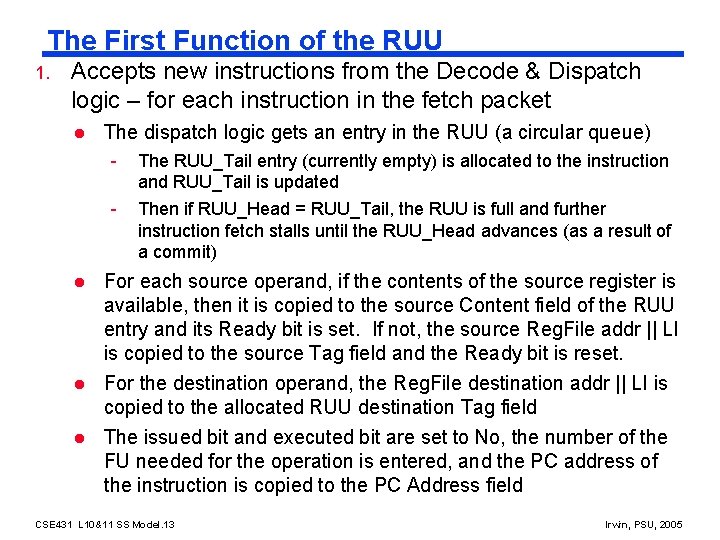

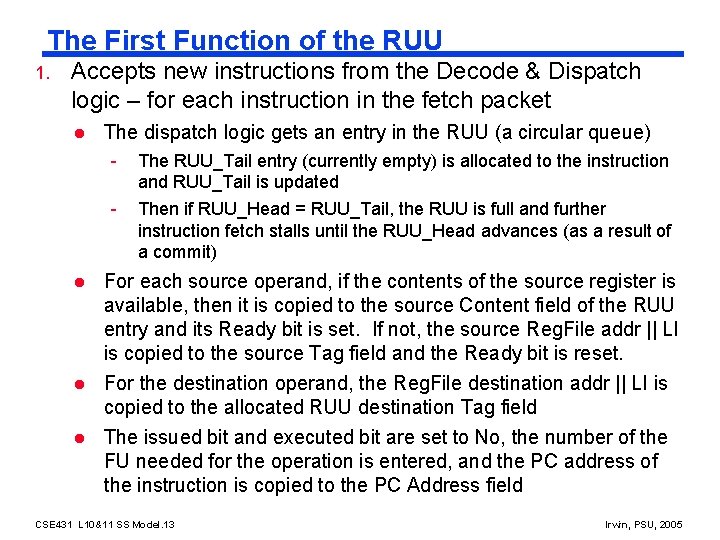

The First Function of the RUU

1. Accepts new instructions from the Decode & Dispatch logic. For each instruction in the fetch packet:
   - The dispatch logic gets an entry in the RUU (a circular queue)
     - The RUU_Tail entry (currently empty) is allocated to the instruction and RUU_Tail is updated
     - Then if RUU_Head = RUU_Tail, the RUU is full and further instruction fetch stalls until the RUU_Head advances (as a result of a commit)
   - For each source operand, if the contents of the source register are available, then they are copied to the source Content field of the RUU entry and its Ready bit is set. If not, the source Reg. File addr || LI is copied to the source Tag field and the Ready bit is reset.
   - For the destination operand, the Reg. File destination addr || LI is copied to the allocated RUU destination Tag field
   - The issued bit and executed bit are set to No, the number of the FU needed for the operation is entered, and the PC address of the instruction is copied to the PC Address field

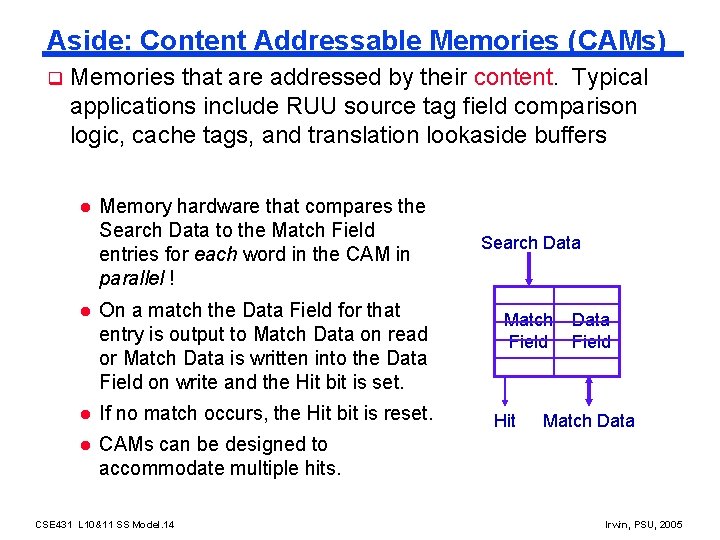

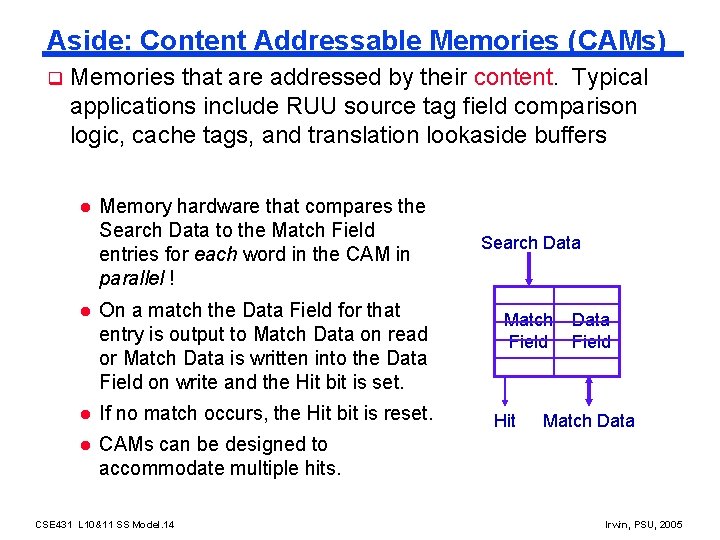

Aside: Content Addressable Memories (CAMs)

- Memories that are addressed by their content. Typical applications include RUU source tag field comparison logic, cache tags, and translation lookaside buffers
  - Memory hardware that compares the Search Data to the Match Field entries for each word in the CAM in parallel!
  - On a match the Data Field for that entry is output to Match Data on read, or Match Data is written into the Data Field on write, and the Hit bit is set.
  - If no match occurs, the Hit bit is reset.
  - CAMs can be designed to accommodate multiple hits.

[Figure: CAM block diagram with the Search Data input, the Match Field and Data Field of each word, the Hit output, and the Match Data port.]
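The CAM behavior described above can be modeled in software as follows. This is a sketch under assumptions: the class `CAM` and its method names are invented for illustration, the loop stands in for comparisons that real hardware performs in parallel, and on multiple hits a read returns the first match while a write updates every match.

```python
class CAM:
    """Software model of a content addressable memory (illustrative)."""

    def __init__(self, num_words):
        # Each word is [match_field, data_field], or None if unused
        self.words = [None] * num_words

    def load_word(self, index, match_field, data_field):
        self.words[index] = [match_field, data_field]

    def read(self, search_data):
        """Return (hit, match_data); hardware compares all words at once."""
        for word in self.words:
            if word is not None and word[0] == search_data:
                return True, word[1]
        return False, None

    def write(self, search_data, match_data):
        """Write match_data into the Data Field of every matching word."""
        hit = False
        for word in self.words:
            if word is not None and word[0] == search_data:
                word[1] = match_data
                hit = True
        return hit


cam = CAM(num_words=4)
cam.load_word(0, ("$1", 1), None)  # tag = (Reg. File addr, LI)
cam.load_word(1, ("$2", 1), 9)
print(cam.read(("$2", 1)), cam.read(("$3", 1)))
```

Using a (register, LI) pair as the Match Field mirrors how the RUU source Tag comparison logic would use such a structure.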

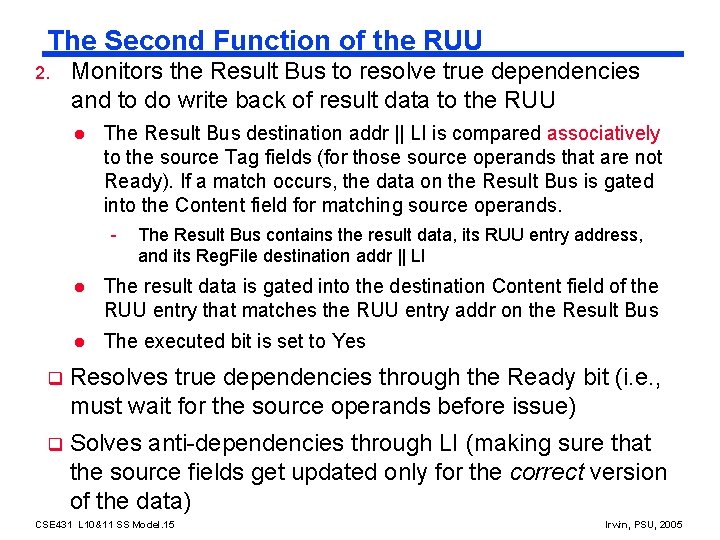

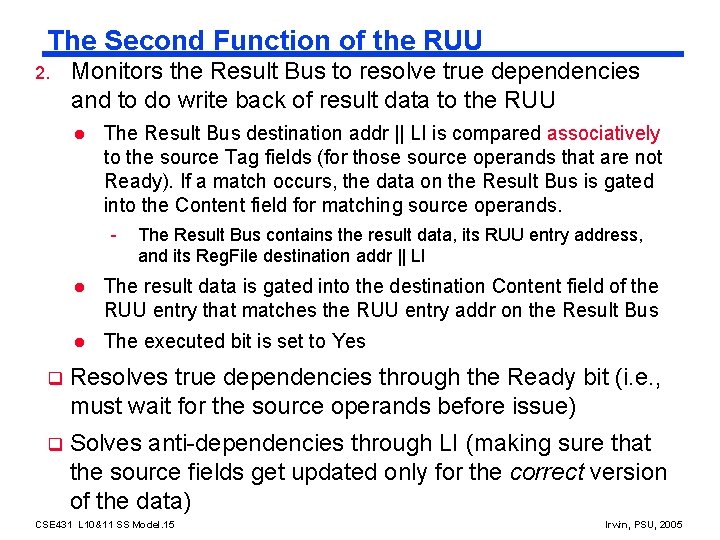

The Second Function of the RUU

2. Monitors the Result Bus to resolve true dependencies and to do write back of result data to the RUU
   - The Result Bus destination addr || LI is compared associatively to the source Tag fields (for those source operands that are not Ready). If a match occurs, the data on the Result Bus is gated into the Content field for matching source operands.
     - The Result Bus contains the result data, its RUU entry address, and its Reg. File destination addr || LI
   - The result data is gated into the destination Content field of the RUU entry that matches the RUU entry addr on the Result Bus
   - The executed bit is set to Yes

- Resolves true dependencies through the Ready bit (i.e., must wait for the source operands before issue)
- Solves anti-dependencies through LI (making sure that the source fields get updated only for the correct version of the data)
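The tag match on the Result Bus can be sketched in Python. The dictionary layout and the function name `broadcast_result` are assumptions for illustration; tags are modeled as (Reg. File addr, LI) tuples, and the loop replaces the associative (parallel) comparison done in hardware.

```python
def make_entry(src_tags):
    """Build an RUU entry whose source operands are all waiting on a tag."""
    return {
        "srcs": [{"ready": False, "tag": t, "content": None} for t in src_tags],
        "dest_content": None,
        "executed": False,
    }


def broadcast_result(ruu, entry_index, dest_tag, value):
    """Model one Result Bus transfer: (result data, RUU entry addr, dest tag)."""
    for entry in ruu:
        for src in entry["srcs"]:
            # Only operands that are not Ready take part in the comparison
            if not src["ready"] and src["tag"] == dest_tag:
                src["content"] = value
                src["ready"] = True
    # Write back into the producing entry and mark it executed
    ruu[entry_index]["dest_content"] = value
    ruu[entry_index]["executed"] = True


ruu = [
    make_entry([]),            # entry 0: producer of $1, instance 1
    make_entry([("$1", 1)]),   # entry 1: waits on $1, instance 1
    make_entry([("$1", 2)]),   # entry 2: waits on a later instance of $1
]
broadcast_result(ruu, entry_index=0, dest_tag=("$1", 1), value=18)
print(ruu[1]["srcs"][0], ruu[2]["srcs"][0]["ready"])
```

Entry 2 is untouched because its tag carries a different LI, which is how the LI field keeps a consumer from picking up the wrong version of a register.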

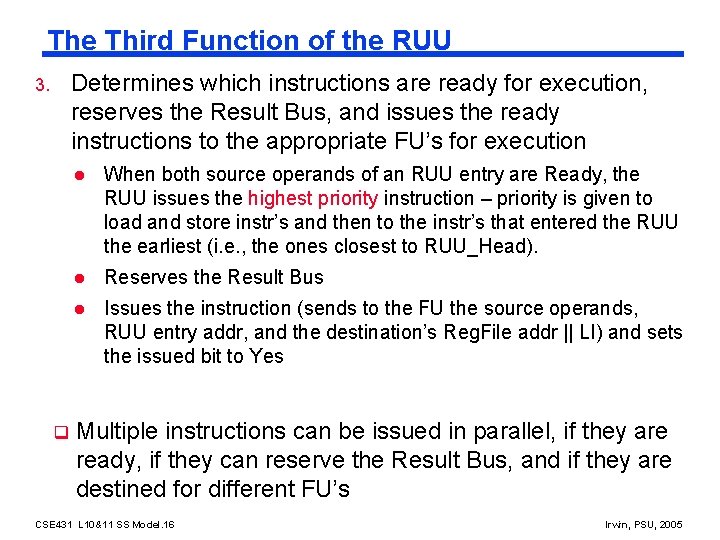

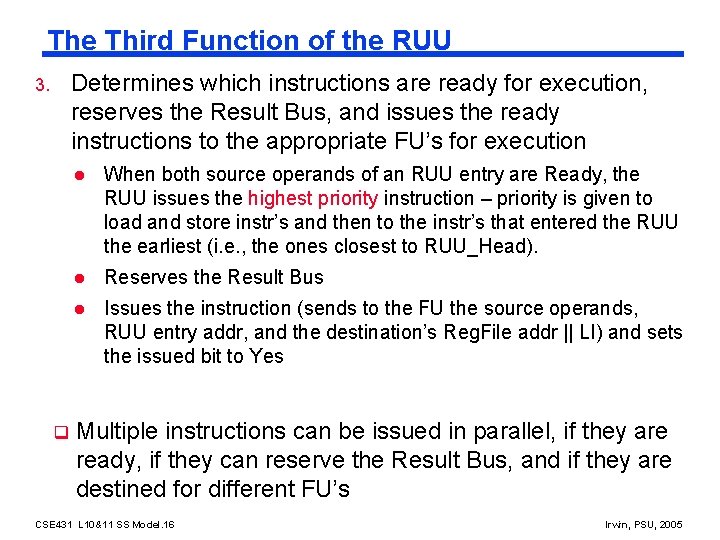

The Third Function of the RUU

3. Determines which instructions are ready for execution, reserves the Result Bus, and issues the ready instructions to the appropriate FU’s for execution
   - When both source operands of an RUU entry are Ready, the RUU issues the highest priority instruction. Priority is given to load and store instr’s and then to the instr’s that entered the RUU the earliest (i.e., the ones closest to RUU_Head).
   - Reserves the Result Bus
   - Issues the instruction (sends to the FU the source operands, RUU entry addr, and the destination’s Reg. File addr || LI) and sets the issued bit to Yes

- Multiple instructions can be issued in parallel, if they are ready, if they can reserve the Result Bus, and if they are destined for different FU’s

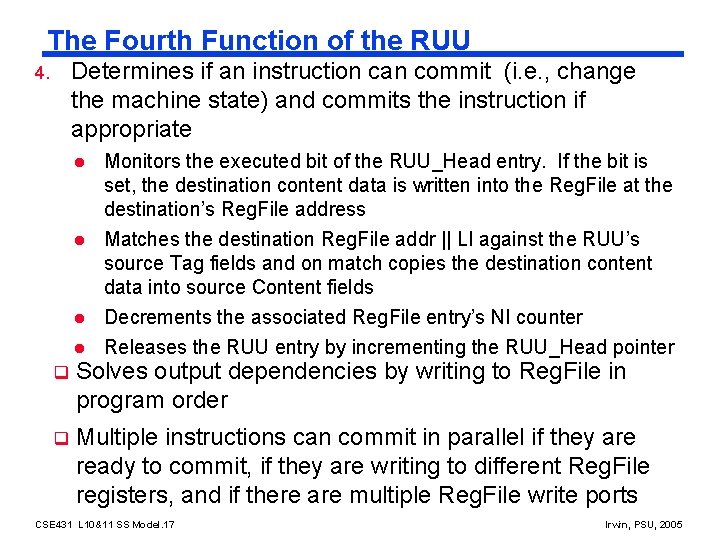

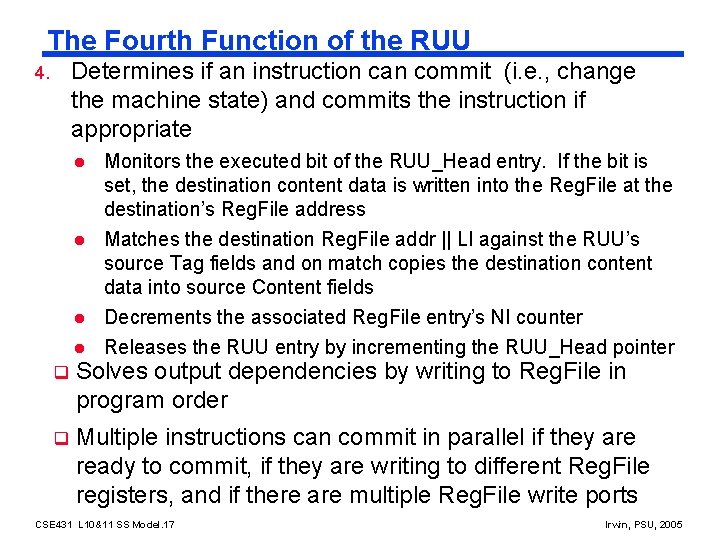

The Fourth Function of the RUU

4. Determines if an instruction can commit (i.e., change the machine state) and commits the instruction if appropriate
   - Monitors the executed bit of the RUU_Head entry. If the bit is set, the destination content data is written into the Reg. File at the destination’s Reg. File address
   - Matches the destination Reg. File addr || LI against the RUU’s source Tag fields and on match copies the destination content data into source Content fields
   - Decrements the associated Reg. File entry’s NI counter
   - Releases the RUU entry by incrementing the RUU_Head pointer

- Solves output dependencies by writing to Reg. File in program order
- Multiple instructions can commit in parallel if they are ready to commit, if they are writing to different Reg. File registers, and if there are multiple Reg. File write ports

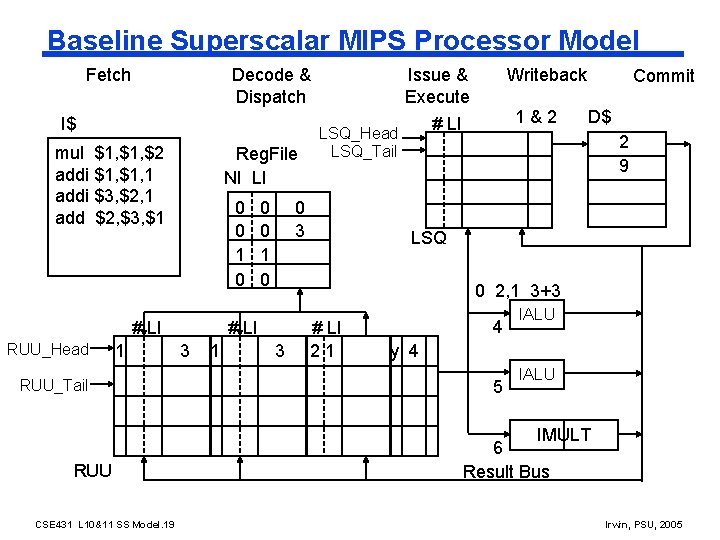

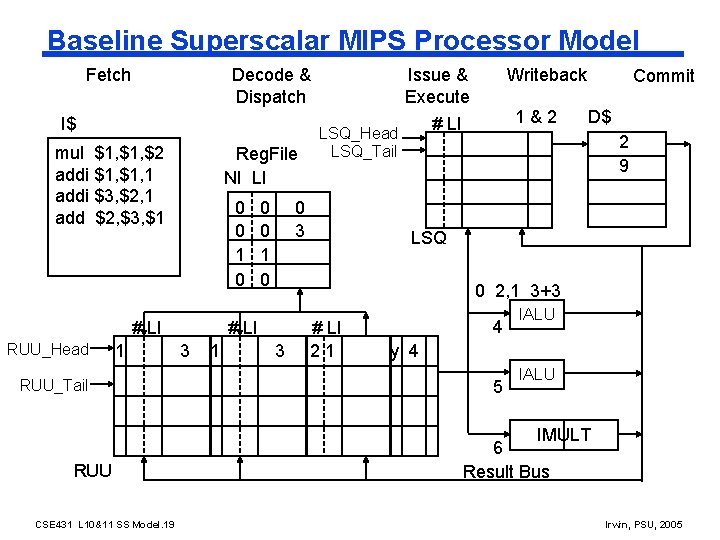

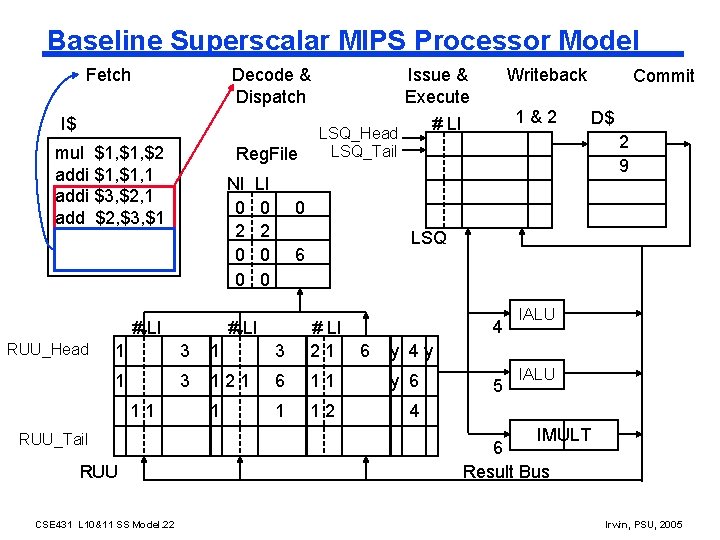

Baseline Superscalar MIPS Processor Model

[Figure: animation snapshot of the baseline pipeline running the example sequence mul $1, $2; addi $1, 1; addi $3, $2, 1; add $2, $3, $1. Shown: I$, the Reg. File NI and LI counters, the RUU between RUU_Head and RUU_Tail, the LSQ between LSQ_Head and LSQ_Tail, D$, two IALUs (one computing 3+3), the IMULT, and the Result Bus.]

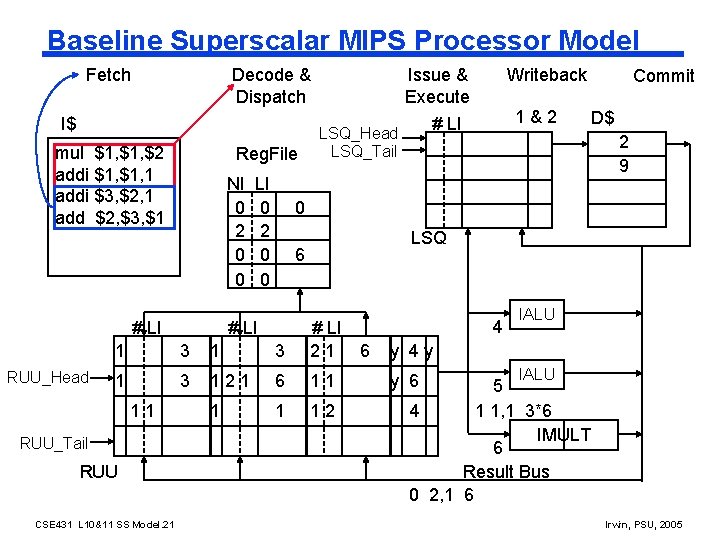

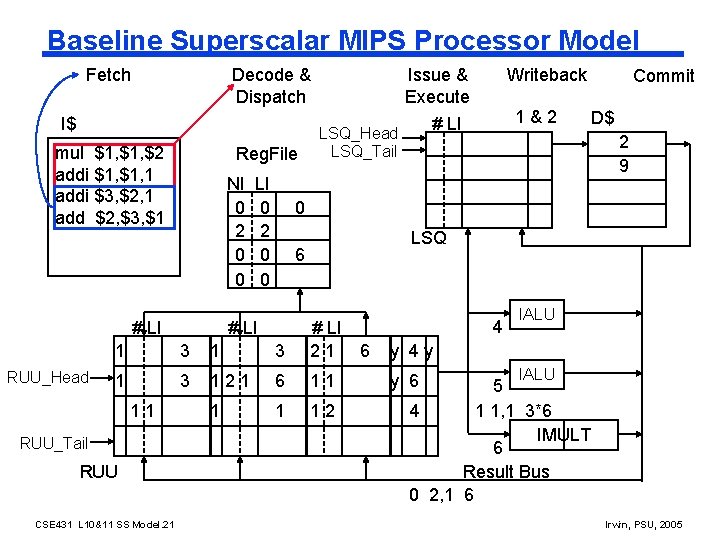

Baseline Superscalar MIPS Processor Model

[Figure: later snapshot of the same example. Shown: the Reg. File NI and LI counters, the RUU entries with their Tag, Content, and issued/executed fields, the LSQ, D$, the IALUs, the IMULT (computing 3*6), and the Result Bus carrying a result back to the RUU.]

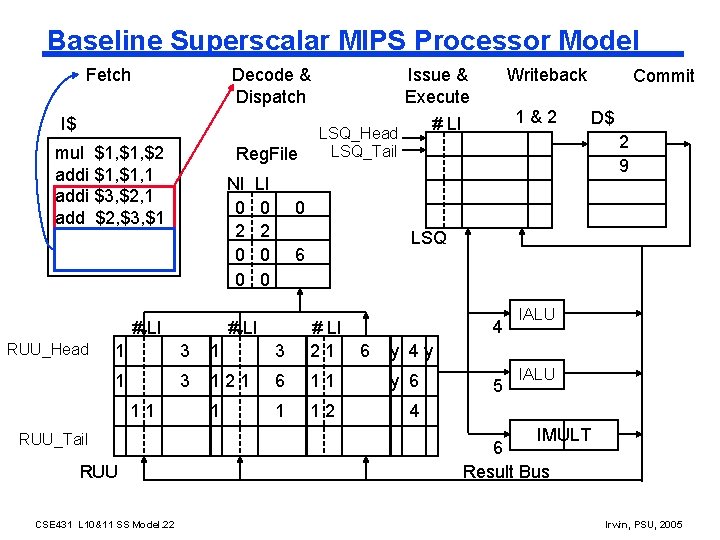

Baseline Superscalar MIPS Processor Model

[Figure: next snapshot of the same example, showing the Reg. File NI and LI counters, the RUU entries between RUU_Head and RUU_Tail, the LSQ, D$, the IALUs, the IMULT, and the Result Bus.]

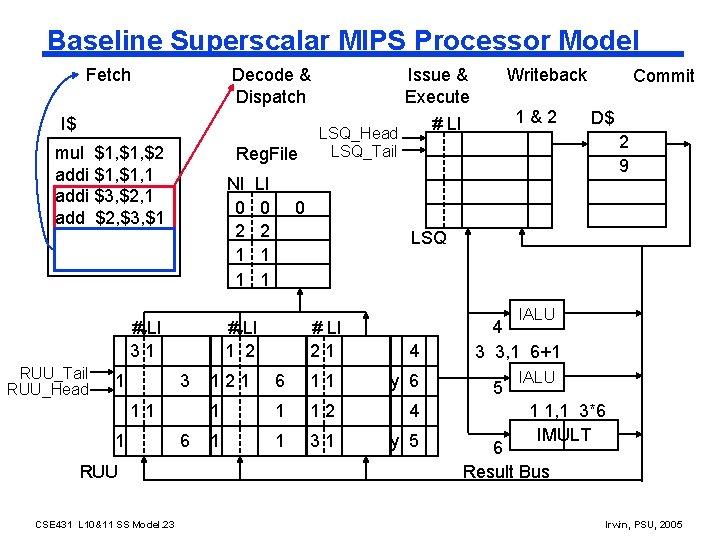

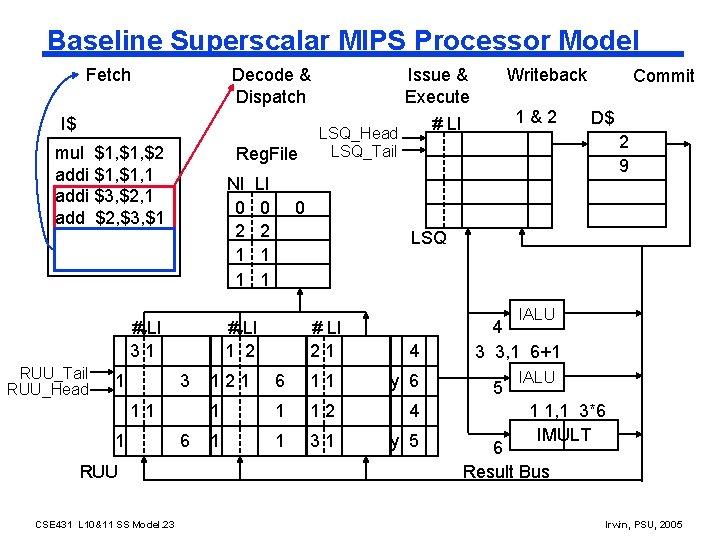

Baseline Superscalar MIPS Processor Model

[Figure: final snapshot of the same example. Shown: the updated Reg. File NI and LI counters, the RUU entries between RUU_Head and RUU_Tail, the LSQ, D$, an IALU computing 6+1, the IMULT computing 3*6, and the Result Bus.]

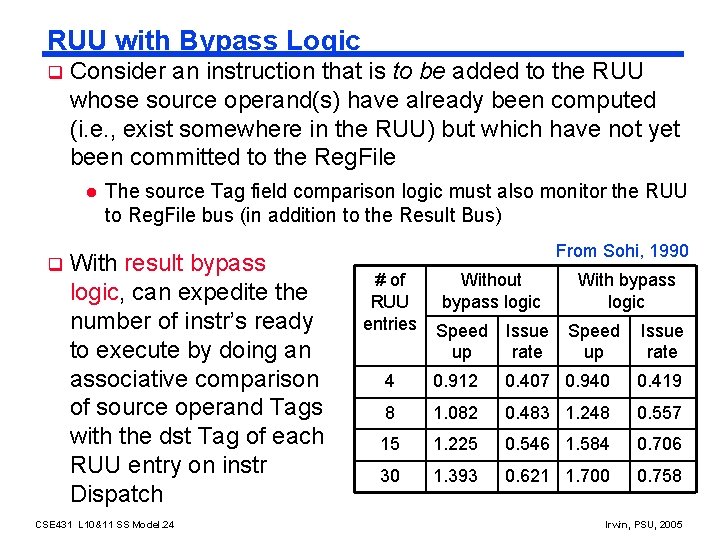

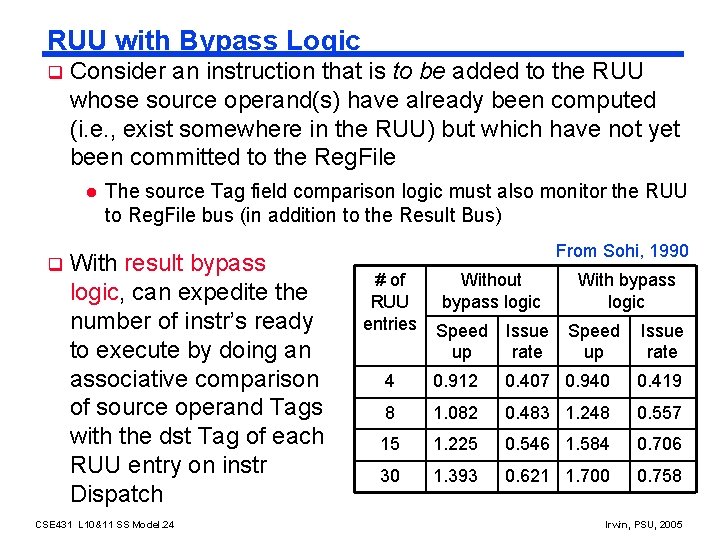

RUU with Bypass Logic

- Consider an instruction that is to be added to the RUU whose source operand(s) have already been computed (i.e., exist somewhere in the RUU) but which have not yet been committed to the Reg. File
  - The source Tag field comparison logic must also monitor the RUU to Reg. File bus (in addition to the Result Bus)
- With result bypass logic, can increase the number of instr’s ready to execute by doing an associative comparison of source operand Tags with the dst Tag of each RUU entry on instr Dispatch

| # of RUU entries | Speedup (without bypass logic) | Issue rate (without bypass logic) | Speedup (with bypass logic) | Issue rate (with bypass logic) |
|---|---|---|---|---|
| 4 | 0.912 | 0.407 | 0.940 | 0.419 |
| 8 | 1.082 | 0.483 | 1.248 | 0.557 |
| 15 | 1.225 | 0.546 | 1.584 | 0.706 |
| 30 | 1.393 | 0.621 | 1.700 | 0.758 |

From Sohi, 1990

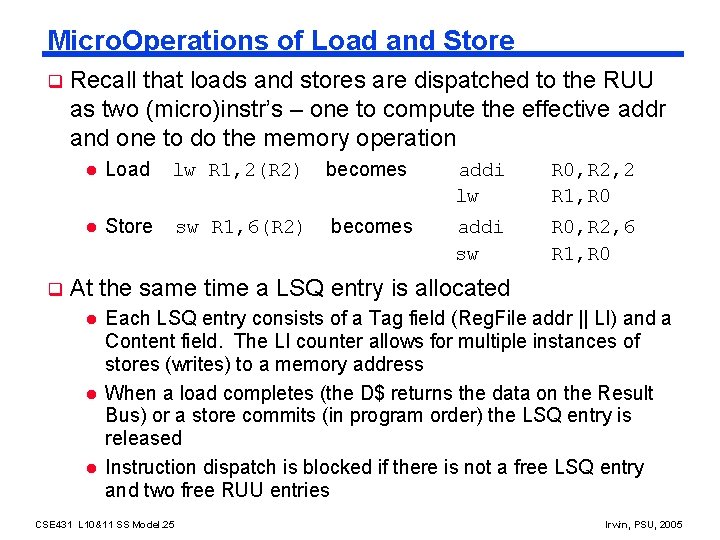

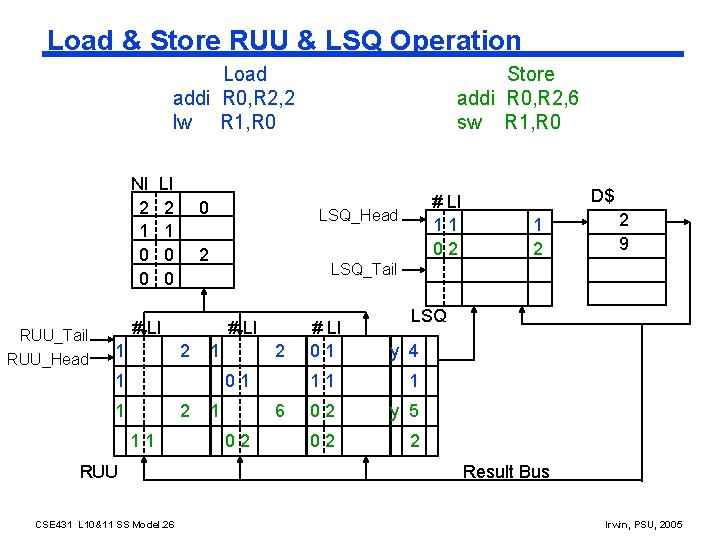

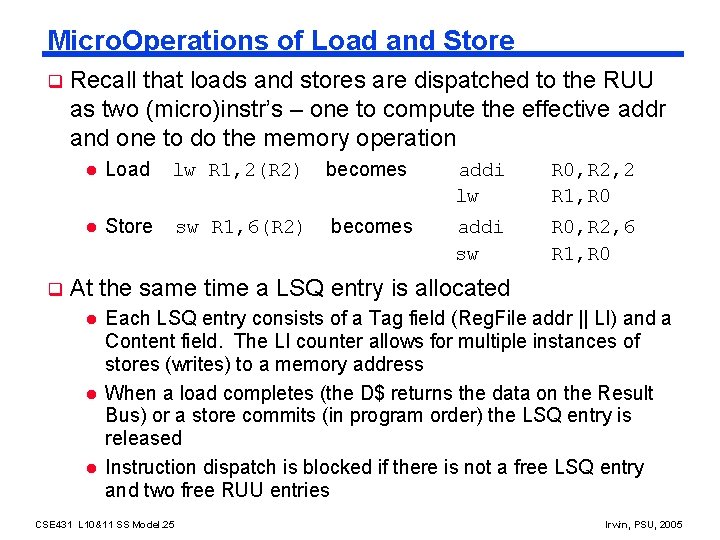

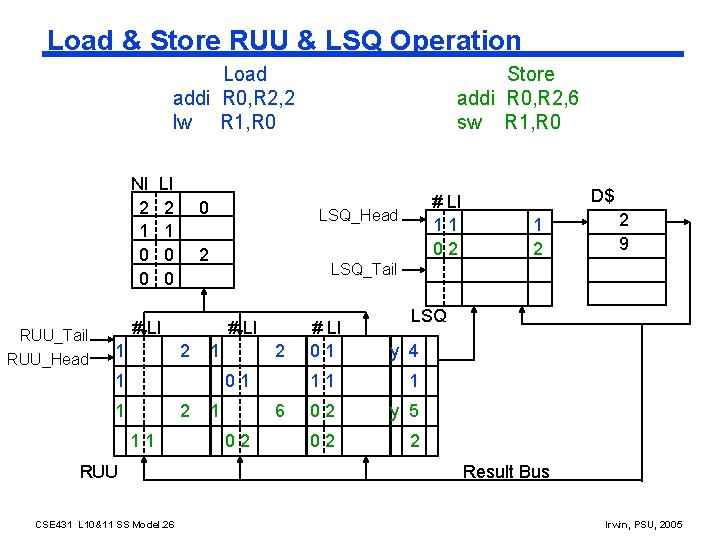

MicroOperations of Load and Store

- Recall that loads and stores are dispatched to the RUU as two (micro)instr’s: one to compute the effective addr and one to do the memory operation
  - Load `lw R1, 2(R2)` becomes `addi R0, R2, 2` followed by `lw R1, R0`
  - Store `sw R1, 6(R2)` becomes `addi R0, R2, 6` followed by `sw R1, R0`
- At the same time a LSQ entry is allocated
  - Each LSQ entry consists of a Tag field (Reg. File addr || LI) and a Content field. The LI counter allows for multiple instances of stores (writes) to a memory address
  - When a load completes (the D$ returns the data on the Result Bus) or a store commits (in program order) the LSQ entry is released
  - Instruction dispatch is blocked if there is not a free LSQ entry and two free RUU entries
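The split into two micro-instructions can be sketched as a small text transformation. The function name `split_mem_op` is hypothetical, and it only handles the `lw`/`sw` forms shown on the slide, using R0 as the temporary that holds the effective address as the slide does.

```python
import re


def split_mem_op(instr):
    """Split 'lw Rt, off(Rb)' or 'sw Rt, off(Rb)' into two micro-instructions."""
    m = re.fullmatch(r"\s*(lw|sw)\s+(\w+),\s*(-?\d+)\s*\(\s*(\w+)\s*\)\s*", instr)
    if m is None:
        raise ValueError(f"not a recognized load/store: {instr!r}")
    op, rt, offset, base = m.groups()
    return [
        f"addi R0, {base}, {offset}",  # compute the effective address
        f"{op} {rt}, R0",              # do the memory operation
    ]


print(split_mem_op("lw R1, 2(R2)"))
print(split_mem_op("sw R1, 6(R2)"))
```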

Load & Store RUU & LSQ Operation

[Figure: worked example of a store (addi R0, R2, 6; sw R1, R0) and a load (addi R0, R2, 2; lw R1, R0) moving through the machine. Shown: the Reg. File NI and LI counters, the RUU between RUU_Head and RUU_Tail, the LSQ between LSQ_Head and LSQ_Tail, D$, and the Result Bus.]

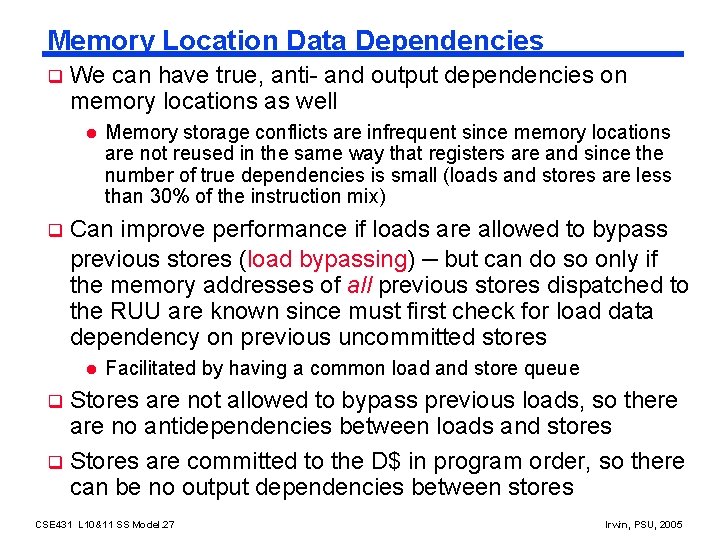

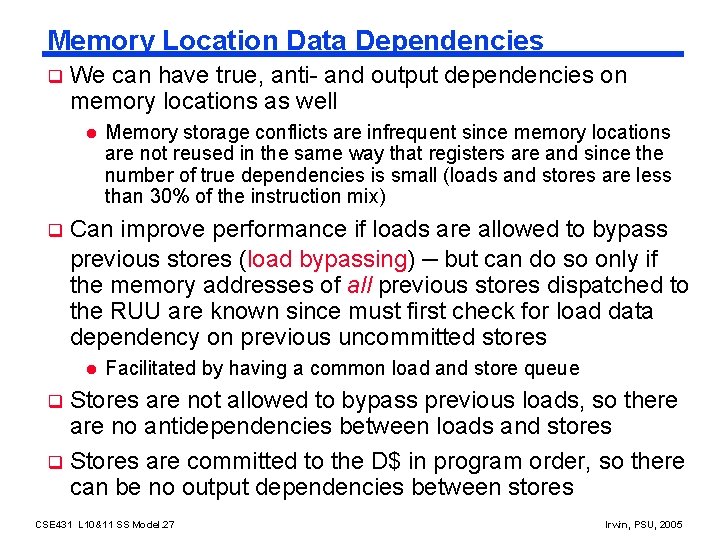

Memory Location Data Dependencies

- We can have true, anti- and output dependencies on memory locations as well
  - Memory storage conflicts are infrequent since memory locations are not reused in the same way that registers are and since the number of true dependencies is small (loads and stores are less than 30% of the instruction mix)
- Can improve performance if loads are allowed to bypass previous stores (load bypassing), but can do so only if the memory addresses of all previous stores dispatched to the RUU are known, since must first check for load data dependency on previous uncommitted stores
  - Facilitated by having a common load and store queue
- Stores are not allowed to bypass previous loads, so there are no antidependencies between loads and stores
- Stores are committed to the D$ in program order, so there can be no output dependencies between stores

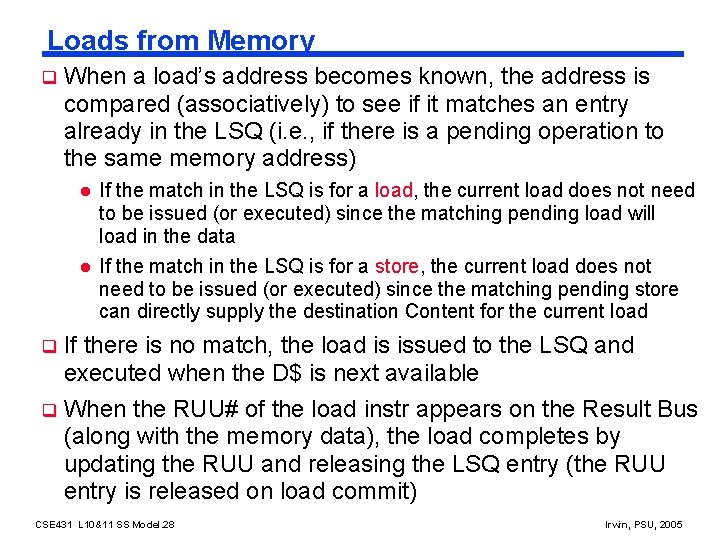

Loads from Memory

- When a load’s address becomes known, the address is compared (associatively) to see if it matches an entry already in the LSQ (i.e., if there is a pending operation to the same memory address)
  - If the match in the LSQ is for a load, the current load does not need to be issued (or executed) since the matching pending load will load in the data
  - If the match in the LSQ is for a store, the current load does not need to be issued (or executed) since the matching pending store can directly supply the destination Content for the current load
  - If there is no match, the load is issued to the LSQ and executed when the D$ is next available
- When the RUU# of the load instr appears on the Result Bus (along with the memory data), the load completes by updating the RUU and releasing the LSQ entry (the RUU entry is released on load commit)
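The three cases for a load can be sketched as a lookup over the pending LSQ entries. The function `resolve_load`, the dictionary fields, and the returned action names are all assumptions for illustration. The sketch also assumes every earlier store address is already known, and it searches from the youngest entry so that the most recent match wins.

```python
def resolve_load(lsq, load_addr):
    """Decide how a load with a known address is satisfied.

    lsq: pending entries, oldest first; each is a dict with
         'kind' ('load' or 'store'), 'addr', and 'data' (stores only).
    Returns (action, data) with action in {'forward', 'share', 'issue'}.
    """
    for entry in reversed(lsq):  # most recent matching entry first
        if entry["addr"] == load_addr:
            if entry["kind"] == "store":
                # Pending store supplies the load's destination Content
                return "forward", entry["data"]
            # Pending load to the same address will bring in the data
            return "share", None
    # No match: issue the load to the D$
    return "issue", None


lsq = [
    {"kind": "store", "addr": 0x100, "data": 7},
    {"kind": "load", "addr": 0x200, "data": None},
    {"kind": "store", "addr": 0x100, "data": 9},
]
print(resolve_load(lsq, 0x100), resolve_load(lsq, 0x200), resolve_load(lsq, 0x300))
```

For address 0x100 the younger store (data 9) is chosen over the older one (data 7), so the load sees the value that program order requires.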

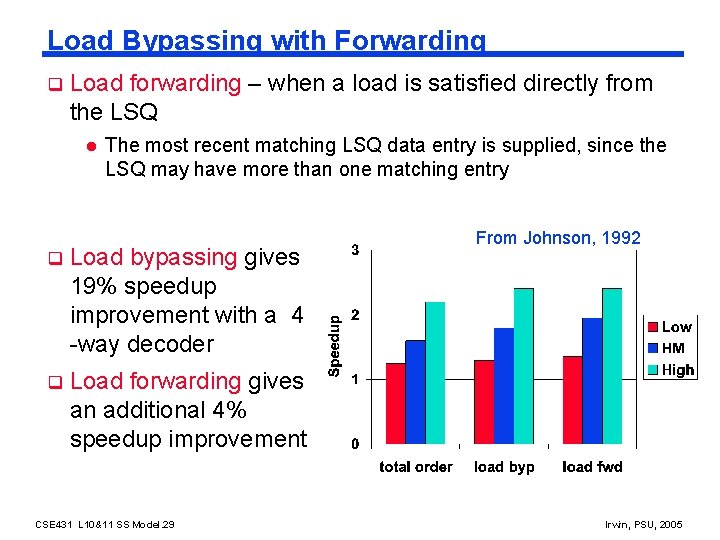

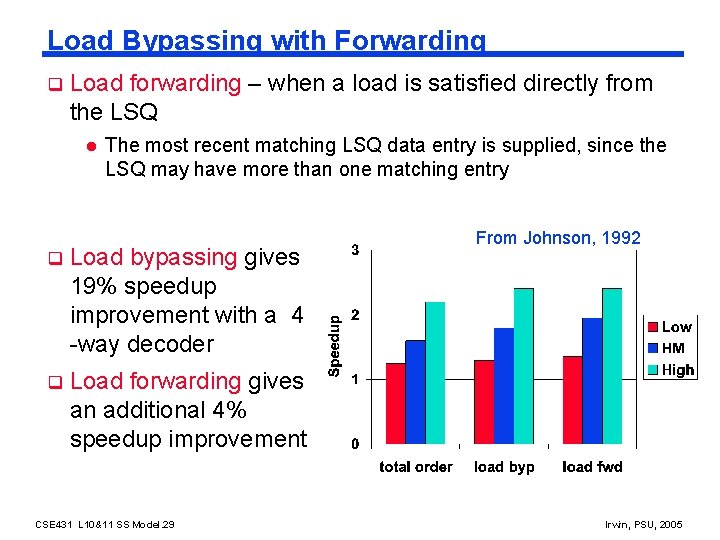

Load Bypassing with Forwarding

- Load forwarding: when a load is satisfied directly from the LSQ
  - The most recent matching LSQ data entry is supplied, since the LSQ may have more than one matching entry
- Load bypassing gives 19% speedup improvement with a 4-way decoder
- Load forwarding gives an additional 4% speedup improvement

From Johnson, 1992

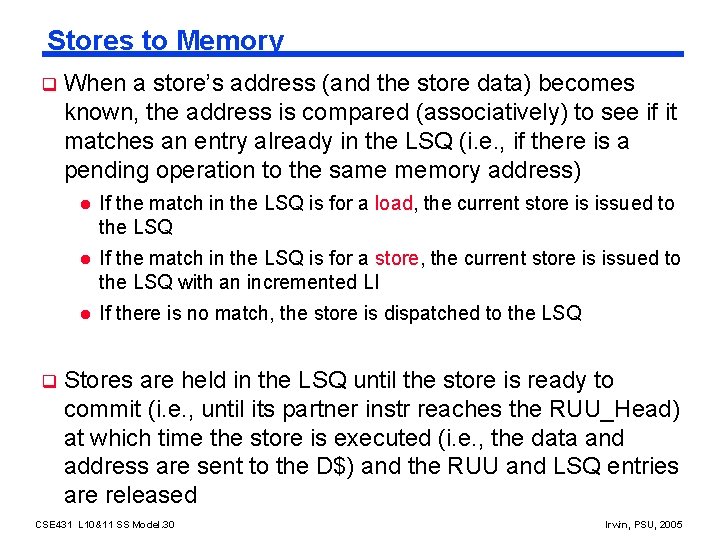

Stores to Memory

- When a store’s address (and the store data) becomes known, the address is compared (associatively) to see if it matches an entry already in the LSQ (i.e., if there is a pending operation to the same memory address)
  - If the match in the LSQ is for a load, the current store is issued to the LSQ
  - If the match in the LSQ is for a store, the current store is issued to the LSQ with an incremented LI
  - If there is no match, the store is dispatched to the LSQ
- Stores are held in the LSQ until the store is ready to commit (i.e., until its partner instr reaches the RUU_Head), at which time the store is executed (i.e., the data and address are sent to the D$) and the RUU and LSQ entries are released

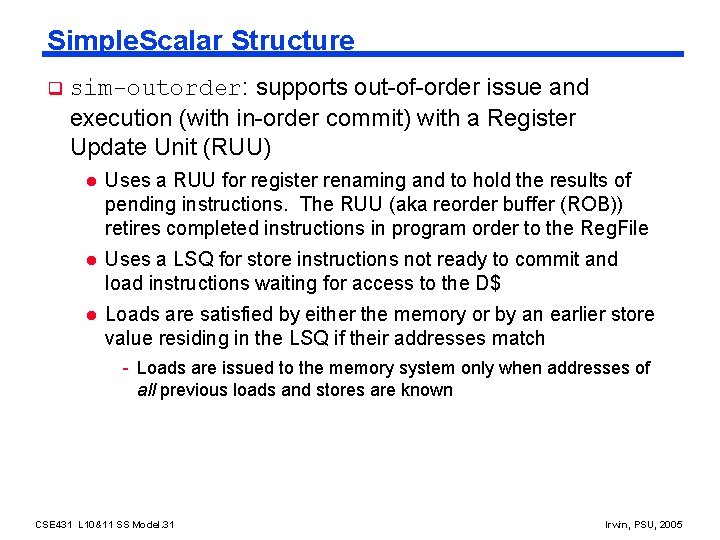

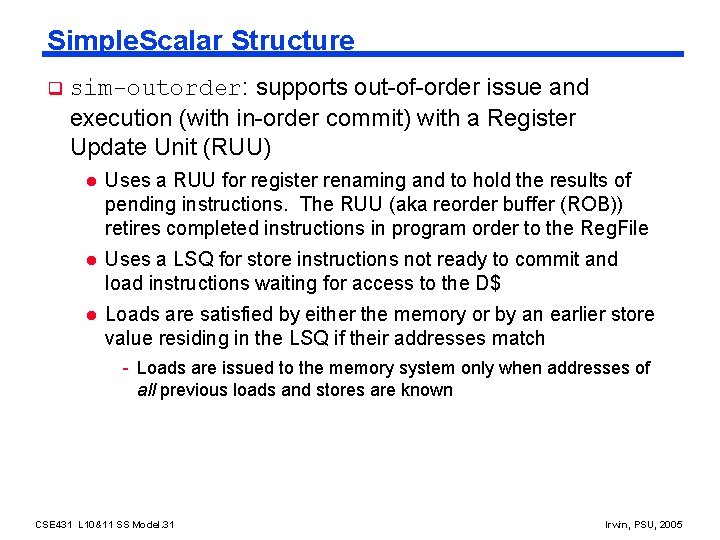

SimpleScalar Structure

- sim-outorder: supports out-of-order issue and execution (with in-order commit) with a Register Update Unit (RUU)
  - Uses a RUU for register renaming and to hold the results of pending instructions. The RUU (aka reorder buffer (ROB)) retires completed instructions in program order to the Reg. File
  - Uses a LSQ for store instructions not ready to commit and load instructions waiting for access to the D$
  - Loads are satisfied by either the memory or by an earlier store value residing in the LSQ if their addresses match
    - Loads are issued to the memory system only when addresses of all previous loads and stores are known

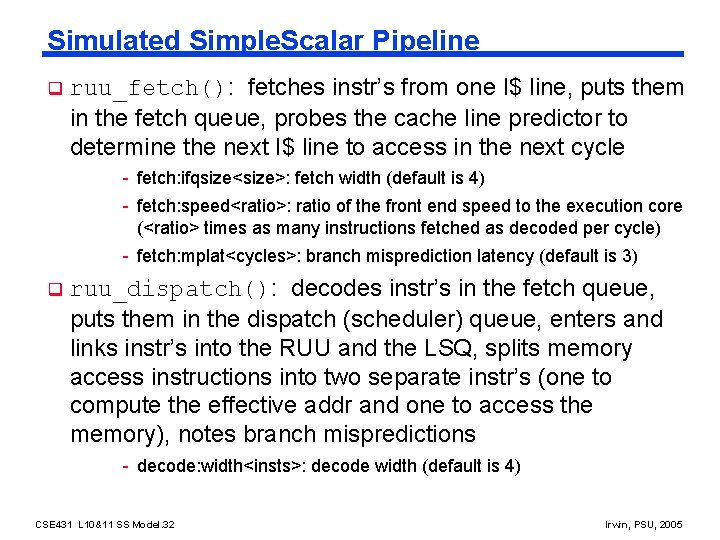

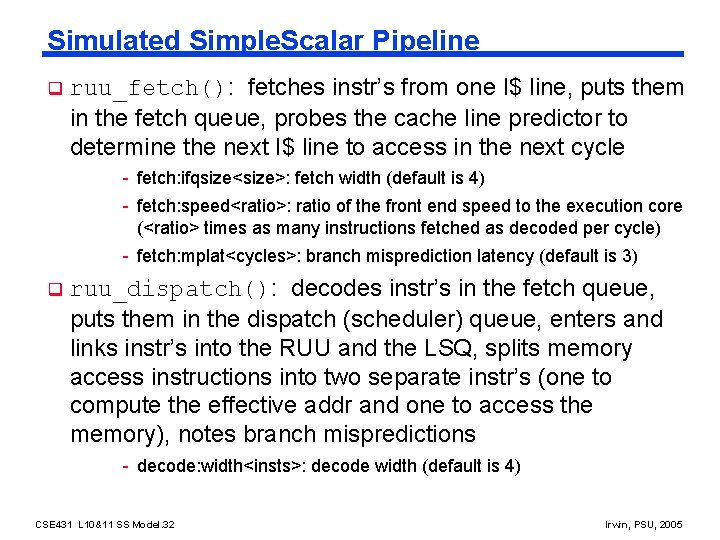

Simulated SimpleScalar Pipeline

- ruu_fetch(): fetches instr’s from one I$ line, puts them in the fetch queue, probes the cache line predictor to determine the next I$ line to access in the next cycle
  - -fetch:ifqsize <size>: fetch width (default is 4)
  - -fetch:speed <ratio>: ratio of the front end speed to the execution core (<ratio> times as many instructions fetched as decoded per cycle)
  - -fetch:mplat <cycles>: branch misprediction latency (default is 3)
- ruu_dispatch(): decodes instr’s in the fetch queue, puts them in the dispatch (scheduler) queue, enters and links instr’s into the RUU and the LSQ, splits memory access instructions into two separate instr’s (one to compute the effective addr and one to access the memory), notes branch mispredictions
  - -decode:width <insts>: decode width (default is 4)

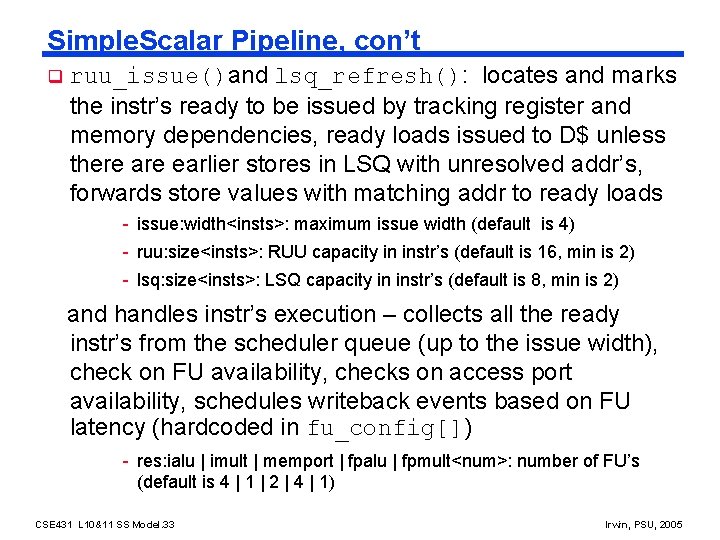

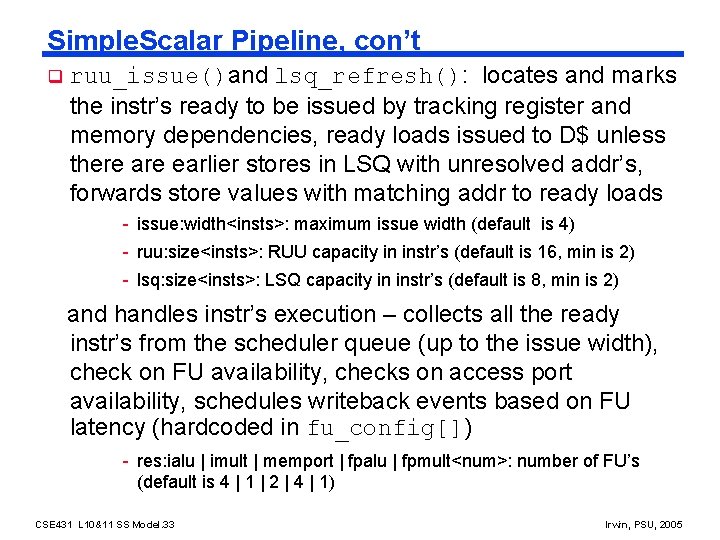

SimpleScalar Pipeline, con’t

- ruu_issue() and lsq_refresh(): locates and marks the instr’s ready to be issued by tracking register and memory dependencies, ready loads issued to D$ unless there are earlier stores in LSQ with unresolved addr’s, forwards store values with matching addr to ready loads
  - -issue:width <insts>: maximum issue width (default is 4)
  - -ruu:size <insts>: RUU capacity in instr’s (default is 16, min is 2)
  - -lsq:size <insts>: LSQ capacity in instr’s (default is 8, min is 2)
- ruu_issue() also handles instr’s execution: collects all the ready instr’s from the scheduler queue (up to the issue width), checks on FU availability, checks on access port availability, schedules writeback events based on FU latency (hardcoded in fu_config[])
  - -res:ialu | imult | memport | fpalu | fpmult <num>: number of FU’s (default is 4 | 1 | 2 | 4 | 1)

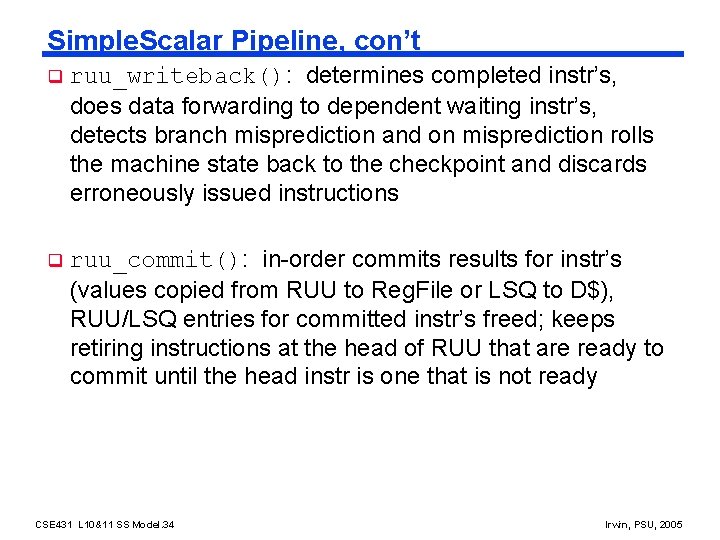

SimpleScalar Pipeline, con’t

- ruu_writeback(): determines completed instr’s, does data forwarding to dependent waiting instr’s, detects branch misprediction and on misprediction rolls the machine state back to the checkpoint and discards erroneously issued instructions
- ruu_commit(): in-order commits results for instr’s (values copied from RUU to Reg. File or LSQ to D$), RUU/LSQ entries for committed instr’s freed; keeps retiring instructions at the head of RUU that are ready to commit until the head instr is one that is not ready

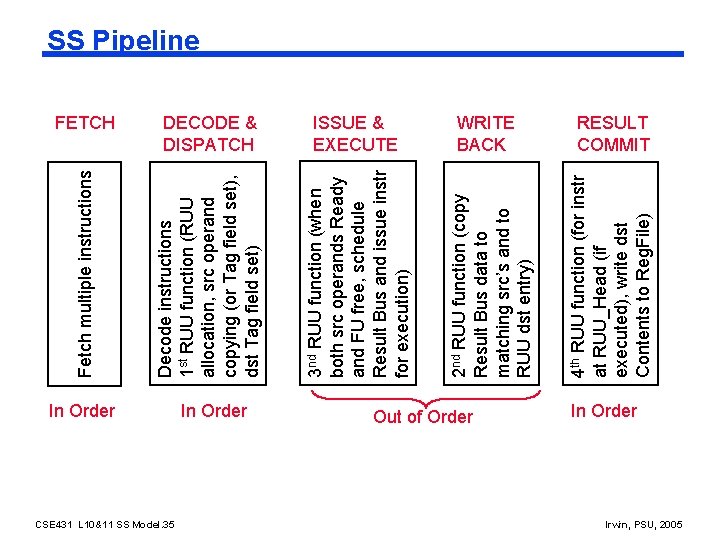

SS Pipeline

| Stage | Order | Work done |
|---|---|---|
| FETCH | In Order | Fetch multiple instructions |
| DECODE & DISPATCH | In Order | Decode instructions; 1st RUU function (RUU allocation, src operand copying (or Tag field set), dst Tag field set) |
| ISSUE & EXECUTE | Out of Order | 3rd RUU function (when both src operands Ready and FU free, schedule Result Bus and issue instr for execution) |
| WRITE BACK RESULT | Out of Order | 2nd RUU function (copy Result Bus data to matching src’s and to RUU dst entry) |
| COMMIT | In Order | 4th RUU function (for instr at RUU_Head (if executed), write dst Contents to Reg. File) |

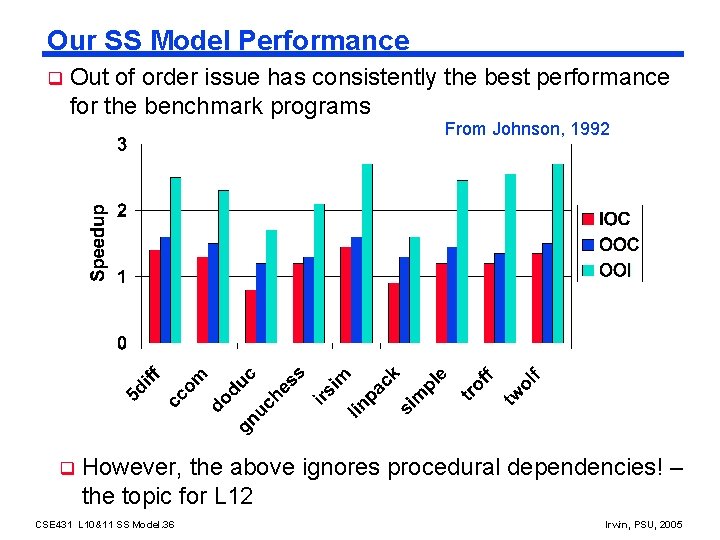

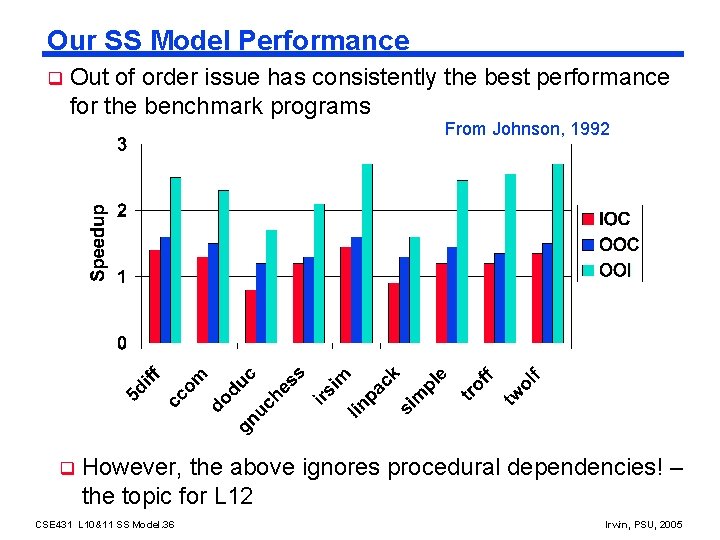

Our SS Model Performance

- Out of order issue has consistently the best performance for the benchmark programs

From Johnson, 1992

- However, the above ignores procedural dependencies, the topic for L12

Next Lecture and Reminders

- Next lecture
  - MIPS superscalar front end (instruction fetch and decode)
    - Reading assignment: Sohi paper, Johnson Chapter 4 (opt)
- Reminders
  - HW3, Part 1 due October 13th (due IN CLASS)
  - HW3, Part 2 (the SimpleScalar experiments) due Friday, October 21st (email solution to the TA, rdas@cse.psu.edu) by 5:00 pm
  - Evening midterm exam scheduled (two weeks away)
    - Tuesday, October 18th, 20:15 to 22:15, Location 113 IST
    - Only one student has contacted me about a conflict (you know who you are) so that is the only conflict exam that will be scheduled